3.1.1. Multi-Scale Feature Extraction Method

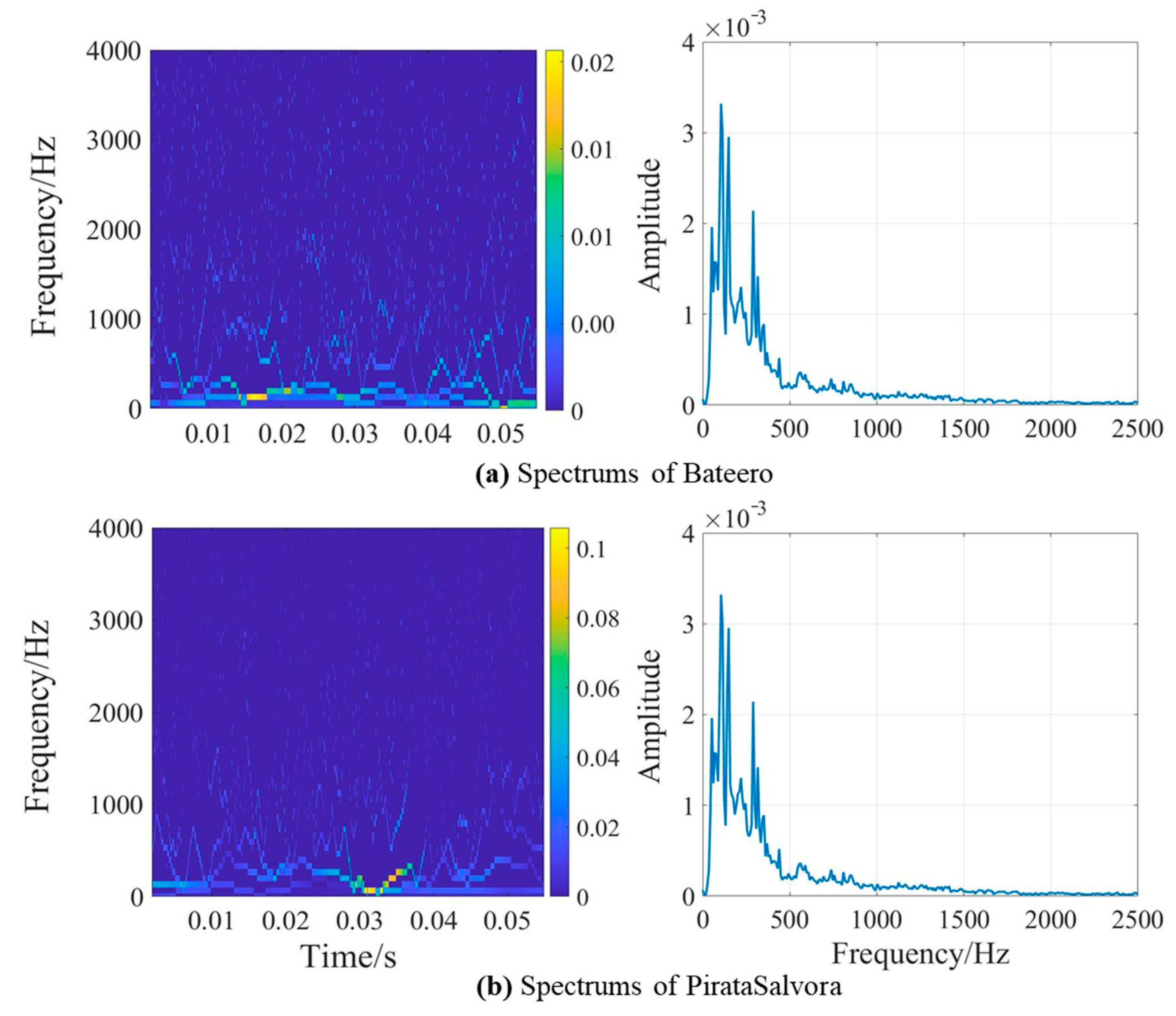

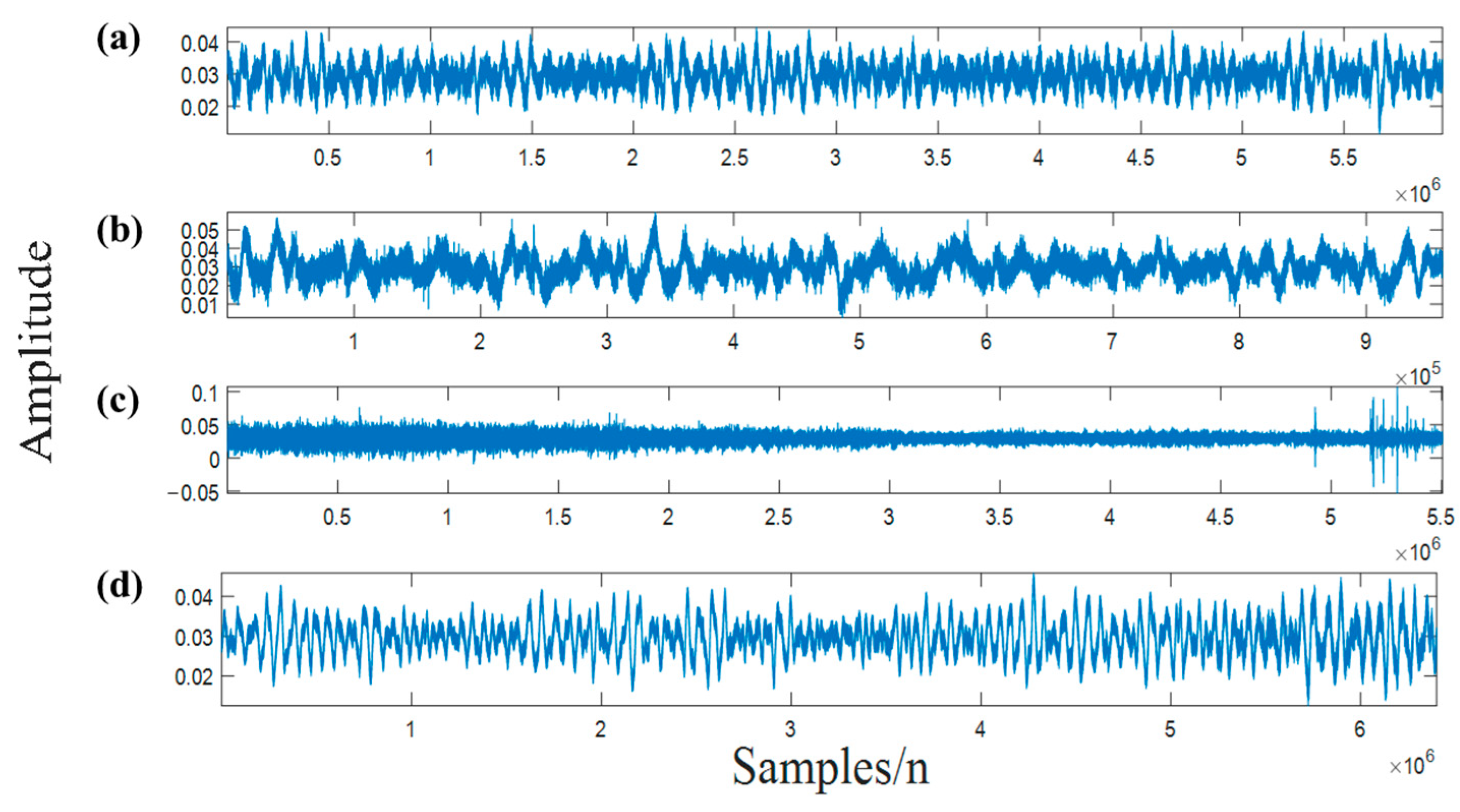

Feature extraction is a critical component of the system and improves its generalization ability. It aims to extract various types of features that contain as much information as possible about the ship-radiated noise. However, because the power spectrum of ship-radiated noise is itself noise-like, it is difficult to accurately capture the character of the noise from its spectrum. Moreover, ship-radiated noise spans a significantly wider frequency range, and its characteristics are spread across that range. As a result, it is difficult to construct suitable filters that obtain discriminative information and compress it into low-dimensional features without any prior knowledge. Learnable amplitude–time–frequency features tend to include more comprehensive information, but a considerable portion of it is useless or conflicting. In this work, we experimentally evaluate HHT-based features. The following equation can be used to describe the observed signal:

where

is the original time domain ship-radiated noise,

represents the pure ship-radiated noise, and

denotes the natural noise. In practice, a hydrophone system can effectively focus on a valid signal

when the radiated noise is much greater than the background ambient noise. In feature extraction processing, it is simulated as pure ship-radiated noise because

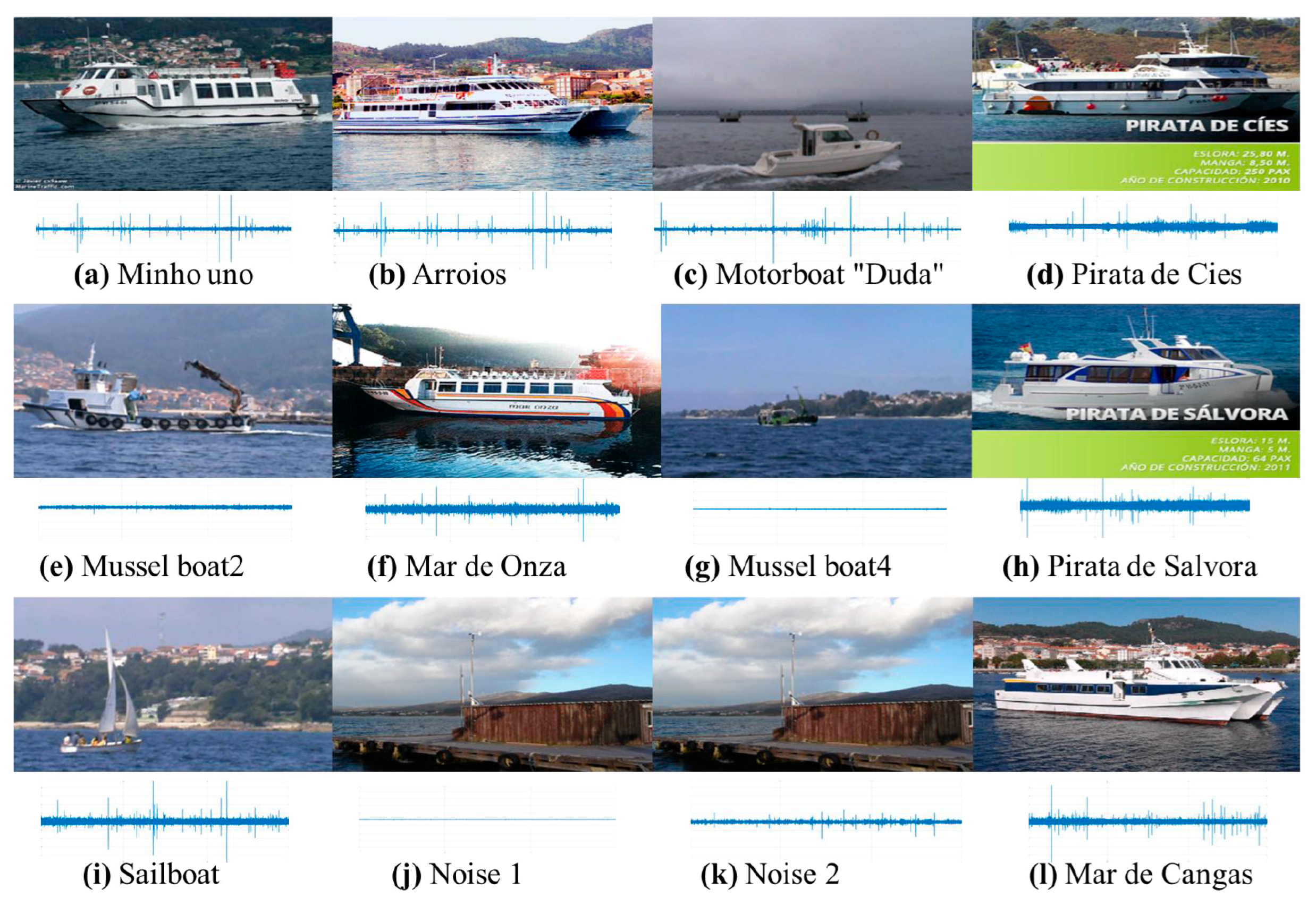

is the main component. The ship-radiated noise signal is intercepted into samples with a length of 3000 sampling points.
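The segmentation step can be illustrated with a minimal sketch. It assumes non-overlapping frames and drops any trailing samples that do not fill a frame; the text does not state how overlap or the remainder is handled, and the function name `frame_signal`, the sampling rate, and the synthetic tone-plus-noise signal are all hypothetical stand-ins for a real recording.

```python
import numpy as np

def frame_signal(x, frame_len=3000):
    """Split a 1-D signal into non-overlapping frames of frame_len samples.

    Assumption: trailing samples that do not fill a whole frame are dropped.
    """
    x = np.asarray(x, dtype=float)
    n_frames = len(x) // frame_len
    return x[: n_frames * frame_len].reshape(n_frames, frame_len)

# Synthetic stand-in for an observed signal: a dominant tone (ship noise)
# plus weak random background noise.
rng = np.random.default_rng(0)
fs = 10_000                      # hypothetical sampling rate in Hz
t = np.arange(10_000) / fs
ship = np.sin(2 * np.pi * 120.0 * t)
ambient = 0.05 * rng.standard_normal(t.size)
observed = ship + ambient

frames = frame_signal(observed)  # 10,000 samples -> 3 frames of 3000 samples
```

Each row of `frames` would then be passed to the feature extraction stage as one sample.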

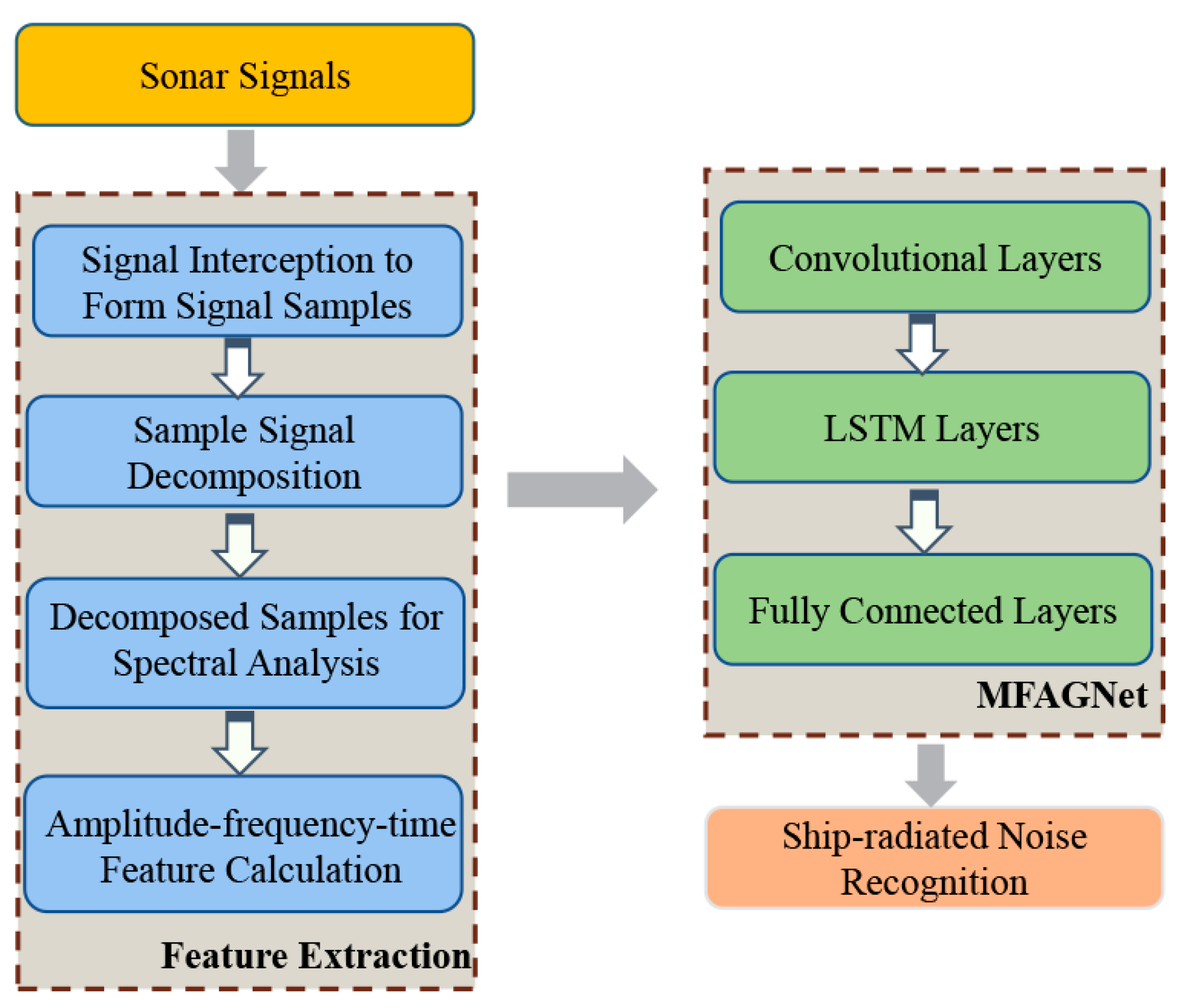

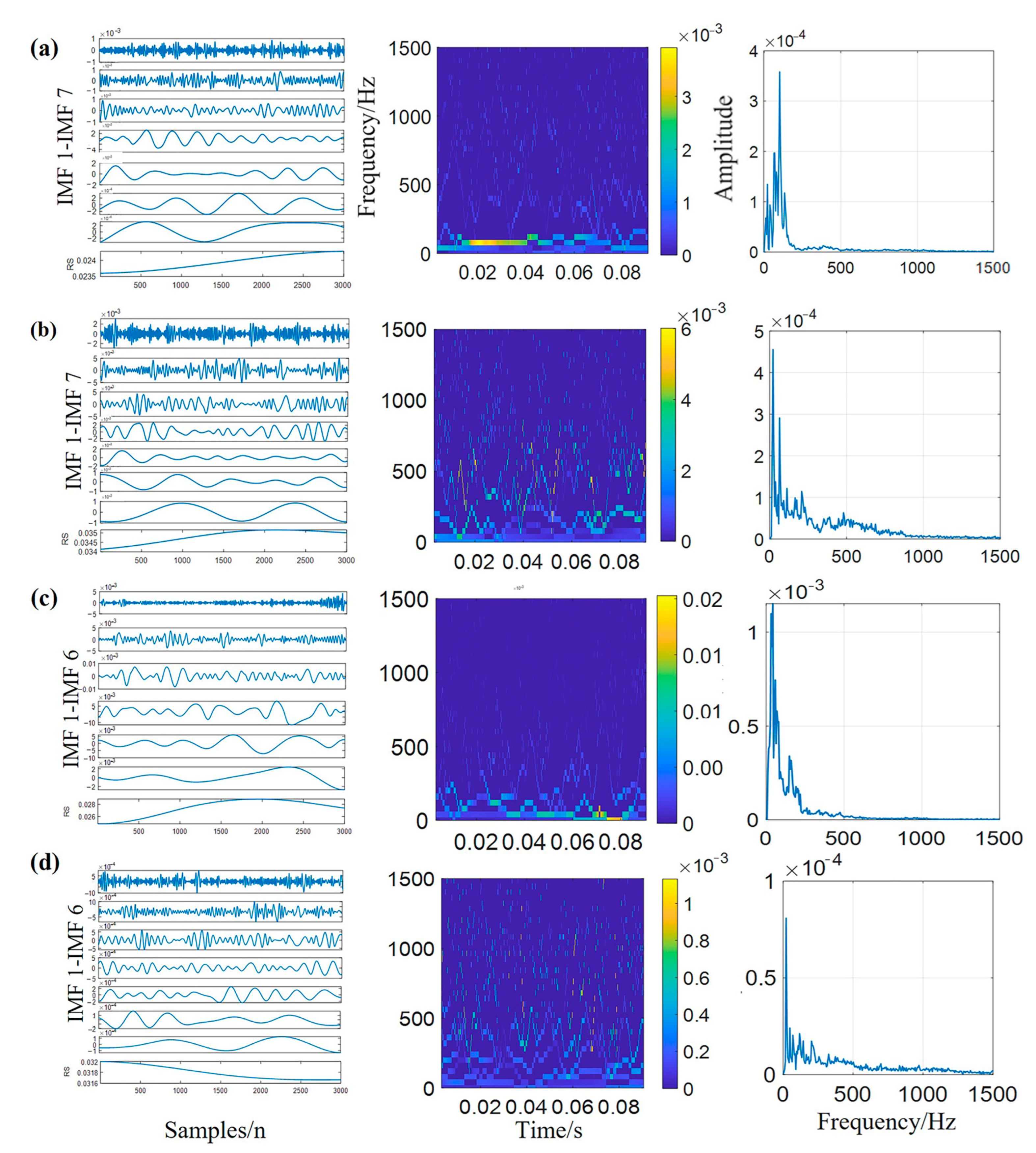

After the improved HHT-based extraction algorithm is applied, each intercepted sample is transformed into a feature matrix, which can be utilized by the subsequent recognition system. The process of multi-scale feature extraction is illustrated in Figure 3. In this study, we extracted six representative features, which cover energy, frequency, and time-domain amplitude. The features are extracted as explained below, and the resulting amplitude–time–frequency domain feature data are stored in the form of a feature matrix. These feature matrices serve as input to the proposed MFAGNet model, as well as to the machine learning and deep learning models used for comparison in this study.

1. Improved Hilbert–Huang transform algorithm

The Hilbert–Huang transform algorithm consists of two steps: EMD and Hilbert spectrum analysis (HSA). In essence, the EMD algorithm decomposes any signal into a collection of so-called intrinsic mode functions (IMFs) [38], each of which is a narrow-band signal with a distinct instantaneous frequency that can be computed using the HHT [39].

$$x(t) = \sum_{i=1}^{n} c_i(t) + r_n(t), \qquad c_i(t) = a_i(t)\cos\theta_i(t) \tag{2}$$

where $c_i(t)$ represents the oscillatory modes embedded in $x(t)$, with both amplitude $a_i(t)$ and phase $\theta_i(t)$ varying in time, and $r_n(t)$ is the residue. $\omega_i(t) = \mathrm{d}\theta_i(t)/\mathrm{d}t$ is the instantaneous angular frequency. The instantaneous frequency captures how the frequency content of the signal varies with time.
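As an illustration of how instantaneous amplitude and frequency can be obtained for a single narrow-band component, the sketch below builds the analytic signal with the FFT and differentiates its unwrapped phase. This is a generic construction, not necessarily the authors' implementation; the helper names and the 50 Hz test tone are assumptions made for the example.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal via the FFT: keep DC, double positive frequencies,
    zero negative frequencies (the usual discrete Hilbert construction)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1 : n // 2] = 2.0
    else:
        weights[1 : (n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * weights)

def instantaneous_amplitude_frequency(x, fs):
    """Return instantaneous amplitude (n samples) and frequency in Hz (n - 1 samples)."""
    z = analytic_signal(x)
    amplitude = np.abs(z)
    phase = np.unwrap(np.angle(z))
    frequency = np.diff(phase) * fs / (2.0 * np.pi)
    return amplitude, frequency

fs = 1000.0                                   # hypothetical sampling rate in Hz
t = np.arange(3000) / fs
tone = 0.8 * np.cos(2 * np.pi * 50.0 * t)     # stand-in for one narrow-band IMF
amp, freq = instantaneous_amplitude_frequency(tone, fs)
```

For this test tone the recovered amplitude stays close to 0.8 and the instantaneous frequency close to 50 Hz; real IMFs would show time-varying values.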

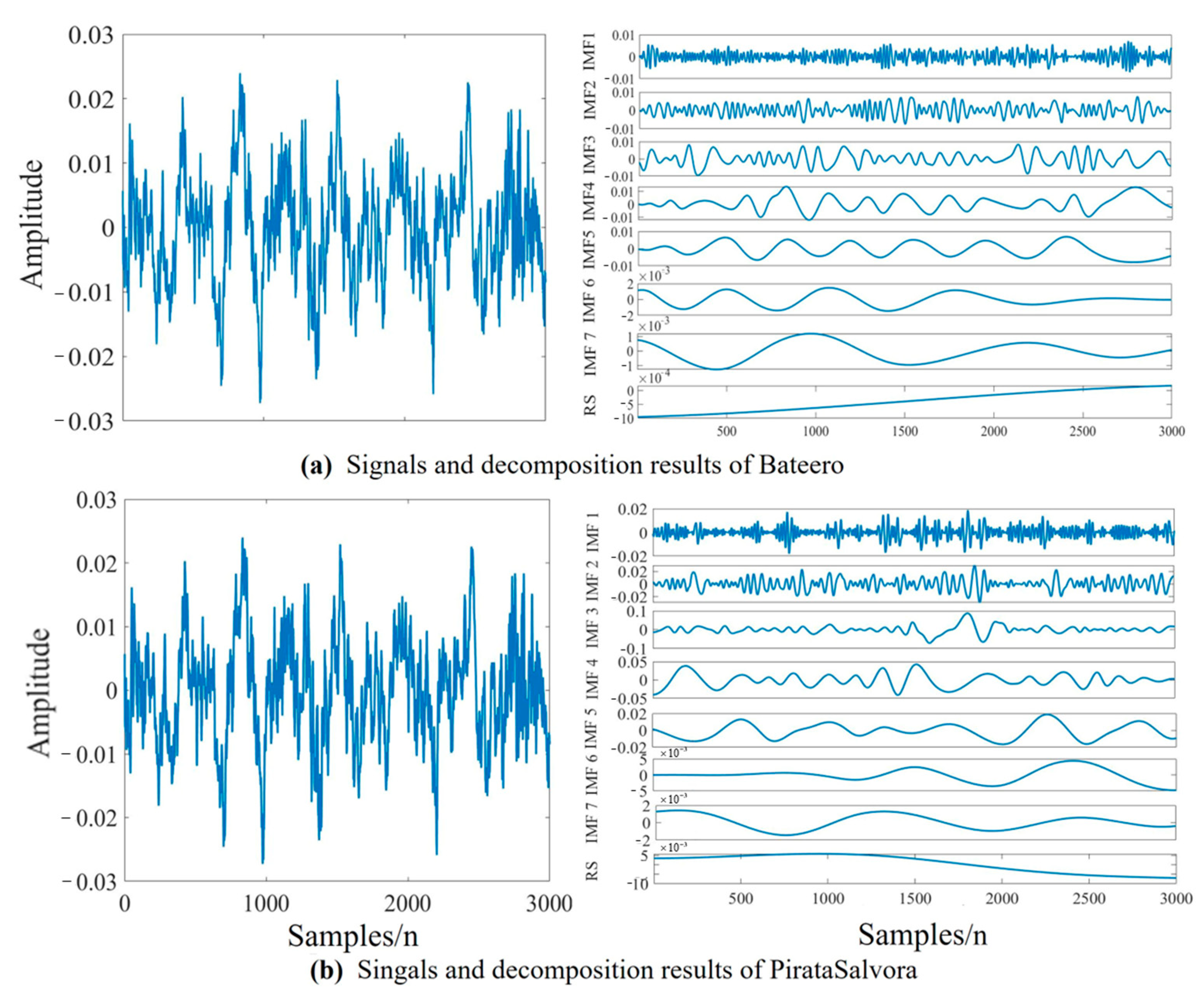

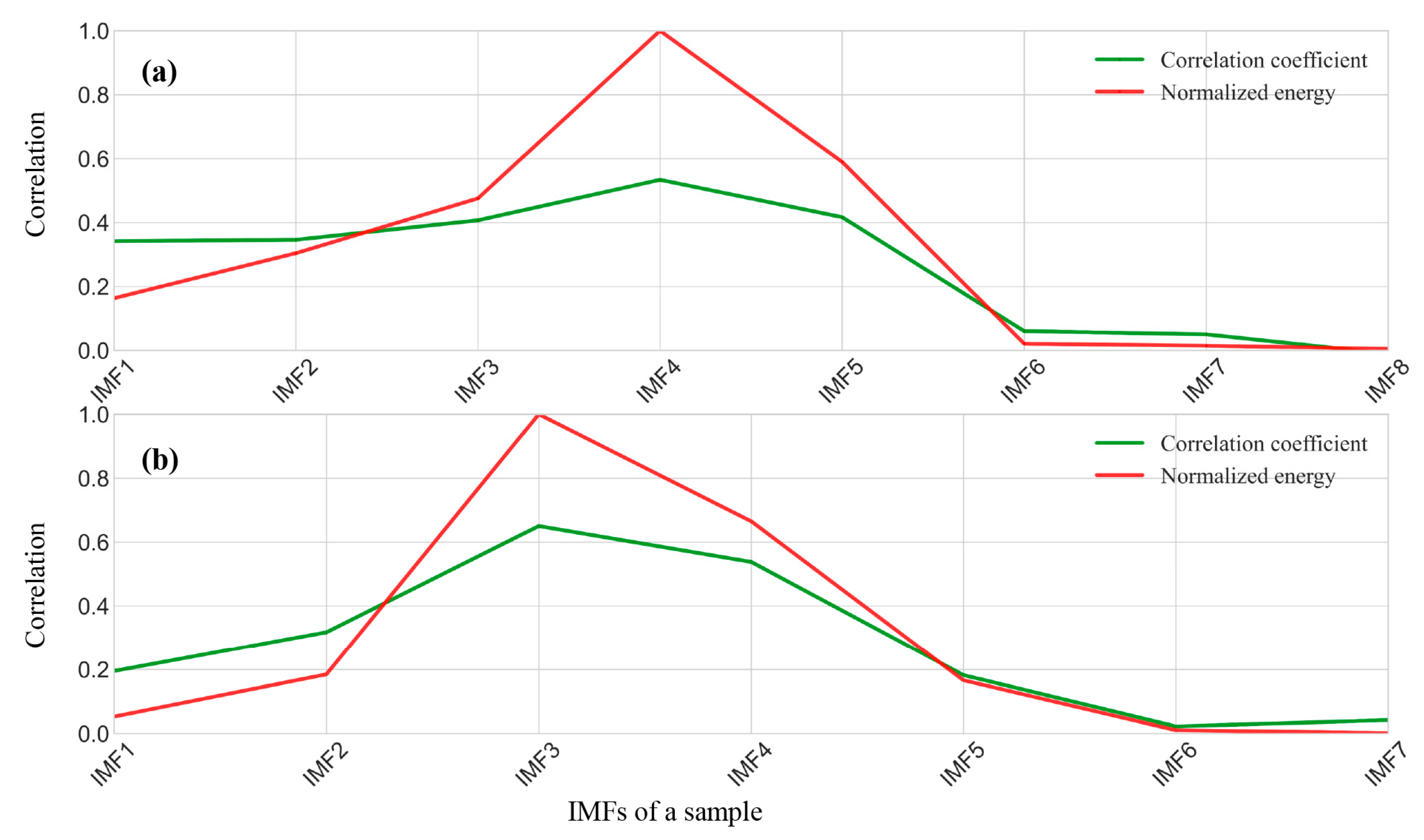

Compared with signal processing methods that apply fixed basis functions, such as the Fourier transform and the wavelet transform, the EMD algorithm can intuitively, directly, and adaptively decompose a given signal into a collection of IMFs. However, ship-radiated noise consists of broadband components, narrow-band spectral components, and random environmental noise, so it has strongly non-stationary and non-linear characteristics. Traditional EMD methods are susceptible to mode aliasing when processing such signals, leading to ineffective decomposition. Therefore, the feature extraction process uses an improved version of the traditional EMD algorithm, named the MEEMD algorithm, which calculates the permutation entropy (PE) to eliminate false components in the signal, screens out the main IMF components, and thereby suppresses mode aliasing. Adaptive decomposition and the acquisition of effective IMFs provide valuable information for the feature extraction and recognition of ship-radiated noise.
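The permutation entropy used for screening components can be sketched as follows. This is a generic normalized PE computation; the embedding order, the delay, and the test signals are assumptions, and the section does not give the threshold MEEMD uses to flag a component as abnormal.

```python
import math
from collections import Counter

import numpy as np

def permutation_entropy(x, order=3, delay=1):
    """Normalized permutation entropy in [0, 1] of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    n_patterns = x.size - (order - 1) * delay
    if n_patterns <= 0:
        raise ValueError("signal too short for the chosen order and delay")
    # Count how often each ordinal pattern (rank ordering of `order` samples) occurs.
    counts = Counter(
        tuple(np.argsort(x[i : i + order * delay : delay], kind="stable"))
        for i in range(n_patterns)
    )
    probs = np.array(list(counts.values()), dtype=float) / n_patterns
    entropy = -np.sum(probs * np.log(probs))
    return float(entropy / math.log(math.factorial(order)))

rng = np.random.default_rng(0)
pe_noise = permutation_entropy(rng.standard_normal(2000))   # close to 1: irregular
pe_ramp = permutation_entropy(np.arange(2000.0))            # 0: a single pattern
pe_tone = permutation_entropy(np.sin(2 * np.pi * np.arange(2000) / 200.0))
```

A component with PE near 1 behaves like random noise, while a smooth oscillation yields a much lower value, which is the property a PE-based screening step relies on.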

In this work, we utilize the improved Hilbert–Huang transform algorithm to achieve better feature extraction. First, the Hilbert transform is applied to the decomposed signal to calculate the instantaneous frequency of each order of IMF and thus obtain the amplitude–time–frequency representation of the signal, namely the Hilbert spectrum. The Hilbert spectrum represents the distribution of instantaneous amplitudes in the frequency–time plane, with good time–frequency aggregation. Additionally, the Hilbert marginal spectrum is obtained by integrating the Hilbert spectrum over the whole time span; it describes how the amplitude (or energy) accumulated at each frequency is distributed in the frequency domain over the entire time–frequency range. The Hilbert spectrum and Hilbert marginal spectrum are expressed in Equations (3) and (4).

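$$H(\omega, t) = \mathrm{Re}\sum_{i=1}^{n} a_i(t)\, e^{\,j\int \omega_i(t)\,\mathrm{d}t} \tag{3}$$

$$h(\omega) = \int_{0}^{T} H(\omega, t)\,\mathrm{d}t \tag{4}$$

where $H(\omega, t)$ and $h(\omega)$ are the Hilbert spectrum and Hilbert marginal spectrum, respectively, $a_i(t)$ and $\omega_i(t)$ are the instantaneous amplitude and angular frequency of the $i$-th IMF, and $T$ is the signal duration.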
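A discretized version of the Hilbert spectrum and its marginal spectrum can be sketched by binning each IMF's instantaneous amplitude onto a frequency–time grid and then summing over time. The grid resolution, the function names, and the two constant-frequency "IMFs" below are assumptions chosen so the result is easy to check by hand.

```python
import numpy as np

def hilbert_spectrum(amplitudes, frequencies, fs, n_bins=64):
    """Bin instantaneous amplitudes onto a (frequency bin, time sample) grid.

    amplitudes, frequencies: arrays of shape (n_imfs, n_samples), frequencies in Hz.
    Returns the grid H of shape (n_bins, n_samples) and the bin centers in Hz.
    """
    amplitudes = np.asarray(amplitudes, dtype=float)
    frequencies = np.asarray(frequencies, dtype=float)
    n_imfs, n_samples = amplitudes.shape
    edges = np.linspace(0.0, fs / 2.0, n_bins + 1)
    idx = np.digitize(frequencies, edges) - 1      # bin index of every point
    time_index = np.arange(n_samples)
    H = np.zeros((n_bins, n_samples))
    for k in range(n_imfs):
        valid = (idx[k] >= 0) & (idx[k] < n_bins)  # skip points outside [0, fs/2)
        np.add.at(H, (idx[k][valid], time_index[valid]), amplitudes[k][valid])
    centers = 0.5 * (edges[:-1] + edges[1:])
    return H, centers

def marginal_spectrum(H, fs):
    """Integrate the Hilbert spectrum over time (sum times the sample period)."""
    return H.sum(axis=1) / fs

fs, n = 1000.0, 1000
amplitudes = np.vstack([np.full(n, 1.0), np.full(n, 0.5)])
frequencies = np.vstack([np.full(n, 105.0), np.full(n, 305.0)])
H, centers = hilbert_spectrum(amplitudes, frequencies, fs, n_bins=50)
h = marginal_spectrum(H, fs)
```

With one second of data, the marginal spectrum in this example peaks at the bin centered on 105 Hz with value 1.0 and has a second peak of 0.5 at 305 Hz.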

2. Amplitude–time–frequency domain features

For sampled ship-radiated noise, MEEMD decomposes each signal into a series of IMFs. However, direct observation of these IMF waveforms in the time domain still yields ambiguous results. Consequently, the first six IMFs, which contain the main information, are selected, and the amplitude–time–frequency characteristic information is extracted by calculating the Hilbert–Huang spectrum and the Hilbert marginal spectrum.

In the time domain, the Hilbert energy is obtained by integrating the square of the Hilbert spectrum over time; it represents the energy accumulated at each frequency over the entire signal duration. It can be expressed as follows:

$$E(\omega) = \int_{0}^{T} H^{2}(\omega, t)\,\mathrm{d}t$$

where $E(\omega)$ represents the Hilbert energy and $H(\omega, t)$ is the Hilbert spectrum.

In the frequency domain, the Hilbert instantaneous energy spectrum is obtained by integrating the square of the Hilbert spectrum over frequency. The instantaneous energy represents the energy accumulated over the whole frequency domain at each time instant, and it can be expressed as follows:

$$IE(t) = \int_{\omega} H^{2}(\omega, t)\,\mathrm{d}\omega$$

where $IE(t)$ represents the Hilbert instantaneous energy.
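On a discretized Hilbert spectrum, both integrals reduce to sums along one axis of the grid. The sketch below assumes `H` is stored as a (frequency bin, time sample) array; the function names, the tiny example grid, and the bin width are illustrative only.

```python
import numpy as np

def hilbert_energy(H, fs):
    """Integrate H^2 over time for every frequency bin (sum times sample period)."""
    return np.sum(np.asarray(H, dtype=float) ** 2, axis=1) / fs

def instantaneous_energy(H, bin_width):
    """Integrate H^2 over frequency for every time sample (sum times bin width)."""
    return np.sum(np.asarray(H, dtype=float) ** 2, axis=0) * bin_width

# Toy grid: 4 frequency bins, 5 time samples, amplitude 2.0 in bin 1 at all times.
H_toy = np.zeros((4, 5))
H_toy[1, :] = 2.0
E_freq = hilbert_energy(H_toy, fs=5.0)               # energy per frequency bin
E_time = instantaneous_energy(H_toy, bin_width=10.0) # energy per time sample
```

Here `E_freq` is 4.0 in bin 1 and zero elsewhere, while `E_time` equals 40.0 at every time sample.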

Suppose that each IMF component contains $N$ sampling points, and that after the Hilbert transform the instantaneous amplitude of the $i$-th sampling point is denoted as $a_i$ and the instantaneous frequency as $f_i$. The instantaneous energy can be expressed as follows:

$$E_i = a_i^{2}$$

where $i = 1, 2, \ldots, N$. Over the whole sampling time, the instantaneous energy variation range can be obtained as follows:

$$\Delta E = \max_{i}(E_i) - \min_{i}(E_i)$$

The energy $E_{\mathrm{IMF}}$ of any order of IMF is defined as follows:

$$E_{\mathrm{IMF}} = \sum_{i=1}^{N} a_i^{2}$$

The average amplitude $\bar{a}$ of any order of IMF can be defined as follows:

$$\bar{a} = \frac{1}{N}\sum_{i=1}^{N} a_i$$

In addition to the energy features, which express the noise information carried in the samples, frequency features are also essential for expressing the ship-radiated noise information of the samples. The average instantaneous frequency of each order of IMF is defined as follows:

$$\bar{f} = \frac{1}{N}\sum_{i=1}^{N} f_i$$

The instantaneous frequency of any order of IMF always fluctuates around a central frequency, which is the center frequency of this order of IMF. Here, the center frequency $f_c$ of each order of IMF is defined as follows:

$$f_c = \frac{\sum_{i=1}^{N} a_i^{2} f_i}{\sum_{i=1}^{N} a_i^{2}}$$

Because we select the first six IMFs and extract six amplitude–time–frequency representative features from each of them, each sample is formed into a feature matrix with a dimension of 1 × 36 after feature extraction.
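The assembly of the 1 × 36 feature matrix can be sketched as below. Five of the per-IMF quantities follow the descriptions in the text (energy variation range, IMF energy, average amplitude, average instantaneous frequency, and an energy-weighted center frequency, the last being an assumed form of the definition). The section does not make the sixth feature recoverable, so the mean instantaneous energy is used purely as a placeholder, and the ordering of features inside the vector is also an assumption.

```python
import numpy as np

def imf_features(a, f):
    """Six scalar features from one IMF's instantaneous amplitude a and frequency f."""
    a = np.asarray(a, dtype=float)
    f = np.asarray(f, dtype=float)
    energy = a ** 2                                  # instantaneous energy per sample
    return np.array([
        energy.max() - energy.min(),                 # instantaneous energy variation range
        energy.sum(),                                # IMF energy
        a.mean(),                                    # average amplitude
        f.mean(),                                    # average instantaneous frequency
        np.sum(f * energy) / energy.sum(),           # center frequency (assumed energy-weighted)
        energy.mean(),                               # placeholder sixth feature (assumption)
    ])

def feature_matrix(amplitudes, frequencies, n_imfs=6):
    """Concatenate the per-IMF features of the first n_imfs IMFs into one row."""
    rows = [imf_features(amplitudes[k], frequencies[k]) for k in range(n_imfs)]
    return np.concatenate(rows).reshape(1, -1)

# Synthetic IMFs: constant amplitude 2.0, frequencies 100, 200, ..., 600 Hz.
amplitudes = np.full((6, 3000), 2.0)
frequencies = np.repeat(np.arange(1, 7)[:, None] * 100.0, 3000, axis=1)
features = feature_matrix(amplitudes, frequencies)   # shape (1, 36)
```

Each 3000-sample frame thus yields one 36-element row, which is the input format the recognition network expects.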

3.1.2. The Proposed MFAGNet Model

For this system, the design of the recognition network is crucial. In this section, we describe the proposed MFAGNet architecture in detail. In addition to building a high-performance network, data preprocessing often plays an important role in the effectiveness of the network. Therefore, taking into account the complex multi-scale features, the data preprocessing method is also presented and described below.

1. The architecture of MFAGNet

In order to enhance ship recognition performance, a new hybrid neural network termed MFAGNet is proposed, which primarily combines the CNN and LSTM according to the types of extracted feature matrices.

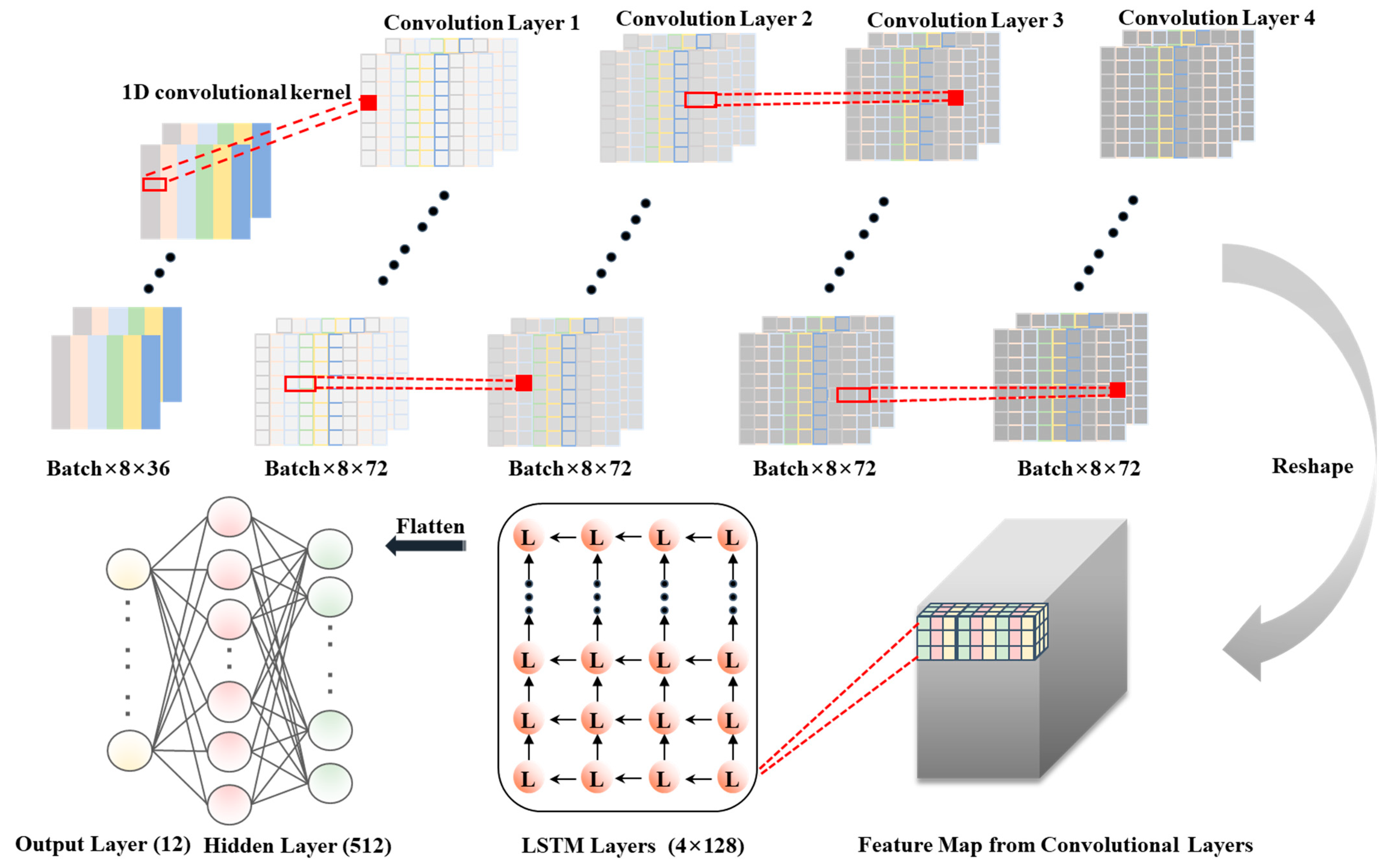

Figure 4 shows the proposed neural network structure that contains various types of modules, such as multiple convolutional neural layers, recurrent layers, and fully connected layers. The key concept of MFAGNet is to better use feature matrices that have both spatial and temporal coupling characteristics as the basis for the samples. Applying these strategies to construct network models will considerably enhance the training efficiency of the neural network model and the accuracy of the recognition performance.

Convolutional neural networks are capable of learning not only image feature information but also sequence data, including various signal data, time series data, and natural language data [40]. Taking into account the ability of 1D CNNs to extract features from serial information, 1D convolutional layers are applied as the shallow layers of MFAGNet. With this design, temporal information in the extracted features can be directly learned and enriched. Following the convolutional layers, LSTM layers are employed as a second module to extract features at each time index. Finally, the recognition results are output by fully connected layers.

(1) Convolutional Layers:

Two-dimensional CNN architectures have traditionally been applied in image processing to extract detailed image features. However, the input feature matrices used in ship recognition are one-dimensional in nature, making them unsuitable for 2D and 3D CNN architectures, which require the data to be converted to image form and thereby lose detailed information. In addition, the 1D CNN operation does not alter the order of the features and greatly reduces network complexity. Although a pooling layer would reduce the number of training parameters, it is removed to prevent the loss of feature information.

MFAGNet contains four convolutional layers. Table 2 shows the size of the convolutional kernels and the number of filters in each convolutional layer, and Figure 4 displays the architecture of the convolutional layers. After numerous experiments, the best convolutional structure was found to use a kernel configuration of 2 × 72 in each of the four layers, which enhances the ability of the convolutional layers to extract multiple features. The output of each convolutional layer is fed to a batch normalization layer and a Randomized Leaky Rectified Linear Unit (RReLU) layer. These operations are defined by Equation (14).

$$x_j^{l} = f\!\left(\mathrm{BN}\!\left(\sum_{i} x_i^{l-1} * w_{ij}^{l} + b_j^{l}\right)\right) \tag{14}$$

where $x_j^{l}$ is the $j$-th feature map generated by the $l$-th layer. $w_{ij}^{l}$ represents the weight associated with the $j$-th convolution kernel and the $i$-th feature map. BN signifies the batch normalization operation and $f(\cdot)$ denotes the activation function selected for each layer. $b_j^{l}$ is the bias associated with the $j$-th convolution kernel and $*$ denotes the convolution operation between the input feature map and the convolution kernel.
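The convolution, batch normalization, and activation sequence of one layer can be sketched in plain NumPy. The sketch assumes that "2 × 72" means a kernel of length 2 with 72 filters, uses stride 1 without padding, normalizes with batch statistics only (no learned scale or shift), and applies a fixed negative slope in place of the randomized one; none of these choices is confirmed by the text.

```python
import numpy as np

def conv1d_bn_act(x, w, b, slope=0.1875, eps=1e-5):
    """One layer: 1-D convolution, batch normalization, leaky activation.

    x: (batch, in_channels, length)
    w: (out_channels, in_channels, kernel_size)
    b: (out_channels,)
    """
    batch, _, length = x.shape
    c_out, _, k = w.shape
    out_len = length - k + 1                      # stride 1, no padding
    y = np.zeros((batch, c_out, out_len))
    for tap in range(k):
        # Sum over input feature maps i of x_i * w_ij (cross-correlation form,
        # as used by most deep learning libraries).
        y += np.einsum("bil,ji->bjl", x[:, :, tap : tap + out_len], w[:, :, tap])
    y += b[None, :, None]
    mean = y.mean(axis=(0, 2), keepdims=True)     # per-channel batch statistics
    var = y.var(axis=(0, 2), keepdims=True)
    y = (y - mean) / np.sqrt(var + eps)
    return np.where(y >= 0, y, slope * y)         # leaky activation

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 1, 36))               # batch of 8 feature vectors of length 36
w = rng.standard_normal((72, 1, 2))               # 72 filters, kernel length 2 (assumed)
b = np.zeros(72)
out = conv1d_bn_act(x, w, b)                      # shape (8, 72, 35)
out_linear = conv1d_bn_act(x, w, b, slope=1.0)    # identity activation, for inspection
```

Because no pooling is used, the sequence length shrinks only by the kernel length minus one per layer.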

(2) LSTM Layers:

As the input data have sequential characteristics, it is necessary to apply LSTM layers to extract features from the ship noise signals. The typical recurrent neural network (RNN) only has a chain structure of repetitive modules with a non-linear tanh function layer inside [41,42]. However, it is difficult for a basic RNN to learn complex non-linear features with this simple structure, and the training process is prone to gradient instability. In contrast, LSTM has a more elaborate repeating module structure and an excellent capability to extract time series features [43]. It employs specialized hidden units that enable the retention of long-term memory while mitigating the issue of gradient vanishing [44]. Therefore, LSTM is selected to design the recurrent layers with the aim of extracting temporal features. LSTM can be mathematically represented by the following expressions.

Initially, the forget gate determines which information in the cell state is discarded, as follows:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

where $x_t$ denotes the input data at the current moment, $W_f$ is the weight matrix of the forget gate, $b_f$ represents the bias term, and the state of the previous moment $h_{t-1}$ is multiplied by the weight matrix together with $x_t$. $\sigma$ indicates that the data are mapped to a number between 0 and 1 by the sigmoid activation function.

Then, the input gate determines how much information is written to the cell state. The input data pass through a tanh activation function to create the candidate vector, while the input gate, computed with a sigmoid activation function, decides which input data need to be updated, as follows:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right)$$

where $i_t$ represents the state of the update gate and $\tilde{C}_t$ denotes the input node, whose value lies between −1 and 1 after passing through a tanh activation function. They work together to control the update of the cell state. The previous steps are combined with the forget gate to determine the updated state. The old state $C_{t-1}$ is multiplied by the forget gate output $f_t$ and added to the result of the input gate to obtain the updated state $C_t$:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

The output gate controls the next hidden state and is determined by two parts: the first is the output vector generated by the updated cell state, while the second is the result of the gate itself, as described by the following equations:

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

$$h_t = o_t \odot \tanh(C_t)$$

where $o_t$ is the result of the output gate, which is multiplied by the tanh of the cell state $C_t$ to obtain the output data $h_t$. The combination of these gate signals ensures that information does not vanish over time or because of extraneous interference.
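The gate computations can be traced with a single-cell NumPy sketch in the standard concatenated-input formulation. The parameter names, the random initialization, and the sequence shape (35 steps of 72 features) are illustrative assumptions rather than values taken from the trained model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step: forget gate, input gate, cell update, output gate."""
    concat = np.concatenate([h_prev, x_t])                     # [h_{t-1}, x_t]
    f_t = sigmoid(params["W_f"] @ concat + params["b_f"])      # forget gate
    i_t = sigmoid(params["W_i"] @ concat + params["b_i"])      # input gate
    c_hat = np.tanh(params["W_c"] @ concat + params["b_c"])    # candidate state
    c_t = f_t * c_prev + i_t * c_hat                           # cell state update
    o_t = sigmoid(params["W_o"] @ concat + params["b_o"])      # output gate
    h_t = o_t * np.tanh(c_t)                                   # hidden state
    return h_t, c_t

def init_params(input_size, hidden_size, rng):
    """Small random weights and zero biases for the four gates (hypothetical init)."""
    params = {}
    for gate in "fico":
        params[f"W_{gate}"] = 0.1 * rng.standard_normal(
            (hidden_size, hidden_size + input_size)
        )
        params[f"b_{gate}"] = np.zeros(hidden_size)
    return params

rng = np.random.default_rng(0)
params = init_params(input_size=72, hidden_size=128, rng=rng)
h = np.zeros(128)
c = np.zeros(128)
for x_t in rng.standard_normal((35, 72)):   # illustrative sequence: 35 steps, 72 features
    h, c = lstm_step(x_t, h, c, params)
```

After the loop, `h` holds the hidden state of the last time step, which is the kind of vector the text says is passed on to the fully connected layers.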

The LSTM module contains four layers, and its input data are the features obtained from the CNN layers. The first LSTM layer contains 128 cells, and the output dimension of each of the other three LSTM layers is also 128. The effective features are extracted by these four LSTM layers.

(3) Fully connected layers:

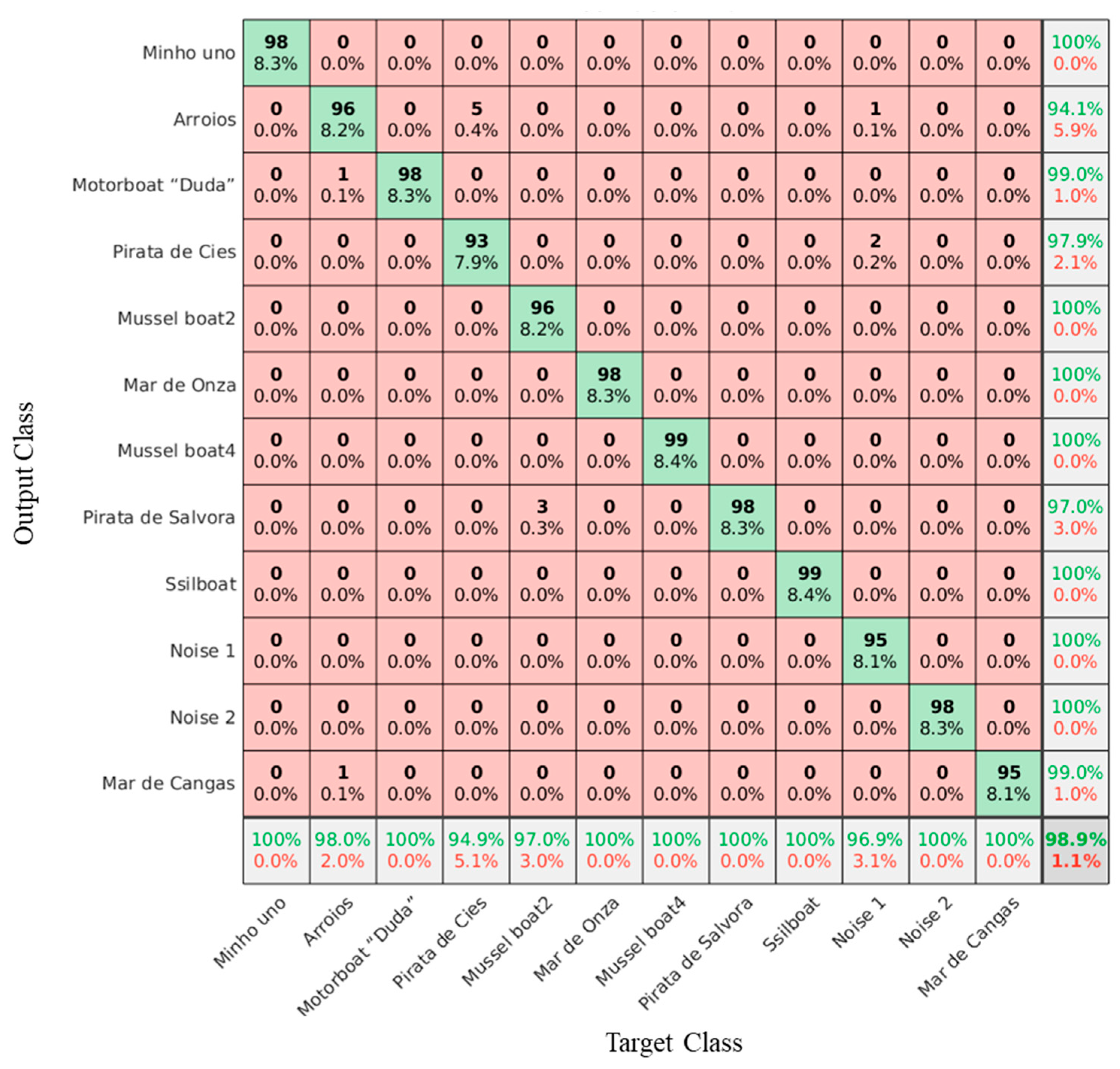

The fully connected layers in our proposed MFAGNet model consist of one hidden layer with 512 fully connected neurons. The output layer has a total of 12 neurons, corresponding to the 12 specific noise classes. In this work, the feature map of the last time step in the final LSTM layer is flattened and fed to the fully connected layer. After the hidden layer and the dropout layer, the extracted features are fed into the Softmax layer to obtain the classification results. The Softmax activation function can be described as follows:

$$P(y = j \mid x; \theta) = \frac{e^{\theta_j^{T} x}}{\sum_{k=1}^{N} e^{\theta_k^{T} x}}$$

where $\theta$ represents the model parameters we obtained, and $N$ denotes the total number of specific noises. The Softmax classifier calculates the probability of each class and normalizes all exponential probabilities. The highest prediction probability is chosen as the recognition result of the network.
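The Softmax step and the choice of the most probable class can be sketched as follows. Subtracting the maximum logit before exponentiating is a standard numerical-stability measure added here; the 12-element logit vector is a made-up example.

```python
import numpy as np

def softmax(logits):
    """Normalize exponentiated logits into class probabilities."""
    logits = np.asarray(logits, dtype=float)
    shifted = logits - logits.max(axis=-1, keepdims=True)   # avoids overflow in exp
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

logits = np.zeros(12)        # one score per noise class (hypothetical values)
logits[7] = 3.0
probs = softmax(logits)
predicted_class = int(np.argmax(probs))   # index of the highest probability
```

The probabilities sum to one, and the class with the largest logit is returned as the recognition result.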

Regarding the convolutional and hidden layers of the proposed network, the RReLU activation function is selected and described as follows:

$$f(x) = \begin{cases} x, & x \ge 0 \\ a x, & x < 0 \end{cases}$$

where $a$ is randomly sampled in the range [0.125, 0.25]. On the one hand, the function takes non-zero values for negative inputs, which allows gradients to be calculated in the negative part of the function. On the other hand, $a$ is set to a random number in this interval during training and to a fixed value during testing. This design effectively reduces the number of dead neurons, which would otherwise stop learning during training.
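The train and test behavior of RReLU can be sketched as below. The sampling range comes from the text; using the midpoint of the range as the fixed test-time slope follows the common convention for RReLU and is an assumption about this model.

```python
import numpy as np

def rrelu(x, lower=0.125, upper=0.25, training=False, rng=None):
    """Randomized leaky ReLU: random negative slope in training, fixed slope otherwise."""
    x = np.asarray(x, dtype=float)
    if training:
        rng = np.random.default_rng() if rng is None else rng
        slope = rng.uniform(lower, upper, size=x.shape)   # one slope per element
    else:
        slope = (lower + upper) / 2.0                     # assumed fixed test-time slope
    return np.where(x >= 0, x, slope * x)

test_out = rrelu([-2.0, 0.0, 3.0])                        # [-0.375, 0.0, 3.0]
train_out = rrelu(np.full(1000, -2.0), training=True,
                  rng=np.random.default_rng(0))           # values between -0.5 and -0.25
```

Positive inputs pass through unchanged in both modes; only the negative branch is randomized.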

Table 2. The architecture of MFAGNet.


| Layer Type | Configuration |
|---|---|
| Convolutional Layers | |
| Convolution 1D + BatchNorm | Filters: 2 × 72, RReLU |
| Convolution 1D + BatchNorm | Filters: 2 × 72, RReLU |
| Convolution 1D + BatchNorm | Filters: 2 × 72, RReLU |
| Convolution 1D + BatchNorm | Filters: 2 × 72, RReLU |
| LSTM Layers | |
| LSTM + Dropout | Filters: 128, Tanh, 0.5 |
| LSTM + Dropout | Filters: 128, Tanh, 0.5 |
| LSTM + Dropout | Filters: 128, Tanh, 0.5 |
| LSTM + Dropout | Filters: 128, Tanh, 0.5 |
| Fully Connected Layers | |
| Fully Connected + Dropout | Filters: 512, RReLU, 0.5 |
| Output Layer | Filters: 12, RReLU |

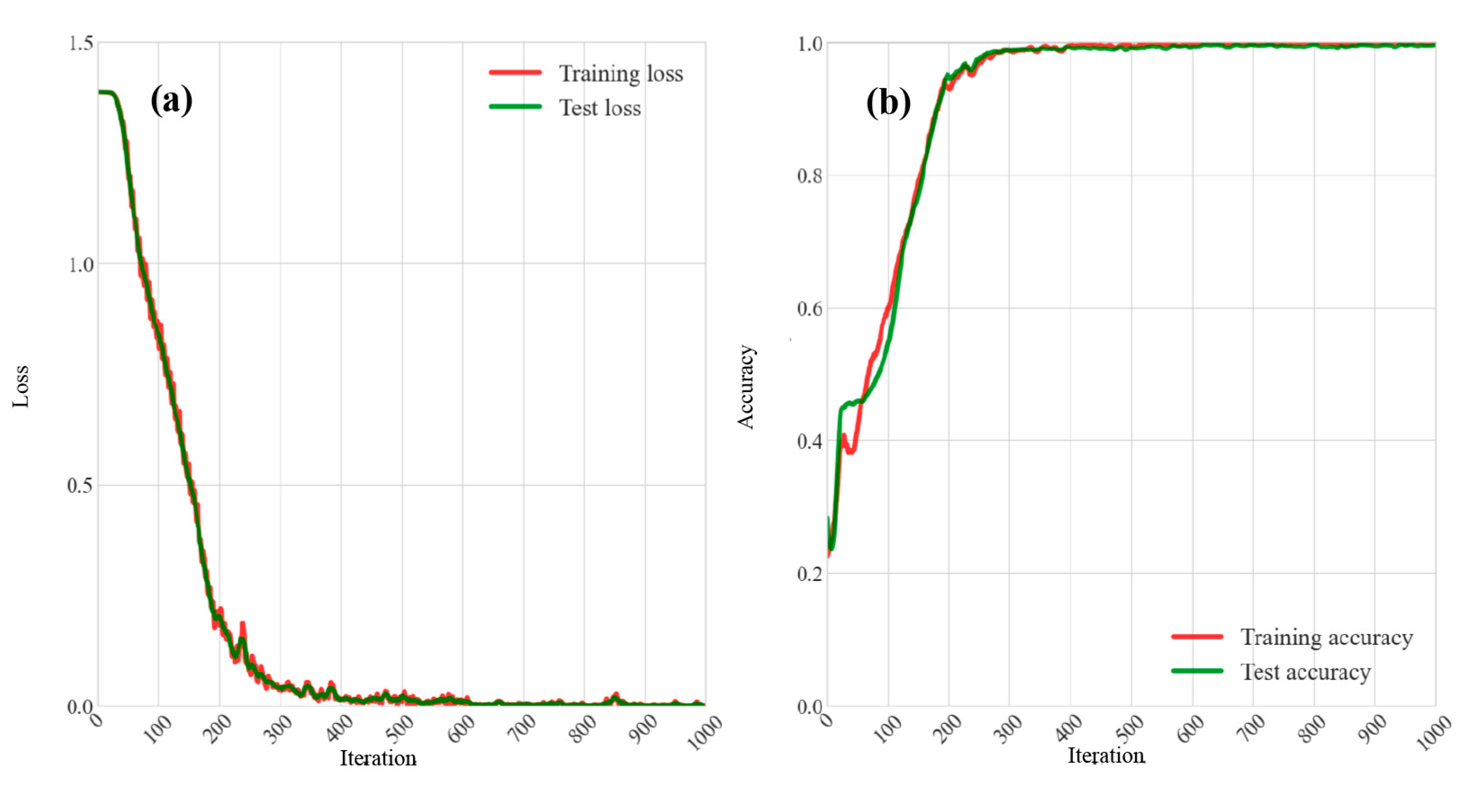

A mini-batch gradient descent approach is employed to train the neural network, with the training set partitioned into multiple groups. The model performs a gradient update at each mini-batch iteration. Softmax cross-entropy is adopted as the loss function, together with the adaptive moment estimation (Adam) optimizer. During training, the main objective is to minimize the loss between the predicted values and the ground truth. The loss function is given as follows:

$$L = -\frac{1}{M}\sum_{i=1}^{M} \log \frac{e^{W_{y_i}^{T} x_i + b_{y_i}}}{\sum_{j=1}^{N} e^{W_j^{T} x_i + b_j}}$$

where $N$ represents the total number of classes and neurons in the output layer. $M$ indicates the batch size. $x_i$ denotes the hidden features of the $i$-th sample. $y_i$ is the ground truth label of $x_i$. $W_j$ is the weight matrix of class $j$ and $b$ represents the bias term. Through multiple rounds of parameter-tuning experiments, the optimal parameter configuration of MFAGNet was selected. The learning rate of the model is set to $1 \times 10^{-4}$, the batch size to 128, and the dropout parameter to 0.5. The model is trained for 1000 epochs on two 3090 Ti GPUs, and the best-performing model based on classification accuracy is selected.
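The mini-batch partitioning and the Softmax cross-entropy loss can be sketched in NumPy as below. The shuffling scheme, the function names, and the toy sizes are assumptions; the optimizer update itself (Adam) is omitted.

```python
import numpy as np

def softmax_cross_entropy(logits, labels):
    """Mean Softmax cross-entropy over a mini-batch.

    logits: (batch, n_classes) output-layer scores
    labels: (batch,) integer ground-truth class indices
    """
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(labels.size), labels].mean())

def iterate_minibatches(n_samples, batch_size, rng):
    """Yield index arrays that partition a shuffled training set into mini-batches."""
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start : start + batch_size]

rng = np.random.default_rng(0)
batch_sizes = [len(idx) for idx in iterate_minibatches(300, 128, rng)]  # [128, 128, 44]

# With identical scores for all 12 classes the loss equals log(12).
uniform_loss = softmax_cross_entropy(np.zeros((128, 12)), rng.integers(0, 12, size=128))
```

An untrained network that outputs equal scores for all 12 classes therefore starts at a loss of about 2.48, which gives a reference point when monitoring training.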