1. Introduction

Despite being a basic guarantee of human life, grain security is at risk owing to global population growth and accelerated climate change in recent years [1]. Crop type information is fundamental for crop yield estimation [2], crop pest monitoring [3], and growth monitoring [4], which are critical for maintaining grain security. However, the extraction and acquisition of crop type information are difficult, largely because the manual methods required are costly in terms of labor and resources [5], often resulting in small sample sizes.

Remote sensing has been widely used in extracting crop-type information due to its wide monitoring range, low cost, and high timeliness [6,7,8]. Vegetation indices, which are formed by combining the visible and near-infrared bands of images, can measure the condition of surface vegetation simply and effectively [9,10,11]. Therefore, most mature application methods use a vegetation index for crop classification [12]. Supported by time series of Moderate Resolution Imaging Spectroradiometer (MODIS) data, Zhang et al. [13] used the fast Fourier transform to smooth normalized difference vegetation index (NDVI) time-series curves, and related parameters such as the curve’s mean, phase, and amplitude were used to extract the spatial distribution of crops in North China. Xiao et al. [14] used the NDVI, the enhanced vegetation index (EVI), and the land surface water index (LSWI) calculated from MODIS multi-temporal images to classify rice, water, and evergreen plants. With the continual iteration of algorithms and the growing availability of remote sensing data, a variety of machine learning methods such as support vector machine (SVM) [15], k-means algorithm [16], maximum likelihood method [17], extreme gradient boosting (XGBoost) [18], and random forest (RF) [12] have been successfully applied to remote sensing crop classification [19]. For example, using the RF method, Markus et al. [20] classified seven crops in Austria from single-temporal Sentinel-2 data. They achieved an overall accuracy of 76%, establishing the foundation for applying Sentinel-2 data in crop extraction. Waldner et al. [21] used time-series images from Landsat 8 and SPOT-4 to classify wheat, corn, and sunflower using artificial neural networks, achieving a highest classification accuracy of 85%. Furthermore, Wen et al. [5] used time-series Landsat data and limited high-quality samples to achieve large-scale corn classification mapping using the RF method. Dynamic time warping (DTW) is another effective method that is widely used in crop classification [22]. Belgiu et al. [23] evaluated how a time-weighted dynamic time warping (TWDTW) method that uses Sentinel-2 time series performs when applied to pixel-based and object-based classifications of various crop types in three different study areas. However, the above classification methods require the manual design of crop features, which relies on expert knowledge and fixed scenes, resulting in insufficient generalization ability [24]. Therefore, these methods are not suitable for large-scale intelligent crop classification with multiple scenes.
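The three vegetation indices named above have simple closed forms, and a minimal sketch of them may help readers unfamiliar with them. The functions below use the commonly cited definitions (including the standard EVI coefficients); the reflectance values are hypothetical and chosen only for illustration, and plain Python is used for clarity rather than an array library.

```python
def _ratio(numerator, denominator):
    """Divide safely, returning 0.0 where the denominator is zero (e.g. no-data pixels)."""
    return numerator / denominator if denominator != 0 else 0.0


def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    return _ratio(nir - red, nir + red)


def evi(nir, red, blue):
    """Enhanced vegetation index with the commonly used coefficients (G=2.5, C1=6, C2=7.5, L=1)."""
    return _ratio(2.5 * (nir - red), nir + 6.0 * red - 7.5 * blue + 1.0)


def lswi(nir, swir):
    """Land surface water index: (NIR - SWIR) / (NIR + SWIR)."""
    return _ratio(nir - swir, nir + swir)


# Hypothetical surface reflectances (scaled 0-1) for one densely vegetated pixel.
pixel = {"blue": 0.04, "red": 0.05, "nir": 0.45, "swir": 0.20}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["red"]),
    "EVI": evi(pixel["nir"], pixel["red"], pixel["blue"]),
    "LSWI": lswi(pixel["nir"], pixel["swir"]),
}
print(indices)
```

Applied per pixel and per acquisition date, these functions yield the index time series that the threshold-based and curve-fitting methods cited above operate on.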

Since the concept of deep learning was proposed in 2006, it has undergone more than ten years of development and has made breakthroughs in many remote sensing applications, such as land cover classification [25], building change detection [26], and complex ground object detection [27]. In the field of crop classification, deep learning has become a mainstream method with large-scale applications [28]. Compared with traditional crop classification methods, deep learning methods can automatically mine deep features from remote sensing data; can make full use of temporal, spatial, and spectral information in images; and have better anti-noise and generalization abilities, which explains their adoption for large-scale crop classification [29,30,31].

Recurrent neural network (RNN) methods such as long short-term memory (LSTM) [32] and gated recurrent units (GRU) [33], which originated from natural language processing, and one-dimensional convolutional networks (Conv1D-CNN) [34] are particularly suitable for sequential data. Thus, they have been applied to crop extraction methods based on time-series images. For example, Zhong et al. [35] used Landsat time series data and land use survey results from Yolo County, California, to test the classification accuracy of LSTM and Conv1D-CNN methods for a variety of summer crops. However, obtaining complete time-series data is difficult because of cloud occlusion and missing data. To address these limitations, some data-filling methods exist. For example, Zhao et al. [36] classified crops from incomplete Sentinel-2 time series data by filling in the missing data and applying a GRU. However, these processes, which require multiple data sources, are complex and unsuitable for large-scale crop extraction. Furthermore, to introduce the spatial information of an image, such as texture and planting structure, to assist crop classification [37], most studies have used a patch of a specific size around the target pixel as the model input. For example, Xie et al. [38] decomposed images into patches of different sizes as inputs to a convolutional neural network (CNN) to compare the effect of patch size on crop classification accuracy. Additionally, Seyd et al. [39] used 11 × 11 pixel patches for classification and designed a two-stream attention CNN to extract the spatial and spectral information of crops simultaneously. These methods generally use fixed-size patches as input, but plot sizes can vary greatly in actual large-scale scenes, lowering the model’s accuracy. Furthermore, crop samples are generally obtained manually from the field, which can result in a small number of samples depending on staffing and available resources. A standard solution to this issue is to use a deep network for feature extraction followed by a machine learning method as the classifier, as demonstrated by Yang et al. [40], who used a combination of CNN and RF for crop classification. However, there are significant differences in training and principles between deep neural networks (DNNs) and machine learning methods, so the direct combination of these methods cannot fully exploit the advantages of deep learning. Constrained by the spatial and spectral resolutions of the sensors, the satellite images generally used for crop classification have low spatial resolution. For example, the highest resolution of Sentinel-2 is 10 m. To improve the boundary accuracy of crop extraction results, Kussul et al. [41] used plot vector data to optimize crop extraction results and proposed a new voting optimization method that could significantly improve the accuracy of the results. However, obtaining high-precision plot vector data and applying them to large-scale crop extraction is challenging. Using the images themselves for optimization may be a feasible alternative.

In summary, large-scale crop extraction still has the following problems:

(1) Due to unavoidable conditions such as cloud cover and missing data, it is difficult to obtain complete time-series images, especially for large-scale crop classification. Moreover, there are few studies on crop extraction from single-temporal remote sensing images [42,43].

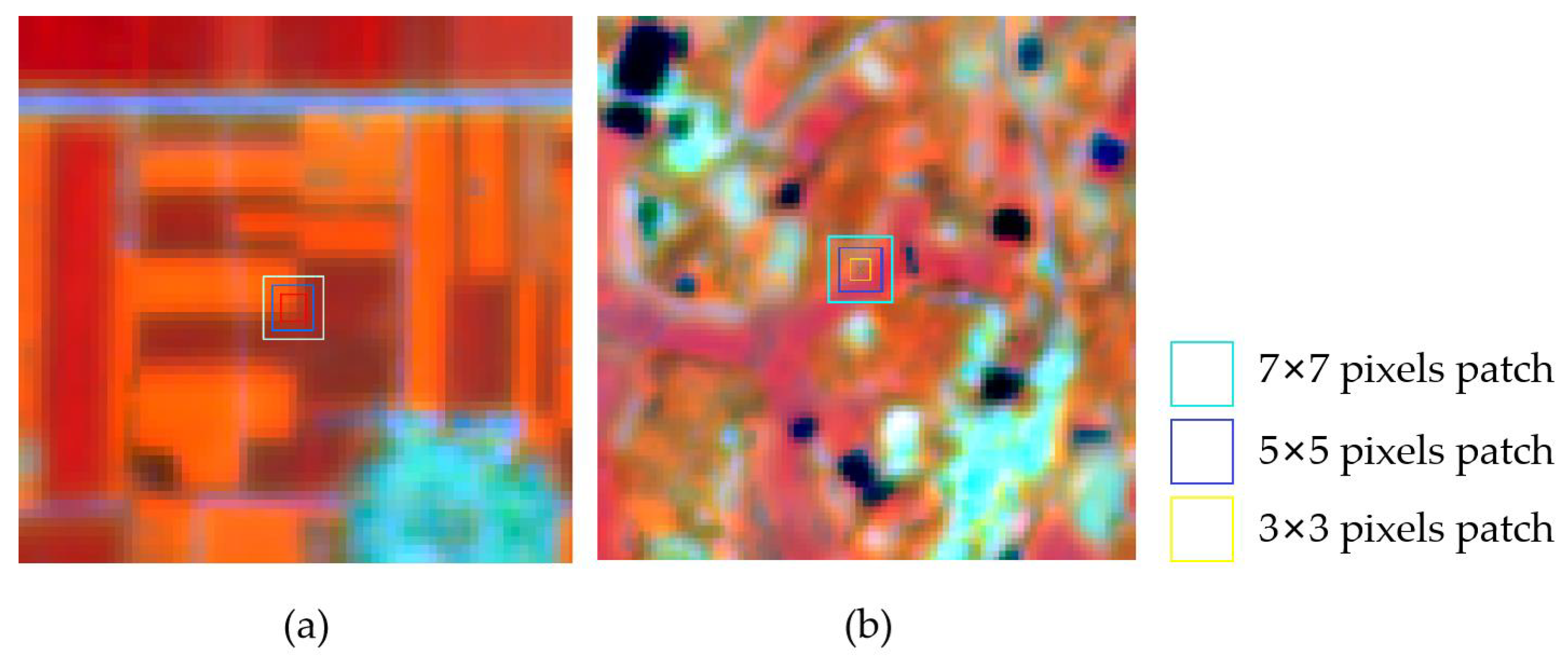

(2) Using patches as model inputs may introduce noise while introducing spatial information. As shown in Figure 1, in mixed planting or terrace scenarios, crop plots are small, long, and narrow. Therefore, in a large patch, the pixels whose category differs from that of the central pixel can exceed half of the total, and this redundant information may lead to classification errors.

(3) Crop samples are usually insufficient, and since deep learning methods with more parameters are prone to overfitting, there is a risk of the model having insufficient generalization ability and low accuracy in large-scale classification.

(4) Limited by the spatial resolution of multispectral images, mixed pixels are a serious problem, resulting in inaccurate crop boundaries.
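Problem (2) can be made concrete with a small sketch that measures, for patches of increasing size, the fraction of pixels whose label differs from the central pixel. The label map is hypothetical (a three-pixel-wide strip of one crop inside another), and the helper names `extract_patch` and `off_class_fraction` are illustrative rather than part of any published code.

```python
def extract_patch(grid, row, col, size):
    """Return the size x size window centered on (row, col).

    Assumes size is odd and the window lies fully inside the grid.
    """
    half = size // 2
    return [line[col - half:col + half + 1] for line in grid[row - half:row + half + 1]]


def off_class_fraction(label_patch):
    """Fraction of patch pixels whose label differs from the central pixel's label."""
    middle = len(label_patch) // 2
    center_label = label_patch[middle][middle]
    flat = [label for line in label_patch for label in line]
    return sum(label != center_label for label in flat) / len(flat)


# Hypothetical 9 x 9 label map: crop 1 occupies a long strip three pixels wide
# (columns 3-5), surrounded by crop 0.
labels = [[1 if 3 <= col <= 5 else 0 for col in range(9)] for _ in range(9)]

# Measure the off-class share around the central pixel for three patch sizes.
fractions = {
    size: off_class_fraction(extract_patch(labels, 4, 4, size))
    for size in (3, 5, 7)
}
for size, fraction in fractions.items():
    print(f"{size} x {size} patch: {fraction:.2f} of pixels differ from the center")
```

In this toy case the 3 × 3 patch contains only the central crop, while in the 7 × 7 patch more than half of the pixels belong to the neighbouring crop, which is the situation described for narrow plots.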

In response to these problems, we propose selective patches TabNet (SPTNet), a deep neural network for large-scale crop classification using single-temporal images. The contributions of this study are as follows.

(1) A selective patch module that can adaptively fuse the features of patches of different sizes is designed to improve the network’s ability to extract small crop plots in complex scenes.

(2) TabNet [44] and multitask learning were introduced to capture the spectral and spatial information of the central pixel to improve the weight of the central pixel during the classification process and enhance the network’s generalization ability, which effectively reduced the negative impact of insufficient sample numbers.

(3) Superpixel segmentation was implemented in the post-processing of classification results to refine the boundaries of crop plots.

(4) High classification accuracy was achieved using the above modules despite insufficient crop phenology information. A large-scale map of three major crops in Henan Province, China, in 2022 was produced to meet the government’s demands on crop yield estimation and agricultural insurance.
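As a rough illustration of how multitask learning can raise the weight of the central pixel, as described in contribution (2), the sketch below sums a main classification loss with an auxiliary loss computed from a head that sees only the central pixel's spectrum. This is one generic formulation, not necessarily the loss used in SPTNet; the function names, the two-head layout, and the `aux_weight` value are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class scores."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def cross_entropy(logits, target):
    """Negative log-probability assigned to the true class index."""
    return -math.log(softmax(logits)[target])


def multitask_loss(patch_logits, pixel_logits, target, aux_weight=0.5):
    """Main loss on the patch-based head plus a weighted auxiliary loss on the
    central-pixel head. aux_weight is a hypothetical hyperparameter."""
    main = cross_entropy(patch_logits, target)
    auxiliary = cross_entropy(pixel_logits, target)
    return main + aux_weight * auxiliary


# Hypothetical scores for three crop classes; the true class is index 0.
patch_logits = [2.0, 0.5, -1.0]   # head that sees the whole patch
pixel_logits = [0.2, 0.1, 0.0]    # head that sees only the central pixel spectrum
loss = multitask_loss(patch_logits, pixel_logits, target=0)
print(f"combined loss: {loss:.4f}")
```

Because the auxiliary term depends only on the central pixel, its gradient pushes the shared layers to keep that pixel's spectral signature discriminative even when the surrounding patch is noisy.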

4. Discussion

4.1. The Split Strategy Selection of SPM

In the split stage of the SK-Conv structure, the feature maps are processed using convolution kernels of different sizes to obtain features with different receptive fields. Our method differs from SK-Conv: in the split stage, we split by patches of different sizes rather than by convolution kernel sizes. Using convolution kernel sizes in the split stage yields multi-scale receptive fields, whereas using patches can balance spatial information and noise. We conducted experiments to verify the advantages and disadvantages of these two methods. To ensure a fair comparison, the other structures and parameter settings of the network were kept the same across methods. The results are presented in Table 8.

The results show that the F1 score using convolutional kernel sizes in the split stage is 0.9480, which is less than that using patch sizes in the split stage. The scale of the input vector is too small to provide a multi-scale receptive field because the input of the model is a patch of 7 × 7 pixels. This eliminates the advantage of using convolutional kernels for splitting. Furthermore, using patches of the same size as the input in each branch of SPM introduces more noise, which may also be responsible for the reduced accuracy. The method of using patch sizes in the split stage introduces prior information, which can better adapt to different sizes of crop plots in complex scenes. Therefore, we used different patch sizes for the split stage in the structure.
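The select-and-fuse idea behind splitting by patch size can be sketched as a softmax-weighted combination of per-branch feature vectors, in the spirit of the select step of SK-Conv. This is a simplified illustration and not the SPM implementation: each branch is reduced to a short feature vector, and the per-channel `branch_weights` stand in for learned parameters (an assumption; SK-Conv itself uses fully connected layers here).

```python
import math


def select_and_fuse(branch_features, branch_weights):
    """Fuse K branch feature vectors (one per patch size) channel by channel.

    branch_features: K lists of C floats, one list per branch.
    branch_weights:  K lists of C floats, hypothetical learned scoring weights.
    """
    channels = len(branch_features[0])
    # Fuse step: element-wise sum of all branches gives a shared descriptor.
    fused = [sum(features[c] for features in branch_features) for c in range(channels)]

    output = []
    for c in range(channels):
        # Select step: score every branch for this channel, then softmax
        # across branches so the attention weights sum to one.
        scores = [weights[c] * fused[c] for weights in branch_weights]
        peak = max(scores)
        exps = [math.exp(score - peak) for score in scores]
        total = sum(exps)
        attention = [value / total for value in exps]
        output.append(sum(a * features[c] for a, features in zip(attention, branch_features)))
    return output


# Hypothetical two-channel features from the 3 x 3, 5 x 5, and 7 x 7 branches.
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

# With all-zero weights the attention is uniform, so the result is the branch mean.
uniform_out = select_and_fuse(features, [[0.0, 0.0]] * 3)

# Large weights on the first branch make the fusion follow the 3 x 3 features.
small_patch_out = select_and_fuse(features, [[10.0, 10.0], [0.0, 0.0], [0.0, 0.0]])
print(uniform_out, small_patch_out)
```

The output is always a convex combination of the branch features, so the network can lean on the small patch for narrow plots and on larger patches where the surroundings are homogeneous.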

4.2. The Contribution of Different Sizes of Patches to Crop Extraction

In this section, we describe the experiments conducted to explore the contribution of different patch sizes to crop extraction. Specifically, in the structure shown in Figure 7a, we removed the branch of one patch size and retained only the other two. To ensure fairness in the experiment, the other parameter settings remained consistent across methods. The quantitative results of the experiments are presented in Table 9. Regardless of which patch is removed, the classification accuracy decreases, which shows that all three patch sizes contribute to improving classification accuracy. The classification accuracy decreased the most when the 5 × 5 pixel patches were removed and the least when the 3 × 3 pixel patches were removed. This shows that the 3 × 3 pixel patches have the smallest contribution and the 5 × 5 pixel patches have the largest contribution in the classification process, possibly because the overall plot size in Henan is closer to 5 × 5 pixels. Of note, the classification accuracy of rice decreased significantly when the 3 × 3 pixel patches were removed, which may be because rice is mostly planted in small plots in hilly areas.

4.3. Advantages and Limitations

This study proposes a network suitable for large-scale crop extraction and achieves satisfactory classification accuracy using only single-temporal Sentinel-2 images. First, the proposed structure can adaptively select and fuse the spatial information of the image according to the size of the plot in the actual scene, thereby improving its ability to extract small features. Second, the proposed structure uses TabNet and a multitask learning strategy to extract the spectral features and improve the stability of the network while modeling the spectral information of the central pixels separately. TabNet, which has a structure similar to a decision tree, can enhance the network’s generalization ability and alleviate the overfitting problem caused by small sample sizes. Using multitask learning strategies can enhance the weight of the spectral features of pixels and further improve classification accuracy. Finally, applying the SLIC algorithm to post-process the extraction results can improve the boundary accuracy of the classification results.

SPTNet obtained higher extraction accuracy than the other methods, regardless of the crop. In the qualitative comparison, SPTNet greatly improved the extraction of small crop plots, and crop edges were also improved. These comparisons demonstrate the advantages of our method.
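One common way to use superpixels for post-processing is to relabel every pixel with the majority class of the superpixel it falls in. The sketch below shows that voting step only; it assumes a segment map has already been produced (for example by SLIC), and both the hand-written `segment_map` and the function name `majority_vote` are hypothetical. Whether SPTNet uses exactly this voting rule is not stated here.

```python
from collections import Counter, defaultdict


def majority_vote(class_map, segment_map):
    """Replace each pixel's class with the most frequent class in its segment.

    class_map and segment_map are equally sized 2D lists; segment_map holds
    superpixel identifiers.
    """
    votes = defaultdict(Counter)
    for class_row, segment_row in zip(class_map, segment_map):
        for crop_class, segment_id in zip(class_row, segment_row):
            votes[segment_id][crop_class] += 1

    # Pick the winning class once per segment.
    winner = {segment_id: counts.most_common(1)[0][0] for segment_id, counts in votes.items()}
    return [[winner[segment_id] for segment_id in segment_row] for segment_row in segment_map]


# Hypothetical per-pixel predictions with one stray pixel of class 2 inside a
# plot of class 1, and a two-segment superpixel map covering the same area.
class_map = [
    [1, 1, 2, 2],
    [1, 2, 2, 2],
]
segment_map = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
smoothed = majority_vote(class_map, segment_map)
print(smoothed)
```

Because superpixel edges follow spectral discontinuities in the image, the relabeled map inherits those edges, which is how this kind of step can sharpen plot boundaries without external vector data.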

Although our method has obvious advantages for Henan crop extraction, there are still some problems. To avoid the problem of missing time-series images, we used single-temporal images as data sources, for which the extraction accuracy of staple crops, such as corn, peanut, and rice, is generally high. However, the classification of certain characteristic economic crops in the absence of crop phenology information remains a limiting factor. The spectral characteristics of some economic crops, such as soybeans and grapeseed, are relatively similar. Thus, using only single-temporal images to distinguish them is a challenge. Furthermore, it is difficult to overcome image resolution limitations, evidenced by the fact that the classification accuracy did not significantly improve after applying the SLIC algorithm, a superpixel segmentation method based on pixel spectral features.

In future research, we will attempt to use time-series remote sensing images for crop classification. To ensure the integrity of the data and make full use of the high-precision, single-temporal extraction method proposed in this paper, we will attempt to extract the key time points of crop phenology and classify crops using the shortest possible time series. We will also attempt to combine Sentinel-2 images with high-resolution data for crop extraction: Sentinel-2 images with high spectral resolution would be used for crop extraction, and images with high spatial resolution would be used for plot boundary extraction. We hope to optimize the boundaries of the crop extraction results through high-spatial-resolution plot extraction results, which can improve the overall accuracy of crop classification. Finally, we will try to add SAR data for crop classification. SAR satellites can image in any weather condition, which can make up for the lack of optical data.