Can the Perception Data of Autonomous Vehicles Be Used to Replace Mobile Mapping Surveys?—A Case Study Surveying Roadside City Trees

Abstract

1. Introduction

- We show that the perception data of an autonomous car can be used to replace designated mobile mapping surveys when the geometry of roadside objects, such as trees, is to be measured.

- As a case study, we measure the stem attributes of roadside trees for a city tree register using perception data of an autonomous car system.

- We compare the obtained accuracy against a conventional mobile laser scanning survey for the same trees.

- We discuss the data processing logic needed in general when post-processing perception data of autonomous cars for mapping and surveying applications.

2. Short Review on Using Perception Data of Autonomous Vehicles for Surveying

3. Material and Methods

3.1. Test Area

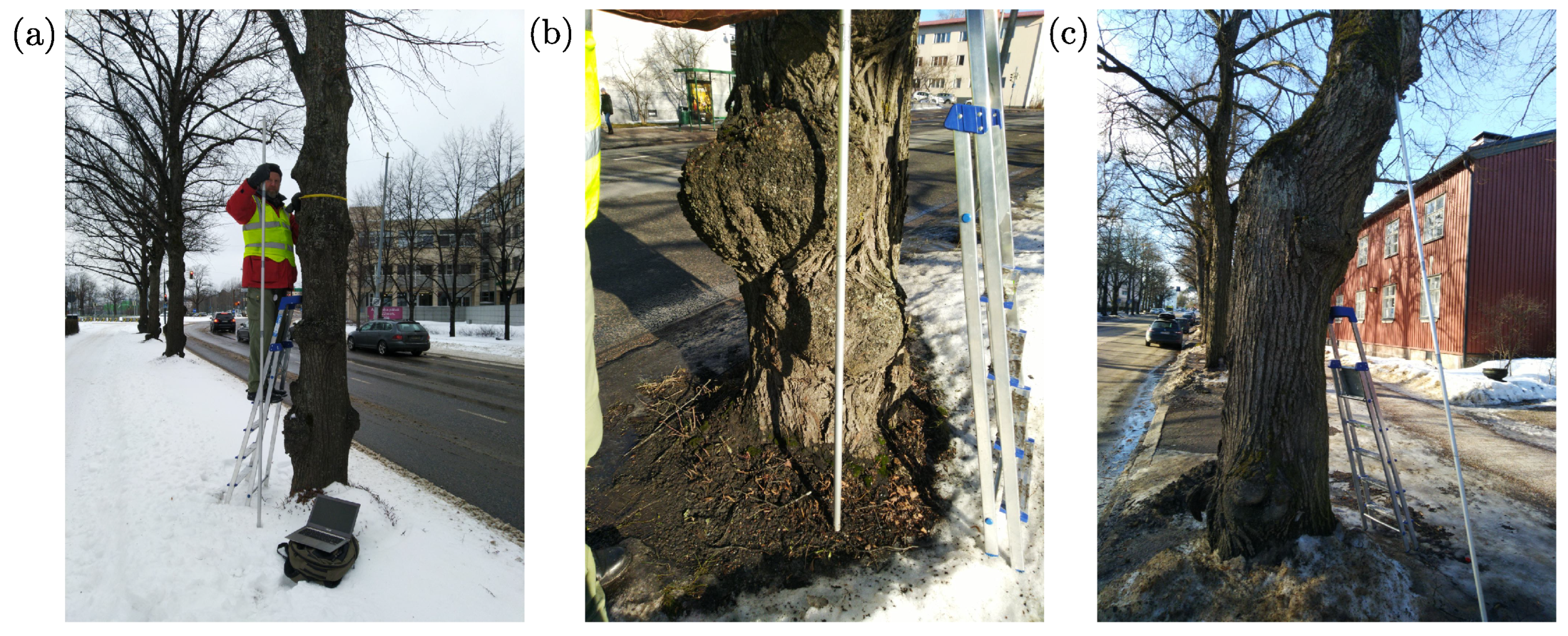

3.2. Data Acquisition

3.2.1. Autonomous Mapping and Driving Research Platform ARVO

3.2.2. Mobile Mapping System ROAMER

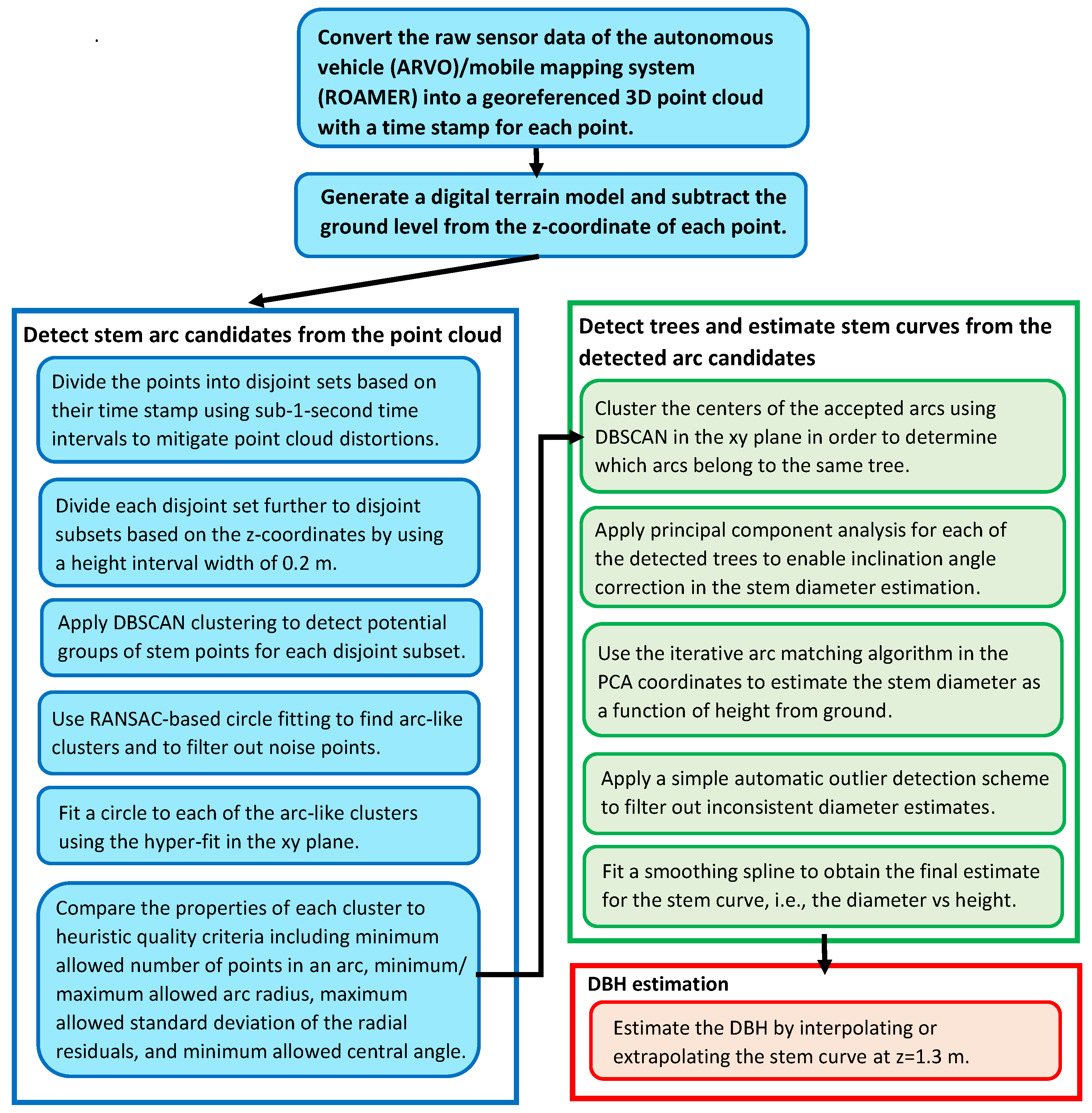

3.3. Data Processing

3.3.1. Post-Processing the Raw Sensor Data of the Autonomous Car System ARVO into a 3D Point Cloud

3.3.2. Post-Processing the Raw Sensor Data of the Mobile Mapping System ROAMER into a 3D Point Cloud

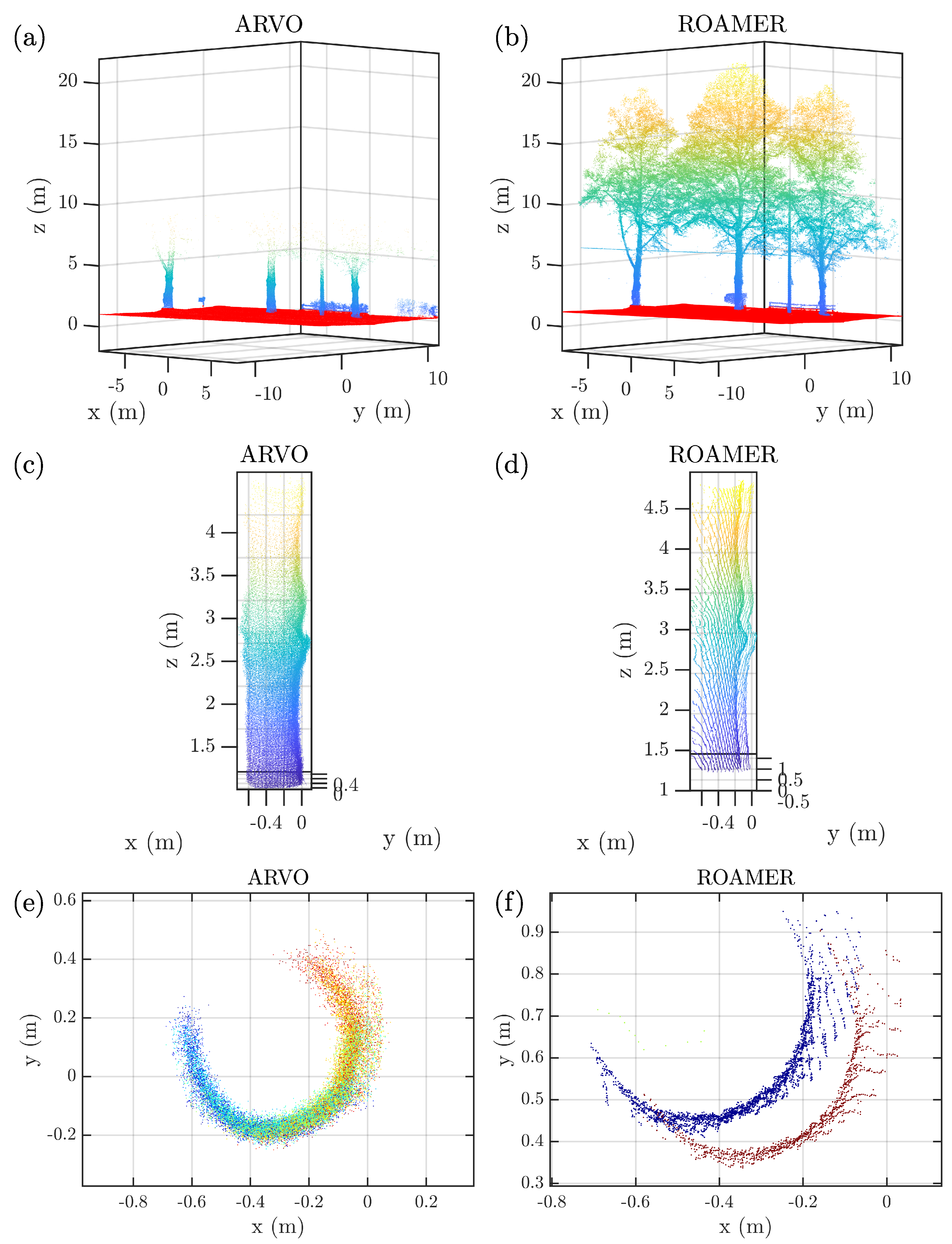

3.3.3. Cropping and Illustrating the Point Cloud Data

3.3.4. Digital Terrain Model Creation

3.3.5. Stem Curve and DBH Estimation

- Find the five nearest diameter estimates based on the height z (including the estimate of the height interval itself), and compute the median (MEDIAN) and the median absolute deviation (MAD) of these diameter values. The diameter estimate $D$ is classified as an outlier if both of the following inequalities are fulfilled:

  - (a) $\lvert D - \mathrm{MEDIAN} \rvert / \mathrm{MAD} > 2.0$,

  - (b) $\lvert D - \mathrm{MEDIAN} \rvert > 4.0$ cm.

- If the point is not included in the largest connected set of diameter estimates, it is regarded as an outlier. A set of diameter estimates is regarded as connected if the vertical distances between all consecutive diameter estimates, sorted by their z-value, satisfy $\Delta z \le 4.0$ m.

- If the stem curve is estimated from a height interval taller than 3.0 m, the DBH is computed by fitting a line to the lowest 3.0 m of the fitted smoothing spline and then extrapolating the line to $z = 1.3$ m.

- If the stem curve is estimated from a height interval shorter than 3.0 m, a square root function (with $h$ denoting the tree height) is fitted to the stem curve and then evaluated at $z = 1.3$ m to estimate the DBH.
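
The outlier rules and the first DBH case above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the thresholds (five neighbours, 2.0, 4.0 cm, 4.0 m) are taken from the parameter table, the breast height of 1.3 m is assumed, and the input is assumed to be samples of an already smoothed stem curve rather than the smoothing spline itself. The square-root case is not sketched because its functional form is not given here.

```python
import numpy as np

def flag_outliers(z, d, k=5, max_ratio=2.0, max_dev_cm=4.0):
    """Flag diameter estimates d (cm) at heights z (m) using the median/MAD rule."""
    z, d = np.asarray(z, float), np.asarray(d, float)
    outlier = np.zeros(len(d), dtype=bool)
    for i in range(len(d)):
        # k nearest estimates in the z-direction, the estimate itself included
        nearest = np.argsort(np.abs(z - z[i]), kind="stable")[:k]
        med = np.median(d[nearest])
        mad = np.median(np.abs(d[nearest] - med))
        dev = abs(d[i] - med)
        # (a) dev / MAD > max_ratio, written without division so MAD = 0 is safe
        # (b) dev > max_dev_cm; both inequalities must hold
        outlier[i] = dev > max_ratio * mad and dev > max_dev_cm
    return outlier

def largest_connected_set(z, max_gap_m=4.0):
    """Mask of the largest set whose consecutive z gaps are all <= max_gap_m."""
    z = np.asarray(z, float)
    order = np.argsort(z, kind="stable")
    breaks = np.flatnonzero(np.diff(z[order]) > max_gap_m)
    groups = np.split(order, breaks + 1)
    mask = np.zeros(len(z), dtype=bool)
    mask[max(groups, key=len)] = True
    return mask

def dbh_from_line(z, d, span_m=3.0, breast_height_m=1.3):
    """Fit a line to the lowest span_m of the stem curve and evaluate it at breast height."""
    z, d = np.asarray(z, float), np.asarray(d, float)
    low = z <= z.min() + span_m
    slope, intercept = np.polyfit(z[low], d[low], 1)
    return slope * breast_height_m + intercept

# Synthetic example: a linearly tapering stem with one corrupted estimate
z = 1.0 + 0.2 * np.arange(10)        # heights of ten diameter estimates (m)
d = 40.0 - 2.0 * z                   # diameters (cm)
d[4] = 50.0                          # corrupted estimate
keep = ~flag_outliers(z, d) & largest_connected_set(z)
dbh = dbh_from_line(z[keep], d[keep])
print(round(dbh, 1))                 # 37.4
```

Writing inequality (a) as `dev > max_ratio * mad` avoids a division by zero when the neighbouring estimates are identical.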

3.4. Statistical Analysis

4. Results

4.1. Completeness and Correctness Related to Stem Detection

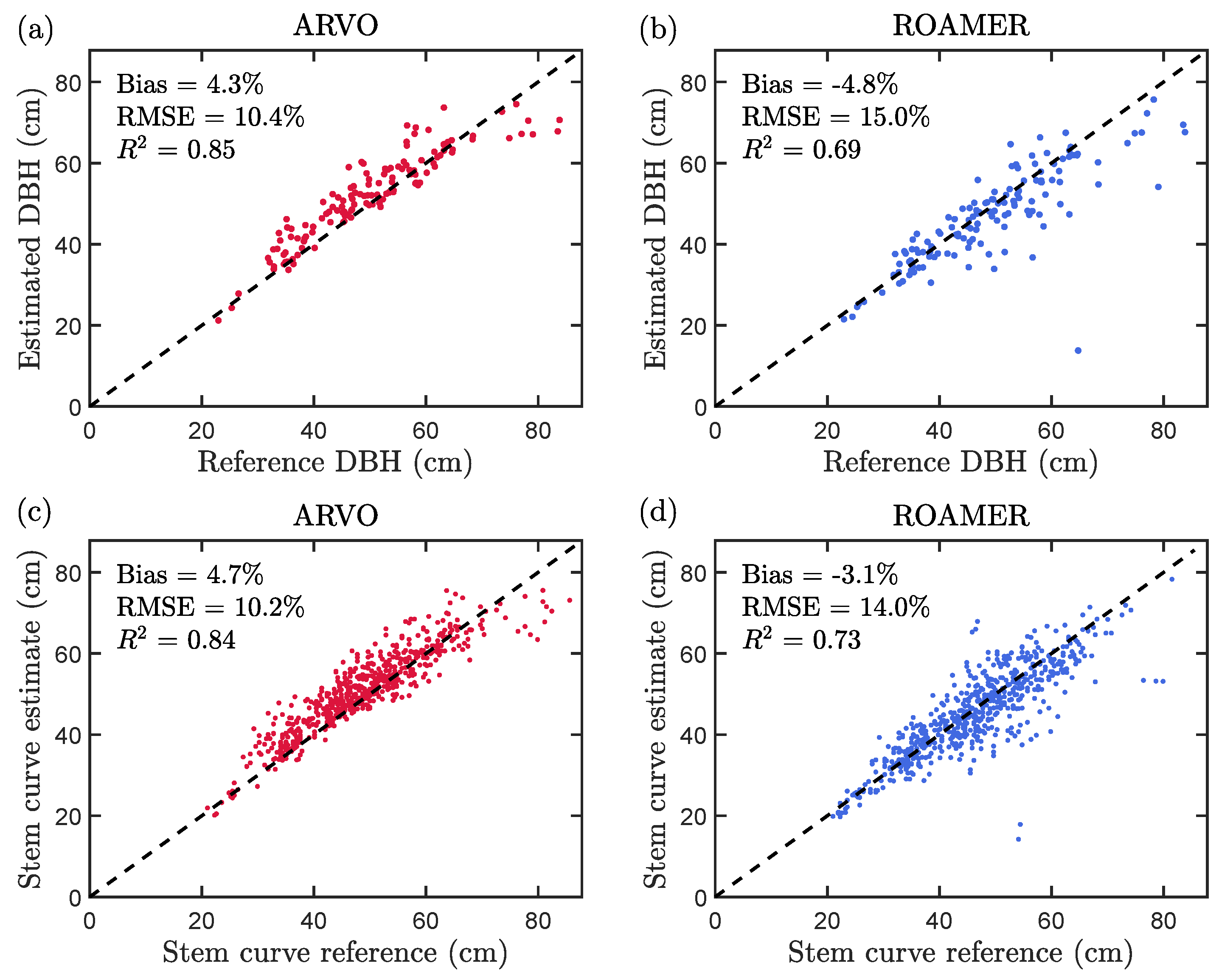

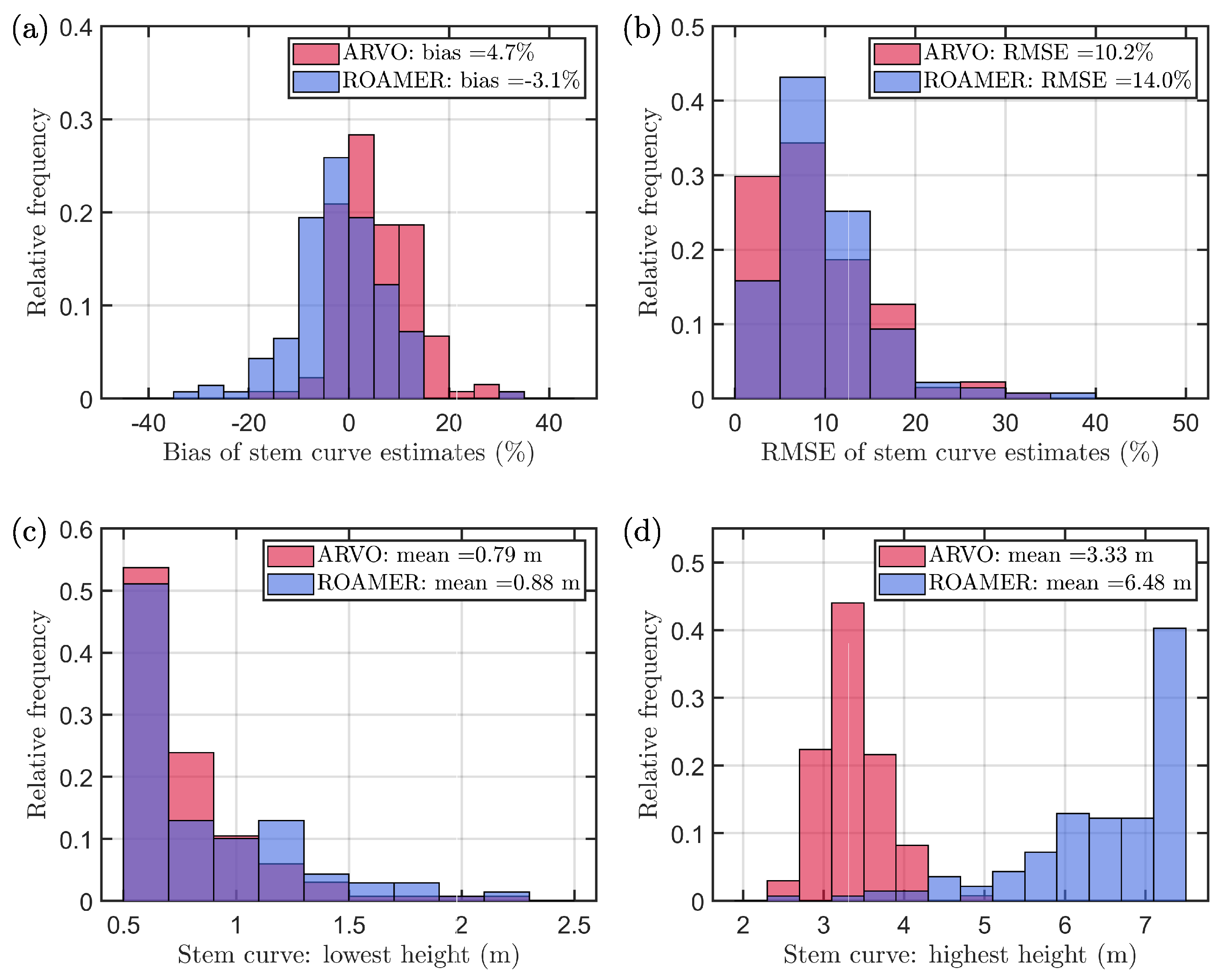

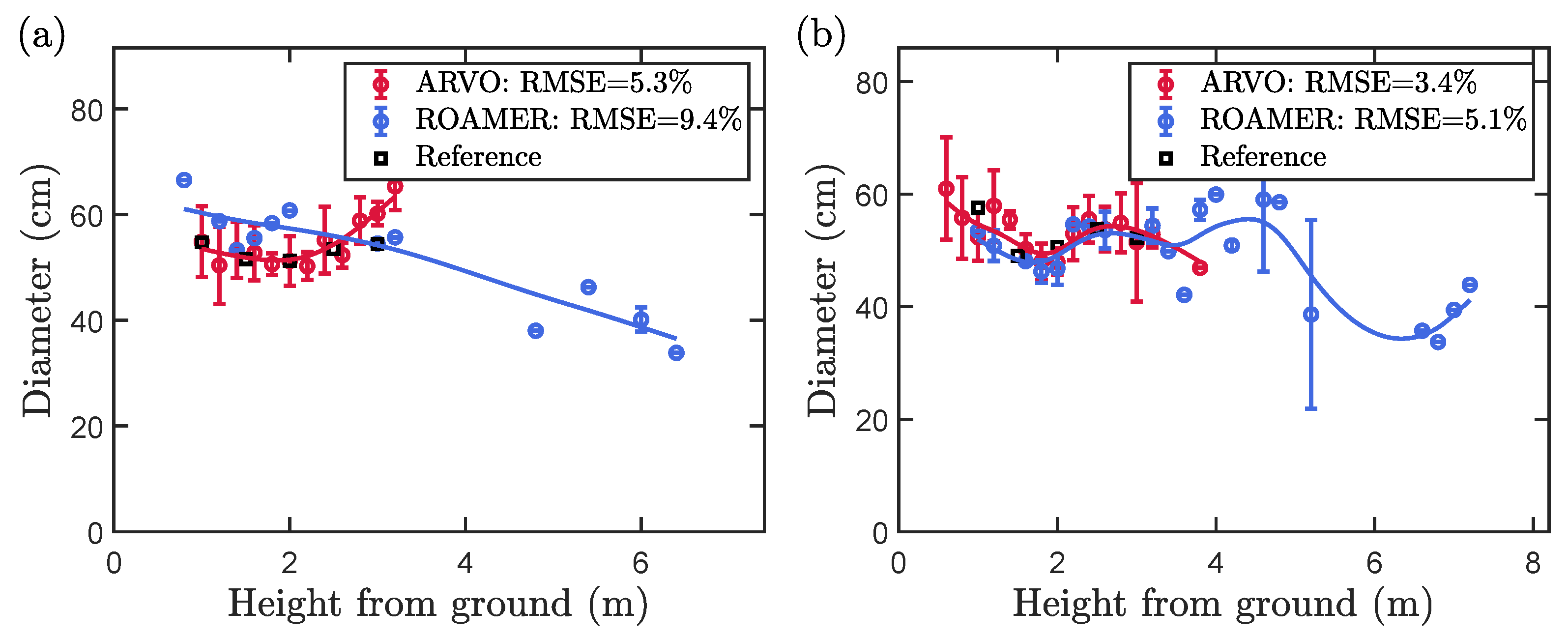

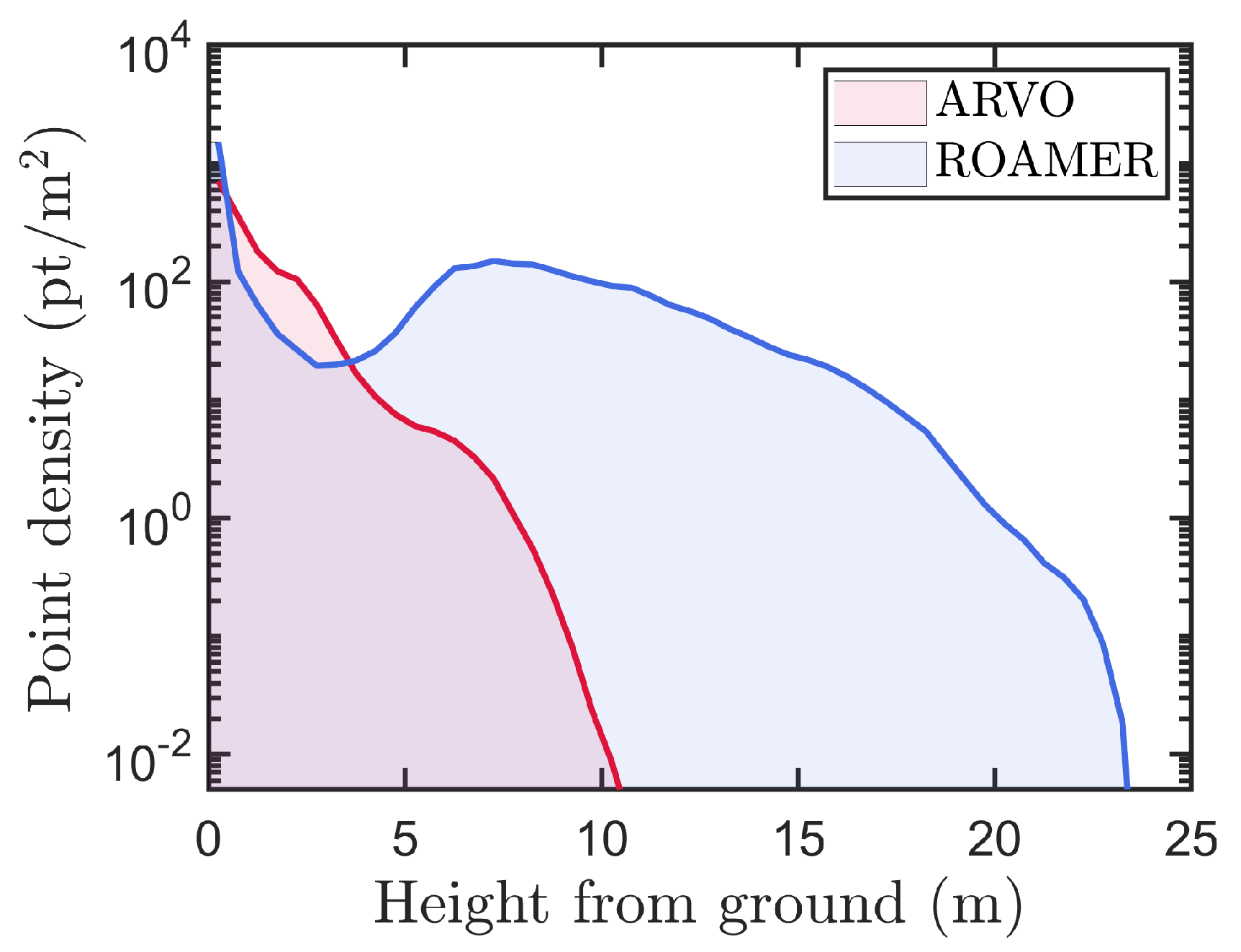

4.2. Accuracy Related to the Measured Stem Attributes

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Number of Trees | Road Length (m) | DBH Mean (cm) | DBH Std. (cm) | DBH Minimum (cm) | DBH Maximum (cm) | Stem Curve Mean (cm) | Stem Curve Minimum Height (m) | Stem Curve Maximum Height (m) |
|---|---|---|---|---|---|---|---|---|
| 139 | 1310 | 49.3 | 12.5 | 23.0 | 83.8 | 49.2 | 1.0 | 2.9 |

| Parameter | ARVO | ROAMER |
|---|---|---|
| Arc detection | | |
| Width of height interval in the z-direction (m) | 0.2 | 0.2 |
| Duration of time interval (s) | 0.2 | 0.1 |
| Neighborhood radius for DBSCAN (cm) | 7.5 | 7.5 |
| Point number threshold for DBSCAN | 4 | 4 |
| Outlier threshold in the radial direction for RANSAC (cm) | 3.5 | 3.0 |
| Minimum ratio of non-outlying points for RANSAC | 0.75 | 0.75 |
| Minimum number of points in arc | 15 | 15 |
| Maximum standard deviation of radial residuals in the arc (cm) | 1.75 | 1.75 |
| Minimum diameter of the arc (cm) | 10.0 | 10.0 |
| Maximum diameter of the arc (cm) | 80.0 | 80.0 |
| Minimum central angle of the arc (rad) | | |
| Clustering arcs into trees | | |
| Neighborhood radius for DBSCAN (cm) | 50 | 50 |
| Minimum number of arcs for DBSCAN | 3 | 3 |
| Minimum z difference between highest and lowest arc (m) | 1.0 | 1.0 |
| Detection of outlying diameter estimates | | |
| Number of nearest diameter estimates in the z-direction to use for comparison | 5 | 5 |
| Maximum of $\lvert D - \mathrm{MEDIAN} \rvert / \mathrm{MAD}$ | 2.0 | 2.0 |
| Maximum of $\lvert D - \mathrm{MEDIAN} \rvert$ (cm) | 4.0 | 4.0 |
| Largest allowed z difference between the nearest arcs (m) | 4.0 | 4.0 |
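
To make the arc-detection thresholds concrete, the sketch below fits a circle to the points of one candidate arc and applies the point-count, residual and diameter limits from the ARVO column. It is only an illustration under stated assumptions: the function names are hypothetical, a plain algebraic least-squares (Kåsa) circle fit stands in for whatever fitting method the authors used, and the DBSCAN clustering, RANSAC outlier rejection and central-angle check are left out.

```python
import numpy as np

# Arc acceptance thresholds copied from the ARVO column of the parameter table
MIN_POINTS = 15
MAX_RESIDUAL_STD_CM = 1.75
MIN_DIAMETER_CM, MAX_DIAMETER_CM = 10.0, 80.0

def fit_circle(xy):
    """Algebraic least-squares circle fit; returns centre (cx, cy) and radius."""
    xy = np.asarray(xy, float)
    # x^2 + y^2 = 2*cx*x + 2*cy*y + c, with c = r^2 - cx^2 - cy^2
    A = np.column_stack([2.0 * xy, np.ones(len(xy))])
    b = (xy ** 2).sum(axis=1)
    cx, cy, c = np.linalg.lstsq(A, b, rcond=None)[0]
    return cx, cy, np.sqrt(c + cx ** 2 + cy ** 2)

def accept_arc(xy_cm):
    """Check one candidate arc (x, y in cm) against the table thresholds."""
    xy = np.asarray(xy_cm, float)
    if len(xy) < MIN_POINTS:
        return False
    cx, cy, r = fit_circle(xy)
    residuals = np.hypot(xy[:, 0] - cx, xy[:, 1] - cy) - r
    return bool(residuals.std() <= MAX_RESIDUAL_STD_CM
                and MIN_DIAMETER_CM <= 2.0 * r <= MAX_DIAMETER_CM)

# Synthetic arc: 20 points on a stem of 40 cm diameter seen over 120 degrees
angles = np.linspace(0.0, 2.0 * np.pi / 3.0, 20)
arc = np.column_stack([100.0 + 20.0 * np.cos(angles), 50.0 + 20.0 * np.sin(angles)])
thin = np.column_stack([3.0 * np.cos(angles), 3.0 * np.sin(angles)])  # 6 cm diameter

print(accept_arc(arc), accept_arc(arc[:10]), accept_arc(thin))
```

In this example the full arc passes, the ten-point subset fails the point-count limit, and the 6 cm circle fails the minimum-diameter limit.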

| System | Completeness | Correctness | DBH Bias | DBH RMSE | Stem Curve Bias | Stem Curve RMSE |
|---|---|---|---|---|---|---|
| ARVO | 96.4% | 87.6% | 2.1 cm (4.3%) | 5.2 cm (10.4%) | 2.3 cm (4.7%) | 4.9 cm (10.2%) |
| ROAMER | 100.0% | 84.2% | −2.3 cm (−4.8%) | 7.4 cm (15.0%) | −1.5 cm (−3.1%) | 6.6 cm (14.0%) |
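
For readers who want to reproduce this kind of summary, the sketch below computes the four quantities in the table using their conventional definitions. These definitions are assumptions, not taken from the text: bias is taken as the mean of estimate minus reference, relative values are normalised by the mean reference value (which is at least consistent with the 2.1 cm (4.3%) DBH bias and the 49.3 cm mean DBH reported above), completeness is the share of reference trees that were detected, and correctness is the share of detections that match a reference tree. All function names and the example numbers are hypothetical.

```python
import numpy as np

def bias_and_rmse(estimated, reference):
    """Absolute and relative (%) bias and RMSE of estimates against reference values."""
    est, ref = np.asarray(estimated, float), np.asarray(reference, float)
    err = est - ref                      # assumed sign convention: estimate minus reference
    bias = err.mean()
    rmse = np.sqrt((err ** 2).mean())
    scale = 100.0 / ref.mean()           # relative values normalised by the mean reference
    return {"bias": bias, "rmse": rmse,
            "bias_pct": bias * scale, "rmse_pct": rmse * scale}

def completeness(n_matched, n_reference):
    """Percentage of reference trees that were detected."""
    return 100.0 * n_matched / n_reference

def correctness(n_matched, n_detected):
    """Percentage of detections that correspond to a reference tree."""
    return 100.0 * n_matched / n_detected

# Illustrative numbers only, not data from the study
stats = bias_and_rmse([42.0, 49.0, 63.0], [40.0, 50.0, 60.0])
print(stats)
print(completeness(n_matched=9, n_reference=10), correctness(n_matched=9, n_detected=12))
```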

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hyyppä, E.; Manninen, P.; Maanpää, J.; Taher, J.; Litkey, P.; Hyyti, H.; Kukko, A.; Kaartinen, H.; Ahokas, E.; Yu, X.; et al. Can the Perception Data of Autonomous Vehicles Be Used to Replace Mobile Mapping Surveys?—A Case Study Surveying Roadside City Trees. Remote Sens. 2023, 15, 1790. https://doi.org/10.3390/rs15071790

Hyyppä, E., Manninen, P., Maanpää, J., Taher, J., Litkey, P., Hyyti, H., Kukko, A., Kaartinen, H., Ahokas, E., Yu, X., Muhojoki, J., Lehtomäki, M., Virtanen, J.-P., & Hyyppä, J. (2023). Can the Perception Data of Autonomous Vehicles Be Used to Replace Mobile Mapping Surveys?—A Case Study Surveying Roadside City Trees. Remote Sensing, 15(7), 1790. https://doi.org/10.3390/rs15071790