A Machine Learning Approach to Derive Aerosol Properties from All-Sky Camera Imagery

Abstract

1. Introduction

2. Instruments, Data and Methods

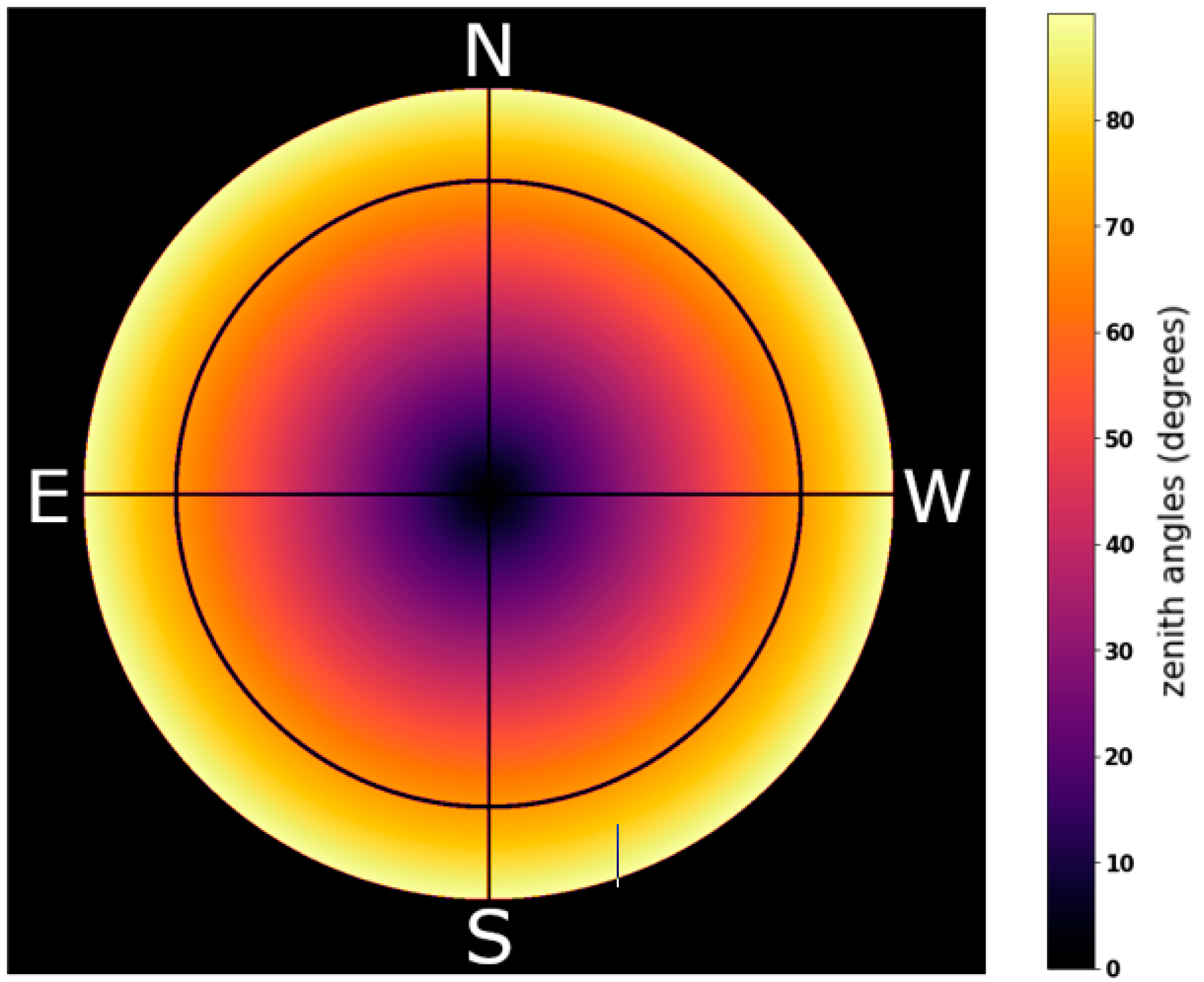

2.1. Signal Preprocessing

- (A) Crop the images:

- (B)

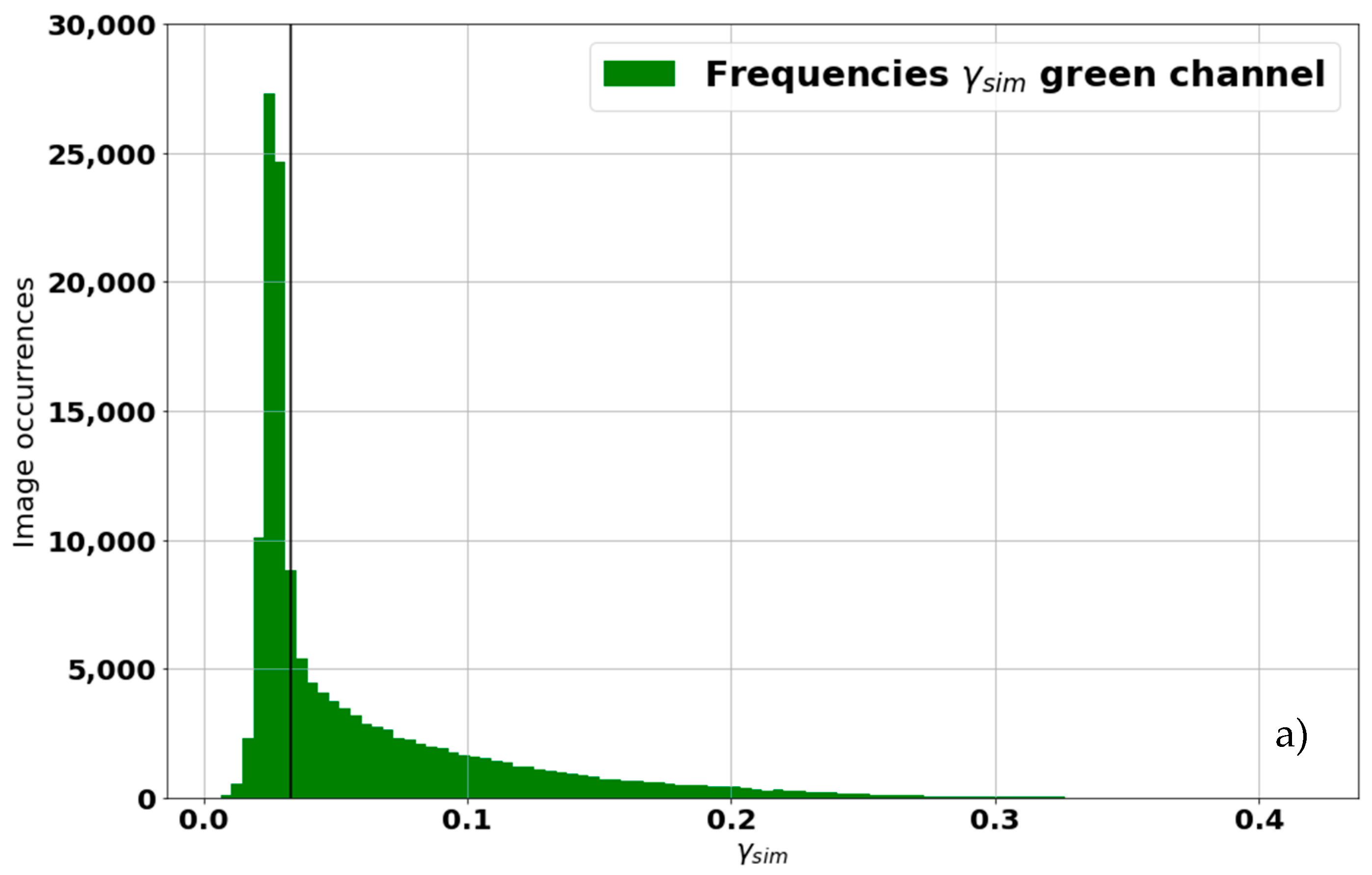

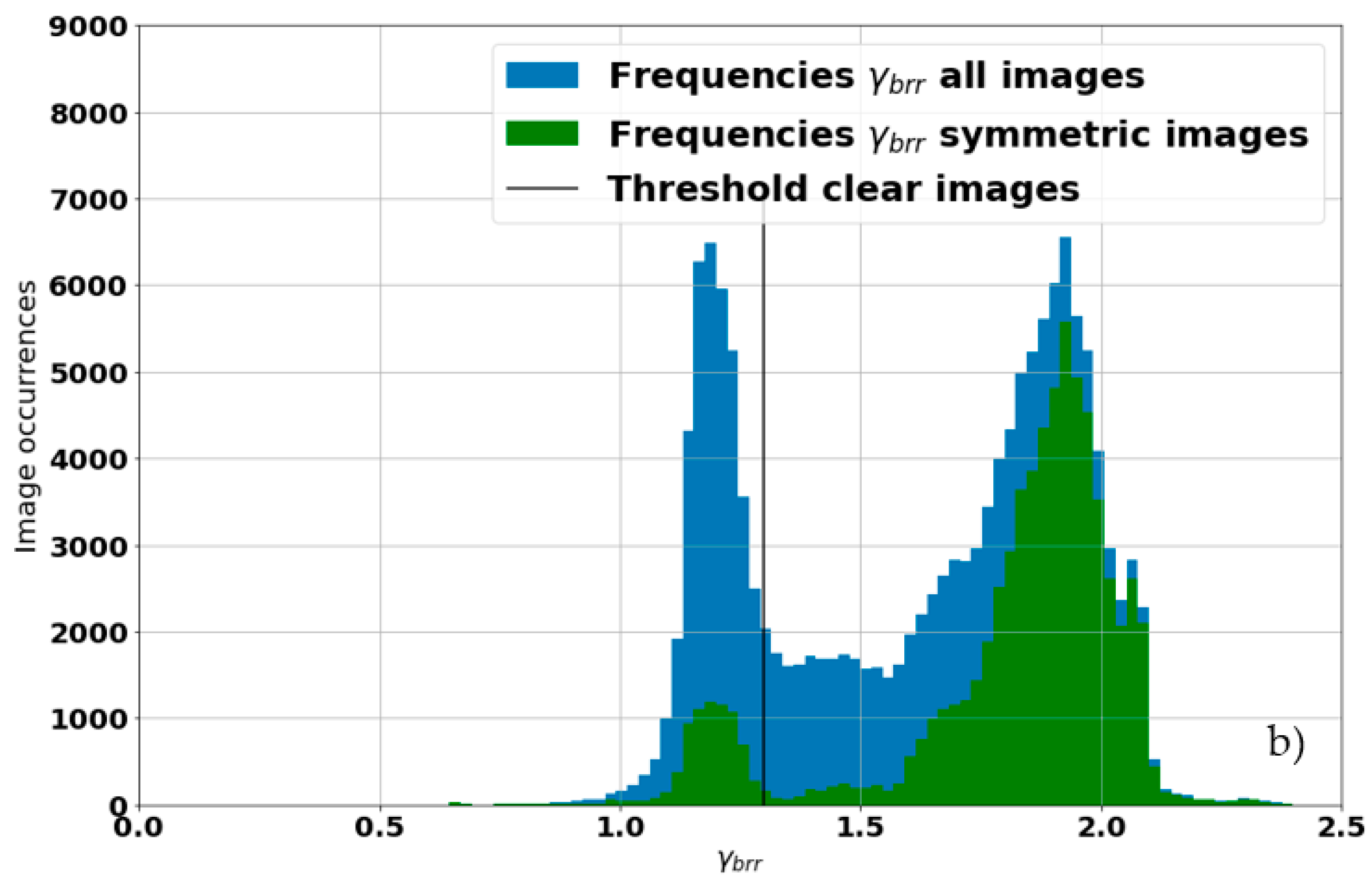

- Partial cloud screening:

- (C)

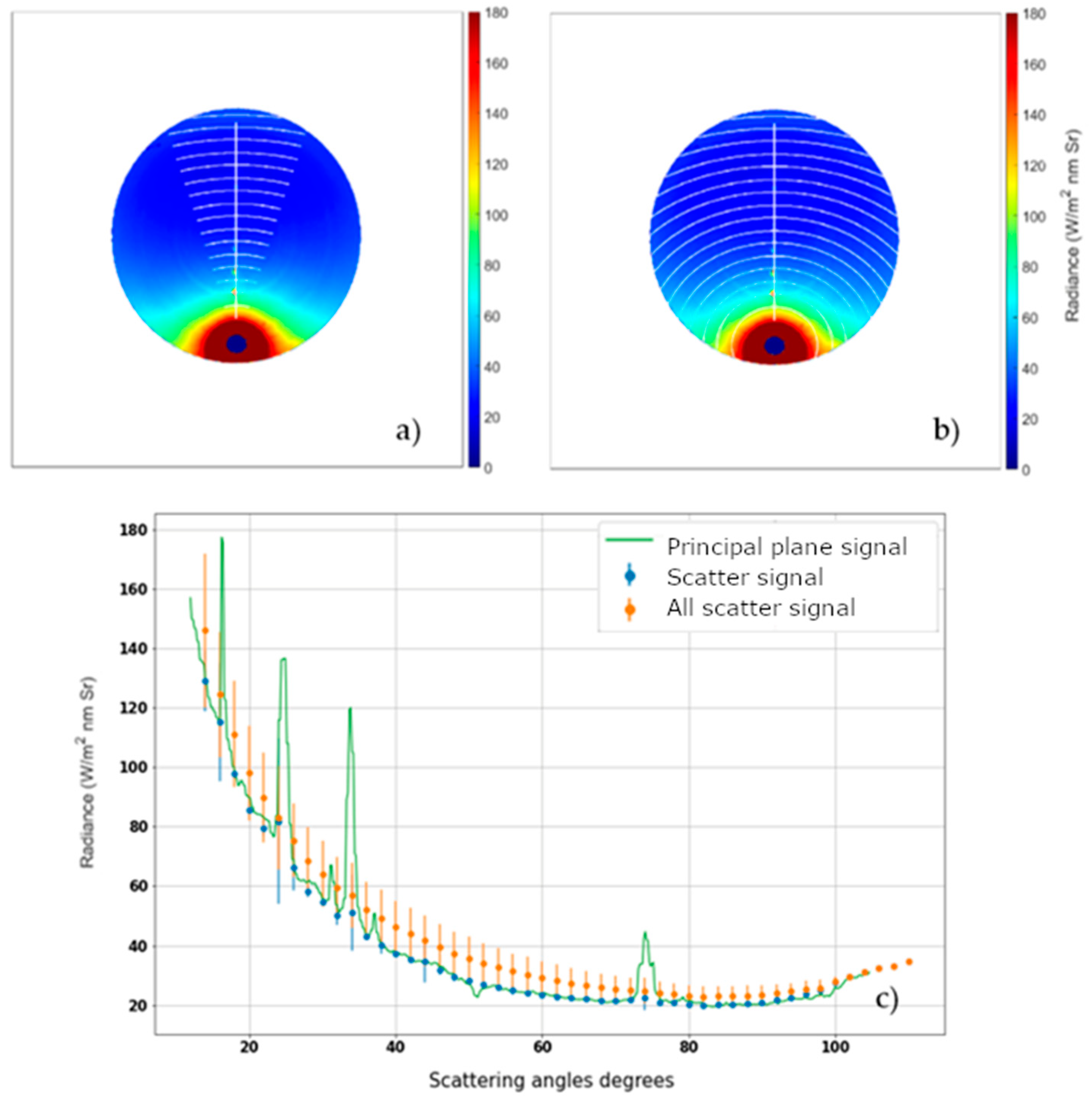

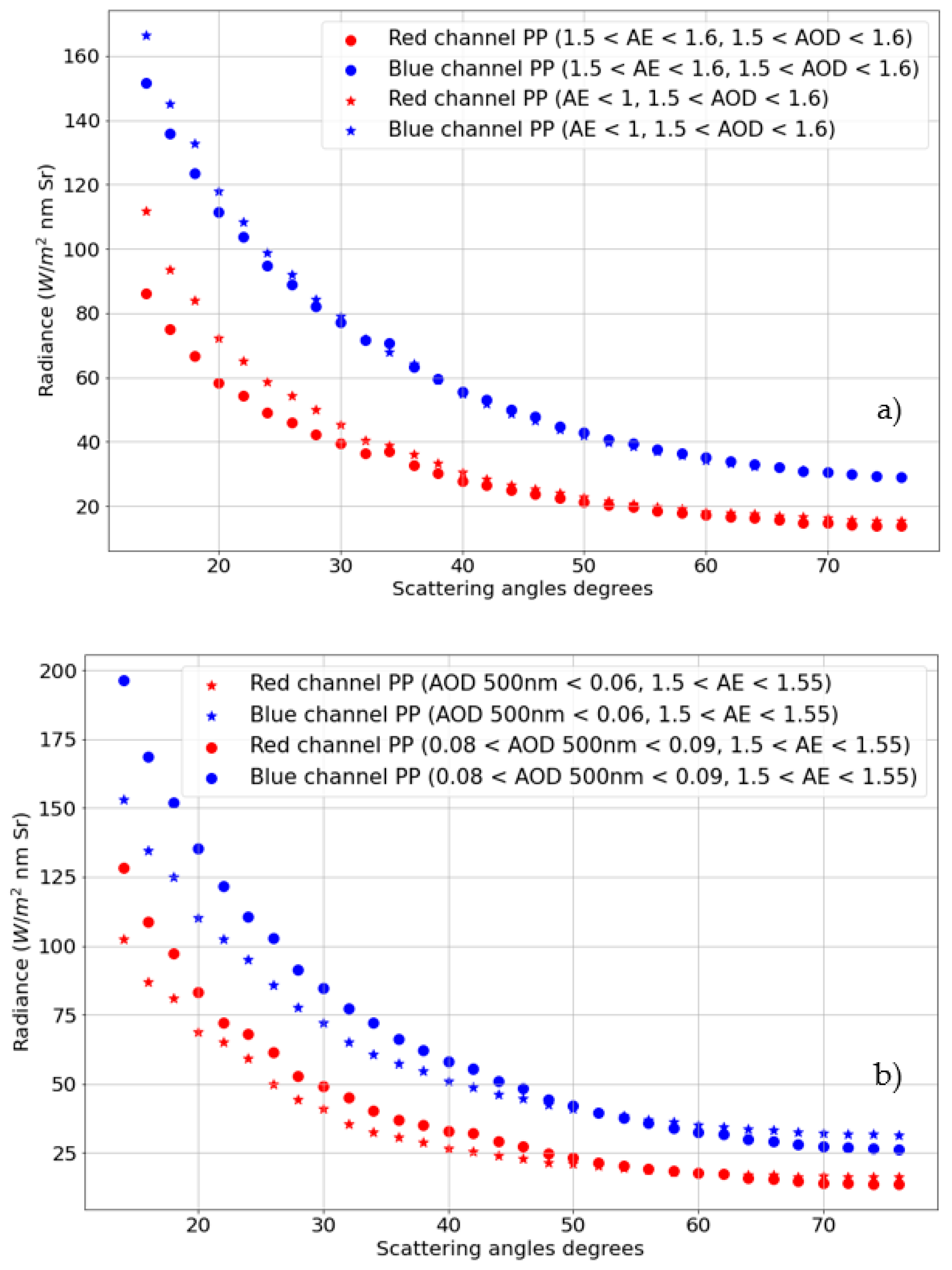

- Generation of input signals:

2.2. Machine Learning Model: Gaussian Process Regression

2.3. Approaches to Prediction

3. Results

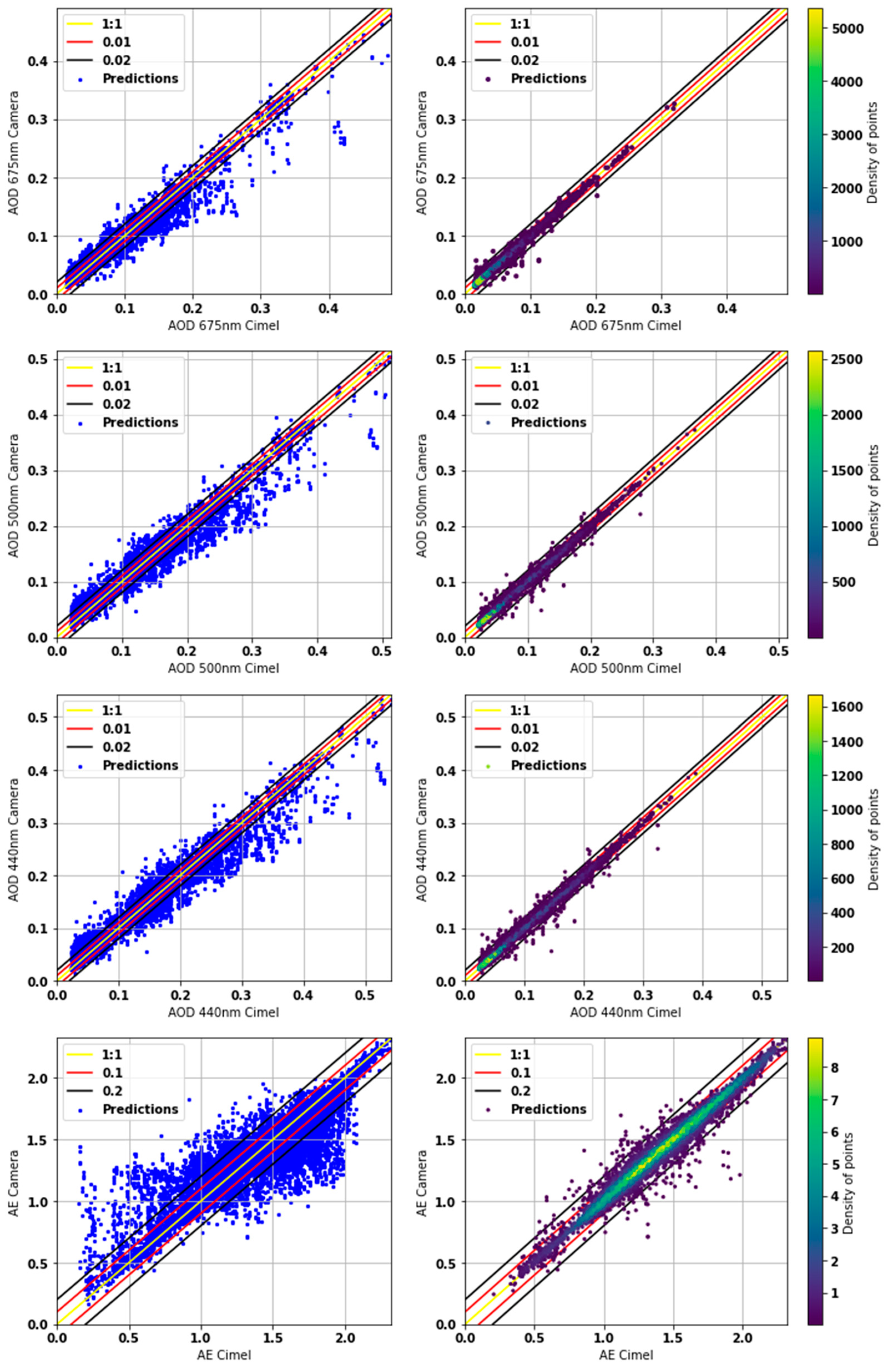

3.1. The Raw Prediction Performances

3.2. Data Quality Control

3.3. Comparison with Other Machine Learning Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Methods | Outputs | Nu * (%) (QA) | MAE (QA) | RMSE (QA) | a (QA) ± δ | b (QA) ± δ | R² (QA) | PERC QA (%) |
|---|---|---|---|---|---|---|---|---|
| RGBtoAOD | AOD675 | 81–93 (95–99) | 0.006 (0.004) | 0.012 (0.005) | 0.904 (0.992) ± 0.002 | 0.0066 (0.0005) ± 0.0001 | 0.96 (0.99) | 70 |
| | AOD500 | 73–85 (87–96) | 0.010 (0.005) | 0.019 (0.01) | 0.883 (0.989) ± 0.002 | 0.0118 (0.0011) ± 0.0002 | 0.94 (0.99) | 75 |
| | AOD440 | 60–75 (73–87) | 0.016 (0.004) | 0.030 (0.027) | 0.851 (0.951) ± 0.003 | 0.024 (0.006) ± 0.0004 | 0.88 (0.97) | 74 |
| REDtoALL | AOD675 | 81–93 (95–99) | 0.006 (0.004) | 0.012 (0.005) | 0.904 (0.992) ± 0.002 | 0.0066 (0.0005) ± 0.0001 | 0.96 (0.99) | 70 |
| | AOD500 | 74–88 (91–98) | 0.008 (0.004) | 0.015 (0.008) | 0.913 (0.991) ± 0.002 | 0.0095 (0.0009) ± 0.0002 | 0.96 (0.99) | 68 |
| | AOD440 | 70–85 (88–97) | 0.010 (0.003) | 0.017 (0.01) | 0.914 (0.988) ± 0.002 | 0.0111 (0.0012) ± 0.0002 | 0.96 (0.99) | 67 |
| | AE | 64–81 (87–96) | 0.12 (0.05) | 0.18 (0.09) | 0.746 (0.968) ± 0.003 | 0.342 (0.041) ± 0.004 | 0.78 (0.97) | 52 |
| BRRtoALL | AOD675 | 77–87 (81–90) | 0.008 (0.004) | 0.014 (0.012) | 1.009 (1.012) ± 0.002 | 0.0044 (0.0018) ± 0.0002 | 0.95 (0.97) | 68 |
| | AOD500 | 68–81 (73–84) | 0.012 (0.005) | 0.022 (0.022) | 0.977 (0.993) ± 0.002 | 0.0099 (0.0068) ± 0.0003 | 0.92 (0.93) | 68 |
| | AOD440 | 63–78 (68–82) | 0.015 (0.005) | 0.028 (0.028) | 0.962 (0.983) ± 0.003 | 0.0136 (0.0097) ± 0.0004 | 0.91 (0.92) | 68 |
| | AE | 70–85 (86–97) | 0.10 (0.05) | 0.16 (0.08) | 0.874 (0.981) ± 0.004 | 0.178 (0.026) ± 0.003 | 0.83 (0.98) | 58 |
| REDtoALL2 | AOD675 | 81–93 (95–99) | 0.006 (0.004) | 0.012 (0.005) | 0.904 (0.992) ± 0.002 | 0.0066 (0.0005) ± 0.0001 | 0.96 (0.99) | 70 |
| | AOD500 | 74–88 (91–98) | 0.008 (0.004) | 0.015 (0.008) | 0.913 (0.991) ± 0.002 | 0.0095 (0.0009) ± 0.0002 | 0.96 (0.99) | 68 |
| | AOD440 | 70–85 (88–97) | 0.010 (0.003) | 0.017 (0.01) | 0.914 (0.988) ± 0.002 | 0.0111 (0.0012) ± 0.0002 | 0.96 (0.99) | 67 |
| | AE | 64–81 (87–96) | 0.12 (0.05) | 0.18 (0.09) | 0.746 (0.968) ± 0.003 | 0.342 (0.041) ± 0.004 | 0.78 (0.97) | 52 |

| Models | Outputs | Nu * (%) (QA) | MAE (QA) | RMSE (QA) | a (QA) ± δ | b (QA) ± δ | R² (QA) | PERC QA (%) |
|---|---|---|---|---|---|---|---|---|
| GP (Red to all) | AOD675 | 81–93 (95–99) | 0.006 (0.004) | 0.012 (0.005) | 0.904 (0.992) ± 0.002 | 0.0066 (0.0005) ± 0.0001 | 0.96 (0.99) | 70 |
| | AOD500 | 74–88 (91–98) | 0.008 (0.004) | 0.015 (0.008) | 0.913 (0.991) ± 0.002 | 0.0095 (0.0009) ± 0.0002 | 0.96 (0.99) | 68 |
| | AOD440 | 70–85 (88–97) | 0.010 (0.003) | 0.017 (0.01) | 0.914 (0.988) ± 0.002 | 0.0111 (0.0012) ± 0.0002 | 0.96 (0.99) | 67 |
| | AE | 64–81 (87–96) | 0.12 (0.05) | 0.18 (0.09) | 0.746 (0.968) ± 0.003 | 0.342 (0.041) ± 0.004 | 0.78 (0.97) | 52 |
| ANN (Red to all) | AOD675 | 75–90 | 0.009 | 0.015 | 0.904 ± 0.004 | 0.0066 ± 0.0002 | 0.93 | |
| | AOD500 | 58–84 | 0.011 | 0.018 | 0.901 ± 0.001 | 0.0111 ± 0.0001 | 0.94 | |
| | AOD440 | 56–80 | 0.013 | 0.019 | 0.920 ± 0.003 | 0.0080 ± 0.0004 | 0.95 | |
| | AE | 39–68 | 0.17 | 0.24 | 0.669 ± 0.008 | 0.43 ± 0.02 | 0.67 | |
| RF (Red to all) | AOD675 | 81–93 | 0.007 | 0.013 | 0.903 ± 0.003 | 0.0059 ± 0.0001 | 0.95 | |
| | AOD500 | 69–87 | 0.010 | 0.016 | 0.913 ± 0.003 | 0.0074 ± 0.0004 | 0.96 | |
| | AOD440 | 61–83 | 0.012 | 0.019 | 0.908 ± 0.003 | 0.0091 ± 0.0007 | 0.95 | |
| | AE | 48–72 | 0.16 | 0.22 | 0.669 ± 0.008 | 0.43 ± 0.02 | 0.67 | |
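The error columns in the tables above can be illustrated with a minimal sketch. It assumes that `a` and `b` denote the slope and intercept of a least-squares line fitted to the predicted values against the reference values, and that R² is the squared Pearson correlation; neither reading is stated in the surviving text, so both are assumptions. The function name `regression_metrics` and the sample values are hypothetical and are not taken from the paper.

```python
import math


def regression_metrics(reference, predicted):
    """Compute MAE, RMSE, linear-fit slope/intercept and R^2.

    Assumption: 'a' and 'b' are the slope and intercept of an ordinary
    least-squares fit predicted = a * reference + b, and R^2 is the
    squared Pearson correlation between the two series.
    """
    n = len(reference)
    if n != len(predicted) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")

    # Point-wise prediction errors.
    errors = [p - r for r, p in zip(reference, predicted)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)

    # Sums of squares and cross-products about the means.
    mean_x = sum(reference) / n
    mean_y = sum(predicted) / n
    sxx = sum((x - mean_x) ** 2 for x in reference)
    syy = sum((y - mean_y) ** 2 for y in predicted)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(reference, predicted))
    if sxx == 0 or syy == 0:
        raise ValueError("both series must vary to fit a line")

    a = sxy / sxx              # slope
    b = mean_y - a * mean_x    # intercept
    r2 = (sxy * sxy) / (sxx * syy)
    return {"MAE": mae, "RMSE": rmse, "a": a, "b": b, "R2": r2}


# Illustrative values only (not data from the paper).
reference_aod = [0.10, 0.20, 0.30, 0.40]
predicted_aod = [0.11, 0.21, 0.31, 0.41]
metrics = regression_metrics(reference_aod, predicted_aod)
```

A slope near 1 together with an intercept near 0 indicates predictions that track the reference without a systematic offset, which is how the `a` and `b` columns would be read under these assumptions.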

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Scarlatti, F.; Gómez-Amo, J.L.; Valdelomar, P.C.; Estellés, V.; Utrillas, M.P. A Machine Learning Approach to Derive Aerosol Properties from All-Sky Camera Imagery. Remote Sens. 2023, 15, 1676. https://doi.org/10.3390/rs15061676