1. Introduction

Precipitation nowcasting aims to predict rainfall intensity at kilometer-scale resolution within the next two hours [1]. It plays a vital role in daily life, for example in traffic planning, disaster alerts, and agriculture [2]. Precipitation nowcasting is often defined as a spatiotemporal sequence prediction task [3,4,5,6,7]: a sequence of historical radar echo images is taken as input, and a sequence of future radar echo images is predicted [3]. In this paper, we denote the historical radar echo images as $X$ and the future (to be predicted) radar echo images as $Y$. The rainfall intensity distribution of the whole dataset is then $P(Y)$ (or $P(X)$, because both $X$ and $Y$ are drawn from the same distribution), and the precipitation nowcasting task can be represented as $P(Y \mid X)$.

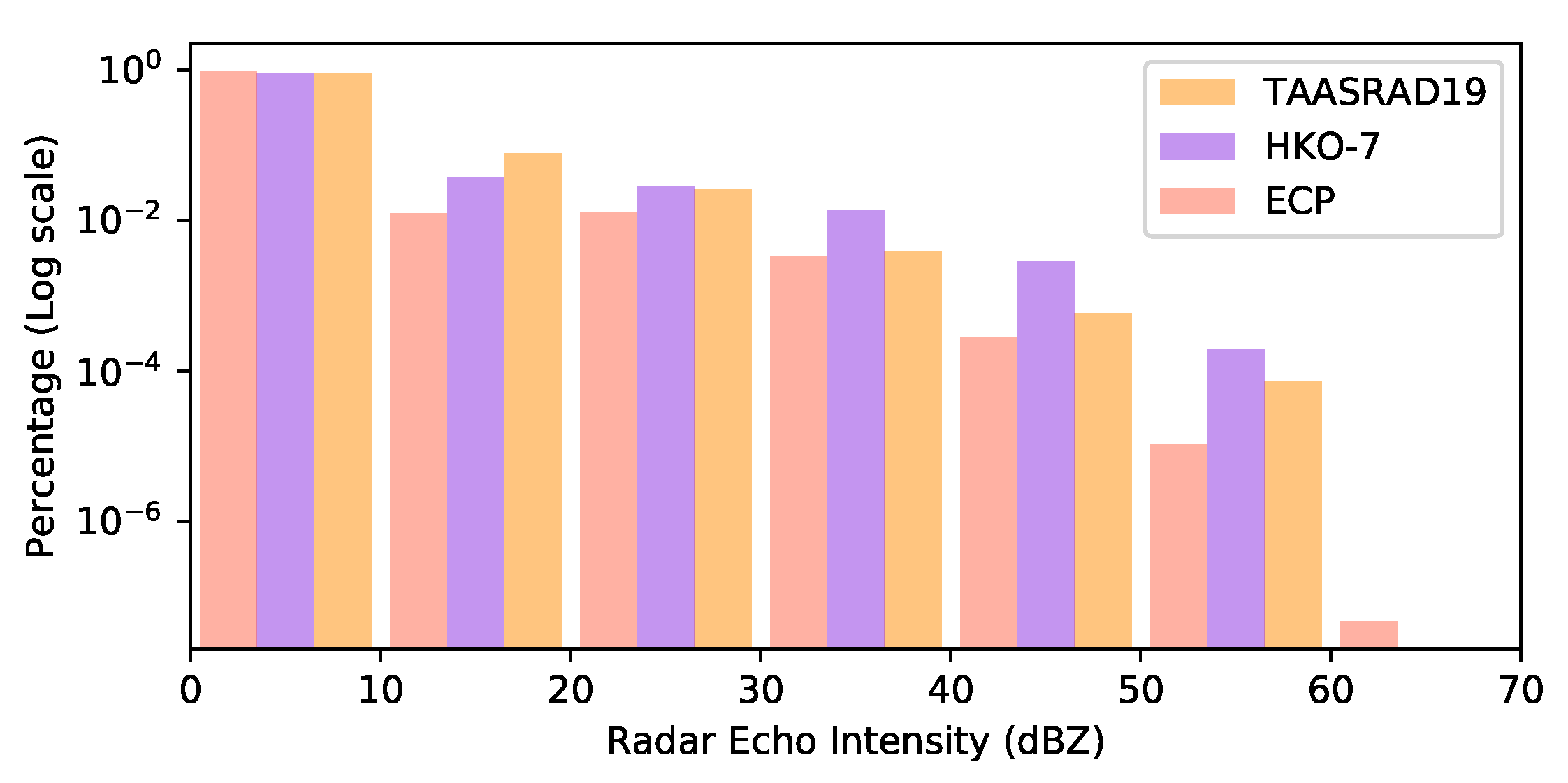

However, due to the highly skewed distribution of rainfall intensities, traditional approaches have a limited ability to forecast heavy rainfall [8]. For instance, in the Italian dataset TAASRAD19 [9], pixels with a radar reflectivity greater than 50 dBZ account for only 0.066% of all pixels, and only 0.45% of pixels exceed 40 dBZ. The same situation exists in the ECP dataset [10] and the HKO-7 dataset [11], as illustrated in Figure 1. Note that radar echo intensity (dBZ) is not the same quantity as rainfall intensity (mm/h): converting radar reflectivity into rainfall intensity requires a precipitation estimation algorithm, such as a Z–R relation. This paper focuses on radar echo intensity prediction; rainfall intensity can then be estimated from the predicted radar echo intensity with such an algorithm [3].
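The two quantities in this paragraph can be made concrete with a few lines of NumPy: the share of pixels above a reflectivity threshold (the statistic quoted for TAASRAD19) and a rain rate derived from reflectivity through a Z–R relation. This is a minimal sketch: the Marshall–Palmer coefficients (a = 200, b = 1.6) are an assumption chosen for illustration rather than the estimation algorithm used in [3], and the toy frame is synthetic, not taken from any of the datasets.

```python
import numpy as np

def fraction_above(dbz, threshold):
    """Fraction of pixels whose reflectivity exceeds `threshold` (in dBZ)."""
    return float(np.mean(np.asarray(dbz, dtype=float) > threshold))

def dbz_to_rain_rate(dbz, a=200.0, b=1.6):
    """Invert Z = a * R**b (Z in mm^6/m^3, R in mm/h).

    a = 200, b = 1.6 are the Marshall-Palmer coefficients, used here only
    as an assumed example; other Z-R calibrations are common.
    """
    z = 10.0 ** (np.asarray(dbz, dtype=float) / 10.0)   # dBZ -> linear Z
    return (z / a) ** (1.0 / b)

frame = np.array([[5.0, 12.0, 20.0], [35.0, 42.0, 55.0]])  # toy reflectivities
print(fraction_above(frame, 40.0))          # 2 of 6 pixels exceed 40 dBZ
print(dbz_to_rain_rate([23.0, 40.0, 50.0]))  # rain rates in mm/h
```

Because the dBZ scale is logarithmic, a 10 dBZ increase multiplies Z by 10 and, under this assumed relation, the rain rate by about 10^0.625 ≈ 4.2.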

Heavy rainfall events are rare, but missing them has more severe consequences than missing moderate or light rainfall. Therefore, considerable effort has been devoted to improving heavy rainfall forecasting, with reweighting and resampling being the most popular strategies [8,11,12]. These strategies increase the weights of heavy rainfall samples according to $P(Y)$.

However, adjusting the sample weights or prediction losses according to $P(Y)$ would distort the conditional distribution $P(Y \mid X)$, degrading the performance on the majority classes and hurting the overall rainfall prediction accuracy.
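For reference, a common form of $P(Y)$-based reweighting gives each intensity bin a weight inversely proportional to its empirical frequency. The sketch below is a generic illustration, not the specific scheme of [8,11,12]; the function name, the bin edges, and the normalization to a mean weight of one are assumptions. Note that the weights are computed from the marginal histogram of $Y$ alone and never look at $X$, which is why such schemes can distort $P(Y \mid X)$.

```python
import numpy as np

def inverse_frequency_weights(y, bin_edges):
    """Per-pixel weights proportional to 1 / P(bin of y), normalised to mean 1."""
    y = np.asarray(y, dtype=float).ravel()
    bins = np.digitize(y, bin_edges)                  # bin index of every pixel
    counts = np.bincount(bins, minlength=len(bin_edges) + 1)
    freq = counts / counts.sum()                      # empirical P(Y) per bin
    w = 1.0 / freq[bins]                              # rare bins -> large weight
    return w / w.mean()

# Six light pixels, one moderate, one heavy (toy intensities).
y = np.array([0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 10.0, 45.0])
print(inverse_frequency_weights(y, bin_edges=[5.0, 40.0]))
```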

In this work, we propose a new strategy, mutual-information-based reweighting (MIR), to improve nowcasting for imbalanced rainfall data. The mutual information $I(X;Y)$ measures the dependence between random variables $X$ and $Y$: high mutual information corresponds to highly dependent $X$ and $Y$ (an easy-to-learn task), and low mutual information corresponds to weakly dependent ones (a hard-to-learn task) [13].

For precipitation nowcasting, we calculate the mutual information of the radar echo data and observe that tasks with higher mutual information are more resilient to data imbalance. Specifically, when the mutual information is high, MIR uses relatively mild reweighting factors to preserve the original distribution $P(Y \mid X)$. Conversely, for tasks with low mutual information, MIR uses larger reweighting factors to improve the prediction performance. This approach boosts the performance on the minority groups without hurting the overall prediction performance.

Furthermore, we propose a simple curriculum-style training strategy, the time superimposing strategy (TSS). Curriculum learning lets a model start with easier tasks and gradually progress to harder ones. Inspired by this, TSS first trains the model on the task with the highest mutual information and gradually adds tasks with lower mutual information to the training task set. In terms of implementation, TSS only requires controlling the forecast length used in the loss computation during training, which takes just one or two lines of code.

This work extends our previous work [14], which combined MIR and TSS into a single method. In this paper, we describe the MIR and TSS strategies separately, provide a more detailed experimental analysis, and discuss different aspects of the proposed strategies in depth.

The remainder of this paper is organized as follows. Section 2 briefly reviews related work on deep learning models and the data imbalance problem in precipitation nowcasting. In Section 3, we describe how to compute the mutual information for the precipitation nowcasting task. Then, a reweighting strategy, MIR (Section 3.2), and a curriculum-learning-style training strategy, TSS (Section 3.3), are proposed based on the mutual information of the training tasks. Extensive experiments in Section 4 show that the proposed MIR and TSS strategies improve the performance of state-of-the-art models by a large margin without degrading the overall prediction performance. Section 5 discusses several research questions, and Section 6 concludes the paper.

3. Methodology

In this section, we first explain how to calculate the conditional distribution and the mutual information, which is essential for identifying tasks with high or low information content. Next, we explore the connection between mutual information and the data imbalance problem and present a novel mutual-information-based reweighting approach that addresses the limitations of existing methods. Finally, we introduce a curriculum-style learning strategy that guides the model to learn tasks progressively, prioritizing tasks with high mutual information so that the model masters them before moving on to those with lower mutual information.

3.1. Estimating the Mutual Information on Precipitation Nowcasting Tasks

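Existing deep-learning-based models [10,11,18] usually treat precipitation nowcasting as a spatiotemporal forecasting problem. Models encode information from a sequence of $n$ historical radar echo images and generate the sequence of $m$ future radar echo images that is most likely to occur, which can be formulated as

$$\hat{X}_{\tau+1}, \ldots, \hat{X}_{\tau+m} = \mathop{\arg\max}_{X_{\tau+1}, \ldots, X_{\tau+m}} p\left(X_{\tau+1}, \ldots, X_{\tau+m} \mid X_{\tau-n+1}, \ldots, X_{\tau}\right), \qquad (1)$$

where $\mathbf{X} \in \mathbb{R}^{T \times H \times W}$ is the radar echo image sequence, $X_{\tau} \in \mathbb{R}^{H \times W}$ is its frame at time $\tau$, $T$ is the temporal length, and $H$ and $W$ are the height and width of the images, respectively. Each pixel has an echo intensity value within a fixed range, corresponding to the rainfall intensity.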

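In information theory, the mutual information $I(X;Y)$ quantifies the information gained about $Y$ by knowing $X$, and vice versa [13]. It is defined as $I(X;Y) = H(Y) - H(Y \mid X)$, where the information entropy is $H(Y) = -\sum_{y} p(y) \log p(y)$ and the conditional entropy is $H(Y \mid X) = -\sum_{x, y} p(x, y) \log p(y \mid x)$. When $X$ and $Y$ are independent, $H(Y \mid X) = H(Y)$ and $I(X;Y) = 0$; when $X$ determines $Y$, $H(Y \mid X) = 0$ and $I(X;Y) = H(Y)$.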
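For discrete variables, the definition above can be evaluated directly from a joint probability table. The following minimal sketch (base-2 logarithms, so values are in bits; the function names are ours) also checks the two limiting cases: independent variables give zero, and a deterministic mapping gives $H(Y)$.

```python
import numpy as np

def entropy(p):
    """H(P) in bits; zero-probability entries contribute nothing."""
    p = np.asarray(p, dtype=float)
    nz = p > 0
    return float(-np.sum(p[nz] * np.log2(p[nz])))

def mutual_information(joint):
    """I(X;Y) = H(Y) - H(Y|X) for a joint table joint[x, y] that sums to 1."""
    joint = np.asarray(joint, dtype=float)
    p_x = joint.sum(axis=1)
    p_y = joint.sum(axis=0)
    # H(Y|X) = sum_x P(x) * H(Y | X = x)
    h_y_given_x = sum(
        px * entropy(row / px) for px, row in zip(p_x, joint) if px > 0
    )
    return entropy(p_y) - h_y_given_x

independent = np.outer([0.5, 0.5], [0.25, 0.75])    # P(x, y) = P(x) P(y)
deterministic = np.array([[0.5, 0.0], [0.0, 0.5]])  # Y = X
print(mutual_information(independent))    # close to 0 (up to rounding)
print(mutual_information(deterministic))  # 1.0, which equals H(Y)
```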

However, calculating the mutual information for a high-dimensional task is challenging. Because mutual information measures the dependence between random variables $X$ and $Y$, it requires an estimate of the joint probability distribution $P(X, Y)$ and of the marginal distributions $P(X)$ and $P(Y)$. When the task is low-dimensional, it is relatively easy to obtain enough training data to estimate $P(X, Y)$; when the task is high-dimensional, however, it is hard to obtain a training set large enough to do so. This phenomenon is known as the curse of dimensionality. As a result, previous researchers have usually trained large, over-parameterized generative models on limited training data to approximate this distribution.

To avoid training an approximate generative model for this estimation, we convert the high-dimensional radar echo image intensity prediction task into a one-dimensional radar echo pixel intensity prediction task. More specifically, in this section, we regard the precipitation nowcasting task as a series of pixel prediction tasks with different forecasting lengths. Once the dimension of $Y$ shrinks to 1, the required distributions are straightforward to estimate, and the mutual information can be calculated.

To calculate the joint probability distribution, we first redefine the precipitation nowcasting task at the pixel level:

$$\hat{y}_i^{\tau+t} = \mathop{\arg\max}_{y_i^{\tau+t}} p\left(y_i^{\tau+t} \mid \mathcal{N}(x_i^{\tau})\right), \qquad (2)$$

where $x_i^{\tau}$ denotes the value of pixel $i$ at time $\tau$, $\mathcal{N}(x_i^{\tau})$ refers to the set of spatiotemporal neighbors of pixel $i$ at time $\tau$ (here, the neighbors of pixel $i$ are the pixels in the cube of side length $l$ centered at pixel $i$), and $y_i^{\tau+t}$ represents the value of pixel $i$ at time $\tau + t$, where $t \in \{1, \ldots, m\}$. Equations (1) and (2) are equivalent only if $\mathcal{N}(x_i^{\tau})$ covers the current as well as all past image pixels.

Next, we apply a three-dimensional Gaussian convolution $G$ of size $l \times l \times l$ to each pixel $i$ to merge the information of its spatiotemporal neighbors. During this procedure, only first-order spatiotemporal information is kept, and higher-order information, such as the standard deviation and gradient direction, is lost. Equation (2) can then be rewritten as:

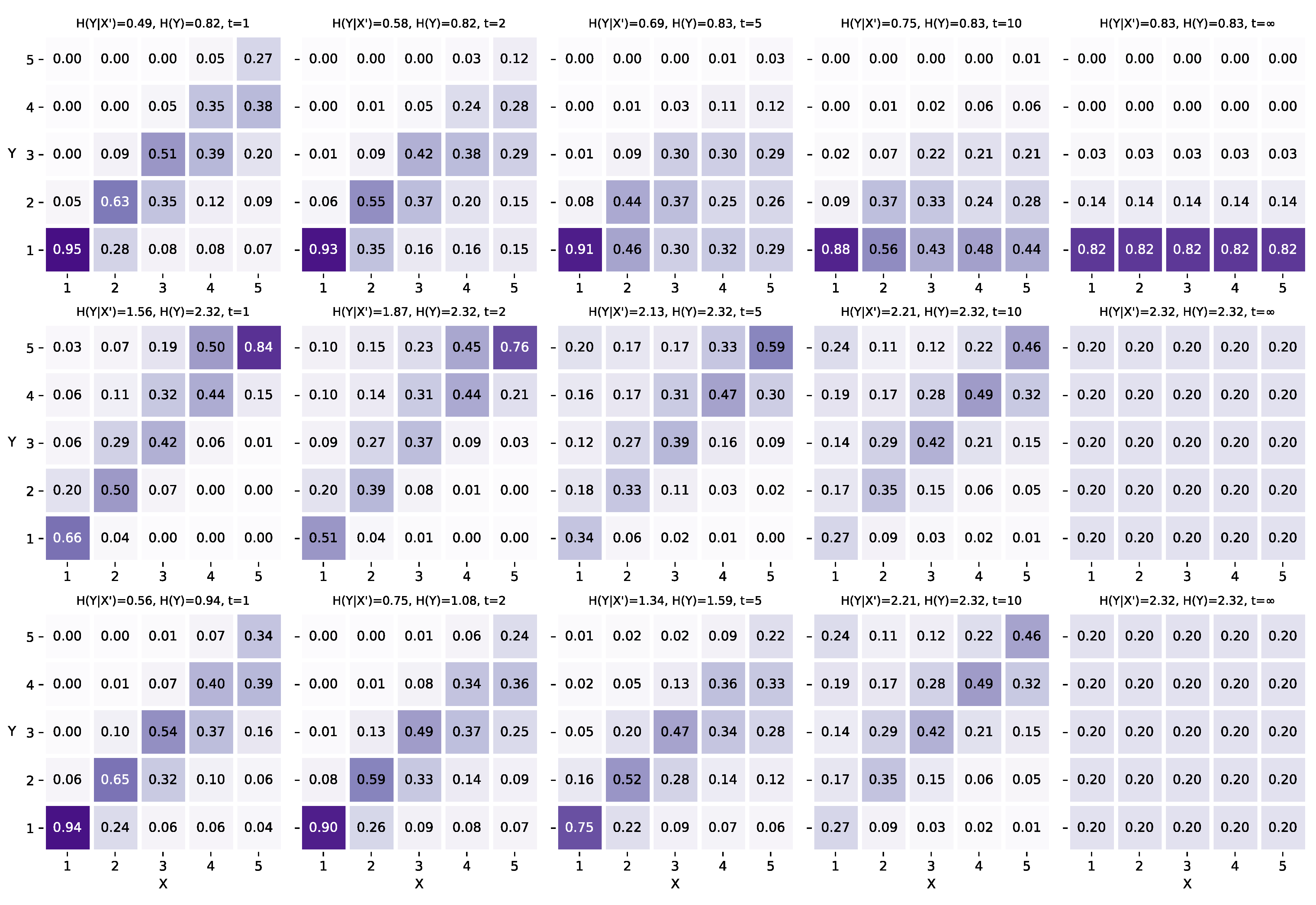

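Third, we compute the conditional distribution $P(y \mid \bar{x})$ across the whole training dataset, where $\bar{x}_i^{\tau} = (G * \mathbf{X})_i^{\tau}$ is the Gaussian-smoothed value of pixel $i$ at time $\tau$; this distribution approximates $p\left(y_i^{\tau+t} \mid \mathcal{N}(x_i^{\tau})\right)$. The conditional probability is computed as:

$$P(y \mid \bar{x}) = \frac{\sum_{i, \tau} \mathbb{1}\left[\bar{x}_i^{\tau} = \bar{x},\; y_i^{\tau+t} = y\right]}{\sum_{i, \tau} \mathbb{1}\left[\bar{x}_i^{\tau} = \bar{x}\right]}.$$

Finally, the mutual information is computed as:

$$I(\bar{X}; Y) = \sum_{\bar{x}} \sum_{y} P(\bar{x})\, P(y \mid \bar{x}) \log \frac{P(y \mid \bar{x})}{P(y)}.$$

Here, the probabilities $P(\bar{x})$ and $P(y)$ are obtained by counting in the same way as $P(y \mid \bar{x})$. Since the mutual information indicates the degree to which $X$ determines $Y$, we can use it to measure the degree to which $\bar{x}_i^{\tau}$ determines $y_i^{\tau+t}$.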
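The three steps (Gaussian merging of the $l$-cube, counting the conditional distribution, and summing the mutual information) can be sketched with NumPy alone. This is a minimal sketch under stated assumptions, not the authors' implementation: the separable kernel with σ = l/4, the causal time window (current and $l - 1$ past frames), edge padding, the quantization into `n_levels` equal-width levels, and the synthetic input are all our choices, and the sequence is assumed to be a `(T, H, W)` array already scaled to [0, 1].

```python
import numpy as np

def gaussian_weights(offsets, sigma):
    """Normalised Gaussian weights for the given integer offsets."""
    w = np.exp(-0.5 * (np.asarray(offsets, dtype=float) / sigma) ** 2)
    return w / w.sum()

def smooth_neighbourhood(seq, l=3):
    """Merge each pixel's l x l x l spatiotemporal neighbours into one value.

    Spatial axes use a centred kernel; the time axis uses only the current
    and l - 1 past frames (assumed causal window). sigma = l / 4 is arbitrary.
    """
    sigma = l / 4.0
    centred = gaussian_weights(np.arange(l) - (l - 1) / 2.0, sigma)
    causal = gaussian_weights(np.arange(l), sigma)   # weight of lag 0 .. l-1
    out = np.asarray(seq, dtype=float)
    for axis, kernel in ((0, causal), (1, centred), (2, centred)):
        pad = [(0, 0)] * 3
        pad[axis] = (l - 1, 0) if axis == 0 else (l // 2, l // 2)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)
    return out

def pixel_mutual_information(seq, t, l=3, n_levels=8):
    """Plug-in estimate of I(x_bar_i^tau ; y_i^(tau+t)) in bits over all (i, tau)."""
    quantise = lambda a: np.minimum((a * n_levels).astype(int), n_levels - 1)
    x_bar = quantise(smooth_neighbourhood(seq, l))[:-t]   # inputs at tau
    y = quantise(np.asarray(seq, dtype=float))[t:]         # targets at tau + t
    joint = np.zeros((n_levels, n_levels))
    np.add.at(joint, (x_bar.ravel(), y.ravel()), 1.0)      # co-occurrence counts
    joint /= joint.sum()                                   # P(x_bar, y)
    p_x = joint.sum(axis=1, keepdims=True)                 # P(x_bar)
    p_y = joint.sum(axis=0, keepdims=True)                 # P(y)
    nz = joint > 0
    # sum P(x)P(y|x) log(P(y|x)/P(y)) == sum P(x,y) log(P(x,y) / (P(x)P(y)))
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (p_x * p_y)[nz])))

# Synthetic (T, H, W) sequence in [0, 1] whose frames decorrelate over time.
rng = np.random.default_rng(0)
frames = [rng.random((32, 32))]
for _ in range(29):
    frames.append(0.8 * frames[-1] + 0.2 * rng.random((32, 32)))
seq = np.stack(frames)
for t in (1, 3, 6):
    print(t, round(pixel_mutual_information(seq, t), 3))
```

On this toy sequence, where each frame keeps 80% of the previous one, the estimate shrinks as the forecasting length $t$ grows, which is the qualitative behavior the mutual information curves are meant to capture.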

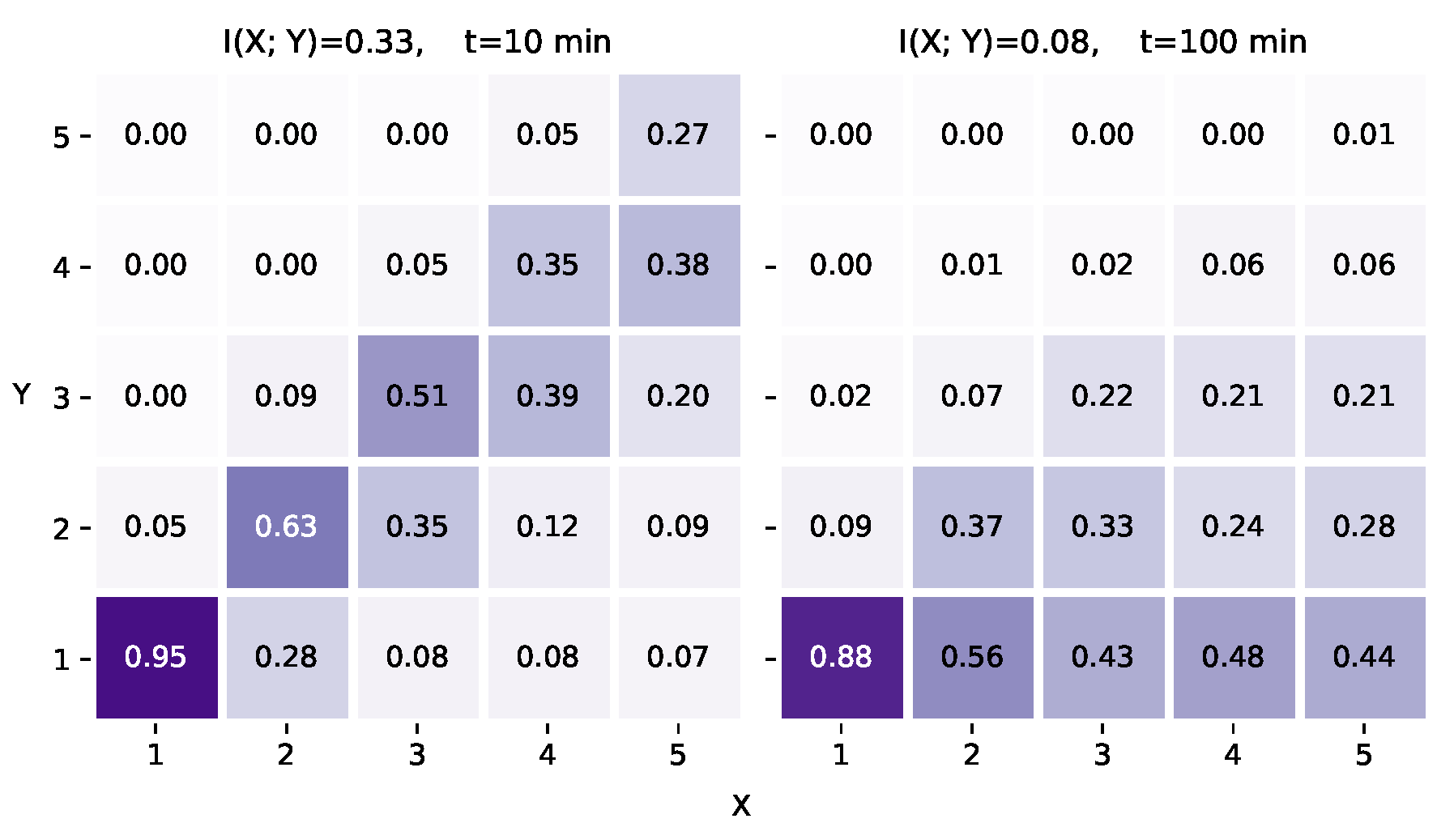

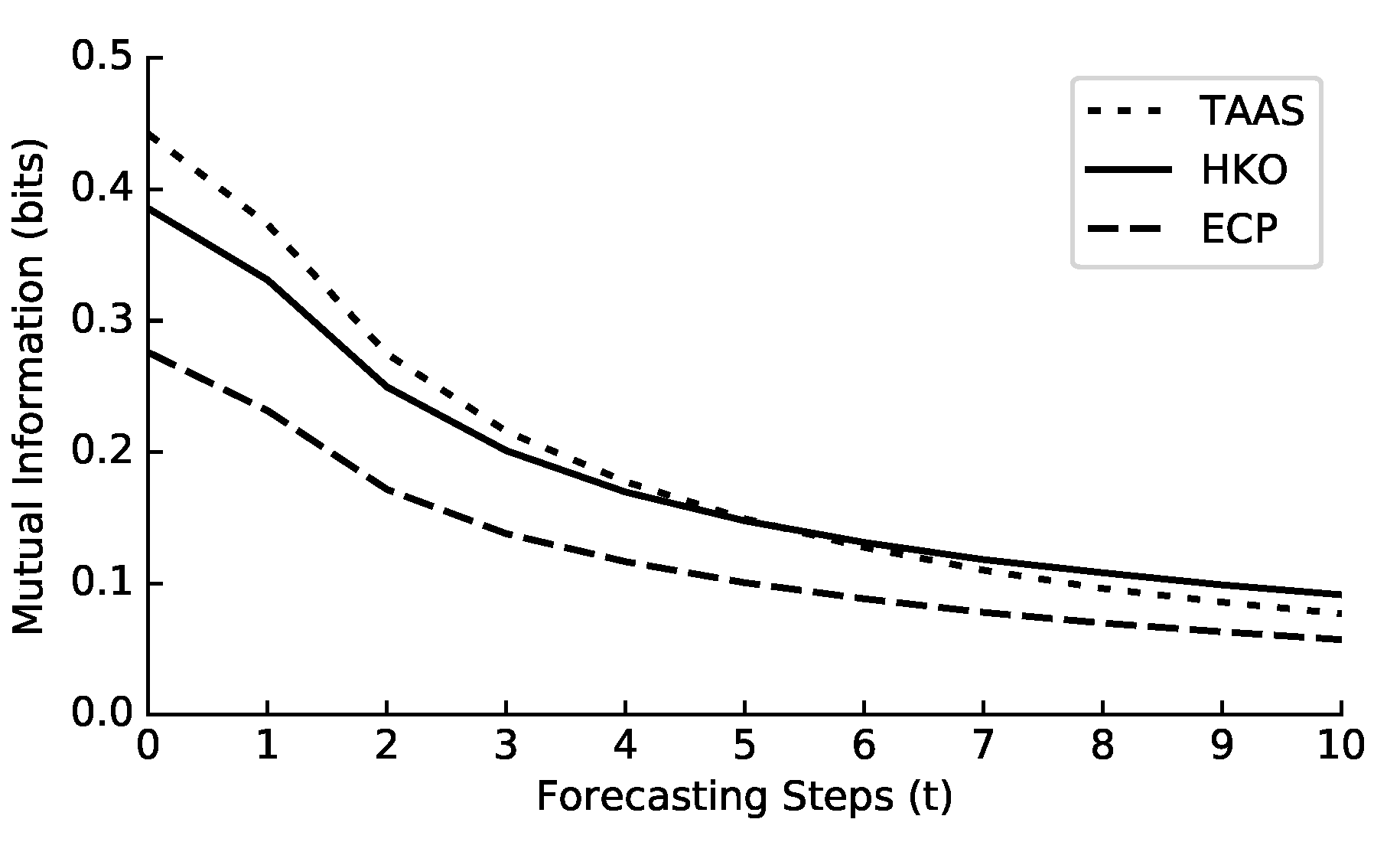

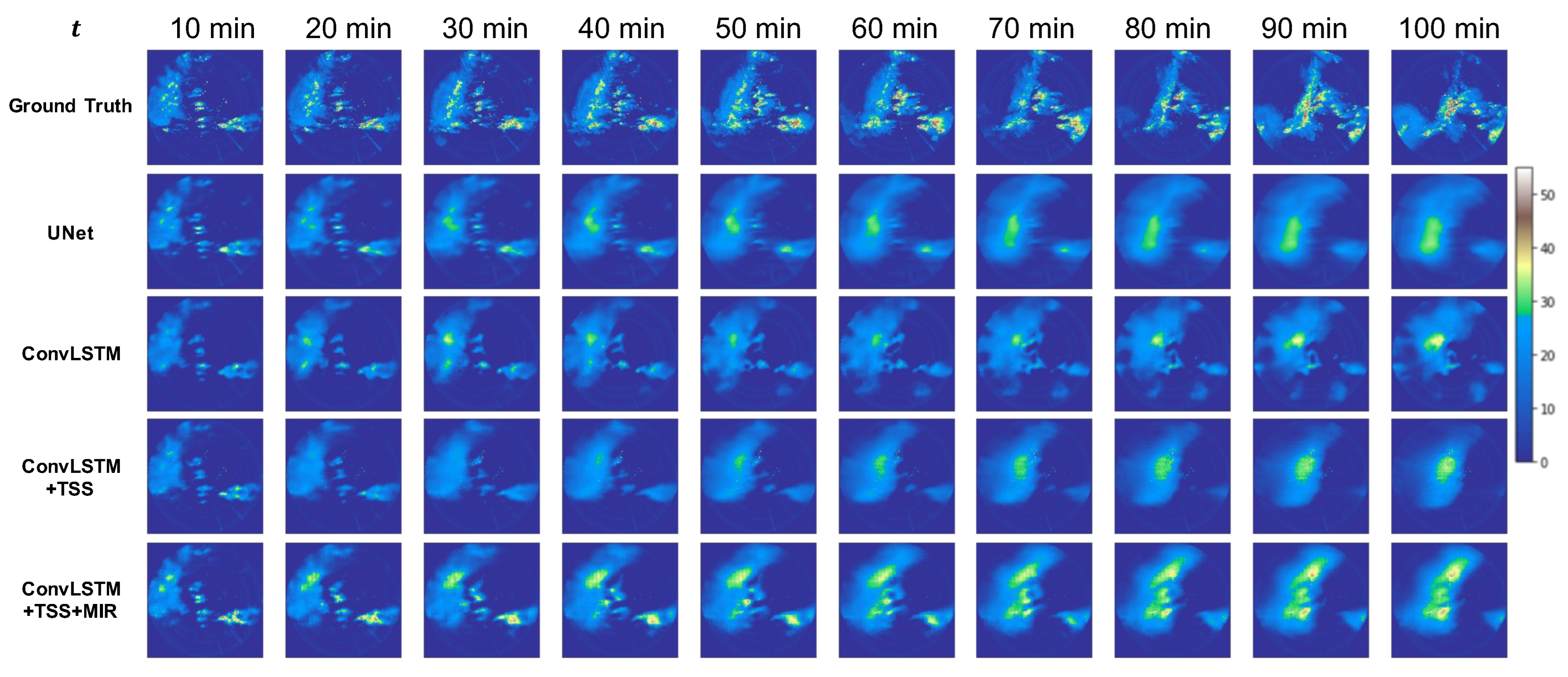

Figure 2 displays two conditional distribution matrices; to facilitate interpretation, the rainfall intensity is divided into five categories of equal size. Figure 3 shows the mutual information of the three precipitation nowcasting datasets as a function of the forecasting length $t$. Note that the mutual information does not always decrease monotonically with increasing $t$; for periodic data, for instance, it fluctuates periodically as $t$ increases.
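The periodic case can be reproduced on a toy series. In the sketch below, which is an illustration and not one of the three datasets, a quantized sine wave with a period of 12 steps is paired with itself at lag $t$ and the plug-in mutual information is computed. The value returns to its maximum whenever the lag is a multiple of half the period (where the future value is a deterministic function of the current one) and drops in between.

```python
import numpy as np

def lagged_mutual_information(series, t):
    """Plug-in I(s_k ; s_{k+t}) in bits for a discrete 1-D series."""
    s = np.asarray(series)
    x, y = (s[:-t], s[t:]) if t > 0 else (s, s)
    values = np.unique(s)
    xi = np.searchsorted(values, x)          # map values to table indices
    yi = np.searchsorted(values, y)
    joint = np.zeros((len(values), len(values)))
    np.add.at(joint, (xi, yi), 1.0)
    joint /= joint.sum()
    px_py = joint.sum(axis=1, keepdims=True) * joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / px_py[nz])))

period = 12
k = np.arange(20 * period)
series = np.round(2 * np.sin(2 * np.pi * k / period)).astype(int)  # values -2..2
for t in range(0, 13, 3):
    print(t, round(lagged_mutual_information(series, t), 3))
```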

3.2. Mutual Information-Based Reweighting (MIR) Strategy

Although reweighting methods based on $P(Y)$ may decrease the quality of the generated images, sacrificing part of the majority's performance to improve the minority is still acceptable in precipitation nowcasting, where heavy rainfall is more critical. This subsection proposes a new reweighting scheme that uses mutual information to adjust the weighting factors. To better understand the relationship between data imbalance and mutual information, consider the following binary classification experiment.

3.2.1. Motivating Example

In this experiment, the training data are sampled from two one-dimensional Gaussian distributions, A and B, whose parameters vary across the settings in Table 1. The objective is to train a binary classifier that decides whether a test sample was generated from A or B. The test set is balanced, and a three-layer fully connected network is used as the model.

Table 1 reports the mean absolute error (MAE) for different imbalance ratios and distribution settings, with the corresponding mutual information values given in brackets. An MAE of 0 means that every prediction is correct, and an MAE of 0.5 corresponds to random guessing.

Traditionally, the data imbalance issue has been associated with the reduced performance of minority classes caused by an imbalanced $P(Y)$. This holds when the class distributions are fixed. However, when the imbalance ratio is fixed, the MAE decreases as the mutual information increases, indicating that the impact of data imbalance is reduced. The model becomes resilient to data imbalance when the standard deviation equals one. This experiment demonstrates that an imbalanced distribution does not necessarily lead to poor performance on the minority class: at a fixed imbalance ratio, high mutual information tasks yield better-trained models than low mutual information tasks.

In an imbalanced setting, such as 1:99, the mutual information is lower than in a balanced setting because the information entropy $H(Y)$, which is an upper bound of the mutual information, is lower. Therefore, the trend of the mutual information values within each imbalance ratio matters more than the values themselves. When the imbalance ratio or the distribution setting is fixed, mutual information can help identify the settings that are more resilient to data imbalance. Thus, reweighting strategies are unnecessary for high mutual information tasks, and omitting them avoids the side effect of image quality degradation.
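Without training a network, the mutual information side of this experiment can be computed numerically. The sketch below assumes, for illustration only, that A and B share a standard deviation σ and differ in their means (the exact settings of Table 1 are not reproduced), treats the class label as $Y$ and the sample as $X$, and evaluates $I(X;Y) = H(Y) - H(Y \mid X)$ by numerical integration. It shows both effects discussed above: a larger σ (more overlap) lowers the mutual information, and at 1:99 the value can never exceed $H(Y) \approx 0.081$ bits.

```python
import numpy as np

def binary_entropy(p):
    """Entropy in bits of a Bernoulli(p) variable, for 0 < p < 1."""
    p = np.asarray(p, dtype=float)
    return -(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p))

def label_mutual_information(prior_b, mu_a, mu_b, sigma, n_points=20001):
    """I(X;Y) = H(Y) - H(Y|X) in bits, Y in {A, B}, X | Y ~ N(mu_Y, sigma^2)."""
    x = np.linspace(min(mu_a, mu_b) - 10 * sigma,
                    max(mu_a, mu_b) + 10 * sigma, n_points)
    dx = x[1] - x[0]
    za = (x - mu_a) / sigma
    zb = (x - mu_b) / sigma
    norm = 1.0 / (sigma * np.sqrt(2.0 * np.pi))
    # Mixture density p(x) = P(A) N(x; mu_a, sigma) + P(B) N(x; mu_b, sigma)
    p_x = norm * ((1.0 - prior_b) * np.exp(-0.5 * za**2)
                  + prior_b * np.exp(-0.5 * zb**2))
    # Posterior P(B | x) from its log-odds; clipping keeps the logs finite
    log_odds = np.log(prior_b / (1.0 - prior_b)) + 0.5 * (za**2 - zb**2)
    post_b = 1.0 / (1.0 + np.exp(-np.clip(log_odds, -30.0, 30.0)))
    h_y_given_x = np.sum(p_x * binary_entropy(post_b)) * dx   # Riemann sum
    return float(binary_entropy(prior_b) - h_y_given_x)

for prior_b in (0.5, 0.01):             # balanced vs 1:99
    for sigma in (0.5, 1.0, 2.0):       # larger sigma -> more class overlap
        mi = label_mutual_information(prior_b, 0.0, 1.0, sigma)
        print(f"P(B)={prior_b:<5} sigma={sigma}: I={mi:.4f} bits, "
              f"H(Y)={float(binary_entropy(prior_b)):.4f}")
```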

3.2.2. MIR Strategy

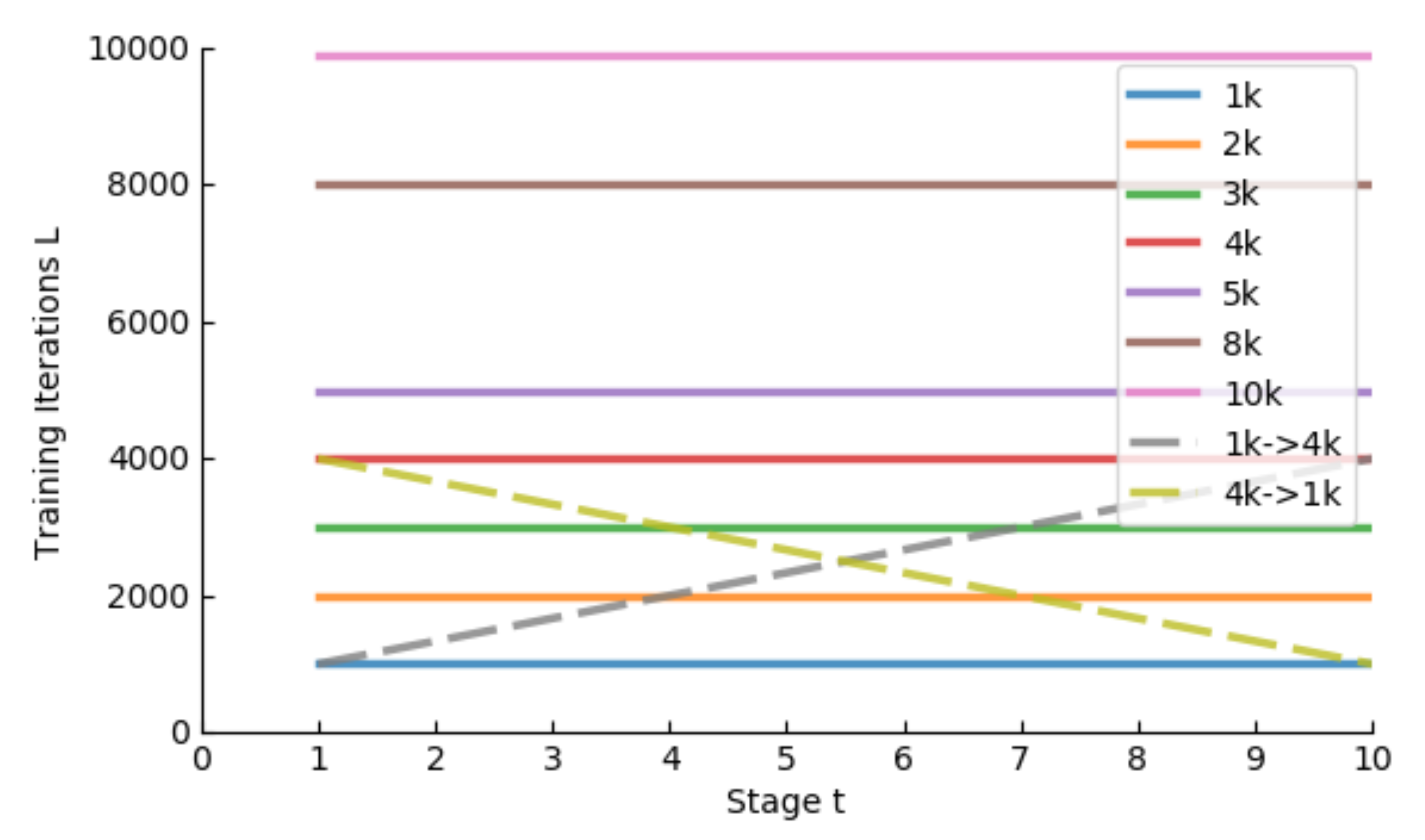

Figure 3 shows that the mutual information is high for small values of $t$. For these tasks, a rebalancing strategy is unnecessary and could distort $P(Y \mid X)$. To address this issue, we propose a reweighting ratio $\alpha_t$, determined by the mutual information of the task with forecasting length $t$, which serves as the exponent of the reweighting factor $w$:

$$\tilde{w}_t = w^{\alpha_t},$$

where $w$ uses the same reweighting factors as WMSE [11]. The new weighting factor $\tilde{w}_t$ is directly multiplied by the respective loss to obtain the reweighted loss. A simple choice is to let $\alpha_t$ increase with $t$, because mutual information negatively correlates with $t$. The proposed $\tilde{w}_t$ meets both requirements: an unweighted loss for high mutual information ($\alpha_t = 0$ gives $\tilde{w}_t = 1$) and a steep $\tilde{w}_t$ for low mutual information. This avoids the image quality degradation and the distortion of the original distribution caused by conventional reweighting strategies.

In this paper, we adopt the same weighting factor $w$ as the weighted mean squared error (WMSE) [11], which is

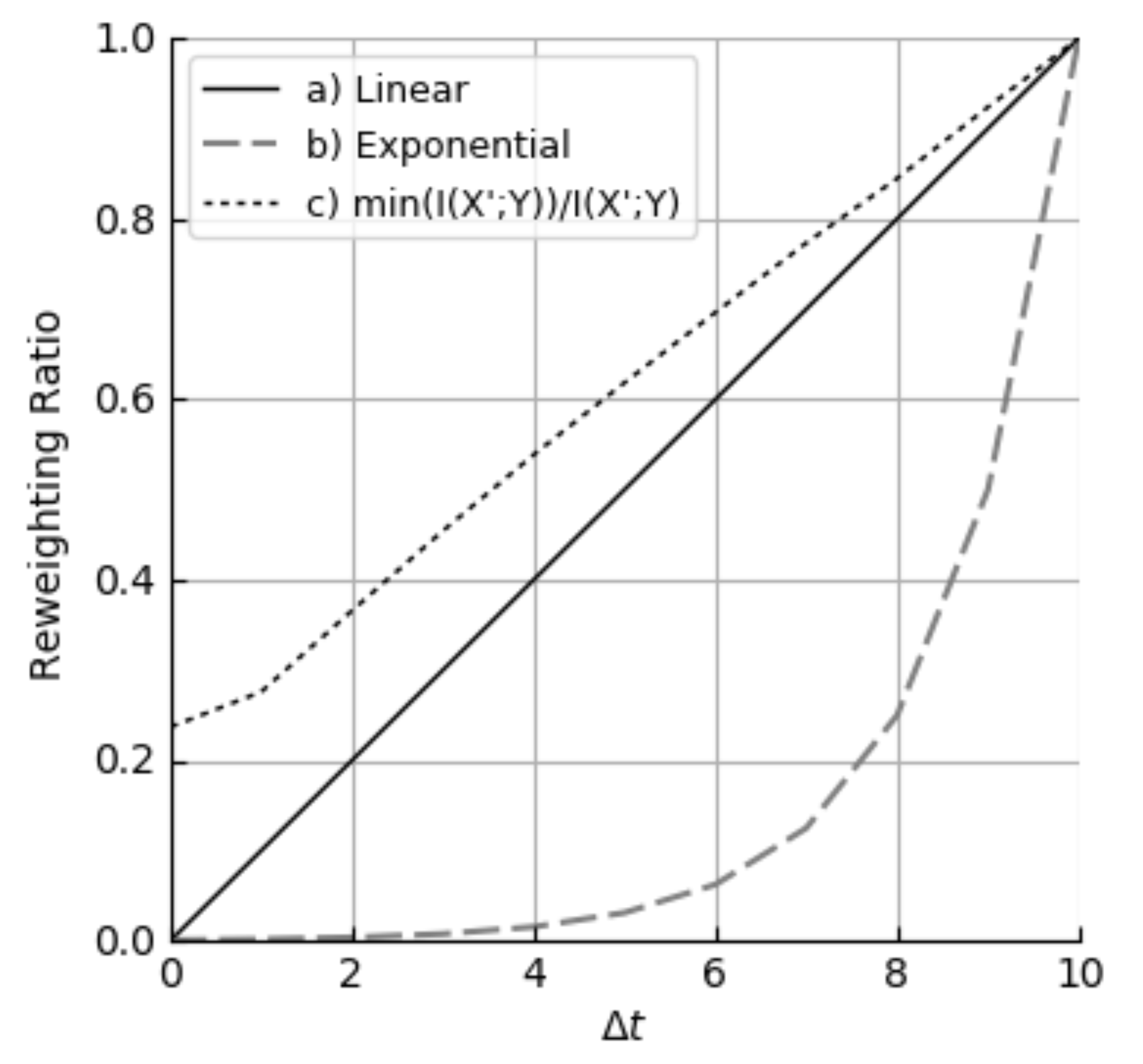
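Because the weighting factor is given only as an image here, the sketch below assumes the piecewise thresholds of the balanced MSE in the HKO-7 benchmark [11]: weights of 1, 2, 5, 10, and 30 for rain rates below 2, in [2, 5), [5, 10), [10, 30), and at or above 30 mm/h. It also raises $w$ to an exponent (named `alpha` here) to show the two limits of the MIR weighting: an exponent of 0 gives the unweighted loss, and an exponent of 1 gives the full WMSE weight. Rain rates are assumed to be in mm/h, for example obtained from reflectivity with a Z–R relation.

```python
import numpy as np

# Assumed thresholds (mm/h) and weights, following the HKO-7 balanced MSE.
THRESHOLDS = np.array([2.0, 5.0, 10.0, 30.0])
WEIGHTS = np.array([1.0, 2.0, 5.0, 10.0, 30.0])

def wmse_weight(rain_rate):
    """Piecewise-constant weight w(y) for rain rate y in mm/h."""
    return WEIGHTS[np.digitize(rain_rate, THRESHOLDS)]

def mir_weight(rain_rate, alpha):
    """w(y) ** alpha: alpha = 0 -> 1 (unweighted), alpha = 1 -> w(y)."""
    return wmse_weight(rain_rate) ** alpha

rates = np.array([0.5, 3.0, 7.0, 20.0, 45.0])
print(wmse_weight(rates))        # [ 1.  2.  5. 10. 30.]
print(mir_weight(rates, 0.0))    # [1. 1. 1. 1. 1.]
print(mir_weight(rates, 0.5))    # square roots of the WMSE weights
```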

Since it was unknown to what degree the mutual information affects the model's resistance to data imbalance, we tried several simple solutions:

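- (a) Linear in $t$: the reweighting ratio increases linearly with $t$, and a constant controls its expected growth speed. The code is shown in Algorithm 1.

- (b) Exponential: the reweighting ratio increases exponentially with $t$, and a constant, fixed in this paper, determines its expected growth speed.

- (c) Linear in mutual information: the reweighting ratio is a linear function of the mutual information of the task with forecasting length $t$. As shown in Figure 4, this solution is similar to a special case of the linear solution.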
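The exact formulas of the three schedules are not legible in this text, so the forms below are hypothetical stand-ins that only mirror the descriptions: (a) grows by a constant `k` per step, (b) grows exponentially with rate `c` and is normalized to reach 1 at $t = m$, and (c) is linear in the per-step mutual information, normalized so that the highest-MI task ($t = 1$) gets a ratio of 0. Each ratio stays within [0, 1], so the resulting weight stays between 1 and $w$. The mutual information curve used here is made up.

```python
import numpy as np

def alpha_linear(t, k):
    """(a) Hypothetical linear schedule: grows by k per step, capped at 1."""
    return np.clip(k * (np.asarray(t, dtype=float) - 1.0), 0.0, 1.0)

def alpha_exponential(t, m, c):
    """(b) Hypothetical exponential schedule: 0 at t = 1, 1 at t = m."""
    t = np.asarray(t, dtype=float)
    return np.expm1(c * (t - 1.0)) / np.expm1(c * (m - 1.0))

def alpha_from_mi(mi):
    """(c) Hypothetical MI-linear schedule from per-step MI values I_1..I_m."""
    mi = np.asarray(mi, dtype=float)
    return np.clip((mi[0] - mi) / (mi[0] - mi.min()), 0.0, 1.0)

m = 10
t = np.arange(1, m + 1)
mi = 1.2 * 0.8 ** (t - 1)        # made-up, monotonically decreasing MI curve
print(alpha_linear(t, k=0.2))
print(alpha_exponential(t, m, c=0.3))
print(alpha_from_mi(mi))
```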

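Algorithm 1 MIR Strategy

- Input: Model $f$, input data $x$, ground truth $y$, WMSE function $\mathcal{L}_{\mathrm{WMSE}}$, weighting factor $w$.
- Output: Loss $\mathcal{L}$.
- 1: $\hat{y} \leftarrow f(x)$
- 2: $\mathcal{L} \leftarrow 0$
- 3: for $t = 1, \ldots, m$ do
- 4: compute the reweighting ratio $\alpha_t$ (solution (a), linear in $t$)
- 5: $\tilde{w}_t \leftarrow w^{\alpha_t}$
- 6: $\mathcal{L} \leftarrow \mathcal{L} + \mathcal{L}_{\mathrm{WMSE}}(\hat{y}_t, y_t, \tilde{w}_t)$
- 7: end for
- 8: return $\mathcal{L}$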
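A NumPy sketch of the MIR loss in Algorithm 1 follows. It is a minimal illustration under stated assumptions, not the authors' code: it takes the model output directly instead of the model, prediction and ground truth are `(m, H, W)` rain-rate arrays in mm/h, the WMSE thresholds are assumed to be those of the HKO-7 balanced MSE [11], and `alpha_t = min(1, k * (t - 1))` with the constant `k` is a hypothetical stand-in for solution (a). In a training loop the same arithmetic would be written with the framework's tensor operations so that gradients flow.

```python
import numpy as np

THRESHOLDS = np.array([2.0, 5.0, 10.0, 30.0])    # mm/h (assumed, HKO-7 style)
WEIGHTS = np.array([1.0, 2.0, 5.0, 10.0, 30.0])

def mir_loss(pred, target, k=0.1):
    """Sum over forecast steps t of the WMSE with weights w ** alpha_t."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    w = WEIGHTS[np.digitize(target, THRESHOLDS)]  # WMSE weight per pixel
    loss = 0.0
    for t in range(1, pred.shape[0] + 1):
        alpha_t = min(1.0, k * (t - 1))           # hypothetical schedule (a)
        w_t = w[t - 1] ** alpha_t                 # reweighting ratio as exponent
        loss += np.mean(w_t * (pred[t - 1] - target[t - 1]) ** 2)
    return float(loss)

rng = np.random.default_rng(0)
target = rng.gamma(shape=0.5, scale=6.0, size=(10, 8, 8))  # skewed toy rain rates
pred = target + rng.normal(0.0, 1.0, size=target.shape)
print(mir_loss(pred, target, k=0.0))   # k = 0: plain MSE summed over steps
print(mir_loss(pred, target, k=0.2))   # later steps receive WMSE-like weights
```

Setting `k = 0` reduces the loss to an unweighted MSE for every step, which is the behavior MIR aims for on high mutual information tasks.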

3.3. Time Superimposing Strategy (TSS)

Traditionally, neural network models are trained on all tasks simultaneously, from $t = 1$ to $t = m$. Figure 3 shows that the training task at $t = 1$ provides the largest amount of information, and the mutual information decreases steadily as the forecasting length $t$ increases. We adopt a curriculum learning approach that reorganizes the training order of the tasks with different forecasting lengths to improve training efficiency. A straightforward strategy is to start with high mutual information tasks and gradually move to low mutual information tasks.

Suppose there is a set of training tasks, and in every training iteration the model is trained on all tasks in the set. The task set starts with only the task for $t = 1$ and progressively incorporates forecasting tasks with increasing lengths until $t = m$.

Specifically, the initial training task is $t = 1$. In the next stage, we train the tasks $t = 1$ and $t = 2$ simultaneously. In stage three, we train $t = 1$, $t = 2$, and $t = 3$ simultaneously, and so on.

We name this method the time superimposing strategy (TSS). TSS can be implemented as a loss function that controls the forecasting length. Algorithm 2 shows TSS with a fixed number of training iterations per stage; more TSS variants are discussed in Section 4.3.

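Algorithm 2 TSS Strategy

- Input: Total iteration $i$, iterations per stage $L$, model output $\hat{y}$, ground truth $y$, loss function $\ell$.
- Output: Loss $\mathcal{L}$.
- 1: $s \leftarrow \min\left(m, \lfloor i / L \rfloor + 1\right)$
- 2: $\mathcal{L} \leftarrow \ell(\hat{y}_{1:s}, y_{1:s})$
- 3: return $\mathcal{L}$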
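Because TSS only changes which forecast steps enter the loss, it can be sketched as a thin wrapper around any per-frame loss. The stage rule below adds one forecast step every `iters_per_stage` iterations (L in Algorithm 2) until all m steps are included, mirroring the fixed-iterations-per-stage variant; the function names, the 0-based iteration counter, and the MSE base loss are assumptions for illustration.

```python
import numpy as np

def tss_horizon(iteration, iters_per_stage, m):
    """Number of forecast steps in the task set at a 0-based iteration."""
    return min(m, iteration // iters_per_stage + 1)

def tss_loss(pred, target, iteration, iters_per_stage, loss_fn):
    """Apply loss_fn only to the first tss_horizon(...) forecast steps."""
    horizon = tss_horizon(iteration, iters_per_stage, pred.shape[0])
    return loss_fn(pred[:horizon], target[:horizon])

def mse(a, b):
    return float(np.mean((a - b) ** 2))

m = 5
print([tss_horizon(i, iters_per_stage=100, m=m) for i in (0, 99, 100, 250, 10_000)])
# -> [1, 1, 2, 3, 5]
```

The two lines inside `tss_loss` are the entire change to a standard training step, which matches the claim that TSS needs only one or two extra lines of code.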

6. Conclusions and Future Work

Previous studies on precipitation nowcasting have attributed the poor prediction performance on heavy rainfall samples to data imbalance. We found that prediction performance is related to both mutual information (MI) and data imbalance.

In this paper, we redefined the precipitation nowcasting task at the pixel level to estimate the conditional distribution $P(Y \mid X)$ and the mutual information $I(X;Y)$. We found that higher $I(X;Y)$ corresponds to better resistance to data imbalance. Inspired by this finding, the proposed reweighting method, MIR, preserves more information by assigning smooth weighting factors to high-$I(X;Y)$ data, which avoids degrading the performance on the majority class. By studying the relationship between $I(X;Y)$ and the forecasting timespan $t$, we found that a smaller $t$ benefits model training. Combining this property with the easy-to-hard ordering of curriculum learning, we proposed a curriculum-learning-style training strategy, TSS. The experimental results demonstrated the superiority of the proposed strategies. With the help of the approximated $P(Y \mid X)$ and $I(X;Y)$, we also tried to explain how $P(Y)$-based reweighting works and to identify an informative precipitation dataset. This work is only a preliminary exploration, since $P(Y \mid X)$ is not yet fully utilized; more mutual-information-based strategies remain to be discovered.