Abstract

Rice is a globally significant staple food crop. Therefore, it is crucial to have adequate tools for monitoring changes in the extent of rice paddy cultivation. Such a system would require a sustainable and operational workflow that employs open-source medium to high spatial and temporal resolution satellite imagery and efficient classification techniques. This study used similar phenological data from Sentinel-2 (S2) optical and Sentinel-1 (S1) Synthetic Aperture Radar (SAR) satellite imagery to identify paddy rice distribution with deep learning (DL) techniques. Using Google Earth Engine (GEE) and U-Net Convolutional Neural Networks (CNN) segmentation, a workflow that accurately delineates smallholder paddy rice fields using multi-temporal S1 SAR and S2 optical imagery was investigated. The study′s accuracy assessment results showed that the optimal dataset for paddy rice mapping was a fusion of S2 multispectral bands (visible and near infra-red (VNIR), red edge (RE) and short-wave infrared (SWIR)), and S1-SAR dual polarization bands (VH and VV) captured within the crop growing season (i.e., vegetative, reproductive, and ripening). Compared to the random forest (RF) classification, the DL model (i.e., ResU-Net) had an overall accuracy of 94% (three percent higher than the RF prediction). The ResU-Net paddy rice prediction had an F1-Score of 0.92 compared to 0.84 for the RF classification generated using 500 trees in the model. Using the optimal U-Net classified paddy rice maps for the dates analyzed (i.e., 2016–2020), a change detection analysis over two epochs (2016 to 2018 and 2018 to 2020) provided a better understanding of the spatial–temporal dynamics of paddy rice agriculture in the study area. The results indicated that 377,895 and 8551 hectares of paddy rice fields were converted to other land-use over the first (2016–2018) and second (2018–2020) epochs. 
These statistics provided valuable insight into the paddy rice field distribution changes across the selected districts analyzed. The proposed DL framework has the potential to be upscaled and transferred to other regions. The results indicated that the approach could accurately identify paddy rice fields locally, improve decision making, and support food security in the region.

1. Introduction

On a global scale, over 12% of the world's cropland area is assigned to rice agriculture, with South and East Asia being the two central regions for paddy rice production [1]. China produced over 212 million metric tons of paddy rice in 2020, about a third of total global paddy rice production [1,2]. Because rice provides a staple grain supply to 7.5 billion people globally [3], a system that adequately monitors paddy rice fields is crucial. Effective monitoring of rice intensification is essential to addressing food security and socioeconomic and sustainable development concerns [4]. It provides valuable information for policy and decision-makers handling critical issues such as food production, climate change mitigation and adaptation, and water and health security.

Advances in remote sensing technology offer the scientific community and stakeholders in the agricultural sector an effective tool for monitoring and mapping the spatial extent of agricultural landscapes. In addition, remote sensing offers smallholder farmers valuable statistics for decision-making and planning, such as crop growth cycles and yield forecasting [5,6,7]. Smallholder farming areas are typically characterized by small plots (usually less than 2 hectares) that are heterogeneous and have unclear field patterns. This pattern of farming provides over 70% of China's food production [8]. Considering the small sizes of paddy rice fields, Moderate Resolution Imaging Spectroradiometer (MODIS) imagery (250 m) would prove ineffective for accurate cropland mapping due to its coarse spatial resolution. However, European Space Agency (ESA) medium to high-spatial-resolution satellite data like Sentinel-1 (S1) Synthetic Aperture Radar (SAR) and Sentinel-2 (S2) optical sensors with 10 m spatial resolution have immense potential for agricultural mapping and diverse applications [9,10]. The Google Earth Engine (GEE) cloud-computing platform offers massive computing power for geospatial analysis on a planetary scale [11] and has vast applications such as landcover mapping and monitoring [12], mapping of paddy rice fields [4,13], and other environmental applications requiring multi-temporal remote sensing data inputs [13,14,15,16,17]. For effective paddy rice field mapping, identifying earth observation (EO) data acquired during the rice crop growth cycle (i.e., vegetative, reproductive and ripening stages) is critical. By adopting a phenological approach, end-users can identify important cyclic and seasonal growing patterns unique to the rice crop and incorporate this valuable information in the mapping process. These phenological characteristics facilitate the accurate mapping of rice paddy fields.

The backscatter coefficient values of S1 SAR data change under varying paddy rice stage conditions, making these data suitable for tracking the growth stages of paddy rice crops [18,19]. The three distinct paddy rice growing stages (i.e., vegetative, reproductive, and ripening) can serve as valuable input for remote sensing analysis and accurate delineation of paddy rice fields.

Optical-derived indices such as the Enhanced Vegetation Index (EVI) [20,21], the Land Surface Water Index (LSWI) [22], and the Normalized Difference Vegetation Index (NDVI) [23] have been explored, in combination with field observations, to extract phenological information as inputs for accurately mapping the spatial extent of rice fields [24,25]. Reference [25] explored the use of EVI, LSWI, and NDVI to map paddy rice fields using the MODIS sensor onboard the National Aeronautics and Space Administration's (NASA) Earth Observing System (EOS) Terra satellite. The authors explored time-series indices to identify rice crop growth stages (flooding and transplanting) by exploiting the sensitivity of LSWI to the increase in surface moisture during these stages. Though a logical approach, the limitation of the study was the coarse resolution of the MODIS imagery used for the analysis. With access to medium and fine-resolution satellite data (like Sentinel and Landsat sensor imagery) through cloud-based platforms such as GEE, the research community and operators can apply the same approach to paddy rice field mapping. Recent studies have demonstrated that Landsat or Sentinel optical sensor-derived indices (such as LSWI, NDVI, and EVI) are effective proxies for discriminating flooding or transplanting signals of paddy rice fields [4,26].

Traditional classifiers, such as the Maximum Likelihood Classifier or shallow machine learning classifiers (e.g., Random Forest (RF), Support Vector Machine (SVM), etc.), have become standard algorithms for mapping agricultural fields [19,27]. However, due to technological advances and the availability of high-performance computing machines, Deep Learning (DL) remote sensing applications are gaining ground quickly [9,10,28]. In the study by [10], the authors demonstrated the potential of combining deep learning models and phenological-driven training data and imagery for rice paddy distribution mapping in the northeastern parts of China. By combining a suite of vegetation and water indices (such as NDVI and NDWI) into knowledge-based proxies derived from phenological information, the authors could distinguish paddy rice fields from other land cover classes. Other indices explored were EVI and LSWI, all of which served as inputs to generating rice paddy maps subsequently used to generate the training data needed in the DL modeling. The DL model used in the study was Long Short-Term Memory [29]. By exploring Sentinel-2 (S2) optical satellite imagery acquired over the paddy rice crop cycle (i.e., May to October), the authors were able to extract valuable phenological input for the classification process.

Similarly, [30] explored S2 imagery in GEE to map paddy rice fields accurately using an enhanced pixel-based phenological approach. Phenological data were obtained by combining a time series of several indices generated from S2 imagery acquired during the transplanting, growth and maturity periods of the paddy rice crops [30]. The phenological features used were the Plant Senescence Reflectance Index (PSRI), Bare Soil Index (BSI), LSWI, and NDVI. Unlike the previous approach of DL modelling, a one-class classifier approach was adopted in the study. However, both studies illustrate the benefits of phenological information in paddy rice mapping on both small and large scales. A similar approach was developed using a full-resolution DL model for paddy rice mapping with Landsat data for parts of northeastern China [31].

Studies have also explored using SAR time-series data (such as S1) and DL models to map paddy rice fields [9]. Reference [9] proposed using multi-temporal S1 SAR imagery to address intra-class variability resulting from diverse management practices. A modified U-Net model was used for the DL modelling to identify paddy rice fields across Thailand. Other studies have explored combining SAR and optical data for paddy rice mapping with shallow ML and DL models [19,28,32]. The existing literature shows that phenological information primarily derived from EO data (such as SAR and optical imagery) and further analyzed using DL or non-DL models provides accurate paddy rice map products. However, limited information is available on which input data combinations perform best when DL models are used for paddy rice mapping.

This study evaluates several singular and multisensor approaches for paddy rice field mapping using a deep residual U-Net (ResU-Net) CNN model. By utilizing GEE and the ResU-Net DL models, this study proposes a workflow that accurately delineates smallholder paddy rice fields using multi-temporal S1 SAR and S2 optical imagery acquired during the crop growth cycle (vegetative, reproductive, and ripening stages). In addition to evaluating DL modelling, this study explored the RF classifier. The study addressed four research questions (RQ1 to RQ4):

- RQ1: Which combination of GEE-sourced multi-temporal S1 SAR and S2 optical derivatives is optimal as input to a U-Net DL model for paddy rice mapping?

- RQ2: How does the optimal image composite identified in RQ1 compare with RF experiments performed under different model parameters?

- RQ3: Based on RQs 1 and 2, what are the implications of these outcomes in building a transferable and operational workflow? The transferability of the optimal model identified in RQs 1 and 2 was tested by upscaling (covering five districts in the southeastern parts of Heilongjiang province) over different years (i.e., 2016, 2018, and 2020).

- RQ4: Based on the outcomes of RQ3, what were the spatial-temporal dynamics of paddy rice agriculture between 2016 and 2020? A spatial-temporal analysis was performed to quantify and better understand the dynamics and challenges associated with paddy rice mapping in the study area.

2. Materials and Methods

2.1. Study Site

The study site is in the southeastern Heilongjiang province and covers five districts: Xiangyang, Qianjin, Jianshan, Taoshan, and Jiguan in Northeast China (Figure 1). The region is characterized by a sub-humid continental monsoon climate with warm summers and cold winters [33], an annual mean temperature of 2 °C, and annual mean precipitation of 550 mm [34]. Heilongjiang is popularly known as a paddy rice agriculture hub in the region. Other prominent croplands in the study area are soybean, corn, and wheat [35].

Figure 1.

Study area map showing the Xiangyang, Qianjin, Jianshan, Taoshan, and Jiguan Districts in the southeastern Heilongjiang Province of China.

Paddy rice fields in the study area are cultivated yearly and have a growth cycle of 140 to 150 days (between April and October) [13,36]. Previous studies have outlined the different paddy rice fields for the study site [5,19,24,36].

2.2. Description of the Overall Methodology

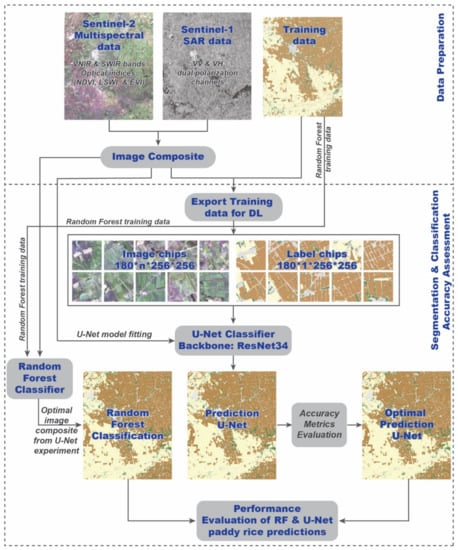

Figure 2 shows the workflow for paddy rice mapping proposed in this study. As shown in the proposed workflow, the methodology consists of three main stages, (a) data preparation, (b) segmentation and classification, and (c) performance assessment. The final output of this workflow was an optimal paddy rice prediction based on the performance evaluation of the RF and U-Net models. Using the most effective of the evaluated shallow learning and DL models, we generated three upscaled paddy rice maps covering five districts in the Heilongjiang Province of China. The final component of the methodology employed in this study was (d) upscaling of the identified optimal model and change detection analysis. This section of the paper discusses the four work components presented above.

Figure 2.

Proposed workflow for paddy rice mapping adapted in this study. The image chip dimensions were 256 × 256 pixels (n = the number of bands of input image composites used for deep learning experiments).

2.2.1. Data Preparation

The satellite imagery employed in this study was multi-temporal S1 SAR and S2 optical data (as described in Section 2.3) downloaded from the GEE platform. The pre-processed satellite images used in the study were acquired during the rice crop growing season (i.e., vegetative, reproductive, and ripening). The optical and SAR-derived inputs contained valuable phenological information for identifying important cyclic and seasonal growing patterns unique to rice crops. The phenologically driven approach was a vital component of this approach to paddy rice mapping.

2.2.2. Segmentation and Classification

Several S1 SAR and S2 optical image combinations were used as inputs in a shallow ML model (RF) and a DL model (i.e., ResU-Net). The first step of this component (RQ1) was to determine the optimal image composite using several inputs of GEE-sourced multi-temporal S1 SAR and S2 optical derivatives in a U-Net DL model. Section 2.3.3 describes the image composites evaluated in this component of the study. Details of the U-Net segmentation and the model parameters evaluated are presented in Section 2.3.5. The workflow diagram (Figure 2) presents the components associated with the U-Net modelling (such as exporting the training data for DL and the model fitting backbone–ResNet 34). The second part of the segmentation and classification workflow component was comparing the performance of the RF and U-Net models on the optimal image composite identified in RQ1. The optimal image composite identified from the U-Net experiments was further evaluated using the RF classifier under different model parameters. Section 2.3.6 presents the details of the RF classifier and the evaluated model parameters. For both models, independent training and validation data sets were employed for developing the models and assessing their performance. Section 2.3.4 details the ground validation and training data used in the study.

2.2.3. Performance Assessment

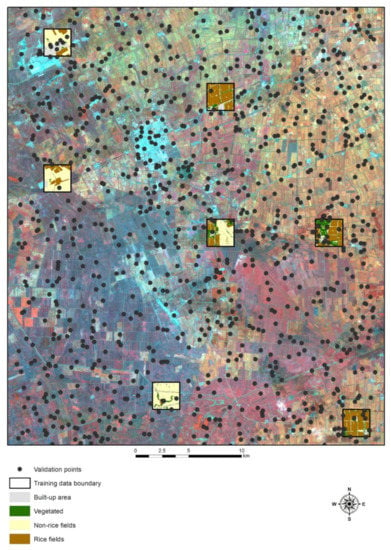

This study used the same reference training dataset to assess the accuracy of the RF and U-Net predictions. Section 2.3.4 details the ground validation and training data employed in the study. Figure 3 provides the spatial distribution of training polygons used for the RF classification and the validation points used for both models (RF and U-Net). Section 2.3.7 discusses the accuracy assessment metrics used to evaluate the model predictions′ performance and establish their accuracies.

Figure 3.

Map showing the distribution of training polygons and validation points used for random forest classification and accuracy assessment. The validation points were also used to assess the accuracy of the DL model.

2.2.4. Upscaling of the Identified Optimal Model and Change Detection Analysis

In this study component, the identified optimal U-Net model was used to generate three upscaled paddy rice maps (dated 2016, 2018 and 2020) covering the five districts investigated. This component addressed RQ3 and RQ4. This component′s primary output was testing the model′s transferability and operational capacity by upscaling the developed U-Net based on a subset of the study area.

The upscaled paddy rice maps for the three years (2016, 2018, and 2020) were used as inputs in a change detection analysis, quantifying the dynamics of rice agriculture in the study area. Section 3.4 presents the outcomes of the change detection analysis.
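The per-epoch change statistics can be illustrated with a minimal sketch. It assumes each yearly map is a binary grid (1 = paddy rice, 0 = other land-use) on a common 10 m pixel grid, so that one pixel covers 0.01 ha; the function name, the encoding, and the toy grids are hypothetical and are not taken from the study's own processing chain.

```python
# Assumption: 10 m x 10 m pixels, i.e. 100 m^2 = 0.01 ha per pixel.
PIXEL_AREA_HA = 10 * 10 / 10_000


def rice_change_ha(map_t1, map_t2, pixel_area_ha=PIXEL_AREA_HA):
    """Compare two binary rice maps (1 = rice, 0 = other) of identical shape.

    Returns the area in hectares that was lost, gained, or stayed rice.
    """
    lost = gained = stable = 0
    for row_t1, row_t2 in zip(map_t1, map_t2):
        for before, after in zip(row_t1, row_t2):
            if before == 1 and after == 0:
                lost += 1      # rice converted to other land-use
            elif before == 0 and after == 1:
                gained += 1    # other land-use converted to rice
            elif before == 1 and after == 1:
                stable += 1    # rice in both years
    return {
        "rice_to_other_ha": lost * pixel_area_ha,
        "other_to_rice_ha": gained * pixel_area_ha,
        "stable_rice_ha": stable * pixel_area_ha,
    }


# Toy 2 x 3 maps standing in for two mapped years.
map_a = [[1, 1, 0], [0, 1, 0]]
map_b = [[1, 0, 0], [1, 1, 0]]
change = rice_change_ha(map_a, map_b)
```

Running the same comparison on the 2016/2018 and 2018/2020 map pairs would give one set of figures per epoch.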

2.3. Inventory and Processing of Radar and Optical Satellite Sensor

2.3.1. Sentinel 1 Radar Data

GEE provides a rich repository of pre-processed satellite data, such as S1 SAR imagery. The S1 Image Collection consists of backscatter coefficient (σ°, in decibels) values processed from Level-1 Ground Range Detected scenes. The pre-processing workflow steps were radiometric calibration, terrain correction, image re-projection, and speckle filtering [11,37]. The S1 imagery was post-processed using standard steps that included speckle filtering and the exclusion of pixels affected by wind.

Finally, the radar image collection′s monthly mean composites were calculated and used as inputs for image classification. The monthly average composites for the descending satellite paths were generated using GEE. The S1 Image Collection was reduced to single bands in GEE with a mean (imageCollection.reduce) function. This image collection contained S1 images acquired over the paddy rice stages (i.e., from April to October) for 2016, 2018, and 2020 [19]. Table 1 presents details of the Sentinel data used in the study.
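The idea behind the monthly mean composites can be sketched for a single pixel in plain Python. This is only an illustration of the reduction logic, not the GEE code used in the study (which is in Appendix S1); the function name and the sample backscatter values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def monthly_mean_composites(observations):
    """Average VV and VH backscatter (dB) per calendar month for one pixel.

    observations: iterable of (month, vv_db, vh_db) tuples, one per acquisition.
    Returns {month: (mean_vv_db, mean_vh_db)} in ascending month order.
    """
    by_month = defaultdict(list)
    for month, vv_db, vh_db in observations:
        by_month[month].append((vv_db, vh_db))
    return {
        month: (
            mean(vv for vv, _ in values),
            mean(vh for _, vh in values),
        )
        for month, values in sorted(by_month.items())
    }


# Hypothetical descending-pass acquisitions: two in April, one in May.
samples = [(4, -10.0, -18.0), (4, -12.0, -20.0), (5, -8.0, -15.0)]
composites = monthly_mean_composites(samples)
```

In GEE the same reduction is applied to every pixel of the filtered image collection at once.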

Table 1.

Description of satellite data (Sentinel-1 SAR and Sentinel-2 optical imagery) used in the study.

2.3.2. Sentinel 2 Optical Data

The S2 imagery used for this study was downloaded from GEE within the same time frame as the S1 imagery (i.e., April to October 2016, 2018, and 2020). Seventy-eight images covering the study area over the paddy rice stages were acquired using the GEE cloud-computing platform. The GEE platform was used for cloud masking and compositing. The median image reducer was used to calculate the median values of the image collections covering the rice growth stage. All 20 m bands were pan-sharpened to 10 m using the NIR band as performed by [38]. Using the GEE spatial enhancement tool (hsvToRgb), two 20 m RGB composites, namely composite 1 (B5, B6, and B7) and composite 2 (B8A, B11, and B12), were pan-sharpened [38]. Using the S2 median reduced bands, the optical indices NDVI, EVI, and LSWI were calculated with Equations (1)–(3) below.
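In their standard forms, the three indices can be written in terms of the S2 bands as follows. The EVI coefficients (2.5, 6, 7.5, and 1) are the commonly used values and are assumed here, as is the assignment of equation numbers in the order NDVI, EVI, LSWI.

```latex
\begin{align}
\mathrm{NDVI} &= \frac{B8 - B4}{B8 + B4} \tag{1}\\
\mathrm{EVI}  &= 2.5 \times \frac{B8 - B4}{B8 + 6\,B4 - 7.5\,B2 + 1} \tag{2}\\
\mathrm{LSWI} &= \frac{B8 - B11}{B8 + B11} \tag{3}
\end{align}
```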

where B2, B4, B8, and B11 (pan-sharpened to 10 m) are the top-of-atmosphere reflectance values of the blue, red, NIR, and SWIR bands.
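As a minimal sketch, the three indices can be computed per pixel from the reflectance values of the bands named above. The standard index definitions are assumed (including the usual EVI coefficients), and the function names and sample reflectances are illustrative only.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR (B8) and red (B4)."""
    return (nir - red) / (nir + red)


def evi(nir, red, blue):
    """Enhanced Vegetation Index from NIR (B8), red (B4), and blue (B2).

    Uses the commonly cited coefficients G=2.5, C1=6, C2=7.5, L=1 (assumed).
    """
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


def lswi(nir, swir):
    """Land Surface Water Index from NIR (B8) and SWIR (B11)."""
    return (nir - swir) / (nir + swir)


# Hypothetical reflectances for one vegetated pixel.
b2, b4, b8, b11 = 0.05, 0.10, 0.50, 0.20
indices = {
    "NDVI": ndvi(b8, b4),
    "EVI": evi(b8, b4, b2),
    "LSWI": lswi(b8, b11),
}
```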

Appendix S1 in the Supplementary Materials presents the GEE codes used to download the S1 and S2 images used in the study.

2.3.3. Description of Image Composites Used for Experiments

Table 2 describes the image composites used for experiments in this study. The image composites had the following combinations: S2 multispectral bands only (Dataset 1); S1 SAR bands only (Dataset 2); S2 optical indices only, i.e., EVI + NDVI + LSWI (Dataset 3); S2 multispectral bands and S1 SAR bands (Dataset 4); S1 SAR bands and optical indices (Dataset 5); S2 multispectral bands and optical indices (Dataset 6); and S2 multispectral bands, optical indices, and S1 SAR bands (Dataset 7).
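The seven composites are every non-empty combination of three input groups, which the following sketch makes explicit. The group labels ("MS", "SAR", "IDX") and the helper name are shorthand introduced here for illustration; the dataset numbering follows the text.

```python
# Shorthand labels (hypothetical): MS = S2 multispectral bands,
# SAR = S1 dual-polarization bands, IDX = S2 optical indices (EVI, NDVI, LSWI).
GROUPS = ("MS", "SAR", "IDX")

DATASETS = {
    1: ("MS",),
    2: ("SAR",),
    3: ("IDX",),
    4: ("MS", "SAR"),
    5: ("SAR", "IDX"),
    6: ("MS", "IDX"),
    7: ("MS", "IDX", "SAR"),
}


def uses_group(dataset_id, group):
    """Return True if the given dataset includes the named input group."""
    return group in DATASETS[dataset_id]


# Datasets that draw on more than one sensor product.
multisensor = [d for d in DATASETS if uses_group(d, "SAR") and len(DATASETS[d]) > 1]
```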

Table 2.

Description of image composites used for experiments in this study (where B = bands).

Since the study area was prone to cloud cover issues, median composites of the available S2 imagery captured over the rice crop growth period (i.e., 10 April to 10 October) were used. This differed from the approach used for the S1 SAR bands, where the median values covered individual rice growth stages (i.e., vegetative, reproductive, and ripening).

2.3.4. Ground Validation and Training Data

An independent set of ground validation data was generated using a stratified random approach for accuracy assessment. These data were used to assess the accuracy of the RF classification and the optimal U-Net predictions. This study generated one thousand equally stratified random points (250 points per class) from the reference training dataset for accuracy assessment (Figure 3). The same reference training dataset was used for assessing the accuracies of the RF and U-Net predictions evaluated in the study.

For the stratified random sampling, the study area was split into strata, and random samples were drawn within each selected stratum. These strata were based on prior knowledge of the landscape. Using Google Earth imagery, landcover reference data (over 2000 GPS points representing major land-cover classes) were employed in the design of sampling grids across the project area.
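Equal-allocation stratified random sampling of this kind can be sketched as follows. The candidate pool, the class labels used as strata, and the function name are hypothetical; only the allocation of 250 points to each of four classes comes from the text.

```python
import random
from collections import defaultdict


def stratified_sample(candidates, per_class, seed=0):
    """Draw the same number of random points from every class (stratum).

    candidates: iterable of (point_id, class_label) pairs.
    Returns {class_label: [point_id, ...]} with per_class ids per label.
    """
    rng = random.Random(seed)  # fixed seed so the draw is repeatable
    by_class = defaultdict(list)
    for point_id, label in candidates:
        by_class[label].append(point_id)

    sample = {}
    for label, ids in by_class.items():
        if len(ids) < per_class:
            raise ValueError(f"stratum {label!r} has only {len(ids)} candidates")
        sample[label] = rng.sample(ids, per_class)  # sampling without replacement
    return sample


# Hypothetical pool: 600 candidate points in each of the four classes.
classes = ["built-up", "non-rice", "vegetated", "paddy rice"]
pool = [(f"{c}-{i}", c) for c in classes for i in range(600)]
validation = stratified_sample(pool, per_class=250)
total_points = sum(len(ids) for ids in validation.values())
```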

Figure 3 shows the spatial distribution of training polygons used for RF classification. The classification schema adopted in this study was similar to that in [19]. Four broad classes were selected: built-up area (comprised of bare surfaces, commercial and industrial buildings, road surfaces, and paved surfaces), non-rice fields (water bodies and other croplands), vegetated (forested landscape, wetlands, vegetation with considerable density and height, and shrubs), and paddy rice fields. For the comparison of the RF and U-Net predictions (Section 3.2), these four broad classes were recoded into two broad classes: other land-use and paddy rice fields.

2.3.5. U-Net CNN Segmentation

This study explored the U-Net model with a deep residual U-Net (ResU-Net) network framework for image segmentation. Its architecture comprises two components: an encoder and a decoder.

The encoder extracts features and labels patterns, followed by max-pool downsampling. The second half of the architecture (i.e., the decoder) spatially learns the information needed for reconstructing the original input data [39]. The decoder architecture semantically projects lower-resolution features onto higher-resolution pixel spaces to generate a dense classified output [39,40]. Reference [41] proposed using temporal feature-based segmentation for paddy rice mapping with time-series SAR images. The developed model extracts temporal features and learns the spatial context of extracted features for rice crop mapping [41].

This study used the ArcGIS API for Python to run the U-Net classifier. The U-Net classifier runs the ResNet34 backbone by default. The ResU-Net network handles problems associated with vanishing gradients by adding residual (skip) connections around convolutional layers in the U-Net framework [42,43,44]. In this study, the number of epochs used for all the U-Net experiments was 200. The training data used for the U-Net CNN segmentation experiments were obtained from the existing land use cover database of paddy rice monitoring plots in the center of Heilongjiang. The land use training data represented the study area's general land use, including paddy rice fields. This raster layer was vectorized and served as input in the DL modelling performed in the study. Table 3 lists the other DL parameters and arguments for the U-Net prediction experiments. The parameters considered in the study included padding, batch size, and tile size.

Table 3.

Deep learning parameters used in U-Net prediction experiments.

The padding parameter refers to the border area of each tile that the model discards during analysis. For example, paddy rice fields truncated at a tile edge are covered by overlapping tiles during inferencing. The padding ensures that areas that lie at the edge of one tile and are ignored in the first pass are captured in a second pass.

The batch size refers to the number of image tiles a Graphics Processing Unit (GPU) can process at once while inferencing. During inferencing, the input imagery is clipped to smaller tiles that the GPU can analyze in a single batch. The batch size is usually dependent on the computer system's GPU. The experiments in this project were run on a laptop with an Intel Core CPU @ 2.60 GHz, 16 GB of RAM, and an NVIDIA GeForce GTX 1650 Ti GPU with 4 GB of memory. The batch size used for all the experiments was 4.

The tile size refers to the size of the image chips exported for the DL model development. After a series of tests, the tile size of 256 × 256, depicting dimension sizes of 2560 × 2560 m, was selected. The learning rates evaluated in the DL experiments are presented in the Supplementary Materials (see Figure S1). After the optimal dataset for paddy rice segmentation was determined, the model file was saved and applied to the upscaled imagery covering the entire study area.
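The tile geometry can be checked with a short sketch. It assumes 10 m pixels and that the padding (54 pixels in the experiments reported in Section 3.1) is discarded on every side of each tile, so only the central core of a tile contributes to the output; the function names and the example strip length are hypothetical.

```python
PIXEL_SIZE_M = 10   # assumed ground sampling distance of the input composites
TILE_PX = 256       # tile size used in the experiments
PADDING_PX = 54     # padding value reported for the U-Net experiments


def tile_ground_size_m(tile_px=TILE_PX, pixel_m=PIXEL_SIZE_M):
    """Side length of one tile on the ground, in metres."""
    return tile_px * pixel_m


def core_size_px(tile_px=TILE_PX, padding_px=PADDING_PX):
    """Pixels per tile side that remain after discarding the padded border."""
    core = tile_px - 2 * padding_px
    if core <= 0:
        raise ValueError("padding must be smaller than half the tile size")
    return core


def core_starts(length_px, tile_px=TILE_PX, padding_px=PADDING_PX):
    """Start offsets of the retained tile cores along one image axis."""
    return list(range(0, length_px, core_size_px(tile_px, padding_px)))


ground_m = tile_ground_size_m()   # 256 px x 10 m
core_px = core_size_px()          # 256 - 2 * 54
starts = core_starts(1000)        # tiles needed to cover a 1000 px strip
```

Under these assumptions, a larger padding shrinks the retained core and therefore increases the number of overlapping tiles needed to cover the same area.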

2.3.6. Random Forest (RF) Classifier

The RF classifier was selected for this study because it is generally less affected by noise and overfitting remotely sensed data [45]. Studies have shown that RF can handle high data dimensionality compared to classifiers like Support Vector Machines, maximum likelihood, and single decision trees [46,47,48,49]. RF constructs bootstrapped de-correlated decision trees (DTs) and aggregates these to classify input datasets [50].

For this study, several experiments were conducted using the optimal image dataset determined from the U-Net segmentation. The RF parameters explored included the number of trees (250 and 500), maximum tree depth (30), and samples per class (1000). Table 4 presents the samples used for the RF classification experiments performed in the study.

Table 4.

Inventory of training polygons used for Random Forest experiments.

2.3.7. Accuracy Assessment

The accuracy assessment component was performed in two stages: the first evaluated the U-Net CNN segmentation′s performance and determined the optimal image. The second stage compared the performance of the optimal U-Net prediction and RF classifications.

The accuracy metrics, namely User′s and Producer′s Accuracy (UA and PA), Overall Accuracy (OA), and F1-score, were calculated for the optimal U-Net prediction and RF classifications from confusion matrices (see Equations (4)–(7) below).
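In terms of the confusion-matrix symbols defined below, the four metrics take their standard forms. The numbering (4)–(7) is assumed to follow the order in which the metrics are listed, and X_i and X_j are taken as the marginal totals of the mapped class and the reference class, respectively.

```latex
\begin{align}
\mathrm{UA}_i &= \frac{X_{ii}}{X_i} \tag{4}\\
\mathrm{PA}_j &= \frac{X_{jj}}{X_j} \tag{5}\\
\mathrm{OA}   &= \frac{S_d}{n} \tag{6}\\
\mathrm{F1}   &= \frac{2 \times \mathrm{UA} \times \mathrm{PA}}{\mathrm{UA} + \mathrm{PA}} \tag{7}
\end{align}
```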

where Xij = observation in row i column j, Xi = marginal total of row i, Xj = marginal total of row j, Sd is the total number of correctly-classified pixels, and n = the total number of validation pixels.

The classifier′s performance on datasets was evaluated using the overall classification accuracy and F1-score of the paddy rice field class, as this was the study′s focus.
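A minimal sketch of how these metrics follow from a confusion matrix is given below. It assumes rows hold the mapped classes and columns the reference classes; the kappa statistic is included because the results report a kappa value, using the standard Cohen's kappa formula rather than one given in the text. The function name and the 2 × 2 example matrix are hypothetical.

```python
def accuracy_metrics(cm):
    """Compute OA, per-class UA/PA/F1, and Cohen's kappa.

    cm[i][j] = number of validation points mapped as class i whose
    reference class is j (rows = map, columns = reference; assumed).
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    row_tot = [sum(cm[i]) for i in range(k)]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]

    ua = [cm[i][i] / row_tot[i] if row_tot[i] else 0.0 for i in range(k)]
    pa = [cm[j][j] / col_tot[j] if col_tot[j] else 0.0 for j in range(k)]
    f1 = [2 * u * p / (u + p) if (u + p) else 0.0 for u, p in zip(ua, pa)]

    oa = correct / n
    # Chance agreement expected from the row and column marginals.
    pe = sum(r * c for r, c in zip(row_tot, col_tot)) / (n * n)
    kappa = (oa - pe) / (1 - pe)

    return {"OA": oa, "UA": ua, "PA": pa, "F1": f1,
            "mean_F1": sum(f1) / k, "kappa": kappa}


# Hypothetical two-class matrix: index 0 = paddy rice, index 1 = other land-use.
example = [[40, 10],
           [5, 45]]
metrics = accuracy_metrics(example)
```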

The mean intersection over union (IoU) was used as an additional metric for the U-Net predictions. Equation (8) shows how the IoU is calculated:
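In its standard per-class form, with the mean IoU taken as the average over classes, the metric is:

```latex
\begin{equation}
\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{8}
\end{equation}
```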

where TP, FN, and FP represent true positives, false negatives, and false positives.
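As a small sketch under the standard definition, the per-class IoU and its mean over classes can be computed from the pixel counts; the function names and the counts used here are hypothetical.

```python
def iou(tp, fp, fn):
    """Intersection over union for one class from pixel counts."""
    denom = tp + fp + fn
    return tp / denom if denom else 0.0


def mean_iou(per_class_counts):
    """Average the per-class IoU over all classes.

    per_class_counts: list of (tp, fp, fn) tuples, one per class.
    """
    scores = [iou(tp, fp, fn) for tp, fp, fn in per_class_counts]
    return sum(scores) / len(scores)


# Hypothetical counts for two classes (e.g. paddy rice and other land-use).
counts = [(40, 10, 5), (45, 5, 10)]
rice_iou = iou(*counts[0])
miou = mean_iou(counts)
```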

The mean F1 Score of all the classes was considered in explaining the performance evaluation of experiments conducted in the study.

2.3.8. Upscale Optimal U-Net Model for Rice Paddy Spatial–Temporal Analysis

The third RQ investigates the transferability and upscaling of the proposed optimal workflow for operational use. As proposed in the study's objectives, the optimal model based on a subset of the study area was subsequently used to generate three paddy rice maps (dated 2016, 2018 and 2020) covering the five districts described in Section 2.1 of this paper. A change detection analysis was performed to better understand the dynamics of paddy rice field changes over time in this region of Heilongjiang province.

3. Results

The study′s uniqueness is in assessing the value of combining radar, multispectral and optical-derived indices with the cloud-based GEE computing platform. Considering the challenges of obtaining cloud-free images, complementary information from radar imagery is of immense value in paddy rice mapping [19,24,51,52]. This all-weather capability of SAR data and its ability to capture the different stages of paddy rice growth, monitor biomass development, and detect other biophysical measures makes it a valuable component of this study [53,54].

3.1. Selection of Optimal Image Composite in the U-Net DL Model Experiments

The first RQ focused on determining the optimal image combination of multi-temporal SAR and optical derivatives while utilizing the U-Net DL model prediction for paddy rice mapping. The U-Net model experiments were done with varying learning rates, the same padding (54), batch size (4), and epoch (200) (Table 3).

The U-Net model segmentation accuracy metrics indicated that the mean IoU, mean F1-Score and Rice F1-Score were highest for Dataset 4 (i.e., MS + SAR bands with 0.82, 0.67, and 0.95) and Dataset 7 (i.e., MS + SAR + Optical indices with 0.82, 0.67, and 0.95), respectively (see Figure S2 in the Supplementary Materials). The other datasets in the order of combined performance were Dataset 6 (MS + optical indices–0.81, 0.65, and 0.94), Dataset 5 (SAR + optical indices–0.80, 0.65, and 0.95), Dataset 1 (MS bands–0.80, 0.64, and 0.93), Dataset 2 (SAR bands–0.77, 0.60, and 0.94) and Dataset 3 (optical indices–0.76, 0.59, and 0.92). The results indicated that the singular use of the optical bands (Dataset 1), optical indices (Dataset 3), or SAR (Dataset 2) data did not outperform the multisensor combinations. Based on the accuracy assessment results, Dataset 4 (i.e., a combination of the multispectral and SAR bands) was selected as the optimal image combination and used for subsequent analysis. A few predictions from the validation dataset were compared with actual ground truth data as part of the validation process. Figure S3 shows a preview of ground truth and prediction chips for one of the optimal datasets from the U-Net DL experiments (i.e., Dataset 4). Table S1 presents the accuracy assessment results of U-Net predictions performed to establish the optimal image combination (see the Supplementary Materials). Figure S4 presents the U-Net predictions of paddy rice fields generated using datasets evaluated in the study.
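The ordering of the datasets can be reproduced by sorting on the three reported scores. In this sketch the values are copied from the paragraph above in the order they are listed there (mean IoU, mean F1-score, rice F1-score); sorting lexicographically on that tuple is an assumption about how "combined performance" was ranked, not a procedure stated in the text.

```python
# Scores as reported, keyed by dataset number:
# (mean IoU, mean F1-score, rice F1-score) in the order given in the text.
SCORES = {
    1: (0.80, 0.64, 0.93),  # MS bands
    2: (0.77, 0.60, 0.94),  # SAR bands
    3: (0.76, 0.59, 0.92),  # optical indices
    4: (0.82, 0.67, 0.95),  # MS + SAR bands
    5: (0.80, 0.65, 0.95),  # SAR + optical indices
    6: (0.81, 0.65, 0.94),  # MS + optical indices
    7: (0.82, 0.67, 0.95),  # MS + SAR + optical indices
}

# Sort from best to worst; ties keep their original (dataset-number) order.
ranking = sorted(SCORES, key=lambda dataset: SCORES[dataset], reverse=True)
best_score = SCORES[ranking[0]]
tied_for_best = [d for d in ranking if SCORES[d] == best_score]
```

Datasets 4 and 7 tie on all three scores, so the tie is not resolved by these metrics alone.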

3.2. Comparison of Random Forest and U-Net Predictions

Based on the results of RQ1, Dataset 4 (i.e., MS + SAR bands) was selected as the optimal dataset and used as input in the RF classification tests. RQ2 aimed to evaluate RF experiments performed under different model parameters using Dataset 4 as its input.

The two RF experiments were performed with varying decision tree numbers (250 and 500), and with the same maximum tree depth of 30 and samples per class (1000). The RF classification experiments were compared with the U-Net segmentation output using an independent set of validation data points. The four class predictions were recoded into two broad classes (other land-use and rice fields). This approach was adopted to allow easy comparison with similar studies on paddy rice mapping. Table S2 presents the RF classification and optimal U-Net prediction error matrices.

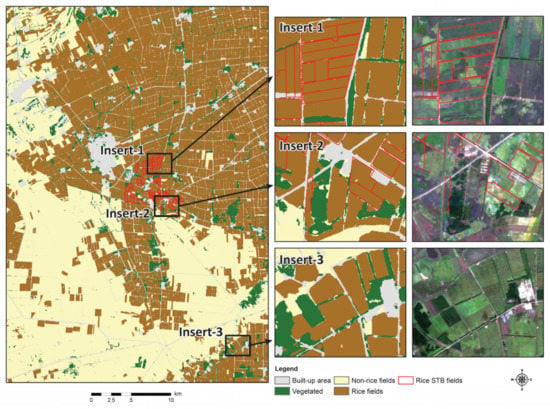

The classification accuracy assessment results indicate that the optimal U-Net segmentation model had an OA, overall kappa (OK), and mean F1-score of 94%, 0.84, and 0.92, respectively (see Figure S5). The RF (500 trees) classification had 91% (OA), 0.78 (OK), and 0.89 (mean F1-score), while the RF (250 trees) classification had 91% (OA), 0.77 (OK), and 0.89 (mean F1-score). The rice F1-score was highest for the U-Net segmentation (0.88), followed by the RF (500 trees) prediction with 0.84 and the RF (250 trees) prediction with 0.83 (see Table S2). Figure 4 shows the optimal U-Net segmentation from a combination of S2 multispectral and S1 dual polarization bands (Dataset 4) and a comparison with S2 composites. Figures S6 and S7 present the paddy rice maps generated using the U-Net model and RF classifier (with 500 trees). The U-Net segmentation and Random Forest predictions using Dataset 4 were compared (see Figures S8 and S9).

Figure 4.

Optimal U-Net segmentation generated from a combination of Sentinel-2 multispectral and Sentinel-1 dual polarization bands (Dataset 4). The three inset maps represent mapped paddy rice fields and their corresponding Sentinel-2 image coverage.
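The OA and OK values compared in this section both follow from an error matrix. The sketch below assumes that OK denotes the overall kappa coefficient (Cohen's kappa) and uses a hypothetical two-class error matrix; it is a minimal illustration rather than the assessment procedure used in the study.

```python
from typing import List, Tuple


def overall_accuracy_and_kappa(cm: List[List[int]]) -> Tuple[float, float]:
    """Compute overall accuracy and Cohen's kappa from a square error matrix.

    cm[i][j] is the number of validation points with reference class i
    and predicted class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    observed = sum(cm[k][k] for k in range(n)) / total
    # Chance agreement: products of matching row and column totals.
    expected = sum(
        sum(cm[k]) * sum(cm[i][k] for i in range(n)) for k in range(n)
    ) / (total * total)
    if expected == 1.0:
        return observed, 0.0  # degenerate case: only one class present
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


# Hypothetical counts: class 0 = other land-use, class 1 = rice fields.
cm = [[80, 20],
      [10, 90]]

oa, kappa = overall_accuracy_and_kappa(cm)
print(f"OA={oa:.2%}, kappa={kappa:.2f}")
```

Kappa discounts the agreement expected by chance, which is why it is lower than OA for the same error matrix.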

3.3. Transferability of Operational ResU-Net Model

Based on the outcomes of RQs 1 and 2, the proposed operational workflow was evaluated. The optimal paddy rice model (i.e., for Dataset 4) was applied to a more extensive spatial coverage over different years (i.e., 2016, 2018 and 2020). The five districts in the southeastern parts of Heilongjiang province (i.e., Xiangyang, Qianjin, Jianshan, Taoshan, and Jiguan) have similar rice crop practices. They represent the site used in developing the ResU-Net model adopted in the study. This section of the paper outlines the accuracy assessment results of the upscaled ResU-Net model based on the optimal dataset identified in RQs 1 and 2.

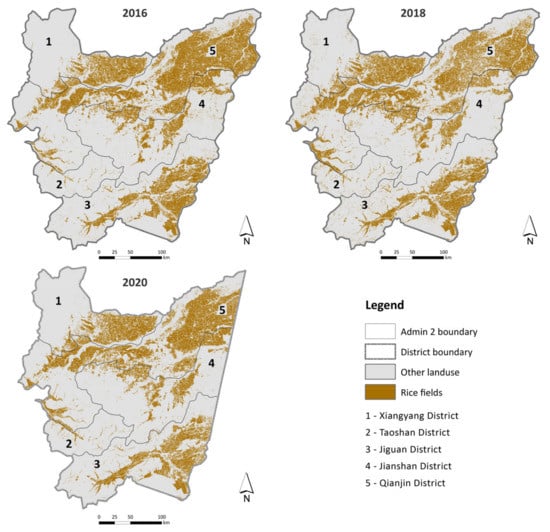

Table S3 presents the accuracy assessment results associated with the upscaled paddy rice mapping on the district scale for 2016, 2018, and 2020. The ground validation data used to assess the yearly upscaled paddy rice maps (dated 2016, 2018, and 2020) were sourced independently from Google Earth. The results had OK and OA values of 0.86 and 94% (2016), 0.74 and 90% (2018), and 0.73 and 90% (2020). The paddy rice F1-Scores for the 2016, 2018, and 2020 upscaled predictions were 0.89, 0.81, and 0.80, respectively. Figure 5 presents the mapped paddy rice fields of selected districts in southeastern Heilongjiang province (i.e., Xiangyang, Taoshan, Jianshan, Jiguan, and Qianjin districts).

Figure 5.

Mapped paddy rice fields of selected districts in southeastern Heilongjiang province (Xiangyang, Taoshan, Jianshan, Jiguan, and Qianjin). The 2020 boundaries were modified because of the limited Sentinel-1 radar data coverage in Jianshan, Jiguan, and Qianjin districts.

3.4. Spatial–Temporal Dynamics of Paddy Rice Fields from 2016 to 2020

Figure S10A shows the spatial distribution of mapped paddy rice fields for the five districts in Southeast Heilongjiang province. The spatial extent of mapped paddy rice fields over the study area was 2,363,423 hectares (2016), 2,308,506 hectares (2018), and 2,010,532 hectares (2020).

The spatial extent of the study area was modified (for 2018 and 2020) to accommodate the limited availability of S1 SAR data coverage across the three affected districts (namely, Jianshan, Jiguan and Qianjin) (Figure 5). This step made it possible to determine the rates of change in paddy rice distribution over two epochs, namely 2016–2018 and 2018–2020.
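Determining change between two dates amounts to a per-pixel cross-tabulation of two binary rice maps. The sketch below is a minimal illustration under stated assumptions, not the workflow used in the study: the tiny grids are hypothetical, and the pixel area of 0.01 ha assumes 10 m × 10 m pixels.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Assumption: 10 m x 10 m pixels, so one pixel covers 0.01 ha.
PIXEL_AREA_HA = 0.01

# Transition names keyed by (earlier, later); 1 = paddy rice, 0 = other land-use.
TRANSITIONS: Dict[Tuple[int, int], str] = {
    (1, 1): "rice unchanged",
    (1, 0): "rice to other",
    (0, 1): "other to rice",
    (0, 0): "other unchanged",
}


def change_matrix(earlier: List[List[int]],
                  later: List[List[int]]) -> Dict[str, Dict[str, float]]:
    """Cross-tabulate two co-registered binary maps into four transition classes."""
    counts: Counter = Counter()
    for row_a, row_b in zip(earlier, later):
        for a, b in zip(row_a, row_b):
            counts[TRANSITIONS[(a, b)]] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("input maps contain no pixels")
    return {
        name: {
            "pixels": counts[name],
            "hectares": counts[name] * PIXEL_AREA_HA,
            "percent": 100.0 * counts[name] / total,
        }
        for name in TRANSITIONS.values()
    }


# Hypothetical maps for two dates.
map_earlier = [[1, 1, 0, 0],
               [1, 0, 0, 0]]
map_later = [[1, 0, 0, 1],
             [1, 0, 0, 0]]

result = change_matrix(map_earlier, map_later)
for name, stats in result.items():
    print(name, stats)
```

Both maps must share the same grid and extent, which is why the study area boundary is harmonized before epochs are compared.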

Table 5 presents the change detection analysis results for both epochs, 2016–2018 and 2018–2020. The results indicated that the proportion of paddy rice fields converted to other land-use in the first epoch (i.e., 2016–2018) was 5% (approximately 437,123 hectares). For the second epoch (i.e., 2018–2020), the spatial extent of paddy rice fields converted to other land-use was approximately 359,515 hectares (i.e., approximately 4% of the study area). The other land-use classes converted to paddy rice covered approximately 378,831 hectares (4% of the total landscape) in 2016–2018 and 328,354 hectares (3% of the total landscape) in 2018–2020 (Figure S10B). Between 2016 and 2018, 71% of the study area remained other land-use, while 20% remained paddy rice fields. These had spatial extents of approximately 6,832,948 and 1,932,610 hectares, respectively. Similarly, for the second epoch (i.e., 2018–2020), the spatial extents of unchanged other land-use and paddy rice fields were 6,459,426 hectares (i.e., 67% of the study area) and 1,689,886 hectares (18% of the study area), respectively (Table 5 and Figure S10). Figures S11 and S12 show the change detection maps and sample hotspot locations of paddy rice fields for the two epochs, 2016 to 2018 and 2018 to 2020, respectively.

Table 5.

Results of change detection analysis for 2016–2018 and 2018–2020 time intervals.
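The percentages quoted alongside the change detection areas can be checked with simple arithmetic. The sketch below uses the areas reported in the text and assumes, as a working hypothesis rather than a stated fact, that every share is taken relative to the sum of the four 2016–2018 transition classes (about 9.58 million hectares). Under that assumption, the rounded shares match the percentages quoted for both epochs.

```python
from typing import Dict

# Areas in hectares as reported in the text for each transition class.
EPOCH_2016_2018: Dict[str, int] = {
    "rice to other": 437_123,
    "other to rice": 378_831,
    "other unchanged": 6_832_948,
    "rice unchanged": 1_932_610,
}
EPOCH_2018_2020: Dict[str, int] = {
    "rice to other": 359_515,
    "other to rice": 328_354,
    "other unchanged": 6_459_426,
    "rice unchanged": 1_689_886,
}

# Assumption: the denominator is the summed area of the 2016-2018 classes.
TOTAL_HA = sum(EPOCH_2016_2018.values())


def rounded_shares(areas: Dict[str, int], total: int) -> Dict[str, int]:
    """Express each area as a whole-number percentage of the total."""
    return {name: round(100.0 * ha / total) for name, ha in areas.items()}


shares_1 = rounded_shares(EPOCH_2016_2018, TOTAL_HA)
shares_2 = rounded_shares(EPOCH_2018_2020, TOTAL_HA)
print(TOTAL_HA)
print(shares_1)
print(shares_2)
```

The second-epoch classes sum to a smaller area than the first-epoch classes, consistent with the modified study area boundary described earlier.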

4. Discussion

4.1. Approach for Effective Paddy Rice Mapping

The availability of accurate and timely land monitoring information, especially for paddy rice fields in China and other parts of the world, is crucial for food security and sustainable development. Satellite remote sensing offers a cost-effective monitoring solution compared to the traditional “feet on the ground” approach, which requires time-consuming and costly human resources.

The outputs of RQs 1 and 2 demonstrate the value of combining SAR and optical data captured over the rice crop growth stages (i.e., vegetative, reproductive, and ripening) as inputs to the ResNet-34 CNN model for mapping paddy rice at the field scale. The combined use of Sentinel optical and radar derivatives (i.e., Datasets 4, 5, 6, and 7) captured during the rice crop growth cycle in the evaluated ResU-Net models showed strong potential for paddy rice mapping (with the rice F1-Score ranging from 0.94 to 0.95) and overall performance (the mean F1-Score was between 0.81 and 0.82). The study identified the optimal dataset for paddy rice mapping as Dataset 4, a fusion of S2 multispectral bands (VNIR, red edge, and SWIR) and S1-SAR dual polarization bands (VH and VV) acquired in the crop growing season. These results substantiate existing studies that associate multisensor data fusion techniques with crop-specific phenological data as a practical approach for mapping paddy rice distribution [19,44,55,56,57]. Compared to using only single-sensor inputs, multispectral-derived indices, or radar inputs, using the full range of spectral information in the VNIR, red edge, and SWIR regions proved more effective in paddy rice mapping. The VNIR, SWIR, and red edge regions captured by optical sensors are sensitive to changes in the physiology of rice crops [58,59]. The red-edge region is a valuable input for crop discrimination, crop health assessment, and species identification [60,61]. Studies have evaluated the performance and importance of SAR satellite data (such as RADARSAT-2 or Sentinel-1) for crop and soil moisture monitoring and assessment [62,63,64].

As demonstrated by Reference [19], the temporal backscatter profiles of the VV and VH polarization channels vary over the rice crop growing season. The results of this study showed that, for the selected rice field training data, VV backscatter was higher than VH backscatter, a pattern observed in similar studies [65]. The VH backscatter over rice fields is known to increase consistently during the reproductive and ripening stages of the rice crop growing season [19,65]. A similar trend was observed here from panicle initiation to the early milking phase (i.e., the reproductive stage), when the rice crop attains peak growth and tends toward the maturity phase. For the VV polarization, an early peak at the flowering phase (i.e., the reproductive stage) is a usual occurrence in paddy rice fields in the study area [19]. The sustained VH temporal backscatter profile is attributed to the signal being less affected by changes in waterlogged surfaces [66]. Both VH and VV polarization channels offer valuable information on the temporal changes in backscatter over rice fields, making them a valuable resource in large-scale mapping studies. As demonstrated in several studies, the singular or combined use of optical and SAR satellite data for paddy rice mapping has become common practice [4,10,19,28,52,66,67]. In this study, combining the S1 SAR and S2 optical inputs was beneficial for accurately mapping the spatial extent of paddy rice fields.

The multisensor datasets outperformed the single-sensor datasets. The single-sensor datasets of multispectral bands alone (Dataset 1), radar bands alone (Dataset 2), and MS optical indices (Dataset 3) had mean IoU values of 0.64, 0.60, and 0.59, respectively. In contrast, the multisensor datasets had mean IoU values ranging from 0.65 to 0.67 (Table S1). Overall, the combination of the mean F1-Score, the mean IoU, and the F1-Score of the paddy rice class showed that the combined optical and radar datasets outperformed the singular use of either optical or radar datasets (Figure S2). When the Sentinel radar and optical data captured during the paddy rice crop cycle were utilized in the DL model, the rice fields were better identified.

Compared with several studies that have utilized DL techniques (Wei et al. 2021; Xu et al. 2021), the accuracy of the proposed approach is competitive. Wei et al. (2021) exploited the phenological similarity contained in S1 SAR time-series data to identify rice distribution with DL techniques. The overall accuracy of their study was 91%, compared to the 94% obtained in this study. Similarly, Reference [9] utilized DL techniques to map rice fields using S1 SAR data as input into the model and obtained an accuracy of 91%. Our approach explores the ResU-Net DL framework, which allows the training of multiple layers [68]. Reference [69] developed a U-Net DL model based on multi-temporal S1 SAR imagery (2019–2021) for paddy rice mapping across a landscape in northeast China similar to that of this study. The producers′ and users′ accuracies of our study were comparable with the results of [69]. Both studies provide a practical approach toward large-scale rice mapping based on multi-temporal satellite imagery. Table S4 in the Supplementary Materials presents the error matrices of the U-Net segmentation experiments.

In addition to the effectiveness of the prediction technique, using open-source S1 SAR and S2 optical data through GEE is a cost-effective and sustainable approach in the long term. Considering the level of detail required for mapping small paddy rice fields in the region, the high temporal and spatial resolution offered by combining S1 SAR and S2 optical imagery makes it an efficient approach for mapping paddy rice distribution.

However, a limitation of the DL technique is that it depends heavily on the large volume of training information needed for the modelling process. Establishing science and agriculture hubs, like the Science and Technology Backyard [70], would provide valuable training and validation data for rice mapping, yield estimation and regional fertilizer application studies. These experimental stations can also train local technicians and farmers on water-saving technology and practical fertilizer usage [19,70]. As part of the next steps in this study, the authors propose collecting more up-to-date seasonal training data to further train and validate the developed U-Net model.

4.2. Understanding Spatial–Temporal Dynamics of Paddy Rice Agriculture

The change detection analysis revealed the spatial distribution of paddy rice fields across the study area over the two epochs analyzed. The spatial extent of paddy rice fields converted to other land-use was 377,895 hectares between 2016 and 2018, and it declined by 18,551 hectares in the second epoch (i.e., 2018–2020) (Figure S13A). At the district level, the change detection analysis revealed a shared rising trend in the percentage of paddy rice fields converted to other land-use for three districts, namely Xiangyang, Taoshan, and Jiguan (Figure S13B). The other two districts (Jianshan and Qianjin) experienced declines in the percentage of paddy rice fields converted to other land-use between the two epochs (Figure S13B). Overall, the percentage of paddy rice fields converted to other land-use for all five districts declined by 0.2 percentage points between the two epochs (2016–2018 = 4.3% and 2018–2020 = 4.1%; see Figure S13B). These variations could be attributed to varied management practices by farmers across the five districts considered in this study. Research on change detection monitoring of paddy rice fields across parts of northeastern China indicates that rice production areas have experienced a substantial decline since 2015 [71]. That study noted that although the northeast of China is an emerging force in rice production, the region is affected by challenges related to sustainable agricultural practices, over-fertilization, and water degradation [71]. Considering the large volumes of water needed for paddy rice production, there are concerns about the availability and reliability of its supply to farmers in the northeastern parts of China [72]. Due to the upsurge in paddy rice farming in the region, the pressure from pumping groundwater for irrigation and inter-basin water transfer from rivers negatively affects water availability [71]. This current practice does not offer aquifers sufficient time to recharge.

5. Conclusions

The main objective of this study was to evaluate several singular and multisensor approaches for paddy rice field mapping in a deep residual CNN model. Considering the cloud cover limitations associated with optical sensors such as S2, complementary radar data from the S1-SAR sensor is a promising approach for mapping rice. This study explored several combinations of multi-temporal multispectral and radar derivatives as inputs to a ResU-Net CNN segmentation model for paddy rice field mapping. The study showed that the optimal dataset for paddy rice mapping was a fusion of S2 multispectral bands (VNIR, RE and SWIR) and S1-SAR dual polarization bands (VH and VV) acquired in the rice crop growing season (i.e., vegetative, reproductive, and ripening). The ResU-Net model, with an OA of 94%, outperformed the shallow RF classifier by about three percentage points.

Regarding paddy rice prediction, the F1-Score of the ResU-Net model was 0.92 compared to 0.84 for the RF classification (that used 500 trees in the modelling process). The proposed DL method showed strong potential to be upscaled and transferred to a more extensive spatial coverage. The optimal model identified in the study was subsequently used to generate yearly paddy rice maps between 2016 and 2020 (at a 2-year interval) covering five districts in the southeastern parts of Heilongjiang province. These yearly paddy rice maps were used to quantify and better understand the dynamics of paddy rice fields in the study area and the challenges associated with them. The change detection analysis revealed that the spatial extent of paddy rice fields converted to other land-use was 377,895 hectares between 2016 and 2018 and later declined by 18,551 hectares in the second epoch (i.e., 2018–2020).

The proposed framework can accurately identify paddy rice fields locally, better inform decision-making, and support food security in the region. As the next step in this study, a DL approach shall be explored using the identified optimal inputs detailed in this research. The approach would prove beneficial to building a paddy rice monitoring inventory system that assists with decision-making and policy implementation to benefit smallholder farmers in the region.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/rs15061517/s1. The Supplementary Materials contain a list of figures and tables related to the results obtained in this study. In addition, hyperlinks to the GEE codes and an inventory of the results are presented. Figure S1: Learning rates chart of U-Net segmentation models evaluated in the study. Figure S2: Accuracy assessment of U-Net prediction experiments performed in the study. Figure S3: Preview of ground truth and prediction chips for one of the optimal datasets from the U-Net DL experiments (Dataset 4). Figure S4: U-Net prediction of paddy rice fields generated using datasets evaluated in the study. Figure S5: Accuracy assessment of U-Net prediction and Random Forest classification using optimal dataset. Figure S6: U-Net CNN segmentation paddy rice map produced from a combination of Sentinel-2 multispectral and Sentinel-1 dual polarization bands (Dataset 4). Figure S7: Random Forest (500 trees) paddy rice map produced from a combination of Sentinel-2 multispectral and Sentinel-1 dual polarization bands (Dataset 4). Figure S8: Comparison of U-Net segmentation and Random Forest predictions using Dataset 4. Figure S9: Comparison of U-Net segmentation and Random Forest predictions using Dataset 4 (contd.). Figure S10: Graph showing (A) the spatial distribution of mapped paddy rice fields and (B) the percentages of land-use change relative to the total project area for 2016, 2018, and 2020, respectively. Figure S11: Change detection analysis results of selected sites between 2016 and 2018. Figure S12: Change detection analysis results of selected sites between 2018 and 2020. Figure S13: Graph showing (A) changes in paddy rice distribution and (B) the percentage of paddy rice loss for each district in the study area over two epochs (i.e., 2016–2018 and 2018–2020). Table S1: Accuracy assessment results of U-Net predictions to establish the optimal image combination. Table S2: Error matrix and classification accuracy results of the Random Forest classification and optimal U-Net prediction. Table S3: Accuracy assessment results associated with the upscaled paddy rice mapping on the district scale for 2016, 2018, and 2020. Table S4: Error matrix and classification accuracy results of U-Net predictions.

Author Contributions

Conceptualization, A.O.O., G.A.B. and Y.M.; methodology, A.O.O., G.A.B. and Y.M.; software, A.O.O.; validation, A.O.O. and Y.M.; formal analysis, A.O.O., G.A.B. and Y.M.; investigation, A.O.O.; resources, A.O.O.; data curation, A.O.O.; writing (original draft preparation), A.O.O.; writing (review and editing), A.O.O. and Y.M.; funding acquisition, A.O.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All data generated or analyzed during this study are included in the manuscript. An inventory of the earth observation data and results generated in this study is available as a web app for information dissemination purposes. The web app can be accessed using this link: https://jolexy.maps.arcgis.com/apps/webappviewer/index.html?id=d4d061430bea480eb20ace85919861e5 (accessed on 20 February 2023).

Acknowledgments

The authors would like to thank the anonymous reviewers and editors for their valuable comments and inputs to improve the quality of this manuscript. Thanks to Google Earth Engine and Copernicus for making Sentinel imagery freely available for the study.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- FAOSTAT. FAO Statistical Databases (Food and Agriculture Organization of the United Nations) Databases. UW-Madison Libraries. 2020. Available online: http://digital.library.wisc.edu/1711.web/faostat (accessed on 24 December 2022).

- Shahbandeh, M. Paddy Rice Production Worldwide 2017–2018, by Country. Statista. Available online: https://www.statista.com/statistics/255937/leading-rice-producers-worldwide/ (accessed on 9 July 2020).

- Elert, E. Rice by the numbers: A good grain. Nature 2014, 514, S50–S51.

- Singha, M.; Dong, J.; Zhang, G.; Xiao, X. High resolution paddy rice maps in cloud-prone Bangladesh and Northeast India using Sentinel-1 data. Sci. Data 2019, 6, 26.

- Abdelraouf, M.A.; Abouelghar, M.A.; Belal, A.-A.; Saleh, N.; Younes, M.; Selim, A.; Emam, E.M.; Elwesemy, A.; Kucher, D.E.; Magignan, S. Crop Yield Prediction Using Multi Sensors Remote Sensing. Egypt. J. Remote Sens. Space Sci. 2022, 25, 711–716.

- Son, N.T.; Chen, C.F.; Chen, C.C. Remote Sensing Time Series Analysis for Early Rice Yield Forecasting Using Random Forest Algorithm. In Remote Sensing of Agriculture and Land Cover/Land Use Changes in South and Southeast Asian Countries; Vadrevu, K.P., Toan, T.L., Ray, S.S., Justice, C., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 353–366.

- Wen, Y.; Li, X.; Mu, H.; Zhong, L.; Chen, H.; Zeng, Y.; Miao, S.; Su, W.; Gong, P.; Li, B.; et al. Mapping corn dynamics using limited but representative samples with adaptive strategies. ISPRS J. Photogramm. Remote Sens. 2022, 190, 252–266.

- Lowder, S.K.; Skoet, J.; Raney, T. The Number, Size, and Distribution of Farms, Smallholder Farms, and Family Farms Worldwide. World Dev. 2016, 87, 16–29.

- Xu, L.; Zhang, H.; Wang, C.; Wei, S.; Zhang, B.; Wu, F.; Tang, Y. Paddy Rice Mapping in Thailand Using Time-Series Sentinel-1 Data and Deep Learning Model. Remote Sens. 2021, 13, 3994.

- Zhu, A.-X.; Zhao, F.-H.; Pan, H.-B.; Liu, J.-Z. Mapping Rice Paddy Distribution Using Remote Sensing by Coupling Deep Learning with Phenological Characteristics. Remote Sens. 2021, 13, 1360.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Huang, H.; Chen, Y.; Clinton, N.; Wang, J.; Wang, X.; Liu, C.; Gong, P.; Yang, J.; Bai, Y.; Zheng, Y.; et al. Mapping major land cover dynamics in Beijing using all Landsat images in Google Earth Engine. Remote Sens. Environ. 2017, 202, 166–176.

- Dong, J.; Xiao, X.; Menarguez, M.A.; Zhang, G.; Qin, Y.; Thau, D.; Biradar, C.; Moore, B., III. Mapping paddy rice planting area in northeastern Asia with Landsat 8 images, phenology-based algorithm and Google Earth Engine. Remote Sens. Environ. 2016, 185, 142–154.

- Casu, F.; Manunta, M.; Agram, P.S.; Crippen, R.E. Big Remotely Sensed Data: Tools, applications and experiences. Remote Sens. Environ. 2017, 202, 1–2.

- Gulácsi, A.; Kovács, F. Sentinel-1-imagery-based high-resolution water cover detection on wetlands, Aided by Google Earth Engine. Remote Sens. 2020, 12, 1614.

- Hu, Y.; Dong, Y.; Batunacun. An automatic approach for land-change detection and land updates based on integrated NDVI timing analysis and the CVAPS method with GEE support. ISPRS J. Photogramm. Remote Sens. 2018, 146, 347–359.

- Oliphant, A.J.; Thenkabail, P.S.; Teluguntla, P.; Xiong, J.; Gumma, M.K.; Congalton, R.G.; Yadav, K. Mapping cropland extent of Southeast and Northeast Asia using multi-year time-series Landsat 30-m data using a random forest classifier on the Google Earth Engine cloud. Int. J. Appl. Earth Obs. Geoinf. 2019, 81, 110–124.

- Le Toan, T.; Ribbes, F.; Wang, L.-F.; Floury, N.; Ding, K.-H.; Kong, J.A.; Fujita, M.; Kurosu, T. Rice crop mapping and monitoring using ERS-1 data based on experiment and modeling results. IEEE Trans. Geosci. Remote Sens. 1997, 35, 41–56.

- Onojeghuo, A.O.; Blackburn, G.A.; Wang, Q.; Atkinson, P.M.; Kindred, D.; Miao, Y. Mapping paddy rice fields by applying machine learning algorithms to multi-temporal Sentinel-1A and Landsat data. Int. J. Remote Sens. 2018, 39, 1042–1067.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Huete, A.R.; Liu, H.Q.; Batchily, K.V.; van Leeuwen, W. A comparison of vegetation indices over a global set of TM images for EOS-MODIS. Remote Sens. Environ. 1997, 59, 440–451.

- Chandrasekar, K.; Sesha Sai, M.V.R.; Roy, P.S.; Dwevedi, R.S. Land Surface Water Index (LSWI) response to rainfall and NDVI using the MODIS Vegetation Index product. Int. J. Remote Sens. 2010, 31, 3987–4005.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Onojeghuo, A.O.; Blackburn, G.A.; Wang, Q.; Atkinson, P.; Kindred, D.; Miao, Y. Rice crop phenology mapping at high spatial and temporal resolution using downscaled MODIS time-series. GIScience Remote Sens. 2018, 55, 659–677.

- Xiao, X.; Boles, S.; Liu, J.; Zhuang, D.; Frolking, S.; Li, C.; Salas, W.; Moore, B., III. Mapping paddy rice agriculture in southern China using multi-temporal MODIS images. Remote Sens. Environ. 2005, 95, 480–492.

- Boschetti, M.; Nutini, F.; Manfron, G.; Brivio, P.A.; Nelson, A. Comparative Analysis of Normalised Difference Spectral Indices Derived from MODIS for Detecting Surface Water in Flooded Rice Cropping Systems. PLoS ONE 2014, 9, e88741.

- Zhao, R.; Li, Y.; Ma, M. Mapping Paddy Rice with Satellite Remote Sensing: A Review. Sustainability 2021, 13, 20503.

- Thorp, K.; Drajat, D. Deep machine learning with Sentinel satellite data to map paddy rice production stages across West Java, Indonesia. Remote Sens. Environ. 2021, 265, 112679.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Ni, R.; Tian, J.; Li, X.; Yin, D.; Li, J.; Gong, H.; Zhang, J.; Zhu, L.; Wu, D. An enhanced pixel-based phenological feature for accurate paddy rice mapping with Sentinel-2 imagery in Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2021, 178, 282–296.

- Xia, L.; Zhao, F.; Chen, J.; Yu, L.; Lu, M.; Yu, Q.; Liang, S.; Fan, L.; Sun, X.; Wu, S.; et al. A full resolution deep learning network for paddy rice mapping using Landsat data. ISPRS J. Photogramm. Remote Sens. 2022, 194, 91–107.

- Ranjan, A.K.; Parida, B.R. Paddy acreage mapping and yield prediction using sentinel-based optical and SAR data in Sahibganj district, Jharkhand (India). Spat. Inf. Res. 2019, 27, 399–410.

- Gnyp, M.L.; Miao, Y.; Yuan, F.; Ustin, S.L.; Yu, K.; Yao, Y.; Huang, S.; Bareth, G. Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages. Field Crops Res. 2014, 155, 42–55.

- Wang, F.-M.; Huang, J.-F.; Wang, X.-Z. Identification of Optimal Hyperspectral Bands for Estimation of Rice Biophysical Parameters. J. Integr. Plant Biol. 2008, 50, 291–299.

- Liu, J.; Kuang, W.; Zhang, Z.; Xu, X.; Qin, Y.; Ning, J.; Zhou, W.; Zhang, S.; Li, R.; Yan, C.; et al. Spatiotemporal characteristics, patterns, and causes of land-use changes in China since the late 1980s. J. Geogr. Sci. 2014, 24, 195–210.

- Dong, J.; Xiao, X.; Kou, W.; Qin, Y.; Zhang, G.; Li, L.; Jin, C.; Zhou, Y.; Wang, J.; Biradar, C.; et al. Tracking the dynamics of paddy rice planting area in 1986–2010 through time series Landsat images and phenology-based algorithms. Remote Sens. Environ. 2015, 160, 99–113.

- ESA. ESA Step—Science Toolbox Exploitation Platform. European Space Agency. 2019. Available online: http://step.esa.int/main/doc/tutorials/ (accessed on 12 July 2020).

- Kau, L.J.; Lee, T.L. An HSV model-based approach for the sharpening of color images. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; IEEE: New York, NY, USA, 2013.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- ESRI. How U-Net works? 2022. Available online: https://developers.arcgis.com/python/guide/how-unet-works/?rsource=https%3A%2F%2Flinks.esri.com%2FDevHelp_HowUNetWorks (accessed on 29 December 2022).

- Yang, L.; Huang, R.; Huang, J.; Lin, T.; Wang, L.; Mijiti, R.; Wei, P.; Tang, C.; Shao, J.; Li, Q.; et al. Semantic Segmentation Based on Temporal Features: Learning of Temporal-Spatial Information from Time-Series SAR Images for Paddy Rice Mapping. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

- Dang, K.B.; Nguyen, M.H.; Nguyen, D.A.; Phan, T.T.H.; Giang, T.L.; Pham, H.H.; Nguyen, T.N.; Tran, T.T.V.; Bui, D.T. Coastal Wetland Classification with Deep U-Net Convolutional Networks and Sentinel-2 Imagery: A Case Study at the Tien Yen Estuary of Vietnam. Remote Sens. 2020, 12, 3270.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual u-net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Teluguntla, P.; Thenkabail, P.S.; Oliphant, A.; Xiong, J.; Gumma, M.K.; Congalton, R.G.; Yadav, K.; Huete, A. A 30-m landsat-derived cropland extent product of Australia and China using random forest machine learning algorithm on Google Earth Engine cloud computing platform. ISPRS J. Photogramm. Remote Sens. 2018, 144, 325–340.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Pelletier, C.; Valero, S.; Inglada, J.; Champion, N.; Dedieu, G. Assessing the robustness of Random Forests to map land cover with high resolution satellite image time series over large areas. Remote Sens. Environ. 2016, 187, 156–168.

- Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Kussul, N.; Skakun, S.; Shelestov, A.; Kussul, O. The use of satellite SAR imagery to crop classification in Ukraine within JECAM project. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; IEEE: New York, NY, USA, 2014.

- Park, S.; Im, J.; Park, S.; Yoo, C.; Han, H.; Rhee, J. Classification and Mapping of Paddy Rice by Combining Landsat and SAR Time Series Data. Remote Sens. 2018, 10, 447.

- Bouvet, A.; Le Toan, T.; Lam-Dao, N. Monitoring of the Rice Cropping System in the Mekong Delta Using ENVISAT/ASAR Dual Polarization Data. IEEE Trans. Geosci. Remote Sens. 2009, 47, 517–526.

- Kuenzer, C.; Knauer, K. Remote sensing of rice crop areas. Int. J. Remote Sens. 2013, 34, 2101–2139.

- Saadat, M.; Seydi, S.T.; Hasanlou, M.; Homayouni, S. A Convolutional Neural Network Method for Rice Mapping Using Time-Series of Sentinel-1 and Sentinel-2 Imagery. Agriculture 2022, 12, 2083.

- Zhai, P.; Li, S.; He, Z.; Deng, Y.; Hu, Y. Collaborative mapping rice planting areas using multisource remote sensing data. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Kuala Lumpur, Malaysia, 17–22 July 2021.

- Zhang, X.; Wu, B.; Ponce-Campos, G.E.; Zhang, M.; Chang, S.; Tian, F. Mapping up-to-Date Paddy Rice Extent at 10 M Resolution in China through the Integration of Optical and Synthetic Aperture Radar Images. Remote Sens. 2018, 10, 1200. [Google Scholar] [CrossRef]

- Du, M.; Huang, J.; Wei, P.; Yang, L.; Chai, D.; Peng, D.; Sha, J.; Sun, W.; Huang, R. Dynamic Mapping of Paddy Rice Using Multi-Temporal Landsat Data Based on a Deep Semantic Segmentation Model. Agronomy 2022, 12, 1583. [Google Scholar] [CrossRef]

- Mandal, N.; Das, D.K.; Sahoo, R.N.; Adak, S.; Kumar, A.; Viswanathan, C.; Mukherjee, J.; Rajashekara, H.; Ranjan, R.; DAS, B. Assessing rice blast disease severity through hyperspectral remote sensing. J. Agrometeorol. 2022, 24, 241–248. [Google Scholar] [CrossRef]

- Forkuor, G.; Dimobe, K.; Serme, I.; Tondoh, J.E. Landsat-8 vs. Sentinel-2: Examining the added value of sentinel-2′s red-edge bands to land-use and land-cover mapping in Burkina Faso. GIScience Remote Sens. 2018, 55, 331–354. [Google Scholar] [CrossRef]

- Jiang, X.; Fang, S.; Huang, X.; Liu, Y.; Guo, L. Rice Mapping and Growth Monitoring Based on Time Series GF-6 Images and Red-Edge Bands. Remote Sens. 2021, 13, 579. [Google Scholar] [CrossRef]

- Khabbazan, S.; Steele-Dunne, S.; Vermunt, P.; Judge, J.; Vreugdenhil, M.; Gao, G. The influence of surface canopy water on the relationship between L-band backscatter and biophysical variables in agricultural monitoring. Remote Sens. Environ. 2021, 268, 112789. [Google Scholar] [CrossRef]

- McNairn, H.; Brisco, B. The application of C-band polarimetric SAR for agriculture: A review. Can. J. Remote Sens. 2004, 30, 525–542. [Google Scholar] [CrossRef]

- Woźniak, E.; Rybicki, M.; Kofman, W.; Aleksandrowicz, S.; Wojtkowski, C.; Lewiński, S.; Bojanowski, J.; Musiał, J.; Milewski, T.; Slesiński, P.; et al. Multi-temporal phenological indices derived from time series Sentinel-1 images to country-wide crop classification. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102683. [Google Scholar] [CrossRef]

- Mansaray, L.R.; Huang, W.; Zhang, D.; Huang, J.; Li, J. Mapping Rice Fields in Urban Shanghai, Southeast China, Using Sentinel-1A and Landsat 8 Datasets. Remote Sens. 2017, 9, 257. [Google Scholar] [CrossRef]

- Nguyen, D.B.; Gruber, A.; Wagner, W. Mapping rice extent and cropping scheme in the Mekong Delta using Sentinel-1A data. Remote Sens. Lett. 2016, 7, 1209–1218. [Google Scholar] [CrossRef]

- Xiao, W.; Xu, S.; He, T. Mapping Paddy Rice with Sentinel-1/2 and Phenology-, Object-Based Algorithm—A Implementation in Hangjiahu Plain in China Using GEE Platform. Remote Sens. 2021, 13, 990. [Google Scholar] [CrossRef]

- De Bem, P.P.; Júnior, O.A.D.C.; de Carvalho, O.L.F.; Gomes, R.A.T.; Guimarāes, R.F.; Pimentel, C.M.M. Irrigated rice crop identification in Southern Brazil using convolutional neural networks and Sentinel-1 time series. Remote Sens. Appl. Soc. Environ. 2021, 24, 100627. [Google Scholar] [CrossRef]

- Wei, P.; Chai, D.; Lin, T.; Tang, C.; Du, M.; Huang, J. Large-scale rice mapping under different years based on time-series Sentinel-1 images using deep semantic segmentation model. ISPRS J. Photogramm. Remote Sens. 2021, 174, 198–214. [Google Scholar] [CrossRef]

- Jiao, X.-Q.; Zhang, H.-Y.; Ma, W.-Q.; Wang, C.; Li, X.-L.; Zhang, F.-S. Science and Technology Backyard: A novel approach to empower smallholder farmers for sustainable intensification of agriculture in China. J. Integr. Agric. 2019, 18, 1657–1666. [Google Scholar] [CrossRef]

- Xin, F.; Xiao, X.; Dong, J.; Zhang, G.; Zhang, Y.; Wu, X.; Li, X.; Zou, Z.; Ma, J.; Du, G. Large increases of paddy rice area, gross primary production, and grain production in Northeast China during 2000–2017. Sci. Total Environ. 2020, 711, 135183. [Google Scholar] [CrossRef] [PubMed]

- Lu, W.; Cheng, W.; Zhang, Z.; Xin, X.; Wang, X. Differences in rice water consumption and yield under four irrigation schedules in central Jilin Province, China. Paddy Water Environ. 2016, 14, 473–480. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).