Deep Learning-Based Apple Detection with Attention Module and Improved Loss Function in YOLO

Abstract

1. Introduction

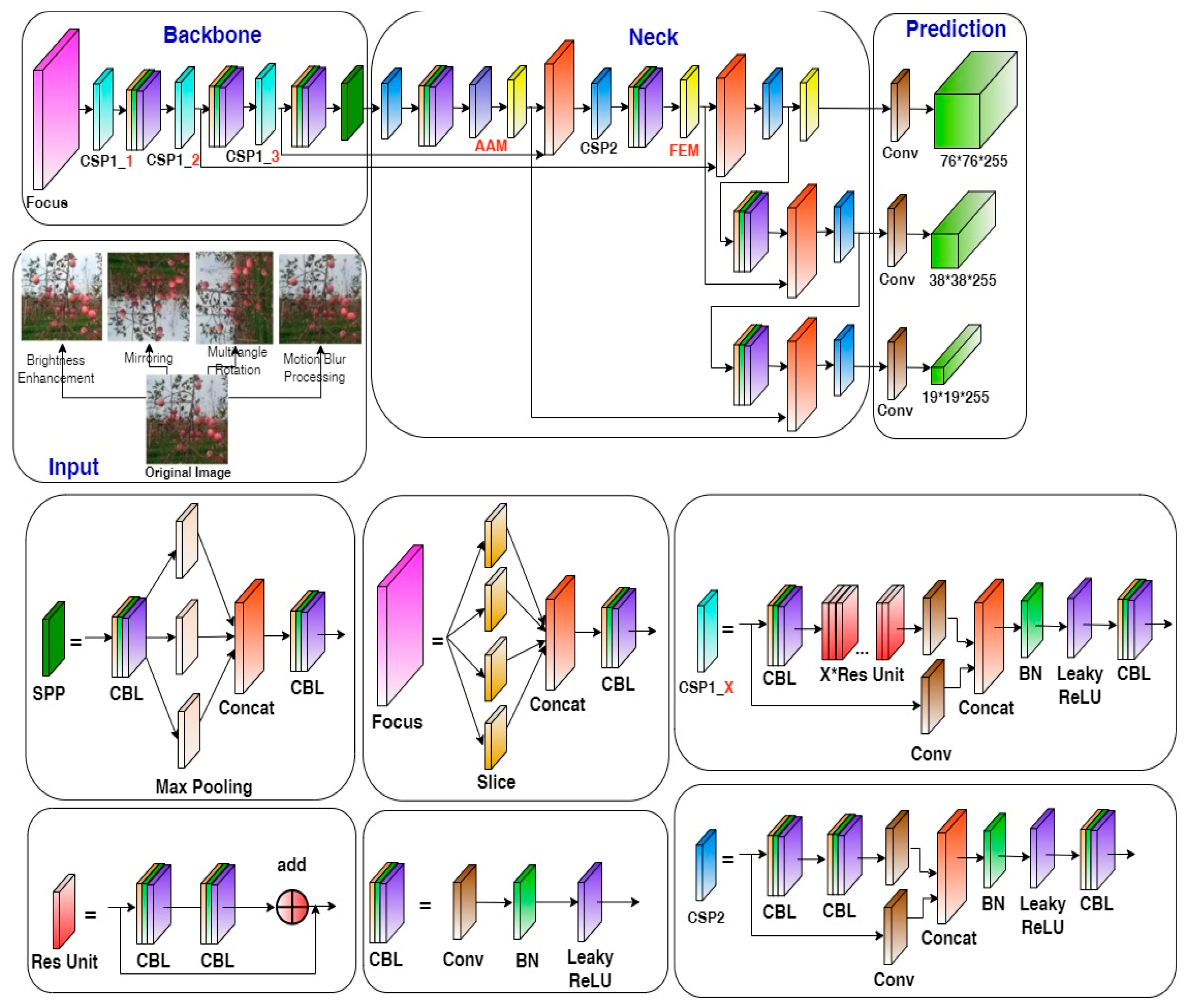

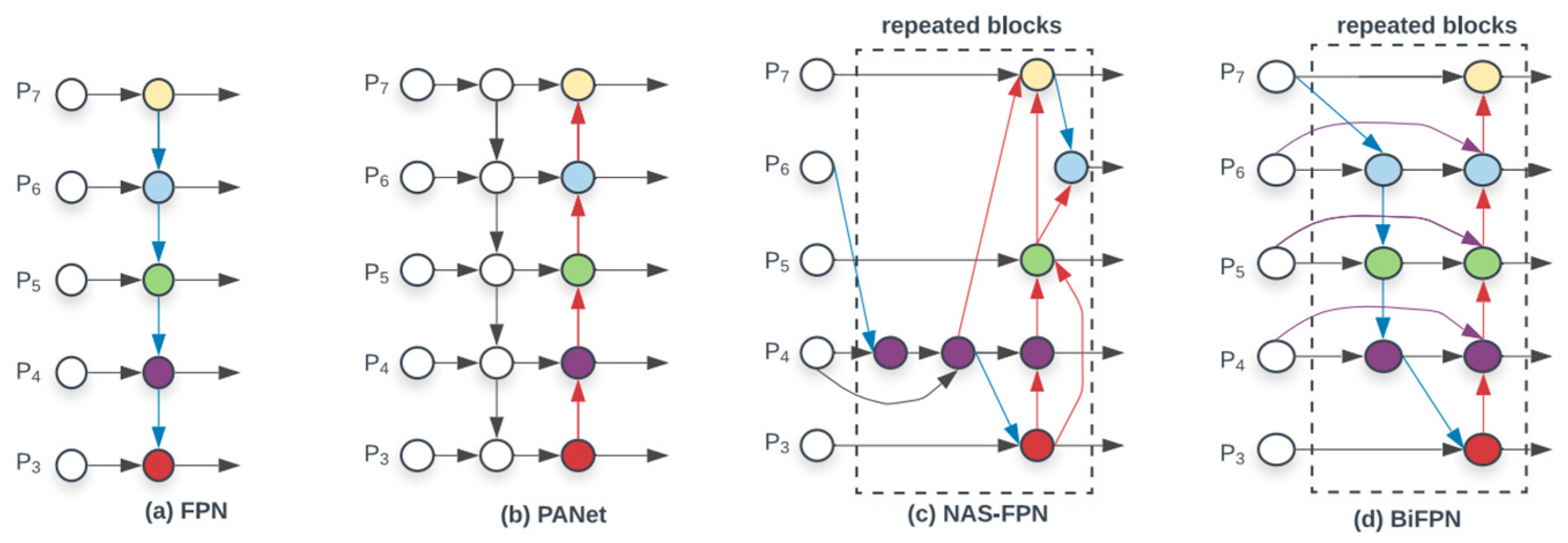

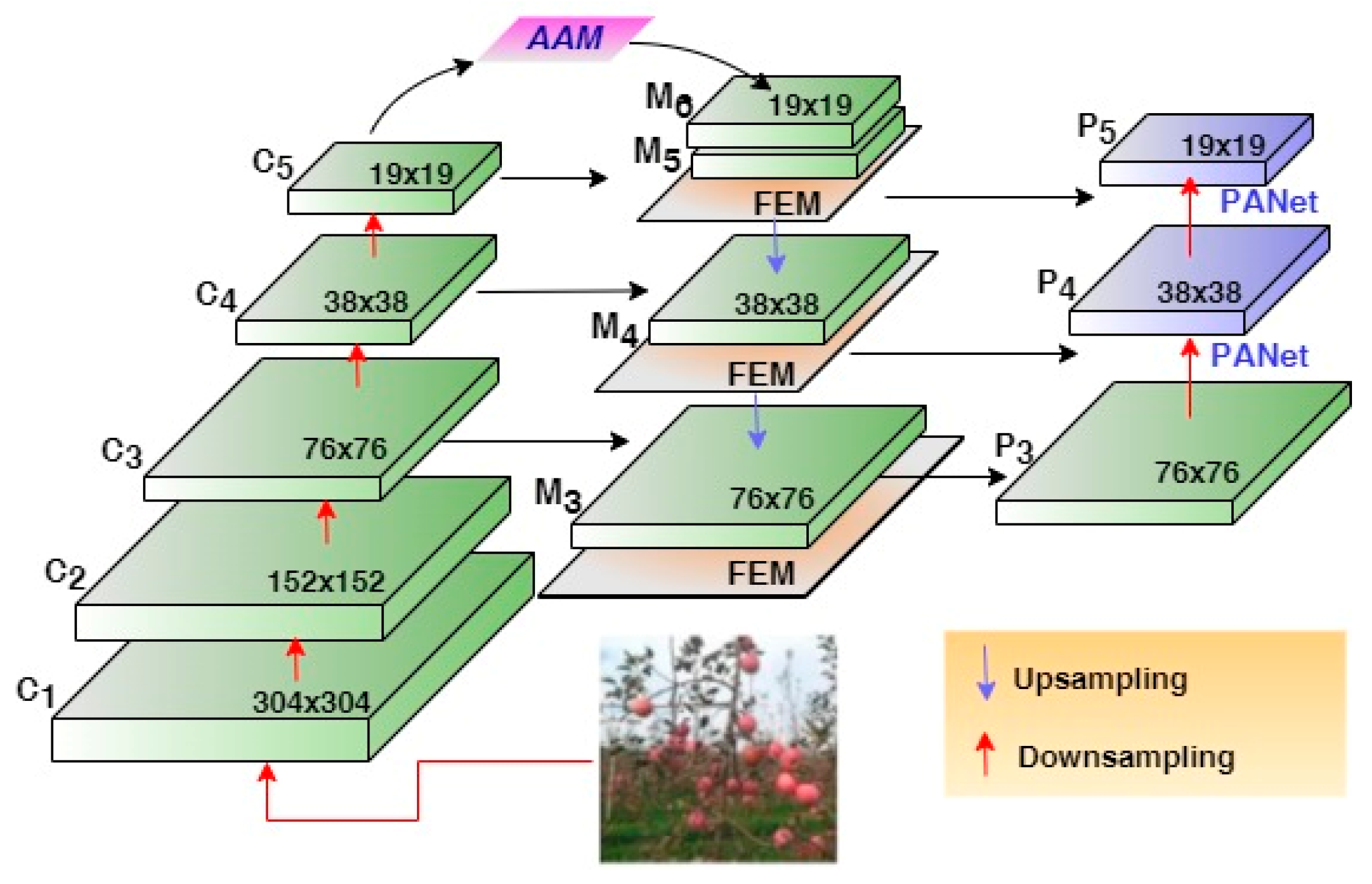

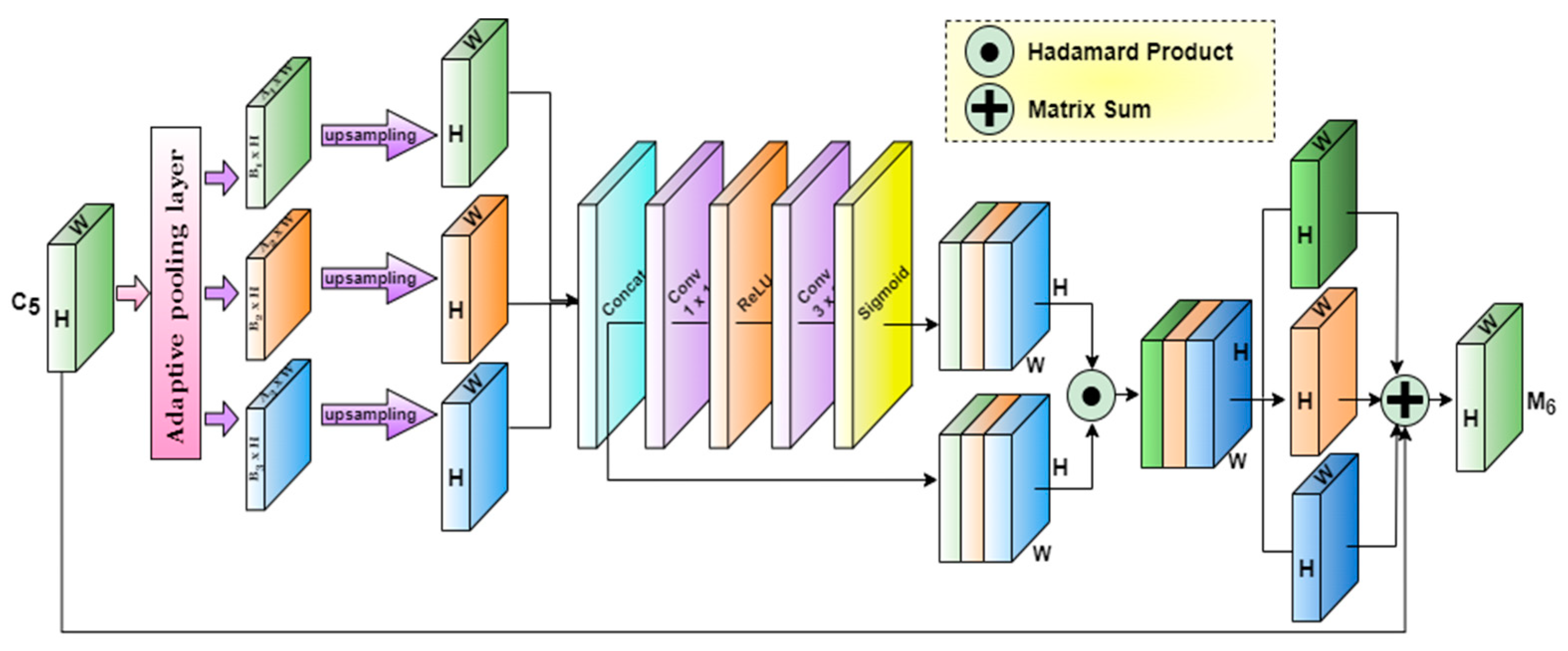

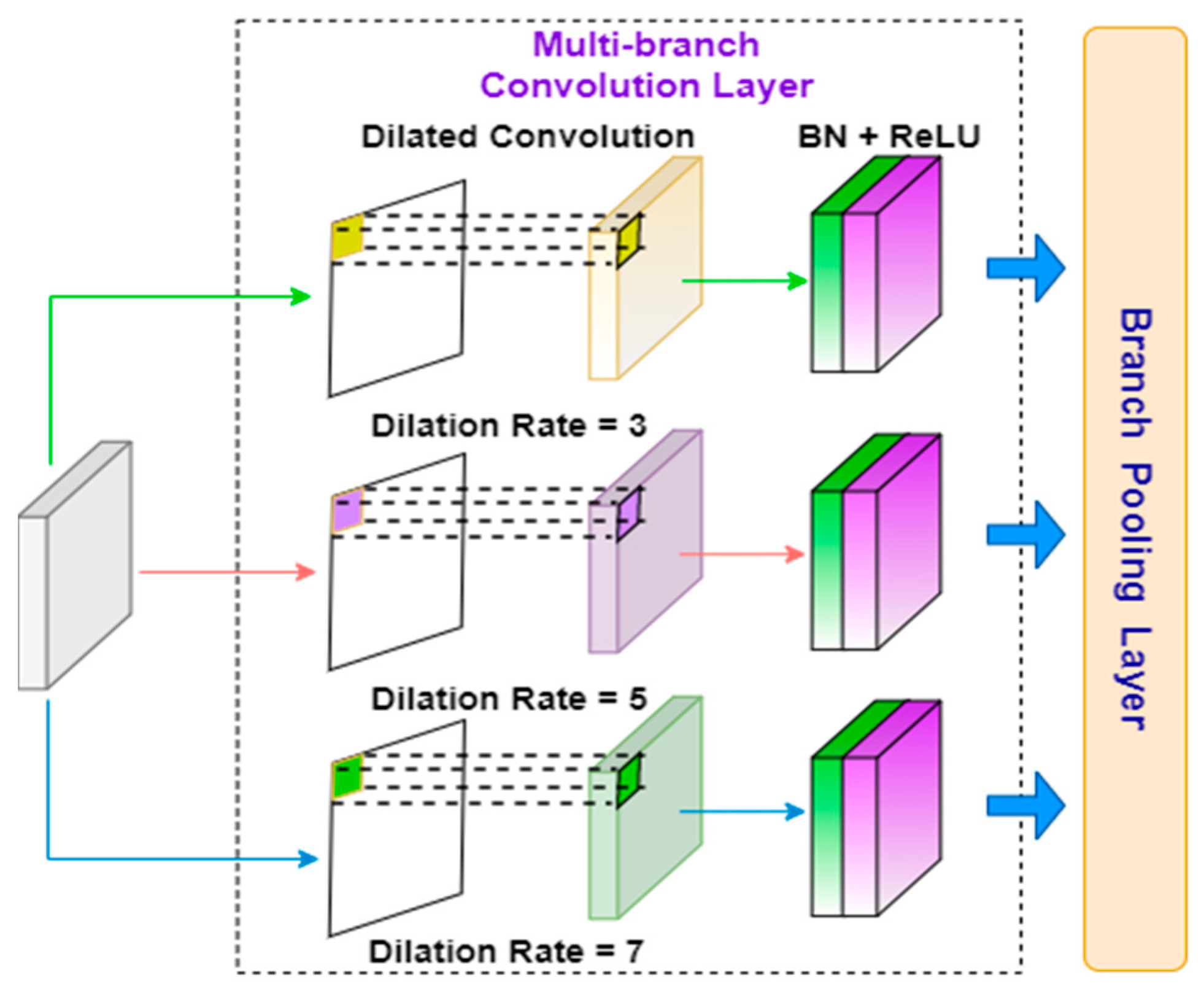

- Feature generation plays an important role in apple detection tasks because apples vary in size, occlusion, and position. To account for this, we adopted the concept of the feature pyramid network (FPN). However, the traditional FPN-based model suffers from contextual information loss. We therefore incorporated an attribute augmentation model, which helps to mitigate this loss, and a feature enhancement model, which improves the feature representation to increase the inference speed.

- To increase the robustness of the proposed approach, we apply a data augmentation scheme that comprises brightness variation, image mirroring, rotation, motion blur, and added noise.

2. Proposed Model

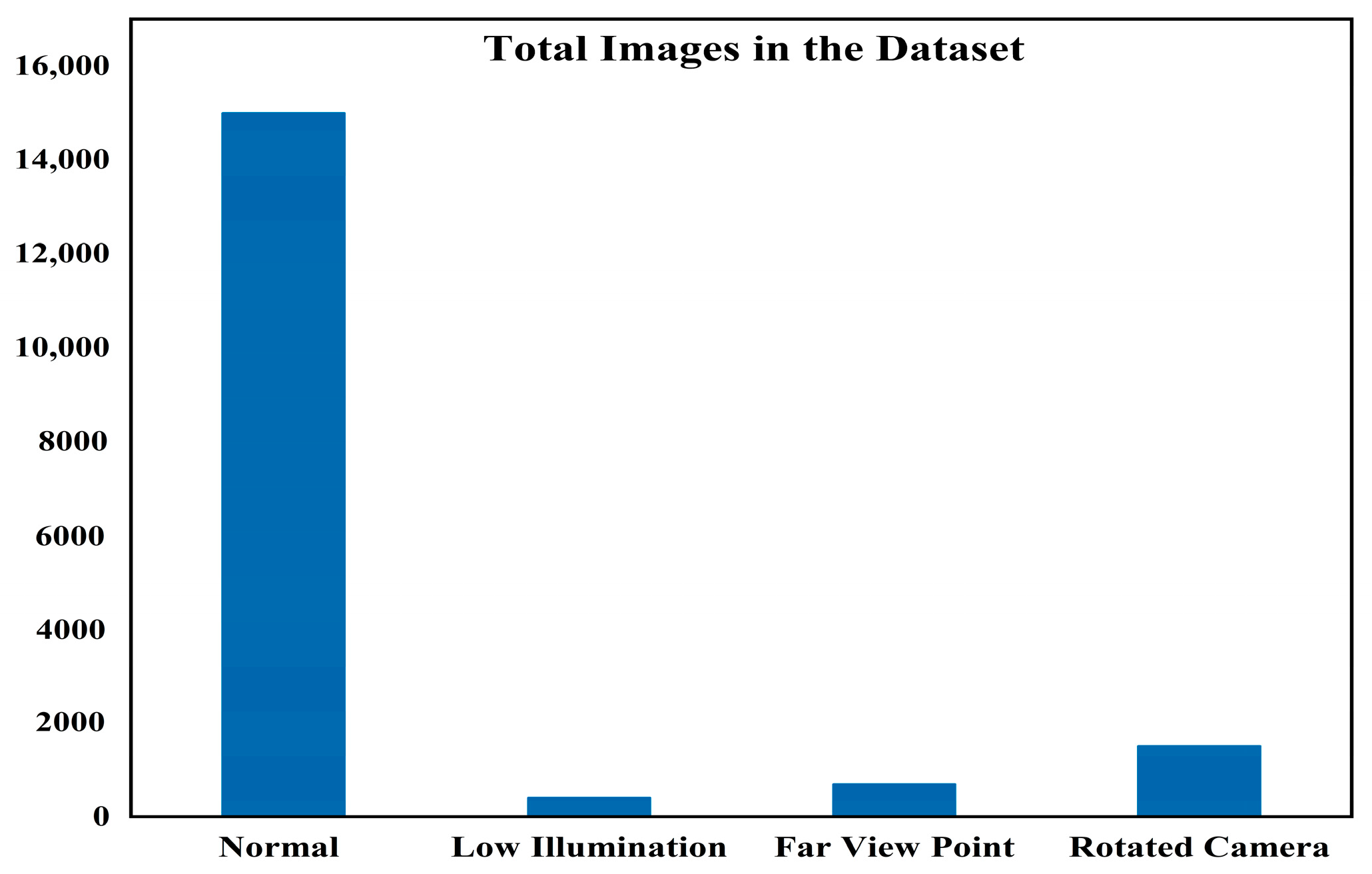

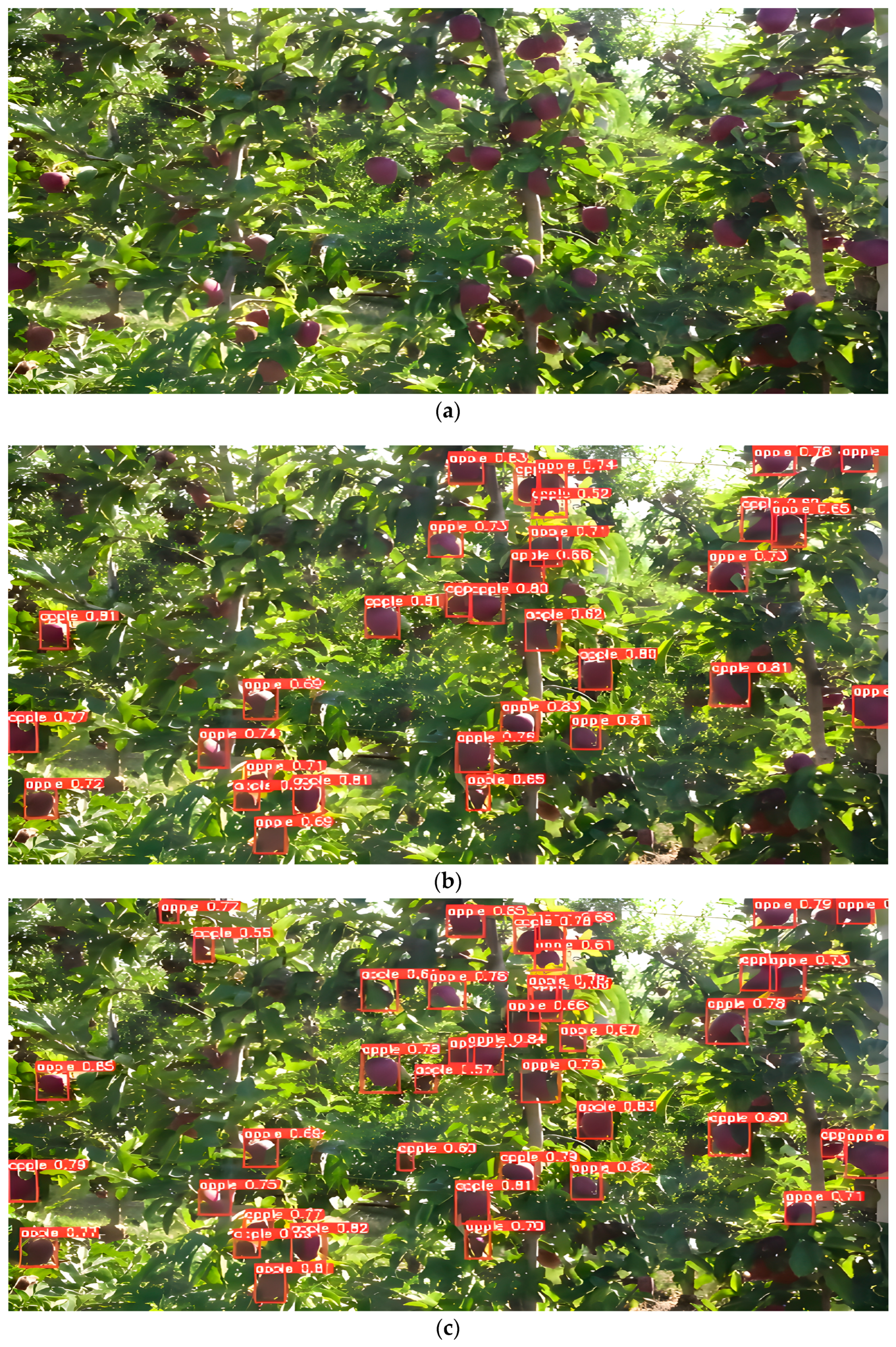

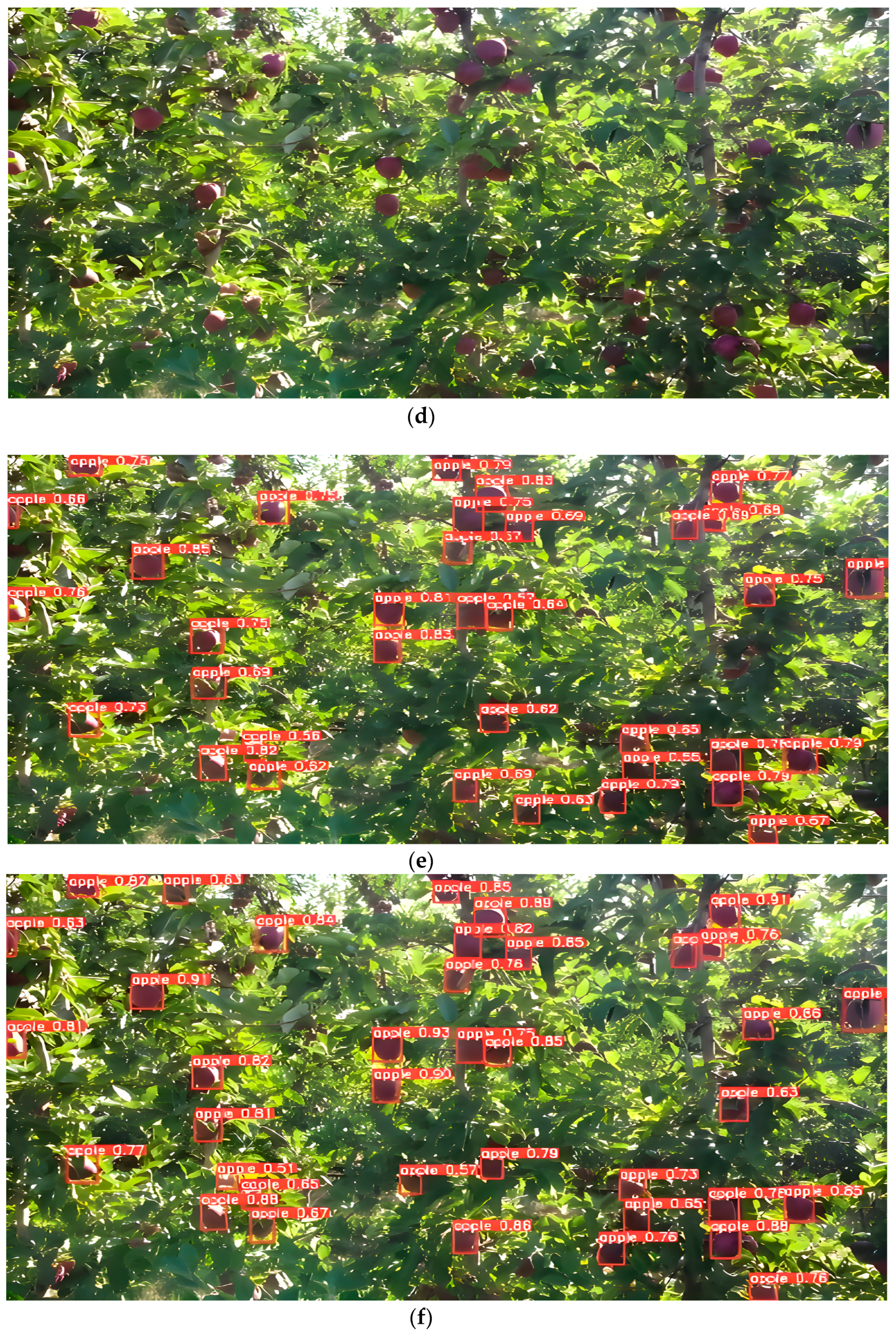

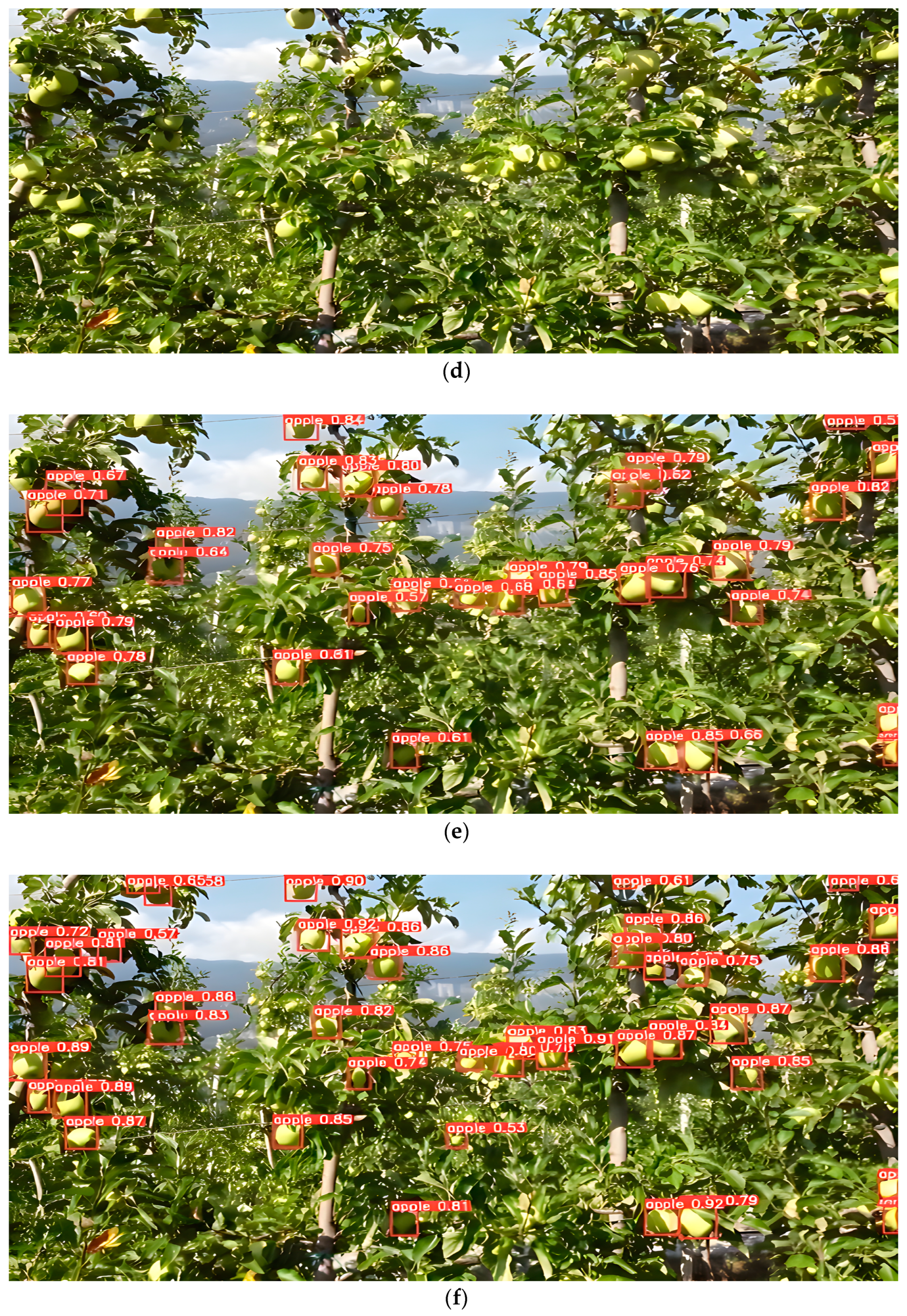

2.1. Data Acquisition

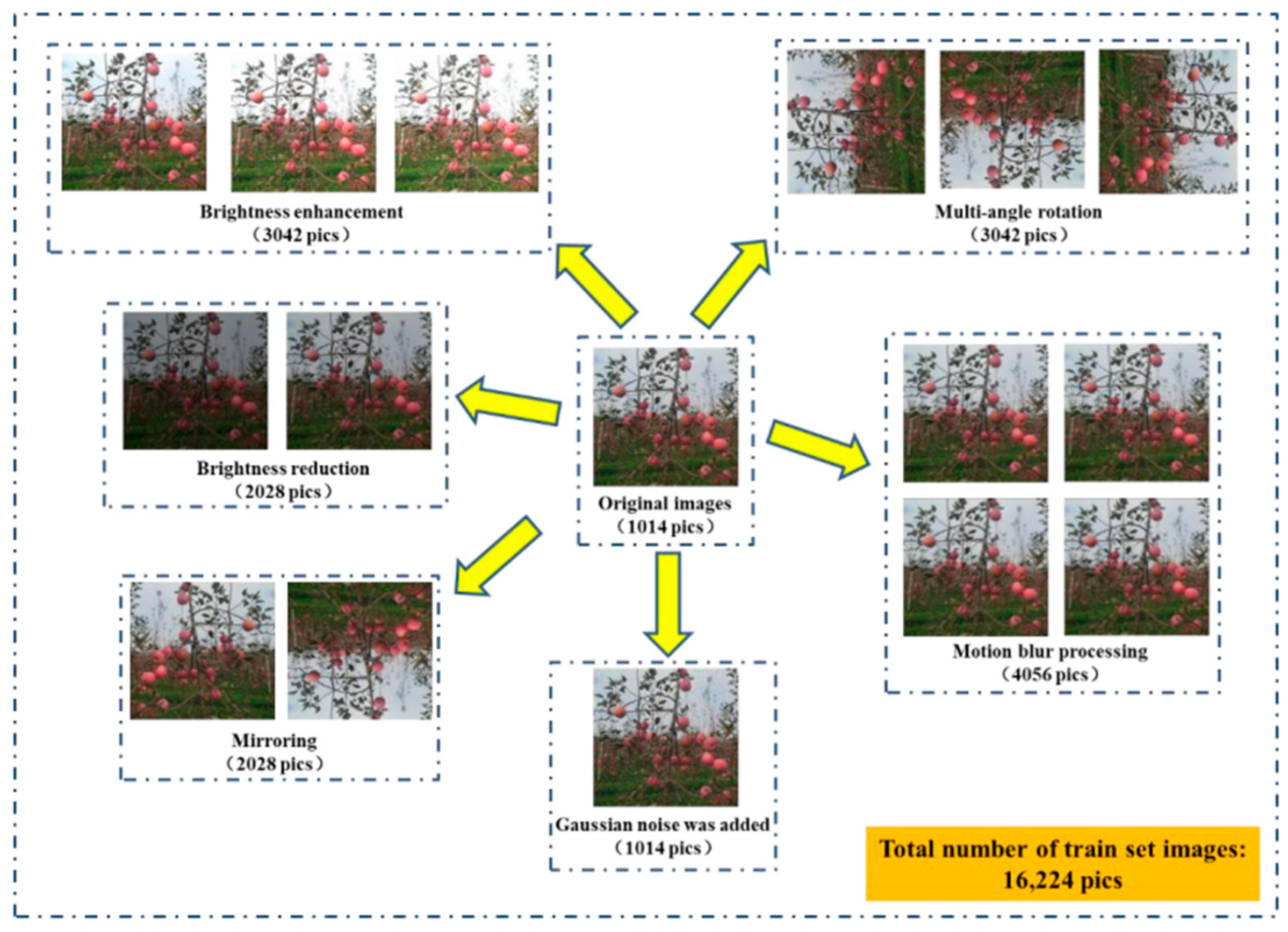

2.2. Data Augmentation

- Reducing and increasing the brightness of the image: first, the image is converted into HSV space using the ‘rgb2hsv’ function. Next, the brightness component, V, is multiplied by different coefficients, and the resulting HSV image is transformed back into RGB space by applying the ‘hsv2rgb’ function. Brightness enhancement is carried out with two coefficient values, and brightness reduction is likewise carried out with two values. Training on both brighter and darker variants helps the model learn patterns that let it detect apples under poor illumination.

- Image mirroring: images are mirrored horizontally and vertically. Horizontal mirroring swaps the left and right sides of the image about its vertical center line. Similarly, vertical mirroring swaps the upper and lower sides about the horizontal center line. Mirrored images carry the same characteristics of an apple that we want the model to learn, which is especially useful in tasks where the perspective of the image is unknown.

- Image rotation: the image is rotated by 90°, 180°, and 270°. These rotations help the model detect apples accurately irrespective of the image capture angle. If the camera position is not fixed relative to the objects, rotation is likely to be a helpful augmentation, so we include it.

- Motion blur: the speed of the capturing device affects the quality of the captured image. Thus, we include four types of motion blur for data augmentation, obtained by applying (6, 30), (6, −30), (7, 45), and (7, −45) motion filters, respectively. Each filter is given as a (length, angle) pair, where the length is the number of pixels of linear camera motion and the angle is measured in degrees counterclockwise. Blur is suspected to obscure the ability of convolutions to locate edges in the early levels of feature abstraction, which causes inaccurate feature abstraction early in a network’s training. Therefore, training the model with blurred data helps to obtain a better level of detection.

- Noisy image: we add Gaussian noise with a variance of 0.02 to obtain the augmented data. The authors of [21,22] also used Gaussian noise with a variance of 0.02 for data augmentation. Gaussian noise is well suited to these experiments because it models sensor noise caused by poor illumination, high temperature, and electronic circuit noise, all of which can occur while capturing an image. In [16], the authors suggested that Gaussian noise represents the characteristics of human motion.
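The brightness, mirroring, rotation, and noise steps listed above can be sketched with NumPy as follows. This is a minimal illustration rather than the authors' code: the function names are hypothetical, images are assumed to be float RGB arrays of shape (H, W, 3) with values in [0, 1], and the brightness coefficients (1.2, 1.4, 0.6, 0.8) are placeholders because the actual values are not given in the text. The brightness step relies on the fact that the HSV value channel is V = max(R, G, B), so scaling V while keeping hue and saturation fixed is equivalent to scaling each RGB pixel by the ratio of new to old V.

```python
import numpy as np

def adjust_brightness(img, coeff):
    """Scale the HSV value channel V = max(R, G, B) by `coeff`.

    Hue and saturation depend only on ratios between channels, so an
    rgb -> hsv -> scale V -> rgb round trip equals scaling each RGB
    pixel by new_V / V.
    """
    v = img.max(axis=-1, keepdims=True)
    new_v = np.clip(v * coeff, 0.0, 1.0)          # V cannot exceed 1
    return img * (new_v / np.maximum(v, 1e-12))   # black pixels stay black

def mirror(img):
    """Return the horizontally and vertically mirrored images."""
    return np.fliplr(img), np.flipud(img)

def rotate(img):
    """Return the image rotated by 90, 180, and 270 degrees."""
    return [np.rot90(img, k) for k in (1, 2, 3)]

def add_gaussian_noise(img, variance=0.02, seed=0):
    """Add zero-mean Gaussian noise with the given variance, then clip."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, np.sqrt(variance), size=img.shape)
    return np.clip(img + noise, 0.0, 1.0)

def augment(img, bright_coeffs=(1.2, 1.4), dark_coeffs=(0.6, 0.8)):
    """Collect all variants; the coefficient defaults are assumed values."""
    out = [adjust_brightness(img, c) for c in bright_coeffs + dark_coeffs]
    out.extend(mirror(img))
    out.extend(rotate(img))
    out.append(add_gaussian_noise(img))
    return out
```

With these defaults, `augment` returns ten variants per input image. For detection training, the bounding-box annotations would need the same geometric transforms applied to them; that bookkeeping is omitted here.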

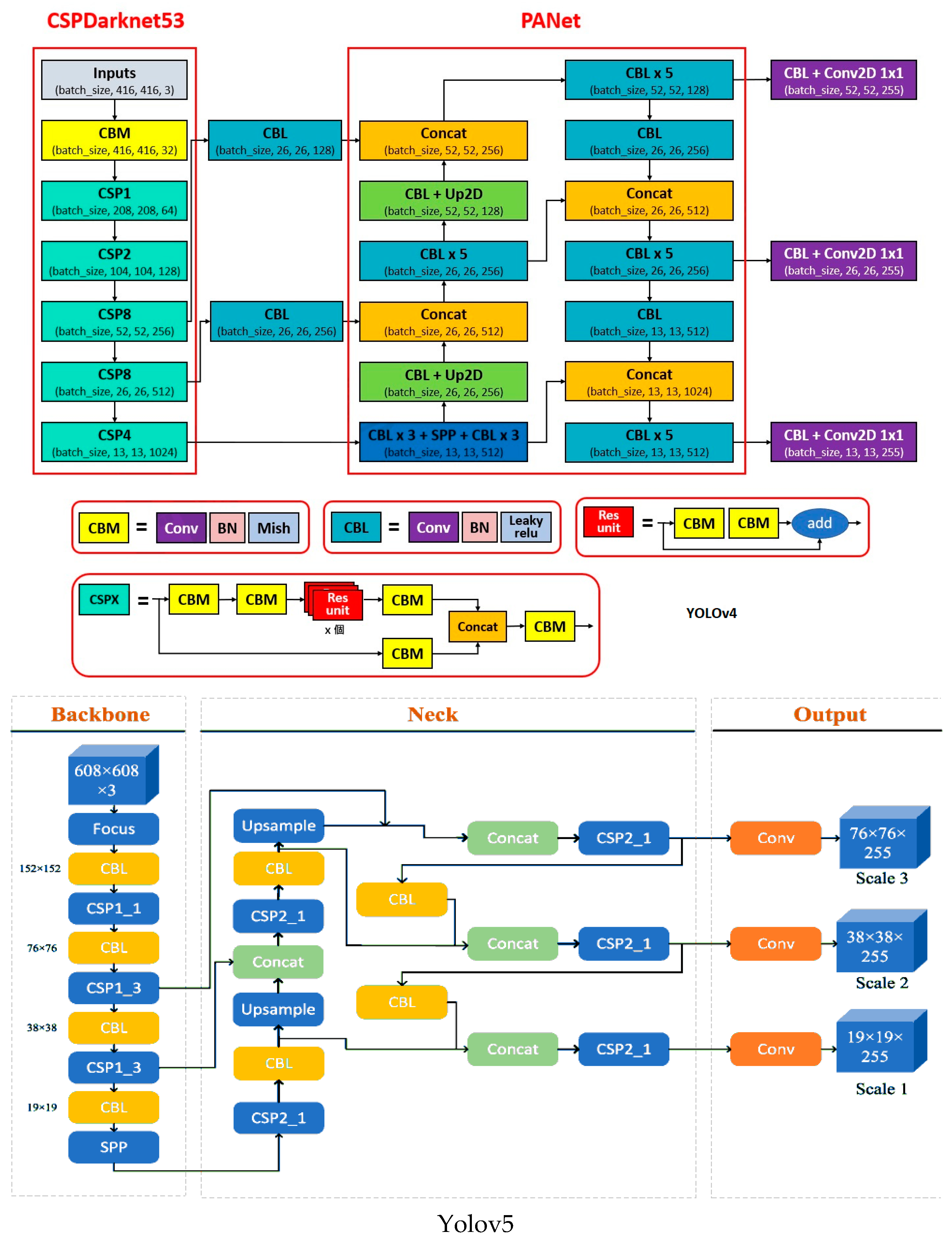
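The four motion-blur settings from the augmentation list, (6, 30), (6, −30), (7, 45), and (7, −45), can be illustrated with a small NumPy sketch. The kernel construction below is an assumed approximation of a linear motion filter (a normalized line segment of the given length, rotated counterclockwise by the given angle); it is not necessarily identical to the filter the authors used, and the function names are hypothetical.

```python
import numpy as np

# (length in pixels, angle in degrees counterclockwise), as listed in the text
BLUR_SETTINGS = [(6, 30), (6, -30), (7, 45), (7, -45)]

def motion_kernel(length, angle_deg):
    """Approximate a linear motion-blur kernel, normalized to sum to 1."""
    size = int(np.ceil(length)) | 1            # force an odd side length
    kernel = np.zeros((size, size))
    c = size // 2
    theta = np.deg2rad(angle_deg)
    # Sample points along a segment of the given length through the centre.
    half = (length - 1) / 2
    for t in np.linspace(-half, half, 4 * size):
        col = int(round(c + t * np.cos(theta)))
        row = int(round(c - t * np.sin(theta)))  # image rows grow downwards
        kernel[row, col] = 1.0
    return kernel / kernel.sum()

def apply_blur(img, kernel):
    """Correlate each channel of an (H, W, C) float image with `kernel`.

    Borders are handled by replicating edge pixels.
    """
    k = kernel.shape[0] // 2
    padded = np.pad(img, ((k, k), (k, k), (0, 0)), mode="edge")
    out = np.zeros_like(img, dtype=float)
    h, w = img.shape[:2]
    for i in range(kernel.shape[0]):
        for j in range(kernel.shape[1]):
            if kernel[i, j]:
                out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out

def motion_blur_variants(img):
    """Return one blurred copy of `img` per setting in BLUR_SETTINGS."""
    return [apply_blur(img, motion_kernel(l, a)) for l, a in BLUR_SETTINGS]
```

Because each kernel sums to 1, overall image brightness is preserved and only detail along the motion direction is smeared.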

2.3. Yolov5 and Proposed Yolov5 Architecture

2.4. Box Prediction and Loss Function

3. Results and Discussion

3.1. Performance Evaluation

3.2. Apple Detection Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DL | Deep Learning |
| RGB | Red, Green and Blue |
| HSV | Hue, Saturation and Value |
| RGB-D | Depth Camera |
| R-CNN | Regions with Convolutional Neural Network |
| SIFT | Scale Invariant Feature Transform |
| RPN | Region proposal networks |
| HRNet | High-Resolution Network |
| RNN | Recurrent Neural Networks |
| CNN | Convolutional Neural Network |
| RPA | Remote Piloted Aircraft |
| SVM | Support Vector Machines |
| YOLO | You Only Look Once |
| IoU | Intersection over Union |
| ROI | Region of interest |
| ATSS | Adaptive Training Sample Selection |
| CMOS | Complementary Metal Oxide Semiconductor |
| RTMP | Routing Table Maintenance Protocol |
| CSP | Cross stage partial connections |
| Mp | Megapixel |
| µm | Micrometer |
| mAP | Mean average precision |
| VIA | VGG Image Annotator |
| VGG | Visual Geometry Group |
| ResNet | Residual Network |
| FPN | Feature Pyramid Network |
| P | Precision |
| R | Recall |
| std | Standard Deviation |
| BB | Bounding Box |
| BN | Batch Normalization |
| PANet | Path Aggregation Network |
| SGD | Stochastic Gradient Descent |
| AP | Average Precision |
| TP | True Positive |
| FP | False Positive |

References

- Zou, Z.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. arXiv 2019, arXiv:1905.05055.

- Murala, S.; Vipparthi, S.K.; Akhtar, Z. Vision Based Computing Systems for Healthcare Applications. J. Healthc. Eng. 2019, 2019, 9581275.

- Chandra, A.L.; Desai, S.V.; Guo, W.; Balasubramanian, V.N. Computer vision with deep learning for plant phenotyping in agriculture: A survey. arXiv 2020, arXiv:2006.11391.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Kuznetsova, A.; Maleva, T.; Soloviev, V. Using YOLOv3 algorithm with pre-and post-processing for apple detection in fruit-harvesting robot. Agronomy 2020, 10, 1016.

- Jia, W.; Zhang, Y.; Lian, J.; Zheng, Y.; Zhao, D.; Li, C. Apple harvesting robot under information technology: A review. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420925310.

- Jiao, Y.; Luo, R.; Li, Q.; Deng, X.; Yin, X.; Ruan, C.; Jia, W. Detection and localization of overlapped fruits application in an apple harvesting robot. Electronics 2020, 9, 1023.

- Li, T.; Fang, W.; Zhao, G.; Gao, F.; Wu, Z.; Li, R.; Dhupia, J. An improved binocular localization method for apple based on fruit detection using deep learning. Inf. Process. Agric. 2021, in press.

- Gené-Mola, J.; Vilaplana, V.; Rosell-Polo, J.R.; Morros, J.R.; Ruiz-Hidalgo, J.; Gregorio, E. Multi-modal deep learning for Fuji apple detection using RGB-D cameras and their radiometric capabilities. Comput. Electron. Agric. 2019, 162, 689–698.

- Gené-Mola, J.; Gregorio, E.; Guevara, J.; Auat, F.; Sanz-Cortiella, R.; Escolà, A.; Rosell-Polo, J.R. Fruit detection in an apple orchard using a mobile terrestrial laser scanner. Biosyst. Eng. 2019, 187, 171–184.

- Liu, Q.; Zhao, X.; Yang, H.; Zhao, L.; Ling, W.; Ma, X.; Zhao, Y. Image segmentation of Huaniu apple based on pulse coupled neural network and watershed algorithm. In Proceedings of the International Conference on Electronic Information Engineering and Computer Communication (EIECC 2021), Nanchang, China, 17–19 December 2021; Volume 12172, pp. 448–455.

- Zhang, C.; Zou, K.; Pan, Y. A method of apple image segmentation based on color-texture fusion feature and machine learning. Agronomy 2020, 10, 972.

- Yang, M.; Kumar, P.; Bhola, J.; Shabaz, M. Development of image recognition software based on artificial intelligence algorithm for the efficient sorting of apple fruit. Int. J. Syst. Assur. Eng. Manag. 2022, 13, 322–330.

- Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Kumar, V. Counting apples and oranges with deep learning: A data-driven approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788.

- Dias, P.A.; Tabb, A.; Medeiros, H. Apple flower detection using deep convolutional networks. Comput. Ind. 2018, 99, 17–28.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Farhadi, A.; Redmon, J. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.0276.

- Biffi, L.J.; Mitishita, E.; Liesenberg, V.; Santos AA, D.; Gonçalves, D.N.; Estrabis, N.V.; Gonçalves, W.N. ATSS deep learning-based approach to detect apple fruits. Remote Sens. 2020, 13, 54.

- www.personaldrones.it. Available online: https://www.personaldrones.it/341-mavic-3 (accessed on 1 September 2021).

- www.dji.com. Available online: https://www.dji.com/it/mavic-3 (accessed on 1 September 2021).

- Wang, J.L.; Li, A.Y.; Huang, M.; Ibrahim, A.K.; Zhuang, H.; Ali, A.M. Classification of white blood cells with pattern net-fused ensemble of convolutional neural networks (pecnn). In Proceedings of the 2018 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Louisville, KY, USA, 6–8 December 2018; pp. 325–330.

- Brock, H.; Rengot, J.; Nakadai, K. Augmenting sparse corpora for enhanced sign language recognition and generation. In Proceedings of the 11th International Conference on Language Resources and Evaluation (LREC 2018) and the 8th Workshop on the Representation and Processing of Sign Languages: Involving the Language Community, Miyazaki, Japan, 7–12 May 2018.

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for Autonomous Landing Spot Detection in Faulty UAVs. Sensors 2022, 22, 464.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Ghaffarian, S.; Valente, J.; Van Der Voort, M.; Tekinerdogan, B. Effect of attention mechanism in deep learning-based remote sensing image processing: A systematic literature review. Remote Sens. 2022, 13, 2965.

- Hu, J.; Zheng, Y.; Lam, K.M.; Lou, P. DWANet: Focus on Foreground Features for More Accurate Location. IEEE Access 2022, 10, 30716–30729.

- Wang, S. Research Towards Yolo-Series Algorithms: Comparison and Analysis of Object Detection Models for Real-Time UAV Applications. J. Phys. Conf. Ser. 2021, 1948, 012021.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Wolter, M.; Garcke, J. Adaptive wavelet pooling for convolutional neural networks. In Proceedings of the International Conference on Artificial Intelligence and Statistics, San Diego, CA, USA, 13–15 April 2021; pp. 1936–1944.

- Tsai, Y.H.; Hamsici, O.C.; Yang, M.H. Adaptive region pooling for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015, Boston, MA, USA, 7–12 June 2015; pp. 731–739.

- Yang, X.; Liu, Q. Scale-sensitive feature reassembly network for pedestrian detection. Sensors 2021, 21, 4189.

- Zhu, X.; Liang, B.; Fu, D.; Huang, G.; Yang, F.; Li, W. Airport small object detection based on feature enhancement. IET Image Process. 2021, 16, 2863–2874.

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. Foveabox: Beyound anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

| Detection Method | Original Images: Precision | Original Images: Recall | Original Images: F1-Score | Illumination Variations: Precision | Illumination Variations: Recall | Illumination Variations: F1-Score |
|---|---|---|---|---|---|---|
| AlexNet | 0.66 | 0.72 | 0.69 | 0.61 | 0.65 | 0.63 |
| ResNet | 0.72 | 0.76 | 0.74 | 0.72 | 0.69 | 0.7 |
| Faster RCNN | 0.83 | 0.79 | 0.81 | 0.68 | 0.71 | 0.7 |
| AlexNet + Faster RCNN | 0.88 | 0.84 | 0.86 | 0.68 | 0.75 | 0.71 |
| ResNet + Faster RCNN | 0.87 | 0.64 | 0.74 | 0.72 | 0.75 | 0.73 |
| YOLOv3 | 0.82 | 0.86 | 0.84 | 0.7 | 0.78 | 0.7378 |
| Improved Yolov3 | 0.83 | 0.9 | 0.86 | 0.81 | 0.86 | 0.83425 |
| YOLOv5 | 0.89 | 0.97 | 0.93 | 0.85 | 0.91 | 0.87897 |
| Improved Yolov5 | 0.97 | 0.99 | 0.98 | 0.86 | 0.93 | 0.89363 |
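The F1-scores in this table are consistent with the standard harmonic-mean definition, F1 = 2PR / (P + R), with precision P = TP / (TP + FP) and recall R = TP / (TP + FN). The short sketch below makes that relationship concrete; the function names are hypothetical, and FN (false negative) is the standard term even though it does not appear in the abbreviation list.

```python
def precision(tp, fp):
    """Fraction of predicted apples that are real apples."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Fraction of real apples that were detected."""
    return tp / (tp + fn)

def f1_score(p, r):
    """Harmonic mean of precision and recall (0 if both are 0)."""
    return 2 * p * r / (p + r) if (p + r) > 0 else 0.0

# Improved YOLOv5 row, illumination variations: P = 0.86, R = 0.93
print(round(f1_score(0.86, 0.93), 4))  # 0.8936
```

The printed value agrees with the 0.89363 reported for the improved YOLOv5 model under illumination variations.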

| Detection Method | Blurred Images: Precision | Blurred Images: Recall | Blurred Images: F1-Score | Noisy Images: Precision | Noisy Images: Recall | Noisy Images: F1-Score |
|---|---|---|---|---|---|---|
| AlexNet | 0.69 | 0.87 | 0.77 | 0.65 | 0.71 | 0.75 |
| ResNet | 0.72 | 0.85 | 0.77 | 0.76 | 0.73 | 0.75 |
| Faster RCNN | 0.76 | 0.86 | 0.8 | 0.79 | 0.72 | 0.76 |
| AlexNet + Faster RCNN | 0.8 | 0.86 | 0.83 | 0.78 | 0.76 | 0.77 |
| ResNet + Faster RCNN | 0.8 | 0.88 | 0.84 | 0.78 | 0.83 | 0.8 |
| YOLOv3 | 0.84 | 0.86 | 0.85 | 0.8 | 0.87 | 0.83 |
| Improved Yolov3 | 0.88 | 0.9 | 0.89 | 0.82 | 0.9 | 0.86 |
| YOLOv5 | 0.94 | 0.83 | 0.88 | 0.87 | 0.97 | 0.92 |
| Improved Yolov5 | 0.96 | 0.99 | 0.99 | 0.92 | 0.97 | 0.94 |

| YOLOv5 Models | Parameters (Million) | Accuracy (mAP 0.5) | CPU Time (ms) | GPU Time (ms) |
|---|---|---|---|---|
| YOLOv5x | 42.1 | 69.72 | 710 | 15.6 |
| YOLOv5l | 31.5 | 76.30 | 330 | 13.3 |
| YOLOv5m | 26.2 | 81.20 | 240 | 9.2 |
| YOLOv5s | 18.3 | 86.51 | 160 | 8.1 |
| Proposed YOLO Model | 11.1 | 91.25 | 72 | 6.1 |

| Folds | Original Images: Precision | Original Images: Recall | Original Images: F1-Score | Noisy Images: Precision | Noisy Images: Recall | Noisy Images: F1-Score |
|---|---|---|---|---|---|---|
| kFold 1 | 0.96 | 0.97 | 0.99 | 0.95 | 0.97 | 0.94 |
| kFold 2 | 0.95 | 0.98 | 0.97 | 0.96 | 0.96 | 0.95 |
| kFold 3 | 0.96 | 0.98 | 0.96 | 0.95 | 0.96 | 0.96 |
| kFold 4 | 0.96 | 0.97 | 0.98 | 0.95 | 0.98 | 0.96 |
| kFold 5 | 0.98 | 0.96 | 0.97 | 0.97 | 0.97 | 0.98 |

| Folds | Blurred Images: Precision | Blurred Images: Recall | Blurred Images: F1-Score | Illumination Variations: Precision | Illumination Variations: Recall | Illumination Variations: F1-Score |
|---|---|---|---|---|---|---|
| kFold 1 | 0.95 | 0.96 | 0.98 | 0.98 | 0.96 | 0.97 |
| kFold 2 | 0.96 | 0.96 | 0.95 | 0.96 | 0.98 | 0.96 |
| kFold 3 | 0.97 | 0.99 | 0.97 | 0.98 | 0.97 | 0.94 |
| kFold 4 | 0.96 | 0.98 | 0.97 | 0.95 | 0.98 | 0.96 |
| kFold 5 | 0.95 | 0.96 | 0.94 | 0.96 | 0.97 | 0.93 |
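Five-fold results such as these are often condensed into a mean and a standard deviation per metric. The sketch below does this for the blurred-image columns of the table above, using only the Python standard library. It is an illustrative summary, not a computation reported by the authors; the variable and function names are hypothetical, and the sample (n − 1) standard deviation is an assumed choice.

```python
from statistics import mean, stdev

# Blurred-image columns copied from the five-fold table above.
blurred_folds = {
    "precision": [0.95, 0.96, 0.97, 0.96, 0.95],
    "recall": [0.96, 0.96, 0.99, 0.98, 0.96],
    "f1": [0.98, 0.95, 0.97, 0.97, 0.94],
}

def summarize(values):
    """Return (mean, sample standard deviation) across folds."""
    return mean(values), stdev(values)

summary = {name: summarize(vals) for name, vals in blurred_folds.items()}

for name, (m, s) in summary.items():
    print(f"{name}: {m:.3f} +/- {s:.3f}")
```

A small standard deviation across folds indicates that the reported scores do not depend strongly on which images fall in the held-out fold.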

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sekharamantry, P.K.; Melgani, F.; Malacarne, J. Deep Learning-Based Apple Detection with Attention Module and Improved Loss Function in YOLO. Remote Sens. 2023, 15, 1516. https://doi.org/10.3390/rs15061516
