1. Introduction

Low-frequency (LF) information is vital to full-waveform inversion (FWI) [1] for retrieving reliable underground parameters from seismic data [2]. The lack of usable LF information in acquired seismic field data commonly leads to the inaccurate retrieval of long-scale features when using FWI [3] because of intrinsic cycle-skipping problems. FWI needs low frequencies to converge to a global optimal model if the starting model is insufficiently accurate [1]. Note that this does not mean that FWI started from an inaccurate initial model is guaranteed to converge to a global optimum whenever the data contain low-frequency content. If the starting model is not sufficiently accurate, low-frequency content is only a necessary condition rather than a sufficient condition because of the ill-posedness of FWI. To some extent, pursuing the global optimum is utopian. In practice, what is derived is a subsurface model that describes the seismic data well, i.e., an informative (local) optimum. Although seismic acquisition technologies have been remarkably enhanced over the past decades, it is still challenging to acquire low frequencies with an adequate signal-to-noise ratio (S/N), because seismic acquisition devices are generally incapable of delivering adequate LF energy. Because of this limitation, the input dataset of FWI is generally restricted to a frequency band ≥3 Hz [4]. Conventional approaches have been used to retrieve LF signals, e.g., [5,6]. Chiu et al. [5] evaluated the feasibility and value of LF data collected using colocated 2-Hz and 10-Hz geophones. They investigated the possibility of using the colocated datasets to enhance the LF signal of 10-Hz geophone data that include both experimental and production data. Adamczyk et al. [6] showed a case study of FWI applied to conventional Vibroseis data recorded along a regional seismic profile located in southeast Poland. The acquisition parameters, i.e., the use of 10-Hz geophones and Vibroseis sweeps starting at 6 Hz, led Adamczyk et al. [6] to design a non-standard data preconditioning workflow for enhancing low frequencies, including match filtering and curvelet denoising.

In recent years, low-frequency reconstruction (LFR) prior to FWI has attracted many researchers’ attention. Hu [7] proposed an FWI method coined beat-tone inversion for reliable velocity model building without LF data, which was inspired by the interference beat tone (a phenomenon commonly used by musicians to check tuning). Hu [7] extracted low-wavenumber components from high-frequency (HF) data by using two recorded seismic waves with slightly different frequencies propagating through the subsurface. Two algorithms were designed for this method: the amplitude-frequency differentiation beat inversion and the phase-frequency differentiation beat inversion. Li and Demanet [2] explored the feasibility of synthesizing low frequencies from high frequencies based on a phase-tracking method. They reconstructed low frequencies by decomposing the chosen seismograms into elementary events through phase tracking of each isolated arrival. Nevertheless, it is challenging to develop a method that can fully exploit the intrinsic relations between low and high frequencies and between neighboring traces and shots [8].
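The beat-tone phenomenon mentioned above can be illustrated with the standard sum-to-product identity for two equal-amplitude monochromatic signals. This is only the textbook interference relation, not the inversion algorithm of Hu [7]; the symbols $f_1$, $f_2$, and $t$ are introduced here for illustration.

$$
\cos(2\pi f_1 t) + \cos(2\pi f_2 t) = 2\cos\big(\pi (f_1 - f_2)\, t\big)\,\cos\big(\pi (f_1 + f_2)\, t\big)
$$

The slowly varying factor $2\cos\big(\pi (f_1 - f_2)\, t\big)$ acts as an envelope whose magnitude repeats at the beat frequency $|f_1 - f_2|$. For example, tones at 10 Hz and 11 Hz produce an envelope that beats at 1 Hz, which is the sense in which two slightly different high frequencies carry low-frequency information.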

In the new age of artificial intelligence (AI), many fundamental contributions have been made in all areas of science and technology by exploiting progress in deep learning (DL) [9,10]. DL is a powerful method for mining complex connections from data by utilizing artificial neural networks (ANNs). DL has also been introduced in numerous successful applications in exploration seismology, e.g., seismic fault segmentation [11], data reconstruction [12,13,14], velocity model building [15], FWI [16], microseismic monitoring [17], multitrace deconvolution [18], geophysics-steered self-supervised learning for deconvolution [19], seismic impedance inversion [20], polarity determination for surface microseismic data [21], inversion for shear-tensile focal mechanisms [22], array-based seismic phase picking on 3D microseismic data with DL [23], and seismic facies classification [24]. Please refer to Yu and Ma [10] for an overview of DL for geophysics and to Mousavi and Beroza [25] for a review of DL for seismology.

Studies on DL-based LFR have also been reported. Ovcharenko et al. [8] developed a method for extrapolating low frequencies from available high frequencies utilizing DL. A 2D convolutional neural network (CNN) was designed for LF extrapolation in the frequency domain. Through wavenumber analysis, they reported that per-shot reconstruction possesses wider applicability than per-trace reconstruction. Their method was tested on synthetic data only. By considering LFR as a regression problem, Sun and Demanet [4] adopted a 1D CNN to automatically reconstruct missing low frequencies in the time domain. Numerical examples demonstrated that a 1D CNN trained on the Marmousi model can be applied to LFR on the BP 2004 benchmark model. However, this method was also tested using synthetic data only. Fang et al. [26] presented a DL-based LFR approach in the time domain. Different from the approach of Sun and Demanet [4], Fang et al. [26] based their work on a 2D convolutional autoencoder. They used energy balancing and data patches to generate high- and low-frequency pairs to train a 2D CNN model, which was trained on the Marmousi dataset and tested on an overthrust model and a field dataset. Lin et al. [27] presented a DL-based LF data prediction scheme to solve the highly nonlinear inverse scattering problem with strong scatterers. In their scheme, a 2D deep neural network (NN) was trained to predict the missing LF scattered field data from the measured HF dataset. Ovcharenko et al. [28] proposed to jointly reconstruct LF data and a smooth background subsurface model within a multitask DL framework instead of aiming to reach superior accuracy in LFR. They automatically balanced the data, model, and trace-wise correlation loss terms in the loss function, discussed the splitting of data into high and low frequencies, and demonstrated that their approach improved the extrapolation capability of the network.

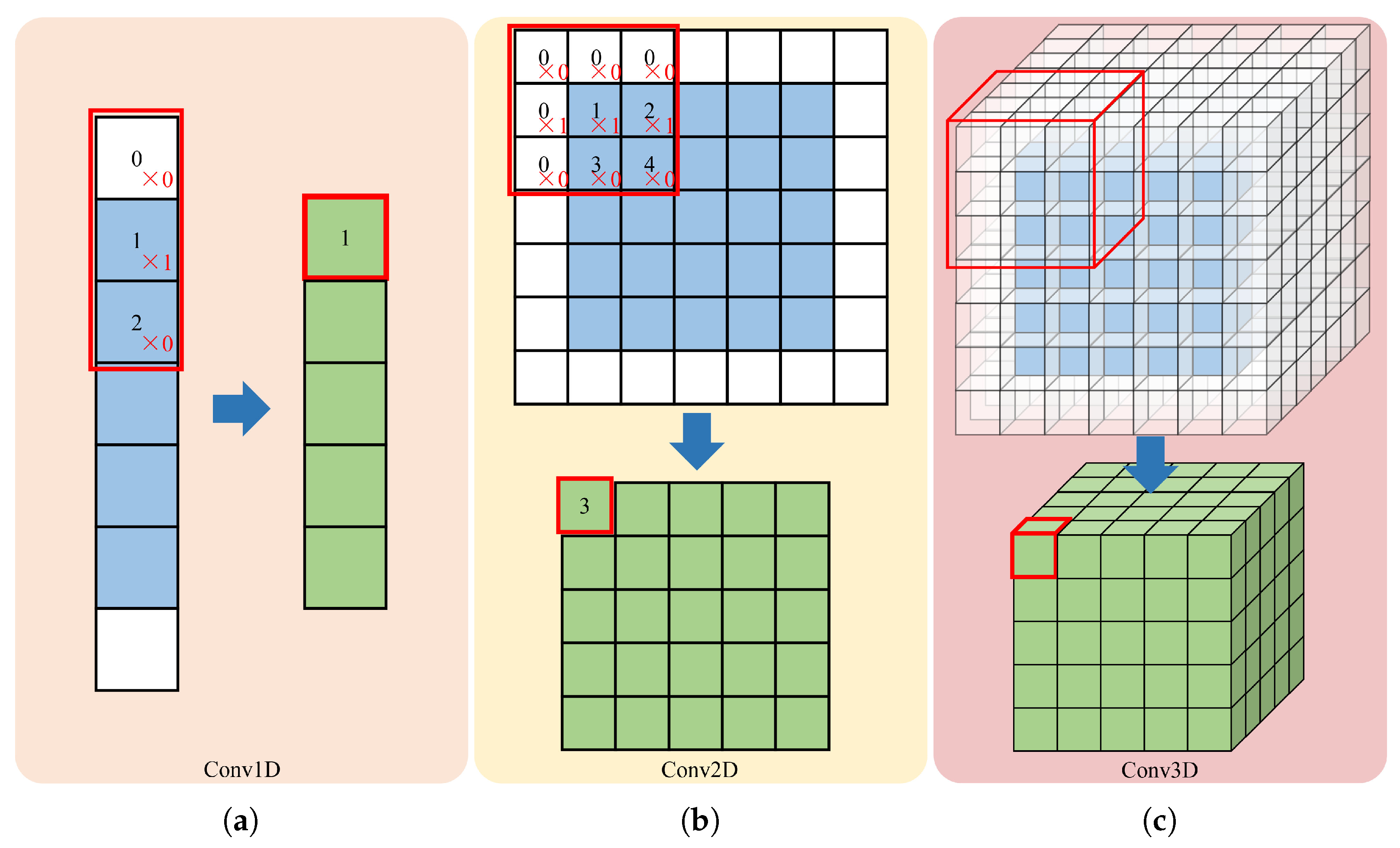

By formulating LFR as a DL-based regression problem, we evaluate a 3D CNN-based approach for LFR with the following motivations. Previous works are based on either 1D or 2D CNNs. Consequently, seismic data are processed slice-by-slice [8], trace-by-trace [4], or shot-by-shot [26]. However, the shot gathers of a prestack line form a 3D field seismic dataset. In this work, 3D seismic data refers to prestack line data (2D) with the source as the third axis, i.e., a volume whose three axes are the time sampling points, the receivers, and the sources, instead of conventional 3D seismic data, whose three axes are the time sampling points and the receivers along the x- and y-directions. One drawback of previous DL-based 1D/2D reconstruction methods is that the stacked prediction errors diminish the correlation of events among slices/traces/shots. Previous 1D/2D reconstruction methods cannot fully exploit the information that is available in 3D seismic data (see Figure 1). Three-dimensional reconstruction based on a 3D CNN can overcome this problem by utilizing the spatial information naturally available in 3D seismic data. A 3D CNN can inherently use this additional information to produce better results with superior spatial consistency and accuracy. This work is thus closely related to the work of Ovcharenko et al. [8], Sun and Demanet [4], and Fang et al. [26]. In addition, suppressing the Gibbs effect associated with the hard splitting of the low- and high-frequency data has been only lightly discussed in previous work. This work is essentially based on the works of Chai et al. [14], Chai et al. [18], and Chai et al. [19], where a 3D CNN was applied to 3D data reconstruction, multitrace deconvolution, and geophysics-steered self-supervised learning for deconvolution, respectively. This work combines and extends those earlier ideas. Open-source work in the LFR area is scarce. For reproducibility, we have made all materials related to this work open-source.

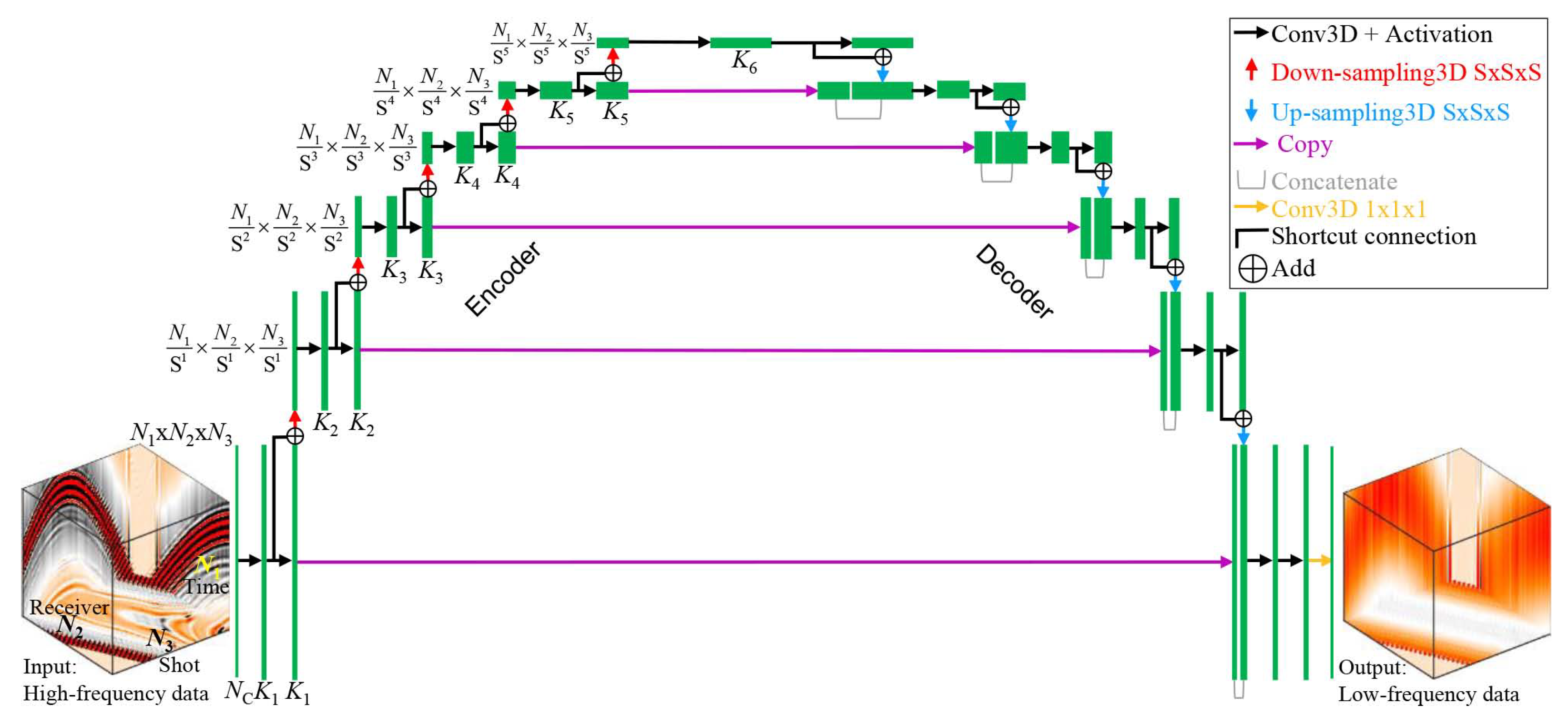

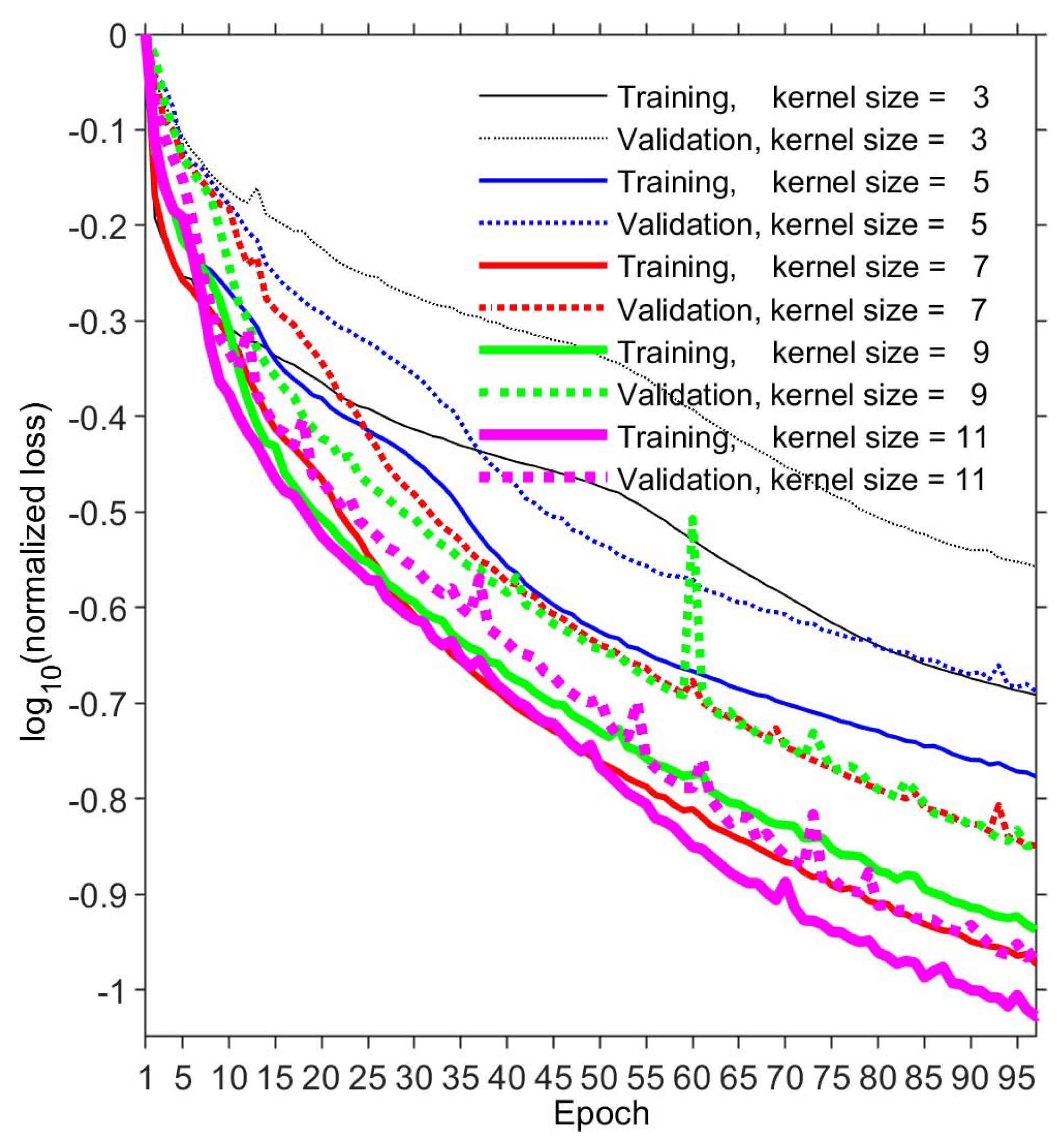

We organize the rest of the paper as follows. First, we describe how we split the low- and high-frequency data while accounting for the Gibbs effect. Then, we briefly describe the underlying DL methodology. Next, we detail the 3D CNN architecture used for LFR in this research, which is based on U-Net [29] and Bridge-Net [19,24]. Then, we provide the CNN training setup in detail. A 3D CNN is trained to reconstruct missing low frequencies from raw high frequencies in the time domain. Then, we report the convergence of the CNN training stage and the impact of different convolutional kernel sizes. After testing the CNN’s effectiveness on the Marmousi2 open dataset [30], we use a field dataset, which is unseen during the training stage, to check the CNN’s generalization capability. We compare the results of 2D and 3D CNNs to show the benefits of our approach. We then assess the LFR results in terms of FWI with the aid of the open-source FWI code package PySIT [31], to check whether the predicted low frequencies are accurate enough to mitigate FWI’s cycle-skipping problems and to observe the impact of low frequencies on the FWI results.

4. Low-Frequency Reconstruction for Full-Waveform Inversion

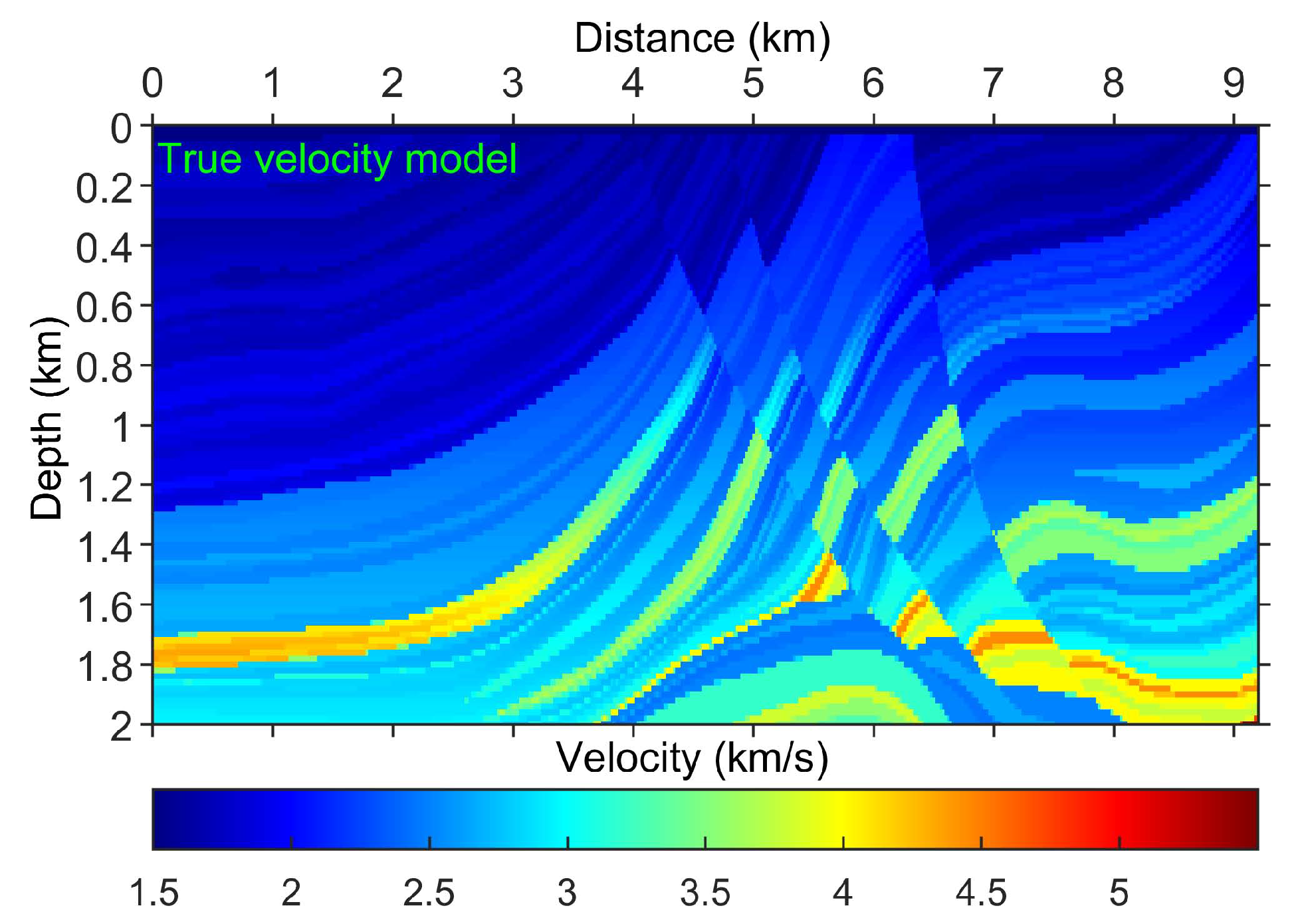

Now, we evaluate the quality of the reconstructed low frequencies in terms of FWI, which is the key motivation of LFR. We evaluate the LFR quality by running FWI on the benchmark Marmousi model [39]. The default Marmousi model is not modified. We take advantage of the open-source FWI code package PySIT [31] to accomplish this task. On the technical side, the wave equation used is the time-domain constant-density acoustic wave equation. In the forward modeling process, the spatial accuracy order is six. The code evaluates the least-squares objective function over a list of shots. Therefore, the code package performs least-squares ($\ell_2$) norm-based FWI. In this work, we used the FWI method developed by Hewett et al. [31] and did not propose a new FWI method; we mainly focus on achieving low-frequency reconstruction via deep learning. As a consequence, comparing our FWI results with traditional approaches, such as another norm-based FWI [40], is beyond the scope of this paper. We used the limited-memory BFGS (L-BFGS) method [41] for the large-scale optimization process. That is, for the adjoint-source calculations, the code computes the L-BFGS update for a set of shots.
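For orientation, a least-squares objective evaluated over a list of shots is commonly written in the generic form below. This is a sketch under assumed notation and is not taken from PySIT or from the paper: $\mathbf{m}$ denotes the model, $\mathbf{d}_s^{\mathrm{obs}}$ the recorded data for shot $s$, $F_s$ the forward modeling operator for that shot, and $N_s$ the number of shots. The factor $1/2$ is a convention, and the scaling used by a particular code may differ.

$$
J(\mathbf{m}) = \frac{1}{2} \sum_{s=1}^{N_s} \left\| \mathbf{d}_s^{\mathrm{obs}} - F_s(\mathbf{m}) \right\|_2^2
$$

Cycle skipping arises because $J$ is oscillatory in $\mathbf{m}$ when the data are band-limited to high frequencies, which is why the low-frequency content of $\mathbf{d}_s^{\mathrm{obs}}$ matters for a gradient-based optimizer such as L-BFGS.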

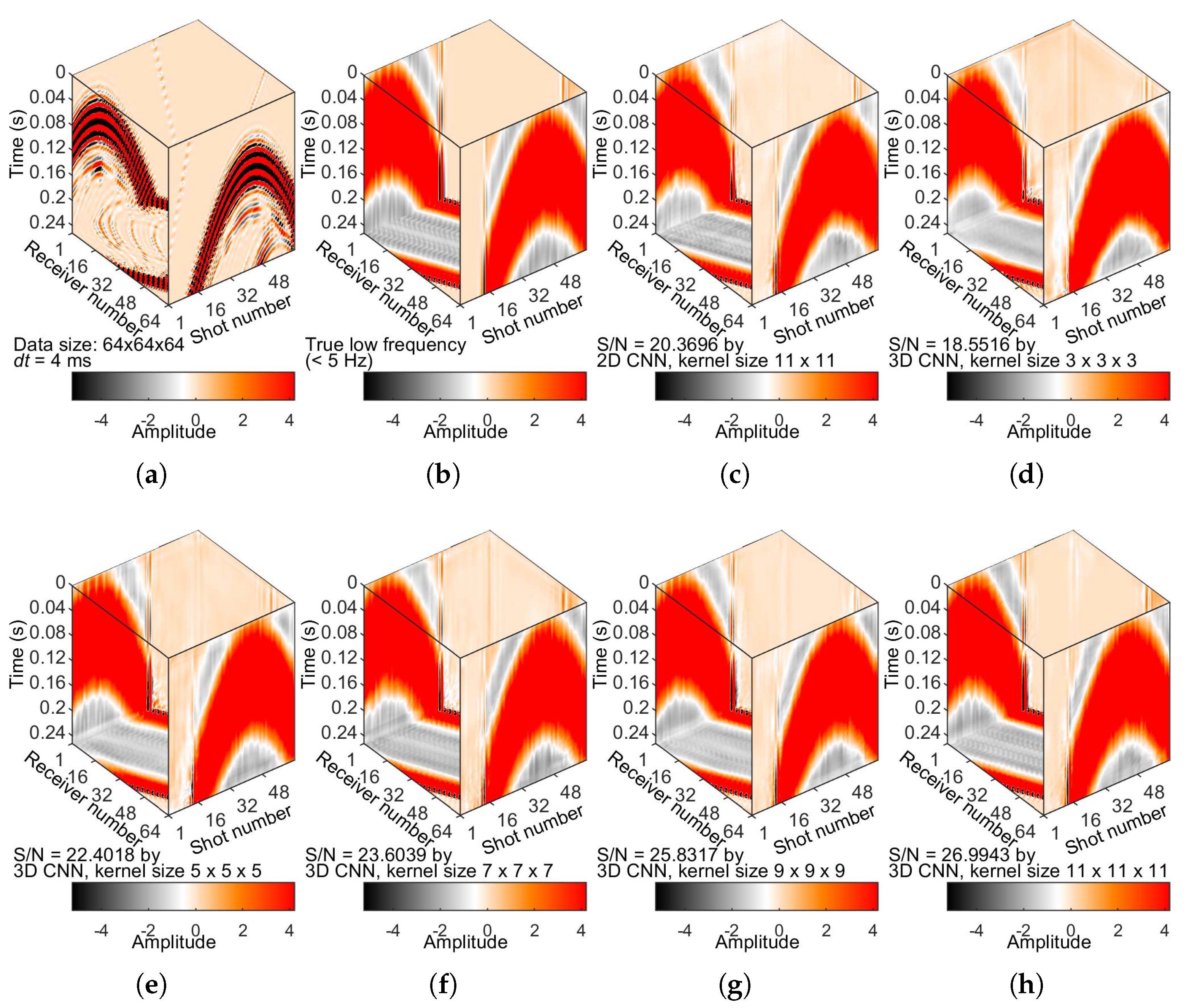

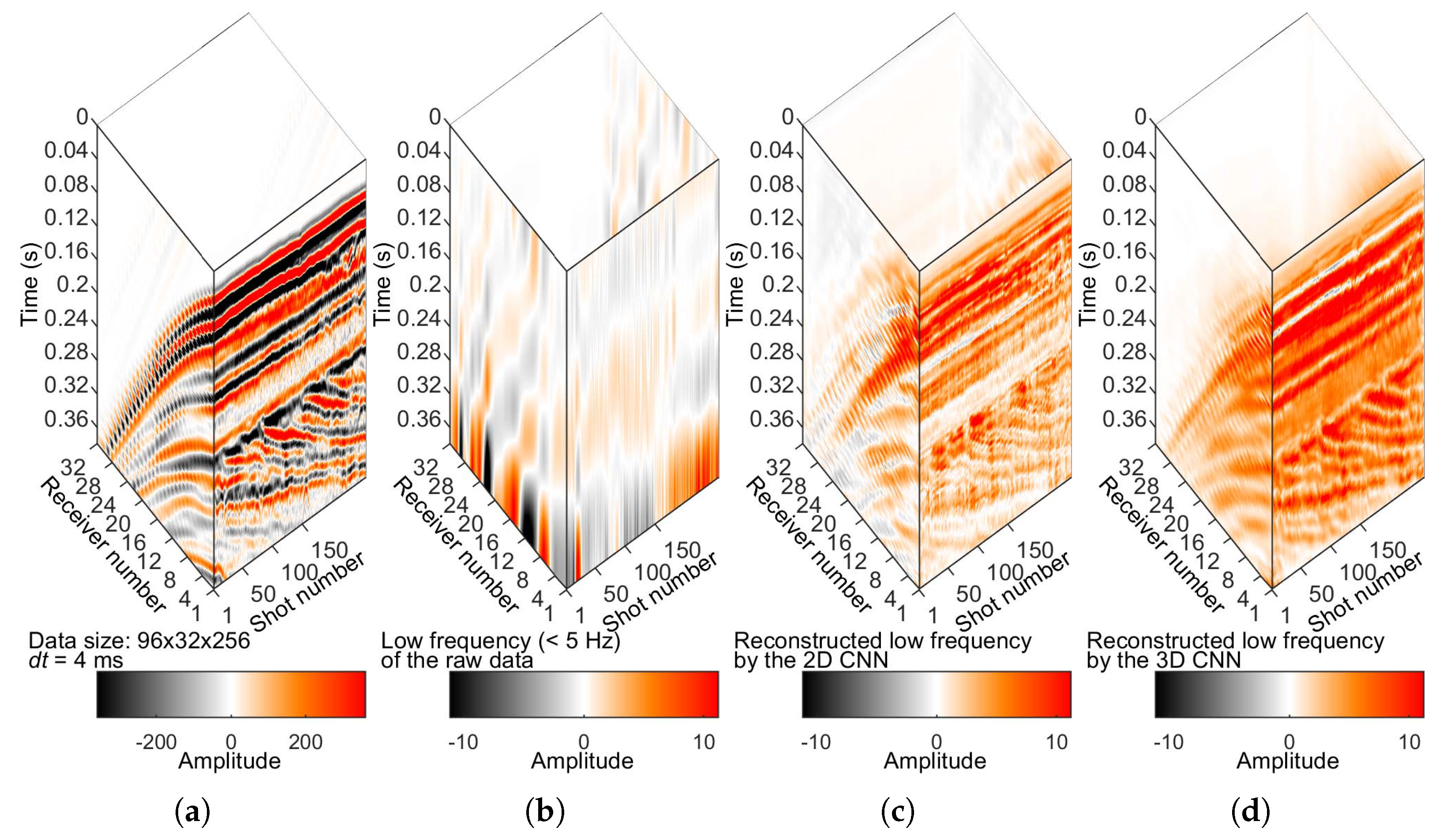

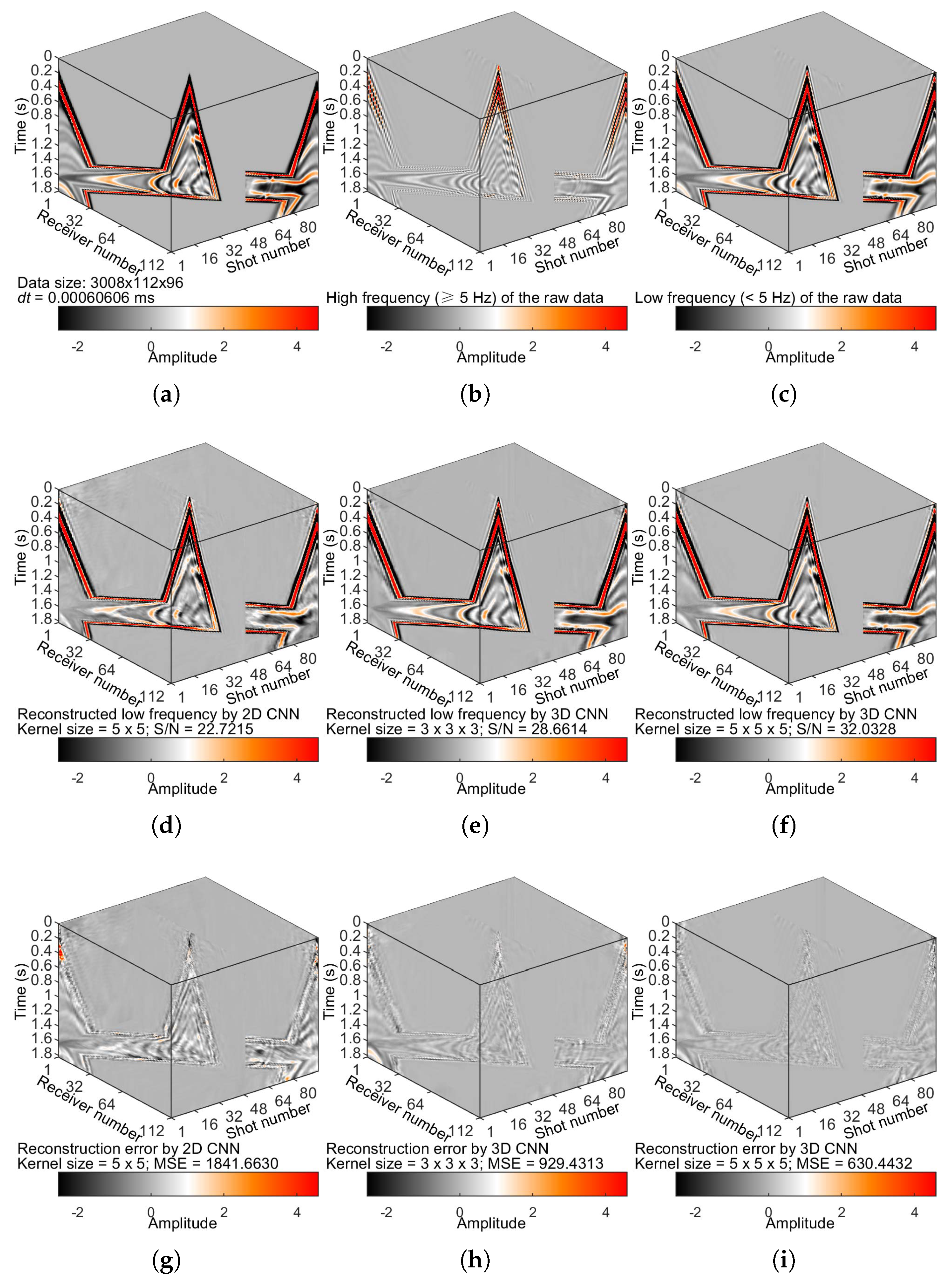

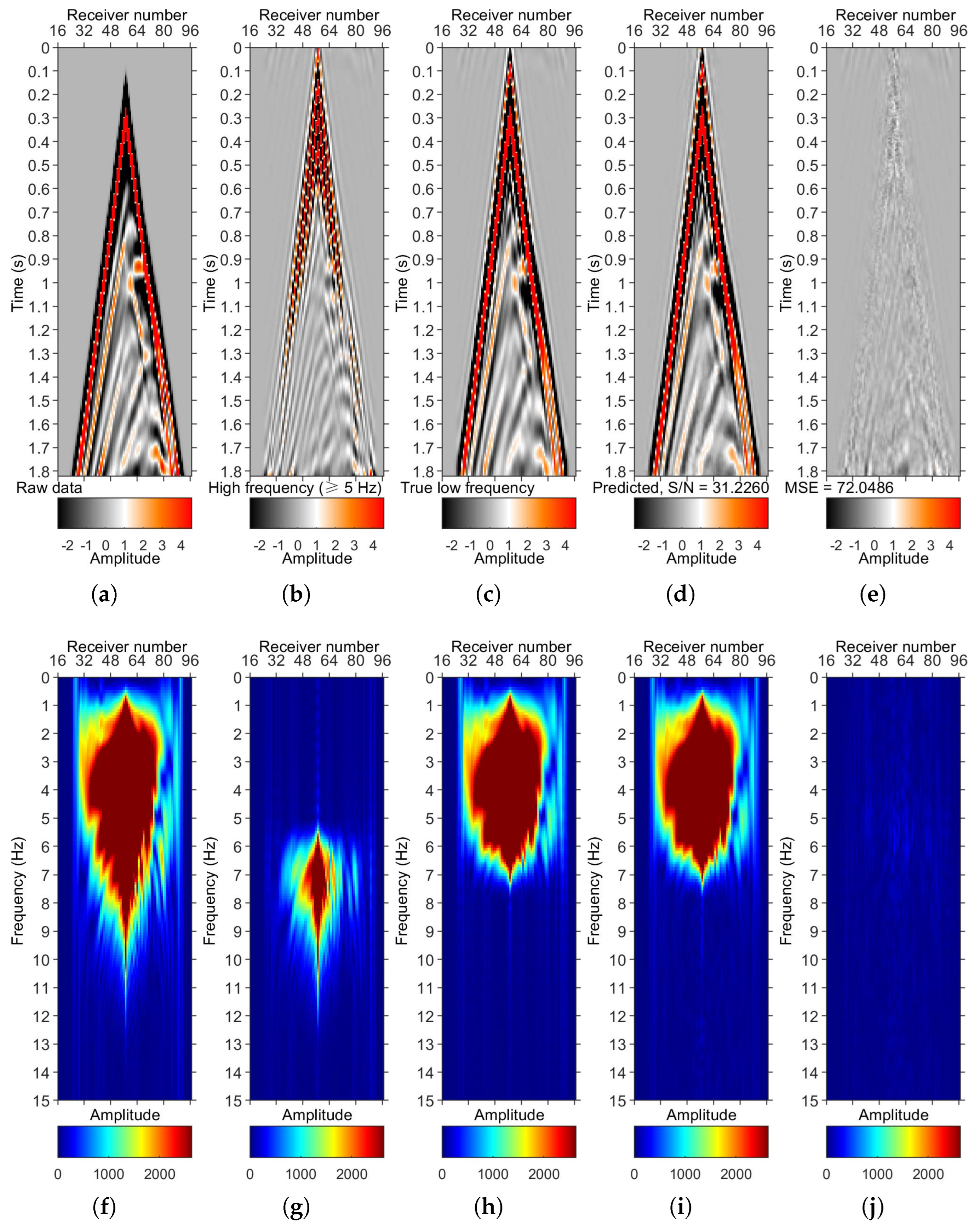

We simulate the shot gathers (Figure 11a) on the Marmousi model (Figure 12). For data modeling and FWI, we use the default settings implemented in PySIT [31]. The grid size of the velocity model is

, and the grid spacing is 20 m in both directions. We use a 10-Hz Ricker wavelet. The source depth is 15 m, and the receiver depth is 0 m. In total, we simulate 96 shots with 112 receivers per shot in an equispaced geometry. There are 3008 points per trace,

0.0006 ms. We divide the complete data volume with a size of

into smaller datasets with a size of

. There is an overlap of eight between two small samples.
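The overlapping decomposition described above can be sketched as follows. This is a minimal illustration rather than the authors' code. The helper names `patch_starts` and `patch_slices`, the patch size of 64 samples per axis, the (time, receiver, shot) axis order, and the use of the same overlap on every axis are assumptions made here; only the 3008 points per trace, 112 receivers, 96 shots, and the overlap of eight come from the text.

```python
import itertools


def patch_starts(n, patch, overlap):
    """Start indices of length-`patch` windows that cover an axis of length n."""
    if patch > n:
        raise ValueError("patch is larger than the axis")
    if not 0 <= overlap < patch:
        raise ValueError("overlap must satisfy 0 <= overlap < patch")
    stride = patch - overlap
    starts = list(range(0, n - patch + 1, stride))
    # Add a final window flush with the end of the axis so that no samples
    # are left uncovered; this last window may overlap its neighbor by more
    # than `overlap` samples.
    if starts[-1] != n - patch:
        starts.append(n - patch)
    return starts


def patch_slices(shape, patch_shape, overlap):
    """Slice triples that cut a 3D volume into overlapping patches."""
    per_axis = [patch_starts(n, p, overlap) for n, p in zip(shape, patch_shape)]
    return [
        tuple(slice(s, s + p) for s, p in zip(corner, patch_shape))
        for corner in itertools.product(*per_axis)
    ]


# Assumed axis order: (time samples, receivers, shots).
volume_shape = (3008, 112, 96)
# Hypothetical patch size, chosen only for illustration.
slices = patch_slices(volume_shape, (64, 64, 64), overlap=8)
print(len(slices), "patches; first patch:", slices[0])
```

Each returned tuple can index a NumPy array directly, e.g. `volume[slices[0]]`. When the predicted patches are reassembled, the overlapping samples are typically averaged or blended, which is a design choice not specified in this section.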

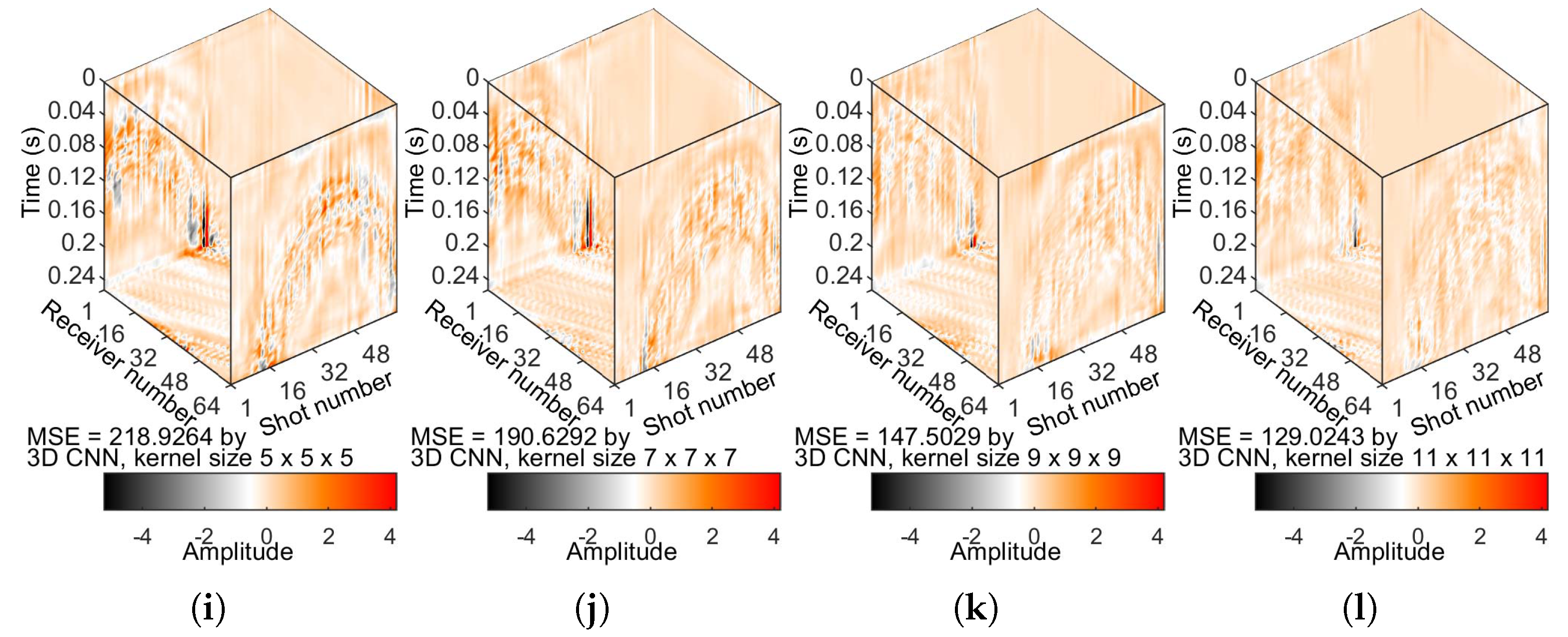

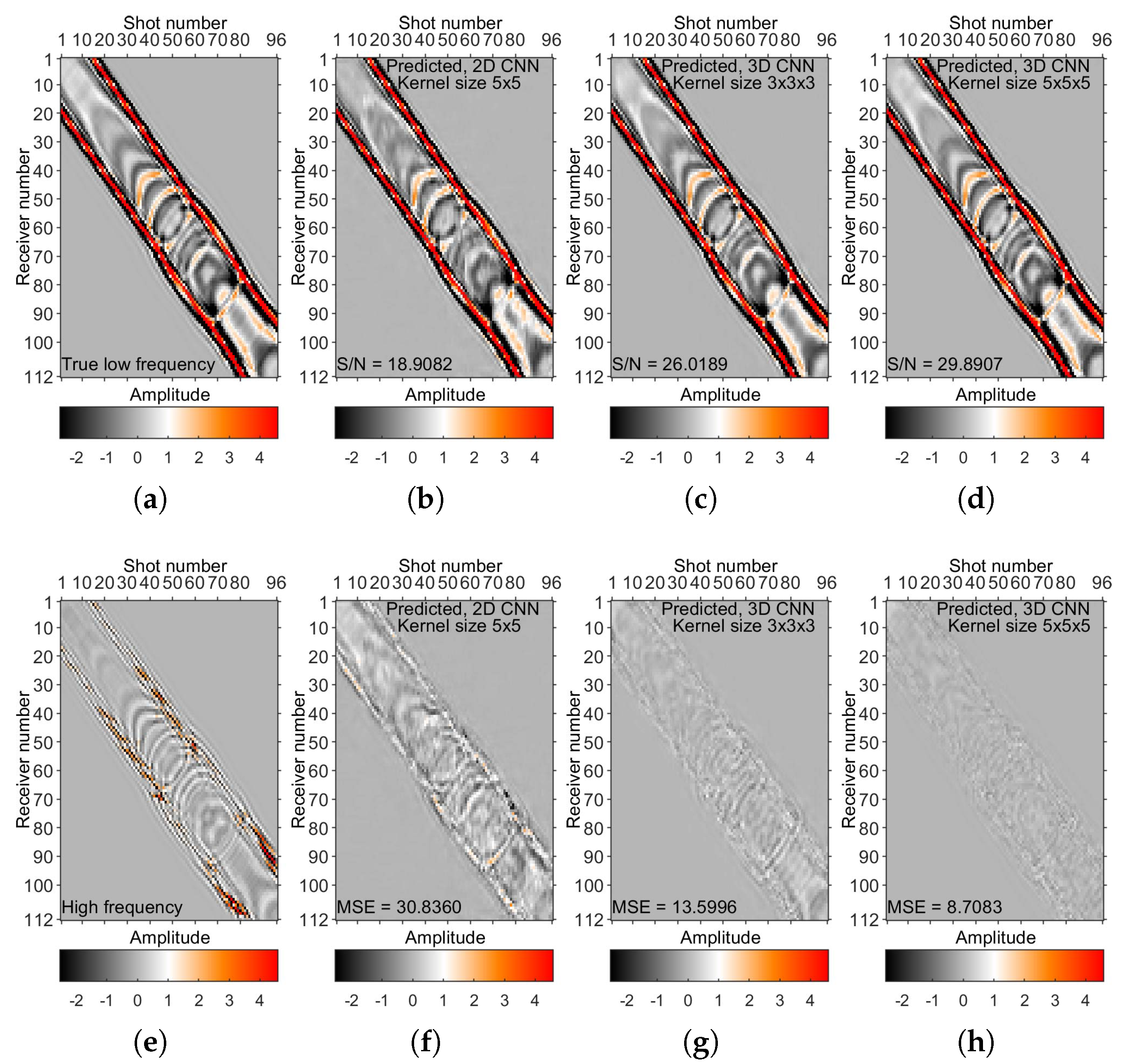

Figure 13 shows the S/N and MSE values. Figure 11e,f shows the low frequencies predicted by the 3D CNN with different kernel sizes. Figure 14 shows a time slice view at 1.2121 s of the 3D data volumes shown in Figure 11. Figure 15 displays a shot gather view of the 3D data volumes shown in

Figure 11. The DL-predicted low frequencies with a kernel size of

(

Figure 11f) are superior to the results of

(

Figure 11e), reflected in the S/N and MSE values (see also

Figure 13).

Figure 11d,f shows the low frequencies predicted by the 2D CNN and the 3D CNN, respectively. The low frequencies predicted by the 3D CNN (Figure 11f) are superior to those of the 2D CNN (Figure 11d), as reflected in the S/N and MSE values. We reconfirmed the importance of a larger kernel size to capture low frequencies.
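The S/N and MSE values quoted above are not defined in this section. The sketch below shows one common way to compute them, assuming that S/N is the ratio of the reference energy to the residual energy in decibels and that MSE is the mean squared residual. The function names `mse` and `snr_db` are hypothetical, and the paper's exact definitions may differ.

```python
import numpy as np


def mse(reference, estimate):
    """Mean squared error between a reference volume and its estimate."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    return float(np.mean((reference - estimate) ** 2))


def snr_db(reference, estimate):
    """Signal-to-noise ratio in dB, treating (reference - estimate) as noise."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    noise_energy = np.sum((reference - estimate) ** 2)
    if noise_energy == 0.0:
        return float("inf")  # perfect reconstruction
    return float(10.0 * np.log10(np.sum(reference ** 2) / noise_energy))


# Toy example: an estimate that is uniformly 10% too small.
true_lf = np.array([1.0, -1.0, 1.0, -1.0])
predicted_lf = 0.9 * true_lf
print(mse(true_lf, predicted_lf), snr_db(true_lf, predicted_lf))
```

Both functions accept arrays of any shape, so the same calls apply to a single trace, a shot gather, or a full 3D volume. A higher S/N and a lower MSE indicate a prediction closer to the true low frequencies.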

Because LFR is a bandwidth extension method, we also compare the reconstructed and actual low frequencies in the frequency domain for a shot gather (Figure 15). We show the result with far offsets and later arrival times in Figure 15. The results in Figure 11 and Figure 15 show that the developed method has adequate accuracy. Figure 16 shows the frequency-wavenumber (F-K) amplitude spectra of the data shown in Figure 15a, which indicate that the plots in Figure 15 are not aliased.

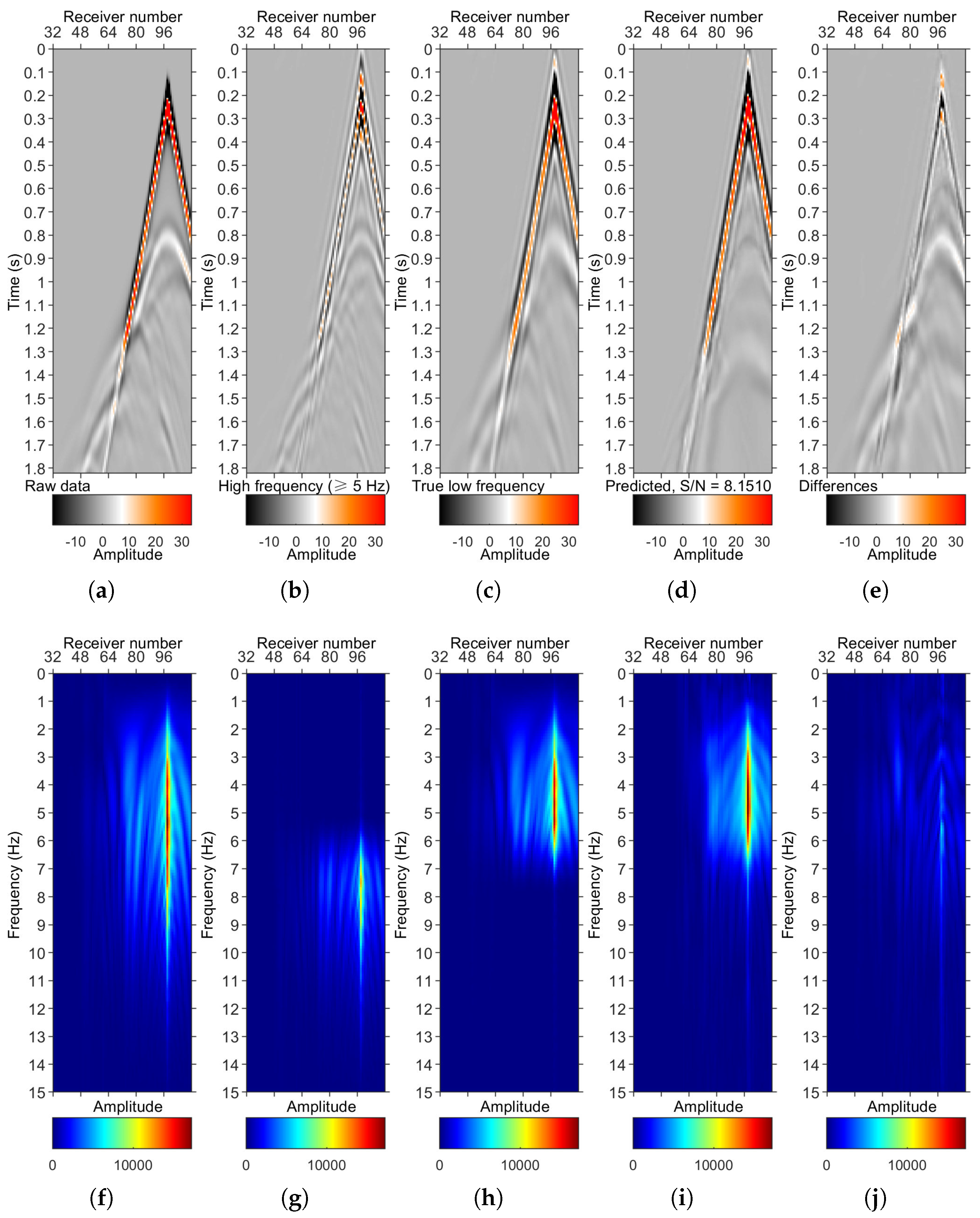

Figure 17 shows a test of the method on the BP 2004 benchmark model [42], which is completely unseen during training, in contrast to the Marmousi1 model used in the training process. This test helps assess the generalization ability of the proposed method. We observe that the accuracy of the LFR result for the unseen BP 2004 benchmark model is lower than that for the Marmousi1 model used in the training process. This is as expected and is likely due to the lack of diversity in the training dataset, which is built of crops from the Marmousi model. The training is biased toward the Marmousi1 model since the NN was trained on data generated on patches of this model. Even when crops are randomly sampled from the data/model, they still encode the geological contrasts present in the Marmousi1 benchmark model. The result partly supports the generalization ability of DL, but it also raises concerns about whether the training dataset is sufficient to enable inference on previously unseen data. It further indicates the significance of augmenting the training dataset’s diversity for supervised-learning-based methods because exposing the CNN to a wider scope of situations is one of the most effective ways to enhance the generalization ability of DL. Generalization capability (i.e., reaching superior generalizability in LFR) is undoubtedly important; however, it is not the core task of this work. The generalization ability of nearly all supervised-learning-based methods is limited. The data generation mechanism can be enhanced: training the CNN on a larger dataset generated from a wide variety of models is a sound approach, which is left as future work.

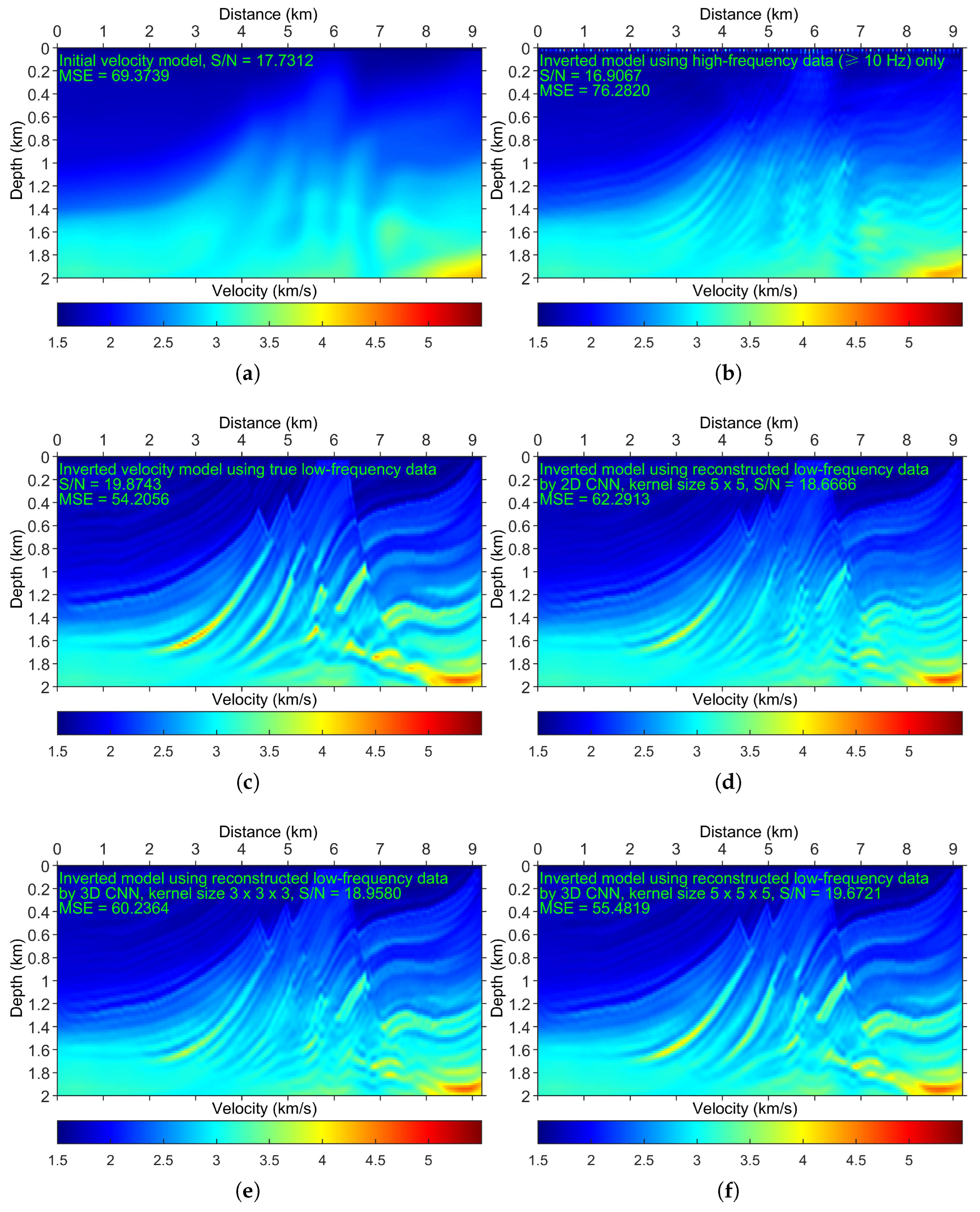

Figure 18 displays the results of low frequencies applied to FWI. The initial model shown in Figure 18a is not accurate enough. A comparison of Figure 18b (the FWI result using only high frequencies) and Figure 18c (the FWI result using true low frequencies) indicates that low frequencies are vital for FWI. The FWI result without low frequencies (Figure 18b) is even worse than the initial model (Figure 18a) (see the S/N and MSE values). Figure 18b,c shows the impact of low frequencies on the FWI results. The FWI result using the low frequencies predicted by the 3D CNN (Figure 18f), as expected, outperforms that of the 2D CNN (Figure 18d). A comparison of

Figure 18c,f (the FWI result using the DL-predicted low frequencies with a kernel size of

) indicates that the FWI result of the DL-predicted low frequencies nearly resembles that of the true low frequencies. The FWI result using the DL-predicted low frequencies with a kernel size of

(

Figure 18f), as expected, outperforms that of a

kernel size (

Figure 18e).

Figure 18d,f shows the impact of DL predictions when running FWI, where a more accurate LFR generates better FWI results. The above results imply that LFR is accurate enough to mitigate the cycle-skipping problem of FWI.

5. Discussion

For the results in

Figure 9 and

Figure 11, CNN predicted only once and directly output the whole volume with a dimension of

in

Figure 9 and a dimension of

in

Figure 11. That is, we can directly feed a trained CNN (trained with a specific input size, e.g.,

) with a different input size (e.g.,

). The explanation is that while coding, we set CNN’s input shape as “None” instead of a specific shape, which makes it adequately flexible for addressing the arbitrary input shape. To be more concrete, when programming with Python based on Keras, we define CNN’s input layer as “Input (shape = (None, None, None,

))”. Please see the shared code “FlexibleBridgeNet_Keras.py” for the actual implementation. A different input size will degrade the CNN’s performance to some extent. However, it is difficult to quantify how large a generalization gap the size difference introduces. We have inspected the learned features (by looking at the trained kernels) of the 2D and 3D CNNs to see which benefits are obtained by using a 3D seismic volume. The trained kernels look mainly like random numbers/weights, so it is currently difficult to judge the benefits from them.
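The reason a convolutional layer tolerates an unspecified input shape is that its weights belong to the kernel, not to the input size. The sketch below illustrates this with a plain NumPy stand-in for a single zero-padded 3D convolution. It is not the authors' Keras code, and the function name `conv3d_same`, the 3 x 3 x 3 kernel, and the volume sizes are assumptions chosen for illustration.

```python
import numpy as np


def conv3d_same(volume, kernel):
    """Zero-padded 3D cross-correlation whose output matches the input shape."""
    if volume.ndim != 3 or kernel.ndim != 3:
        raise ValueError("volume and kernel must both be 3D")
    if any(k % 2 == 0 for k in kernel.shape):
        raise ValueError("kernel sizes must be odd for symmetric padding")
    pad = [(k // 2, k // 2) for k in kernel.shape]
    padded = np.pad(volume, pad)  # default mode pads with zeros
    nt, nr, ns = volume.shape
    out = np.zeros(volume.shape, dtype=float)
    # Accumulate one shifted copy of the padded volume per kernel weight.
    for i in range(kernel.shape[0]):
        for j in range(kernel.shape[1]):
            for k in range(kernel.shape[2]):
                out += kernel[i, j, k] * padded[i:i + nt, j:j + nr, k:k + ns]
    return out


rng = np.random.default_rng(0)
kernel = rng.standard_normal((3, 3, 3))  # stands in for trained weights

# The same kernel is applied to a small "training-size" patch and to a
# larger "test-size" volume without any change to the weights.
small = conv3d_same(rng.standard_normal((16, 16, 16)), kernel)
large = conv3d_same(rng.standard_normal((40, 24, 20)), kernel)
print(small.shape, large.shape)
```

This covers a single layer only. In an encoder-decoder network, downsampling and upsampling stages usually add the practical constraint that each axis length be compatible with the total downsampling factor.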

One practical limitation of this approach is efficiency. The biggest concern with 3D convolution is the computational cost of both training and testing. In theory, a 3D data volume contains more information than 2D data but requires more computational resources. The computational cost of 3D networks is significantly higher than that of 2D networks, especially for the training process. To obtain the current result shown in Figure 11f, we trained the 3D network in approximately 25 min per epoch, whereas it takes about 2.4 min per epoch to train the 2D network. However, the performance of the 2D network (Figure 11d) is clearly worse than that of the 3D network (Figure 11f). Once trained, the computational cost for the network’s prediction process is comparatively inexpensive. For example, the network prediction of a dataset of size

takes approximately 5 s on our machine using the GPU. With the development of advanced hardware (e.g., GPUs), the computational cost is expected to become less of a concern. One common limitation of DL-based approaches is the CNN’s generalization ability. It is a significant challenge to train a CNN model that is widely applicable to all field datasets. The LFR results shown above can be further enhanced by augmenting the training dataset’s diversity since exposing the CNN to a wider scope of situations can strengthen DL’s generalization ability. We can synthesize the training dataset by utilizing numerous scenarios of underground models, sources, and wave equation forward modeling operators.

In the above examples, the DL network is trained on data from the Marmousi model, which has fixed acquisition parameters (time interval, source and receiver intervals, etc.), but is tested on a field dataset with different acquisition parameters. That is, when we applied CNNs trained on synthetic data, we did not adjust the geometry of the test data (field data) to “fit” the training data. One explanation is that the DL-based method learns the essence of transforming high frequencies into the corresponding low-frequency components and therefore has some tolerance to different acquisition parameters. However, in theory, the developed DL-based LFR method does place a requirement on the acquisition geometry. It is theoretically better to account for a different geometry, and in particular to address the situation in which the shot and/or receiver spacing is much larger than in the training dataset. To obtain the optimal prediction result from the trained CNNs, the geometry of the test data should approximate the geometry of the training data as closely as possible. In other words, we anticipate that the choice of the time interval, the receiver interval, and the source interval plays an important role in improving the accuracy. The reconstruction result is expected to be best when the time/receiver/source intervals of the training data and the test data are the same. It is generally difficult to adjust the geometry of the test field data, but it is relatively easy to adjust the geometry of the synthetic training data. The proposed CNN is trained with a synthetic “streamer” pressure dataset. We expect the method to work for land datasets and for velocity-component seismic data once the corresponding training datasets are prepared.

Regarding irregular datasets, e.g., those with missing traces and irregular geometry, our previous experience with irregularly missing data reconstruction [14,43] suggests that the presented framework, after improvement, can handle datasets with irregular geometry by preparing the CNN training datasets with irregular geometry. That is, the training inputs of the CNN are HF data with irregular geometry, while the training labels are LF data with regular geometry. This is achievable since we generally prepare the training datasets with seismic wave forward-modeling tools. In this way, we may realize LFR and irregularly missing data reconstruction simultaneously.
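The pairing of irregular HF inputs with regular LF labels described above could be prepared along the following lines. This is a hypothetical sketch, not part of the presented framework: the function name `make_irregular_pair`, the (time, receiver, shot) axis order, the representation of a missing trace as an all-zero trace, and the uniform random selection of missing traces are all assumptions.

```python
import numpy as np


def make_irregular_pair(hf_volume, lf_volume, missing_ratio, seed=0):
    """Build one training pair: HF input with dropped traces, regular LF label."""
    if hf_volume.shape != lf_volume.shape:
        raise ValueError("HF and LF volumes must have the same shape")
    if not 0.0 <= missing_ratio < 1.0:
        raise ValueError("missing_ratio must be in [0, 1)")
    rng = np.random.default_rng(seed)
    _, n_receivers, n_shots = hf_volume.shape
    # One keep/drop flag per (receiver, shot) trace; True means the trace is kept.
    keep = rng.random((n_receivers, n_shots)) >= missing_ratio
    # Broadcasting over the time axis zeroes every sample of a dropped trace.
    net_input = hf_volume * keep[np.newaxis, :, :]
    # The label is left untouched, so the network is asked to fill in traces
    # and reconstruct low frequencies at the same time.
    return net_input, lf_volume, keep


# Toy volumes standing in for forward-modeled HF and LF data.
hf = np.ones((8, 5, 6))
lf = 2.0 * np.ones((8, 5, 6))
x, y, keep = make_irregular_pair(hf, lf, missing_ratio=0.3)
print("kept traces:", int(keep.sum()), "of", keep.size)
```

Because the synthetic data come from forward modeling, the regular LF label is always available, and only the input is degraded.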

Applying the evaluated method to a field dataset for which the LF content (ground truth) is available would be meaningful, since it is important to test the generalization capability of a trained CNN, and a field data application would be more convincing with LF ground truth at hand. However, we currently do not have a suitable dataset with LF ground truth available. Therefore, application to such a field dataset is left as future work.

It is difficult to give a specific answer to the question of what limiting frequency the developed method can reconstruct in terms of FWI. Here, we assume that the lower limit of the band of the bandlimited data is 5 Hz. If we raise this lower limit (so that more low frequencies are missing), the method may reach its limit and fail to reconstruct useful low frequencies for mitigating the cycle-skipping problem of FWI. For field data applications, we believe that the limiting frequency depends on the quality of the available frequency band of the bandlimited data (i.e., how much effective information can be used) and the power of the DL-based LFR method. For a specific problem, the DL-based LFR method can be tailored to the target by upgrading the CNN architecture, enhancing the training data preparation, and improving the training strategies.
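To make the 5 Hz band limit concrete, the sketch below splits a trace into low- and high-frequency parts in the frequency domain. It is an illustration under stated assumptions, not the splitting procedure used in the paper: the raised-cosine transition, its 1 Hz width, and the function name `split_bands` are choices made here. A smooth transition is used instead of a hard cutoff because an abrupt spectral edge produces Gibbs-type ringing in the time domain.

```python
import numpy as np


def split_bands(trace, dt, f_cut=5.0, taper_width=1.0):
    """Split a 1D trace into LF and HF parts around f_cut (Hz)."""
    if taper_width <= 0.0:
        raise ValueError("taper_width must be positive")
    trace = np.asarray(trace, dtype=float)
    n = trace.shape[-1]
    freqs = np.fft.rfftfreq(n, d=dt)
    f_lo = f_cut - 0.5 * taper_width
    f_hi = f_cut + 0.5 * taper_width
    # Low-pass weight: 1 below f_lo, 0 above f_hi, half-cosine ramp in between.
    ramp = 0.5 * (1.0 + np.cos(np.pi * (freqs - f_lo) / (f_hi - f_lo)))
    lowpass = np.where(freqs <= f_lo, 1.0, np.where(freqs >= f_hi, 0.0, ramp))
    spectrum = np.fft.rfft(trace)
    lf = np.fft.irfft(spectrum * lowpass, n=n)
    # The complementary weight guarantees that lf + hf reproduces the trace.
    hf = np.fft.irfft(spectrum * (1.0 - lowpass), n=n)
    return lf, hf


# Toy trace: a 2 Hz component (to be treated as "missing") plus a 20 Hz one.
dt = 0.004
t = np.arange(1000) * dt
low_part = np.sin(2.0 * np.pi * 2.0 * t)
trace = low_part + np.sin(2.0 * np.pi * 20.0 * t)
lf, hf = split_bands(trace, dt)
print(float(np.max(np.abs(lf - low_part))))
```

In a training setup of the kind discussed here, `hf` would play the role of the network input and `lf` the label. Raising `f_cut` corresponds to the scenario in which more low frequencies are missing.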