1. Introduction

Wheat is a grain grown worldwide and plays a vital role in the world trade market, as it is one of the most important grains of the 21st century [1]. China, as the world’s largest consumer of wheat [2], accounts for one-fifth of the total planting area of food crops and 11.3% of the world’s total wheat planting area [3], especially winter wheat, which dominates the agricultural products in China [4]. Therefore, a timely and accurate understanding of the acreage of winter wheat and its distribution is essential for planting structure adjustment and the formulation of appropriate grain trading strategies.

Remote sensing data can provide accurate and timely information on crop phenology and development on a regional to global scale, and are considered one of the most accurate data sources for monitoring crop growth conditions and area estimation [5,6]. Spectral characteristics are the most critical and direct information of these images [7]. Remote sensing technology has the advantages of covering a wide area, having a short revisit cycle, being highly up to date, and having many access channels, which allows it to cover the entire crop growth cycle and helps to extract crop information at various scales quickly and accurately. Optical remote sensing data not only reflect the spectral characteristics of crops, but can also provide images at different resolutions [8]. They can be used to identify large areas of agricultural land [9] and extract crop-specific growing areas [10], such as wheat [11] and corn [12].

At present, when large-scale (e.g., provincial or national) crop mapping is carried out by remote sensing technology, spectral characteristics in time and space are difficult to reconcile due to the return period of satellite sensors and the imaging mechanism; the higher the spatial resolution of the image, the longer the return period, and the selection of the image often limits the classification accuracy [13]. For example, Qiu et al. [14] extracted winter wheat planting area information from MODIS data and achieved an overall accuracy of 88.86% when evaluated with Landsat images. Dong et al. [15] mapped winter wheat using Sentinel 2A/B data from the Yellow and Huaihe Plain of China and achieved an overall classification accuracy of 89.98% with a kappa coefficient of 0.7978. Large-scale remote sensing identification of winter wheat is influenced by regional environmental-climatic differences, differences in winter wheat phenological changes, and various agricultural landscape factors such as farm management level and soil conditions. Meanwhile, in the same period, other crops with phenological characteristics and growth environments similar to those of winter wheat show similar characteristics in remote sensing images. The selection of key phenological periods is vital for the effective differentiation between winter wheat and other crops. Within this period, the spectral characteristics presented by different feature types have strong variability, and obtaining remote sensing data that meet research requirements greatly affects the fine extraction of crops. Under this constraint, it is particularly important to acquire high-quality remote sensing images that can fully cover the study area during critical periods [16].

The red edge is the region where the spectral reflectance of green vegetation rises rapidly within a certain wavelength range and is closely related to the pigmentation status of the crop [17]. Numerous studies have shown that using the red-edge band to calculate spectral indices enhances the differences in spectral characteristics among feature types, enriches the feature space for crop classification, and is vital for improving the accuracy of the remote sensing classification of crops [18,19,20].

However, the spectral properties of the target features vary significantly owing to different sensors, and the same method cannot be used to solve the spectral heterogeneity. For crop area extraction, some researchers have used shallow learning methods such as support vector machines (SVMs) [21], random forests [22], and decision trees [23], but their performance depends on the accuracy of input data chosen on the basis of human experience, and they cannot adapt to increasing levels of complexity. Due to the uncertainty of mixed pixels or heterogeneous landscape areas in different remote sensing data, it is difficult for traditional algorithms to achieve good performance in large-scale crop classification. The emergence of deep learning has provided new ideas for crop classification extraction, such as fully connected neural networks (FCNs) [24] and back propagation (BP) neural networks (BPNNs). Such networks are suited to scenarios with smaller scales and smaller data volumes. When applied to image information with wide coverage and large data volumes, they often lead to a slow or even incomplete training process; this problem can be effectively resolved by convolutional neural networks (CNNs). CNNs, a branch of deep learning, have been extensively developed for image classification [25] and agricultural information extraction [26,27]. For better and faster processing of massive amounts of data, a CNN comprises multiple nonlinear mapping layers that can obtain higher dimensional features by mining the spatial correlation between target pixels and combining them for analysis [28,29]; this improves the classification accuracy [30] and has obvious advantages in 2D image processing [31]. CNNs have great advantages in extracting the correlation between pixels, and the spectral features, which are extracted by the convolutional layer, can satisfactorily express the spatial relationship between pixels [32]. The previously extracted features are then integrated by a fully connected layer, which has strong learning and fault tolerance abilities, thereby making the CNN effective for crop classification extraction.

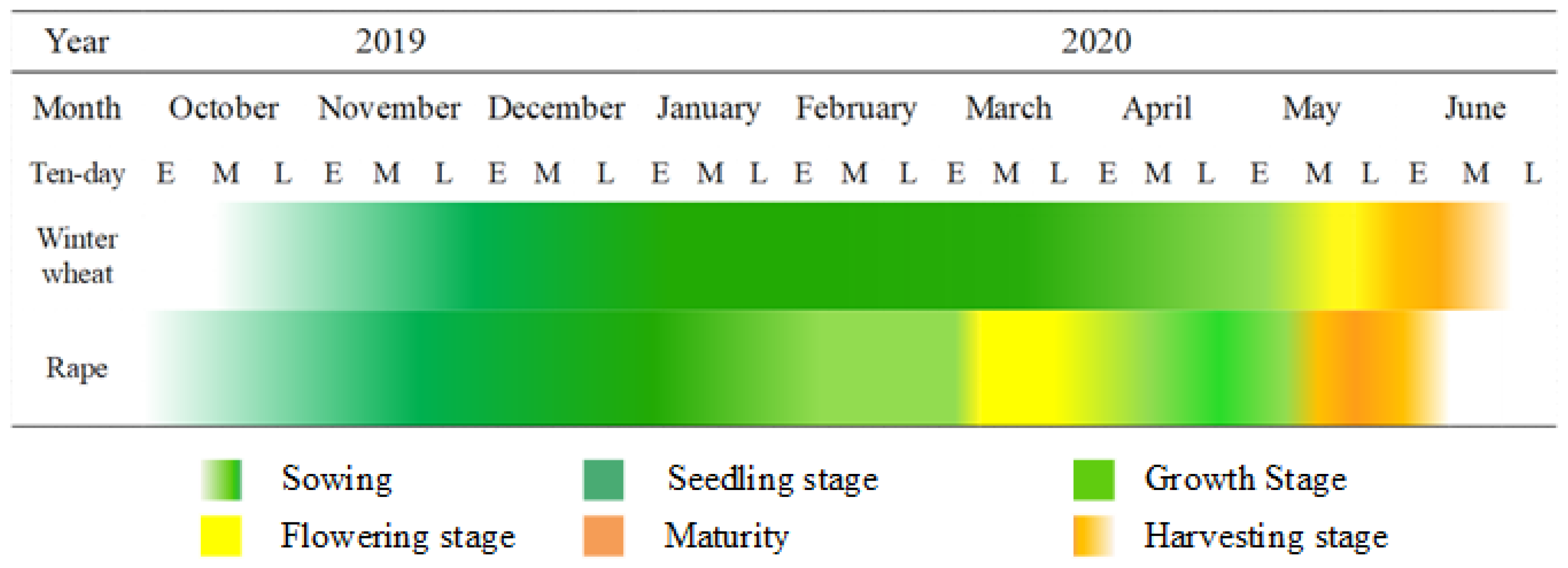

In this study, we conducted a spectral characteristic analysis on two selected remote sensing datasets (GF-6 WFV/16 m and Sentinel-2A/10 m) and combined it with a CNN for the classification extraction and planting area calculation of winter wheat, enhancing the capability of spectral features for crop extraction. For regions with complex agricultural landscapes, the variability of the winter wheat growth state often results in different spectral information in the images. Therefore, this study also used field research to enhance the accuracy of the visual interpretation of crops. The results show that combining a CNN with reinforced spectral characteristics of the target types is effective for the accurate extraction of winter wheat over a large area.

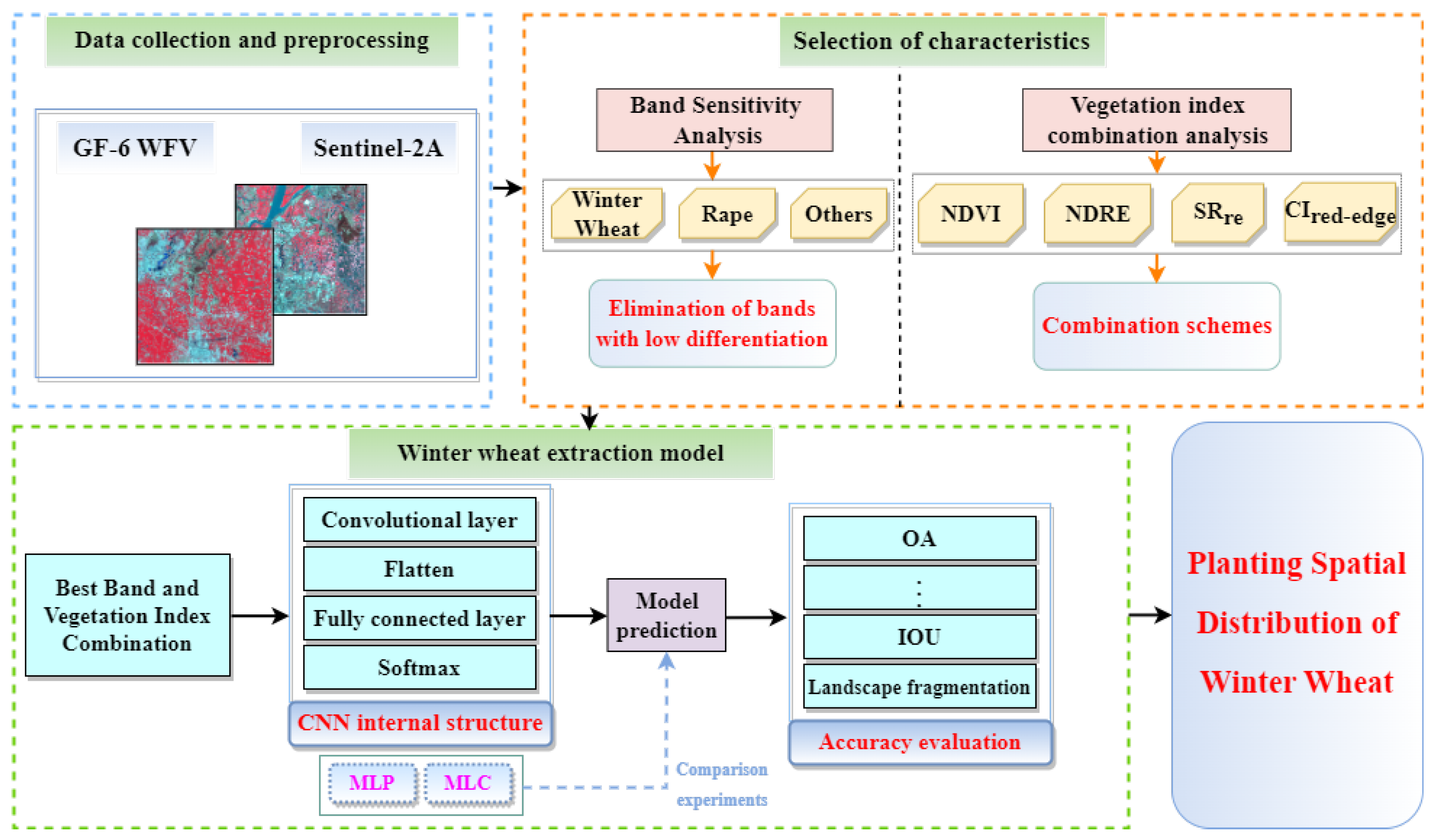

3. Methodology

In this study, we applied CNNs, a deep learning methodology showing outstanding performance in image classification tasks, to extract spectral features by combining the wavelength reflectance and vegetation index information of multispectral remote sensing images. We also used CNNs to identify and classify features such as winter wheat in Anhui Province through the feature contribution of different spectral information. By ranking the feature contribution of different types of spectral information, the optimal set of spectral features was selected to complete the fine classification and extraction of winter wheat. A flowchart of the methods is shown in Figure 5.

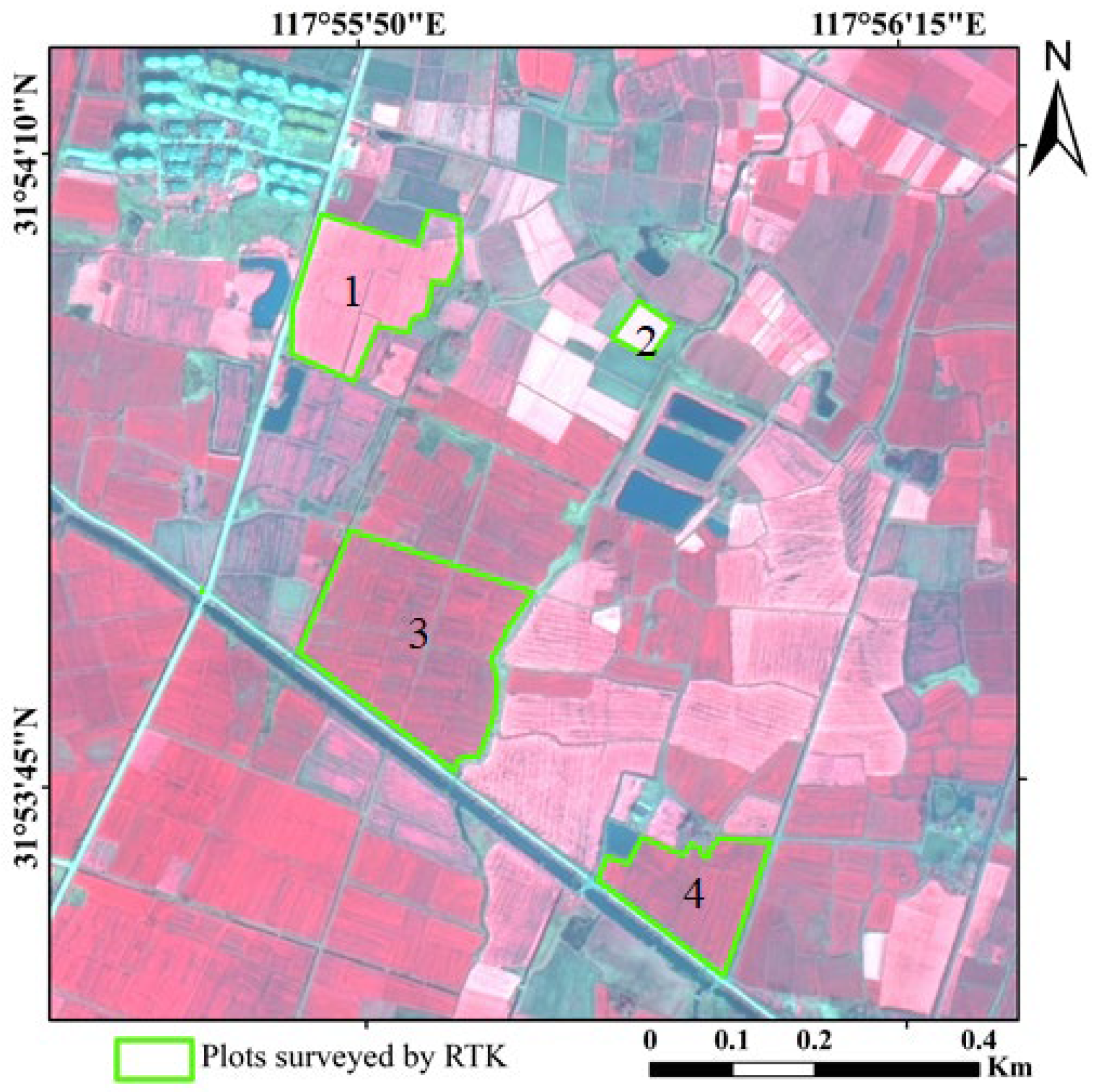

3.1. The Creation of a Database and Its Rules

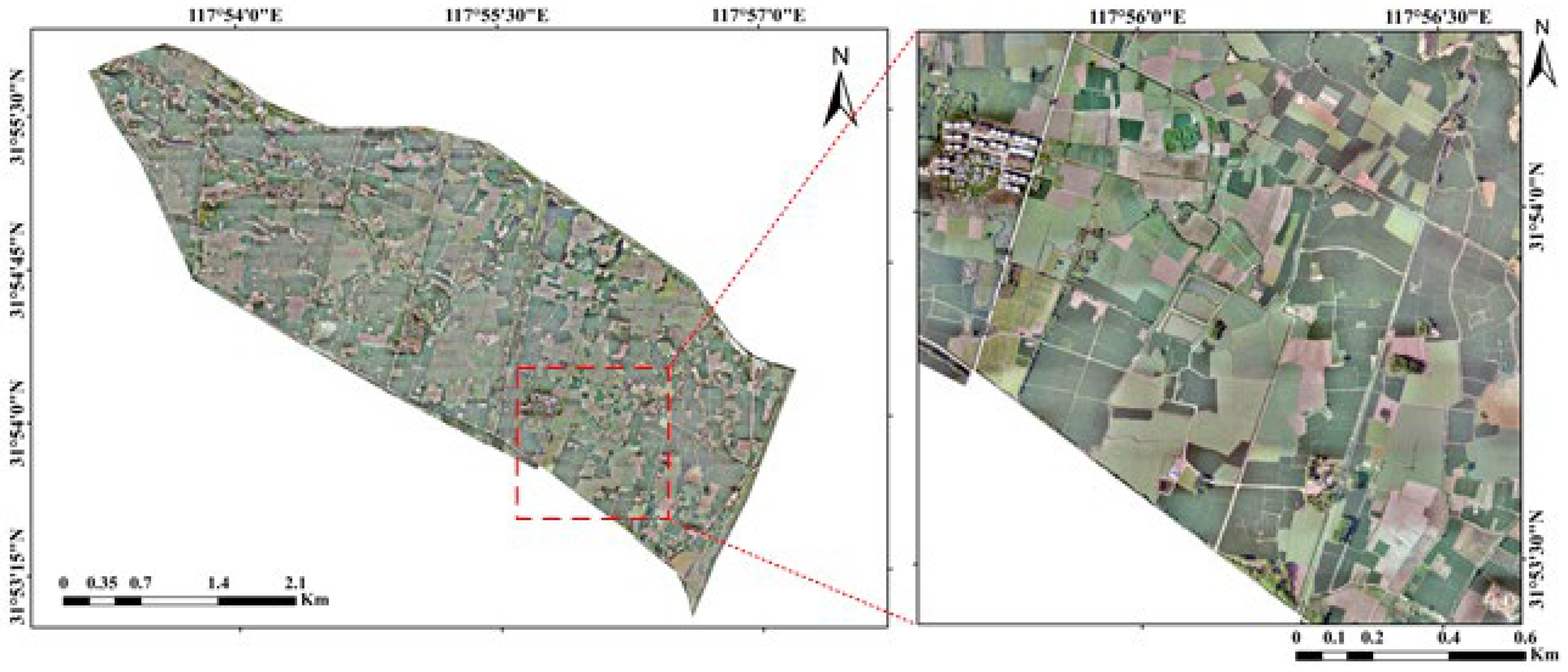

In the study area, fields are usually planted with winter wheat and interspersed with different proportions of other feature types (soil background, straw, and weeds). Other crops such as early rice and corn have not yet been transplanted during the winter wheat growth period. The unique phenological characteristics of winter wheat provide critical and effective information for its identification in multispectral remote sensing images. This study mainly used standard false color images to extract the information of winter wheat (“standard false color” means that the RGB channels of the image are displayed using the NIR, red, and green bands). In the standard false color images, winter wheat and rape appeared bright red and pink, respectively, while the other vegetation appeared dark red (Figure 6). To accurately distinguish various feature types in the images, this study used GF2 images and UAV data for assistance, and manually photographed and recorded various types of plots (Table 4). This was used as the basis for constructing a sample library for winter wheat and other feature categories. The number of samples is presented in Table 5.

3.2. Analysis of Spectral Characteristics

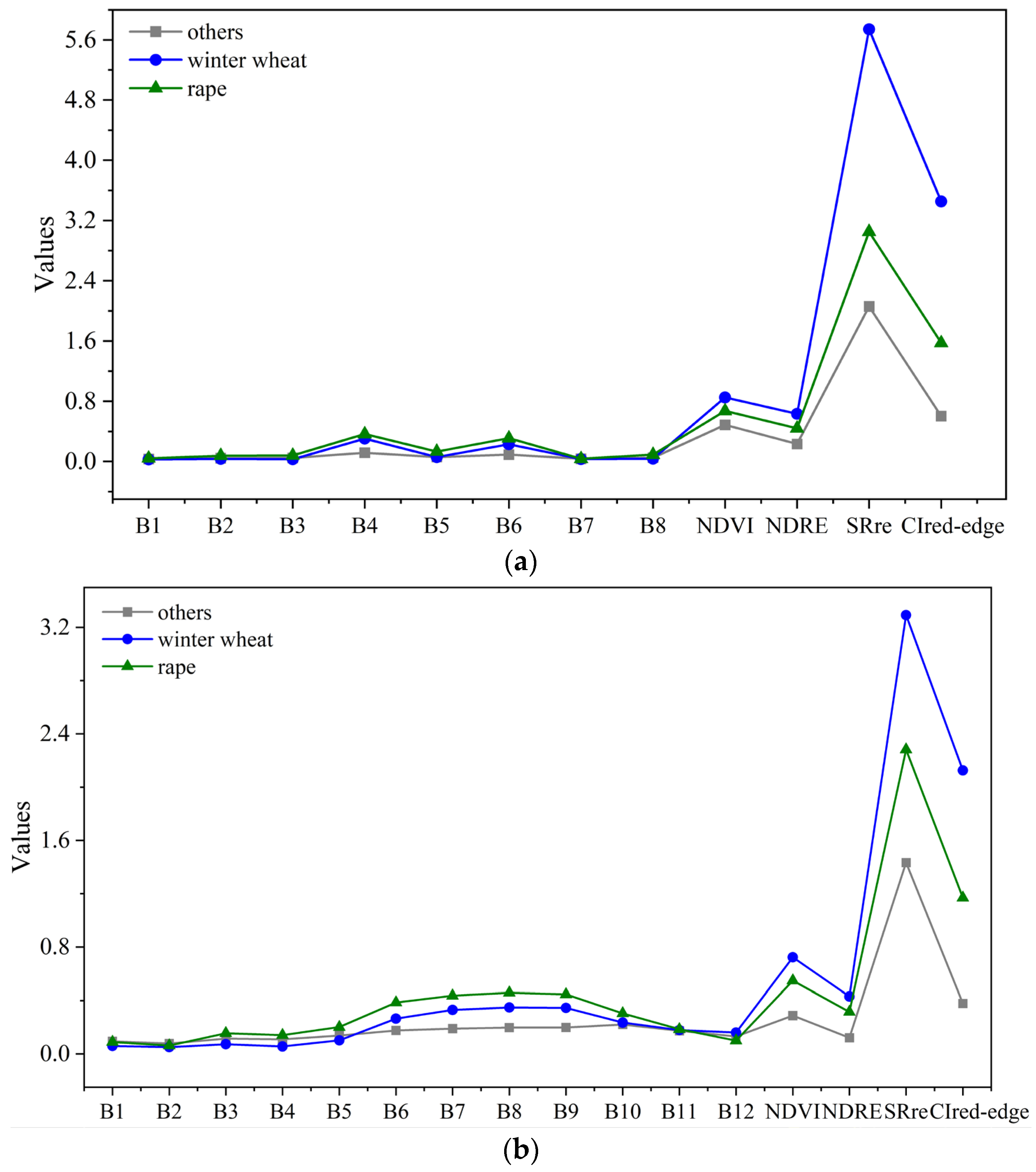

3.2.1. Band Sensitivity Analysis

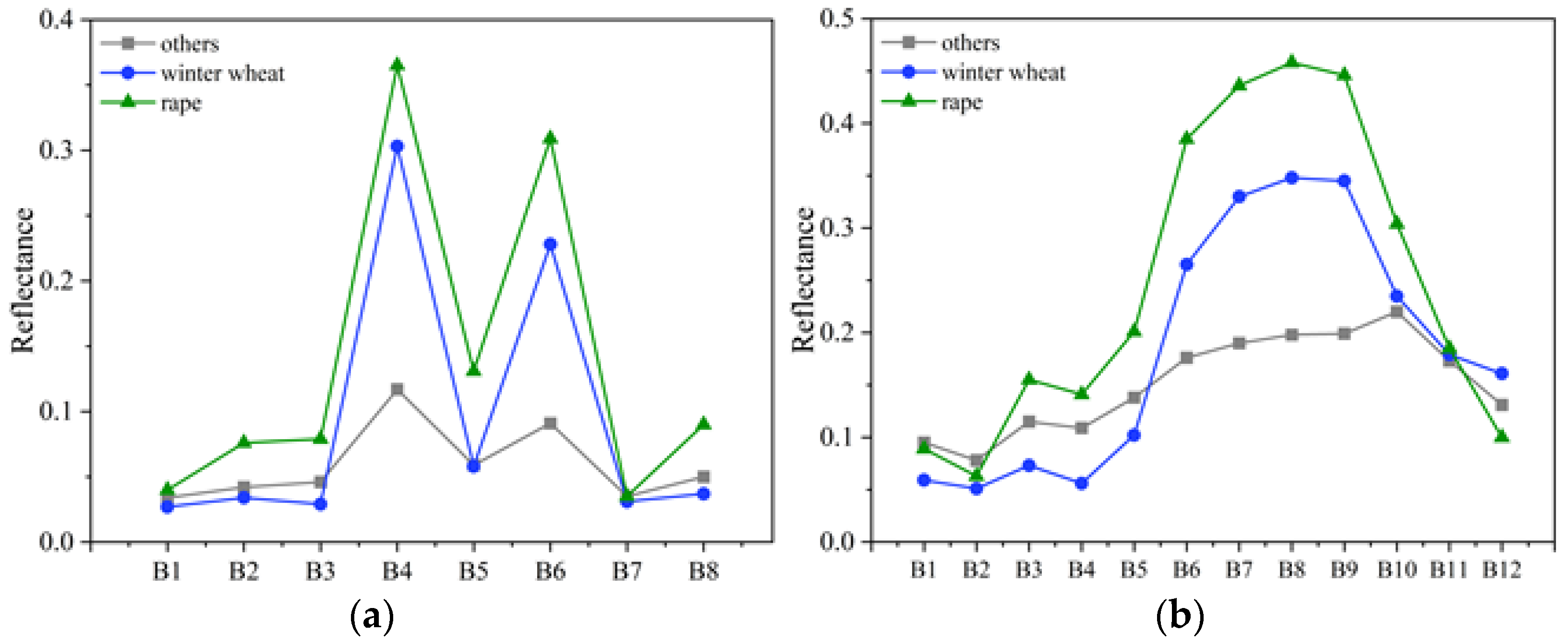

Using the full spectral information provided by the remote sensing data increases the computation time and does not improve the results. To determine the best band combination, this study analyzed the average pixel radiance values of the samples of winter wheat, rape, and other categories (including feature categories such as buildings, water bodies, mountains, and other vegetation) in the two datasets (Figure 7). Notably, the spectral trends of the three categories are roughly similar. When the points of the categories overlap on a band or are too close to each other, the categories are considered not clearly distinguishable on that band, while those separated by a large gap are considered clearly distinguishable. Thus, we drew the following conclusions:

- (1) GF-6 WFV data: the trend of the first three bands is relatively flat, with more obvious peaks in bands 4 and 6 and troughs in bands 5 and 6. The difference between the values of the three categories in bands 4 and 6 was obvious and highly differentiated, while the overlap between the points of the three categories in bands 1 and 7 was high; the differentiation between crops and other feature categories was low.

- (2) Sentinel-2A data: winter wheat and rape show a clear rising trend on bands 6 to 8, presenting a high degree of differentiation from other feature types, whose trends are relatively flat. Rape could not easily be distinguished from other features on band 1, and the reflectances of rape and winter wheat are similar on bands 2 and 11.

Figure 7. Analysis of spectral reflectance curves of various classes in (a) GF-6 WFV and (b) Sentinel-2A data.


Therefore, the effective bands of GF-6 WFV capable of distinguishing winter wheat, rape, and other categories are initially filtered as B2, B3, B4, B5, B6, and B8. Similarly, the effective bands of Sentinel-2A are B3, B4, B5, B6, B7, B8, B9, and B12.
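The screening rule described above (a band is useful when the class means are well separated on it) can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the `min_gap` threshold, and the sample values are hypothetical and are not taken from the study, which judged separability visually from Figure 7.

```python
import numpy as np

def band_separability(class_means):
    """Smallest pairwise gap between class means, per band.

    class_means: array of shape (n_classes, n_bands) holding the mean
    value of each class in each band.
    """
    class_means = np.asarray(class_means, dtype=float)
    n_classes = class_means.shape[0]
    gaps = []
    for i in range(n_classes):
        for j in range(i + 1, n_classes):
            gaps.append(np.abs(class_means[i] - class_means[j]))
    # A band is only as separable as its closest pair of classes.
    return np.min(gaps, axis=0)

def select_bands(class_means, min_gap):
    """Return 0-based indices of bands whose smallest class gap exceeds min_gap."""
    return np.flatnonzero(band_separability(class_means) > min_gap)

# Made-up class means (rows: winter wheat, rape, other; columns: 4 bands).
means = np.array([
    [0.05, 0.08, 0.40, 0.10],
    [0.05, 0.12, 0.30, 0.11],
    [0.06, 0.20, 0.15, 0.10],
])
selected = select_bands(means, min_gap=0.03)
```

With these made-up values, only the second and third bands keep all three classes apart, so they are the ones retained.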

3.2.2. Vegetation Index Extraction Based on Sentinel-2A and GF-6 WFV Imagery

The vegetation index was obtained by combining different bands of satellite image data, thus compressing the spectral information into a single channel reflecting the growth status of surface vegetation [36]. The sensitivity of different vegetation indices varies among features, among which NDVI is widely used in crop extraction and monitoring. The unique red-edge band of GF-6 and Sentinel-2A images is an important indicator band for describing vegetation pigmentation and health status [37]. Therefore, in this study, we used three red-edge indices, NDRE, SRre, and CIred-edge, as index features to investigate their potential for extracting winter wheat information. The formulae for each vegetation index are listed in Table 6.
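As a rough illustration of how such indices are computed per pixel, the sketch below uses the commonly cited definitions of NDVI, NDRE, the red-edge simple ratio, and the red-edge chlorophyll index. The exact band assignments used in Table 6 are not reproduced here, so the argument names (`nir`, `red`, `red_edge`) and the small `eps` guard against division by zero are assumptions.

```python
import numpy as np

def vegetation_indices(nir, red, red_edge, eps=1e-10):
    """Compute four vegetation indices from reflectance arrays of equal shape.

    Uses the widely cited textbook definitions; which sensor band plays
    the role of NIR or red edge is left to the caller.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    red_edge = np.asarray(red_edge, dtype=float)
    return {
        "NDVI": (nir - red) / (nir + red + eps),
        "NDRE": (nir - red_edge) / (nir + red_edge + eps),
        "SRre": nir / (red_edge + eps),
        "CIred-edge": nir / (red_edge + eps) - 1.0,
    }

# Single made-up pixel: strong NIR reflectance, low red, moderate red edge.
indices = vegetation_indices(nir=0.5, red=0.1, red_edge=0.25)
```

Each index can then be stacked with the selected bands as an extra input channel.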

Figure 8 shows the overall numerical trends of the feature types, such as winter wheat, after adding the vegetation indices. Notably, the values of each index for winter wheat were higher than those for the other two categories, and the three categories are most clearly distinguished in the SRre and CIred-edge indices, which could increase the difference between winter wheat and other categories to some extent.

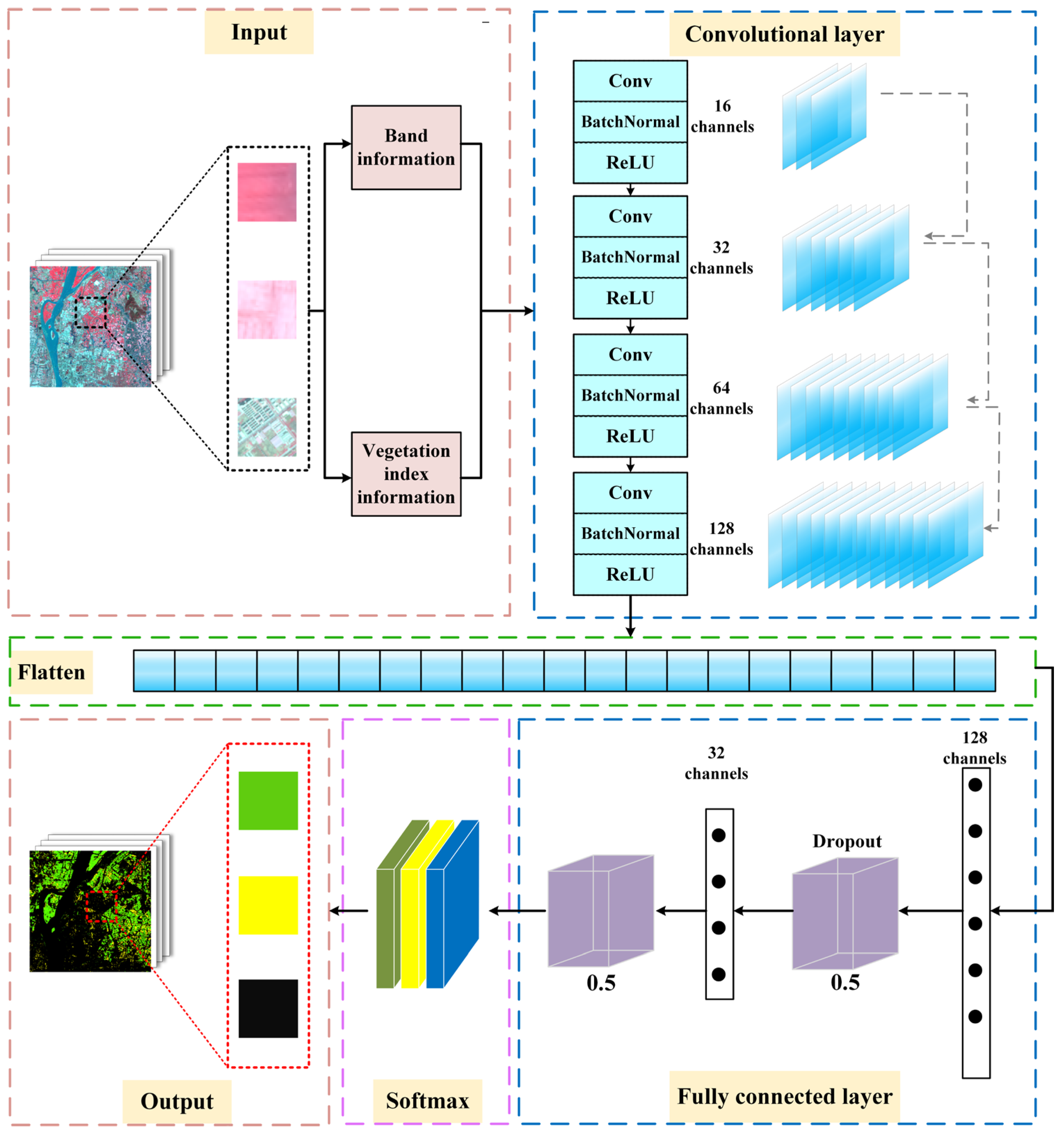

3.3. Winter Wheat Extraction Model Based on the Convolutional Neural Network

Considering the spectral variability between winter wheat and other classes, a CNN, which is often used for classification tasks, was used. The CNN is based on the original multilayer neural network, with the addition of partially connected convolutional and pooling layers, which are more effective feature-learning structures. The local receptive field operation of CNNs can significantly reduce the computational complexity of the feature extraction process and thus improve computational efficiency. The CNN classifier architecture constructed in this study is illustrated in Figure 9.

The significant spectral characteristic information from the remote sensing images was input into the model, and the characteristic information was extracted by the convolutional layers. Each convolution block comprises one convolution layer, one batch normalization layer, and one activation function layer. Since convolution is a linear operation, it is necessary to add a nonlinear mapping. We chose the rectified linear unit (ReLU) function as the activation function in the convolution layer, as it is an unsaturated nonlinear function with fast convergence that alleviates the vanishing gradient problem [42]. Adding a batch normalization layer combined with the ReLU function can speed up the training of the network, achieve efficient computation, and mitigate gradient diffusion during training [43]. The convolutional layer is computed by sliding the convolutional kernel window by window over the previous input layer. Each parameter in the convolutional kernel is called a weight parameter and is multiplied with the corresponding pixel to obtain the result of the convolutional layer. In this study, a 1 × 1 convolution kernel is used. A 1 × 1 convolution can reduce or increase the feature dimension by controlling the number of convolution kernels (channels); this helps reduce the number of model parameters and normalizes the size of different features. It can also be used to fuse features across different channels. The first convolution layer may only be able to extract low-level features such as lines and edges, while a multilayer network can improve on this by further extracting more detailed features. Therefore, the model in this study was set up with four convolution blocks, which iteratively extract more complex features from the low-level features, effectively reducing the problem of heavy computation or numerous parameters that traditional neural networks cannot handle.
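To make the role of the 1 × 1 kernel concrete, the following NumPy sketch shows that a 1 × 1 convolution is simply the same linear map applied to the channel vector of every pixel, so the number of output channels is set by the number of kernels. It is a minimal illustration, not the network used in the study: the shapes, random weights, and helper names are assumptions, and batch normalization is omitted for brevity.

```python
import numpy as np

def conv1x1(x, weights, bias):
    """Apply a 1 x 1 convolution.

    x:       feature map of shape (H, W, C_in)
    weights: array of shape (C_in, C_out), one column per kernel
    bias:    array of shape (C_out,)
    """
    # Contract the channel axis of x with the first axis of weights.
    return np.tensordot(x, weights, axes=([2], [0])) + bias

def relu(x):
    """Rectified linear unit: negative values are set to zero."""
    return np.maximum(x, 0.0)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 4, 10))   # 10 input channels (bands plus indices)
w = rng.normal(size=(10, 6))      # 6 kernels reduce the depth to 6 channels
b = np.zeros(6)

y = relu(conv1x1(x, w, b))
```

Because no neighbouring pixels are involved, the spatial size stays at 4 × 4 while the channel depth changes from 10 to 6.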

To utilize the learned features in the winter wheat classification task and successfully map them to the target values, fully connected (FC) layers are added after the convolutional layers. These layers fuse the resulting information, enhance the information representation, and reduce the loss of feature information. Between the convolutional and FC layers, a “Flatten” layer was set to compress the (height, width, channel) data into a one-dimensional array of length height × width × channel. A dropout layer was set after each fully connected layer to reduce overfitting and improve network training efficiency. With dropout, the nodes of the weight layer are randomly sampled with a certain retaining probability, only the sampled nodes participate in the update, and the resulting sub-network serves as the target network for that update. Because some nodes are randomly excluded, this prevents features from being effective only under fixed combinations and encourages the network to learn general commonalities (instead of characteristics of particular training samples), which improves the robustness of the trained model. After the dropout layer, the number of neural nodes is 64; after the second FC layer, it becomes 32; and after the next dropout layer, it becomes 16, so that the number of nodes is gradually reduced in preparation for the final fit. Subsequently, a softmax classifier is added at the end of the CNN model, with the number of neurons equal to the number of categories to be predicted. Finally, the classification result was output as an image.
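The flatten, fully connected, dropout, and softmax stages described above can be sketched as a plain NumPy forward pass. This is a hedged illustration rather than the authors' implementation: the layer widths 64, 32, and 16 and the three output classes follow the text, whereas the input size, the keep probability of 0.5, the random weights, and all function names are assumptions.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def dropout(x, keep_prob, rng, training):
    """Inverted dropout: active only during training."""
    if not training:
        return x
    mask = rng.random(x.shape) < keep_prob
    return x * mask / keep_prob

def classifier_head(features, params, rng, keep_prob=0.5, training=False):
    """Flatten -> (FC + ReLU + dropout) repeated -> FC + softmax."""
    x = features.reshape(features.shape[0], -1)   # Flatten layer
    for w, b in params[:-1]:
        x = np.maximum(x @ w + b, 0.0)            # FC layer with ReLU
        x = dropout(x, keep_prob, rng, training)
    w, b = params[-1]
    return softmax(x @ w + b)                     # class probabilities

rng = np.random.default_rng(0)
features = rng.normal(size=(8, 1, 1, 16))         # 8 samples from the conv blocks
sizes = [16, 64, 32, 16, 3]                        # 3 classes: wheat, rape, other
params = [(rng.normal(scale=0.1, size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

probs = classifier_head(features, params, rng)
```

At inference time dropout is disabled, so the full network is used and each row of `probs` sums to one.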

In this paper, the network is optimized using an Adam optimizer, with the initial learning rate set to 0.001. To better train the model, the learning rate is automatically adjusted as training progresses, which, combined with the optimizer, speeds up the convergence of the network. A multi-class cross-entropy loss function is used. The training process has a total of 100 epochs with a batch size of 1024.
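For reference, the multi-class cross-entropy loss in its standard form is given below. The symbols are introduced here for illustration and do not appear in the original text: $N$ is the number of samples in a batch, $K$ the number of classes, $y_{n,k}$ the one-hot label, and $p_{n,k}$ the softmax output for sample $n$ and class $k$.

$$
L = -\frac{1}{N}\sum_{n=1}^{N}\sum_{k=1}^{K} y_{n,k}\,\log p_{n,k}
$$

Since each label is one-hot, only the log-probability assigned to the true class of each sample contributes to the sum.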

3.4. Evaluation Index

To verify the efficiency of the model in identifying winter wheat, the results were quantitatively evaluated. The main evaluation methods include: (1) overall accuracy (OA), the ratio of the number of correctly classified samples to the total number of samples; (2) precision, the ratio of the number of correctly extracted samples to that of all extracted samples; (3) recall, the ratio of the number of correctly extracted samples to that of true samples; and (4) F1, the harmonic mean of precision and recall. We set $TP$ as the number of samples with correctly extracted targets, $FP$ as the number of samples with incorrectly extracted targets, $TN$ as the number of samples with correctly extracted negative targets, and $FN$ as the number of samples with unextracted targets. The equations for each evaluation method are as follows:

$$OA = \frac{TP + TN}{TP + TN + FP + FN}$$

$$Precision = \frac{TP}{TP + FP}$$

$$Recall = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times Precision \times Recall}{Precision + Recall}$$

Additionally, another metric, intersection over union (IOU), was used to assess the shape and area of both winter wheat and rape. The IOU is the ratio of the intersection of the predicted area and the ground truth to their union. It is calculated as follows:

$$IOU = \frac{|P \cap G|}{|P \cup G|}$$

where $P$ is the predicted area and $G$ is the ground truth area of the category.
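The metrics in this section can be computed directly from the four confusion counts of a class. The sketch below uses the standard definitions, with TP, FP, TN, and FN denoting correctly extracted targets, incorrectly extracted targets, correctly rejected non-targets, and missed targets, respectively; the function name and the sample counts are hypothetical. In terms of pixel counts, the IOU equals TP / (TP + FP + FN).

```python
def classification_metrics(tp, fp, tn, fn):
    """Return OA, precision, recall, F1 and IOU from confusion counts."""
    total = tp + fp + tn + fn
    oa = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    # Intersection = TP; union = TP + FP + FN.
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"OA": oa, "precision": precision, "recall": recall,
            "F1": f1, "IOU": iou}

# Made-up counts for one class on a validation tile.
metrics = classification_metrics(tp=80, fp=10, tn=100, fn=10)
```

For these made-up counts the OA is 0.9 and the IOU is 0.8, which shows that IOU is the stricter measure because it ignores true negatives.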

3.5. Landscape Fragmentation Analysis

For further precision analysis of the study results, the concept of landscape fragmentation was introduced to analyze the validation area. The landscape fragmentation degree characterizes how fragmented the landscape is [44]. The larger this value, the higher the complexity of the landscape spatial structure. Its calculation formula is as follows:

$$C_i = \frac{N_i}{A_i}$$

where $C_i$ is the fragmentation of landscape $i$, $N_i$ is the number of patches in landscape $i$, and $A_i$ is the total area of landscape $i$.
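A patch-density style fragmentation value (number of patches divided by total area) can be estimated from a binary class mask as sketched below. The sketch rests on several assumptions that the text does not specify: patches are taken to be 4-connected pixel groups, the area is taken to be the area covered by the class itself, and `pixel_area` carries whatever area unit the analysis uses. All function names are hypothetical.

```python
from collections import deque
import numpy as np

def count_patches(mask):
    """Count 4-connected groups of True pixels with a breadth-first search."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros_like(mask, dtype=bool)
    rows, cols = mask.shape
    patches = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r, c] and not seen[r, c]:
                patches += 1
                seen[r, c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny, nx] and not seen[ny, nx]):
                            seen[ny, nx] = True
                            queue.append((ny, nx))
    return patches

def fragmentation(mask, pixel_area):
    """Number of patches divided by the total class area."""
    mask = np.asarray(mask, dtype=bool)
    total_area = mask.sum() * pixel_area
    if total_area == 0:
        return 0.0
    return count_patches(mask) / total_area

# Made-up 4 x 4 crop mask containing three separate patches.
crop_mask = np.array([
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 1, 1],
    [1, 0, 0, 0],
])
n_patches = count_patches(crop_mask)
frag = fragmentation(crop_mask, pixel_area=1.0)
```

Because the value depends on the area unit and on pixel size, results from sensors with different resolutions are not directly comparable without a common unit.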

4. Results and Discussion

4.1. Evaluation and Analysis of Winter Wheat Planting Area

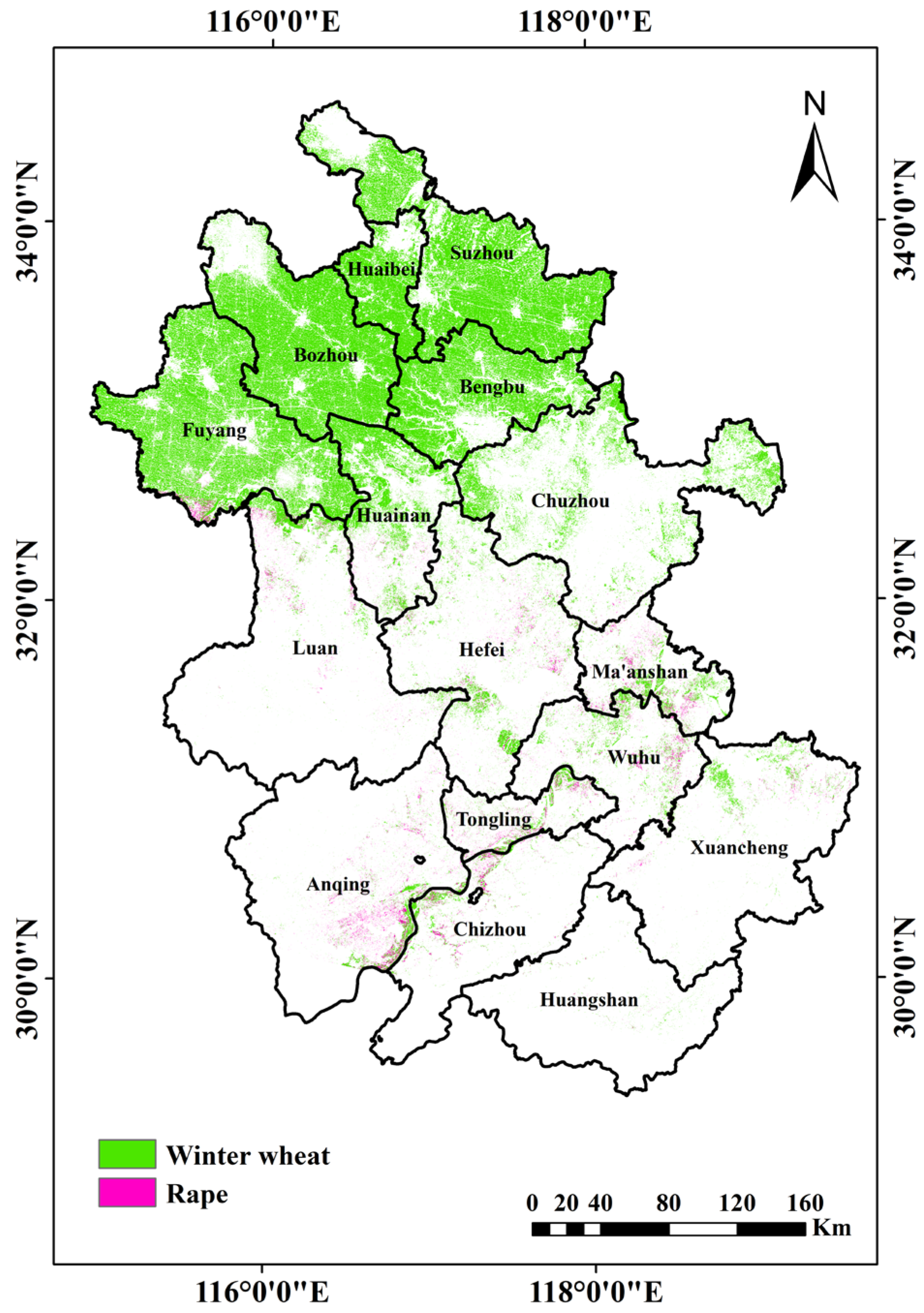

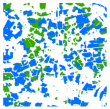

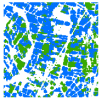

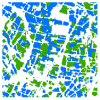

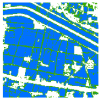

Figure 10 shows the extraction results of this method for winter wheat and rape in the study area and their spatial distributions.

Table 7 presents the results of the remote sensing monitoring of winter wheat in each prefecture of the study area and their comparison with the statistical results.

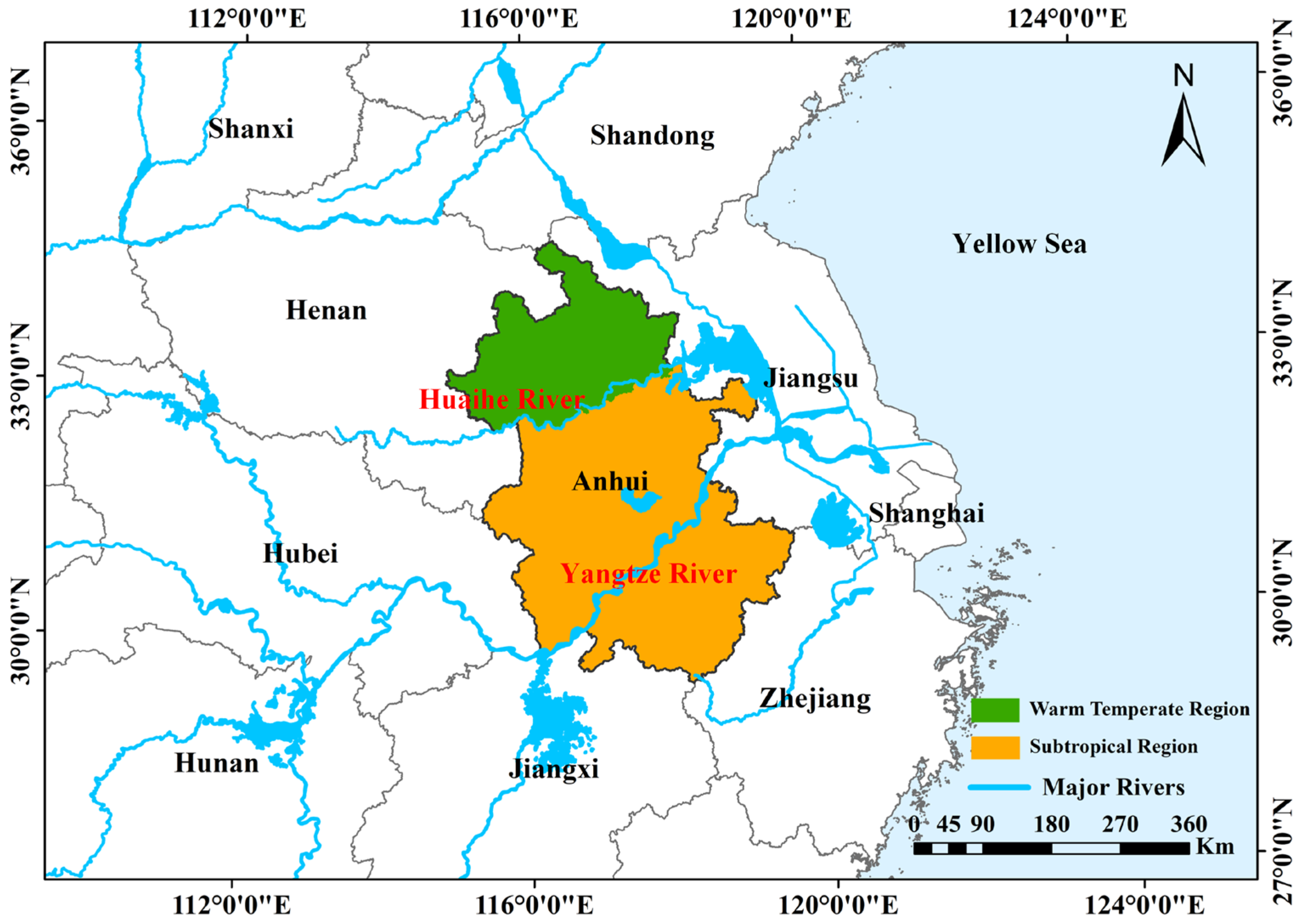

The evaluation results indicate that the area north of the Huaihe River and along Huaihua, which has a warm temperate semi-humid monsoon climate, is the main planting area for winter wheat. The central and eastern parts of the study area are hilly, while the southern part is mostly mountainous and hilly. Winter wheat is scattered between the Yangtze and Huai Rivers and along the Yangtze River. Owing to the dense population in the northern part of the study area, the wide distribution of winter wheat cultivation, and the resolution of the sensors, ridges between cultivated fields, roads, and other non-winter wheat areas were incorrectly classified as winter wheat cultivation areas due to mixed pixels. Consequently, the remote sensing results were generally larger than the statistical results. In the southern region, the wheat planting area is generally small, and the winter wheat planted there often could not be finely extracted; consequently, the remote sensing results were lower than the statistical results. Huangshan City is dominated by mountains, and its planting area of winter wheat is almost zero. The misidentification of this method is large for this area, which may be caused by vegetation on the mountains. The cities in the central region show a large deviation in the absolute error value, presumably because they lie in the region where two remote sensing images are stitched together. This results in differences in the spectral features to some extent, thus reducing the overall classification accuracy.

Rape is mainly concentrated in the hills of Jianghuai and Huaihua, along the Yangtze River and southern Anhui, because the area south of the Huai River has a subtropical humid monsoon climate. Heat conditions, temperature, precipitation, and other climatic conditions meet the growth requirements of rapeseed. A small amount of rape is also distributed in the area north of the Huaihe River, but its climatic conditions make rape susceptible to autumn drought, which affects the normal sowing and emergence of rape. The area south of the Huai River was the main growing area for rape, and further south, the rape growing area was larger. Thus, most of the area predicted by the model was lower than the actual statistical area, thereby resulting in a low accuracy in the extraction of rape planting area.

4.2. Analysis of the Effectiveness of Spectral Characteristics Selection

To verify the effect of spectral characteristics on the extraction results of winter wheat, we conducted an experimental comparison on the remote sensing data of GF-6 WFV and Sentinel-2A. The details of the test results are presented in Table 8. The “boundary wrapping” phenomenon of pixels and the misclassification were reduced after excluding the bands with poor distinguishing capacities. To quantify the results between the two methods, Table 9 presents OA and other indices for winter wheat and rape on the two remote sensing images. Notably, the OA improved by approximately 0.5% after band screening, with recall and precision improving by 0.5% on average.

However, the extraction of winter wheat using band characteristics alone is far from highly accurate; therefore, vegetation indices were added in this study to enhance the characteristic variability of crops. For this purpose, four vegetation indices, combined with the band filtering results as the overall features, were introduced into the CNN model, and the resulting crop classification results are presented in Table 10. To further illustrate the effect of vegetation index combinations, the values of OA and recall for various combination schemes are presented in Table 11. It is clear that the overall extraction accuracy for winter wheat increased after using the four vegetation indices NDVI, NDRE, SRre, and CIred-edge to enhance the characteristics of both GF-6 WFV and Sentinel-2A data. The OA values of GF-6 WFV and Sentinel-2A images increased by 1.94% and 0.89%, respectively. After filtering the band features and then enhancing the image characteristics by adding vegetation indices closely related to the red-edge information, the ability of the CNN model to distinguish winter wheat from other feature types (such as weeds, field ridges, and trees) was enhanced, reducing the probability of misidentification and improving the extraction accuracy of winter wheat in the region. This facilitated the accurate calculation of the winter wheat planting area.

4.3. Comparison of Ablation Experiments with Related Networks

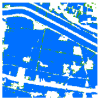

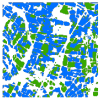

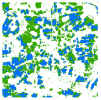

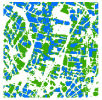

To verify the advantages of CNN with this spectral feature enhancement approach in extracting winter wheat acreage, a deep neural network (MLP) and maximum likelihood classification (MLC) methods were used for comparison. All the models were trained and tested using the same spectral feature data as the test set. We cropped the GF-6 WFV data to several scenic images of 256 size according to the overall planting structure characteristics of the study area, and used this to crop the Sentinel-2A data of the same range. The test area should be selected to include the structural characteristics of various types of typical terrain in the study area to ensure that the selected area is more intuitive in judging the accuracy of the results. Therefore, we selected the northern winter wheat plains with extensive cultivation, the central region with mixed cropping, and the southern region with more seriously scattered crops as the test areas to analyze and compare the accuracy of model recognition (

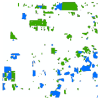

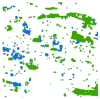

Figure 11).
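Cropping a large scene into fixed-size test tiles can be done as in the sketch below. It assumes that "size 256" means 256 × 256 pixels and that incomplete tiles at the image border are discarded; neither detail is stated in the text, and the function name and array shapes are hypothetical.

```python
import numpy as np

def tile_image(image, tile_size=256):
    """Split an (H, W, C) array into non-overlapping square tiles.

    Tiles that would extend past the image border are dropped.
    """
    height, width = image.shape[:2]
    tiles = []
    for top in range(0, height - tile_size + 1, tile_size):
        for left in range(0, width - tile_size + 1, tile_size):
            tiles.append(image[top:top + tile_size, left:left + tile_size])
    return tiles

# Made-up scene: 600 x 520 pixels with 8 feature channels.
scene = np.zeros((600, 520, 8), dtype=np.float32)
tiles = tile_image(scene)
```

To compare two sensors on the same ground extent, the tile footprint would be defined in map coordinates first and then converted to pixel windows for each sensor's resolution.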

Table 12 presents a comparison on the test areas of the GF-6 WFV remote sensing images using spectral feature enhancement combined with CNN, MLP, and MLC. The qualitative analysis of the recognition results showed that the spectral feature enhancement combined with CNN was able to extract winter wheat accurately. To verify the accuracy of the methods for winter wheat identification, Table 13 presents the results of the quantitative analysis. In the northern region, winter wheat is grown on a large scale, while rape is grown sporadically, so the recall and precision of rape are relatively low in this region. Moreover, because of the large area covered by winter wheat planting, the spatial resolution of GF-6 WFV makes ridges and roads inconspicuous, making it difficult to identify narrow roads, which reduces the extraction accuracy of winter wheat. The mixed planting phenomenon was obvious in the central test area, and winter wheat and rape were almost equally distributed in terms of area, which made the differences in the spectral features more obvious. The CNN approach resulted in an OA of 94.71% on average.

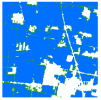

The comparison results of the various methods used on Sentinel-2A remote sensing images are presented in Table 14. The qualitative analysis plots in the table indicate that the enhanced spectral features combined with the CNN model can more accurately extract the classification of winter wheat and rape, and more dimensional feature information can be obtained through convolution to maximize the role of spectral feature enhancement. MLPs are not sensitive to feature information, so they can hardly reflect the role of spectral feature enhancement. MLC is mainly based on manual experience, cannot easily distinguish types with similar spectra in the image, and places extremely high demands on manual visual interpretation, which can result in incorrect winter wheat extraction results. Table 15 presents the recall, F1, and OA calculated for the quantitative analysis of the Sentinel-2A data extraction results. Table 16 presents the calculated IOUs of winter wheat and rape in the test area to further evaluate the extraction accuracy of the target crop’s planted area and shape. The results show that the combination of enhanced spectral features and CNN is better than the other two methods in terms of the evaluation indices, such as IOU and OA, for the recognition results of both winter wheat and rape. For the GF-6 WFV data, the average IOU of extracted winter wheat reached 77.70%, and that of winter wheat extracted from Sentinel-2A data reached 76.71%. This shows that the CNN method combined with spectral feature enhancement can successfully extract and calculate the winter wheat planting area in complex terrain.

Table 17 presents the results of the landscape fragmentation calculations for the two remote-sensing datasets. Notably, the differences between the two datasets in terms of the overall crop area are small. The Sentinel-2A data have higher spatial resolution and can better distinguish vegetation, weeds, and other types from winter wheat to a certain extent, making the overall crop area smaller than that of the GF-6 WFV data. Areas with a landscape fragmentation between 0 and 0.5000 on the GF-6 WFV data correspond to areas with a landscape fragmentation between 0 and 1.0000 on the Sentinel-2A data, and both belong to areas with high landscape integrity. Areas where the landscape fragmentation was 0.5000 or more in the GF-6 WFV data, or 1.0000 or more in the Sentinel-2A data, mostly consist of sporadic crops and more serious mixed cropping, and their overall plot types are more fragmented. The results show that in areas with high landscape integrity, most plain areas showed extensive winter wheat cultivation, and the crop plots were all relatively intact. However, the field ridges between cultivated fields and some roads are narrow, implying that individual pixels in the image may contain some spectral features of crops, which reduces the extraction accuracy of the model. In contrast, in areas with relatively high landscape fragmentation, where spectral differences between different crops are evident, or where background classes have larger proportions, the model classification is better. Consequently, the overall accuracy in these areas is somewhat improved compared to those with lesser landscape fragmentation.

5. Conclusions

In this study, a method for large-scale extraction of winter wheat by spectral characteristic enhancement combined with a convolutional neural network (CNN) is proposed. Firstly, due to the differences in harvesting time in different places, winter wheat cannot be identified very accurately at the time of maturity. Therefore, we analyzed the phenological characteristics of winter wheat, selected the best identification period for the experiment, and then selected two kinds of data, GF-6 WFV and Sentinel-2A, to meet the experimental requirements. Secondly, we selected Anhui Province as the study area, which has a large north-south difference and a typical Chinese agricultural landscape fragmentation pattern. We analyzed the effects of bands and vegetation indices on the extraction of winter wheat, and introduced red-edge band-related features to further increase the differentiation between winter wheat and other feature classes, which allows winter wheat information to be extracted more accurately given the strong vegetation diversity in the study area. Then, the higher resolution GF2 and UAV data were used together with field sampling, and the samples were cross-checked to ensure the accuracy of the sample library. The enhanced spectral characteristics were used as the dataset for the CNN to improve the extraction accuracy of winter wheat. The MLP and MLC methods were also used for comparison to demonstrate that the CNN combined with spectral characteristic enhancement performs better than the other models, and the average accuracy of winter wheat extraction reached 94.01% and 93.03% for GF-6 WFV and Sentinel-2A images, respectively. The average IOU of both images reached more than 85.00%; this demonstrates the effectiveness and universality of CNN for this method.

In the future, we will study the extraction ability of other models for winter wheat and attempt to use higher resolution remote sensing images as data to explore the potential of deep learning for crop extraction.