Abstract

Oriented object detection (OOD) can locate objects with arbitrary orientation in remote sensing images (RSIs) more accurately than horizontal object detection. The most commonly used bounding box regression (BBR) loss in OOD is smooth L1 loss, which presupposes that the spatial parameters are independent of one another. This independence is an ideal that is not achievable in practice. To avoid this problem, various IoU-based BBR losses have been widely used in OOD; however, their relationships with the IoU are approximately linear. Consequently, the gradient value, i.e., the learning intensity, cannot be dynamically adjusted with the IoU, which restricts the accuracy of object location. To handle this problem, a novel BBR loss, named smooth generalized intersection over union (GIoU) loss, is proposed. Its contributions are twofold. First, smooth GIoU loss employs more appropriate learning intensities in the different ranges of GIoU values to address the above problem, and its design scheme can be generalized to other IoU-based BBR losses. Second, the existing computational scheme of GIoU is modified to fit OOD. The ablation study of smooth GIoU loss validates the effectiveness of its design scheme. Comprehensive comparisons performed on two RSI datasets demonstrate that the proposed smooth GIoU loss is superior to other BBR losses adopted by existing OOD methods and can be generalized for various kinds of OOD methods.

1. Introduction

Oriented object detection (OOD) methods can locate objects with arbitrary orientation more accurately than horizontal object detection (HOD) in remote sensing images (RSIs) [1,2,3,4]. OOD has attracted much attention due to its wide applications in military and civilian fields [5,6,7,8,9,10], such as intelligent transportation, disaster rescue, military base reconnaissance, etc. [11,12,13,14,15,16,17].

With the rapid development of deep learning technology [18,19,20,21], OOD methods based on deep learning have made great progress in recent years [22,23,24,25]. According to whether OOD methods include a stage for generating region proposals or not, state-of-the-art (SOTA) OOD methods can be divided into two categories: one-stage [26,27,28,29,30,31,32,33] and two-stage methods [34,35,36,37,38,39,40,41,42,43]. Two-stage methods generate region proposals on the basis of many anchors and classify and regress them, whereas one-stage methods directly classify and regress the anchors. Two-stage methods usually have higher detection accuracy than one-stage methods, whereas one-stage methods usually have faster inference speed than two-stage methods. To the best of our knowledge, the mAP of one-stage and two-stage methods can reach 75.93% [44] and 82.40% [45] on the DOTA dataset (single scale training), respectively, and their inference speeds can reach 200 FPS [46] and 33 FPS [47], respectively. They are briefly described as follows.

The classical one-stage method RetinaNet [40] uses a fully convolutional network to directly predict the category and spatial location of each anchor; furthermore, a focal loss was proposed to assign greater weight to hard samples. To solve the mismatch between preset anchors and objects with large aspect ratios, an alignment convolution was introduced in S2ANet [41], which adaptively aligns the convolutional kernels with oriented bounding boxes. Label assignment strategies were improved in DAL [42] and Dynamic R-CNN [43]. DAL proposed a novel quantitative metric of matching degree to dynamically assign positive labels to high-quality anchors. This addressed the suboptimal localization caused by traditional label assignment strategies, in which labels are determined solely by the intersection over union (IoU), leading to an imbalance between classification and regression capability. In Dynamic R-CNN, the IoU threshold between the anchors and ground truth bounding boxes is adaptively adjusted.

The basic framework of many two-stage OOD methods is inherited from Faster R-CNN [34]. Generally speaking, the region proposal network (RPN) is first used to generate regions of interest (RoIs), and then the RoI pooling operation is employed to obtain deep features with a fixed size. Finally, the classification and bounding box regression (BBR) of the RoIs are implemented based on their deep features. To generate oriented RoIs, the early two-stage method [48] presets 54 anchors with different scales, aspect ratios and angles at each location of the feature map. However, this considerably increases the computational and memory costs. To address this problem, Ding et al. [49] proposed an RoI transformer to generate oriented RoIs from horizontal RoIs, which significantly reduced the number of preset anchors. To further reduce the computational costs and improve detection accuracy, the Oriented R-CNN [50] introduced an oriented RPN to directly generate oriented RoIs from the preset anchors.

Regardless of whether a one-stage or two-stage method is employed, smooth L1 loss [51], which optimizes the spatial parameters, is usually adopted for BBR. A precondition for the use of smooth L1 loss is that the spatial parameters are independent of one another; however, this ideal cannot be achieved in practice. A number of IoU-based BBR losses have been proposed, such as IoU loss [52] and PIoU loss [53], since the IoU is invariant to scale change and implicitly encodes the relationship between the spatial parameters during area calculation. After this, GIoU loss [54], DIoU loss [55], EIoU loss [56], etc., were proposed, because the IoU cannot handle the situation of two bounding boxes with no intersection. However, the relationship between the existing IoU-based BBR losses and the IoU is approximately linear; consequently, the gradient value, i.e., the learning intensity, cannot be dynamically adjusted with the IoU, which restricts the accuracy of object location.

To handle the aforementioned problems, a novel BBR loss, named smooth generalized intersection over union (GIoU) loss, is proposed for OOD in RSIs. First of all, the computational scheme of GIoU used for HOD is modified to fit OOD. Afterwards, a piecewise function, i.e., smooth GIoU loss, is proposed to adaptively adjust the gradient value with the GIoU value. Specifically, the gradient value of smooth GIoU loss is relatively constant if the GIoU value is small, so that training converges stably and quickly at this stage. Once the GIoU value exceeds the threshold, the absolute value of the gradient gradually decreases as the GIoU value increases; thus, the learning intensity is fine-tuned as the GIoU approaches one, which avoids training oscillation.

The contributions of this paper are as follows:

(1) A novel BBR loss, named smooth GIoU loss, is proposed for OOD in RSIs. The smooth GIoU loss can employ more appropriate learning intensities in the different ranges of the GIoU value. It is worth noting that the design scheme of smooth GIoU loss can be generalized to other IoU-based BBR losses, such as IoU loss [52], DIoU loss [55], EIoU loss [56], etc., regardless of whether it is OOD or HOD.

(2) The existing computational scheme of GIoU is modified to fit OOD.

(3) The experimental results on two RSI datasets demonstrate that the smooth GIoU loss is superior to other BBR losses and has good generalization capability for various kinds of OOD methods.

2. Materials and Methods

The proposed smooth GIoU loss is closely related to GIoU; therefore, the original computational scheme of GIoU and existing GIoU-based BBR losses are introduced in this section.

2.1. Related Works

2.1.1. Computational Scheme of GIoU for Horizontal Object Detection

The of bounding boxes A and B is formulated as:

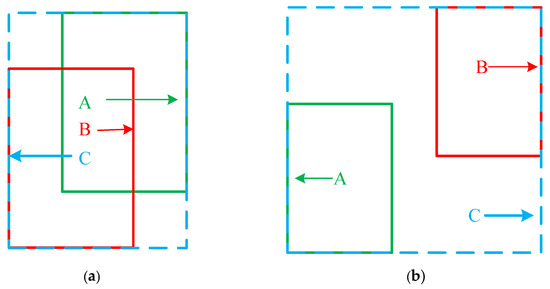

where and denote the intersection and the union area of A and B, respectively. As shown in Figure 1b, the IoU is always 0 when boxes A and B are non-overlapping, in other words, the IoU cannot measure the distance between them. The GIoU is proposed to address this problem, and its definition is as follows:

where C denotes the smallest enclosing rectangle (SER) of boxes A and B, as shown in Figure 1, and denotes the area of C. As shown in Equation (2), the GIoU is inversely proportional to the distance between A and B even if they are non-overlapping.

Figure 1.

Illustrations of (a) two intersecting and (b) non-overlapping bounding boxes. The box C denotes the SER of box A and B.
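The IoU and GIoU of two horizontal boxes can be illustrated with a minimal Python sketch. The function name `iou_giou_hbb` and the `(x1, y1, x2, y2)` corner format are assumptions made for illustration; the arithmetic follows the standard definitions of the IoU and the GIoU [54].

```python
def iou_giou_hbb(box_a, box_b):
    """Return (IoU, GIoU) of two horizontal boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)

    # Intersection rectangle; its area is zero when the boxes do not overlap.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = area_a + area_b - inter

    # Smallest enclosing rectangle C of the two boxes.
    area_c = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    iou = inter / union
    giou = iou - (area_c - union) / area_c
    return iou, giou


# Two non-overlapping unit boxes: the IoU stays at 0 while the GIoU
# keeps decreasing as the gap between the boxes grows.
near = iou_giou_hbb((0, 0, 1, 1), (2, 0, 3, 1))
far = iou_giou_hbb((0, 0, 1, 1), (4, 0, 5, 1))
```

In this example both pairs have an IoU of 0, but the GIoU of the farther pair is lower, which is the property that makes the GIoU usable for non-overlapping boxes.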

2.1.2. Existing GIoU-Based Bounding Box Regression Losses

Up to now, there are two types of GIoU-based BBR loss. One is the GIoU loss ($L_{GIoU}$), in the form of a linear function [54]:

$$L_{GIoU} = 1 - \mathrm{GIoU} \quad (3)$$

The other is the IGIoU loss [57] ($L_{IGIoU}$), which takes the form of a logarithmic function (Equation (4)).

Some experiments have demonstrated that the performance of $L_{IGIoU}$ is superior to that of $L_{GIoU}$, and $L_{IGIoU}$ is adopted as the baseline BBR loss of the proposed smooth GIoU loss (see Equation (8)).
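The linearity of the GIoU loss of [54], i.e., 1 − GIoU, can be checked with a short Python sketch. The helper names `giou_loss` and `numerical_gradient` are hypothetical and are used only for illustration; the gradient is estimated with a central finite difference.

```python
def giou_loss(giou):
    """Linear GIoU loss of [54]: 1 - GIoU."""
    return 1.0 - giou


def numerical_gradient(loss_fn, giou, eps=1e-6):
    """Central-difference estimate of d(loss)/d(GIoU)."""
    return (loss_fn(giou + eps) - loss_fn(giou - eps)) / (2.0 * eps)


# The gradient with respect to the GIoU is the same (-1) for a poor,
# a mediocre and a nearly perfect prediction.
gradients = [numerical_gradient(giou_loss, g) for g in (-0.5, 0.2, 0.9)]
```

Because the gradient does not change with the GIoU, the learning intensity is the same whether the predicted box is far from or close to the ground truth box.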

2.1.3. Other Important Works

The existing OOD methods are usually divided into two types, i.e., one-stage and two-stage OOD methods. Significant works (except those described in the Introduction) in the two kinds of methods and their limitations that motivate our method are given as follows.

(1) One-stage OOD methods. To improve the localization accuracy of objects with a high aspect ratio, Chen et al. [53] proposed a pixel level computational scheme of the IoU, named PIoU, and designed a PIoU loss for BBR. CFC-Net [58] proposed a polarization attention module to obtain the discriminative feature representation corresponding to classification and BBR tasks. Afterwards, a rotation anchor refinement module was used to refine the horizontal anchors for obtaining oriented anchors, and then a dynamic anchor learning scheme is employed to select the final anchors. DRN [59] proposed a feature selection module, in which the receptive field of neurons could be adaptively adjusted according to the shape and orientation of object; furthermore, the dynamic refinement head is proposed to refine the features in an object-aware manner. Oriented RepPoints [44] used adaptive points to represent the oriented objects, and proposed an adaptive point learning scheme to select representative samples for better training. Other important one-stage OOD methods include DARNet [60], CG-Net [61], FCOS-O [62], Double-Heads [63], etc.

(2) Two-stage OOD methods. AOPG [64] proposed a coarse location module to generate initial proposals, and then an RPN based on AlignConv was used to refine the initial proposals. Finally, the refined proposals were used for OOD. Gliding Vertex [65] jointly used eight spatial parameters, which are related to the oriented bounding box (OBB) and its SER, and an area ratio between the OBB and its SER to represent the OBB, and then the BBR of the above nine parameters was employed to precisely localize the OBB. DODet [66] proposed a novel five-parameter representation of the OBB to generate the oriented proposals, and then the spatial location output from the BBR branch was used to extract more accurate RoI features for classification, which can handle the feature shifting between the classification and BBR branches. Instead of an anchor-based RPN and NMS operation, RSADet [67] directly generated the candidate proposals from the attention map by taking into account the spatial distribution of remote sensing objects; furthermore, deformable convolution was adopted to obtain the features of the OBB more accurately. Other important two-stage OOD methods include ReDet [68], CenterMap-Net [69], MPFP-Net [70], PVR-FD [71], DPGN [72], QPDet [73], etc.

(3) Limitations that motivate our method. Regardless of whether a one-stage or two-stage OOD method is used, the smooth L1 loss is usually adopted for BBR. The precondition of using the smooth L1 loss is that the spatial parameters are independent from each other; however, this is not always true in a practical situation. IoU-based BBR losses can avoid the above problem. However, the relationship between the existing IoU-based BBR losses and the IoU is approximately linear; therefore, the learning intensity cannot be dynamically adjusted with the IoU, which restricts the accuracy of object location. To address the aforementioned problems, a novel smooth GIoU loss is proposed, which can adaptively adjust the learning intensity with the GIoU value. Furthermore, the design of smooth GIoU loss can be generalized to other IoU-based BBR losses.

2.2. Proposed Method

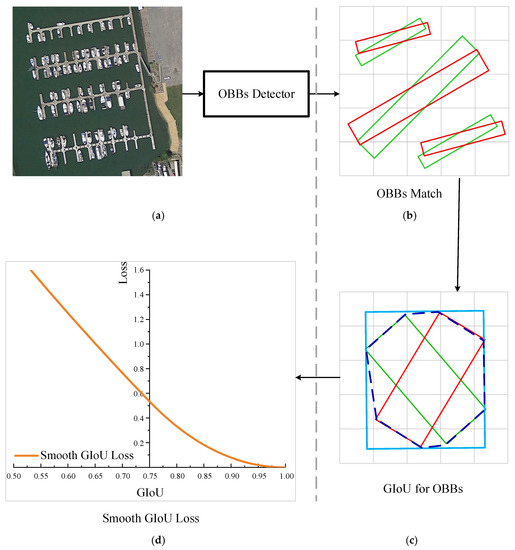

The flowchart of our method is shown in Figure 2. First of all, the oriented bounding boxes (OBBs) in RSI are predicted by the OBB detector. Secondly, all possible predicted and ground truth OBB pairs are matched if their IoUs exceed 0.5. Thirdly, the existing computational scheme of the GIoU of HBBs is modified for the computation of the GIoU of OBBs. Finally, the proposed smooth GIoU loss is calculated for the BBR of OBBs.

Figure 2.

Flowchart of our method. (a) Predicting the OBBs of an input RSI via an OBB detector, (b) all possible predicted (red) and ground truth (green) OBB pairs are matched according to their IoU, (c) calculating the GIoU of each OBB pair via the proposed computational scheme of GIoU, (d) calculating the proposed smooth GIoU loss for BBR.
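The matching step (b) can be sketched in Python as follows, assuming that the pairwise IoUs between predicted and ground truth OBBs have already been computed. The function name `match_pairs` and the matrix layout (rows are predictions, columns are ground truths) are assumptions made for illustration.

```python
import numpy as np


def match_pairs(iou_matrix, threshold=0.5):
    """Return all (prediction index, ground truth index) pairs whose IoU exceeds the threshold."""
    iou = np.asarray(iou_matrix, dtype=float)
    pred_idx, gt_idx = np.nonzero(iou > threshold)
    return list(zip(pred_idx.tolist(), gt_idx.tolist()))


# Three predictions and two ground truth boxes (IoU values are made up).
pairs = match_pairs([[0.70, 0.10],
                     [0.20, 0.55],
                     [0.30, 0.40]])
```

Here the third prediction is not matched to any ground truth box, so it does not contribute to the BBR loss in this sketch.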

2.2.1. Computational Scheme of GIoU for Oriented Object Detection

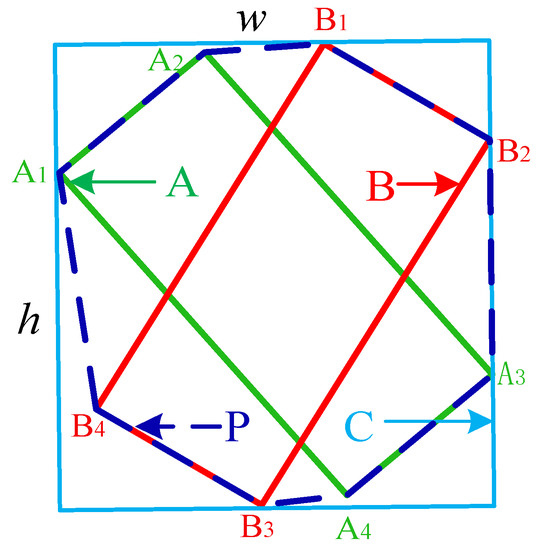

Equation (2) is still used for the computation of the GIoU in OOD; however, the computation of Area(C) in Equation (2) is quite different from the one in HOD. The details can be found in Figure 3 and Algorithm 1.

| Algorithm 1: Computational scheme of Area(C) |
| --- |
| Input: Vertices of OBB A: VA = {A1, A2, A3, A4}; vertices of OBB B: VB = {B1, B2, B3, B4} |
| Output: Area(C) |
| 1. Initialize V = ∅ |
| 2. for i = 1 to 4 do |
| 3. if Ai is not enclosed by OBB B then |
| 4. V = append(V, Ai) |
| 5. end if |
| 6. if Bi is not enclosed by OBB A then |
| 7. V = append(V, Bi) |
| 8. end if |
| 9. end for |
| 10. Sort all the vertices in V in a clockwise or counterclockwise direction, V = {v1, …, vn}, n ≤ 8. Connect the sorted vertices to obtain P, whose n edges are E = {e1, …, en}. |
| 11. for j = 1 to n do |
| 12. for k = 1 to n do |
| 13. d(j, k) = dist_p2l(vj, ek) // smallest distance between vj and ek |
| 14. end for |
| 15. end for |
| 16. w = max d(j, k) |
| 17. (jm, km) = argmax d(j, k) |
| 18. Draw n parallel lines L = {l1, …, ln} through v1, …, vn, which are all perpendicular to ekm. |
| 19. for j = 1 to n do |
| 20. for k = 1 to n do |
| 21. ld(j, k) = dist_l2l(lj, lk) // distance between lj and lk |
| 22. end for |
| 23. end for |
| 24. h = max ld(j, k) |
| 25. Area(C) = w × h |
| 26. return Area(C) |

Figure 3.

Illustration of the SER of two OBBs. P and C denote the smallest enclosing polygon and SER of OBB A and B, respectively. w and h denote the width and height of C, respectively. A1, A2, A3 and A4 are the vertices of OBB A. B1, B2, B3 and B4 are the vertices of OBB B.
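The steps of Algorithm 1 can be sketched in Python with NumPy as follows. This is a minimal illustration rather than the authors' implementation, and it rests on several assumptions: each OBB is given as four vertices in consecutive order, vertices lying on the boundary of the other box are kept and duplicates are merged, the vertices are sorted by angle around their centroid, and dist_p2l is taken as the distance to the infinite line through an edge. The names `enclosing_rect_area` and `_strictly_inside` are hypothetical.

```python
import numpy as np


def _strictly_inside(point, quad, eps=1e-9):
    """True if `point` lies strictly inside the convex quadrilateral `quad` (4x2, vertices in order)."""
    edges = np.roll(quad, -1, axis=0) - quad
    rel = point - quad
    cross = edges[:, 0] * rel[:, 1] - edges[:, 1] * rel[:, 0]
    return bool(np.all(cross > eps) or np.all(cross < -eps))


def enclosing_rect_area(box_a, box_b):
    """Area(C) of two OBBs, each given as four (x, y) vertices in consecutive order."""
    a = np.asarray(box_a, dtype=float)
    b = np.asarray(box_b, dtype=float)

    # Steps 1-9: keep the vertices that are not enclosed by the other OBB.
    keep = [p for p in a if not _strictly_inside(p, b)]
    keep += [p for p in b if not _strictly_inside(p, a)]
    v = np.unique(np.round(np.array(keep), 9), axis=0)  # merge shared vertices

    # Step 10: sort the vertices counterclockwise around their centroid.
    c = v.mean(axis=0)
    v = v[np.argsort(np.arctan2(v[:, 1] - c[1], v[:, 0] - c[0]))]
    direction = np.roll(v, -1, axis=0) - v               # edge k runs from v[k] to v[k+1]
    direction /= np.linalg.norm(direction, axis=1)[:, None]

    # Steps 11-17: d[j, k] is the distance from vertex j to the line through edge k.
    rel = v[:, None, :] - v[None, :, :]
    d = np.abs(rel[..., 0] * direction[None, :, 1] - rel[..., 1] * direction[None, :, 0])
    w = d.max()
    k_m = np.unravel_index(d.argmax(), d.shape)[1]

    # Steps 18-24: lines through the vertices, perpendicular to edge k_m, are
    # separated by the difference of the vertex projections onto that edge.
    proj = v @ direction[k_m]
    h = proj.max() - proj.min()

    # Step 25
    return float(w * h)


# Two unit squares separated by a gap of one unit along the x axis.
square_a = [(0, 0), (1, 0), (1, 1), (0, 1)]
square_b = [(2, 0), (3, 0), (3, 1), (2, 1)]
area_c = enclosing_rect_area(square_a, square_b)
```

For these two squares the enclosing rectangle spans 3 × 1 units. The projection trick in the last step replaces the explicit double loop over line pairs in Algorithm 1, since the largest distance between parallel lines equals the range of the projections.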

2.2.2. Smooth GIoU Loss

Formulation of Smooth GIoU Loss

The core idea of smooth L1 loss is that the convergence is stable and fast when the deviations between the predicted and ground truth values are greater than the threshold. Conversely, the convergence gradually slows down when the deviations fall below the threshold. Inspired by the above idea, the absolute value of the gradient of the smooth GIoU loss should be relatively large and constant when the GIoU is small, i.e., the predicted box can quickly approach the ground truth box at this stage. Accordingly, the absolute value of the gradient of the smooth GIoU loss should gradually decrease when the GIoU is large, i.e., the speed with which the predicted box approaches the ground truth box gradually decreases. In summary, the purpose of smooth GIoU loss is not only to avoid training oscillation, but also to maintain a moderate learning speed, so that the localization accuracy can be improved without sacrificing the learning speed. Following the above scheme, the smooth GIoU loss is formulated as a piecewise function (Equation (5)) that selects between the GIoU_L1 loss (Equation (6)) and the GIoU_L2 loss (Equation (7)).

The baseline GIoU loss (Equation (8)) follows the formulation of the IGIoU loss [57], and k and β are hyper-parameters, which are quantitatively analyzed in Section 3.2.

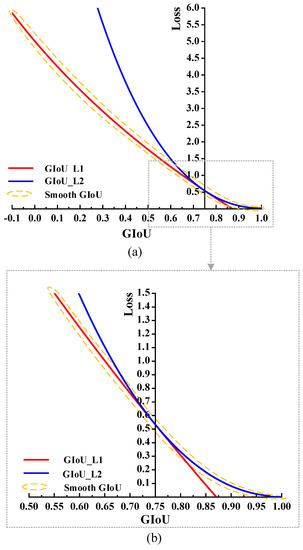

As shown in Figure 4a, the gradient value of GIoU_L1 loss is relatively constant, whereas the absolute value of the gradient of GIoU_L2 loss is large when the GIoU is small but rapidly decreases as the GIoU increases. Consequently, as shown in Figure 4b, the smooth GIoU loss selects GIoU_L1 loss for the BBR when the GIoU is below the threshold, so that the predicted box approaches the ground truth box at a relatively constant rate; the smooth GIoU loss switches to GIoU_L2 loss once the GIoU exceeds the threshold, and the predicted box slowly approaches the ground truth box at this stage.

Figure 4.

The curves of GIoU_L1 loss (red solid line), GIoU_L2 loss (blue solid line) and smooth GIoU loss (yellow dashed line). (a) The curves of three losses within [−0.1, 1.0]. (b) Enlarging curves of three losses within [0.5, 1.0]. k and β in the three losses are set to 5 and 0.75, respectively (see Section 3.2).
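The smooth L1 loss that inspires this design can be sketched in Python as follows. The sketch shows the widely used form with a threshold parameter, here called `beta`; this `beta` is the smooth L1 threshold and should not be confused with the hyper-parameter β of smooth GIoU loss. The function names are hypothetical.

```python
def smooth_l1(x, beta=1.0):
    """Smooth L1 loss of a deviation x: quadratic below the threshold, linear above it."""
    ax = abs(x)
    if ax < beta:
        return 0.5 * ax * ax / beta
    return ax - 0.5 * beta


def smooth_l1_grad(x, beta=1.0):
    """Derivative of smooth_l1 with respect to x."""
    if abs(x) < beta:
        return x / beta              # shrinks as the deviation shrinks
    return 1.0 if x > 0 else -1.0    # constant for large deviations


# Large deviations are corrected at a constant rate; small deviations
# receive a gradient that fades out, which avoids oscillation near the optimum.
large_dev_grads = [smooth_l1_grad(x) for x in (4.0, 2.0)]
small_dev_grads = [smooth_l1_grad(x) for x in (0.5, 0.1)]
```

The smooth GIoU loss follows the same pattern with the roles expressed in terms of the GIoU: a relatively constant gradient while the GIoU is small, and a decreasing gradient once the GIoU is large.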

Proof of the Smooth Characteristic of Smooth GIoU Loss

The smooth characteristic of smooth GIoU loss requires that the loss be differentiable and continuous [34], and the proof consists of two parts. First, the GIoU_L1 and GIoU_L2 losses should each be differentiable. Second, the two losses should be continuous and have matching derivatives at the threshold where the smooth GIoU loss switches between them. The details of the proof are as follows:

(1) Proof that GIoU_L1 and GIoU_L2 losses are differentiable:

According to Equation (9) (definition of derivative), GIoU_L1 loss is proven to be differentiable.

The proof that GIoU_L2 loss is differentiable can be obtained in a similar way.

(2) Proof that GIoU_L1 and GIoU_L2 losses are differentiable and continuous at the threshold:

The threshold value of the GIoU is substituted into Equations (6) and (7), which gives Equation (11).

Equation (11) demonstrates that the function values of the GIoU_L1 and GIoU_L2 losses are continuous at the threshold. Subsequently, the derivatives of the GIoU_L1 and GIoU_L2 losses with respect to the GIoU are taken, and the threshold value is substituted into their derivative formulations, which gives Equation (12).

Equation (12) demonstrates that the gradient values of the GIoU_L1 and GIoU_L2 losses are continuous at the threshold.
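The two conditions checked in part (2), matching function values and matching derivatives at the switch point, can also be verified numerically for any piecewise loss. The following Python sketch uses the quadratic and linear branches of smooth L1 loss as a stand-in for the GIoU_L1 and GIoU_L2 branches; the function name `is_smooth_at` and the tolerances are assumptions made for illustration.

```python
def is_smooth_at(branch_lo, branch_hi, t, eps=1e-6, tol=1e-4):
    """Check that two branches agree in value and in slope at the switch point t."""
    value_gap = abs(branch_lo(t) - branch_hi(t))
    slope_lo = (branch_lo(t + eps) - branch_lo(t - eps)) / (2.0 * eps)
    slope_hi = (branch_hi(t + eps) - branch_hi(t - eps)) / (2.0 * eps)
    return value_gap < tol and abs(slope_lo - slope_hi) < tol


def quadratic(x):
    """Branch of smooth L1 loss used for small deviations."""
    return 0.5 * x * x


def linear(x):
    """Branch of smooth L1 loss used for large deviations."""
    return x - 0.5


def shifted_linear(x):
    """A linear branch without the offset, which breaks continuity."""
    return x


smooth_ok = is_smooth_at(quadratic, linear, t=1.0)
smooth_broken = is_smooth_at(quadratic, shifted_linear, t=1.0)
```

The first pair passes both checks at the switch point, whereas the second pair has matching slopes but a jump in value and is therefore rejected.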

3. Results

3.1. Experimental Setup

3.1.1. Datasets

The DOTA dataset [45] includes 2806 RSIs ranging in size from 800 × 800 to 4000 × 4000 pixels and contains 188,282 instances of 15 categories: bridge (BR), harbor (HA), ship (SH), plane (PL), helicopter (HC), small vehicle (SV), large vehicle (LV), baseball diamond (BD), ground track field (GTF), tennis court (TC), basketball court (BC), soccer-ball field (SBF), roundabout (RA), swimming pool (SP) and storage tank (ST). Both training and validation sets were used for training; the testing results are obtained from DOTA’s evaluation server. In the single-scale experiment, each image is split into a number of sub-images with 1024 × 1024 pixels by using a step of 824 pixels. In the multi-scale experiment, each image is resized using three scales {0.5, 1.0, 1.5}, and then split into a number of sub-images with 1024 × 1024 pixels by using a step of 512 pixels.
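The splitting of a large image into 1024 × 1024 sub-images can be sketched in Python as follows. The window size and step are taken from the text; the function name `window_starts` and the handling of the image border (the last window is shifted back so that it ends at the border) are assumptions made for illustration.

```python
def window_starts(length, window=1024, step=824):
    """Start offsets of the sliding windows along one image axis."""
    if length <= window:
        return [0]  # a single window covers the whole axis
    starts = list(range(0, length - window, step))
    starts.append(length - window)  # last window ends exactly at the border
    return starts


# Single-scale setting on a 4000-pixel axis (overlap of 200 pixels).
single_scale = window_starts(4000)
# Multi-scale setting uses a step of 512 pixels (overlap of 512 pixels).
multi_scale = window_starts(2048, step=512)
# The sub-images of a 4000 x 4000 image are all combinations of x and y offsets.
tiles = [(x, y) for y in window_starts(4000) for x in window_starts(4000)]
```

With a step of 824 pixels, adjacent sub-images overlap by 200 pixels, which reduces the chance that an object is cut by a sub-image border in every sub-image that contains it.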

The DIOR-R dataset [64] includes 23,463 RSIs with a size of 800 × 800 pixels and contains 192,518 instances of 20 categories: airplane (APL), airport (APO), baseball field (BF), basketball court (BC), bridge (BR), chimney (CH), expressway service area (ESA), expressway toll station (ETS), dam (DAM), golf field (GF), ground track field (GTF), harbor (HA), overpass (OP), ship (SH), stadium (STA), storage tank (STO), tennis court (TC), train station (TS), vehicle (VE) and windmill (WM). Both training and validation sets were used for training, and the rest is used for testing.

3.1.2. Implementation Details

Training Details: Stochastic gradient descent (SGD) was used for optimization, where the momentum and weight decay were set to 0.9 and 0.0001 [64], respectively. The batch size and initial learning rate were the same as those of the baseline method. The learning rate decays at the 8th (24th) and 11th (33rd) epochs if the total number of epochs is 12 (36) [44], and it becomes 1/10 of its previous value after each decay [64]. All of the training samples were augmented by random horizontal and vertical flipping [64].
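The step decay of the learning rate can be sketched in Python as follows. The milestones and the decay factor of 1/10 are taken from the text; the function name `step_lr`, the base learning rate of 0.01 and the convention that epochs are counted from 0 with the decay applied from the milestone epoch onward are assumptions made for illustration.

```python
def step_lr(epoch, base_lr, milestones=(8, 11), gamma=0.1):
    """Learning rate used in `epoch` (counted from 0) under step decay."""
    n_decays = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** n_decays


# 12-epoch schedule with a hypothetical base learning rate of 0.01.
schedule_12 = [step_lr(e, 0.01) for e in range(12)]
# 36-epoch schedule with milestones at epochs 24 and 33.
schedule_36 = [step_lr(e, 0.01, milestones=(24, 33)) for e in range(36)]
```

Under these assumptions, the 12-epoch schedule trains eight epochs at the base rate, three epochs at 1/10 of it and the final epoch at 1/100 of it.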

Evaluation Metric: The mean average precision (mAP) was adopted as the evaluation metric in this paper, which is consistent with PASCAL VOC [74].

Environment Setting: The experiments were implemented on the MMDetection platform [75] in the PyTorch framework and were run on a workstation with an Intel(R) Xeon(R) CPU E5-2630 v4 @ 2.20GHz (a total of 10 × 2 cores), 256 GB of memory and eight NVIDIA GeForce RTX 2080Ti GPUs (a total of 11 × 8 GB of GPU memory).

3.2. Parameters Analysis

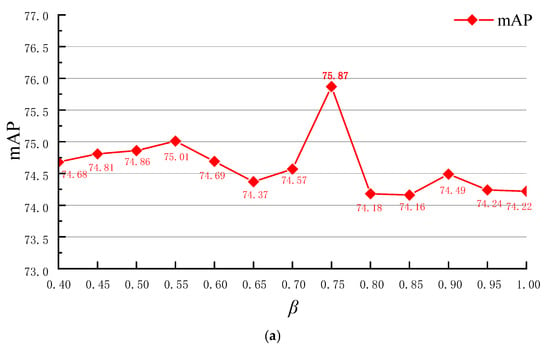

As shown in Equation (5), the smooth GIoU loss contains two hyper-parameters, k and β. Quantitative analyses of k and β were conducted on the DOTA dataset to evaluate their impact on the overall performance, where S2ANet with an R-50-FPN backbone (R-50-FPN denotes ResNet-50-FPN [76]) is adopted as the baseline method. First of all, k was quantitatively analyzed with β fixed at 0.5. As shown in Figure 5a, the mAP reaches a maximum of 75.18% when k = 5. Subsequently, β was quantitatively analyzed with k fixed at 5. As shown in Figure 5b, the mAP reaches a maximum of 75.87% when β = 0.75. Finally, k and β were set to 5 and 0.75, respectively.

Figure 5.

Quantitative parameter analyses of β and k on the DOTA dataset. (a) The mAP of our method with different values of β, (b) the mAP of our method with different values of k.

3.3. Comparisons with Other Bounding Box Regression Losses and Ablation Study

To validate the superiority of smooth GIoU loss, it was quantitatively compared with other BBR losses used by OOD methods on the DOTA and DIOR-R datasets, where S2ANet [41] is adopted as the baseline method. The BBR losses for comparison include PIoU loss [53], smooth L1 loss [51], IoU loss [52], smooth IoU loss, GIoU loss [54], baseline GIoU loss [57], GIoU_L1 loss and GIoU_L2 loss, where the smooth IoU loss is a modified version of the IoU loss following the definition of smooth GIoU loss, i.e., the baseline GIoU loss defined in Equation (8) is replaced with the IoU loss. The formulations of GIoU_L1 loss, GIoU_L2 loss and baseline GIoU loss are presented in Equations (6)–(8), respectively. The comparisons with baseline GIoU loss, GIoU_L1 loss and GIoU_L2 loss constitute the ablation study of smooth GIoU loss.

As shown in Table 1, smooth GIoU loss exhibits the best performance on both the DOTA and DIOR-R datasets, which validates its superiority. The smooth IoU loss exhibits a suboptimal performance, but is superior to IoU loss, which demonstrates that the core idea of the proposed smooth GIoU loss is also valid for IoU loss. In other words, our core idea has good generalization capability and can be applied to other IoU-based BBR losses. In addition, the superiority over baseline GIoU loss, GIoU_L1 Loss and GIoU_L2 Loss validates the effectiveness of our scheme design.

Table 1.

Quantitative comparisons between smooth GIoU loss and other BBR losses used by OOD methods on the DOTA and DIOR-R datasets, where S2ANet [41] is adopted as the baseline method. The comparisons of GIoU_L1 and GIoU_L2 losses indicate the ablation study of smooth GIoU loss. Bold denotes the best performance.

3.4. Evaluation of Generalization Capability

To evaluate the generalization capability of smooth GIoU loss, quantitative comparisons were conducted on the DOTA and DIOR-R datasets, where six representative OOD methods (RoI Transformer [49], AOPG [64], Oriented R-CNN [50], S2ANet [41], RetinaNet-O [40] and Gliding Vertex [65]) were selected as baseline methods; they include one-stage and two-stage methods as well as anchor-based and anchor-free methods. The original BBR losses of the six OOD methods were replaced with smooth GIoU loss, and then the mAPs of the original and replaced versions were compared. As shown in Table 2, the mAP of the replaced versions increased by 0.62%~1.75% on the DOTA dataset and by 0.46%~2.10% on the DIOR-R dataset, which demonstrates that the smooth GIoU loss is effective for the six OOD methods, i.e., the smooth GIoU loss has good generalization capability for various kinds of OOD methods.

Table 2.

Quantitative comparisons between the six representative OOD methods and their revised version in which the original BBR losses are replaced with the smooth GIoU loss.

3.5. Comprehensive Comparisons

To fully validate the effectiveness of smooth GIoU loss, it was separately embedded into a representative one-stage and two-stage baseline method, and then quantitatively compared with other one-stage and two-stage methods on the DOTA and DIOR-R datasets. S2ANet [41] and AOPG [64] were adopted as the one-stage and two-stage baseline methods, respectively, and the embedded methods are denoted as ‘S2ANet + Smooth GIoU’ and ‘AOPG + Smooth GIoU’, respectively.

Results on the DOTA dataset: As shown in Table 3, the S2ANet + Smooth GIoU (epochs = 36) achieved the highest mAP among the one-stage methods with single-scale training, an increase of 0.22% compared with Oriented RepPoints (epochs = 40), which achieved the best results among the other one-stage OOD methods. The S2ANet + Smooth GIoU (epochs = 12) obtained an mAP of 75.87%, an increase of 1.75% compared with S2ANet, which achieved the best results among the other one-stage methods with 12 epochs. The AOPG + Smooth GIoU (epochs = 12) achieved an mAP of 76.19%, an increase of 0.32% compared with Oriented R-CNN (epochs = 12), which achieved the best results among the other two-stage OOD methods. The S2ANet + Smooth GIoU and AOPG + Smooth GIoU (epochs = 36) obtained mAPs of 80.61% and 81.03%, respectively, in the multi-scale experiments, which is a quite competitive performance on the DOTA dataset. Furthermore, our method is the best in 14 of the 15 categories in both the one-stage and two-stage comparisons. In summary, the comprehensive performance of our method is optimal on the DOTA dataset.

Table 3.

Comparison of existing OOD methods on the DOTA dataset. The proposed smooth GIoU loss is embedded in a representative one-stage method (S2ANet [41]) and two-stage method (AOPG [64]) for comparison. The detection results of each category are reported to better demonstrate where the performance gains come from. Blue and red bold fonts denote the best results for each category in one-stage and two-stage methods, respectively (the same applies below).

Results on the DIOR-R dataset: As shown in Table 4, the S2ANet + Smooth GIoU (epochs = 36) achieved the highest mAP among the one-stage methods, an increase of 0.64% compared with Oriented RepPoints (epochs = 40), which achieved the best results among the other one-stage methods. The S2ANet + Smooth GIoU (epochs = 12) obtained an mAP of 63.90%, an increase of 1.00% compared with S2ANet, which achieved the best results among the other one-stage methods with 12 epochs. The AOPG + Smooth GIoU (epochs = 12) obtained an mAP of 66.51%, an increase of 1.41% compared with DODet (epochs = 12), which achieved the best results among the other two-stage methods. The AOPG + Smooth GIoU (epochs = 36) obtained an mAP of 69.37%, which is a quite competitive performance on the DIOR-R dataset. Furthermore, our methods are the best in 15 (16) of the 20 categories in the one-stage (two-stage) comparisons. In summary, the comprehensive performance of our methods is optimal on the DIOR-R dataset.

Table 4.

Comparisons with the existing OOD methods on the DIOR-R dataset.

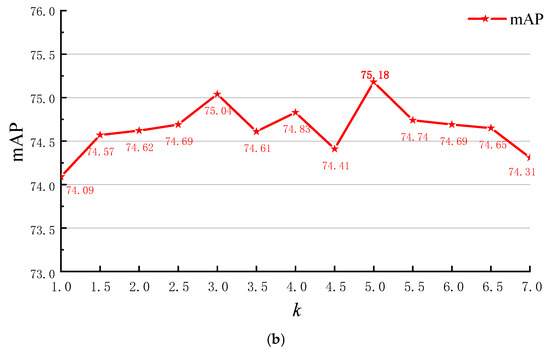

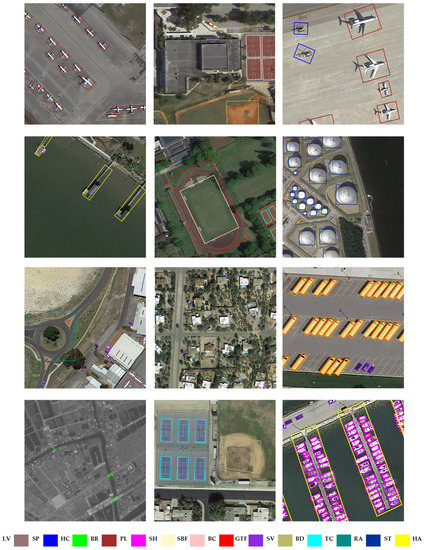

The visualizations of some detection results of S2ANet + Smooth GIoU (epochs = 12) on the DOTA and DIOR-R datasets are shown in Figure 6 and Figure 7, respectively.

Figure 6.

Visualizations of some of the detection results of S2ANet + Smooth GIoU (epochs = 12) on the DOTA dataset. OBBs with different colors indicate different categories.

Figure 7.

Visualizations of some of the detection results of S2ANet + Smooth GIoU (epochs = 12) on the DIOR-R dataset. OBBs with different colors indicate different categories.

In summary, the above comparisons on the DOTA and DIOR-R datasets can fully demonstrate the effectiveness of smooth GIoU loss.

4. Discussion

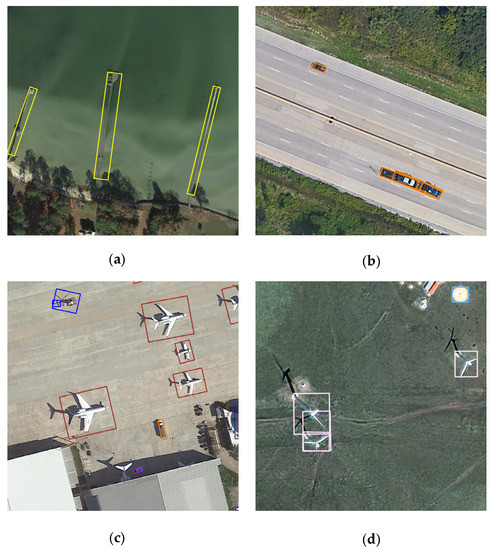

4.1. Analysis of False Positive Results

As shown in Figure 8, our method gives false positive results in some cases. The main reasons are as follows:

(1) Similar background. The background regions have similar characteristics to the foreground objects in some cases; consequently, the object detection will be disturbed by the background. As shown in Figure 8a, the harbor has a similar color to the water and trees; therefore, the harbor cannot be completely enclosed by the OBBs.

(2) Dense objects. Foreground objects in the same category are usually similar in appearance; thus, the object detection will be disturbed by adjacent objects in the same category. As shown in Figure 8b, three vehicles are very close to each other and have similar appearances; consequently, two vehicles are enclosed by a single OBB.

(3) Shadow. The shadow of a foreground object usually has similar characteristics to the object itself; therefore, the shadow will be falsely identified as part of the object. As shown in Figure 8c,d, the shadows of the helicopter and the windmill are also enclosed by the OBBs.

Figure 8.

Visualization of some false positive results on two RSI datasets. (a,c) are selected from the DOTA dataset, (b,d) are selected from the DIOR-R dataset.

4.2. Analysis of Running Time and Detection Accuracy after Expanding GPU Memory

Most of the training settings of our method, such as the number of GPUs, the batch size, the optimization algorithm, etc., are the same as those of the baseline methods for fair comparison. Using more GPUs, i.e., expanding the GPU memory, can accelerate training and testing; therefore, it is necessary to analyze the changes in running time and detection accuracy after expanding the GPU memory. To this end, a comparison between baseline methods with different GPU memories is conducted in terms of training time, testing time and mAP.

As shown in Table 5, the training and testing times of the two baseline methods are clearly reduced by expanding the GPU memory, because the parallel computation capability is improved by using more GPUs. However, the mAP slightly decreased after expanding the GPU memory. A possible reason is that the randomness of the gradient descent direction is weakened by using more GPUs (i.e., increasing the batch size); therefore, the deep learning model more easily converges to a local optimal solution [77].

Table 5.

Comparisons between baseline methods with different GPU running memory in terms of training time, testing time and mAP (%). The memory of a single GPU (NVIDIA GeForce RTX 2080Ti) in our workstation is 11 GB. The numbers of GPUs used by S2ANet and AOPG are four [41] and one [64], respectively, i.e., the GPU memories used by S2ANet and AOPG are 44 GB and 11 GB, respectively. The total GPU memory of our workstation is 88 GB.

5. Conclusions

Most of the IoU-based BBR losses for OOD in RSIs cannot dynamically adjust the learning intensity with the IoU, which restricts the accuracy of object location; therefore, a novel BBR loss, named smooth GIoU loss, is proposed in this paper to handle this problem. First of all, the existing computational scheme of GIoU is modified to fit OOD. Afterwards, a smooth GIoU loss in the form of a piecewise function is proposed to adaptively adjust the gradient value with the GIoU value. The ablation study of smooth GIoU loss validates the effectiveness of its design. Quantitative comparisons with other BBR losses demonstrate that the performance of the smooth GIoU loss is superior to other BBR losses adopted by existing OOD methods. Quantitative comparisons of six representative OOD methods, which include one-stage and two-stage methods and anchor-based and anchor-free methods, show that replacing their original BBR losses with smooth GIoU loss can improve their performance, which indicates that the smooth GIoU loss can be generalized for various kinds of OOD methods. Comprehensive comparisons conducted on the DOTA and DIOR-R datasets further validate the effectiveness of smooth GIoU loss.

The core idea of smooth GIoU loss is also applied to the existing IoU loss, and the resulting loss is named smooth IoU loss. The experimental results demonstrate that it also performs well. The core idea of smooth GIoU loss could likewise be generalized to other IoU-based BBR losses in future work.
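To make the notion of a learning intensity that varies with the GIoU value concrete, the following minimal sketch contrasts a linear GIoU loss with a piecewise alternative. It rests on several assumptions: the boxes are horizontal `(x1, y1, x2, y2)` boxes rather than oriented ones, the GIoU follows the standard definition of Rezatofighi et al., and `piecewise_giou_loss` with its threshold `beta` is a hypothetical smooth-L1-style form chosen only for illustration. It is not the smooth GIoU loss defined in this paper.

```python
def giou(box_a, box_b):
    """GIoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    # Intersection rectangle (zero if the boxes do not overlap).
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = area_a + area_b - inter
    # Smallest axis-aligned box enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    return inter / union - (enclose - union) / enclose


def linear_giou_grad(g):
    """d(1 - GIoU)/dGIoU: constant, so the learning intensity never changes."""
    return -1.0


def piecewise_giou_loss(g, beta=0.5):
    """Hypothetical smooth-L1-style loss applied to x = 1 - GIoU (assumption)."""
    x = 1.0 - g
    return 0.5 * x * x / beta if x < beta else x - 0.5 * beta


def piecewise_giou_grad(g, beta=0.5):
    """Gradient of the hypothetical loss with respect to GIoU."""
    x = 1.0 - g
    return -x / beta if x < beta else -1.0


for g in (-0.5, 0.0, 0.5, 0.9):
    print(g, linear_giou_grad(g), piecewise_giou_grad(g))
```

In this sketch the linear loss has the same gradient at every GIoU value, whereas the piecewise form changes its gradient magnitude once the GIoU crosses the threshold. The same construction can be applied to any IoU-like quantity in place of the GIoU.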

Author Contributions

Conceptualization, X.Q.; formal analysis, X.Q.; funding acquisition, X.Q.; methodology, X.Q. and N.Z.; project administration, W.W.; resources, W.W.; software, N.Z.; supervision, W.W.; validation, W.W.; writing—original draft, N.Z.; writing—review and editing, X.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under grant no. 62076223.

Data Availability Statement

The DOTA and DIOR-R datasets are available at the following URLs: https://captain-whu.github.io/DOTA/dataset.html (accessed on 25 December 2022) and https://gcheng-nwpu.github.io/#Datasets (accessed on 25 December 2022), respectively.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yao, X.; Shen, H.; Feng, X.; Cheng, G.; Han, J. R2IPoints: Pursuing Rotation-Insensitive Point Representation for Aerial Object Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Cheng, G.; Cai, L.; Lang, C.; Yao, X.; Chen, J.; Guo, L.; Han, J. SPNet: Siamese-prototype network for few-shot remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11.

- Wu, X.; Hong, D.; Chanussot, J. Convolutional neural networks for multimodal remote sensing data classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–10.

- Hong, D.; Yokoya, N.; Chanussot, J.; Zhu, X.X. An augmented linear mixing model to address spectral variability for hyperspectral unmixing. IEEE Trans. Image Process. 2018, 28, 1923–1938.

- Zhang, L.; Zhang, J.; Wei, W.; Zhang, Y. Learning discriminative compact representation for hyperspectral imagery classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8276–8289.

- Cheng, G.; Han, J.; Zhou, P.; Xu, D. Learning rotation-invariant and fisher discriminative convolutional neural networks for object detection. IEEE Trans. Image Process. 2018, 28, 265–278.

- Zhu, Q.; Guo, X.; Deng, W.; Guan, Q.; Zhong, Y.; Zhang, L.; Li, D. Land-use/land-cover change detection based on a Siamese global learning framework for high spatial resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 63–78.

- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph convolutional networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5966–5978.

- Wu, X.; Hong, D.; Chanussot, J. UIU-Net: U-Net in U-Net for Infrared Small Object Detection. IEEE Trans. Image Process. 2022, 32, 364–376.

- Hong, D.; Gao, L.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. More diverse means better: Multimodal deep learning meets remote-sensing imagery classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4340–4354.

- Zhang, L.; Zhang, Y.; Yan, H.; Gao, Y.; Wei, W. Salient object detection in hyperspectral imagery using multi-scale spectral-spatial gradient. Neurocomputing 2018, 291, 215–225.

- Liao, W.; Bellens, R.; Pizurica, A.; Philips, W.; Pi, Y. Classification of hyperspectral data over urban areas using directional morphological profiles and semi-supervised feature extraction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1177–1190.

- Gao, L.; Zhao, B.; Jia, X.; Liao, W.; Zhang, B. Optimized kernel minimum noise fraction transformation for hyperspectral image classification. Remote Sens. 2017, 9, 548.

- Du, L.; You, X.; Li, K.; Meng, L.; Cheng, G.; Xiong, L.; Wang, G. Multi-modal deep learning for landform recognition. ISPRS J. Photogramm. Remote Sens. 2019, 158, 63–75.

- Zhang, L.; Shi, Z.; Wu, J. A hierarchical oil tank detector with deep surrounding features for high-resolution optical satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4895–4909.

- Stankov, K.; He, D.-C. Detection of buildings in multispectral very high spatial resolution images using the percentage occupancy hit-or-miss transform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4069–4080.

- Han, X.; Zhong, Y.; Zhang, L. An efficient and robust integrated geospatial object detection framework for high spatial resolution remote sensing imagery. Remote Sens. 2017, 9, 666.

- Qian, X.; Zeng, Y.; Wang, W.; Zhang, Q. Co-saliency Detection Guided by Group Weakly Supervised Learning. IEEE Trans. Multimed. 2022.

- Qian, X.; Huo, Y.; Cheng, G.; Yao, X.; Li, K.; Ren, H.; Wang, W. Incorporating the Completeness and Difficulty of Proposals Into Weakly Supervised Object Detection in Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1902–1911.

- Qian, X.; Li, J.; Cao, J.; Wu, Y.; Wang, W. Micro-cracks detection of solar cells surface via combining short-term and long-term deep features. Neural Netw. 2020, 127, 132–140.

- Zhu, Q.; Deng, W.; Zheng, Z.; Zhong, Y.; Guan, Q.; Lin, W.; Zhang, L.; Li, D. A spectral-spatial-dependent global learning framework for insufficient and imbalanced hyperspectral image classification. IEEE Trans. Cybern. 2021, 52, 11709–11723.

- Zhang, D.; Meng, D.; Han, J. Co-saliency detection via a self-paced multiple-instance learning framework. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 865–878.

- Han, J.; Zhang, D.; Hu, X.; Guo, L.; Ren, J.; Wu, F. Background prior-based salient object detection via deep reconstruction residual. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 1309–1321.

- Han, J.; Yao, X.; Cheng, G.; Feng, X.; Xu, D. P-CNN: Part-based convolutional neural networks for fine-grained visual categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 44, 579–590.

- Han, J.; Ji, X.; Hu, X.; Zhu, D.; Li, K.; Jiang, X.; Cui, G.; Guo, L.; Liu, T. Representing and retrieving video shots in human-centric brain imaging space. IEEE Trans. Image Process. 2013, 22, 2723–2736.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In European Conference on Computer Vision; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Liu, W.; Ma, L.; Wang, J. Detection of multiclass objects in optical remote sensing images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 791–795.

- Chen, S.; Zhan, R.; Zhang, J. Geospatial object detection in remote sensing imagery based on multiscale single-shot detector with activated semantics. Remote Sens. 2018, 10, 820.

- Tang, T.; Zhou, S.; Deng, Z.; Lei, L.; Zou, H. Arbitrary-oriented vehicle detection in aerial imagery with single convolutional neural networks. Remote Sens. 2017, 9, 1170.

- Tayara, H.; Chong, K.T. Object detection in very high-resolution aerial images using one-stage densely connected feature pyramid network. Sensors 2018, 18, 3341.

- Chen, Z.; Zhang, T.; Ouyang, C. End-to-end airplane detection using transfer learning in remote sensing images. Remote Sens. 2018, 10, 139.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. Adv. Neural Inf. Process. Syst. 2016, 29, 379–387.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Li, K.; Cheng, G.; Bu, S.; You, X. Rotation-insensitive and context-augmented object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2017, 56, 2337–2348.

- Zhong, Y.; Han, X.; Zhang, L. Multi-class geospatial object detection based on a position-sensitive balancing framework for high spatial resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2018, 138, 281–294.

- Chen, C.; Gong, W.; Chen, Y.; Li, W. Object detection in remote sensing images based on a scene-contextual feature pyramid network. Remote Sens. 2019, 11, 339.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Han, J.; Ding, J.; Li, J.; Xia, G.-S. Align deep features for oriented object detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11.

- Ming, Q.; Zhou, Z.; Miao, L.; Zhang, H.; Li, L. Dynamic anchor learning for arbitrary-oriented object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 2355–2363.

- Zhang, H.; Chang, H.; Ma, B. Dynamic R-CNN: Towards high quality object detection via dynamic training. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020.

- Li, W.; Chen, Y.; Hu, K. Oriented reppoints for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Xia, G.-S.; Bai, X.; Ding, J. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Mehta, R.; Ozturk, C. Object detection at 200 frames per second. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops; Springer: Berlin/Heidelberg, Germany, 2018.

- Zhou, X.; Koltun, V.; Krähenbühl, P. Probabilistic two-stage detection. arXiv 2021, arXiv:2103.07461.

- Ma, J.; Shao, W.; Ye, H.; Wang, L.; Wang, H.; Zheng, Y.; Xue, X. Arbitrary-oriented scene text detection via rotation proposals. IEEE Trans. Multimed. 2018, 20, 3111–3122.

- Ding, J.; Xue, N.; Long, Y. Learning roi transformer for oriented object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

- Xie, X.; Cheng, G.; Wang, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Zhou, D.; Fang, J.; Song, X.; Guan, C.; Yin, J.; Dai, Y.; Yang, R. Iou loss for 2d/3d object detection. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Québec City, QC, Canada, 16–19 September 2019.

- Chen, Z.; Chen, K.; Lin, W. Piou loss: Towards accurate oriented object detection in complex environments. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Rezatofighi, H.; Tsoi, N.; Gwak, J. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Zheng, Z.; Wang, P.; Liu, W. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Zhang, Y.-F.; Ren, W.; Zhang, Z. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- Qian, X.; Lin, S.; Cheng, G. Object detection in remote sensing images based on improved bounding box regression and multi-level features fusion. Remote Sens. 2020, 12, 143.

- Ming, Q.; Miao, L.; Zhou, Z. CFC-Net: A critical feature capturing network for arbitrary-oriented object detection in remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

- Pan, X.; Ren, Y.; Sheng, K. Dynamic refinement network for oriented and densely packed object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Zhang, F.; Wang, X.; Zhou, S. DARDet: A dense anchor-free rotated object detector in aerial images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Wei, Z.; Liang, D.; Zhang, D. Learning calibrated-guidance for object detection in aerial images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2721–2733.

- Tian, Z.; Chu, X.; Wang, X.; Wei, X.; Shen, C. Fully Convolutional One-Stage 3D Object Detection on LiDAR Range Images. arXiv 2022, arXiv:2205.13764.

- Wu, Y.; Chen, Y.; Yuan, L. Rethinking classification and localization for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Cheng, G.; Wang, J.; Li, K. Anchor-free oriented proposal generator for object detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

- Xu, Y.; Fu, M.; Wang, Q. Gliding vertex on the horizontal bounding box for multi-oriented object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 1452–1459.

- Cheng, G.; Yao, Y.; Li, S. Dual-aligned oriented detector. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

- Yu, D.; Ji, S. A new spatial-oriented object detection framework for remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

- Han, J.; Ding, J.; Xue, N. Redet: A rotation-equivariant detector for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Wang, J.; Yang, W.; Li, H.-C. Learning center probability map for detecting objects in aerial images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4307–4323.

- Shamsolmoali, P.; Chanussot, J.; Zareapoor, M. Multipatch feature pyramid network for weakly supervised object detection in optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

- Miao, S.; Cheng, G.; Li, Q. Precise Vertex Regression and Feature Decoupling for Oriented Object Detection. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022.

- Li, Q.; Cheng, G.; Miao, S. Dynamic Proposal Generation for Oriented Object Detection in Aerial Images. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022.

- Yao, Y.; Cheng, G.; Wang, G. On Improving Bounding Box Representations for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2022.

- Everingham, M.; Van Gool, L.; Williams, C.K. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J. MMDetection: Open mmlab detection toolbox and benchmark. arXiv 2019, arXiv:1906.07155.

- Shafiq, M.; Gu, Z. Deep residual learning for image recognition: A survey. Appl. Sci. 2022, 12, 8972.

- Li, Z.; Wang, W.; Li, H. Bevformer: Learning bird’s-eye-view representation from multi-camera images via spatiotemporal transformers. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).