Polarization Orientation Method Based on Remote Sensing Image in Cloudy Weather

Abstract

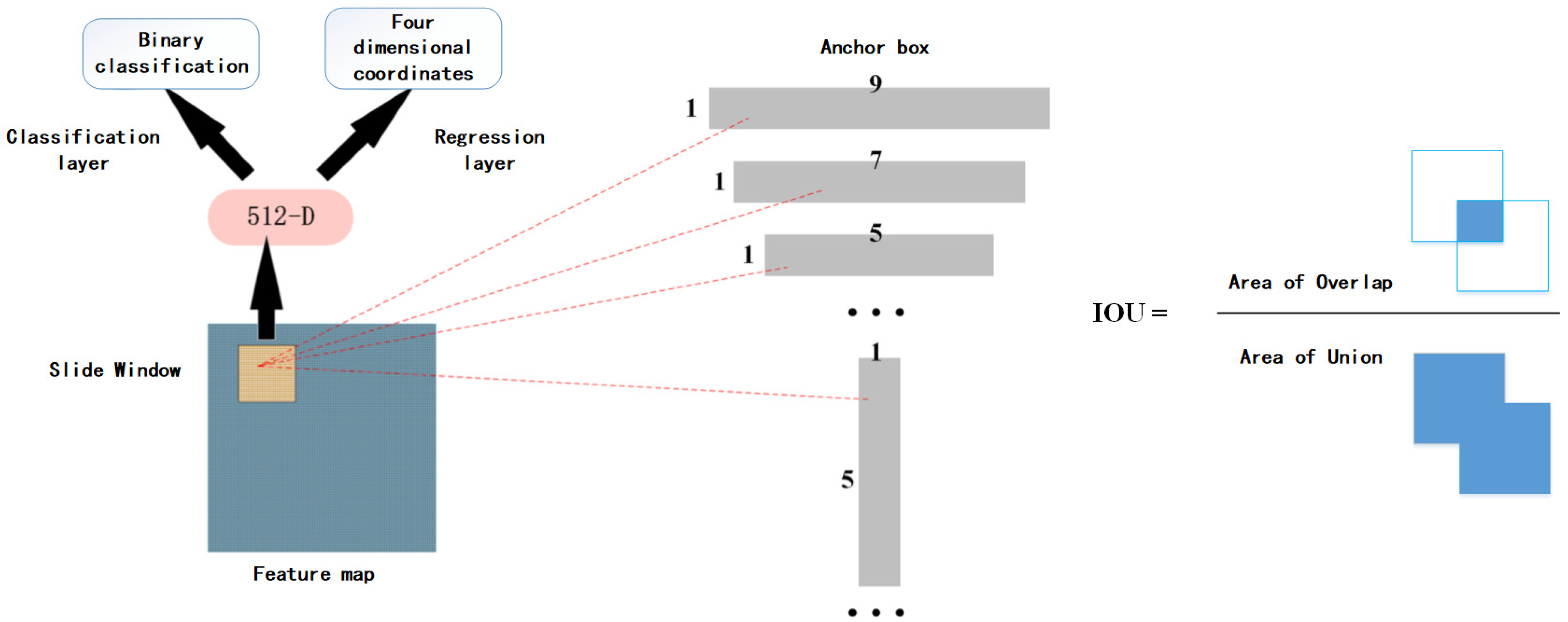

1. Introduction

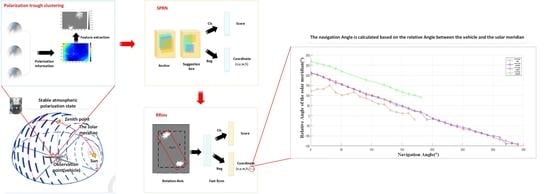

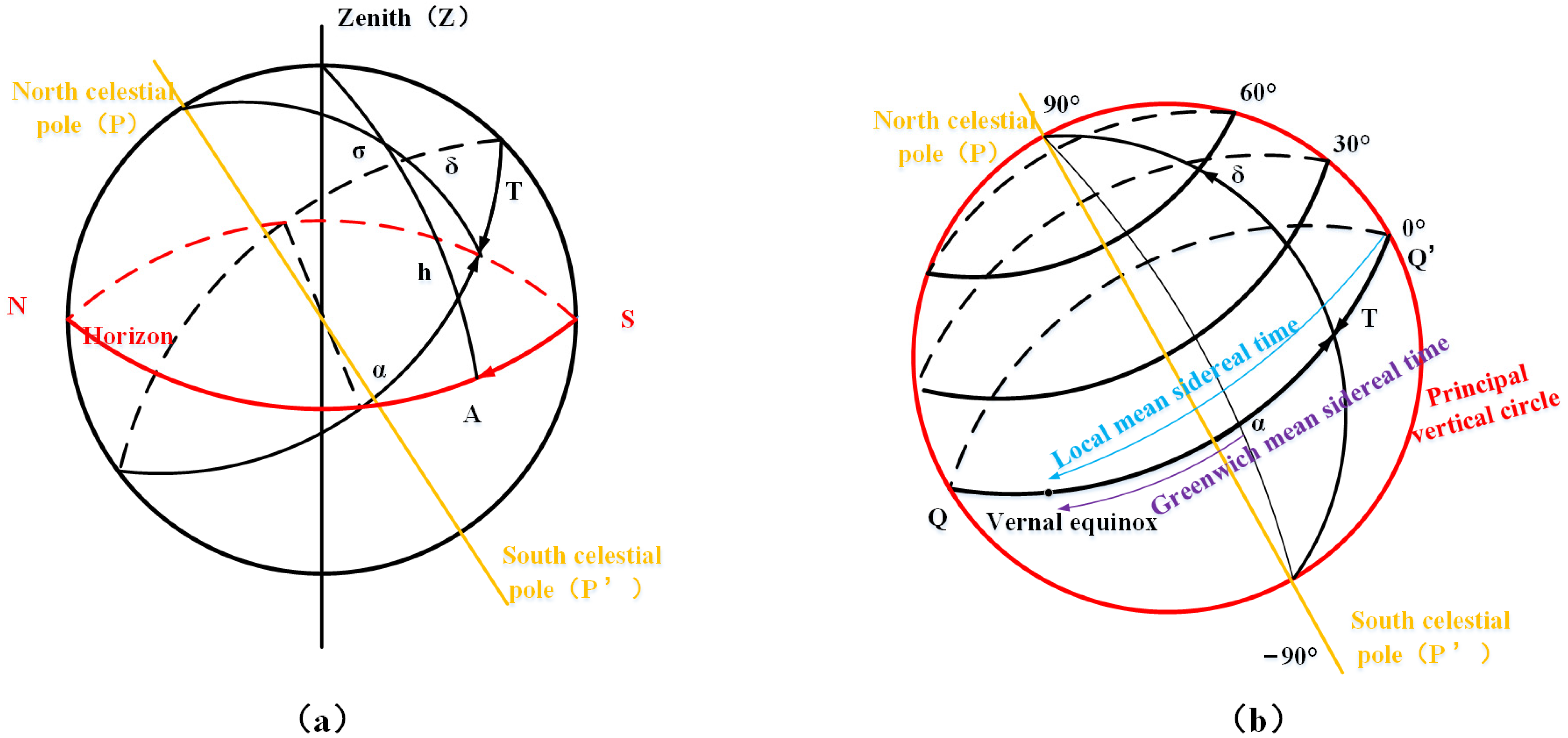

2. Polarization Navigation Algorithm

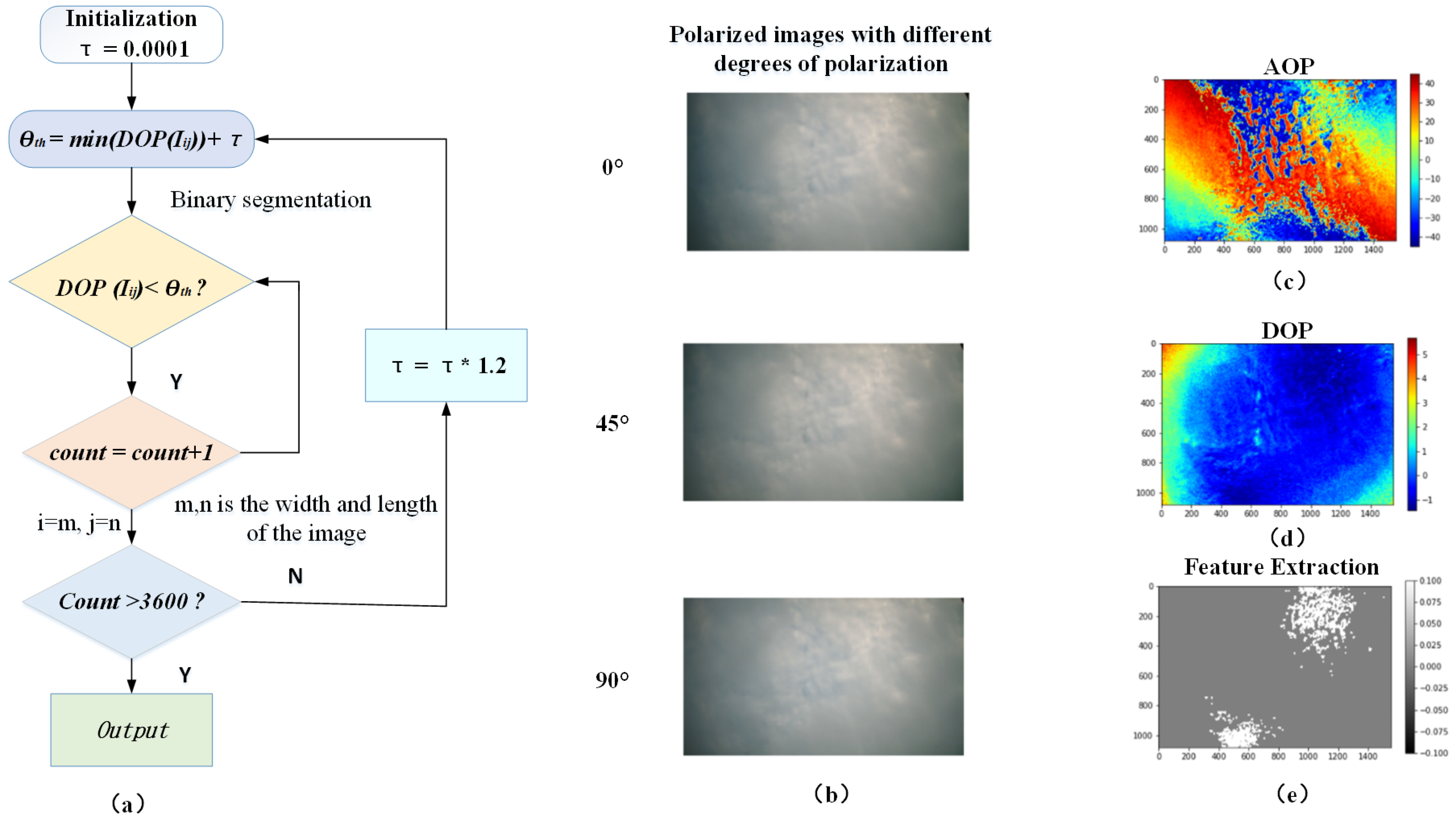

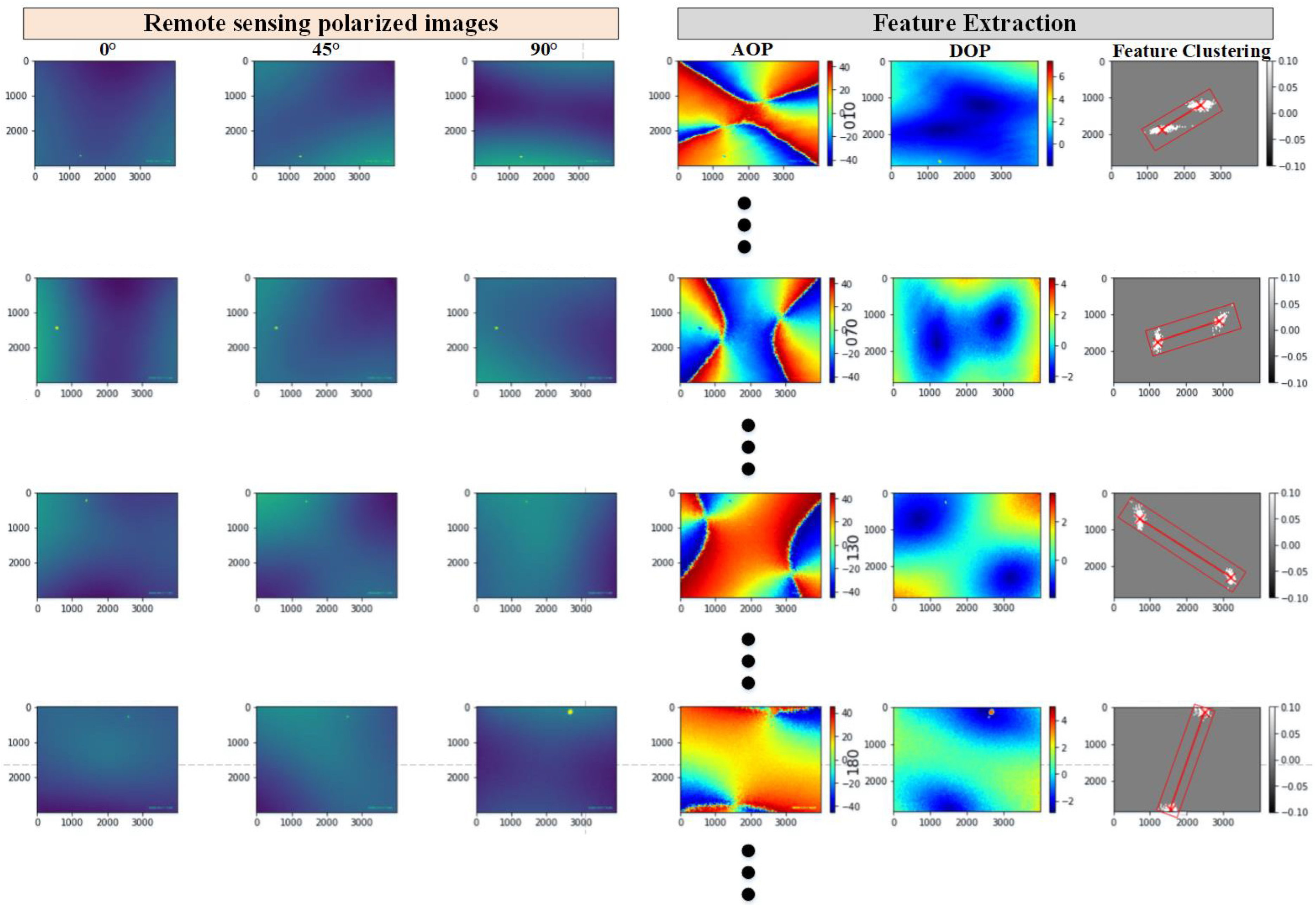

2.1. Image Description of Polarization Mode

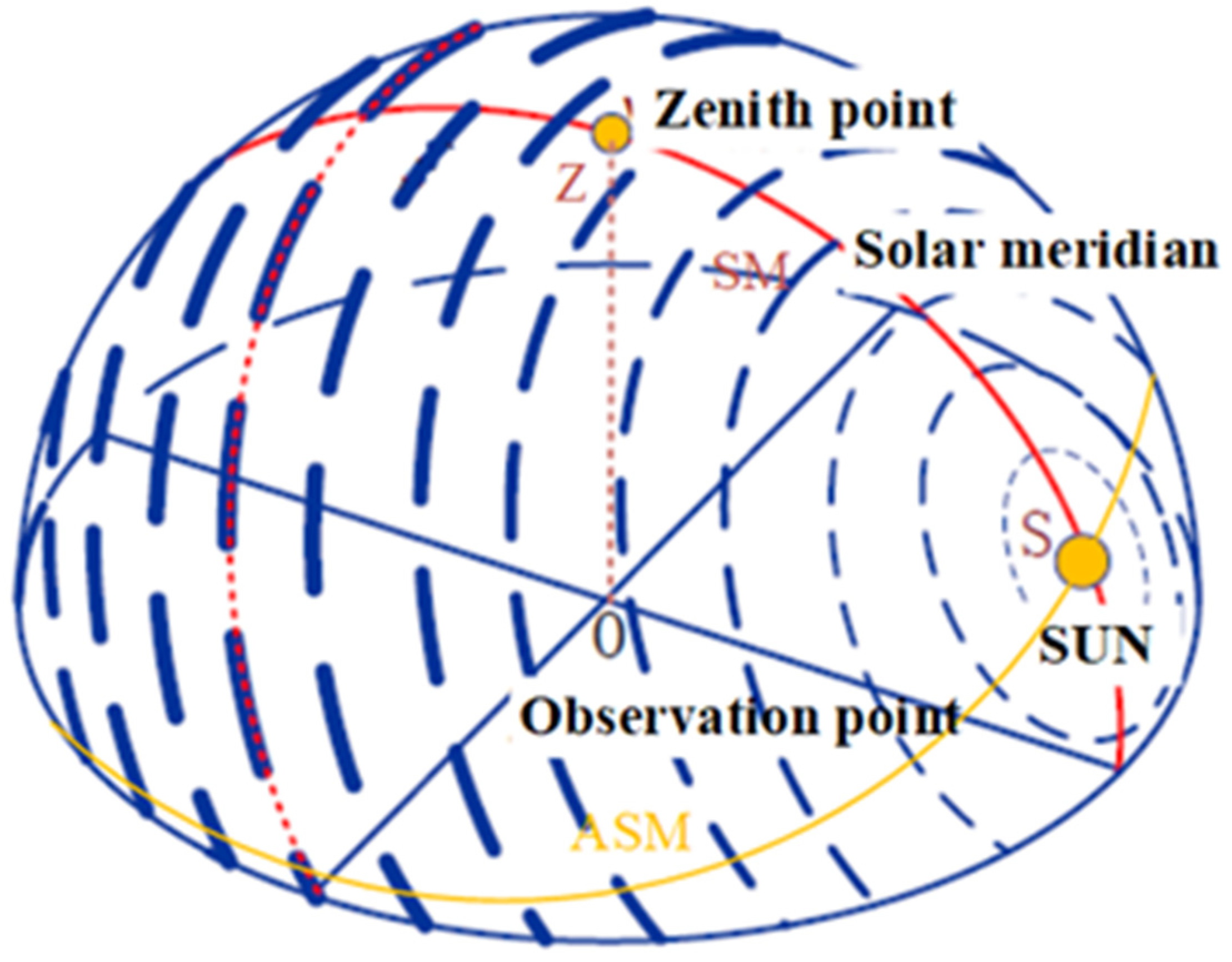

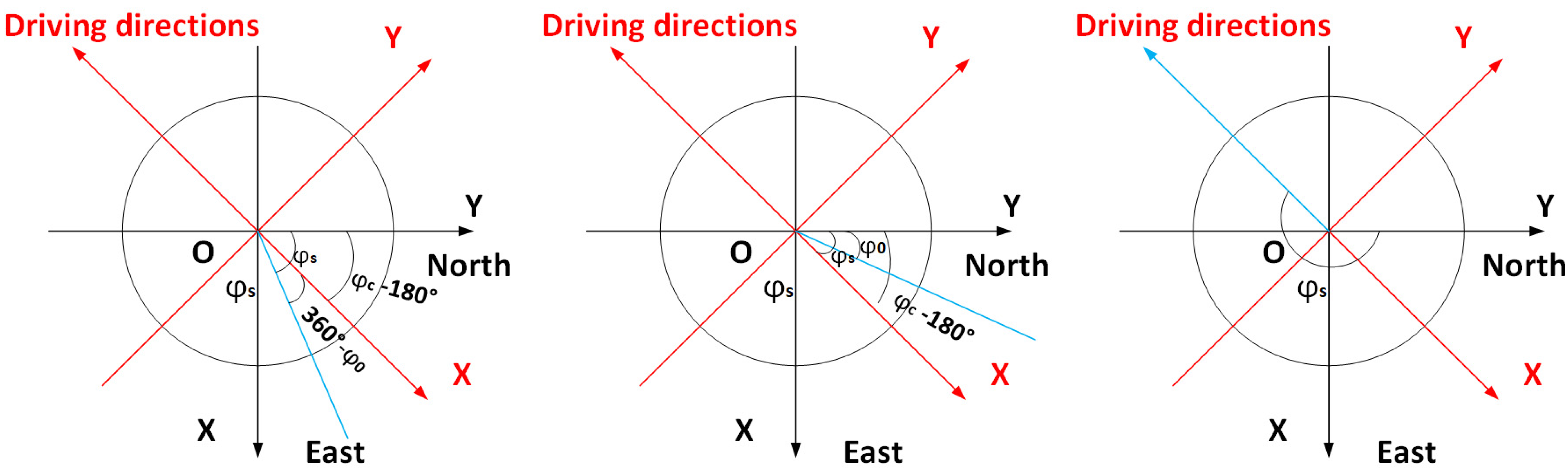

2.2. Solar Azimuth Acquisition in the Carrier Coordinate System

2.3. Solar Azimuth Acquisition in the Navigation Coordinate System

2.4. Course Angle Calculation

3. Experiment and Discussion

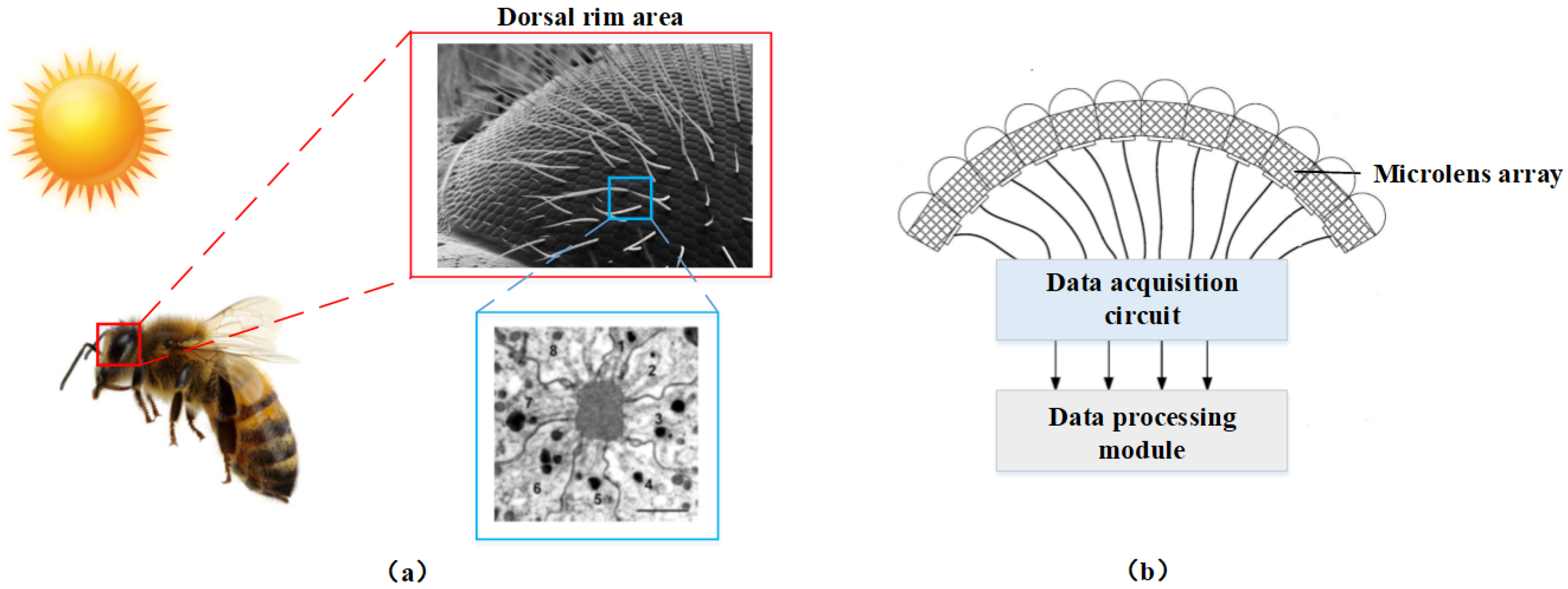

3.1. Measurement Platform Based on Bionic Compound Eye

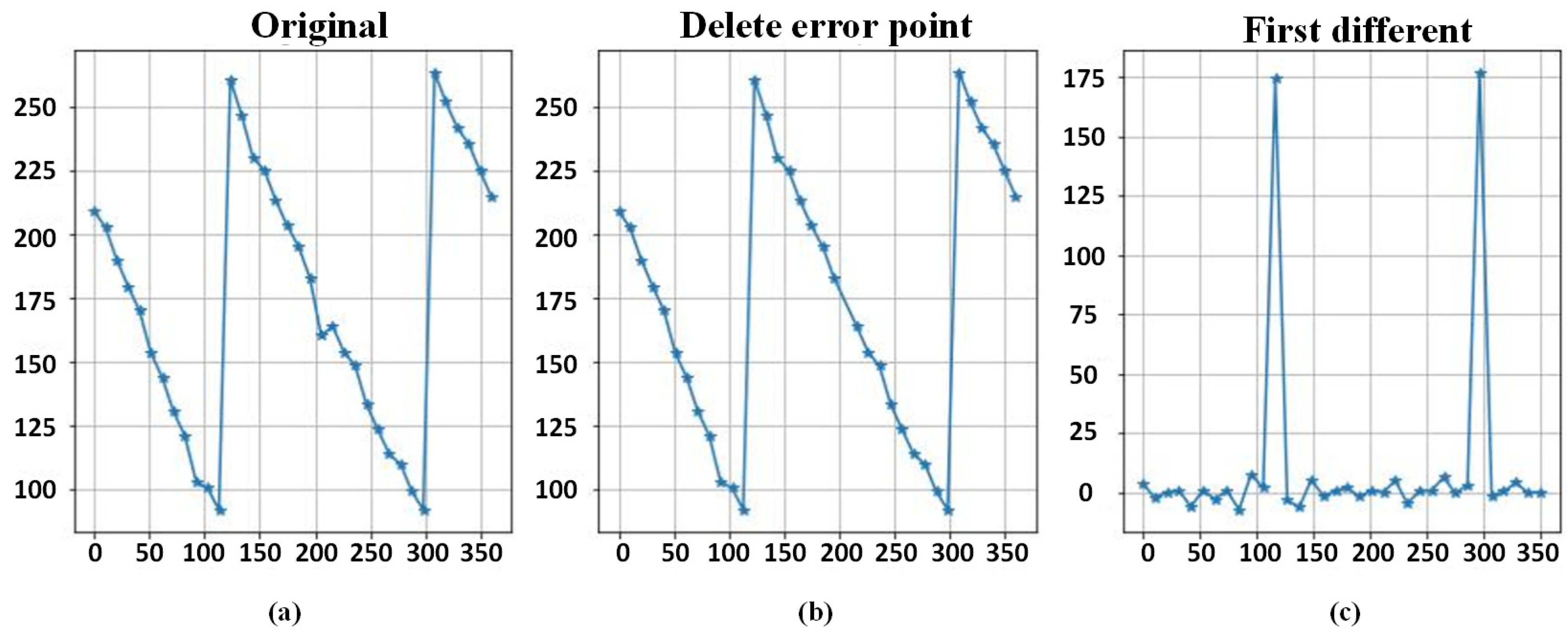

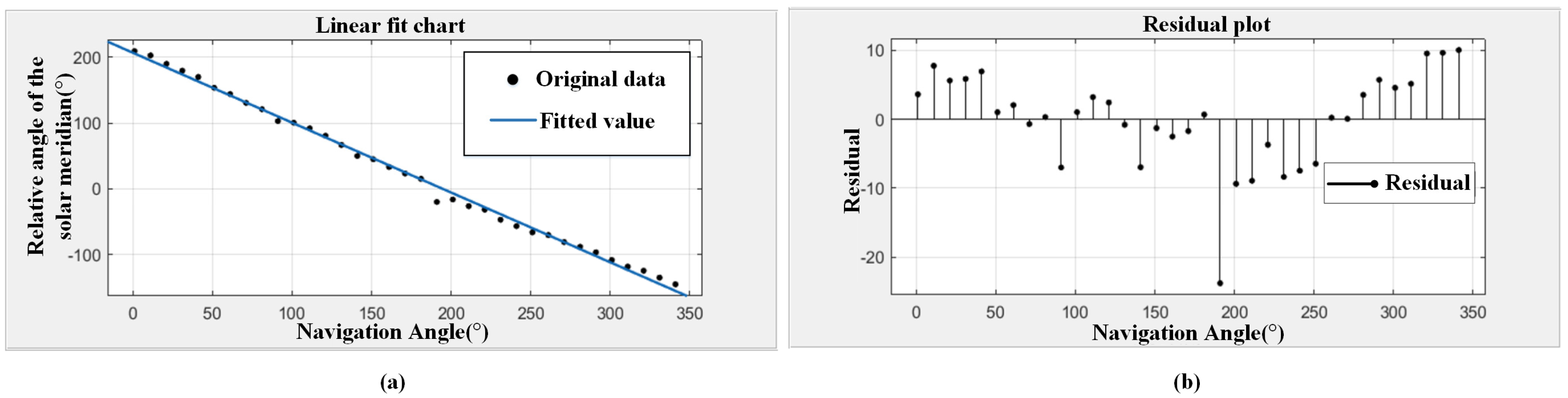

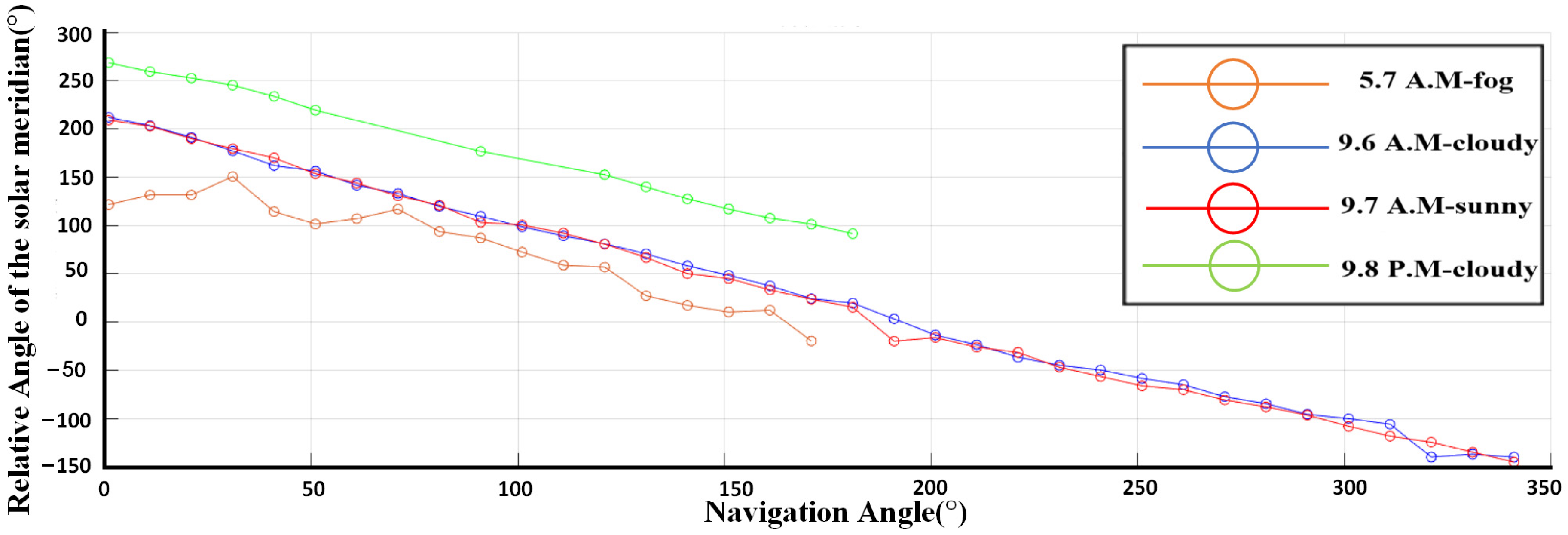

3.2. Measurement Test and Data Analysis

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Date | Total Number of Images | Size (pixels) | Horizontal/Vertical Resolution |
|---|---|---|---|
| March–May | 460 | 3000 × 4000 | 72 dpi |
| June–July | 1080 | 1920 × 1080 | 96 dpi |
| August–September | 2060 | 1920 × 1080 | 96 dpi |

| Navigation Angle (°) | Relative Angle of the Solar Meridian (°) | Navigation Angle (°) | Relative Angle of the Solar Meridian (°) |
|---|---|---|---|
| 0 | 209.17 | 180 | 195.28 |
| 10 | 202.69 | 190 | 182.89 |
| 20 | 189.94 | 200 | 160.21 |
| 30 | 179.58 | 210 | 164.00 |
| 40 | 170.05 | 220 | 153.83 |
| 50 | 153.53 | 230 | 148.45 |
| 60 | 143.96 | 240 | 133.18 |
| 70 | 130.60 | 250 | 123.46 |
| 80 | 121.00 | 260 | 113.86 |
| 90 | 103.07 | 270 | 109.94 |
| 100 | 100.49 | 280 | 99.17 |
| 110 | 92.07 | 290 | 92.03 |
| 120 | 260.67 | 300 | 263.60 |
| 130 | 246.84 | 310 | 251.84 |
| 140 | 230.06 | 320 | 241.81 |
| 150 | 225.14 | 330 | 235.58 |
| 160 | 213.30 | 340 | 225.07 |
| 170 | 203.48 | 350 | 214.87 |

| Time | K | RMSE | R² |
|---|---|---|---|
| 7 September 2022 | −1.061 | 6.984 | 0.9968 |
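The K, RMSE and R² columns read like the parameters of a straight-line fit between the navigation angle and the relative angle of the solar meridian tabulated above. The following is a minimal sketch of how such a fit could be computed from those tabulated values. It rests on two assumptions that the surviving text does not confirm: that K is the slope of an ordinary least-squares line, and that the jumps of roughly 180° in the measured column (at 120° and 300°) are ambiguity wraps that should be removed before fitting. The helper names `unwrap_deg` and `fit_line` are hypothetical. Because the authors' exact procedure is not stated, the result need not reproduce the reported values exactly.

```python
import numpy as np

# Navigation angle (deg) and relative angle of the solar meridian (deg),
# copied from the table above in order of increasing navigation angle.
nav = np.arange(0, 360, 10, dtype=float)
meridian = np.array([
    209.17, 202.69, 189.94, 179.58, 170.05, 153.53, 143.96, 130.60, 121.00,
    103.07, 100.49, 92.07, 260.67, 246.84, 230.06, 225.14, 213.30, 203.48,
    195.28, 182.89, 160.21, 164.00, 153.83, 148.45, 133.18, 123.46, 113.86,
    109.94, 99.17, 92.03, 263.60, 251.84, 241.81, 235.58, 225.07, 214.87,
])


def unwrap_deg(angles, period=180.0):
    """Remove jumps of whole multiples of `period` between consecutive samples.

    ASSUMPTION: the ~180 deg jumps in the table are ambiguity wraps,
    not real changes in the measured angle.
    """
    out = [float(angles[0])]
    for a in angles[1:]:
        d = float(a) - out[-1]
        d -= period * np.round(d / period)  # keep each step within +/- period/2
        out.append(out[-1] + d)
    return np.array(out)


def fit_line(x, y):
    """Ordinary least-squares line y = K*x + b with RMSE and R^2."""
    K, b = np.polyfit(x, y, 1)
    residuals = y - (K * x + b)
    rmse = float(np.sqrt(np.mean(residuals ** 2)))
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((y - np.mean(y)) ** 2))
    r2 = 1.0 - ss_res / ss_tot
    return float(K), float(b), rmse, r2


unwrapped = unwrap_deg(meridian)
K, b, rmse, r2 = fit_line(nav, unwrapped)
print(f"K = {K:.3f}, RMSE = {rmse:.3f}, R^2 = {r2:.4f}")
```

A slope close to −1 would indicate that the measured solar-meridian angle decreases by about one degree for each degree the carrier rotates, which is the behaviour expected of a heading reference.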

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Luo, J.; Zhou, S.; Li, Y.; Pang, Y.; Wang, Z.; Lu, Y.; Wang, H.; Bai, T. Polarization Orientation Method Based on Remote Sensing Image in Cloudy Weather. Remote Sens. 2023, 15, 1225. https://doi.org/10.3390/rs15051225