Abstract

Impact crater detection contributes substantially to the analysis of the geological processes, morphologies and physical properties of celestial bodies, and plays a crucial role in the selection of potential future landing sites. The huge number of craters requires automated detection algorithms; however, given the low spatial resolution provided by satellite imagery together with the variety of solar illumination/incidence conditions, these methods perform poorly in recognition tasks. Furthermore, small craters are hard to recognize even for human experts, which makes a sophisticated detection algorithm necessary. To address these problems, we propose a deep learning architecture, referred to as “YOLOLens5x”, for impact crater detection based on super-resolution in a single end-to-end design. We introduce the entire workflow used to link the Robbins Lunar catalogue with the tiles orthoprojected from the LROC Lunar mosaic in order to train the proposed model in a supervised paradigm, together with the optimizations applied to provide a clean dataset for the training step. Experimental results show a boost in precision and recall over other state-of-the-art crater detection models, with the lowest error in the estimated crater diameters at the same scale factor as the LROC WAC camera. To simulate a satellite camera with lower spatial resolution, we carried out experiments at different scale factors (200 m/px, 400 m/px) by interpolating the 100 m/px source image, obtaining remarkable results across all metrics under consideration compared with the baseline used.

1. Introduction

The surfaces of the Moon and other planetary bodies are characterized by a cratered landscape, which is the result of a long collisional history (e.g., [1]). Since the impact crater population naturally grows with time, the cratering record, in conjunction with assumptions about the crater formation rate, has successfully been applied to infer the age of planetary terrains, allowing the geological history of a given body to be studied [2,3,4,5,6,7]. Therefore, the statistical analysis of an existing crater population in a geological unit yields a relative surface age, assuming that craters accumulate randomly on surfaces at a rate that is on average constant [5,7,8]. In turn, absolute ages can be derived if the projectile flux on a given body is known or if the relative age can be calibrated by means of rock samples, as in the lunar case [9,10,11].

The Moon was the first body to be extensively investigated for dating purposes (e.g., [7,8,10]). It is characterized by two main terrains, the highlands and the maria. The highlands represent the most ancient crust, can be as old as 4.4–4.5 Ga (e.g., [10]), and are characterized by a density of >15 km sized craters at least ten times larger than that found on the maria [12]. Lunar maria were emplaced as multiple episodes of low-rate basaltic lava flows [13], during a long period of time spanning from about Ga up to Ga [14].

Although crater-based chronologies have long been used in planetary studies, they are challenging to apply when dating young terrains, because of the small impact crater population they host [15,16]. In recent years, advances in the performance of space cameras have improved their spatial resolution, enabling the observation of smaller and smaller impact craters [17]. However, this has brought additional issues. On the one hand, the smaller the crater, the higher its sensitivity to flux variations, target properties and post-impact modifications such as volcanic emplacement or erosion [16,18,19,20,21,22]. On the other hand, because of the huge number of craters in the <2–3 km size range, manual counting is inadequate for obtaining a complete and reliable database [15]. Additionally, crater identification can vary between individuals by about 20–30%, even when they are experts [23].

Along with manual counting, a number of automatic approaches for crater detection have been suggested so far [24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39]. Among them, many Digital Elevation Model (DEM) machine learning approaches [36,40,41] perform better than classical discriminative algorithms that are distance-based [39] or than approaches based on the Support Vector Machine (SVM) [42], which rely on a large number of descriptors to define specific feature extraction. Although these methods perform well, DEM datasets of planetary bodies are either few in number or of poor quality (e.g., low spatial resolution, data holes). Therefore, DEM-based methods have only modest applicability. Indeed, object detection at fine precision requires above all a good spatial resolution, which is an issue for remote sensing applications. In recent years, researchers have started studying the effects of super-resolution methodologies applied to the remote sensing field [43,44]. Although the results are promising, most super-resolution models are applied only as a preprocessing step, and object detection models are then used to study the effect of the achieved super-resolution on the abovementioned task [43]. To address this problem, we leverage super-resolution to improve images at low spatial resolution in order to enhance the performance of the object detection model (YOLOLens), bridging the gap between low image resolution and pattern recognition. Focusing on crater detection, we aim to increase the detection capability through convolutional neural networks to obtain a lunar crater database that is more accurate than the catalogs in the literature.

In this paper we put forward the following two hypotheses:

Hypothesis 1 (H1).

Through the super-resolution approach, it is possible to improve the spatial resolution from satellite instruments and enhance the detection ability in the space domain context.

Hypothesis 2 (H2).

Thanks to the improved images, the model confidence degree (which establishes the correctness of the predicted entity) increases as the image quality increases.

The manuscript is organized as follows: In Section 2, we introduce the Related Works. In Section 3 and Section 4, the proposed methodology and the description of the workflow useful as preprocessing steps are described, respectively. In Section 5, we report the quantitative/qualitative results of all the experiments carried out, while in Section 6, we present the discussion and finally, in Section 7, we present the conclusion of the work.

2. Background

Lunar craters have typically been detected from lunar surface images and/or DEMs through manual detection ([45,46,47]), computer-assisted methods ([48]) and ensemble methods ([42,49]). Whereas manual crater detection is time-consuming, computer-assisted methods drastically reduce the time required by producing semiautomatic detections. Pre-/postprocessing steps are mandatory and can be expensive in terms of CPU time. Due to high impact frequencies, manual counting on the Moon can be extremely laborious and inconvenient [50,51], considering also the difficulty of human recognition when spatial resolution is low and/or craters are small. Object detection applied to crater mapping provides a bridge to more precise and faster analysis. In [42], an SVM-based ensemble method for automatic detection is proposed, using features extracted by the Histogram of Oriented Gradient (HOG [52]) technique to predict crater centers and diameters from DEM sources. Even though the approach provides significant results, both techniques (HOG and SVM) are not comparable with modern neural network architectures because the feature descriptors are hand-crafted (e.g., SIFT [53], HOG) and strongly dependent on the images taken into consideration. For this reason, many machine learning models for crater detection are DEM-based, so that feature descriptors are better suited to finding discriminative features for training models such as SVMs or non-parametric and discriminative models such as [54,55]. However, it should be noted that the limited DEM data available for other bodies in the solar system, as well as difficulties related to the quality and spatial resolution of the DEMs, make DEM-based methods either not applicable or limited in coverage, with low-quality results (e.g., data holes, low spatial resolution).

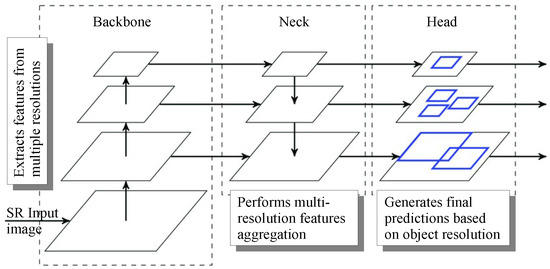

In the last decades, deep learning methods have achieved remarkable results across many fields of science, surpassing earlier machine learning methods in terms of general performance and quality of results. Deep learning models replace handcrafted feature descriptors with feature representations learned automatically from human expert annotations (e.g., supervised learning). Convolutional Neural Networks (CNNs), through a learning process (training phase), can generalize well to new sample data, with performance continuing to improve in comparison to manual expert annotations in many tasks (e.g., classification, object detection, segmentation [56]). Focusing on crater detection, YOLO models [57] achieve strong results in detection and classification independently of the specific task to which they are applied. The main structure of YOLO is composed of three parts: the Backbone network, the Neck network and the Head. The Backbone is a CNN used for discriminative feature extraction, for which any model used for classification (e.g., ResNet) can be applied. It uses skip connections at different granularities, from coarse to fine, to capture details at different levels of quality. These features are forwarded into a Feature Pyramid Network (FPN) [58] or a similar architecture such as PaNet [59], which works as a feature extractor generating multi-scale feature maps from a single image. Although many types of pathways exist in the literature (e.g., top-down for the FPN, top-down and bottom-up for the PaNet), the main idea is always to use a path (or multiple paths) to extract and combine semantically discriminative features with precise localization information, because the complexity of the features increases with depth in the neural network while the spatial resolution decreases. The last network, the Head, takes the features from the different previous levels (Neck) for the box and class prediction task. Commonly used heads are those of the R-CNN [60] model, YOLO, SSD [61] and RetinaNet [62]; in the case of the YOLOv5 Head, three 1 × 1 convolutional layers are used. In addition, YOLOv5 comes in several versions: YOLOv5s, YOLOv5m, YOLOv5l and YOLOv5x. The only difference lies in the depth of the network and the resulting number of learned parameters.

In [28], the authors train a well-known object detection model (YOLOv3 [57]) on Lunar Reconnaissance Orbiter Narrow-Angle Camera images, obtaining a 15% overestimation of the final predicted crater diameters with respect to the Ground-Truth catalogue considered, and show acceptable performance on LROC images acquired with incidence angles ranging between ∼50° and ∼70°. This makes it applicable to many lunar sites independently of the morphology of the area. In [36], a CNN based on Unet is trained on lunar craters using DEMs, reporting low errors in localizing the longitude/latitude and radius of the lunar craters. Also in [36], the model trained on the Moon is tested on DEM images of Mercury through a transfer learning technique, detecting a large fraction of the impact craters in each map considered by the authors.

3. Methodology

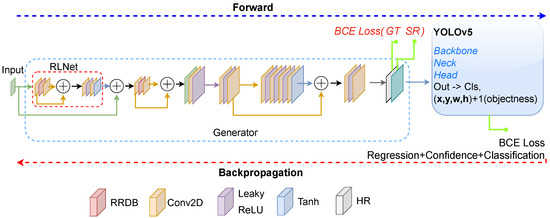

The proposed model is composed of two main subnets, the Generator and YOLOv5, which make up a single end-to-end model that we call YOLOLens. The name “YOLOLens5x” used in this paper refers to the variant based on the YOLOv5x model; in fact, any model of the YOLO family can be fused into the proposed architecture. A schematic representation of the proposed YOLOLens model is reported in Figure 1 and Figure 2. The Generator is a neural network that, starting from a low-resolution image, creates a super-resolution (SR) image to be fed to the YOLO object detector. The Generator learns to generate plausible data containing more detail than the input image. The first part of the Generator, the one closest to the input, consists of a Refinement Learned Network (RLNet) [44], which is responsible for refining the input low-resolution image. RLNet uses Residual in Residual Dense Blocks (RRDB) [63] to extract local features from previous blocks and to preserve residual local information. The SR image is then used by the connected YOLO model to perform object classification and detection simultaneously by looking only once at the given input image, hence the name You Only Look Once (YOLO). As shown in Figure 2, YOLO is a single-stage object detector with three important parts in its architecture: backbone, neck and head. The backbone is responsible for extracting features from the given input images, the neck mainly generates the feature pyramids and the head performs the final detection as output.

Figure 1.

Schematic representation of the proposed YOLOLens model. The Generator transforms an input image into a 2× image (SR), whereas the YOLO model performs object detection starting from the SR image.

Figure 2.

The YOLO model architecture, where the backbone extracts relevant features from an image, the neck enhances feature maps by combining different scales and the head provides bounding boxes for detection.

In the training phase, a preprocessing step is necessary to reduce the source image by and, through convolutional layers and RRDB layers, to learn how to reconstruct the source (High-Resolution Ground-Truth) by generating a higher resolution image such that the error between the predicted output and the Ground-Truth is as low as possible. This approach is needed to match the shapes of the SR output and the GT source, so that the pixel-by-pixel loss function and all the metrics that establish the quality of the reconstructed output image can be computed. We apply a bicubic interpolation to down-sample the HR input and forward it into RLNet, which was shown by [44] to refine interpolated images through an unsupervised learning methodology (dotted red rectangle in Figure 1). Then, the refined images are forwarded to the Generator network, which uses convolutional operations and RRDB blocks to produce a super-resolution output (binary cross-entropy is applied to the last layers for the error computation). Finally, the super-resolution output is fed into the object detection model (YOLOv5) to detect the targets. We emphasize that during the test step, no preprocessing is applied (e.g., down-sampling, mosaics, cropping, flipping). For each training sample n, the following triplet is used: >, where is the HR expected output image, is the LR input image, and is a set of bounding boxes of the objects of interest (craters in our application field) located in the model-generated SR image. The bounding boxes in are defined as follows:

where is the class label of a single bounding box, are the normalized coordinates representing the centre of the bounding box and are the normalized width and height of the bounding box.

The loss functions formalization assumes four types of loss functions, for Super-Resolution-Ground-Truth error computations, to reduce bounding box coordinates (Regression Loss) and finally and for Confidence and Classification Loss, respectively.

More precisely, we define as:

where j indexes the predicted box coordinates and i denotes the Ground-Truth cell in the same format. denotes if an object appears in cell i and denotes that the jth bounding box predictor in cell i is responsible for that prediction. G represents the Generator model (referred to as the G function). The loss functions in Equation (2) use the Binary Cross-Entropy (BCE) to increase the confidence values (a scalar in the range [0, 1] assigned to each detection to establish the “fidelity” of the recognized crater) and to decrease the classification loss (crater versus non-crater, treated as a binary classification problem).

Finally, the total loss function error can be formalized as:

The partial derivatives for each input–output pair can be expressed as follows:

where the weight connects the output of node i in the previous layer to the input of node j in layer k of the computation graph, and E is the computed error loss described in Equation (4).
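
As a rough illustration of how the loss terms described above might be combined, the following NumPy sketch computes a pixel-wise binary cross-entropy between an SR output and its HR Ground-Truth and adds it to the box, confidence and classification terms. The function names (`bce`, `total_loss`), the unit weights and the plain weighted sum are assumptions made for illustration only; they are not taken from the equations of this paper, and the box regression term is passed in as a precomputed number.

```python
import numpy as np

def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predictions and targets in [0, 1]."""
    pred = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-(target * np.log(pred)
                           + (1.0 - target) * np.log(1.0 - pred))))

def total_loss(sr, hr, box_loss, conf_pred, conf_true, cls_pred, cls_true,
               weights=(1.0, 1.0, 1.0, 1.0)):
    """Hypothetical weighted sum of the four loss terms (weights are assumed)."""
    w_sr, w_box, w_conf, w_cls = weights
    l_sr = bce(sr, hr)                  # SR output vs. HR Ground-Truth
    l_conf = bce(conf_pred, conf_true)  # confidence loss
    l_cls = bce(cls_pred, cls_true)     # crater / not-crater loss
    return w_sr * l_sr + w_box * box_loss + w_conf * l_conf + w_cls * l_cls
```

Because every term is differentiable with respect to the network outputs, a single backward pass through such a sum would update the Generator and the detector jointly, which is the point of the end-to-end design.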

After the learning process, since the model is able to perform detection on the source reconstructed by the super-resolution model, in the real test we use the original source (without downsampling) to produce the actual super-resolution image and capture finer details with the object detection subnetwork. The workflow is shown in Figure 1. The model’s parameters are reported in Table 2; more precisely, YOLOLens5x has 101,203,996 parameters, whereas YOLOv5l and YOLOv5x have 46,108,278 and 86,173,414 parameters, respectively.

4. Dataset

The Lunar Reconnaissance Orbiter Camera (LROC) Wide Angle Camera (WAC) aboard the Lunar Reconnaissance Orbiter (LRO) has allowed the instrument team to create a global mosaic of the Moon [64,65,66,67] using over 15,000 images acquired between November 2009 and February 2011. The purpose was to generate a 100 m/px morphology mosaic of the Moon. The geospatial information is expressed in the longitude domain [−180, 180] and the latitude domain [−90, 90]. The map projection used is cylindrical, with a final pixel resolution of 100 m/px, and the image is represented by 8 bits per pixel (values 0–255) over a single channel (1 band).
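
The relation between the 100 m/px resolution and the size of the mosaic can be checked with a short sketch. It assumes a simple cylindrical (equirectangular) layout and a mean lunar radius of 1737.4 km; both are assumptions made here for illustration, and the helper names are hypothetical. Under these assumptions the computed size agrees with the 109,164 × 54,582 px reported for the mosaic in this section.

```python
import math

MOON_RADIUS_M = 1_737_400.0   # assumed mean lunar radius
RESOLUTION_M_PER_PX = 100.0   # mosaic resolution stated in the text

def mosaic_size(radius_m=MOON_RADIUS_M, res=RESOLUTION_M_PER_PX):
    """Width and height in pixels of a global simple cylindrical mosaic."""
    width = round(2.0 * math.pi * radius_m / res)   # full equator
    height = round(math.pi * radius_m / res)        # pole to pole
    return width, height

def lonlat_to_pixel(lon_deg, lat_deg, width, height):
    """Map lon in [-180, 180] and lat in [-90, 90] to (col, row).

    Row 0 is the northern edge of the mosaic.
    """
    col = (lon_deg + 180.0) / 360.0 * width
    row = (90.0 - lat_deg) / 180.0 * height
    return col, row
```

For example, `lonlat_to_pixel(0, 0, *mosaic_size())` returns the centre of the mosaic.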

Data Preprocessing Details and Craters Extraction

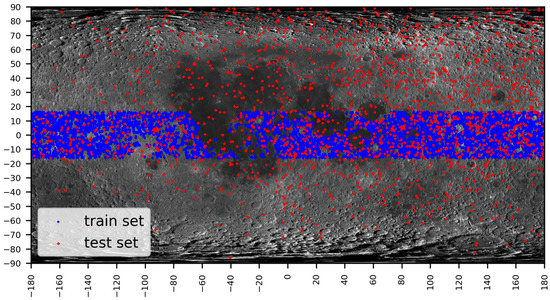

In this section, we describe the full preprocessing operations used to create the dataset and the corresponding train/test set sizes. In Figure 3, we show the lunar site locations randomly extracted for the training set (blue) and the test set (red). The training set tiles have a dimension of px and are randomly chosen in the Longitude/Latitude range [−180, 180, −16, 16], whereas the range [−180, 180, −90, 90] is used for the test set. The equatorial lunar site locations are selected in order to have the maximal spatial resolution provided by the global lunar mosaic with a low degree of dilation due to the representation of a 3D surface in 2D space (Geoid to 2D map).

Figure 3.

The distribution of the train and test data over the cylindrical projection coordinate system.

As Ground-Truth, we used the Robbins catalogue provided by [47], which contains approximately 1.3 million lunar impact craters and is sufficiently accurate for all craters larger than 1 km in diameter [68]. Craters were manually identified and measured on LROC WAC images, LRO Lunar Orbiter Laser Altimeter (LOLA) topography and SELENE Kaguya Terrain Camera (TC) images, which were used for the manual crater tracing [69]. The Robbins catalogue contains the longitude, latitude, diameter and other useful information for each manually identified crater over the full latitude range [−90, 90] and longitude range [0, 360]. The catalogue has global coverage of the Moon and all coordinates are expressed using the cylindrical projection. The main preprocessing consists of extracting the Robbins coordinates for the specific tile selected in the cylindrical projection, changing the map projection to obtain an orthonormalized image and converting all Robbins coordinates into pixel space according to the new (orthographic) projection. In Figure A4, we overlay the Robbins labels on example tiles; we also changed the longitude system [0, 360] provided by Robbins into the [−180, 180] reference. In all our experiments, we use the global mosaic of the LRO WAC images and reproject the coordinate system from the cylindrical projection to the orthographic one, taking the Moon radius into account and avoiding longitude dilation as much as possible. Then, the workflow to build the dataset extracts N random tiles of pixels from the orthoprojected LROC global mosaic. The global mosaic is composed of 5,958,389,448 pixels (109,164 × 54,582) and we use of the entire Moon for the training set (6886 tiles) and 1721 tiles for the test set. In this process, all tiles with large dilation are excluded from the dataset; furthermore, we discard all tiles with fewer than 45 mapped craters (to learn more in the training step).

All extracted coordinates are converted from the Lon/Lat system to the pixel reference and normalized to the range [0, 1] required by our model, and the labels of all tiles are saved in the format [class, x, y, w, h], where class represents the only class we need, “crater”. Craters with a size greater than 416 pixels (41.6 km) are discarded in the training step, and all smaller craters are included. This filtering is necessary to avoid craters much larger than the observation window itself and therefore to avoid partial estimates of their diameters. The random tile selection function is built to avoid distortions and to cut off all crater locations that extend beyond the tile border (see Figure A4). This step is crucial because, when the tile is distorted (e.g., near the north pole), the image contains no-data values (black background) and the bounding boxes extracted from the Robbins catalogue can fall in the black regions. This makes detection more difficult, because the model can interpret the background as a crater and learn it, leading to low crater detection performance. This process is complex because the dilation varies and depends on where the lunar location is randomly selected; for example, the dilation can be high near the polar regions and low (or absent) in the equatorial zone. The labels and the tile change according to the new projection system, and the cutoff must also take the possible dilation into account. The operation allows us to use a “mosaic” during the training step, which is a composition of random samples of different sizes and crops; without a cutoff that is as accurate as possible, the GT labels could extend outside the image into the black background.

In Figure A4 we show random tile samples with different degrees of dilation and the corresponding correctly reprojected Robbins coordinates (red bounding boxes) after the change of projection system.
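
The two coordinate operations described above, the change of longitude convention and the reprojection to an orthographic system, can be sketched as follows. This is a minimal sketch using the standard forward orthographic formulas on a sphere; the mean lunar radius of 1737.4 km and the function names are assumptions, and the exact reprojection tool chain used to build the dataset is not specified here.

```python
import math

def wrap_longitude(lon_deg):
    """Convert a longitude from the [0, 360) convention to [-180, 180)."""
    return (lon_deg + 180.0) % 360.0 - 180.0

def orthographic(lon_deg, lat_deg, lon0_deg, lat0_deg, radius_m=1_737_400.0):
    """Forward orthographic projection centred on (lon0, lat0).

    Returns (x, y) in metres, x pointing east and y pointing north.
    Points on the far hemisphere are not filtered out in this sketch.
    """
    lam, phi = math.radians(lon_deg), math.radians(lat_deg)
    lam0, phi0 = math.radians(lon0_deg), math.radians(lat0_deg)
    x = radius_m * math.cos(phi) * math.sin(lam - lam0)
    y = radius_m * (math.cos(phi0) * math.sin(phi)
                    - math.sin(phi0) * math.cos(phi) * math.cos(lam - lam0))
    return x, y
```

Centring the projection on each tile keeps the local scale close to uniform, which is why tiles near the equator of the source mosaic show little or no dilation after reprojection.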

We summarize the workflow of the pre-processing steps as follows:

- Convert global mosaic tiff to a png image.

- Read Robbins catalogue and discard all craters larger than the selected window.

- Select N random tiles and reproject them to the orthographic coordinate system:

  - (a) Cut off all tiles outside the selected window (avoid no-data regions).

  - (b) Discard all tiles with excessive dilation.

  - (c) Discard all tiles with no Ground-Truth correspondence in the selected catalogue.

  - (d) Convert from the Lon/Lat system to pixel reference and normalize to [0–1] values.

- Split into train/eval in a ratio of 80:20 and save the tiles obtained.

- Convert all information into a YOLO format dataset.
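
The label-related steps of the workflow above can be sketched as follows. The sketch assumes that crater centres and diameters are already expressed in tile pixel coordinates; `tile_size` is left as a parameter and the helper names are hypothetical. The 416 px diameter limit and the minimum of 45 craters per tile follow the values stated in the text.

```python
def crater_to_yolo(cx_px, cy_px, diameter_px, tile_size):
    """Return [class, x, y, w, h] normalized to [0, 1], or None if rejected."""
    half = diameter_px / 2.0
    # Reject boxes that would cross the tile border.
    if (cx_px - half < 0 or cy_px - half < 0
            or cx_px + half > tile_size or cy_px + half > tile_size):
        return None
    d = diameter_px / tile_size
    return [0, cx_px / tile_size, cy_px / tile_size, d, d]  # class 0 = crater

def build_labels(craters_px, tile_size, max_diameter_px=416, min_craters=45):
    """Build the YOLO labels of one tile from (cx, cy, diameter) tuples.

    Returns None when the tile keeps fewer than `min_craters` labels,
    meaning that the tile should be discarded.
    """
    labels = []
    for cx, cy, d in craters_px:
        if d > max_diameter_px:
            continue  # crater larger than the allowed size
        box = crater_to_yolo(cx, cy, d, tile_size)
        if box is not None:
            labels.append(box)
    return labels if len(labels) >= min_craters else None
```

Since a crater is described by a centre and a diameter, its bounding box is square, so the normalized width and height are equal.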

5. Results

In this section, we analyse and evaluate the model’s performance by reporting the quantitative results of the proposed method and comparing it with some algorithms known in the literature. In all experiments reported in this Section, we trained the YOLOLens models for 300 epochs using the ADAM optimizer [70] with a learning rate of . Light data augmentation is applied (slight scaling, translation and left/right flipping), and image mosaic is always used. This last augmentation type increases the generalization capability of the model, improving feature detection and reducing its dependence on the context of the scene in which the craters are found.

5.1. Quantitative/Qualitative Analysis

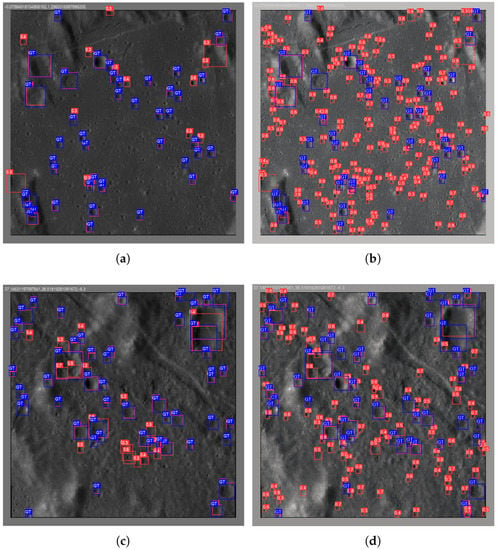

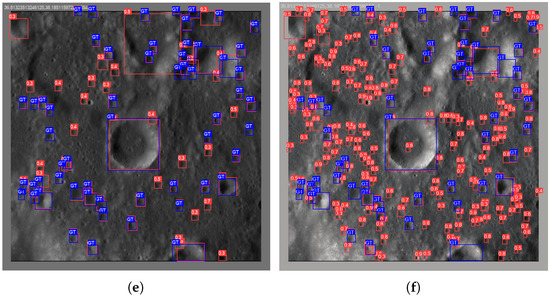

To evaluate the crater detection performance of the proposed YOLOLens, we use common machine learning metrics to establish the quality of the model’s predictions: precision, described in Equation (6), recall, in Equation (7), and the diameter error, in Equation (8), all computed against the ground-truth Robbins catalogue. The crater positions and their diameters can be extracted from the object detection output (converting from pixel coordinates to the Lon/Lat reference system). In Table 1 we report and compare the results on the evaluation set, composed of 1720 extracted lunar sites with 132,690 craters labelled as Ground-Truth from the Robbins database. The reported metrics show better performance of YOLOLens5x than of the compared baselines, reporting a YOLOLens5x recall of than of the YOLOv5x baseline model, which supports the validity of our proposed model in retrieving more craters of the Robbins catalogue than the YOLOv5x baseline. Table 2 compares many deep learning models in terms of precision, recall, diameter error and number of parameters. Although the evaluation sets differ (many models are DEM-based), we analyze the generalization capability of the reported models in the crater detection task, highlighting the lowest average diameter error and the highest precision (P) and recall (R), denoted in bold. The work in [49] estimates human performance in the crater detection task, reporting a recall of 75%. This result is aligned with [36], which used a deep neural network (Unet model) and obtained a recall (see Equation (7)) similar to human performance for crater diameters <15 pixels (1.5 km). Both the human performance and the neural network performance in the crater detection task are surpassed by the YOLOLens5x model, confirming the improvements in recall and across all metrics reported in this analysis.
Finally, in Figure 4, we show three random tiles extracted from the test set, comparing the craters detected by the YOLOv5x baseline (first column) with those predicted by YOLOLens5x (second column); the GT from the Robbins catalog is highlighted in blue. Furthermore, we show the confidence value for each detected red box.
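
For reference, precision and recall can be computed from the counts of true positives, false positives and false negatives as in the sketch below. It uses the standard definitions, which are assumed here to correspond to Equations (6) and (7); the function name is hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Standard precision and recall from detection counts.

    tp: detections matched to a Ground-Truth crater
    fp: detections with no Ground-Truth match
    fn: Ground-Truth craters that were not detected
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

In this setting a low recall means that many catalogued craters are missed, whereas a low precision means that many detections have no counterpart in the catalogue.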

Table 1.

Performance comparison between YOLO models. The best results are shown in bold.

Table 2.

Performance comparison between the best models available in the literature. The best results are shown in bold.

Figure 4.

In subfigures (a,c,e), the predictions of YOLOv5x on the tiles at the reported lunar coordinates are shown. In subfigures (b,d,f), the predictions of YOLOLens5x on the same tiles are shown for comparison with the YOLOv5x baseline. YOLOLens detects remarkably more craters than the YOLO baseline thanks to the super-resolution effect. The GT is marked in blue and the YOLOv5x and YOLOLens5x predictions in red. (a) YOLOv5x predictions Lunar coords min/max Lon/Lat (−0.0758, 1.2960), (−2.9416, −1.5697); (b) YOLOLens5x predictions Lunar coords min/max Lon/Lat (−0.0758, 1.2960), (−2.9416, −1.5697); (c) YOLOv5x predictions Lunar coords min/max Lon/Lat (37.1463, 38.5181), (−6.3515, −4.9796); (d) YOLOLens5x predictions Lunar coords min/max Lon/Lat (37.1463, 38.5181), (−6.3515, −4.9796); (e) YOLOv5x predictions Lunar coords min/max Lon/Lat (36.8132, 38.1851), (−1.6620, −0.29020); (f) YOLOLens5x predictions Lunar coords min/max Lon/Lat (36.8132, 38.1851), (−1.6620, −0.29020).

5.1.1. Analysis 1: Model’s Performance per Crater Diameter Range

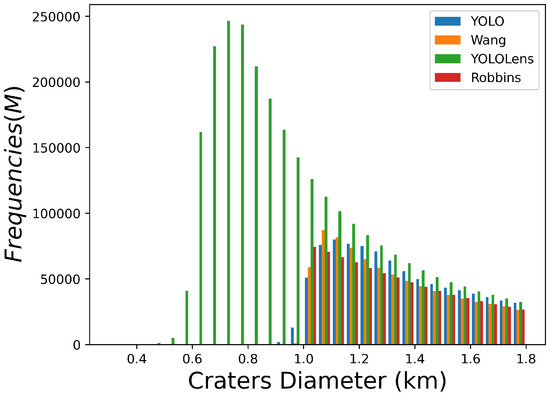

In this analysis, we evaluate the performance of YOLOLens5x and the YOLOv5x baseline for lunar crater detection on different diameter groups of the validation set. As reported in Table 3, the YOLOLens results ( in red color) are superior to those of the compared baseline (black values) in every diameter range considered. An interesting observation concerns the results of the YOLOv5x baseline on the samples in the diameter range over 10 km; similar results have been reported by [41]. The model performance depends on the number of GT samples and, in the case of the Moon, the number of small and medium craters is higher than the number of large craters. Therefore, the performance in detecting large craters decreases because they are rarer than small craters. These considerations are visible in all the values reported in Table 3 for the YOLOv5x baseline. However, with the improvement in resolution given by the proposed YOLOLens method, the corresponding samples provide more discriminative information thanks to the higher spatial resolution, so that the performance of the model improves on all the diameter groups under study. We analyze the range 1–15 km because craters of this size are the most numerous in the GT Robbins catalog; as the crater size increases, the estimated errors increase because craters in that range are rarer. To solve this problem, the composition of the data batch has to be balanced by crater diameter, such that the probability of observing a rare crater is the same as that of a frequent crater; in other words, the ability to learn will be balanced between rare and frequent craters and not biased towards frequent craters only. In Table 4, we report the performance in terms of average precision with an IoU in comparison with other crater detection methods, highlighting the superior performance achieved by YOLOLens5x in the diameter range between 5 and 10 km.
The metric under consideration means that a predicted crater is considered a true positive when the intersection over union (IoU) is greater than the ; otherwise, it is considered a false positive. In Figure 5 we show the frequency histogram over the entire Moon for the Robbins, Wang [42], YOLO and YOLOLens crater detections. Robbins and Wang do not contain craters under 1 km, and the capability of YOLOLens to detect small craters is quite evident, above all in the range [0.6, 1] km. Below 0.6 km, the YOLOLens model did not detect any craters, probably because GT below 1 km is not provided; however, it is possible to select some detected craters and use them as GT, thus adopting a semi-supervised paradigm in an attempt to improve detection below the abovementioned threshold.
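
The IoU criterion used to separate true positives from false positives can be sketched as follows for boxes in the (x, y, w, h) centre format. The default threshold of 0.5 follows the 50% criterion stated elsewhere in this paper; the function names are hypothetical and the matching strategy between predictions and Ground-Truth (for example, one-to-one assignment) is not covered by this sketch.

```python
def to_corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2.0, cy - h / 2.0, cx + w / 2.0, cy + h / 2.0

def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = to_corners(box_a)
    bx1, by1, bx2, by2 = to_corners(box_b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction counts as a true positive when IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold
```

Two equally sized boxes shifted by half their width have an IoU of 1/3, so such a prediction would be counted as a false positive at the 0.5 threshold.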

Table 3.

Comparison across different metrics for the diameter ranges considered. The superscripts y and refer to YOLO5x and YOLOLens5x, respectively, with the corresponding metrics: P (precision), R (recall) and mAP (average precision with IoU and ≥0.95). The best results achieved by YOLOLens5x compared with the YOLO5x baseline are shown in red.

Table 4.

Average precision of the models reported in [41] with an IoU threshold ≥0.5 for crater diameters in the range [5–10 km). The best value, obtained by YOLOLens5x, is shown in bold.

Figure 5.

Frequency histogram of the craters detected by the different methodologies.

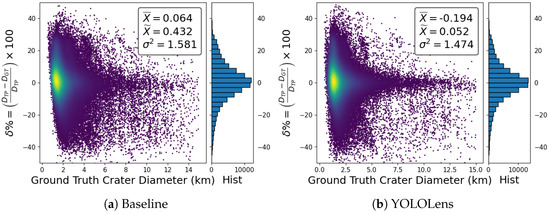

5.1.2. Analysis 2: Crater Diameter Error

To evaluate the crater diameter error estimation and demonstrate the effectiveness of YOLOLens5x, we calculate the delta percentage as follows:

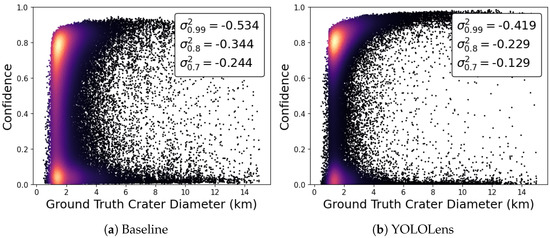

where the two quantities are the Ground-Truth (GT) crater diameter and the corresponding YOLOLens5x prediction. In this study, a detection counts as a true positive only if its Intersection over Union (IoU) with a GT crater is at least 50%; any detection below this threshold is discarded. The analysis included 98,000 true positive crater detections over 1000 different tiles. Positive values indicate an overestimation and negative values an underestimation of the crater diameter by the algorithm. The YOLOLens5x results (Figure 6b) show improvements in median and variance, demonstrating a more compact error distribution and a lower crater diameter estimation error than the YOLO baseline model (Figure 6a). Furthermore, in Figure 6b the mean and median are similar, because outliers are rare in the error population and the two statistics therefore stay close. In contrast, for the YOLO baseline (Figure 6a) the mean and median differ noticeably, which implies many outliers in the error population. In Figure 7, we show the confidence values of all true positive craters matched between the model predictions and the Robbins catalogue. The higher the value (in the range [0, 1]), the more confidence we can have in the crater prediction. Figure 7a shows the uncertain degree of confidence of the YOLO baseline, while Figure 7b shows the higher confidence achieved by the proposed YOLOLens (see the variances in the legend boxes). This is a remarkable increase, indicating a better degree of confidence with the YOLOLens5x model than with the YOLOv5x baseline. We note that a true positive may have low confidence because the discriminating features of that crater are rarer than those of other craters (or were rarely observed during training). 
The same applies to large craters: they are much rarer than small craters and are therefore rarely seen during training, so they may also receive low confidence.
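The diameter error analysis can be illustrated with a minimal Python sketch. It assumes the delta percentage is the signed relative error 100 · (D_pred − D_GT) / D_GT, which is consistent with the sign convention stated above (positive means overestimation) but is not taken from the equation itself. The function names and the sample diameters are hypothetical.

```python
from statistics import mean, median, pvariance


def delta_percentage(d_gt_km, d_pred_km):
    """Signed relative diameter error in percent (assumed form of the metric).

    Positive: the prediction overestimates the GT diameter.
    Negative: the prediction underestimates it.
    """
    if d_gt_km <= 0:
        raise ValueError("ground-truth diameter must be positive")
    return 100.0 * (d_pred_km - d_gt_km) / d_gt_km


def summarize(deltas):
    """Mean, median and population variance, as listed in the figure legends."""
    return {
        "mean": mean(deltas),
        "median": median(deltas),
        "variance": pvariance(deltas),
    }


# Hypothetical (GT, predicted) diameters in km for already-matched true positives.
pairs = [(2.0, 2.1), (5.0, 4.5), (10.0, 10.0), (1.0, 1.5)]
deltas = [delta_percentage(gt, pred) for gt, pred in pairs]
stats = summarize(deltas)
```

In this toy sample a single large overestimate pulls the mean well above the median, which is the kind of mean/median gap the text attributes to outliers.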

Figure 6.

Subfigures (a,b) show the GT crater diameters on the x-axis and the delta error percentage on the y-axis, computed between the diameters of all true positive predicted craters and the corresponding GT diameters from the Robbins catalogue. The legend gives the mean, median and variance of each error distribution, together with the associated frequency histogram. Comparing the plots, the error distribution of the YOLOLens model in subfigure (b) is more concentrated around the median than that of the YOLO baseline in subfigure (a).

Figure 7.

Subfigures (a,b) show the GT crater diameters on the x-axis and the related confidence value on the y-axis, obtained by the YOLO baseline and YOLOLens, respectively. These plots include all true positive craters (Equation (6)) matched between the GT Robbins catalogue and the corresponding deep model. The higher the value, the higher the confidence of the model in the correctness of the detection. YOLOLens yields higher values than the YOLO baseline, indicating more trust in the former. In the legend, the computed variances show that the confidence values of the YOLOLens model (subfigure (b)) are more compact.

5.1.3. Analysis 3: The Impact of the Scale Factor on Performance

In this section, we analyze the model's performance at different scale factors, assessing crater detection effectiveness when the original spatial resolution is downscaled from 100 m/px (LROC WAC) to 200 m/px and 400 m/px. When the source is degraded and much of the high-frequency content of the images is cut off, the performance of the YOLO baseline drops and its generalization capability decreases with spatial resolution. In Table 5, we report the results obtained on the validation set for scale factors (SF) of 2× (200 m/px) and 4× (400 m/px). The YOLOv5x baseline shows a higher degree of degradation in precision and recall than YOLOLens5x with a 4× scale factor (the best results are reported in bold in Table 5). As the spatial resolution increases (200 m/px, and the native 100 m/px), YOLOLens still achieves better precision and recall than the YOLO baseline (see Table 5). In this analysis, a random interpolation (cubic, linear or area) is applied to the native LROC images, which differs from the area-only interpolation used in our training experiments. In fact, the YOLOLens5x model estimates an upscaled super-resolution image, enabling it to work as if the input had a finer resolution (compare the first row of the table). We find a significant boost in the YOLOLens5x results over the YOLO baseline, which suggests that the approach can be valuable in satellite imagery tasks in which the on-board instruments capture images at the low resolution on which the model was trained.
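The degradation step can be illustrated with a small, dependency-free Python sketch. It covers only area-style downscaling (block averaging by an integer factor); the experiments above also draw cubic and linear interpolation at random, which this sketch does not reproduce. The function names and the toy image are hypothetical, and this is not the authors' implementation.

```python
def downscale_area(image, factor):
    """Downscale a 2D grid of pixel values by an integer factor.

    Each output pixel is the mean of a factor x factor block of input
    pixels, a simple stand-in for area interpolation.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image size must be divisible by the factor")
    out = []
    for r in range(0, height, factor):
        row = []
        for c in range(0, width, factor):
            block = [image[r + i][c + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def degraded_resolution(native_m_per_px, factor):
    """Ground sampling distance after downscaling by the given factor."""
    return native_m_per_px * factor


# Toy 4x4 "tile" with pixel values 0..15 (hypothetical data).
tile = [[4 * r + c for c in range(4)] for r in range(4)]
half = downscale_area(tile, 2)      # simulates 100 m/px -> 200 m/px
quarter = downscale_area(tile, 4)   # simulates 100 m/px -> 400 m/px
```

Block averaging discards detail inside each block, which is the loss of high-frequency content that the baseline has to cope with at 200 m/px and 400 m/px.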

Table 5.

The impact of the scale factor (SF) on the Lunar LROC test set. All images are downscaled by the SF through a random interpolation (cubic, linear or area), and the results are reported in terms of Precision, Recall and mAP for the YOLOLens5x and YOLOv5x baseline models. The detection mAP is computed with an IoU of 0.6 and a confidence threshold of 0.001.
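As a rough illustration of how the IoU and confidence thresholds in the caption enter the evaluation, the sketch below computes precision and recall at a single operating point with greedy one-to-one matching. It is a simplified assumption about the matching rule, it does not compute mAP (which integrates precision over recall), and all names and boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(gt_boxes, detections, iou_thr=0.6, conf_thr=0.001):
    """Precision and recall for detections given as (box, confidence) pairs."""
    # Drop low-confidence detections, then process the rest by confidence.
    kept = sorted((d for d in detections if d[1] >= conf_thr),
                  key=lambda d: d[1], reverse=True)
    matched = set()
    tp = 0
    for box, _conf in kept:
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gt_boxes):
            if j in matched:
                continue  # each GT crater can be matched only once
            value = iou(box, gt)
            if value > best_iou:
                best_j, best_iou = j, value
        if best_j >= 0 and best_iou >= iou_thr:
            matched.add(best_j)
            tp += 1
    fp = len(kept) - tp
    fn = len(gt_boxes) - tp
    precision = tp / (tp + fp) if kept else 0.0
    recall = tp / (tp + fn) if gt_boxes else 0.0
    return precision, recall


# Hypothetical GT labels and detections in pixel coordinates.
gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [((0, 0, 10, 10), 0.9), ((21, 21, 31, 31), 0.8),
        ((50, 50, 60, 60), 0.3), ((0, 0, 10, 10), 0.0005)]
precision, recall = precision_recall(gt, dets)
```

Here the shifted box overlaps its GT crater with an IoU of 81/119, above the 0.6 threshold, the isolated box is a false positive, and the last detection is removed by the confidence threshold.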

6. Discussion

In this section, we briefly discuss the results presented in Section 5. Figure 4 shows a significant improvement in crater detection thanks to the super-resolution effect achieved by the YOLOLens model (right column) at three lunar site locations. In that figure, the blue rectangles represent the Robbins GT and the red rectangles the YOLOLens predictions. To maintain the same coordinate scale, we rescale the YOLOLens outputs to match the Robbins GT labels; this is done for visualization purposes only. As reported in Tables 1–4, we outperform all the models from the literature across all the metrics examined, demonstrating quantitatively that the proposal generalizes well in the crater detection task. YOLOLens5x has more parameters than all the compared models, and its training and inference times are longer than those of all the other models, none of which applies super-resolution. Section 5.1.1 provides an extensive analysis supporting the robustness of YOLOLens5x in detecting craters within specific diameter ranges. Section 5.1.2 presents the diameter error and confidence plots, which highlight the robustness of YOLOLens5x relative to the YOLOv5x baseline. In Figure 7, we observe a boost in the confidence values achieved by our proposed model, making it more robust than a model without super-resolution. In Figure 6, even though the two distributions look similar, the median and mean values obtained by YOLOLens5x (Figure 6b) are closer to each other than those obtained by the YOLO baseline (Figure 6a), highlighting the capability of YOLOLens to achieve a lower crater diameter error. 
Although the hardware demand is high, we emphasize that the model enhances the input data to a larger super-resolution output, and this accounts for the computation required for object detection. The computational time depends strictly on the hardware used and on where the data for the training/evaluation/test steps are stored. Inference on an NVIDIA RTX-5000 with batch size 8 (with all data on an SSD) requires 20 min for 1720 tiles, while training for 300 epochs with batch size 1 (to avoid out-of-memory errors) requires approximately 7 days.
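The coordinate rescaling used for visualization in this section can be sketched as follows. The super-resolution factor is not stated here, so `sr_factor=2` is purely a hypothetical value; the conversion of a box to a diameter in km is also an assumption (mean of box width and height times the ground sampling distance), not a description of the authors' code.

```python
def rescale_box(box, sr_factor):
    """Map an (x1, y1, x2, y2) box from super-resolved to source pixel coordinates."""
    if sr_factor <= 0:
        raise ValueError("sr_factor must be positive")
    return tuple(v / sr_factor for v in box)


def box_diameter_km(box, m_per_px):
    """Approximate crater diameter from a box expressed in source pixels."""
    width = box[2] - box[0]
    height = box[3] - box[1]
    return 0.5 * (width + height) * m_per_px / 1000.0


# Hypothetical detection in super-resolved pixel coordinates.
sr_box = (100, 200, 300, 400)
src_box = rescale_box(sr_box, sr_factor=2)        # hypothetical factor
diameter = box_diameter_km(src_box, m_per_px=100)  # 100 m/px LROC WAC scale
```

After rescaling, predictions and Robbins labels share one pixel grid, so they can be overlaid or compared with IoU directly.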

The scientific contribution of this work covers different key points and can be summarized as follows:

- The novel generative/object detection model can produce a super-resolution image, enhancing the capability of the object detection task through an end-to-end model.

- A workflow is developed to georeference the craters on the LROC WAC-derived global mosaic, starting from a generic lunar catalog.

- Quantitative and qualitative performance analyses and comparisons with the literature, an assessment of the super-resolution impact at different scales for the crater detection task, and an evaluation per diameter range are performed to prove the effectiveness and robustness of the proposed model.
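To make the georeferencing contribution more concrete, the following sketch maps a catalogue crater (longitude, latitude, diameter) to a pixel bounding box inside one tile. It assumes a linear lon/lat-to-pixel mapping within the tile and square pixels whose scale is set by the tile's latitude span; the actual projection used in the workflow may differ. The tile bounds are those of Figure A2a, while the 416 px tile size and the crater itself are hypothetical.

```python
import math

MOON_RADIUS_KM = 1737.4  # mean lunar radius


def crater_to_pixel_box(lon, lat, diameter_km, bounds, tile_px):
    """Return an (x1, y1, x2, y2) pixel box for a crater inside a square tile.

    bounds  = (lon_min, lon_max, lat_min, lat_max) in degrees
    tile_px = tile side length in pixels (assumed square)
    """
    lon_min, lon_max, lat_min, lat_max = bounds
    # Linear interpolation of the crater centre inside the tile.
    x = (lon - lon_min) / (lon_max - lon_min) * tile_px
    y = (lat_max - lat) / (lat_max - lat_min) * tile_px  # row 0 = northern edge
    # Pixel scale derived from the latitude span of the tile.
    km_per_deg = math.pi * MOON_RADIUS_KM / 180.0
    px_per_km = tile_px / ((lat_max - lat_min) * km_per_deg)
    r = 0.5 * diameter_km * px_per_km
    return (x - r, y - r, x + r, y + r)


# Tile bounds from Figure A2a; tile size and crater are hypothetical.
bounds = (176.2042, 177.5761, -37.6372, -36.2653)
box = crater_to_pixel_box(176.89015, -36.95125, 2.0, bounds, 416)
```

With these bounds the latitude span corresponds to roughly 41.6 km, so a 416 px tile gives about 100 m/px and a 2 km crater spans about 20 px.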

7. Conclusions

In this paper, the hypotheses raised in the introduction have been successfully demonstrated. Super-resolution enhances object detection, improving performance across all the metrics reported. The proposed generative/object detection model obtains the best performance in terms of Precision, Recall, mAP and crater diameter estimation error in comparison with the best-performing models in the literature, and its effectiveness is confirmed when performance is evaluated over different diameter ranges. Furthermore, we report an increase in the confidence value due to the super-resolution effect, which supports the correctness of the detections/classifications made by the YOLOLens5x model. To assess the super-resolution effects, we degrade the images from 100 m/px to 200 m/px and 400 m/px, simulating the different spatial resolutions of images provided by the camera on board the satellite. Our experiments show a remarkable boost in performance for the proposed YOLOLens5x over the YOLOv5x baseline across all the metrics considered.

Author Contributions

Conceptualization, R.L.G.; Methodology, R.L.G. and I.G.; Software, R.L.G.; Validation, R.L.G., G.C., C.R. and E.M.; Formal analysis, R.L.G. and I.G.; Investigation, R.L.G., G.C. and E.M.; Data curation, C.R.; Writing—original draft, R.L.G., G.C., I.G. and C.R.; Writing—review & editing, R.L.G., I.G. and E.M.; Supervision, R.L.G., G.C., I.G. and E.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study is supported by the BepiColombo ASI-INAF contract n.2017-47-H.0.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

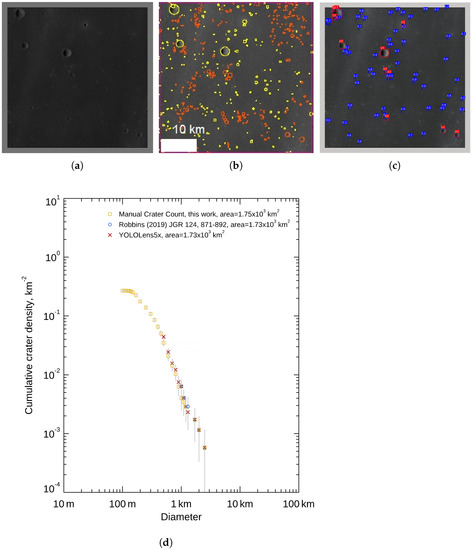

Appendix A. Apollo 12 Landing Site

The Apollo 12 landing site is located on the southeastern side of Oceanus Procellarum, a fairly flat region dominated by rays and secondary crater fields from the Copernicus crater [12,79]. In [80], the author measured an N(1) = 5910 ± 960 per km, generally higher than in other works in the literature (e.g., [6,9,80]). We select a tile at the Apollo 12 landing site to perform a manual crater count and compare it with the Robbins (2019) catalogue. Figure A1a shows the outlined area and Figure A1b the results of the crater counting, with primary craters marked in yellow and secondaries in orange. We measured 473 craters, with diameters between 0.1 and 2.7 km. Figure A1c reports the craters of the Robbins (2019) catalogue, which includes only structures larger than 1 km, together with the craters outlined by the YOLOLens model. The YOLOLens model detected 76 craters, with diameters between 0.5 and 2.7 km. We computed the crater density for our manual count and for the YOLOLens automatic detection, and compared both with the crater density of the Robbins catalogue in this tile. In Figure A1d, we plot the three crater counts in a cumulative plot, supporting the capability of the YOLOLens model to detect craters in comparison with the manual count.
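The crater density and the cumulative size-frequency distribution discussed for Figure A1d can be computed along the following lines. This is a minimal sketch with hypothetical diameters and a hypothetical counting area; it does not reproduce the values measured in this appendix.

```python
def crater_density(n_craters, area_km2):
    """Number of craters per square kilometre."""
    if area_km2 <= 0:
        raise ValueError("area must be positive")
    return n_craters / area_km2


def cumulative_sfd(diameters_km, area_km2):
    """Cumulative size-frequency distribution.

    Returns a list of (D, N) pairs, where N is the number of craters with
    diameter >= D per square kilometre, sorted by increasing D.
    """
    result = []
    for d in sorted(set(diameters_km)):
        count = sum(1 for x in diameters_km if x >= d)
        result.append((d, crater_density(count, area_km2)))
    return result


# Hypothetical crater diameters (km) and counting area (km^2).
diameters = [0.5, 1.0, 1.0, 2.7]
area = 100.0
csfd = cumulative_sfd(diameters, area)
```

Plotting such curves for several counts of the same area on log-log axes gives a cumulative plot of the kind used to compare manual, catalogue and automatic detections.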

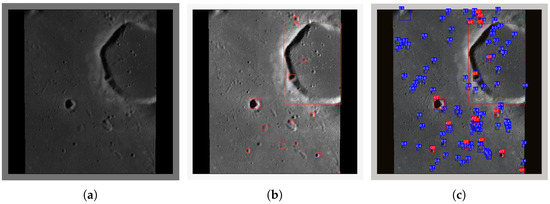

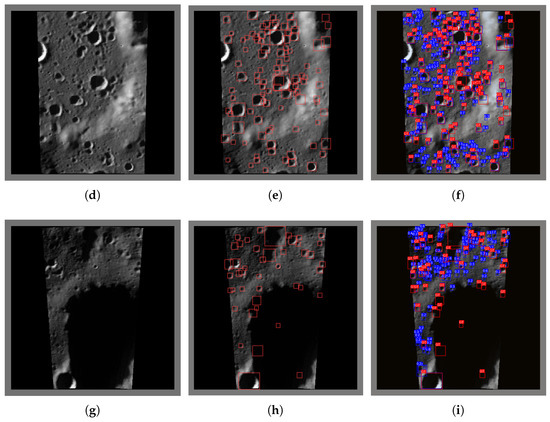

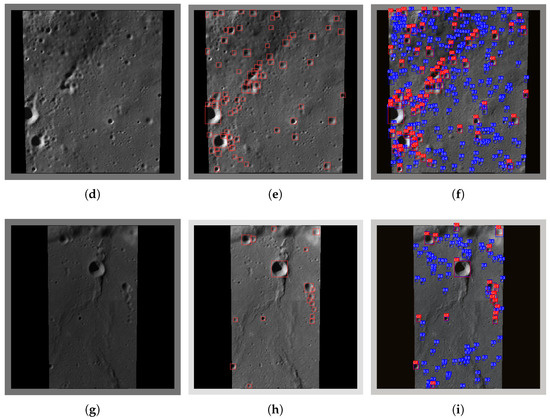

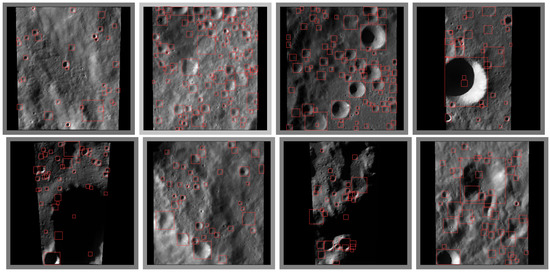

In Figure A2 and Figure A3, we show six random tiles extracted from the Lunar Highlands; more precisely, they are random far-side locations with longitude in [90, 180] and latitude in [−90, −25]. In both figures, the first column shows the original source images with the associated lunar site location, the second column the same images with the Robbins labels used as GT, and the last column the YOLOLens5x predictions (blue) together with the Robbins labels (red). Some Robbins labels fall in regions that are not visible in the image, because WAC, LOLA and DTM data were used to obtain more information for the crater detection task.

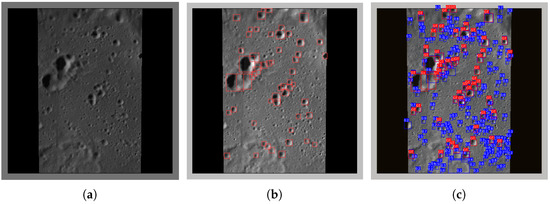

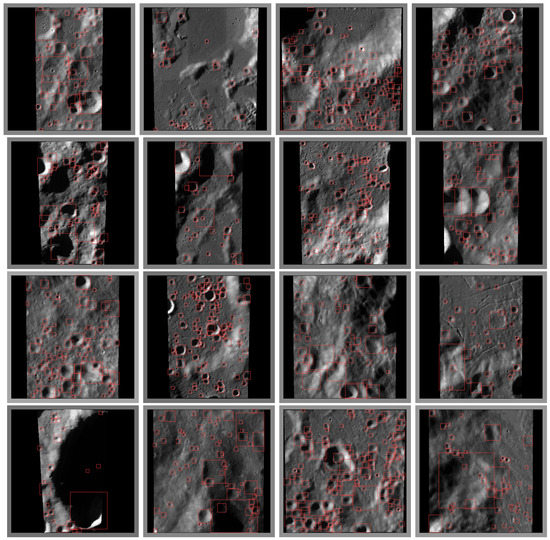

In Figure A4, we show several random far-side samples with the corresponding Robbins labels (red bounding boxes). We highlight the different degrees of dilatation (black background), which are due to the near-polar location of the tiles. Labels are cut off where they exceed the tile border, and no other manipulation of the data source is performed.
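Cutting labels at the tile border can be sketched as a simple clipping step. The function name, the choice to drop boxes that fall entirely outside the tile, and the 416 px tile size in the example are assumptions made for illustration.

```python
def clip_box(box, width, height):
    """Clip an (x1, y1, x2, y2) box to the tile; return None if nothing remains."""
    x1, y1, x2, y2 = box
    cx1, cy1 = max(0, x1), max(0, y1)
    cx2, cy2 = min(width, x2), min(height, y2)
    if cx2 <= cx1 or cy2 <= cy1:
        return None  # the label lies completely outside the tile
    return (cx1, cy1, cx2, cy2)


# Hypothetical labels on a 416 x 416 px tile.
partly_left = clip_box((-10, 20, 30, 60), 416, 416)
partly_corner = clip_box((400, 400, 450, 450), 416, 416)
outside = clip_box((500, 500, 520, 520), 416, 416)
```

A clipped box no longer encloses the full crater rim, so its width and height should not be read as a diameter.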

Figure A1.

Subfigures (a–c) show the Apollo 12 landing site, the manual crater count and the YOLOLens crater detections (Robbins labels in blue and YOLOLens detections in red). Subfigure (d) shows the cumulative size-frequency distribution of the craters counted [47].

Figure A2.

Subfigures (a,d,g) show three lunar site locations from the LRO Camera. Subfigures (b,e,h) show the Robbins labels over the corresponding source images. Subfigures (c,f,i) show the YOLOLens5x predictions on the same images, compared with the Robbins labels (Ground-Truth). (a) Lunar site location, coords min/max Lon/Lat (176.2042, 177.5761), (−37.6372, −36.2653); (b) Robbins labels (GT); (c) YOLOLens5x predictions; (d) Lunar site location, coords min/max Lon/Lat (155.6985, 158.4423), (−71.4069, −70.0350); (e) Robbins labels (GT); (f) YOLOLens5x predictions; (g) Lunar site location, coords min/max Lon/Lat (142.9921, 151.2234), (−85.3929, −84.0210); (h) Robbins labels (GT); (i) YOLOLens5x predictions.

Figure A3.

Subfigures (a,d,g) show three lunar site locations from the LRO Camera. Subfigures (b,e,h) show the Robbins labels over the corresponding source images. Subfigures (c,f,i) show the YOLOLens5x predictions on the same images, compared with the Robbins labels (Ground-Truth). (a) Lunar site location, coords min/max Lon/Lat (127.1660, 128.5379), (−51.6463, −50.2744); (b) Robbins labels (GT); (c) YOLOLens5x predictions; (d) Lunar site location, coords min/max Lon/Lat (110.3902, 113.1340), (−65.7841, −64.4122); (e) Robbins labels (GT); (f) YOLOLens5x predictions; (g) Lunar site location, coords min/max Lon/Lat (99.4778, 100.8497), (−57.7407, −56.3688); (h) Robbins labels (GT); (i) YOLOLens5x predictions.

Figure A4.

Robbins crater label samples used as supervised signals (Ground-Truth) during the training step.

References

1. Strom, R.G.; Malhotra, R.; Ito, T.; Yoshida, F.; Kring, D.A. The origin of planetary impactors in the inner solar system. Science 2005, 309, 1847–1850.

2. Fassett, C.I.; Minton, D.A. Impact bombardment of the terrestrial planets and the early history of the Solar System. Nat. Geosci. 2013, 6, 520–524.

3. Hartmann, W.K. Martian cratering 8: Isochron refinement and the chronology of Mars. Icarus 2005, 174, 294–320.

4. Hartmann, W. Discovery of multi-ring basins-Gestalt perception in planetary science. In Proceedings of the Multi-Ring Basins: Formation and Evolution, Houston, TX, USA, 10–12 November 1980; Pergamon Press: Oxford, UK, 1981; pp. 79–90.

5. Neukum, G.; König, B.; Arkani-Hamed, J. A study of lunar impact crater size-distributions. Moon 1975, 12, 201–229.

6. Neukum, G.; Ivanov, B.A. Crater size distributions and impact probabilities on earth from lunar, terrestrial-planet, and asteroid cratering data. In Hazards Due to Comets and Asteroids; University of Arizona Press: Tucson, AZ, USA, 1994; pp. 359–416.

7. Neukum, G.; Ivanov, B.A.; Hartmann, W.K. Cratering records in the inner solar system in relation to the lunar reference system. In Chronology and Evolution of Mars; Springer: Berlin/Heidelberg, Germany, 2001; pp. 55–86.

8. Hartmann, W.K. Relative crater production rates on planets. Icarus 1977, 31, 260–276.

9. Marchi, S.; Mottola, S.; Cremonese, G.; Massironi, M.; Martellato, E. A new chronology for the Moon and Mercury. Astron. J. 2009, 137, 4936.

10. Le Feuvre, M.; Wieczorek, M.A. Nonuniform cratering of the Moon and a revised crater chronology of the inner Solar System. Icarus 2011, 214, 1–20.

11. Stoffler, D.; Ryder, G.; Ivanov, B.A.; Artemieva, N.A.; Cintala, M.J.; Grieve, R.A. Cratering history and lunar chronology. Rev. Mineral. Geochem. Mineral. Soc. Am. 2006, 60, 519–596.

12. Hiesinger, H.; Head III, J.W. New views of lunar geoscience: An introduction and overview. Rev. Mineral. Geochem. 2006, 60, 1–81.

13. Hiesinger, H.; Jaumann, R.; Neukum, G.; Head III, J.W. Ages of mare basalts on the lunar nearside. J. Geophys. Res. Planets 2000, 105, 29239–29275.

14. Hiesinger, H.; Head, J.; Wolf, U.; Jaumann, R.; Neukum, G. Ages and stratigraphy of lunar mare basalts: A synthesis. Recent Adv. Curr. Res. Issues Lunar Stratigr. 2011, 477, 1–51.

15. Benedix, G.; Lagain, A.; Chai, K.; Meka, S.; Anderson, S.; Norman, C.; Bland, P.; Paxman, J.; Towner, M.; Tan, T. Deriving surface ages on Mars using automated crater counting. Earth Space Sci. 2020, 7, e2019EA001005.

16. Williams, J.P.; van der Bogert, C.H.; Pathare, A.V.; Michael, G.G.; Kirchoff, M.R.; Hiesinger, H. Dating very young planetary surfaces from crater statistics: A review of issues and challenges. Meteorit. Planet. Sci. 2018, 53, 554–582.

17. Gunn, M.; Cousins, C.R. Mars surface context cameras past, present, and future. Earth Space Sci. 2016, 3, 144–162.

18. Hu, T.; Yang, Z.; Kang, Z.; Lin, H.; Zhong, J.; Zhang, D.; Cao, Y.; Geng, H. Population of Degrading Small Impact Craters in the Chang’E-4 Landing Area Using Descent and Ground Images. Remote Sens. 2022, 14, 3608.

19. Martellato, E.; Vivaldi, V.; Massironi, M.; Cremonese, G.; Marzari, F.; Ninfo, A.; Haruyama, J. Is the Linné impact crater morphology influenced by the rheological layering on the Moon’s surface? Insights from numerical modeling. Meteorit. Planet. Sci. 2017, 52, 1388–1411.

20. Michael, G. Planetary surface dating from crater size–frequency distribution measurements: Multiple resurfacing episodes and differential isochron fitting. Icarus 2013, 226, 885–890.

21. Neukum, G.; Horn, P. Effects of lava flows on lunar crater populations. Moon 1976, 15, 205–222.

22. Prieur, N.C.; Rolf, T.; Wünnemann, K.; Werner, S.C. Formation of simple impact craters in layered targets: Implications for lunar crater morphology and regolith thickness. J. Geophys. Res. Planets 2018, 123, 1555–1578.

23. Robbins, S.J.; Antonenko, I.; Kirchoff, M.R.; Chapman, C.R.; Fassett, C.I.; Herrick, R.R.; Singer, K.; Zanetti, M.; Lehan, C.; Huang, D.; et al. The variability of crater identification among expert and community crater analysts. Icarus 2014, 234, 109–131.

24. Athanassas, C.; Vaiopoulos, A.; Kolokoussis, P.; Argialas, D. Remote Sensing of Mars: Detection of Impact Craters on the Mars Global Surveyor DTM by Integrating Edge-and Region-Based Algorithms. Earth Moon Planets 2018, 121, 59–72.

25. Bue, B.D.; Stepinski, T.F. Machine detection of Martian impact craters from digital topography data. IEEE Trans. Geosci. Remote Sens. 2006, 45, 265–274.

26. Christoff, N.; Jorda, L.; Viseur, S.; Bouley, S.; Manolova, A.; Mari, J.L. Automated extraction of crater rims on 3D meshes combining artificial neural network and discrete curvature labeling. Earth Moon Planets 2020, 124, 51–72.

27. Di, K.; Li, W.; Yue, Z.; Sun, Y.; Liu, Y. A machine learning approach to crater detection from topographic data. Adv. Space Res. 2014, 54, 2419–2429.

28. Fairweather, J.; Lagain, A.; Servis, K.; Benedix, G.; Kumar, S.; Bland, P. Automatic Mapping of Small Lunar Impact Craters Using LRO-NAC Images. Earth Space Sci. 2022, 9, e2021EA002177.

29. Hu, Y.; Xiao, J.; Liu, L.; Zhang, L.; Wang, Y. Detection of Small Impact Craters via Semantic Segmenting Lunar Point Clouds Using Deep Learning Network. Remote Sens. 2021, 13, 1826.

30. Kang, Z.; Wang, X.; Hu, T.; Yang, J. Coarse-to-fine extraction of small-scale lunar impact craters from the CCD images of the Chang’E lunar orbiters. IEEE Trans. Geosci. Remote Sens. 2018, 57, 181–193.

31. Kim, J.R.; Muller, J.P.; van Gasselt, S.; Morley, J.G.; Neukum, G. Automated crater detection, a new tool for Mars cartography and chronology. Photogramm. Eng. Remote Sens. 2005, 71, 1205–1217.

32. Lee, C. Automated crater detection on Mars using deep learning. Planet Space Sci. 2019, 170, 16–28.

33. Liu, D.; Chen, M.; Qian, K.; Lei, M.; Zhou, Y. Boundary detection of dispersal impact craters based on morphological characteristics using lunar digital elevation model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 5632–5646.

34. Michael, G. Coordinate registration by automated crater recognition. Planet Space Sci. 2003, 51, 563–568.

35. Sawabe, Y.; Matsunaga, T.; Rokugawa, S. Automated detection and classification of lunar craters using multiple approaches. Adv. Space Res. 2006, 37, 21–27.

36. Silburt, A.; Ali-Dib, M.; Zhu, C.; Jackson, A.; Valencia, D.; Kissin, Y.; Tamayo, D.; Menou, K. Lunar crater identification via deep learning. Icarus 2019, 317, 27–38.

37. Tewari, A.; Verma, V.; Srivastava, P.; Jain, V.; Khanna, N. Automated crater detection from co-registered optical images, elevation maps and slope maps using deep learning. Planet Space Sci. 2022, 218, 105500.

38. Zhou, Y.; Zhao, H.; Chen, M.; Tu, J.; Yan, L. Automatic detection of lunar craters based on DEM data with the terrain analysis method. Planet Space Sci. 2018, 160, 1–11.

39. Vijayan, S.; Vani, K.; Sanjeevi, S. Crater detection, classification and contextual information extraction in lunar images using a novel algorithm. Icarus 2013, 226, 798–815.

40. Fan, L.; Yuan, J.; Zha, K.; Wang, X. ELCD: Efficient Lunar Crater Detection Based on Attention Mechanisms and Multiscale Feature Fusion Networks from Digital Elevation Models. Remote Sens. 2022, 14, 5225.

41. Lin, X.; Zhu, Z.; Yu, X.; Ji, X.; Luo, T.; Xi, X.; Zhu, M.; Liang, Y. Lunar Crater Detection on Digital Elevation Model: A Complete Workflow Using Deep Learning and Its Application. Remote Sens. 2022, 14, 621.

42. Wang, Y.; Wu, B.; Xue, H.; Li, X.; Ma, J. An Improved Global Catalog of Lunar Impact Craters (≥1 km) with 3D Morphometric Information and Updates on Global Crater Analysis. J. Geophys. Res. Planets 2021, 126, e2020JE006728.

43. Shermeyer, J.; Van Etten, A. The effects of super-resolution on object detection performance in satellite imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

44. La Grassa, R.; Gallo, I.; Re, C.; Cremonese, G.; Landro, N.; Pernechele, C.; Simioni, E.; Gatti, M. An Adversarial Generative Network Designed for High-Resolution Monocular Depth Estimation from 2D HiRISE Images of Mars. Remote Sens. 2022, 14, 4619.

45. Head III, J.W.; Fassett, C.I.; Kadish, S.J.; Smith, D.E.; Zuber, M.T.; Neumann, G.A.; Mazarico, E. Global distribution of large lunar craters: Implications for resurfacing and impactor populations. Science 2010, 329, 1504–1507.

46. Povilaitis, R.; Robinson, M.; Van der Bogert, C.; Hiesinger, H.; Meyer, H.; Ostrach, L. Crater density differences: Exploring regional resurfacing, secondary crater populations, and crater saturation equilibrium on the moon. Planet Space Sci. 2018, 162, 41–51.

47. Robbins, S.J. A new global database of lunar impact craters >1–2 km: 1. Crater locations and sizes, comparisons with published databases, and global analysis. J. Geophys. Res. Planets 2019, 124, 871–892.

48. Salamunićcar, G.; Lončarić, S.; Grumpe, A.; Wöhler, C. Hybrid method for crater detection based on topography reconstruction from optical images and the new LU78287GT catalogue of Lunar impact craters. Adv. Space Res. 2014, 53, 1783–1797.

49. Wetzler, P.G.; Honda, R.; Enke, B.; Merline, W.J.; Chapman, C.R.; Burl, M.C. Learning to detect small impact craters. In Proceedings of the 2005 Seventh IEEE Workshops on Applications of Computer Vision (WACV/MOTION’05), Breckenridge, CO, USA, 5–7 January 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 178–184.

50. Lee, C.; Hogan, J. Automated crater detection with human level performance. Comput. Geosci. 2021, 147, 104645.

51. Cadogan, P.H. Automated precision counting of very small craters at lunar landing sites. Icarus 2020, 348, 113822.

52. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893.

53. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

54. La Grassa, R.; Gallo, I.; Landro, N. Dynamic decision boundary for one-class classifiers applied to non-uniformly sampled data. In Proceedings of the 2020 Digital Image Computing: Techniques and Applications (DICTA), Melbourne, Australia, 29 November–2 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–7.

55. La Grassa, R.; Gallo, I.; Calefati, A.; Ognibene, D. Binary classification using pairs of minimum spanning trees or n-ary trees. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Salerno, Italy, 3–5 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 365–376.

56. Gallo, I.; La Grassa, R.; Landro, N.; Boschetti, M. Sentinel 2 Time Series Analysis with 3D Feature Pyramid Network and Time Domain Class Activation Intervals for Crop Mapping. ISPRS Int. J.-Geo-Inf. 2021, 10, 483.

57. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

58. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

59. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

60. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28. Available online: https://proceedings.neurips.cc/paper/2015/hash/14bfa6bb14875e45bba028a21ed38046-Abstract.html (accessed on 14 February 2023).

61. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

62. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

63. Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481.

64. Speyerer, E.; Robinson, M.; Denevi, B.; LROC Science Team. Lunar Reconnaissance Orbiter Camera global morphological map of the Moon. In Proceedings of the 42nd Annual Lunar and Planetary Science Conference, The Woodlands, TX, USA, 7–11 March 2011; number 1608. p. 2387.

65. Robinson, M.; Brylow, S.; Tschimmel, M.; Humm, D.; Lawrence, S.; Thomas, P.; Denevi, B.; Bowman-Cisneros, E.; Zerr, J.; Ravine, M.; et al. Lunar reconnaissance orbiter camera (LROC) instrument overview. Space Sci. Rev. 2010, 150, 81–124.

66. Wagner, R.; Speyerer, E.; Robinson, M.; LROC Team. New mosaicked data products from the LROC team. In Proceedings of the 46th Annual Lunar and Planetary Science Conference, The Woodlands, TX, USA, 16–20 March 2015; number 1832. p. 1473.

67. Sato, H.; Robinson, M.; Hapke, B.; Denevi, B.; Boyd, A. Resolved Hapke parameter maps of the Moon. J. Geophys. Res. Planets 2014, 119, 1775–1805.

68. Robbins, S.J.; Riggs, J.D.; Weaver, B.P.; Bierhaus, E.B.; Chapman, C.R.; Kirchoff, M.R.; Singer, K.N.; Gaddis, L.R. Revised recommended methods for analyzing crater size-frequency distributions. Meteorit. Planet. Sci. 2018, 53, 891–931.

69. Barker, M.; Mazarico, E.; Neumann, G.; Zuber, M.; Haruyama, J.; Smith, D. A new lunar digital elevation model from the Lunar Orbiter Laser Altimeter and SELENE Terrain Camera. Icarus 2016, 273, 346–355.

70. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

71. Wang, S.; Fan, Z.; Li, Z.; Zhang, H.; Wei, C. An effective lunar crater recognition algorithm based on convolutional neural network. Remote Sens. 2020, 12, 2694.

72. Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 182–186.

73. Orsic, M.; Kreso, I.; Bevandic, P.; Segvic, S. In defense of pre-trained imagenet architectures for real-time semantic segmentation of road-driving images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12607–12616.

74. Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

75. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

76. Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. Foveabox: Beyound anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

77. Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 15–20 June 2019; pp. 9627–9636.

78. Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. Reppoints: Point set representation for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 15–20 June 2019; pp. 9657–9666.

79. Iqbal, W.; Hiesinger, H.; van der Bogert, C.H. Geological mapping and chronology of lunar landing sites: Apollo 12. Icarus 2020, 352, 113991.

80. Robbins, S.J. New crater calibrations for the lunar crater-age chronology. Earth Planet. Sci. Lett. 2014, 403, 188–198.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).