Abstract

Vision-based modal analysis has gained popularity in structural health monitoring owing to significant advances in optics and computer science. In long-term monitoring, structures are subjected to ambient excitation, so their vibration amplitudes are quite small. Hence, although natural frequencies can usually be identified from the displacements extracted by vision-based techniques, it remains difficult to evaluate the corresponding mode shapes accurately because of limited resolution. In this study, a novel signal reconstruction algorithm is proposed to reconstruct the dynamic response extracted by the vision-based approach, so that the mode shapes of structures with low-amplitude vibration under environmental excitation can be identified. An experimental test on a cantilever beam shows that, with the proposed signal reconstruction algorithm, the first two mode shapes can be accurately identified even if the vibration amplitude is as low as 0.01 mm, whereas without it they can only be identified when the vibration amplitude is at least 0.06 mm. The proposed algorithm also performs well with various camera settings, indicating great potential for vision-based structural health monitoring.

1. Introduction

Structural health monitoring (SHM) provides structural assessment and ensures operational safety of engineering structures [1,2]. The vision-based approach has gained great popularity since it is a non-contact, long-distance, and multi-point sensing tool.

The vision-based approach, also known as photogrammetry, is a measurement methodology that uses photographs or digital images to extract the geometry, displacement, and deformation of a structure. Considerable effort has been made to identify quantitative indicators of structural condition from vision-based data, including structural deformation measurement [3], strain/stress monitoring [4], and dynamic response monitoring [5]. Dynamic response measurement and the identification of structural modal properties are the most extensively used in SHM [6,7]. Modal properties generally include natural frequency, mode shape, and damping ratio, which can be extracted by analyzing the vibration signals of the structure. For an undamped system, the squared natural circular frequencies and the mode shapes are the eigenvalues and the corresponding eigenvectors of the product of the inverse of the mass matrix and the stiffness matrix of the structure. The nth mode shape is the deformed shape of the structure as it vibrates in the nth mode. Compared to natural frequencies, mode shapes are more sensitive to local damage, especially at an early stage.

Three types of photogrammetry technique have been developed: template matching approaches, optical-flow-based approaches, and phase-based approaches. The template matching method [8] is usually used to track the movement of a target in a sequence of video images, typically in conjunction with subpixel techniques [9]. Optical flow theory [10] explains the relationship between image intensity and the motion of a structure. It is suitable for cases in which there are no artificial targets, and can result in full-field mode shapes. The phase-based approach [11] is used to rapidly recover the displacements from the phase space of the images. Compared to the optical-flow-based method, the phase-based approach is frequently more resilient to noise and disturbances.

In practice, a structure under long-term monitoring is usually subjected to ambient excitation only, so its vibration is slight [12]. Conventionally, to measure the small-amplitude dynamic response caused by environmental excitation, accelerometers are mounted on the structure to record its acceleration response, and then approaches such as the combination of the natural excitation technique (NExT) and the eigensystem realization algorithm (ERA), or the stochastic subspace identification technique (SSI), are used to determine the structure’s modal parameters. However, the accelerometers must be installed on the structure ahead of time, which is costly and inconvenient if sensor installation is difficult. For a vision-based approach, which does not require installation of sensors, the natural frequencies of the structure can be approximately evaluated by Fourier transform, but it is difficult to quantify the mode shapes, since the dynamic responses extracted by vision-based techniques usually have insufficient accuracy. As a result, the critical challenge in vision-based mode shape identification of structures subject to ambient excitation is to improve noise resistance and robustness for low-level vibration signals. Motion magnification has been proposed recently [13] to increase the signal-to-noise ratio (SNR) in low-amplitude vibrations. However, motion magnification (video magnification), which preprocesses the source footage, requires a significant amount of storage space. A hybrid identification method combining a high-speed camera with accelerometers was proposed [14] to increase the estimation accuracy of mode shapes [15]. Nevertheless, this approach loses its generality if the structure is not suitable for mounting accelerometers.

This paper presents a novel signal processing algorithm for the vision-based method to identify mode shapes of structures with small-amplitude vibration. Lab-scale experimental tests on a cantilever beam showed that, with the proposed signal processing algorithm, the first two mode shapes can be accurately extracted even if the displacement amplitude is as low as 0.01 mm (0.06 pixel), whereas without the proposed signal reconstruction they can only be identified if the displacement amplitude is higher than 0.06 mm (0.3 pixel). The proposed algorithm also performed well for a variety of focal lengths and object distances. Compared to motion magnification methods, the suggested approach requires a shorter operation time and less storage space, because it processes the extracted displacement directly rather than applying magnification to the source video. The framework of the vision-based dynamic response extraction and modal identification method is summarized as follows. First, the template matching method, in conjunction with subpixel methodologies, is used to extract the displacements at different points on the targeted structure. Then the proposed signal processing algorithm is adopted to reconstruct the displacement and acceleration. Finally, the mode shapes of the targeted structure are evaluated by the NExT-ERA approach.

2. Theoretical Background

2.1. Dynamic Response Extraction Based on Template Matching at the Subpixel Level

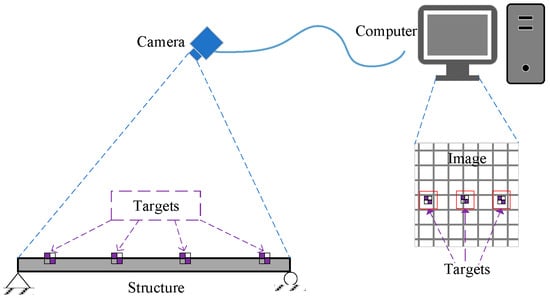

Figure 1 illustrates vision-based displacement measurement. Targets are pre-positioned on the structure, and the position of each target in the source image is determined by template matching, which yields the displacement time history of each target.

Figure 1.

Illustration of vision-based displacement measurement.

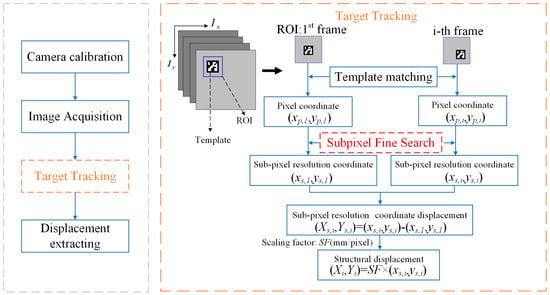

In order to improve the resolution of the displacement, the sub-pixel technique is combined with the template matching method to realize dynamic response extraction based on template matching at the subpixel level. Figure 2 summarizes the procedure of template matching-based dynamic response extraction at the sub-pixel level. Camera calibration should be conducted before measuring to determine the scaling factor. Then image acquisition begins and target tracking can be conducted subsequently. The template image is compared to the region of interest (ROI) in the source image by shifting the template image pixel by pixel to calculate the similarity. The sub-pixel technique is selected in this study to improve the resolution. First, the frequency domain cross-correlation is computed to produce a pixel-level template matching point. Consider two images I(x,y) and T(x,y) with identical dimensions of M × N; the Fourier transform can be applied to the cross-correlation between I(x,y) and T(x,y) as:

$$R_{\mathrm{corr}}(\Delta x,\Delta y)=\sum_{x,y} I(x,y)\,T^{*}(x-\Delta x,\,y-\Delta y)=\sum_{u,v} P(u,v)\,Q^{*}(u,v)\exp\!\left[i2\pi\left(\frac{u\,\Delta x}{M}+\frac{v\,\Delta y}{N}\right)\right]$$

where Δx and Δy are the coordinate shifts in the x- and y-directions, P(u,v) and Q(u,v) are the discrete Fourier transforms (DFT) of the images I(x,y) and T(x,y), respectively, and * denotes complex conjugation. Hence, the pixel-level coordinates (xp,yp) of the template in the source image can be easily estimated by searching for the peak of Rcorr. Second, the cross-correlation in a small neighborhood around (xp,yp) is computed by matrix-multiply DFT. Then the sub-pixel coordinates (xsp,ysp) of the template in the source image can be obtained by searching for the greatest value of the cross-correlation. It should be noted that the sub-pixel resolution can be 1/κ of a pixel if the peak search is in a (1.5κ,1.5κ) neighborhood. Numerous investigations have revealed that sub-pixel resolution can range from 0.5 to 0.01 pixels. At this stage, only the original displacement of the structure is obtained, which generally contains noise and has low accuracy, especially for higher frequency components, which are more vulnerable to noise. It is therefore necessary to apply the signal reconstruction method proposed in this paper to the originally measured displacement and then compute the acceleration.
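The two-stage search described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names `subpixel_shift` and `gaussian_blob`, the synthetic Gaussian target, and the choice κ = 20 are assumptions made for the example, and the source and template are taken to have identical size, as in the text.

```python
import numpy as np

def subpixel_shift(source, template, kappa=20):
    """Estimate the (row, col) shift of `source` relative to `template`.

    Stage 1: pixel-level peak of the FFT-based cross-correlation.
    Stage 2: matrix-multiply DFT on a 1.5-pixel neighborhood, sampled
             at 1/kappa pixel, to refine the peak.
    """
    P = np.fft.fft2(source)
    Q = np.fft.fft2(template)
    cross_power = P * np.conj(Q)

    # Stage 1: integer-pixel peak; indices above half the size wrap to negative shifts.
    r = np.fft.ifft2(cross_power)
    M, N = r.shape
    py, px = np.unravel_index(np.argmax(np.abs(r)), r.shape)
    yp = py if py <= M // 2 else py - M
    xp = px if px <= N // 2 else px - N

    # Stage 2: evaluate the correlation only at fractional shifts near (yp, xp).
    half = int(np.ceil(0.75 * kappa))            # +/- 0.75 pixel around the peak
    offsets = np.arange(-half, half + 1) / kappa
    dy, dx = yp + offsets, xp + offsets
    Ey = np.exp(2j * np.pi * np.outer(dy, np.fft.fftfreq(M)))   # (n_dy, M)
    Ex = np.exp(2j * np.pi * np.outer(np.fft.fftfreq(N), dx))   # (N, n_dx)
    r_up = Ey @ cross_power @ Ex
    iy, ix = np.unravel_index(np.argmax(np.abs(r_up)), r_up.shape)
    return dy[iy], dx[ix]

def gaussian_blob(shape, center, sigma=4.0):
    """Synthetic target (illustrative only): a smooth Gaussian spot."""
    y, x = np.indices(shape)
    return np.exp(-((y - center[0]) ** 2 + (x - center[1]) ** 2) / (2 * sigma ** 2))

# Hypothetical example: the target moves by (+2.35, -1.60) pixels.
template = gaussian_blob((64, 64), (32.0, 32.0))
source = gaussian_blob((64, 64), (34.35, 30.40))
shift_y, shift_x = subpixel_shift(source, template, kappa=20)
```

Only the small neighborhood is evaluated at the fine spacing, so the cost of the refinement grows with κ times the image size rather than with the size of a fully upsampled image.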

Figure 2.

Flowchart of template matching-based dynamic response extraction at sub-pixel level.

2.2. Output-Only Modal Parameter Identification of Structures under Environmental Excitation

ERA is frequently used to identify the modal parameters of a structure. The essence of ERA is to identify the modal parameters from the free vibration signal of the structure. In the time domain, the discrete dynamic response of a linear, time-invariant system with n DoFs can be represented as:

$$\mathbf{x}(k+1)=\mathbf{A}_d\,\mathbf{x}(k)+\mathbf{B}_d\,\mathbf{u}(k),\qquad \mathbf{y}(k)=\mathbf{C}_d\,\mathbf{x}(k)+\mathbf{D}_d\,\mathbf{u}(k)$$

where k is the kth time step; $\mathbf{A}_d \in \mathbb{R}^{2n\times 2n}$, $\mathbf{B}_d \in \mathbb{R}^{2n\times l}$, $\mathbf{C}_d \in \mathbb{R}^{m\times 2n}$, and $\mathbf{D}_d \in \mathbb{R}^{m\times l}$ are the state, input, output, and feed-through matrices, respectively; $\mathbf{x}(k) \in \mathbb{R}^{2n\times 1}$, $\mathbf{y}(k) \in \mathbb{R}^{m\times 1}$, and $\mathbf{u}(k) \in \mathbb{R}^{l\times 1}$ are the state, measurement, and excitation vectors, respectively; l and m denote the numbers of inputs and outputs; and 2n is the order of the state matrix. When the measurement vector y(k) is the impulse response, the following Hankel matrix is formed as:

Then the comparable matrix Âd of Ad can be derived as:

where Vk−1, Uk−1,and Γk−1 fulfill the Hankel matrix’s singular value decomposition (SVD):

Then the eigenvalue decomposition of Âd is used to compute the frequencies as

where

is the eigenvalue matrix of Âd. The following equation can be used to obtain the mth natural frequency of the structure, fm:

where fs is the sampling frequency. Finally, the mode shapes can be obtained as:

It should be noted that the free-vibration response of a structure subject to an impulse is used to generate the Hankel matrix. Hence, the response must be converted to an impulse response if the structure is subjected to ambient excitation. NExT is commonly applied before ERA in this situation, because it converts the dynamic response of a structure under ambient excitation into an equivalent impulse response. NExT-ERA is a conventional modal identification technique, and it has been used in this study for mode shape identification with three inputs: the original displacement extracted by the template matching method, the acceleration computed directly from it, and the acceleration reconstructed by the proposed signal reconstruction method.
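The ERA steps outlined in this section (Hankel matrix, SVD, realized state matrix, eigenvalue decomposition, frequencies, and mode shapes) can be sketched as follows. This is a textbook-style sketch under stated assumptions, not the authors' code: the function name `era`, the Hankel block sizes, and the synthetic two-channel free decay are hypothetical, and the input is assumed to be already a free-decay or impulse-type response (for ambient data, the correlation functions produced by NExT would take its place).

```python
import numpy as np

def era(free_decay, order, fs, rows=20, cols=60):
    """Eigensystem realization from free-decay data of shape (n_samples, n_channels).

    Returns natural frequencies in Hz (ascending) and the matching mode shapes.
    """
    y = np.asarray(free_decay, dtype=float)
    m = y.shape[1]

    def hankel(shift):
        # Block Hankel matrix whose (i, j) block is the sample y[i + j + shift].
        return np.block([[y[i + j + shift][:, None] for j in range(cols)]
                         for i in range(rows)])

    H0, H1 = hankel(0), hankel(1)
    U, s, Vt = np.linalg.svd(H0, full_matrices=False)
    U, s, Vt = U[:, :order], s[:order], Vt[:order]        # keep `order` singular values

    s_inv_sqrt = np.diag(1.0 / np.sqrt(s))
    A = s_inv_sqrt @ U.T @ H1 @ Vt.T @ s_inv_sqrt         # realized state matrix
    C = U[:m] @ np.diag(np.sqrt(s))                       # realized output matrix

    lam, psi = np.linalg.eig(A)
    freqs = np.abs(np.log(lam)) * fs / (2.0 * np.pi)      # natural frequencies in Hz
    shapes = C @ psi                                      # mode shapes at the outputs

    keep = lam.imag > 0                                   # one of each conjugate pair
    idx = np.argsort(freqs[keep])
    return freqs[keep][idx], shapes[:, keep][:, idx]

# Hypothetical two-mode, two-channel free decay sampled at 50 Hz.
fs = 50.0
t = np.arange(500) / fs
modes = [(1.44, np.array([1.0, 0.5])), (9.39, np.array([1.0, -0.8]))]
zeta = 0.01
signal = np.zeros((t.size, 2))
for f_n, phi in modes:
    w_n = 2.0 * np.pi * f_n
    w_d = w_n * np.sqrt(1.0 - zeta ** 2)
    signal += np.outer(np.exp(-zeta * w_n * t) * np.cos(w_d * t), phi)

freqs, shapes = era(signal, order=4, fs=fs)
```

The model order is twice the number of modes expected in the data, since each vibration mode contributes a complex-conjugate pair of eigenvalues.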

3. Proposed Signal Reconstruction Method

Conventionally, it is usually difficult to use the NExT-ERA method to identify mode shapes by using the original displacements at different points extracted by the vision-based template matching method. One reason is that the resolution of extracted displacement from the images is quite limited, so the displacement is not accurate enough to reconstruct the mode shapes by the existing modal analysis methods. The sub-pixel technique was proposed as a solution to improve the displacement resolution, which has been briefly introduced above; however, the extracted dynamic displacement resolution is still insufficient for low-amplitude vibration due to ambient excitation. The measurement noise and system noise also contaminate the images, which further increases the difficulty of reconstructing mode shapes. The other reason is that higher frequency components have much smaller amplitudes in displacement than lower frequency components, which makes extracting higher order mode shapes from displacement more difficult. Therefore, it is necessary to pre-process the original displacement to extract the mode shapes.

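For an n-DOF system, the equation of motion can be expressed as:

$$\mathbf{M}\,\ddot{\mathbf{y}}(t)+\mathbf{C}\,\dot{\mathbf{y}}(t)+\mathbf{K}\,\mathbf{y}(t)=\mathbf{f}(t)$$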

where f(t) is the applied excitation force vector; M, C, and K are the mass, damping, and stiffness matrices, respectively; and ÿ(t), ẏ(t), and y(t) are the acceleration, velocity, and displacement vectors, respectively. When applying the Fourier transform to both the acceleration ÿ(t) and the displacement y(t), one can obtain:

$$F\big(\ddot{\mathbf{y}}(t)\big)=-\omega^{2}\,F\big(\mathbf{y}(t)\big)$$

where F(·) is the Fourier transform and ω is the angular frequency. As shown above, the spectral amplitude of the acceleration is amplified by ω² compared to that of the displacement. As a consequence, acceleration is preferred over displacement when extracting mode shapes, especially for higher modes. In traditional modal testing, acceleration is measured directly by an accelerometer, but for the vision-based technique it can only be obtained by applying the central difference to the extracted displacement. Because of the insufficient resolution and inevitable noise, it is almost impossible to compute the acceleration with sufficient accuracy by applying the central difference directly to the extracted displacement.

Hence, a novel approach is proposed in this study, to reconstruct the displacement extracted by the vision-based method. Then, the acceleration can be generated by central difference, which can be further used to identify the mode shapes. Considering the extracted displacement at one single point, y(t), its Fourier transform can be represented as

A frequency domain operator is designed in this study as

where β is the scaling factor, ωm = 2πfm is the mth circular frequency of the structure, and Δω is the half band width. Hence, the frequency domain operator keeps the frequency components near the natural frequencies and significantly reduces other frequency components, which thus ensures that the frequency components near the natural frequencies are dominant. In this study, the scaling factor is set as 10 and the band width is selected as 10 times the frequency resolution. Subsequently, the time-history of displacement can be reconstructed as:

where ŷ(t) is the reconstructed displacement. Then, the corresponding acceleration can be obtained by applying the central difference to the reconstructed displacement as:

$$\hat{a}(t)=\frac{\hat{y}(t+\Delta t)-2\,\hat{y}(t)+\hat{y}(t-\Delta t)}{\Delta t^{2}}$$

where â(t) is the acceleration obtained from the reconstructed displacement, and Δt is the corresponding time interval. When applying the Fourier transform to â(t), one can obtain

from which it can be seen that in the reconstructed acceleration, the frequency components near the natural frequencies remain intact, while the other frequency components are suppressed. Hence, the noise in the original displacement can be efficiently reduced using this approach, allowing for further accurate identification of mode shapes.
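The reconstruction chain of this section (Fourier transform, frequency domain operator, inverse transform, central difference) can be sketched as below. The exact form of the operator is an assumption here: the sketch uses unit gain within ±Δω of each natural frequency and a gain of 1/β elsewhere, which is one simple operator that matches the description (components near the natural frequencies kept, others strongly reduced). The function name `reconstruct_response`, the parameter `half_band_bins` (set to 5 so that the full band is 10 times the frequency resolution), and the synthetic test signal are likewise hypothetical.

```python
import numpy as np

def reconstruct_response(y, fs, natural_freqs, beta=10.0, half_band_bins=5):
    """Reconstruct displacement and acceleration from a noisy displacement record.

    ASSUMED operator: gain 1 within +/- half_band_bins * df of each natural
    frequency, gain 1/beta everywhere else.
    """
    n = len(y)
    Y = np.fft.rfft(y)
    f = np.fft.rfftfreq(n, d=1.0 / fs)
    df = fs / n                                    # frequency resolution

    gain = np.full(f.shape, 1.0 / beta)
    for f_m in natural_freqs:
        gain[np.abs(f - f_m) <= half_band_bins * df] = 1.0

    y_hat = np.fft.irfft(gain * Y, n=n)            # reconstructed displacement

    dt = 1.0 / fs
    a_hat = np.zeros(n)                            # end points are left at zero
    a_hat[1:-1] = (y_hat[2:] - 2.0 * y_hat[1:-1] + y_hat[:-2]) / dt ** 2
    return y_hat, a_hat, gain

# Hypothetical record: two modal components plus white measurement noise.
rng = np.random.default_rng(0)
fs = 50.0
t = np.arange(3000) / fs
y = (0.010 * np.sin(2 * np.pi * 1.44 * t)
     + 0.002 * np.sin(2 * np.pi * 9.39 * t)
     + 0.005 * rng.standard_normal(t.size))

y_hat, a_hat, gain = reconstruct_response(y, fs, natural_freqs=[1.44, 9.39])
```

Because the operator is applied to the displacement spectrum before differencing, the broadband noise that the central difference would otherwise amplify by ω² is attenuated first.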

4. Experimental Study

4.1. Experimental Design and Test Setup

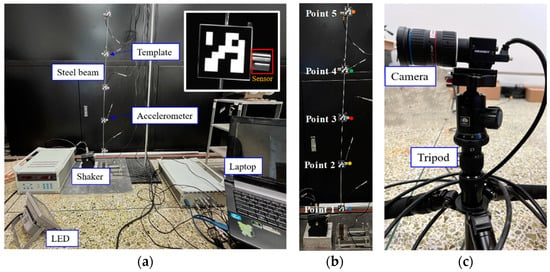

An experimental study was conducted to examine the performance of the proposed approach, in which a steel cantilever beam with dimensions of 2 mm × 20 mm × 1000 mm was used. The density of the beam was 7890 kg/m³, and the Young’s modulus was 2.1 × 10¹¹ N/m². In theory, the first two natural frequencies of the cantilever beam were 1.67 Hz and 10.42 Hz. Five targets and five accelerometers were attached to the beam at the same locations, so that the traditional modal analysis method based on accelerations could be used as a reference. The markers on the beam were numbered from bottom to top as Points 1 to 5. Point 1 is at the fixed end, which is a nodal point; therefore, there should be no response. Point 4 is near the nodal point of the second mode; hence, the high frequency component in its dynamic response is expected to be small compared to the low frequency component. The accelerometer (DH1A108E) employed in this experiment has a mass of 5.2 g. The video was recorded by the camera at 50 frames per second, and the resolution was 2048 × 2048. The sampling frequency of the acceleration was 50 Hz. Figure 3a shows the overall experimental setup, Figure 3b shows the detailed view of the specimen, and Figure 3c shows the enlarged side view of the camera and tripod.
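As a cross-check of the theoretical frequencies reported in this paper (1.67 Hz and 10.42 Hz), the Euler-Bernoulli formula for a uniform cantilever can be evaluated with the stated dimensions and material properties. The sketch assumes that the beam bends about its 2 mm thickness and carries no added mass; the eigenvalue constants 1.8751 and 4.6941 are the standard first two roots for a clamped-free beam, and the variable names are illustrative.

```python
import math

# Beam data from the test setup (SI units).
E = 2.1e11        # Young's modulus, N/m^2
rho = 7890.0      # density, kg/m^3
b = 0.020         # width, m
h = 0.002         # thickness, m (ASSUMED bending direction)
L = 1.0           # length, m

I = b * h ** 3 / 12.0     # second moment of area, m^4
A = b * h                 # cross-sectional area, m^2

# First two roots of the clamped-free frequency equation cos(x) * cosh(x) = -1.
beta_L = [1.8751, 4.6941]

# f_n = (beta_n L)^2 / (2 pi) * sqrt(E I / (rho A L^4))
theory_freqs = [(bl ** 2 / (2.0 * math.pi)) * math.sqrt(E * I / (rho * A * L ** 4))
                for bl in beta_L]

beam_mass = rho * A * L                  # kg
accelerometer_mass = 5 * 5.2e-3          # five sensors of 5.2 g each, kg
mass_ratio = accelerometer_mass / beam_mass
```

Under these assumptions the bare-beam frequencies come out at roughly 1.67 Hz and 10.4 Hz, and the five accelerometers add about 8% of the beam mass, which is not negligible for such a light specimen.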

Figure 3.

(a) View of overall experimental scene; (b) detailed view of the specimen; (c) enlarged side view of the camera and tripod.

Two series of tests were designed. For the first series, the focal length (f) of 35 mm and object distance (u) 160 cm were kept while the output voltage of random excitation increased from 300 mV to 1500 mV, which was designed to investigate the performance of the proposed method on reconstructing mode shapes of the structure with different-amplitude vibrations due to ambient excitation. For the second series, the output voltage of random excitation was kept at 1500 mV while the focal length varied from 12 mm to 35 mm and the object distance changed from 100 cm to 200 cm, the purpose of which was to check the performance of the proposed method with different camera settings.

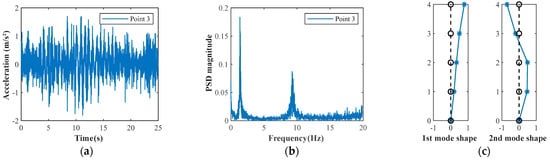

4.2. Modal Analysis by Acceleration Measurement

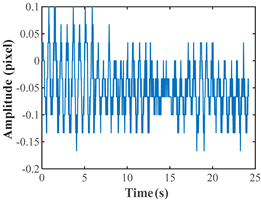

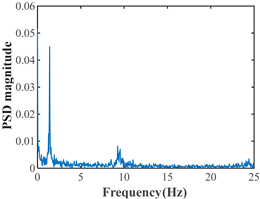

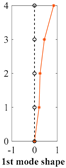

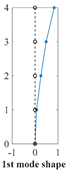

The modal analysis was first conducted using the acceleration measurement to generate a reference, since traditional modal analysis based on directly measured acceleration is well established. Figure 4a shows the time history of the acceleration at Point 3, and Figure 4b shows the corresponding spectrum, in which two peaks at 1.38 Hz and 9.42 Hz clearly indicate the first two natural frequencies. The first two mode shapes identified by NExT-ERA are shown in Figure 4c, where the circles indicate the vertical positions of the five measured points.

Figure 4.

(a) Time history and (b) spectrum of acceleration at Point 3; (c) the mode shapes extracted from acceleration.

4.3. Mode Shape Identification by the Proposed Method

In the case of f = 35 mm and u = 160 cm, the target had a length of 22 mm, corresponding to 118 pixels; therefore, the scaling factor was 0.1864 mm/pixel. Table 1 shows the displacements at Point 2 and the corresponding spectra for the first series of tests. As expected, the displacement amplitude increased as the excitation power increased.
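The scaling factor follows directly from the target size: dividing 22 mm by 118 pixels gives about 0.186 mm/pixel, which is consistent with the pixel equivalents quoted in the Introduction (0.01 mm ≈ 0.06 pixel and 0.06 mm ≈ 0.3 pixel). The short sketch below reproduces this arithmetic; the helper name `mm_to_px` is illustrative.

```python
# Camera calibration for f = 35 mm, u = 160 cm (values from the text).
target_length_mm = 22.0
target_length_px = 118.0

scale_mm_per_px = target_length_mm / target_length_px   # about 0.186 mm/pixel

def mm_to_px(displacement_mm):
    """Convert a physical displacement to its size in the image."""
    return displacement_mm / scale_mm_per_px

low_amplitude_px = mm_to_px(0.01)    # smallest amplitude handled with reconstruction
high_amplitude_px = mm_to_px(0.06)   # amplitude needed without reconstruction
```

Both amplitudes are well below one pixel, which is why sub-pixel template matching is required before any modal analysis can be attempted.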

Table 1.

The displacements at Point 2 and corresponding spectra with varying excitation amplitude.

As can be seen from the spectra in Table 1, the first two frequencies are 1.44 Hz and 9.39 Hz, which agree well with those obtained from acceleration (Figure 4). However, the measured frequencies do not match the theoretical ones (1.67 Hz and 10.42 Hz), because the total mass of the accelerometers is 26 g while the mass of the beam is 316 g, so the influence of the accelerometers cannot be ignored.

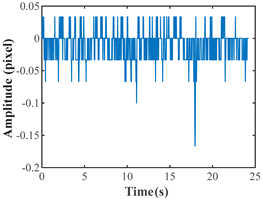

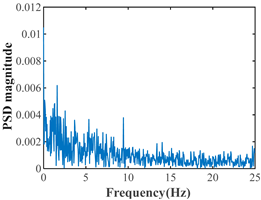

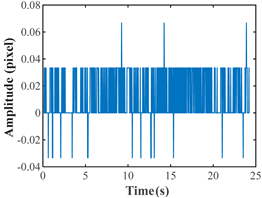

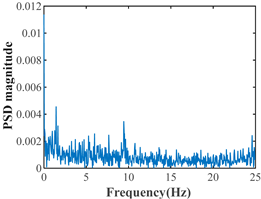

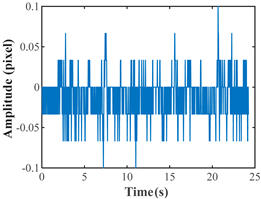

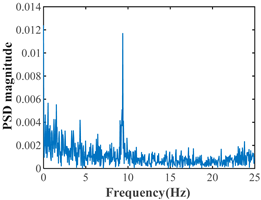

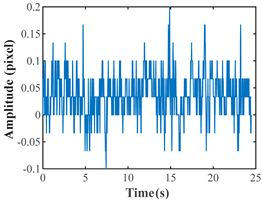

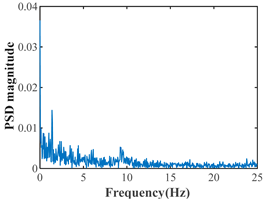

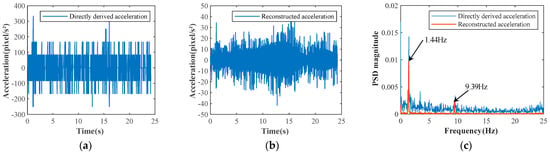

As the natural frequencies can be estimated by peak-picking from the spectrum, the proposed frequency domain operator can be used to reconstruct the displacement and acceleration. In fact, taking the displacement at Point 2 with the output voltage of excitation of 600 mV as an example, the acceleration obtained by applying central difference defined in Equation (16) on the original displacements, obtained by the template matching algorithm, is shown in Figure 5a. Then, after applying FFT on the directly calculated acceleration, the spectrum is shown as the blue line in Figure 5c. For comparison, the reconstructed acceleration is shown in Figure 5b, and the corresponding spectrum is shown as the yellow line in Figure 5c. As can be observed, the SNR of the reconstructed acceleration is higher than that of the directly calculated acceleration since the frequency components near the natural frequencies are maintained, while other frequency components are significantly reduced.

Figure 5.

Time history of the directly calculated and reconstructed accelerations at Point 2: (a) directly calculated acceleration; (b) reconstructed acceleration and the (c) corresponding spectra.

Subsequently, the mode shapes were evaluated as follows. The proposed signal processing algorithm was adopted to reconstruct the displacement and acceleration. Thereafter, the mode shapes of the targeted structure can be evaluated by NExT-ERA approach. Because of the camera’s limited field of view, the mode shape assembly method was employed. Five points were divided into four groups of two points each. Table 2 compares the identified mode shapes by using the original displacement and acceleration extracted by the template matching method and reconstructed acceleration, using the proposed method. When the original displacement was used, the mode shapes could only be identified if the output voltage of excitation was above 1200 mV. When the directly calculated acceleration was used, the fundamental mode shape could not be identified regardless of the output voltage of excitation, which proves once again that the acceleration directly obtained by applying central difference on the original displacement, extracted by the template matching method, is not accurate enough. Finally, when the reconstructed acceleration by the proposed method in this study was used, the first two mode shapes could be evaluated accurately, even if the output voltage of excitation was as low as 300 mV, which corresponds to the displacement amplitude of 0.01 mm. In addition, the modal assurance criterion (MAC) values between the mode shapes by the proposed method and the reference were all greater than 0.95, indicating good accuracy.
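The modal assurance criterion used above compares two mode shape vectors independently of their scaling. A minimal sketch of the standard definition is given below; the function name `mac` and the numerical vectors are hypothetical and are not the measured mode shapes.

```python
import numpy as np

def mac(phi_a, phi_b):
    """Modal assurance criterion between two (possibly complex) mode shapes.

    MAC = |phi_a^H phi_b|^2 / ((phi_a^H phi_a) (phi_b^H phi_b)),
    equal to 1 for proportional shapes and 0 for orthogonal ones.
    """
    phi_a = np.asarray(phi_a)
    phi_b = np.asarray(phi_b)
    numerator = np.abs(np.vdot(phi_a, phi_b)) ** 2
    denominator = np.vdot(phi_a, phi_a).real * np.vdot(phi_b, phi_b).real
    return float(numerator / denominator)

# Illustrative five-point shapes (made-up numbers).
reference_shape = np.array([0.0, 0.10, 0.35, 0.68, 1.00])
identified_shape = 2.5 * reference_shape + np.array([0.0, 0.01, -0.02, 0.01, 0.0])

mac_scaled = mac(reference_shape, 3.0 * reference_shape)
mac_orthogonal = mac([1.0, 0.0], [0.0, 1.0])
mac_identified = mac(reference_shape, identified_shape)
```

Because the criterion is normalized by both vector norms, an identified shape does not need to be rescaled to the reference before the comparison.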

Table 2.

Comparison of the mode shapes extracted from different dynamic responses with various output voltage of excitations.

4.4. Effect of Camera Settings on the Proposed Method

Camera settings also affect the measurement outcomes. The effect of the focal length and object distance on the measurement is explored here. Three cases were considered: (f = 12 mm, u = 160 cm), (f = 12 mm, u = 200 cm), and (f = 35 mm, u = 200 cm). Other conditions remained unchanged: the resolution was 2448 × 2048, the frame rate was 50 frames per second, the aperture was 2.8, and the output voltage of excitation was 1500 mV. When the camera’s focal length and object distance changed, the number of pixels occupied by the target and the displacement amplitude in the image changed accordingly. The mode shapes extracted from different dynamic responses with various camera settings are shown in Table 3. When the original displacement extracted by the template matching method was used, the mode shapes could be identified only for f = 35 mm, u = 200 cm. When the acceleration reconstructed by the proposed method was used, the first two mode shapes could be identified for all of the camera settings, indicating that the proposed method performs well for various camera settings.

Table 3.

Comparison of the mode shapes extracted from different dynamic responses with various camera settings.

5. Conclusions

A vision-based method has been proposed to identify mode shapes of a structure with low amplitude vibration. The findings are summarized as follows:

- The proposed framework can identify the first two natural frequencies and mode shapes even if the vibration amplitude is as small as 0.01 mm, while the traditional vision-based method can only identify the modal parameters of the first two modes if the vibration amplitude is at least 0.06 mm.

- The proposed method performed quite well in the experimental test where three camera settings, including different focal lengths and object distances, were considered, indicating that it has great potential to be applied in various conditions. It should be noted that the proposed method is also applicable to other types of structure although only a cantilever beam was used for illustration in the experimental test.

- Preliminary experiments on cantilever steel beams were carried out in the laboratory in this study. However, due to the low sampling rate of the camera in comparison to the accelerometer, challenges remain in estimating higher mode frequencies and mode shapes. Moreover, in order to assure the high quality of vision-based dynamic responses, the templates or pre-labeled targets were used in the experimental test. Hence, future research should be conducted to eliminate the usage of pre-labeled targets to make vision-based approach more practical.

Author Contributions

Conceptualization, Y.Z.; methodology, Y.Z.; software, Z.C.; validation, Y.Z. and X.R.; investigation, Z.C., X.R. and Y.Z.; resources, Z.C.; data curation, X.R.; writing (original draft preparation), X.R. and Z.C.; writing (review and editing), Y.Z.; visualization, X.R.; supervision, Z.C. and Y.Z.; project administration, Z.C.; funding acquisition, Z.C. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC-52278319), and the Fundamental Research Funds for the Central Universities (Grant No. 20720220070).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| Symbol | Description | Symbol | Description |
|---|---|---|---|
| I(x,y) | source image | fs | sampling frequency |
| T(x,y) | template image | | mode shape vector |
| DFT | discrete Fourier transform | M | mass matrix |
| Ad | state matrix | C | damping matrix |
| Bd | input matrix | K | stiffness matrix |
| Cd | output matrix | ÿ(t) | acceleration vector |
| Dd | feed-through matrix | ẏ(t) | velocity vector |
| x(k) | state vector | y(t) | displacement vector |
| y(k) | measurement vector | F(·) | Fourier transform |
| u(k) | excitation vector | ωm | mth angular frequency |
| Hk | Hankel matrix | ŷ(t) | reconstructed displacement |
| fm | mth natural frequency | â(t) | reconstructed acceleration |

References

1. Qu, X.; Shu, B.; Ding, X.; Lu, Y.; Li, G.; Wang, L. Experimental study of accuracy of high-rate GNSS in context of structural health monitoring. Remote Sens. 2022, 14, 4989.
2. Cai, Y.F.; Zhao, H.S.; Li, X.; Liu, Y.C. Effects of yawed inflow and blade-tower interaction on the aerodynamic and wake characteristics of a horizontal-axis wind turbine. Energy 2023, 264, 126246.
3. Feng, D.; Feng, M.Q. Vision-based multipoint displacement measurement for structural health monitoring. Struct. Control Health Monit. 2016, 23, 876–890.
4. Enshaeian, A.; Luan, L.; Belding, M.; Sun, H.; Rizzo, P. A contactless approach to monitor rail vibrations. Exp. Mech. 2021, 61, 705–718.
5. Luan, L.; Zheng, J.; Wang, M.L.; Yang, Y.; Rizzo, P.; Sun, H. Extracting full-field subpixel structural displacements from videos via deep learning. J. Sound Vib. 2021, 505, 116142.
6. Toh, G.; Park, J. Review of vibration-based structural health monitoring using deep learning. Appl. Sci. 2020, 10, 1680.
7. Wang, Z.; Yang, D.H.; Yi, T.H.; Zhang, G.H.; Han, J.G. Eliminating environmental and operational effects on structural modal frequency: A comprehensive review. Struct. Control Health Monit. 2022, 29, e3073.
8. Lu, Z.-R.; Lin, G.; Wang, L. Output-only modal parameter identification of structures by vision modal analysis. J. Sound Vib. 2021, 497, 115949.
9. Feng, D.; Feng, M.Q.; Ozer, E.; Fukuda, Y. A vision-based sensor for noncontact structural displacement measurement. Sensors 2015, 15, 16557–16575.
10. Bruhn, A.; Weickert, J.; Schnörr, C. Lucas/Kanade meets Horn/Schunck: Combining local and global optic flow methods. Int. J. Comput. Vis. 2005, 61, 211–231.
11. Fleet, D.J.; Jepson, A.D. Computation of component image velocity from local phase information. Int. J. Comput. Vis. 1990, 5, 77–104.
12. Zhang, D.; Fang, L.; Wei, Y.; Guo, J.; Tian, B. Structural low-level dynamic response analysis using deviations of idealized edge profiles and video acceleration magnification. Appl. Sci. 2019, 9, 712.
13. Yang, Y.; Dorn, C.; Mancini, T.; Talken, Z.; Kenyon, G.; Farrar, C.; Mascareñas, D. Blind identification of full-field vibration modes from video measurements with phase-based video motion magnification. Mech. Syst. Signal Process. 2017, 85, 567–590.
14. Javh, J.; Slavič, J.; Boltežar, M. High frequency modal identification on noisy high-speed camera data. Mech. Syst. Signal Process. 2018, 98, 344–351.
15. Bregar, T.; Zaletelj, K.; Čepon, G.; Slavič, J.; Boltežar, M. Full-field FRF estimation from noisy high-speed-camera data using a dynamic substructuring approach. Mech. Syst. Signal Process. 2021, 150, 107263.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).