UAS-Based Real-Time Detection of Red-Cockaded Woodpecker Cavities in Heterogeneous Landscapes Using YOLO Object Detection Algorithms

Abstract

1. Introduction

2. Materials and Methods

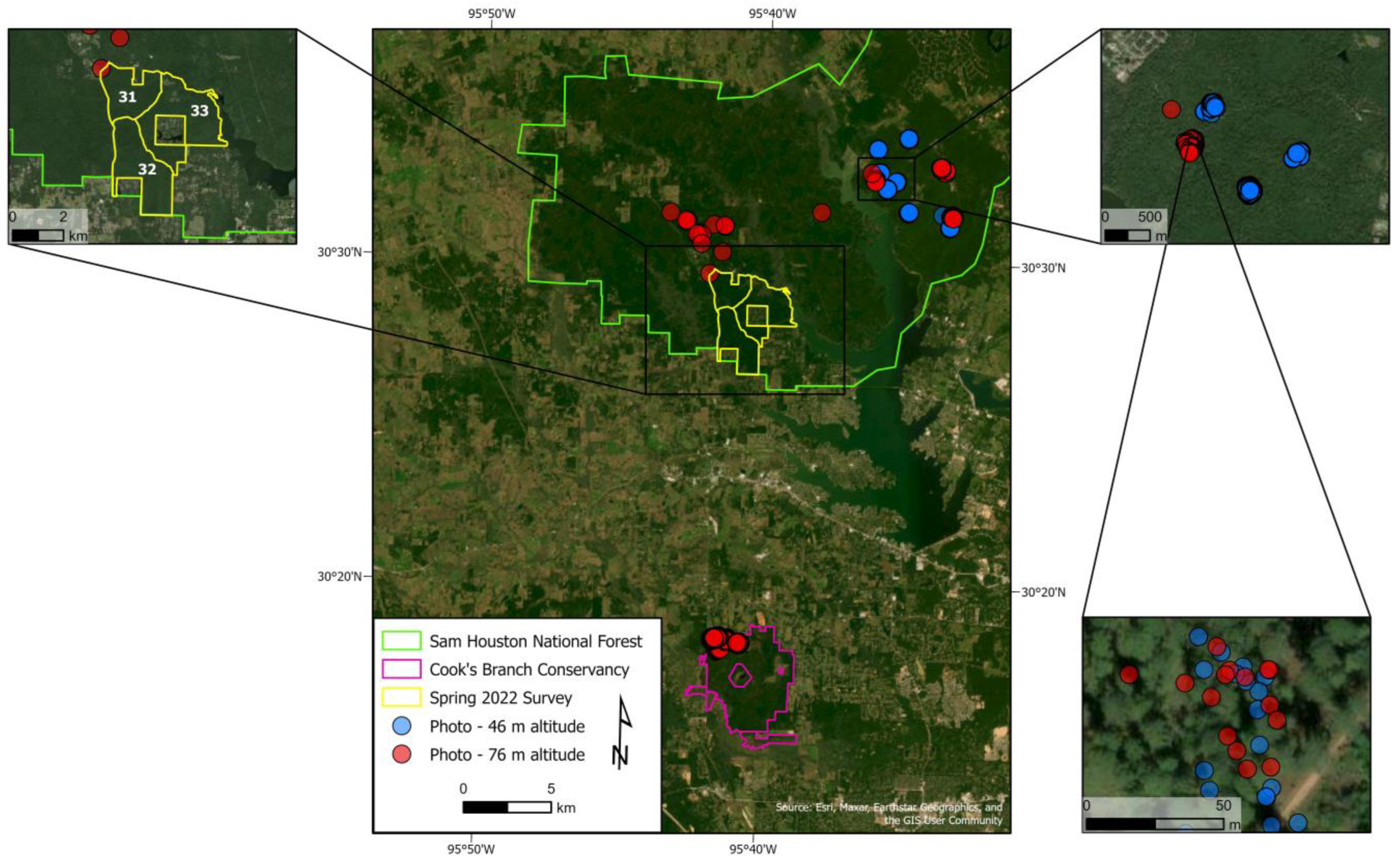

2.1. Study Area

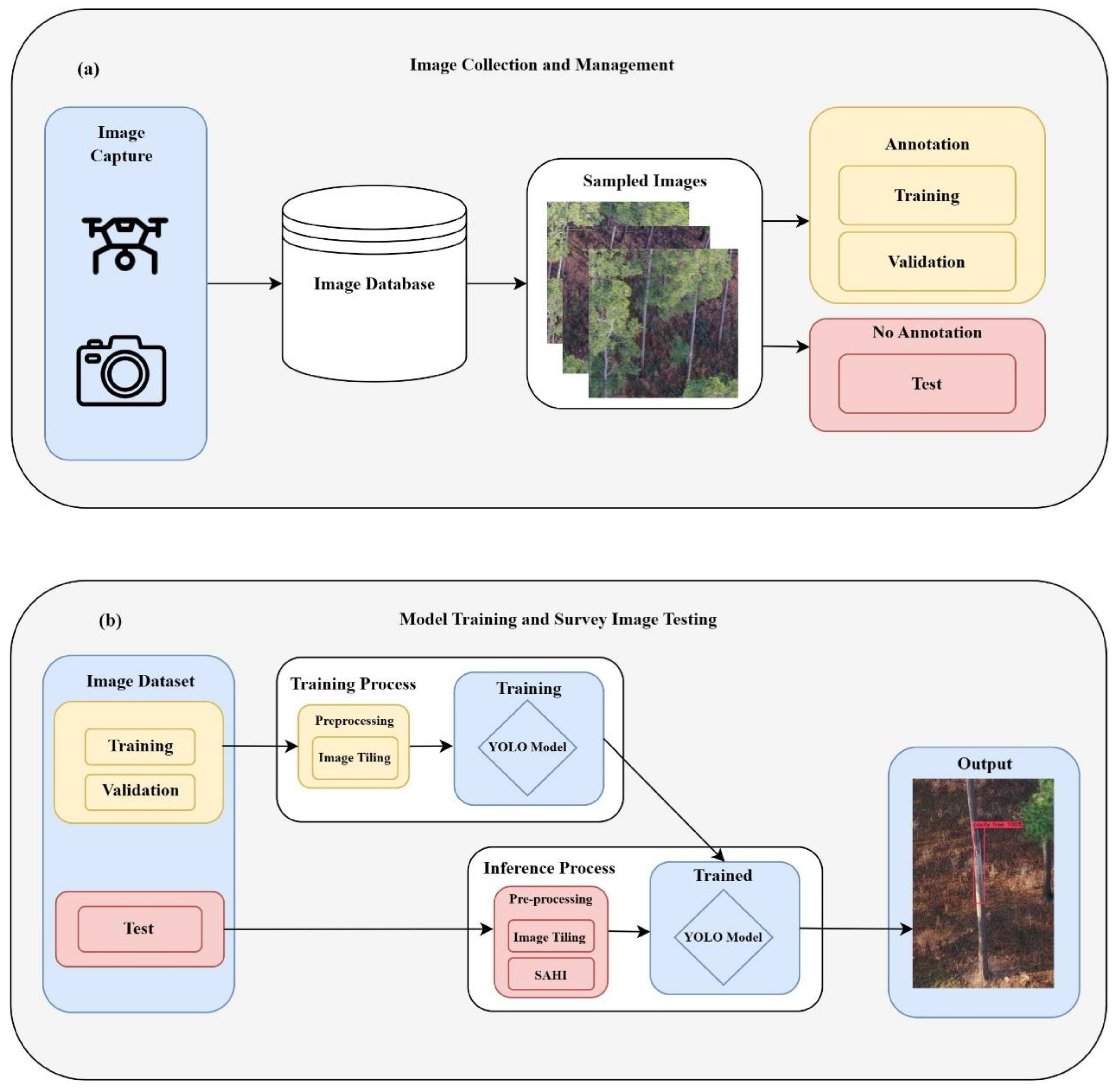

2.2. Training Samples

2.2.1. Training Sample Image Collection

2.2.2. Labeling and Data Management

2.3. Model Training and Testing

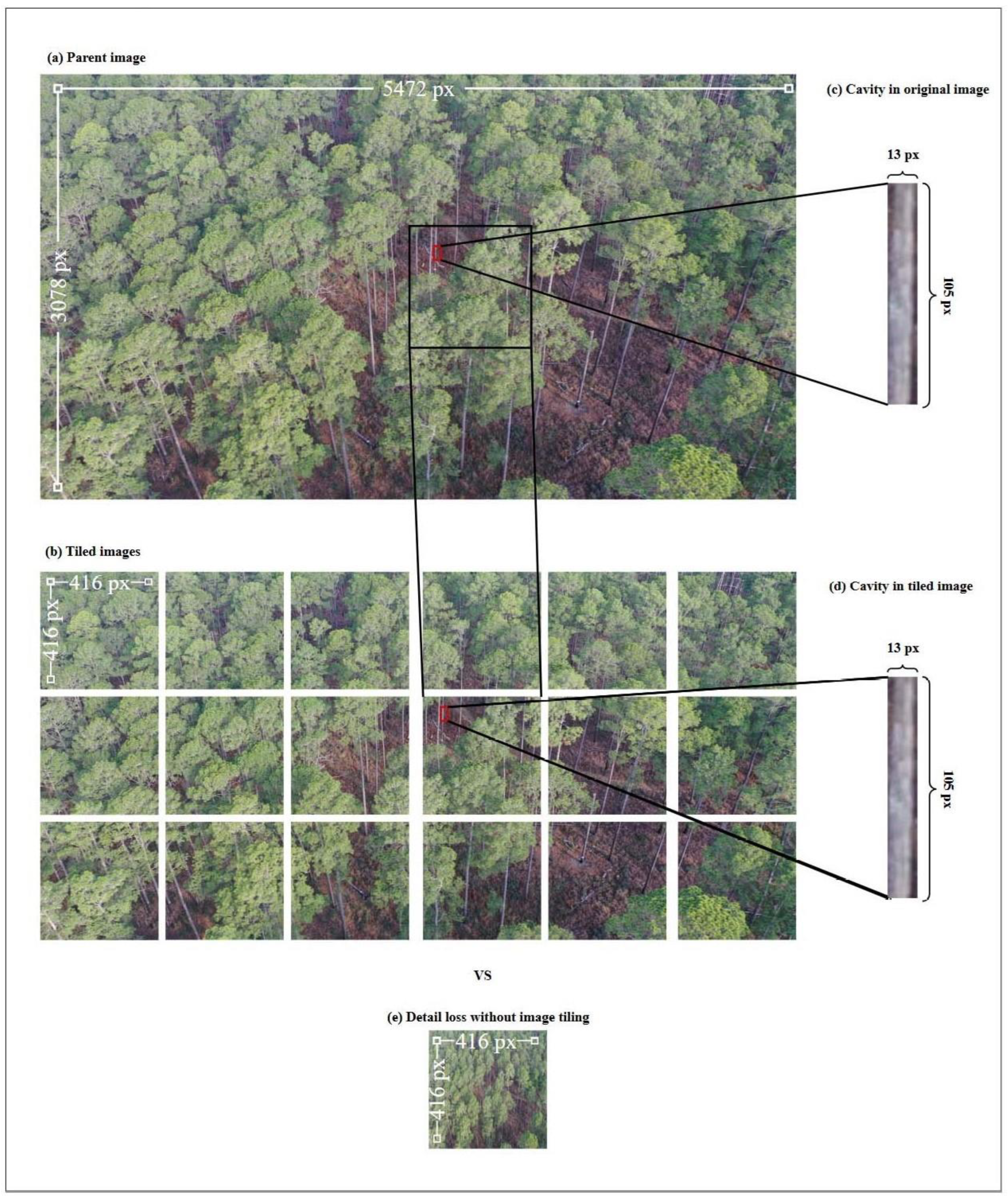

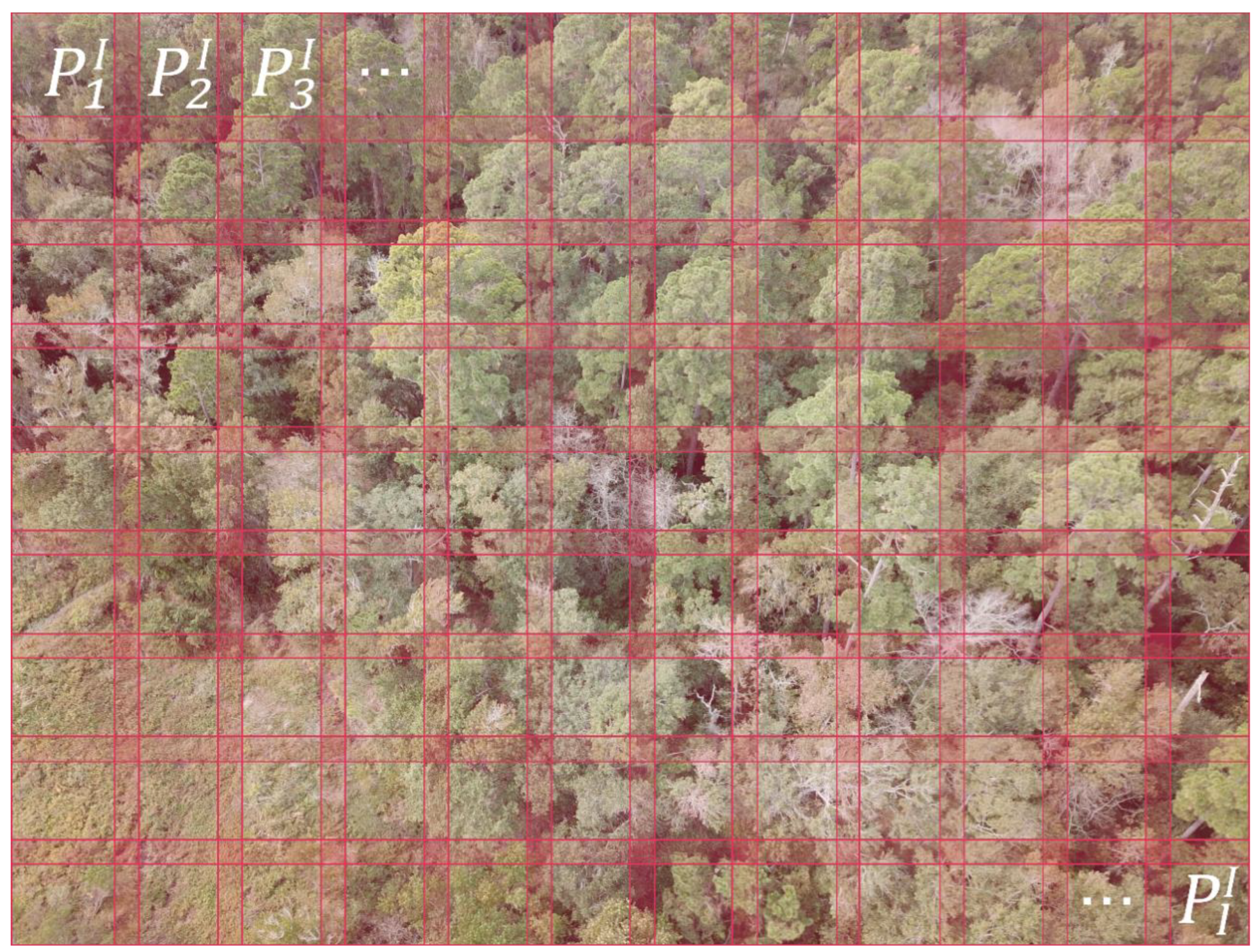

2.3.1. Model Architectures and Tiling

2.3.2. Training with YOLOv4-Tiny

2.3.3. Training with YOLOv5n

2.3.4. Model Testing

2.4. Surveying and Field Validation

2.4.1. Pedestrian

2.4.2. Aerial: Manual Review and Semi-Automation

2.4.3. Field Validation

3. Results

3.1. Model Testing Results

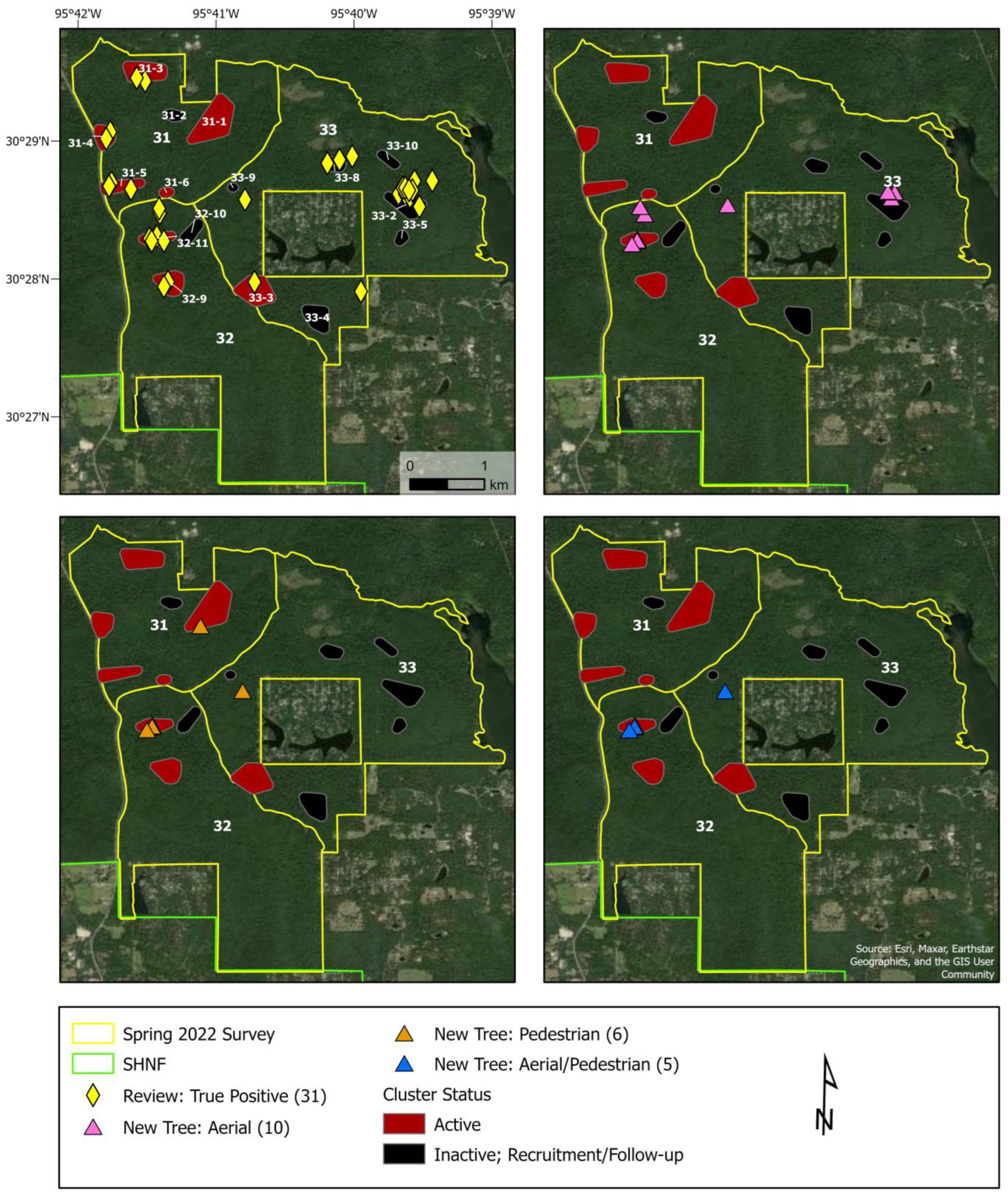

3.2. Comparing UAS and Pedestrian Survey Results in Compartments 31–33

4. Discussion

4.1. Interpretation of Results

4.2. Implications of Results

4.3. Limitations

4.4. Future Works

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | DJI Mavic Pro Platinum | DJI Mavic 2 Pro |
|---|---|---|
| Weight | 734 g | 907 g |
| Flight time | 30 min | 31 min |
| Sensor | 1/2.3″ CMOS | 1″ CMOS |
| Effective pixels | 12.35 million | 20 million |
| Lens | FOV 78.8°, 26 mm (35 mm format equivalent), aperture f/2.2, shooting range 0.5 m to ∞ | FOV about 77°, 28 mm (35 mm format equivalent), aperture f/2.8–f/11, shooting range 1 m to ∞ |
| ISO range (photo) | 100–1600 | 100–3200 (auto), 100–12,800 (manual) |
| Electronic shutter speed | 8–1/8000 s | 8–1/8000 s |
| Still image size (px) | 4000 × 3000 | 5472 × 3648 |

| Altitude | Total Images | Classes | Train | Valid | Test | Total Annotations | Null Images | Average Annotations per Image | Average Image Size | Average Image Dimensions (px) |
|---|---|---|---|---|---|---|---|---|---|---|
| 46 m | 444 | 1 | 307 | 87 | 50 | 678 | 27 | 1.5 | 9.00 MP | 4000 × 2250 |
| 76 m | 534 | 1 | 428 | 53 | 53 | 765 | 94 | 1.4 | 16.84 MP | 5472 × 3078 |
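The derived columns of the dataset summary follow from its raw counts: the train, validation and test splits should add up to the total image count, the average number of annotations per image is total annotations divided by total images, and the average image size in megapixels is width × height / 10^6. The Python sketch below rechecks these relations. It assumes that every image belongs to exactly one split; the `DatasetSplit` class and its field names are hypothetical, chosen only for illustration.

```python
# Recompute the derived columns of the dataset summary from its raw counts.
# Assumption: train/valid/test partition the images (no image in two splits).
# DatasetSplit is a hypothetical helper, not part of any published code.
from dataclasses import dataclass


@dataclass
class DatasetSplit:
    altitude_m: int
    train: int
    valid: int
    test: int
    annotations: int
    width_px: int   # average image width listed in the table
    height_px: int  # average image height listed in the table

    @property
    def total_images(self) -> int:
        # Each image is assigned to exactly one of the three splits.
        return self.train + self.valid + self.test

    @property
    def annotations_per_image(self) -> float:
        return self.annotations / self.total_images

    @property
    def megapixels(self) -> float:
        return self.width_px * self.height_px / 1e6


splits = [
    DatasetSplit(46, 307, 87, 50, 678, 4000, 2250),
    DatasetSplit(76, 428, 53, 53, 765, 5472, 3078),
]

for s in splits:
    print(f"{s.altitude_m} m: {s.total_images} images, "
          f"{s.annotations_per_image:.1f} annotations/image, "
          f"{s.megapixels:.2f} MP")
```

Both altitudes reproduce the tabulated totals (444 and 534 images), the per-image annotation averages (1.5 and 1.4) and the image sizes (9.00 and 16.84 MP).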

| System | GPU | GPU Memory | CPU | RAM |
|---|---|---|---|---|
| Laptop 1 | NVIDIA GeForce RTX 2080 Super Max-Q | 8 GB | Intel Core i9-10980HK @ 2.40 GHz × 16 | 32 GB |
| Laptop 2 | NVIDIA GeForce RTX 3080 | 12 GB | AMD Ryzen 9 5900HK | 32 GB |

| Metric | YOLOv4-Tiny | YOLOv5n |
|---|---|---|
| TP (true positive) | 130 | 92 |
| FP (false positive) | 37 | 2 |
| FN (false negative) | 8 | 39 |
| TN (true negative) | – | – |
| Precision | 0.7784 | 0.7023 |
| Recall | 0.942 | 0.9787 |
| F1 score | 0.8525 | 0.8178 |
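The precision, recall and F1 rows match the standard detection definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean, equivalently 2TP / (2TP + FP + FN). True negatives are not defined for object detection, which is consistent with the dashes in the TN row. The minimal Python sketch below assumes these standard definitions. The helper name `detection_metrics` is hypothetical. The sketch reproduces the YOLOv4-Tiny column from its counts.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and F1 from object-detection counts.

    True negatives are not used: in object detection there is no
    countable set of correctly empty predictions.
    """
    precision = tp / (tp + fp)  # share of predicted cavities that were real
    recall = tp / (tp + fn)     # share of real cavities that were detected
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return {"precision": precision, "recall": recall, "f1": f1}


# YOLOv4-Tiny counts from the table.
m = detection_metrics(tp=130, fp=37, fn=8)
print({k: round(v, 4) for k, v in m.items()})
# -> {'precision': 0.7784, 'recall': 0.942, 'f1': 0.8525}
```

Applied to the YOLOv5n counts (TP = 92, FP = 2, FN = 39), the same definitions give precision ≈ 0.9787, recall ≈ 0.7023 and F1 ≈ 0.8178.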

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lawrence, B.; de Lemmus, E.; Cho, H. UAS-Based Real-Time Detection of Red-Cockaded Woodpecker Cavities in Heterogeneous Landscapes Using YOLO Object Detection Algorithms. Remote Sens. 2023, 15, 883. https://doi.org/10.3390/rs15040883

Lawrence B, de Lemmus E, Cho H. UAS-Based Real-Time Detection of Red-Cockaded Woodpecker Cavities in Heterogeneous Landscapes Using YOLO Object Detection Algorithms. Remote Sensing. 2023; 15(4):883. https://doi.org/10.3390/rs15040883

Chicago/Turabian Style: Lawrence, Brett, Emerson de Lemmus, and Hyuk Cho. 2023. "UAS-Based Real-Time Detection of Red-Cockaded Woodpecker Cavities in Heterogeneous Landscapes Using YOLO Object Detection Algorithms" Remote Sensing 15, no. 4: 883. https://doi.org/10.3390/rs15040883

APA Style: Lawrence, B., de Lemmus, E., & Cho, H. (2023). UAS-Based Real-Time Detection of Red-Cockaded Woodpecker Cavities in Heterogeneous Landscapes Using YOLO Object Detection Algorithms. Remote Sensing, 15(4), 883. https://doi.org/10.3390/rs15040883