Abstract

Hyperspectral images (HSIs) are among the most successful tools for precisely detecting key ground surfaces, vegetation, and minerals. HSIs contain a large amount of information about the ground scene; therefore, object classification is a difficult task for such a high-dimensional HSI data cube. Additionally, the HSI's spectral bands are highly correlated, and the large volume of spectral data creates dimensionality issues as well. Dimensionality reduction is, therefore, a crucial step in the HSI classification pipeline. In order to identify a pertinent subset of features for effective HSI classification, this study proposes a dimension reduction method that combines feature extraction and feature selection. In particular, we exploited the widely used denoising method minimum noise fraction (MNF) for feature extraction and an information-theoretic strategy, cross-cumulative residual entropy (CCRE), for feature selection. Using the normalized CCRE, minimum redundancy maximum relevance (mRMR)-driven feature selection criteria were used to enhance the quality of the selected features. To assess the effectiveness of the extracted feature subsets, the kernel support vector machine (KSVM) classifier was applied to three publicly available HSIs. The experimental findings show a discernible improvement in classification accuracy and in the quality of the selected features. Specifically, the proposed method outperforms the traditional methods investigated, with overall classification accuracies on Indian Pines, Washington DC Mall, and Pavia University HSIs of 97.44%, 99.71%, and 98.35%, respectively.

1. Introduction

Due to the extraordinary advancement of hyperspectral remote sensors, hundreds of narrow and contiguous spectral bands can be acquired from the electromagnetic (EM) spectrum, typically spanning 0.4 μm to 2.5 μm, which covers the visible to near-infrared regions [1]. For example, with a spectral resolution of 0.01 μm, the airborne visible/infrared imaging spectrometer (AVIRIS) sensor captures 224 spectral images for the Indian Pines (IP) hyperspectral image (HSI) scene [2]. Owing to this superior spectral resolution, the classification of ground objects is becoming an increasingly common research topic [3]. Every single spectral channel is recognized as a feature for classification in this context, as long as it contains distinct ground surface responses [4]. An HSI is represented by a 3D data cube, from which we can extract 2D spatial information corresponding to the HSI's height and width and 1D spectral information corresponding to the HSI's total number of spectral bands. Due to the enormous amount of data they provide, HSIs pose significant challenges for image processing tasks such as classification [5]. The reasons are as follows: (i) There is a strong correlation between the input image bands; (ii) Not all spectral bands convey the same amount of information, and some of them are noisy [6]; (iii) The spectral bands are collected in a continuous range by hyperspectral sensors, which means that certain spectral bands might reveal unusual information about the surface of the earth [7]; (iv) The increased spectral resolution of hyperspectral images benefits classification techniques but strains computational capacity. Additionally, because there are few training examples, the classification accuracy on this high-dimensional data cube is relatively unsatisfactory; (v) The ratio of the number of input HSI features to the number of training samples is not balanced. As a result, the test data classification accuracy steadily degrades, a phenomenon known as the Hughes phenomenon or the curse of dimensionality [8]. To increase classification accuracy, it is crucial to condense the high-dimensional HSI data to a useful subspace of informative features. Therefore, the fundamental goal of this study was to use a constructive approach to reduce the HSI dimensions for improved classification.

The high-dimensional HSI data may be reduced to a lower dimension using various feature reduction techniques. Feature extraction, feature selection, or a combination of the two can be used for this [9,10]. By utilizing a linear or nonlinear transformation, feature extraction converts the original images from the original space of S dimensionality to a new space of P dimensionality, where P << S. However, because HSIs are noisy data, the noise must be removed [11]. Feature extraction strategies might be supervised or unsupervised [12]. Supervised algorithms rely on known data classes: to infer class separability, these approaches require datasets containing labeled samples. The most used supervised methods include linear discriminant analysis (LDA) [13], nonparametric weighted feature extraction (NWFE) [14], and genetic algorithms [15]. The fundamental drawback of these approaches is the need for labeled samples to reduce dimensionality. The unavailability of labeled data is addressed via unsupervised dimensionality reduction approaches. Minimum noise fraction (MNF) is an extremely popular unsupervised technique for feature extraction. Even though principal component analysis (PCA) is used to extract features from HSI data in many analyses, PCA does not accurately capture the proportion of noise in the HSI data [16,17,18]. PCA only considers the HSI's global variance to decorrelate the data [19]. For such noisy data, ranking components by variance ignores image quality because it does not account for the original signal-to-noise ratio (SNR) [20]. Therefore, MNF is introduced as a better approach for feature extraction in terms of image quality. In MNF, the components are ordered according to their SNR values, regardless of how noise appears in the bands [21]. Some studies have shown that even though feature extraction moves the original data to a newly generated space, ranking the extracted features is still important [13,22,23]. MNF is unsupervised and takes only the SNR into account; therefore, its leading components are not guaranteed to be the most discriminative for every class, which can negatively impact accuracy.

Therefore, feature selection is necessary to prioritize the most informative of the features generated by the feature extraction method. It is currently common practice to combine existing feature extraction and feature selection methods to obtain a hybrid approach that performs better than either alone [10]. The combination is advantageous because feature extraction performed first can fully utilize the spectral information to generate new features, whereas feature selection performed afterwards can retain only the most relevant of those features. In this second step, feature selection approaches select the appropriate features based on a set of predefined criteria. Search-based feature selection typically fails because of combinatorial explosion, local minima, and excessive computation [24]. Mutual information (MI)-based feature selection is one example of an information-theoretic approach that can uncover non-linear as well as linear correlations between input image bands and ground-truth labels [10]. However, it depends on the marginal and joint probabilities of the outcomes. Because the cost of estimating the marginal and joint probability distributions grows exponentially with dimensionality, it cannot successfully select features from high-dimensional data [25]. In the proposed approach, we select a subset of informative features using cross-cumulative residual entropy (CCRE). CCRE is applied as a feature selection technique that quantifies the similarity of information between two images. A significant advantage over MI is that CCRE is more robust and has significantly greater immunity to noise. As CCRE is not bounded to [0, 1], we propose to normalize CCRE and apply it to the MNF-extracted images and the available class labels under the minimum redundancy maximum relevance (mRMR) criteria. Consequently, the informative features are ranked, and a feature subset that can be employed for classification is obtained. The proposed method for generating the subset of features is termed MNF-nCCREmRMR. A kernel support vector machine (KSVM) was used to classify the data, and the proposed method was compared with other methods to determine its reliability. Below is a summary of this paper's significant contributions.

- We propose a hybrid feature reduction technique that combines MNF and CCRE.

- To avoid selecting redundant features, we propose a normalized CCRE-based mRMR feature selection approach over the extracted features.

We organize the rest of the paper as follows. In Section 2, we first describe conventional feature reduction methods such as PCA, MNF, MI, and CCRE. In Section 3, the proposed hybrid subset detection method, MNF-nCCREmRMR, according to mRMR, is comprehensively described. In Section 4, we provide a detailed explanation of the experiments carried out on the three real HSI datasets utilizing the proposed method compared with the current state of the art. The results are summarized in Section 5, which also outlines the paper’s conclusion.

2. Preliminaries

2.1. Principal Component Analysis (PCA)

To extract meaningful features from spectral image bands, PCA, the most used linear unsupervised feature extraction method in HSI classification, determines the association between the bands. It depends on the fact that the HSI's neighboring bands are highly correlated and usually convey similar information about ground objects [26,27,28]. Let the spectral vector of a pixel, denoted as $x_n$, in the HSI $X$ be defined as $x_n = [x_{n1}, x_{n2}, \ldots, x_{nF}]^T$, where $F$ is the number of spectral bands, $n \in [1, N]$, and $N$ is the number of pixels. Now, subtract the mean spectral vector, $m$, to obtain the mean-adjusted spectral vector, $I_n$, as:

$$I_n = x_n - m, \tag{1}$$

where the mean image vector is $m = \frac{1}{N}\sum_{n=1}^{N} x_n$. The zero-mean image, denoted by $I$, is thus obtained as $I = [I_1, I_2, \ldots, I_N]$. Subsequently, the covariance matrix, $\Sigma$, is computed as follows:

$$\Sigma = \frac{1}{N} I I^T. \tag{2}$$

Eigenvalues and eigenvectors are obtained by decomposing the covariance matrix as $\Sigma = V E V^T$, where $E$ is the diagonal matrix of eigenvalues and $V$ holds the corresponding eigenvectors. The orthonormal matrix, $W$, is composed by choosing the $K$ eigenvectors with the highest eigenvalues after sorting, where $K \le F$ and often $K \ll F$. Finally, the transformed or projected data matrix, $Y$, is calculated as:

$$Y = W^T I. \tag{3}$$
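The PCA steps described above (mean adjustment, covariance estimation, eigen-decomposition, and projection) can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function name `pca_transform`, the `(rows, cols, bands)` cube layout, and the random toy cube are assumptions made for the example.

```python
import numpy as np

def pca_transform(cube, n_components):
    """Project an HSI cube of shape (rows, cols, bands) onto its top principal components."""
    rows, cols, bands = cube.shape
    # Arrange the data as bands x pixels: each column is one pixel's spectral vector.
    pixels = cube.reshape(-1, bands).T.astype(float)
    # Subtract the mean spectral vector from every pixel.
    centered = pixels - pixels.mean(axis=1, keepdims=True)
    # Band-by-band covariance matrix.
    cov = centered @ centered.T / centered.shape[1]
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1][:n_components]
    w = eigvecs[:, order]
    projected = w.T @ centered
    return projected.T.reshape(rows, cols, n_components), eigvals[order]

# Toy data only (assumption): an 8 x 8 scene with 6 bands.
rng = np.random.default_rng(0)
toy_cube = rng.normal(size=(8, 8, 6))
pcs, variances = pca_transform(toy_cube, n_components=3)
```

The returned `variances` are the eigenvalues of the retained components, so they equal the variance of each projected component and are sorted in descending order.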

2.2. Minimum Noise Fraction (MNF)

MNF transformation is used to estimate an HSI's intrinsic features and is equivalent to two cascaded PCA transformations [28]. Instead of using the global variance to assess the relevance of features, MNF uses the SNR, which makes it more appropriate for noisy data. Let $X$ denote the input HSI, where $X = [x_1, x_2, \ldots, x_p]^T$, and $p$ represents the number of image bands. As noise is present in the HSI signal, $X$ can be written as $X = S_g + N$, where $S_g$ and $N$ are the noiseless image and the noise, respectively. Now, the covariance can be computed by the following equation:

$$\Sigma_X = \Sigma_{S_g} + \Sigma_N. \tag{4}$$

Here, $\Sigma_{S_g}$ is the covariance of the signal, and $\Sigma_N$ is the covariance of the noise. The MNF transformation can be computed in terms of the noise covariance as:

$$Z = A^T X. \tag{5}$$

Here, $A$ represents the eigenvector matrix, and it can be computed as:

$$\Sigma_X^{-1} \Sigma_N A = A \Lambda, \tag{6}$$

where $\Lambda$ represents the diagonal eigenvalue matrix, whose $i$th entry can also be computed using the noise ratio of the corresponding eigenvector $a_i$, as:

$$\lambda_i = \frac{a_i^T \Sigma_N a_i}{a_i^T \Sigma_X a_i}. \tag{7}$$

After the MNF transformation, the components are ordered according to their SNR, in contrast to PCA, which relies on global variance statistics. Therefore, the first few MNF components contain the most meaningful and least noisy features for classification.
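A minimal NumPy sketch of MNF as two cascaded PCA steps (noise whitening followed by an ordinary eigen-decomposition) is given below. It is an illustration under stated assumptions rather than the procedure used in this study: the noise covariance is estimated from differences between horizontally adjacent pixels, which is one common heuristic, and the function name `mnf_transform` and the toy cube are hypothetical.

```python
import numpy as np

def mnf_transform(cube, n_components):
    """Return MNF components of a (rows, cols, bands) cube, ordered by decreasing SNR."""
    rows, cols, bands = cube.shape
    data = cube.reshape(-1, bands).astype(float)
    data = data - data.mean(axis=0)
    # Assumption: noise is estimated from differences of horizontally adjacent pixels.
    diff = (cube[:, 1:, :] - cube[:, :-1, :]).reshape(-1, bands).astype(float)
    noise_cov = np.cov(diff, rowvar=False) / 2.0
    data_cov = np.cov(data, rowvar=False)
    # First PCA: whiten the noise so that its covariance becomes the identity.
    n_vals, n_vecs = np.linalg.eigh(noise_cov)
    whitener = n_vecs / np.sqrt(n_vals)
    # Second PCA: diagonalise the data covariance in the noise-whitened space.
    w_vals, w_vecs = np.linalg.eigh(whitener.T @ data_cov @ whitener)
    order = np.argsort(w_vals)[::-1][:n_components]
    a = whitener @ w_vecs[:, order]
    comps = data @ a
    return comps.reshape(rows, cols, n_components), w_vals[order]

# Toy data only (assumption): a smooth 5-band signal plus white noise.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 20)
smooth = np.outer(x, x)[:, :, None] * np.arange(1, 6)
toy = smooth + 0.05 * rng.normal(size=(20, 20, 5))
comps, ratios = mnf_transform(toy, n_components=3)
```

The returned `ratios` are data-to-noise variance ratios (the reciprocals of the noise fractions), so larger values indicate cleaner components and the first component has the highest estimated SNR.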

2.3. Mutual Information (MI)

MI is a fundamental notion in statistics that measures the dependency between two input variables, A and C, and is defined as:

$$I(A, C) = \sum_{a \in A} \sum_{c \in C} p(a, c) \log \frac{p(a, c)}{p(a)\,p(c)}, \tag{8}$$

where $p(a)$ and $p(c)$ represent the marginal probability distributions and $p(a, c)$ the joint probability distribution of the two variables A and C. MI can also be defined in terms of entropy, provided that A is a band of an input image, and C is a class label of the input image:

$$I(A, C) = H(A) + H(C) - H(A, C), \tag{9}$$

where H(A) and H(C) represent the entropies of A and C, and H(A, C) is their joint entropy. It is possible to utilize the MI value in Equation (8) or Equation (9) as the selection criterion in order to choose the features that are the most helpful and informative.
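As a small worked example of the entropy form of MI, the following sketch estimates I(A, C) = H(A) + H(C) − H(A, C) from empirical frequencies of two discrete sequences. The helper names and the toy band and label values are assumptions chosen for illustration; real band values would first need to be quantized.

```python
import math
from collections import Counter

def entropy(values):
    """Shannon entropy (in bits) of a sequence of discrete values."""
    counts = Counter(values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def mutual_information(a, c):
    """I(A, C) = H(A) + H(C) - H(A, C) for two equal-length discrete sequences."""
    return entropy(a) + entropy(c) - entropy(list(zip(a, c)))

# Toy data (assumption): quantized band values and class labels for eight pixels.
band = [0, 0, 1, 1, 2, 2, 3, 3]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
mi_dependent = mutual_information(band, labels)

# A band whose values are independent of the labels carries no information about them.
unrelated_band = [0, 1, 0, 1, 0, 1, 0, 1]
mi_independent = mutual_information(unrelated_band, labels)
```

In the first case the band determines the label exactly, so the MI equals the label entropy of one bit; in the second case the MI is zero.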

2.4. Cross-Cumulative Residual Entropy (CCRE)

CCRE was introduced in [29] as a similarity measure for multimodal image registration. CCRE compares two images using the cumulative residual distribution rather than the probability distribution. It is built on the cumulative residual entropy (CRE), developed in [30]. Let $a$ in $\mathbb{R}$ be a random variable. Then, we can write CRE as:

$$\varepsilon(a) = -\int_{\mathbb{R}_+} P(|a| > x) \log P(|a| > x)\, dx, \tag{10}$$

where $\mathbb{R}_+ = (x \in \mathbb{R};\ x \ge 0)$. As a result, the following formula can be used to estimate the CCRE between images $a$ and $b$:

$$C(a, b) = \varepsilon(a) - E[\varepsilon(a \mid b)], \tag{11}$$

where $\varepsilon(a \mid b)$ is the conditional CRE between $a$ and $b$, and $E[\varepsilon(a \mid b)]$ is the expectation of $\varepsilon(a \mid b)$. One way to calculate the conditional CRE, $\varepsilon(a \mid b)$, between $a$ and $b$ is as follows:

$$\varepsilon(a \mid b) = -\int_{\mathbb{R}_+} P(|a| > x \mid b) \log P(|a| > x \mid b)\, dx. \tag{12}$$

Now, the CCRE of two images $I$ and $J$ can be given by:

$$C(I, J) = \sum_{u=0}^{L} \sum_{v=0}^{L} G(u, v) \log \frac{G(u, v)}{G_I(u)\, P_J(v)}, \tag{13}$$

where $L$ is the maximum value of any pixel in the images, $G(u, v)$ is the joint cumulative residual distribution, $G_I(u)$ is the marginal cumulative residual distribution of $I$, and $P_J(v)$ is the marginal probability of $J$. In this work, CCRE is used to determine how the training data and the transformed MNF images are related so that the informative MNF components can be found based on the available class labels.
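The discrete CCRE between two images can be computed directly from empirical frequencies, as in the sketch below. It assumes both images are given as flat sequences of non-negative integer levels, takes G(u, v) = P(I > u, J = v) and G_I(u) = P(I > u), and uses a hypothetical function name `ccre`; it favors clarity over speed and is not the implementation used in this study.

```python
import math

def ccre(img_i, img_j):
    """Discrete CCRE of img_i with respect to img_j (flat lists of non-negative ints)."""
    n = len(img_i)
    values_j = sorted(set(img_j))
    total = 0.0
    for u in range(max(img_i) + 1):
        # Marginal cumulative residual distribution G_I(u) = P(I > u).
        g_i = sum(1 for x in img_i if x > u) / n
        if g_i == 0:
            continue
        for v in values_j:
            # Marginal probability P_J(v) and joint residual G(u, v) = P(I > u, J = v).
            p_j = sum(1 for y in img_j if y == v) / n
            g_uv = sum(1 for x, y in zip(img_i, img_j) if x > u and y == v) / n
            if g_uv > 0:
                total += g_uv * math.log(g_uv / (g_i * p_j))
    return total

# Toy data (assumption): a quantized component and class labels for six pixels.
labels = [0, 0, 0, 1, 1, 1]
related = [0, 0, 1, 1, 2, 2]      # levels tend to grow with the label
unrelated = [0, 1, 2, 0, 1, 2]    # same level distribution in both classes
ccre_related = ccre(related, labels)
ccre_unrelated = ccre(unrelated, labels)
```

For the component whose levels are statistically independent of the labels the value is zero (up to rounding), while the related component yields a positive value. Note that this measure is not symmetric in its two arguments.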

3. Proposed Methodology

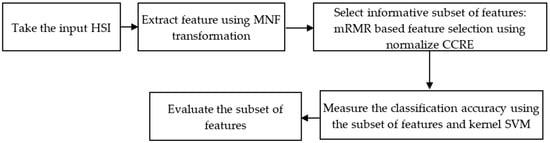

There are two primary phases in the proposed feature reduction process: (i) feature extraction through the implementation of the classical MNF on the complete HSI; and (ii) feature selection through the normalized CCRE-based mRMR criteria applied to the transformed features of the HSI. Figure 1 illustrates the practical steps of our proposed method.

Figure 1.

Overview of the proposed method.

3.1. mRMR-Driven CCRE-Based Feature Selection

When deciding which features are most useful, the CCRE value in Equation (12) is taken into account. By comparing each newly generated MNF component, $Z_i$, with the available training class labels, $C$, CCRE is able to isolate the subset of features that are most important. Therefore, the most informative feature is calculated as [31]:

$$V = \arg\max_{Z_i} \mathrm{CCRE}(Z_i, C), \tag{14}$$

where $V$ is the most informative classification feature (maximum CCRE value), which is added to $S$ (the subset of selected features). This ranks the MNF components, with the possibility that the first few components are the most useful for classification. However, there may be redundancy in the features chosen using Equation (14). Overall, the selected features should be as relevant as possible while being as little redundant as possible. A greedy strategy can be utilized to select the (k + 1)th feature, which is then added to the subset that has already been chosen. As such, the mRMR criterion used for subspace detection is:

$$V = \arg\max_{Z_i \notin S} \left[ \mathrm{CCRE}(Z_i, C) - \frac{1}{k} \sum_{Z_j \in S} \mathrm{CCRE}(Z_i, Z_j) \right]. \tag{15}$$

In Equation (15), $S$ represents the subset of selected features, $Z_i$ is a candidate feature extracted by MNF, $k$ denotes the number of features already selected into the feature space $S$, $C$ signifies the ground truth image of the HSI, and $Z_j$ represents an already selected feature in the feature space $S$.

3.2. Improved Feature Selection

(i) Using normalized CCRE: The values of CCRE are not constrained to a particular limit. Direct application of the CCRE in the aforementioned method is complicated by the fact that it is sensitive to the entropy of the two variables and does not have a fixed range of validity. The quality of a given CCRE value is easier to evaluate when it lies in the range [0, 1] [32,33]. The normalized CCRE can be defined as:

We propose nCCREmRMR, utilizing the normalized CCRE in Equation (16), denoted here by nCCRE. Accordingly, the subset of features using our proposed method can be defined as:

$$V = \arg\max_{Z_i \notin S} \left[ \mathrm{nCCRE}(Z_i, C) - \frac{1}{k} \sum_{Z_j \in S} \mathrm{nCCRE}(Z_i, Z_j) \right]. \tag{17}$$

That is, the (k + 1)th feature to be added to the target subspace of features, $S$, should have the largest value of the bracketed difference between relevance and mean redundancy.

(ii) Discard negative values: Using Equation (17), the largest value of the difference might be less than zero, meaning that the chosen feature is more redundant with the previously picked features than it is relevant to the class labels, which is undesirable. As a result, in this study, the difference was required to be positive, i.e., $\mathrm{nCCRE}(Z_i, C) - \frac{1}{k} \sum_{Z_j \in S} \mathrm{nCCRE}(Z_i, Z_j) > 0$. If the greatest difference value is not positive, it might mean that there are no longer any desirable features, and that the informative subspace has grown to its full size.

(iii) Remove the noisy features: The criterion in Equation (17) can still choose undesirable features. When the largest difference value derives from two small values, the selected feature is only weakly related to the target. A user-defined threshold, T, is introduced to avoid this: if the normalized CCRE between $Z_i$ and $C$ is less than T, $Z_i$ is removed. The threshold is applied in the preprocessing stage to condense the search space for the greedy technique and to eliminate the noisy features. After selection, the set S contains the most informative features. The proposed feature reduction methodology is outlined in Algorithm 1.

Algorithm 1.

MNF-nCCREmRMR

(Y is the original HSI data, Z represents the transformed MNF components, C is the ground truth image, T is a user-defined threshold, and S represents the final subset of n features.)
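The selection stage described in Section 3 (threshold-based preprocessing, picking the most relevant component first, greedy mRMR additions, and stopping when the relevance-minus-redundancy difference is no longer positive) can be sketched as follows. This is a hedged illustration, not the authors' code: the function `select_features` and its inputs are hypothetical, and the normalized relevance and redundancy scores are assumed to have been computed beforehand.

```python
def select_features(relevance, redundancy, n_select, threshold):
    """Greedy mRMR-style selection over precomputed normalized scores.

    relevance[i]     : score between component i and the class labels (assumed in [0, 1])
    redundancy[i][j] : score between components i and j (assumed in [0, 1])
    """
    # Preprocessing: discard components only weakly related to the labels.
    candidates = [i for i, r in enumerate(relevance) if r >= threshold]
    if not candidates:
        return []
    # First pick: the single most relevant component.
    first = max(candidates, key=lambda i: relevance[i])
    selected = [first]
    candidates.remove(first)

    def score(i):
        # Relevance minus mean redundancy with the already selected components.
        mean_red = sum(redundancy[i][j] for j in selected) / len(selected)
        return relevance[i] - mean_red

    while candidates and len(selected) < n_select:
        best = max(candidates, key=score)
        # Stop when no remaining component has a positive difference.
        if score(best) <= 0:
            break
        selected.append(best)
        candidates.remove(best)
    return selected

# Toy scores (assumption): components 0 and 1 are highly redundant, component 3 is noisy.
toy_relevance = [0.9, 0.85, 0.4, 0.05]
toy_redundancy = [
    [1.0, 0.9, 0.1, 0.0],
    [0.9, 1.0, 0.1, 0.0],
    [0.1, 0.1, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
ranking = select_features(toy_relevance, toy_redundancy, n_select=3, threshold=0.1)
```

In this toy case component 2 is ranked ahead of component 1 even though it is less relevant, because component 1 is largely redundant with the first pick; component 3 never enters the search because it falls below the threshold.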

4. Experimental Setup and Analysis

4.1. Remote Sensing Data Sets

For experimental analysis, we used three HSI datasets publicly available and broadly used for HSI classification, i.e., AVIRIS IP, HYDICE Washington DC Mall (WDM), and ROSIS Pavia University (PU). Detailed descriptions of these datasets are given below.

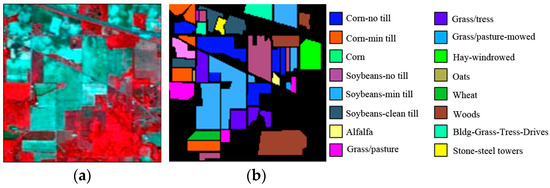

4.1.1. AVIRIS IP Dataset

NASA's AVIRIS sensor captured the IP dataset in June 1992, which consists of 220 imaging bands [34]. Each band has a dimension of 145 by 145 pixels, and the accompanying ground truth image is made up of sixteen classes. Furthermore, the data have a 0.01 µm spectral resolution. We excluded the classes “Oats” and “Grass/Pasture mowed” from this experiment because of their insufficient training and testing samples. Figure 2 presents the IP false-color RGB and ground truth image.

Figure 2.

AVIRIS IP data: (a) false color RGB image RGB (50, 27, and 17); (b) ground truth image.

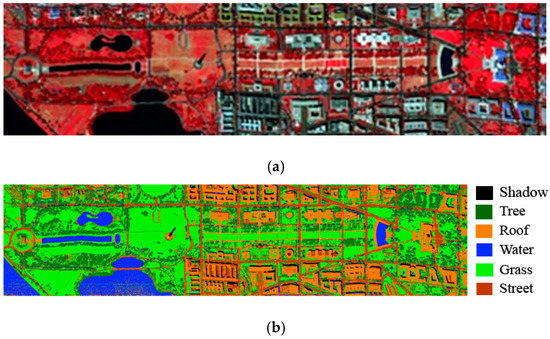

4.1.2. HYDICE WDM Dataset

The WDM dataset comprises 191 image bands, and each band consists of 1280 × 307 pixels. The HYDICE sensor captured the data in 1995 over the WDM. The ground truth image contains a total of seven distinct classes [35]. We did not use the “paths” class in this study because its training data are insufficient. Figure 3 presents the WDM false-color RGB and ground truth image.

Figure 3.

HYDICE WDM data: (a) false color RGB image RGB (50, 52, and 36); (b) ground truth image.

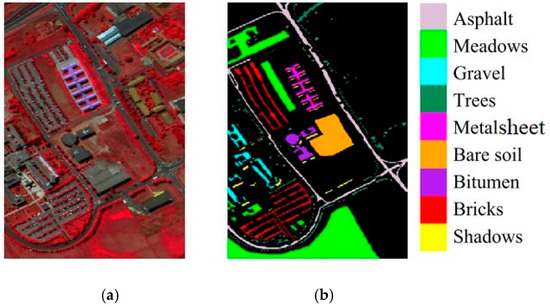

4.1.3. ROSIS PU Dataset

The ROSIS optical sensor was utilized to gather the PU dataset from the University of Pavia's urban environment in Italy. This HSI has a spatial resolution of 1.3 m per pixel and an image size of 610 × 610 [36]. The acquired image contains 103 data channels (with a spectral range from 0.43 μm to 0.86 μm). A false-color composite of the image appears in Figure 4a, whereas nine ground-truth classes of interest are depicted in Figure 4b. Table 1 summarizes the key features of all three datasets.

Figure 4.

ROSIS PU data: (a) false color RGB image; (b) ground truth image.

Table 1.

Summary of the HSI datasets.

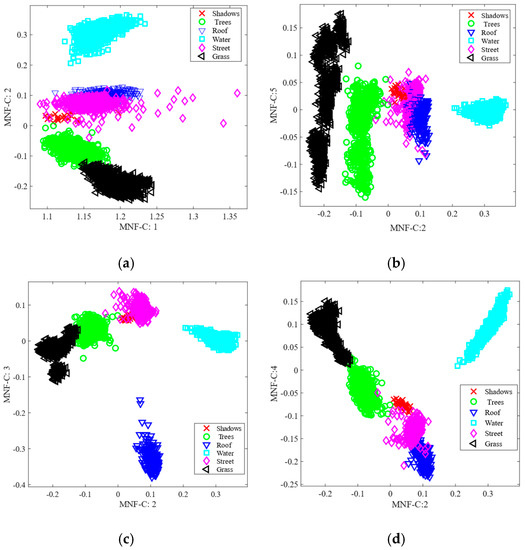

4.2. Analysis of Feature Extraction and Feature Selection

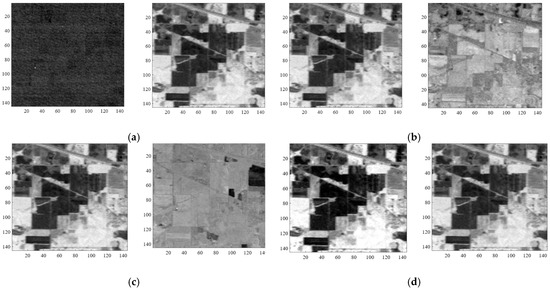

During feature extraction, the MNF technique generates new features using the transformation principles. To improve the feature subset, features are then chosen by applying the normalized CCRE to the newly generated features. It is possible to select a noisy feature using Equation (14). Furthermore, two weak MNF components might yield a large difference value, and the algorithm can then pick a useless component that is only weakly connected to the ground truth image. We implemented a user-defined threshold, T, to prevent the use of less informative features in classification. The advantage of using T is that it rejects noisy features early, during the preprocessing step. As a result, the possibility of selecting noisy features is reduced, and the order of the chosen features is clear. To assess the robustness of the proposed approach, we compared it to the MNF, CCRE, MNF-MI, and MNF-CCRE methods. Table 2 outlines the abbreviations of the different methods studied. For all studied and proposed methods, the order of the ranked features is presented in Table 3, Table 4 and Table 5 for the three datasets, respectively. The proposed method (MNF-nCCREmRMR), as shown in Table 3, ranks MNF component two (MNF-C: 2) and MNF component four (MNF-C: 4) as the top two features, in contrast to the traditional MNF method, which ranks MNF component one (MNF-C: 1) and MNF component two (MNF-C: 2) as the first and second-ranked features, respectively. Figure 5 provides a graphic representation of the first two ranked features of MNF, MNF-MI, MNF-CCRE, and the proposed approach for the AVIRIS image. The illustration shows that the features chosen by the proposed approach are visually clearer than those chosen by the other approaches.

Table 2.

Description of different studied and proposed methods.

Table 3.

The order of selected features for AVIRIS IP dataset.

Table 4.

The order of selected features for HYDICE WDM dataset.

Table 5.

The order of selected features for ROSIS PU dataset.

Figure 5.

Visual representation of first two ranked features of the AVIRIS IP dataset: (a) MNF; (b) MNF-MI; (c) MNF-CCRE; and (d) MNF-nCCREmRMR.

4.3. Parameters Tuning for Classification

To evaluate the effectiveness of the chosen features, we used the KSVM classifier with the Gaussian (RBF) kernel to classify the HSIs. In the classifier, we used a grid search strategy based on tenfold cross-validation to determine the optimal values for the cost parameter, C, and the kernel width, γ [37]. The complete parameter tuning results for all the studied and proposed methods on the three datasets are presented in Table 6, Table 7 and Table 8, respectively. In particular, we obtained the optimal parameters C = 2.9 and γ = 0.5 for the AVIRIS dataset, C = 2.4 and γ = 2.1 for the HYDICE dataset, and C = 2.8 and γ = 3 for the ROSIS PU HSI.
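The grid search with k-fold cross-validation described above can be sketched generically as follows. This is not the MATLAB procedure used in the experiments: the functions `kfold_indices` and `grid_search`, the caller-supplied `fit_and_score` callable, and the stand-in scoring function are all hypothetical and serve only to show the control flow. In practice the samples would be shuffled or stratified before splitting, and `fit_and_score` would train an RBF-kernel SVM on the training indices and return its validation accuracy.

```python
import itertools

def kfold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds of near-equal size."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def grid_search(c_values, gamma_values, n_samples, k, fit_and_score):
    """Return (best_c, best_gamma, best_mean_score) over the parameter grid.

    fit_and_score(c, gamma, train_idx, val_idx) is a caller-supplied (hypothetical)
    callable that trains a classifier and returns its validation accuracy.
    """
    folds = kfold_indices(n_samples, k)
    best = None
    for c, gamma in itertools.product(c_values, gamma_values):
        scores = []
        for i, val_idx in enumerate(folds):
            # Train on every fold except the one held out for validation.
            train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
            scores.append(fit_and_score(c, gamma, train_idx, val_idx))
        mean_score = sum(scores) / k
        if best is None or mean_score > best[2]:
            best = (c, gamma, mean_score)
    return best

# Stand-in scorer (assumption): peaks at C = 2.0, gamma = 0.5; no real classifier is trained.
def toy_score(c, gamma, train_idx, val_idx):
    return 1.0 - abs(c - 2.0) - abs(gamma - 0.5)

best_c, best_gamma, best_score = grid_search(
    [1.0, 2.0, 3.0], [0.1, 0.5, 1.0], n_samples=50, k=10, fit_and_score=toy_score
)
```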

Table 6.

Parameter tuning results using tenfold cross-validation for the AVIRIS IP dataset.

Table 7.

Parameter tuning results using tenfold cross-validation for the HYDICE WDM dataset.

Table 8.

Parameter tuning results using tenfold cross-validation for the ROSIS PU dataset.

4.4. Classification Performance Evaluation Metrics

In this study, the performance of the proposed method was assessed using commonly used quality indicators, including overall accuracy (OA), average accuracy (AA), the Kappa coefficient, and the F1 score. The OA is the proportion of all pixels that are correctly classified; it can be calculated as follows:

$$\mathrm{OA} = \frac{1}{B} \sum_{i=1}^{C} A_{ii}. \tag{18}$$

The confusion matrix, $A$, is determined by comparing the classification map with the ground truth image. The number of classes is denoted by $C$ in this equation. $A_{ii}$ represents the number of samples in class $i$ that are classified as class $i$, while $B$ represents the total number of test samples. AA stands for the average value of the proportion of pixels in each class that have been correctly classified. This value is derived as follows:

$$\mathrm{AA} = \frac{1}{C} \sum_{i=1}^{C} \frac{A_{ii}}{A_{i+}}, \tag{19}$$

where $A_{ii}$ stands for the total number of samples belonging to class $i$ and classified as class $i$, and $A_{i+}$ represents the total number of samples classified as class $i$. The Kappa coefficient computes the proportion of correctly classified pixels adjusted for the number of agreements expected purely by chance. It indicates how much better the classification performed than a random assignment of pixels to categories and can be calculated using the notation of Equations (18) and (19) as:

$$\kappa = \frac{B \sum_{i=1}^{C} A_{ii} - \sum_{i=1}^{C} A_{i+} A_{+i}}{B^2 - \sum_{i=1}^{C} A_{i+} A_{+i}}, \tag{20}$$

where $A_{+i}$ represents the total number of actual samples of class $i$. Now, the F1 score is calculated as follows:

$$F1 = \frac{2 \times \mathrm{precision} \times \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}}, \tag{21}$$

where the precision and recall can be calculated as follows:

$$\mathrm{precision} = \frac{TP}{TP + FP}, \qquad \mathrm{recall} = \frac{TP}{TP + FN}. \tag{22}$$

In Equation (22), TP, FP, and FN denote the numbers of true positive, false positive, and false negative classifications of the testing samples of multiple classes.
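The four indicators can be computed from a confusion matrix as in the sketch below. Two assumptions are made for this illustration and may differ from the setup used in the experiments: rows of the matrix index the reference (actual) class and columns the predicted class, and the multi-class F1 score is macro-averaged over the per-class scores. The function name `classification_metrics` and the toy matrix are hypothetical.

```python
def classification_metrics(confusion):
    """Return (OA, AA, Kappa, macro-F1) from a square confusion matrix.

    Assumption: confusion[i][j] counts samples of reference class i predicted as class j,
    and every class has at least one correctly classified sample.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    diag = [confusion[i][i] for i in range(n_classes)]
    row_sums = [sum(row) for row in confusion]                                 # reference totals
    col_sums = [sum(row[j] for row in confusion) for j in range(n_classes)]   # predicted totals

    oa = sum(diag) / total
    # Average of the per-class accuracies (correct samples over reference samples).
    aa = sum(d / r for d, r in zip(diag, row_sums)) / n_classes
    # Chance agreement term shared by the numerator and denominator of Kappa.
    chance = sum(r * c for r, c in zip(row_sums, col_sums))
    kappa = (total * sum(diag) - chance) / (total ** 2 - chance)

    f1_scores = []
    for tp, r, c in zip(diag, row_sums, col_sums):
        precision = tp / c   # TP / (TP + FP)
        recall = tp / r      # TP / (TP + FN)
        f1_scores.append(2 * precision * recall / (precision + recall))
    macro_f1 = sum(f1_scores) / n_classes
    return oa, aa, kappa, macro_f1

# Toy two-class confusion matrix (assumption).
oa, aa, kappa, macro_f1 = classification_metrics([[8, 2], [1, 9]])
```

For this toy matrix the OA and AA are both 0.85 and the Kappa coefficient is 0.7.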

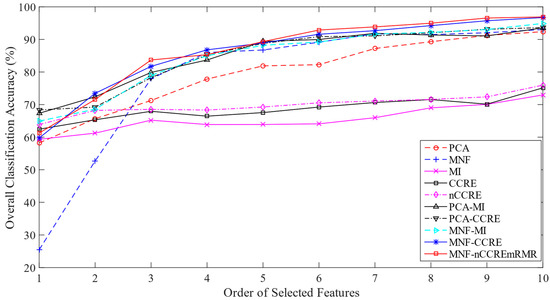

4.5. Classification Results on the AVIRIS IP Dataset

In this experiment, we took approximately 50% of the samples of each class as the training set and the remaining 50% as the testing set from a total of 2401 samples. The information regarding the samples utilized for both training and testing is presented in Table S1. As shown in Figure 2, the ground-truth image served as the basis for selecting both the training and testing samples to be used in the classification process. We calculated the OA of the AVIRIS data without feature extraction and feature selection and obtained 66.85% using the first ten features. This result provides motivation to reduce the number of features used in HSI classification. Table 9 shows the values of the OA, AA, Kappa, and F1 score of each method. As shown in the table, the proposed MNF-nCCREmRMR approach achieves the highest OA, AA, and Kappa values and performs better than the compared methods on every evaluation criterion. The line graphs presented in Figure 6 compare the proposed and studied methods in terms of the OA versus the number of ranked features. As the number of features increases, the OA increases too.

Table 9.

Classification performance measure (%) on the AVIRIS IP HSI.

Figure 6.

Overall classification accuracy versus features for the AVIRIS IP dataset.

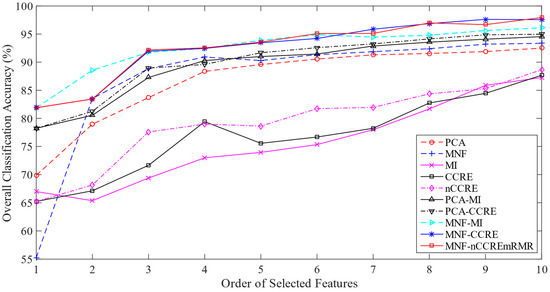

4.6. Classification Results on the HYDICE WDM Dataset

In this experiment, we took approximately 30% of the samples of each class as the training set and the remaining 70% as the testing set from a total of 5154 samples. Table S2 presents the information regarding the samples used for both training and testing. As shown in Figure 3, the ground-truth image is used to choose both the training samples and the testing samples for classification. Table 10 shows the values of the OA, AA, Kappa, and F1 score of each method. Using the same number of selected features, the proposed MNF-nCCREmRMR method achieves an OA of 99.71%. This table demonstrates that the proposed MNF-nCCREmRMR approach performs better than the compared methods on every evaluation criterion. In addition, the line graphs presented in Figure 7 compare the proposed method with the others. It can be seen that the overall classification accuracy increases as the number of ranked features increases.

Table 10.

Classification performance measure (%) on the HYDICE WDM HSI.

Figure 7.

Overall classification accuracy versus features for the HYDICE WDM dataset.

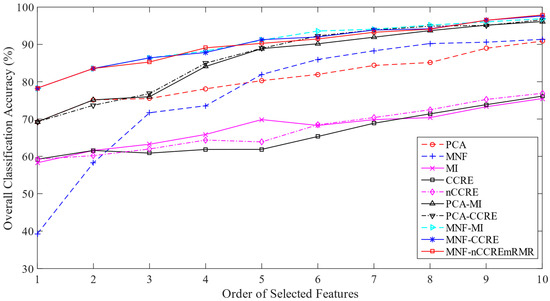

4.7. Classification Results on the ROSIS PU Dataset

For the ROSIS PU dataset, we took approximately 17% of the samples of each class as the training set and the remaining 83% as the testing set from a total of 20,075 samples. The detailed information of the training and testing samples is presented in Table S3. The ground-truth image is used to select the training and testing samples for classification, as shown in Figure 4. Table 11 shows the results of the OA, AA, Kappa, and F1 score of each method. Using the same number of selected features, the proposed MNF-nCCREmRMR method achieves a classification accuracy of 98.35%. This table also demonstrates that the proposed MNF-nCCREmRMR method outperformed the other methods on all performance metrics. Based on the line graph presented in Figure 8, it can be seen that as the number of features increases, the overall classification accuracy also increases.

Table 11.

Classification performance measure (%) on the ROSIS PU HSI.

Figure 8.

Overall classification accuracy versus features for the ROSIS PU dataset.

We next calculated the OA on the three datasets for various training-to-testing ratios to confirm the robustness of the proposed feature reduction technique in comparison with the investigated state-of-the-art feature reduction techniques. Table 12 shows the OA on the three datasets with 10%, 20%, and 30% of the samples used for training. The results reveal that the proposed method was better than the investigated state-of-the-art feature reduction methods for each of the three HSI datasets. In addition, we tested the investigated and proposed methods with three classifiers (Naïve Bayes, decision tree, and SVM); the results for the three datasets are presented in Table 13, Table 14 and Table 15, respectively. From these tables, we can conclude that the proposed method outperforms the studied methods.

Table 12.

OA measure using three different training: testing ratios on the three HSI datasets.

Table 13.

Classification performance measure (%) on the AVIRIS IP HSI for different dimension reduction methods and classification methods.

Table 14.

Classification performance measure (%) on the HYDICE WDM HSI for different dimension reduction methods and classification methods.

Table 15.

Classification performance measure (%) on the ROSIS PU HSI for different dimension reduction methods and classification methods.

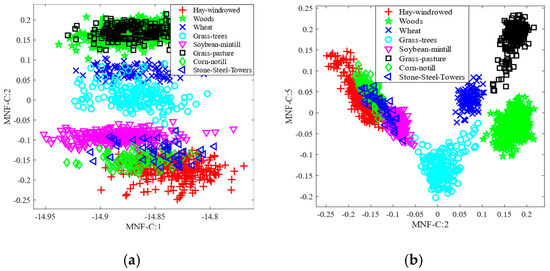

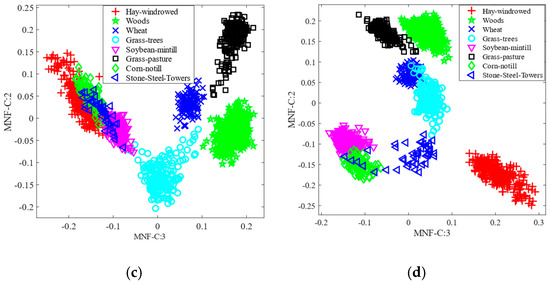

4.8. Features Scatter Plot Analysis

Here, we analyze the feature space using scatter plots of the first two selected features to evaluate the robustness of the proposed method (MNF-nCCREmRMR). Figure 9 depicts the feature space for the AVIRIS IP dataset for the conventional approaches (MNF, MNF-MI, and MNF-CCRE) and the proposed method. We used eight classes in the scatter plot to keep it readable. In this case, the standard MNF and MNF-MI methods exhibit greater class overlap, as shown in Figure 9a,b. In contrast, the classes are more visually separable with the proposed MNF-nCCREmRMR method. Similarly, the feature space for the traditional MNF, MNF-MI, and MNF-CCRE methods and the proposed approach on the HYDICE WDM dataset is depicted in Figure 10. As shown in Figure 10a,b, the classes overlap more closely, whereas with the proposed method, shown in Figure 10d, the class samples are more distinguishable than with the investigated methods. This also demonstrates how applying normalized CCRE with the mRMR approach to the MNF data improves the discriminative quality of the selected features.

Figure 9.

Feature space on the AVIRIS IP dataset: (a) MNF; (b) MNF-MI; (c) MNF-CCRE; and (d) MNF-nCCREmRMR.

Figure 10.

Feature space on the HYDICE WDM dataset: (a) MNF; (b) MNF-MI; (c) MNF-CCRE; and (d) MNF-nCCREmRMR.

4.9. Extended Analysis

Each method's execution time is analyzed and listed in this section for comparison. The experiments were carried out using MATLAB R2014b on a desktop computer running the Microsoft Windows 10 operating system and powered by an Intel Core i5 3.2 GHz processor. Table 16 presents the execution time of each method for the different datasets, from which it can be seen that MNF-nCCREmRMR is computationally comparable with the existing methods. In addition, the robustness of the proposed MNF-nCCREmRMR method for multiclass classification was assessed using the error matrices. Tables S4–S6 show the error matrices for the AVIRIS IP, HYDICE WDM, and ROSIS PU datasets, respectively. All three error matrices illustrate that the proposed method predicts almost all classes correctly, with very few exceptions.

Table 16.

The computational time (in seconds) of each method on the AVIRIS IP, HYDICE WDM, and ROSIS PU datasets.
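For readers unfamiliar with error matrices, the following minimal Python sketch shows how such a matrix and the overall accuracy derived from it can be computed. It is an illustration under stated assumptions and not the code used to produce Tables S4–S6: the function names and toy labels are hypothetical, class labels are assumed to be integers starting at 0, and rows are assumed to index the reference class while columns index the predicted class.

```python
import numpy as np


def error_matrix(true_labels, predicted_labels, n_classes):
    """Count samples per (reference class, predicted class) pair."""
    true_labels = np.asarray(true_labels)
    predicted_labels = np.asarray(predicted_labels)
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly when the same (row, col) pair repeats.
    np.add.at(matrix, (true_labels, predicted_labels), 1)
    return matrix


def overall_accuracy(matrix):
    """Fraction of samples on the diagonal, i.e., correctly classified."""
    return np.trace(matrix) / matrix.sum()


def per_class_accuracy(matrix):
    """Diagonal count divided by the row total (assumes every class has samples)."""
    return np.diag(matrix) / matrix.sum(axis=1)


# Toy labels (hypothetical), three classes with two test samples each.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
demo_matrix = error_matrix(y_true, y_pred, n_classes=3)
demo_oa = overall_accuracy(demo_matrix)
```

In this toy case four of the six samples lie on the diagonal, so the overall accuracy is 4/6; off-diagonal entries identify which classes are confused with each other.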

5. Conclusions

This study proposes a dimension reduction strategy that combines feature extraction and feature selection in order to find a relevant subset of features for efficient HSI classification. Specifically, we used the widely adopted MNF technique for feature extraction and the information-theoretic CCRE measure for feature selection. The normalized CCRE was employed alongside the mRMR-driven feature selection criterion to enhance the quality of the chosen features. The KSVM classifier was used to analyze the performance of the resulting feature subsets on three real HSIs. The experimental results showed a considerable improvement in both the quality of the selected features and the classification accuracy. The results show that applying normalized CCRE to the MNF data with the mRMR criterion yields subsets of informative features. The experiments also show that, in comparison to the traditional MNF, feature selection using normalized CCRE after the MNF transformation improves the quality of the selected features. This is because the proposed approach (MNF-nCCREmRMR) selects a subset of less noisy features that provide relevant information about the objects of interest and ignores redundant features. The improvement in classification accuracy and the feature space analysis demonstrate the robustness of the proposed technique.
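The selection step summarized above can be illustrated with a minimal Python sketch of greedy mRMR selection. This is a hedged illustration and not the authors' implementation: it assumes that a relevance score for each MNF feature and a pairwise redundancy score between features (for example, normalized CCRE values) have already been computed, and it uses the common difference form of the mRMR criterion (relevance minus mean redundancy with the already selected features), which may differ in detail from the criterion defined earlier in the paper. The function name and the toy scores are hypothetical.

```python
import numpy as np


def greedy_mrmr(relevance, redundancy, n_select):
    """Greedily pick features with high relevance and low mean redundancy.

    relevance:  (d,) score of each feature with respect to the class labels.
    redundancy: (d, d) symmetric matrix of pairwise feature-to-feature scores.
    """
    relevance = np.asarray(relevance, dtype=float)
    redundancy = np.asarray(redundancy, dtype=float)
    # Start from the single most relevant feature.
    selected = [int(np.argmax(relevance))]
    while len(selected) < min(n_select, relevance.size):
        # Mean redundancy of every candidate with the features chosen so far.
        mean_redundancy = redundancy[:, selected].mean(axis=1)
        score = relevance - mean_redundancy
        score[selected] = -np.inf  # never re-select a feature
        selected.append(int(np.argmax(score)))
    return selected


# Toy scores (hypothetical): features 0 and 1 are both relevant but highly redundant.
demo_relevance = [0.90, 0.85, 0.30]
demo_redundancy = [[1.0, 0.8, 0.1],
                   [0.8, 1.0, 0.1],
                   [0.1, 0.1, 1.0]]
demo_selected = greedy_mrmr(demo_relevance, demo_redundancy, n_select=2)
```

In the toy example, feature 1 is nearly as relevant as feature 0 but largely duplicates it, so the second pick is feature 2. This is the behavior the conclusion refers to when it states that redundant features are ignored.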

Future Work

Although deep learning is now a popular tool for HSI analysis, it requires a large amount of labeled data, which can be costly and time-consuming to obtain. Therefore, in the future, MNF-nCCREmRMR could be coupled with deep-learning-based approaches to extract both spectral and spatial characteristics of HSIs, further improving classification performance and helping to overcome this limitation of deep-learning-based HSI analysis.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/rs15041147/s1, Table S1. Training and testing samples of the AVIRIS IP dataset. Table S2. Training and testing samples of the HYDICE WDM dataset. Table S3. Training and testing samples of the ROSIS PU dataset. Table S4. Error matrix using the MNF-nCCREmRMR for the IP dataset. Table S5. Error matrix using the MNF-nCCREmRMR for the WDM dataset. Table S6. Error matrix using the MNF-nCCREmRMR for the ROSIS PU dataset.

Author Contributions

Conceptualization, M.R.I., A.S. and M.P.U.; methodology, M.R.I., M.P.U. and M.I.A.; software, M.R.I.; validation, M.R.I., A.S. and M.P.U.; formal analysis, M.R.I., A.S. and M.I.A.; investigation, M.R.I., A.S., M.I.A. and M.P.U.; resources, M.R.I., A.S. and A.U.; data curation, M.R.I., A.S. and M.P.U.; writing – original draft preparation, M.R.I. and A.S.; writing – review and editing, M.I.A., M.P.U. and A.U.; visualization, M.R.I., A.S., M.I.A. and M.P.U.; supervision, M.I.A., M.P.U. and A.U.; funding acquisition, M.P.U. and A.U. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The AVIRIS Indian Pines data are available at https://purr.purdue.edu/publications/1947/1 (accessed on 15 February 2023), while the HYDICE Washington DC Mall data are available at https://engineering.purdue.edu/~biehl/MultiSpec/hyperspectral.html (accessed on 15 February 2023). The ROSIS Pavia University dataset can be found at https://ieee-dataport.org/documents/hyperspectral-data (accessed on 15 February 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Richards, J.A.; Richards, J. Remote Sensing Digital Image Analysis; Springer: Berlin/Heidelberg, Germany, 1999; Volume 3.

- Campbell, J.B.; Wynne, R.H. Introduction to Remote Sensing; Guilford Press: New York, NY, USA, 2011.

- Chen, Y.; Zhao, X.; Jia, X. Spectral–spatial classification of hyperspectral data based on deep belief network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2381–2392.

- Islam, M.R.; Ahmed, B.; Hossain, M.A. Feature reduction based on segmented principal component analysis for hyperspectral images classification. In Proceedings of the 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox’s Bazar, Bangladesh, 7–9 February 2019.

- Jia, X.; Kuo, B.-C.; Crawford, M.M. Feature mining for hyperspectral image classification. Proc. IEEE 2013, 101, 676–697.

- Tarabalka, Y.; Chanussot, J.; Benediktsson, J.A. Segmentation and classification of hyperspectral images using watershed transformation. Pattern Recognit. 2010, 43, 2367–2379.

- Lillesand, T.; Kiefer, R.W.; Chipman, J. Remote Sensing and Image Interpretation; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Hughes, G. On the mean accuracy of statistical pattern recognizers. IEEE Trans. Inf. Theory 1968, 14, 55–63.

- Islam, M.R.; Hossain, M.A.; Ahmed, B. Improved subspace detection based on minimum noise fraction and mutual information for hyperspectral image classification. In Proceedings of the International Joint Conference on Computational Intelligence, Budapest, Hungary, 2–4 November 2020.

- Islam, R.; Ahmed, B.; Hossain, A. Feature reduction of hyperspectral image for classification. J. Spat. Sci. 2020, 67, 331–351.

- Luo, G.; Chen, G.; Tian, L.; Qin, K.; Qian, S.-E. Minimum noise fraction versus principal component analysis as a preprocessing step for hyperspectral imagery denoising. Can. J. Remote Sens. 2016, 42, 106–116.

- Yang, X.; Xu, W.D.; Liu, H.; Zhu, L.Y. Research on dimensionality reduction of hyperspectral image under close range. In Proceedings of the 2019 International Conference on Communications, Information System and Computer Engineering (CISCE), Haikou, China, 5–7 July 2019; pp. 171–174.

- Li, H.; Cui, J.; Zhang, X.; Han, Y.; Cao, L. Dimensionality Reduction and Classification of Hyperspectral Remote Sensing Image Feature Extraction. Remote Sens. 2022, 14, 4579.

- Kuo, B.C.; Li, C.H. Kernel Nonparametric Weighted Feature Extraction for Classification. In AI 2005: Advances in Artificial Intelligence. AI 2005. Lecture Notes in Computer Science; Zhang, S., Jarvis, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3809.

- Gao, H.-M.; Zhou, H.; Xu, L.-Z.; Shi, A.-Y. Classification of hyperspectral remote sensing images based on simulated annealing genetic algorithm and multiple instance learning. J. Central South Univ. 2014, 21, 262–271.

- Chang, C.-I.; Du, Q. Interference and noise-adjusted principal components analysis. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2387–2396.

- Fauvel, M.; Chanussot, J.; Benediktsson, J.A. Kernel Principal Component Analysis for the Classification of Hyperspectral Remote Sensing Data over Urban Areas. EURASIP J. Adv. Signal Process. 2009, 2009, 783194.

- Rodarmel, C.; Shan, J. Principal component analysis for hyperspectral image classification. Surv. Land Inf. Sci. 2002, 62, 115–122.

- Hossain, A.; Jia, X.; Pickering, M. Subspace Detection Using a Mutual Information Measure for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2013, 11, 424–428.

- Green, A.; Berman, M.; Switzer, P.; Craig, M. A transformation for ordering multispectral data in terms of image quality with implications for noise removal. IEEE Trans. Geosci. Remote Sens. 1988, 26, 65–74.

- Lixin, G.; Weixin, X.; Jihong, P. Segmented minimum noise fraction transformation for efficient feature extraction of hyperspectral images. Pattern Recognit. 2015, 48, 3216–3226.

- Guo, B.; Gunn, S.; Damper, R.; Nelson, J. Band Selection for Hyperspectral Image Classification Using Mutual Information. IEEE Geosci. Remote Sens. Lett. 2006, 3, 522–526.

- Hossain, M.A.; Jia, X.; Pickering, M. Improved feature selection based on a mutual information measure for hyperspectral image classification. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 June 2012.

- Islam, R.; Ahmed, B.; Hossain, A.; Uddin, P. Mutual Information-Driven Feature Reduction for Hyperspectral Image Classification. Sensors 2023, 23, 657.

- Uddin, M.P.; Mamun, M.A.; Afjal, M.I.; Hossain, M.A. Information-theoretic feature selection with segmentation-based folded principal component analysis (PCA) for hyperspectral image classification. Int. J. Remote Sens. 2021, 42, 286–321.

- Das, S.; Routray, A.; Deb, A.K. Fast Semi-Supervised Unmixing of Hyperspectral Image by Mutual Coherence Reduction and Recursive PCA. Remote Sens. 2018, 10, 1106.

- Machidon, A.L.; Del Frate, F.; Picchiani, M.; Machidon, O.M.; Ogrutan, P.L. Geometrical Approximated Principal Component Analysis for Hyperspectral Image Analysis. Remote Sens. 2020, 12, 1698.

- Uddin, M.P.; Mamun, M.A.; Hossain, M.A. PCA-based feature reduction for hyperspectral remote sensing image classification. IETE Tech. Rev. 2020, 38, 377–396.

- Wang, F.; Vemuri, B.C. Non-Rigid Multi-Modal Image Registration Using Cross-Cumulative Residual Entropy. Int. J. Comput. Vis. 2007, 74, 201–215.

- Rao, M.; Chen, Y.M.; Vemuri, B.C.; Wang, F. Cumulative residual entropy: A new measure of information. IEEE Trans. Inform. Theory 2004, 50, 1220–1228.

- Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- Estevez, P.A.; Tesmer, M.; Perez, C.A.; Zurada, J.M. Normalized Mutual Information Feature Selection. IEEE Trans. Neural Networks 2009, 20, 189–201.

- Tarabalka, Y.; Benediktsson, J.A.; Chanussot, J. Spectral–Spatial Classification of Hyperspectral Imagery Based on Partitional Clustering Techniques. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2973–2987.

- Soelaiman, R.; Asfiandy, D.; Purwananto, Y.; Purnomo, M.H. Weighted kernel function implementation for hyperspectral image classification based on Support Vector Machine. In Proceedings of the International Conference on Instrumentation, Communication, Information Technology, and Biomedical Engineering 2009, Bandung, Indonesia, 23–25 November 2009.

- Huang, X.; Han, X.; Zhang, L.; Gong, J.; Liao, W.; Benediktsson, J.A. Generalized Differential Morphological Profiles for Remote Sensing Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1736–1751.

- Chen, Z.; Jiang, J.; Jiang, X.; Fang, X.; Cai, Z. Spectral-Spatial Feature Extraction of Hyperspectral Images Based on Propagation Filter. Sensors 2018, 18, 1978.

- Hsu, C.-W.; Chang, C.-C.; Lin, C.-J. A Practical Guide to Support Vector Classification; National Taiwan University: Taipei, Taiwan, 2003.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).