The Potential of UAV Data as Refinement of Outdated Inputs for Visibility Analyses

Abstract

1. Introduction

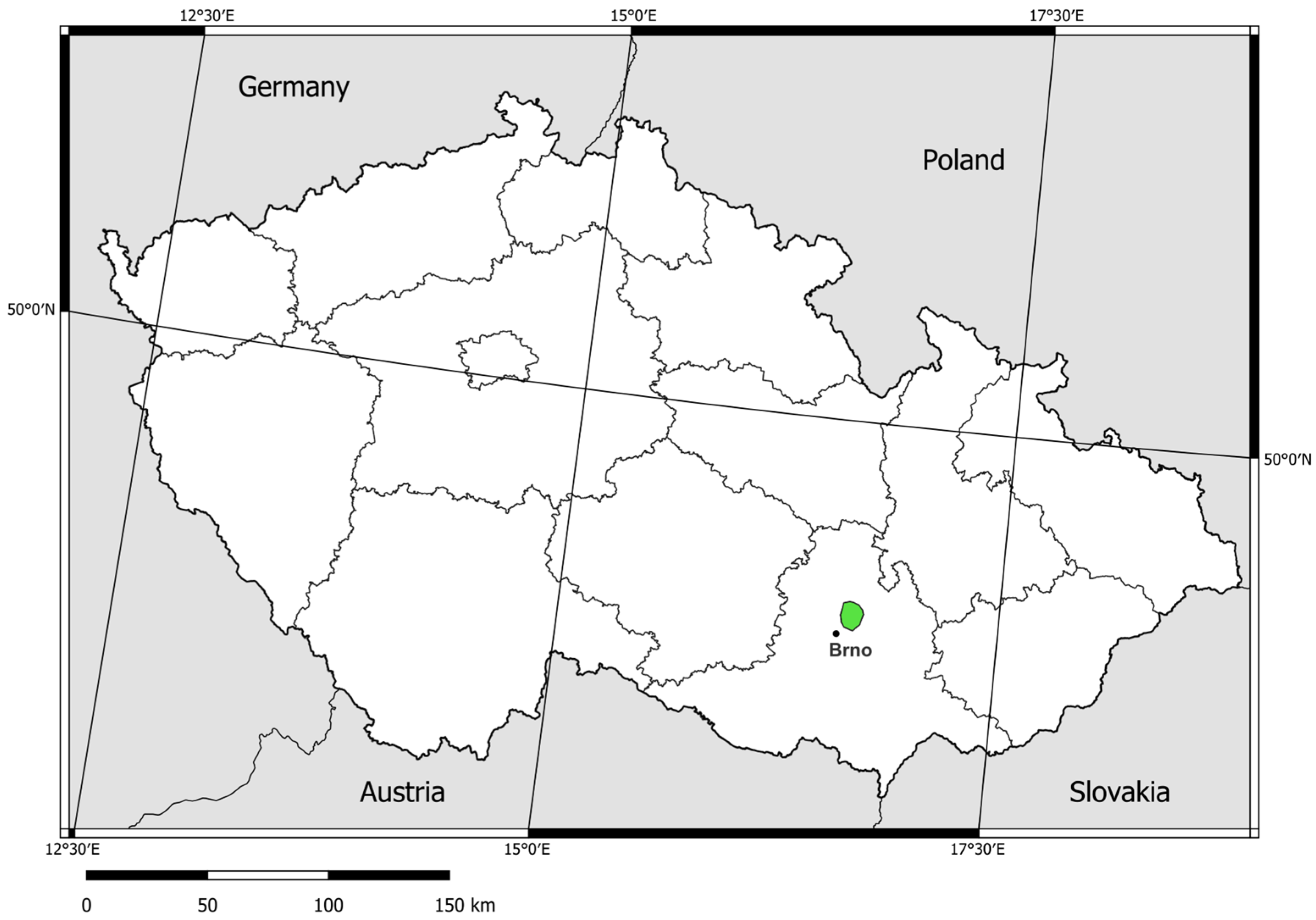

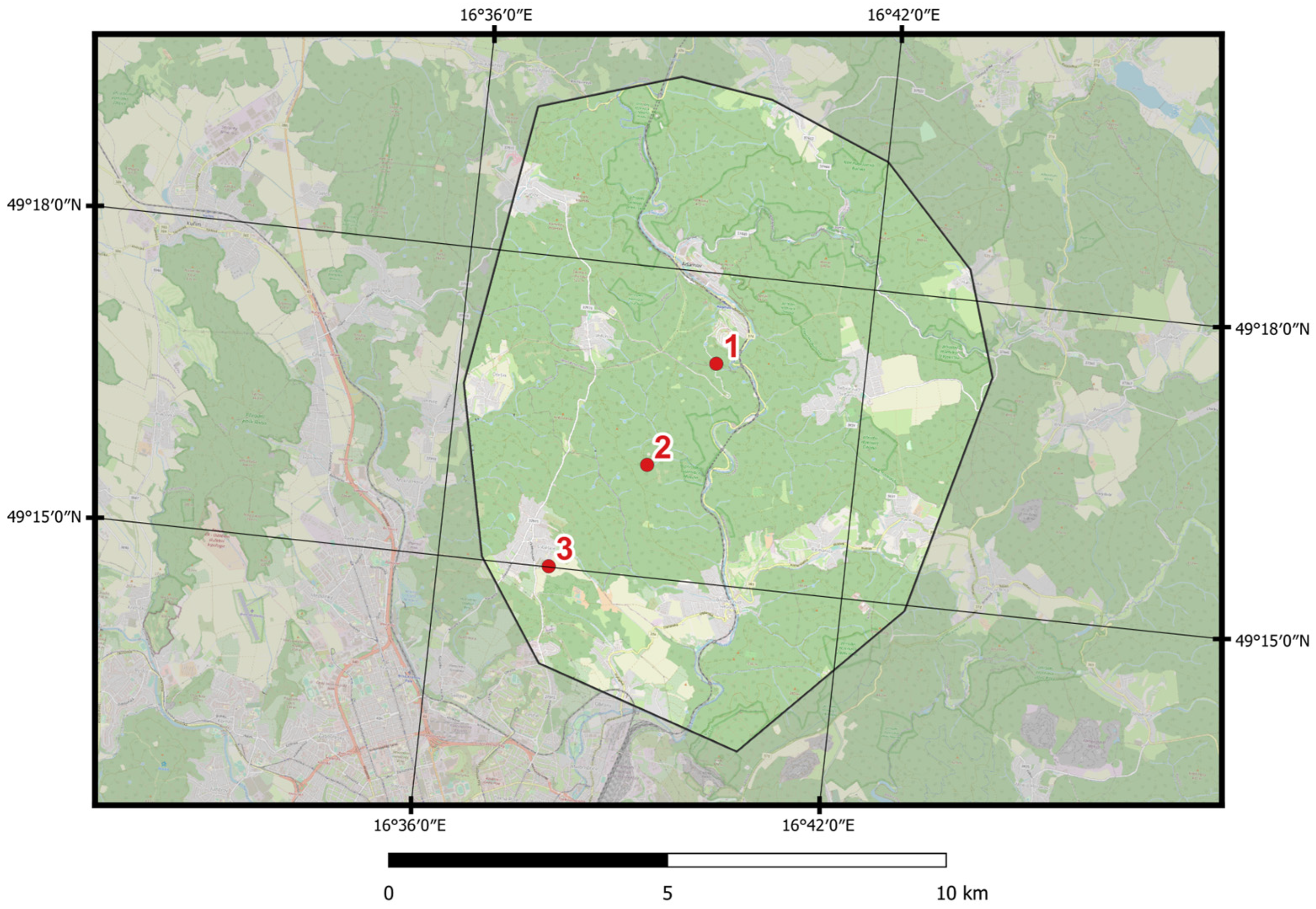

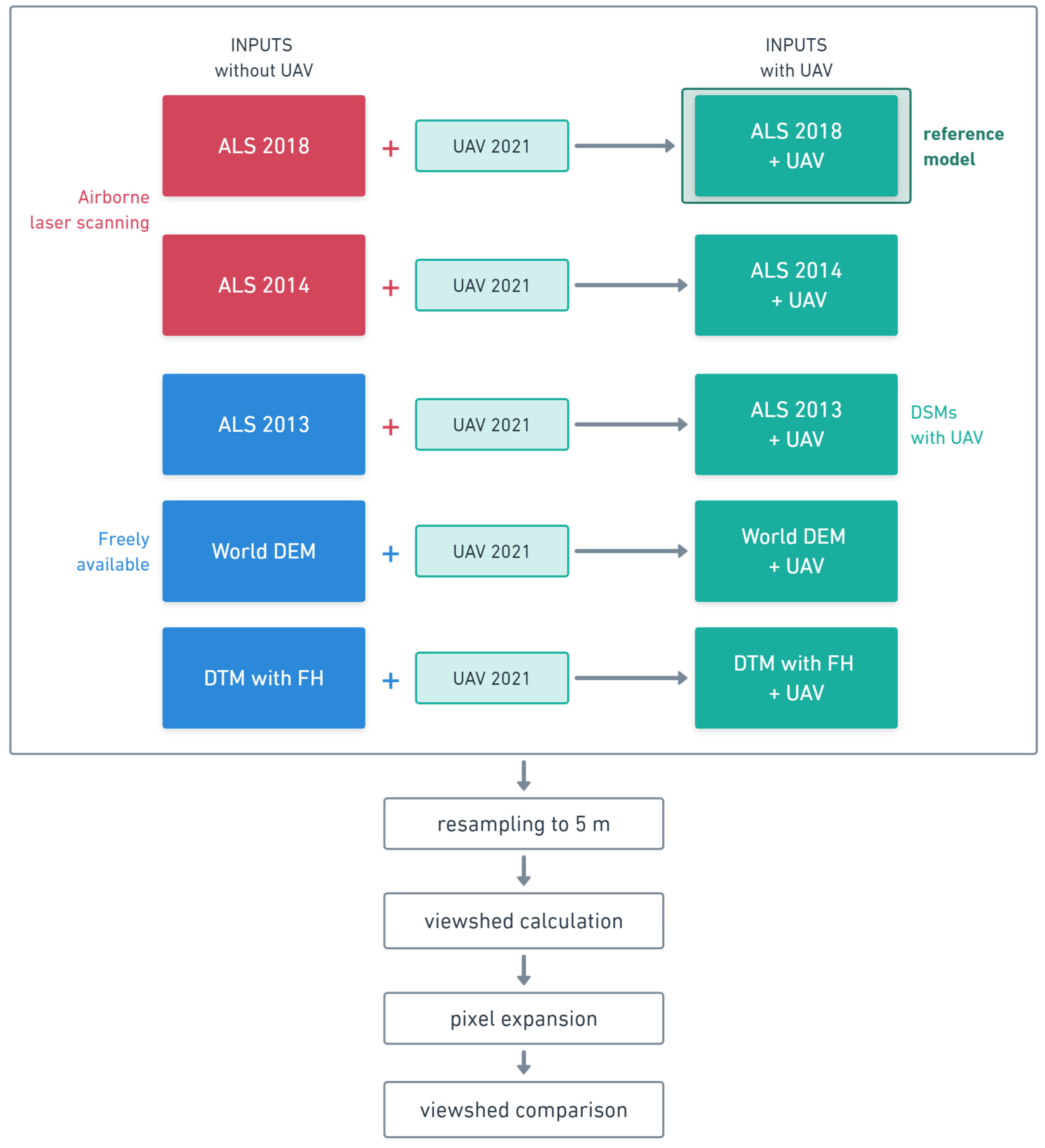

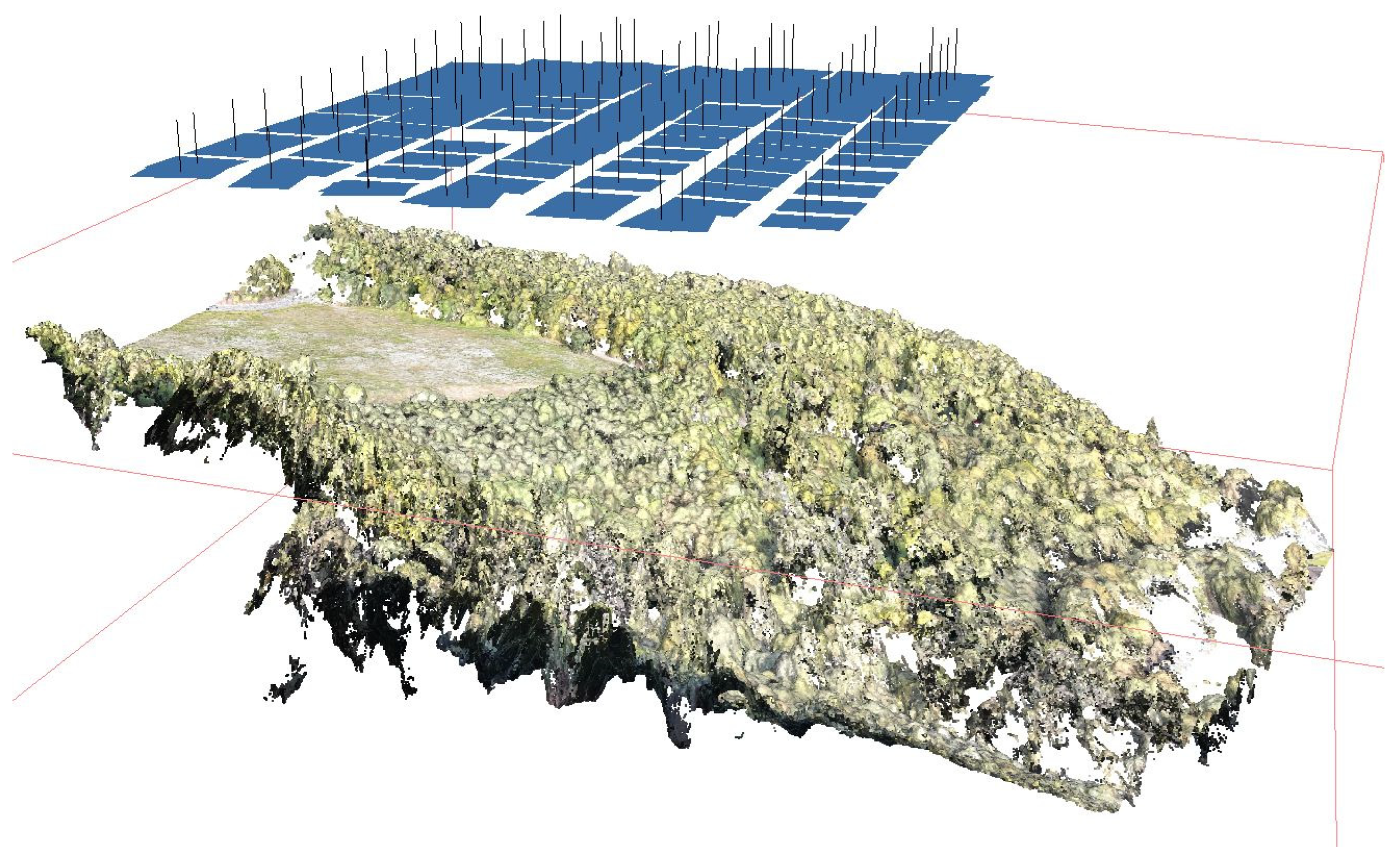

2. Materials and Methods

2.1. Data Sources

| DEM | Data Producer | Data Collection Method | Accessibility | Year of Acquisition | Original Spatial Resolution | Vertical Accuracy | Horizontal Accuracy |
|---|---|---|---|---|---|---|---|
| ALS 2013 | Czech Office for Surveying, Mapping and Cadastre [33] | ALS | Public | 2013 | 1 m | 0.40–0.70 m | 0.70 m |
| ALS 2014 | CzechGlobe [69] | ALS | Private | 2014 | 1 m | 0.15 m | 0.20 m |
| ALS 2018 | CzechGlobe [69] | ALS | Private | 2018 | | | |
| World DEM | Airbus [58] | Combination of methods | Public | 2011–2015 | 24 m | 4 m | 2 m |
| DTM | Czech Office for Surveying, Mapping and Cadastre [34] | ALS | Public | 2013 | 1 m | 0.18–0.30 m | 0.30 m |
| FH | The Global Land Analysis and Discovery (GLAD) [70] | GEDI scanner | Public | 2019 | 30 m | 4 m | 4 m |
| UAV | Authors’ data | UAV | Private | 2021 | 0.15 m | 0.10 m | 0.05 m |
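The "DTM with FH" input pairs a 1 m terrain model with a 30 m forest-height raster. Its name suggests a surface built by adding canopy height to terrain height. The sketch below shows one way to assemble such a surface. It rests on two assumptions of ours, neither documented in the study. First, both grids are already co-registered, so each 30 m cell covers exactly 30 × 30 cells of 1 m. Second, a missing canopy height means bare ground. The helper `dtm_plus_forest_height` is hypothetical, and real rasters would first need proper reprojection and resampling.

```python
import numpy as np


def dtm_plus_forest_height(dtm, fh, factor):
    """Approximate a surface model as terrain height plus canopy height.

    Hypothetical helper (assumption): `fh` is a coarse grid aligned with `dtm`
    so that every coarse cell covers exactly `factor` x `factor` fine cells.
    """
    # Nearest-neighbour upsampling: repeat each coarse cell factor times along both axes.
    fh_fine = np.kron(fh, np.ones((factor, factor)))
    if fh_fine.shape != dtm.shape:
        raise ValueError("forest-height grid does not tile the DTM exactly")
    # Assumption: NaN canopy height (no forest / no data) is treated as bare ground.
    return dtm + np.nan_to_num(fh_fine, nan=0.0)


# Usage: a flat 60 x 60 m terrain at 300 m a.s.l. with a 2 x 2 grid of 30 m canopy cells.
dtm = np.full((60, 60), 300.0)
fh = np.array([[0.0, 25.0], [np.nan, 18.0]])
dsm = dtm_plus_forest_height(dtm, fh, 30)
```

Nearest-neighbour repetition keeps the 30 m block structure of the canopy layer. As a result, the forest edges in the combined surface can be located only to within one coarse cell.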

2.2. Visibility Calculation

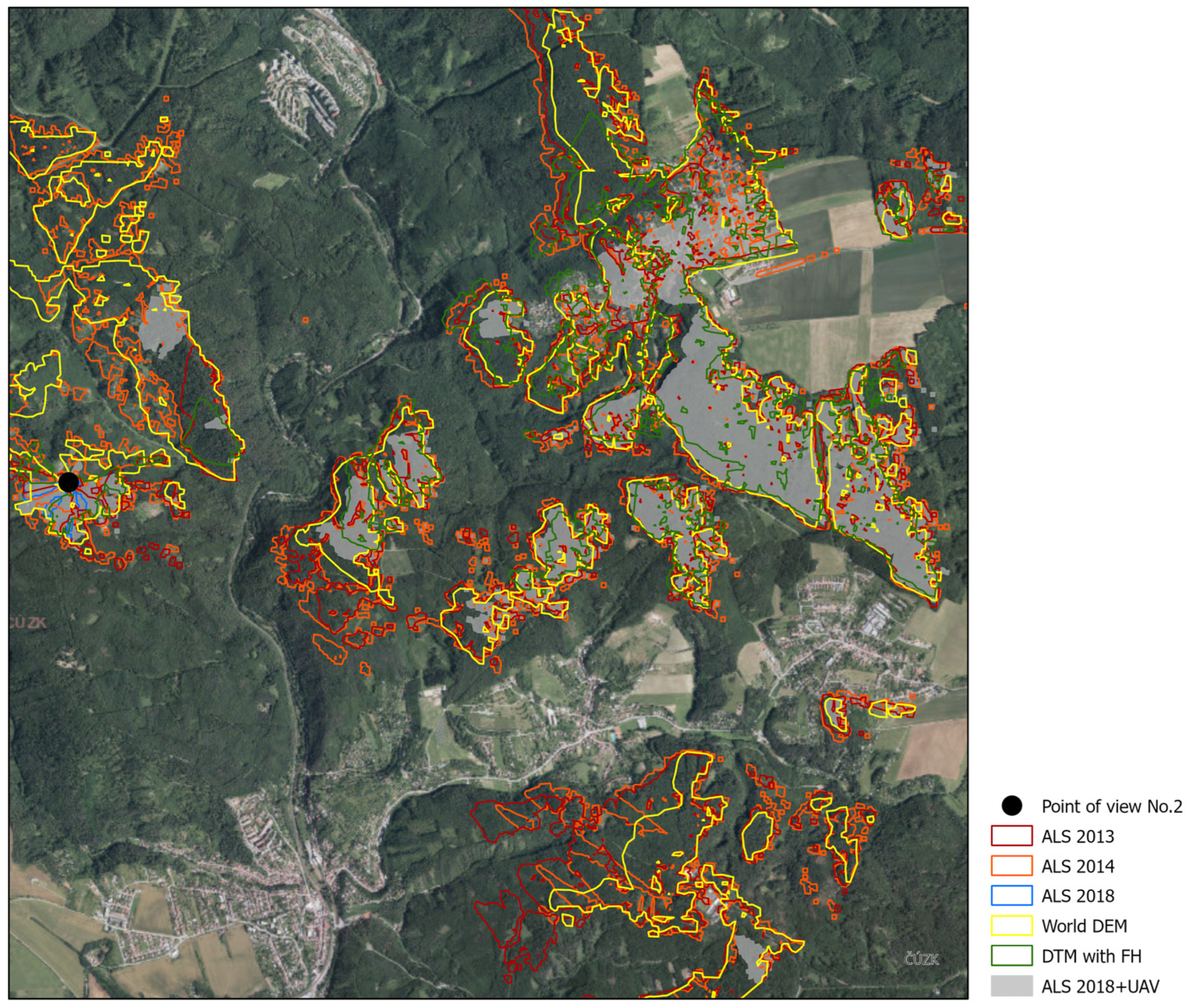

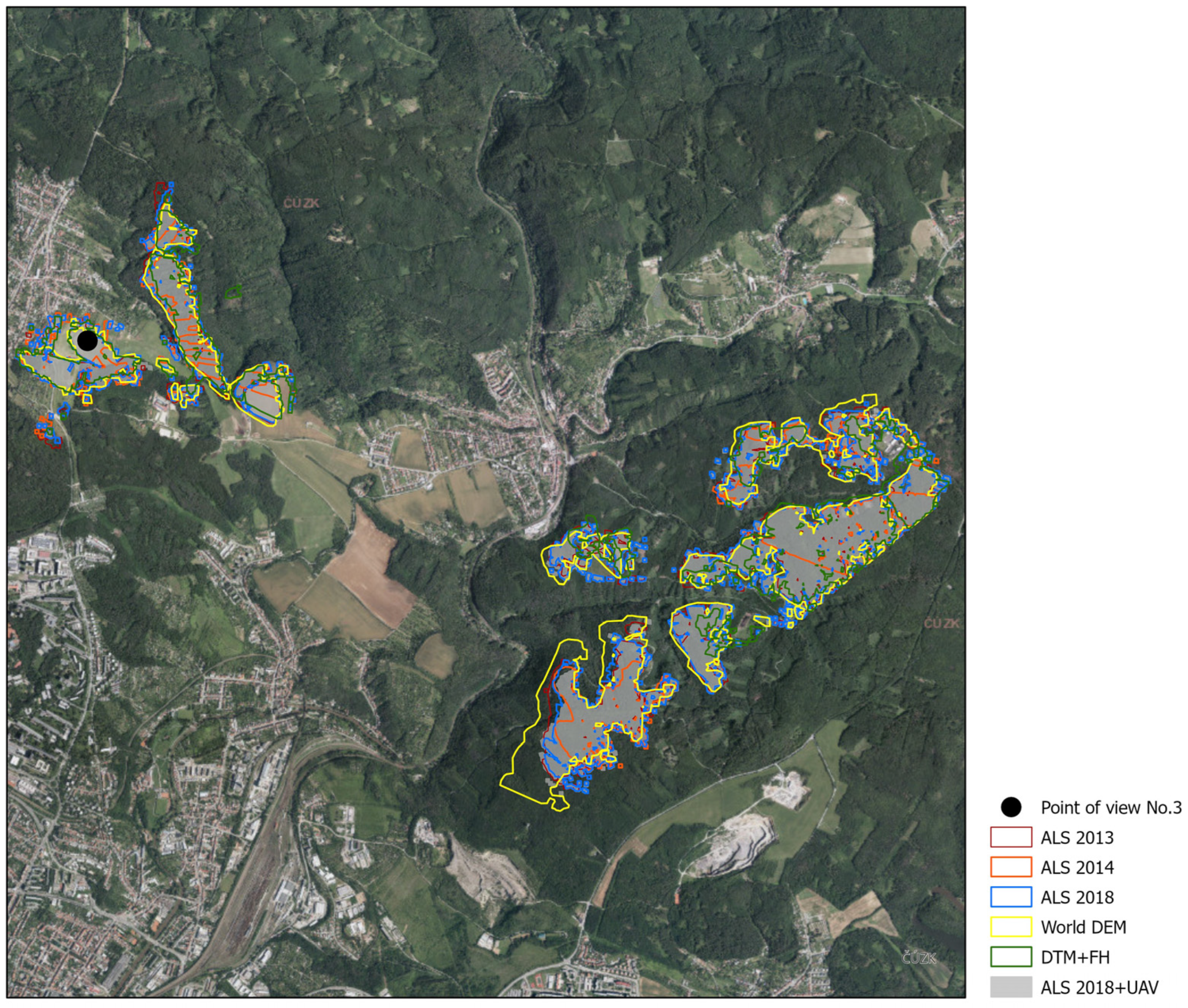

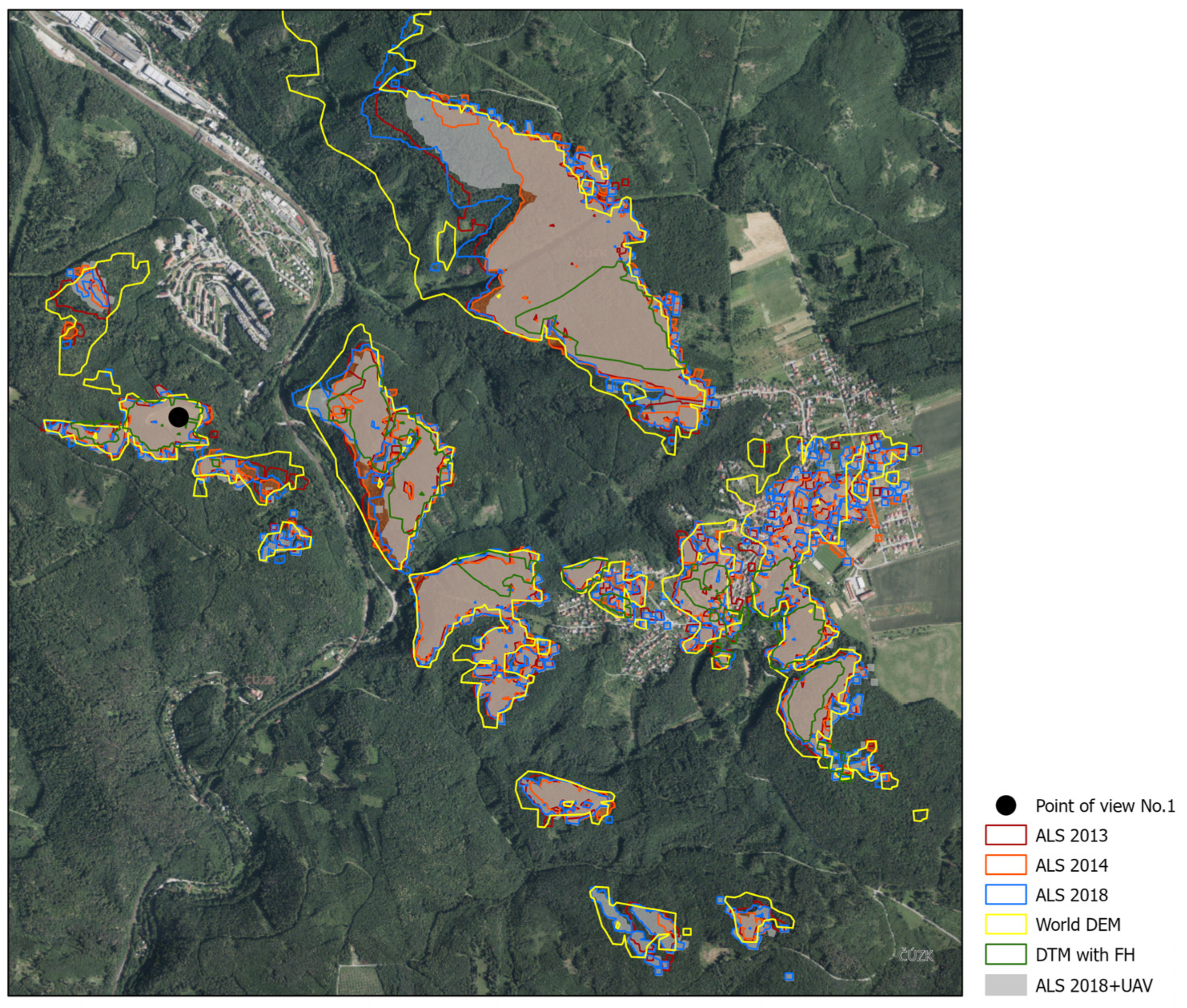

- ALS data from 2013 (ALS 2013);

- ALS data from 2014 (ALS 2014);

- ALS data from 2018 (ALS 2018);

- World DEM from ArcGIS Terrain (World DEM);

- Digital terrain model of Czech Republic with global forest height (DTM with FH).

- 6.

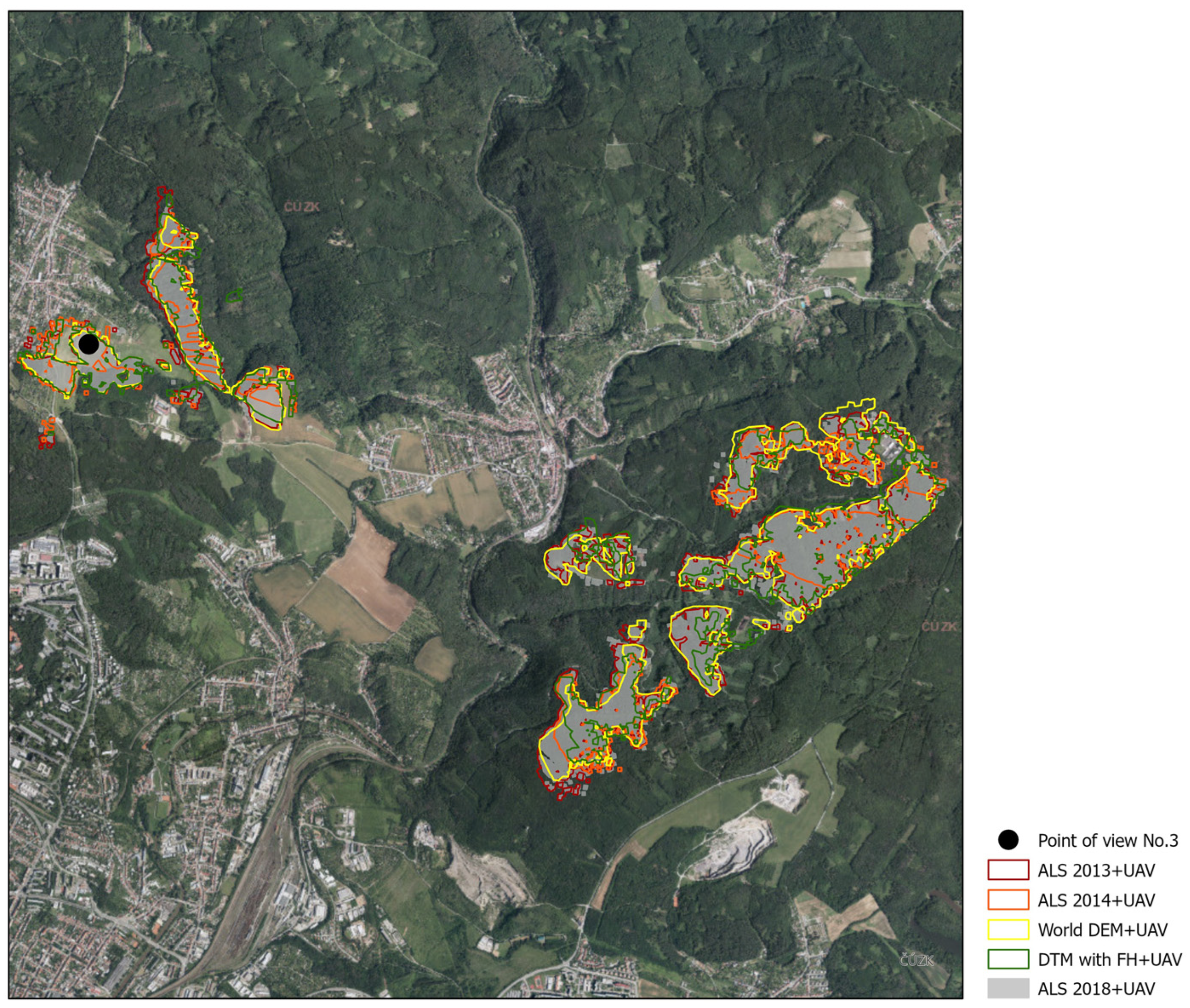

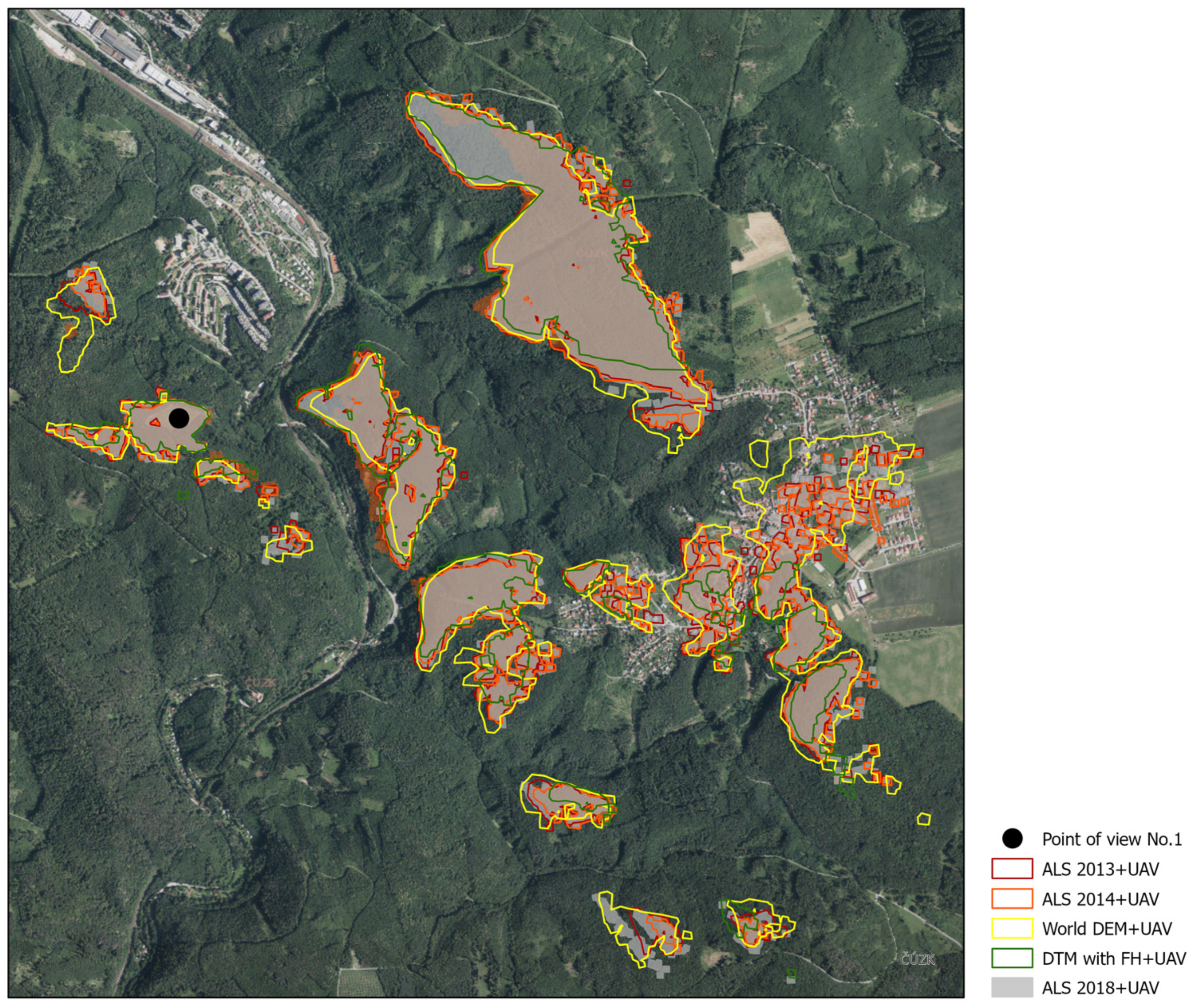

- ALS data from 2013 combined with UAV data (ALS 2013 + UAV);

- 7.

- ALS data from 2014 combined with UAV data (ALS 2014 + UAV);

- 8.

- ALS data from 2018 combined with UAV data (ALS 2018 + UAV);

- 9.

- World DEM from ArcGIS Terrain combined with UAV data (World DEM + UAV);

- 10.

- Digital terrain model of Czech Republic with global forest height combined with UAV data (DTM with FH + UAV).
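Inputs 6 to 10 refresh each older surface with the 2021 UAV data. The following is a minimal sketch of such a combination under two assumptions of ours. First, the UAV surface has already been resampled from 0.15 m to the base grid and aligned with it. Second, UAV values simply replace base values wherever UAV coverage exists. `patch_with_uav` and its arguments are hypothetical. The procedure used in the study may differ, for example in how seams at the patch boundary are treated.

```python
import numpy as np


def patch_with_uav(base_dsm, uav_dsm, row0, col0):
    """Overwrite part of an older DSM with a more recent UAV-derived DSM.

    Hypothetical helper (assumption): `uav_dsm` is already on the base grid and
    (row0, col0) is the base cell matching the UAV raster's upper-left cell.
    NaN cells in `uav_dsm` (outside UAV coverage) keep the base value.
    """
    merged = np.asarray(base_dsm, dtype=float).copy()
    rows, cols = uav_dsm.shape
    window = merged[row0:row0 + rows, col0:col0 + cols]  # a view into `merged`
    if window.shape != uav_dsm.shape:
        raise ValueError("UAV raster extends beyond the base DSM")
    valid = ~np.isnan(uav_dsm)
    window[valid] = uav_dsm[valid]  # writing through the view updates `merged`
    return merged


# Usage: refresh a 2 x 2 block of a 5 x 5 base surface; one UAV cell has no data.
base = np.zeros((5, 5))
uav = np.array([[10.0, np.nan], [12.0, 13.0]])
merged = patch_with_uav(base, uav, 1, 2)
```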

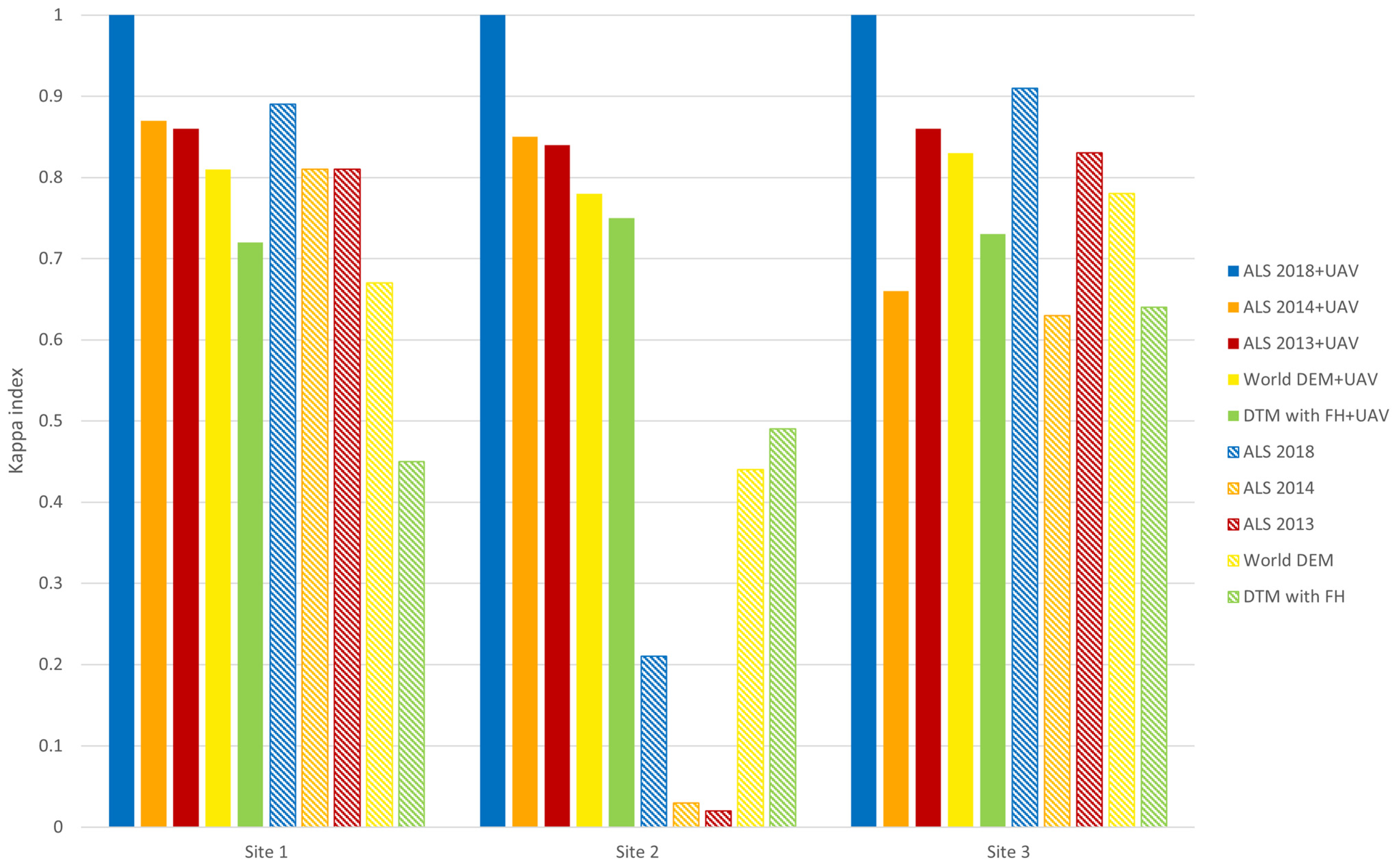
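Each of the ten surfaces then serves as input to the visibility calculation. The reference list includes ArcGIS Pro, but the code below does not reproduce any particular tool's algorithm. It is a brute-force line-of-sight sketch of the principle: a cell counts as visible if no intermediate sample along the sight line rises above the straight line from the observer's eye to the target surface. Several elements are simplifying assumptions of ours: the observer height of 1.6 m, the 1 m cell size, sampling at the nearest cell, and ignoring earth curvature and refraction.

```python
import numpy as np


def viewshed(dsm, obs_row, obs_col, obs_height=1.6, cell_size=1.0):
    """Boolean visibility raster for one observer by brute-force line of sight.

    Illustrative sketch: roughly one nearest-cell sample per cell along each
    sight line; earth curvature and refraction are ignored.
    """
    rows, cols = dsm.shape
    z_eye = dsm[obs_row, obs_col] + obs_height
    visible = np.zeros((rows, cols), dtype=bool)
    visible[obs_row, obs_col] = True
    for r in range(rows):
        for c in range(cols):
            dr, dc = r - obs_row, c - obs_col
            steps = max(abs(dr), abs(dc))
            if steps == 0:
                continue
            dist = np.hypot(dr, dc) * cell_size
            # Gradient of the sight line from the eye to the target surface.
            target_slope = (dsm[r, c] - z_eye) / dist
            blocked = False
            for k in range(1, steps):
                t = k / steps
                rr = int(round(obs_row + t * dr))
                cc = int(round(obs_col + t * dc))
                # An intermediate sample blocks the view if it lies above the sight line.
                if (dsm[rr, cc] - z_eye) / (t * dist) > target_slope:
                    blocked = True
                    break
            visible[r, c] = not blocked
    return visible


# Usage: a 5 m wall three cells from an observer on flat ground hides the cells behind it.
wall = np.zeros((1, 7))
wall[0, 3] = 5.0
vis = viewshed(wall, 0, 0)
```

The brute-force loop costs roughly O(n³) for an n × n grid. Production tools use faster sweep-based algorithms, but the visibility criterion they evaluate is the same.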

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Hyslop, N.P. Impaired visibility: The air pollution people see. Atmos. Environ. 2009, 43, 182–195.

2. Schwartz, M.; Vinnikov, M.; Federici, J. Adding Visibility to Visibility Graphs: Weighting Visibility Analysis with Attenuation Coefficients. arXiv 2021, arXiv:2108.04231.

3. Malm, W.C. Visibility metrics. In Visibility: The Seeing of Near and Distant Landscape Features; Elsevier: Amsterdam, The Netherlands, 2016; pp. 93–115.

4. Campbell, M.J.; Dennison, P.E.; Hudak, A.T.; Parham, L.M.; Butler, B.W. Quantifying understory vegetation density using small-footprint airborne LiDAR. Remote Sens. Environ. 2018, 215, 330–342.

5. Fisher, P.F. An exploration of probable viewsheds in landscape planning. Environ. Plan. B Plan. Des. 1995, 22, 527–546.

6. Wu, Z.; Wang, Y.; Gan, W.; Zou, Y.; Dong, W.; Zhou, S.; Wang, M. A Survey of the Landscape Visibility Analysis Tools and Technical Improvements. Int. J. Environ. Res. Public. Health 2023, 20, 1788.

7. Achilleos, G.; Tsouchlaraki, A. Visibility and Viewshed Algorithms in an Information System for Environmental Management. In Management Information Systems; McGraw-Hill: New York, NY, USA, 2004; pp. 109–121.

8. Lagner, O.; Klouček, T.; Šímová, P. Impact of input data (in)accuracy on overestimation of visible area in digital viewshed models. PeerJ 2018, 6, e4835.

9. Inglis, N.C.; Vukomanovic, J.; Costanza, J.; Singh, K.K. From viewsheds to viewscapes: Trends in landscape visibility and visual quality research. Landsc. Urban Plan. 2022, 224, 104424.

10. VanHorn, J.E.; Mosurinjohn, N.A. Urban 3D GIS Modeling of Terrorism Sniper Hazards. Soc. Sci. Comput. Rev. 2010, 28, 482–496.

11. Mlynek, P.; Misurec, J.; Fujdiak, R.; Kolka, Z.; Pospichal, L. Heterogeneous Networks for Smart Metering—Power Line and Radio Communication. Elektron. Elektrotech. 2015, 21, 85–92.

12. Orsini, C.; Benozzi, E.; Williams, V.; Rossi, P.; Mancini, F. UAV Photogrammetry and GIS Interpretations of Extended Archaeological Contexts: The Case of Tacuil in the Calchaquí Area (Argentina). Drones 2022, 6, 31.

13. Kuna, M.; Novák, D.; Rášová, A.B.; Bucha, B.; Machová, B.; Havlice, J.; John, J.; Chvojka, O. Computing and testing extensive total viewsheds: A case of prehistoric burial mounds in Bohemia. J. Archaeol. Sci. 2022, 142, 105596.

14. Fadafan, F.K.; Soffianian, A.; Pourmanafi, S.; Morgan, M. Assessing ecotourism in a mountainous landscape using GIS—MCDA approaches. Appl. Geogr. 2022, 147, 102743.

15. Demir, S. Determining suitable ecotourism areas in protected watershed area through visibility analysis. J. Environ. Prot. Ecol. 2019, 20, 214–223.

16. Parsons, B.M.; Coops, N.C.; Stenhouse, G.B.; Burton, A.C.; Nelson, T.A. Building a perceptual zone of influence for wildlife: Delineating the effects of roads on grizzly bear movement. Eur. J. Wildl. Res. 2020, 66, 53.

17. Chamberlain, B.C.; Meitner, M.J.; Ballinger, R. Applications of visual magnitude in forest planning: A case study. For. Chron. 2015, 91, 417–425.

18. Sivrikaya, F.; Sağlam, B.; Akay, A.; Bozali, N. Evaluation of Forest Fire Risk with GIS. Pol. J. Environ. Stud. 2014, 23, 187–194.

19. Lee, J. Zoning scenic areas of heritage sites using visibility analysis: The case of Zhengding, China. J. Asian Archit. Build. Eng. 2023, 22, 1–13.

20. Zorzano-Alba, E.; Fernandez-Jimenez, L.A.; Garcia-Garrido, E.; Lara-Santillan, P.M.; Falces, A.; Zorzano-Santamaria, P.J.; Capellan-Villacian, C.; Mendoza-Villena, M. Visibility Assessment of New Photovoltaic Power Plants in Areas with Special Landscape Value. Appl. Sci. Switz. 2022, 12, 703.

21. Ioannidis, R.; Mamassis, N.; Efstratiadis, A.; Koutsoyiannis, D. Reversing visibility analysis: Towards an accelerated a priori assessment of landscape impacts of renewable energy projects. Renew. Sustain. Energy Rev. 2022, 161, 112389.

22. Amiri, T.; Shafiei, A.B.; Erfanian, M.; Hosseinzadeh, O.; Heidarlou, H.B. Using forest fire experts’ opinions and GIS/remote sensing techniques in locating forest fire lookout towers. Appl. Geomat. 2022.

23. Tabrizian, P.; Baran, P.; Van Berkel, D.; Mitasova, H.; Meentemeyer, R. Modeling restorative potential of urban environments by coupling viewscape analysis of LiDAR data with experiments in immersive virtual environments. Landsc. Urban Plan. 2020, 195, 103704.

24. Murgoitio, J.J.; Shrestha, R.; Glenn, N.; Spaete, L.P. Improved visibility calculations with tree trunk obstruction modeling from aerial LiDAR. Int. J. Geogr. Inf. Sci. 2013, 27, 1865–1883.

25. Zong, X.; Wang, T.; Skidmore, A.; Heurich, M. Estimating fine-scale visibility in a temperate forest landscape using airborne laser scanning. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102478.

26. Ruzickova, K.; Ruzicka, J.; Bitta, J. A new GIS-compatible methodology for visibility analysis in digital surface models of earth sites. Geosci. Front. 2021, 12, 101109.

27. Pedrinis, F.; Samuel, J.; Appert, M.; Jacquinod, F.; Gesquière, G. Exploring Landscape Composition Using 2D and 3D Open Urban Vectorial Data. ISPRS Int. J. Geo-Inf. 2022, 11, 479.

28. Fisher-Gewirtzman, D.; Shashkov, A.; Doytsher, Y. Voxel based volumetric visibility analysis of urban environments. Surv. Rev. 2013, 45, 451–461.

29. Fisher-Gewirtzman, D.; Natapov, A. Different approaches of visibility analyses applied on hilly urban environment. Surv. Rev. 2014, 46, 366–382.

30. Cervilla, A.R.; Tabik, S.; Vías, J.; Mérida, M.; Romero, L.F. Total 3D-viewshed Map: Quantifying the Visible Volume in Digital Elevation Models. Trans. GIS 2017, 21, 591–607.

31. Zhang, G.-T.; Verbree, E.; Oosterom, P.V. A Study of Visibility Analysis Taking into Account Vegetation: An Approach Based on 3D Airborne Point Clouds. 2017. Available online: https://www.semanticscholar.org/paper/A-Study-of-Visibility-Analysis-Taking-into-Account-Zhang-Verbree/d25cdf214d4289a9bc88178291bf5b579376bad5 (accessed on 18 October 2022).

32. Shan, J.; Toth, C.K. (Eds.) Topographic Laser Ranging and Scanning, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2017.

33. Czech Office for Surveying, Mapping and Cadastre. ZABAGED®—Altimetry—DMP 1G. Digital Surface Model of the Czech Republic of the 1st Generation (DMP 1G). 1 January 2013. Available online: https://geoportal.cuzk.cz/(S(fcavlupr3vyjkldocxkjhp5j))/Default.aspx?mode=TextMeta&metadataID=CZ-CUZK-DMP1G-V&metadataXSL=Full&side=vyskopis (accessed on 13 December 2022).

34. Czech Office for Surveying, Mapping and Cadastre. ZABAGED®—Altimetry—DMR 5G. Digital Terrain Model of the Czech Republic of the 5th Generation (DMR 5G). Czech Office for Surveying, Mapping and Cadastre, 1 September 2020. Available online: https://geoportal.cuzk.cz/(S(fcavlupr3vyjkldocxkjhp5j))/Default.aspx?mode=TextMeta&metadataXSL=full&side=vyskopis&metadataID=CZ-CUZK-DMR5G-V (accessed on 13 December 2022).

35. Pyka, K.; Piskorski, R.; Jasińska, A. LiDAR-based method for analysing landmark visibility to pedestrians in cities: Case study in Kraków, Poland. Int. J. Geogr. Inf. Sci. 2022, 36, 476–495.

36. Murgoitio, J.; Shrestha, R.; Glenn, N.; Spaete, L. Airborne LiDAR and Terrestrial Laser Scanning Derived Vegetation Obstruction Factors for Visibility Models. Trans. GIS 2014, 18, 147–160.

37. Bhagat, V.; Kada, A.; Kumar, S. Analysis of Remote Sensing based Vegetation Indices (VIs) for Unmanned Aerial System (UAS): A Review. Remote Sens. Land 2019, 3, 58–73.

38. Caha, J.; Kačmařík, M. Utilization of large scale surface models for detailed visibility analyses. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W8, 53–58.

39. Felleman, J.P. Landscape Visibility Mapping: Theory and Practice; School of Landscape Architecture, State University of New York, College of Environmental Science and Forestry: New York, NY, USA, 1979.

40. Domingo-Santos, J.M.; de-Villarán, R.F. Visibility Analysis. In International Encyclopedia of Geography: People, the Earth, Environment and Technology; Richardson, D., Castree, N., Goodchild, M.F., Kobayashi, A., Liu, W., Marston, R.A., Eds.; John Wiley & Sons, Ltd.: Oxford, UK, 2017; pp. 1–14.

41. Fantini, S.; Fois, M.; Secci, R.; Casula, P.; Fenu, G.; Bacchetta, G. Incorporating the visibility analysis of fire lookouts for old-growth wood fire risk reduction in the Mediterranean island of Sardinia. Geocarto Int. 2022, 1–11.

42. Kozumplikova, A.; Schneider, J.; Mikita, T.; Celer, S.; Kupec, P.; Vyskot, I. Usage possibility of GIS (Geographic Information System) for ecological damages evaluation on example of wind calamity in National Park High Tatras (TANAP), November 2004. Folia Oecol. 2007, 34, 125–145.

43. Rášová, A. Vegetation modelling in 2.5D visibility analysis. Cartogr. Lett. 2018, 26, 10–20.

44. Doneus, M.; Banaszek, Ł.; Verhoeven, G.J. The Impact of Vegetation on the Visibility of Archaeological Features in Airborne Laser Scanning Datasets from Different Acquisition Dates. Remote Sens. 2022, 14, 858.

45. Caha, J.; Rášová, A. Line-of-Sight Derived Indices: Viewing Angle Difference to a Local Horizon and the Difference of Viewing Angle and the Slope of Line of Sight. In Surface Models for Geosciences; Springer: Cham, Switzerland, 2015; pp. 61–72.

46. ESRI. ArcGIS Pro; Environmental Systems Research Institute Inc. (ESRI): Redlands, CA, USA, 2022.

47. Elaksher, A.; Ali, T.; Alharthy, A. A Quantitative Assessment of LIDAR Data Accuracy. Remote Sens. 2023, 15, 442.

48. Maas, H. Least-Squares Matching with Airborne Laserscanning Data in a TIN Structure. IAPRS 2000, XXXIII, 548–555. Available online: https://www.semanticscholar.org/paper/Least-Squares-Matching-with-Airborne-Laserscanning-Maas/ea580fa9bf723c359fb32e50d8ea44a4e473cdc8 (accessed on 3 February 2023).

49. Mostafa, M.; Hutton, J.; Reid, B.; Hill, R. GPS/IMU Products—The Applanix Approach. December 2003.

50. Vosselman, G.; Maas, H.-G. Adjustment and filtering of raw laser altimetry data. In Proceedings of the OEEPE Workshop on Airborne Laserscanning and Interferometric SAR for Detailed Digital Terrain Models, Stockholm, Sweden, 1–3 March 2001.

51. Burman, H. Laser Strip Adjustment for Data Calibration and Verification. IAPRS 2002, 34, 67.

52. Crombaghs, M.; Brügelmann, R.; de Min, E. On the adjustment of overlapping strips of laser altimeter height data. IAPRS 2000, 33, 230–237.

53. Huising, E.J.; Pereira, L.M.G. Errors and accuracy estimates of laser data acquired by various laser scanning systems for topographic applications. ISPRS J. Photogramm. Remote Sens. 1998, 53, 245–261.

54. Maas, H.-G. Methods for Measuring Height and Planimetry Discrepancies in Airborne Laserscanner Data. Photogramm. Eng. Remote Sens. 2002, 68, 933–940.

55. Maas, H.-G. Planimetric and height accuracy of airborne laserscanner data: User requirements and system performance. In Proceedings of the 49th Photogrammetric Week, Stuttgart, Germany, 15 September 2003.

56. Sabatini, R.; Richardson, M.; Gardi, A.; Ramasamy, S. Airborne laser sensors and integrated systems. Prog. Aerosp. Sci. 2015, 79, 15–63.

57. DLR Document: TD-GS-PS-0021; DEM Products Specification Document, Version 3.1; 2016.

58. Airbus. ArcGIS Terrain Data: AirBus WorldDEM4Ortho data. Earth Observation Center, Airbus n.d. 2016. Available online: https://api.oneatlas.airbus.com/documents/2018-07_WorldDEM4Ortho_TechnicalSpec_Version1.4_I1.0.pdf (accessed on 5 October 2022).

59. Becek, K.; Koppe, W.; Kutoğlu, Ş.H. Evaluation of Vertical Accuracy of the WorldDEM™ Using the Runway Method. Remote Sens. 2016, 8, 934.

60. Koppe, W.; Henrichs, L.; Hummel, P. Assessment of WorldDEM™ global elevation model using different references. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 5296–5299.

61. Potapov, P.; Li, X.; Hernandez-Serna, A.; Tyukavina, A.; Hansen, M.C.; Kommareddy, A.; Pickens, A.; Turubanova, S.; Tang, H.; Silva, C.E.; et al. Mapping global forest canopy height through integration of GEDI and Landsat data. Remote Sens. Environ. 2021, 253, 112165.

62. Dorado-Roda, I.; Pascual, A.; Godinho, S.; Silva, C.; Botequim, B.; Rodríguez-Gonzálvez, P.; González-Ferreiro, E.; Guerra-Hernández, J. Assessing the Accuracy of GEDI Data for Canopy Height and Aboveground Biomass Estimates in Mediterranean Forests. Remote Sens. 2021, 13, 2279.

63. Fayad, I.; Baghdadi, N.; Alvares, C.A.; Stape, J.L.; Bailly, J.S.; Scolforo, H.F.; Zribi, M.; Le Maire, G. Estimating Canopy Height and Wood Volume of Eucalyptus Plantations in Brazil Using GEDI LiDAR Data. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 5941–5944.

64. Hancock, S.; Armston, J.; Hofton, M.; Sun, X.; Tang, H.; Duncanson, L.I.; Kellner, J.R.; Dubayah, R. The GEDI Simulator: A Large-Footprint Waveform Lidar Simulator for Calibration and Validation of Spaceborne Missions. Earth Space Sci. 2019, 6, 294–310.

65. Lang, N.; Kalischek, N.; Armston, J.; Dubayah, R.; Wegner, J. Global canopy height estimation with GEDI LIDAR waveforms and Bayesian deep learning. arXiv 2021, arXiv:2103.03975.

66. Silva, C.A.; Saatchi, S.; Garcia, M.; Labriere, N.; Klauberg, C.; Ferraz, A.; Meyer, V.; Jeffery, K.J.; Abernethy, K.; White, L.; et al. Comparison of Small- and Large-Footprint Lidar Characterization of Tropical Forest Aboveground Structure and Biomass: A Case Study From Central Gabon. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3512–3526.

67. Adam, M.; Urbazaev, M.; Dubois, C.; Schmullius, C. Accuracy Assessment of GEDI Terrain Elevation and Canopy Height Estimates in European Temperate Forests: Influence of Environmental and Acquisition Parameters. Remote Sens. 2020, 12, 3948.

68. Agisoft Metashape Professional Software; Agisoft LLC.: Saint Petersburg, Russia, 2022.

69. CzechGlobe (Global Change Research Institute). Airborne Laser Scanned Data; CzechGlobe (Global Change Research Institute): Brno, Czech Republic, 2022.

70. GLAD. Global Forest Canopy Height. The Global Land Analysis and Discovery (GLAD) Laboratory, University of Maryland. 2019. Available online: https://glad.umd.edu/dataset/gedi (accessed on 14 December 2022).

71. Cohen, J. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46.

72. Chicco, D.; Warrens, M.; Jurman, G. The Matthews Correlation Coefficient (MCC) is More Informative Than Cohen’s Kappa and Brier Score in Binary Classification Assessment. IEEE Access 2021, 9, 78368–78381.

73. Sobala, M.; Myga-Piątek, U.; Szypuła, B. Assessment of Changes in a Viewshed in the Western Carpathians Landscape as a Result of Reforestation. Land 2020, 9, 430.

74. Štular, B.; Lozić, E.; Eichert, S. Airborne LiDAR-Derived Digital Elevation Model for Archaeology. Remote Sens. 2021, 13, 1855.

75. Ďuračiová, R.; Rasova, A.; Lieskovský, T. Fuzzy Similarity and Fuzzy Inclusion Measures in Polyline Matching: A Case Study of Potential Streams Identification for Archaeological Modelling in GIS. Rep. Geod. Geoinform. 2018, 104, 115–130.

76. Hanssen, F.; Barton, D.; Venter, Z.; Nowell, M.; Cimburova, Z. Utilizing LiDAR data to map tree canopy for urban ecosystem extent and condition accounts in Oslo. Ecol. Indic. 2021, 130, 108007.

77. Gaspar, J.; Fidalgo, B.; Miller, D.; Pinto, L.; Salas, R. Visibility analysis and visual diversity assessment in rural landscapes. In Proceedings of the IUFRO Landscape Ecology Working Group International Conference, Bragança, Portugal, 21–27 September 2010.

78. Chmielewski, S. Towards managing visual pollution: A 3D isovist and voxel approach to advertisement billboard visual impact assessment. ISPRS Int. J. Geo-Inf. 2021, 10, 656.

79. Cimburova, Z.; Blumentrath, S. Viewshed-based modelling of visual exposure to urban greenery—An efficient GIS tool for practical planning applications. Landsc. Urban Plan. 2022, 222, 104395.

80. Quinn, S.D. What can we see from the road? Applications of a cumulative viewshed analysis on a US state highway network. Geogr. Helvetica 2022, 77, 165–178.

81. Tsilimigkas, G.; Derdemezi, E.-T. Spatial Planning and the Traditional Settlements Management: Evidence from Visibility Analysis of Traditional Settlements in Cyclades, Greece. Plan. Pract. Res. 2020, 35, 86–106.

82. Cilliers, D.; Cloete, M.; Bond, A.; Retief, F.; Alberts, R.; Roos, C. A critical evaluation of visibility analysis approaches for visual impact assessment (VIA) in the context of environmental impact assessment (EIA). Environ. Impact Assess. Rev. 2023, 98, 106962.

83. Labib, S.M.; Huck, J.J.; Lindley, S. Modelling and mapping eye-level greenness visibility exposure using multi-source data at high spatial resolutions. Sci. Total Environ. 2021, 755, 143050.

84. Meek, S.; Goulding, J.; Priestnall, G. The Influence of Digital Surface Model Choice on Visibility-based Mobile Geospatial Applications. Trans. GIS 2013, 17, 526–543.

85. Lang, M.; Kuusk, A.; Vennik, K.; Liibusk, A.; Türk, K.; Sims, A. Horizontal Visibility in Forests. Remote Sens. 2021, 13, 4455.

86. Bartie, P.; Reitsma, F.; Kingham, S.; Mills, S. Incorporating vegetation into visual exposure modelling in urban environments. Int. J. Geogr. Inf. Sci. 2011, 25, 851–868.

87. Llobera, M. Modeling visibility through vegetation. Int. J. Geogr. Inf. Sci. 2007, 21, 799–810.

88. Wang, Y.; Dou, W. A fast candidate viewpoints filtering algorithm for multiple viewshed site planning. Int. J. Geogr. Inf. Sci. 2020, 34, 448–463.

89. Fisher, P.F. Extending the Applicability of Viewsheds in Landscape Planning. PE&RS 1996, 62, 1297–1302.

90. Szypuła, B. Quality assessment of DEM derived from topographic maps for geomorphometric purposes. Open Geosci. 2019, 11, 843–865.

91. Kovačević, J.; Stančić, N.; Cvijetinović, Ž.; Brodić, N.; Mihajlović, D. Airborne Laser Scanning to Digital Elevation Model—LAStools approach. IPSI Trans. Adv. Res. 2023, 19, 13–17.

92. Nutsford, D.; Reitsma, F.; Pearson, A.; Kingham, S. Personalising the viewshed: Visibility analysis from the human perspective. Appl. Geogr. 2015, 62, 1–7.

93. Ozkan, K.; Braunstein, M.L. Background surface and horizon effects in the perception of relative size and distance. Vis. Cogn. 2010, 18, 229–254.

94. Anderson, C.C.; Rex, A. Preserving the scenic views from North Carolina’s Blue Ridge Parkway: A decision support system for strategic land conservation planning. Appl. Geogr. 2019, 104, 75–82.

95. Bartie, P.; Reitsma, F.; Kingham, S.; Mills, S. Advancing visibility modelling algorithms for urban environments. Comput. Environ. Urban Syst. 2010, 34, 518–531.

96. Palmer, J.F. The contribution of a GIS-based landscape assessment model to a scientifically rigorous approach to visual impact assessment. Landsc. Urban Plan. 2019, 189, 80–90.

97. Siwiec, J. Comparison of Airborne Laser Scanning of Low and High Above Ground Level for Selected Infrastructure Objects. J. Appl. Eng. Sci. 2018, 8, 89–96.

98. Fetai, B.; Račič, M.; Lisec, A. Deep Learning for Detection of Visible Land Boundaries from UAV Imagery. Remote Sens. 2021, 13, 2077.

99. Govindaraju, V.; Leng, G.; Qian, Z. Visibility-based UAV path planning for surveillance in cluttered environments. In Proceedings of the 2014 IEEE International Symposium on Safety, Security, and Rescue Robotics (2014), Hokkaido, Japan, 27–30 October 2014; pp. 1–6.

100. Stöcker, C.; Bennett, R.; Nex, F.; Gerke, M.; Zevenbergen, J. Review of the Current State of UAV Regulations. Remote Sens. 2017, 9, 459.

101. Klouček, T.; Lagner, O.; Šímová, P. How does data accuracy influence the reliability of digital viewshed models? A case study with wind turbines. Appl. Geogr. 2015, 64, 46–54.

102. Dean, D. Improving the accuracy of forest viewsheds using triangulated networks and the visual permeability method. Can. J. For. Res. 1997, 27, 969–977.

103. Zhang, X.; Zhang, F.; Qi, Y.; Deng, L.; Wang, X.; Yang, S. New research methods for vegetation information extraction based on visible light remote sensing images from an unmanned aerial vehicle (UAV). Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 215–226.

| Height Differences (m) | Pixels | Mean | Std. Dev. | RMSE |
|---|---|---|---|---|
| ALS 2018 × ALS 2014 | All pixels | −4.19 | 7.11 | 8.25 |
| | Selection: forest | −4.03 | 5.61 | 6.91 |
| | Selection: non-forest | −0.03 | 0.50 | 0.50 |
| ALS 2018 × ALS 2013 | All pixels | −4.41 | 8.66 | 9.72 |
| | Selection: forest | −3.93 | 5.85 | 7.05 |
| | Selection: non-forest | −0.31 | 0.20 | 0.37 |
| ALS 2018 × World DEM | All pixels | −1.65 | 8.29 | 8.45 |
| | Selection: forest | −0.20 | 5.66 | 5.66 |
| | Selection: non-forest | −0.23 | 0.71 | 0.75 |
| ALS 2018 × DTM + FH | All pixels | −7.11 | 8.87 | 11.37 |
| | Selection: forest | −7.49 | 7.57 | 10.65 |
| | Selection: non-forest | −0.29 | 0.51 | 0.59 |
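The three statistics in this table are linked. With the population form of the standard deviation, RMSE² = mean² + SD². The tabulated values satisfy this to rounding; for example, √(4.19² + 7.11²) ≈ 8.25 for ALS 2018 × ALS 2014. The sketch below computes the statistics from two co-registered rasters, with an optional pixel mask for the forest and non-forest selections. The table does not state the sign convention (which raster is subtracted from which), so the `reference - test` order used here is an assumption. The function name is hypothetical.

```python
import numpy as np


def height_difference_stats(reference, test, mask=None):
    """Mean, standard deviation and RMSE of (reference - test) height differences.

    Hypothetical helper. `mask` optionally selects pixels (e.g. forest only);
    NaN cells in either raster are ignored. The standard deviation uses
    ddof=0, so rmse**2 == mean**2 + std**2 (up to floating-point error).
    """
    diff = np.asarray(reference, dtype=float) - np.asarray(test, dtype=float)
    keep = ~np.isnan(diff)
    if mask is not None:
        keep &= np.asarray(mask, dtype=bool)
    d = diff[keep]
    mean = d.mean()
    std = d.std()  # population standard deviation (ddof=0)
    rmse = np.sqrt(np.mean(d ** 2))
    return float(mean), float(std), float(rmse)


# Usage: all pixels versus a two-pixel selection.
all_stats = height_difference_stats([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0])
sel_stats = height_difference_stats([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0],
                                    mask=[True, True, False, False])
```

A large RMSE with a near-zero mean, as in the World DEM forest selection, points to scattered rather than systematic differences. A large negative mean points to a systematic offset between the two surfaces.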

| DSM | All Pixels | True Positive (Pixels) | True Positive (%) | Kappa Index | DSM | All Pixels | True Positive (Pixels) | True Positive (%) | Kappa Index |
|---|---|---|---|---|---|---|---|---|---|
| ALS 2018 | 71,375 | 60,532 | 93.86 | 0.89 | ALS 2018 + UAV | 64,491 | 64,491 | 100.00 | 1.00 |
| ALS 2014 | 56,149 | 49,032 | 76.03 | 0.81 | ALS 2014 + UAV | 58,592 | 53,926 | 83.62 | 0.87 |
| ALS 2013 | 65,784 | 53,027 | 82.22 | 0.81 | ALS 2013 + UAV | 58,575 | 53,153 | 82.42 | 0.86 |
| World DEM | 100,972 | 56,222 | 87.18 | 0.67 | World DEM + UAV | 70,927 | 55,140 | 85.50 | 0.81 |
| DTM with FH | 21,020 | 19,368 | 30.03 | 0.45 | DTM with FH + UAV | 43,295 | 38,832 | 60.21 | 0.72 |
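In this table, ALS 2018 + UAV reaches 100.00% and a kappa of 1.00, so it evidently serves as the reference viewshed. The percentages are consistent with dividing each true-positive count by the 64,491 pixels visible in that reference; for example, 60,532 / 64,491 ≈ 93.86% for ALS 2018. A minimal sketch of these counts from two boolean visibility rasters follows. Reading "All Pixels" as the number of pixels visible in each tested viewshed is our interpretation, and the function name is hypothetical.

```python
import numpy as np


def viewshed_overlap(reference, test):
    """Compare a test viewshed with a reference viewshed (boolean rasters).

    Hypothetical helper. Returns the number of pixels visible in `test`, the
    number visible in both (true positives), and the true positives as a
    percentage of the pixels visible in `reference`.
    """
    reference = np.asarray(reference, dtype=bool)
    test = np.asarray(test, dtype=bool)
    reference_visible = int(reference.sum())
    if reference_visible == 0:
        raise ValueError("reference viewshed has no visible pixels")
    all_visible = int(test.sum())
    true_positive = int(np.logical_and(reference, test).sum())
    tp_percent = 100.0 * true_positive / reference_visible
    return all_visible, true_positive, tp_percent


# Usage: 3 of 4 pixels visible in the test raster, 2 of them also in the reference.
counts = viewshed_overlap([True, True, True, False], [True, False, True, True])
```

The true-positive share rewards recovering the reference viewshed but ignores false positives. This is why World DEM can reach 87.18% with 100,972 visible pixels, well above the reference's 64,491.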

| DSM | All Pixels | True Positive (Pixels) | True Positive (%) | Kappa Index | DSM | All Pixels | True Positive (Pixels) | True Positive (%) | Kappa Index |
|---|---|---|---|---|---|---|---|---|---|
| ALS 2018 | 12,715 | 12,083 | 11.67 | 0.21 | ALS 2018 + UAV | 105,553 | 105,553 | 100.00 | 1.00 |
| ALS 2014 | 1397 | 1248 | 1.21 | 0.03 | ALS 2014 + UAV | 95,511 | 85,637 | 83.25 | 0.85 |
| ALS 2013 | 1014 | 765 | 0.74 | 0.02 | ALS 2013 + UAV | 96,427 | 85,128 | 80.94 | 0.84 |
| World DEM | 61,458 | 9898 | 9.56 | 0.44 | World DEM + UAV | 109,600 | 84,873 | 83.05 | 0.78 |
| DTM with FH | 90,451 | 9898 | 9.56 | 0.49 | DTM with FH + UAV | 86,641 | 72,366 | 74.49 | 0.75 |

| DSM | All Pixels | True Positive (Pixels) | True Positive (%) | Kappa Index | DSM | All Pixels | True Positive (Pixels) | True Positive (%) | Kappa Index |
|---|---|---|---|---|---|---|---|---|---|
| ALS 2018 | 98,527 | 87,547 | 92.81 | 0.91 | ALS 2018 + UAV | 94,325 | 94,325 | 100.00 | 1.00 |
| ALS 2014 | 51,794 | 46,499 | 49.30 | 0.63 | ALS 2014 + UAV | 53,048 | 49,190 | 52.15 | 0.66 |
| ALS 2013 | 91,506 | 77,777 | 82.46 | 0.83 | ALS 2013 + UAV | 90,407 | 79,314 | 84.09 | 0.86 |
| World DEM | 103,168 | 77,306 | 81.96 | 0.78 | World DEM + UAV | 86,145 | 74,841 | 79.34 | 0.83 |
| DTM with FH | 61,468 | 50,344 | 53.37 | 0.64 | DTM with FH + UAV | 71,780 | 60,761 | 64.42 | 0.73 |
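Unlike the true-positive share, the kappa index also accounts for false positives and for agreement on non-visible pixels, corrected for chance: κ = (p_o − p_e) / (1 − p_e). Here p_o is the observed proportion of agreeing pixels, and p_e is the agreement expected from the two rasters' visible/non-visible proportions. The sketch below computes Cohen's kappa for two boolean visibility rasters. The result depends on how many non-visible pixels fall inside the analysis extent, which these tables do not report, so the tabulated kappa values cannot be reproduced from the counts alone. The function name is hypothetical.

```python
import numpy as np


def cohens_kappa(reference, test):
    """Cohen's kappa for two binary (visible / not visible) rasters of equal shape."""
    a = np.asarray(reference, dtype=bool).ravel()
    b = np.asarray(test, dtype=bool).ravel()
    n = a.size
    tp = np.sum(a & b)    # visible in both
    tn = np.sum(~a & ~b)  # not visible in either
    p_observed = (tp + tn) / n
    # Chance agreement from the marginal visible/non-visible proportions.
    p_ref, p_test = a.mean(), b.mean()
    p_expected = p_ref * p_test + (1 - p_ref) * (1 - p_test)
    if p_expected == 1.0:
        # Both rasters are constant and identical: kappa is undefined, treated as perfect.
        return 1.0
    return float((p_observed - p_expected) / (1 - p_expected))


# Usage: p_o = 0.75 and p_e = 0.5, so kappa = 0.5.
k = cohens_kappa([True, True, False, False], [True, False, False, False])
```

Because of this dependence on the extent, kappa values are best compared within one table (same observer and extent) rather than across tables.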

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mikita, T.; Janošíková, L.; Caha, J.; Avoiani, E. The Potential of UAV Data as Refinement of Outdated Inputs for Visibility Analyses. Remote Sens. 2023, 15, 1028. https://doi.org/10.3390/rs15041028