Remote Sensing Crop Recognition by Coupling Phenological Features and Off-Center Bayesian Deep Learning

Abstract

1. Introduction

2. Study Area and Dataset

2.1. Study Area

2.2. Reference Data

2.3. Sentinel-2

2.4. Phenological Features

3. Methodology

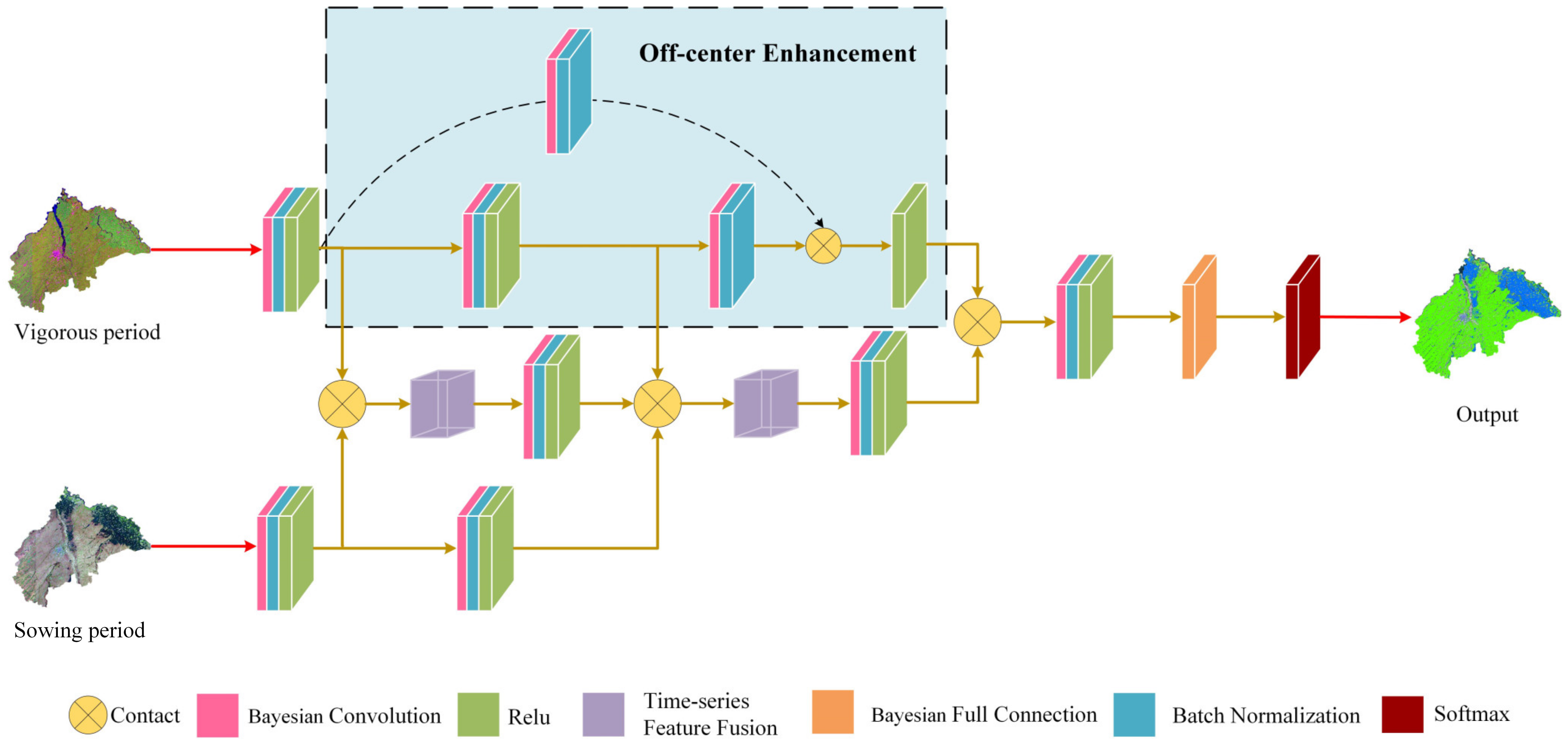

3.1. Off-Center Bayesian Deep Learning Network

3.2. Off-Center Enhancement Module

3.3. Time-Series Feature Fusion Module

3.4. Accuracy Evaluation Metrics

3.4.1. Pixel-Level Accuracy Evaluation

3.4.2. Classification Uncertainty Evaluation

3.5. Implementation Details

4. Results and Analysis

5. Discussion

5.1. Uncertainty Analysis of Different Model Structures

5.2. Validation of the Adaptability of the Method in Different Years

5.3. Validation of the Adaptability of the Proposed Method in Areas with Different Cropping Structures

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Images | Soybeans F1 | Soybeans IOU | Maize F1 | Maize IOU | Rice F1 | Rice IOU | Average F1 | Average IOU | OA |
|---|---|---|---|---|---|---|---|---|---|
| A | 93.35 | 87.53 | 93.66 | 88.07 | 94.02 | 88.72 | 93.68 | 88.11 | 92.84 |
| B | 86.74 | 76.59 | 91.34 | 84.06 | 91.83 | 84.90 | 89.97 | 81.85 | 89.90 |
| C | 74.67 | 59.58 | 91.04 | 83.56 | 93.87 | 88.45 | 86.53 | 77.20 | 92.01 |
| D | 88.33 | 79.10 | 86.33 | 75.95 | 89.65 | 81.24 | 88.10 | 78.76 | 88.15 |
| Average | 85.77 | 75.70 | 90.59 | 82.91 | 92.34 | 85.83 | 89.57 | 81.48 | 90.73 |
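The F1, IOU, and OA columns reported in these tables are standard pixel-level metrics. As a minimal sketch, assuming the usual confusion-matrix definitions (the paper's own evaluation code is not shown here), they can be computed as follows. The function names and the example counts are hypothetical and chosen only for illustration.

```python
def per_class_metrics(confusion):
    """Per-class F1 and IoU plus overall accuracy from a square confusion matrix.

    confusion[i][j] is the number of pixels of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    f1, iou = [], []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                        # true class c, predicted otherwise
        fp = sum(confusion[r][c] for r in range(n)) - tp   # predicted c, true class differs
        f1_den = 2 * tp + fp + fn
        iou_den = tp + fp + fn
        f1.append(2 * tp / f1_den if f1_den else 0.0)
        iou.append(tp / iou_den if iou_den else 0.0)
    oa = correct / total if total else 0.0
    return f1, iou, oa


def f1_from_iou(iou):
    """For a single class on a single confusion matrix, F1 = 2 * IoU / (1 + IoU)."""
    return 2 * iou / (1 + iou)


# Hypothetical 3-class confusion matrix (soybeans, maize, rice); counts are made up.
confusion = [
    [90, 6, 4],
    [5, 92, 3],
    [2, 4, 94],
]
f1, iou, oa = per_class_metrics(confusion)
print([round(100 * v, 2) for v in f1])
print([round(100 * v, 2) for v in iou])
print(round(100 * oa, 2))
```

The identity in `f1_from_iou` gives a quick consistency check on per-image rows: the soybean IOU of 87.53 for image A corresponds to an F1 of about 93.35, which matches the table. The identity need not hold for the Average row, because an average of per-image ratios is not itself a ratio from one confusion matrix.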

| Model | Soybeans F1 | Soybeans IOU | Maize F1 | Maize IOU | Rice F1 | Rice IOU | Average F1 | Average IOU | OA |
|---|---|---|---|---|---|---|---|---|---|
| OCBDL | 85.77 | 75.70 | 90.59 | 82.91 | 92.34 | 85.83 | 89.57 | 81.48 | 90.73 |
| CNN | 79.58 | 66.36 | 82.11 | 70.81 | 86.44 | 76.48 | 82.71 | 71.21 | 84.75 |
| RNN | 76.72 | 63.44 | 88.26 | 79.02 | 88.69 | 79.80 | 84.56 | 74.08 | 85.99 |
| RFC | 79.48 | 35.81 | 73.15 | 58.72 | 72.10 | 58.27 | 64.91 | 50.91 | 74.01 |

| Model | Soybeans F1 | Soybeans IOU | Maize F1 | Maize IOU | Rice F1 | Rice IOU | Average F1 | Average IOU | OA |
|---|---|---|---|---|---|---|---|---|---|
| OCBDL | 85.77 | 75.70 | 90.59 | 82.91 | 92.34 | 85.83 | 89.57 | 81.48 | 90.73 |
| T-BDL | 80.11 | 67.92 | 87.33 | 78.29 | 89.77 | 82.20 | 85.74 | 76.14 | 88.23 |
| OCEBDL | 79.60 | 68.43 | 86.75 | 78.46 | 88.65 | 81.61 | 85.00 | 76.17 | 88.06 |
| SEBDL | 79.17 | 68.05 | 85.15 | 76.62 | 87.16 | 79.86 | 83.82 | 74.84 | 86.74 |
| BDL | 77.28 | 65.15 | 85.30 | 76.31 | 87.89 | 80.40 | 83.49 | 73.95 | 85.27 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, Y.; Wu, P.; Wu, Y.; Yang, H.; Wang, B. Remote Sensing Crop Recognition by Coupling Phenological Features and Off-Center Bayesian Deep Learning. Remote Sens. 2023, 15, 674. https://doi.org/10.3390/rs15030674