1. Introduction

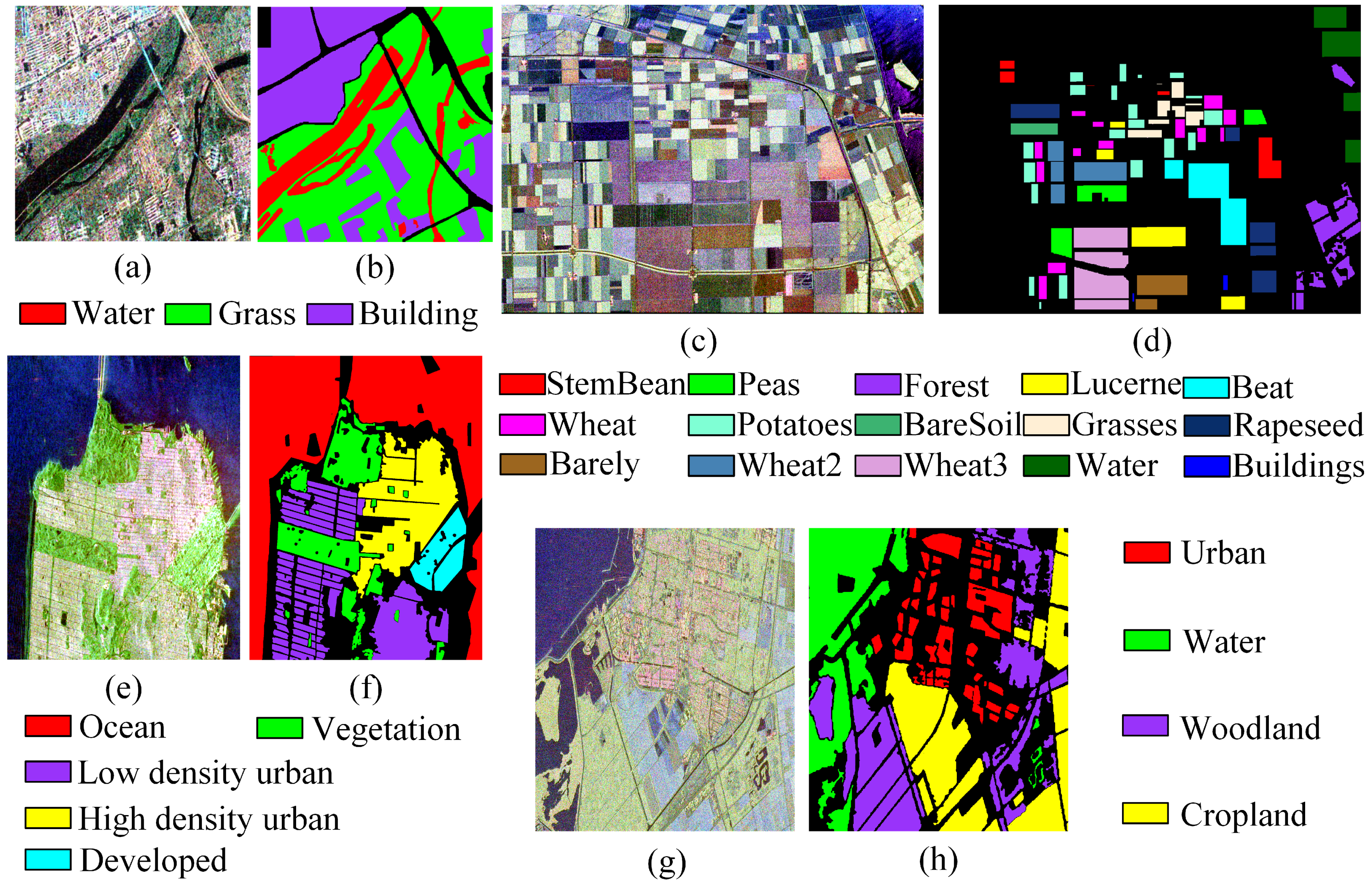

Polarimetric synthetic aperture radar (PolSAR) is an active radar imaging system that emits and receives electromagnetic waves in multiple polarimetric directions [1]. In comparison to the single-polarimetric SAR system, a fully polarimetric SAR system can capture more scattering information from ground objects through four polarimetric modes, which can produce a scattering matrix instead of complex-valued data. The advantages of PolSAR systems have led to their widespread application in various fields, such as military monitoring [2], object detection [3], crop growth prediction [4], and terrain classification [5]. One particular task related to PolSAR is image classification by assigning a class label to each pixel. This is a fundamental and essential task for further automatic image interpretation. In the past few decades, various PolSAR image classification methods have been proposed, which mainly include traditional scattering mechanism-based methods and more recent deep learning-based methods.

Traditional scattering mechanism-based methods primarily focus on exploiting the scattering features and designing classifiers, which can be categorized into three main groups. The first category comprises statistical distribution-based methods that leverage the statistical characteristics of PolSAR complex matrix data, such as Wishart [6,7,8,9], mixed Wishart [10,11,12,13], [14], Kummer [15] distribution. These methods try to exploit various non-Gaussian distribution models for heterogeneous PolSAR images. However, estimating parameters for non-Gaussian models can be a complex task. The second category is the target decomposition-based methods that extract scattering features from target decomposition to differentiate various terrain objects. Some commonly employed methods for target scattering information extraction include Cloude and Pottier decomposition [16,17], Freeman decomposition [18], four-component decomposition [19], decomposition [20], and eigenvalue decomposition [21]. These methods are designed to distinguish different objects based on the extracted information. Nevertheless, it is important to note that these pixel-wise methods easily produce classes with speckle noise. To address this issue, some researchers have explored the combination of statistical distribution and scattering features, including [22], K-Wishart [23], [24], and other similar approaches. In these approaches, the initial classification result is obtained by utilizing the scattering features and then further optimized using a statistical distribution model. However, these methods based on scattering mechanisms tend to overlook the incorporation of high-level semantic information. Additionally, they face challenges in effectively learning the complicated textural structures associated with heterogeneous terrain types, including buildings and forests.

Recently, deep learning models have achieved remarkable performance in learning high-level semantic features, and so they are extensively utilized in the domain of PolSAR image classification. In light of the valuable information contained within PolSAR original data, numerous deep learning methods have been developed for PolSAR image classification. Deng et al. [21] proposed a deep belief network for PolSAR image classification. Furthermore, Jiao et al. [25] introduced the Wishart deep stacking network for fast PolSAR image classification. Later, Dong et al. [26] applied neural architecture search to PolSAR images, which performed well. In a separate study, Xie et al. [27] developed a semi-supervised recurrent complex-valued convolutional neural network (CNN) model that could effectively learn complex data, thereby improving the classification accuracy. Liu et al. [28] derived an active ensemble deep learning method that incorporated active learning into a deep network. This method significantly reduced the number of training samples required for PolSAR image classification. Additionally, Liu et al. [29] further constructed an adaptive graph model to decrease computational complexity and enhance classification performance. Luo et al. [30] proposed a novel approach for multi-temporal PolSAR image classification by combining a stacking auto-encoder network with a CNN model. Ren et al. [31] improved the complex-valued CNN method and proposed a new structure to learn complex features of PolSAR data. These deep learning methods focused on learning the polarimetric features and high-level scattering features to enhance the performance of classification algorithms. However, these methods only utilized the original data, which may lead to the misclassification of extremely heterogeneous terrain objects, such as buildings, forests, and mountains. This is because there are significant scattering and textural structure variations within heterogeneous objects, which make it difficult to extract high-level semantic features using complex matrix learning alone.

Multiple scattering feature-based deep learning methods now offer many advantages for PolSAR image classification. It is widely recognized that the utilization of various target decomposition-based and textural features can greatly improve the accuracy of PolSAR image classification. However, one crucial aspect of improving classification performance is feature selection. To address this issue, Yang et al. [32] proposed a CNN-based polarimetric feature selection model. This model used the Kullback–Leibler distance to select feature subsets and employed a CNN to identify the optimal features that could enhance classification accuracy. Bi et al. [33] proposed a method that combined low-rank feature extraction, a CNN, and a Markov random field (MRF) for classification. Dong et al. [34] introduced an end-to-end feature learning and classification method for PolSAR images. In their approach, high-dimensional polarimetric features were directly input into a CNN, allowing the network to learn discriminating representations for classification. Furthermore, Wu et al. [35] proposed a statistical-spatial feature learning network that aimed to jointly learn both statistical and spatial features from PolSAR data while also reducing the speckle noise. Shi et al. [36] presented a multi-feature sparse representation model that enabled learning joint sparse features for classification. Furthermore, Liang et al. [37] introduced a multi-scale deep feature fusion and covariance pooling manifold network (MFFN-CPMN) for high-resolution SAR image classification. This network combined the benefits of local spatial features and global statistical properties to enhance classification performance. These multi-feature learning methods [35,38] have the ability to automatically fuse and select multiple polarimetric and scattering features to improve classification performance. However, these methods ignored the statistical distribution of the original complex matrix, resulting in the loss of channel correlation.

The aforementioned deep learning methods solely focused on either the original complex matrix data or multiple scattering features. However, it is important to note that these two types of data can offer complementary information. Unfortunately, only a few methods are capable of utilizing both types of data simultaneously. This limitation arises due to the different structures and distributions of the two types of data, which cannot be directly employed in the same data space. To combine them, Shi et al. [36] proposed a complex matrix and multi-feature joint learning method, which constructed a complex matrix dictionary in the Riemannian space and a multi-feature dictionary in the Euclidean space and further jointly learned the sparse features for classification. However, it has been observed that this method is unable to effectively learn high-level semantic features, particularly for heterogeneous terrain objects. In this paper, we construct a double-channel convolution network (DCCNN) that aims to effectively learn both the complex matrix and multiple features. Additionally, a unified fusion module is designed to combine both of them.

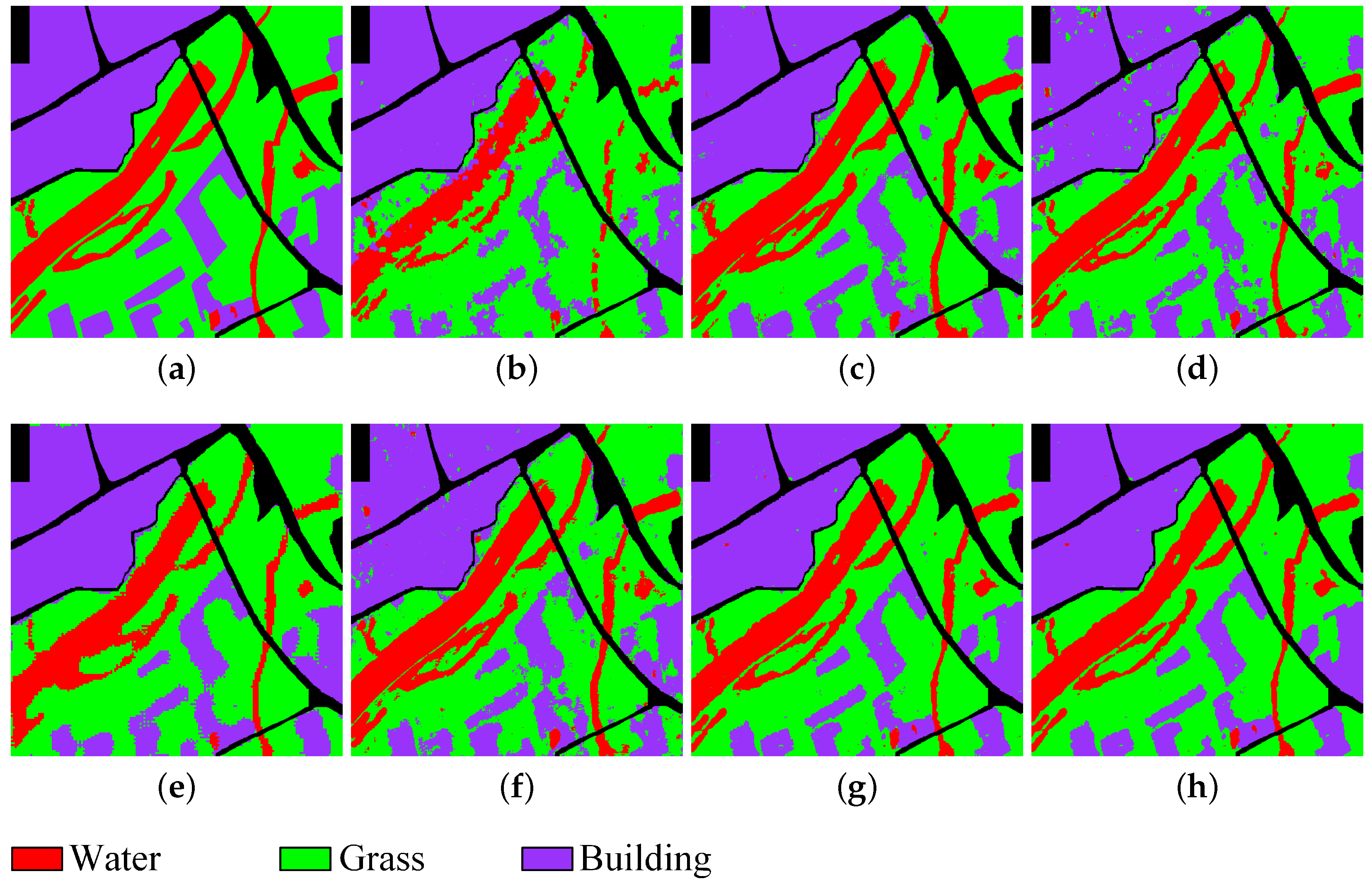

Furthermore, deep learning-based methods demonstrate a strong ability to effectively learn semantic features for heterogeneous PolSAR images. However, it is important to note that the utilization of high-level features often leads to the loss of edge details. This phenomenon can be attributed to the fact that two neighboring pixels across an edge have similar high-level semantic features, which are extracted from large-scale contextual information. Therefore, high-level features cannot identify the edge details, which results in edge confusion. In order to address this issue and mitigate the impact of speckle noise, the MRF [39,40] has emerged as a valuable tool in remote sensing image classification. For example, Song et al. [22] combined the MRF with the WGt mixed model, which could capture both the statistical distribution and contextual information simultaneously. Karachristos et al. [41] proposed a novel method that utilized hidden Markov models and target decomposition representation to fully exploit the scattering mechanism and enhance classification performance. The traditional MRF with a fixed square neighborhood window is considered effective in removing speckle noise but tends to blur the edge pixels. This is because, for edge pixels, the neighbors should lie along the edge instead of inside the square box. Considering the edge direction, Liu et al. [42] proposed the polarimetric sketch map to describe the edges and structure of PolSAR images. Inspired by the polarimetric sketch map, in this paper, we define an adaptive weighted neighborhood structure for edge pixels. Then, an edge-preserving prior term is designed to optimize the edges with an adaptive weighted neighborhood. Therefore, with an appropriate contextual design, the MRF has the ability to modify the edge details. It can not only smooth the classification map to reduce speckles, but also preserve edges through a suitable adaptive neighborhood prior term.

To preserve edge details, we combine the proposed DCCNN model and the MRF. By leveraging the strengths of both semantic features and edge preservation, the proposed method aims to achieve optimal results. Furthermore, we develop an edge-preserving prior term that specifically addresses the issue of blurred edges. Therefore, the main contributions of our proposed method can be summarized into three aspects, as follows:

- (1) Unlike traditional deep learning networks that take either the complex matrix or multiple features as the input, our method presents a novel double-channel CNN (DCCNN) that jointly learns both complex matrix and multi-feature information. By designing Wishart and multi-feature subnetworks, the DCCNN model can not only learn pixel-wise complex matrix features, but also extract high-level discriminating features for heterogeneous objects.

- (2) In this paper, the Wishart-based complex matrix and multi-feature subnetworks are integrated into a unified framework, and a weighted fusion module is presented to adaptively learn the valuable features and suppress useless features in order to improve the classification performance.

- (3) A novel DCCNN-MRF method is proposed by combining the proposed DCCNN model with an edge-preserving MRF, which can classify heterogeneous objects effectively, as well as revise the edges. In contrast to conventional square neighborhoods, in the DCCNN-MRF model, a sketch-based adaptive weighted neighborhood is designed to construct the prior term and preserve edge details.

The remaining sections of this paper are structured as follows. Related work is introduced in

Section 2.

Section 3 explains the proposed method in detail. The experimental results and analysis are given in

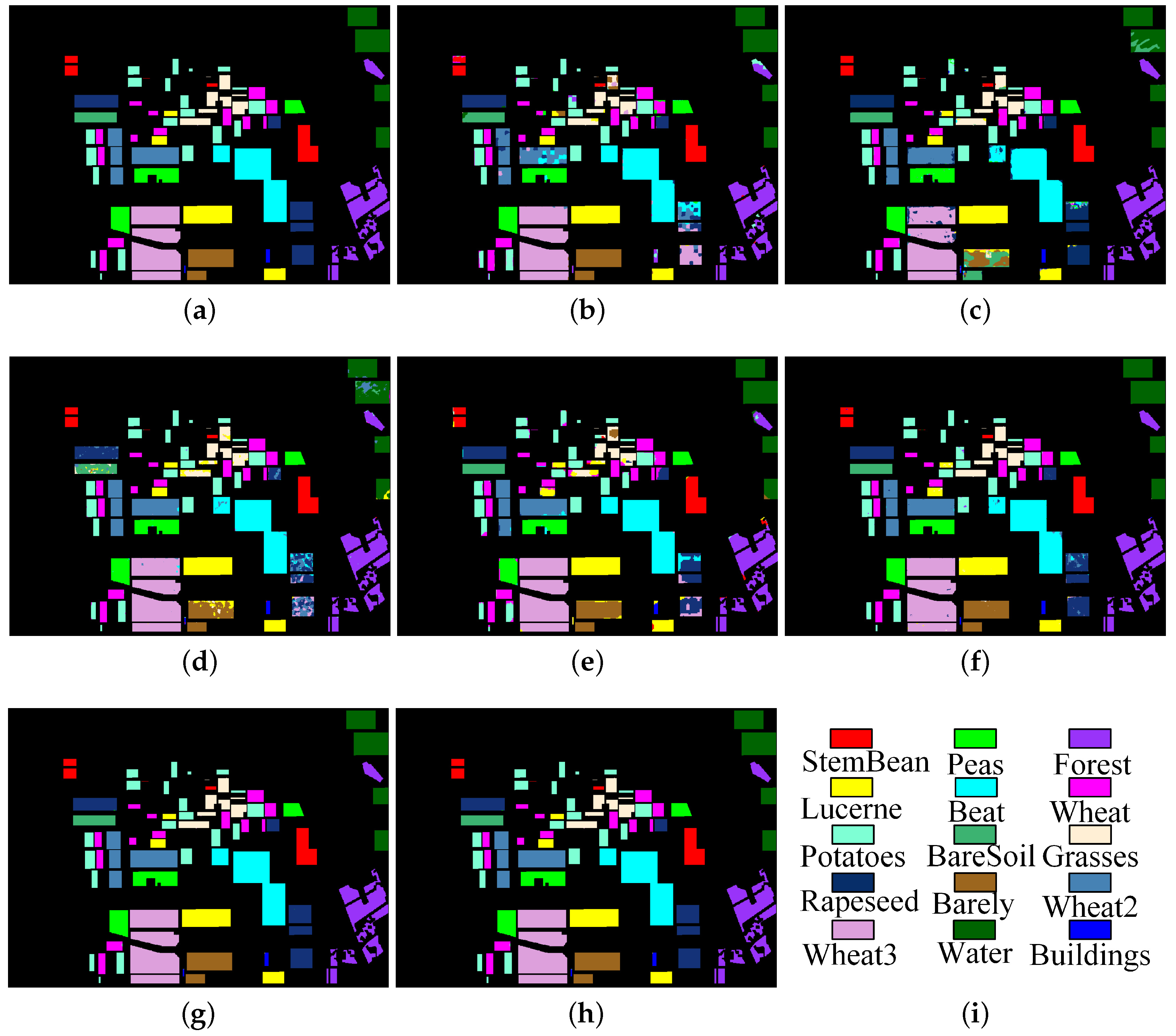

Section 4, and the conclusions are summarized in

Section 5.

3. Proposed Method

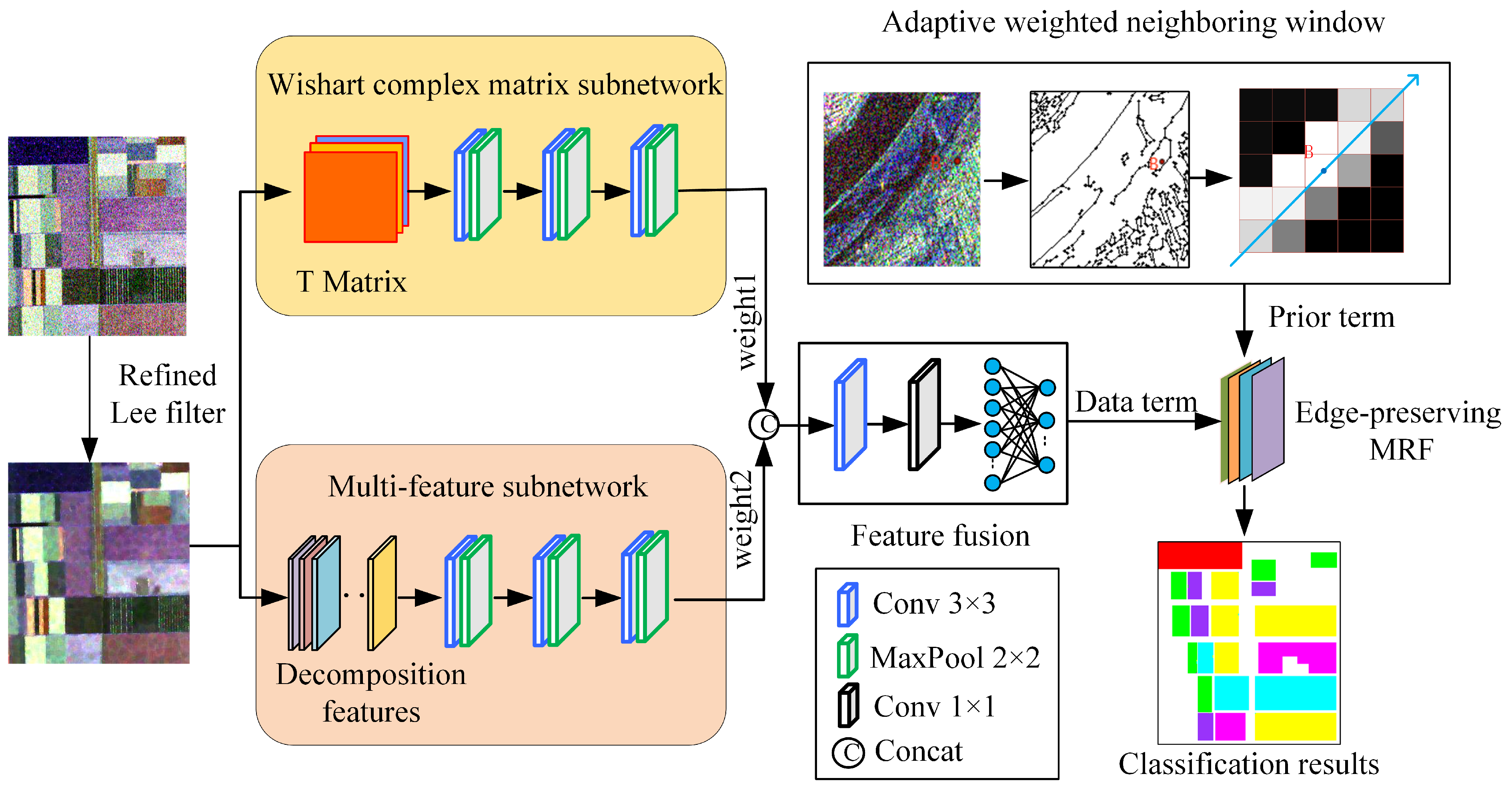

In this paper, a novel DCCNN-MRF method is proposed for PolSAR image classification, whose framework is illustrated in Figure 2. Firstly, a refined Lee filter [44] is applied to the original PolSAR image to reduce the speckle noise. Then, a double-channel convolution network is developed to jointly learn the complex matrix and multiple features. On the one hand, a Wishart-based convolutional network is designed, which utilizes the complex matrix as the input and defines the Wishart measurement as the first convolution layer. The Wishart convolution network can effectively measure the similarity of complex matrices. Following this initial step, a traditional CNN is employed to learn deeper features. On the other hand, a multi-feature subnetwork is specifically designed to acquire various polarimetric scattering features. These features provide supplementary information for the Wishart convolution network. Subsequently, a unified framework is developed to adaptively merge the outputs of the two subnetworks. To accomplish this fusion, multiple convolution layers are employed to effectively combine the two types of features. Secondly, to suppress speckle noise and revise the edges, an MRF model is combined with the DCCNN network. This integration also improves the overall performance of image classification. The data term in the MRF model is defined as the class probability obtained from the DCCNN model, and the prior term is designed using an edge penalty function. The purpose of this edge penalty function is to reduce the confusion related to edges that may arise due to the high-level features of the deep model.

3.1. Double-Channel Convolution Network

In this paper, a DCCNN is proposed to jointly learn the complex matrix and various scattering features from PolSAR data, as shown in Figure 2. The DCCNN network consists of two subnetworks: the Wishart-based complex matrix and multi-feature subnetworks, which can learn complex matrix relationships and various polarimetric features, respectively. Then, a unified feature fusion module is designed to combine different features dynamically, which provides a unified framework for integrating complex matrix and multi-feature learning. The incorporation of complementary information further enhances the classification performance.

(1) Wishart-based complex matrix subnetwork

Traditional deep learning methods commonly convert the polarimetric complex matrix into a column vector. However, this conversion loses both the matrix structure and the data distribution of PolSAR data. To capture the characteristics of the complex matrix effectively, a Wishart-based complex matrix network is designed to learn the statistical distribution of the PolSAR complex matrix. The first layer of the network is the Wishart convolution layer, which converts the Wishart metric into a linear transformation that corresponds to the convolution operation. To be specific, the coherency matrix T, which is widely known to follow the Wishart distribution, is compared with the class centers using the Wishart distance in this layer. For example, the distance between the jth pixel $T_j$ and the ith class center $W_i$ can be measured by the Wishart distance, defined as

$$d(T_j, W_i) = \ln\left(|W_i|\right) + \mathrm{Tr}\left(W_i^{-1} T_j\right) \qquad (1)$$

where $\ln(\cdot)$ is the log operation, $\mathrm{Tr}(\cdot)$ is the trace operation of a matrix, and $|\cdot|$ is the determinant operation of a matrix. However, the Wishart metric is not directly applicable to the convolution network due to its reliance on complex matrices. In [25], Jiao et al. proposed a method to convert the Wishart distance into a linear operation. Firstly, the T matrix is converted into a vector as follows:

where

and

are used to extract the real and imaginary parts of a complex number, respectively. This allows for the conversion of a complex matrix into a real-valued vector. Then, the Wishart convolution can be defined as

where

is the convolution kernel;

is the

ith pixel value;

b is the bias vector defined as

; and

is the output of the Wishart convolution layer. Although it is a linear operation on vector

, it is equal to the Wishart distance between pixel

and class center

W.
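The equivalence between the linear operation and the Wishart distance can be checked numerically. The following is a minimal sketch, not the layer from [25]: it assumes the distance has the standard form ln|W| + Tr(W⁻¹T), and the nine-element ordering in `to_real_vector`, the factor of 2 on off-diagonal terms, and all function names are illustrative assumptions chosen so that the dot product reproduces the trace for 3 × 3 Hermitian matrices.

```python
import numpy as np

def wishart_distance(T, W):
    """Wishart distance ln|W| + Tr(W^{-1} T) for Hermitian positive-definite T, W."""
    _, logdet = np.linalg.slogdet(W)
    return float(logdet + np.trace(np.linalg.inv(W) @ T).real)

def to_real_vector(T):
    """Stack a 3x3 Hermitian matrix into 9 real numbers (assumed ordering)."""
    return np.array([
        T[0, 0].real, T[1, 1].real, T[2, 2].real,
        T[0, 1].real, T[0, 1].imag,
        T[0, 2].real, T[0, 2].imag,
        T[1, 2].real, T[1, 2].imag,
    ])

def wishart_kernel(W):
    """Return (w, b) such that w @ to_real_vector(T) + b equals wishart_distance(T, W)."""
    w = to_real_vector(np.linalg.inv(W))
    w[3:] *= 2.0  # each off-diagonal pair contributes twice to the trace
    b = float(np.linalg.slogdet(W)[1])  # bias carries the ln|W| term
    return w, b

def random_hpd(rng, n=3):
    """Random Hermitian positive-definite matrix, used only for the demonstration."""
    X = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    return X @ X.conj().T + n * np.eye(n)
```

For any pair produced by `random_hpd`, `w @ to_real_vector(T) + b` and `wishart_distance(T, W)` agree to floating-point precision, which is why a real-valued convolution kernel can stand in for the matrix-valued metric.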

In addition, to learn the statistical characteristics of complex matrices, we initialize the convolution kernel as the class center. The Wishart convolution is therefore interpretable: it learns the distance between each pixel and the class centers, which overcomes the non-interpretability of traditional networks. The number of kernels is set equal to the number of classes, and the initial convolution kernel is calculated by averaging the complex matrices of labeled samples for each class. After the first Wishart convolution layer, a complex matrix is transformed into a real value for each pixel. Subsequently, several CNN convolution layers are utilized to learn the contextual high-level features.
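The kernel initialization described above (one kernel per class, set to the mean of that class's labeled matrices) can be sketched as follows. The array layout, the use of `-1` for unlabeled pixels, and the function name are assumptions made for illustration.

```python
import numpy as np

def class_centers(T_pixels, labels, num_classes):
    """Average the coherency matrices of the labeled samples of each class.

    T_pixels: (N, 3, 3) complex array, one coherency matrix per pixel.
    labels:   (N,) integer array; values in [0, num_classes), -1 means unlabeled.
    """
    centers = np.zeros((num_classes, 3, 3), dtype=complex)
    for c in range(num_classes):
        members = T_pixels[labels == c]
        if len(members) == 0:
            raise ValueError(f"class {c} has no labeled samples")
        centers[c] = members.mean(axis=0)  # initial kernel for class c
    return centers
```

Each returned center would then be converted into a real-valued kernel before being loaded into the first layer.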

(2) Multi-feature subnetwork

The Wishart subnetwork is capable of effectively learning the statistical characteristics of the complex matrix. However, when it comes to heterogeneous areas, the individual complex matrices cannot learn the high-level semantic features. This is because the heterogeneous structure results in neighboring pixels having significantly different scattering matrices, even though they belong to the same class. To learn high-level semantic information in heterogeneous areas, it is necessary to employ multiple features that offer complementary information to the original data. In this paper, a 57-dimensional feature set is extracted, which encompasses both the original data and various polarimetric decomposition-based features, including Cloude decomposition, Freeman decomposition, and Yamaguchi decomposition. The detailed feature extraction process can be found in [45], as shown in Table 1. The feature vector is defined as

, which describes each pixel from several perspectives. Due to the great ranges of different features, a normalization process is employed initially. Subsequently, several layers of convolutions are applied to facilitate the learning of high-level features.
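The text does not state which normalization is used. As one plausible choice, the sketch below applies per-feature min-max scaling so that features with very different ranges become comparable; the function name and the (H, W, D) layout are assumptions.

```python
import numpy as np

def minmax_normalize(F, eps=1e-12):
    """Scale each feature channel of an (H, W, D) cube to the range [0, 1]."""
    lo = F.min(axis=(0, 1), keepdims=True)
    hi = F.max(axis=(0, 1), keepdims=True)
    # eps guards against division by zero for constant channels
    return (F - lo) / np.maximum(hi - lo, eps)
```

After scaling, a feature measured in thousands and one measured in hundredths occupy the same range, so neither dominates the first convolution layer purely because of its units.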

In addition, the network architecture employs a three-layer convolutional structure to achieve multi-scale feature learning. The convolution kernel size is , and the moving step size is set to 1. To reduce both the parameter number and computational complexity, we select the maximum pooling method for down-sampling. This technique effectively maintains the same receptive field while reducing the spatial dimensions of the feature maps.
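To make the effect of stride-1 convolution followed by max pooling concrete, here is a small sketch of the spatial-size arithmetic and of non-overlapping max pooling. The kernel size is not recoverable from the text, so the 3 × 3 kernel and 2 × 2 pooling window used in the usage note are assumptions, as are the function names.

```python
import numpy as np

def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial size after a convolution along one dimension."""
    return (n + 2 * padding - kernel) // stride + 1

def max_pool2d(x, size=2):
    """Non-overlapping max pooling on an (H, W) map; edges are cropped to a multiple of size."""
    H, W = x.shape
    H2, W2 = H // size, W // size
    x = x[:H2 * size, :W2 * size]
    return x.reshape(H2, size, W2, size).max(axis=(1, 3))
```

With an assumed 3 × 3 kernel, stride 1, and no padding, an 8 × 8 patch shrinks to 6 × 6 after one convolution, and a 2 × 2 max pooling then halves each side to 3 × 3.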

(3) The proposed DCCNN fusion network

To enhance the benefits derived from both the complex matrix and multiple features, a unified framework is designed to fuse these two subnetworks. To be specific, the complex matrix features

are extracted from the Wishart subnetwork, and the multi-feature vector

is obtained from the multi-feature subnetwork. Then, they are weighted and connected to construct the combined feature X. Later, several CNN convolution layers are utilized to fuse them. By multiple layer convolution, all the features are fused to capture global feature information effectively. Adaptive weights are learned to automatically obtain larger weights for effective features and smaller weights for useless features. Thus, discriminating features are extracted, and useless features are suppressed. The classification accuracy of the target object can be improved by focusing on useful features. Therefore, the feature transformation of the proposed DCCNN network can be described as

where

represents the feature

extracted from the Wishart subnetwork based on the T matrix,

indicates the feature

extracted from the multi-feature subnetwork based on the multi-feature

F, ⊕ is the connection operation of

and

, and

is the weight vector for the combined features. The combined features are then fed into the DCCNN, which is specifically designed to generate high-level features denoted as

. Finally, the softmax layer is utilized for classification.
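The weighted fusion can be pictured with a small sketch. It is not the network from the paper: the fusion convolutions are replaced here by a single linear layer, the weights are passed in rather than learned, and the names `fuse`, `classify`, `A`, and `c` are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def fuse(h_wishart, h_multi, weights):
    """Concatenate the two subnetwork outputs and rescale each channel by its weight."""
    x = np.concatenate([h_wishart, h_multi], axis=-1)
    return weights * x

def classify(x, A, c):
    """One linear layer plus softmax, standing in for the fusion convolutions."""
    return softmax(x @ A + c)
```

A weight near zero removes a channel from the combined feature, while a weight near one passes it through unchanged, which is the behavior the adaptive weights are meant to learn.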

3.2. Combining Edge-Preserving MRF and DCCNN Model

The proposed DCCNN model can effectively learn both the statistical characteristics and multiple features of PolSAR data. The learned high-level semantic features can improve the classification performance, especially for heterogeneous areas. However, as the number of convolution layers increases, the DCCNN model incorporates larger-scale contextual information. While this is beneficial for capturing global patterns and relationships, it poses challenges for edge pixels. The high-level features learned by the model struggle to distinguish neighboring pixels that lie on opposite sides of an edge and belong to different classes. Consequently, deep learning methods tend to blur edge details with high-level features. In order to learn the contextual relationships for heterogeneous terrain objects and simultaneously identify edge features accurately, we combine the proposed DCCNN network with the MRF to optimize the pixel-level classification results.

The MRF is a widely used probability model that can learn contextual relationships by designing an energy function. The MRF can learn the pixel features effectively, as well as incorporate contextual information. In this paper, we design an edge penalty function to revise the edge pixels and suppress the speckle noise. Within the MRF framework, an energy function is defined, which consists of data and prior terms. The data term represents the probability of each pixel belonging to a certain class, while the prior term is the class prior probability. The energy function is defined as

where

is the data term, which stands for the probability of data

belonging to class

for pixel

s. In this paper, we define the data term as the probability learned from the DCCNN model. The probability from the DCCNN model is normalized to

.

is the prior term, which is the prior probability of class

. In the MRF, the spatial contextual relationship is implemented to learn the prior probability.

is a neighboring set of pixel

s, and

r is the neighboring pixel of

s. When neighboring pixel

r has the same class label as pixel

s, the probability increases; otherwise, it decreases. When none of the neighboring pixels belong to class

, it indicates that pixel

s may likely be a noisy point. In such cases, it is advisable to revise the classification of pixel

s to match the majority class of its neighboring pixels. In addition, the neighborhood set is essential for the prior term. If pixel

s belongs to a non-edge region, a

square neighbor is suitable for suppressing speckle noise. If pixel

s is nearing the edges, its neighbors should be pixels along the edges instead of pixels in a square box. Furthermore, it is not fair that all the neighbors contribute to the pixel with the same probability, especially for edge pixels. Pixels on the same side of the edge are similar to the central pixel, which should have a higher probability than totally different pixels crossing the edge, even though they are also close to the central pixel. Neighboring pixels crossing the edge with a completely different class are unfavorable for estimating the probability of pixel

s and can even lead to erroneous estimation.

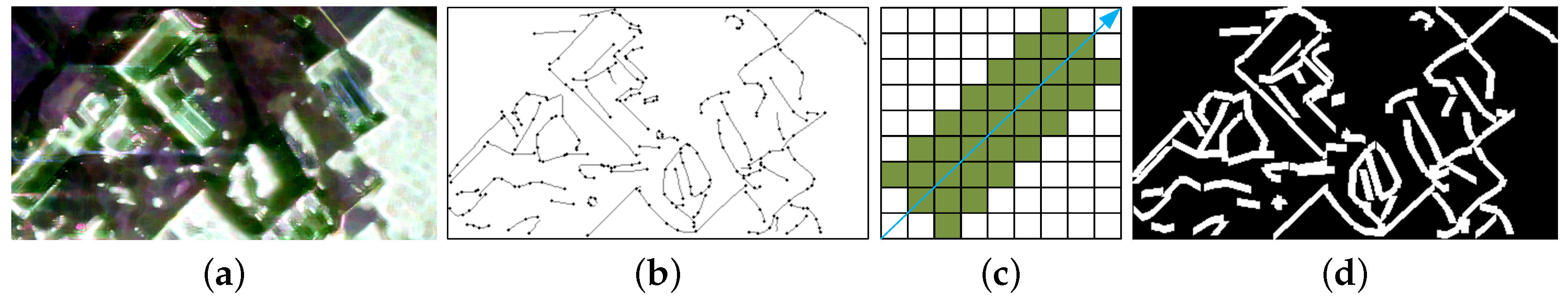

In this paper, we first define the edge and non-edge regions for a PolSAR image by utilizing the polarimetric sketch map [43]. The polarimetric sketch map is calculated by polarimetric edge detection and sketch pursuit methods. Each sketch segment is characterized by its direction and length. Then, edge regions are extracted using a geometric structure block to expand a certain width along the sketch segments, such as a five-pixel width. Figure 3 illustrates examples of edge and non-edge regions. Figure 3a shows the PolSAR PauliRGB image. Figure 3b shows the polarimetric sketch map extracted from (a). Figure 3c shows the geometric structure block. By expanding the sketch segments with (c), the edge and non-edge regions are shown in Figure 3d. Pixels in white are edge regions, while pixels in black are non-edge regions. The directions of the edge pixels are assigned as the directions of the sketch segments.
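Expanding sketch segments into edge regions is essentially a binary dilation. The sketch below uses a plain square block as a stand-in for the geometric structure block of Figure 3c, whose exact shape is not given in the text; the function name and the default five-pixel width are illustrative.

```python
import numpy as np

def dilate(mask, width=5):
    """Binary dilation of a boolean sketch mask with a width x width square block."""
    r = width // 2
    H, W = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    out = np.zeros_like(mask, dtype=bool)
    # A pixel is an edge pixel if any sketch pixel falls inside the block centred on it.
    for dy in range(width):
        for dx in range(width):
            out |= padded[dy:dy + H, dx:dx + W]
    return out
```

Pixels where the result is `True` would form the edge region (white in Figure 3d), and the remaining pixels the non-edge region.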

In addition, we design adaptive neighborhood sets for edge and non-edge regions. For non-edge regions, a

box is utilized as the neighborhood set. For edge regions, we adopt an adaptive weighted neighborhood window to obtain the adaptive neighbors. That is, the pixels along the edges have a higher probability than the other pixels. The weight of pixel

r to central pixel

s is measured by the revised Wishart distance, defined as

where

and

are the covariance matrices of neighboring and central pixels, respectively. According to the Wishart measurement, the weight of neighboring pixel

r to central pixel

s is defined as

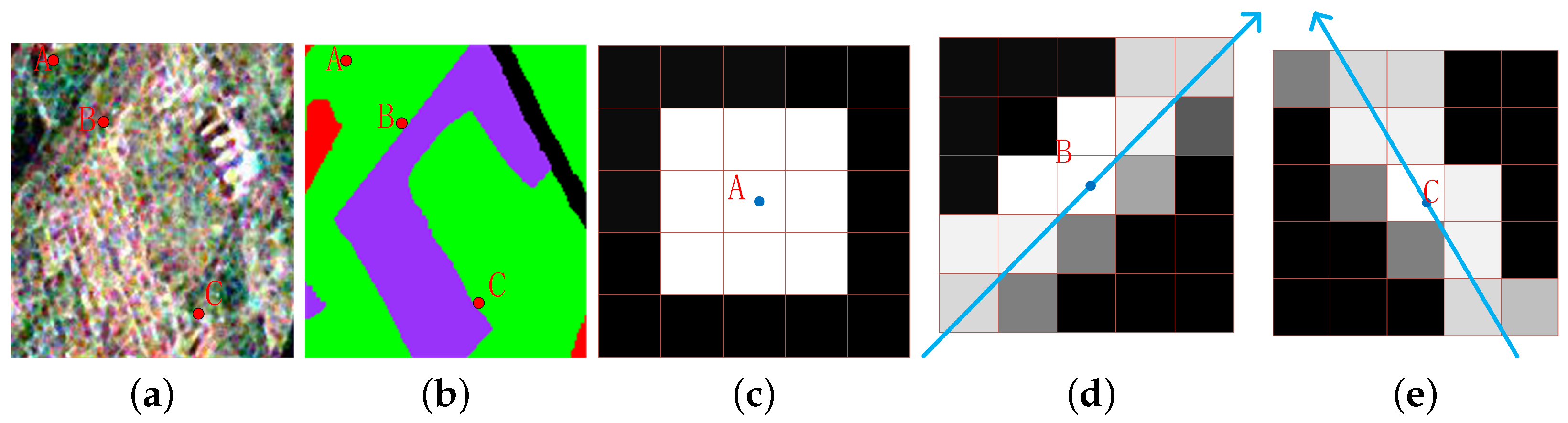

The adaptive weighted neighboring window is shown in Figure 4. Figure 4a shows the Pauli RGB subimage of the Xi’an area, in which pixel A is in the non-edge region, while pixels B and C belong to edge regions. Figure 4b shows the class label map of (a). We select a neighborhood window for pixel A in the non-edge region, as shown in Figure 4c. Figure 4d,e depict the adaptive weighted neighbors for points B and C, respectively. In addition, for edge pixels, varying weights are assigned to the neighboring pixels. It is evident that the neighborhood pixels are always located along the edges. The black pixels that are distant from the center pixel no longer qualify as neighborhood pixels. Furthermore, neighborhood pixels with lighter colors are assigned higher weights, while pixels with darker colors have lower weights. From Figure 4d,e, we can see that pixels on the same side of the edge have higher weights than those on the other side, which avoids the confusion caused by neighboring pixels crossing the edge.
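The distance and weight formulas themselves are not reproduced in this text, so the following is only a sketch of how such weights could behave. It assumes the symmetric form of the revised Wishart distance, d = ½·Tr(C_r⁻¹C_s + C_s⁻¹C_r) − q, and an exponential mapping w = exp(−d); both choices, and the function names, are assumptions rather than the paper's definitions.

```python
import numpy as np

def symmetric_revised_wishart(C_r, C_s):
    """Assumed distance: 0.5 * Tr(C_r^{-1} C_s + C_s^{-1} C_r) - q, zero when C_r == C_s."""
    q = C_r.shape[0]
    t = np.trace(np.linalg.solve(C_r, C_s)) + np.trace(np.linalg.solve(C_s, C_r))
    return float(0.5 * t.real - q)

def neighbor_weight(C_r, C_s):
    """Assumed mapping from distance to weight: identical matrices get weight 1."""
    return float(np.exp(-symmetric_revised_wishart(C_r, C_s)))
```

Under these assumptions, a neighbor on the same side of the edge (similar covariance matrix) receives a weight close to 1, while a neighbor across the edge receives a weight that decays toward 0.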

According to the adaptive weighted neighborhood, we develop an edge-preserving prior term that effectively integrates the contextual relationship while simultaneously minimizing the impact of neighboring pixels that traverse the edge. The prior term is built as follows:

where

is the balance factor between data and prior terms;

and

are the class labels of pixel s and r, respectively;

is the neighborhood weight of pixel

r to central pixel

s; and

is the Kronecker delta function, defined as

where

takes a value of 1 when

and

are equal, and 0 otherwise. It is used to describe the class relationship between the central point and its neighbor pixels. After MRF optimization, the proposed method can obtain the final classification map with both better region homogeneity in heterogeneous regions and edge preservation.
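How the weighted prior interacts with the data term can be illustrated with a single-pixel update. This is a sketch under stated assumptions, not the paper's energy function: it takes the local energy as −log P_s(c) − β·Σ_r w_rs·δ(c, y_r) and picks the class with the lowest energy; the names `local_energy`, `update_label`, and `beta` are hypothetical.

```python
import math

def kronecker_delta(a, b):
    """1 when the two class labels are equal, 0 otherwise."""
    return 1 if a == b else 0

def local_energy(c, prob_s, neighbor_labels, neighbor_weights, beta):
    """Assumed local energy of assigning class c to the central pixel."""
    data = -math.log(max(prob_s[c], 1e-12))
    prior = -beta * sum(
        w * kronecker_delta(c, y_r)
        for y_r, w in zip(neighbor_labels, neighbor_weights)
    )
    return data + prior

def update_label(prob_s, neighbor_labels, neighbor_weights, beta=1.0):
    """Choose the class with the lowest local energy for one pixel."""
    classes = range(len(prob_s))
    return min(classes, key=lambda c: local_energy(
        c, prob_s, neighbor_labels, neighbor_weights, beta))
```

With class probabilities `[0.55, 0.45]` and neighbor labels `[1, 1, 1, 0]`, uniform weights let the three class-1 neighbors outvote the data term, whereas small weights on those three neighbors (as for pixels across an edge) leave the central pixel in class 0.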

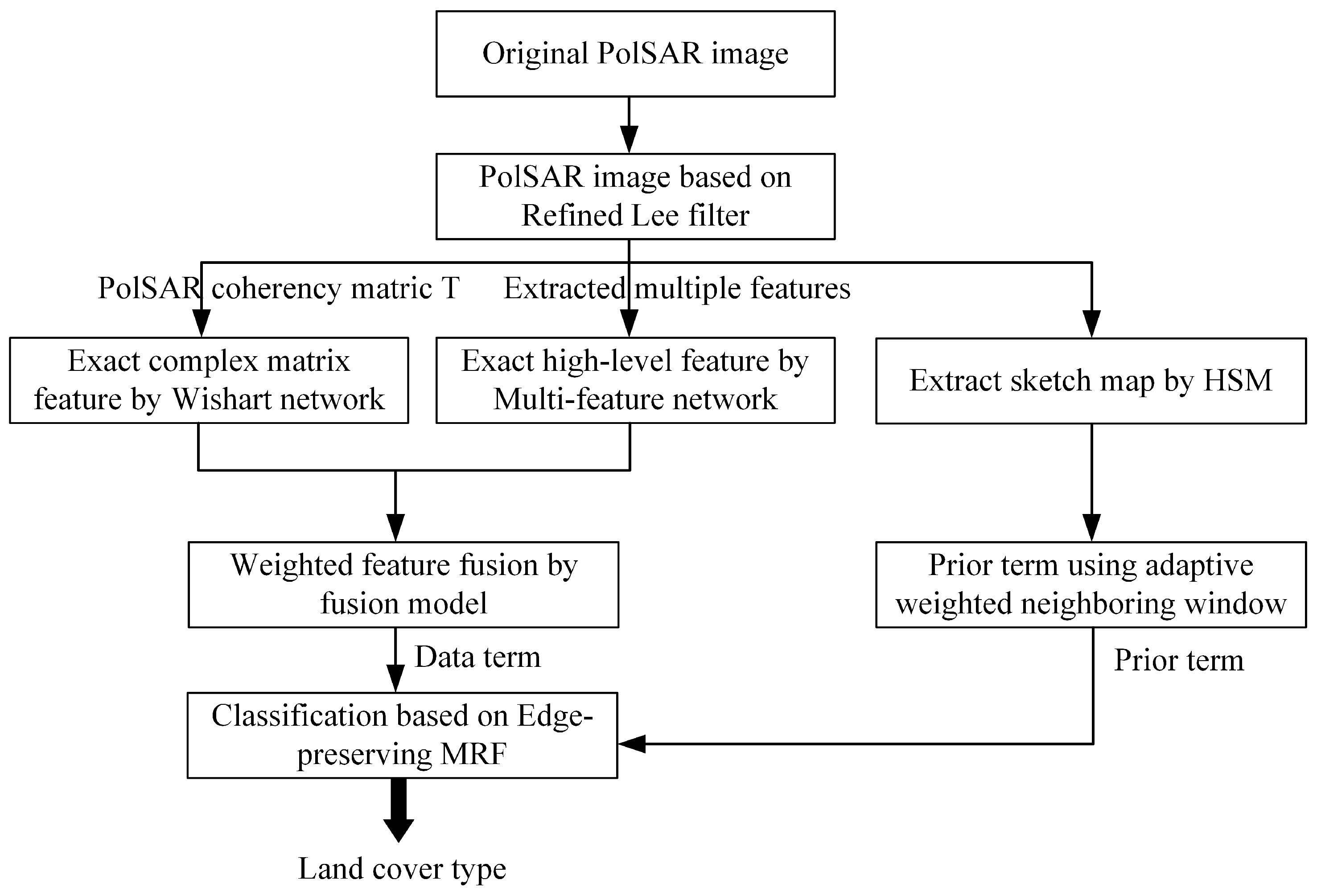

A flowchart of the proposed DCCNN-MRF method is presented in Figure 5. Firstly, the refined Lee filter is applied to reduce speckle noise. Secondly, a Wishart complex matrix subnetwork is designed to learn complex matrix features, and a multi-feature subnetwork is developed to learn multiple scattering features. Thirdly, the two kinds of features are weight-fused to select discriminating features that enhance classification performance. Fourthly, to address the issue of edge confusion, a sketch map is extracted from the PolSAR image, and an adaptive weighted neighborhood window is constructed to design an edge-preserving MRF prior term. Finally, the proposed DCCNN-MRF method combines the data term from the DCCNN model and the edge-preserving prior term, which can classify the heterogeneous objects into homogeneous regions, as well as preserve edge details. The proposed DCCNN-MRF algorithm procedure is described in Algorithm 1.

| Algorithm 1 Procedure of the proposed DCCNN-MRF method |
| --- |
| Input: PolSAR original data S, class label map, balance factor, and class number C. |
| Step 1: Apply a refined Lee filter to PolSAR data to obtain the filtered coherency matrix T. |
| Step 2: Extract multiple scattering features F from PolSAR images based on Table 1. |
| Step 3: Learn the complex matrix features from coherency matrix T using the Wishart subnetwork. |
| Step 4: Learn the high-level features from multiple features F by the multi-feature subnetwork. |
| Step 5: Weight-fuse the complex matrix features and the multi-feature representation in the DCCNN model and learn the fused feature. |
| Step 6: Obtain the class probability P and estimated class label map Y using the DCCNN model. |
| Step 7: Obtain the sketch map of the PolSAR image and compute the adaptive weighted neighbors for edge pixels by Equation (9). |
| Step 8: Optimize the estimated class label Y using Equation (7) according to the edge-preserving MRF model. |
| Output: class label estimation map Y. |

5. Conclusions

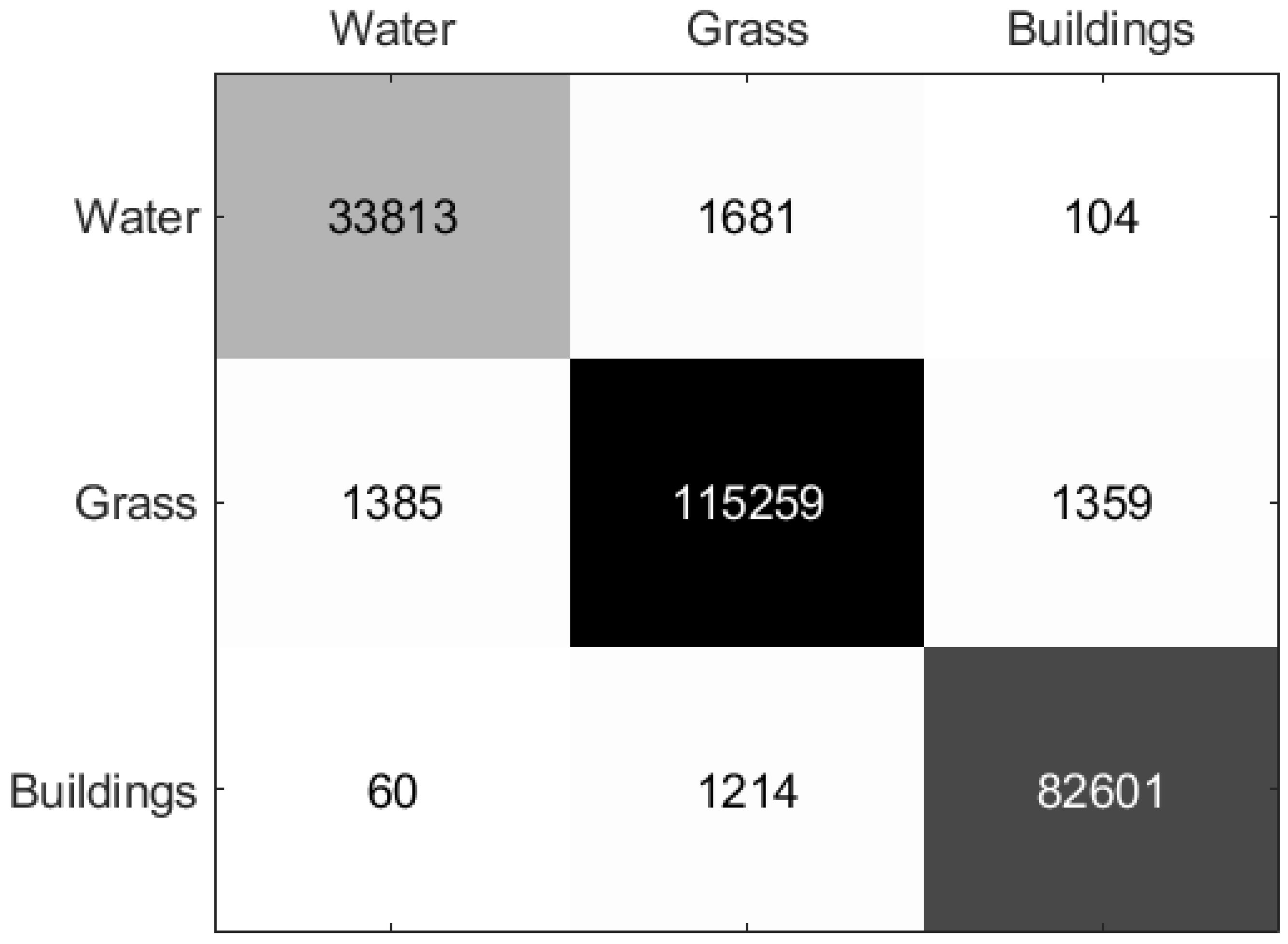

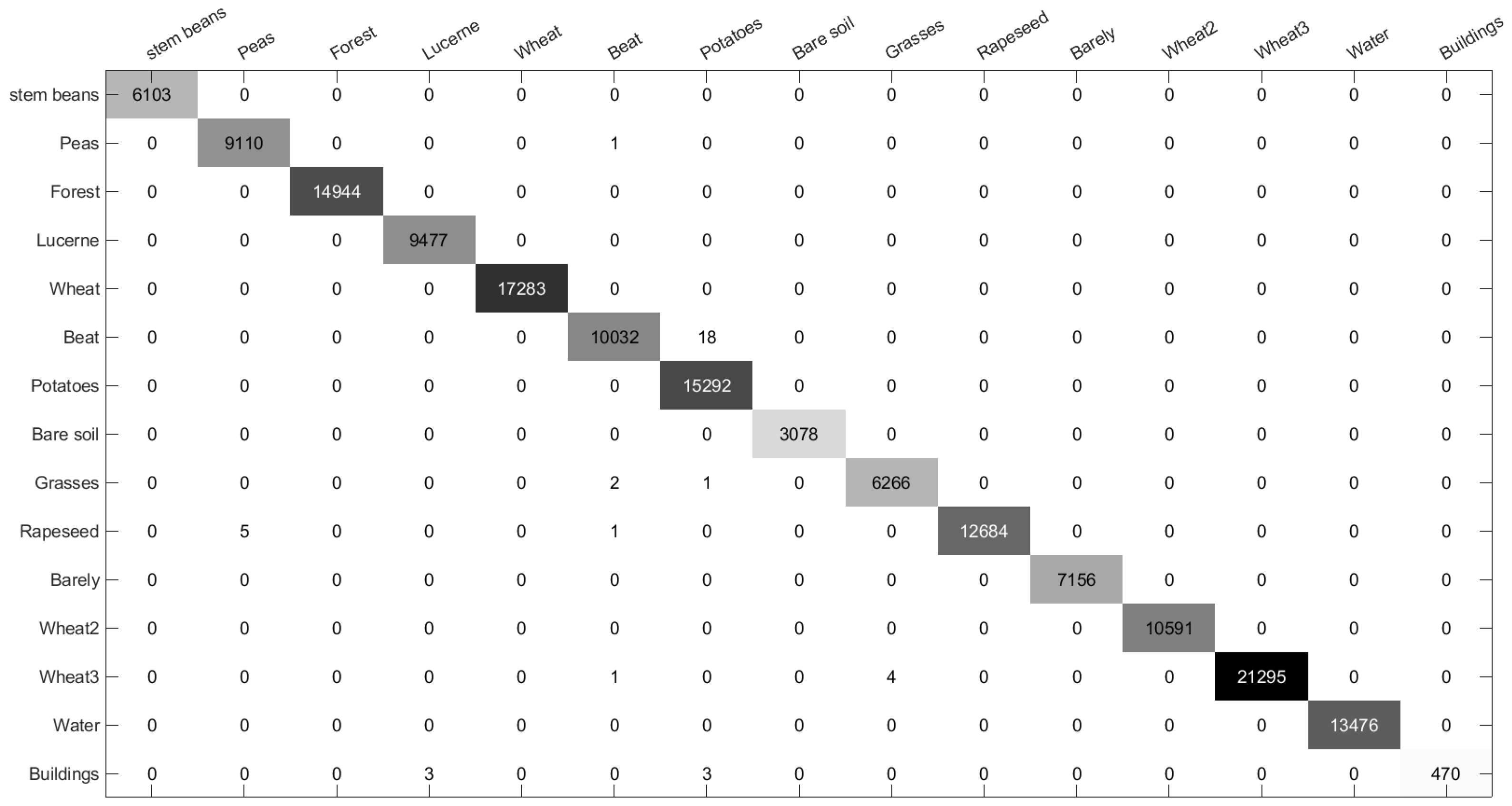

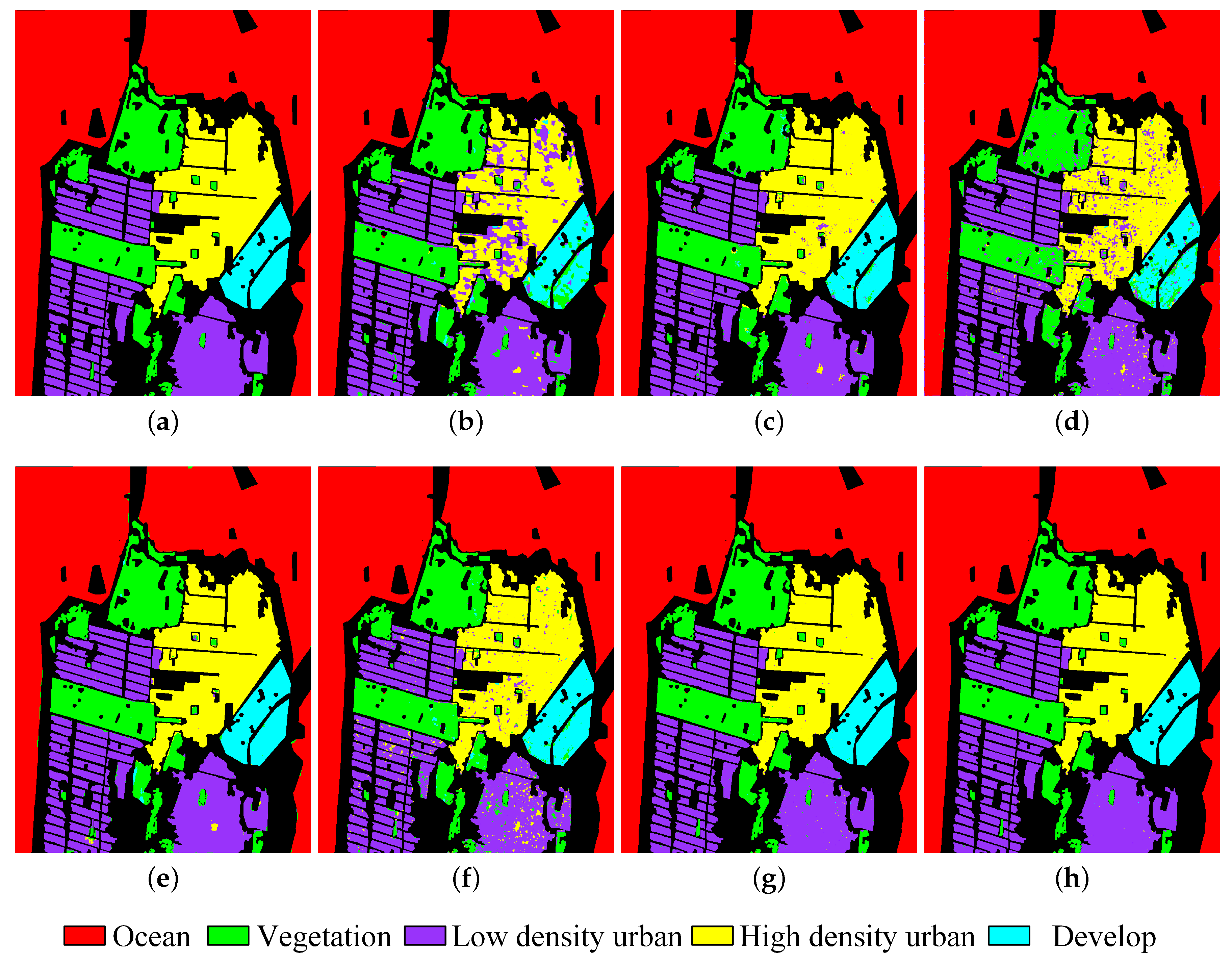

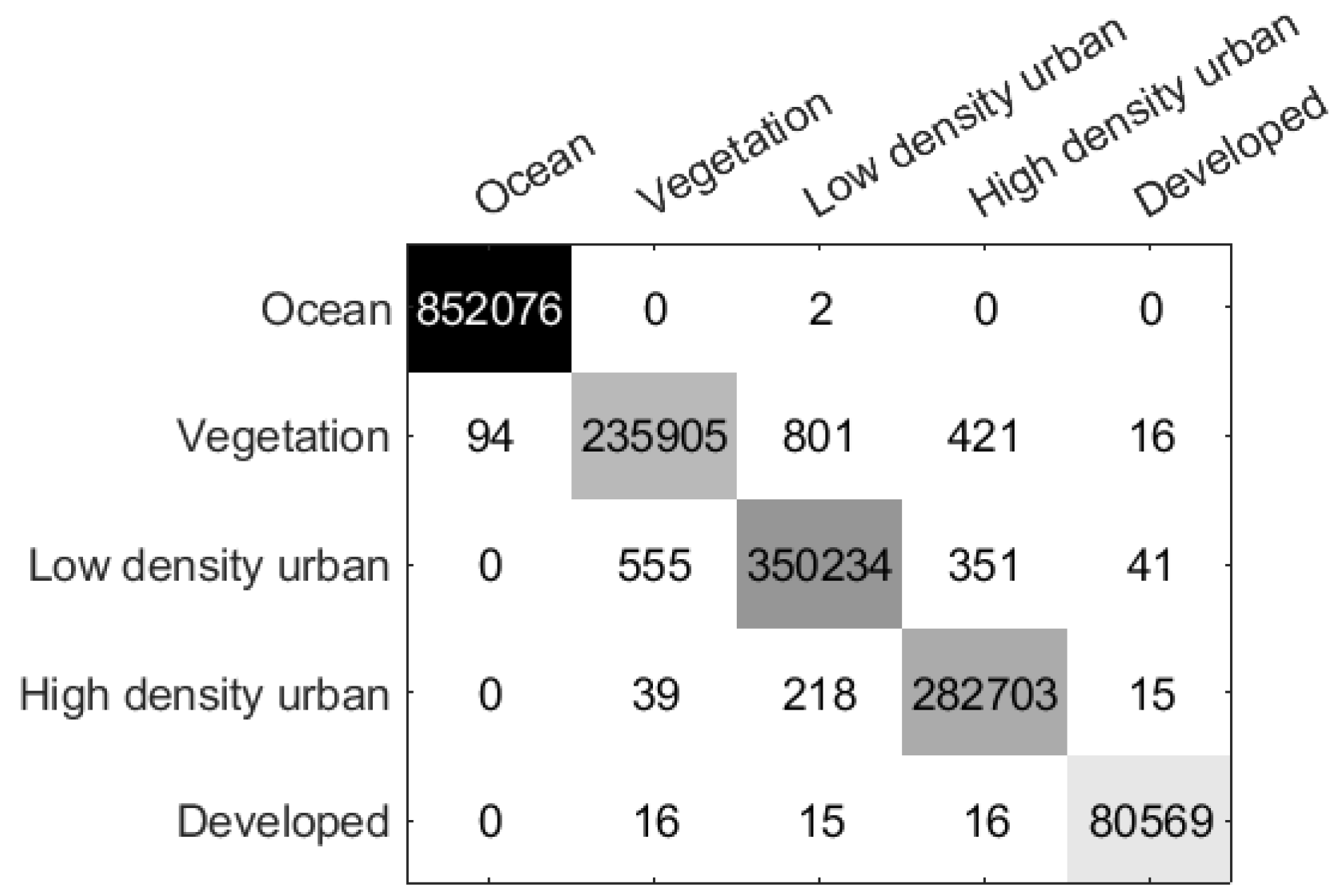

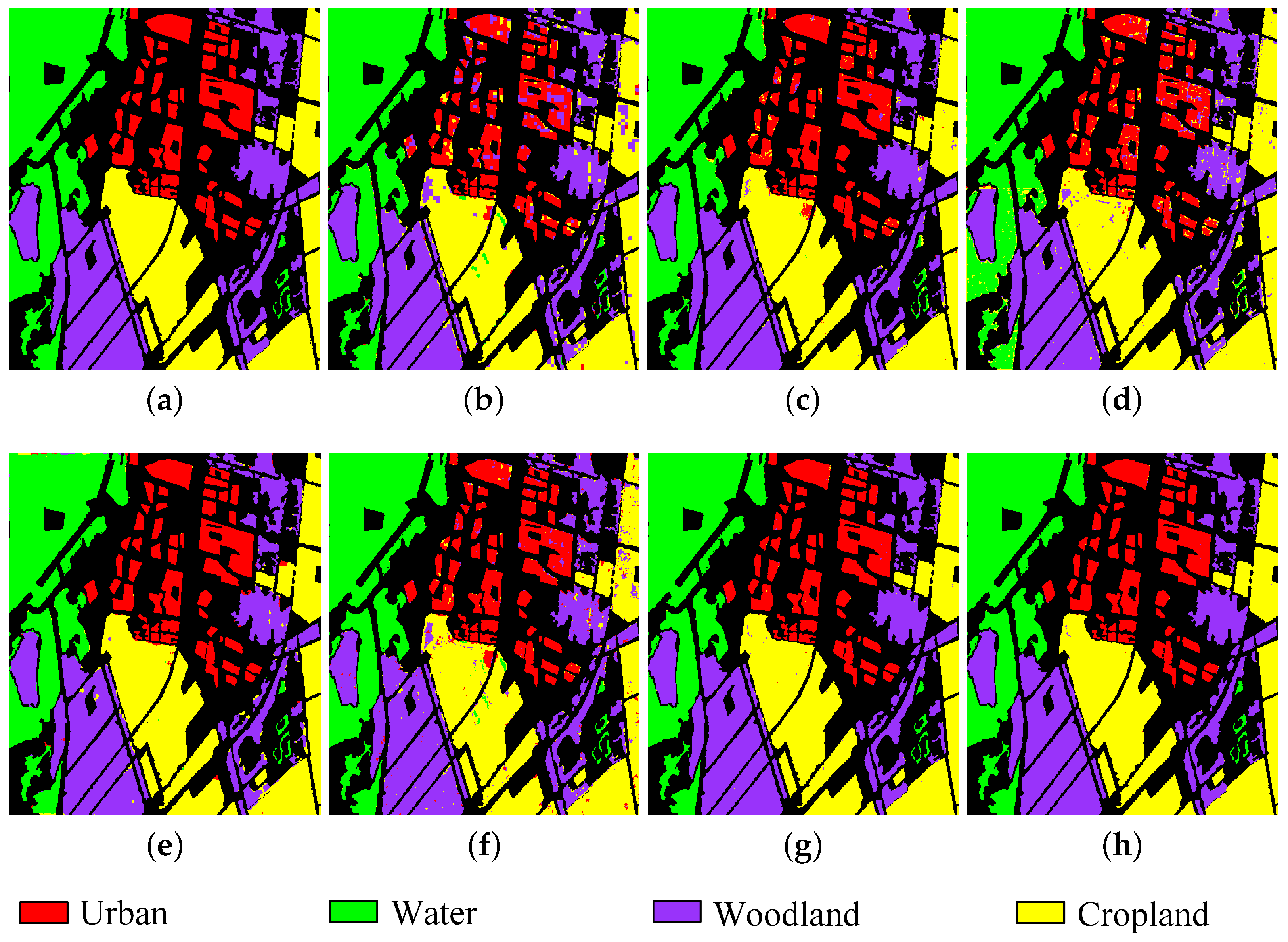

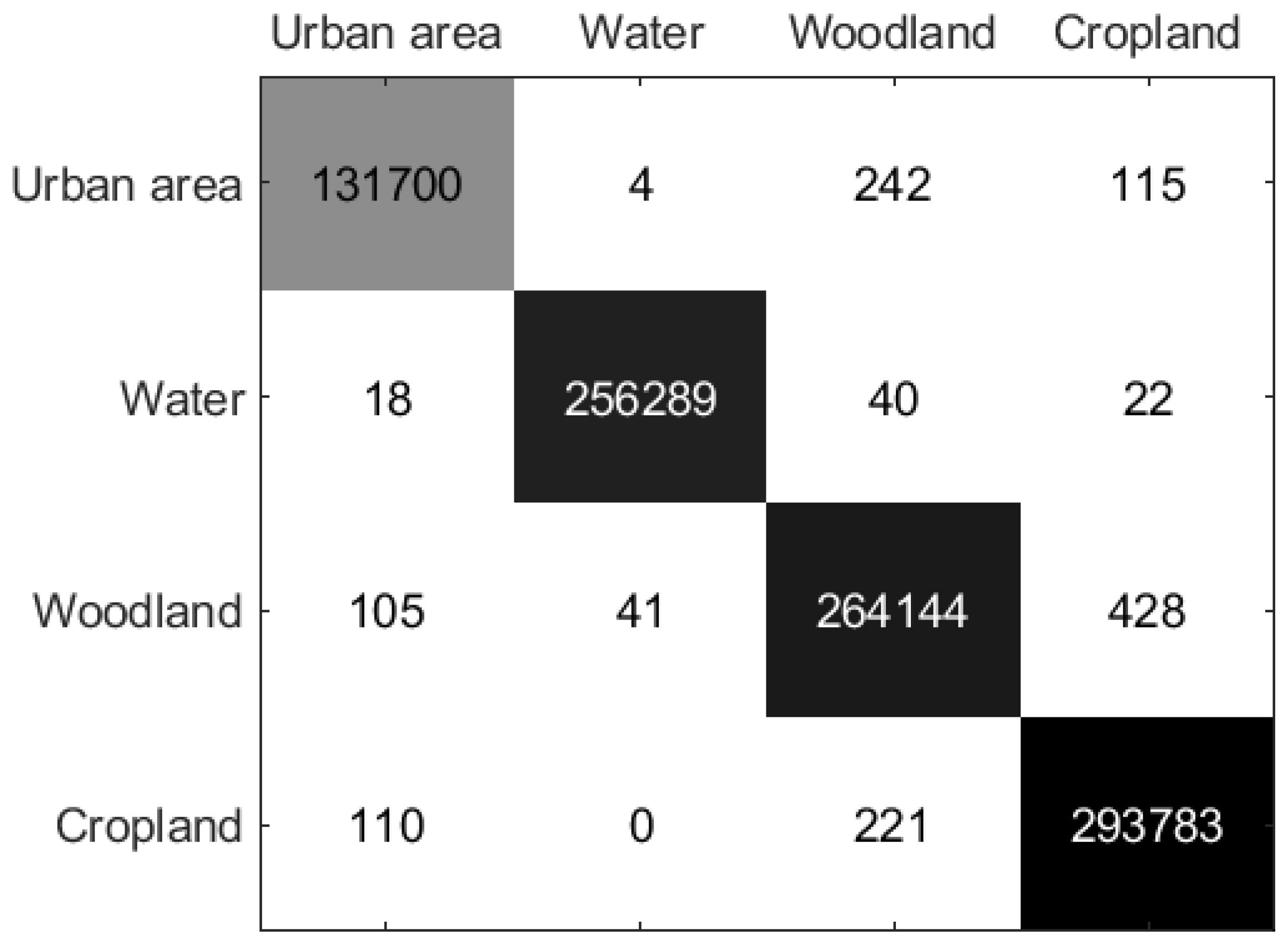

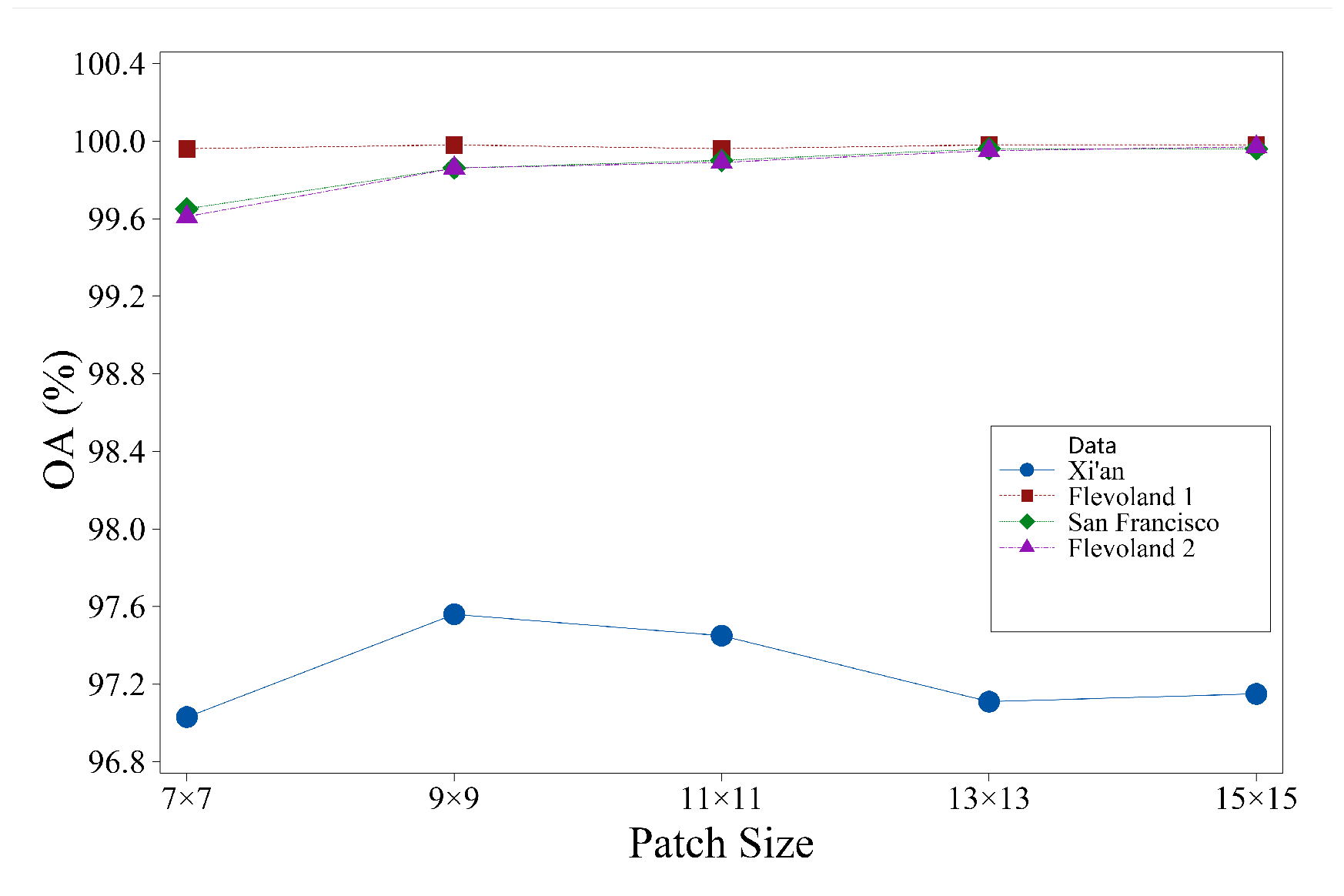

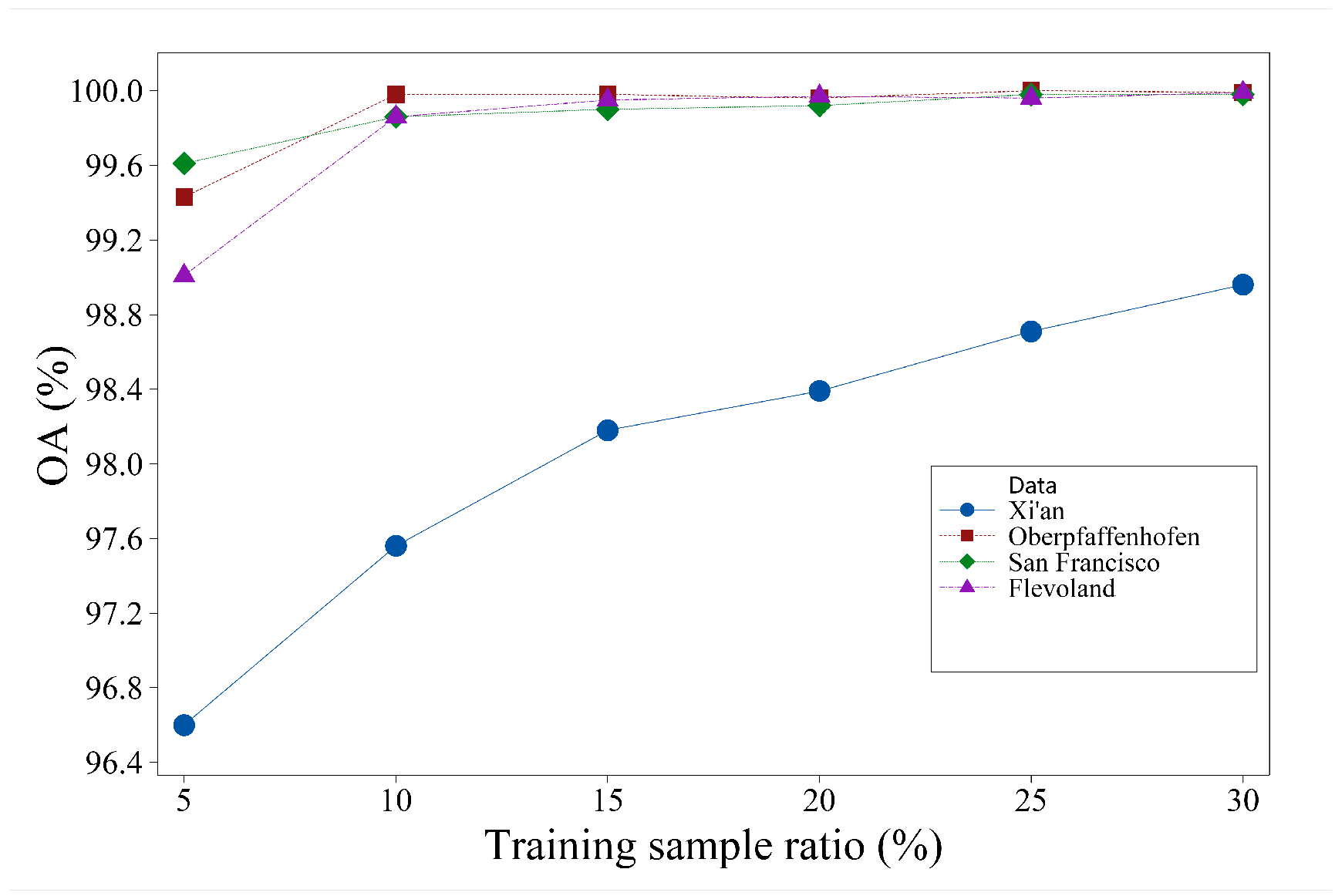

In this paper, a novel DCCNN-MRF method was proposed for PolSAR image classification that combined a double-channel convolution network and edge-preserving MRF to improve classification performance. Firstly, a novel DCCNN was developed, which consisted of Wishart-based complex matrix and multi-feature subnetworks. The Wishart-based complex matrix subnetwork was designed to learn the statistical characteristics of the original data, while the multi-feature subnetwork was designed to learn more high-level scattering features, especially for extremely heterogeneous areas. Then, a unified framework was presented to combine the two subnetworks and fuse the advantageous features of both. Finally, the DCCNN model was combined with an edge-preserving MRF to alleviate the issue of edge confusion caused by the deep network. In this model, an adaptive weighted neighborhood prior term was developed to optimize the edges. Experiments were conducted on four real PolSAR datasets, and quantitative evaluation indicators were calculated, including the OA, AA, and Kappa coefficient. The experiments showed that the proposed methods could obtain both higher classification accuracy and better visual appearance compared with some related methods. Our findings demonstrated that the proposed method could not only obtain homogeneous classification results for heterogeneous terrain objects, but also preserve edge details well.
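For reference, the three evaluation indicators named above follow directly from a confusion matrix. The sketch below uses the standard definitions of overall accuracy (OA), average accuracy (AA, the mean per-class recall), and Cohen's Kappa coefficient; the function name and the convention that rows are true classes are assumptions.

```python
import numpy as np

def classification_scores(confusion):
    """Return (OA, AA, Kappa) from a confusion matrix with true classes as rows."""
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    oa = np.trace(cm) / total                      # fraction of correctly labeled pixels
    aa = np.mean(np.diag(cm) / cm.sum(axis=1))     # mean per-class recall
    pe = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / total ** 2  # chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return float(oa), float(aa), float(kappa)
```

For the confusion matrix `[[50, 0], [10, 40]]`, this gives OA = 0.9, AA = 0.9, and Kappa = 0.8.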

In addition, further work should focus on how to generate more training samples. Obtaining ground-truth data for PolSAR images is challenging, and the proposed method currently requires a relatively high percentage of training samples (10%). To address the issue of limited labeled samples, various techniques can be employed to augment the sample size. One such approach is the utilization of generative adversarial networks (GANs) to generate additional samples. In addition, a feature selection mechanism could be exploited to better fuse the complex matrix and multi-feature information.