Wheat Yield Prediction Using Unmanned Aerial Vehicle RGB-Imagery-Based Convolutional Neural Network and Limited Training Samples

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Setup

2.2. Data Acquisition

2.2.1. Field Data Collection

2.2.2. UAV RGB Imagery Collection

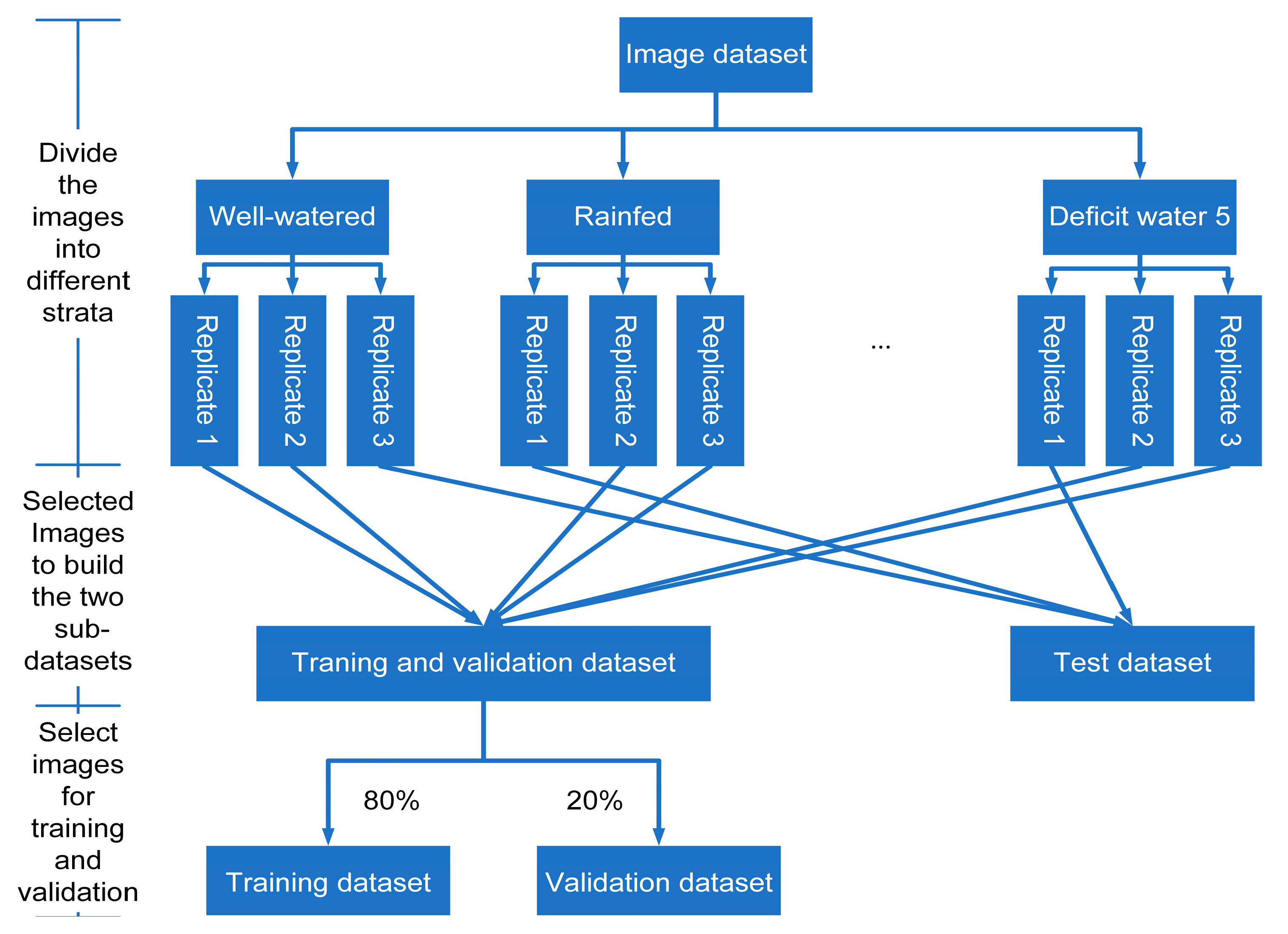

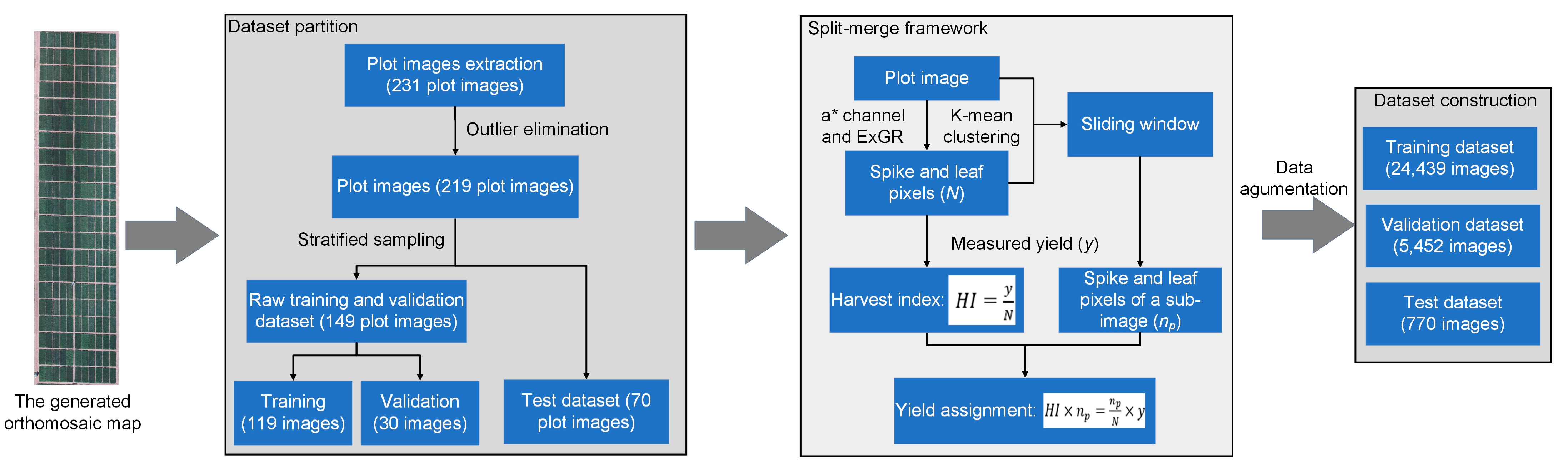

2.2.3. Data Preprocessing

2.3. The Field-Scale Yield Prediction Method

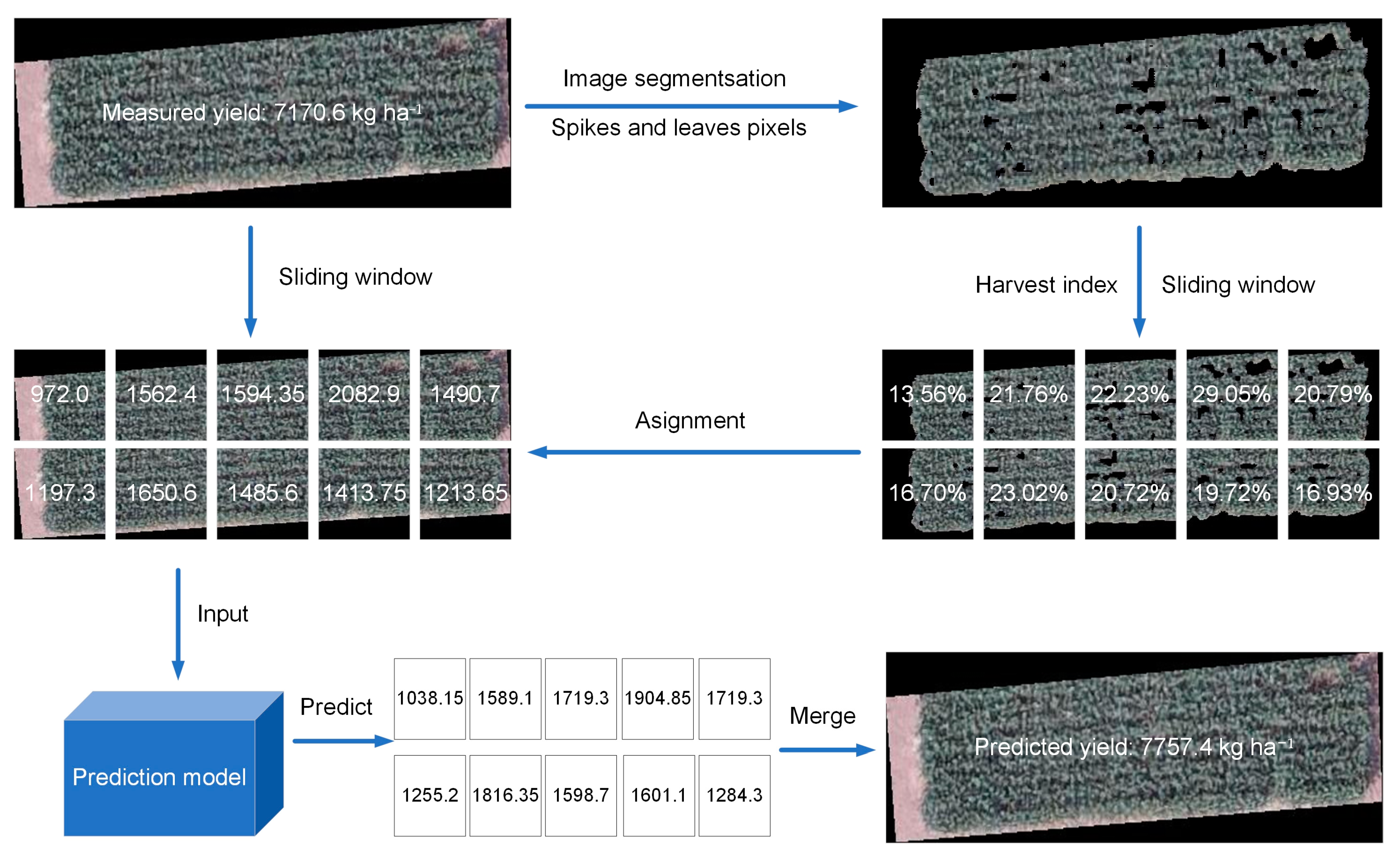

2.3.1. The Split-Merge Framework

2.3.2. The RGB-Imagery-Based Yield Prediction Model

2.4. Performance Evaluation

3. Results

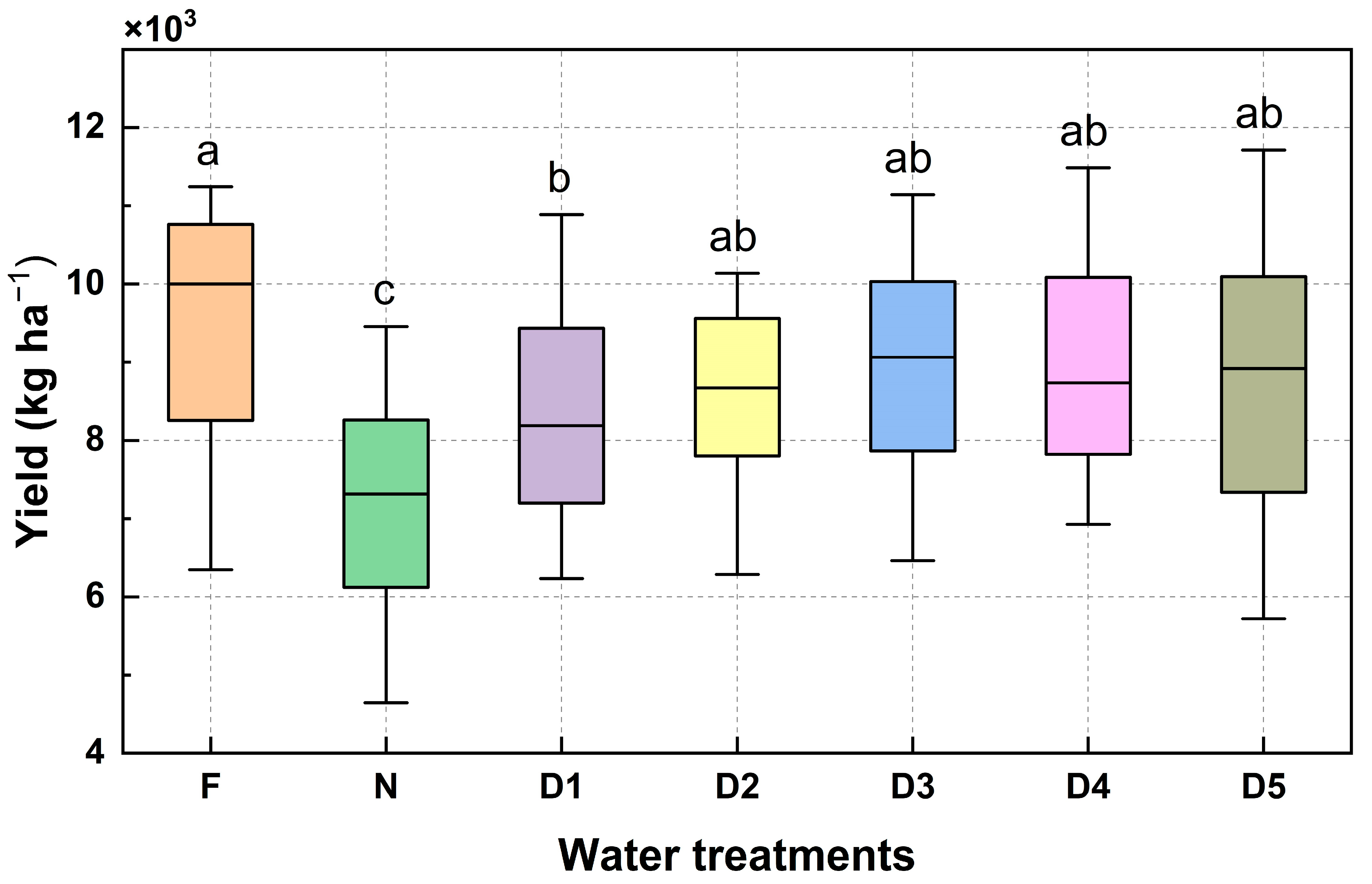

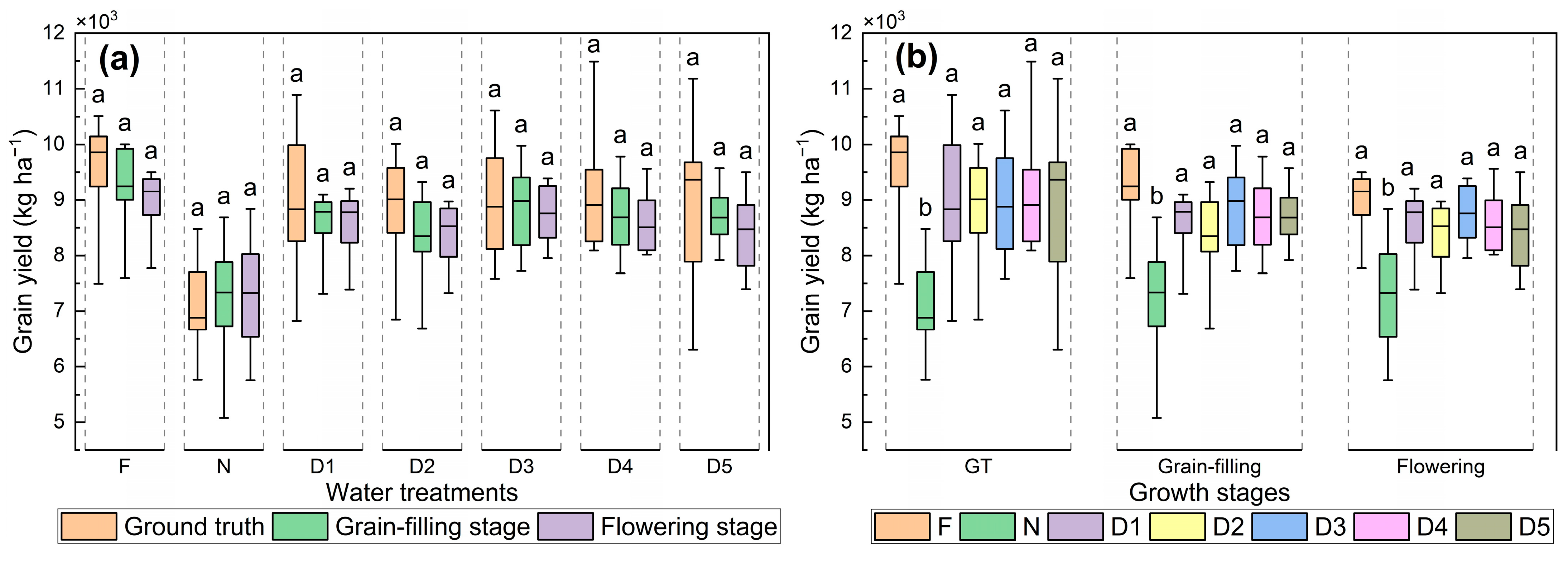

3.1. Impacts of Water Treatments

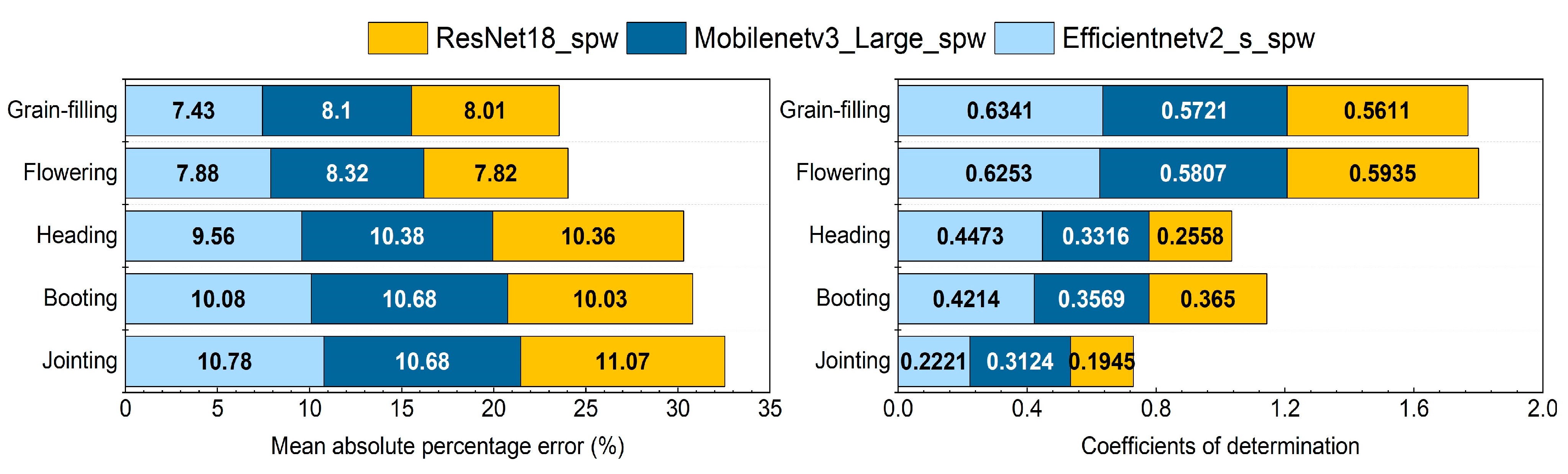

3.2. Prediction Results of the Yield Prediction Models

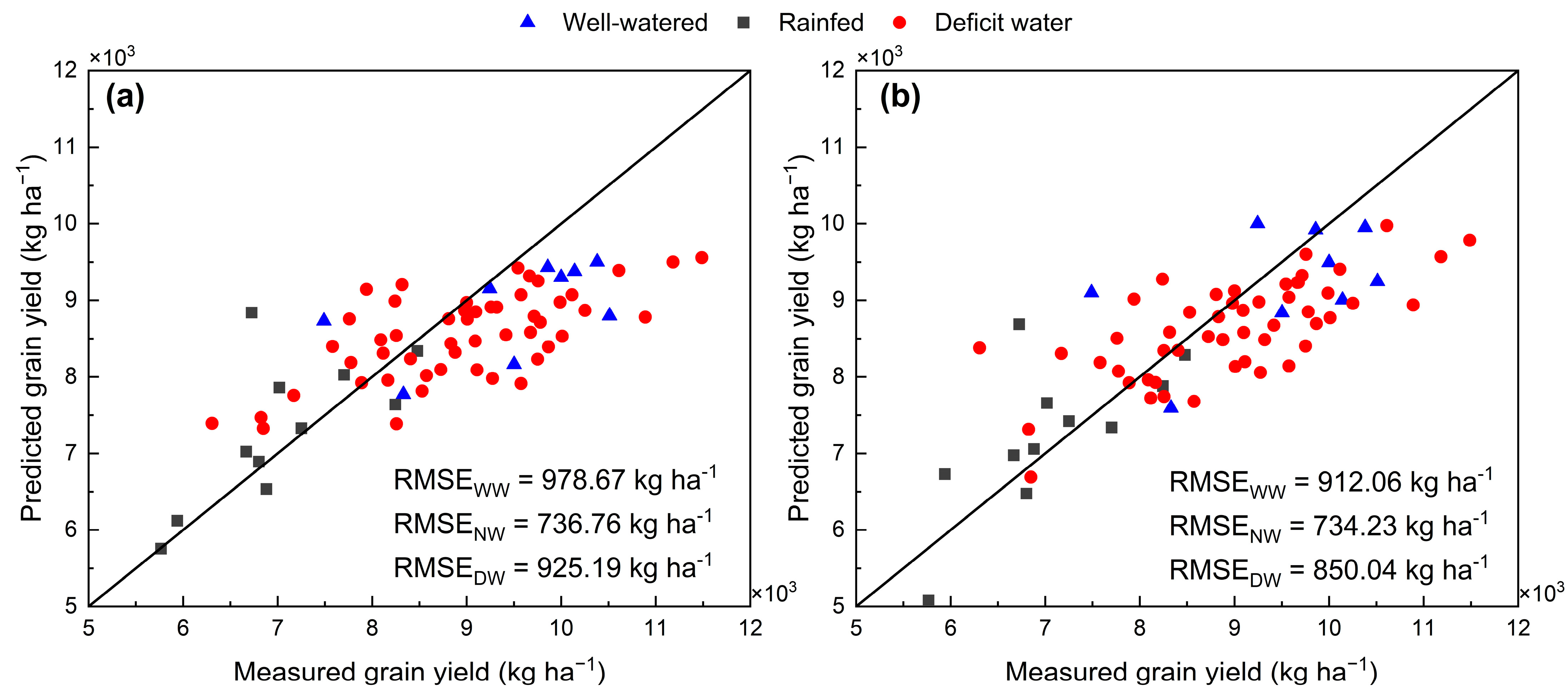

3.3. Performance of the Yield Prediction Model across Water Treatments

4. Discussion

4.1. Performance of UAV RGB-Imagery-Based CNNs

4.1.1. Potential of the Split-Merge Framework

4.1.2. Saturation Issues

4.2. Growth Stages for Grain Yield Prediction

4.3. Performance of the Yield Prediction Model across Water Treatments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Input | Operator | Out | Stride (S) | Layers (L) |
|---|---|---|---|---|
| 112² × 3 | Conv2d, 3 × 3 | 64 | 2 | 1 |
| 56² × 64 | Basicblock, 3 × 3 | 64 | 1 | 2 |
| 56² × 64 | Basicblock, 3 × 3 | 128 | 2 | 2 |
| 28² × 128 | Basicblock, 3 × 3 | 256 | 2 | 2 |
| 14² × 256 | Basicblock, 3 × 3 | 512 | 2 | 2 |
| 7² × 512 | Avgpool, 7 × 7 | - | - | 1 |
| 1² × 512 | Conv2d, 1 × 1, NBN | 1000 | 1 | 1 |
| 1² × 1000 | Conv2d, 1 × 1, NBN, dropout | 1 | 1 | 1 |

| Input | Operator | Out | SE | Stride (S) | Layers (L) |
|---|---|---|---|---|---|
| 112² × 3 | Conv2d, 3 × 3 | 24 | - | 1 | 1 |
| 112² × 24 | FusedMBConv1, 3 × 3 | 24 | - | 1 | 2 |
| 112² × 24 | FusedMBConv4, 3 × 3 | 48 | - | 2 | 4 |
| 56² × 48 | FusedMBConv4, 3 × 3 | 64 | - | 2 | 4 |
| 28² × 64 | MBConv4, 3 × 3 | 128 | 🗸 | 2 | 6 |
| 14² × 128 | MBConv6, 3 × 3 | 160 | 🗸 | 1 | 9 |
| 14² × 160 | MBConv6, 3 × 3 | 256 | 🗸 | 2 | 15 |
| 7² × 256 | Conv2d, 1 × 1, NBN | 1280 | - | 1 | |
| 7² × 1280 | Adaptive avgpool | - | - | 1 | 1 |
| 1² × 1280 | Conv2d, 1 × 1, NBN | 1000 | - | 1 | 1 |
| 1² × 1000 | Conv2d, 1 × 1, NBN, dropout | 1 | - | 1 | 1 |

| Input | Operator | Out | SE | Act | Stride (S) | Layers (L) |
|---|---|---|---|---|---|---|
| 112² × 3 | Conv2d, 3 × 3 | 16 | - | HS | 1 | 1 |
| 112² × 16 | MBConv, 3 × 3 | 16 | - | RE | 1 | 1 |
| 112² × 16 | MBConv, 3 × 3 | 24 | - | RE | 2 | 2 |
| 56² × 24 | MBConv, 5 × 5 | 40 | 🗸 | RE | 2 | 3 |
| 28² × 40 | MBConv, 3 × 3 | 80 | - | HS | 2 | 4 |
| 14² × 80 | MBConv, 3 × 3 | 112 | 🗸 | HS | 1 | 2 |
| 14² × 112 | MBConv, 5 × 5 | 160 | 🗸 | HS | 2 | 2 |
| 7² × 160 | Conv2d, 1 × 1 | 960 | - | HS | 1 | 1 |
| 7² × 960 | Adaptive avgpool | - | - | - | 1 | 1 |
| 1² × 960 | Conv2d, 1 × 1, NBN | 1280 | - | HS | 1 | 1 |
| 1² × 1280 | Conv2d, 1 × 1, NBN | 1000 | - | - | 1 | 1 |
| 1² × 1000 | Conv2d, 1 × 1, NBN | 1 | - | - | 1 | 1 |
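The Input column of the three architecture tables above can be cross-checked against the stride column (S) with a little arithmetic. The sketch below is only an illustration, not the authors' code: it takes the first input to be 112 × 112 × 3 and assumes each strided stage uses "same"-style padding, so that a side length n becomes ceil(n / s). The function name `spatial_sizes` and the stride lists are hypothetical helpers transcribed from the rows that precede the pooling layer.

```python
def spatial_sizes(input_size, strides):
    """Return the feature-map side length after each strided stage.

    Assumption (not stated in the tables): every stage pads its input so
    that a stride-s operator maps side length n to ceil(n / s).
    """
    sizes = []
    size = input_size
    for s in strides:
        size = -(-size // s)  # integer ceiling division
        sizes.append(size)
    return sizes


# Strides transcribed from the S column, rows before the pooling layer.
first_table_strides = [2, 1, 2, 2, 2]
second_table_strides = [1, 1, 2, 2, 2, 1, 2, 1]
third_table_strides = [1, 1, 2, 2, 2, 1, 2, 1]

for name, strides in [
    ("first table", first_table_strides),
    ("second table", second_table_strides),
    ("third table", third_table_strides),
]:
    print(name, spatial_sizes(112, strides))
```

Under these assumptions each network reduces the 112-pixel side to 7 before average pooling, which agrees with the side lengths listed in the Input column of the following rows.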

| Treatment | Abbreviation | Irrigation Time | Growth Stage | Irrigation Amount (m³ ha⁻¹) |
|---|---|---|---|---|
| Well-watered | F | 3 April 2021 and 3 May 2021 | Jointing and Flowering | 750 for each irrigation |
| Rainfed | N | / | / | / |
| Deficit water 1 | D1 | 29 November 2020 | Over-wintering | 750 |
| Deficit water 2 | D2 | 10 March 2021 | Regreening | 750 |
| Deficit water 3 | D3 | 3 April 2021 | Jointing | 750 |
| Deficit water 4 | D4 | 10 April 2021 | Jointing | 750 |
| Deficit water 5 | D5 | 18 April 2021 | Booting | 750 |

| Flight No. | Date | Zadoks Growth Stage | General Description |
|---|---|---|---|
| No. 1 | 8 April 2021 | GS34 | Middle stem elongation |
| No. 2 | 18 April 2021 | GS47 | Early booting, flag leaf sheath opening |
| No. 3 | 28 April 2021 | GS55 | Middle heading, half of the inflorescence emerged |
| No. 4 | 12 May 2021 | GS65 | Middle flowering |
| No. 5 | 21 May 2021 | GS77 | Middle grain-filling |

| Evaluation Metric | Model | Well-Watered | Rainfed | Deficit Water |
|---|---|---|---|---|
| R² | Flowering | 0.3100 | 0.7997 | 0.4460 |
| R² | Grain-filling | 0.2232 | 0.7288 | 0.4971 |
| MAPE (%) | Flowering | 9.11 | 4.12 | 8.40 |
| MAPE (%) | Grain-filling | 8.74 | 6.02 | 7.48 |
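For readers who want to reproduce the two metrics reported in the table above, the following is a minimal sketch of how R² and MAPE are commonly computed. It assumes the standard definitions (coefficient of determination as one minus the ratio of residual to total sum of squares, and mean absolute percentage error relative to the observed value); the exact formulation used by the authors is not shown in this section, and the function names `r_squared` and `mape` are hypothetical.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent.

    Observed values must be non-zero, which holds for measured grain yield.
    """
    errors = [abs((t - p) / t) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(errors) / len(errors)


# Made-up observed and predicted values, for illustration only.
observed = [100.0, 200.0]
predicted = [110.0, 180.0]
print(r_squared(observed, predicted), mape(observed, predicted))
```

Computing both metrics separately for each water treatment, as in the table, only requires filtering the plots by treatment before calling the two functions.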

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, J.; Wu, Y.; Liu, B.; Zhang, W.; Wang, B.; Chen, Z.; Wang, G.; Guo, A. Wheat Yield Prediction Using Unmanned Aerial Vehicle RGB-Imagery-Based Convolutional Neural Network and Limited Training Samples. Remote Sens. 2023, 15, 5444. https://doi.org/10.3390/rs15235444
