A Practical Star Image Registration Algorithm Using Radial Module and Rotation Angle Features

Abstract

1. Introduction

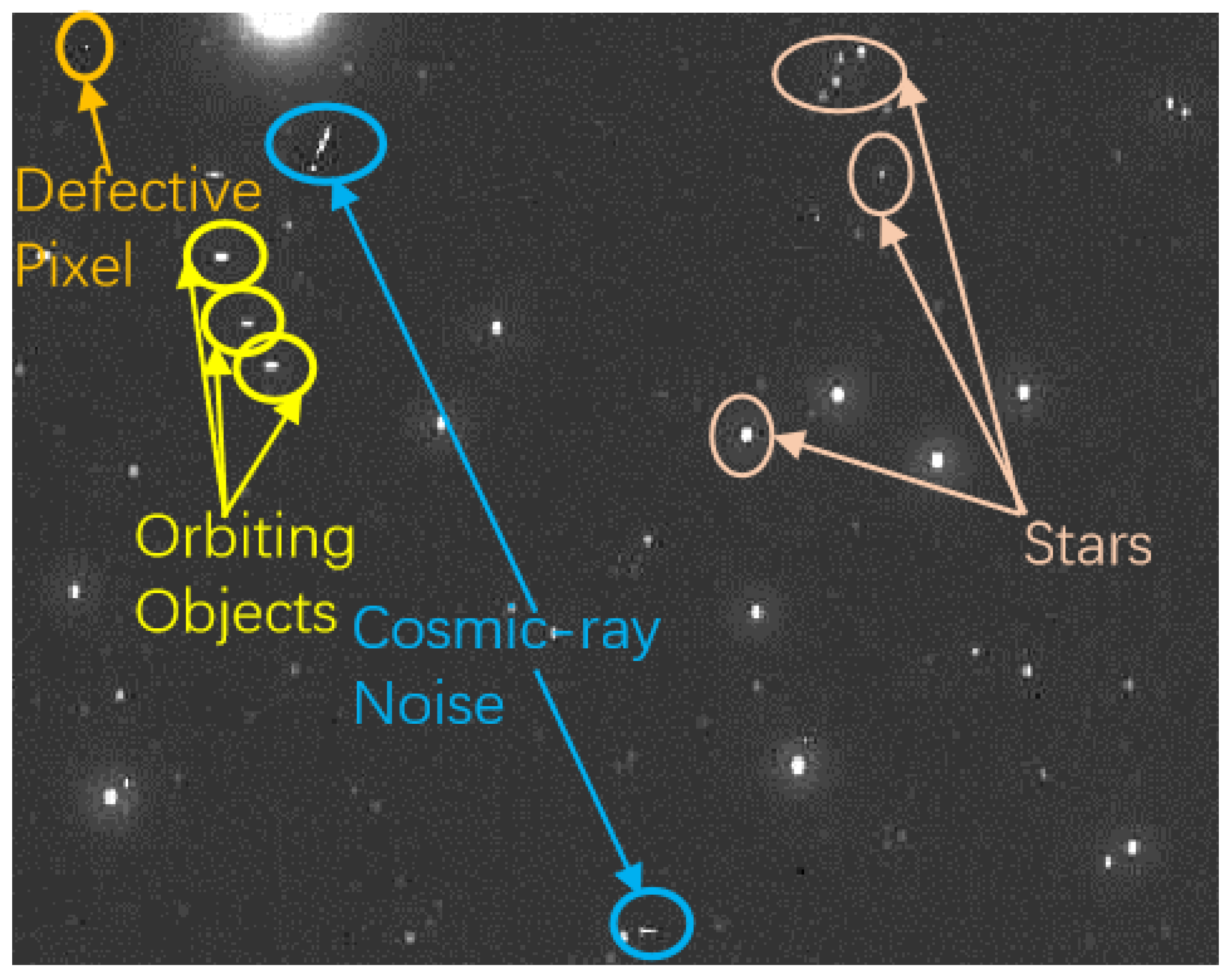

2. Image Preprocessing

2.1. Background Suppression

2.2. Stellar Centroid Positioning

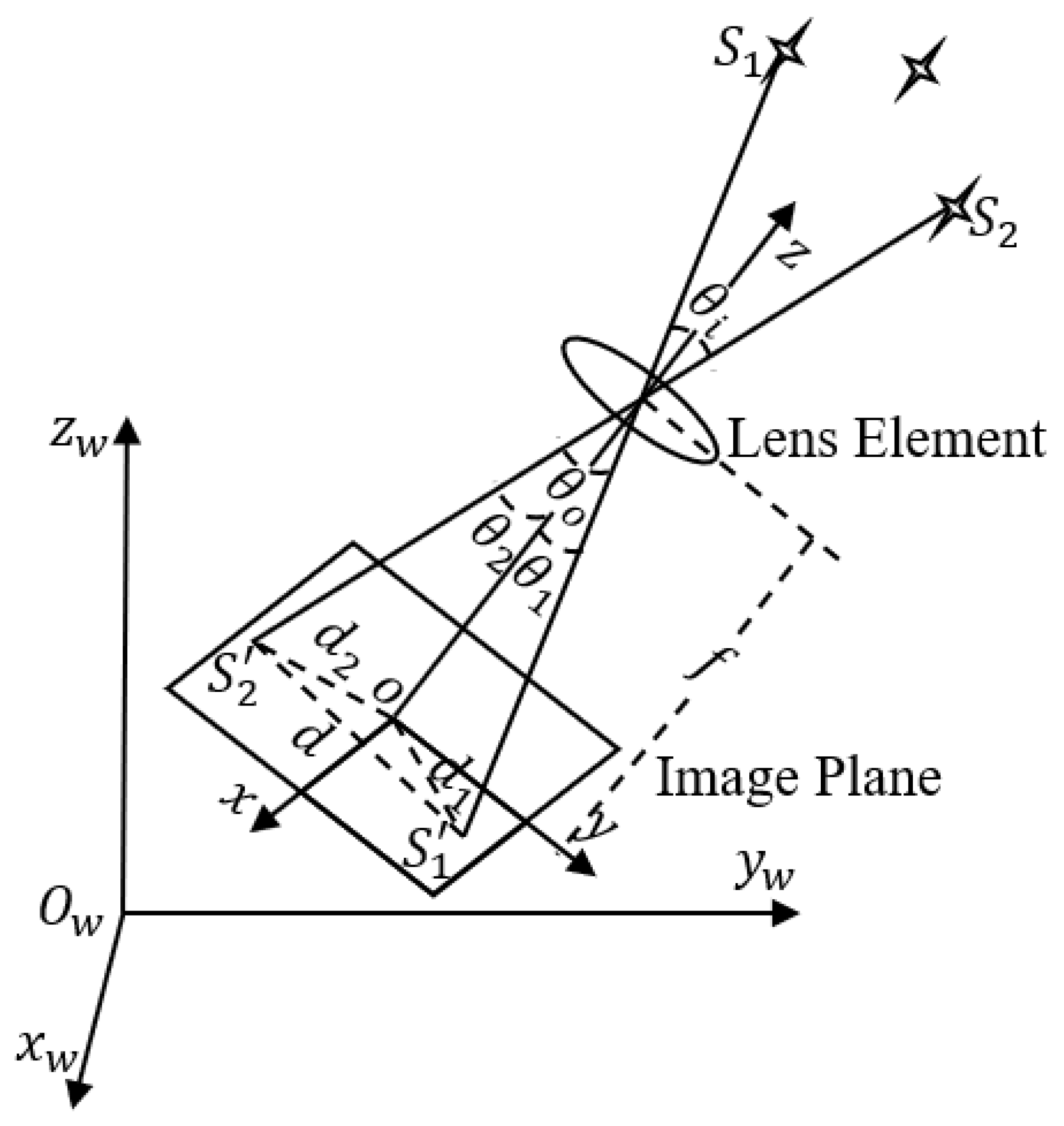
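The body text of this subsection is not reproduced here. As a generic illustration only, and not necessarily the authors' method, the sketch below computes an intensity-weighted (center-of-mass) centroid of a star inside a small pixel window after subtracting an assumed constant background level. The function name `weighted_centroid` and its `background` parameter are hypothetical.

```python
def weighted_centroid(window, background=0.0):
    """Return the (x, y) intensity-weighted centroid of a 2D pixel window.

    window: list of rows (lists of floats); x is the column index and
    y is the row index. background: hypothetical constant level that is
    subtracted before weighting; residuals below zero are clipped to zero.
    """
    total = 0.0
    sx = 0.0
    sy = 0.0
    for y, row in enumerate(window):
        for x, value in enumerate(row):
            w = max(value - background, 0.0)  # ignore pixels at or below background
            total += w
            sx += w * x
            sy += w * y
    if total == 0.0:
        raise ValueError("window contains no signal above background")
    return sx / total, sy / total


# Symmetric 3x3 star profile sitting on a constant background of 10
star = [
    [11.0, 12.0, 11.0],
    [12.0, 20.0, 12.0],
    [11.0, 12.0, 11.0],
]
print(weighted_centroid(star, background=10.0))
```

For the symmetric profile the centroid falls on the central pixel; real pipelines typically refine this with a fitted point-spread function or iterative windowing.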

3. Star Image Registration

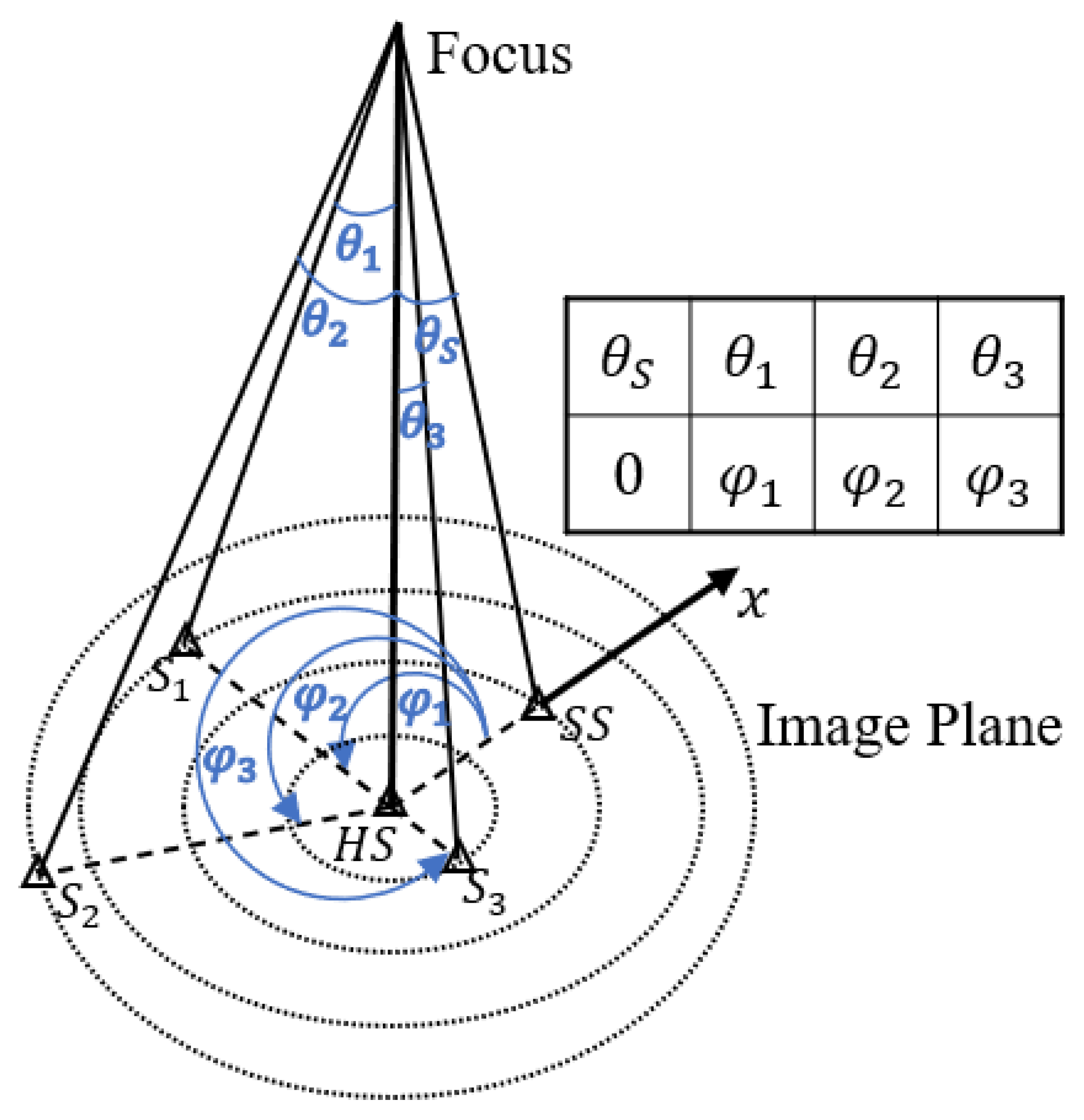

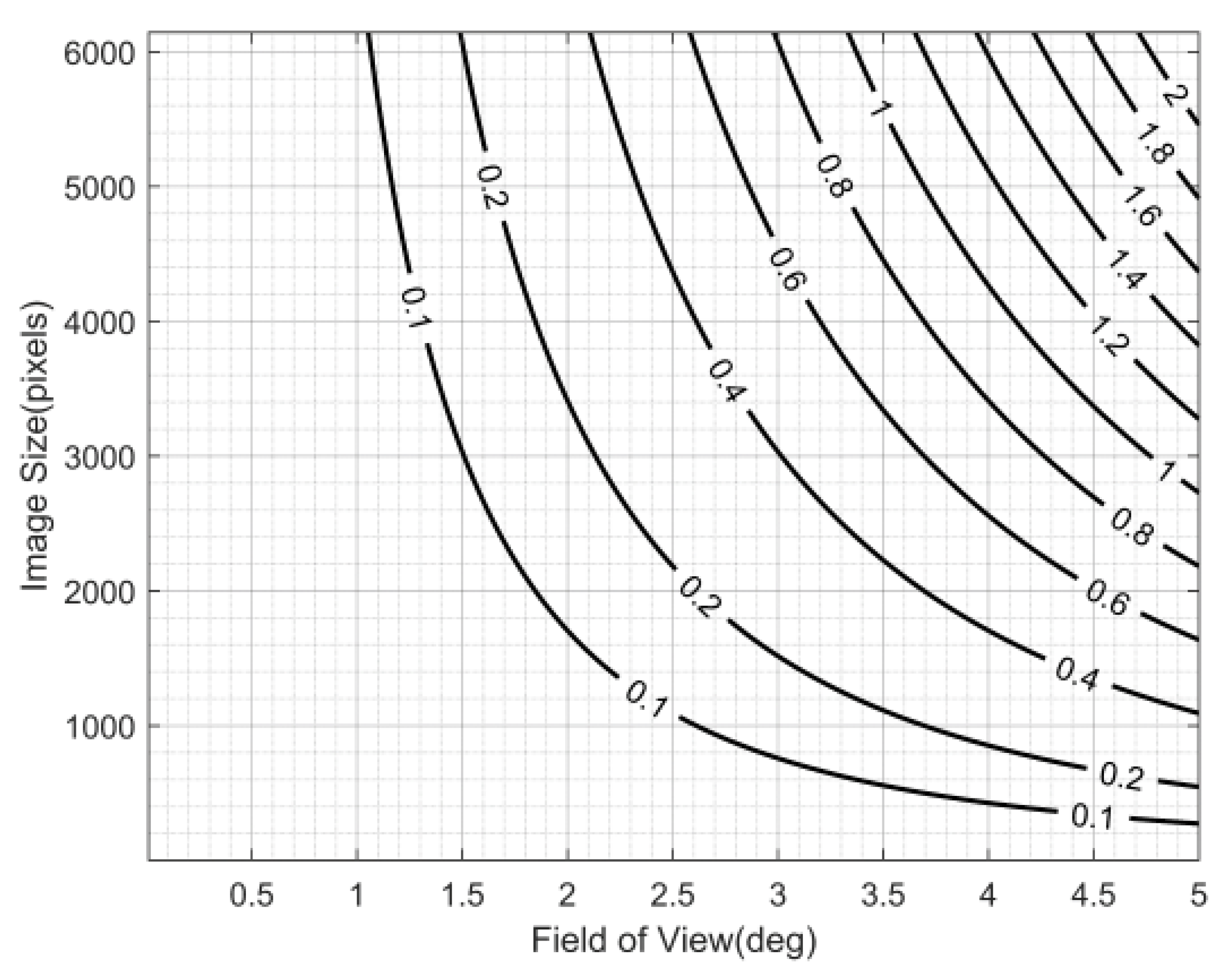

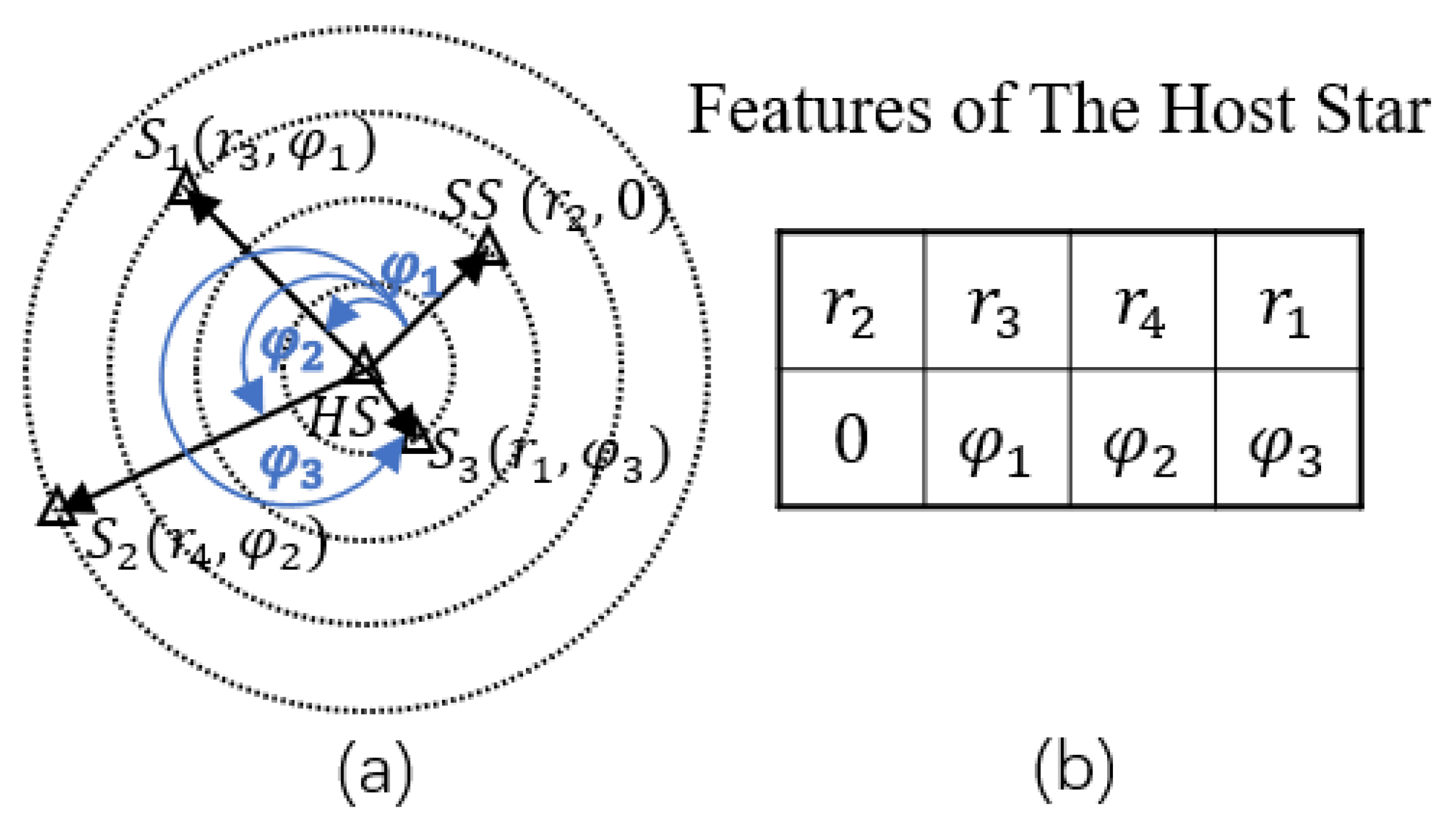

3.1. Matching Features

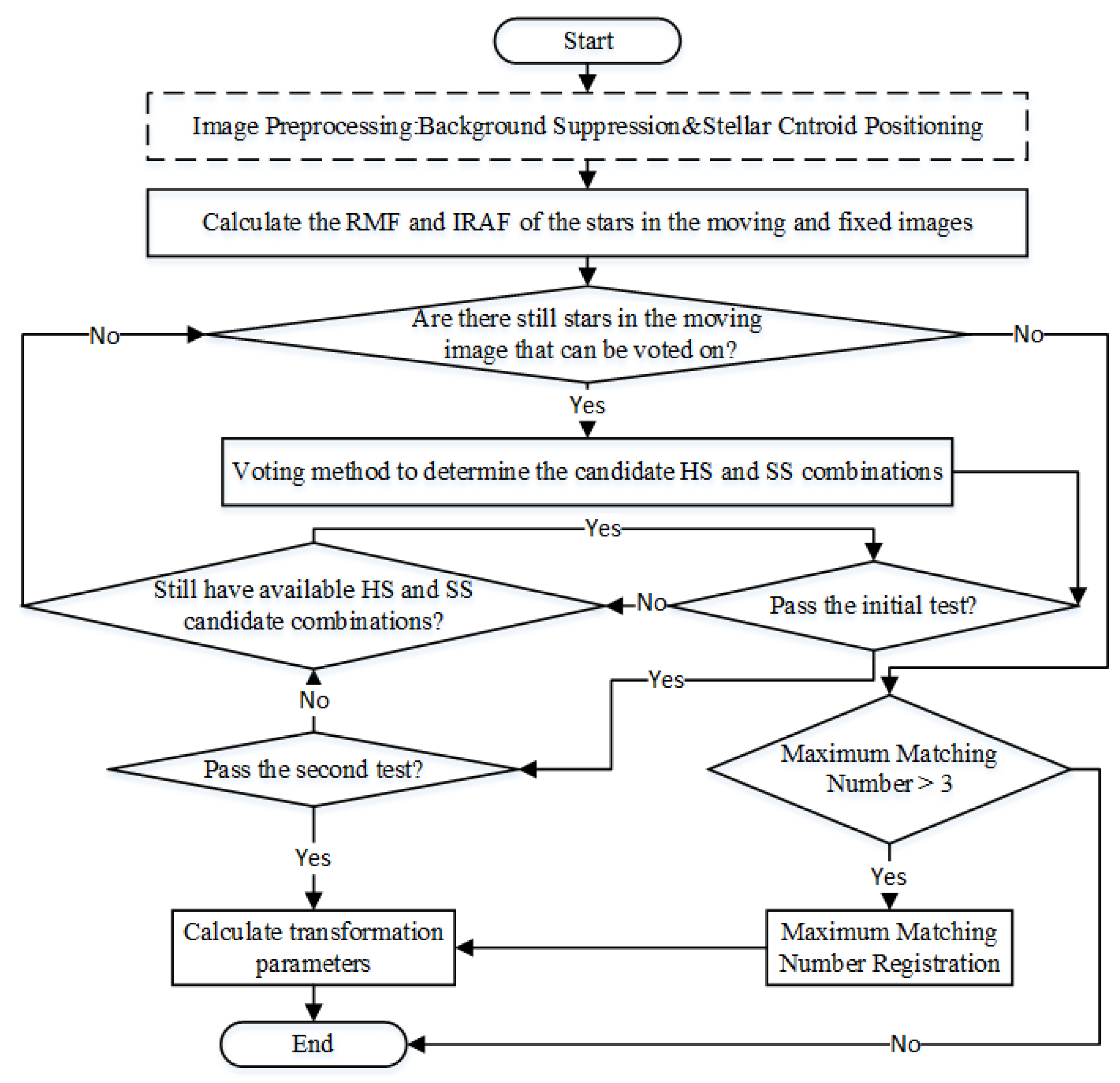
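The definitions of the radial module and rotation angle features are not reproduced in this text. Assuming, for illustration only, that the radial module of a neighbouring star is the length of the vector from a chosen reference star to that neighbour, and that the rotation angle is the polar angle of the same vector, the sketch below shows the property that makes such features attractive for registration: the moduli are unchanged by a rigid rotation plus translation, while every angle shifts by the same rotation. The helpers `radial_features` and `rigid` are hypothetical and are not the authors' definitions.

```python
import math


def radial_features(reference, neighbours):
    """Return (modulus, angle) for each vector from reference to a neighbour.

    Hypothetical helper: modulus is the Euclidean length and angle is the
    atan2 polar angle in radians, in (-pi, pi].
    """
    rx, ry = reference
    feats = []
    for x, y in neighbours:
        dx, dy = x - rx, y - ry
        feats.append((math.hypot(dx, dy), math.atan2(dy, dx)))
    return feats


def rigid(point, theta, tx, ty):
    """Rotate a point by theta radians about the origin, then translate it."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y + tx, s * x + c * y + ty)


ref = (10.0, 10.0)
stars = [(13.0, 14.0), (4.0, 10.0), (10.0, 2.0)]
theta = math.radians(30.0)
ref2 = rigid(ref, theta, 5.0, -3.0)
stars2 = [rigid(p, theta, 5.0, -3.0) for p in stars]

for (m1, a1), (m2, a2) in zip(radial_features(ref, stars), radial_features(ref2, stars2)):
    # The modulus is preserved; the angle difference equals theta modulo 2*pi
    d = (a2 - a1) % (2 * math.pi)
    print(round(m1, 6), round(m2, 6), round(math.degrees(d), 6))
```

Because the angle shift is common to all neighbours, angle differences between neighbours are rotation invariant as well, which is one reason angle-based features can complement distance-based ones.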

3.2. Registration Process

3.2.1. Calculating RMF and Initial RAF

3.2.2. Determining the Candidate HS and SS

3.2.3. Verifying and Obtaining Matching Star Pairs

3.2.4. Maximum Matching Number Registration

3.2.5. Calculating the Transformation Parameters
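The body of this subsection is not included here. As a generic sketch, assuming the transformation between two star images is modelled as a rotation plus a translation (no scale change) and that matched star pairs are already available, the least-squares rotation angle and translation have a closed form in two dimensions (the 2D case of Procrustes alignment). The name `estimate_rigid` is hypothetical, and the authors' parameterization may differ.

```python
import math


def estimate_rigid(src, dst):
    """Least-squares rotation angle and translation mapping src onto dst.

    src, dst: equal-length lists of matched (x, y) star centroids.
    Returns (theta, tx, ty) such that dst ~= R(theta) * src + (tx, ty).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched pairs of equal length")
    sxm = sum(p[0] for p in src) / n
    sym = sum(p[1] for p in src) / n
    dxm = sum(q[0] for q in dst) / n
    dym = sum(q[1] for q in dst) / n
    # Cross terms of the centred point sets determine the optimal angle
    num = 0.0  # sum of (x * v - y * u)
    den = 0.0  # sum of (x * u + y * v)
    for (x, y), (u, v) in zip(src, dst):
        x, y, u, v = x - sxm, y - sym, u - dxm, v - dym
        num += x * v - y * u
        den += x * u + y * v
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid
    tx = dxm - (c * sxm - s * sym)
    ty = dym - (s * sxm + c * sym)
    return theta, tx, ty


# Synthetic check: rotate by 12 degrees and shift by (7.5, -3.0)
theta_true = math.radians(12.0)
c0, s0 = math.cos(theta_true), math.sin(theta_true)
src = [(100.0, 50.0), (220.0, 80.0), (150.0, 300.0), (40.0, 210.0)]
dst = [(c0 * x - s0 * y + 7.5, s0 * x + c0 * y - 3.0) for x, y in src]
theta, tx, ty = estimate_rigid(src, dst)
print(round(math.degrees(theta), 6), round(tx, 6), round(ty, 6))
```

With noise-free synthetic pairs the estimate recovers the 12 degree rotation and the (7.5, −3.0) shift; with real centroids the same formula gives the least-squares fit over all matched pairs.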

4. Simulation and Real Data Testing

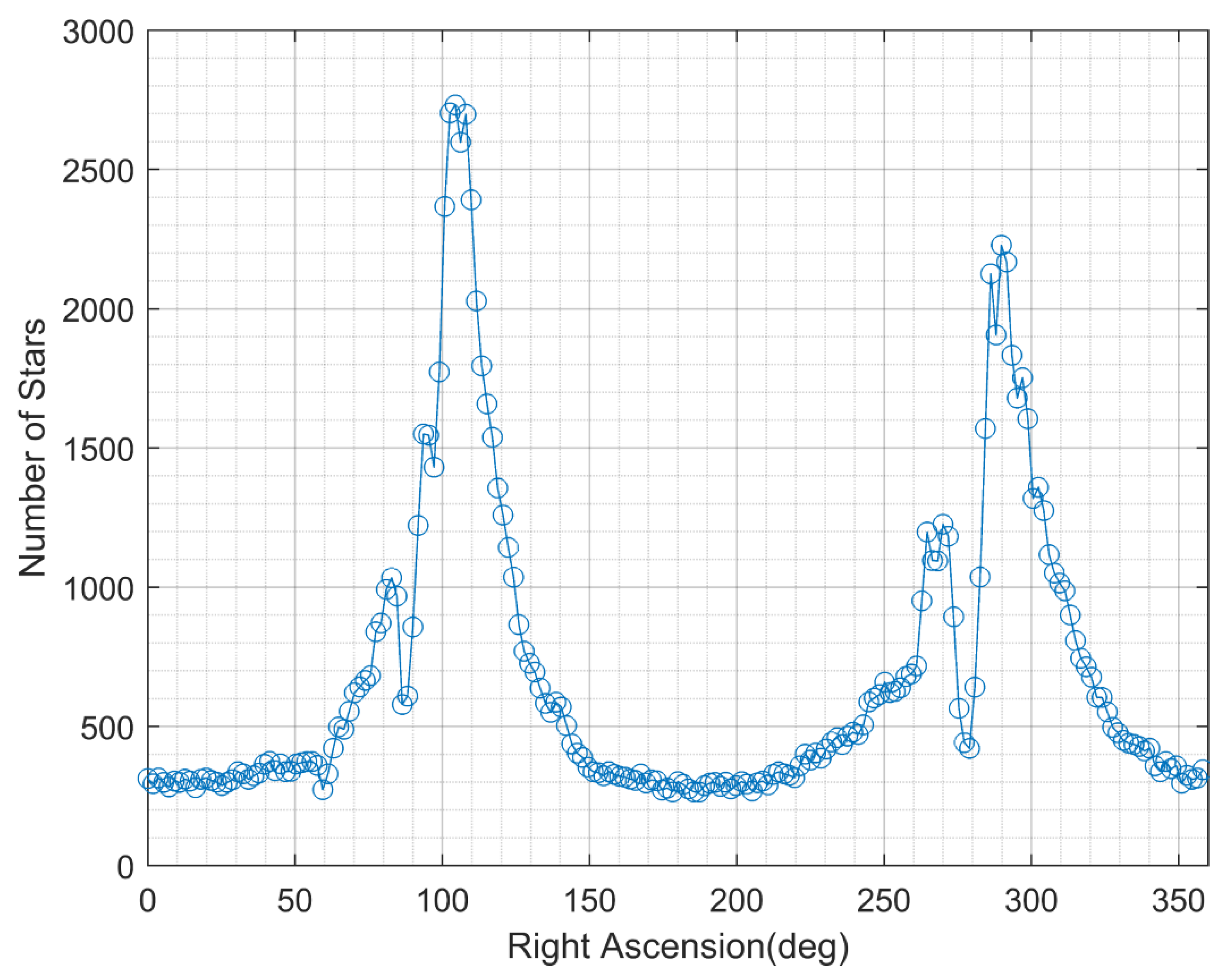

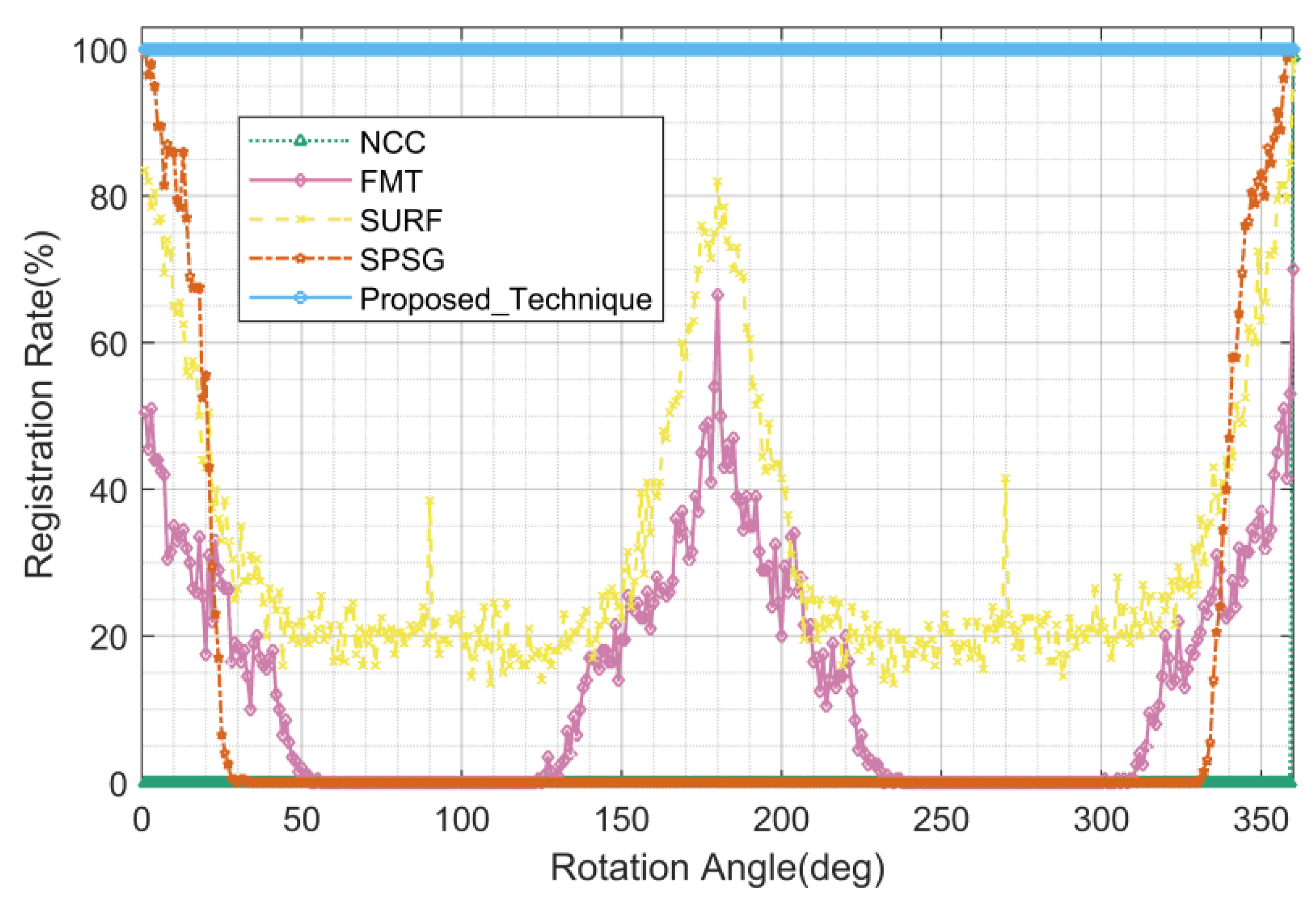

4.1. Simulation Data Testing

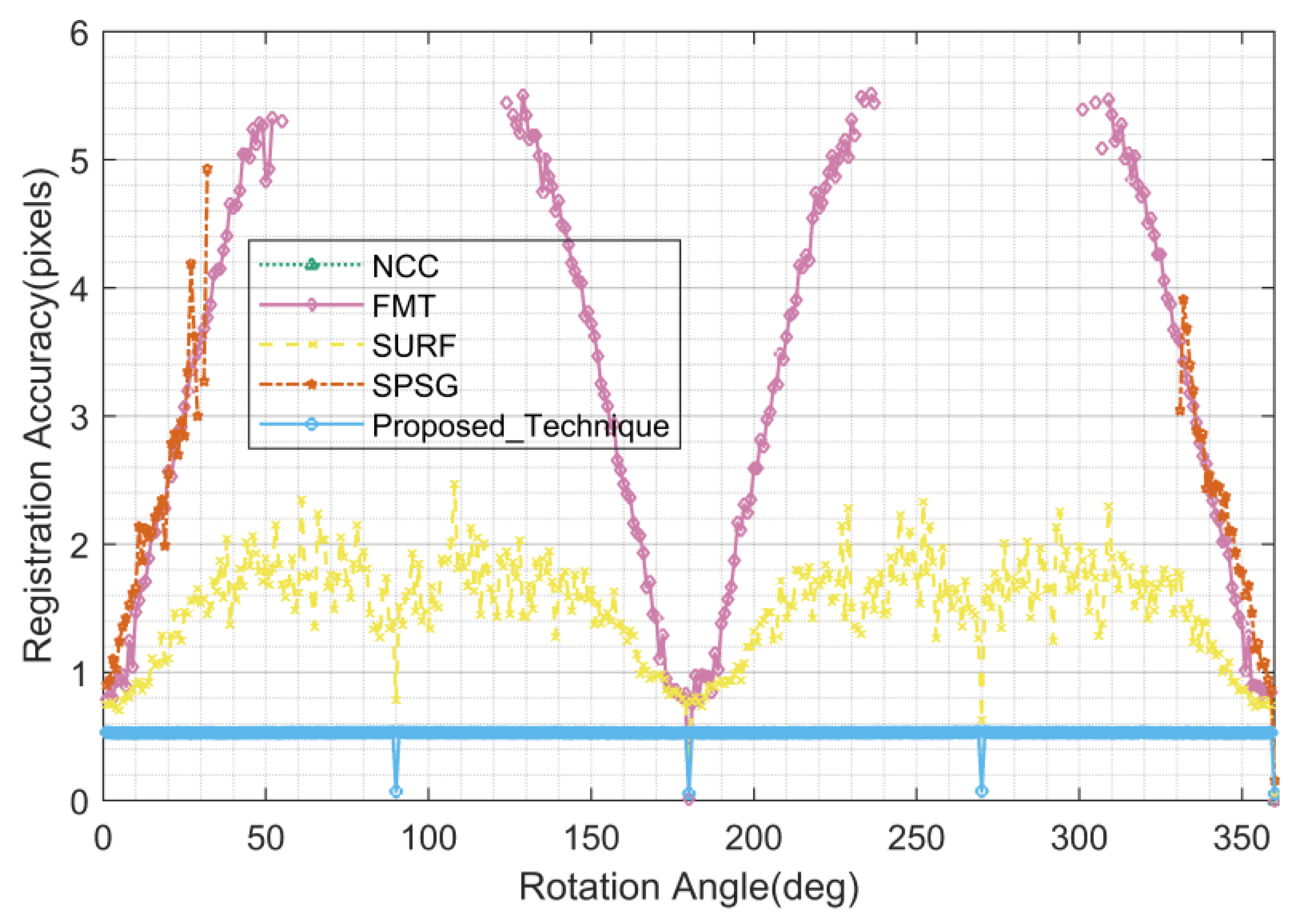

4.1.1. Rotation

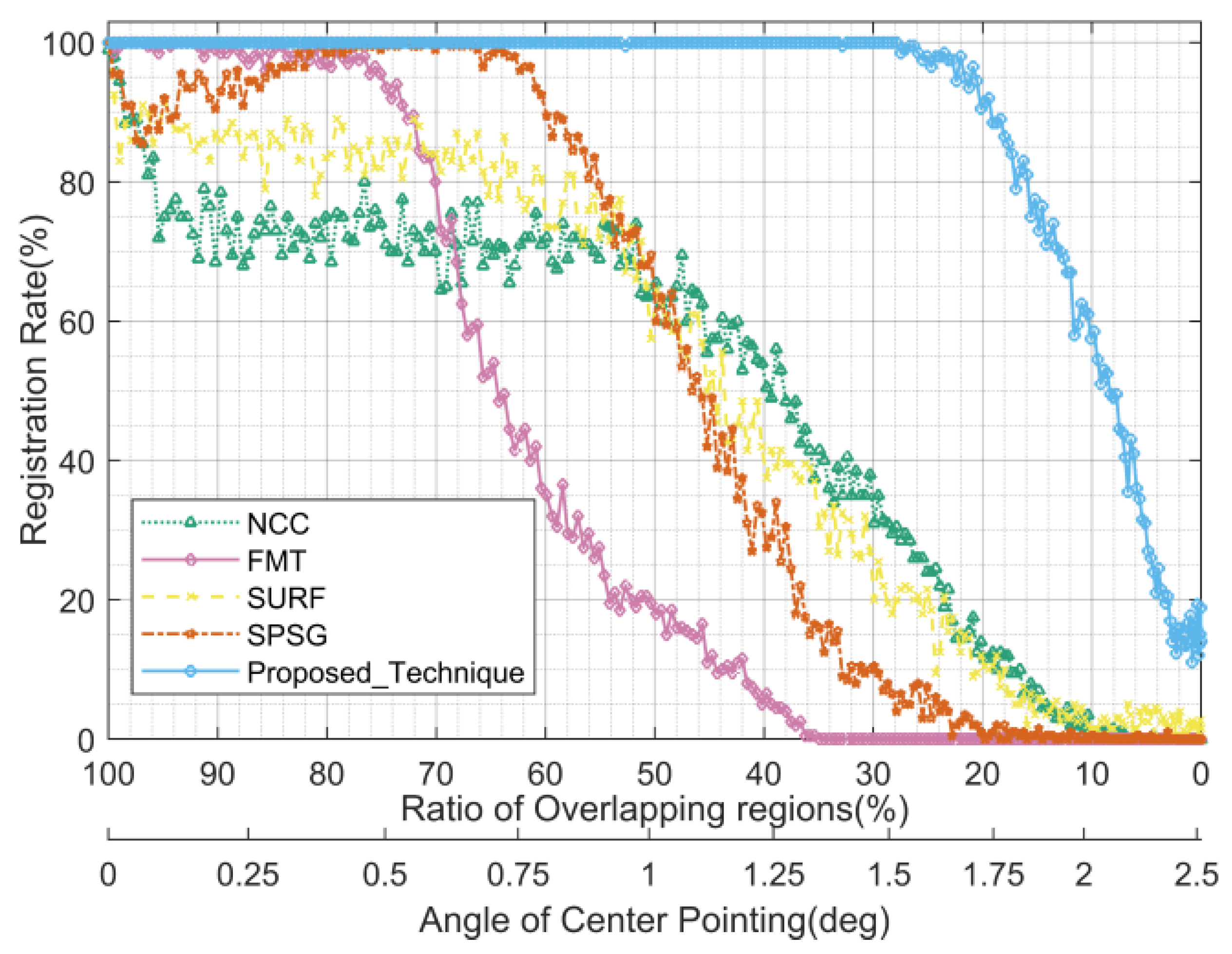

4.1.2. Overlapping Regions

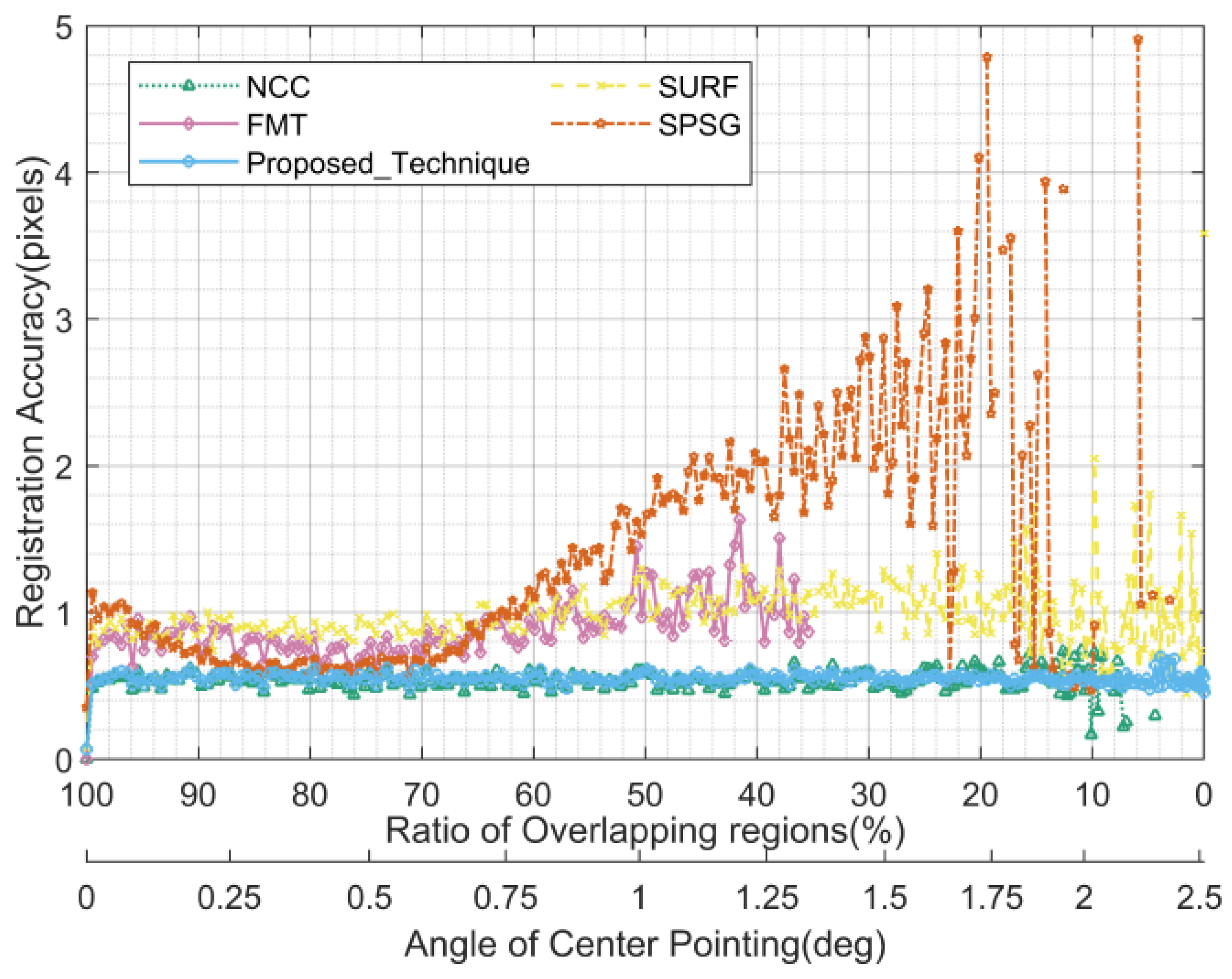

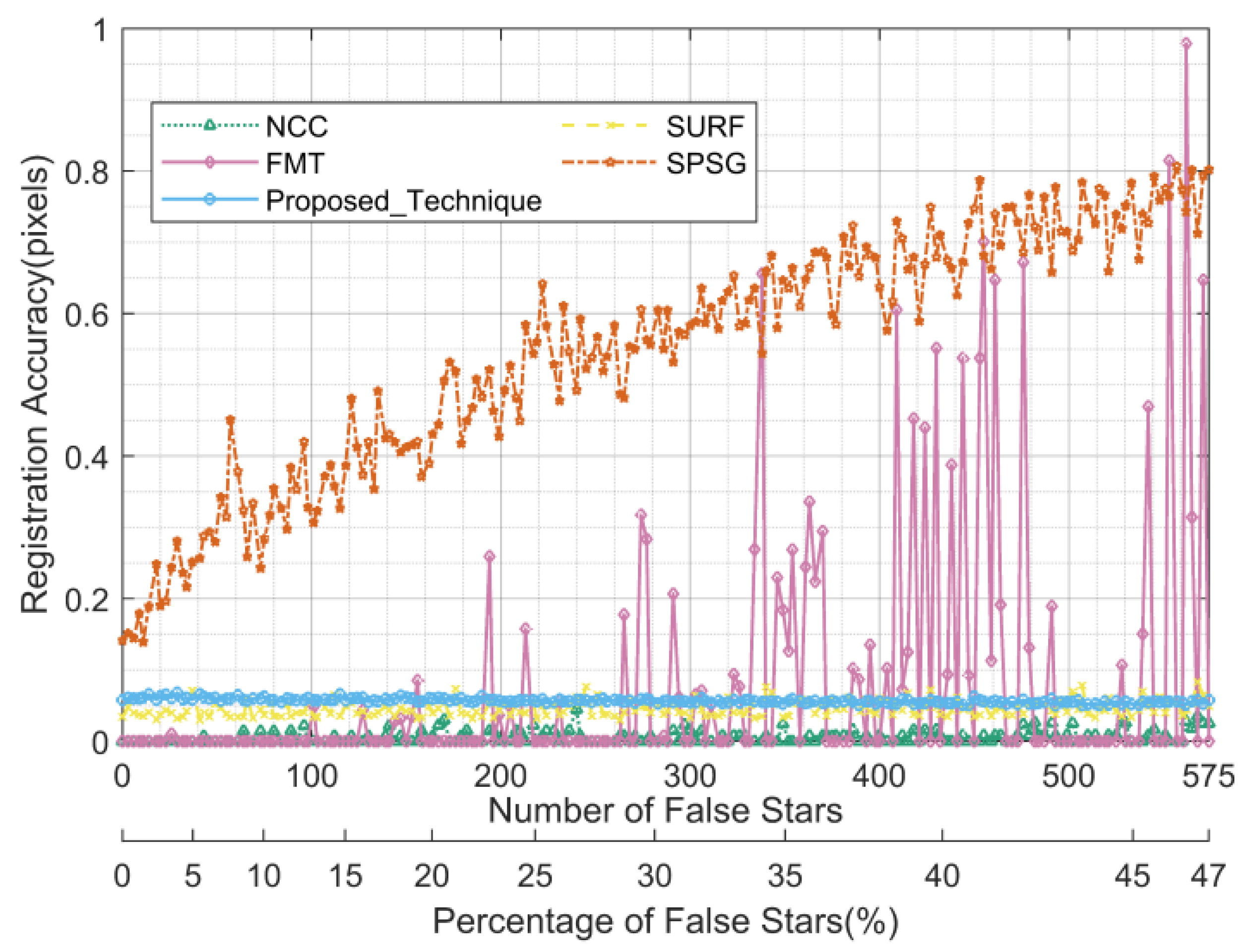

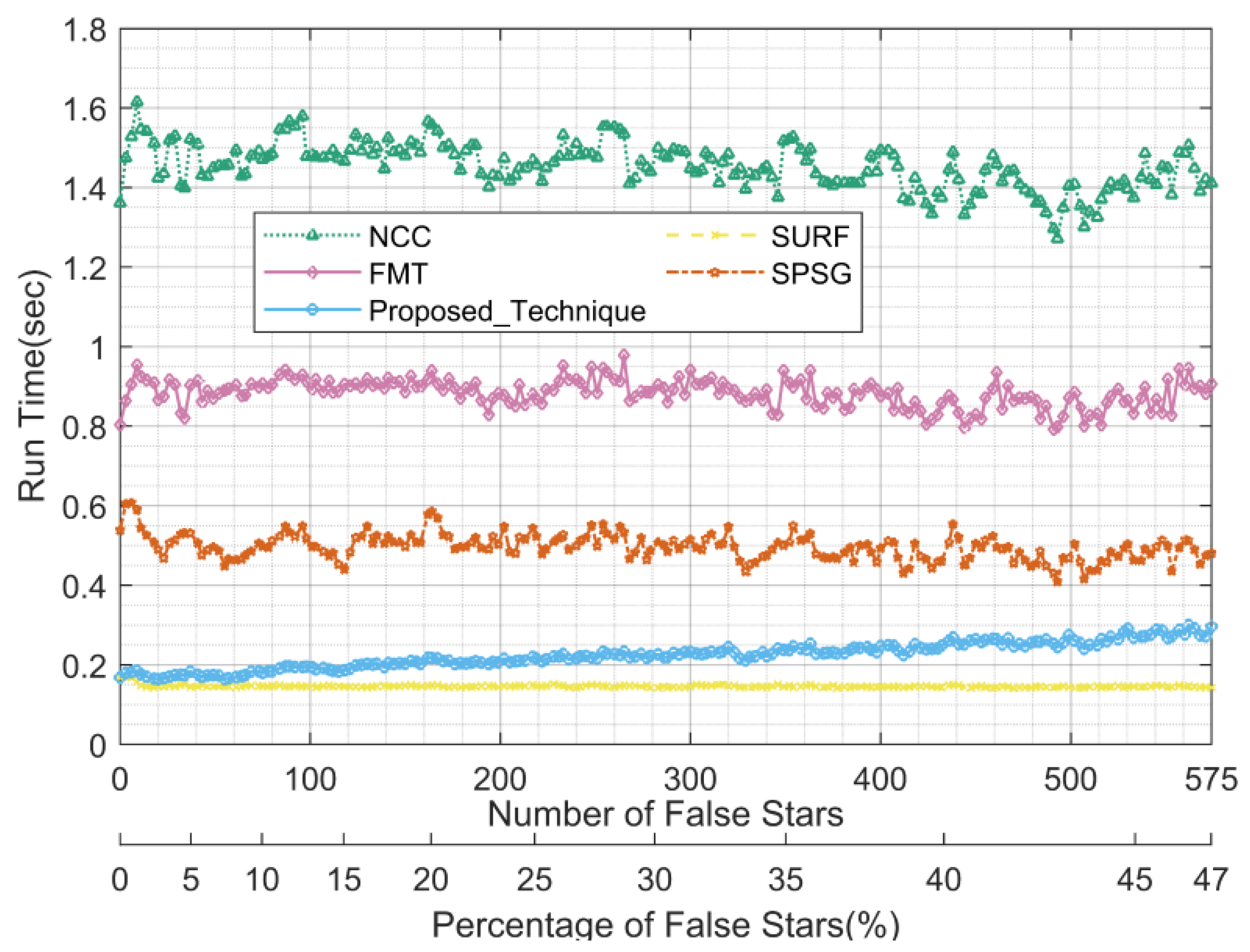

4.1.3. False Stars

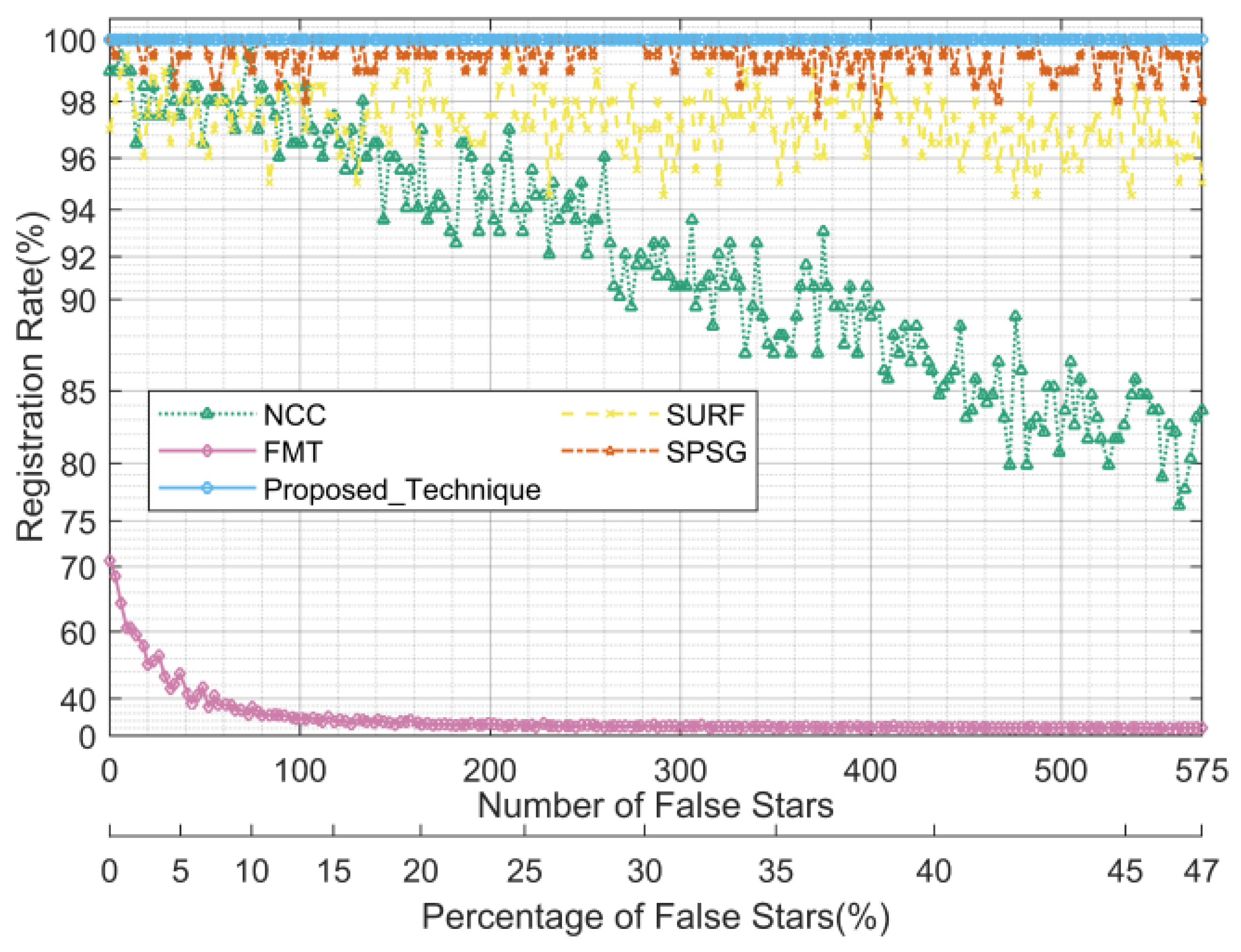

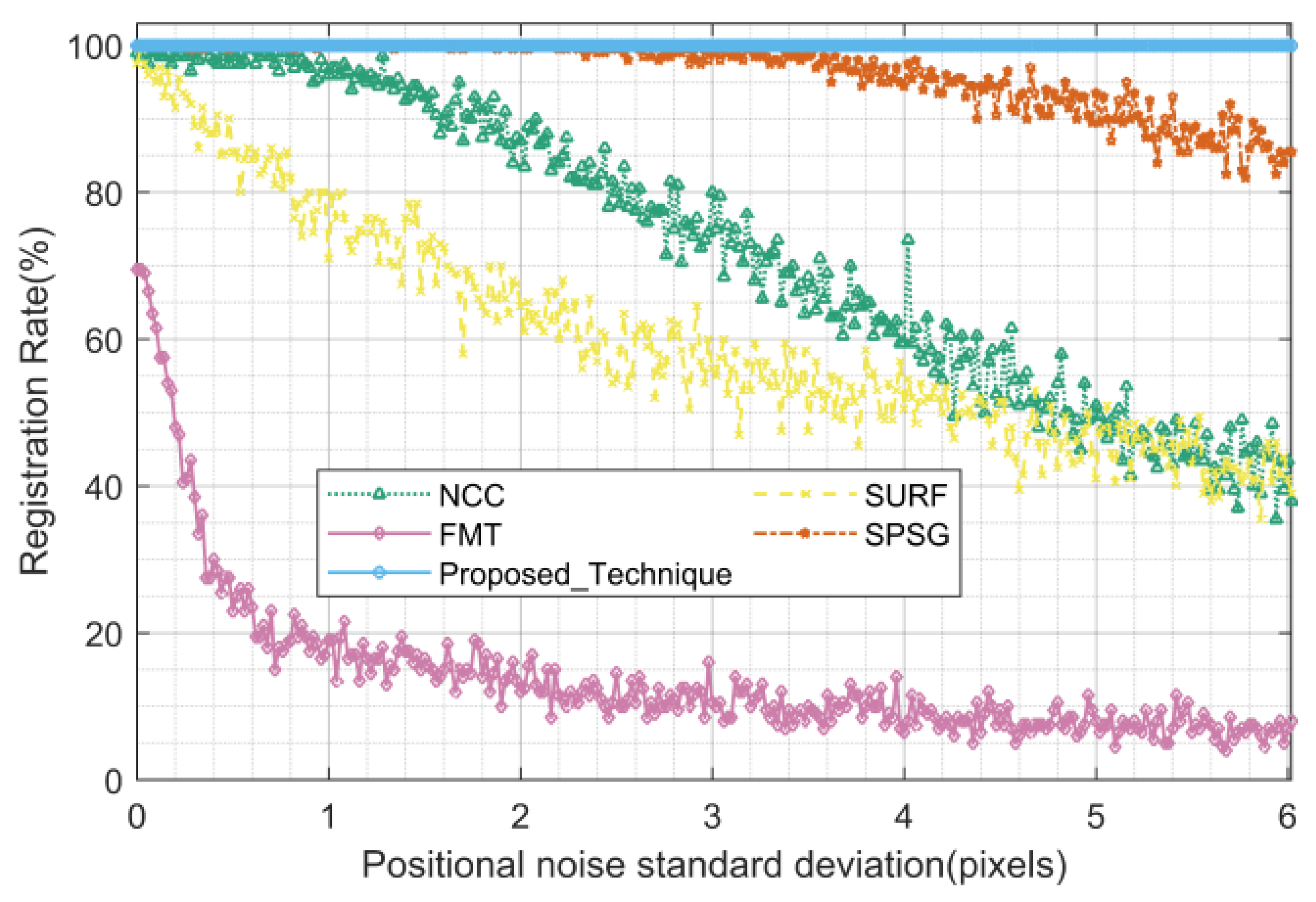

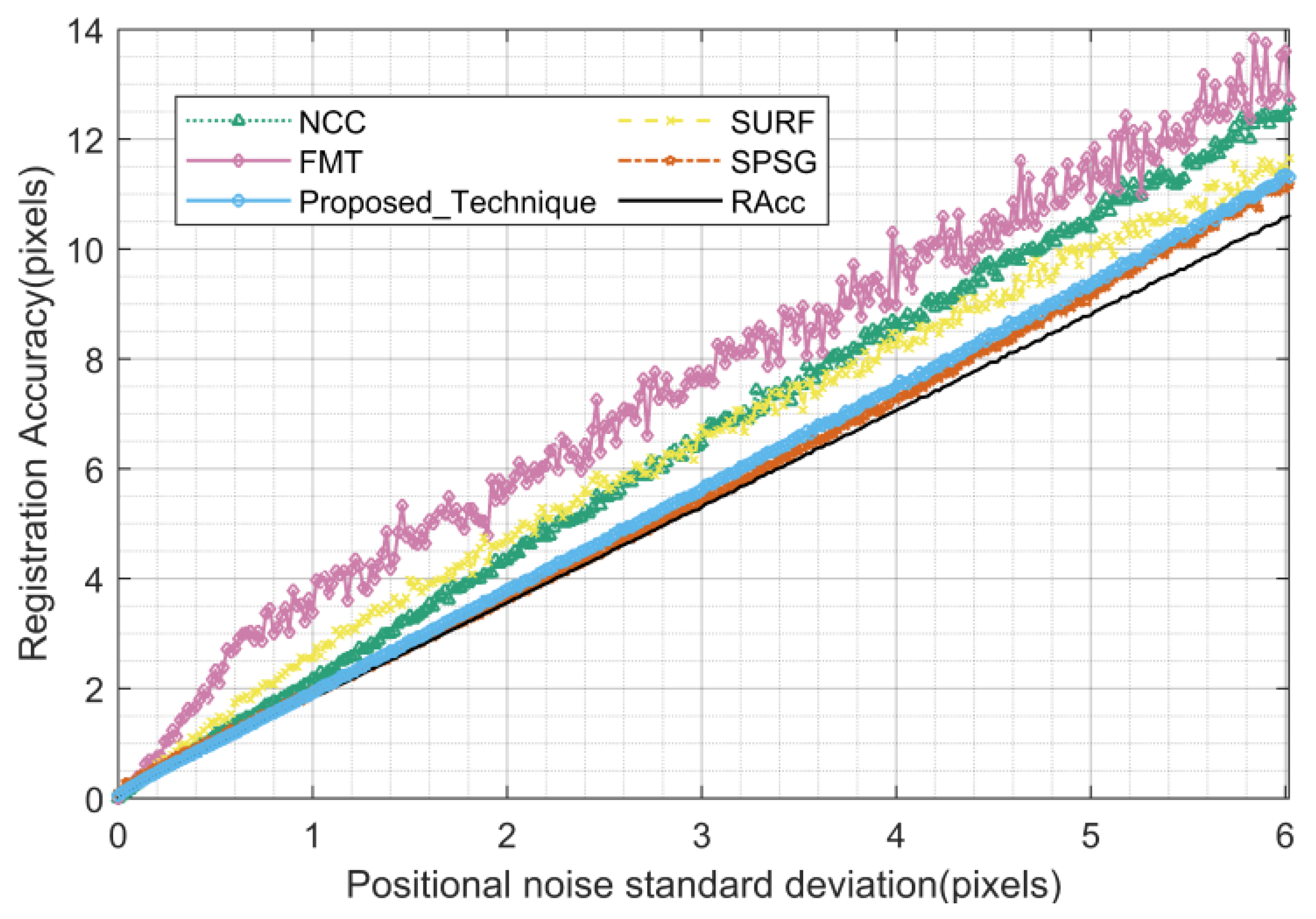

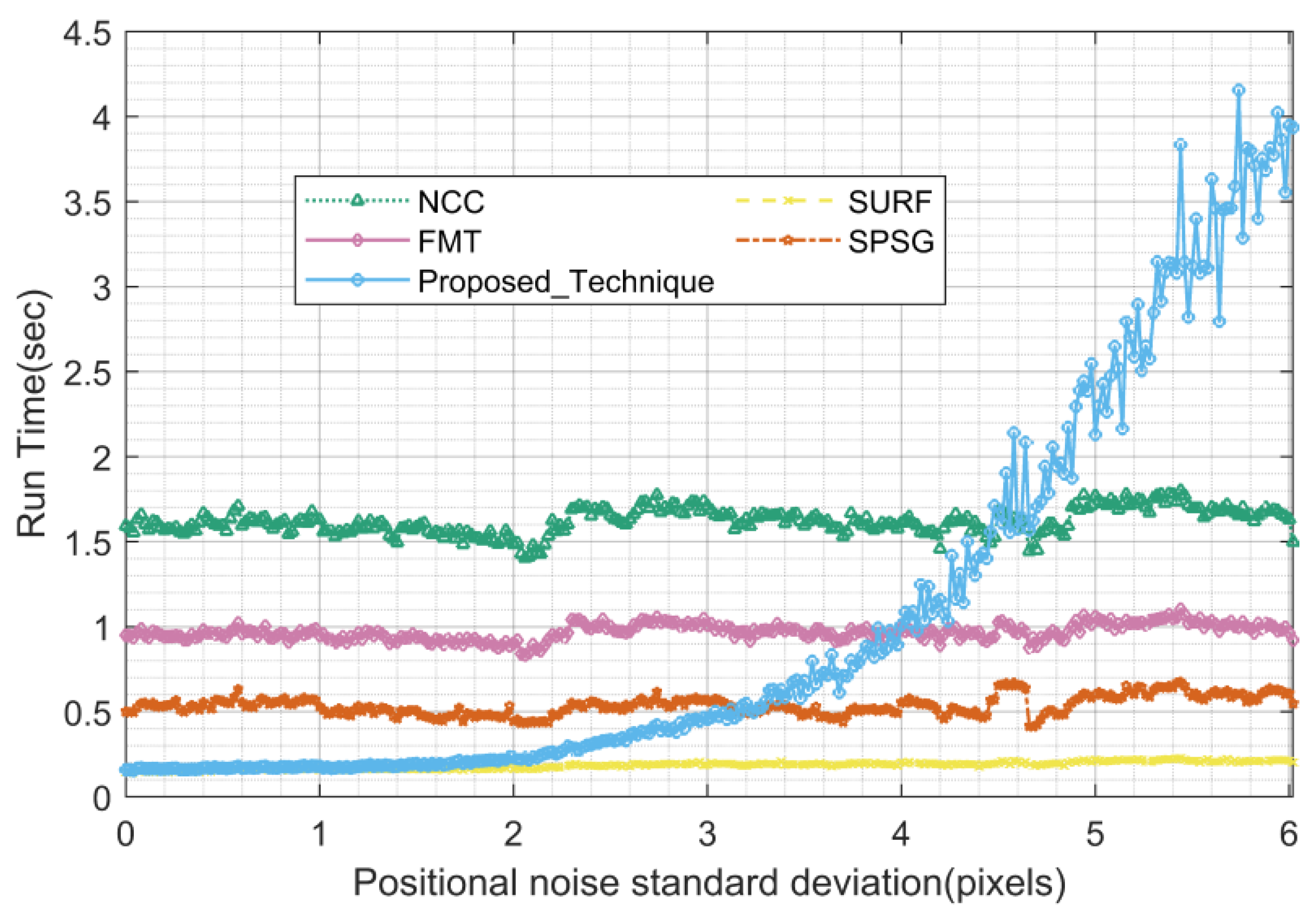

4.1.4. Positional Deviation

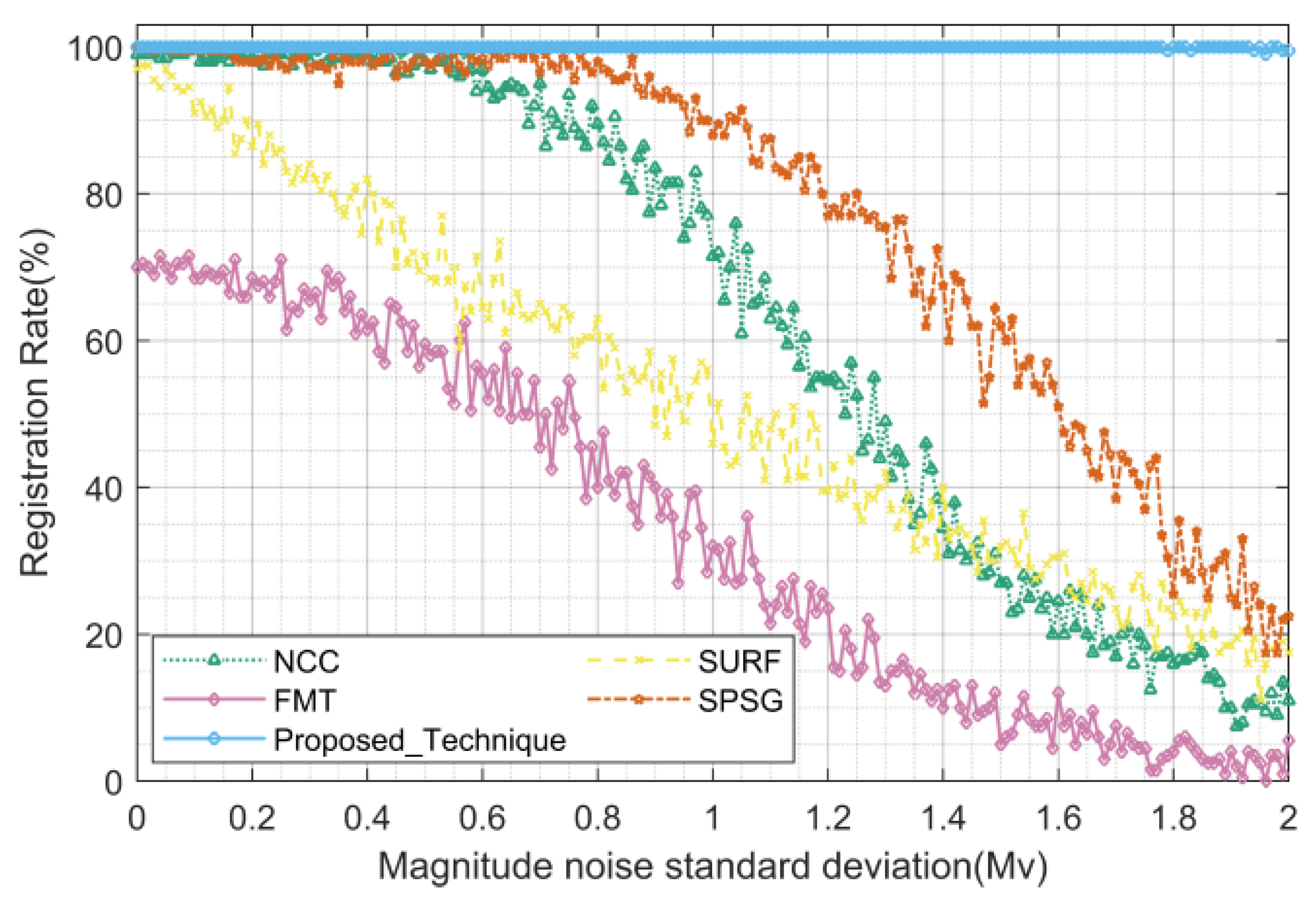

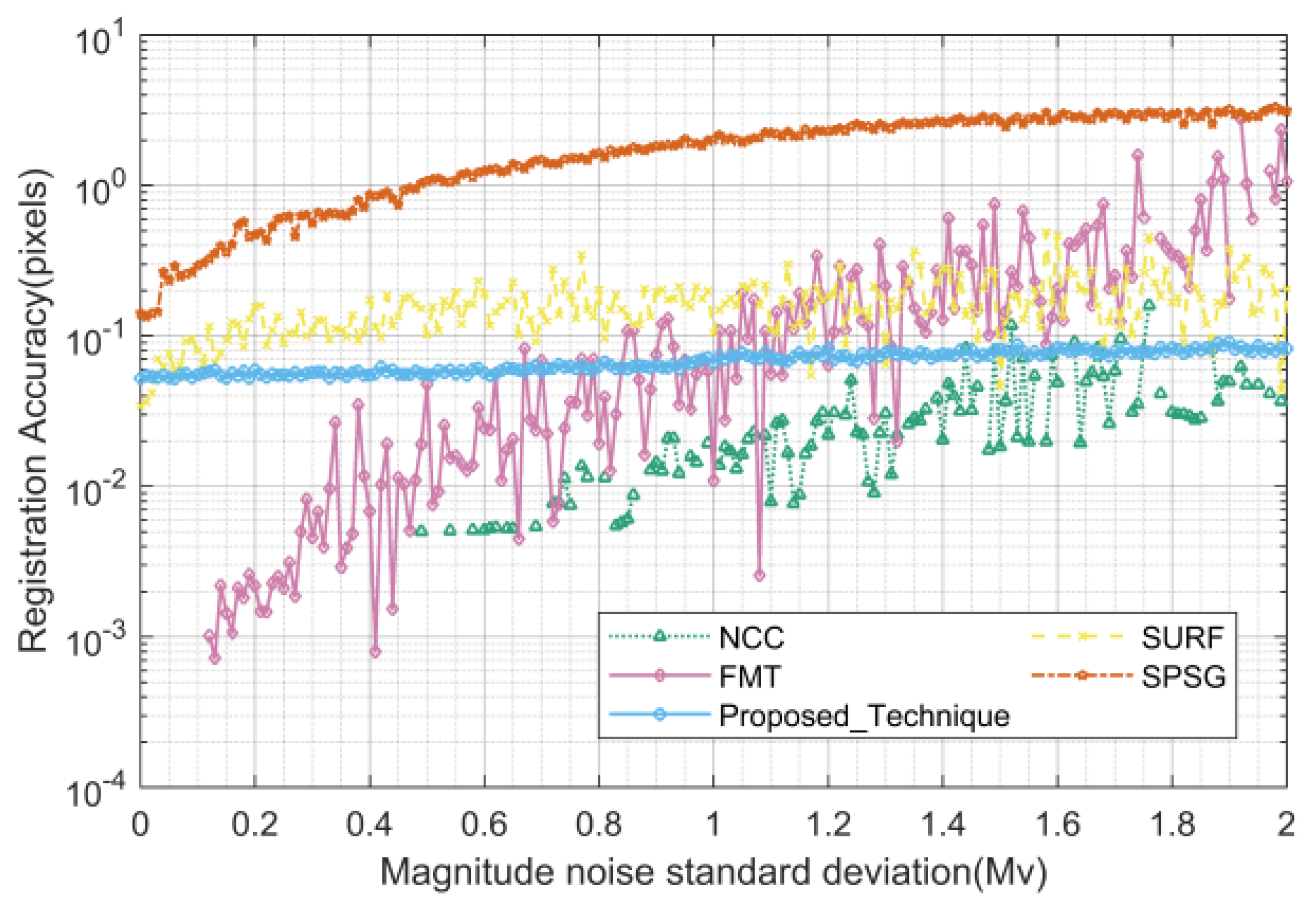

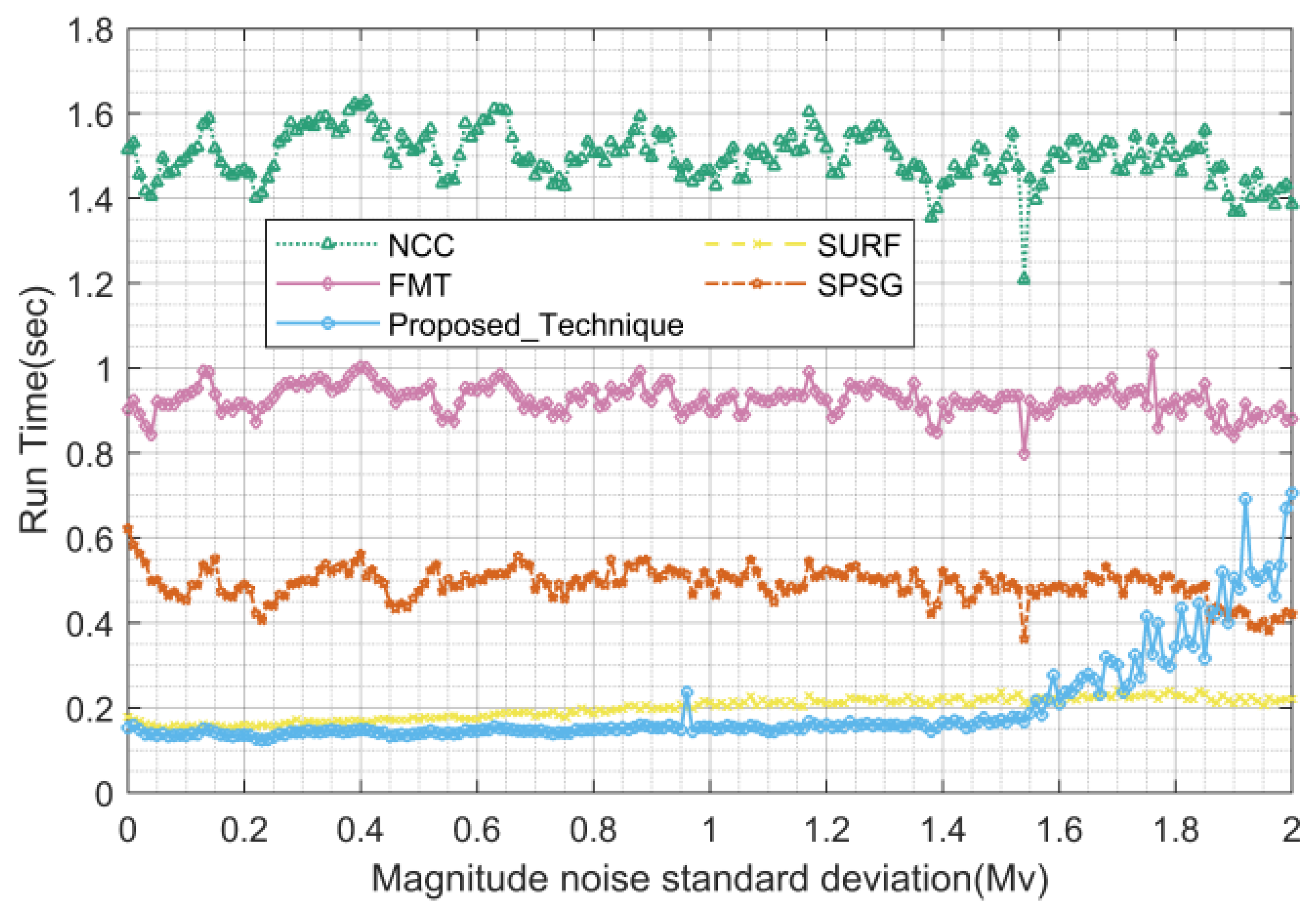

4.1.5. Magnitude Deviation

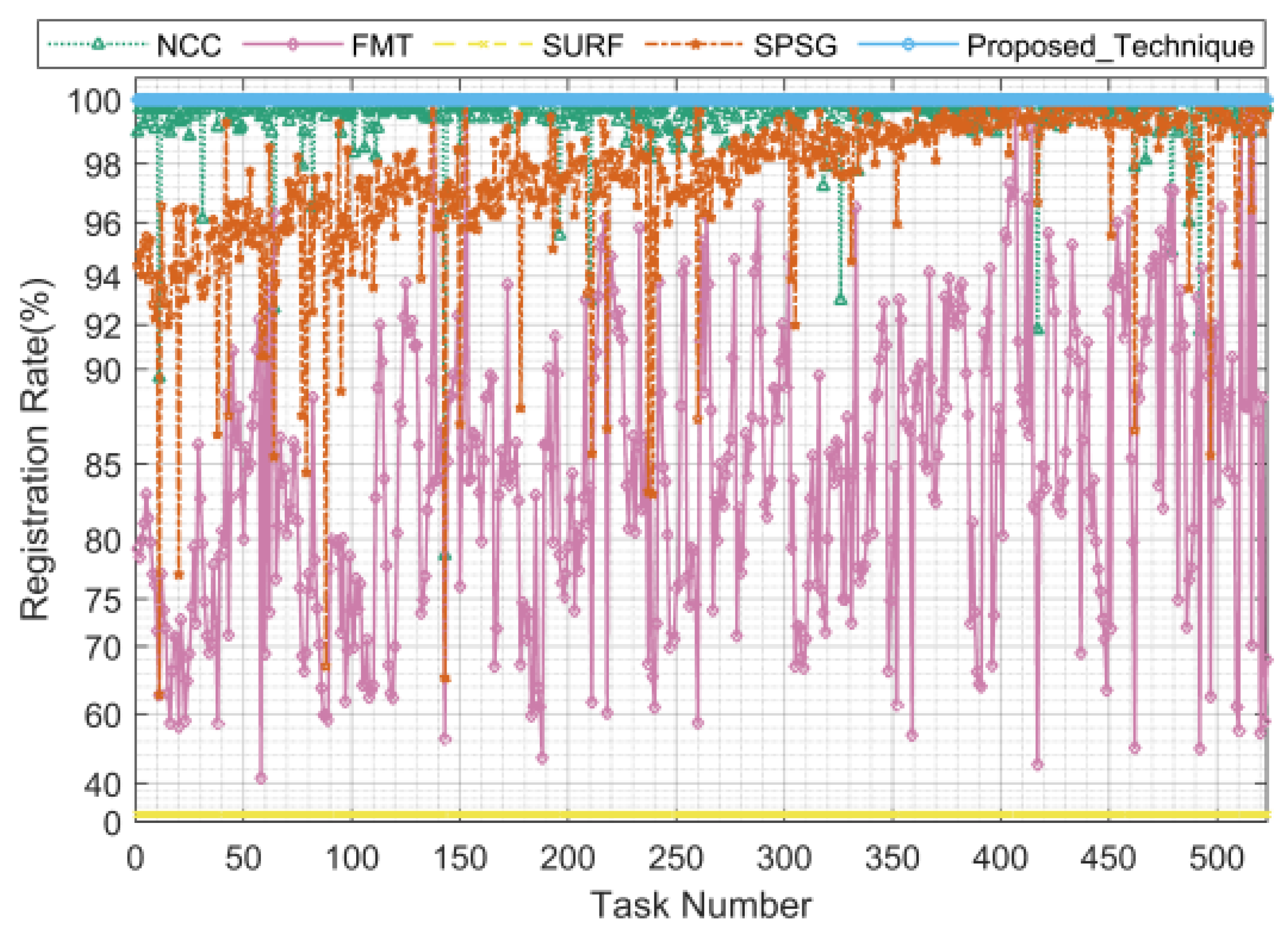

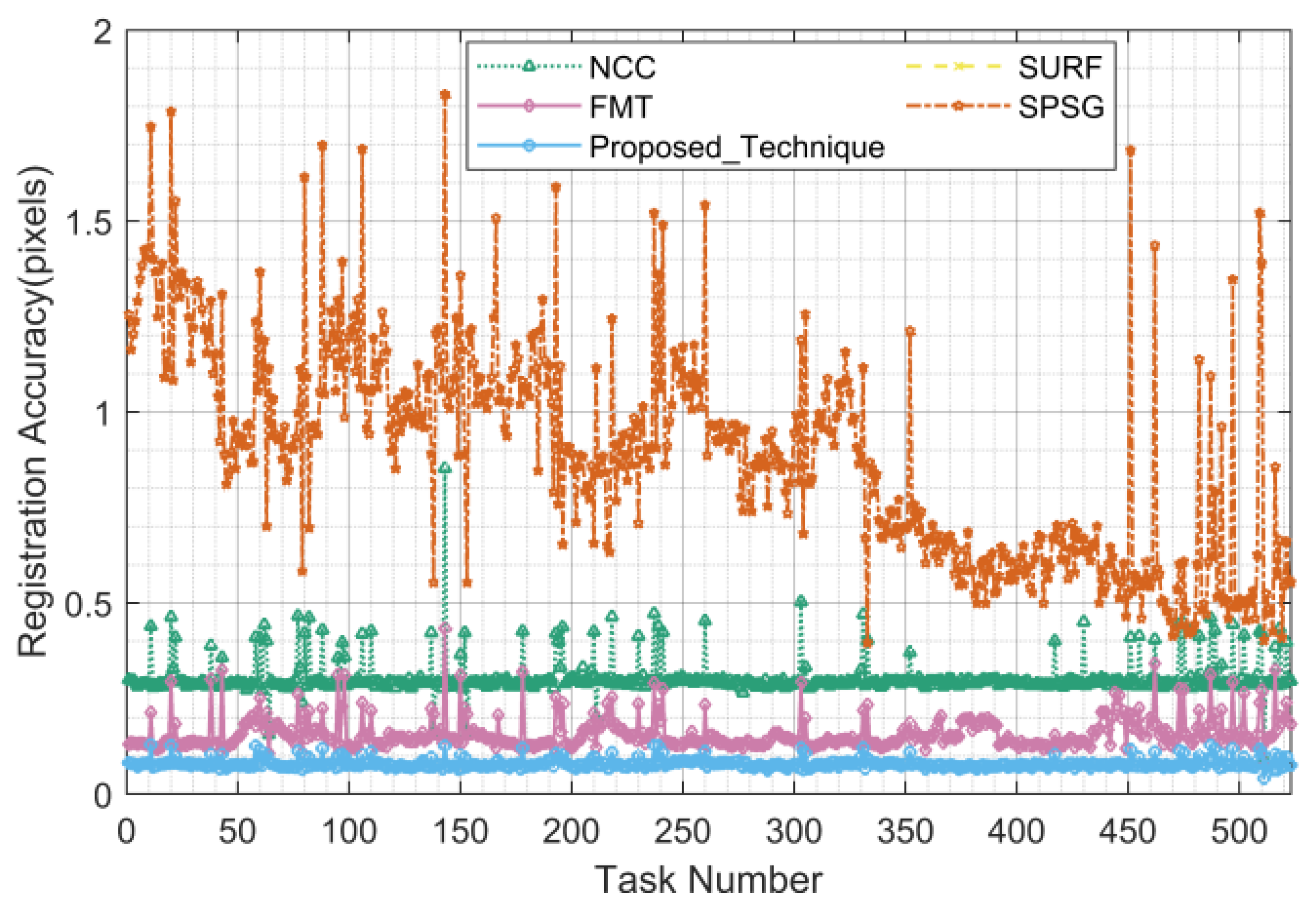

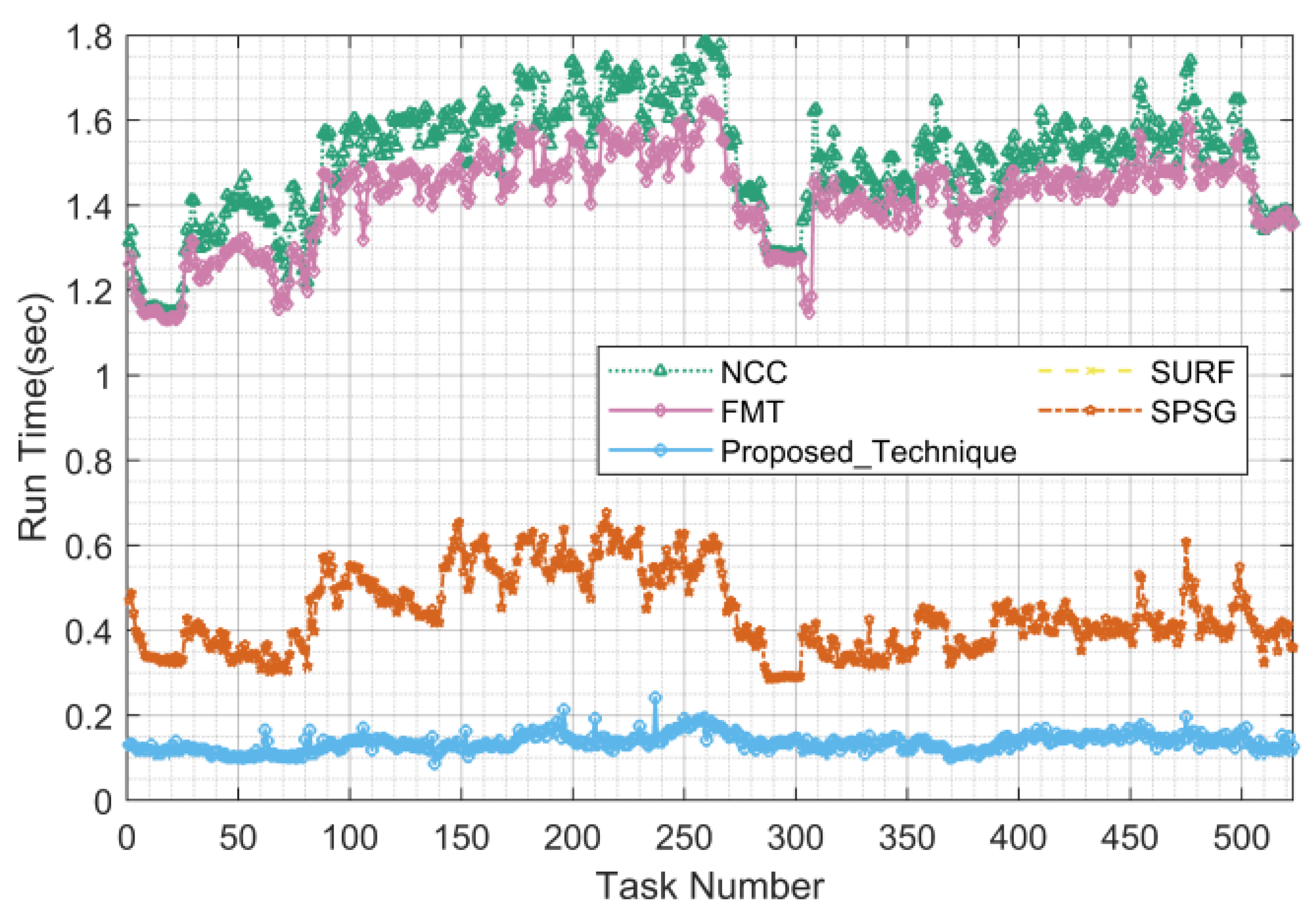

4.2. Real Data Testing

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


|  |  |
|---|---|
| 160.10 + 6.25 | −1.80 + 5.04 |
| 1.64 + 2.82 | −1.82 + 3.87 |
| 1.31 + 2.14 | 8.07 + 2.74 |
| 3.43 + 6.04 | −4.02 + 1.96 |
| −2.83 + 3.10 | 1.51 + 3.61 |

| Method | Rotate | OLR | FalseStar | PosDev | MagDev | Real | Score |
|---|---|---|---|---|---|---|---|
| NCC | 0.25 | 36.56 | 81.72 | 59.64 | 56.98 | 72.40 | 51.26 |
| FMT | 4.36 | 23.64 | 11.55 | 10.10 | 30.93 | 69.84 | 25.07 |
| SURF | 19.95 | 34.84 | 94.06 | 53.82 | 47.64 | 0.00 | 41.72 |
| SPSG | 4.86 | 32.88 | 61.83 | 88.78 | 26.45 | 59.37 | 45.69 |
| PT | 99.13 | 78.94 | 94.19 | 93.44 | 94.18 | 99.91 | 93.30 |
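In every row of the comparison table, the Score column matches the arithmetic mean of the six per-test values to within rounding; for NCC, (0.25 + 36.56 + 81.72 + 59.64 + 56.98 + 72.40) / 6 = 307.55 / 6 ≈ 51.26. The check below recomputes this for each method. Reading Score as an unweighted mean is an inference from the numbers, not a definition stated in this text.

```python
# Per-test values copied from the table (Rotate, OLR, FalseStar, PosDev,
# MagDev, Real), paired with the reported Score for comparison.
results = {
    "NCC": ([0.25, 36.56, 81.72, 59.64, 56.98, 72.40], 51.26),
    "FMT": ([4.36, 23.64, 11.55, 10.10, 30.93, 69.84], 25.07),
    "SURF": ([19.95, 34.84, 94.06, 53.82, 47.64, 0.00], 41.72),
    "SPSG": ([4.86, 32.88, 61.83, 88.78, 26.45, 59.37], 45.69),
    "PT": ([99.13, 78.94, 94.19, 93.44, 94.18, 99.91], 93.30),
}

for method, (values, reported) in results.items():
    mean = sum(values) / len(values)
    # The tabulated inputs are already rounded, so differences of about
    # 0.005 are expected (for example SPSG, whose exact mean is 45.695)
    print(f"{method}: mean={mean:.3f} reported={reported:.2f} diff={mean - reported:+.3f}")
```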

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, Q.; Liu, L.; Niu, Z.; Li, Y.; Zhang, J.; Wang, Z. A Practical Star Image Registration Algorithm Using Radial Module and Rotation Angle Features. Remote Sens. 2023, 15, 5146. https://doi.org/10.3390/rs15215146
