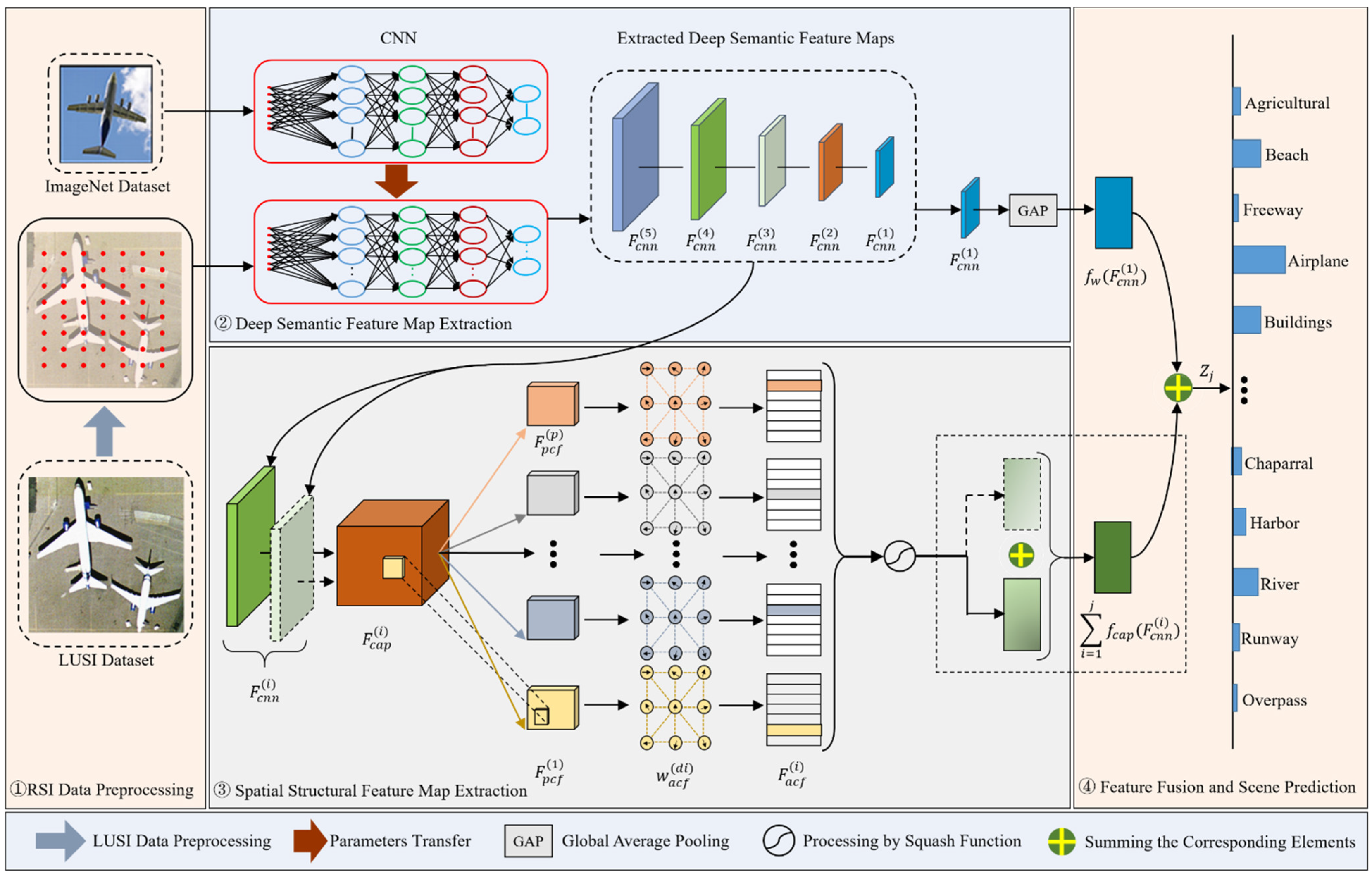

4.1. Overall Framework of HFCC-Net

HFCC-Net is a novel scene classifier with a two-branch network architecture (see

Figure 3), which can classify the deep semantic and spatial structure features extracted by CNN and CapsNet at the same time after fusion, and has an outstanding performance in dealing with LUSC with complex background contents. The overall structure of HFCC-Net consists of three parts: the DSFM extraction branch built by the transfer-learning-based CNN, the SSFM extraction branch built by the graph routing-based CapsNet, and the DFM and prediction module generated by fusing the two branches.

Before inputting the RSI data into the network, the data are first processed to be classified by the model. That is, it mainly consists of three steps: the first step is to crop the image to match the input size of the model (we set the size of the image as 224 × 224); the second step is to decode the image data into tensor data; and the third step is to convert the pixel values into floating point data type and perform normalization.

In HFCC-Net, considering that the training cost of utilizing CapsNet is higher than utilizing CNN, for example, utilizing CapsNet requires more computational resources and training time when dealing with a large number of high-resolution RSIs, in order to achieve the task of model training faster and more accurately, instead of choosing to utilize the original image data directly for the computation, we chose to use the DSFMs extracted by CNNs in the upper branch as the input data to the lower branch of the CapsNet. Inspired by the literature [

27], we integrated the graph structure into the CapsNet in the lower branch, and utilized the multi-head attention graph-based pooling operation to replace the routing operation of the traditional method, which further reduced the training volume of HFCC-Net. Meanwhile, we utilized a transfer learning technique in computing DSFM, using pre-trained models from the ImageNet dataset.

In addition, we designed a new feature fusion algorithm to generate the DFM for improving the utilization efficiency of DSFM and SSFM. Finally, the DFM was fed into the classifier consisting of the SoftMax function to obtain the predicted probabilities.

4.2. Deep Semantic Feature Map Extraction

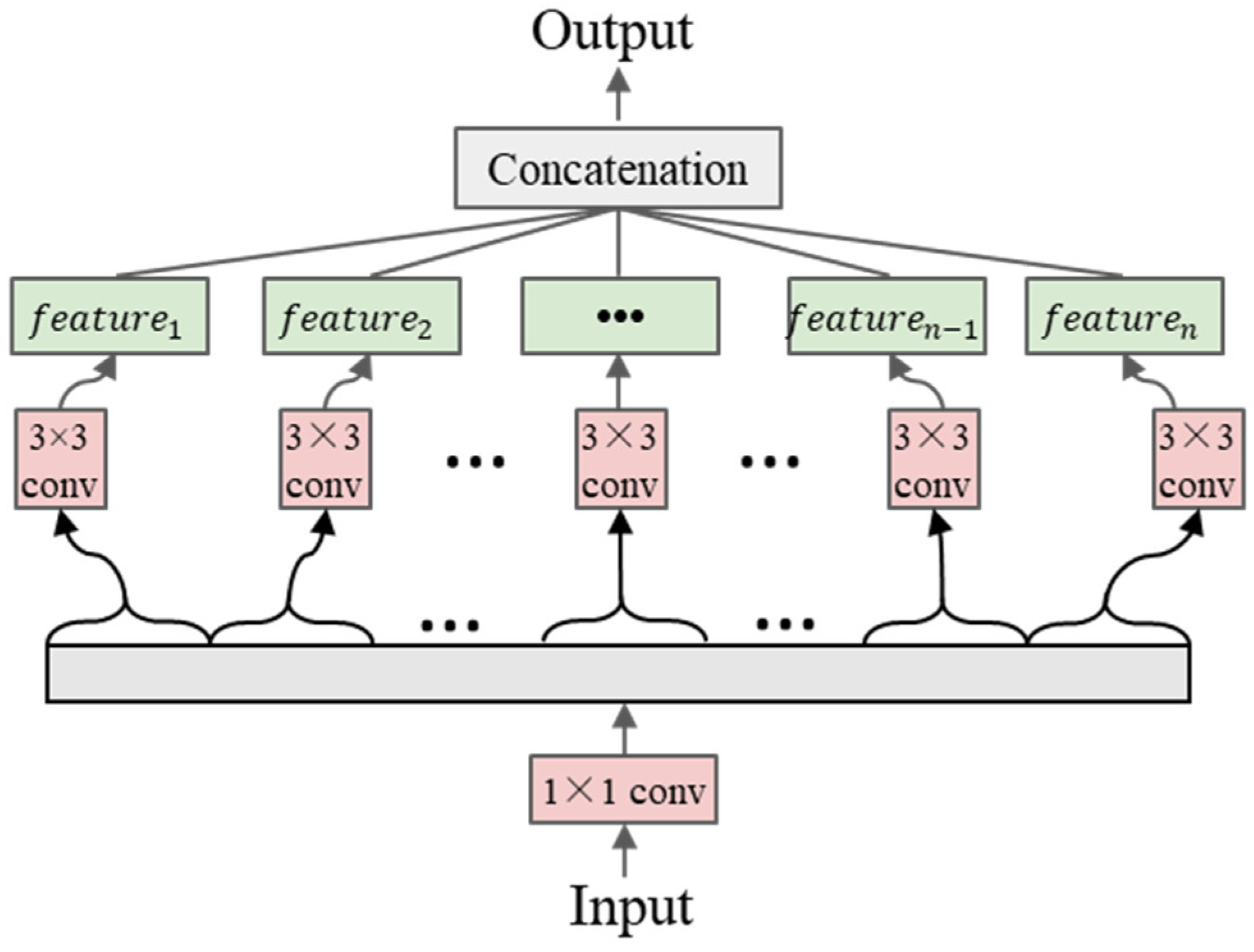

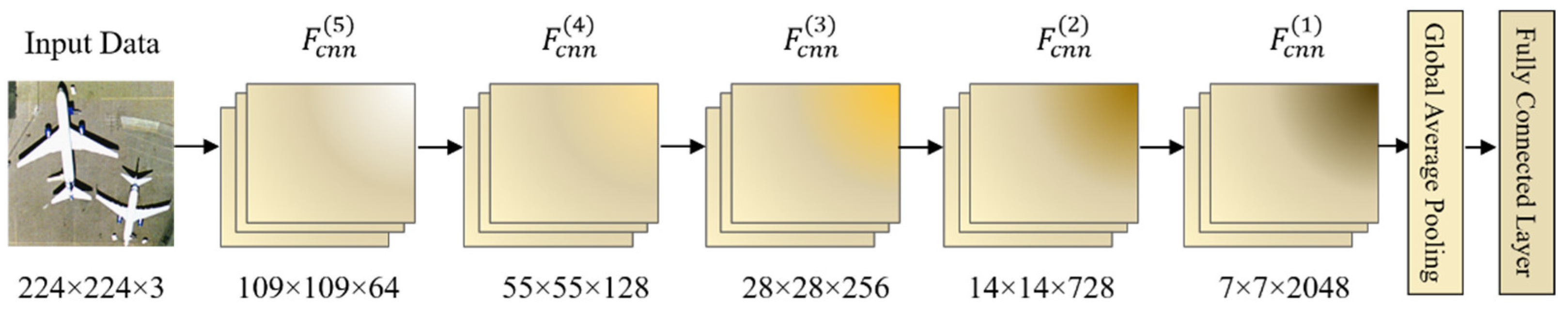

The deep semantic feature extraction module of the upper branch is based on CNN. To extract the rich semantic information of the LUSI data, a deep CNN, which is Xception [

50], was used. As is known, CNN is used to extract semantic information by performing convolutional operations on the data obtained from the input layer. The traditional convolution is that the convolution kernel traverses the input data according to the step size, and each traversal multiplies the input value with the corresponding position of the convolution kernel and then adds the operation, and the feature matrix is obtained after traversal in turn. However, Xception is different. As shown in

Figure 4, it designs the traditional convolution as a deeply separable convolution, i.e., point-by-point convolution and deep convolution. First, a convolution kernel of size 1 × 1 is used for point-by-point convolution to reduce the computational complexity; then, a 3 × 3 deep convolution is applied to disassemble and reorganize the feature map; simultaneously, ReLUs are not added to ensure that data are not corrupted. The whole network structure is stacked by multiple deep separable convolutions.

Compared to the traditional structure, Xception has four advantages: first, it uses cross-layer connections in the deep network to avoid the problem of degradation of the deep network, to make the network easier to train, and to improve the accuracy of the network; second, it uses the maximum pooling layer, which reduces the loss of information and improves the accuracy of the network; third, it uses the depth-separable convolutional layer, which reduces the number of parameters and improves the computational efficiency; and fourth, different sized images are used for training during the training process, which improves the robustness of the network.

Figure 5 shows a schematic of the flow of Xception to extract deep semantic features from the input data. The size of each DSFM is set to

, where

denotes the height,

donates the width of the DSFM, and

denotes the channel dimension of the DSFM. Furthermore, we modified the last two layers of the Xception to use the new global average pooling (GAP) and fully connected layer (FCL).

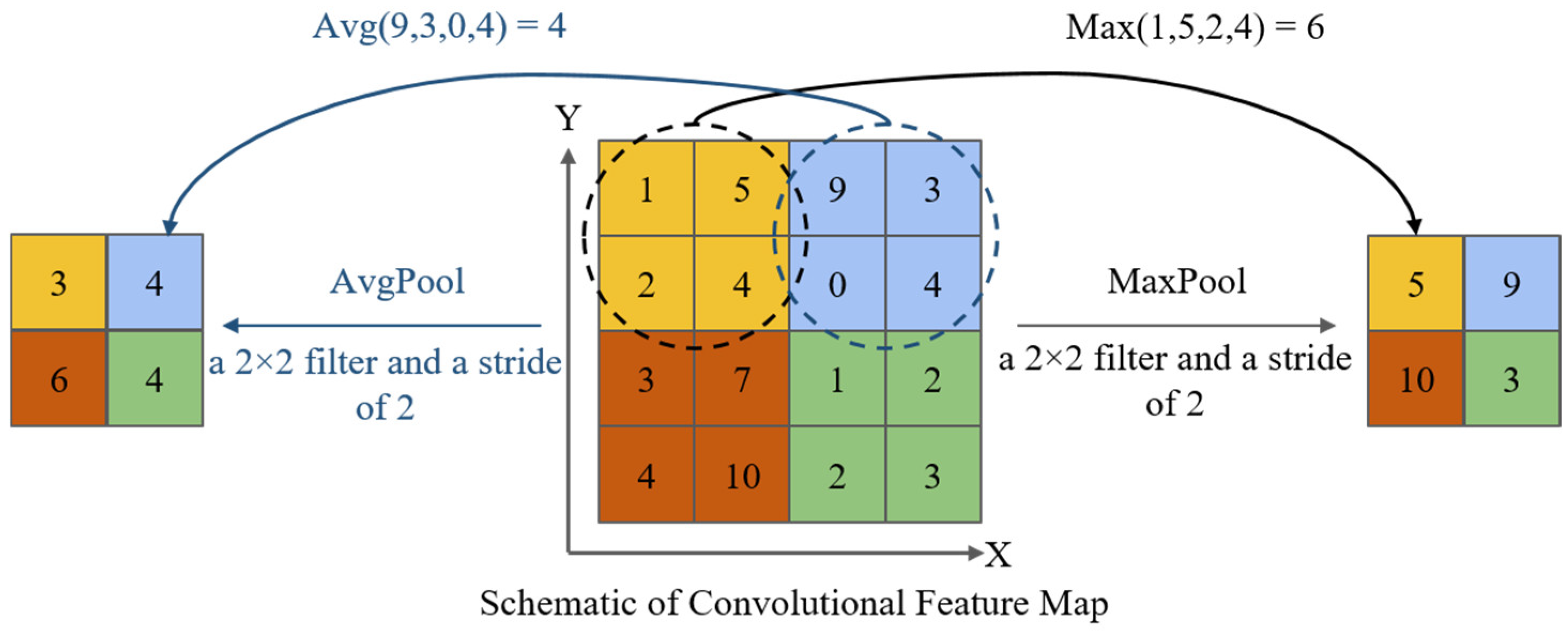

In the structure of Xception, the convolutional layer and the pooling layer generally appear at the same time. There are two main approaches to pooling: maximum pooling and average pooling. Maximum pooling retains the maximum value of each region as the result of the calculation, while average pooling is calculated by calculating the average value of each region as the result of the calculation. As shown in

Figure 6, the 4 × 4 convolutional feature traversal is computed using the 2 × 2 filter according to the size of the step size of 2. Retaining the maximum value gives the maximum pooled feature map, and retaining the average value gives the average pooled feature map. Xception usually chooses a filter of size 3 × 3, traversed in 2 steps, to achieve maximum pooling.

The last DSFM processed by GAP is converted into an n-dimensional vector for easy classification by FCL. Its calculation is:

where

is the output vector,

is the activation function,

is the input data with flattening the pooled DSFM to one-dimensional form,

is the weight vector, and

is the offset vector.

4.3. Spatial Structural Feature Map Extraction

As is known, CapsNet consists of an encoder and a decoder. The encoder consists of three layers, which serves to perform feature extraction on the input data; in addition, a routing process for computation between capsule layers and an activation function for capsule feature classification are included. The decoder is mainly composed of three FCLs and is used to ensure that the encoded information is reduced to the original input features. For the LUSC task, we mainly utilized the encoder part with adaptations.

For the rapid extraction of the rich structural information of the LUSI data while reducing the network parameters, inspired by the literature [

27], the SSFM extraction module of the lower branch was built by using the graph routing-based CapsNet.

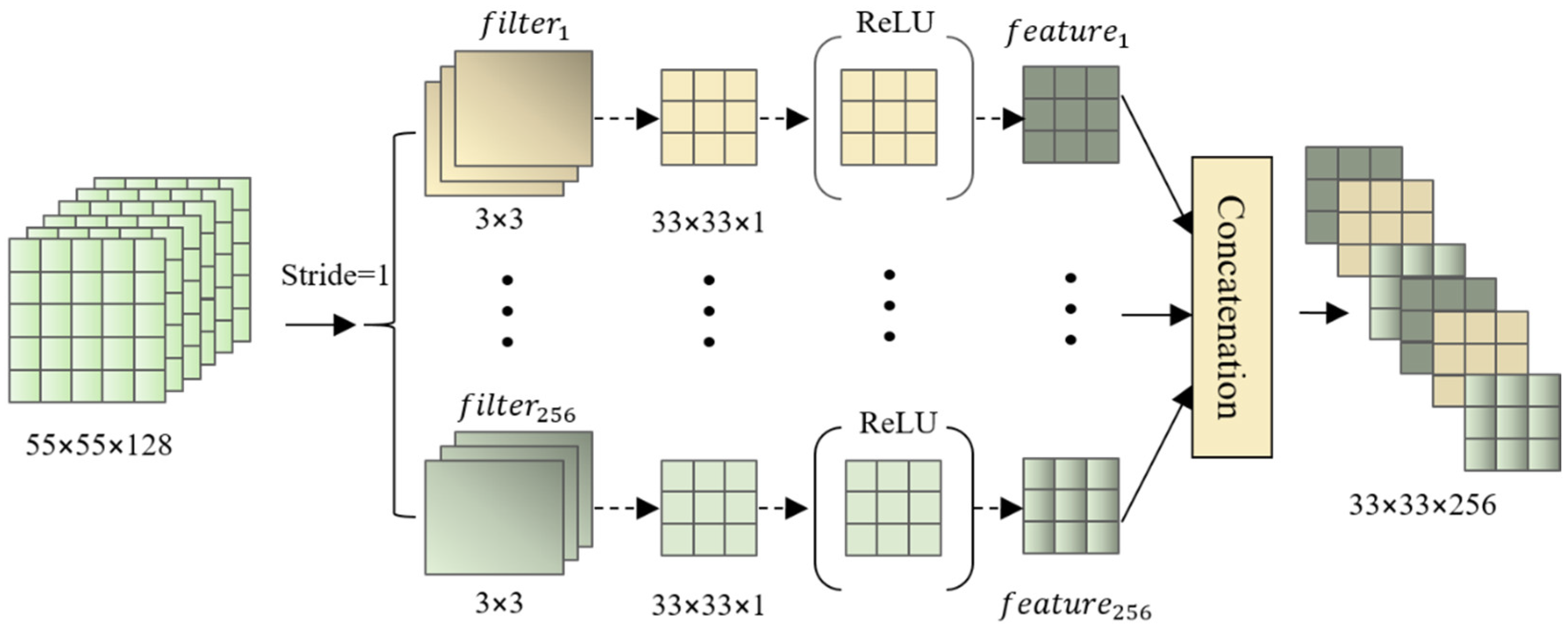

Specifically, it includes three important parts. The first part is to modify the original input layer to process the DSFMs of the upper branch. As shown in

Figure 7, the shallow semantic feature map

extracted by CNN are fine-tuned in size with convolutional operations to fit the input requirements of the CapsNet. The specific method is to use 256 convolution kernels of size 3 × 3 to perform convolution operations on the shallow semantic feature map in a traversal manner by step size 1. The ReLU function is also utilized to retain the values of the elements in the output features that are greater than 0, and the values of the other elements are set to 0. Finally, the 256 feature maps are stitched together by the cat function to form the input feature map

of the next layer.

The second part is to compute the primary capsule feature

using the output feature map

of the previous layer. First,

convolution kernels of size 3 × 3 are utilized to carry out convolution operations in a traversal manner with a step size of 2 to obtain feature maps

, where

; then, the output feature maps

are converted into a feature matrix

of the shape of

, where

denotes the number of primary capsules, the value of

is the product of the square of the size of the feature after the convolution and the value of

. Finally, the squash function is utilized to perform normalization on the new form of feature map

to obtain the main capsule feature map

, which is calculated as follows:

When the modulus length of tends to positive infinity, tends to 1; when the modulus length of tends to 0, tends to 0. denotes the unit vector, which compresses the range of values of the modulus length of between [0, 1], keeping the feature direction unchanged, i.e., keeping the attributes of the image features represented by the feature unchanged.

The third part is the calculation of advanced capsule feature maps using primary capsule feature maps . After adding a dimension to the third dimension of feature map , we obtain , then we perform matrix multiplication operation with randomly initialized weight parameters to get , where , and then, we convert the feature map to , and finally input into the multiple graphs pooling module to obtain .

The multiple graphs pooling module serves to transform the primary capsule feature map

into advanced capsule feature map

, and the key technique is to calculate the weight coefficients

of each primary capsule feature map.

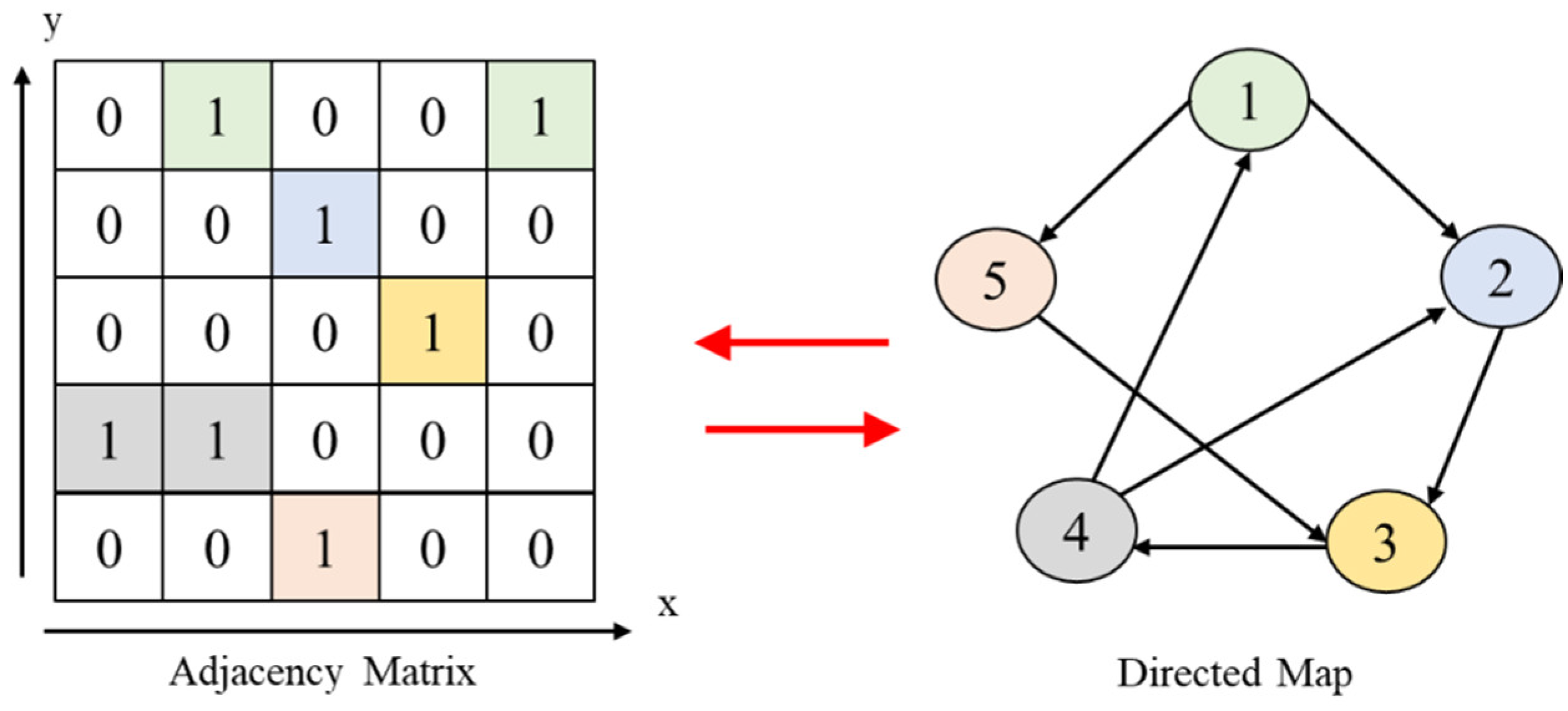

Figure 8 shows a simple relationship between a two-dimensional array and an image.

The first step is to compute the nodes and edges of feature map . Consider feature map as graphs, where each single graph consists of nodes, and the vector can be seen as the feature map of the corresponding node.

The second step is to calculate the attention coefficients of the

unigraph. Matrix multiplication of the adjacency matrix of the

unigram is performed with the node features of the

unigram, and then multiplied with the random initialization weight parameter

, and then the calculation result is inputted into the softmax function to obtain the attention coefficient

of the feature

.

where

denotes the feature map of the

dimension, and

and

denote the index of the feature map

node, respectively.

The third step is to use multiply the attention coefficient with the node feature to obtain the attention feature, then the squash function is used to perform the normalization disposal to obtain the advanced capsule feature map

.

4.4. Feature Map Fusion and Scene Prediction

To obtain both DSFMs and SSFMs of the LUSIs, we designed a simple two-branch fusion module with different characteristics, where the feature maps extracted from the upper-branch CNN and the lower-branch CapsNet are integrated and inputted to the recognizer. The computational formulae used in this fusion module are as follows:

where

denotes the fused feature, called DFM,

denotes the last layer of semantic feature map extracted by the CNN,

denotes the mean GAP and FCL,

denotes the use of summing according to the values of the corresponding elements one by one,

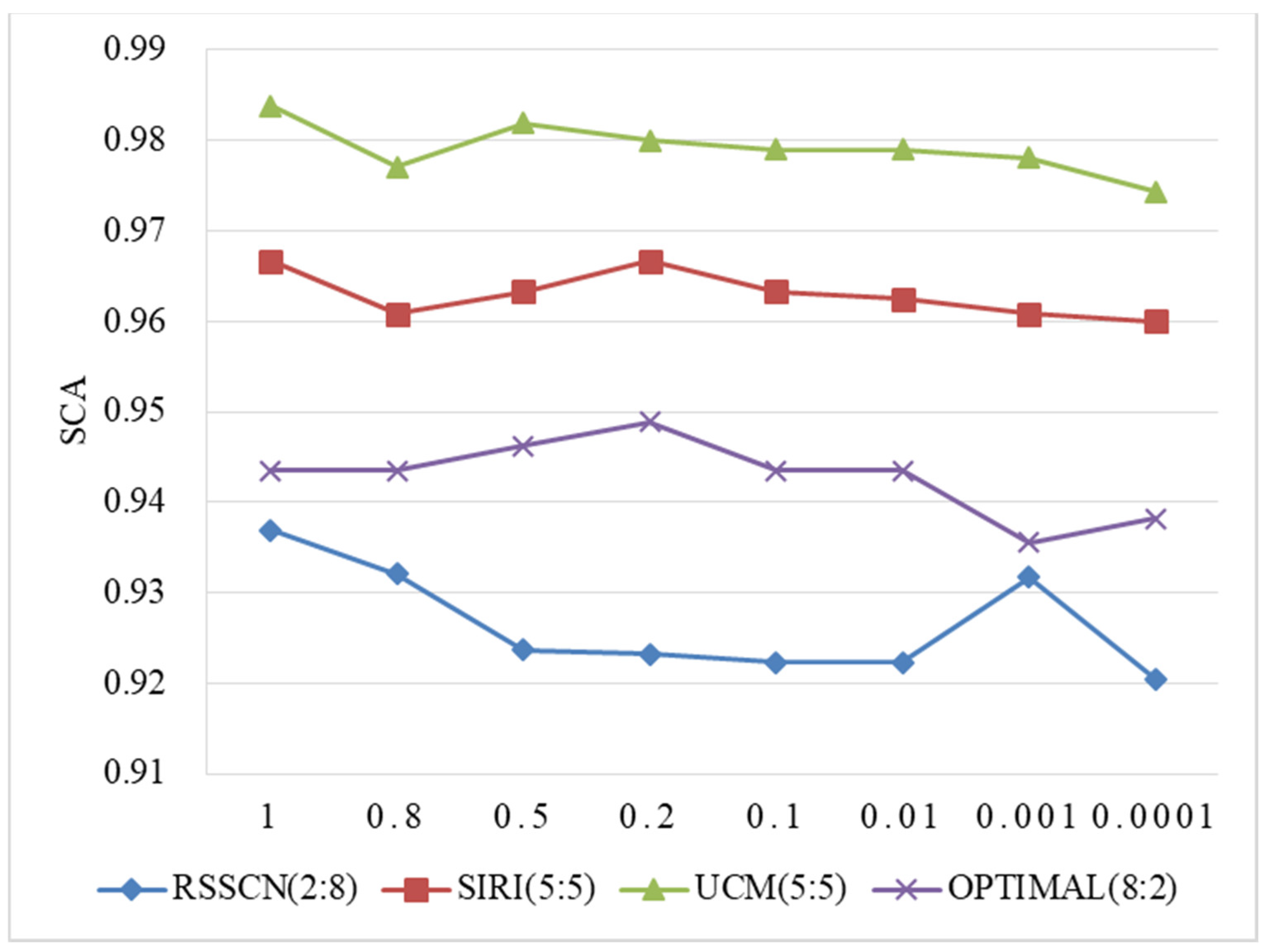

is the control coefficient, which denotes the key parameter controlling the deep fusion of the two-branch feature maps, and

denotes the new spatial structure feature map formed by the CNN extracted feature maps input to the CapsNet after summing the corresponding elements.

After obtaining the DFM

, it is first fed into the softmax function to calculate the probability of the image belonging to each class, and then the obtained probability values are used to calculate the predicted loss together with the probability values of the data labels after the image has been encoded by one-hot coding. While training the model using the HFCC-Net method, we used the cross-entropy loss function to obtain the minimum loss in both the training and validation datasets, achieving the task of optimizing the weight parameters of the model. Equation (7) lists the loss values obtained for a batch size of land-use data after softmax function and cross-entropy loss calculation.

where

denotes the number of the LUSI,

denotes the number of categories of land-use scenes,

denotes the true probability value of the

-th scene data label (if the true category of the

-th scene data label is equal to

then,

is equal to 1, otherwise 0), and

denotes the predicted probability that the

-th scene image data belongs to category

-th.