1. Introduction

Hyperspectral images (HSIs) contain rich spectral-spatial information across hundreds of narrow contiguous bands. The value of this information has become increasingly evident in many fields, such as agricultural applications [1], geological exploration and mineralogy [2,3], forestry and environmental management [4,5], water and marine resources management [6], and military and defense applications [7,8]. Since HSI classification is one of the most essential procedures in HSI analysis, innovations in HSI classification increasingly drive progress in the fields mentioned above.

The main task of HSI classification is to label every image pixel based on the feature information carried by the training samples. Many pixel-wise HSI classification methods have been proposed based on the realistic idea that pixels of different categories should carry different spectral information. For example, methods such as support vector machines (SVM) [9], random forests (RF) [10], and traditional distance-metric-based classifiers [11] treat a single pixel with several bands as a single sample. As a result, they use only spectral information for classification and neglect the rich spatial characteristics. Furthermore, because pixel features vary within a class and can resemble those of other classes, which leads to the so-called salt-and-pepper noise problem, these traditional machine learning algorithms can hardly achieve desirable accuracy.

In recent decades, deep learning (DL) has shown great potential in natural language processing, computer vision, and object detection. In this context, DL-based classifiers, especially approaches based on convolutional neural networks (CNNs), can effectively utilize spatial-spectral information for HSI classification [12,13]. Chen et al. [14] proposed a 2D CNN stacked autoencoder and introduced the CNN-based method to HSI classification for the first time. Since then, to extract spatial-spectral features more efficiently, DL-based models have become increasingly complicated, and their parameter counts have grown accordingly. Roy et al. [15] constructed a hybrid 2D–3D CNN to classify HSIs. Hamida et al. [16] used a 3D CNN model to obtain even better classification results. Zhao et al. [17] proposed a convolutional transformer network, which introduced the promising transformer to HSI classification. To ensure that deeper networks remain effective, researchers introduced the residual structure into HSI classification [18,19,20]. As classification models become deeper and more complex, training and inference times increase, and so does the computational energy required.

We are rapidly approaching a point where DL may no longer be feasible because of its energy cost, and spiking neural networks (SNNs) are one of the most promising paradigms for moving beyond it. Unlike artificial neural networks (ANNs), SNNs use spike sequences to represent information and take advantage of spatiotemporal information during training and inference. Inspired by biological neural networks, spiking neurons, the foundational components of SNNs, remain silent except in a few active states. Because of the inherent asynchrony and sparseness of spike trains, SNNs have the potential to reduce power consumption while maintaining relatively good performance [21]. Because spike trains are discontinuous, the choice of training method is the first issue to consider when building a high-performance SNN. The current mainstream SNN training methods are ANN-to-SNN conversion (ANN2SNN) [22] and backpropagation with surrogate gradients [23]. The ANN2SNN method first trains an ANN and saves its parameters, then converts it into an SNN by replacing the activation functions with spiking neurons. However, the converted SNN needs a large number of timesteps to match the accuracy of the original ANN, which counteracts the low-latency characteristic of SNNs. The surrogate gradient method achieves error backpropagation by keeping the non-differentiable firing function in the forward pass and substituting it with a continuous, smooth surrogate function in the backward pass. Moreover, the surrogate gradient method yields directly trained SNNs, obtained in an end-to-end manner, which can surpass ANNs of similar architecture.
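The forward/backward asymmetry of the surrogate gradient method can be illustrated with a minimal sketch for a single neuron at a single timestep. It is only an illustration under stated assumptions: the sigmoid-shaped surrogate, the slope `alpha = 4.0`, the threshold `v_th = 1.0`, and all function names are chosen here for demonstration and are not taken from the proposed model.

```python
import math


def heaviside(x: float) -> float:
    """Non-differentiable firing function used in the forward pass."""
    return 1.0 if x >= 0.0 else 0.0


def sigmoid_surrogate_grad(x: float, alpha: float = 4.0) -> float:
    """Derivative of sigmoid(alpha * x); replaces the Heaviside derivative.

    alpha is an assumed slope parameter for this illustration.
    """
    s = 1.0 / (1.0 + math.exp(-alpha * x))
    return alpha * s * (1.0 - s)


def spike_forward_backward(v: float, v_th: float = 1.0,
                           upstream_grad: float = 1.0):
    """Return (spike, dL/dv) for one neuron at one timestep."""
    x = v - v_th
    spike = heaviside(x)                                  # forward: exact step
    grad_v = upstream_grad * sigmoid_surrogate_grad(x)    # backward: smooth surrogate
    return spike, grad_v


if __name__ == "__main__":
    for v in (0.2, 0.9, 1.0, 1.5):
        spike, grad = spike_forward_backward(v)
        print(f"v={v:.1f}  spike={spike:.0f}  surrogate_grad={grad:.4f}")
```

The spike itself stays binary, while the gradient is largest near the threshold and decays smoothly away from it, which is what lets backpropagation pass through the firing function.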

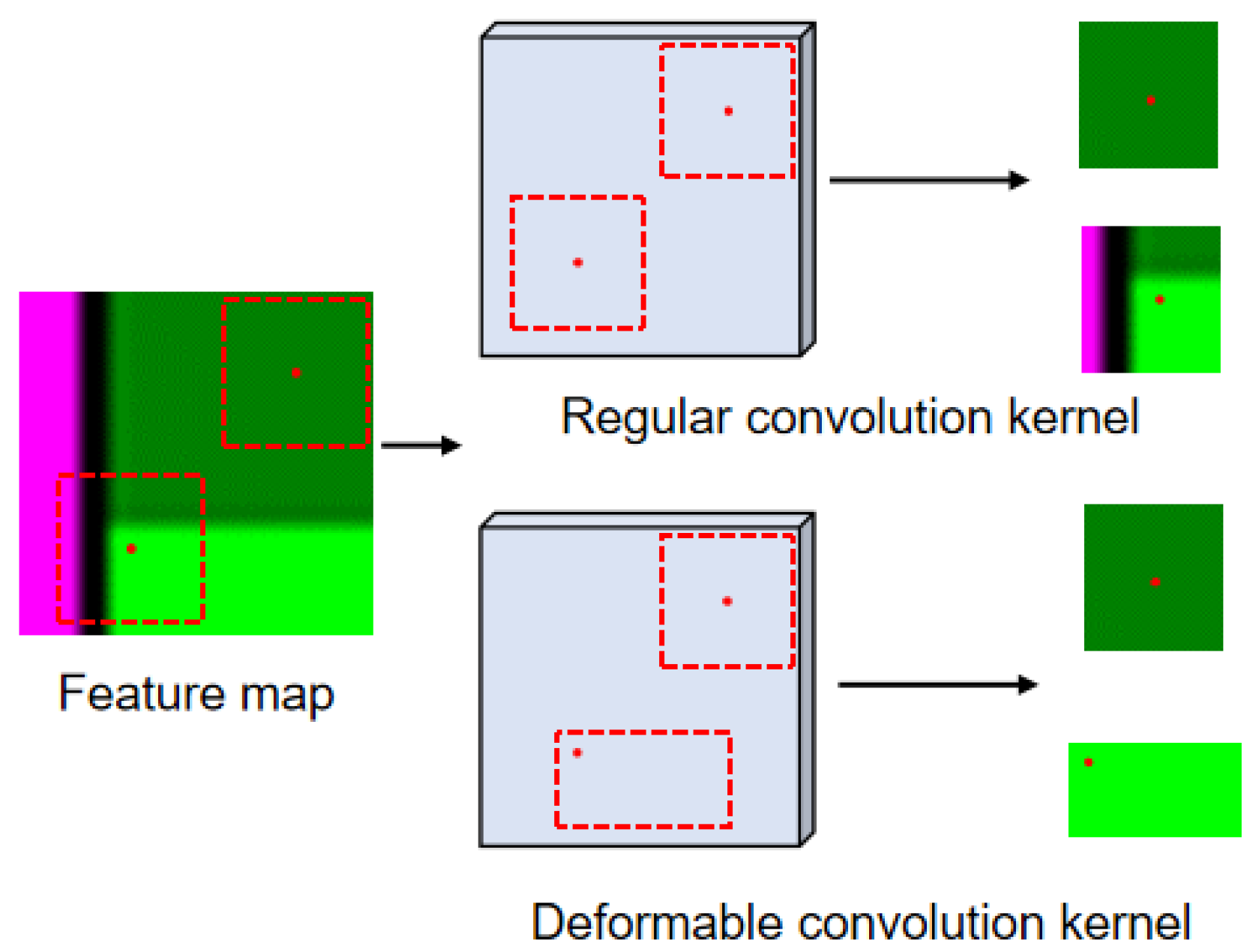

On this basis, many mechanisms and model schemes that have proven helpful in ANNs were introduced into directly trained SNNs. Fang et al. [24] used the idea of spike-element-wise (SEW) connections to introduce ResNet into SNNs, which makes it possible to achieve deeper SNNs. Zhu et al. [25] proposed a temporal-channel joint attention (TCJA) mechanism and designed an SNN that allocates weights over joint temporal and channel information. With these developments, SNN models have achieved noteworthy results in many fields, especially image classification. In recent years, a few researchers have also tried to construct SNN models for HSI classification. Datta et al. [26] proposed a quantization-aware gradient descent method to train an SNN generated from iso-architecture CNNs for HSI classification. Liu et al. [27,28] proposed two SNN classifiers based on channel shuffle attention mechanisms with two different derivative algorithms. These SNN models for HSI classification tend to fall into the trap of the Hughes phenomenon when fewer training samples are available. In particular, through extensive experiments with these methods, we found that pixels on the edges between different categories are the most likely to be mislabeled, which plays a major role in causing the Hughes phenomenon. These issues indicate the limitations of existing SNN methods in boundary discrimination.

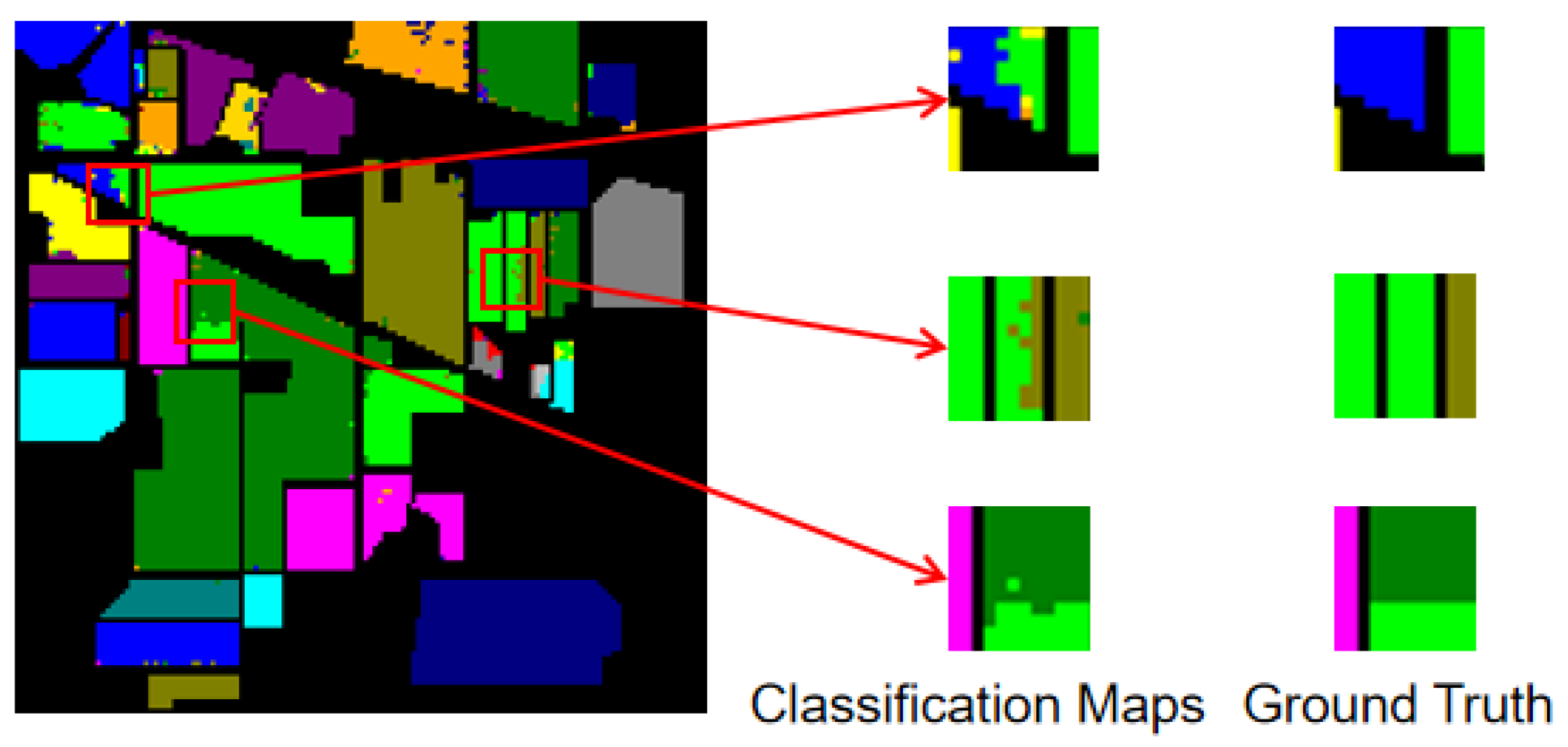

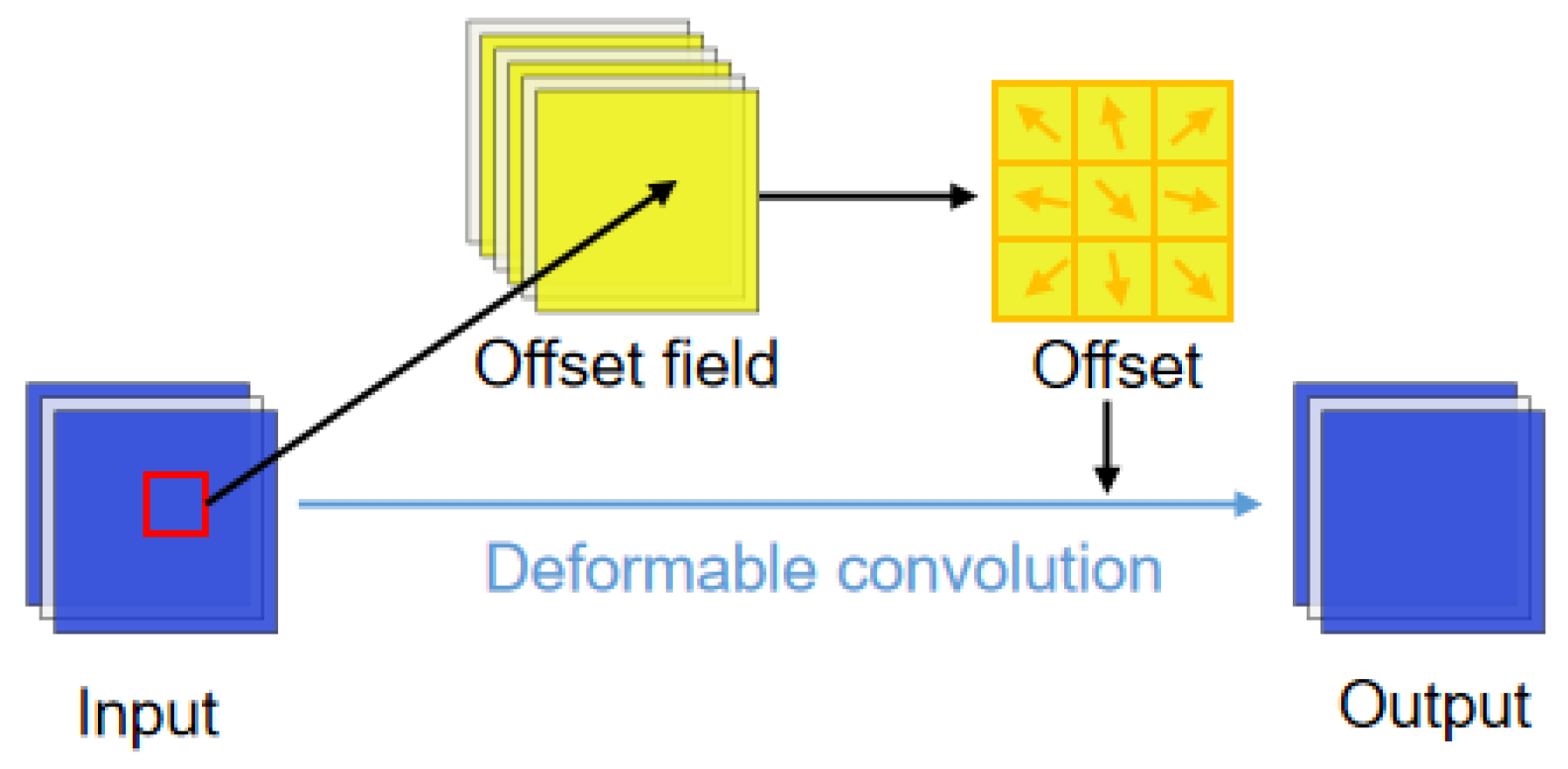

To address the above issues, inspired by the deformable convolutional mechanism [29] used in computer vision tasks to distinguish ambiguous boundaries, we propose a boundary-aware deformable spiking neural network (BDSNN). The contributions of this article are as follows:

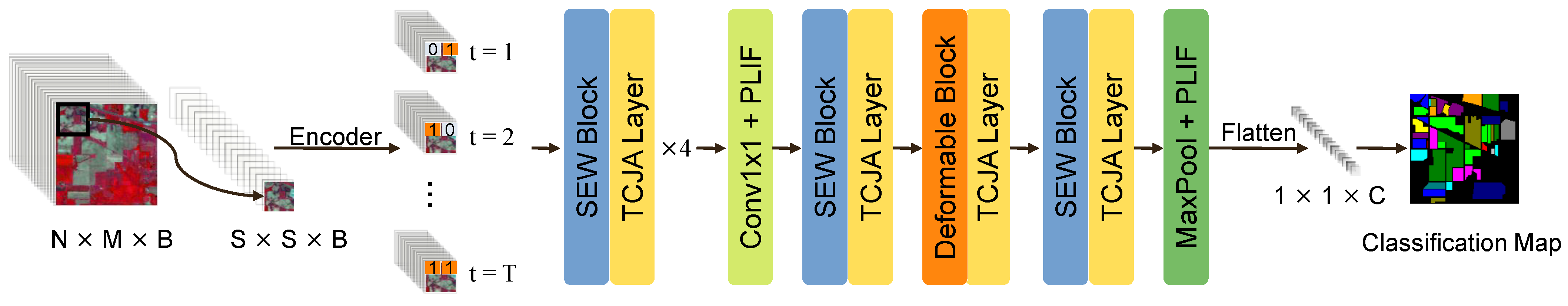

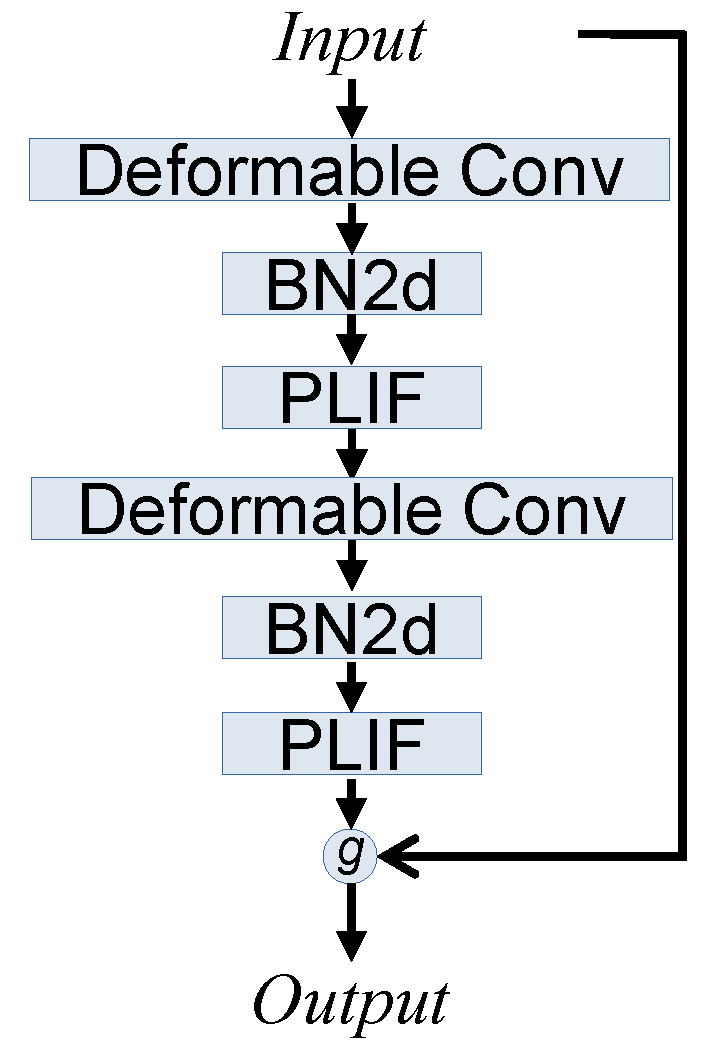

We propose a novel SNN-based model for HSI classification by integrating an attention mechanism and deformable convolution with a spiking ResNet. The spiking ResNet framework we use, named SEW ResNet, can effectively overcome the vanishing/exploding gradient problems. In addition, the temporal-channel joint attention (TCJA) mechanism is introduced for better feature extraction: by filtering the abundant temporal and spectral information, it guides the model to figure out what is useful and when.

For boundary awareness, we propose the deformable SEW ResNet method, which adds the deformable convolutional mechanism to the SEW ResNet block. The deformable convolution provides variable receptive fields for high-level feature extraction and gives our method the boundary awareness needed to mitigate the boundary confusion phenomenon.
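To make the idea of a variable receptive field concrete, the following sketch evaluates a 3 × 3 deformable convolution at one output location using bilinear sampling at offset positions. It is a toy illustration, not the deformable SEW ResNet block itself: the function names are hypothetical, the offsets are hand-picked (in a real deformable convolution they are predicted by a learned layer), and out-of-range positions are assumed to read as zero.

```python
import math
from typing import List, Sequence, Tuple

Grid = List[List[float]]


def bilinear_sample(feat: Grid, y: float, x: float) -> float:
    """Sample feat at a fractional (y, x); outside the map reads as 0."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1.0 - abs(y - yy)) * (1.0 - abs(x - xx))
                value += weight * feat[yy][xx]
    return value


def deformable_conv_at(feat: Grid, kernel: Grid,
                       offsets: Sequence[Tuple[float, float]],
                       cy: int, cx: int) -> float:
    """3x3 deformable convolution response at output location (cy, cx).

    offsets[k] = (dy, dx) shifts the k-th kernel tap (row-major order).
    """
    out, k = 0.0, 0
    for ky in (-1, 0, 1):
        for kx in (-1, 0, 1):
            dy, dx = offsets[k]
            out += kernel[ky + 1][kx + 1] * bilinear_sample(
                feat, cy + ky + dy, cx + kx + dx)
            k += 1
    return out


# Toy feature map with a vertical class boundary between columns 2 and 3.
feat = [[1.0 if c >= 3 else 0.0 for c in range(5)] for _ in range(5)]
mean_kernel = [[1.0 / 9.0] * 3 for _ in range(3)]
no_offsets = [(0.0, 0.0)] * 9
# Hand-picked offsets: pull the right-hand column of taps one pixel left,
# so every tap stays on the same side of the boundary as the center pixel.
pulled_offsets = [(0.0, -1.0) if k % 3 == 2 else (0.0, 0.0) for k in range(9)]

regular = deformable_conv_at(feat, mean_kernel, no_offsets, 2, 2)
deformed = deformable_conv_at(feat, mean_kernel, pulled_offsets, 2, 2)

if __name__ == "__main__":
    print(f"regular grid response:  {regular:.4f}")
    print(f"deformed grid response: {deformed:.4f}")
```

With the fixed grid, the response at the boundary pixel mixes values from both sides of the edge; with the shifted taps, it is computed only from the center pixel's side, which is the intuition behind using deformable sampling for boundary pixels.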

The rest of this article is organized as follows. Section 2 introduces the proposed methods used to build an attention-based deformable SNN model and describes the model architecture in detail. Section 3 presents the results of the experiments. Section 4 summarizes this paper.

3. Experimental Results

In this section, we choose four CNN-based methods (ResNet [38], DPyResNet [18], SSRN [19], and A2S2KResNet [20]), one deformable-CNN-based model (DHCNet [29]), and one SNN-based model (HSI-SNN [28]) for comparison. To thoroughly evaluate the effectiveness of the proposed method, the experiments are performed on five benchmark data sets: Indian Pines (IP), Kennedy Space Center (KSC), Houston University (HU), Pavia University (PU), and Salinas (SV). All experiments are conducted under Ubuntu 16 with Titan RTX GPUs and 125 GB of memory. We train and test the proposed model with the SpikingJelly [40] framework based on PyTorch [41]. Overall accuracy (OA), average accuracy (AA), and the statistical kappa (κ) coefficient are used to evaluate the performance of the models.
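For reference, the three evaluation metrics can be computed from a confusion matrix as in the following sketch. The function names and the toy labels are illustrative only; AA is taken here as the mean of the per-class recalls, and κ follows the standard Cohen's kappa definition.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """cm[i][j] counts samples of true class i predicted as class j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def oa_aa_kappa(cm: List[List[int]]) -> Tuple[float, float, float]:
    """Return (overall accuracy, average accuracy, Cohen's kappa)."""
    k = len(cm)
    n = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(k)]
    row_tot = [sum(cm[i]) for i in range(k)]                       # true counts
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted counts

    oa = sum(diag) / n
    # AA: mean per-class accuracy, skipping classes with no true samples.
    per_class = [diag[i] / row_tot[i] for i in range(k) if row_tot[i] > 0]
    aa = sum(per_class) / len(per_class)
    # Expected agreement by chance, then kappa.
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / (n * n)
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa


if __name__ == "__main__":
    y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
    y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 0, 2]
    oa, aa, kappa = oa_aa_kappa(confusion_matrix(y_true, y_pred, 3))
    print(f"OA={oa:.4f}  AA={aa:.4f}  kappa={kappa:.4f}")
```

Because AA weights every class equally while OA weights every sample equally, the two can diverge on data sets with strongly imbalanced classes.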

3.1. Data Sets

The Indian Pines data set was acquired by the AVIRIS sensor [42] over the Indian Pines test site in Indiana, United States. It contains 200 spectral bands (after excluding 20 water-absorption bands). Its size is 145 × 145, i.e., 21,025 pixels, of which 10,776 are background pixels and 10,249 are object pixels available for training and testing.

The Pavia University data set [43] was acquired by the ROSIS-03 sensor over the city of Pavia, Italy. It contains 103 available bands (after removing 12 noise-affected bands). Its size is 610 × 340, i.e., 207,400 pixels, of which 42,776 are object pixels.

The Salinas data set was acquired by the AVIRIS sensor [42] over Salinas Valley, California. The spatial resolution of these data is 3.7 m, and the size is 512 × 217. The original data have 224 bands; after removing the bands with severe water vapor absorption, 204 bands remain. These data include 16 crop categories.

The Houston University data set was acquired over the University of Houston campus by the ITRES CASI-1500 sensor and was provided by the 2013 IEEE GRSS Data Fusion Contest. It has 144 bands. Its size is 349 × 1905, of which 15,029 are object pixels.

The KSC data set was acquired by the AVIRIS sensor [42] at the Kennedy Space Center in Florida on 23 March 1996. These data contain 224 bands, of which 176 remain after water vapor noise removal, with a spatial resolution of 18 m and a total of 13 categories.

Table 2 shows the main characteristics of the five data sets and the details of our sample split strategy. Because the total sample sizes of the IP and KSC data sets are close to or below 10,000, their training samples are randomly selected according to the proportion listed in Table 2, and the remaining samples are used for testing. For the other three data sets (PU, SV, and HU), whose total sample sizes are large enough, only an extremely limited proportion of samples (also listed in Table 2) is randomly selected for training, and the rest are used for testing.
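A random train/test split of labeled pixels can be sketched as follows. This is a minimal illustration under assumptions that are not stated in the text: sampling is done per class (stratified), at least one training sample is kept for every class, and the `train_fraction` of 0.1 in the usage example is a placeholder rather than the proportion actually used for any data set (those are given in Table 2).

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[int], train_fraction: float,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly split sample indices into train/test sets, class by class."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)

    train_idx: List[int] = []
    test_idx: List[int] = []
    for lab in sorted(by_class):
        idxs = by_class[lab][:]
        rng.shuffle(idxs)
        # Keep at least one training sample even for very small classes.
        n_train = max(1, round(train_fraction * len(idxs)))
        train_idx.extend(idxs[:n_train])
        test_idx.extend(idxs[n_train:])
    return sorted(train_idx), sorted(test_idx)


if __name__ == "__main__":
    # Toy label vector with imbalanced classes (placeholder data).
    toy_labels = [0] * 50 + [1] * 30 + [2] * 5
    train_idx, test_idx = stratified_split(toy_labels, train_fraction=0.1)
    print(len(train_idx), "training samples,", len(test_idx), "test samples")
```

Fixing the seed makes each repeated run reproducible; varying it across repetitions gives the independent splits over which mean and standard deviation are reported.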

3.2. Experimental Setup and Parameter Evaluation

We train the proposed model using the stochastic gradient descent (SGD) optimizer with the cross-entropy loss. The batch size is set to 32, and the learning rate is set to 0.1. The initial time constant of the PLIF neurons and the kernel sizes of the channel-attention and temporal-attention 1D convolutions in the TCJA layer are uniformly set to 2.0, 9, and 3, respectively. The experiment is repeated five times, with 200 epochs each time. The model with the highest validation accuracy is selected for evaluation on the test samples.

First, an experiment on the KSC data set is conducted to evaluate the choice of the element-wise function g in SEW ResNet. Table 3 shows the accuracy of the proposed model with the different element-wise functions. One function clearly yields the best results, while the other two are more prone to the vanishing/exploding gradient problems. Thus, we use the best-performing function in Table 3 as g for the following experiments.
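As a rough illustration of what an element-wise function g does in an SEW block, the sketch below applies three candidate functions to binary spikes. It assumes the ADD, AND, and IAND variants described in the SEW ResNet paper [24]; the names `g_add`, `g_and`, and `g_iand` are hypothetical, and the sketch makes no statement about which variant performs best in Table 3.

```python
def g_add(a: int, s: int) -> int:
    """ADD: residual-branch spike plus shortcut spike (may exceed 1)."""
    return a + s


def g_and(a: int, s: int) -> int:
    """AND: output fires only if both branches fire."""
    return a * s


def g_iand(a: int, s: int) -> int:
    """IAND: (NOT a) AND s; the residual branch can veto the shortcut."""
    return (1 - a) * s


if __name__ == "__main__":
    # a: spike from the residual branch, s: spike from the shortcut.
    print(" a  s | ADD AND IAND")
    for a in (0, 1):
        for s in (0, 1):
            print(f" {a}  {s} |  {g_add(a, s)}   {g_and(a, s)}    {g_iand(a, s)}")
```

In this formulation, ADD and IAND reduce to an identity mapping of the shortcut when the residual branch is silent (a = 0), whereas AND does so only when the residual branch always fires (a = 1).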

The patch size of the input samples is an essential parameter for the extraction of spatial information, and it also determines how much interference from pixels of other classes enters each sample. A smaller patch size limits the model's spatial feature extraction and decreases classification accuracy, whereas a bigger patch size aggravates the salt-and-pepper phenomenon. During these experiments, the timestep is set to 8. Table 4 shows the classification accuracy on the five data sets with four different patch sizes. The best results on the five data sets are achieved with two of these patch sizes (see Table 4). Noting that a bigger patch size makes the other models achieve worse results, we fix the smaller of the two as the patch size of the proposed method for all the data sets for fair comparison.
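The role of the patch size can be seen in how an input sample is cut around a labeled pixel. The sketch below extracts a square spatial patch with all bands from an HSI cube stored as nested lists; the zero padding at the image border, the requirement of an odd patch size, the function name, and the patch size of 3 in the usage example are assumptions made for illustration only.

```python
from typing import List

Cube = List[List[List[float]]]  # indexed as cube[row][col][band]


def extract_patch(cube: Cube, row: int, col: int, patch_size: int,
                  pad_value: float = 0.0) -> Cube:
    """Return a patch_size x patch_size x bands patch centered on (row, col).

    Positions that fall outside the image are filled with pad_value.
    """
    if patch_size % 2 == 0:
        raise ValueError("patch_size must be odd so the labeled pixel is centered")
    h, w, bands = len(cube), len(cube[0]), len(cube[0][0])
    half = patch_size // 2
    patch: Cube = []
    for r in range(row - half, row + half + 1):
        patch_row = []
        for c in range(col - half, col + half + 1):
            if 0 <= r < h and 0 <= c < w:
                patch_row.append(list(cube[r][c]))      # copy the spectrum
            else:
                patch_row.append([pad_value] * bands)   # border padding
        patch.append(patch_row)
    return patch


# Toy 4 x 4 cube with 2 bands: band 0 = row + 1, band 1 = col + 1.
toy_cube = [[[float(r + 1), float(c + 1)] for c in range(4)] for r in range(4)]
corner_patch = extract_patch(toy_cube, 0, 0, 3)

if __name__ == "__main__":
    for patch_row in corner_patch:
        print(patch_row)
```

A larger `patch_size` brings more spatial context into each sample but also more neighbors that may belong to other classes, which is the trade-off evaluated in Table 4.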

The timestep is another critical parameter for an SNN-based model. A smaller timestep limits the model's ability to extract features from HSIs, while a larger timestep increases computational energy consumption. Table 5 shows the evaluation results for different timesteps (4, 8, 12, and 16) on the five data sets. The best OA, AA, and κ are achieved using timesteps 12 and 16. Considering the computational cost, we set the timestep of the proposed model to 12.
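The trade-off behind the timestep can be illustrated with a single leaky integrate-and-fire (LIF) neuron driven by a constant input. The sketch is a simplification under stated assumptions: it uses a fixed time constant `tau = 2.0` (the PLIF neurons in the model learn this value), a hard reset, a threshold of 1.0, and hypothetical input currents; it is not the neuron implementation used in the experiments.

```python
from typing import List


def lif_spike_train(input_current: float, timesteps: int, tau: float = 2.0,
                    v_th: float = 1.0, v_reset: float = 0.0) -> List[int]:
    """Simulate one LIF neuron with constant input and return its spikes."""
    v = v_reset
    spikes: List[int] = []
    for _ in range(timesteps):
        # Leaky integration toward the input current.
        v = v + (input_current - (v - v_reset)) / tau
        if v >= v_th:
            spikes.append(1)
            v = v_reset          # hard reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    for timesteps in (4, 8, 12, 16):
        rates = []
        for current in (0.5, 1.2, 1.5, 3.0):
            train = lif_spike_train(current, timesteps)
            rates.append(f"I={current}: {sum(train)}/{timesteps}")
        print(f"T={timesteps:2d}  " + "  ".join(rates))
```

With T timesteps, a neuron's firing rate can take only T + 1 distinct values, so a short simulation represents input intensity coarsely, while every additional timestep requires another pass through the network.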

3.3. Classification Results

Table 6, Table 7, Table 8, and Table 9 show the average OAs, AAs, κs, and per-class accuracies over the five repeated runs on four data sets (IP, PU, SV, and HU). Our proposed method obtains competitive results on all data sets compared with the other ResNet-based, deformable-CNN-based, and SNN-based methods.

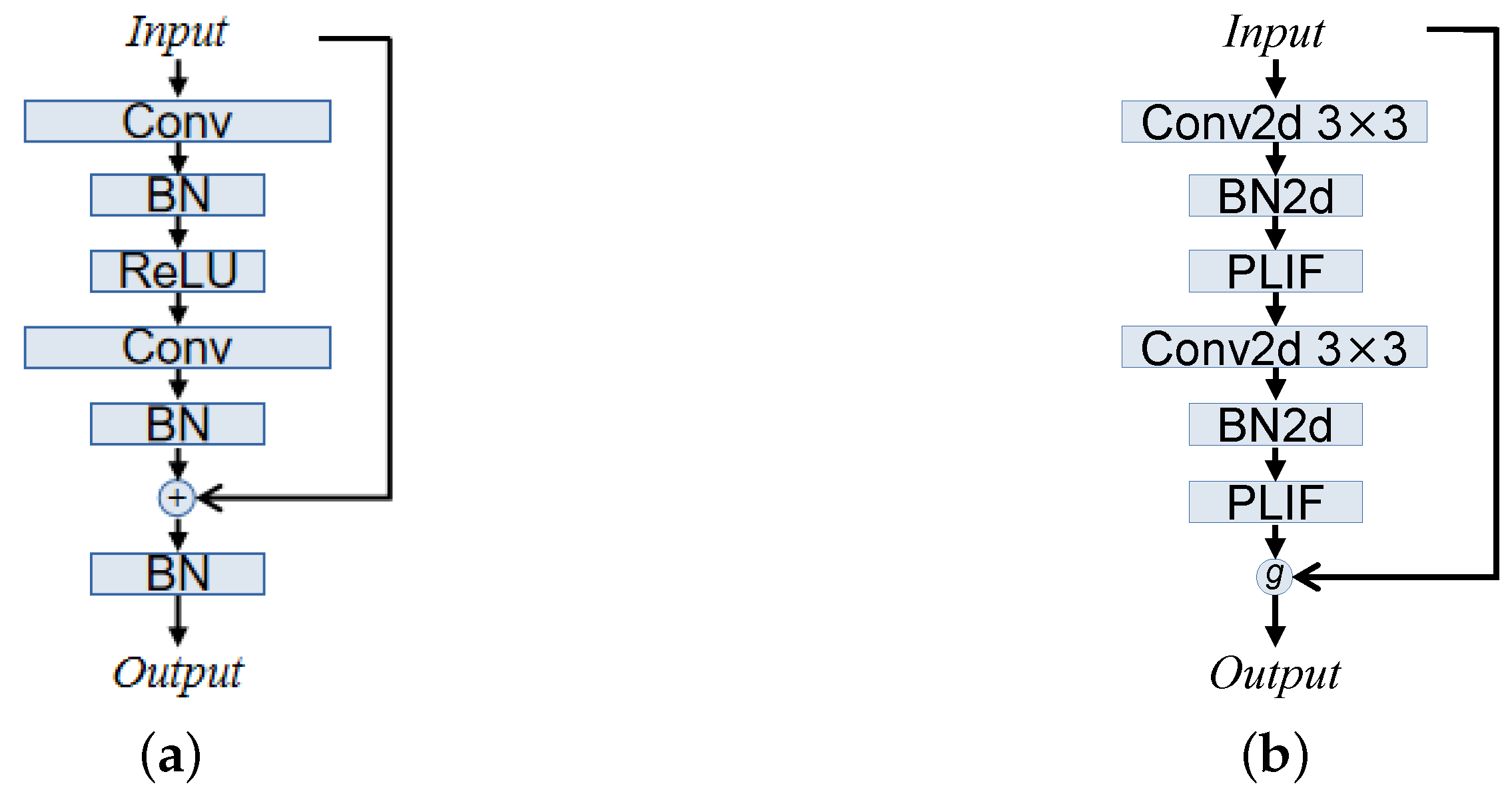

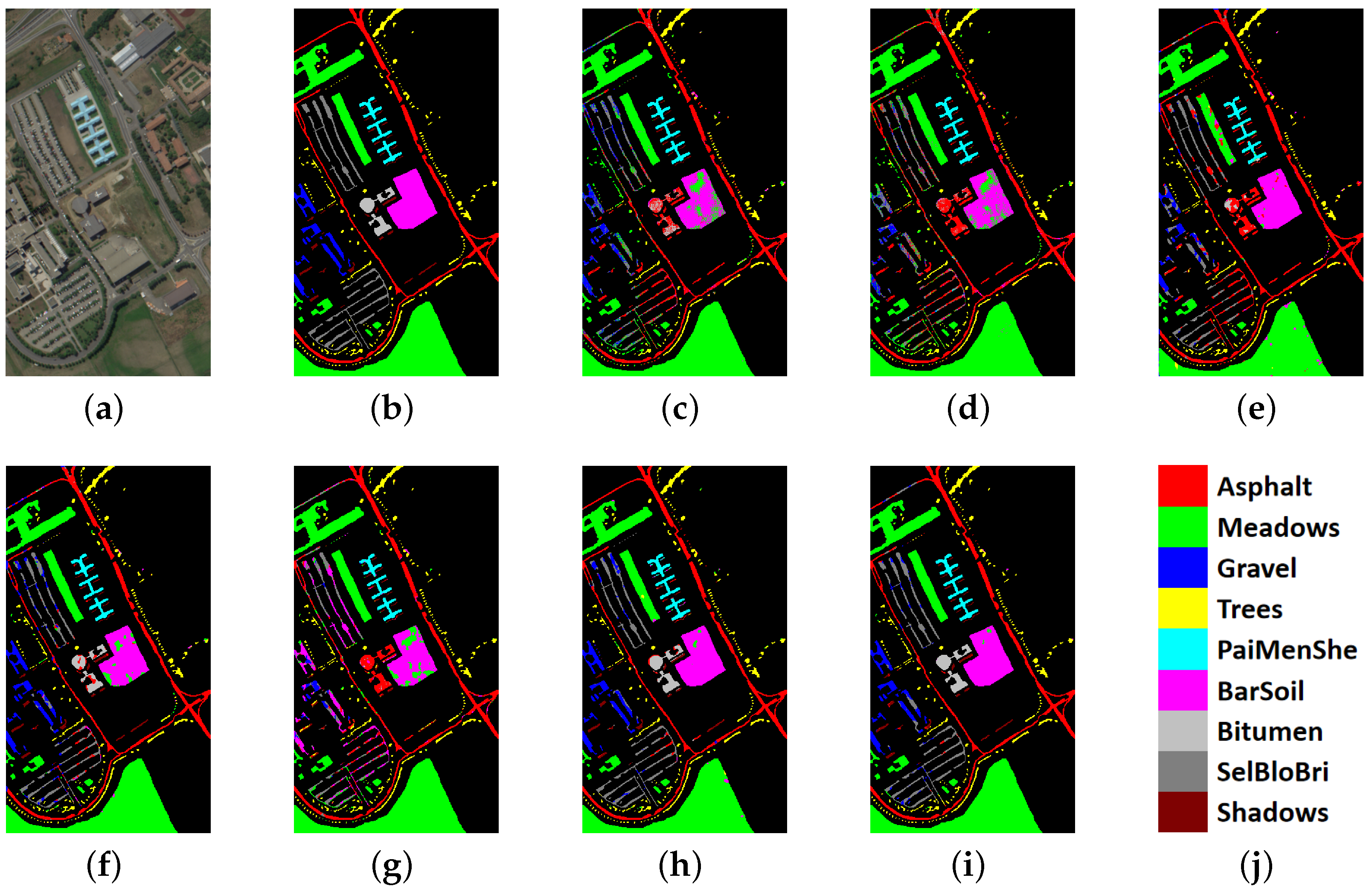

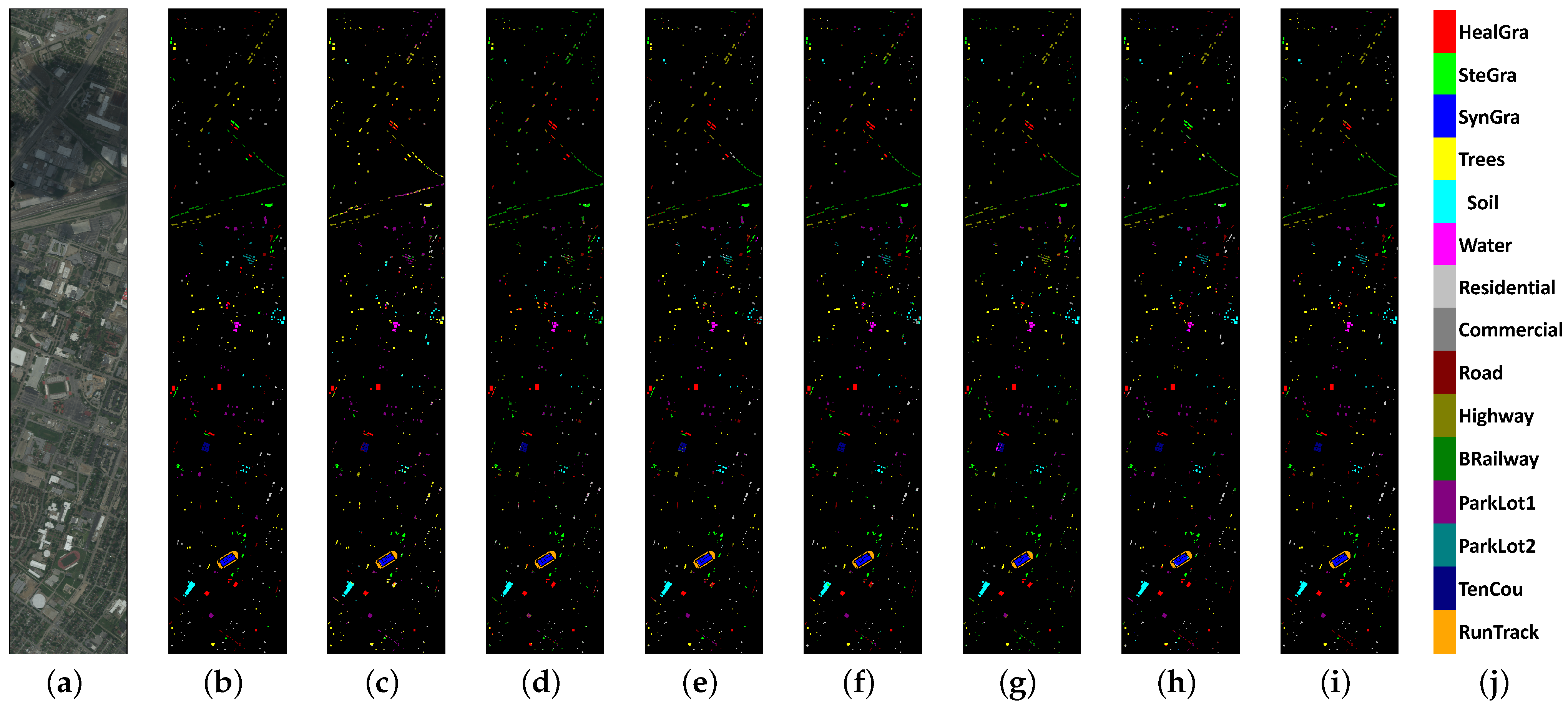

The results for the IP data set are shown in Table 6, and Figure 10 shows the classification maps of our model and the others for comparison. Our model achieves the best OA (99.16 ± 0.003%), which is 0.34% higher than that of the best CNN-based model (A2S2KResNet) and 4.58% higher than that of HSI-SNN. In addition, the proposed model achieves the highest κ. However, the AA of the proposed model is 1.18% lower than that of A2S2KResNet, indicating that the SNN-based model is less robust on the IP data set, whose classes are highly imbalanced.

For the PU data set, the results are shown in Table 7. The proposed model achieves the best OA (96.15 ± 0.006%), which is 4.24% higher than the best result of the other models. The proposed model also obtains a higher AA (93.96 ± 0.015%) and κ (0.9537 ± 0.008) than the others. The classification maps are shown in Figure 11.

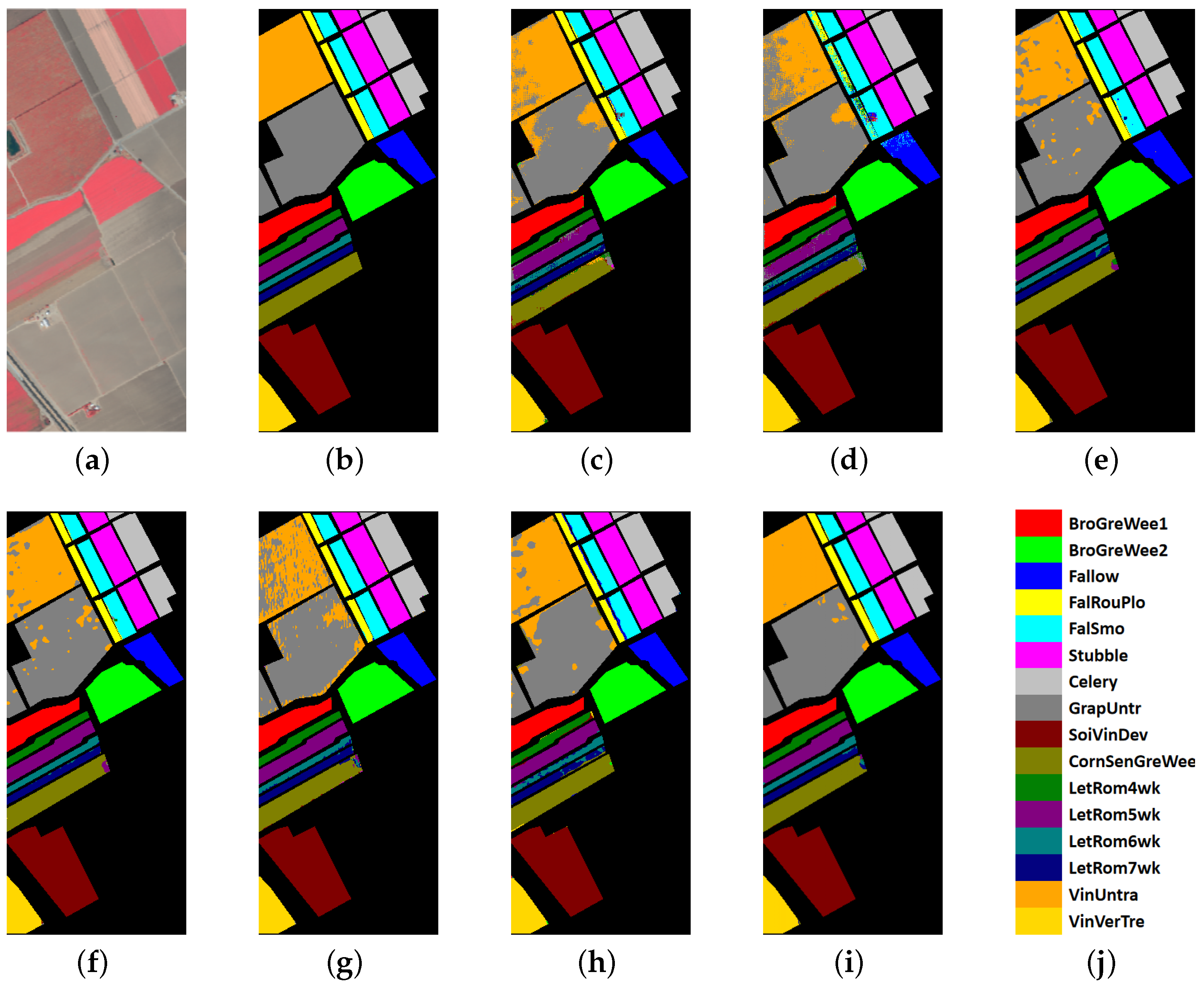

Table 9 shows the results for the SV data set. The proposed model achieves the best OA (99.03 ± 0.002%), which is 5.45% higher than the best result of the other models. The proposed model also obtains a higher AA (99.28 ± 0.001%) and κ (0.9892 ± 0.002) than the others. The classification maps are shown in Figure 12.

Table 8 shows the results for the HU data set. The proposed model achieves the best OA (86.29 ± 0.017%), which is 4.24% higher than the best result of the other models. The proposed model also obtains a higher AA (86.63 ± 0.014%) and κ (0.8517 ± 0.018) than the others. The classification maps are shown in Figure 13.

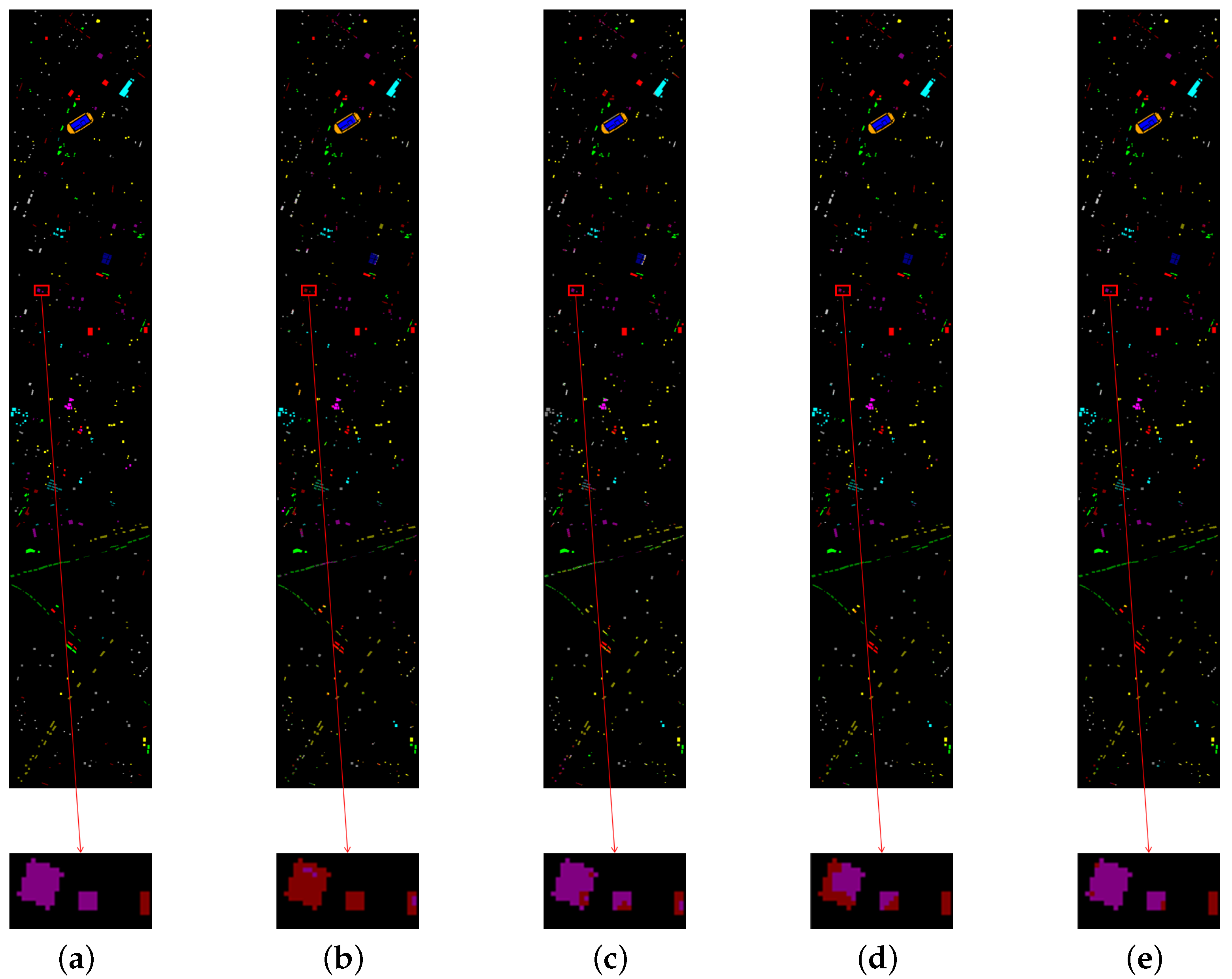

3.4. Ablation Study

To further validate the components of our proposed model, we evaluate the generalization performance on the HU data set of the proposed model and of three variants with specific components removed. The variants are as follows:

SEW + TCJA: the deformable convolution is removed from the proposed framework.

SEW + DEF: the TCJA layer is removed from the proposed framework.

SEW: both the deformable convolution and the TCJA layer are removed from the proposed framework.

For the ablation experiments, we change the patch size to better reflect the boundary effect and keep the other experimental settings unchanged.

Table 10 shows the classification results of the ablation experiments on the HU data set. Compared with SEW, the SEW + DEF, SEW + TCJA, and proposed models produce notable improvements in all three metrics (OA, AA, and κ). Regarding OA, adding the TCJA layer (in SEW + TCJA and the proposed model) yields improvements of 10.24% and 10.41% over SEW and SEW + DEF, respectively. Furthermore, adding the deformable convolution (in SEW + DEF and the proposed model) yields gains of 0.63% and 0.8% over SEW and SEW + TCJA, respectively. The classification maps are shown in Figure 14. We can observe that the deformable convolution mitigates the boundary confusion phenomenon.

3.5. Comparison of Running Times

In this section, the training and testing times of three representative CNN-based methods and our proposed BDSNN on four data sets are shown in Table 11. Due to the limitations of the computing platform, we can only measure the time on non-neuromorphic computers. As a result, all of the traditional deep learning methods have an advantage in training and testing time over our proposed BDSNN, while the advantages of SNNs in terms of energy saving and faster computing can only be demonstrated on neuromorphic computers [44]. For the SNN-based HSI-SNN, the time and OA are shown in Table 12. The training time of our proposed BDSNN is about 1.82–3.24 times that of HSI-SNN, and its testing time is about 3.61–7.73 times as long, in exchange for an OA improvement of about 2.33–8.56%. Because of its more complex structure, the proposed BDSNN is at a disadvantage in running time. The introduction of TCJA and deformable convolution adds a computational burden, as both involve many non-spiking calculations, such as attention vector generation and offset generation. Reducing this computational overhead will be one of our future research directions.