1. Introduction

Hyperspectral remote sensing is a specialized remote sensing technique for acquiring hyperspectral image data of the Earth's surface. Compared with traditional remote sensing images, hyperspectral images provide richer spectral information because the data are collected in many more, narrower wavelength bands. Hyperspectral remote sensing has a wide range of applications in earth science, environmental monitoring, agriculture, forestry, urban planning, and other fields. It uses sensors capable of collecting data in dozens or hundreds of contiguous spectral bands, which typically cover the visible, near-infrared, and short-wave infrared regions. The responses across these bands form a spectral signature, allowing more information to be extracted from remotely sensed images. By analyzing and processing hyperspectral data, more detailed and accurate information, such as ground features, material composition, and environmental parameters, can be obtained. However, owing to the limited incident solar energy and sensor constraints, hyperspectral images usually cannot achieve high spatial resolution and high spectral resolution at the same time. A popular approach is therefore to improve the spatial resolution of hyperspectral images (HSIs) by fusing them with multispectral images (MSIs). Such fusion combines the higher spatial resolution of the MSI with the rich spectral information of the HSI to obtain an image with both high spatial resolution and rich spectral information.

In the field of hyperspectral imaging, image fusion has been studied extensively by many researchers. According to the level at which the data are fused, image fusion can be classified into pixel-level, feature-level, and decision-level fusion. Panchromatic sharpening (pansharpening) [1] was one of the first spatial–spectral fusion methods developed. It fuses a panchromatic image, which has a high spatial resolution but only one spectral band, with a multispectral image, which has multiple spectral bands but a low spatial resolution. The result is an image with both high spatial resolution and multiple spectral bands. Pansharpening plays an important role in remote sensing and provides more accurate and comprehensive information for various applications.

Pixel-level fusion methods can be classified into component substitution (CS) [2,3,4], multi-resolution analysis (MRA) [5,6], hybrid [7,8], and model-based methods. Representative techniques include intensity–hue–saturation (IHS) [9,10] methods, the Brovey transform [11], principal component analysis (PCA) [12], Gram–Schmidt (GS) [13] orthogonalization, the Laplacian pyramid [14], the curvelet transform [15], and sparse matrix decomposition [16]. However, as more algorithms were proposed and tested, researchers found that these traditional methods have limitations when applied to hyperspectral image super-resolution.

With the continued development of model-based methods, researchers have increasingly focused on matrix decomposition [17,18,19,20,21] and tensor decomposition [22,23,24,25] methods. Common decomposition methods include matrix decomposition, CP decomposition (CANDECOMP/PARAFAC) [26,27], Tucker decomposition [28,29], Tensor Train decomposition [30,31], and tensor ring decomposition [32,33,34]. These methods are receiving increasing attention and are being applied more widely to hyperspectral image super-resolution. A matrix can be viewed as a second-order tensor; therefore, matrix decomposition can also be viewed as second-order tensor decomposition.

Naoto Yokoya et al. [35] proposed a hyperspectral and multispectral data fusion method called coupled non-negative matrix factorization (CNMF). This approach decomposes both types of data into endmember matrices and abundance matrices through unsupervised unmixing and combines them to produce a high-resolution hyperspectral image. However, this inverse problem is ill-posed, so regularization terms are needed to improve its effectiveness. Several researchers have improved CNMF. For instance, Yang et al. [36] introduced endmember vertex distance and iterative centroid proximity regularization terms, thereby incorporating sparse and proximal regularization into CNMF, and reduced the computational complexity through proximal alternating optimization. Simões et al. [37] proposed the HySure method, which uses subspace regularization and vector total variation to address the fusion of hyperspectral images with multispectral or panchromatic images. Dian et al. [38] exploited clustering structures to propose a low-dimensional spectral subspace representation model that captures the self-similarity of hyperspectral images and improves reconstruction. Xue et al. [21] developed a subspace clustering method based on structured sparse low-rank representation, in which data samples are expressed as linear combinations of a dictionary and a basis matrix; the learned spatial and spectral low-rank structures are then used for hyperspectral image super-resolution.

Compared with matrix factorization methods, tensor decomposition methods have shown distinct advantages in computational efficiency and cost-effectiveness. Because they operate on the hyperspectral data cube directly rather than on an unfolded matrix, they capture spatial and spectral information jointly. This allows tensor decomposition to better preserve image details and features, thereby improving resolution enhancement and information recovery.

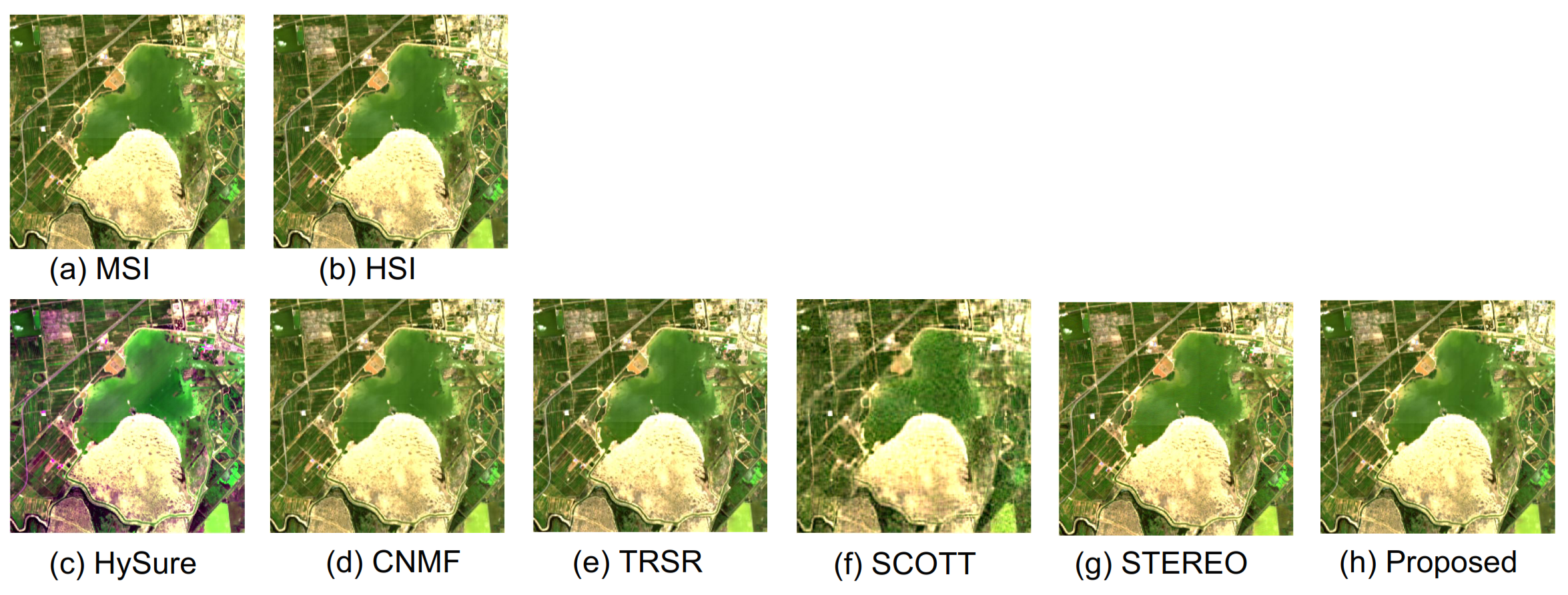

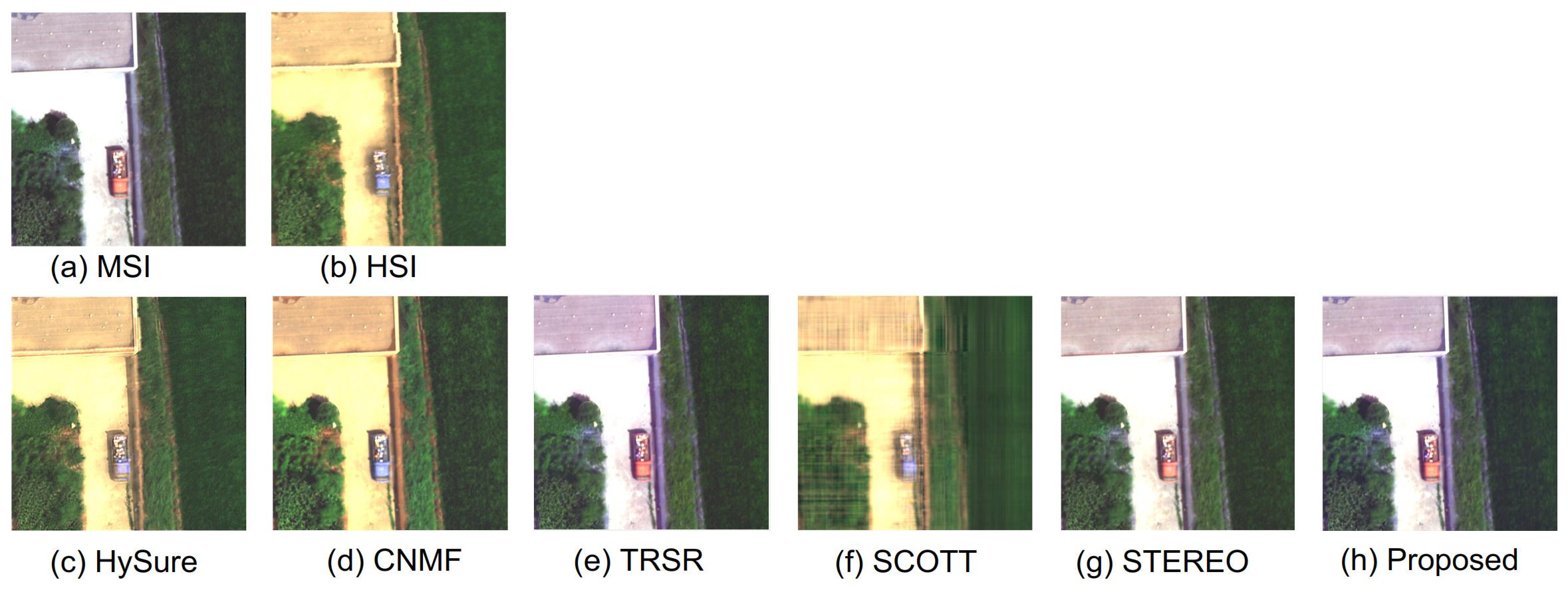

Prévost et al. [39] proposed SCOTT, a hyperspectral super-resolution method based on coupled Tucker approximation, together with recoverability results and SVD-based algorithms. The method relies on a coupled low-rank multilinear (Tucker) model and uses low-rank tensor approximation to reconstruct the super-resolved image. To compute the coupled tensor approximation, the authors proposed two simple and efficient SVD-based algorithms that can also handle unknown spatial degradation and generalized sharpening, yielding accurate super-resolution reconstructions of hyperspectral images. Kanatsoulis et al. [40] proposed STEREO, a framework based on coupled tensor factorization. This method aims to identify the super-resolution tensor and remains applicable when the spatial degradation model is unknown or inaccurately estimated.

Dian et al. [41] introduced the non-local sparse tensor factorization (NLSTF) method for hyperspectral image super-resolution. They treated hyperspectral data as a third-order tensor, applied Tucker decomposition, and incorporated the non-local similarity prior of hyperspectral images. The approach unifies sparse tensor decomposition and non-local similarity in a single framework, jointly estimating the dictionaries and the sparse core tensor of each cube, and it has provided new insights into hyperspectral image super-resolution. Zeng et al. [25] investigated a multi-modal core tensor factorization (MCTF) method with a non-convex relaxation (NC-MCTF) for tensor low-rank modeling. This model integrates the low-rank information captured by Tucker decomposition and the tensor singular value decomposition (T-SVD), models low-rank structures along multiple directions simultaneously, and accurately recovers the intrinsic low-rank structure from a small number of observed entries.

In summary, these methods exploit tensor decomposition to handle the multi-dimensional structure of hyperspectral images and provide powerful tools for hyperspectral image processing. In recent years, with deeper study of high-order tensors, methods based on tensor trains and tensor rings have emerged and gained popularity, offering valuable directions for future research in hyperspectral image processing.

In 2019, Dian [42] introduced a prior model based on low tensor-train rank, which learns the correlations among the spatial, spectral, and non-local modes of HR-HSI cubes grouped by non-local similarity. This method transforms the super-resolution problem into a tensor-train rank regularized optimization problem. Jin et al. [23] employed a fully connected coupled tensor network to decompose the spatial structure of high-order tensors and used graph regularization to preserve spectral information, thus improving image quality.

Zhao et al. [43] introduced the tensor ring (TR) decomposition model in 2016. TR represents a high-order tensor by a sequence of third-order cores that are cyclically interconnected through circular multilinear products, and the authors also provided a graphical interpretation of this structure. Their work focuses on how multilinear algebraic properties can be exploited by operating directly on the TR representation (i.e., the cores), which provides a potentially powerful framework for large-scale data. It also examines the connections with other tensor decomposition models, so that latent representations can be easily transferred from traditional models to TR models.

He et al. [44] proposed a coupled tensor ring factorization (CTRF) model for hyperspectral image super-resolution. The model combines the advantages of coupled matrix factorization and coupled Tucker factorization, and it better exploits the global spectral low-rank property of the recovered high-resolution hyperspectral image by imposing a nuclear norm regularization on the third core. CTRF has been shown to exploit the low-rank properties of different classes of hyperspectral images more effectively, achieving accurate super-resolution reconstruction that improves both the spatial resolution and the spectral quality of the images.

Chen et al. [34] proposed an efficient method, FSTRD, to reconstruct HR-HSIs from a pair of LR-HSI and HR-MSI of the same scene. The method introduces a smoothness regularization term that constrains the factors of the tensor ring decomposition to capture the spatial–spectral continuity of HR-HSIs, and it optimizes each factor with a PAM (Projected Alternating Minimization) algorithm. In this way, FSTRD effectively reconstructs high-resolution hyperspectral images and improves their spatial resolution and spectral continuity.

Despite their promise for hyperspectral image super-resolution, tensor ring decomposition methods still face several challenges, including overfitting, validation on real datasets, and noise interference. This paper presents a new hyperspectral image super-resolution algorithm based on graph-regularized tensor ring decomposition. The contributions of the proposed method are as follows.

- (1) A graph regularization term based on symmetric normalized Laplacian matrices is introduced to constrain the model, which improves its structural representation and feature extraction. The term exploits both the spatial and the spectral information during reconstruction and thus helps preserve key details and edges.

- (2) A spatial smoothness constraint is introduced to make the reconstructed images spatially smooth. This constraint preserves the textures and structural characteristics of the images while mitigating overfitting and oscillations during optimization, which improves the stability and quality of the super-resolution algorithm.

- (3) The method is based on tensor ring decomposition and exploits both the spatial structure and the spectral information of hyperspectral images. By constructing graph models and applying regularization, it captures both local and global image features while preserving the spectral information, enabling more comprehensive and accurate super-resolution reconstruction.

- (4) The proposed model uses a multi-stage optimization framework in which the results of the tensor ring decomposition are refined iteratively under the regularization constraints. This strategy improves the convergence and stability of the model and yields more precise and reliable super-resolution results.

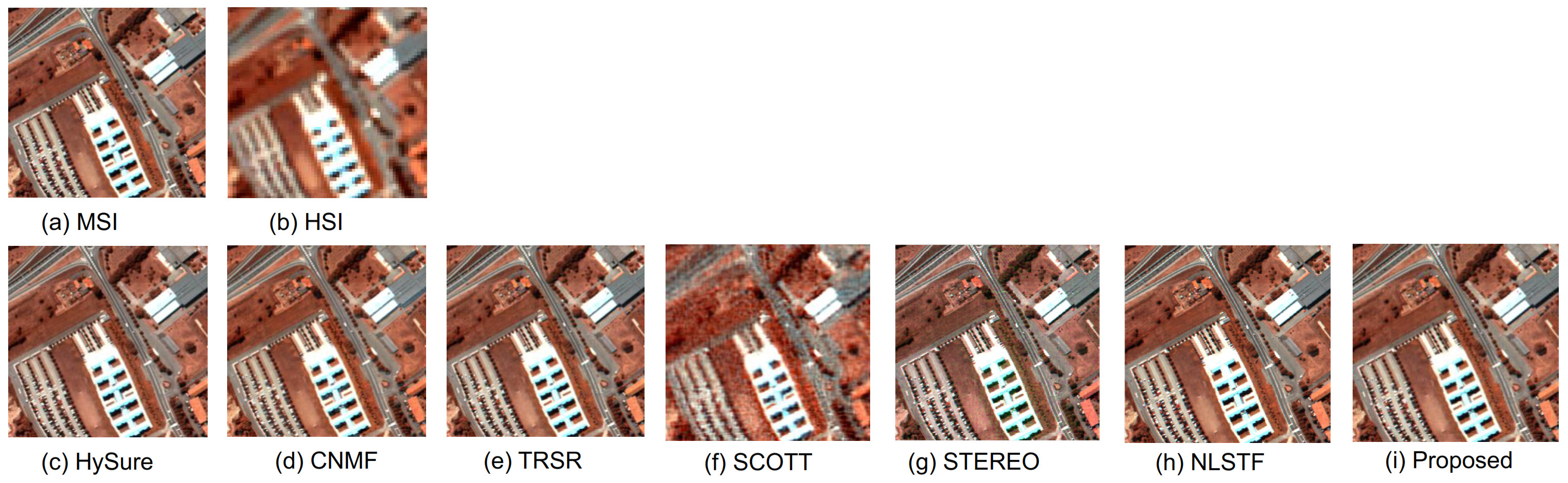

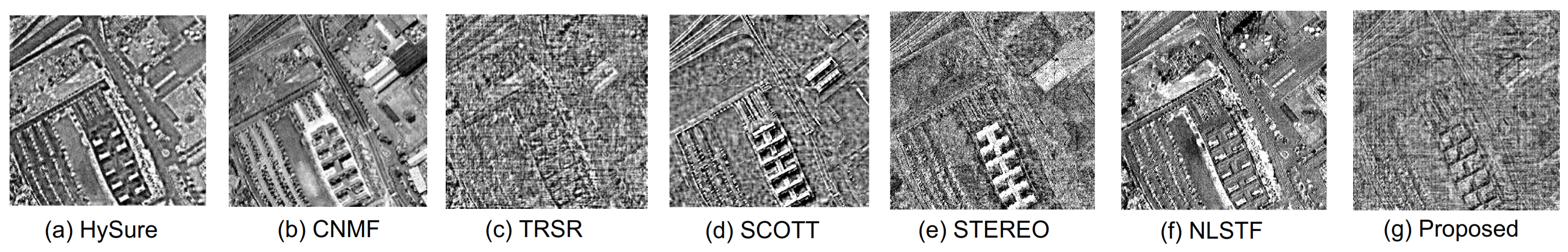

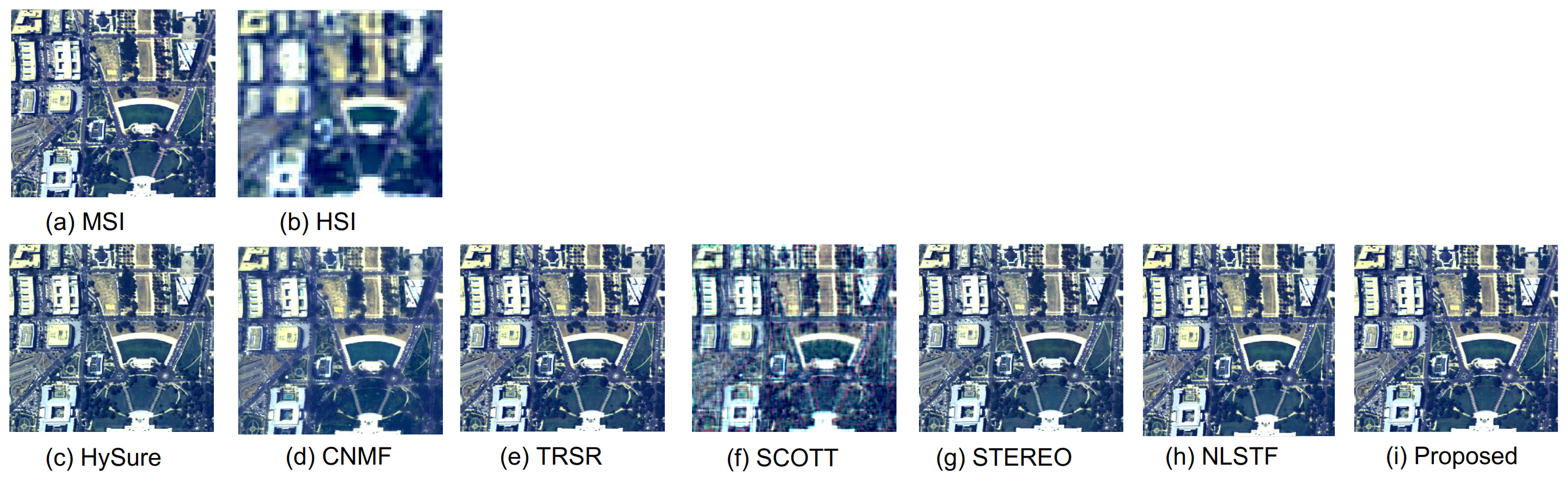

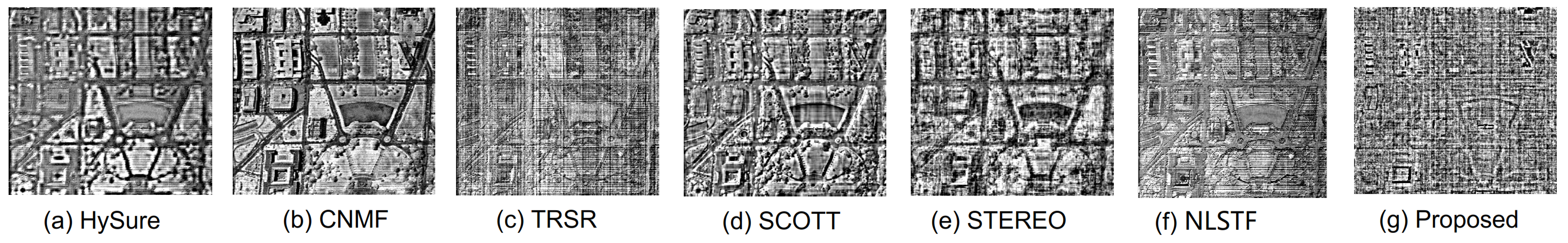

Experiments show that the proposed algorithm achieves a significant improvement in hyperspectral image super-resolution. Compared with traditional methods, it produces clearer, more detailed high-resolution images and retains the spectral information more effectively, particularly on real datasets. The hyperspectral image super-resolution algorithm based on graph-regularized tensor ring decomposition therefore shows broad potential for practical applications.

The rest of the paper is organized as follows.

Section 2 provides background information and details the proposed hyperspectral image super-resolution algorithm based on graph-regularized tensor ring decomposition (GRTR).

Section 3 describes the optimization procedure of the GRTR algorithm in detail.

Section 4 presents and analyzes the experimental results. The experiments use multiple hyperspectral image datasets and compare GRTR with other classical methods in terms of spatial resolution enhancement and spectral fidelity, and the quality of the reconstructed images is evaluated quantitatively.

Finally, Section 5 summarizes the advantages and limitations of the GRTR algorithm and discusses possible directions for future improvement.

2. Background

2.1. Symbolic Representation

In this paper, higher-order tensors are denoted by calligraphic letters: $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$ denotes a tensor of order $N$, and $I_n$ denotes the size of its $n$th dimension. Third-order tensors, matrices, and vectors are denoted by calligraphic letters (e.g., $\mathcal{X}$), upper-case letters (e.g., $X$), and lower-case letters (e.g., $x$), respectively.

The high-resolution hyperspectral image (HRI) is defined as the third-order tensor $\mathcal{X} \in \mathbb{R}^{W \times H \times S}$, the low-resolution hyperspectral image (HSI) as $\mathcal{Y} \in \mathbb{R}^{w \times h \times S}$, and the high-resolution multispectral image (MSI) as $\mathcal{Z} \in \mathbb{R}^{W \times H \times s}$, where $W$ and $w$ denote the sizes of the width mode ($W > w$), $H$ and $h$ denote the sizes of the height mode ($H > h$), and $S$ and $s$ denote the sizes of the spectral mode ($S > s$). $\mathcal{A} \times_n B$ denotes the mode-$n$ product of a tensor $\mathcal{A}$ and a matrix $B$, and the mode-$n$ unfolding of a third-order tensor $\mathcal{X}$ is denoted by $X_{(n)}$, $n = 1, 2, 3$.
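The mode-$n$ unfolding and mode-$n$ product are used throughout the following sections. The NumPy sketch below is one way to compute them; the helper names `unfold`, `fold`, and `mode_n_product` are illustrative, axes are 0-based (mode 1 of the text is axis 0 here), and the column order of the unfolding follows NumPy's row-major reshape, which may differ from the ordering used in the paper by a column permutation.

```python
import numpy as np

# Illustrative helpers (hypothetical names); axes are 0-based.


def unfold(tensor, mode):
    """Mode-n unfolding: the mode-`mode` fibers become the columns of a matrix."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def fold(matrix, mode, shape):
    """Inverse of `unfold` for a tensor whose full shape is `shape`."""
    rest = [size for axis, size in enumerate(shape) if axis != mode]
    return np.moveaxis(matrix.reshape([shape[mode]] + rest), 0, mode)


def mode_n_product(tensor, matrix, mode):
    """Compute tensor x_n matrix by multiplying every mode-n fiber by `matrix`."""
    new_shape = list(tensor.shape)
    new_shape[mode] = matrix.shape[0]
    return fold(matrix @ unfold(tensor, mode), mode, new_shape)


rng = np.random.default_rng(0)
X = rng.standard_normal((4, 5, 6))  # a small W x H x S tensor
U = rng.standard_normal((2, 6))     # maps the 6 bands of mode 3 to 2
Y = mode_n_product(X, U, 2)         # X x_3 U in the paper's 1-based notation
print(Y.shape)  # (4, 5, 2)
```

Computing the product through the unfolding mirrors the identity $(\mathcal{A} \times_n B)_{(n)} = B A_{(n)}$; `numpy.einsum` gives the same result and is used in later sketches for brevity.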

2.2. Tensor Decomposition

A tensor can be viewed as a multidimensional array that generalizes vectors (first-order tensors) and matrices (second-order tensors) to an arbitrary number of dimensions, or modes; more abstractly, it represents a multilinear mapping. It can therefore describe multilinear relationships among multiple vectors, matrices, or higher-dimensional arrays. The order of a tensor is its number of modes, and the size of each mode is the number of elements along that dimension. Common tensor decomposition methods include CP decomposition, Tucker decomposition, Tensor Train decomposition, and tensor ring decomposition.

CP decomposition [45] is a basic tensor decomposition method that expresses a higher-order tensor as a sum of rank-one tensors. In hyperspectral image fusion, CP decomposition represents a hyperspectral image with three factor matrices, corresponding to its two spatial modes and its spectral mode; each term of the sum is the outer product of one column from each factor matrix. The mathematical representation of CP decomposition is

$$\mathcal{X} = \sum_{r=1}^{R} a_r \circ b_r \circ c_r,$$

where $R$ is the tensor rank and $\circ$ denotes the outer product. The matrices $A = [a_1, \ldots, a_R] \in \mathbb{R}^{W \times R}$, $B = [b_1, \ldots, b_R] \in \mathbb{R}^{H \times R}$, and $C = [c_1, \ldots, c_R] \in \mathbb{R}^{S \times R}$ denote the low-rank latent factors, so CP decomposition can also be written as

$$\mathcal{X} = [\![A, B, C]\!].$$

Although CP decomposition is concise, determining its rank is an NP-hard problem, which makes it difficult to choose the tensor rank in practice.
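As a small illustration of the CP model above, the sketch below builds a tensor from factor matrices $A$, $B$, $C$ with `numpy.einsum` and checks it against the explicit sum of $R$ outer products. The sizes and random factors are arbitrary choices for the example; the sketch only evaluates the model and does not estimate the factors, which is where the rank-selection difficulty arises.

```python
import numpy as np


def cp_reconstruct(A, B, C):
    """Rebuild X = sum_r a_r o b_r o c_r from A (W x R), B (H x R), C (S x R)."""
    return np.einsum("ir,jr,kr->ijk", A, B, C)


rng = np.random.default_rng(1)
W, H, S, R = 6, 5, 8, 3
A = rng.standard_normal((W, R))
B = rng.standard_normal((H, R))
C = rng.standard_normal((S, R))
X = cp_reconstruct(A, B, C)

# The same tensor written explicitly as a sum of R rank-one (outer product) terms.
X_sum = sum(
    np.multiply.outer(np.multiply.outer(A[:, r], B[:, r]), C[:, r]) for r in range(R)
)
print(X.shape, np.allclose(X, X_sum))  # (6, 5, 8) True
# Storage: R * (W + H + S) = 57 numbers instead of W * H * S = 240.
```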

Tucker decomposition [46] is a widely used higher-order tensor decomposition that expresses a tensor as a core tensor multiplied by a factor matrix along each mode; the higher-order singular value decomposition (HOSVD) is a well-known way of computing it. In hyperspectral image fusion, Tucker decomposition represents a hyperspectral image with a core tensor and one factor matrix per mode, where the factor matrices describe the spatial and spectral features. The expression of Tucker decomposition is

$$\mathcal{X} = \mathcal{C} \times_1 U_1 \times_2 U_2 \times_3 U_3,$$

where $\mathcal{C} \in \mathbb{R}^{R_1 \times R_2 \times R_3}$ represents the core tensor, $U_1 \in \mathbb{R}^{W \times R_1}$ and $U_2 \in \mathbb{R}^{H \times R_2}$ represent the spatial factor matrices, and $U_3 \in \mathbb{R}^{S \times R_3}$ represents the spectral factor matrix.
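The Tucker model can be computed in several ways; the sketch below uses truncated HOSVD, which takes the leading left singular vectors of each mode-$n$ unfolding as $U_n$ and projects the tensor onto them to obtain the core $\mathcal{C}$. It is a minimal NumPy illustration with arbitrary sizes, not an optimized or iterative Tucker solver (such as HOOI).

```python
import numpy as np


def unfold(tensor, mode):
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def truncated_hosvd(X, ranks):
    """Return (core, [U1, U2, U3]) with X ~= core x1 U1 x2 U2 x3 U3."""
    factors = []
    for mode, rank in enumerate(ranks):
        # Leading left singular vectors of the mode-n unfolding span its column space.
        U, _, _ = np.linalg.svd(unfold(X, mode), full_matrices=False)
        factors.append(U[:, :rank])
    # core = X x1 U1^T x2 U2^T x3 U3^T (the factors have orthonormal columns).
    core = np.einsum("ijk,ia,jb,kc->abc", X, *factors)
    return core, factors


def tucker_reconstruct(core, factors):
    U1, U2, U3 = factors
    return np.einsum("abc,ia,jb,kc->ijk", core, U1, U2, U3)


# A tensor with exact multilinear rank (2, 3, 2) is recovered exactly.
rng = np.random.default_rng(2)
core_true = rng.standard_normal((2, 3, 2))
factors_true = [rng.standard_normal((6, 2)), rng.standard_normal((5, 3)),
                rng.standard_normal((8, 2))]
X = tucker_reconstruct(core_true, factors_true)

core, factors = truncated_hosvd(X, (2, 3, 2))
print(core.shape, np.allclose(tucker_reconstruct(core, factors), X))  # (2, 3, 2) True
```

For real hyperspectral data, whose multilinear rank is not exactly low, truncated HOSVD gives an approximation rather than an exact recovery.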

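Tensor Train decomposition [47] represents a higher-order tensor as a chain of small third-order core tensors. In hyperspectral image fusion, each core corresponds to one mode of the image (spatial or spectral). The expression of Tensor Train decomposition is

$$\mathcal{X}(i_1, i_2, \ldots, i_N) = \mathcal{G}_1(i_1)\,\mathcal{G}_2(i_2) \cdots \mathcal{G}_N(i_N),$$

where $\mathcal{G}_n \in \mathbb{R}^{R_{n-1} \times I_n \times R_n}$, $\mathcal{G}_n(i_n) \in \mathbb{R}^{R_{n-1} \times R_n}$ is the $i_n$th lateral slice of $\mathcal{G}_n$, and $n = 1, \ldots, N$. The rank of the Tensor Train is defined as $[R_0, R_1, \ldots, R_N]$, with $R_0 = R_N = 1$.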
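To make the slice-product formula concrete, the sketch below evaluates a single entry of a Tensor Train as a product of lateral slices and, separately, contracts all cores into the full tensor with `numpy.tensordot`. Core sizes and ranks are arbitrary choices for the illustration; both boundary ranks are 1, as the definition requires.

```python
import numpy as np


def tt_entry(cores, index):
    """X(i1, ..., iN) = G1(i1) G2(i2) ... GN(iN), each core of shape (R_{n-1}, I_n, R_n)."""
    product = np.eye(1)
    for core, i in zip(cores, index):
        product = product @ core[:, i, :]  # lateral slice G_n(i_n), shape (R_{n-1}, R_n)
    return product[0, 0]                   # R_0 = R_N = 1, so the product is 1 x 1


def tt_full(cores):
    """Contract neighboring rank indices to obtain the full tensor."""
    full = cores[0]                                        # (1, I_1, R_1)
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))  # join the shared R_n index
    return full[0, ..., 0]                                 # drop the size-1 boundaries


rng = np.random.default_rng(3)
ranks = [1, 2, 3, 1]  # [R_0, R_1, R_2, R_3]
sizes = [6, 5, 8]     # [I_1, I_2, I_3]
cores = [rng.standard_normal((ranks[n], sizes[n], ranks[n + 1])) for n in range(3)]

X = tt_full(cores)
print(X.shape, np.isclose(X[2, 1, 4], tt_entry(cores, (2, 1, 4))))  # (6, 5, 8) True
```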

2.3. Tensor Ring Decomposition

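Tensor ring decomposition represents a higher-order tensor as the cyclic (ring) product of a sequence of third-order core tensors. It extends traditional decompositions such as CP and Tucker decomposition; in particular, it generalizes Tensor Train decomposition, which corresponds to a tensor ring whose closing rank equals 1. Each core is associated with one mode of the original tensor, and the original tensor is recovered by interconnecting the cores. The expression of tensor ring decomposition is

$$\mathcal{X}(i_1, i_2, \ldots, i_N) = \operatorname{Tr}\left(\mathcal{G}_1(i_1)\,\mathcal{G}_2(i_2) \cdots \mathcal{G}_N(i_N)\right),$$

where $\operatorname{Tr}(\cdot)$ denotes the matrix trace and $\mathcal{G}_n \in \mathbb{R}^{R_n \times I_n \times R_{n+1}}$. The rank of the tensor ring is defined as $[R_1, R_2, \ldots, R_N]$, with $R_{N+1} = R_1$. Here $\mathcal{G}_n(i_n) \in \mathbb{R}^{R_n \times R_{n+1}}$ is the $i_n$th lateral slice matrix of the core tensor $\mathcal{G}_n$, so the HRI under tensor ring decomposition can also be expressed as

$$\mathcal{X}(w, h, s) = \operatorname{Tr}\left(\mathcal{G}_1(w)\,\mathcal{G}_2(h)\,\mathcal{G}_3(s)\right),$$

where $\mathcal{G}_1 \in \mathbb{R}^{R_1 \times W \times R_2}$, $\mathcal{G}_2 \in \mathbb{R}^{R_2 \times H \times R_3}$, and $\mathcal{G}_3 \in \mathbb{R}^{R_3 \times S \times R_1}$. In matrix form, the tensor ring satisfies $X_{<n>} = G_{n(2)}\left(G^{\neq n}_{[2]}\right)^{\top}$, where $X_{<n>}$ is the mode-$n$ unfolding of $\mathcal{X}$ whose remaining indices are ordered cyclically as $i_{n+1}, \ldots, i_N, i_1, \ldots, i_{n-1}$, $G_{n(2)}$ is the mode-2 unfolding of $\mathcal{G}_n$, and $G^{\neq n}_{[2]}$ is the mode-2 unfolding of the subchain tensor obtained by merging all cores except $\mathcal{G}_n$. The main advantage of tensor ring decomposition is that its storage and computational costs remain low for high-dimensional data: the cores contain $\sum_{n} R_n I_n R_{n+1}$ parameters, which grows linearly with the order $N$, instead of the $\prod_{n} I_n$ entries of the full tensor. It also represents local structure and dependencies in high-dimensional data well, so it can process such data efficiently and extract useful information.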
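The trace formula for a third-order tensor ring can be written as a single `numpy.einsum` contraction in which the last rank index of $\mathcal{G}_3$ closes the ring back onto $\mathcal{G}_1$. The sketch below, with arbitrary sizes and ranks, checks one entry against the slice-product trace, illustrates the cyclic property (rotating the cores only permutes the modes of the result), and compares the parameter count with the size of the full tensor.

```python
import numpy as np


def tr_full(G1, G2, G3):
    """X(w, h, s) = Tr(G1(w) G2(h) G3(s)) for cores of shapes
    (R1, W, R2), (R2, H, R3), and (R3, S, R1)."""
    return np.einsum("awb,bhc,csa->whs", G1, G2, G3)


rng = np.random.default_rng(4)
W, H, S = 40, 40, 30
R1, R2, R3 = 2, 3, 4
G1 = rng.standard_normal((R1, W, R2))
G2 = rng.standard_normal((R2, H, R3))
G3 = rng.standard_normal((R3, S, R1))
X = tr_full(G1, G2, G3)

# One entry computed directly from the lateral slices.
w, h, s = 3, 2, 7
entry = np.trace(G1[:, w, :] @ G2[:, h, :] @ G3[:, s, :])
print(np.isclose(X[w, h, s], entry))  # True

# Cyclically shifting the cores gives the same data with modes ordered (H, S, W).
print(np.allclose(tr_full(G2, G3, G1), X.transpose(1, 2, 0)))  # True

n_params = G1.size + G2.size + G3.size  # 240 + 480 + 240
print(n_params, X.size)  # 960 48000
```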

2.4. Graph Regularization

Graph regularization [48] is a graph-theory-based regularization method for data with an underlying graph structure, such as social networks, images, videos, and speech. Its main aim is to improve model performance by introducing structural information from the graph into the model: it establishes connections between data samples and uses these connections to enhance the performance and robustness of the learning algorithm.

For a graph $G = (V, E)$, where $V$ is the set of vertices and $E$ is the set of edges, let $W$ be a symmetric weight matrix whose element $w_{ij}$ denotes the weight of the edge between vertices $i$ and $j$. Define the degree matrix $D$ as a diagonal matrix whose element $d_{ii} = \sum_{j} w_{ij}$ denotes the degree of vertex $i$ (the sum of the weights of the edges connected to it). The symmetric normalized Laplacian matrix $L_{\mathrm{sym}}$ is defined as

$$L_{\mathrm{sym}} = I - D^{-1/2} W D^{-1/2},$$

where $I$ is the identity matrix and $D^{-1/2}$ is the diagonal matrix whose entries are the reciprocals of the positive square roots of the diagonal entries of $D$. The symmetric normalized Laplacian matrix has the following properties. Symmetry: $L_{\mathrm{sym}}$ is symmetric, i.e., $L_{\mathrm{sym}}^{\top} = L_{\mathrm{sym}}$. Normalization: each weight $w_{ij}$ is scaled by $1/\sqrt{d_{ii} d_{jj}}$, so the influence of each vertex is normalized by its degree.
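The definition above translates directly into NumPy. The sketch assumes a dense, symmetric, non-negative weight matrix; how the weights are obtained (for example from pixel distances or spectral similarity) is left open here, and isolated vertices (zero degree) are given a zero entry in $D^{-1/2}$ as an assumed convention. The checks confirm symmetry, that the eigenvalues lie in $[0, 2]$, and that $D^{1/2}\mathbf{1}$ lies in the null space of $L_{\mathrm{sym}}$ for this connected example graph.

```python
import numpy as np


def sym_normalized_laplacian(weights):
    """L_sym = I - D^{-1/2} W D^{-1/2} for a symmetric, non-negative weight matrix."""
    weights = np.asarray(weights, dtype=float)
    degrees = weights.sum(axis=1)            # d_ii = sum_j w_ij
    inv_sqrt = np.zeros_like(degrees)        # diagonal of D^{-1/2}
    connected = degrees > 0                  # assumed convention: isolated vertices -> 0
    inv_sqrt[connected] = 1.0 / np.sqrt(degrees[connected])
    # Scaling rows and columns by d^{-1/2} equals D^{-1/2} W D^{-1/2} without forming D.
    return np.eye(len(weights)) - inv_sqrt[:, None] * weights * inv_sqrt[None, :]


W = np.array([[0.0, 1.0, 0.5, 0.0],
              [1.0, 0.0, 1.0, 0.0],
              [0.5, 1.0, 0.0, 2.0],
              [0.0, 0.0, 2.0, 0.0]])
L = sym_normalized_laplacian(W)

eigenvalues = np.linalg.eigvalsh(L)
print(np.allclose(L, L.T))                                         # True
print(eigenvalues.min() > -1e-10, eigenvalues.max() < 2 + 1e-10)   # True True
print(np.allclose(L @ np.sqrt(W.sum(axis=1)), 0.0))                # True
```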

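In this paper, the spatial graph is denoted as $G_{\mathrm{spa}}$, and its symmetric normalized Laplacian matrix can be written as

$$L_{\mathrm{spa}} = I_{\mathrm{spa}} - D_{\mathrm{spa}}^{-1/2} W_{\mathrm{spa}} D_{\mathrm{spa}}^{-1/2}.$$

The spectral graph is denoted as $G_{\mathrm{spe}}$, and its symmetric normalized Laplacian matrix can be written as

$$L_{\mathrm{spe}} = I_{\mathrm{spe}} - D_{\mathrm{spe}}^{-1/2} W_{\mathrm{spe}} D_{\mathrm{spe}}^{-1/2},$$

where $I_{\mathrm{spa}}$ and $I_{\mathrm{spe}}$ are identity matrices of matching sizes.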

The spatial Laplacian $L_{\mathrm{spa}}$ encodes the similarity between pixels in a local region, which can be defined from the distance between pixels or other similarity metrics; it therefore captures spatial features such as textures, details, and edges. Constraining the reconstruction with $L_{\mathrm{spa}}$ helps the super-resolution algorithm preserve and reconstruct these spatial features, producing a clearer and more detailed high-resolution image. The spectral Laplacian $L_{\mathrm{spe}}$ captures the spectral features of the image, i.e., the correlation and consistency between different bands. Constraining the reconstruction with $L_{\mathrm{spe}}$ keeps the synthesized high-resolution image consistent with the original image in terms of spectral features, preserving its color accuracy and spectral detail. Together, the spatial term preserves texture, details, and edges, while the spectral term maintains spectral accuracy and consistency; combining them improves the reconstruction ability of the super-resolution algorithm and yields more faithful high-resolution images.

Graph regularization involves constructing a graph for the hyperspectral image, which models the relationships between adjacent pixels. These relationships can encompass spatial positions, spectral similarities, and more. By introducing regularization terms defined on the graph, the output of the super-resolution algorithm can be constrained, promoting smoother and more coherent high-resolution images in both spatial and spectral aspects. Additionally, graph regularization helps maintain the image’s structural and textural features, preventing the generation of overly smooth or distorted images.
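A common way to turn a Laplacian into a regularization term is the trace penalty $\operatorname{tr}(U^{\top} L U)$, which is small when rows of $U$ joined by heavily weighted edges are similar. The sketch below is illustrative only: the chain graph with unit weights (standing in for neighboring positions along one spatial mode), the factor matrices, and the helper `graph_penalty` are hypothetical choices for the example and are not the exact terms of the proposed model.

```python
import numpy as np


def sym_normalized_laplacian(weights):
    """L = I - D^{-1/2} W D^{-1/2}; assumes every vertex has at least one edge."""
    inv_sqrt = 1.0 / np.sqrt(weights.sum(axis=1))
    return np.eye(len(weights)) - inv_sqrt[:, None] * weights * inv_sqrt[None, :]


def graph_penalty(U, L):
    """Hypothetical helper: tr(U^T L U), small when linked rows of U are similar."""
    return float(np.trace(U.T @ L @ U))


# Hypothetical graph: 50 nodes (e.g. neighboring positions along one spatial mode)
# linked in a chain with unit weights.
n = 50
weights = np.zeros((n, n))
idx = np.arange(n - 1)
weights[idx, idx + 1] = 1.0
weights[idx + 1, idx] = 1.0
L = sym_normalized_laplacian(weights)

t = np.linspace(0.0, np.pi, n)
U_smooth = np.column_stack([np.cos(t), np.sin(t)])          # varies slowly along the chain
U_noisy = np.random.default_rng(8).standard_normal((n, 2))  # varies erratically

print(graph_penalty(U_smooth, L) < graph_penalty(U_noisy, L))  # True
```

Minimizing such a penalty alongside the data terms therefore pushes the solution toward factors that vary smoothly over the graph.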

The objective of hyperspectral image super-resolution is to recover a high-resolution hyperspectral image from a low-resolution hyperspectral image (here, with the help of a high-resolution multispectral image), enhancing image details and spatial resolution. Graph regularization supports this objective by exploiting the spatial and spectral characteristics of hyperspectral images through the connections in the constructed graph, which introduces additional constraints and prior information that improve the performance of super-resolution algorithms.

2.5. Spatial Smoothing Constraints

The spatial smoothing constraint is a regularization method commonly used in image processing and computer vision. It smooths an image by constraining neighboring pixels and limiting the gradient changes of the image. For an image tensor $\mathcal{G}$, the spatial smoothing constraint is written as

$$\phi(\mathcal{G}) = \sum_{j} \sum_{(m, n) \in \mathcal{N}} \left(g_{mj} - g_{nj}\right)^2.$$

Here, $\mathcal{N}$ represents the set of all pairs of neighboring elements in the graph, the matrix $G$ is obtained by unfolding $\mathcal{G}$ along the spectral mode, and $g_{mj}$ denotes the $m$th element of the $j$th column of $G$. A smaller value of this term means smaller differences between adjacent pixels, which yields smoother transitions while preserving the inherent textures and structures, and it reduces noise and irregularities in the image. Imposing the spatial smoothing constraint encourages the model to produce more uniform results. This reduces the risk of overfitting to noise or localized details in the training data and stabilizes the optimization, making it more likely that the iterations reach a good local optimum and ultimately improving generalization and the optimization results.
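The smoothing term can be evaluated directly from its definition. In the sketch below, the neighbor set $\mathcal{N}$ is assumed, for illustration only, to contain consecutive rows $(m, m+1)$ of the unfolded matrix $G$, as for adjacent pixels along one spatial mode. Under that assumption the term equals $\|\Delta G\|_F^2$ with a first-order difference matrix $\Delta$, a form that is convenient inside least-squares updates. The helper names are hypothetical.

```python
import numpy as np


def smoothness_penalty(G, pairs):
    """Sum over columns j and neighbor pairs (m, n) of (g_mj - g_nj)^2."""
    m, n = np.asarray(pairs).T
    return float(np.sum((G[m, :] - G[n, :]) ** 2))


def first_difference_matrix(rows):
    """(rows - 1) x rows matrix whose i-th row is e_{i+1} - e_i."""
    return np.eye(rows - 1, rows, k=1) - np.eye(rows - 1, rows)


rng = np.random.default_rng(5)
G = rng.standard_normal((10, 4))        # rows: elements, columns: unfolding columns
pairs = [(m, m + 1) for m in range(9)]  # assumed neighbor set: consecutive rows
Delta = first_difference_matrix(10)

print(np.isclose(smoothness_penalty(G, pairs), np.linalg.norm(Delta @ G) ** 2))  # True

# Rows that are all identical have no differences, so the penalty vanishes.
G_flat = np.tile(rng.standard_normal(4), (10, 1))
print(smoothness_penalty(G_flat, pairs))  # 0.0
```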

2.6. Proposed Method

In hyperspectral image super-resolution, the relationship between the HRI and the HSI can be modeled as spatial downsampling: the HRI is downsampled along both spatial dimensions to produce the HSI. The generation of the HSI can therefore be expressed as

$$\mathcal{Y} = \mathcal{X} \times_1 P_1 \times_2 P_2,$$

where $\mathcal{Y}$ represents the HSI, and $P_1 \in \mathbb{R}^{w \times W}$ and $P_2 \in \mathbb{R}^{h \times H}$ are spatial downsampling matrices. Similarly, the MSI can be regarded as the result of spectral downsampling and represented as

$$\mathcal{Z} = \mathcal{X} \times_3 P_3,$$

where $\mathcal{Z}$ represents the MSI and $P_3 \in \mathbb{R}^{s \times S}$ represents the spectral downsampling matrix.
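The two observation models can be simulated to generate HSI/MSI pairs from a known HRI, as is common in simulated experiments. The sketch below assumes that $P_1$ and $P_2$ average non-overlapping $4 \times 4$ blocks (real pipelines usually blur with a Gaussian kernel before decimation) and that $P_3$ is a random non-negative spectral response whose rows sum to one; these choices and the helper names are hypothetical.

```python
import numpy as np


def mode_n_product(tensor, matrix, mode):
    """tensor x_n matrix: contract the columns of `matrix` with axis `mode`."""
    out = np.tensordot(matrix, tensor, axes=([1], [mode]))  # new axis comes first
    return np.moveaxis(out, 0, mode)                         # move it back into place


def block_average(full_size, factor):
    """Hypothetical downsampling matrix that averages non-overlapping blocks."""
    P = np.zeros((full_size // factor, full_size))
    for i in range(full_size // factor):
        P[i, i * factor:(i + 1) * factor] = 1.0 / factor
    return P


rng = np.random.default_rng(7)
W, H, S = 16, 12, 30   # HRI size: width, height, bands
ratio, s = 4, 4        # assumed spatial ratio and number of MSI bands
X = rng.random((W, H, S))            # stand-in for the HRI

P1 = block_average(W, ratio)         # (w, W) = (4, 16)
P2 = block_average(H, ratio)         # (h, H) = (3, 12)
P3 = rng.random((s, S))
P3 /= P3.sum(axis=1, keepdims=True)  # assumed: each MSI band is a weighted mean of HRI bands

Y = mode_n_product(mode_n_product(X, P1, 0), P2, 1)  # HSI: X x1 P1 x2 P2
Z = mode_n_product(X, P3, 2)                          # MSI: X x3 P3
print(Y.shape, Z.shape)  # (4, 3, 30) (16, 12, 4)
```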

The proposed hyperspectral image super-resolution algorithm based on graph-regularized tensor ring decomposition combines graph regularization with tensor ring decomposition. Graph regularization captures the structural information in the image, while tensor ring decomposition extracts latent spectral and spatial features. In addition, spatial smoothness constraints are applied to the spatial cores to further improve reconstruction. The specific formulation of the algorithm is as follows:

where $\|\cdot\|_F$ denotes the Frobenius norm, the three weighting coefficients are regularization parameters, and ⊗ denotes the Kronecker product. Two symmetric normalized Laplacian matrices appear in the graph regularization terms, and two further terms represent the spatial smoothness constraints. Through a multi-stage optimization framework, the algorithm progressively refines the cores $\mathcal{G}_1$, $\mathcal{G}_2$, and $\mathcal{G}_3$ to achieve hyperspectral image super-resolution reconstruction. By combining graph regularization, tensor ring decomposition, and spatial smoothness constraints, the algorithm better integrates the structure and features of hyperspectral images, which improves reconstruction quality and helps preserve image details and features.
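Because the regularization terms depend on details of the objective that are not reproduced here, the sketch below evaluates only the two data-fidelity terms implied by the observation models, $\|\mathcal{Y} - \mathcal{X} \times_1 P_1 \times_2 P_2\|_F^2 + \|\mathcal{Z} - \mathcal{X} \times_3 P_3\|_F^2$, with $\mathcal{X}$ assembled from tensor ring cores $\mathcal{G}_1$, $\mathcal{G}_2$, $\mathcal{G}_3$. It is not the full GRTR objective: the graph and smoothness terms and their weights are omitted, and all sizes and matrices are arbitrary. It also checks a property that makes the tensor ring format convenient here: spatial degradation acts only on the first two cores.

```python
import numpy as np


def tr_full(G1, G2, G3):
    """X(w, h, s) = Tr(G1(w) G2(h) G3(s))."""
    return np.einsum("awb,bhc,csa->whs", G1, G2, G3)


def data_fidelity(G1, G2, G3, Y, Z, P1, P2, P3):
    """||Y - X x1 P1 x2 P2||_F^2 + ||Z - X x3 P3||_F^2 with X = TR(G1, G2, G3).

    Data terms only; the graph and smoothness regularizers are omitted.
    """
    X = tr_full(G1, G2, G3)
    Y_hat = np.einsum("whs,aw,bh->abs", X, P1, P2)  # spatial degradation
    Z_hat = np.einsum("whs,cs->whc", X, P3)          # spectral degradation
    return float(np.sum((Y - Y_hat) ** 2) + np.sum((Z - Z_hat) ** 2))


rng = np.random.default_rng(6)
W, H, S, w, h, s = 8, 6, 20, 4, 3, 4
R1, R2, R3 = 2, 3, 3
G1 = rng.standard_normal((R1, W, R2))
G2 = rng.standard_normal((R2, H, R3))
G3 = rng.standard_normal((R3, S, R1))
P1, P2, P3 = rng.random((w, W)), rng.random((h, H)), rng.random((s, S))

# Observations generated from the cores themselves give (numerically) zero loss.
X = tr_full(G1, G2, G3)
Y = np.einsum("whs,aw,bh->abs", X, P1, P2)
Z = np.einsum("whs,cs->whc", X, P3)
print(np.isclose(data_fidelity(G1, G2, G3, Y, Z, P1, P2, P3), 0.0))  # True

# Spatial degradation only changes G1 and G2: X x1 P1 x2 P2 = TR(G1 x2 P1, G2 x2 P2, G3).
G1_low = np.einsum("awb,iw->aib", G1, P1)
G2_low = np.einsum("bhc,jh->bjc", G2, P2)
print(np.allclose(tr_full(G1_low, G2_low, G3), Y))  # True
```

The last check is why low-resolution observations can be compared with the model without ever forming the full HRI inside an optimization loop.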

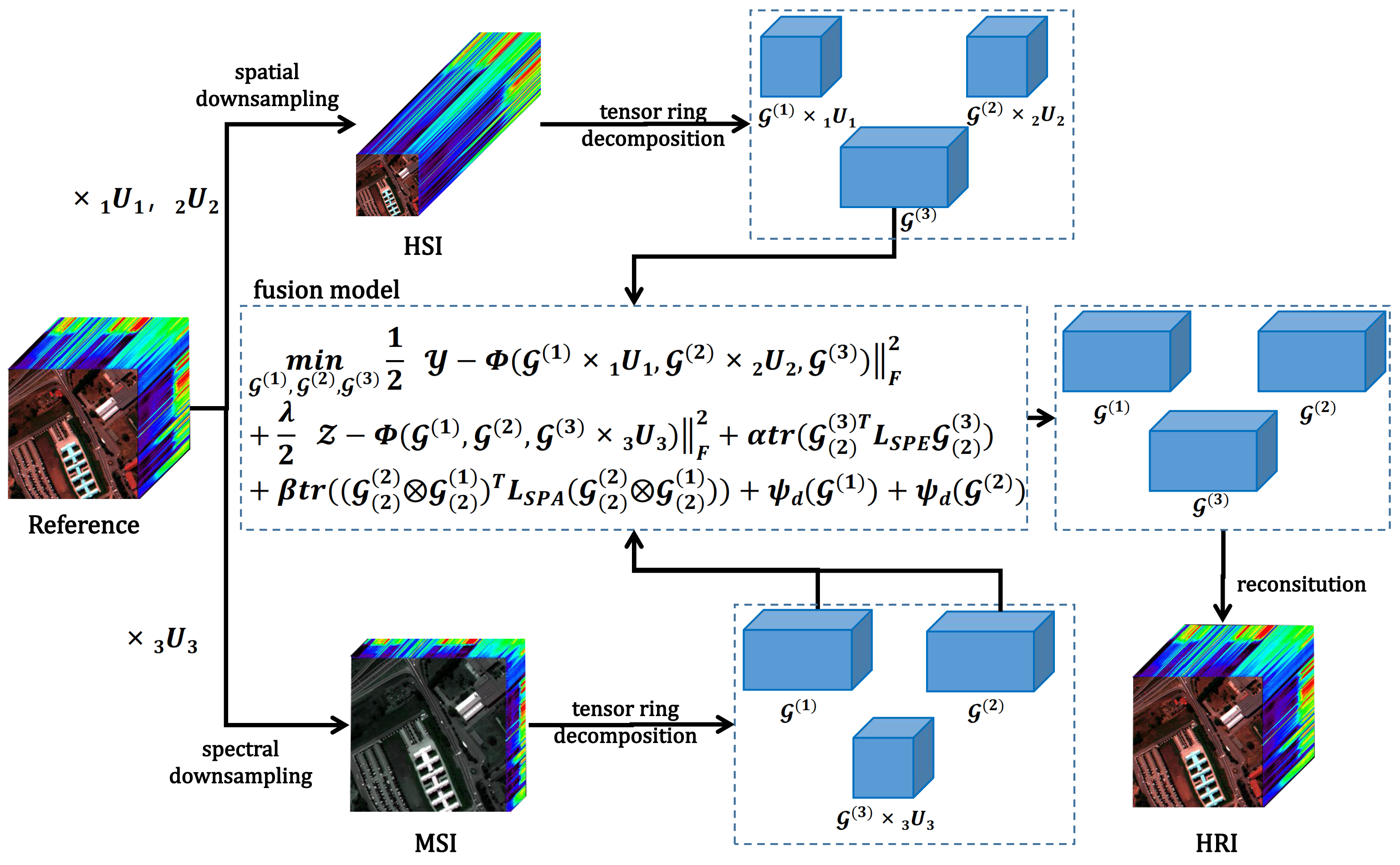

Figure 1 illustrates the overall framework of the algorithm, which comprises data preprocessing, graph construction, tensor ring decomposition, objective function formulation, and super-resolution reconstruction. Starting from the HRI, data preprocessing applies spatial and spectral downsampling to produce the corresponding HSI and MSI. Graph construction is a pivotal step: pixels in the hyperspectral and multispectral images are treated as graph nodes, and the normalized Laplacian matrix of each graph is built from the similarity and distance relationships between pixels. This integrates spatial and spectral information and provides a foundation for the subsequent regularization and optimization. Tensor ring decomposition then yields the latent feature representations $\mathcal{G}_1$, $\mathcal{G}_2$, and $\mathcal{G}_3$, which capture the underlying patterns in the data. To reconstruct the super-resolved hyperspectral image, we formulate an objective function that links these cores to the observed HSI and MSI; it contains data terms, regularization terms, and spatial smoothness constraint terms, which together improve reconstruction quality while preserving image features.

The algorithm uses a multi-stage iterative optimization process to progressively refine $\mathcal{G}_1$, $\mathcal{G}_2$, and $\mathcal{G}_3$. Each stage accounts for the graph regularization and tensor ring constraints and iteratively improves the feature representations. Finally, the super-resolved hyperspectral image is reconstructed from the optimized cores $\mathcal{G}_1$, $\mathcal{G}_2$, and $\mathcal{G}_3$ by contracting the tensor ring, which recovers the detailed information of the high-resolution image.