Fast Tailings Pond Mapping Exploiting Large Scene Remote Sensing Images by Coupling Scene Classification and Semantic Segmentation Models

Abstract

1. Introduction

2. Methodology

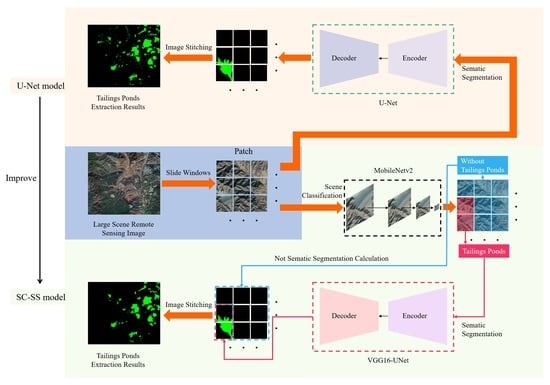

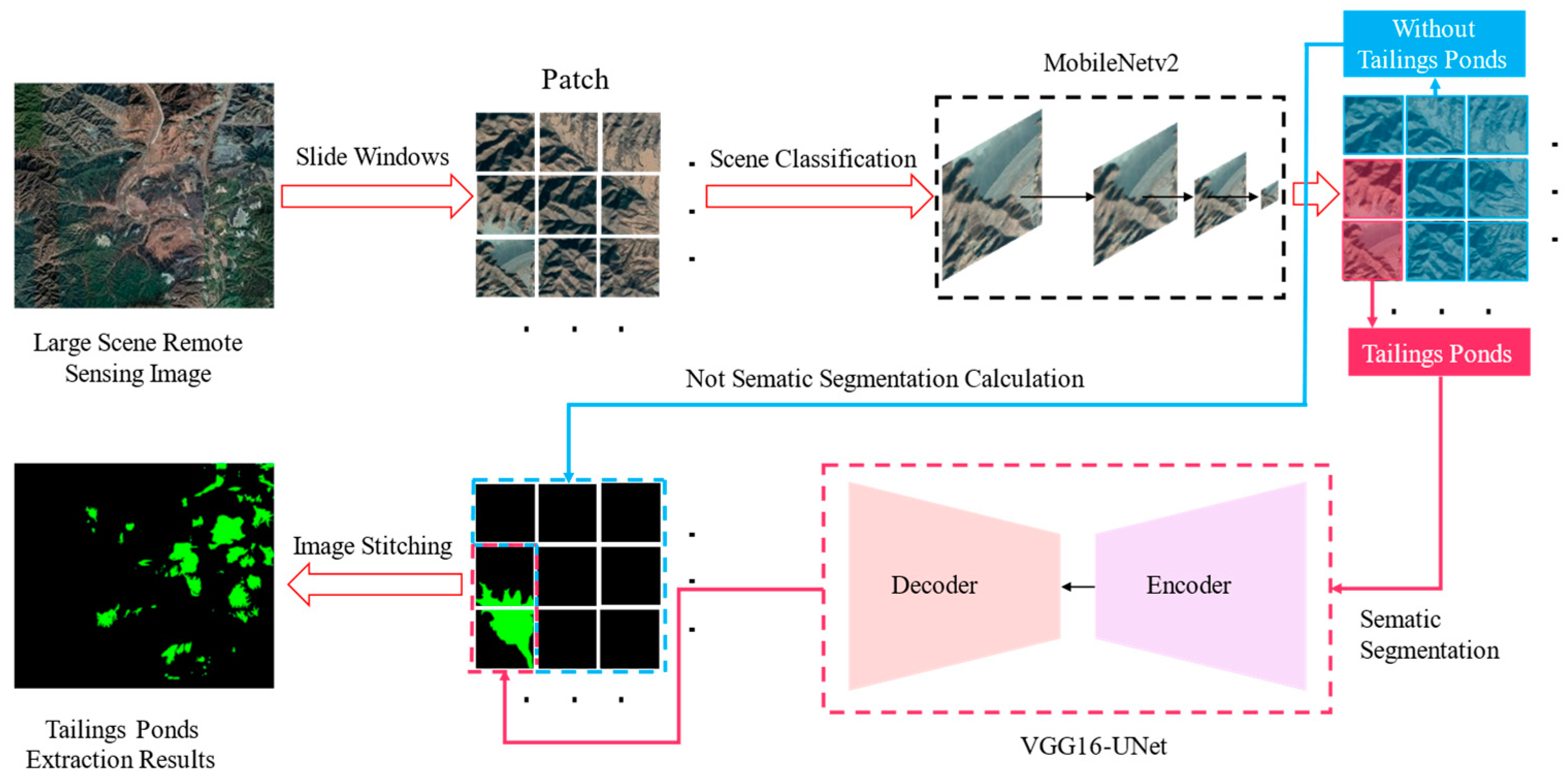

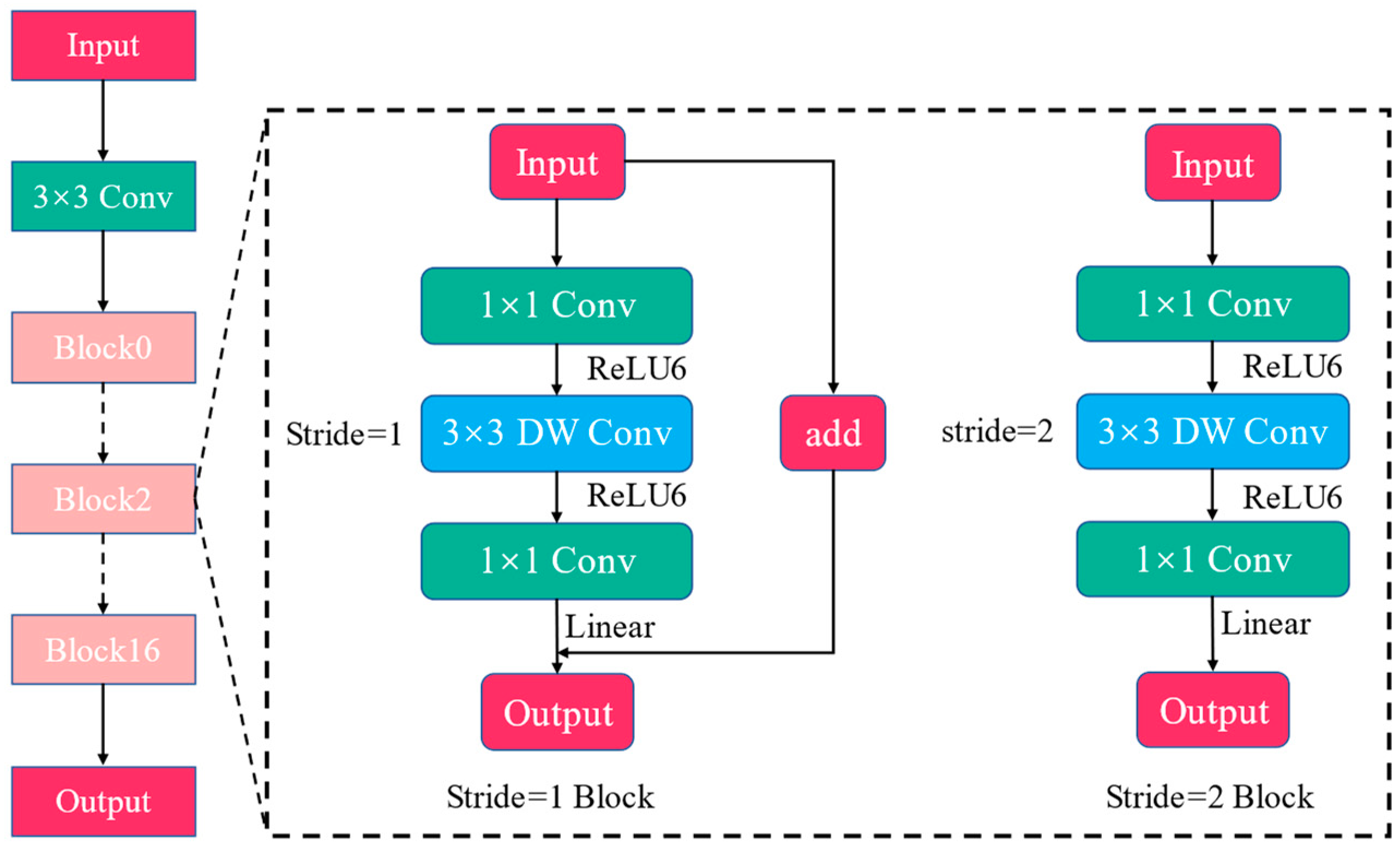

2.1. SC-SS Model

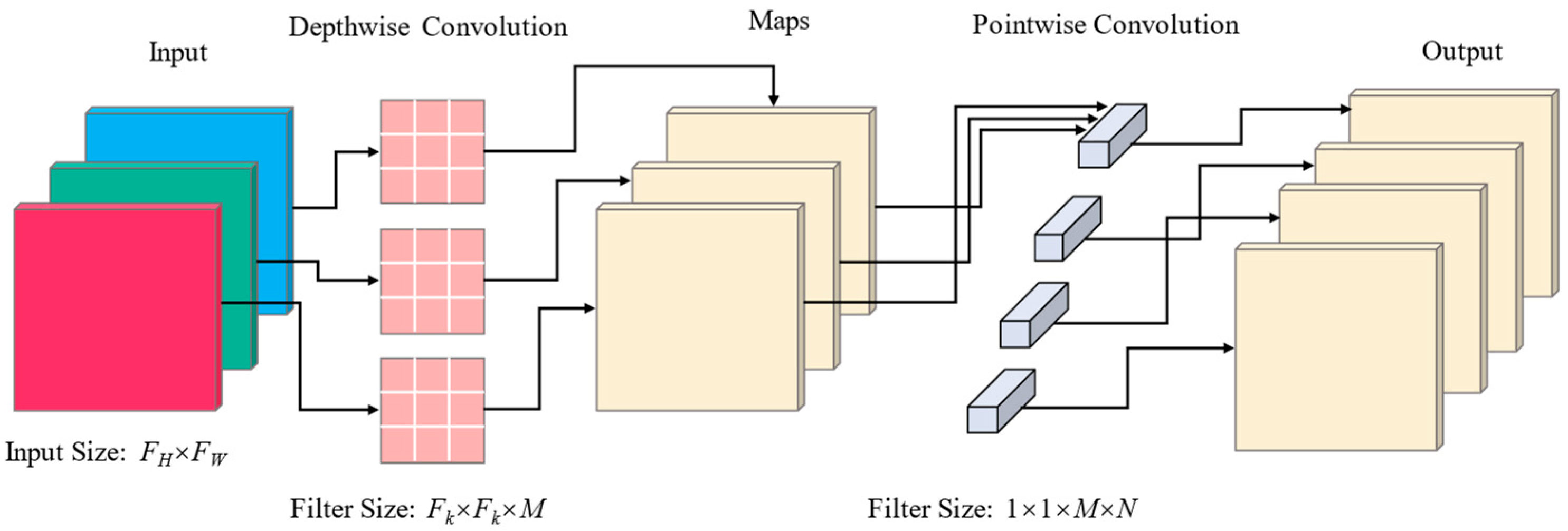
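The coupling named in the title can be sketched as a tile-wise, two-stage loop: a scene classifier screens each tile of a large scene, and the slower segmentation model runs only on the tiles it flags. The following is a minimal Python/NumPy sketch under stated assumptions, not the authors' implementation: the tile size, the zero-padding of edge tiles, and the callables `classify` and `segment` are hypothetical stand-ins for a trained scene classifier (such as the MobileNetv2 of Section 3.3.1) and a segmentation network (such as the VGG16-UNet of Section 3.3.2).

```python
from typing import Callable, Tuple

import numpy as np


def sc_ss_map(
    image: np.ndarray,
    tile: int,
    classify: Callable[[np.ndarray], bool],   # hypothetical scene classifier
    segment: Callable[[np.ndarray], np.ndarray],  # hypothetical segmenter
) -> Tuple[np.ndarray, int]:
    """Map a large scene in two stages: a scene classifier screens each tile,
    and the segmentation model runs only on tiles flagged as containing ponds."""
    h, w = image.shape[:2]
    # Zero-pad the bottom/right edges so both sides are multiples of `tile`.
    pad = [(0, (-h) % tile), (0, (-w) % tile)] + [(0, 0)] * (image.ndim - 2)
    padded = np.pad(image, pad)
    mask = np.zeros(padded.shape[:2], dtype=np.uint8)
    segmented = 0
    for r in range(0, padded.shape[0], tile):
        for c in range(0, padded.shape[1], tile):
            patch = padded[r:r + tile, c:c + tile]
            if classify(patch):  # stage 1: does this scene contain a tailings pond?
                mask[r:r + tile, c:c + tile] = segment(patch)  # stage 2: pixel mask
                segmented += 1
            # Tiles rejected in stage 1 keep the background label 0.
    return mask[:h, :w], segmented  # crop the padding back off


# Toy run with hypothetical stand-in models: one bright block in a 1000x1000 scene.
scene = np.zeros((1000, 1000, 3), dtype=np.uint8)
scene[600:700, 100:200, 0] = 255
mask, n_segmented = sc_ss_map(
    scene,
    512,
    classify=lambda p: bool(p.max() > 0),
    segment=lambda p: (p[..., 0] > 0).astype(np.uint8),
)
```

In this sketch only one of the four padded 512 × 512 tiles reaches stage 2, which is where the time saving comes from. The trade-off is that a pond in a tile rejected by the classifier is never segmented, so the classifier's recall caps the recall of the final map.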

2.2. Scene Classification

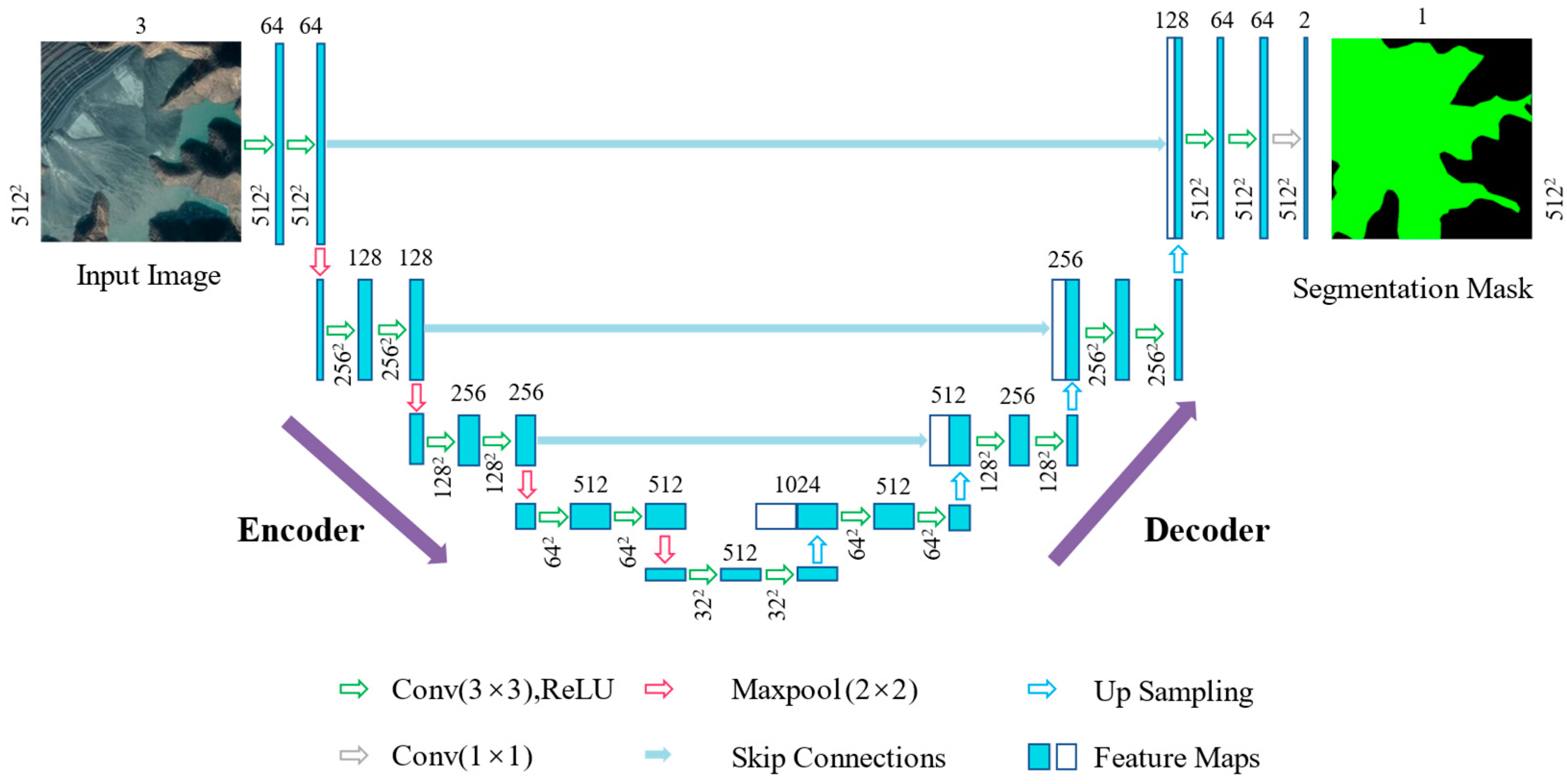

2.3. Semantic Segmentation

2.4. Evaluation Metrics
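The metrics reported in the result tables (P, R, F1, IOU) are built from the pixel counts TP, FP and FN listed in the Abbreviations. A minimal sketch of their conventional definitions for a binary pond/background mask follows, assuming the counts are pooled over all evaluated pixels; whether the paper pools counts per image or over the whole dataset is not stated in this copy, and the function names are illustrative.

```python
from dataclasses import dataclass
from typing import Tuple

import numpy as np


@dataclass
class PixelScores:
    precision: float
    recall: float
    f1: float
    iou: float


def confusion_counts(pred: np.ndarray, ref: np.ndarray) -> Tuple[int, int, int]:
    """Count TP, FP and FN pixels for binary masks (1 = tailings pond)."""
    pred, ref = pred.astype(bool), ref.astype(bool)
    tp = int(np.sum(pred & ref))    # pond predicted and pond in reference
    fp = int(np.sum(pred & ~ref))   # pond predicted but background in reference
    fn = int(np.sum(~pred & ref))   # background predicted but pond in reference
    return tp, fp, fn


def pixel_scores(tp: int, fp: int, fn: int) -> PixelScores:
    """P, R, F1 and IOU from pooled pixel counts; empty denominators give 0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return PixelScores(precision, recall, f1, iou)


pred = np.array([[1, 1], [0, 0]])
ref = np.array([[1, 0], [1, 0]])
scores = pixel_scores(*confusion_counts(pred, ref))
```

For the toy masks above, one pixel falls in each of TP, FP and FN, so P, R and F1 are all 0.5 while IOU is 1/3; IOU penalizes both error types in a single denominator and is therefore never larger than F1.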

3. Experiments and Results

3.1. Study Area and Data

3.1.1. Study Area

3.1.2. Experimental Data

3.2. Experimental Setup

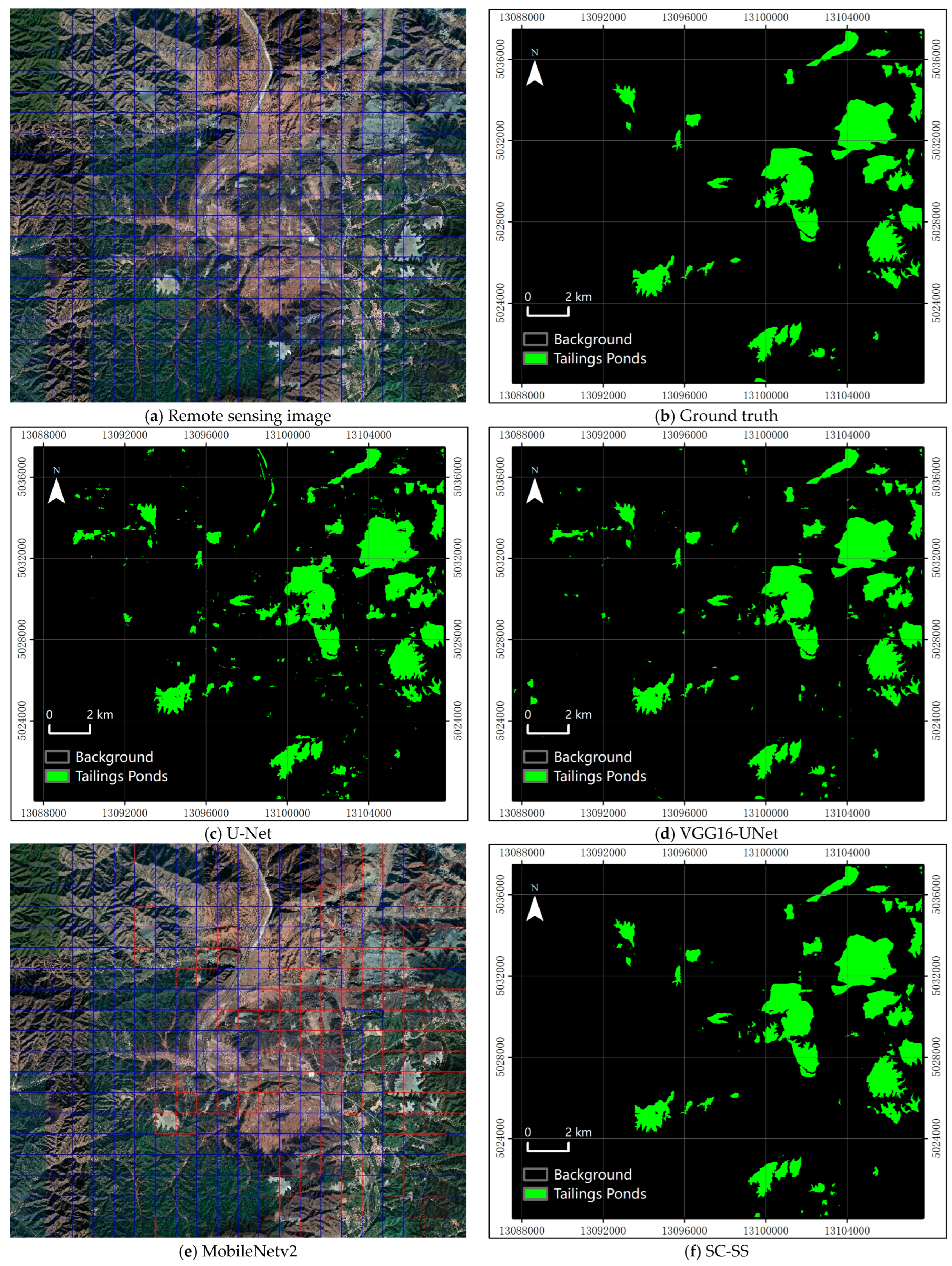

3.3. Experimental Results of the SC-SS Model

3.3.1. Tailings Pond Extraction Using MobileNetv2

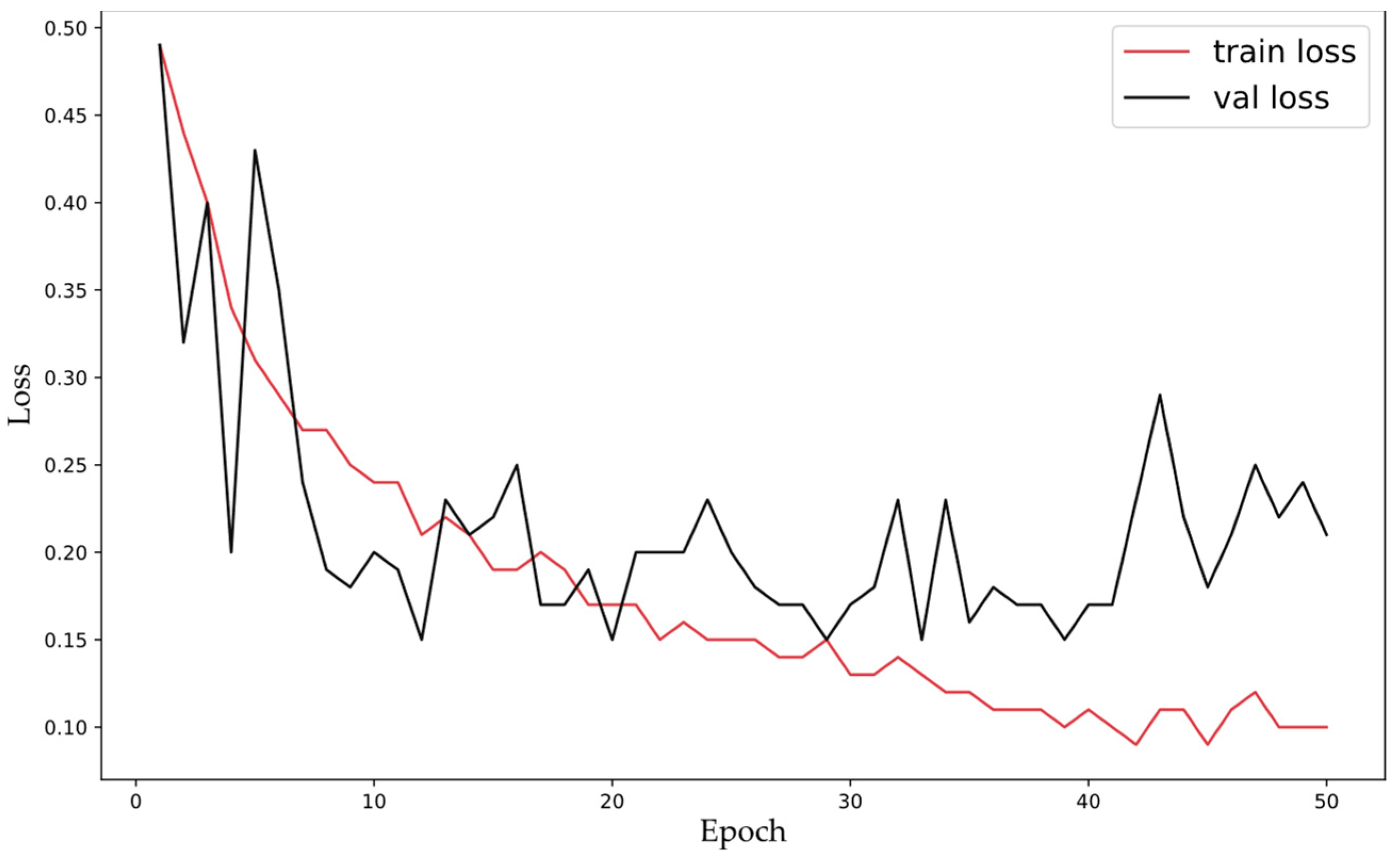

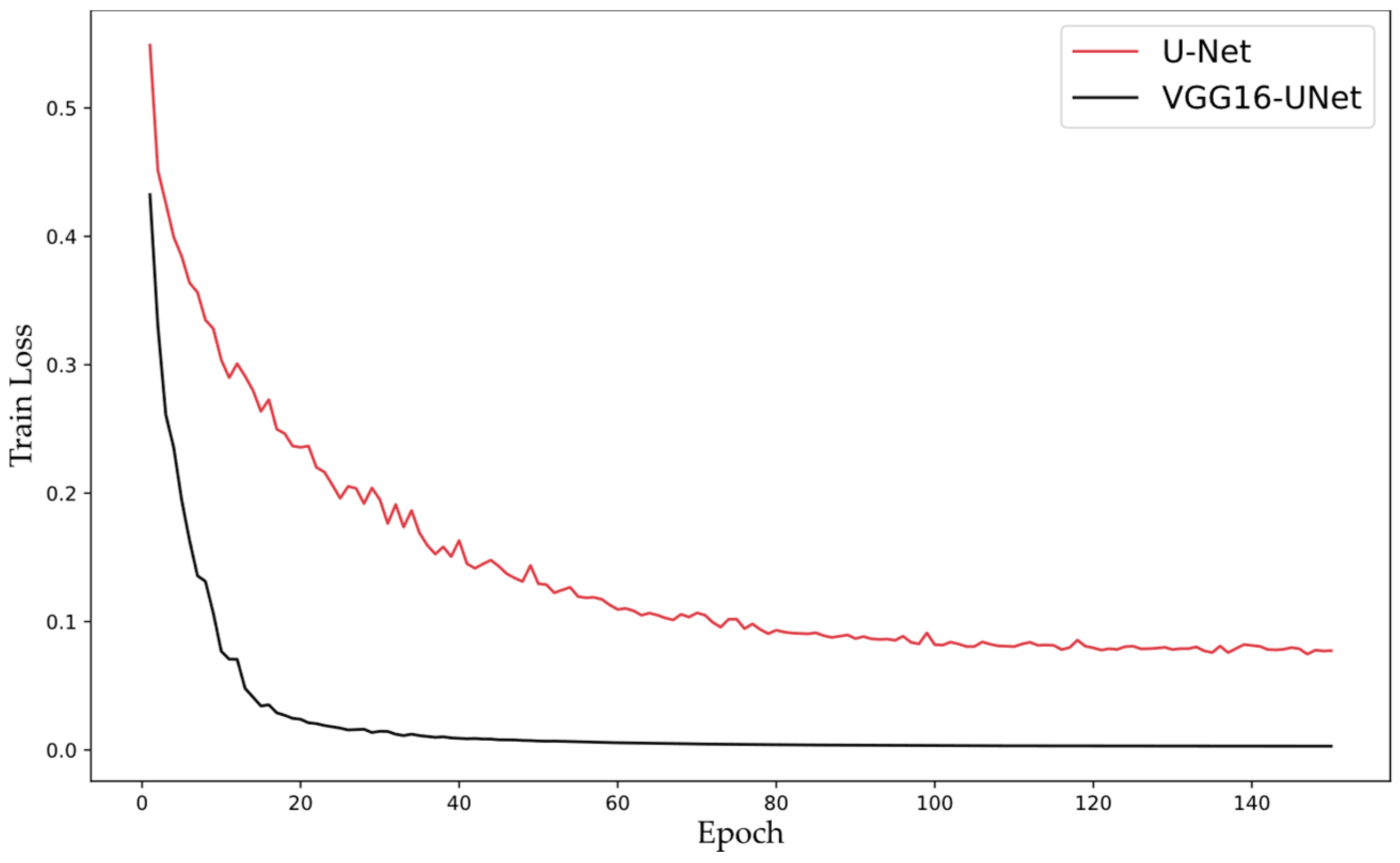

3.3.2. Tailings Pond Extraction Using VGG16-UNet

3.4. Comparison of Different Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Faster R-CNN | Faster Region-based Convolutional Neural Networks |
| FN | False Negatives |
| FP | False Positives |
| GF-6 | GaoFen-6 |
| IOU | Intersection Over Union |
| mAP | Mean Average Precision |
| ML | Maximum Likelihood |
| P | Precision |
| R | Recall |
| ReLU | Rectified Linear Unit |
| RF | Random Forest |
| SC-SS | Scene-Classification-Semantic-Segmentation |
| SSD | Single Shot Multibox Detector |
| SVM | Support Vector Machine |
| TP | True Positives |
| YOLOv4 | You Only Look Once v4 |


| Name | Params | FLOPs |
|---|---|---|
| Standard Convolution | | |
| Depthwise Separable Convolution | | |
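The cost comparison in the table above is the usual argument for MobileNet-style depthwise separable convolutions. A minimal sketch of the textbook counts follows, under assumptions not stated in this copy: kernel size `k`, `m` input channels, `n` output channels, an `h` × `w` output map, bias terms ignored, and FLOPs counted as multiply-accumulates (some authors count each multiply-accumulate as two FLOPs). It does not reproduce the paper's own entries.

```python
from typing import Tuple


def standard_conv_cost(k: int, m: int, n: int, h: int, w: int) -> Tuple[int, int]:
    """Weights and multiply-accumulates of a k x k convolution mapping m -> n
    channels onto an h x w output map (bias ignored)."""
    params = k * k * m * n
    macs = params * h * w  # every weight is applied once per output position
    return params, macs


def depthwise_separable_cost(k: int, m: int, n: int, h: int, w: int) -> Tuple[int, int]:
    """Same budget for a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * m  # one k x k filter per input channel
    pointwise = m * n      # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    macs = params * h * w
    return params, macs


std = standard_conv_cost(3, 32, 64, 112, 112)
dws = depthwise_separable_cost(3, 32, 64, 112, 112)
ratio = dws[1] / std[1]  # equals 1/n + 1/k**2 for these formulas
```

With 3 × 3 kernels the separable version needs roughly 1/9 + 1/n of the standard cost, which is why the saving approaches a factor of about eight to nine as the number of output channels grows.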

| Model | P | R | F1 | IOU | Input Size | Time (s) |
|---|---|---|---|---|---|---|
| U-Net | 94.23% | 94.85% | 94.54% | 89.64% | 512 × 512 | 0.07 |
| VGG16-UNet | 98.90% | 98.95% | 98.93% | 97.88% | 512 × 512 | 0.09 |

| Model | P | R | F1 | IOU | Time (s) |
|---|---|---|---|---|---|
| U-Net | 83.26% | 93.01% | 87.87% | 78.36% | 30.99 |
| VGG16-UNet | 91.43% | 98.14% | 94.67% | 89.88% | 36.59 |
| SC-SS | 96.26% | 97.00% | 96.63% | 93.4% | 19.92 |
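Read at face value, the runtime column of the table above quantifies the speed-up of the coupled model. The short calculation below assumes, as the table layout suggests but this copy does not state explicitly, that all three times were measured on the same image with the same hardware; it uses only the reported values. SC-SS takes about 35.7% less time than U-Net and about 45.6% less than VGG16-UNet.

```python
# Processing times (s) as reported in the preceding table.
times = {"U-Net": 30.99, "VGG16-UNet": 36.59, "SC-SS": 19.92}


def time_reduction(baseline: float, candidate: float) -> float:
    """Fractional runtime saved by `candidate` relative to `baseline`."""
    return (baseline - candidate) / baseline


for name in ("U-Net", "VGG16-UNet"):
    saved = time_reduction(times[name], times["SC-SS"])
    speedup = times[name] / times["SC-SS"]
    print(f"SC-SS vs {name}: {saved:.1%} less time, {speedup:.2f}x faster")
```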

| Model | Scenes with Tailings Ponds | Scenes without Tailings Ponds | Accuracy | Time (s) |
|---|---|---|---|---|
| MobileNetv2 | 131 | 287 | 86.60% | 8.45 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Zhao, H.; Yang, Z.; Jin, Q.; Wu, Y.; Xia, P.; Meng, L. Fast Tailings Pond Mapping Exploiting Large Scene Remote Sensing Images by Coupling Scene Classification and Sematic Segmentation Models. Remote Sens. 2023, 15, 327. https://doi.org/10.3390/rs15020327
