A Fourier Frequency Domain Convolutional Neural Network for Remote Sensing Crop Classification Considering Global Consistency and Edge Specificity

Abstract

1. Introduction

- (1)

- A convolutional neural network based on the Fourier frequency domain learning strategy is proposed, called FFDC net. This approach transforms the feature maps from the spatial domain to the Fourier frequency domain, decomposes the feature maps into low-frequency and high-frequency components in the spectral space using a dynamic frequency filtering component, and automatically adjusts the intensity and distribution of the different frequency components;

- (2)

- An analysis of the influence of the Fourier frequency domain learning strategy and the Dynamic Frequency Filtering module on the improvement of overall crop classification consistency and boundary distinction in crop classification;

- (3)

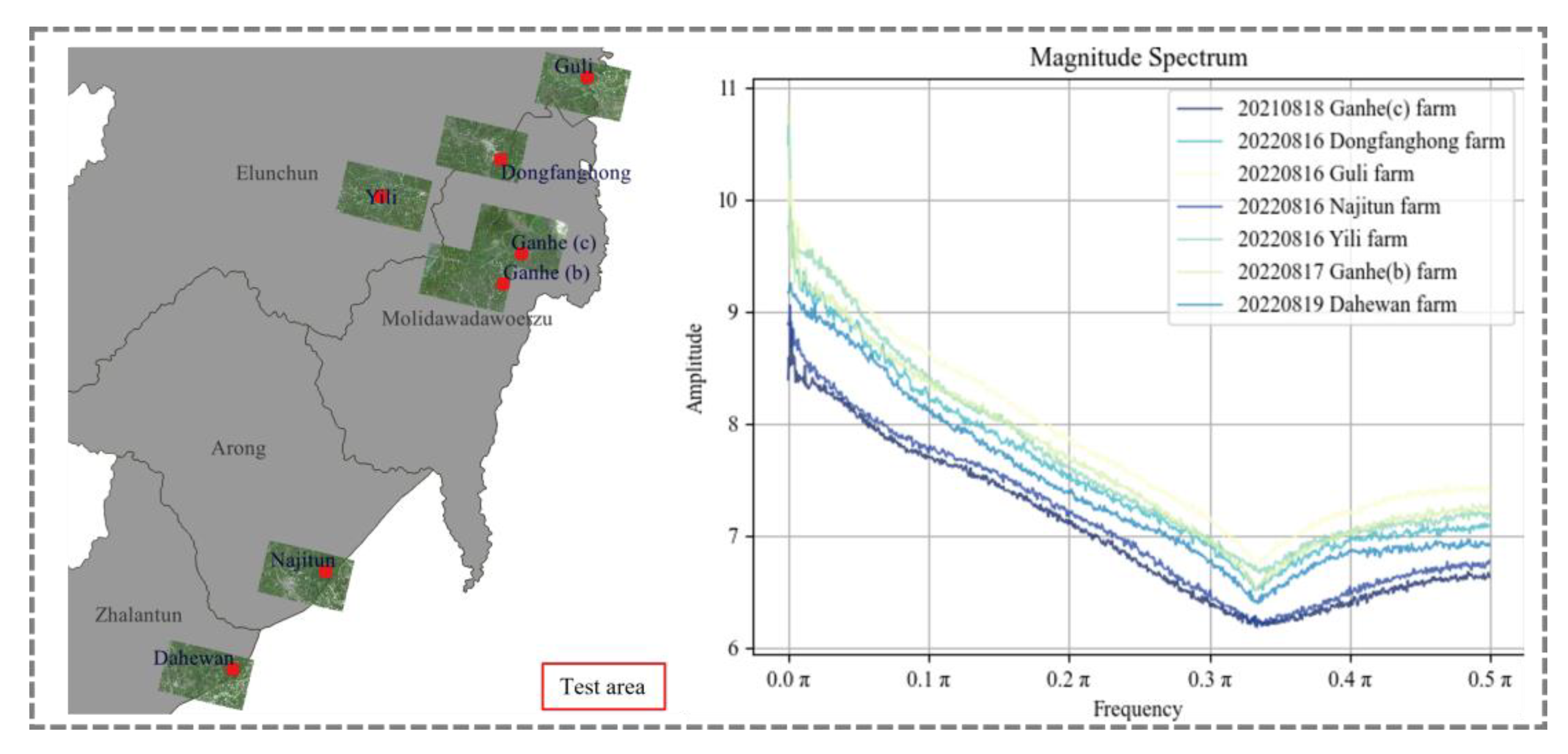

- The method’s adaptability was validated and compared through experiments in randomly selected regions from various farms in Hulunbuir, Inner Mongolia, China, and Cumberland County, the USA.
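The frequency decomposition described in contribution (1) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the radial cutoff, and the two scalar gains are hypothetical stand-ins, whereas in FFDC net the weighting of the frequency components is adjusted automatically by the dynamic frequency filtering component.

```python
import numpy as np


def radial_low_pass_mask(height, width, cutoff):
    """True where the spatial frequency (cycles/pixel) lies within `cutoff` of DC."""
    fy = np.fft.fftfreq(height)[:, None]  # vertical frequencies, shape (H, 1)
    fx = np.fft.fftfreq(width)[None, :]   # horizontal frequencies, shape (1, W)
    return np.sqrt(fy ** 2 + fx ** 2) <= cutoff


def frequency_reweight(feature_map, cutoff=0.1, low_gain=1.0, high_gain=1.0):
    """Scale the low- and high-frequency bands of a (C, H, W) feature map.

    Assumption: fixed scalar gains replace the learned, dynamic weights
    used in the paper; `cutoff` is an illustrative hyperparameter.
    """
    _, height, width = feature_map.shape
    # Spatial domain -> Fourier frequency domain, per channel.
    spectrum = np.fft.fft2(feature_map, axes=(-2, -1))
    low = radial_low_pass_mask(height, width, cutoff)
    # One gain per frequency bin: low_gain inside the cutoff, high_gain outside.
    gains = np.where(low, low_gain, high_gain)
    # Back to the spatial domain; the mask is symmetric, so the result is real.
    return np.fft.ifft2(spectrum * gains, axes=(-2, -1)).real


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 32, 32))
    smooth = frequency_reweight(x, low_gain=1.0, high_gain=0.0)  # low-pass only
    edges = frequency_reweight(x, low_gain=0.0, high_gain=1.0)   # high-pass only
    print(np.allclose(smooth + edges, x))
```

In this sketch the low-pass output keeps the slowly varying content associated with within-field consistency, while the high-pass output keeps the rapid transitions associated with field boundaries; the two bands sum back to the original feature map.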

2. Materials and Methods

2.1. Dataset Acquisition and Preprocessing

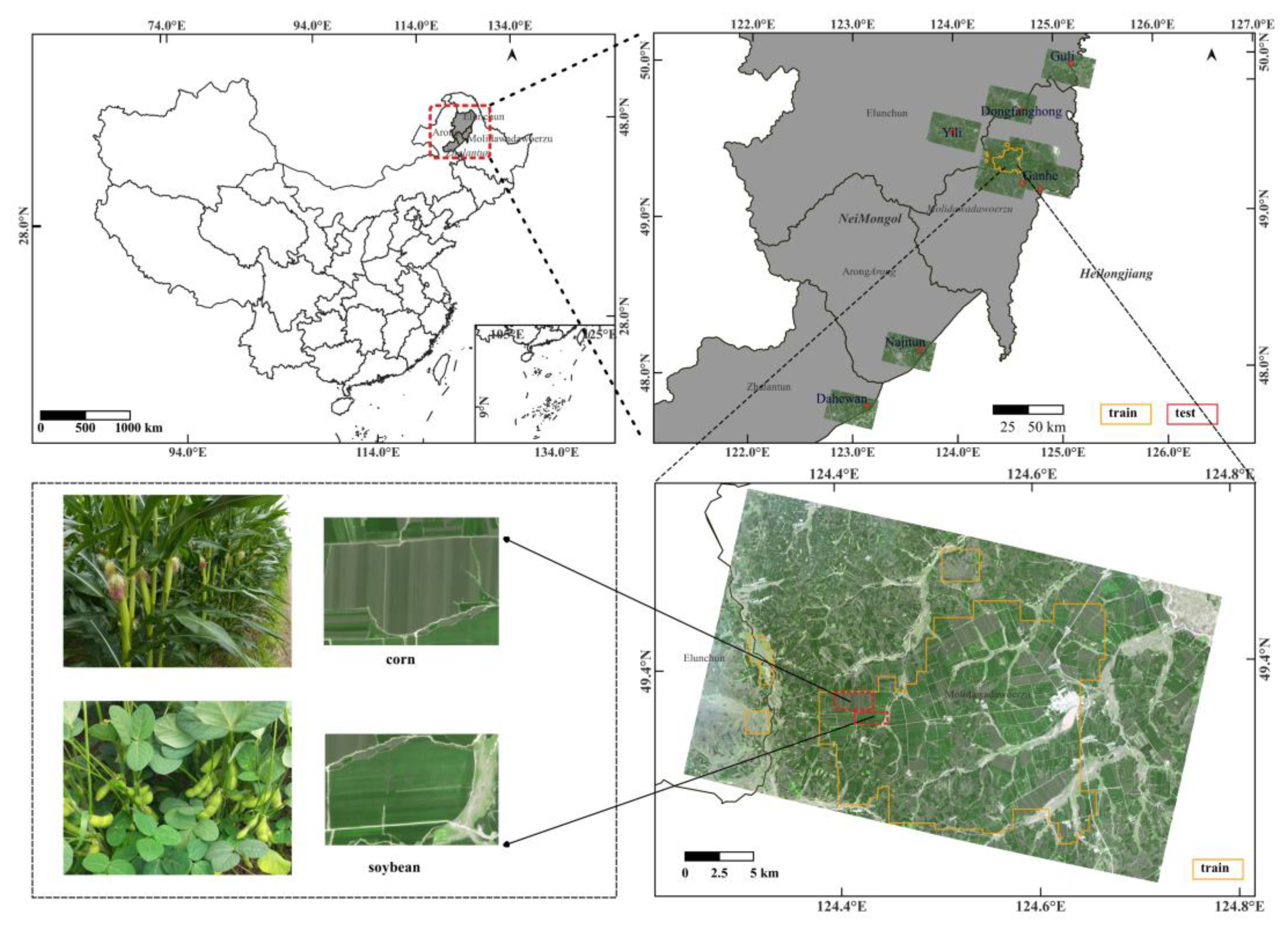

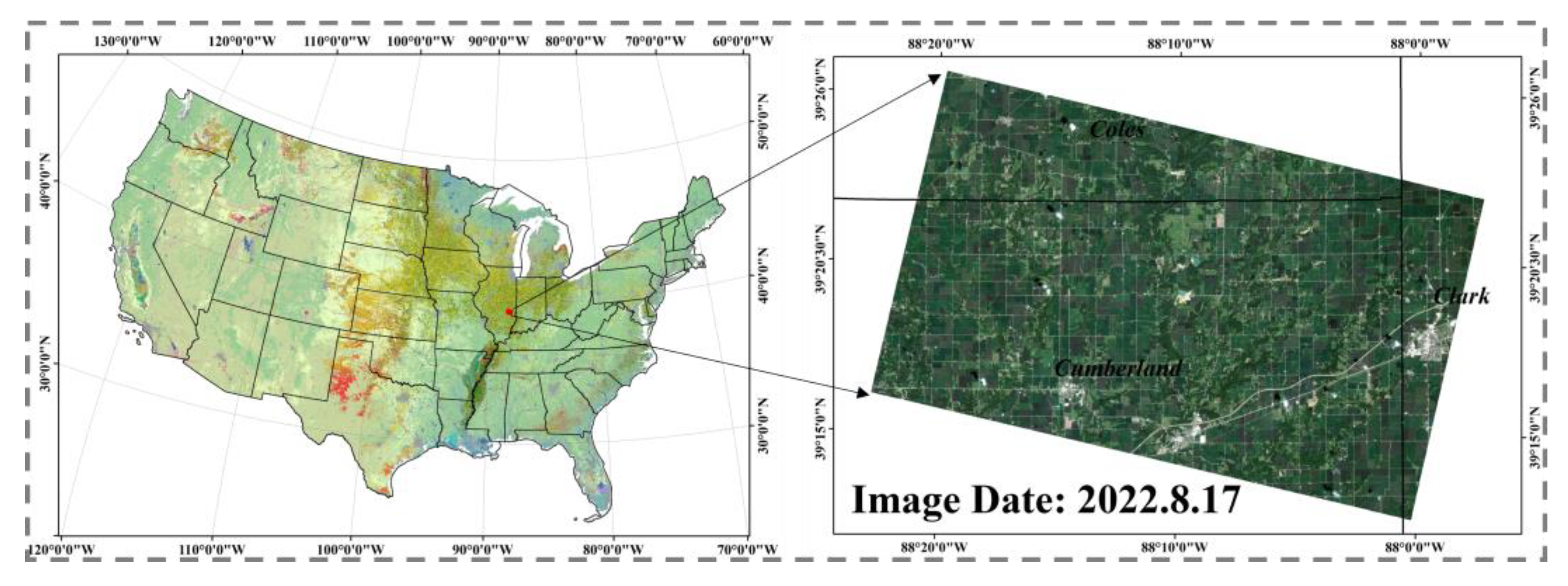

2.1.1. Study Area

2.1.2. Dataset Acquisition

2.1.3. Data Preprocessing

2.2. Method

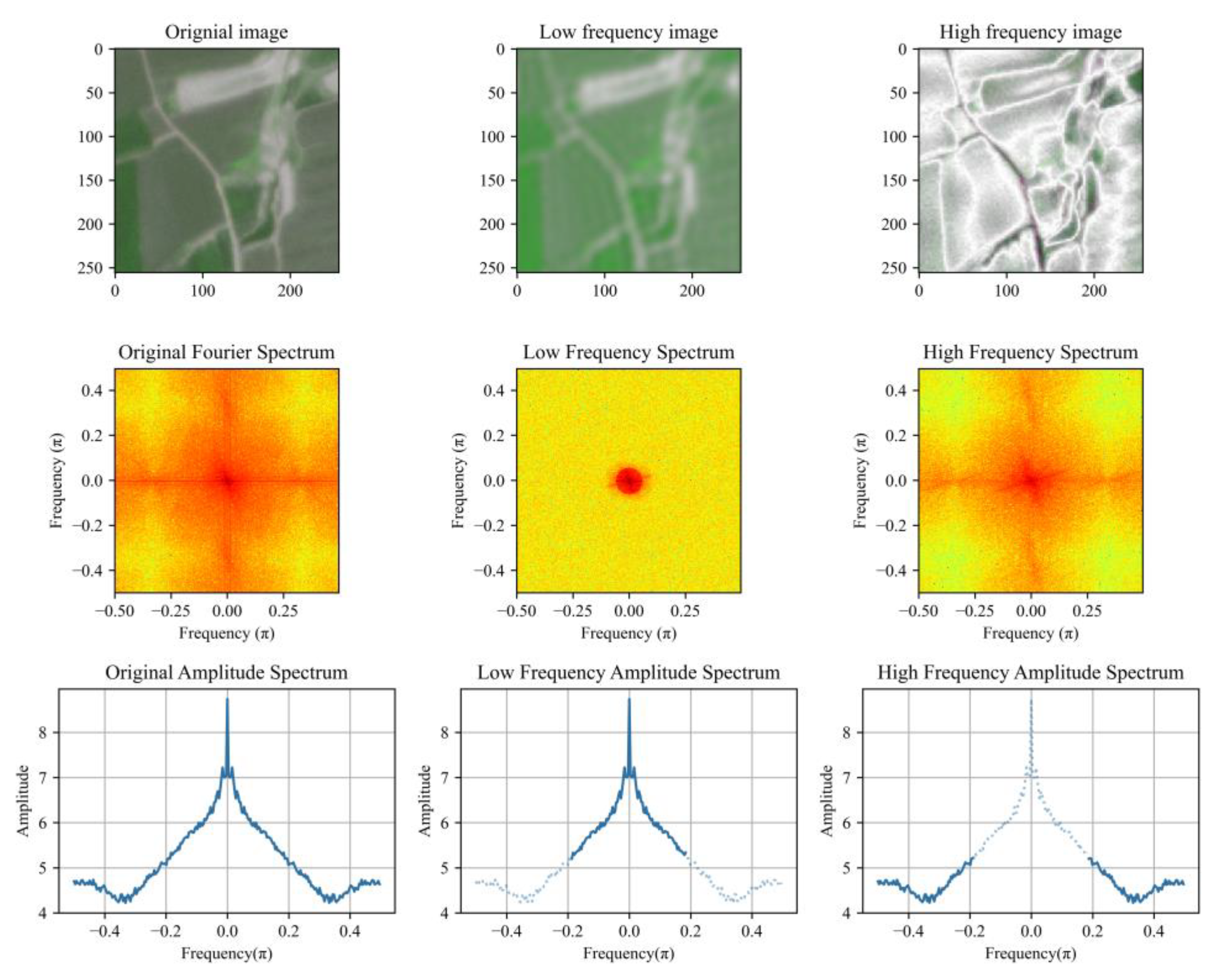

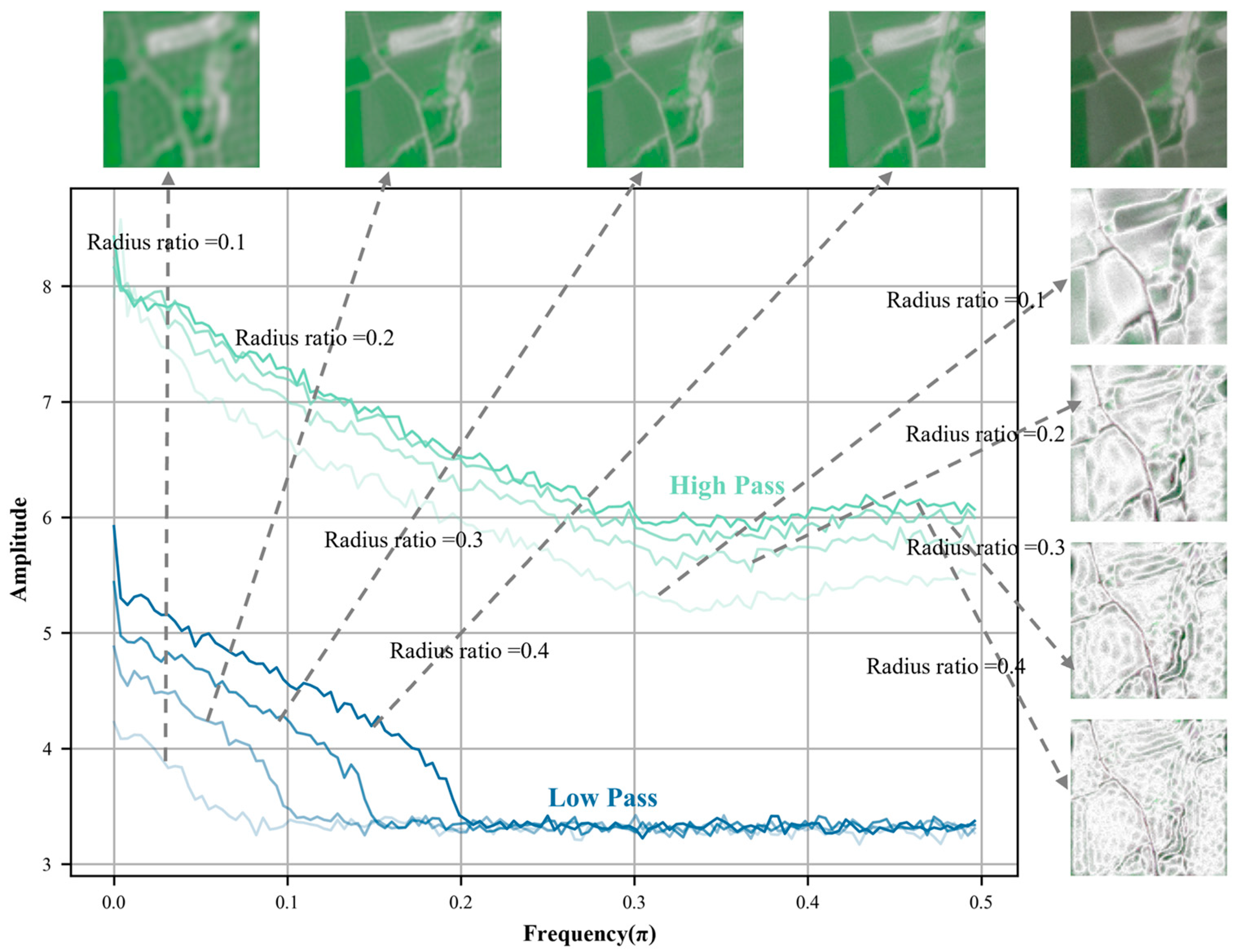

2.2.1. Fourier Transform

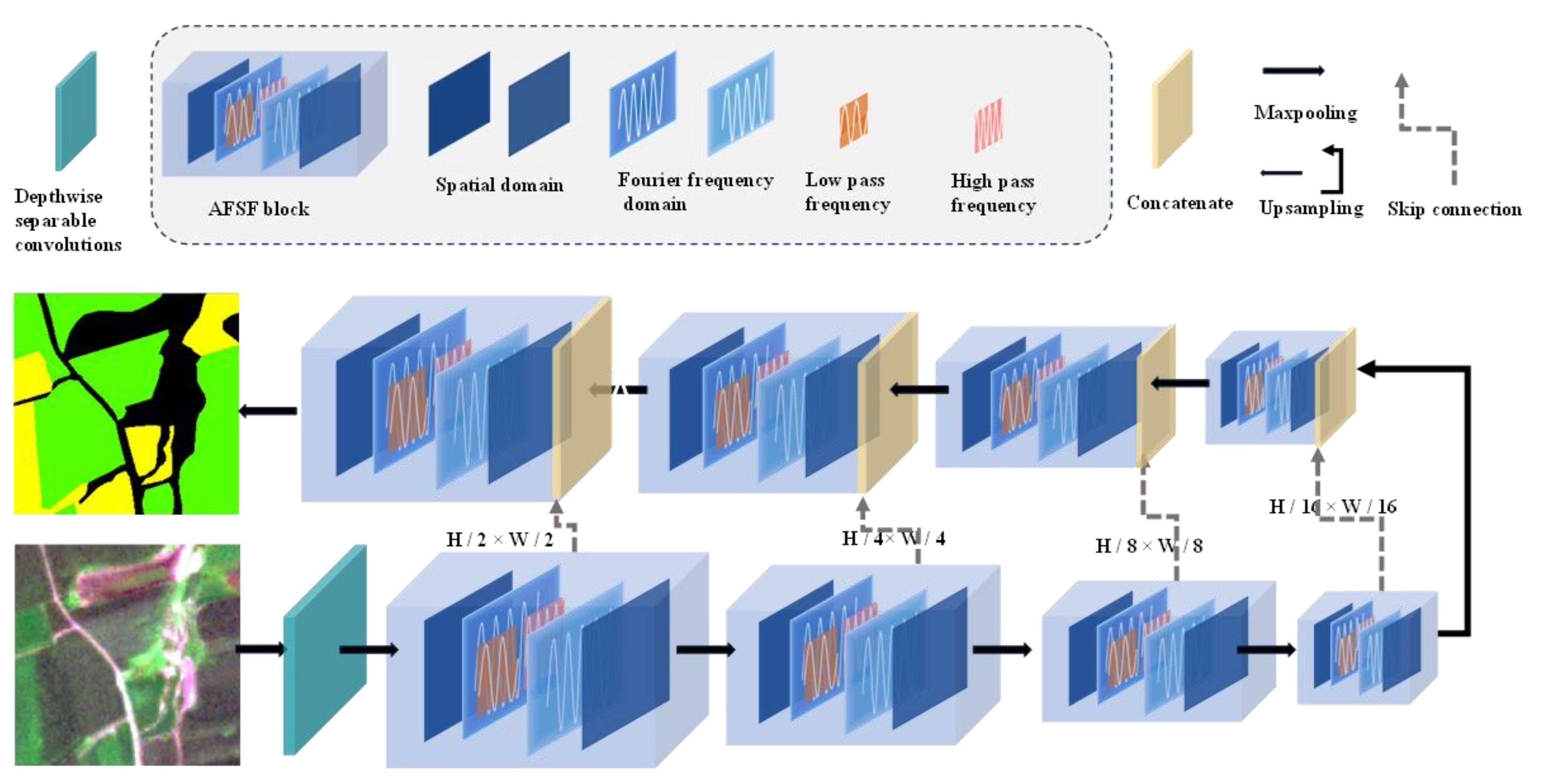

2.2.2. Network Architecture

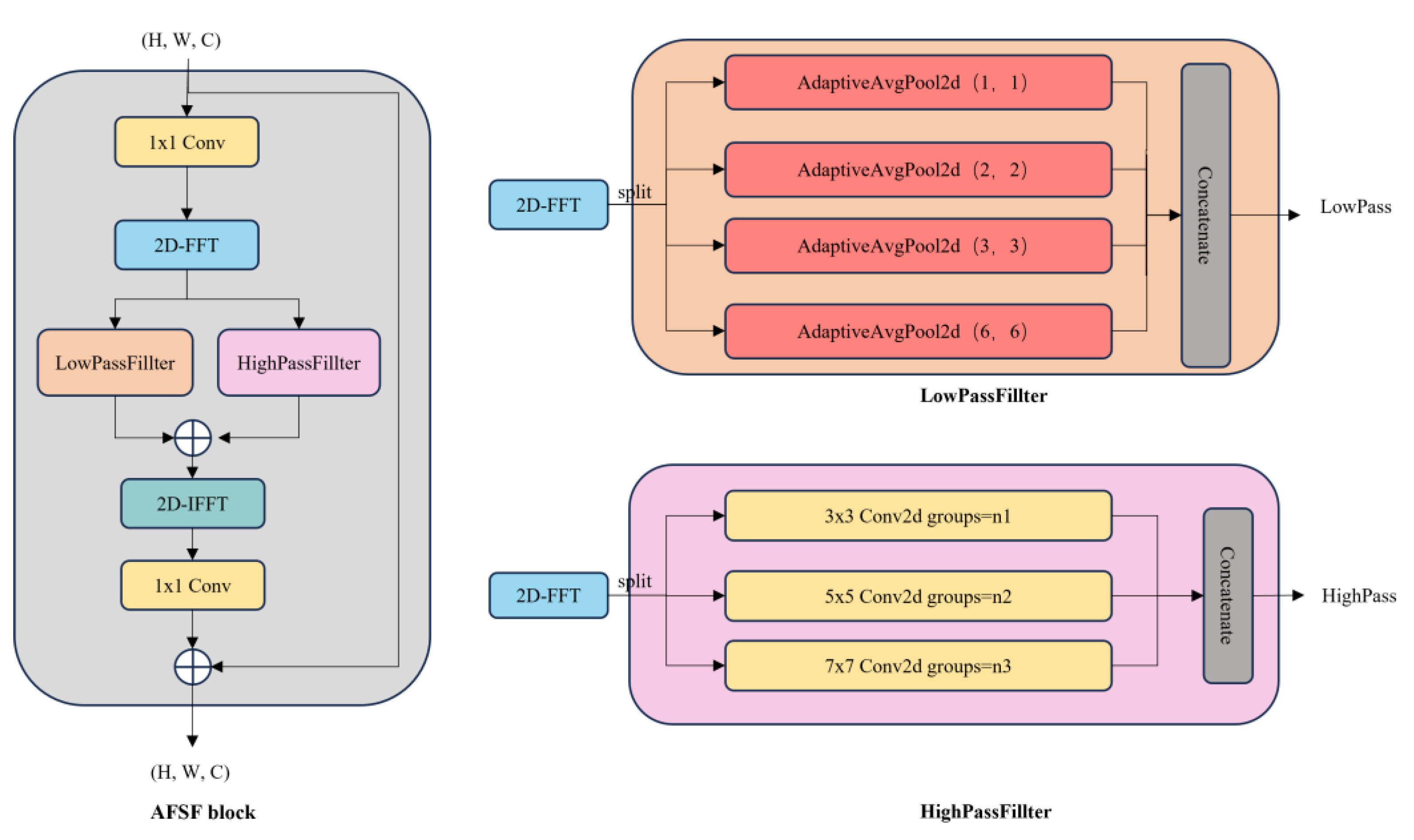

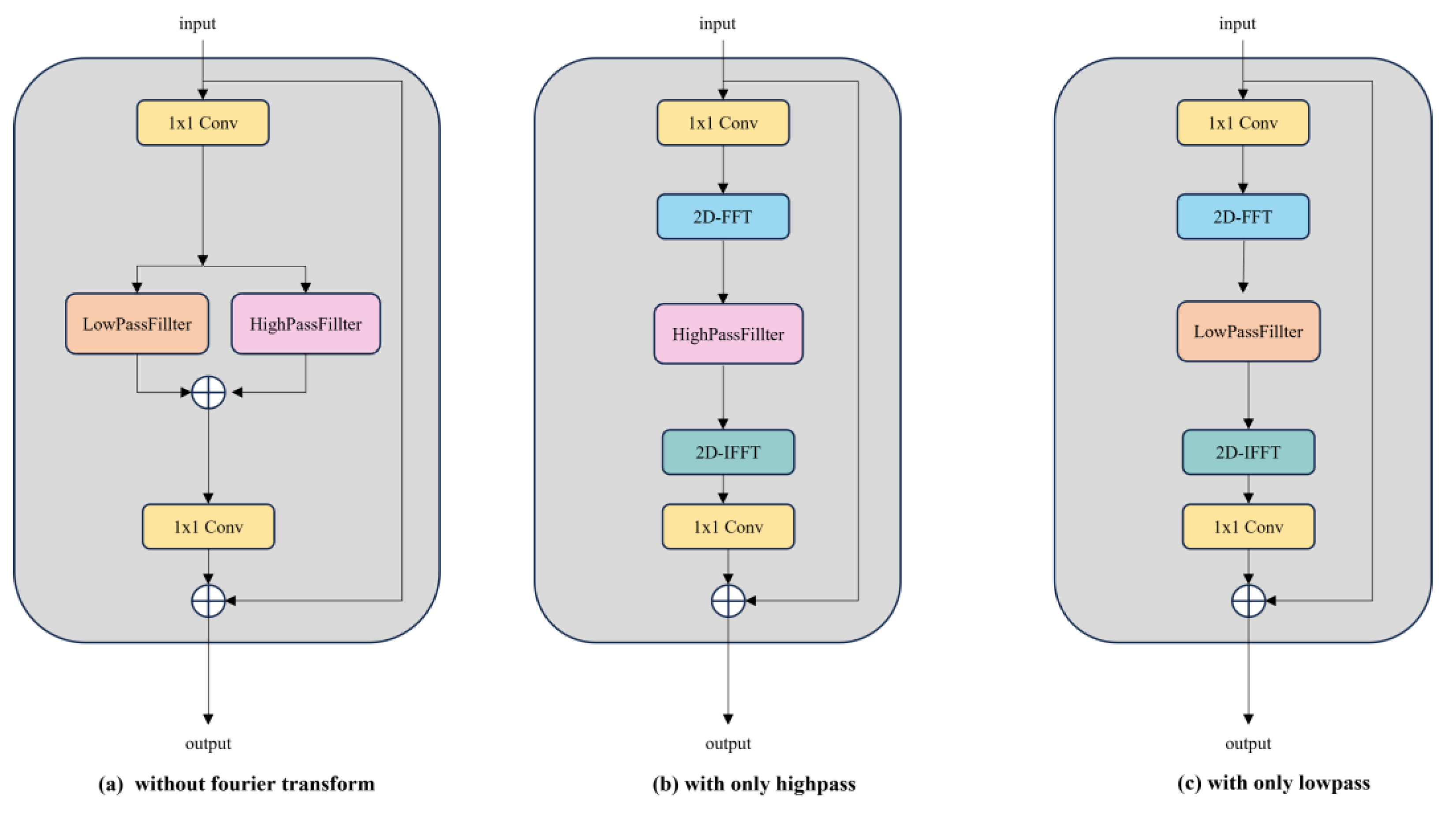

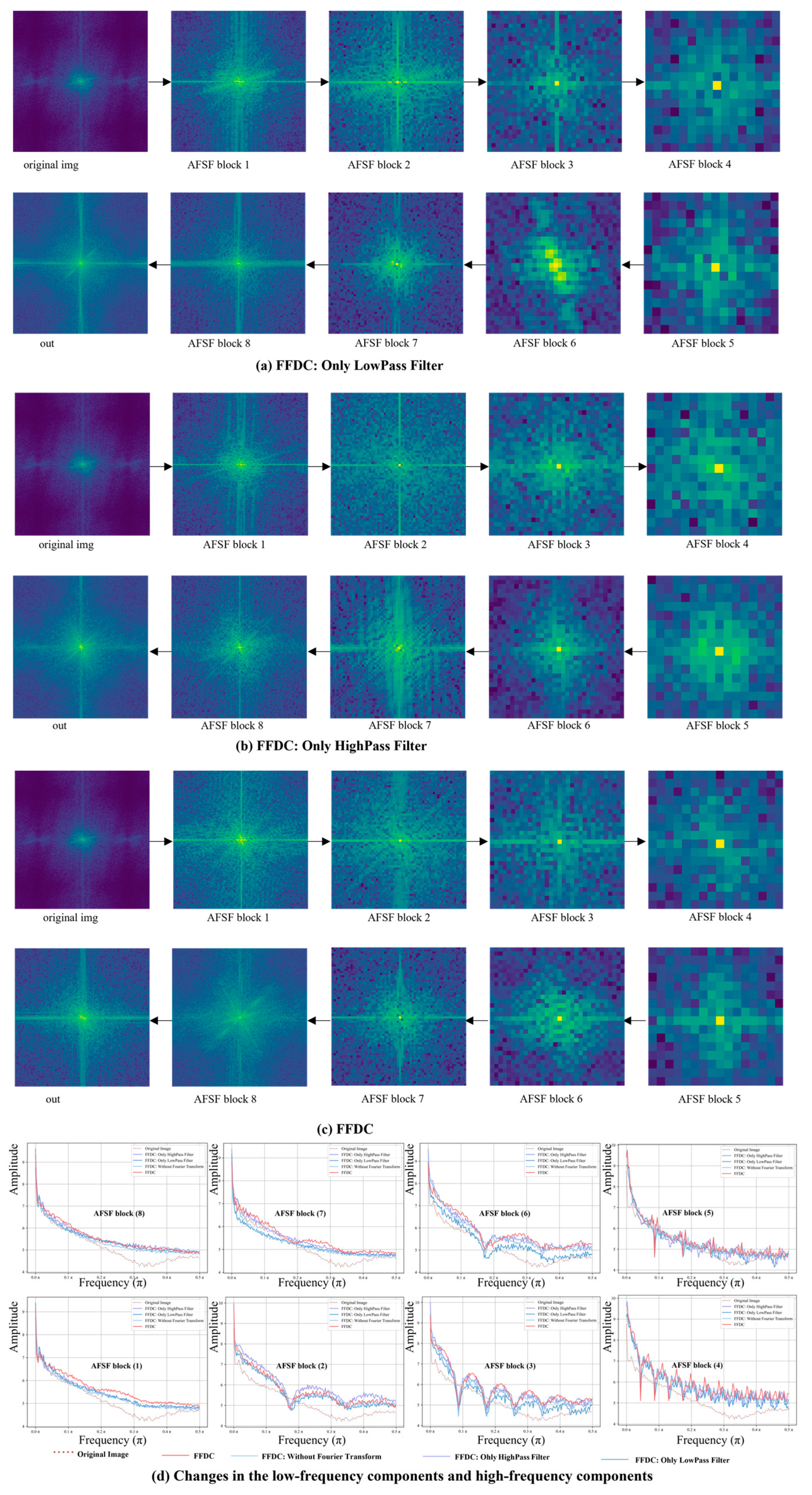

2.2.3. Adaptive Frequency Selective Filter Module

2.2.4. Training Parameters and Evaluation Metrics

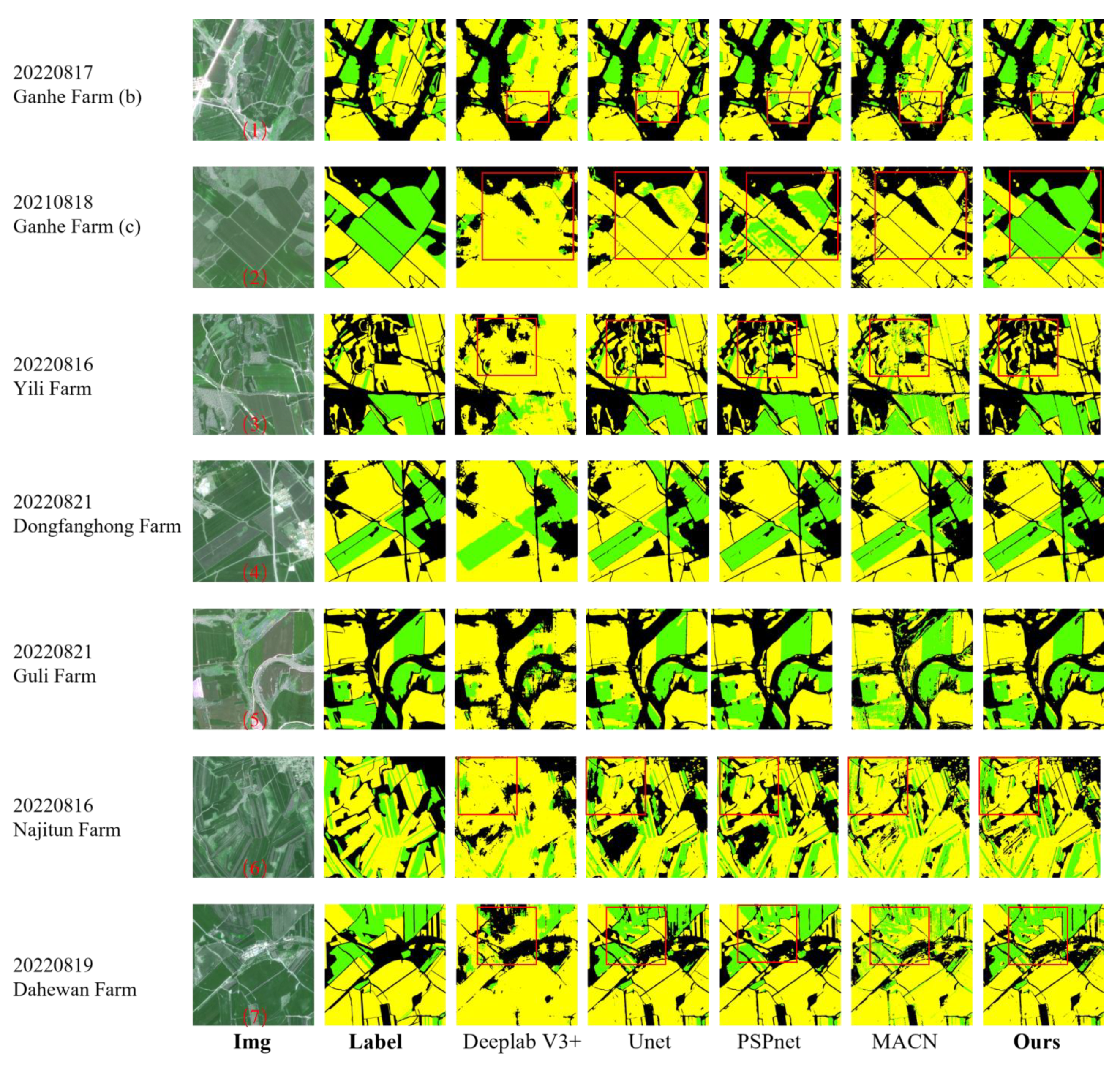

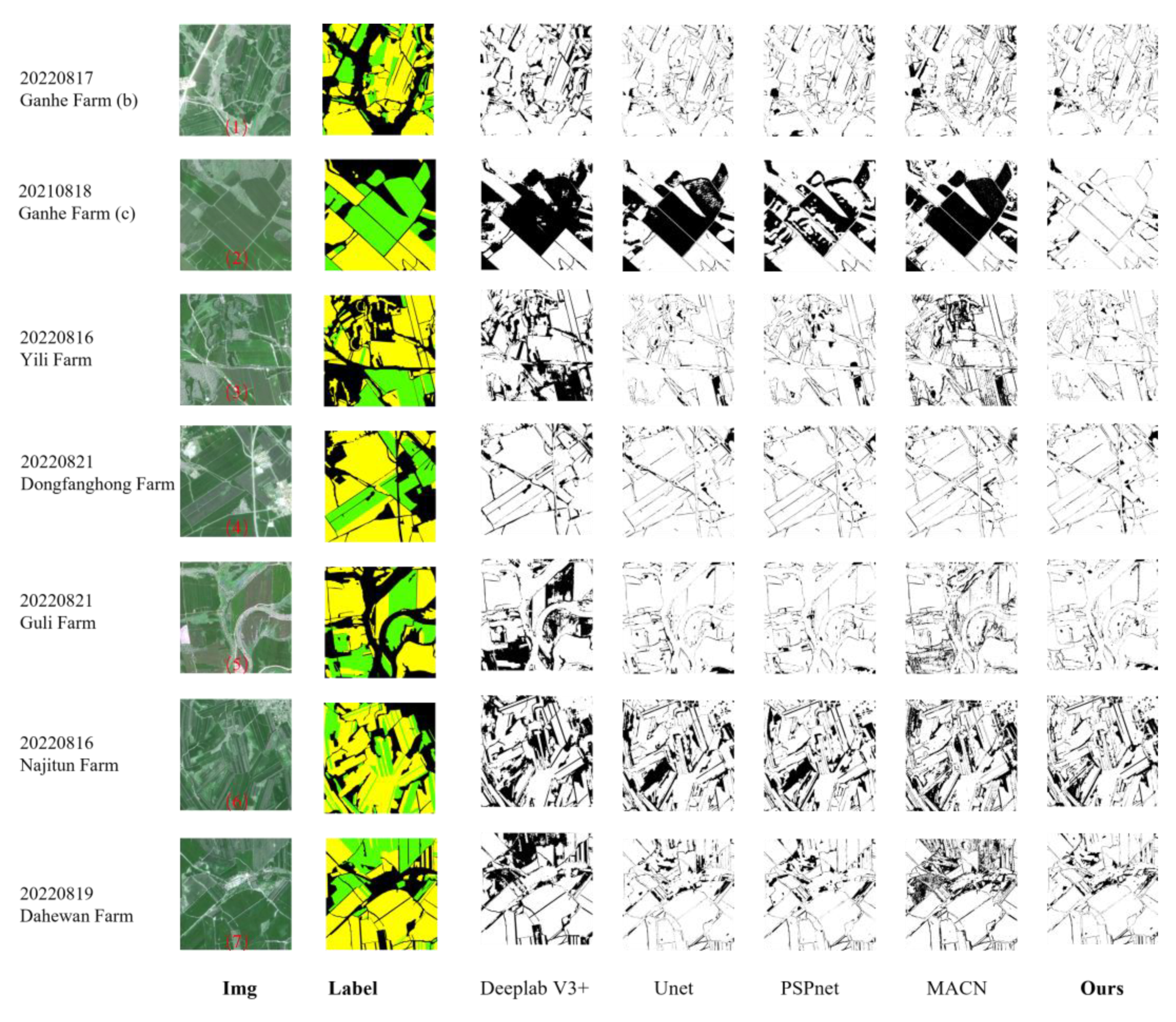

3. Results

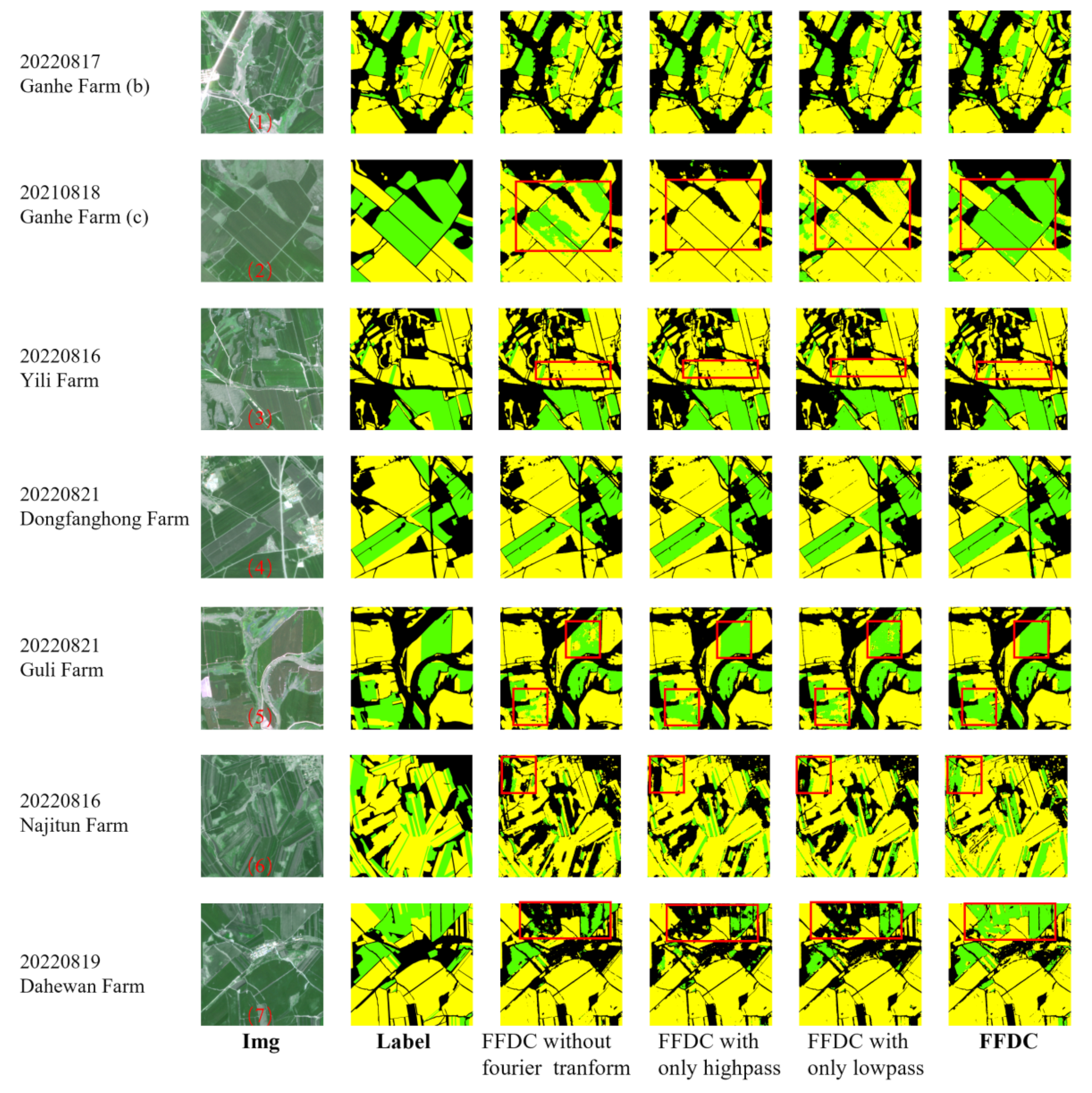

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Farm | Image Name |
|---|---|
| Ganhe (a) | 20220817_021446_16_2485_3B_AnalyticMS_SR |
| Ganhe (b) | 20220817_021723_59_247d_3B_AnalyticMS_SR |
| Ganhe (c) | 20210818_015304_46_2440_3B_AnalyticMS_SR |
| Dongfanghong | 20220816_023424_19_2405_3B_AnalyticMS_SR |
| Yili | 20220816_022045_11_248c_3B_AnalyticMS_SR |
| Najitun | 20220816_021802_52_2461_3B_AnalyticMS_SR |
| Guli | 20220816_021618_41_2489_3B_AnalyticMS_SR |
| Dahewan | 20220819_021916_67_2484_3B_AnalyticMS_SR |

| Band No. | Band Name | Resolution (m) | Wavelength (nm) |
|---|---|---|---|
| B1 | Blue | 3 | 465–515 |
| B2 | Green | 3 | 547–593 |
| B3 | Red | 3 | 650–680 |
| B4 | Near-infrared | 3 | 845–885 |

| Method | Precision (Corn) | Precision (Soybean) | Recall (Corn) | Recall (Soybean) | F1 (Corn) | F1 (Soybean) | OA | mIoU | Kappa | FLOPs | Params |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DeeplabV3+ | 0.9607 | 0.3606 | 0.6752 | 0.8741 | 0.7930 | 0.5106 | 0.7331 | 0.5289 | 0.5543 | 7.9 G | 5.2 M |
| U-Net | 0.9446 | 0.6720 | 0.8178 | 0.9093 | 0.8766 | 0.7728 | 0.8525 | 0.7236 | 0.7633 | 50.8 G | 32.1 M |
| PSPnet | 0.9221 | 0.7509 | 0.8570 | 0.8881 | 0.8883 | 0.8138 | 0.8622 | 0.7437 | 0.7814 | 50.5 G | 52.5 M |
| MACN | 0.9425 | 0.5850 | 0.7562 | 0.8076 | 0.8391 | 0.6785 | 0.7990 | 0.6381 | 0.6742 | 20.3 G | 17.2 M |
| FFDC | 0.9432 | 0.8936 | 0.9084 | 0.9369 | 0.9255 | 0.9147 | 0.9092 | 0.8305 | 0.8571 | 7.5 G | 2.62 M |
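The metrics reported in the comparison table can be derived from a confusion matrix. The sketch below uses the standard textbook definitions of precision, recall, F1, overall accuracy (OA), mean intersection over union (mIoU), and Cohen's kappa. The function name and the example matrix are hypothetical, and the paper's exact evaluation protocol (for instance, which classes enter the mIoU average) is not stated in this excerpt, so treat it as an illustration only.

```python
import numpy as np


def segmentation_metrics(confusion):
    """Compute per-class and overall metrics from a confusion matrix.

    confusion[i, j] = number of pixels whose true class is i and whose
    predicted class is j. Assumes every class occurs at least once in
    both the labels and the predictions (no zero-division handling).
    """
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    tp = np.diag(confusion)            # correctly classified pixels per class
    predicted = confusion.sum(axis=0)  # pixels predicted as each class
    actual = confusion.sum(axis=1)     # pixels truly belonging to each class

    precision = tp / predicted
    recall = tp / actual
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (predicted + actual - tp)

    oa = tp.sum() / total
    # Agreement expected by chance, used by Cohen's kappa.
    expected = (predicted * actual).sum() / total ** 2
    kappa = (oa - expected) / (1 - expected)

    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "oa": oa,
        "miou": iou.mean(),
        "kappa": kappa,
    }


if __name__ == "__main__":
    # Made-up two-class example (rows: true class, columns: predicted class).
    example = [[40, 10],
               [5, 45]]
    for name, value in segmentation_metrics(example).items():
        print(name, value)
```

As a consistency check on the table itself, F1 is the harmonic mean of precision and recall: for the FFDC corn entry, 2 × 0.9432 × 0.9084 / (0.9432 + 0.9084) ≈ 0.9255, which matches the reported value.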

| Ablation | Precision (Corn) | Precision (Soybean) | Recall (Corn) | Recall (Soybean) | F1 (Corn) | F1 (Soybean) | OA | mIoU | Kappa | FLOPs | Params |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Only low-pass | 0.9344 | 0.5940 | 0.8421 | 0.9375 | 0.8858 | 0.7272 | 0.8479 | 0.7048 | 0.7548 | 4.13 G | 1.26 M |
| Only high-pass | 0.9378 | 0.5605 | 0.8193 | 0.9496 | 0.8746 | 0.7049 | 0.8368 | 0.6860 | 0.7356 | 4.25 G | 1.32 M |
| No Fourier | 0.9221 | 0.6186 | 0.8558 | 0.9502 | 0.8877 | 0.7494 | 0.8476 | 0.7082 | 0.7553 | 4.18 G | 1.27 M |
| FFDC | 0.9432 | 0.8936 | 0.9084 | 0.9369 | 0.9255 | 0.9147 | 0.9092 | 0.8305 | 0.8571 | 7.54 G | 2.62 M |

| Method | OA | mIoU | Kappa |
|---|---|---|---|
| DeeplabV3+ | 0.7466 | 0.5192 | 0.6145 |
| U-Net | 0.7894 | 0.6565 | 0.6897 |
| PSPnet | 0.8072 | 0.6548 | 0.6854 |
| MACN | 0.7214 | 0.5080 | 0.5974 |
| FFDC | 0.8466 | 0.7184 | 0.7967 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, B.; Min, S.; Yang, H.; Wu, Y.; Wang, B. A Fourier Frequency Domain Convolutional Neural Network for Remote Sensing Crop Classification Considering Global Consistency and Edge Specificity. Remote Sens. 2023, 15, 4788. https://doi.org/10.3390/rs15194788
