Abstract

Deep learning (DL) algorithms have achieved important breakthroughs in hyperspectral image (HSI) classification. Despite this success, manually designed DL structures of increasing depth and size are difficult to deploy on mobile and embedded devices in real applications. To tackle this issue, we propose an efficient lightweight attention network architecture search algorithm (EL-NAS) that automatically designs lightweight DL structures while improving HSI classification performance. First, to realize an efficient search procedure, we construct EL-NAS on a differentiable network architecture search (NAS), which greatly accelerates the convergence of the over-parameterized supernet through gradient descent. Second, to obtain lightweight search results with high accuracy, a lightweight attention module search space is designed for EL-NAS. Finally, to alleviate the gap between high validation accuracy during the search and poor final classification performance, an edge decision strategy makes edge decisions based on the entropy of the distribution estimated over non-skip operations, which avoids the performance collapse caused by numerous skip operations. To verify the effectiveness of EL-NAS, we conducted experiments on several real-world hyperspectral images. The results demonstrate that EL-NAS achieves a more efficient search procedure, smaller parameter sizes, and high accuracy for HSI classification, even under data-independent and sensor-independent scenarios.

1. Introduction

A hyperspectral remote sensing image (HSI) can be regarded as a 3D cube that records the spatial, spectral, and radiation information of land cover materials. Owing to its abundant spectral bands, HSI data is important in many practical applications, such as agriculture [1], environmental science [2], urban remote sensing [3], military defense [4], and other fields [5]. Various methods have been proposed to exploit this rich spectral information for HSI classification. Traditional representative algorithms, such as sparse representation classification (SRC) [6], collaborative representation classification (CRC) [7], SVM with a nonlinear kernel projection [8], and other kernel-based methods [9,10], rely on the discriminative spectral information. Spectral–spatial (SS) algorithms were then proposed to improve classification performance by incorporating and fusing spatial correlation information. For example, the spatial–spectral derivative-aided kernel joint sparse representation (KJSR-SSDK) [11] and the adaptive nonlocal spatial–spectral kernel (ANSSK) [12] extract combined SS features. The above-mentioned algorithms mainly rely on handcrafted features (spectral information or its dimension-reduced form) combined with classifiers for HSI classification.

Inspired by the success of DL techniques in computer vision, DL-based methods can discover distributed feature representations of data by combining low-level features into more abstract high-level representations [13]. Spatial–spectral (SS)-based DL methods have shown promising performance for HSI classification. 2D-CNN-based methods have been proposed for extracting SS features, although they require dimension reduction of the HSI data first [14,15,16]. Since the input data in HSI processing is a 3D cube, a multi-scale 3D-CNN was introduced that uses filters of different sizes [17]. By combining ResNet with an SS CNN, the SS residual network (SSRN) learns robust SS features from HSI [15]. The Residual Conv–Deconv Network (RCDN) densely connects deep residual networks [18]. Meanwhile, the deep feature fusion network (DFFN) alleviates the overfitting and vanishing gradient problems of CNNs by taking into account the strong complementary correlation between different layers of the neural network [19]. A deep generative spectral–spatial classifier (DGSSC) addresses the issue of imbalanced HSI classification [20]. Zhang et al. further proposed a deep 3D lightweight convolutional network consisting of dozens of 3D convolutional layers to improve classification performance [21]. However, CNN-based algorithms are liable to lose local information because of their pooling layers. To cope with this problem, a dual-channel capsule network with GAN (DcCapsGAN) was proposed, which generates pseudo-samples more efficiently and improves classification accuracy [22]. Additionally, a quaternion transformer network (QTN) for recovering self-adaptive and long-range correlations in HSIs is proposed in [23].
The Lightweight SS Attention Feature Fusion Framework (LMAFN) [24] is constructed according to architectural guidelines provided by NAS [25] and achieves commendable classification accuracy with a reduced number of parameters. Specifically, LMAFN is a manually designed neural network that uses architectural principles derived from NAS to guide its feature fusion and network architecture. The entire LMAFN network is therefore constructed by hand following rules established by NAS, and it does not use an automated search for its architecture.

Nonetheless, deep learning for hyperspectral image classification faces several challenges, including model complexity, burdensome architectural design, and the scarcity of labeled hyperspectral data. These factors impede the training and generalization of deep learning models. As a practical solution, transfer learning improves model performance when little data is available by transferring useful knowledge from a data-rich source domain to a data-poor target domain. Deep convolutional recurrent neural networks with transfer learning [26] extract spatial–spectral features even when few training samples are available. HT-CNN [27] proposes a heterogeneous transfer learning approach that adjusts for the differences between heterogeneous datasets through an attention mechanism. TL-ELM [28] introduces an ensemble transfer learning algorithm built upon Extreme Learning Machines. This approach not only preserves the input weights and hidden biases learned from the target domain, but also iteratively fine-tunes the output weights using instances from the source domain.

Recently, limited storage resources and power budgets, together with the computational complexity and parameter size of DL models, have hindered the deployment of DL-based algorithms, especially on edge devices and embedded platforms. Therefore, how to realize lightweight and automated architecture design under limited storage and power constraints has become a crucial issue [29,30]. MobileNetV3 [31] efficiently combines depthwise (DW) separable convolution, the inverted residual, and SE attention modules. Furthermore, EfficientNetV2 [32] and SqueezeNet [33] both involve attention modules and lightweight structures to improve classification performance efficiently. Nevertheless, the above-mentioned architectures are mainly manually designed for specific tasks. In real applications, manual design is an inherently difficult and time-consuming task that relies heavily on expert knowledge. As research becomes more complex, the cost of tuning the parameters of deep networks increases dramatically.

Neural Architecture Search (NAS) addresses the difficulty of designing efficient and lightweight architectures for edge devices. In general, the NAS literature has three main streams: reinforcement-learning-based (RL-based), evolutionary-learning-based (EL-based), and gradient-based (GD-based) approaches. RL-based NAS iteratively generates new architectures by learning to maximize a reward derived from an objective (e.g., the accuracy on the validation set or the model latency) [34,35,36]. In EL-based NAS, architectures are represented as individuals in a population. Individuals with high fitness scores (validation accuracy) are privileged to generate offspring, which replace individuals with low fitness scores. Large-Scale Evolution [37] applied evolutionary algorithms to discover architectures for the first time. Hier-Evolution [38] combines a new hierarchical genetic representation scheme with an expressive search space supporting complex topologies, and it outperforms various manually designed architectures on image classification tasks. However, most RL-based and EL-based NAS methods have a high computational cost. For instance, NASNet [39], based on an RL strategy, requires 450 GPUs for 4 days, i.e., 1800 GPU-days, and MnasNet [40] used 64 TPUs for 4.5 days on CIFAR-10. Similarly, Hier-Evolution [38], based on an EL strategy, needs 300 GPU-days to find a satisfying architecture on CIFAR-10. RL-based and EL-based NAS methods treat the search over a discrete space as a black-box optimization problem, which requires an excessive number of architecture performance evaluations.

In contrast to RL-based and EL-based NAS, the GD-based NAS approach continuously relaxes the original discrete search space, making it possible to optimize the architecture efficiently by gradient descent. Following the cell-based search space of NASNet and transforming the discrete neural architecture space into a continuously differentiable form, DARTS [41] introduces an architecture parameter for each path and jointly trains the weights and architecture parameters via gradient descent, which makes the architecture search far more efficient.

Therefore, motivated by the above problems and the literature, we construct an efficient lightweight attention architecture search (EL-NAS) for HSI classification in this paper. First, because of the efficiency of GD-based architecture search, we adopt differentiable neural architecture search as the main automatic DL design strategy to realize an efficient search procedure. Considering deployment on mobile and embedded devices, the lightweight module, the attention module, and 3D decomposition convolution are jointly used to construct the search space, which improves classification accuracy at lower computation and storage costs. Meanwhile, to mitigate the performance collapse caused by the accumulation of skip operations during the search, an edge decision strategy and a dynamic regularization are designed based on the entropy and distribution of the non-skip operations to preserve the most efficient searched structure. Furthermore, a generalization loss is introduced to improve the generalization of the searched model.

The main contributions and innovations of the proposed EL-NAS are summarized as follows:

- EL-NAS introduces the lightweight module, the attention module, and 3D decomposition convolution to automatically design efficient DL structures for hyperspectral image classification. The efficient automatic search strategy thus enables a task-driven automatic design of DL structures for datasets from different acquisition sensors or scenarios.

- EL-NAS achieves remarkable search efficiency and yields a lightweight attention DL structure through its edge decision strategy: (i) knowledge of successful lightweight modules, 3D decomposition convolution, and attention modules is imposed on the search space; (ii) the entropy of the operation distribution estimated over non-skip operations is used to make the edge decision; and (iii) a dynamic regularization loss based on the impact of the number of skip connections is adopted to further improve the search performance. As a result, the most effective and lightweight operations are preserved.

- Comprehensive experiments compare EL-NAS with several state-of-the-art methods in terms of accuracy, classification maps, number of parameters, and execution cost. EL-NAS requires a lower GPU search cost, fewer parameters, and a lower computation cost. The experimental results on three real HSI datasets demonstrate that EL-NAS can find a more lightweight network structure and achieve more robust classification results, even under data-independent and sensor-independent scenarios.

The rest of this article is organized as follows. Section 2 reviews the related works in HSI classification. The details of the proposed EL-NAS are described in Section 3. The experimental results and analysis are presented and discussed in Section 4. Finally, the conclusions are summarized in Section 5.

2. Related Work

2.1. GD-Based NAS

The search space, search strategy, and performance evaluation are the three main aspects of GD-based NAS that can be improved. Compared to DARTS, ACA-DARTS [42] removes skip connections from the operation space and introduces an adaptive channel allocation strategy to refill the skip connections in the evaluation stage. PAD-NAS [43] can automatically design the operations for each layer and achieve a trade-off between search space quality and model diversity. In [44], a new search space is introduced based on the backbone of the convolution-enhanced transformer (Conformer), a more expressive architecture than those used in existing NAS-based automatic speech recognition (ASR) frameworks. For the re-identification (ReID) task, CDNet [45] is proposed based on a novel search space called the combined depth space (CDS). By using the combined basic building blocks in the CDS, CDNet tends to focus on the combined pattern information normally found in pedestrian images. For the hyperspectral image classification task, 3D-ANAS introduces a three-dimensional asymmetric decomposition search space, which improves classification efficiency [46]. A novel hybrid search space is also proposed in [47], where 3D convolution, 2D spatial convolution, and 2D spectral convolution are employed.

Recent research has increasingly focused on avoiding the well-known performance collapse caused by the aggregation of skip connections and on mitigating the drawbacks of weight sharing in DARTS. DARTS+ [48] leverages early stopping to avoid the performance collapse in DARTS. PC-DARTS [49] exploits the redundancy of the network space by sampling parts of the supernet for a more efficient search. DARTS- [50] offsets the advantages of a skip connection with an auxiliary skip connection, ensuring more equitable competition among all operations. SGAS [51] partitions the search process into sub-problems and greedily selects and prunes candidate operations. FairNAS [52] enforces strict fairness, where each supernet iteration trains the parameters of every candidate module at each layer, which guarantees that all candidate modules have equal opportunities for optimization throughout the training process. Single-DARTS [53] updates network weights and architecture parameters simultaneously on the same batch of data instead of using bi-level optimization, which significantly alleviates performance collapse and improves the stability of the architecture search. Zela et al. [54] demonstrated that various types of regularization can improve the robustness of DARTS and find solutions with better generalization properties. Additionally, β-DARTS [55] proposes a simple but efficient Beta-Decay regularization method to regularize the DARTS-based NAS search process. U-DARTS [56] redesigns the search space and combines it with sampling and parameter-sharing strategies, where the regularization method considers depth and complexity to prevent network deterioration. FP-DARTS [57] constructs two over-parameterized sub-networks that form a two-way parallel hypernetwork by introducing dichotomous gates to control whether the paths are involved in the training of the hypernetwork.

2.2. NAS for HSI

Designing state-of-the-art (SOTA) neural networks for a specific task by hand is usually a tedious and challenging task that requires expert knowledge and effort. PSO-Net [58] is an evolutionary search method based on Particle Swarm Optimization (PSO) for hyperspectral image data, which accelerates convergence. CPSO-Net [59] presents a more efficient continuous evolutionary approach that speeds up the generation of architectures as the weight-sharing parameters are optimized. Inspired by DARTS, Chen et al. proposed an automatic CNN (Auto-CNN) [60] for HSI classification, which introduces a regularization technique named cutout to improve the classification accuracy. Auto-CNN outperforms manually designed DL architectures while using fewer parameters. 3D-ANAS [46] proposes a hierarchical search space that searches both topology and network width and introduces a three-dimensional asymmetric decomposition search space with highly effective classification performance. A2S-NAS [61] proposes a multi-stage architecture search framework to handle the asymmetry between the spatial and spectral dimensions and capture important features. LMSS-NAS [62] proposes a lightweight multiscale NAS with spatial–spectral attention, which centers on a search space composed of lightweight convolutional operators and brings label smoothing loss into the NAS to mitigate the problem of unbalanced samples.

Building on the efficient search strategy of DARTS, we additionally adopt the edge decision algorithm to alleviate performance collapse. Moreover, since lightweight and attention modules can improve model performance with fewer parameters, we construct EL-NAS with them to improve the generalization of the searched model, which makes it more feasible to apply HSI classification methods on edge devices.

3. Methodology

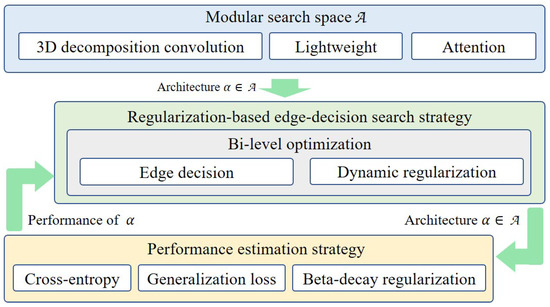

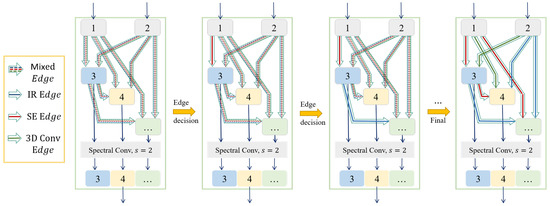

In this section, we introduce the procedure of the proposed EL-NAS in detail. The overall workflow is illustrated in Figure 1. The modular search space is used to fully exploit the characteristics of hyperspectral data and to construct an over-parameterized supernet that combines the lightweight module and attention mechanisms, yielding models with high performance and generalization. The regularization-based edge-decision search strategy involves an edge-decision algorithm that significantly accelerates the convergence of the over-parameterized supernet and mitigates the drawbacks of weight sharing, which efficiently limits the unfair advantage of skip connections in the search process. The performance evaluation defines the metrics that guide the NAS toward high-performance and highly generalizable models.

Figure 1. The search framework of the proposed EL-NAS for HSI classification.

3.1. Modular Search Space

To obtain more efficient and compact search results for the hyperspectral image classification task, we propose a modular search space that differs from that of DARTS [41]. In the modular search space, modules rather than simple convolutions are regarded as candidate operations, which exploits the experience of manual architecture design and ensures the stability of the search results.

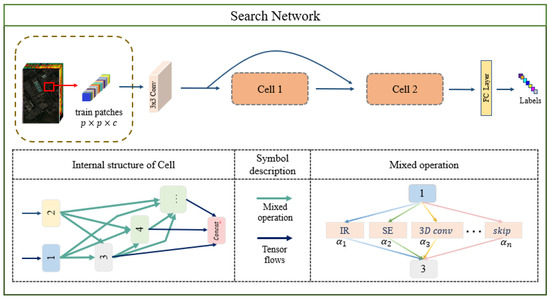

The whole modular search space is illustrated in Figure 2. Each cell is a directed acyclic graph (DAG) consisting of an ordered sequence of N nodes (two input nodes, several intermediate nodes, and one output node), where each node $x_i$ represents a feature map in the network and i is its order in the DAG. Each directed edge $(i, j)$ is associated with an operation $o^{(i,j)}$ that transforms node $x_i$ into node $x_j$. Meanwhile, each intermediate node is connected with all its predecessors. The set of edges E is formulated as

$$E = \{(i, j) \mid i < j,\ x_j \text{ is an intermediate node}\}$$

Figure 2. The whole modular search space and searching network of the proposed EL-NAS.

Each edge contains all candidate operations (also called paths), and DARTS transforms the discrete choice of operation into a differentiable parameter optimization problem by continuously relaxing the outputs of the different operations through a set of learnable architecture parameters $\alpha$. For example, the mixed operation $\bar{o}^{(i,j)}$ taking the feature map $x_i$ as input can be represented as follows:

$$\bar{o}^{(i,j)}(x_i) = \sum_{o \in \mathcal{O}} \frac{\exp\left(\alpha_o^{(i,j)}\right)}{\sum_{o' \in \mathcal{O}} \exp\left(\alpha_{o'}^{(i,j)}\right)}\, o(x_i)$$

where $\mathcal{O}$ is the set of candidate operations, $o$ is a specific operation to be applied to $x_i$, and each operation is weighted by its architecture parameter $\alpha_o^{(i,j)}$. Each intermediate node is computed by the following formula:

$$x_j = \sum_{i < j} \bar{o}^{(i,j)}(x_i)$$

The input nodes in the DAG are represented by the output of the previous convolution and previous two cells, and the output nodes are represented by concatenating all intermediate nodes.
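As a rough illustration of the continuous relaxation described above, the following Python sketch computes a softmax-weighted mixed operation and one intermediate node on a toy cell. It is only a minimal sketch: the scalar "feature maps", the three stand-in operations in `CANDIDATE_OPS`, and all function names are assumptions made for illustration and are not the modules or code used in EL-NAS.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scalar stand-ins for the candidate modules on an edge.
CANDIDATE_OPS = [
    lambda x: x,        # skip connection
    lambda x: 2.0 * x,  # stand-in for a convolutional module
    lambda x: x + 1.0,  # stand-in for another module
]


def mixed_op(x, alphas):
    """Softmax-weighted sum of every candidate operation applied to x."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS))


def intermediate_node(j, nodes, alpha):
    """Sum the mixed operations over all predecessors i < j of node j."""
    return sum(mixed_op(nodes[i], alpha[(i, j)]) for i in range(j))


nodes = [1.0, 3.0]  # the two input nodes of the toy cell
alpha = {(0, 2): [0.0, 0.0, 0.0], (1, 2): [0.0, 0.0, 0.0]}
nodes.append(intermediate_node(2, nodes, alpha))
print(nodes[2])
```

With all architecture parameters equal, every candidate contributes with weight 1/3, so the new node is the average candidate response on each incoming edge, summed over both edges. Raising one entry of `alpha` shifts the mixture toward the corresponding operation, which is what gradient descent exploits during the search.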

Drawing on experience in manual architecture design, we use existing modules as the candidate operations of the search space, including the lightweight module, the attention module, and 3D decomposition convolution.

- (1) Lightweight module (i.e., the inverted residual block in MobileNetV2 [63], IR) involves pointwise convolution and depthwise separable convolution. The inverted residual module first increases the number of channels by pointwise convolution and then performs depthwise separable convolution in the higher-dimensional space to extract better channel features without significantly increasing the model parameters and computational costs.

- (2) Attention module (i.e., Squeeze-and-Excitation [64], SE) adaptively learns weights for different channels using global pooling and fully connected layers. Having hundreds of spectral channels is a significant characteristic of hyperspectral images, and different channels contribute differently to the classification task, so the channel attention module is essential, as verified in the experimental section.

- (3)

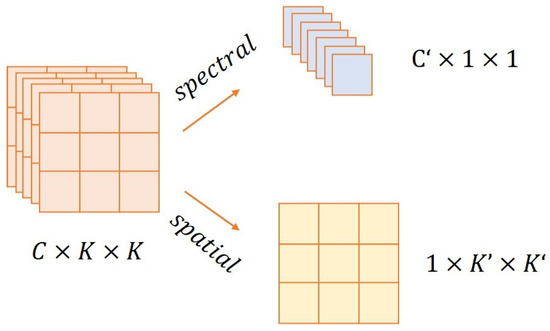

- 3D decomposition convolution. In this paper, 3D convolution is decomposed into two types of decomposition convolution for processing spectral and spatial information, respectively. The principle of 3D decomposition convolution is shown in Figure 3, where a 3D convolution with a kernel size of is decomposed into two decomposition convolutions with a kernel size of and , respectively. This simplifies the complexity of a single candidate operation and allows the search space to yield more possibilities of models, which can significantly reduce the model parameters.

Figure 3. The principle of 3D convolution decomposition.
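To make the parameter saving of the decomposition concrete, the short Python sketch below counts the weights of a full 3D kernel and of the two decomposed kernels. The channel count, the kernel size `k = 3`, and the (spectral, height, width) ordering of the kernel tuple are assumptions chosen for illustration, since they are not specified in the text above.

```python
def conv3d_weights(c_in, c_out, kernel):
    """Number of weights in a 3D convolution (bias terms ignored)."""
    k_spectral, k_height, k_width = kernel
    return c_in * c_out * k_spectral * k_height * k_width


channels, k = 16, 3  # hypothetical channel count and kernel size

full = conv3d_weights(channels, channels, (k, k, k))      # full 3D kernel
spatial = conv3d_weights(channels, channels, (1, k, k))   # spatial-only kernel
spectral = conv3d_weights(channels, channels, (k, 1, 1))  # spectral-only kernel

print(full, spatial, spectral)  # prints 6912 2304 768
```

Under these assumptions, even a spatial kernel followed by a spectral kernel (2304 + 768 = 3072 weights) needs fewer than half the weights of the full kernel, and each decomposed kernel on its own is smaller still.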


Therefore, our modular search space fully considers the characteristics of hyperspectral data and offers the following improvements: (1) We take patches as input to accelerate processing, so down-sampling operations are not essential. (2) To efficiently extract discriminative SS features, we include 3D decomposition convolution in the search space as a candidate operation. (3) With a well-designed attention module on the hyperspectral channels, our method can fully exploit the discriminative spectral information. (4) The SS information of the HSI dataset is further extracted by the inverted residual module with fewer parameters.
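The channel attention mentioned in point (3) can be sketched in a few lines of plain Python. This is a simplified illustration of a Squeeze-and-Excitation style gate, not the module used in EL-NAS: the feature map layout (a list of flattened channels), the omission of bias terms, and the weight matrices `w_reduce` and `w_expand` are all assumptions.

```python
import math


def squeeze_excite(feature_map, w_reduce, w_expand):
    """Reweight channels with an SE-style gate.

    feature_map: list of channels, each a flat list of activations.
    w_reduce:    (hidden x channels) weights of the bottleneck layer.
    w_expand:    (channels x hidden) weights of the gating layer.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(ch) / len(ch) for ch in feature_map]
    # Excitation, step 1: fully connected bottleneck followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))
              for row in w_reduce]
    # Excitation, step 2: fully connected layer followed by a sigmoid.
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_expand]
    # Scale: multiply every channel by its gate.
    scaled = [[g * v for v in ch] for g, ch in zip(gates, feature_map)]
    return scaled, gates


# Toy input: 4 channels with 4 activations each, reduced to 2 hidden units.
feature_map = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0],
               [2.0, 2.0, 2.0, 2.0], [-1.0, 1.0, -1.0, 1.0]]
w_reduce = [[0.5, -0.5, 0.25, 0.0], [0.1, 0.2, 0.3, 0.4]]
w_expand = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.5], [0.5, 0.5]]

scaled, gates = squeeze_excite(feature_map, w_reduce, w_expand)
print(gates)
```

Each gate lies strictly between 0 and 1, so the module can only attenuate channels relative to one another. For hyperspectral input this amounts to a learned, input-dependent weighting of spectral channels.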

3.2. Regularization-Based Edge-Decision Search Strategy

A key challenge after constructing the modular search space is how to search for the optimal architecture in the discrete search space in a differentiable manner. To this end, softmax transforms the search for network architectures from selecting discrete candidate operations into optimizing the probabilities of continuous mixed operations.

3.2.1. Bi-Level Optimization

The network weights $w$ and the architecture parameters $\alpha$ are the two sets of parameters that need to be optimized. $\alpha$ denotes the weights of the different operations/paths on all edges, while $w$ denotes the internal parameters of the operations. The bi-level optimization problem that jointly optimizes $\alpha$ and $w$ is formulated as

$$\min_{\alpha}\ \mathcal{L}_{val}\left(w^{*}(\alpha), \alpha\right) \quad \text{s.t.} \quad w^{*}(\alpha) = \arg\min_{w}\ \mathcal{L}_{train}(w, \alpha)$$

where $\mathcal{L}_{val}$ and $\mathcal{L}_{train}$ denote the validation and training losses, respectively. $\alpha$ is the upper-level variable, and $w$ is the lower-level variable. The optimal $\alpha$ is obtained by solving the above bi-level optimization problem, and the final neural architecture is then derived by discretizing the chosen operations.

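The discretization process selects the operation with the highest weight on each directed edge and discards the other operations:

$$o^{(i,j)} = \arg\max_{o \in \mathcal{O}} \alpha_o^{(i,j)}$$

where $\alpha_o^{(i,j)}$ is the architecture parameter of candidate operation $o$ on edge $(i, j)$ and $\mathcal{O}$ is the set of candidate operations.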
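The discretization step can be sketched as a per-edge argmax. In the following minimal Python example, the operation labels in `OPS` and the numeric architecture parameters are hypothetical values chosen only to show the mechanics.

```python
# Hypothetical labels for the candidate operations on every edge.
OPS = ["skip", "IR", "SE", "spectral_conv", "spatial_conv"]


def discretize(alpha):
    """Keep, for every edge, only the operation with the largest parameter."""
    return {
        edge: OPS[max(range(len(params)), key=lambda k: params[k])]
        for edge, params in alpha.items()
    }


# Toy architecture parameters for two edges of a cell.
alpha = {
    (0, 2): [0.1, 1.2, 0.3, -0.4, 0.0],
    (1, 2): [0.9, 0.2, 0.1, 0.3, 0.5],
}

architecture = discretize(alpha)
n_skip = sum(op == "skip" for op in architecture.values())
print(architecture, n_skip)
```

Counting the selected skip connections, as `n_skip` does here, is a simple way to monitor whether skip operations start to dominate the derived architecture.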

The bi-level optimization process above has the following problems: (1) In the later stage of the search, the number of skip connections in the selected architecture increases sharply, which is liable to result in performance degradation. (2) The weight sharing between subnets leads to inaccurate evaluation. Therefore, the edge decision criterion is exploited to alleviate these problems.

3.2.2. Edge Decision Criterion

The design of the selection criterion is crucial to guarantee that the best edge is chosen during edge decision, thereby maintaining the optimization of the supernet. Two aspects of edges should be considered: edge importance and selection certainty.

Edge Importance

Skip operations on important edges should have a low weight. Therefore, the edge importance is defined to measure the total weight of the non-skip operations on an edge:

Selection Certainty

Let the operation distribution denote the normalized softmax weights of the non-skip operations. Selection certainty is defined as the normalized entropy of the operation distribution to measure the certainty of the distribution:

Then, the edge importance and the selection certainty are normalized to calculate the final score, and the edge with the highest score is selected:

Here, the normalization denotes standard scaling. First, an edge is selected greedily according to the above edge decision criterion, i.e., the edge with the highest score. The corresponding mixed operation is then replaced with its optimal operation. The weights and architecture parameters of the remaining paths within the mixed operation are no longer needed, so they are gradually pruned during the optimization iterations, which drastically improves the efficiency of the search procedure. The remaining over-parameterized supernet (including the remaining network weights and architecture parameters) forms a new subproblem, which is also defined on a DAG. Furthermore, the operations on the edges are selected iteratively by solving the remaining subproblems. Therefore, the validation accuracy better reflects the final evaluation accuracy, as the model discrepancy is minimized in this procedure. Additionally, we ensure that each intermediate node eventually preserves two input edges. Once a node has two determined input edges, its other input edges are pruned.
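One possible reading of this criterion is sketched below in Python. It is a hedged sketch in the spirit of the SGAS criterion [51], adapted to non-skip operations as described above, and not the authors' implementation. In particular, the use of min-max scaling for the normalization, the definition of certainty as one minus the normalized entropy, the position of the skip operation (`skip_index`), and all function names are assumptions.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def min_max(values):
    """Scale a list to [0, 1]; a constant list maps to all ones."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def edge_decision(alpha, skip_index=0):
    """Pick the edge with the highest importance-times-certainty score.

    Assumes at least two non-skip candidates per edge, so that the
    entropy normalizer log(len(dist)) is nonzero.
    """
    edges = list(alpha)
    importance, certainty = [], []
    for edge in edges:
        probs = softmax(alpha[edge])
        non_skip = [p for k, p in enumerate(probs) if k != skip_index]
        mass = sum(non_skip)                 # edge importance
        dist = [p / mass for p in non_skip]  # renormalized non-skip weights
        entropy = -sum(p * math.log(p) for p in dist if p > 0.0)
        importance.append(mass)
        certainty.append(1.0 - entropy / math.log(len(dist)))
    scores = [i * c for i, c in zip(min_max(importance), min_max(certainty))]
    best = max(range(len(edges)), key=lambda k: scores[k])
    return edges[best], dict(zip(edges, scores))


# Toy parameters: index 0 is the skip operation on every edge.
alpha = {
    (0, 2): [0.0, 3.0, 0.0, 0.0],  # one non-skip operation clearly preferred
    (1, 2): [3.0, 0.0, 0.0, 0.0],  # dominated by the skip operation
    (0, 3): [0.0, 0.1, 0.0, 0.1],  # still undecided
}
best_edge, scores = edge_decision(alpha)
print(best_edge)
```

In this toy example the first edge is decided first, because it carries little skip weight and its non-skip distribution is sharply peaked. An edge dominated by the skip operation receives the lowest score and is left for a later iteration.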

3.2.3. Dynamic Regularization (DR)

After the edge decision, a regularization term that accounts for the number of skip connections guides the adjustment of the architecture parameters of the skip operations through a regularity factor. This approach effectively mitigates the issue of unfair competition among skip connections. More precisely, the dynamic regularity is defined as

The performance of the architecture, viewed as a function of the number of skip connections, is observed to follow a distribution close to a Gaussian. Consequently, a Gaussian function is introduced to represent this behavior:

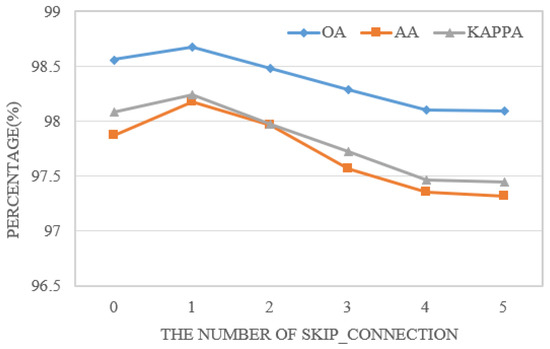

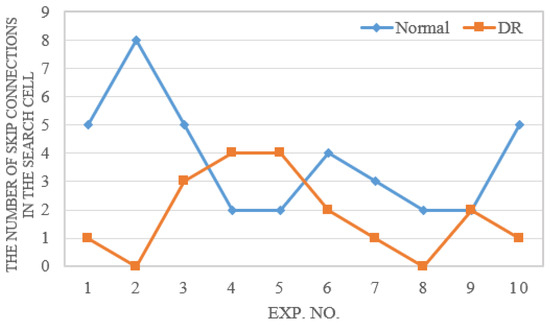

where the argument of the Gaussian function is the number of skip connections in the selected operations, and the remaining constants are the parameters of the Gaussian function. The dynamic regularity encourages the selection of skip connections when there are too few of them and discourages it when a large number of skip connections is already involved. As illustrated in Figure 4, a suitable number of skip connections keeps the architecture performance near its optimum and thus avoids the performance collapse caused by skip connections. The hyperparameters of the Gaussian function were estimated by fitting a Gaussian function to the numerical results. The whole searching workflow is illustrated in Figure 5, and an independent network without weight sharing is obtained.
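The qualitative behavior described above can be illustrated with a small Python sketch of a Gaussian-shaped factor over the number of skip connections. The functional form `a * exp(-(n - b)^2 / (2 c^2))`, the parameter names `b` and `c`, and the numeric values are assumptions for illustration only and are not the fitted values of this work. How the factor enters the regularization term is not modeled here.

```python
import math


def gaussian_factor(n_skip, a=1.0, b=2.0, c=1.0):
    """Gaussian-shaped factor that peaks when n_skip equals b.

    a is the peak height, b the preferred number of skip connections,
    and c the width; all three values here are placeholders.
    """
    return a * math.exp(-((n_skip - b) ** 2) / (2.0 * c ** 2))


values = [gaussian_factor(n) for n in range(7)]
for n, v in enumerate(values):
    print(n, round(v, 4))
```

The factor grows while the number of skip connections is below the preferred count and decays once it exceeds that count, which mirrors the encourage-then-discourage behavior of the dynamic regularity.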

Figure 4. The performance impact of the number of skip connections on Pavia.

Figure 5. The searching workflow with edge decision and dynamic regularization.

3.3. Performance Evaluation

Designing a suitable loss function is crucial when searching for the optimal architecture. The cross-entropy loss measures the difference between the predicted value and the ground truth in the classification task and is defined as

$$\mathcal{L}_{CE} = -\frac{1}{n} \sum_{i=1}^{n} y_i \log \hat{y}_i$$

where n denotes the number of samples, $y_i$ is the ground-truth label of the i-th sample, and $\hat{y}_i$ is the corresponding prediction.

The generalization ability of the model can be quantified by the difference between the training and evaluation metrics. For two models with similar training metrics, the model with better evaluation metrics is more generalizable because it can better predict an unknown dataset. The training dataset is used in the searching phase to optimize the model weights. The validation dataset is only available for model searching. The generalization loss is defined as the difference between the training metric $M_{train}$ and the validation metric $M_{val}$, which measures the generalization ability of the model and is designed to guide the searching procedure:

$$\mathcal{L}_{G} = \left| M_{train} - M_{val} \right|$$

where $|\cdot|$ is the absolute value. To further improve the robustness and generalization performance of the searched model, we integrate the Beta-Decay regularization [55]. Specifically, the Beta-Decay regularization imposes constraints that keep the values and variances of the activated architecture parameters from becoming too large:

$$\mathcal{L}_{Beta} = \log\left( \sum_{k=1}^{|\mathcal{O}|} e^{\alpha_k} \right)$$

where $\mathcal{O}$ is the set of candidate operations and $\alpha_k$ is the architectural parameter associated with operation k. We construct the above losses in an automatic way as optimization objectives.

where the weighting coefficients are hyperparameters and the learnable parameters adaptively balance the weights of the different losses. The whole procedure of the proposed EL-NAS is summarized in Algorithm 1.

Algorithm 1. The overall procedure of the proposed EL-NAS.
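The individual loss terms of Section 3.3 can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions: the cross-entropy is averaged over samples, the generalization loss is the absolute gap between two scalar metrics, and the Beta-Decay term is taken to be the log-sum-exp of the architecture parameters on one edge, which is our reading of [55]. How the terms are weighted and combined is deliberately left out, and all function names are hypothetical.

```python
import math


def cross_entropy(probabilities, labels):
    """Mean negative log-probability assigned to the true class."""
    total = sum(math.log(p[y]) for p, y in zip(probabilities, labels))
    return -total / len(labels)


def generalization_loss(train_metric, val_metric):
    """Absolute gap between a training metric and a validation metric."""
    return abs(train_metric - val_metric)


def beta_decay(alphas):
    """Log-sum-exp of the architecture parameters of one edge (stable form)."""
    m = max(alphas)
    return m + math.log(sum(math.exp(a - m) for a in alphas))


# Toy values: two samples with two classes, and two scalar accuracies.
probs = [[0.5, 0.5], [0.25, 0.75]]
labels = [0, 1]
print(cross_entropy(probs, labels))
print(generalization_loss(0.95, 0.90))
print(beta_decay([0.0, 0.0]))
```

The log-sum-exp grows with both the magnitude and the spread of the parameters, which is why penalizing it keeps the architecture parameters from becoming too large.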

4. Experiments

In this section, we introduce the five HSI data sets and the evaluation metrics used in this paper. The experimental performance, ablation studies, model parameters, and running time are discussed and analyzed. To verify the effectiveness of EL-NAS in different scenarios, we also evaluate our method across independent scenes acquired with the same sensor (IN and SA) and across independent scenes acquired with different sensors (IN, UP, HU, SA, and IMDB).

4.1. Hyperspectral Data Sets

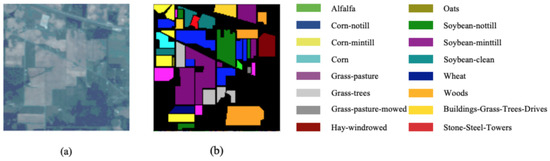

Indian Pines (IN) was collected by the AVIRIS sensor over northwestern Indiana, USA, in 1992. The scene has 220 spectral channels covering a spectral range of 0.2 to 2.4 μm, and each band has a spatial size of 145 × 145 pixels. The image has a spatial resolution of 20 m/pixel and contains 16 land-cover categories, of which two-thirds are agriculture and one-third is forest or other natural perennial vegetation. Figure 6 shows the three-band false-color composite of the IN image and the corresponding ground truth data.

Figure 6. IN. (a) False-color image. (b) Ground-truth map.

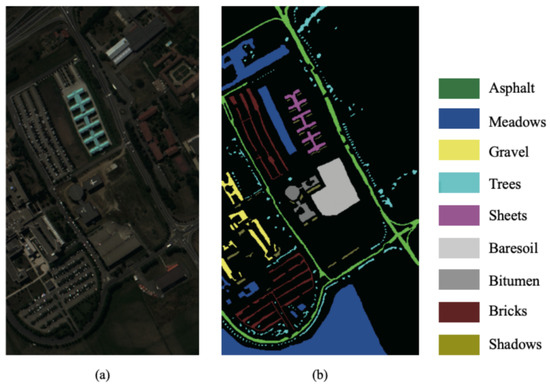

The Pavia University (UP) image was recorded by the ROSIS-03 sensor over Pavia in northern Italy and captures the urban area around the University of Pavia. The image size is 610 × 340 × 115, the spatial resolution is 1.3 m/pixel, and the spectral coverage is 0.43 to 0.86 μm. The image contains nine categories. Before the experiment, 12 spectral bands and some samples containing no information were removed. Figure 7 shows the three-band false-color composite of the UP image and the corresponding ground truth data.

Figure 7.

UP. (a) False-color image. (b) Ground-truth map.

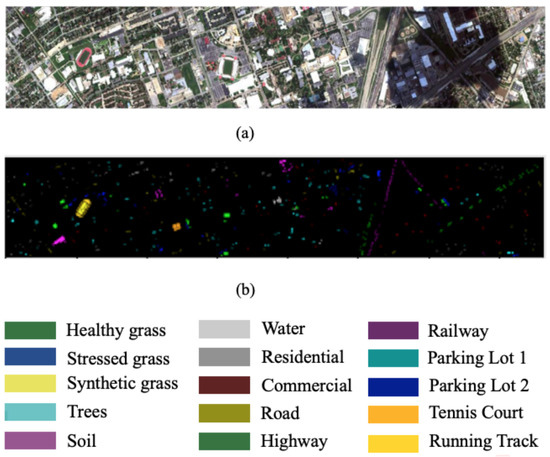

The Houston (HU) data set was collected by the Compact Airborne Spectrographic Imager (CASI) in 2013 over the University of Houston campus and adjacent urban areas. HU has 144 spectral channels covering 0.38 to 1.05 μm, and the image is 1905 × 349 pixels with a spatial resolution of 2.5 m/pixel. It has 15 ground-truth classes with 15,029 labeled pixels. Figure 8 shows the three-band false-color composite of the HU image and the corresponding ground-truth map.

Figure 8.

HU. (a) False-color image. (b) Ground-truth map.

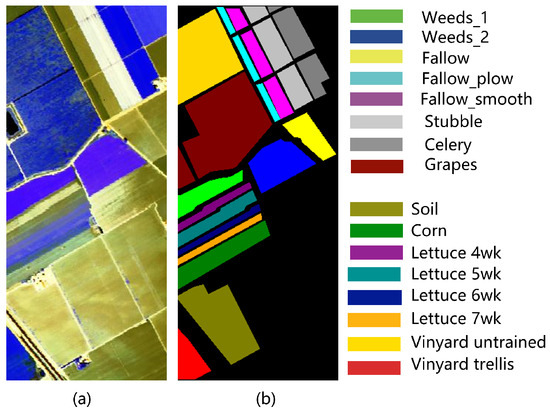

Salinas (SA) was captured by the 224-band AVIRIS sensor over the Salinas Valley in California and features a high spatial resolution of 3.7 m/pixel. The covered area comprises 512 lines by 217 samples. As with Indian Pines, 20 water absorption bands were discarded, leaving 204 bands. The image contains 16 classes. Figure 9 shows the three-band false-color composite of the SA image and the corresponding ground-truth map.

Figure 9.

SA. (a) False-color image. (b) Ground-truth map.

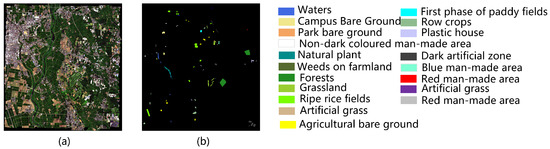

The Chikusei (IMDB) data set was collected by a Hyperspec-VNIR-C visible and near-infrared hyperspectral camera in Chikusei, Ibaraki, Japan, on 19 July 2014. It contains 19 classes and has 2517 × 2335 pixels with a spatial resolution of 2.5 m/pixel. It consists of 128 spectral bands ranging from 363 to 1018 nm. The IMDB data set is used in the sensor-independent scenario to verify the effectiveness of the proposed EL-NAS. Figure 10 shows the three-band false-color composite of the IMDB image and the corresponding ground-truth map.

Figure 10.

IMDB. (a) False-color image. (b) Ground-truth map.

4.2. Experimental Configuration

We take a pixel-centered patch of size as input data. The classification results are reported as the mean and standard deviation over five independent random runs to avoid possible bias caused by random sampling. The number of samples of each class in the training and test sets is shown in Table 1.
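As a rough illustration of what a pixel-centered patch means, the sketch below cuts a square spatial window around one labeled pixel from a hyperspectral cube stored as nested lists. The function name `extract_patch`, the odd patch size, and the zero-filling of positions outside the image are assumptions made for this example; the text does not specify the border handling, and the patch size used here is only a placeholder.

```python
def extract_patch(cube, row, col, size):
    """Return a size x size x bands patch centered on pixel (row, col).

    cube is indexed as cube[row][col][band]. Positions that fall outside
    the image are zero-filled (an assumed border policy).
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so that the patch has a center pixel")
    height, width, bands = len(cube), len(cube[0]), len(cube[0][0])
    half = size // 2
    patch = []
    for r in range(row - half, row + half + 1):
        patch_row = []
        for c in range(col - half, col + half + 1):
            if 0 <= r < height and 0 <= c < width:
                patch_row.append(list(cube[r][c]))   # copy the spectrum
            else:
                patch_row.append([0.0] * bands)      # outside the image
        patch.append(patch_row)
    return patch


# Toy 3 x 3 cube with 2 bands; the values only encode the pixel position.
toy_cube = [[[float(r * 3 + c), 1.0] for c in range(3)] for r in range(3)]
corner_patch = extract_patch(toy_cube, row=0, col=0, size=3)
print(corner_patch[1][1])  # spectrum of the center pixel (0, 0)
```

In practice the same window would normally be cut with array slicing on a padded cube; nested lists are used here only to keep the sketch dependency-free.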

Table 1.

Sample setup of IN, UP and HU data sets.

All experiments in this paper are executed on a computer with an Intel Xeon W-2123 CPU at 3.60 GHz with 32-GB RAM and an NVIDIA GeForce RTX 2080 Ti graphics processing unit (GPU) with 27.8-GB RAM. The software environment is 64-bit Windows 10 with the DL framework PyTorch 1.6.0.

4.3. Search Space Configuration

Five types of candidate operations are selected to construct the modular search space (MSS):

- Lightweight modules ( and inverted residual modules, IR).

- Three-dimensional decomposition convolution (3D convolution with kernel size of (SPA) and (SPE)).

- Attention modules (SE).

- Skip connection ().

- None ().

During the searching phase, a network is constructed using two normal cells. Within each normal cell, the stride for each convolution is set to 1. Throughout the search process, each cell comprises eight nodes, which include five intermediate nodes and a total of 20 edges.
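The node and edge counts quoted above are consistent with a DARTS-style cell if one assumes (the text does not state this explicitly) that the eight nodes consist of two input nodes, five intermediate nodes, and one output node, and that every intermediate node receives a candidate edge from each input node and each earlier intermediate node. A minimal sketch of that count, with a hypothetical helper name:

```python
def count_cell_edges(num_inputs: int = 2, num_intermediate: int = 5) -> int:
    """Count candidate edges in a DARTS-style cell.

    Intermediate node i (0-based) is fed by all input nodes and by the
    i intermediate nodes before it, so it has num_inputs + i incoming edges.
    """
    return sum(num_inputs + i for i in range(num_intermediate))


edges = count_cell_edges()   # 2 + 3 + 4 + 5 + 6
nodes = 2 + 5 + 1            # inputs + intermediate + output (assumed split)
print(edges, nodes)          # prints "20 8"
```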

4.4. Hyperparameter Settings

In the searching phase, we divide the training set into training and validation samples at a ratio of 0.5. Stochastic gradient descent (SGD) is used to optimize the model weight W, the initial learning rate is 0.005, the momentum is 0.9, and the weight decay is . For the architecture parameter A, an Adam optimizer with an initial learning rate of , momentum , and weight decay of is used. Edge decisions are made according to the selection criterion, and a complete supernet is not trained during the entire searching phase. After 50 epochs of warm-up, the edge decision is executed every five epochs. In addition, the batch size is increased by 16 after each edge decision, which can further improve the search efficiency.
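The timing of the edge decisions and the batch-size growth described above can be sketched as a simple schedule. This is only an illustration under stated assumptions: the first decision is placed right at the end of the 50-epoch warm-up, the number of decisions is left as a parameter, and `initial_batch_size` is a placeholder because the text does not give the starting batch size of the search phase.

```python
def edge_decision_schedule(num_decisions, warmup_epochs=50, interval=5,
                           initial_batch_size=32, batch_increment=16):
    """Return (epoch, batch_size) pairs, one per edge decision.

    The k-th decision (k = 0, 1, ...) is placed at epoch
    warmup_epochs + k * interval, and the batch size used after it is
    initial_batch_size + (k + 1) * batch_increment.
    initial_batch_size is a placeholder value, not taken from the paper.
    """
    schedule = []
    for k in range(num_decisions):
        epoch = warmup_epochs + k * interval
        batch_size = initial_batch_size + (k + 1) * batch_increment
        schedule.append((epoch, batch_size))
    return schedule


for epoch, batch_size in edge_decision_schedule(num_decisions=3):
    print(f"edge decision at epoch {epoch}, batch size afterwards {batch_size}")
```

Growing the batch size is plausible because every decided edge removes candidate operations from the supernet, which frees memory for larger batches in later epochs.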

In the training phase, we train the model for 1000 epochs with a batch size of 128. The model weight W is optimized by SGD with an initial learning rate of 0.005, a momentum of 0.9, and a weight decay of . Other essential hyperparameters include gradient clipping set to 1 and dropout probability set to 0.3.

4.5. Ablation Study

4.5.1. Different Candidate Operations

In this section, we analyze the effects of different candidate operations and verify the effectiveness of the modular search space; the results are shown in Table 2. The comparison between IR and BASE shows that the lightweight module performs better than the basic convolution. The channel attention SE is well suited to data with many spectral bands and significantly boosts performance. Adding SPE and SPA further improves performance because they enhance the ability to extract 3D features of hyperspectral images. The full set of MSS candidate operations achieves the best performance.

Table 2.

The performance of different candidate operations.

4.5.2. Strategy Optimization Scheme

Three distinct architectural designs were explored within each optimization strategy to assess their impact on the search process. The evaluation results for these architectures are presented in Table 3. The regularization term serves to constrain exceedingly large , thereby allowing for the inclusion of architectural parameters that better represent high-quality architectures. The term enhances model performance by approximately , corroborating the notion that a more generalized search model is likely to yield an optimally performing architecture. Figure 11 compares the number of skip connections in models with and without Dynamic Regularization (DR) across ten different searches. DR enables the automatic, dynamic adjustment of weights based on the current number of skip connections during each iteration, thereby reducing the frequency of skip operations and leading to more stable search outcomes.
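The skip-connection counts compared in Figure 11 can be obtained by discretizing every edge to its strongest operation and counting how often that operation is the skip connection. The sketch below follows the usual DARTS discretization convention (argmax over the architecture parameters, with the None operation excluded), which is an assumption here rather than something the text spells out. The operation labels and the toy parameter values are illustrative placeholders, not searched results.

```python
OPS = ["IR", "SPA", "SPE", "SE", "skip", "none"]  # labels assumed for illustration


def select_operations(alphas, ops=OPS, excluded=("none",)):
    """Pick, for every edge, the operation with the largest architecture parameter.

    alphas holds one list per edge, with one value per entry in ops.
    Operations listed in excluded are never selected.
    """
    selected = []
    for edge_alphas in alphas:
        candidates = [(a, op) for a, op in zip(edge_alphas, ops) if op not in excluded]
        best_alpha, best_op = max(candidates, key=lambda pair: pair[0])
        selected.append(best_op)
    return selected


def count_skips(selected, skip_label="skip"):
    """Count how many edges ended up with the skip connection."""
    return sum(op == skip_label for op in selected)


toy_alphas = [
    [0.9, 0.1, 0.2, 0.3, 0.4, 1.5],  # "none" is largest but excluded, so IR wins
    [0.1, 0.2, 0.1, 0.3, 0.8, 0.0],  # skip wins
    [0.2, 0.1, 0.7, 0.3, 0.6, 0.0],  # SPE wins
]
chosen = select_operations(toy_alphas)
print(chosen, count_skips(chosen))
```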

Table 3.

The impact of different strategy optimization schemes in three individual searches.

Figure 11.

The number of skip connections in the operations selected in ten independent searches.

4.6. Architecture Evaluation

In the first set of experiments, we verify the performance of the proposed EL-NAS under the same scenario (searching and testing on the same data set). For each of the three HSI data sets (IN, UP, and HU), we randomly select of the whole labeled samples as the training set and of the whole labeled samples as the validation set, and the remaining is used as the test set. We first search for the architecture on the training set and retain the optimal architecture for evaluation. The optimal cell structures obtained from the three data sets are shown in Figure 12. We compare the proposed EL-NAS model with the traditional method SVMCK, six DL methods (2D-CNN, 3D-CNN, DFFN, SSRN, DcCapsGAN, and LMAFN), and one NAS method for HSI classification (Auto-CNN, i.e., 3D-Auto-CNN).
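The random division of labeled samples into training, validation, and test sets can be sketched as follows. The helper `split_labeled_pixels` is hypothetical, the split is done class by class (an assumption; the text only says the samples are selected randomly), and the fractions in the usage lines are placeholders rather than the proportions used in the experiments.

```python
import random
from collections import defaultdict


def split_labeled_pixels(labels, train_frac, val_frac, seed=0):
    """Split labeled pixel indices into train/val/test sets class by class.

    labels maps a pixel index to its class id (labeled pixels only).
    train_frac and val_frac are per-class fractions; the rest goes to test.
    """
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must lie strictly between 0 and 1")
    by_class = defaultdict(list)
    for index, cls in labels.items():
        by_class[cls].append(index)

    rng = random.Random(seed)          # fixed seed makes a run reproducible
    train, val, test = [], [], []
    for cls in sorted(by_class):
        indices = sorted(by_class[cls])
        rng.shuffle(indices)
        n_train = max(1, round(train_frac * len(indices)))
        n_val = max(1, round(val_frac * len(indices)))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test


# Toy labels: 300 pixels spread evenly over 3 classes; fractions are placeholders.
toy_labels = {i: i % 3 for i in range(300)}
train_idx, val_idx, test_idx = split_labeled_pixels(toy_labels, 0.1, 0.1, seed=1)
print(len(train_idx), len(val_idx), len(test_idx))
```

Repeating the call with five different seeds would correspond to the five independent random runs over which the results are averaged.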

Figure 12.

Best cell architecture on different dataset settings. (a) IN; (b) UP; (c) HU.

According to the quantitative comparison results in Table 4, Table 5 and Table 6, DL-based algorithms achieve better classification results than the traditional method SVMCK on the three data sets. CNN-based methods can be divided into 2D-CNN and 3D-CNN. Overall, 2D-CNN can extract more discriminative SS features through the convolution operation and the nonlinear activation function. Compared with 2D-CNN, 3D-CNN achieves better classification accuracy by fully learning spectral features. Both DFFN and SSRN fuse SS features, and SSRN yields better results than DFFN. DcCapsGAN integrates a GAN and capsule networks to preserve the relative locations of features and further improve classification performance. LMAFN adopts lightweight structures, which greatly increase the network depth while reducing the model size and enhance the nonlinear fitting ability of the model. However, the above traditional algorithms and manually designed DL-based methods are subject to the constraints of subjective human cognition. Auto-CNN achieves satisfactory results by generating the neural architecture automatically.

Table 4.

Classification results of different methods for labeled pixels of the IN data set.

Table 5.

Classification results of different methods for labeled pixels of the UP data set.

Table 6.

Classification results of different methods for labeled pixels of the HU data set.

The results show that the proposed EL-NAS outperforms all comparison algorithms, including Auto-CNN, on all three data sets. On the University of Pavia (UP) data set, for example, EL-NAS achieves a classification accuracy of . This is more accurate than the Spectral–Spatial Residual Network (SSRN), which scores , higher than DcCapsGAN with , greater than the Lightweight Multiscale Attention Fusion Network (LMAFN) at , and notably superior to Auto-CNN, which has an accuracy of . We attribute the superior performance of EL-NAS to its integration of a lightweight structure, an attention module, and 3D decomposition convolutions, which together improve computational efficiency and focus the network on key features. Moreover, EL-NAS leverages automated architecture search, which avoids manual design biases and delivers an optimized, resource-efficient model.

In addition, Table 7 compares the parameter counts and network depths of 2D-CNN, 3D-CNN, DFFN, SSRN, DcCapsGAN, LMAFN, and EL-NAS on the three data sets. On the UP data set, EL-NAS has only 175,657 parameters, fewer than the 443,929 parameters of DFFN, the 229,261 parameters of SSRN, and the 21,468,326 parameters of DcCapsGAN. While reducing the model size, EL-NAS decreases the network depth to 13 layers and achieves the best accuracies on the three data sets. Table 8 presents the running time of DcCapsGAN, 2D-CNN, 3D-CNN, DFFN, SSRN, LMAFN, Auto-CNN, and EL-NAS, including searching time, training time, and test time. For the three data sets, our model takes 68.22 s, 62.66 s, and 71.39 s for searching, 87.81 s, 117.81 s, and 147.43 s for training, and 0.88 s, 3.42 s, and 1.28 s for testing, respectively. Note that we use more efficient and complex modules than Auto-CNN, so the searched network takes slightly longer to train and test. EL-NAS runs faster than all compared handcrafted DL algorithms, and its search time is also shorter than that of Auto-CNN. These results attest to the efficiency of EL-NAS in both memory utilization and computational overhead, which is largely attributable to the lightweight modules and the expedited search process enabled by the edge decision strategy.
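To put the figures quoted above into proportion, the short script below computes how many times larger each compared model is than EL-NAS on the UP data set and sums the EL-NAS searching, training, and test times per data set. All numbers are copied from the text; the only assumption is that the three values listed for each stage follow the order IN, UP, HU.

```python
# Parameter counts on the UP data set, as quoted in the text.
params = {
    "EL-NAS": 175657,
    "DFFN": 443929,
    "SSRN": 229261,
    "DcCapsGAN": 21468326,
}

# EL-NAS running times in seconds: (searching, training, test).
# The data set order IN, UP, HU is assumed from the surrounding text.
el_nas_times = {
    "IN": (68.22, 87.81, 0.88),
    "UP": (62.66, 117.81, 3.42),
    "HU": (71.39, 147.43, 1.28),
}


def relative_size(model, reference="EL-NAS"):
    """Return how many times more parameters `model` has than `reference`."""
    return params[model] / params[reference]


for name in ("DFFN", "SSRN", "DcCapsGAN"):
    print(f"{name}: {relative_size(name):.2f}x the parameters of EL-NAS")

for dataset, stages in el_nas_times.items():
    print(f"{dataset}: total {sum(stages):.2f} s")
```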

Table 7.

Parameter counts and depths of different models for three data sets.

Table 8.

Running times (s) for three datasets: ‘Training’ refers to the total duration required for model training. ‘Test’ denotes the complete time taken for testing. ‘Searching’ indicates the time needed to complete a search.

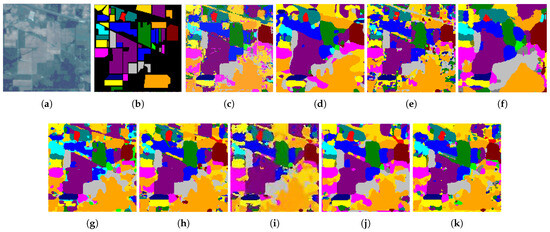

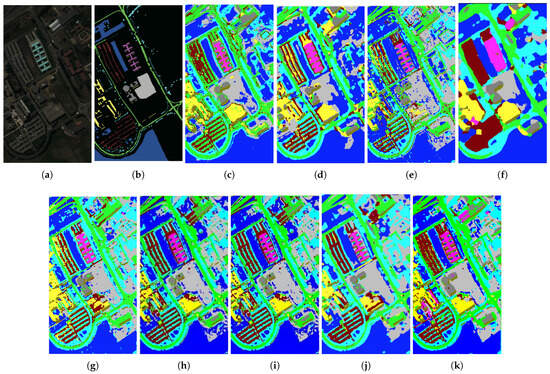

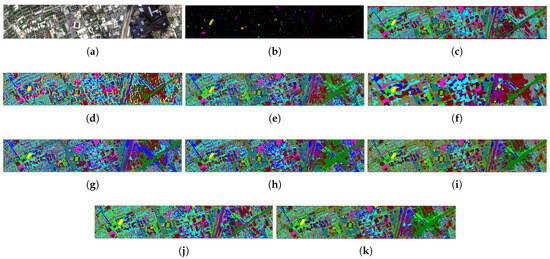

Figure 13, Figure 14 and Figure 15 illustrate the full classification maps obtained by the different algorithms on the three HSI data sets. The pixel-based approach SVMCK presents more random noise and more errors, while SS-based approaches such as 2D-CNN, 3D-CNN, DFFN, SSRN, DcCapsGAN, and LMAFN produce smoother results. In addition, compared with the other methods, LMAFN exhibits a smoother classification result and higher accuracy because it simultaneously considers spatial and continuous spectral features. Notably, Auto-CNN obtains precise classification results, which demonstrates the effectiveness of automatically designed neural networks for HSI classification. Nonetheless, compared with the aforementioned algorithms, the proposed EL-NAS not only achieves superior classification accuracy but also does so with fewer parameters. This is accomplished by combining lightweight modules with an efficient automated architecture search algorithm.

Figure 13.

Classification maps for IN. (a) False-color image; (b) ground-truth map; (c) SVMCK; (d) 2D-CNN; (e) 3D-CNN; (f) DFFN; (g) SSRN; (h) DcCapsGAN; (i) Auto-CNN; (j) LMAFN; (k) EL-NAS.

Figure 14.

Classification maps for UP. (a) False-color image; (b) ground-truth map; (c) SVMCK; (d) 2D-CNN; (e) 3D-CNN; (f) DFFN; (g) SSRN; (h) DcCapsGAN; (i) Auto-CNN; (j) LMAFN; (k) EL-NAS.

Figure 15.

Classification maps for HU. (a) False-color image; (b) ground-truth map; (c) SVM; (d) SVMCK; (e) 2D-CNN; (f) 3D-CNN; (g) DFFN; (h) SSRN; (i) DcCapsGAN; (j) Auto-CNN; (k) EL-NAS.

4.7. Cross Domain Experiment

In the second phase of our experiments, we aim to validate the cross-dataset and cross-sensor capabilities of our proposed EL-NAS framework. Specifically, we conduct tests under two distinct scenarios: a dataset-independent scenario, where the neural network architecture is optimized within the same sensor type but across different datasets, and a sensor-independent scenario, where the architecture is optimized across varying sensor types. To facilitate domain adaptation within the classification network, we have engineered dataset-specific classification layers in the latter stages of the network. Additionally, the convolutional layers preceding the shared cells are designed to adapt to diverse datasets.

4.7.1. Cross-Datasets Architecture Search of EL-NAS

In this section, we use the IN and SA data sets, both collected by the AVIRIS sensor. The architecture search is conducted on one data set, and the optimal cell structure identified is then employed to construct the classification network for the other. According to Table 9, searching on the IN data set yields classification accuracies of 94.70% and 95.99% on the SA data set with 10 and 20 labeled samples per class, respectively. Conversely, searching on the SA data set yields accuracies of 88.60% and 90.39% on the IN data set with 10 and 20 labeled samples per class, respectively.

Table 9.

Classification results of cross-datasets architecture search of EL-NAS.

The experimental results further substantiate the efficacy of the proposed EL-NAS method in key evaluation metrics. Notably, the use of a substantial auxiliary dataset (labeled as 10% IN or SA) for architecture searching not only matches but often surpasses the performance achieved using the target datasets. These findings offer an efficient methodology for automatic neural network architecture design across different application scenarios under the same acquisition sensor.

4.7.2. Cross-Sensors Architecture Search of EL-NAS

In this part, we adopt five datasets collected by four kinds of HSI acquisition sensors (i.e., IN and SA from AVIRIS, UP from ROSIS, HU from CASI, IMDB from Hyperspec-VNIR-C). We conduct architecture searching on one of the above datasets, and the classification network derived by the searched architecture is applied to other datasets. The experimental results of the search on HU are shown in Table 10. Our findings indicate that when target data volume is limited, the proposed EL-NAS method, utilizing a large auxiliary dataset (labeled as 10% HU), can achieve comparable or superior performance on key evaluation metrics, compared to using target datasets. These results offer an effective optimization strategy for cross-domain learning applications facing data scarcity, demonstrating that EL-NAS can automatically yield a neural network architecture design with satisfactory results even under different datasets collected by different acquisition sensors.

Table 10.

Classification results of cross-sensors architecture search of EL-NAS.

5. Conclusions

In this article, a novel EL-NAS is designed on the basis of gradient-based NAS to realize efficient automatic architecture design for HSI classification. The 3D decomposition convolution, lightweight structure, and attention module are combined to construct an efficient, lightweight attention search space that accelerates the search procedure and improves the search results. To mitigate the performance collapse caused by the number of skip connections in the architecture search procedure, edge decision and dynamic regularization are exploited, based on the entropy of the probability distribution estimated over non-skip operations and on the number of skip connections. With edge decisions and the resulting decrease in weight sharing, consistency between the search and evaluation procedures is ensured. In performance evaluation, we also construct a generalization loss to further improve the search and classification performance. The experiments performed on three different HSI data sets demonstrate that the proposed EL-NAS outperforms other state-of-the-art comparison algorithms in classification accuracy, search and computational efficiency, number of parameters, and visual quality of the classification maps. In the cross-scenario experiments, EL-NAS also shows satisfying performance across different data sets collected by various acquisition sensors. The low search cost, parameter count, and computational burden of the proposed EL-NAS can pave the way for its practical application in HSI classification and edge computing.

Author Contributions

Conceptualization, J.W. and J.H.; methodology, J.W. and J.H.; validation, Y.L., Z.H., S.H. and Y.Y.; investigation, J.W., J.H. and Y.L.; writing—original draft preparation, J.W. and J.H.; writing—review and editing, J.W. and J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under grant numbers 61801353 and 61977052, in part by GHfund B under grant numbers 202107020822 and 202202022633, in part by the China Postdoctoral Science Foundation funded project under grant number 2018M633474, and in part by the China Aerospace Science and Technology Corporation Joint Laboratory for Innovative Onboard Computer and Electronic Technologies under grant number 2023KFKT001-2.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Lacar, F.M.; Lewis, M.M.; Grierson, I.T. Use of hyperspectral imagery for mapping grape varieties in the Barossa Valley, South Australia. In Proceedings of the Geoscience and Remote Sensing Symposium, Sydney, Australia, 9–13 July 2001.

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Zhang, F.; Wu, L.; Zhu, D.; Liu, Y. Social sensing from street-level imagery: A case study in learning spatio-temporal urban mobility patterns. ISPRS J. Photogramm. Remote Sens. 2019, 153, 48–58.

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X.; Du, B. Hyperspectral remote sensing image subpixel target detection based on supervised metric learning. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4955–4965.

- Zhong, Y.; Wang, X.; Xu, Y.; Wang, S.; Jia, T.; Hu, X.; Zhao, J.; Wei, L.; Zhang, L. Mini-UAV-Borne Hyperspectral Remote Sensing: From Observation and Processing to Applications. IEEE Geosci. Remote Sens. Mag. 2018, 6, 46–62.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral image classification via kernel sparse representation. IEEE Trans. Geosci. Remote Sens. 2012, 51, 217–231.

- Yi, C.; Nasrabadi, N.M.; Tran, T.D. Classification for hyperspectral imagery based on sparse representation. In Proceedings of the Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Reykjavik, Iceland, 14–16 June 2010.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Peng, J.; Zhou, Y.; Chen, C. Region-Kernel-Based Support Vector Machines for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4810–4824.

- Camps-Valls, G.; Bruzzone, L. Kernel-based methods for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1351–1362.

- Wang, J.; Jiao, L.; Liu, H.; Yang, S. Hyperspectral Image Classification by Spatial–Spectral Derivative-Aided Kernel Joint Sparse Representation. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2485–2500.

- Wang, J.; Jiao, L.; Shuang, W.; Hou, B.; Fang, L. Adaptive Nonlocal Spatial–Spectral Kernel for Hyperspectral Imagery Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1–16.

- Saxena, L. Recent advances in deep learning. Comput. Rev. 2016, 57, 563–564.

- Zhang, H.; Li, Y.; Zhang, Y.; Shen, Q. Spectral-spatial classification of hyperspectral imagery using a dual-channel convolutional neural network. Remote Sens. Lett. 2017, 8, 438–447.

- Zhong, Z.; Li, J.; Luo, Z.; Chapman, M. Spectral-Spatial Residual Network for Hyperspectral Image Classification: A 3-D Deep Learning Framework. IEEE Trans. Geosci. Remote Sens. 2017, 56, 847–858.

- Slavkovikj, V.; Verstockt, S.; Neve, W.D.; Hoecke, S.V.; Walle, R. Hyperspectral Image Classification with Convolutional Neural Networks. In Proceedings of the 23rd ACM International Conference, Montreal, QC, Canada, 18–22 October 2021.

- He, M.; Bo, L.; Chen, H. Multi-scale 3D deep convolutional neural network for hyperspectral image classification. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017.

- Mou, L.; Ghamisi, P.; Zhu, X.X. Unsupervised Spectral-Spatial Feature Learning via Deep Residual Conv-Deconv Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 391–406.

- Song, W.; Li, S.; Fang, L.; Lu, T. Hyperspectral Image Classification With Deep Feature Fusion Network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3173–3184.

- Xi, B.; Li, J.; Diao, Y.; Li, Y.; Li, Z.; Huang, Y.; Chanussot, J. DGSSC: A Deep Generative Spectral-Spatial Classifier for Imbalanced Hyperspectral Imagery. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 1535–1548.

- Zhang, H.; Li, Y.; Jiang, Y.; Wang, P.; Shen, C. Hyperspectral Classification Based on Lightweight 3-D-CNN with Transfer Learning. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5813–5828.

- Wang, J.; Guo, S.; Huang, R.; Li, L.; Jiao, L. Dual-Channel Capsule Generation Adversarial Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

- Yang, X.; Cao, W.; Lu, Y.; Zhou, Y. QTN: Quaternion Transformer Network for Hyperspectral Image Classification. IEEE Trans. Circuits Syst. Video Technol. 2023.

- Wang, J.; Huang, R.; Guo, S.; Li, L.; Zhu, M.; Yang, S.; Jiao, L. NAS-Guided Lightweight Multiscale Attention Fusion Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 8754–8767.

- Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.; Dollar, P. Designing Network Design Spaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2020.

- Liu, B.; Yu, X.; Yu, A.; Wan, G. Deep convolutional recurrent neural network with transfer learning for hyperspectral image classification. J. Appl. Remote Sens. 2018, 12, 026028.

- He, X.; Chen, Y.; Ghamisi, P. Heterogeneous transfer learning for hyperspectral image classification based on convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2019, 58, 3246–3263.

- Liu, X.; Hu, Q.; Cai, Y.; Cai, Z. Extreme learning machine-based ensemble transfer learning for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3892–3902.

- Jaderberg, M.; Vedaldi, A.; Zisserman, A. Speeding up Convolutional Neural Networks with Low Rank Expansions. arXiv 2014, arXiv:1405.3866.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. Comput. Sci. 2015, 14, 38–39.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. Cvt: Introducing convolutions to vision transformers. arXiv 2021, arXiv:2103.15808.

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. arXiv 2016, arXiv:1611.01578.

- Pham, H.; Guan, M.; Zoph, B.; Le, Q.; Dean, J. Efficient neural architecture search via parameters sharing. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 4095–4104.

- Baker, B.; Gupta, O.; Naik, N.; Raskar, R. Designing neural network architectures using reinforcement learning. arXiv 2016, arXiv:1611.02167.

- Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-scale evolution of image classifiers. In Proceedings of the International Conference on Machine Learning, PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 2902–2911.

- Liu, H.; Simonyan, K.; Vinyals, O.; Fernando, C.; Kavukcuoglu, K. Hierarchical representations for efficient architecture search. arXiv 2017, arXiv:1711.00436.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

- Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. Mnasnet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2820–2828.

- Liu, H.; Simonyan, K.; Yang, Y. Darts: Differentiable architecture search. arXiv 2018, arXiv:1806.09055.

- Li, C.; Ning, J.; Hu, H.; He, K. Enhancing the Robustness, Efficiency, and Diversity of Differentiable Architecture Search. arXiv 2022, arXiv:2204.04681.

- Xia, X.; Xiao, X.; Wang, X.; Zheng, M. Progressive Automatic Design of Search Space for One-Shot Neural Architecture Search. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2455–2464.

- Liu, Y.; Li, T.; Zhang, P.; Yan, Y. Improved conformer-based end-to-end speech recognition using neural architecture search. arXiv 2021, arXiv:2104.05390.

- Li, H.; Wu, G.; Zheng, W.S. Combined depth space based architecture search for person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6729–6738.

- Zhang, H.; Gong, C.; Bai, Y.; Bai, Z.; Li, Y. 3-D-ANAS: 3-D Asymmetric Neural Architecture Search for Fast Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–19.

- Xue, X.; Zhang, H.; Fang, B.; Bai, Z.; Li, Y. Grafting Transformer Module on Automatically Designed ConvNet for Hyperspectral Image Classification. arXiv 2021, arXiv:2110.11084.

- Liang, H.; Zhang, S.; Sun, J.; He, X.; Huang, W.; Zhuang, K.; Li, Z. Darts+: Improved differentiable architecture search with early stopping. arXiv 2019, arXiv:1909.06035.

- Xu, Y.; Xie, L.; Zhang, X.; Chen, X.; Qi, G.J.; Tian, Q.; Xiong, H. PC-DARTS: Partial channel connections for memory-efficient architecture search. arXiv 2019, arXiv:1907.05737.

- Chu, X.; Wang, X.; Zhang, B.; Lu, S.; Wei, X.; Yan, J. DARTS-: Robustly stepping out of performance collapse without indicators. arXiv 2020, arXiv:2009.01027.

- Li, G.; Qian, G.; Delgadillo, I.C.; Muller, M.; Thabet, A.; Ghanem, B. Sgas: Sequential greedy architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1620–1630.

- Chu, X.; Zhang, B.; Xu, R. Fairnas: Rethinking evaluation fairness of weight sharing neural architecture search. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 12239–12248.

- Hou, P.; Jin, Y.; Chen, Y. Single-DARTS: Towards Stable Architecture Search. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 20–25 June 2021; pp. 373–382.

- Zela, A.; Elsken, T.; Saikia, T.; Marrakchi, Y.; Brox, T.; Hutter, F. Understanding and Robustifying Differentiable Architecture Search. arXiv 2019, arXiv:1909.09656.

- Ye, P.; Li, B.; Li, Y.; Chen, T.; Fan, J.; Ouyang, W. beta-DARTS: Beta-Decay Regularization for Differentiable Architecture Search. arXiv 2022, arXiv:2203.01665.

- Huang, L.; Sun, S.; Zeng, J.; Wang, W.; Pang, W.; Wang, K. U-DARTS: Uniform-space differentiable architecture search. Inf. Sci. 2023, 628, 339–349.

- Wang, W.; Zhang, X.; Cui, H.; Yin, H.; Zhang, Y. FP-DARTS: Fast parallel differentiable neural architecture search for image classification. Pattern Recognit. 2023, 136, 109193.

- Zhang, C.; Liu, X.; Wang, G.; Cai, Z. Particle Swarm Optimization Based Deep Learning Architecture Search for Hyperspectral Image Classification. In Proceedings of the IGARSS 2020-2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 509–512.

- Liu, X.; Zhang, C.; Cai, Z.; Yang, J.; Zhou, Z.; Gong, X. Continuous Particle Swarm Optimization-Based Deep Learning Architecture Search for Hyperspectral Image Classification. Remote Sens. 2021, 13, 1082.

- Chen, Y.; Zhu, K.; Zhu, L.; He, X.; Ghamisi, P.; Benediktsson, J.A. Automatic design of convolutional neural network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7048–7066.

- Zhan, L.; Fan, J.; Ye, P.; Cao, J. A2S-NAS: Asymmetric Spectral-Spatial Neural Architecture Search for Hyperspectral Image Classification. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–9 June 2023; pp. 1–5.

- Cao, C.; Xiang, H.; Song, W.; Yi, H.; Xiao, F.; Gao, X. Lightweight Multiscale Neural Architecture Search With Spectral–Spatial Attention for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).