Abstract

The fusion of spectral–polarimetric information can improve the autonomous reconnaissance capability of unmanned aerial vehicles (UAVs) in detecting artificial targets. However, current spectral and polarization imaging systems typically suffer from low image sampling resolution, which can lead to the loss of target information. Most existing segmentation algorithms neglect the similarities and differences between multimodal features, which reduces their accuracy and robustness. To address these challenges, a real-time spectral–polarimetric segmentation algorithm for artificial targets based on an efficient attention fusion network, called ESPFNet (efficient spectral–polarimetric fusion network), is proposed. The network employs a coordination attention bimodal fusion (CABF) module and a complex atrous spatial pyramid pooling (CASPP) module to fuse and enhance low-level and high-level features at different scales from the spectral feature images and the polarization-encoded images, effectively achieving the segmentation of artificial targets. Additionally, the introduction of the residual decoding block (RDB) module refines feature extraction, further enhancing the network’s ability to classify pixels. To test the algorithm’s performance, a spectral–polarimetric image dataset of artificial targets, named SPIAO (spectral–polarimetric image of artificial objects), is constructed, which contains various camouflaged nets and camouflaged plates with different properties. The experimental results on the SPIAO dataset demonstrate that the proposed method accurately detects the artificial targets, achieving a mean intersection-over-union (MIoU) of 80.4%, a mean pixel accuracy (MPA) of 88.1%, and a detection rate of 27.5 frames per second, meeting the real-time requirement. The research has the potential to provide a new multimodal detection technique for enabling autonomous reconnaissance by UAVs in complex scenes.

1. Introduction

One of the typical characteristics of future intelligent warfare is the use of unmanned aerial vehicles (UAVs) for autonomous reconnaissance and target engagement [1,2]. However, the deployment of advanced coatings and camouflaged nets allows the specific targets to blend seamlessly with the environment, posing significant challenges in detection [3]. Therefore, achieving precise and rapid identification of potential enemy military targets holds strategic significance within dynamic and complex environments [1].

Artificial target detection involves using computer vision and machine learning techniques to represent features in images or videos in order to accurately detect and segment hidden target objects within complex backgrounds [4]. Currently, camouflaged target detection primarily relies on visible light images and can be broadly categorized into two approaches: handcrafted feature-based methods and deep learning-based methods [4,5]. Handcrafted feature-based methods primarily rely on low-level features, such as texture, color, and motion, to detect or segment camouflaged targets [6,7,8]. Due to the variations in texture and color among different objects in the input image, adaptive filters are utilized to recognize textures of different sizes to identify the disguised targets [6]. Alternatively, color can also be employed as a threshold to differentiate specific areas [7]. Static features, like texture and color, do not perform well in detecting moving targets. Therefore, motion features are utilized as supplementary information to enhance the accuracy of detection [8]. These methods do not require a large amount of training with annotated data, but they are subject to issues such as long feature extraction time and poor transferability. To address these issues, researchers have introduced deep learning into the field of target detection. Most existing deep learning-based methods for target detection initially employ convolutional neural networks to extract target features. They then utilize strategies, such as expanding the receptive field, introducing attention mechanisms, and enhancing feature aggregation, to further enhance the features and improve the performance of target detection [9,10,11,12]. Expanding the receptive field aims to enhance the network’s feature extraction capability by simulating the visual mechanism of the human eye. It plays a role in improving the discriminability of features [9]. 
Attention mechanisms help the network focus only on the relevant parts of targets during feature extraction, while ignoring the influence of background information [10]. Efficient feature aggregation strategies can fully utilize multi-level feature information, thereby enhancing the network’s performance [11,12]. Deep learning-based detection methods have improved the capability of extracting deep level features of targets. However, in complex natural scenes with low contrast, visible light images often lack features that can reveal the differences between the target and the background. This limitation restricts the performance of artificial target detection algorithms in such scenarios.

Different objects exhibit unique polarization and spectral characteristics due to variations in material, texture, and other properties. When illuminated, these objects undergo non-elastic scattering, resulting in distinct polarization and spectral features that differentiate them from the background [13]. Objects with significant polarization differences can be easily detected and recognized, but their polarization signatures are sensitive to both the imaging angle and the target material [14]. The disparities between artificial targets and background components also result in inconsistent spectral characteristics. Analyzing the reflectance spectra of different targets helps to distinguish the artificial targets. However, it remains challenging to differentiate low-reflectivity targets or artificial targets with similar spectral waveforms [15]. Spectral–polarimetric detection can simultaneously provide information about the polarization features, spectral features, spatial features, and radiative characteristics of the target, while suppressing the background and highlighting the target [16]. For instance, camouflaged nets are challenging to detect in scenes such as desert or grassland, where their colors and morphology resemble the surroundings. However, by incorporating spectral features into the detection process, the accuracy can be significantly improved [16]. As camouflage techniques continue to advance, coatings with spectral characteristics similar to those of the background have been developed. Under low illumination or shadowed conditions, even the fusion of spectral information may not separate the target from the background. However, incorporating polarimetric information has the potential to address these challenges [17,18,19]. In addition, under adverse weather conditions, such as rainy or foggy weather, relying solely on visible light images is insufficient to perceive the surrounding complex environment. 
Polarization information can serve as a supplementary source and enhance the outdoor scene perception capability of unmanned driving systems [20,21]. Therefore, combining polarization information with spectral information for artificial target detection and recognition can compensate for the limitations of each method and improve detection performance.

Currently, the spectral and polarization imaging systems can be primarily categorized into scanning and snapshot modes based on their data acquisition methods [22]. Scanning methods typically involve the use of filter rotation and controlled illumination, liquid crystal tunable filters, and acousto–optic tunable filters [22]. These approaches require mechanical or optical scanning, which makes it challenging to meet real-time requirements. Snapshot methods can be further divided into computational tomography-based, aperture-coded, and color filter array-based approaches [23]. However, computational tomography-based and aperture-coded methods suffer from issues related to complex data reconstruction and inadequate spectral resolution. Color filter array-based approaches usually capture spectral information in only the RGB channels, limiting the performance of target detection algorithms. Deep learning-based artificial target segmentation methods primarily extract features from single-modal information and employ various strategies to enhance these features and improve detection performance [4]. However, when using multi-modal information input and detecting small or complex-background artificial targets, these methods face the following issues: 1. Insufficient consideration of complementarity and differences between multi-modal information; 2. Difficulty in accurately extracting target edge textures, leading to significant deviations in model predictions; 3. Severe loss of detailed information for small targets after multiple down-sampling steps, which affects detection accuracy.

Based on these considerations, this study proposes the joint use of the snapshot spectral camera and the snapshot polarization camera to acquire spectral–polarimetric images. These images are registered and used as inputs to improve the spatial resolution in detecting multiple targets in complex backgrounds while reducing the impact of illumination variations. Furthermore, the study introduces an efficient spectral–polarimetric fusion network (ESPFNet) for real-time semantic segmentation, which incorporates a highly efficient attention mechanism. The network utilizes a coordination attention bimodal fusion (CABF) module based on position attention to capture both the spectral and the polarization information of the targets. Additionally, a complex atrous spatial pyramid pooling (CASPP) module is proposed to enhance the feature extraction capability. The network also incorporates a residual decoding block (RDB) to extract fused features and improve segmentation performance. To facilitate the research, a dataset of spectral–polarimetric images on ground artificial targets is acquired using UAVs equipped with both the snapshot spectral camera and the snapshot polarization camera.

The main contributions of this study are as follows:

- A real-time spectral–polarimetric segmentation algorithm for artificial targets based on an efficient attention fusion network, called the ESPFNet (efficient spectral–polarimetric fusion network) is proposed. The ESPFNet employs dual input streams from a snapshot spectral camera and a snapshot polarization camera to improve the spatial resolution in detecting multiple targets in complex backgrounds while reducing the impact of illumination variations;

- A coordination attention bimodal fusion (CABF) module is designed, which leverages position attention mechanisms to optimize information alignment across encoding layers. Additionally, a complex atrous spatial pyramid pooling (CASPP) module is proposed to enhance the feature extraction capability. The network also incorporates a residual decoding block (RDB) to extract fused features and improve segmentation performance;

- A spectral–polarimetric image dataset of artificial targets, named SPIAO (spectral–polarimetric image of artificial objects) is constructed. Experimental results on the SPIAO dataset demonstrate that the ESPFNet accurately detects the artificial targets and meets the real-time requirement.

2. Methods

2.1. Algorithm Overview

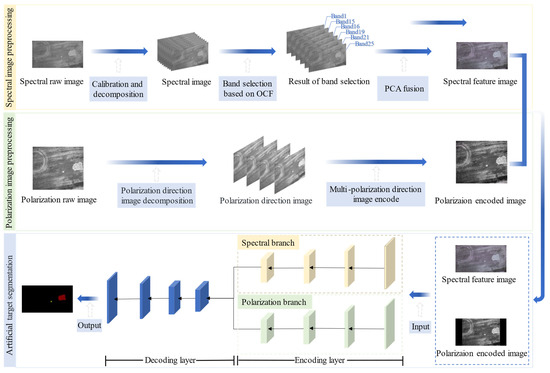

The overall process of the spectral–polarimetric artificial target segmentation algorithm is illustrated in Figure 1. First, the spectral and the polarization images are preprocessed. The spectral image undergoes band selection to generate a fused spectral feature image, while the polarization image is analyzed to generate an encoded image. Next, the pre-calibrated transformation matrix is used to register the spectral feature image and the polarization-encoded image. Finally, the spectral–polarization images are encoded and decoded using the ESPFNet to achieve artificial target segmentation.

Figure 1.

Overall schematic diagram of spectral–polarimetric artificial target segmentation algorithm, which includes the spectral image preprocessing framework, the polarization image preprocessing framework, and the artificial target segmentation framework.

2.2. Preprocessing of Spectral–Polarimetric Images

The preprocessing steps for the spectral image include the following:

- Spectral calibration: To mitigate the effects of CMOS variations and imaging noise caused by changes in lighting conditions, a calibration process is performed using the white and dark references taken under the same lighting conditions. The corrected spectral image [15] is calculated as follows:

  $$I_{c} = \frac{I_{raw} - I_{dark}}{I_{white} - I_{dark}} \qquad (1)$$

  where $I_{c}$ is the corrected spectral image, $I_{raw}$ is the original spectral image, $I_{dark}$ is the dark reference, and $I_{white}$ is the white reference;

- Band selection: To obtain a subset of bands with lower correlation and higher discriminative information, reducing the redundancy of the spectral bands while preserving high information content, an optimal clustering framework (OCF)-based band selection algorithm is applied [15];

- Band image fusion: The selected subset of bands is divided into R, G, and B band sets based on wavelengths. Principal component analysis (PCA) is then utilized to merge the different bands in the set into a single image [24]. This fusion technique retains the original spectral information while reducing the dimensionality of the data, making it compatible with subsequent detection using deep learning models.
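
The calibration and band-fusion steps above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the function names, the clipping to [0, 1], the min-max rescaling, and the grouping of six bands into three sets are all assumptions made for the example, and the OCF band selection step is not reproduced.

```python
import numpy as np

def calibrate(raw, dark, white, eps=1e-6):
    """Flat-field correction: (raw - dark) / (white - dark), clipped to [0, 1]."""
    corrected = (raw - dark) / np.maximum(white - dark, eps)
    return np.clip(corrected, 0.0, 1.0)

def pca_fuse(bands):
    """Fuse a (k, H, W) stack of bands into one image via the first principal component."""
    k, h, w = bands.shape
    flat = bands.reshape(k, -1).astype(np.float64)
    centered = flat - flat.mean(axis=1, keepdims=True)
    cov = centered @ centered.T / (centered.shape[1] - 1)   # (k, k) band covariance
    _, eigvecs = np.linalg.eigh(cov)                        # eigenvalues in ascending order
    pc1 = eigvecs[:, -1]                                    # direction of largest variance (sign is arbitrary)
    fused = (pc1 @ centered).reshape(h, w)
    # Min-max rescaling (an assumption) so the result can serve as an image channel.
    span = fused.max() - fused.min()
    return (fused - fused.min()) / span if span > 0 else np.zeros((h, w))

def spectral_feature_image(cube, groups):
    """Build an (H, W, 3) image from a (bands, H, W) cube and three band-index groups."""
    return np.stack([pca_fuse(cube[idx]) for idx in groups], axis=-1)

# Synthetic data standing in for a six-band image with dark and white references.
rng = np.random.default_rng(0)
raw = rng.uniform(0.1, 0.9, size=(6, 32, 32))
dark = np.full_like(raw, 0.05)
white = np.full_like(raw, 0.95)
cube = calibrate(raw, dark, white)

# Hypothetical assignment of the six selected bands to the R, G, and B sets.
groups = [[0, 1], [2, 3], [4, 5]]
image = spectral_feature_image(cube, groups)
```

Projecting each band set onto its first principal component keeps the direction of greatest variance within the set, which is one common way to reduce several bands to a single channel.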

The preprocessing steps for polarization image include the following:

- The Newton polynomial interpolation algorithm [25] is used to decompose the original polarized images obtained from the snapshot polarimetric camera into four polarized intensity images: 0°, 45°, 90°, and 135° [14];

- Fusion of multiple polarization direction images. The 0°, 45°, and 90° polarization direction images are encoded, allowing the encoded image to fully retain the polarization information of the target while also meeting the processing requirements of the neural network [21].

2.3. ESPFNet Artificial Target Segmentation Algorithm

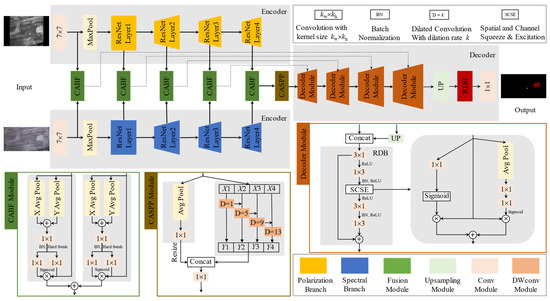

The ESPFNet’s overall framework is depicted in Figure 2. The network adopts an encoder–decoder structure, where the encoder part utilizes a lightweight architecture called ResNet18 as the backbone network [26]. The encoding layer employs a dual-stream input approach to simultaneously extract the spectral features and the polarization features of the artificial target. The feature extraction process is divided into five stages, where each stage is responsible for extracting features from both the spectral and the polarization images. The feature maps obtained at each stage are then passed into the CABF feature fusion module. Additionally, the CASPP module is utilized to connect the encoder to the decoder. The decoding part of the ESPFNet consists of four decoder sub-modules. Each decoder module takes two inputs: the output of the previous module and the skip connections from the encoder network. This allows the decoder to utilize information from both the previous decoding stage and the encoder’s skip connections. After the decoding process, the resulting feature maps are subjected to upsampling and convolution operations, which resize the decoded feature maps back to the input resolution and yield the final prediction results.

Figure 2.

Network structure of the ESPFNet, which includes a coordination attention bimodal fusion (CABF) module, a complex atrous spatial pyramid pooling (CASPP) module, and a residual decoding block (RDB).

The network introduces the CABF feature fusion module in the encoding structure, which enhances the ability to combine features from different modalities. Additionally, the CASPP module is employed to enrich the global information of the bottom-level feature maps. This module enhances the feature extraction capability for small targets. Moreover, the network utilizes the RDB module to improve the segmentation performance of the model.

2.3.1. Coordination Attention Bimodal Fusion Module

In order to mitigate the impact of feature disparities and strengthen the extraction and utilization of the multimodal features, the feature fusion module based on a position attention mechanism is designed. It is applied after each encoding stage. The spectral feature maps and the polarization feature maps extracted at each layer are coordinated and optimized using a position attention mechanism, enabling the model to adaptively focus on target information while suppressing background information. The fusion results are fed into the decoder through multi-scale skip connections. The specific design of the bimodal feature fusion module is shown in Figure 2 (highlighted in light green).

It is difficult to preserve the positional information of the targets because attention modules that rely on two-dimensional global pooling compress the global spatial information of the input features into the channel dimension. To enhance long-range interactions of positional information, the input features are encoded along both width and height dimensions for each channel separately. The calculation process is represented by (2) and (3):

$$z_c^{h}(i) = \frac{1}{w}\sum_{j=0}^{w-1} x_c(i, j) \qquad (2)$$

$$z_c^{w}(j) = \frac{1}{h}\sum_{i=0}^{h-1} x_c(i, j) \qquad (3)$$

where $x_c(i, j)$ is the input feature at row $i$ and column $j$ of channel $c$, $w$ is the width of the input features, $h$ is the height of the input features, and $c$ is a specific channel of the input features.

The two encodings are then passed through a shared 1 × 1 convolution function, followed by batch normalization and an activation function, to blend the encoded features. This process ensures that each dimension of the features contains the global information.

$$Y = \delta\left(\mathrm{BN}\left(F_{1\times1}(X)\right)\right) \qquad (4)$$

where $X$ is the input features from the previous layers, $Y$ is the output features, $F_{1\times1}$ is the 1 × 1 convolution function, $\mathrm{BN}$ is batch normalization, and $\delta$ is the activation function. Hard Swish is used as an activation function to improve the accuracy of the network while keeping the computational cost low.

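The features are divided into two parts, $Y^{h}$ and $Y^{w}$, along the spatial dimension for further coordination. Two 1 × 1 convolution functions, $F_h$ and $F_w$, are used for channel adjustment, as shown in Equations (5) and (6), where $\sigma$ represents the Sigmoid function:

$$g^{h} = \sigma\left(F_h(Y^{h})\right) \qquad (5)$$

$$g^{w} = \sigma\left(F_w(Y^{w})\right) \qquad (6)$$

The weight information obtained from the previous two parts, along with the original input features, is used to perform a weighted operation as shown in (7):

$$\mathrm{out}_c(i, j) = x_c(i, j) \times g_c^{h}(i) \times g_c^{w}(j) \qquad (7)$$

where $x_c(i, j)$ is the original input feature at row $i$ and column $j$ of channel $c$, and $g_c^{h}$ and $g_c^{w}$ are the weights of channel $c$ along the height and width directions.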

During the feature fusion process, the coordinate attention is utilized to accurately localize the target’s position. This allows the network to precisely capture the target information. As a result, the segmentation performance is enhanced.
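
The position attention steps of Equations (2) to (7) can be traced with the following NumPy sketch of a single forward pass. It is an illustration under stated assumptions, not the CABF module itself: the weight matrices are random stand-ins for learned 1 × 1 convolutions, batch normalization is reduced to a per-channel standardization without learned parameters, the reduced channel count `mid` is hypothetical, and the way the spectral and polarization streams are combined around the attention is not covered.

```python
import numpy as np

def hard_swish(x):
    """Hard Swish activation: x * ReLU6(x + 3) / 6."""
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def coordinate_attention(x, w_shared, w_h, w_w):
    """x: (C, H, W); w_shared: (M, C); w_h and w_w: (C, M). Returns (C, H, W)."""
    _, h, _ = x.shape
    z_h = x.mean(axis=2)                       # (C, H): average over the width
    z_w = x.mean(axis=1)                       # (C, W): average over the height
    z = np.concatenate([z_h, z_w], axis=1)     # (C, H + W)
    y = w_shared @ z                           # shared 1x1 convolution as a matrix product
    # Simplified normalization standing in for batch normalization (assumption).
    y = (y - y.mean(axis=1, keepdims=True)) / (y.std(axis=1, keepdims=True) + 1e-5)
    y = hard_swish(y)
    y_h, y_w = y[:, :h], y[:, h:]              # split back into height and width parts
    g_h = sigmoid(w_h @ y_h)                   # (C, H) weights along the height
    g_w = sigmoid(w_w @ y_w)                   # (C, W) weights along the width
    return x * g_h[:, :, None] * g_w[:, None, :]

rng = np.random.default_rng(0)
channels, mid = 8, 4                           # hypothetical channel counts
x = rng.standard_normal((channels, 5, 7))
w_shared = rng.standard_normal((mid, channels))
w_h = rng.standard_normal((channels, mid))
w_w = rng.standard_normal((channels, mid))
out = coordinate_attention(x, w_shared, w_h, w_w)
```

Because both sets of weights pass through a Sigmoid, every output value is the input value scaled by a factor between 0 and 1 that depends on its row and its column, which is how the positional information is retained.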

2.3.2. Complex Atrous Spatial Pyramid Pooling Module

Since the details of the targets are severely lost during the multiple down-sampling operations in the encoding stage, receptive field modules, including common ones such as the inception block [27], receptive field block (RFB) [9], and atrous spatial pyramid pooling (ASPP) [28], are introduced. The ASPP module utilizes parallel dilated convolutions to expand the receptive field range. In order to obtain a larger receptive field, this paper strengthens the connections between different dilated convolutions, effectively improving the overall receptive field obtained. The specific design of the CASPP module is shown in Figure 2 (highlighted in gold).

To capture the global contextual information of the input features, the CASPP module performs operations such as 2D global pooling, convolution, and resolution adjustment. Simultaneously, the input features are divided into four equal subsets: X1, X2, X3, and X4. Each subset undergoes a separate dilated convolution with a different dilation rate, resulting in corresponding outputs Y1, Y2, Y3, and Y4. The calculation process is illustrated in Equation (8). Finally, the feature containing global contextual information and the four output features are blended to obtain the result.

where Xi is the i-th feature subset, is the dilated convolution with a kernel size of 3 × 3 and dilation rates k = 5, 9, 13, and Yi is the output of the i-th feature subset. By concatenating the outputs of the four feature subsets, the result is obtained, which incorporates features extracted by dilated convolutions with different dilation rates. This leads to a significant increase in the receptive field.
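
As a worked illustration of why larger dilation rates enlarge the receptive field, the spatial extent covered by a single 3 × 3 dilated convolution with dilation rate $k$ can be computed from the standard relation for dilated kernels. This is general background arithmetic rather than a formula taken from the paper:

$$s_{\text{eff}} = 3 + (3 - 1)(k - 1) = 2k + 1$$

For the dilation rates $k = 5, 9, 13$ used in the CASPP module, a single layer therefore spans $11 \times 11$, $19 \times 19$, and $27 \times 27$ positions, respectively, while still using only nine weights per input-output channel pair.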

2.3.3. Residual Decoding Block Module

The specific design of the decoding module in the network is shown in Figure 2 (highlighted in orange). The input consists of two parts: the input with skip connections from the encoding part and the output from the superior decoding module. The output of the superior decoding module is upsampled, leading to an enlarged feature map that is twice its original size. Then, it is concatenated with the input from the encoding part along the channel dimension. Next, the merged feature map goes through the RDB module to refine the feature extraction. Finally, the decoding result is obtained from this process.

The RDB module adopts a residual structure and replaces the commonly used 3 × 3 convolution with two sets of asymmetric convolutions. This substitution effectively reduces the number of parameters in the network. Additionally, the SCSE (spatial and channel squeeze and excitation) module is incorporated in the middle of the residual structure [29]. This integration enhances the pixel-wise classification ability of the decoding module, thereby improving the overall segmentation performance of the network. The SCSE module recalibrates and combines the features along both the spatial and channel directions. This allows the network to leverage global information to enhance target features and suppress background features, improving the discriminative power of the decoding module.
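
The parameter saving from asymmetric convolutions can be checked with simple arithmetic. The sketch below assumes that one 3 × 3 convolution is replaced by a 3 × 1 convolution followed by a 1 × 3 convolution with the same channel counts and no bias; the channel count of 64 is chosen only for illustration and is not taken from the paper.

```python
def conv_params(c_in, c_out, k_h, k_w, bias=False):
    """Number of weights in a 2D convolution with a k_h x k_w kernel."""
    return c_in * c_out * k_h * k_w + (c_out if bias else 0)

channels = 64  # hypothetical channel count
standard = conv_params(channels, channels, 3, 3)
asymmetric = conv_params(channels, channels, 3, 1) + conv_params(channels, channels, 1, 3)
ratio = asymmetric / standard

print(standard, asymmetric, round(ratio, 3))  # 36864 24576 0.667
```

Under these assumptions, the asymmetric pair needs two thirds of the weights of the 3 × 3 convolution it replaces, independent of the channel count.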

3. Experiments and Results

3.1. Data Set

This study constructed a dataset called SPIAO (spectral–polarimetric image of artificial objects). The data acquisition system consists of a hexacopter UAV equipped with a snapshot polarization camera (FLIR, USA, BLACKFLY S BFS-U3-51S5 PC) and a snapshot multispectral camera (XIMEA, Germany, MQ022HG-IM-SM5X5-NIR). Both cameras are equipped with 16 mm (F = 2.8) focal length lenses (ML-M1616UR, MORITEX). The weather was sunny during data collection. The data were collected in outdoor natural settings that include desert and grassland. Additionally, the polarization camera used an automatic exposure mode, while the multispectral camera had an exposure time of 0.5 s.

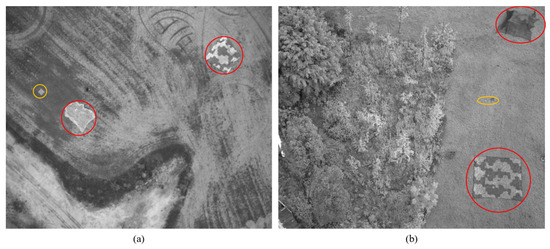

To investigate the performance of the algorithm, a dataset consisting of 500 pairs of spectral polarization images under both real desert and grassland backgrounds is constructed. The targets include the grassland camouflaged nets, desert camouflaged nets, and the aluminum alloy board with coatings of green camouflage and yellow desert camouflage (30 cm × 30 cm in size), as shown in Figure 3. The detection heights were set at 25 m, 50 m, and 75 m, and the detection angles were set at 45° and 90°. The optimal imaging angle for polarization imaging is the specular reflection angle within the Brewster’s angle region [14]. Therefore, the acquisition angles were set at 45° (representing the optimal imaging angle) and 90° (representing the non-optimal imaging angle), with a ratio of 1:1 between the two angles.

Figure 3.

Schematic diagram of different types of camouflaged nets and camouflaged plates. (a) In the desert scene, the desert camouflaged net is outlined by a red circle, and the camouflaged plate is outlined by a yellow circle. (b) In the grassland scene, the grassland camouflaged net is outlined by a red circle, and the camouflaged plate is outlined by a yellow circle.

The 500 paired spectral–polarimetric images were split into 450 pairs for the training set and 50 pairs for the test set. The labelme tool was used to perform semantic segmentation labeling on the targets and automatically generate annotation files. These annotation files were then converted into the commonly used Pascal visual object classes (VOC) dataset format for semantic segmentation tasks.

3.2. Experiment Settings

The hardware environment for all experiments in this study included an AMD RYZEN 3500X processor, an NVIDIA GeForce RTX 3060Ti graphics card, 16 GB of RAM, 8 GB of GPU memory, and the Windows 10 operating system. The experimental software used was PyCharm 2021, PyTorch 1.8, and Python 3.9.

3.2.1. Parameter Settings

The parameters of the backbone network are initialized based on a pre-trained model on ImageNet. During the training phase, the Adam optimizer [30] is used with a momentum of 0.9 for network training. The initial learning rate is set to 0.004, and a cosine annealing learning rate schedule is employed. The batch size is set to 16, and the input image size is 512 × 512. The entire training process involves 350 iterations.
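
For reference, a cosine annealing schedule with the stated initial learning rate of 0.004 over 350 steps can be written in closed form as below. The minimum learning rate of 0 and the absence of warm restarts are assumptions, since the paper does not state them, and the function name is illustrative.

```python
import math

def cosine_annealing_lr(step, total_steps, lr_max=0.004, lr_min=0.0):
    """Closed-form cosine annealing from lr_max (step 0) to lr_min (final step)."""
    cosine = math.cos(math.pi * step / total_steps)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cosine)

total_steps = 350
schedule = [cosine_annealing_lr(t, total_steps) for t in range(total_steps + 1)]
```

The schedule starts at 0.004, passes through 0.002 at the midpoint, and decays smoothly to the minimum at the last step, so the largest updates happen early in training.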

3.2.2. Evaluation Metrics

This study adopts the mean intersection-over-union (MIoU) and mean pixel accuracy (MPA) as evaluation metrics. The IoU refers to the ratio of the intersection to the union of the predicted values (PS) and the ground truth (GT) for a particular class. The calculation formula [28] is shown in Equation (9):

$$\mathrm{IoU} = \frac{|PS \cap GT|}{|PS \cup GT|} \qquad (9)$$

The PA refers to the proportion of correctly predicted pixels (P) for a particular class to the total number of pixels (M). The calculation formula [28] is shown in (10):

$$\mathrm{PA} = \frac{P}{M} \qquad (10)$$

The MIoU and the MPA are the averages of the IoU and the PA over all classes.
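
A small NumPy sketch of these two metrics on label maps is given below. It assumes that M is the number of ground-truth pixels of the class in question and that classes absent from both prediction and ground truth are skipped when averaging; the function name and the toy arrays are illustrative.

```python
import numpy as np

def segmentation_metrics(pred, gt, num_classes):
    """Return (MIoU, MPA) for integer label maps of identical shape."""
    ious, pas = [], []
    for cls in range(num_classes):
        pred_mask = pred == cls
        gt_mask = gt == cls
        intersection = np.logical_and(pred_mask, gt_mask).sum()
        union = np.logical_or(pred_mask, gt_mask).sum()
        if union > 0:                      # skip classes absent from both maps
            ious.append(intersection / union)
        if gt_mask.sum() > 0:              # PA is defined only if the class occurs in GT
            pas.append(intersection / gt_mask.sum())
    return float(np.mean(ious)), float(np.mean(pas))

# Toy example: class 0 is background, class 1 is the target.
gt = np.array([[0, 0, 1, 1],
               [0, 0, 1, 1]])
pred = np.array([[0, 0, 1, 0],
                 [0, 0, 1, 1]])
miou, mpa = segmentation_metrics(pred, gt, num_classes=2)
```

In this toy case one target pixel is predicted as background, which gives IoU values of 0.8 and 0.75 and PA values of 1.0 and 0.75 for the two classes, so the MIoU is 0.775 and the MPA is 0.875.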

To find the optimal subset of bands, the KLC and the correlation coefficient are used as metrics. The KLC [31] reflects the information content of the selected subset of bands and is calculated as follows:

where pi (p1 > p2 > … > pm) are the eigenvalues of the selected bands after the PCA transformation, and λj (λ1 > λ2 > … > λn) are the eigenvalues of the original data’s n bands after the PCA transformation.

The correlation between bands reflects the redundancy of information between different bands. Therefore, the correlation coefficient between subsets of bands can be applied to measure this [15].

3.2.3. Statistical Significance Tests

The Wilcoxon method is utilized to perform statistical significance tests among different models. First, the IoU of various models is computed on the test dataset. Then, statistical analysis is conducted using the scipy library in Python. Last, the significance is determined by analyzing the p-values (the value p < 0.05 is statistically significant).
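
The test procedure can be sketched with `scipy.stats.wilcoxon`, which performs the paired Wilcoxon signed-rank test. The per-image IoU values below are synthetic placeholders generated only to make the example runnable; they are not results from the paper, and the use of the paired, two-sided variant is an assumption.

```python
import numpy as np
from scipy.stats import wilcoxon

# Synthetic per-image IoU scores for two hypothetical models on 50 test images.
rng = np.random.default_rng(0)
iou_model_a = rng.uniform(0.70, 0.90, size=50)
iou_model_b = iou_model_a - rng.uniform(0.01, 0.05, size=50)

# Paired, two-sided signed-rank test on the per-image differences.
statistic, p_value = wilcoxon(iou_model_a, iou_model_b)
significant = p_value < 0.05
```

The signed-rank test makes no normality assumption about the IoU differences, which suits a small test set where the score distribution is unknown.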

3.3. Results

3.3.1. Spectral Feature Images and Polarization-Encoded Images

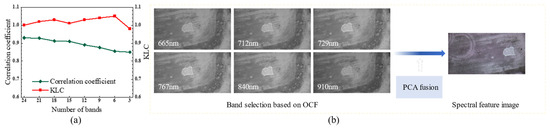

To verify the effectiveness of the input which consists of the spectral feature images and polarization-encoded images, the spectral–polarimetric images of UAVs in a desert environment at a flying height of 50 m are utilized.

Figure 4a shows that, as the number of bands decreases, the KLC value increases while the correlation coefficient decreases. With a band number of six, the KLC reaches a peak of 1.05, and the correlation coefficient is 0.85. A KLC value greater than one means that sufficient information is involved in the selected bands [32]. Additionally, weaker correlation corresponds to higher information content, and the smaller correlation values indicate the lower redundancy. As shown in Figure 4b, in order to minimize the data’s redundancy while preserving the spectral information, the OCF algorithm, combined with a sorting method, is used to reduce the number of spectral bands to six: 665 nm, 712 nm, 729 nm, 767 nm, 840 nm, and 910 nm. The PCA transformation is conducted to transfer the six-band spectral image into a three-channel image while preserving the spectral information. Spectral data, due to its large volume, can provide rich information on the targets. However, it also leads to information redundancy, increasing the difficulty in the integration of the spectral and the polarization information [33]. The use of the OCF algorithm to reduce the redundancy of spectral data, followed by the PCA algorithm to retain discriminative information from key bands, enables spectral data to be treated as a common three-channel image in deep learning algorithms [34]. Spectral feature images enhance the model’s ability to extract spectral features while reducing the disparity between spectral and polarization data.

Figure 4.

Spectral image preprocessing framework and evaluation metrics. (a) Band screening evaluation index. (b) Spectral image processing framework.

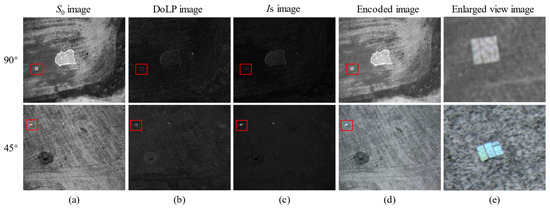

Figure 5 illustrates different polarization images acquired by the UAVs at detection angles of 45° and 90°. Polarization encoded images highlight the target when the detection angle is 45°. And the target information is also well preserved when the detection angle is 90°. The polarization encoded image, at the optimal imaging angle, can highlight the differences in polarization characteristics between the target and the background, effectively emphasizing the target when compared to other polarization parameter images. It can also preserve target features well even at non-optimal imaging angles. The degree of linear polarization (DoLP) images are Stokes vector-based parameter images which can display the polarization characteristics of the target. IS images are also Stokes vector-based parameter images, in which the contrast between the artificial target and the natural background is improved. The DoLP and IS [14] images suppress the background at a detection angle of 45°, resulting in the target appearing bright in the image, thereby highlighting the target. However, at a detection angle of 90°, both the target and the background are suppressed, causing the target to blend into the background. On the other hand, S0 image only reflects the intensity information of the target and cannot utilize polarization information to highlight the target. Therefore, it cannot extract sufficient target feature information for effective target detection in the artificial target recognition scenario.

Figure 5.

Comparison of different polarization images of camouflaged plates at different imaging angles. (a) S0 image. (b) DoLP image. (c) IS image. (d) Polarization-encoded image. (e) Enlarged view image. The red squares show the specific position of camouflaged plates.
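
The S0 and DoLP images compared above follow from the four polarized intensity images through the standard linear Stokes relations, sketched below in NumPy. The function name and the choice of averaging both orthogonal pairs for S0 are assumptions; the IS image and the polarization encoding used by the paper are not reproduced here.

```python
import numpy as np

def stokes_parameters(i0, i45, i90, i135, eps=1e-8):
    """Linear Stokes parameters and degree of linear polarization (DoLP)."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)     # total intensity (mean of both orthogonal pairs)
    s1 = i0 - i90                          # horizontal versus vertical component
    s2 = i45 - i135                        # +45 degree versus -45 degree component
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / np.maximum(s0, eps)
    return s0, s1, s2, dolp

# Two idealized pixels: fully horizontally polarized light and unpolarized light.
i0 = np.array([1.0, 0.5])
i45 = np.array([0.5, 0.5])
i90 = np.array([0.0, 0.5])
i135 = np.array([0.5, 0.5])
s0, s1, s2, dolp = stokes_parameters(i0, i45, i90, i135)
```

For the two idealized pixels, the DoLP is 1 for the fully polarized case and 0 for the unpolarized case, while S0 is identical for both, which illustrates why S0 alone cannot separate targets that differ only in polarization.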

3.3.2. Ablation Study

Four sets of experiments on the SPIAO dataset are conducted to validate the impact of the CABF module, CASPP module, and RDB module on the segmentation results of artificial targets.

In this study, the CABF module, CASPP module, and the designed RDB module were removed from the ESPFNet’s architecture. The CABF module was replaced with a simple addition operation, and the RDB module was replaced with the residual structure from ResNet [26]. This modified network was used as the baseline network in the experiments. The ablation experiment results are shown in Table 1, where the symbol √ indicates the inclusion of the respective module, and the absence of a symbol indicates that the module was removed. The results showed that when the CABF feature fusion module was used, the model achieved a 5.3% improvement in MIoU and a 4.2% improvement in MPA. The model achieved a 5.6% improvement in MIoU and a 4.4% improvement in MPA when using the CASPP module. And the model achieved a 4.1% improvement in MIoU and a 3.2% improvement in MPA when using the RDB module. After adding the CASPP module between the decoder and encoder, compared to solely adding the CABF module, the MIoU and MPA improved by 2.8% and by 1.7%, respectively. When the designed RDB module replaced the traditional residual blocks, the MIoU improved by 0.8% and the MPA improved by 1.0%, respectively. After adding all the modules, the model achieved optimal performance with an MIoU of 80.4%, which has an improvement of 8.9% as compared to the baseline network. The MPA also increased by 6.9%.

Table 1.

Results of ablation experiments.

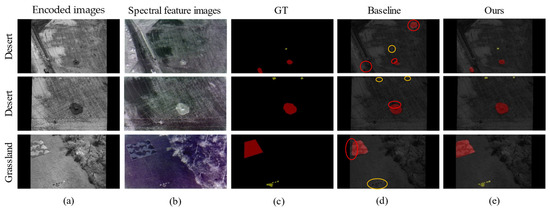

To further validate the effectiveness of the different modules, samples from real desert and grassland scenes were selected for visual comparison in Figure 6. The first three columns show the polarization-encoded images, spectral feature images, and semantic labels, respectively. The fourth and fifth columns show the semantic segmentation results of the baseline network and the ESPFNet, respectively. The red boxes indicate regions where the segmentation of camouflaged net targets is incomplete or incorrect, while the yellow boxes indicate missed detections of camouflaged plate targets. In the three scenes, the baseline network segments the edge details of camouflaged net targets incompletely and mistakes background for the target. It also has difficulty detecting camouflaged plate targets. The ESPFNet, on the other hand, segments both camouflaged net targets and camouflaged plate targets more completely. This is because the CABF module optimizes and coordinates the feature information between the two modalities instead of simply adding them together, making full use of the spectral–polarimetric information. The CASPP module enlarges the receptive field and enriches the detailed information of deep features, thereby improving the extraction of small-target features. The RDB module refines the feature extraction, effectively enhancing the segmentation of target edge details.

Figure 6.

Visual comparison of the segmentation results of the baseline network and the ESPFNet. (a) Polarization-encoded images. (b) Spectral feature images. (c) GT. (d) Baseline network. (e) ESPFNet (ours). The red circles show the segmentation results of camouflaged nets. The yellow circles show the segmentation results of camouflaged plates.

3.3.3. CASPP Compared with Modules of the Same Type

To demonstrate the performance of the CASPP module, we compare it with other modules of the same type in terms of parameter count and segmentation performance. The compared modules include the pyramid pooling module (PPM), ASPP, RFB, and SPPFCSPC (spatial pyramid pooling fully connected spatial pyramid convolution). The PPM was proposed in PSPNet [35] to aggregate contextual information from different regions. The ASPP was proposed in DeepLabv3+ [28] and uses multiple dilated convolutions to capture multi-scale information. The RFB, proposed in SINet [9], increases the receptive field by adding dilated convolutions to the inception block. The SPPFCSPC module, proposed in YOLOv7 [36], uses max pooling at different scales to obtain various receptive fields. In the parameter count comparison experiment, both the input size and the output size are set to (512, 16, 16). The parameter sizes of the modules are shown in Table 2. The parameter size of the CASPP module is 0.853 MB, only 0.065 MB more than that of the PPM, which has the fewest parameters. The ASPP has the largest parameter size, 8.92 MB. The RFB and the SPPFCSPC have parameter sizes of 1.32 MB and 7.09 MB, respectively.

Table 2.

Comparison of module parameters.
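To make the parameter comparison easier to relate to the module designs, the sketch below shows how the parameter count and storage size of a single convolution layer can be estimated, and how dilation widens the spatial span of a kernel without adding parameters. It assumes 32-bit floating-point weights and 1 MB = 1024² bytes; the helper names and the dilation rates (6, 12, 18) are illustrative and do not describe the actual layer configuration of any module in Table 2.

```python
def conv2d_param_count(c_in, c_out, kernel_size, bias=True):
    """Number of learnable parameters in a standard 2D convolution."""
    weights = c_in * c_out * kernel_size * kernel_size
    return weights + (c_out if bias else 0)


def size_in_mb(param_count, bytes_per_param=4):
    """Storage size in MB, assuming float32 parameters by default."""
    return param_count * bytes_per_param / (1024 ** 2)


def effective_kernel_size(kernel_size, dilation):
    """Spatial span covered by a dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


# A single 3x3 convolution mapping 512 channels to 512 channels.
params_3x3 = conv2d_param_count(512, 512, 3)
size_3x3 = size_in_mb(params_3x3)

# Dilation changes the span of the kernel but not its parameter count.
spans = {d: effective_kernel_size(3, d) for d in (1, 6, 12, 18)}

print(params_3x3, round(size_3x3, 3), spans)
```

Because every dilated 3 × 3 branch carries the full weight tensor regardless of its dilation rate, modules that stack several such branches at 512 channels tend to be large, whereas pooling-based branches add no convolution weights of their own.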

As shown in Table 3, a segmentation performance comparison experiment was conducted by replacing the CASPP module in the ESPFNet with modules of the same type under the same experimental conditions. The results indicate that, with the CASPP module, the ESPFNet achieves the highest segmentation accuracy, with an MIoU of 80.4% and an MPA of 88.1%. Compared to the PPM (which has a similar parameter size) and the ASPP (which has the largest parameter size), the CASPP module improves the MIoU by 1.0% and 0.9% and the MPA by 0.9% and 0.8%, respectively.

Table 3.

Comparison of segmentation performance.
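For reference, the two accuracy metrics used throughout these comparisons can be computed from a confusion matrix. The sketch below uses the standard definitions (per-class intersection over union and per-class pixel accuracy, each averaged over classes); the three-class layout (background, camouflaged net, camouflaged plate) and the toy pixel lists are assumptions made for illustration only.

```python
def confusion_matrix(labels, preds, num_classes):
    """cm[t][p] counts pixels with ground-truth class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(labels, preds):
        cm[t][p] += 1
    return cm


def mean_iou_and_mpa(cm):
    """Return (MIoU, MPA), skipping classes that never occur."""
    n = len(cm)
    ious, accs = [], []
    for c in range(n):
        tp = cm[c][c]
        gt = sum(cm[c])                          # pixels labelled as class c
        pred = sum(cm[r][c] for r in range(n))   # pixels predicted as class c
        union = gt + pred - tp
        if union > 0:
            ious.append(tp / union)
        if gt > 0:
            accs.append(tp / gt)
    return sum(ious) / len(ious), sum(accs) / len(accs)


# Toy flattened label and prediction maps (0 = background, 1 = net, 2 = plate).
labels = [0, 0, 0, 0, 1, 1, 2, 2]
preds = [0, 0, 0, 1, 1, 1, 2, 0]

cm = confusion_matrix(labels, preds, num_classes=3)
miou, mpa = mean_iou_and_mpa(cm)
print(cm, round(miou, 4), round(mpa, 4))
```

In this toy case the MPA is higher than the MIoU because the IoU also penalizes false positives, which is the same ordering seen in the reported results.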

3.3.4. Comparison with Other Methods

To evaluate the capability of the proposed method, ESPFNet is compared with several state-of-the-art semantic segmentation networks, including the single-modal semantic segmentation networks FCN [37], U-Net [38], PSPNet, DeepLabv3+, HRNet [39], and SegNeXt [40]; the RGB-D dual-modal semantic segmentation networks GIFNet [41], ACNet [42], and ESANet [43]; and the spectral–polarimetric dual-modal semantic segmentation network EAFNet [20]. FCN is a fully convolutional network that solves pixel-wise prediction problems with convolutional operations and employs skip connections to fuse high- and low-level feature information. It is one of the most widely used models in the field of semantic segmentation. U-Net builds on the FCN, using a U-shaped structure and skip connections to fully exploit the local and global features in the input image. It was originally proposed for biomedical image segmentation and has been widely adopted in other areas of semantic segmentation due to its excellent performance. PSPNet employs a pyramid pooling module that processes the input with pooling kernels of different sizes, enabling the network to perceive features at different scales and improving the segmentation accuracy. DeepLabv3+ uses the ASPP module to extract multi-scale contextual information and refine segmentation results. It also employs multi-scale fusion techniques to further enhance the model's performance. HRNet adopts a multi-scale information fusion strategy, directly preserving high-resolution features and fusing features of different resolutions to improve segmentation accuracy. SegNeXt employs a convolutional attention mechanism to encode contextual information. It captures global characteristics with large convolutional kernels and incorporates a lightweight decoding module to further enhance the model performance.
GIFNet uses a gate fusion module to adaptively fuse the information of the two modalities during the encoding stage, significantly improving the model's robustness. ESANet and ACNet use parallel network structures and efficient cross-modal guidance to improve segmentation performance, and both perform well in RGB-D dual-modal segmentation. EAFNet dynamically adjusts the weights of the spectral and polarization branches, precisely fusing information from the different modalities.

The segmentation results of the different methods are shown in Table 4. The results demonstrate that the ESPFNet achieves the highest segmentation accuracy, with an MIoU of 80.4% and an MPA of 88.1%. Compared to the single-modal segmentation networks that use polarization data alone, the ESPFNet improves the MIoU by 22.4%, 20.6%, 21.2%, 20.1%, 12.9%, and 12.8%, and the MPA by 21.6%, 19.7%, 20.6%, 18.8%, 12.6%, and 12.9%, respectively. Compared to the single-modal segmentation networks that use spectral data alone, the ESPFNet improves the MIoU by 21.7%, 19.3%, 20.6%, 18.3%, 12.5%, and 12.9%, and the MPA by 21.2%, 19.4%, 20.2%, 18.2%, 12.2%, and 12.4%, respectively. Integrating the two modalities therefore significantly enhances the model's segmentation accuracy. Compared to the other multimodal segmentation networks, the ESPFNet increases the MIoU by 10.3%, 9.1%, 3.1%, and 4.2%, and the MPA by 9.9%, 9.0%, 2.6%, and 4.3%, respectively. The ESPFNet also achieves a fast segmentation speed, with a detection rate of 27.5 frames per second, which makes it suitable for real-time detection with UAVs. Additionally, we use the Wilcoxon method to conduct statistical significance tests between the ESPFNet and the other dual-modal networks. Based on the IoU calculated for each image in the test set, the p values for the ESPFNet compared to GIFNet, ACNet, ESANet, and EAFNet are 4.38 × 10⁻¹¹, 1.51 × 10⁻¹⁰, 4.0 × 10⁻⁴, and 4.62 × 10⁻⁵, respectively. This indicates a statistically significant difference between the ESPFNet and the other models.

Table 4.

Comparison of segmentation results with different models.

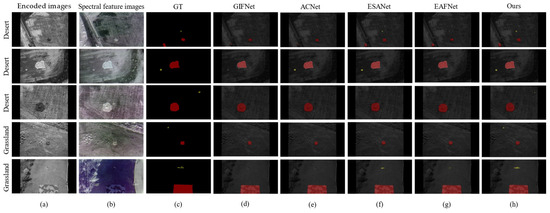
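The significance test described above can be illustrated with a small example. The text does not state which Wilcoxon variant was used; because the per-image IoU values of two networks on the same test set are paired, the sketch below assumes the signed-rank test and uses a normal approximation with tie correction. The IoU values are hypothetical, and in practice a library routine such as `scipy.stats.wilcoxon` would normally be preferred.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test using a normal approximation.

    Returns (W_plus, z, p). Zero differences are dropped and tied absolute
    differences receive average ranks.
    """
    diffs = [round(a - b, 12) for a, b in zip(x, y)]
    diffs = [d for d in diffs if d != 0]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1          # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))    # two-sided p value
    return w_plus, z, p


# Hypothetical per-image IoU values for two networks on the same ten images.
iou_a = [0.82, 0.79, 0.85, 0.77, 0.81, 0.88, 0.80, 0.84, 0.78, 0.83]
iou_b = [0.75, 0.70, 0.80, 0.74, 0.72, 0.79, 0.76, 0.73, 0.72, 0.75]

w_plus, z, p_value = wilcoxon_signed_rank(iou_a, iou_b)
print(w_plus, round(z, 3), p_value)
```

With only ten pairs the normal approximation is coarse; it becomes more reasonable for a test set containing many images, where consistently higher per-image IoU values lead to very small p values.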

To provide a more intuitive representation of the segmentation results of the different models, typical cases from real desert and grassland scenes are shown in Figure 7. The first three columns show the polarization-encoded image, the spectral feature image, and the semantic label, respectively. The fourth through eighth columns show the semantic segmentation results of GIFNet, ACNet, ESANet, EAFNet, and ESPFNet, respectively. The visualized results show that the ESPFNet improves the segmentation of both camouflaged plate targets and camouflaged net targets, particularly in edge details. In all five scenes, the ESPFNet segments the camouflaged plate targets well, and its edge segmentation of the camouflaged nets is close to the labels. In contrast, the other four segmentation networks, to varying degrees, either fail to detect the camouflaged plates or segment the edge details of the camouflaged nets coarsely.

Figure 7.

Visual comparison of segmentation results with different models. (a) Polarization-encoded images. (b) Spectral feature images. (c) GT. (d) GIFNet. (e) ACNet. (f) ESANet. (g) EAFNet. (h) ESPFNet (ours).

4. Discussion

In this study, we investigated how to effectively leverage spectral–polarimetric information to enhance the autonomous reconnaissance capability of UAVs. Existing approaches are challenged by the inadequate resolution of spectral and polarization imaging systems and the suboptimal performance of multimodal segmentation algorithms. Therefore, we proposed a real-time spectral–polarimetric segmentation algorithm for artificial targets based on an efficient attention fusion network, called the ESPFNet. The network uses spectral feature images and polarization-encoded images as inputs. The experimental results show that polarization information performs better in segmenting camouflaged plates, while spectral information performs better in segmenting camouflaged nets. This is because camouflaged plates are coated with anti-spectral paint, which makes their spectral curves consistent with the background [14], so they are difficult to differentiate using spectral information alone. Their polarization properties, however, differ significantly from those of the background environment, so using polarization information alone improves detection accuracy. The opposite situation is found in detecting the camouflaged nets [15]. These phenomena indicate that it is difficult to accurately distinguish between different types of targets based solely on visible light information or on spectral or polarization information individually. Therefore, by appropriately integrating the features of both the spectral and the polarization information, the detection performance for artificial targets can be significantly improved.

Compared to the other dual-modal networks, the ESPFNet segments camouflaged plates accurately, and its edge segmentation of camouflaged nets is the closest to the labels. This is because the ESPFNet is specifically designed to address feature fusion, receptive field expansion, and the integration of attention mechanisms between different modalities [43]. These strategies contribute to its superior performance compared with the other dual-modal segmentation networks. The camouflaged plates are small, and the other four dual-modal networks do not adequately address the loss of information for small objects, resulting in poor detection performance for camouflaged plates [20]. Additionally, the complex shape of the camouflaged nets' edges makes it challenging for the other networks to extract fine edge details, leading to rough segmentation results for the camouflaged nets. The ESPFNet precisely integrates and uses information from both modalities, expands the receptive field, improves the segmentation performance for small objects, and refines the segmentation results using the RDB module.

The simple addition-based fusion method cannot fully use the complementary and differential information of the spectral–polarimetric data [44]. The CABF module uses an attention mechanism to capture the features of the target in the spectral and polarization images, coordinates the weights of the feature information of the two modalities, and leverages their complementarity. It optimizes the feature information in a unified modality and effectively integrates the useful information from both modalities. The RDB module employs attention mechanisms that allow the network to learn how to use global information to enhance relevant features, thereby strengthening the network's capability to classify pixels. The ASPP addresses the limited receptive field of standard convolutions by extensively using dilated convolutions. However, the ASPP simply concatenates the multi-scale contextual features without considering the relationships among them, which may result in inconsistent predictions [45,46]. In comparison, the CASPP ensures sufficient interaction among spectral–polarimetric features at different scales, further expanding the receptive field and strengthening the connections between features at different scales.
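The difference between addition-based fusion and weighted fusion can be illustrated with a toy example. The sketch below is not the CABF module: it replaces learned attention with per-channel softmax weights computed from the magnitudes of two pooled feature vectors, purely to show how weighting lets the more informative modality dominate each channel. All names and values are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a short list of scores."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def add_fusion(spec, pol):
    """Baseline fusion: element-wise sum of the two modalities."""
    return [s + p for s, p in zip(spec, pol)]


def weighted_fusion(spec, pol):
    """Toy stand-in for attention: per-channel convex combination.

    Weights come from channel magnitudes here; a real attention module
    would learn them from data.
    """
    fused = []
    for s, p in zip(spec, pol):
        w_s, w_p = softmax([abs(s), abs(p)])
        fused.append(w_s * s + w_p * p)
    return fused


# Hypothetical pooled channel responses: channel 0 is strong in the spectral
# branch, channel 1 is strong in the polarization branch.
spectral = [4.0, 0.1]
polarization = [0.2, 3.0]

added = add_fusion(spectral, polarization)
weighted = weighted_fusion(spectral, polarization)
print(added, [round(v, 3) for v in weighted])
```

In the weighted variant each fused channel stays between the two input responses and close to the stronger one, whereas plain addition treats both modalities equally regardless of which carries the useful signal.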

The ESPFNet’s encoder–decoder architecture can simultaneously extract spectral and polarimetric information. However, because the same feature extraction method is used for both modalities, the differences between the two modalities’ data are not fully considered. In future work, we will consider designing dedicated feature extraction branches for spectral and polarization images, further enhancing the autonomous reconnaissance capability of UAVs in complex environments.

5. Conclusions

This study introduces an efficient spectral–polarimetric fusion network (ESPFNet) for real-time semantic segmentation, which incorporates a highly efficient attention mechanism. The ESPFNet uses a dual-stream approach to simultaneously extract feature information from both the spectral feature images and the polarization-encoded images. The network includes a CABF module, which reduces the modality differences and exploits the complementarity between the two modalities. Additionally, a CASPP module is designed to connect the decoder and encoder, enhancing the feature information in the decoder’s output. Furthermore, an RDB module is introduced to further enhance the segmentation performance of the model. Experimental results on the SPIAO dataset demonstrate that the ESPFNet achieves an MIoU of 80.4% and an MPA of 88.1%, with a detection speed of 27.5 FPS. This algorithm enhances the autonomous reconnaissance capability of UAVs.

Author Contributions

Conceptualization, Y.S., F.H., X.L. and S.W.; methodology, X.L.; software, X.L.; investigation, X.L., S.Z., Y.X. and D.Z.; formal analysis, X.L., Y.X. and S.W.; writing—original draft preparation, X.L. and Y.S.; writing—review and editing, Y.S., X.L., F.H. and S.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (62005049); Natural Science Foundation of Fujian Province (2020J01451); Education and Scientific Research Foundation for Young Teachers in Fujian Province (JAT190003).

Data Availability Statement

The data generated and analyzed during this study are available from the corresponding author by request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Huang, Y.; Ding, W.; Li, H. Haze removal for UAV reconnaissance images using layered scattering model. Chin. J. Aeronaut. 2016, 29, 502–511.

2. Gao, S.; Wu, J.; Ai, J. Multi-UAV reconnaissance task allocation for heterogeneous targets using grouping ant colony optimization algorithm. Soft Comput. 2021, 25, 7155–7167.

3. Yang, X.; Xu, W.; Jia, Q.; Liu, J. MF-CFI: A fused evaluation index for camouflage patterns based on human visual perception. Def. Technol. 2021, 17, 1602–1608.

4. Bi, H.; Zhang, C.; Wang, K.; Tong, J.; Zheng, F. Rethinking Camouflaged Object Detection: Models and Datasets. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 5708–5724.

5. Mondal, A. Camouflaged Object Detection and Tracking: A Survey. Int. J. Image Graph. 2020, 20, 2050028.

6. Feng, X.; Guoying, C.; Richang, H.; Jing, G. Camouflage texture evaluation using a saliency map. Multimed. Syst. 2015, 21, 165–175.

7. Zhang, X.; Zhu, C.; Wang, S.; Liu, Y.; Ye, M. A Bayesian Approach to Camouflaged Moving Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2017, 27, 2001–2013.

8. Hall, J.R.; Cuthill, I.C.; Baddeley, R.; Shohet, A.J.; Scott-Samuel, N.E. Camouflage, detection and identification of moving targets. Proc. Biol. Sci. 2013, 280, 20130064.

9. Fan, D.; Ji, G.; Sun, G.; Cheng, M.; Shen, J.; Shao, L. Camouflaged Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2777–2787.

10. Wang, K.; Bi, H.; Zhang, Y.; Zhang, C.; Liu, Z.; Zheng, S. D2C-Net: A dual-branch, dual-guidance and cross-refine network for camouflaged object detection. IEEE Trans. Ind. Electron. 2021, 69, 5364–5374.

11. Zhou, T.; Zhou, Y.; Gong, C. Feature aggregation and propagation network for camouflaged object detection. IEEE Trans. Image Process. 2022, 31, 7036–7047.

12. Mei, H.; Ji, G.; Wei, Z.; Yang, X.; Wei, X.; Fan, D. Camouflaged object segmentation with distraction mining. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8772–8781.

13. Tan, J.; Zhang, J.; Zou, B. Camouflage target detection based on polarized spectral features. In Proceedings of the SPIE 9853, Polarization: Measurement, Analysis, and Remote Sensing XII, Baltimore, MD, USA, 17–21 May 2016.

14. Shen, Y.; Lin, W.; Wang, Z.; Li, J.; Sun, X.; Wu, X.; Wang, S.; Huang, F. Rapid detection of camouflaged artificial target based on polarization imaging and deep learning. IEEE Photonics J. 2021, 13, 1–9.

15. Shen, Y.; Li, J.; Lin, W.; Chen, L.; Huang, F.; Wang, S. Camouflaged target detection based on snapshot multispectral imaging. Remote Sens. 2021, 13, 3949.

16. Zhou, P.C.; Liu, C.C. Camouflaged target separation by spectral-polarimetric imagery fusion with shearlet transform and clustering segmentation. In Proceedings of the International Symposium on Photoelectronic Detection and Imaging 2013: Imaging Sensors and Applications, Beijing, China, 21 August 2013.

17. Islam, M.N.; Tahtali, M.; Pickering, M. Hybrid fusion-based background segmentation in multispectral polarimetric imagery. Remote Sens. 2020, 12, 1776.

18. Tan, J.; Zhang, J.; Zhang, Y. Target detection for polarized hyperspectral images based on tensor decomposition. IEEE Geosci. Remote Sens. Lett. 2017, 14, 674–678.

19. Zhang, J.; Tan, J.; Zhang, Y. Joint sparse tensor representation for the target detection of polarized hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2235–2239.

20. Xiang, K.; Yang, K.; Wang, K. Polarization-driven semantic segmentation via efficient attention-bridged fusion. Opt. Express 2021, 29, 4802–4820.

21. Blin, R.; Ainouz, S.; Canu, S.; Meriaudeau, F. The PolarLITIS dataset: Road scenes under fog. IEEE Trans. Intell. Transp. Syst. 2022, 23, 10753–10762.

22. Sattar, S.; Lapray, P.; Foulonneau, A.; Bigué, L. Review of spectral and polarization imaging systems. In Proceedings of the Unconventional Optical Imaging II, Online, 6–10 April 2020; SPIE: Bellingham, WA, USA, 2020; Volume 11351, pp. 191–203.

23. Ning, J.; Xu, Z.; Wu, D.; Zhang, R.; Wang, Y.; Xie, Y.; Zhao, W.; Ren, W. Compressive circular polarization snapshot spectral imaging. Opt. Commun. 2021, 491, 126946.

24. Son, D.; Kwon, H.; Lee, S. Visible and near-infrared image synthesis using PCA fusion of multiscale layers. Appl. Sci. 2020, 10, 8702.

25. Li, N.; Zhao, Y.; Pan, Q.; Kong, S.G. Demosaicking DoFP images using Newton’s polynomial interpolation and polarization difference model. Opt. Express 2019, 27, 1376–1391.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

27. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

28. Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

29. Roy, A.G.; Navab, N.; Wachinger, C. Concurrent spatial and channel squeeze & excitation in fully convolutional networks. In Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Granada, Spain, 16–20 September 2018; pp. 421–429.

30. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

31. Wang, Q.; Zhang, F.; Li, X. Optimal clustering framework for hyperspectral band selection. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5910–5922.

32. Shi, B.; Liu, C.; Sun, W.; Chen, N. Sparse nonnegative matrix factorization for hyperspectral optimal band selection. Acta Geod. Cartogr. Sin. 2013, 42, 351–357.

33. Matteoli, S.; Diani, M.; Theiler, J. An overview of background modeling for detection of targets and anomalies in hyperspectral remotely sensed imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 2317–2336.

34. Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020, 12, 2659.

35. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

36. Wang, C.; Bochkovskiy, A.; Liao, H.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

37. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

38. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

39. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5693–5703.

40. Guo, M.; Lu, C.; Hou, Q.; Liu, Z.; Cheng, M.; Hu, S. SegNeXt: Rethinking convolutional attention design for semantic segmentation. arXiv 2022, arXiv:2209.08575.

41. Kim, J.; Koh, J.; Kim, Y.; Choi, J.; Hwang, Y.; Choi, J.W. Robust deep multi-modal learning based on gated information fusion network. In Proceedings of the 2018 Asian Conference on Computer Vision (ACCV), Perth, Australia, 2–6 December 2018; pp. 90–106.

42. Hu, X.; Yang, K.; Fei, L.; Wang, K. ACNET: Attention based network to exploit complementary features for rgbd semantic segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1440–1444.

43. Seichter, D.; Köhler, M.; Lewandowski, B.; Wengefeld, T.; Gross, H.M. Efficient rgb-d semantic segmentation for indoor scene analysis. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13525–13531.

44. Cao, Z. C3Net: Cross-modal feature recalibrated, cross-scale semantic aggregated and compact network for semantic segmentation of multi-modal high-resolution aerial images. Remote Sens. 2021, 13, 528.

45. Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G.W. Understanding convolution for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1451–1460.

46. Zhou, W.; Lv, Y.; Lei, J.; Yu, L. Global and local-contrast guides content-aware fusion for rgb-d saliency prediction. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 3641–3649.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).