1. Introduction

Remote sensing object detection is the process of determining the location and the category of objects in optical remote sensing images. In recent years, a large number of remote sensing object detection methods [1,2] have been proposed based on deep learning. As an important and practical research field, object detection is not only used to detect ships [3], airports [4], vehicles [5] and other objects, but is also widely used in object tracking [6], instance segmentation [7], caption generation [8] and many other fields. A detector consists of a position regressor and a category classifier. The coupling problem between these two components has always been a concern in the object detection task.

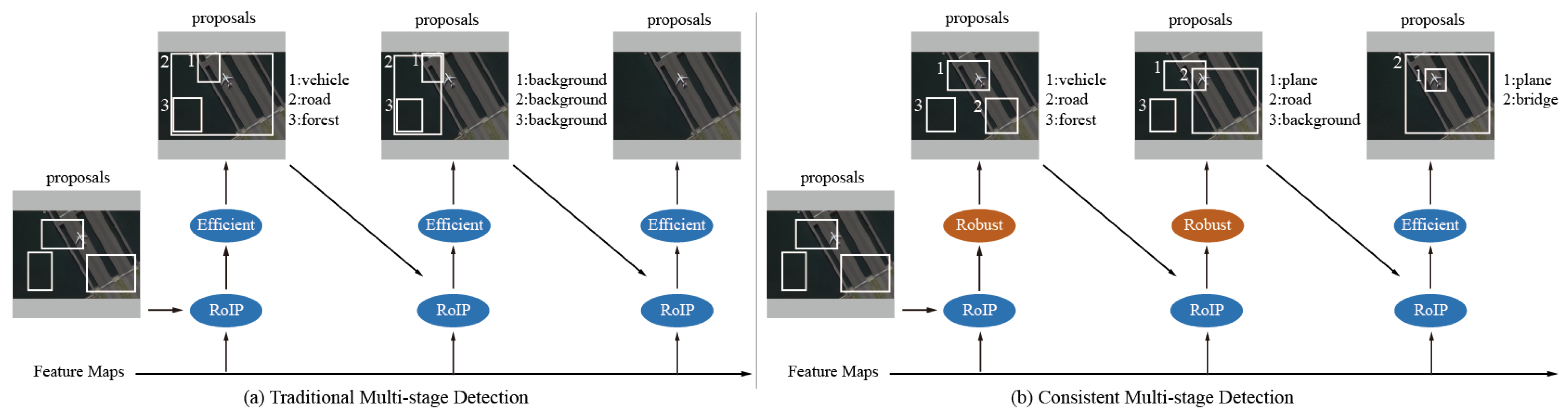

Recently, many object detection methods have been proposed, and the mainstream methods are based on deep learning. As shown in Figure 1a, deep learning-based methods can be roughly divided into the following steps: feature extraction, region proposal, Region of Interest Pooling (RoIP), classification and regression. The region proposal step pre-generates regions where objects may exist. The RoIP samples features in the pre-generated regions.

The above methods use a single network head (including classification and regression) to detect objects. The singularity of the network head makes the framework lack efficiency in both classification and regression [9]. To deal with this problem, many studies have addressed multi-stage object detection, which uses multiple cascaded network heads to improve the accuracy of the bounding boxes. The framework of multi-stage object detection is shown in Figure 1b. Some examples are as follows. Li et al. [9] proposed a group recursive learning network consisting of three cascaded network heads: weakly supervised object segmentation, object proposal generation and recursive detection refinement. Cai et al. [10] proposed Cascade R-CNN with different Intersection over Union (IoU) thresholds to deal with the problem of insufficient training samples in the later network heads.

However, current multi-stage methods have an inherent problem: the coupling error is transmitted along multiple network heads [10,11,12]. In the aforementioned methods, the traditional classify-to-regress coupling is a one-for-one exact matching. First, this one-for-one exact matching is likely to transmit classification errors to regression. As shown in Figure 1a,b, the network finally adopts the regressor corresponding to the classification result. Second, a false regression makes the input proposal of the RoIP deviate from the true object. Therefore, the RoIP cannot sample useful features, which further leads to classification errors in the next network head [11]. In other words, the regression error is transmitted to the next network head. Finally, these two kinds of errors are repeated across multiple network heads, resulting in errors in the final detection result. This iterative error transmission in multi-stage detection heads urgently needs to be addressed.
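The one-for-one coupling described above can be illustrated with a minimal sketch. It assumes a head that outputs one score per class and one set of box offsets per class; the array shapes, the example values and the function name `select_regression` are illustrative assumptions, not the implementation used in this paper. Because the regressor is indexed by the predicted class, a misclassification also selects the wrong offsets.

```python
import numpy as np


def select_regression(class_scores, class_deltas):
    """Return the predicted class and the box offsets coupled to it.

    class_scores: (num_classes,) classification scores for one proposal.
    class_deltas: (num_classes, 4) class-specific offsets (dx, dy, dw, dh).
    """
    predicted_class = int(np.argmax(class_scores))
    return predicted_class, class_deltas[predicted_class]


# Hypothetical outputs for one proposal whose true class is 0.
scores = np.array([0.45, 0.55])               # class 1 wins by mistake
deltas = np.array([[0.1, 0.0, 0.0, 0.0],      # offsets predicted for class 0
                   [-0.8, 0.5, 0.3, 0.3]])    # offsets predicted for class 1

cls, used = select_regression(scores, deltas)
# The classification error is passed on to regression: the class-1 offsets
# are applied although the object belongs to class 0.
print(cls, used)
```

In a cascade, the box refined with the wrong offsets becomes the proposal of the next head, which is how the error keeps propagating.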

In order to overcome the coupling error transmitting problem, the Consistent Multi-stage Detection (CMD) framework is proposed. As shown in Figure 1c, the proposed CMD framework consists of the following parts.

First, the proposed method introduces the concepts of a robust coupling head and an efficient coupling head for coarse boxes and fine boxes, respectively. In contrast, prior works only used the fine coupling head. Second, the proposed method adopts coupling mechanisms that are consistent with the change in boxes during multiple detection stages. The boxes tend to change from coarse to fine, so the adopted coupling mechanisms also follow this trend. The proposed model is therefore a relatively consistent method.

In summary, the main contributions of our work are as follows.

Concepts of a robust head and efficient head are proposed. Through various experiments, the functions of two kinds of coupling methods are validated. The robust coupling method is helpful for avoiding detection errors for coarse proposals. The efficient coupling method usually achieves better performance on fine proposals but worse performance on coarse proposals.

Fineness-consistent multi-head cooperation mechanisms are investigated between the robust coupling head and the efficient coupling head. These cooperation mechanisms are designed to be consistent with the coarse-to-fine trend of the object bounding boxes during the multi-stage detection process.

A novel network head architecture, consistent multi-stage detection, is proposed to deal with the coupling error transmitting problem by adopting an appropriate multi-head cooperation mechanism. Experiments with different backbone networks on three widely used remote sensing object detection data sets have shown the effectiveness of the proposed framework.

The rest of this paper is organized as follows. In Section 2, some existing object detection methods are reviewed. Section 3 describes the proposed method in detail. Finally, the experimental results and discussion are reported in Section 4, and conclusions are drawn in Section 5.

4. Results and Analysis

In this section, we sequentially introduce our experiments from four different aspects: data set description, evaluation metrics, implementation details and experimental results. Details of these parts are illustrated as follows.

4.1. Data Set Description

To evaluate our localization model, experiments are implemented on three remote sensing object detection data sets: DIOR [40], HRRSD [41] and NWPU VHR-10 [18]. Details of these data sets are introduced below.

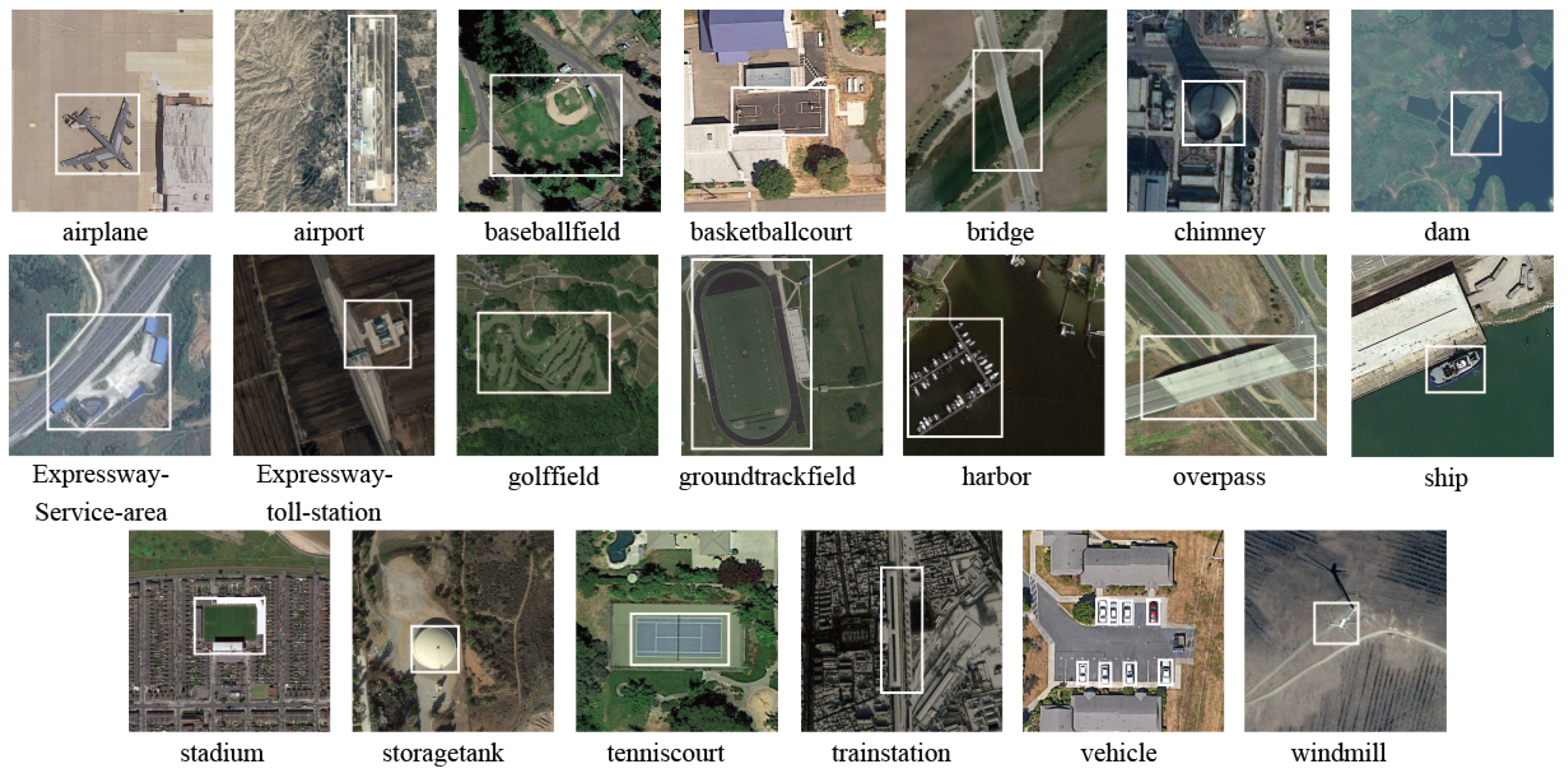

4.1.1. DIOR

The DIOR data set is the most recently proposed remote sensing object detection data set, which is a large-scale benchmark data set. This data set contains 23,463 optical remote sensing images. In total, 190,288 instances of 20 class objects are distributed in these images.

Some examples of DIOR are shown in Figure 5. In this data set, objects of the same class have different sizes, which can increase the difficulty of detection [40]. Moreover, object instance numbers and class numbers are abundant. Therefore, DIOR is a large-scale and hard data set.

4.1.2. HRRSD

The HRRSD data set is a balanced remote sensing object detection data set, which means that object instances of different classes have similar quantities. Moreover, it is also a large-scale data set containing 21,761 optical remote sensing images. In total, 55,740 instances of 13 object classes are distributed in these images.

Some examples of HRRSD are shown in Figure 6. In this data set, the object numbers are balanced across classes, which makes the training samples of each class sufficient [41]. Moreover, this data set contains a large number of object instances. Therefore, HRRSD is a large-scale and balanced data set.

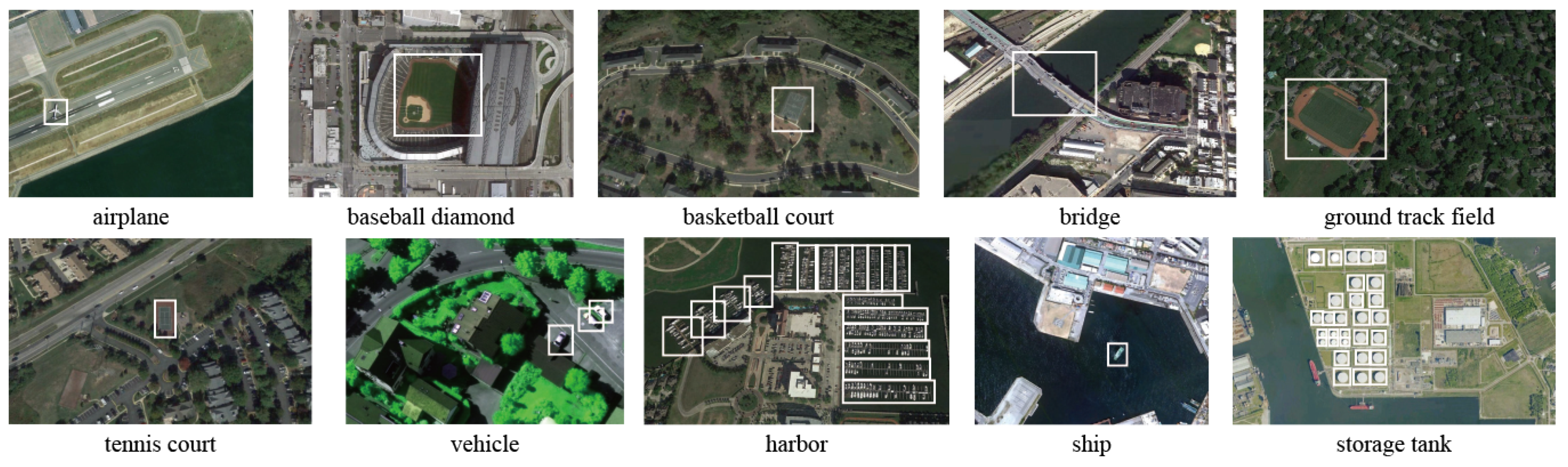

4.1.3. NWPU VHR-10

The NWPU VHR-10 data set is an early remote sensing object detection data set, which was proposed in 2014. This data set contains 800 optical remote sensing images. In total, 800 instances of 10 class objects are distributed in these images.

Some examples of NWPU VHR-10 are shown in Figure 7. NWPU VHR-10 is a small-scale data set and was one of the earliest remote sensing object detection data sets.

4.2. Evaluation Metrics

In order to quantitatively validate the efficiency of the proposed method, we adopt the most-used evaluation metrics in object detection: Average Precision (AP) for each class and mean AP (mAP) for each data set. Moreover, Intersection over Union (IoU) is used for location evaluation of objects.

4.2.1. AP and mAP

To clearly explain AP, it is necessary to introduce the confusion matrix, which is shown in Table 1. As shown in this table, TP represents the true positive object that is correctly predicted as positive. Similarly, FP represents the false positive object that is falsely predicted as positive, FN represents the false negative object that is falsely predicted as negative and TN represents the true negative object that is correctly predicted as negative. Then the formulas for precision and recall are:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}.$$

For a single object category, a Precision-Recall Curve (PRC) is drawn. For the PRC, the vertical axis and the horizontal axis are the precision and the recall, respectively. Since both the precision and the recall are no larger than one, the area under the PRC is taken as the Average Precision (AP), a synthetic measurement considering both the precision and the recall.

After the illustration of AP, it is easy to understand that the mean AP (mAP) is the mean value of the APs of all categories in a data set.
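As a rough sketch of how these metrics can be computed, the following code evaluates precision and recall from confusion-matrix counts and approximates the area under the PRC with a simple rectangle rule. The function names and the toy detections are assumptions made for illustration. Benchmarks often use interpolated variants of AP, so this is not necessarily the exact protocol used in the experiments.

```python
import numpy as np


def precision_recall(tp, fp, fn):
    """Precision and recall from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


def average_precision(scores, is_true_positive, num_ground_truth):
    """Non-interpolated area under the precision-recall curve for one class."""
    order = np.argsort(-np.asarray(scores, dtype=float))   # high score first
    hits = np.asarray(is_true_positive, dtype=bool)[order]
    tp = np.cumsum(hits)
    fp = np.cumsum(~hits)
    recall = tp / num_ground_truth
    precision = tp / (tp + fp)
    # Each detection contributes (recall increase) * (precision at that point).
    recall_steps = np.diff(np.concatenate(([0.0], recall)))
    return float(np.sum(recall_steps * precision))


def mean_average_precision(ap_per_class):
    """mAP is the mean of the per-class APs."""
    return float(np.mean(ap_per_class))


# Toy example: three detections of one class, two ground-truth objects.
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_ground_truth=2)
print(precision_recall(tp=8, fp=2, fn=2), ap, mean_average_precision([ap, 1.0]))
```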

4.2.2. IoU

For a single predicted box, both the category and the location are used for the assessment of detection results. In this evaluation process, the location accuracy of an object is measured by IoU.

Given the intersection area and the union area between the predicted bounding box and the label bounding box, IoU is their ratio:

$$\mathrm{IoU} = \frac{\text{intersection area}}{\text{union area}}.$$

Note that if a predicted bounding box has no intersection area with any label bounding box, the predicted bounding box is viewed as background.
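A minimal sketch of this measure for axis-aligned boxes follows. The `(x1, y1, x2, y2)` box format and the helper names `box_iou` and `is_background` are assumptions chosen for illustration.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_background(pred_box, label_boxes):
    """A prediction that overlaps no label box is treated as background."""
    return all(box_iou(pred_box, label) == 0.0 for label in label_boxes)


print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))          # intersection 1, union 7
print(is_background((0, 0, 1, 1), [(2, 2, 3, 3)]))  # True: no overlap
```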

4.3. Implementation Details

In this part, the implementation details of the experiments are illustrated from four aspects: experimental environment, preprocessing, training parameters and CMD parameters.

4.3.1. Experimental Environment

The following experiments are conducted on a server with seven NVIDIA Titan X GPUs. A comprehensive toolbox named MMDetection [42] is used in our experiments, with a software environment of CUDA 10.1, cuDNN 7.6.3, gcc-4.8, g++-4.9 and PyTorch 1.2.

4.3.2. Preprocessing

The images are first resized to (1333, 800) and padded, with a padding size of 32. Moreover, a randomly selected half of the training examples are flipped. Finally, the images are normalized with statistical information, including average values and standard deviations for the three channels (red, green and blue) of the current data set.
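The flipping, normalization and padding steps can be sketched with NumPy as below. Several points are assumptions: the flip is taken to be horizontal, the size of 32 is interpreted as padding each side up to a multiple of 32, the mean and standard deviation values are placeholders rather than the statistics of any data set, and the resizing step is omitted because it requires an interpolation routine.

```python
import numpy as np


def preprocess(image, mean, std, rng, flip_prob=0.5, divisor=32):
    """Flip, normalize and pad an already resized H x W x 3 RGB image."""
    image = image.astype(np.float32)
    if rng.random() < flip_prob:
        image = image[:, ::-1, :]             # horizontal flip (assumed)
    image = (image - mean) / std              # per-channel normalization
    height, width = image.shape[:2]
    pad_h = (-height) % divisor
    pad_w = (-width) % divisor
    # Zero-pad bottom and right so both sides become multiples of `divisor`.
    return np.pad(image, ((0, pad_h), (0, pad_w), (0, 0)))


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(800, 1333, 3), dtype=np.uint8)
mean = np.array([120.0, 115.0, 100.0], dtype=np.float32)   # placeholder values
std = np.array([60.0, 58.0, 57.0], dtype=np.float32)       # placeholder values
out = preprocess(image, mean, std, rng)
print(out.shape)  # (800, 1344, 3)
```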

4.3.3. Training Parameters

First of all, the experiments are trained rather than fine-tuned. The training is implemented with a batch size of 16 for 12 epochs. In each epoch, all samples of the training set are used only once. Second, the stochastic gradient descent optimizer is set as: a learning rate of , a weight decay of and a momentum of . Third, the RPNs are trained on samples without the ground-truth bounding boxes, which have IoU scores of . All the training samples are obtained with anchors generated on each pixel. The anchors on each pixel are with an aspect ratio of , and . Finally, 256 anchors are randomly selected for RPN training, and 512 RPN proposals are randomly selected for detector training.

4.3.4. CMD Parameters

Since the CMD contains multiple detectors, the training of CMD involves the IoU settings of each detector. In the proposed method, the IoU of each detector is set to in accordance with most current detectors.

4.4. Experimental Results

In this part, validation experiments are first implemented to show the function of robust detectors and fine detectors. After that, the CMD-based framework is compared with other methods in the contrast experiments.

4.4.1. Validation Experiments

To validate the function of robust detector and fine detector, CMDs with different structures are investigated on three data sets. In all the validation experiments, each of the different CMDs consists of three cascaded detectors. Each detector can be a robust detector, represented as “R”, or a fine detector, represented as “F”. According to the selection and order of “R” and “F”, four different structures of CMDs are implemented: CMD-RRR, CMD-RRF, CMD-RFF and CMD-FFF.

DIOR Validation: As shown in Table 2, the four types of CMDs are implemented on the DIOR data set. CMD-RRR, with three robust detectors, reaches the highest mAP of 60.0%. CMD-FFF, with three fine detectors, achieves the lowest mAP of 58.4%. Moreover, CMDs with more robust detectors tend to have better performance.

HRRSD Validation: As shown in Table 3, the four types of CMDs are implemented on the HRRSD data set. CMD-RRF, with two robust detectors and one fine detector, reaches the highest mAP of 88.7%. CMD-RFF, with one robust detector and two fine detectors, reaches the same mAP as CMD-RRF. CMD-FFF, with three fine detectors, reaches the lowest mAP of 88.1%.

NWPU VHR-10 Validation: As shown in Table 4, the four types of CMDs are implemented on the NWPU VHR-10 data set. CMD-RRR, with three robust detectors, reaches the highest mAP of 82.3%. CMD-FFF, with three fine detectors, reaches the lowest mAP of 72.3%. Moreover, CMDs with more robust detectors tend to have better performance.

Table 2. Validation Experiments on DIOR (%).

| class | Sp | ST | Sd | Bg | Vc | Ap | TC | WM | Dm | GF | BF |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CMD-RRR | 73.3 | 65.1 | 37.7 | 33.8 | 44.7 | 66.5 | 81.3 | 75.6 | 46.2 | 71.1 | 63.6 |
| CMD-RRF | 74.2 | 69.6 | 44.2 | 34.1 | 44.9 | 63.5 | 80.4 | 73.1 | 44.5 | 71.0 | 64.5 |
| CMD-RFF | 73.6 | 64.6 | 42.3 | 34.3 | 44.1 | 62.0 | 80.4 | 75.1 | 43.9 | 69.2 | 64.3 |
| CMD-FFF | 73.4 | 64.1 | 41.1 | 32.2 | 42.6 | 58.4 | 80.9 | 74.0 | 41.7 | 68.4 | 65.3 |

| class | BC | GTF | ESA | Hb | ETS | Op | Cn | Ar | TS | mAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CMD-RRR | 85.4 | 74.6 | 52.5 | 44.6 | 45.2 | 52.5 | 75.9 | 61.6 | 49.7 | 60.0 |
| CMD-RRF | 86.1 | 72.1 | 52.5 | 45.0 | 46.9 | 52.6 | 76.3 | 58.2 | 49.0 | 59.9 |
| CMD-RFF | 86.7 | 72.5 | 51.6 | 43.4 | 46.5 | 52.8 | 75.7 | 59.7 | 48.6 | 59.6 |
| CMD-FFF | 84.9 | 69.6 | 49.0 | 40.8 | 46.7 | 50.7 | 77.0 | 61.9 | 44.8 | 58.4 |

Table 3. Validation Experiments on HRRSD (%).

| class | Sp | Bg | GTF | ST | BC | TC | Ar | BD | Hb | Vc | CR | TJ | PL | mAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CMD-RRR | 91.5 | 87.3 | 97.7 | 95.7 | 68.2 | 93.1 | 98.1 | 91.5 | 93.7 | 94.8 | 92.5 | 77.4 | 66.7 | 88.3 |
| CMD-RRF | 92.8 | 88.2 | 97.9 | 95.9 | 69.3 | 93.0 | 98.5 | 90.9 | 94.2 | 92.9 | 92.4 | 77.7 | 67.9 | 88.7 |
| CMD-RFF | 91.6 | 88.3 | 97.7 | 95.5 | 69.3 | 93.7 | 98.5 | 91.0 | 95.1 | 95.0 | 93.2 | 78.1 | 66.8 | 88.7 |
| CMD-FFF | 92.2 | 86.4 | 97.5 | 95.3 | 68.8 | 93.3 | 98.6 | 90.7 | 94.4 | 95.7 | 92.0 | 75.5 | 65.5 | 88.1 |

Table 4. Validation Experiments on NWPU VHR-10 (%).

| class | Sp | Bg | GTF | ST | BC | TC | Ar | BD | Hb | Vc | mAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CMD-RRR | 93.3 | 42.8 | 85.3 | 95.4 | 72.4 | 80.6 | 99.4 | 96.8 | 69.7 | 87.0 | 82.3 |
| CMD-RRF | 89.9 | 24.5 | 76.0 | 96.6 | 50.8 | 79.1 | 99.2 | 96.6 | 58.4 | 85.4 | 75.7 |
| CMD-RFF | 90.9 | 37.2 | 70.1 | 96.3 | 71.5 | 77.7 | 99.6 | 97.2 | 60.3 | 86.2 | 78.7 |
| CMD-FFF | 84.2 | 19.2 | 54.3 | 95.7 | 62.2 | 75.3 | 99.1 | 96.4 | 51.4 | 85.5 | 72.3 |

According to these results, we can find three clues for the analysis of the robust detector and the fine detector. Among the four CMDs, CMD-FFF always has the worst performance on the different data sets, CMD-RRF and CMD-RFF reach the best performance on HRRSD, and CMD-RRR reaches the best performance on DIOR and NWPU VHR-10. The details of the analyses are as follows.

First, among the four CMDs, CMD-FFF always achieves the worst performance on different data sets. Obviously, CMD-RFF can always surpass CMD-FFF; in other words, replacing the first fine detector in CMD-FFF with a robust detector can steadily improve model performance. This phenomenon implies that the robust detector works better in the earlier stages, with relatively coarse bounding boxes as input.

Second, among the four CMDs, CMD-RRF and CMD-RFF reach the best performance on HRRSD. Note that the CMD-FFF mAP on HRRSD reaches a high score of nearly 90%. This means that the RPN trained on HRRSD, a balanced large-scale data set, provides proposals fine enough to use three fine detectors. Under this condition, CMD-RRF and CMD-RFF show better performance than CMD-RRR. This phenomenon implies that the fine detector works better in the later stages, with relatively fine bounding boxes.

Third, among the four CMDs, CMD-RRR reaches the best performance on DIOR and NWPU VHR-10. Note that the CMD-FFF mAPs on DIOR and NWPU VHR-10 do not surpass 75%. This means that the RPNs trained on DIOR, a hard large-scale data set, and NWPU VHR-10, a small-scale data set, provide proposals too coarse to use three fine detectors. Under this condition, CMD-RRR shows better performance than CMD-RRF or CMD-RFF. Compared with the validation experiments on HRRSD, where the RPN is trained to provide fine proposals, we can find that more robust detectors are preferred if coarse proposals are provided, and more fine detectors are preferred if fine proposals are provided. This conclusion can also be inferred from the performance (mAP) order of the CMDs in Table 2, CMD-RRR > CMD-RRF > CMD-RFF > CMD-FFF, and from Table 4, where CMD-RRR is the best and CMD-FFF is the worst, although CMD-RFF (78.7%) outperforms CMD-RRF (75.7%).

4.4.2. Ablation Experiments

To evaluate model effectiveness, ablation experiments are conducted. In Exp. 2, the experiment without (w/o) the RFF schedule denotes a normal three-stage network with normal heads, i.e., the FFF schedule. Then, in Exp. 3 and 4, the number of cascaded detection heads is reduced. As shown in Table 5, the proposed method shows better performance than the ablation experiments, Exp. 2 to 4. This validates the effectiveness of the proposed model and schedule.

4.4.3. Contrast Experiments

To evaluate the proposed CMD-based object detection framework, some other state-of-the-art frameworks are adopted as contrast methods, the majority of which are illustrated as follows:

R-CNN [31]: Region-based Convolutional Neural Network (R-CNN) is the first deep feature-based object detection framework, which consists of several processes: proposal generation, region cropping, feature extraction, classification and regression.

RICNN [43]: Rotation-Invariant Convolutional Neural Network (RICNN) proposed a new rotation-invariant layer with the AlexNet CNN as the backbone network.

FasterR-CNN_r101 [33]: Faster R-CNN is built on the basis of R-CNN and simultaneously improves both the speed and accuracy of detection through the optimization and integration of different parts. This framework takes the 101-layer ResNet [44] for feature extraction.

FasterR-CNN_r50/_r101+FPN: These are compound object detection frameworks from [42]. They take the ResNet [44] with 50 or 101 layers for feature extraction and adopt the Feature Pyramid Network (FPN) [45] to enhance the extracted features.

YOLO-v3 [28]: YOLO-v3 is an incremental improvement of a state-of-the-art method that is known for its lightweight and high-speed characteristics.

YOLOF [29]: You Only Look One-level Feature (YOLOF) analyzed the success of FPN and proposed an efficient single-level feature method.

Dynamic R-CNN [46]: Dynamic R-CNN designed a dynamic adjustment scheme for model parameters during training.

After introducing the contrast methods, the proposed methods are denoted as FasterR-CNN_r{N}+FPN+CMD-{D}, where N and D are chosen from the sets {50, 101} and {RRR, RFF}, respectively. Contrast experiments between the proposed methods and the contrast methods on different data sets are conducted as follows.

DIOR Contrast: As shown in Table 6, different methods are implemented on the DIOR data set. The proposed CMD-RRR on FasterR-CNN_r101+FPN reaches the best performance, with a mAP of 61.6%. The proposed CMD-RRR improves the mAP on FasterR-CNN_r50+FPN by 1.9% and on FasterR-CNN_r101+FPN by 1.1%. The proposed CMD-RFF improves the mAP on FasterR-CNN_r50+FPN by 1.5% and on FasterR-CNN_r101+FPN by 0.8%. On this data set, CMD-RRR shows better performance than CMD-RFF.

HRRSD Contrast: As shown in Table 7, different methods are implemented on the HRRSD data set. The proposed CMD-RFF on FasterR-CNN_r101+FPN reaches the second-best performance, with a mAP of 90.3%. The proposed CMD-RRR improves the mAP on FasterR-CNN_r50+FPN by 2.3% but reduces it on FasterR-CNN_r101+FPN by 1.6%. The proposed CMD-RFF improves the mAP on FasterR-CNN_r50+FPN by 2.7% but reduces it on FasterR-CNN_r101+FPN by 0.9%. On this data set, CMD-RFF shows better performance than CMD-RRR.

NWPU VHR-10 Contrast: As shown in Table 8, different methods are implemented on the NWPU VHR-10 data set. The proposed CMD-RRR on FasterR-CNN_r101+FPN reaches the best performance, with a mAP of 87.4%. According to the mAP values in Table 8, the proposed CMD-RRR improves the mAP on FasterR-CNN_r50+FPN by 12.0% and on FasterR-CNN_r101+FPN by 4.9%. The proposed CMD-RFF improves the mAP on FasterR-CNN_r50+FPN by 8.4% and on FasterR-CNN_r101+FPN by 3.0%. On this data set, CMD-RRR shows better performance than CMD-RFF.

Table 6. Contrast Experiments on DIOR (%).

| class | Sp | ST | Sd | Bg | Vc | Ap | TC | WM | Dm | GF | BF |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| R-CNN [31] | 9.1 | 18.0 | 60.8 | 15.6 | 9.1 | 43.0 | 54.0 | 16.4 | 33.7 | 50.1 | 53.8 |
| RICNN [43] | 9.1 | 19.1 | 61.1 | 25.3 | 11.4 | 61.0 | 63.5 | 31.5 | 41.1 | 55.9 | 60.1 |
| FasterR-CNN [33] | 27.7 | 39.8 | 73.0 | 28.0 | 23.6 | 49.3 | 75.2 | 45.4 | 62.3 | 68.0 | 78.8 |
| RIFD-CNN [47] | 31.7 | 41.5 | 73.6 | 29.0 | 28.5 | 53.2 | 79.5 | 46.9 | 63.1 | 68.9 | 79.9 |
| SSD [30] | 59.2 | 46.6 | 61.0 | 29.7 | 27.4 | 72.7 | 76.3 | 65.7 | 56.6 | 65.3 | 72.4 |
| YOLO-v3 [28] | 87.4 | 68.7 | 70.6 | 31.2 | 48.3 | 29.2 | 87.3 | 78.7 | 26.9 | 31.1 | 74.0 |
| FasterR-CNN_r50+FPN [42] | 73.3 | 63.2 | 47.8 | 32.0 | 43.2 | 54.2 | 80.5 | 74.4 | 37.1 | 65.6 | 66.0 |
| FasterR-CNN_r50+FPN+CMD-RRR | 73.3 | 65.1 | 37.7 | 33.8 | 44.7 | 66.5 | 81.3 | 75.6 | 46.2 | 71.1 | 63.6 |
| FasterR-CNN_r50+FPN+CMD-RFF | 73.6 | 64.6 | 42.3 | 34.3 | 44.1 | 62.0 | 80.4 | 75.1 | 43.9 | 69.2 | 64.3 |
| FasterR-CNN_r101+FPN [42] | 72.7 | 61.6 | 51.6 | 34.9 | 43.0 | 61.5 | 80.0 | 75.4 | 48.9 | 71.0 | 64.7 |
| FasterR-CNN_r101+FPN+CMD-RRR | 72.9 | 63.3 | 46.8 | 35.9 | 44.3 | 70.5 | 81.4 | 74.4 | 52.2 | 73.5 | 64.5 |
| FasterR-CNN_r101+FPN+CMD-RFF | 72.9 | 62.4 | 41.5 | 37.1 | 44.2 | 67.4 | 80.3 | 75.6 | 52.7 | 74.4 | 64.3 |

| class | BC | GTF | ESA | Hb | ETS | Op | Cn | Ar | TS | mAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| R-CNN [31] | 62.3 | 49.3 | 50.2 | 39.5 | 33.5 | 30.9 | 53.7 | 35.6 | 36.1 | 37.7 |
| RICNN [43] | 66.3 | 58.9 | 51.7 | 43.5 | 36.6 | 39.0 | 63.3 | 39.1 | 46.1 | 44.2 |
| FasterR-CNN [33] | 66.2 | 56.9 | 69.0 | 50.2 | 55.2 | 50.1 | 70.9 | 53.6 | 38.6 | 54.1 |
| RIFD-CNN [47] | 69.0 | 62.4 | 69.0 | 51.2 | 56.0 | 51.1 | 71.5 | 56.6 | 40.1 | 56.1 |
| SSD [30] | 75.7 | 68.6 | 63.5 | 49.4 | 53.1 | 48.1 | 65.8 | 59.5 | 55.1 | 58.6 |
| YOLO-v3 [28] | 78.6 | 61.1 | 48.6 | 44.9 | 54.4 | 49.7 | 69.7 | 72.2 | 29.4 | 57.1 |
| FasterR-CNN_r50+FPN [42] | 85.5 | 70.8 | 49.6 | 38.0 | 46.5 | 49.9 | 76.9 | 62.6 | 44.2 | 58.1 |
| FasterR-CNN_r50+FPN+CMD-RRR | 85.4 | 74.6 | 52.5 | 44.6 | 45.2 | 52.5 | 75.9 | 61.6 | 49.7 | 60.0 |
| FasterR-CNN_r50+FPN+CMD-RFF | 86.7 | 72.5 | 51.6 | 43.4 | 46.5 | 52.8 | 75.7 | 59.7 | 48.6 | 59.6 |
| FasterR-CNN_r101+FPN [42] | 86.0 | 72.0 | 53.6 | 42.8 | 50.0 | 52.5 | 77.8 | 58.0 | 52.0 | 60.5 |
| FasterR-CNN_r101+FPN+CMD-RRR | 85.3 | 73.7 | 55.0 | 46.2 | 48.8 | 54.3 | 76.7 | 55.4 | 57.5 | 61.6 |
| FasterR-CNN_r101+FPN+CMD-RFF | 86.0 | 71.7 | 54.3 | 47.0 | 51.5 | 55.0 | 77.1 | 56.2 | 53.6 | 61.3 |

Table 7. Contrast Experiments on HRRSD (%).

| class | Sp | Bg | GTF | ST | BC | TC | Ar |
| --- | --- | --- | --- | --- | --- | --- | --- |
| R-CNN [31] | 49.7 | 20.0 | 76.3 | 79.1 | 18.0 | 70.8 | 77.5 |
| RICNN [43] | 56.5 | 27.4 | 78.0 | 81.0 | 23.0 | 66.4 | 78.1 |
| FasterR-CNN [33] | 88.5 | 85.5 | 90.6 | 88.7 | 47.9 | 80.7 | 90.8 |
| YOLO-v3 [28] | 83.7 | 88.1 | 96.1 | 92.9 | 53.5 | 87.1 | 96.7 |
| YOLOF [29] | 90.1 | 89.2 | 96.7 | 90.6 | 61.0 | 89.9 | 97.3 |
| Dynamic R-CNN [46] | 88.9 | 89.2 | 97.2 | 93.4 | 69.0 | 92.7 | 96.9 |
| FasterR-CNN_r50+FPN [42] | 89.8 | 86.2 | 97.3 | 94.5 | 60.4 | 89.1 | 98.7 |
| FasterR-CNN_r50+FPN+CMD-RRR | 91.5 | 87.3 | 97.7 | 95.7 | 68.2 | 93.1 | 98.1 |
| FasterR-CNN_r50+FPN+CMD-RFF | 91.6 | 88.3 | 97.7 | 95.5 | 69.3 | 93.7 | 98.5 |
| FasterR-CNN_r101+FPN [42] | 94.4 | 89.6 | 97.9 | 96.5 | 77.6 | 96.4 | 97.8 |
| FasterR-CNN_r101+FPN+CMD-RRR | 92.9 | 89.8 | 98.4 | 95.6 | 70.6 | 93.1 | 98.4 |
| FasterR-CNN_r101+FPN+CMD-RFF | 93.2 | 90.4 | 98.4 | 95.9 | 71.6 | 93.7 | 98.1 |

| class | BD | Hb | Vc | CR | TJ | PL | mAP |
| --- | --- | --- | --- | --- | --- | --- | --- |
| R-CNN [31] | 57.6 | 54.1 | 41.3 | 25.9 | 2.4 | 16.6 | 45.3 |
| RICNN [43] | 59.6 | 47.8 | 52.0 | 26.6 | 9.3 | 20.5 | 48.2 |
| FasterR-CNN [33] | 86.9 | 89.4 | 84.0 | 88.6 | 75.1 | 63.3 | 81.5 |
| YOLO-v3 [28] | 89.1 | 95.0 | 81.1 | 91.5 | 75.0 | 53.1 | 83.3 |
| YOLOF [29] | 91.8 | 95.0 | 87.0 | 93.2 | 79.7 | 63.8 | 86.6 |
| Dynamic R-CNN [46] | 93.1 | 95.4 | 91.4 | 92.7 | 76.6 | 64.0 | 87.7 |
| FasterR-CNN_r50+FPN [42] | 90.4 | 94.3 | 92.6 | 90.2 | 71.9 | 63.0 | 86.0 |
| FasterR-CNN_r50+FPN+CMD-RRR | 91.5 | 93.7 | 94.8 | 92.5 | 77.4 | 66.7 | 88.3 |
| FasterR-CNN_r50+FPN+CMD-RFF | 91.0 | 95.1 | 95.0 | 93.2 | 78.1 | 66.8 | 88.7 |
| FasterR-CNN_r101+FPN [42] | 93.8 | 96.0 | 96.1 | 92.2 | 84.0 | 72.8 | 91.2 |
| FasterR-CNN_r101+FPN+CMD-RRR | 91.8 | 94.3 | 95.3 | 93.1 | 81.1 | 70.3 | 89.6 |
| FasterR-CNN_r101+FPN+CMD-RFF | 93.3 | 95.8 | 95.6 | 93.5 | 83.2 | 71.5 | 90.3 |

Table 8. Contrast Experiments on NWPU VHR-10 (%).

| class | Sp | Bg | GTF | ST | BC | TC | Ar | BD | Hb | Vc | mAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| R-CNN [31] | 63.7 | 45.4 | 81.2 | 84.3 | 46.8 | 35.5 | 70.1 | 83.6 | 62.3 | 44.8 | 61.8 |
| RICNN [43] | 77.3 | 61.5 | 86.7 | 85.3 | 58.5 | 40.8 | 88.4 | 88.1 | 68.6 | 71.1 | 72.6 |
| FasterR-CNN [33] | 89.9 | 80.9 | 100.0 | 67.3 | 87.5 | 78.6 | 90.7 | 89.2 | 89.8 | 88.0 | 86.2 |
| OneshotDet-r50 [48] | 90.4 | 53.1 | 97.0 | 96.9 | 41.3 | 9.5 | 7.3 | 2.9 | 74.8 | 56.0 | 52.92 |
| CoAE-r50 [49] | 87.0 | 79.23 | 100.0 | 88.2 | 75.2 | 4.1 | 9.1 | 19.47 | 94.5 | 77.86 | 63.47 |
| SCoDANet-r50 [50] | 90.0 | 86.2 | 99.8 | 90.8 | 79.7 | 6.75 | 18.5 | 16.81 | 87.1 | 77.66 | 65.33 |
| FasterR-CNN_r50+FPN [42] | 84.3 | 23.6 | 50.1 | 96.5 | 61.5 | 73.3 | 97.2 | 95.7 | 37.3 | 83.4 | 70.3 |
| FasterR-CNN_r50+FPN+CMD-RRR | 93.3 | 42.8 | 85.3 | 95.4 | 72.4 | 80.6 | 99.4 | 96.8 | 69.7 | 87.0 | 82.3 |
| FasterR-CNN_r50+FPN+CMD-RFF | 90.9 | 37.2 | 70.1 | 96.3 | 71.5 | 77.7 | 99.6 | 97.2 | 60.3 | 86.2 | 78.7 |
| FasterR-CNN_r101+FPN [42] | 91.4 | 45.4 | 79.1 | 96.1 | 76.0 | 76.1 | 99.7 | 97.5 | 76.0 | 87.3 | 82.5 |
| FasterR-CNN_r101+FPN+CMD-RRR | 94.0 | 53.1 | 92.8 | 96.8 | 80.5 | 81.9 | 99.9 | 97.8 | 85.9 | 91.1 | 87.4 |
| FasterR-CNN_r101+FPN+CMD-RFF | 92.1 | 56.6 | 83.8 | 97.3 | 80.0 | 80.2 | 99.7 | 97.5 | 79.3 | 88.5 | 85.5 |

According to these experiments, it is found that the proposed method can steadily improve the detection performance on different models and most data sets. Moreover, the proposed method reaches state-of-the-art performance on different data sets. Therefore, the proposed method is an effective method for the remote sensing object detection task.
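The mAP changes discussed in the contrast experiments are differences between a CMD variant and its FasterR-CNN+FPN baseline with the same backbone. The following sketch recomputes them from the mAP columns of Tables 6 to 8. The dictionary layout and the function name `map_gain` are illustrative choices, and the values are copied from the tables.

```python
# mAP (%) of the baseline and the two CMD schedules, from Tables 6, 7 and 8.
map_scores = {
    "DIOR": {
        "r50": {"base": 58.1, "RRR": 60.0, "RFF": 59.6},
        "r101": {"base": 60.5, "RRR": 61.6, "RFF": 61.3},
    },
    "HRRSD": {
        "r50": {"base": 86.0, "RRR": 88.3, "RFF": 88.7},
        "r101": {"base": 91.2, "RRR": 89.6, "RFF": 90.3},
    },
    "NWPU VHR-10": {
        "r50": {"base": 70.3, "RRR": 82.3, "RFF": 78.7},
        "r101": {"base": 82.5, "RRR": 87.4, "RFF": 85.5},
    },
}


def map_gain(dataset, backbone, schedule):
    """mAP difference (percentage points) between a CMD schedule and its baseline."""
    row = map_scores[dataset][backbone]
    return round(row[schedule] - row["base"], 1)


for dataset, backbones in map_scores.items():
    for backbone in backbones:
        for schedule in ("RRR", "RFF"):
            print(dataset, backbone, schedule, map_gain(dataset, backbone, schedule))
```

A negative value marks a case where the baseline is stronger, as happens for the 101-layer backbone on HRRSD.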

4.5. Failure Case Analysis

As shown in Table 2 and Table 3, the proposed schedules are not always effective. In other words, the FFF schedule sometimes reaches the best performance compared with the other schedules. Specifically, failure cases include the categories {baseball field, chimney, airplane and train station} in DIOR and {airplane, harbor and vehicle} in HRRSD. The robust head ‘R’ handles low-quality proposals more easily, but its accuracy is lower when the proposals are more precise. The input proposals for the failed categories are generally of better quality, so the accuracy after using the robust head is lower in these cases. More studies are to be conducted in the future.

5. Conclusions

In this article, novel network detector schedules on multi-stage convolutional neural network frameworks are built for object detection in remote sensing images. First, the robust detector and the fine detector are carefully defined to build the CMDs. Then, different network detector schedules are investigated to give suggestions for using robust and fine detectors. The conclusions of this investigation can be easily migrated to various other models. Experiments with different baselines on different data sets are conducted to show that the robust detector and the fine detector are appropriate for coarse and fine proposals, respectively. Finally, the proposed frameworks are compared with several state-of-the-art methods. These experiments have shown the following. First, the robust detector and the fine detector are appropriate for coarse proposals and fine proposals, respectively. Second, the order and the numbers of the two types of detectors should be designed in accordance with the coarse–fine degree of the input proposals at different stages. Finally, the CMD-based framework can steadily improve the model performance with various backbone networks on different data sets.