Adaptive Feature Attention Module for Robust Visual–LiDAR Fusion-Based Object Detection in Adverse Weather Conditions

Abstract

1. Introduction

- We propose an effective adaptive feature attention module (AFAM) that can be widely applied to boost the representation power of CNNs.

- We validate the effectiveness of our AFAM via ablation studies.

- We verify that AFAM–EfficientDet outperforms the baseline network, EfficientDet, on the benchmark Dense dataset.

2. Related Work

2.1. Camera-Based Object Detection

2.2. Lidar-Based Object Detection

2.3. Visual–Lidar-Based Object Detection

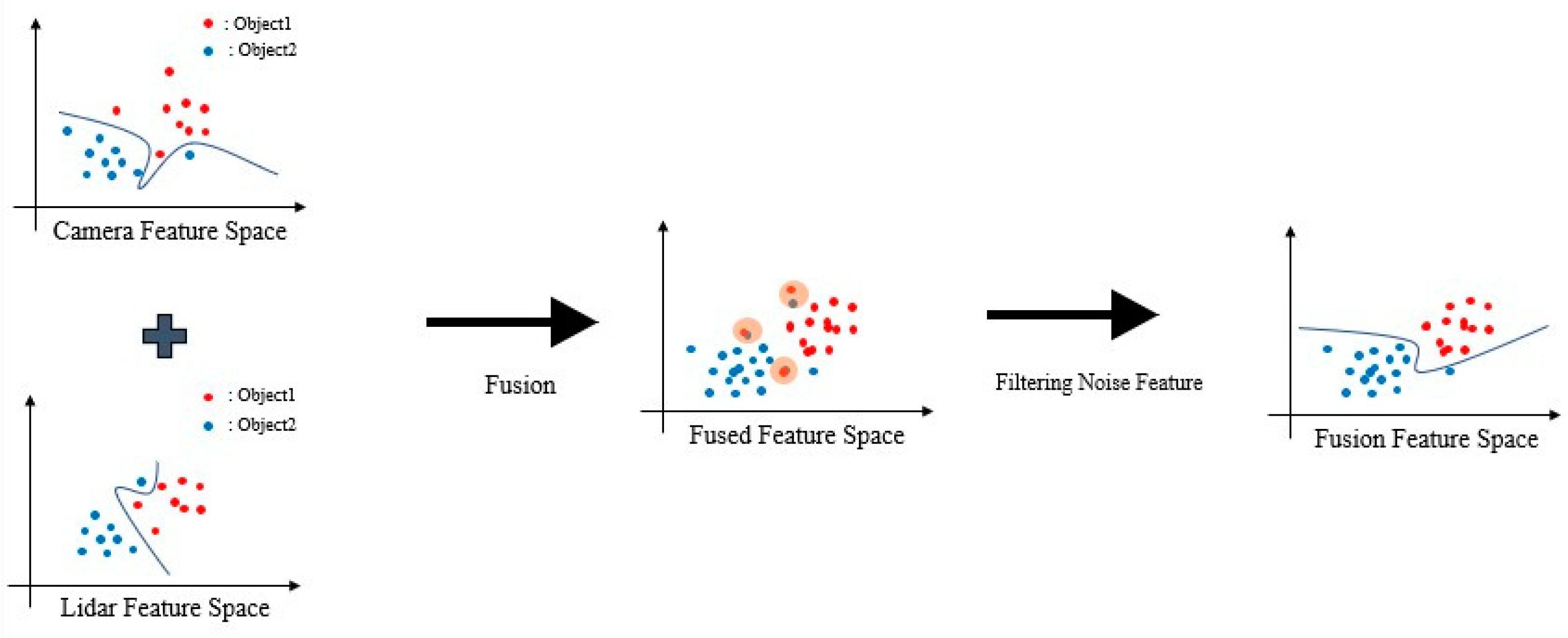

3. Proposed Method

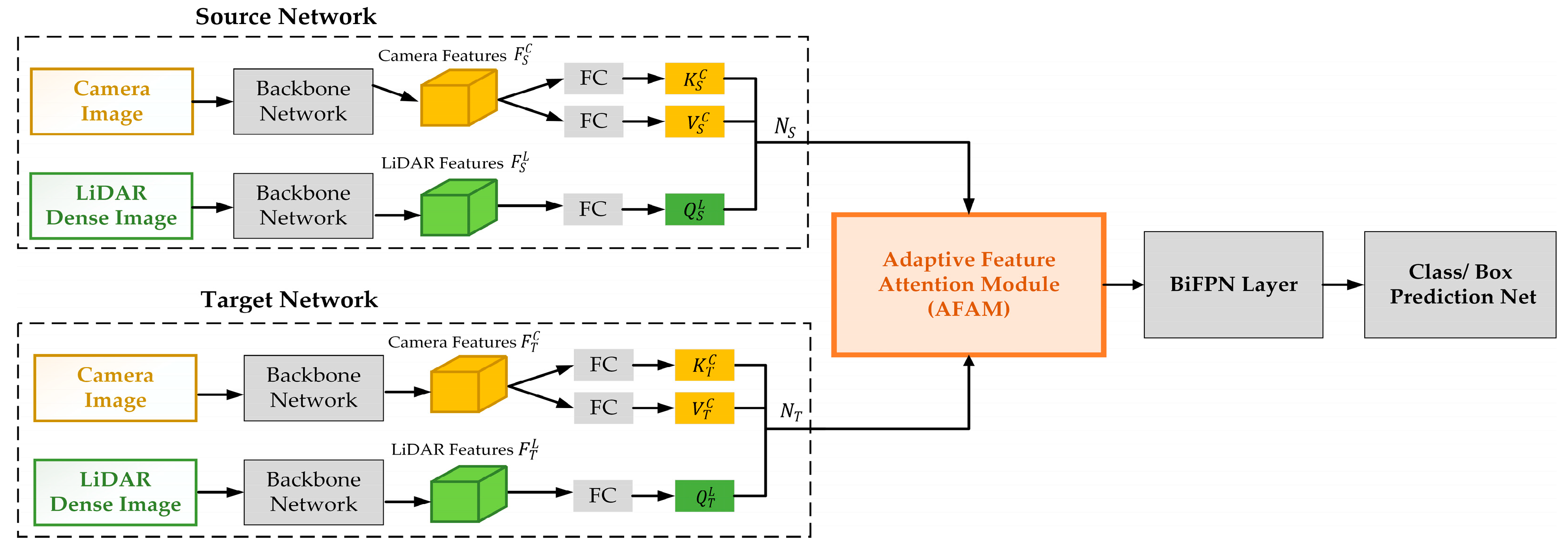

3.1. Network Pipeline

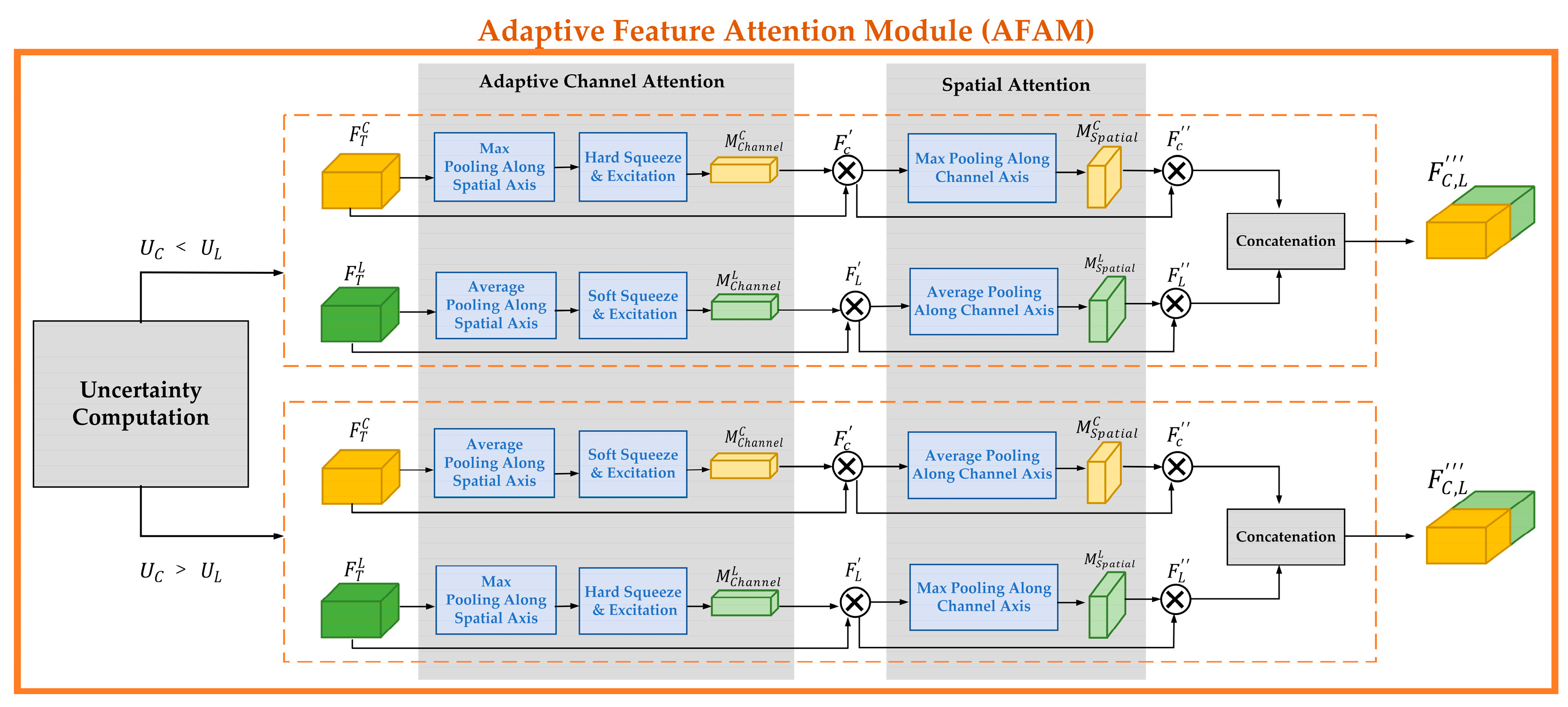

3.2. Adaptive Feature Attention Module (AFAM)

3.2.1. Uncertainty Computation

3.2.2. Adaptive Channel Attention

- Case 1: the lidar data uncertainty is lower than the camera uncertainty. In this case, max pooling is applied to the lidar features, while the camera features are average pooled.

- Case 2: the similarity between the camera features of the source and target networks is higher than that between the lidar features. In this case, max pooling is applied to the camera features, while the lidar features are average pooled.
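The two cases can be summarized as a small decision rule. The NumPy sketch below is an illustration under stated assumptions, not the authors' implementation: it assumes features are arrays of shape (C, H, W), it assumes a modality's uncertainty can be taken as one minus the cosine similarity between its source- and target-network features, and it assigns ties to Case 2. The helper names (`modality_uncertainty`, `select_pooling`, `channel_descriptor`) are hypothetical.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two feature maps after flattening."""
    a, b = a.ravel(), b.ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def modality_uncertainty(source_feat: np.ndarray, target_feat: np.ndarray) -> float:
    """ASSUMPTION (hypothetical): uncertainty = 1 - cosine similarity of source/target features."""
    return 1.0 - cosine_similarity(source_feat, target_feat)


def select_pooling(u_camera: float, u_lidar: float) -> dict:
    """Max-pool the lower-uncertainty modality and average-pool the other."""
    if u_lidar < u_camera:  # Case 1: lidar is the more reliable modality
        return {"camera": "avg", "lidar": "max"}
    return {"camera": "max", "lidar": "avg"}  # Case 2; ties assigned here by assumption


def channel_descriptor(feat: np.ndarray, mode: str) -> np.ndarray:
    """Squeeze a (C, H, W) feature map to a length-C vector by global max or mean."""
    return feat.max(axis=(1, 2)) if mode == "max" else feat.mean(axis=(1, 2))


# Toy example: camera features agree across networks, lidar features do not.
rng = np.random.default_rng(0)
cam_src = rng.normal(size=(8, 4, 4))
cam_tgt = cam_src + 0.1 * rng.normal(size=cam_src.shape)  # close to the source
lid_src = rng.normal(size=(8, 4, 4))
lid_tgt = rng.normal(size=lid_src.shape)                  # unrelated to the source

u_cam = modality_uncertainty(cam_src, cam_tgt)
u_lid = modality_uncertainty(lid_src, lid_tgt)
choice = select_pooling(u_cam, u_lid)
print(choice)  # {'camera': 'max', 'lidar': 'avg'}
cam_desc = channel_descriptor(cam_src, choice["camera"])  # shape (8,)
```

Here the camera features barely change between the two networks, so the camera branch is treated as the reliable one and receives max pooling, which corresponds to Case 2.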

3.2.3. Adaptive Spatial Attention

3.3. Training with AFAM

| Algorithm 1: Object Detection Network Training with AFAM–EfficientDet |
|---|
| Input: camera images and LiDAR dense range images |
| Output: List_of_class_predictions |
| List_of_class_predictions ← …; epoch ← 0 |
| Initialize random weights … |
| // stage 1: training without AFAM |
| while epoch … do |
| for each … in … do |
| Input camera and LiDAR data into each backbone network // four backbones |
| … ← feature_extraction(…) |
| Train the source network |
| Train the target network |
| Compute … |
| if … then |
| … ← epoch |
| break |
| end if |
| class_predictions_without_AFAM(…) ← predictions(labels, bounding_box, probability) |
| …++ |
| end for |
| epoch++ |
| end while |
| List_of_class_predictions.append(class_predictions_without_AFAM) |
| // stage 2: training with AFAM |
| epoch ← 0 |
| while epoch … do |
| for … in … do |
| Input camera and LiDAR data into each backbone network // four backbones |
| Extract features from the source and target networks |
| Refined_features ← Feature_Recalibration_with_AFAM(…) |
| Train the target network // update the weights of the target network |
| class_predictions_with_AFAM(…) ← predictions(labels, bounding_box, probability) |
| …++ |
| end for |
| epoch++ |
| end while |
| List_of_class_predictions.append(class_predictions_with_AFAM) |
| return List_of_class_predictions |

| Algorithm 2: Feature_Recalibration_with_AFAM |
|---|
| Input: … |
| Output: refined features … |
| … ← Compute similarity(…) |
| … ← Compute uncertainty(…) |
| if … then |
| // camera feature recalibration |
| … ← max_pooling(…); … ← hard_squeeze&excitation(…) |
| // lidar feature recalibration |
| … ← average_pooling(…); … ← soft_squeeze&excitation(…) |
| else |
| // camera feature recalibration |
| … ← average_pooling(…); … ← soft_squeeze&excitation(…) |
| // lidar feature recalibration |
| … ← max_pooling(…); … ← hard_squeeze&excitation(…) |
| end if |
| return … ← concatenate(…) |
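The branch structure of Algorithm 2 can be approximated in NumPy as follows. This is a hedged sketch, not the published implementation: the text does not define `hard_squeeze&excitation` and `soft_squeeze&excitation`, so we assume both are squeeze-and-excitation blocks (Hu et al.) with a two-layer bottleneck, differing only in the gate: a piecewise-linear hard sigmoid for the "hard" variant and the logistic sigmoid for the "soft" variant. The reduction ratio, the random weights, and all function names are hypothetical, and `camera_reliable` is a hypothetical flag standing in for the elided branch condition (the camera being the more reliable modality, as in Case 2).

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def hard_sigmoid(x: np.ndarray) -> np.ndarray:
    # Piecewise-linear sigmoid approximation: clip((x + 3) / 6, 0, 1)
    return np.clip((x + 3.0) / 6.0, 0.0, 1.0)


def squeeze_excitation(feat, w1, w2, pool="avg", hard=False):
    """SE-style channel recalibration of a (C, H, W) feature map.

    pool: global 'max' or 'avg' pooling for the squeeze step.
    hard: ASSUMPTION, 'hard' SE uses a hard-sigmoid gate, 'soft' SE a sigmoid.
    """
    z = feat.max(axis=(1, 2)) if pool == "max" else feat.mean(axis=(1, 2))  # (C,)
    h = np.maximum(w1 @ z, 0.0)          # bottleneck FC + ReLU, (C // r,)
    s = w2 @ h                           # expand back to (C,)
    gate = hard_sigmoid(s) if hard else sigmoid(s)
    return feat * gate[:, None, None]    # rescale every channel


def recalibrate(cam, lidar, weights, camera_reliable):
    """Algorithm 2 sketch: reliable modality -> max pooling + hard SE,
    other modality -> average pooling + soft SE, then channel concatenation."""
    if camera_reliable:
        cam_r = squeeze_excitation(cam, *weights["camera"], pool="max", hard=True)
        lid_r = squeeze_excitation(lidar, *weights["lidar"], pool="avg", hard=False)
    else:
        cam_r = squeeze_excitation(cam, *weights["camera"], pool="avg", hard=False)
        lid_r = squeeze_excitation(lidar, *weights["lidar"], pool="max", hard=True)
    return np.concatenate([cam_r, lid_r], axis=0)


# Toy usage with random (untrained) weights and a hypothetical reduction ratio r = 4.
rng = np.random.default_rng(0)
C, r = 16, 4


def init_se(c: int):
    return (rng.normal(scale=0.1, size=(c // r, c)),
            rng.normal(scale=0.1, size=(c, c // r)))


weights = {"camera": init_se(C), "lidar": init_se(C)}
cam = rng.normal(size=(C, 8, 8))
lidar = rng.normal(size=(C, 8, 8))
fused = recalibrate(cam, lidar, weights, camera_reliable=True)
print(fused.shape)  # (32, 8, 8)
```

Because every gate lies in [0, 1], recalibration can only attenuate channels; the concatenated output doubles the channel count, matching the final concatenate step of Algorithm 2.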

4. Experiments and Results

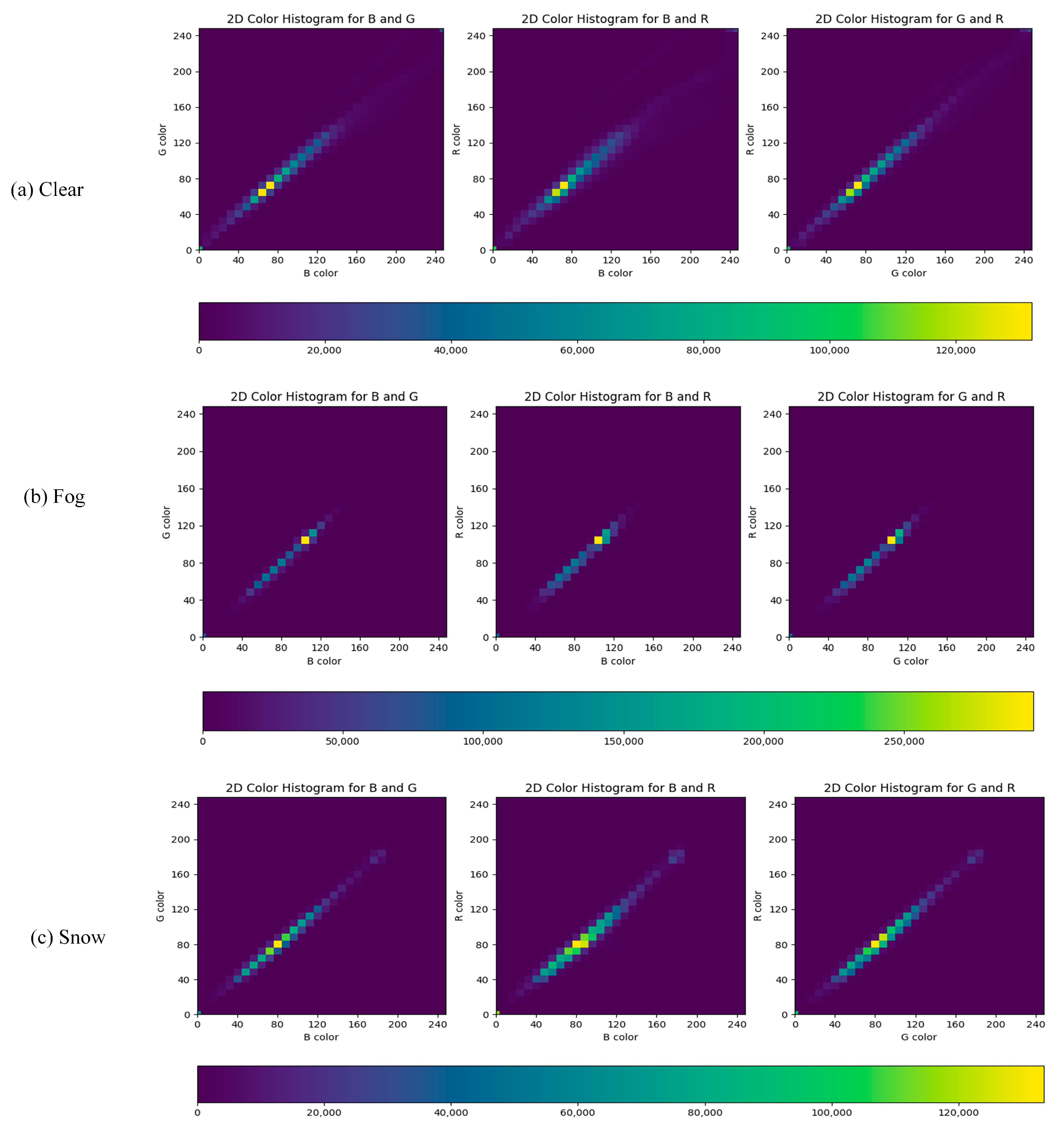

4.1. Implementation Setup, Dataset, and Evaluation Parameters

4.2. Ablation Study

4.3. Comparison with Other Methods

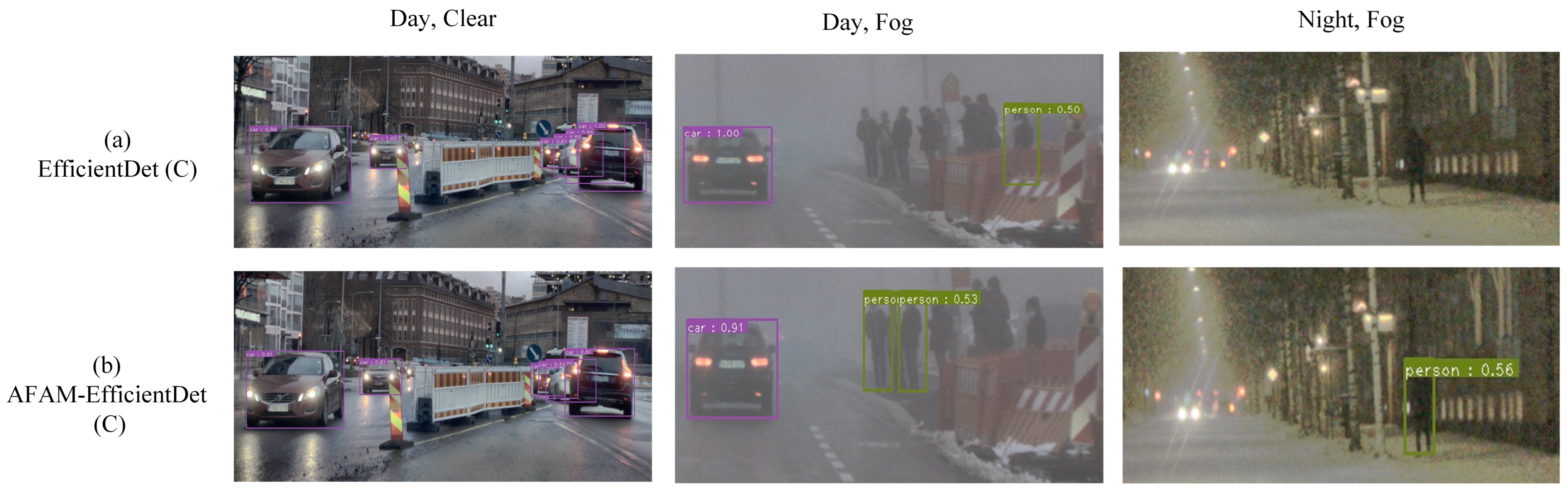

- Comparison with the baseline method (camera features only): First, we compare the performance of AFAM using camera features only. EfficientDet takes raw features from the backbone network as input and predicts the object classes, whereas AFAM, when embedded in EfficientDet, first refines these features, making the object detection network more robust. Using the refined features improves detection performance: Top1-mAP increases from 0.367 to 0.370, and Top5-mAP from 0.347 to 0.354.

- Comparison with the baseline method (visual–lidar fusion): Second, we compare the multimodal EfficientDet, which performs deep fusion of camera and lidar features for object detection, with AFAM–EfficientDet. Because AFAM provides a more robust deep fusion of visual–lidar features, AFAM–EfficientDet achieves a higher mAP than the multimodal EfficientDet (Top1-mAP of 0.419 vs. 0.414), and its Worst-mAP rises from 0.377 to 0.402.

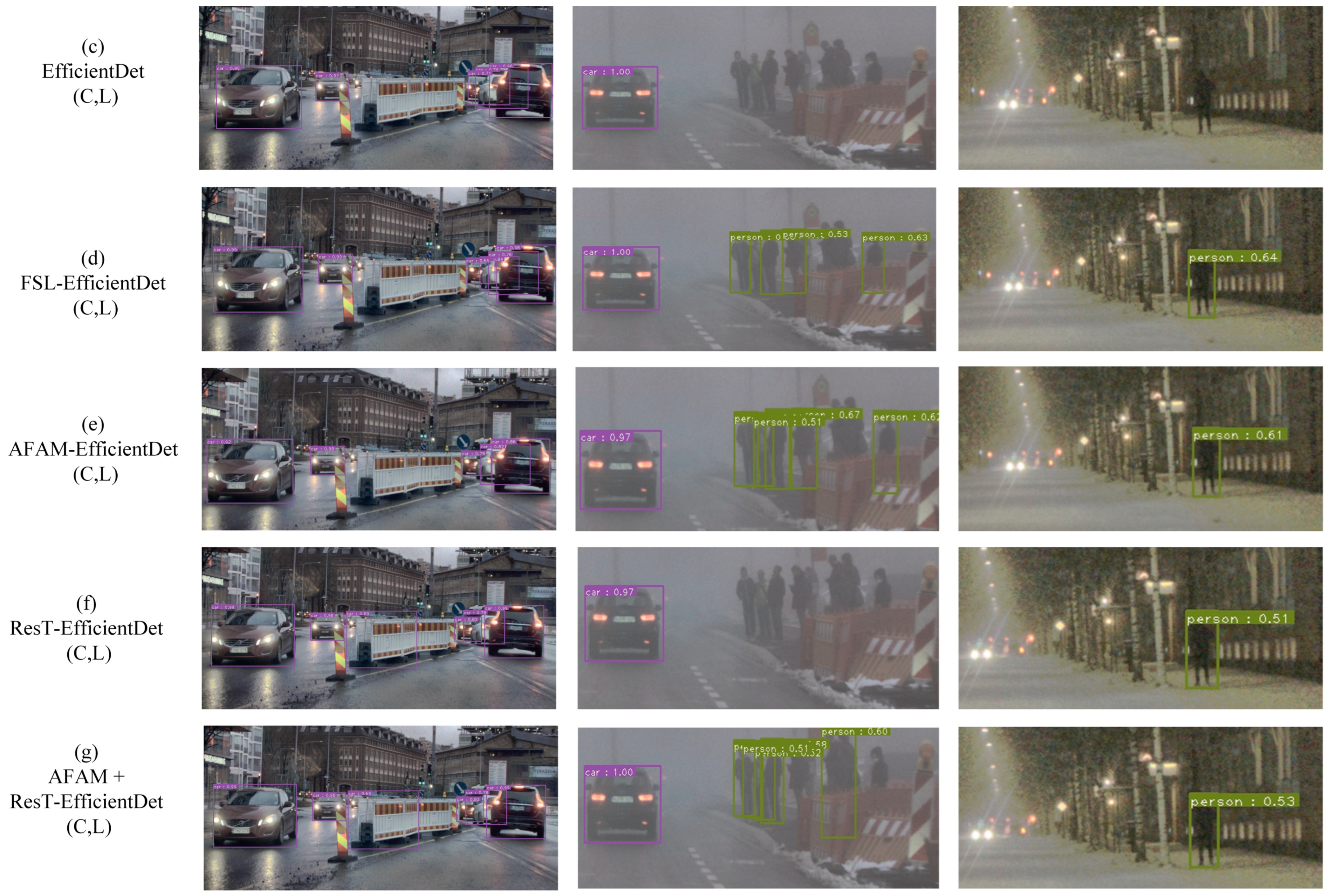

- Comparison with a different network architecture: Here, we evaluate AFAM when it is ported to a different network architecture. We first compute the results for ResT–EfficientDet, in which the EfficientNet backbone is replaced with ResT for visual–lidar feature extraction and the extracted features are passed to EfficientDet for class prediction. To assess the effectiveness of AFAM, AFAM then takes the raw features from ResT as input, performs feature recalibration and fusion, and passes the fused visual–lidar features to the BiFPN layer of EfficientDet. Because the backbone network changes, the input features differ, and so does the overall performance, as shown in Table 4. Nevertheless, AFAM–ResT–EfficientDet outperforms ResT–EfficientDet (Top1-mAP of 0.319 vs. 0.247).

- Comparison with a feature refinement method: Finally, we compare AFAM with the feature switch layer (FSL). Both modules are integrated with EfficientDet: they take the same features as input, recalibrate them, and output refined visual–lidar features for class prediction. FSL–EfficientDet achieves the highest Top1-mAP (0.427 vs. 0.419) because it uses annotations for environment learning, whereas AFAM–EfficientDet learns adaptively from the dissimilar appearance of the environment by computing uncertainty. Under dense fog, snow, and lighting changes from day to night, camera–lidar object detection performance degrades significantly. Owing to its adaptive learning approach, AFAM–EfficientDet achieves the lowest variance, defined here as the difference between Top1-mAP and Worst-mAP (0.017 vs. 0.032 for FSL–EfficientDet), when the environment changes significantly due to illumination changes or adverse weather. In contrast, the annotation-dependent FSL–EfficientDet cannot sustain high performance under challenging conditions such as dense fog or extreme lighting changes (Worst-mAP of 0.395 vs. 0.402), which increases its variance. Thus, AFAM makes the object detection network, here EfficientDet, more robust in adverse weather conditions.
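Since "variance" here is the spread between the best and worst results rather than a statistical variance, it can be recomputed directly from the comparison table. The short Python check below does exactly that for the camera–lidar (C, L) rows; the dictionary layout and the `spread` helper are only for illustration.

```python
# Top1-mAP and Worst-mAP values copied from the comparison table (modalities C, L).
results = {
    "EfficientDet": (0.414, 0.377),
    "AFAM–EfficientDet": (0.419, 0.402),
    "ResT–EfficientDet": (0.247, 0.205),
    "AFAM + ResT–EfficientDet": (0.319, 0.294),
    "FSL": (0.427, 0.395),
}


def spread(top1: float, worst: float) -> float:
    """'Variance' as defined in the text: Top1-mAP minus Worst-mAP."""
    return round(top1 - worst, 3)


for name, (top1, worst) in results.items():
    print(f"{name}: {spread(top1, worst):.3f}")

# The smallest spread indicates the most stable network across conditions.
most_stable = min(results, key=lambda name: spread(*results[name]))
print(most_stable)  # AFAM–EfficientDet
```

Rounding to three decimals matches the precision reported in the table and removes floating-point noise from the subtraction.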

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Khairuddin, A.R.; Talib, M.S.; Haron, H. Review on simultaneous localization and mapping (SLAM). In Proceedings of the 5th IEEE International Conference on Control System, Computing and Engineering, ICCSCE 2015, Penang, Malaysia, 27–29 May 2016; pp. 85–90.

- Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A Survey on 3D Object Detection Methods for Autonomous Driving Applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795.

- Kaur, J.; Singh, W. Tools, techniques, datasets and application areas for object detection in an image: A review. Multimed. Tools Appl. 2022, 81, 38297–38351.

- Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-Task Multi-Sensor Fusion for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7345–7353.

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D Proposal Generation and Object Detection from View Aggregation. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 5750–5757.

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4604–4612.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915.

- Huang, T.; Liu, Z.; Chen, X.; Bai, X. EPNet: Enhancing Point Features with Image Semantics for 3D Object Detection. In Computer Vision–ECCV 2020; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2020; pp. 35–52.

- Li, Y.; Yu, A.W.; Meng, T.; Caine, B.; Ngiam, J.; Peng, D.; Shen, J.; Lu, Y.; Zhou, D.; Le, Q.V.; et al. DeepFusion: Lidar-Camera Deep Fusion for Multi-Modal 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17182–17191.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Xu, D.; Anguelov, D.; Jain, A. PointFusion: Deep Sensor Fusion for 3D Bounding Box Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 244–253.

- Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing Through Fog Without Seeing Fog: Deep Multimodal Sensor Fusion in Unseen Adverse Weather. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11682–11692.

- Kim, T.L.; Park, T.H. Camera-LiDAR Fusion Method with Feature Switch Layer for Object Detection Networks. Sensors 2022, 22, 7163.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Adv. Neural Inf. Process. Syst. 2015, 28.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Patel, S.P.; Nakrani, M. A Review on Methods of Image Dehazing. Int. J. Comput. Appl. 2016, 133, 975–8887.

- Zhang, Z.; Zhao, L.; Liu, Y.; Zhang, S.; Yang, J. Unified Density-Aware Image Dehazing and Object Detection in Real-World Hazy Scenes. In Proceedings of the Asian Conference on Computer Vision (ACCV), Kyoto, Japan, 30 November–4 December 2020.

- Chen, W.-T.; Ding, J.-J.; Kuo, S.-Y. PMS-Net: Robust Haze Removal Based on Patch Map for Single Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11681–11689.

- Berman, D.; Treibitz, T.; Avidan, S. Non-Local Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-In-One Dehazing Network. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4770–4778.

- Liu, X.; Ma, Y.; Shi, Z.; Chen, J. GridDehazeNet: Attention-Based Multi-Scale Network for Image Dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7314–7323.

- Zeng, C.; Kwong, S. Dual Swin-Transformer based Mutual Interactive Network for RGB-D Salient Object Detection. arXiv 2022, arXiv:2206.03105.

- Liu, Z.; Tan, Y.; He, Q.; Xiao, Y. SwinNet: Swin Transformer Drives Edge-Aware RGB-D and RGB-T Salient Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 4486–4497.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Heinzler, R.; Schindler, P.; Seekircher, J.; Ritter, W.; Stork, W. Weather influence and classification with automotive lidar sensors. IEEE Intell. Veh. Symp. Proc. 2019, 2019, 1527–1534.

- Sebastian, G.; Vattem, T.; Lukic, L.; Burgy, C.; Schumann, T. RangeWeatherNet for LiDAR-only weather and road condition classification. IEEE Intell. Veh. Symp. Proc. 2021, 2021, 777–784.

- Heinzler, R.; Piewak, F.; Schindler, P.; Stork, W. CNN-Based Lidar Point Cloud De-Noising in Adverse Weather. IEEE Robot. Autom. Lett. 2020, 5, 2514–2521.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection from Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.

- Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel R-CNN: Towards High Performance Voxel-based 3D Object Detection. Proc. AAAI Conf. Artif. Intell. 2021, 35, 1201–1209.

- Mao, J.; Xue, Y.; Niu, M.; Bai, H.; Feng, J.; Liang, X.; Xu, H.; Xu, C. Voxel Transformer for 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3164–3173.

- Meyer, G.P.; Laddha, A.; Kee, E.; Vallespi-Gonzalez, C.; Wellington, C.K. LaserNet: An Efficient Probabilistic 3D Object Detector for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12677–12686.

- Pang, S.; Morris, D.; Radha, H. CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection. IEEE Int. Conf. Intell. Robot. Syst. 2020, 10386–10393.

- Cai, Z.; Fan, Q.; Feris, R.S.; Vasconcelos, N. A unified multi-scale deep convolutional neural network for fast object detection. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 354–370.

- Hoffman, J.; Gupta, S.; Darrell, T. Learning with Side Information Through Modality Hallucination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 826–834.

- Song, S.; Xiao, J. Deep Sliding Shapes for Amodal 3D Object Detection in RGB-D Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 808–816.

- Lee, S.; Son, T.; Kwak, S. FIFO: Learning Fog-Invariant Features for Foggy Scene Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 18911–18921.

- Wu, Z.; Xiong, Y.; Yu, S.X.; Lin, D. Unsupervised Feature Learning via Non-Parametric Instance Discrimination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 3733–3742.

- Blundell, C.; Cornebise, J.; Kavukcuoglu, K.; Wierstra, D. Weight Uncertainty in Neural Networks. In Proceedings of the 32nd International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 1613–1622.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- GitHub–lukemelas/EfficientNet-PyTorch: A PyTorch Implementation of EfficientNet and EfficientNetV2. Available online: https://github.com/lukemelas/EfficientNet-PyTorch (accessed on 1 May 2023).

- Zhang, Q.-L.; Yang, Y.-B. ResT: An Efficient Transformer for Visual Recognition. Adv. Neural Inf. Process. Syst. 2021, 34, 15475–15485.

| Dataset Traverse | Environmental Condition (Light, Weather) | Training | Validation | Testing |
|---|---|---|---|---|
| T1 | Daytime, Clear | 2183 | 399 | 1005 |
| T2 | Daytime, Snow | 1615 | 226 | 452 |
| T3 | Daytime, Fog | 525 | 69 | 140 |
| T4 | Nighttime, Clear | 1343 | 409 | 877 |
| T5 | Nighttime, Snow | 1720 | 240 | 480 |
| T6 | Nighttime, Fog | 525 | 69 | 140 |
| Total | | 8238 | 1531 | 3189 |

| Network | Max Pooling | Average Pooling | Hard Squeeze | Soft Squeeze | Top1-mAP |
|---|---|---|---|---|---|
| AFAM–EfficientDet | | | | | 0.419 |
| | | | | | 0.414 |
| | | | | | 0.405 |
| | | | | | 0.397 |

| Network | | | Top1-mAP |
|---|---|---|---|
| AFAM–EfficientDet | 16 | 8 | 0.419 |
| | 16 | 12 | 0.406 |
| | 16 | 16 | 0.395 |
| | 24 | 8 | 0.412 |
| | 24 | 12 | 0.398 |

| Comparison | Network | Modality | Top5-mAP | Top1-mAP | Worst-mAP | Variance (Top1-mAP − Worst-mAP) |
|---|---|---|---|---|---|---|
| a | EfficientDet | C | 0.347 ± 0.00073 | 0.367 | 0.318 | 0.049 |
| | AFAM–EfficientDet | C | 0.354 ± 0.00024 | 0.370 | 0.325 | 0.045 |
| b | EfficientDet | C, L | 0.398 ± 0.00018 | 0.414 | 0.377 | 0.037 |
| | AFAM–EfficientDet | C, L | 0.403 ± 0.00007 | 0.419 | 0.402 | 0.017 |
| c | ResT–EfficientDet | C, L | 0.234 ± 0.00232 | 0.247 | 0.205 | 0.042 |
| | AFAM + ResT–EfficientDet | C, L | 0.308 ± 0.00077 | 0.319 | 0.294 | 0.025 |
| d | FSL | C, L | 0.406 ± 0.00016 | 0.427 | 0.395 | 0.032 |
| | AFAM | C, L | 0.403 ± 0.00007 | 0.419 | 0.402 | 0.017 |

C: camera; L: LiDAR.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, T.-L.; Arshad, S.; Park, T.-H. Adaptive Feature Attention Module for Robust Visual–LiDAR Fusion-Based Object Detection in Adverse Weather Conditions. Remote Sens. 2023, 15, 3992. https://doi.org/10.3390/rs15163992
