Daytime Sea Fog Identification Based on Multi-Satellite Information and the ECA-TransUnet Model

Abstract

1. Introduction

2. Materials

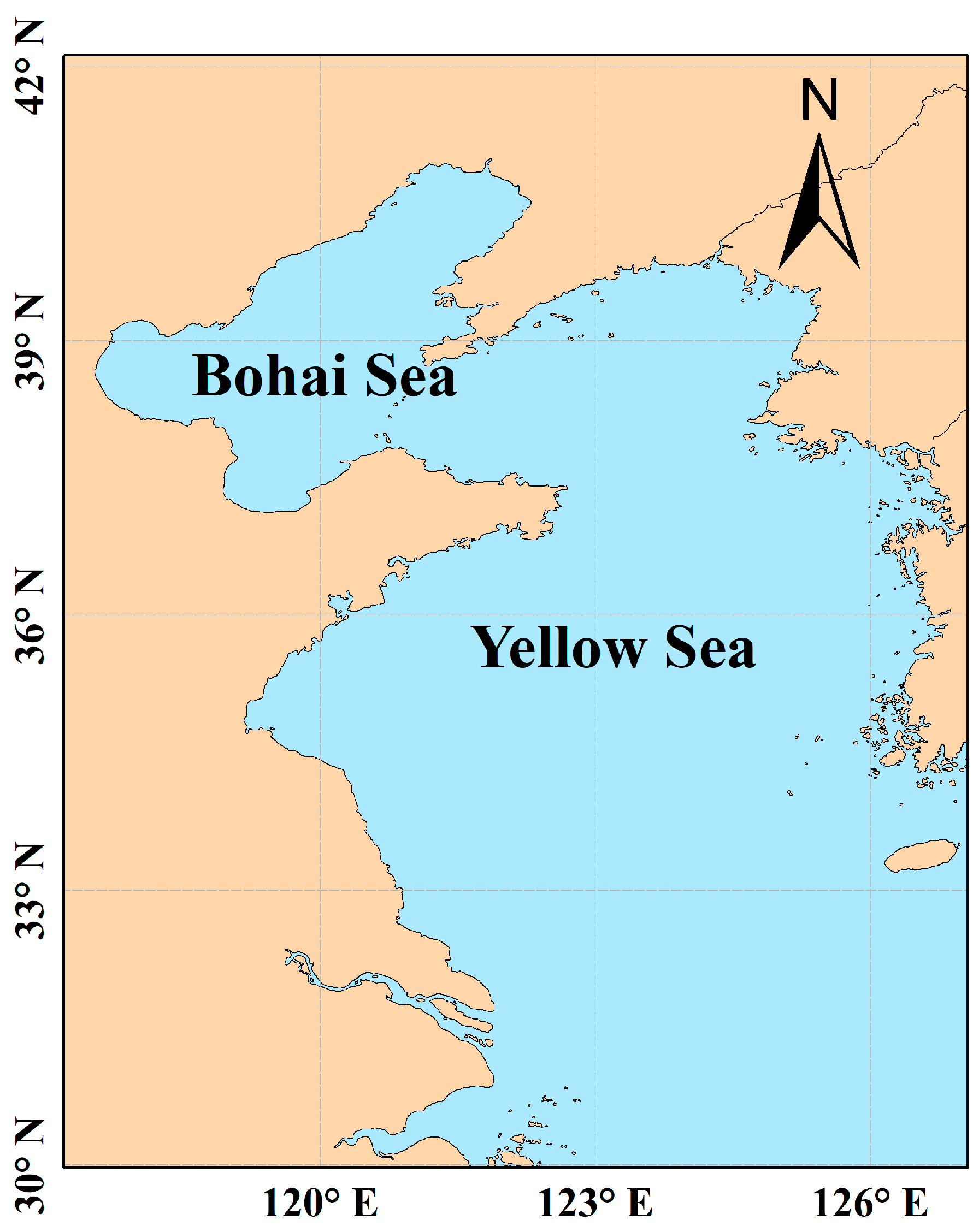

2.1. Study Area

2.2. Data

2.2.1. MODIS Data

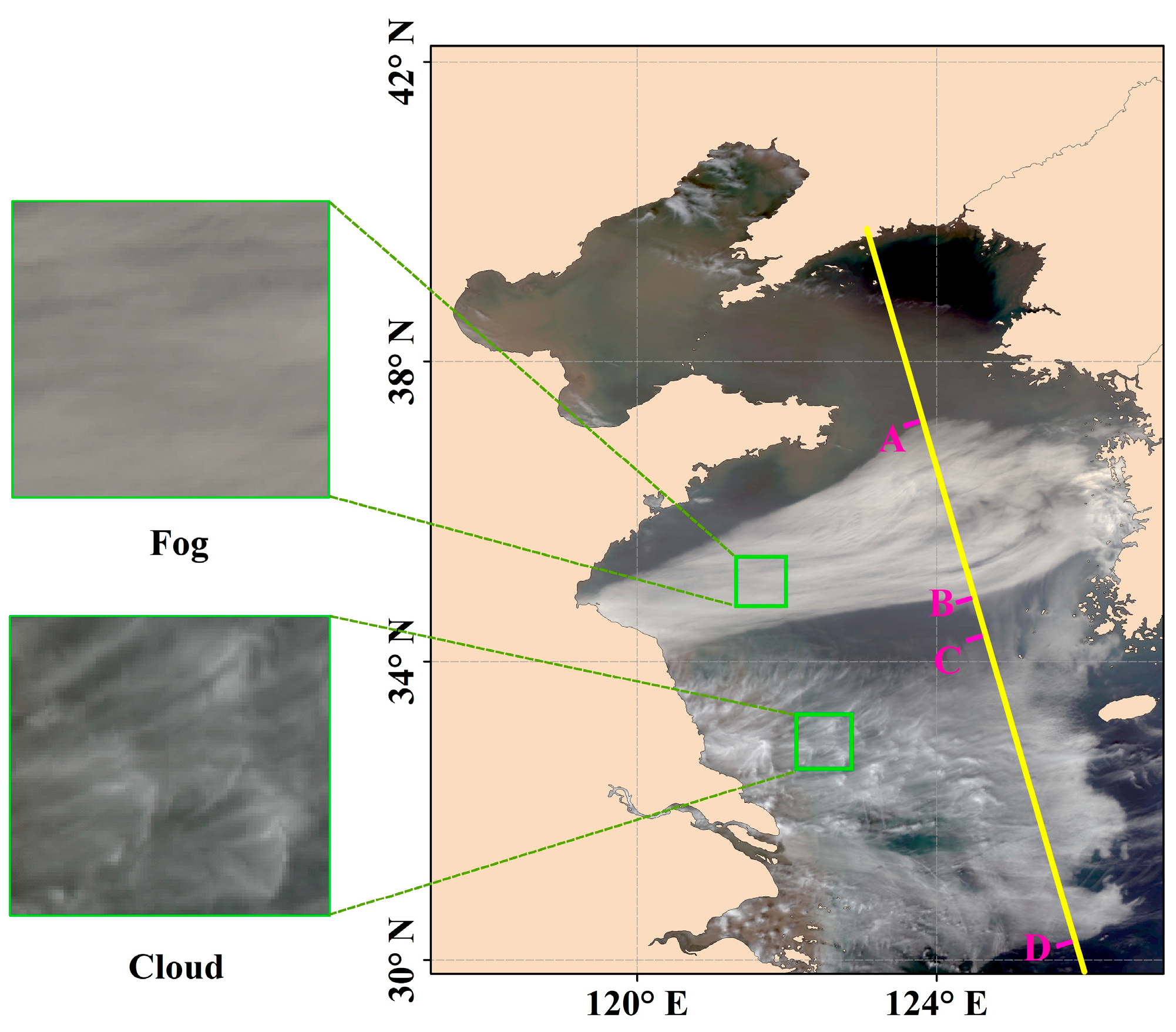

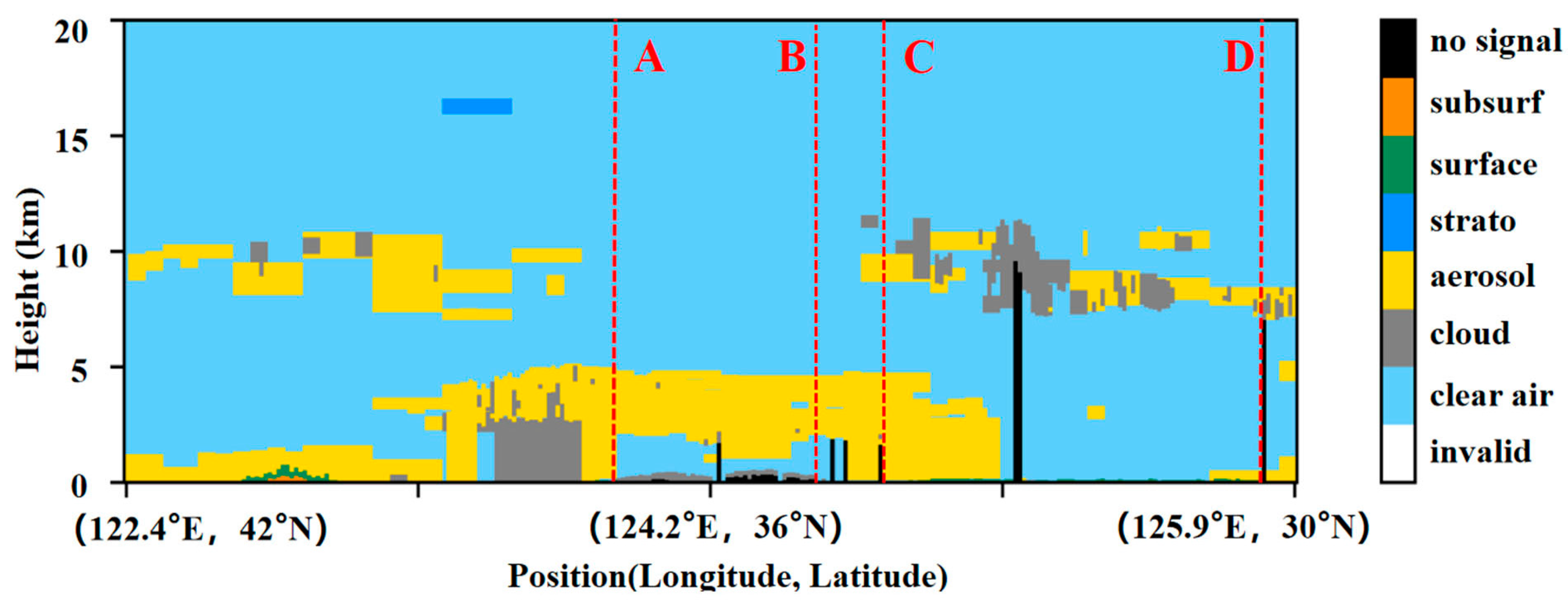

2.2.2. CALIPSO Data

2.2.3. Fifth Generation of Global Climate Reanalysis Data

3. Method

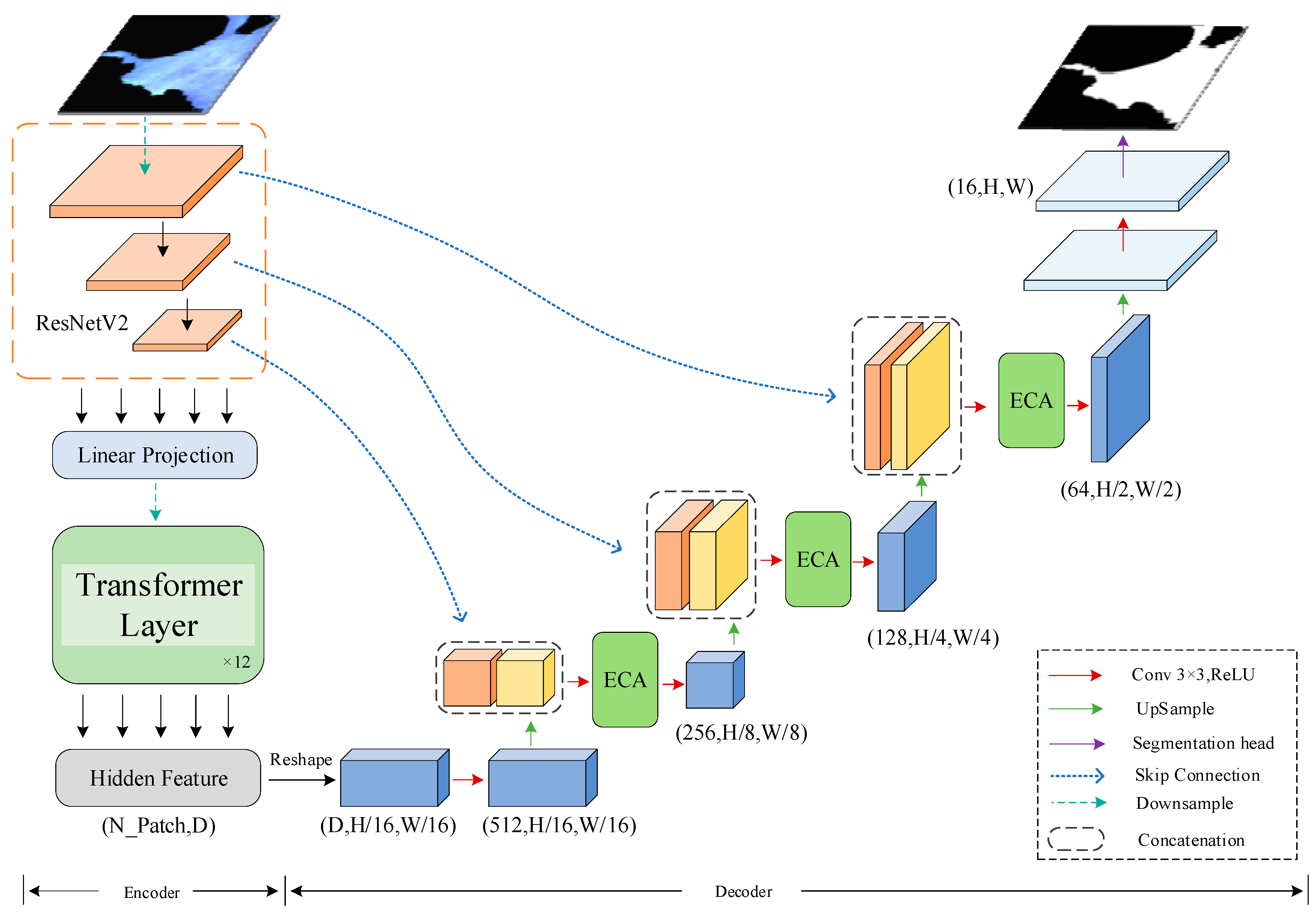

3.1. ECA-TransUnet Network Model

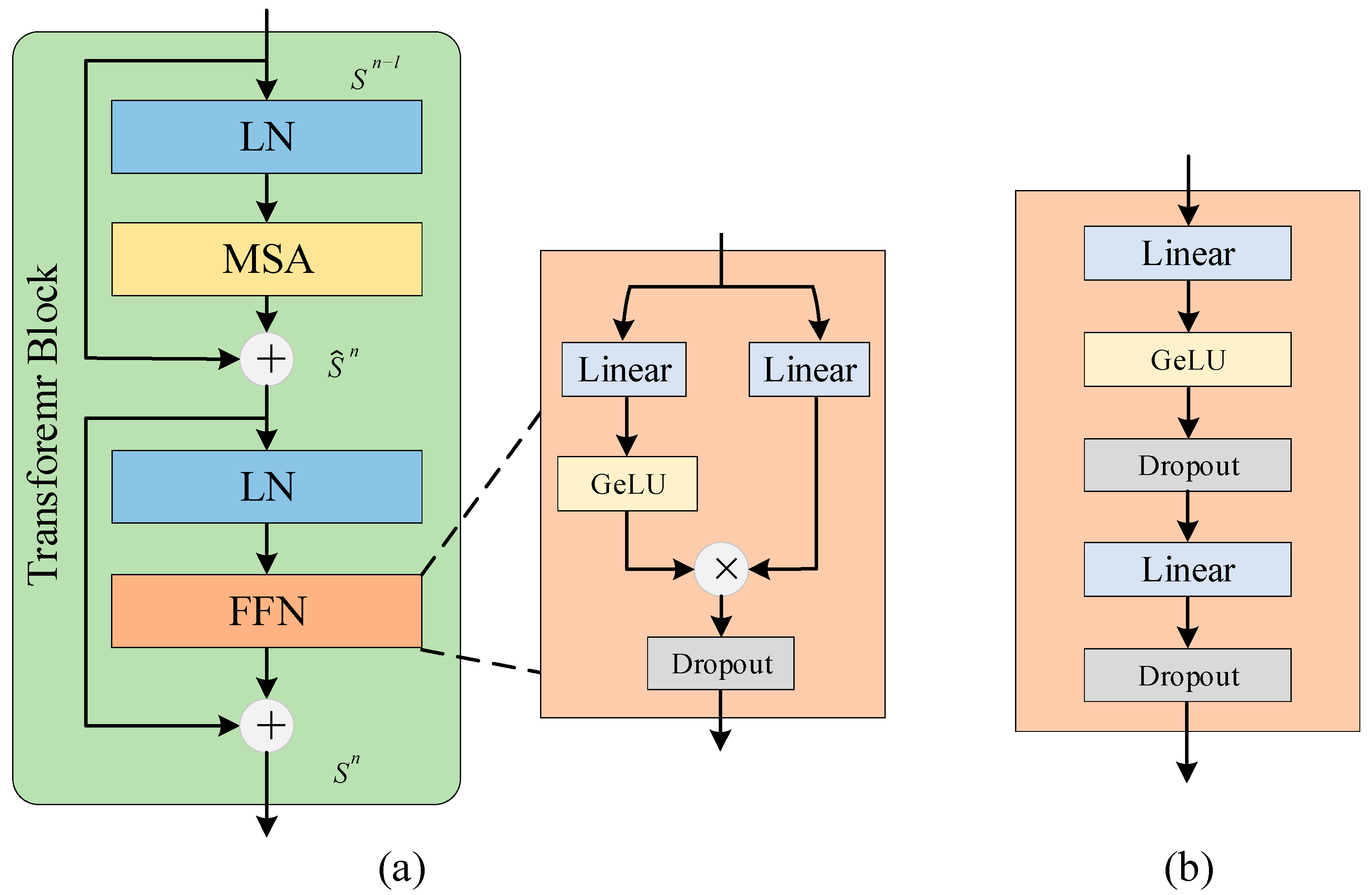

3.2. FFN Improvement

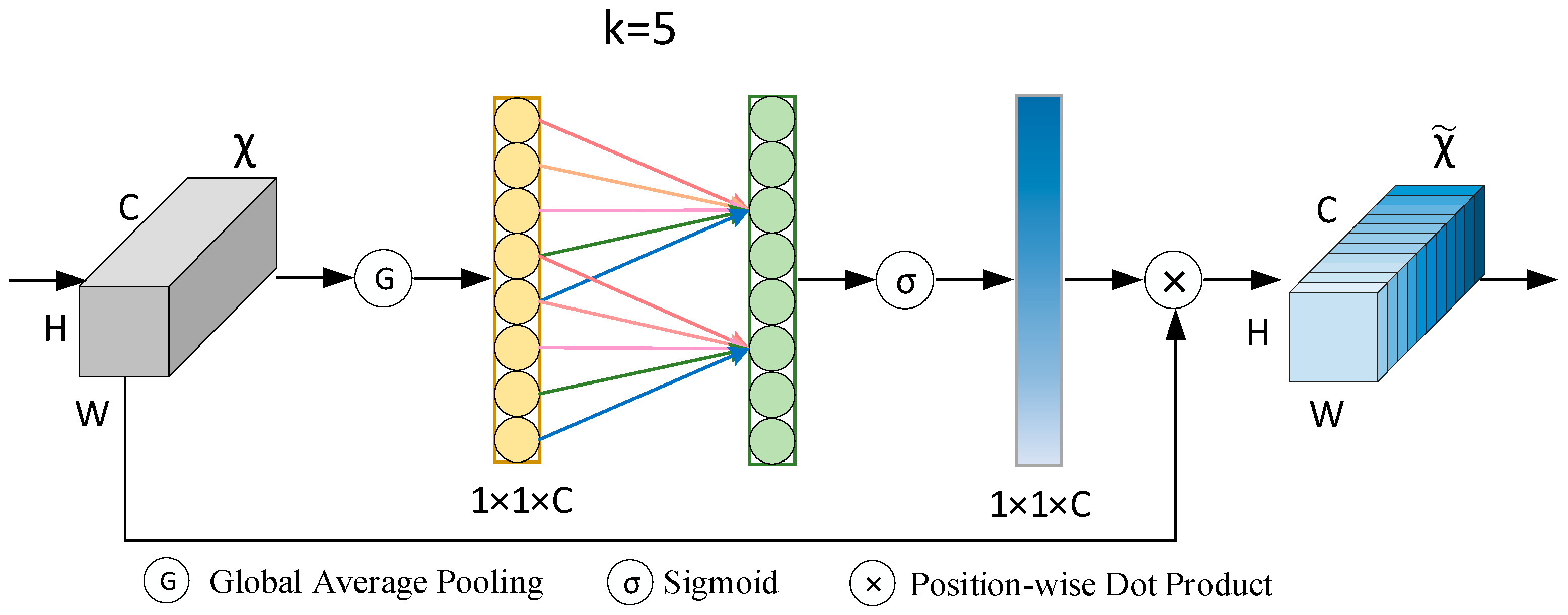

3.3. ECA Model

3.4. Precision Evaluation

4. Data Pre-Processing and Experiment

4.1. Data Pre-Processing

4.2. Experimental Setup

5. Results

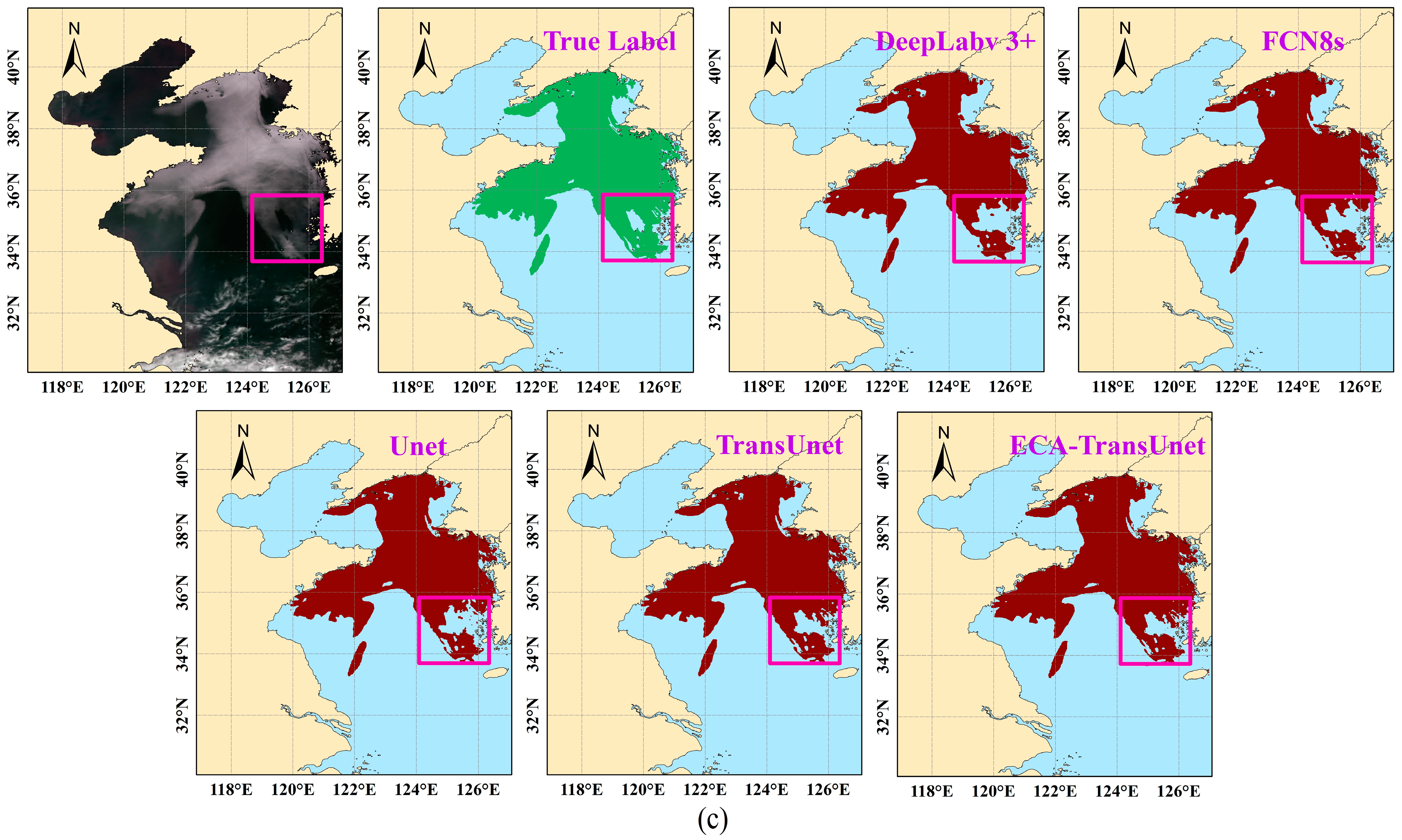

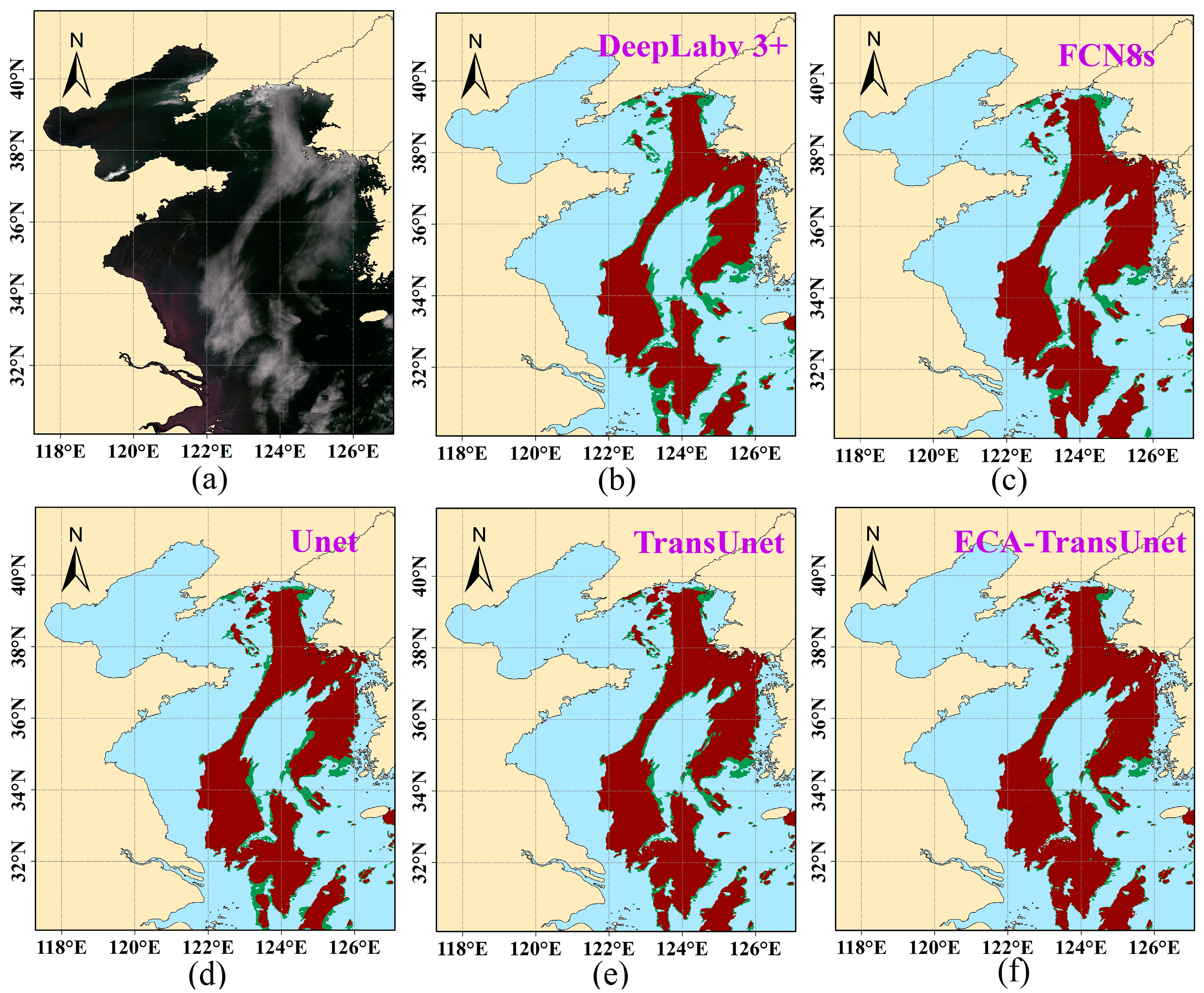

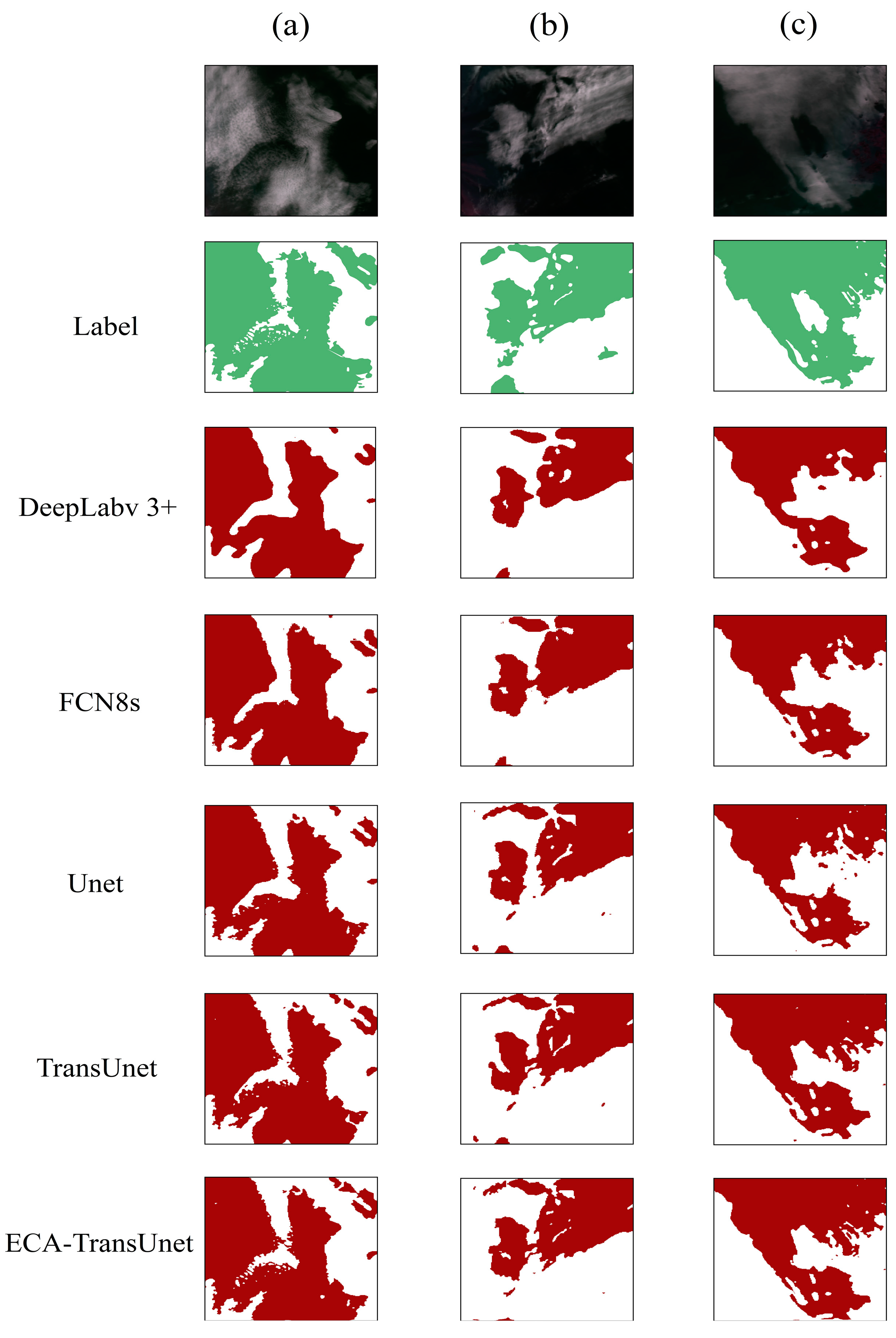

5.1. Model Comparison and Evaluation

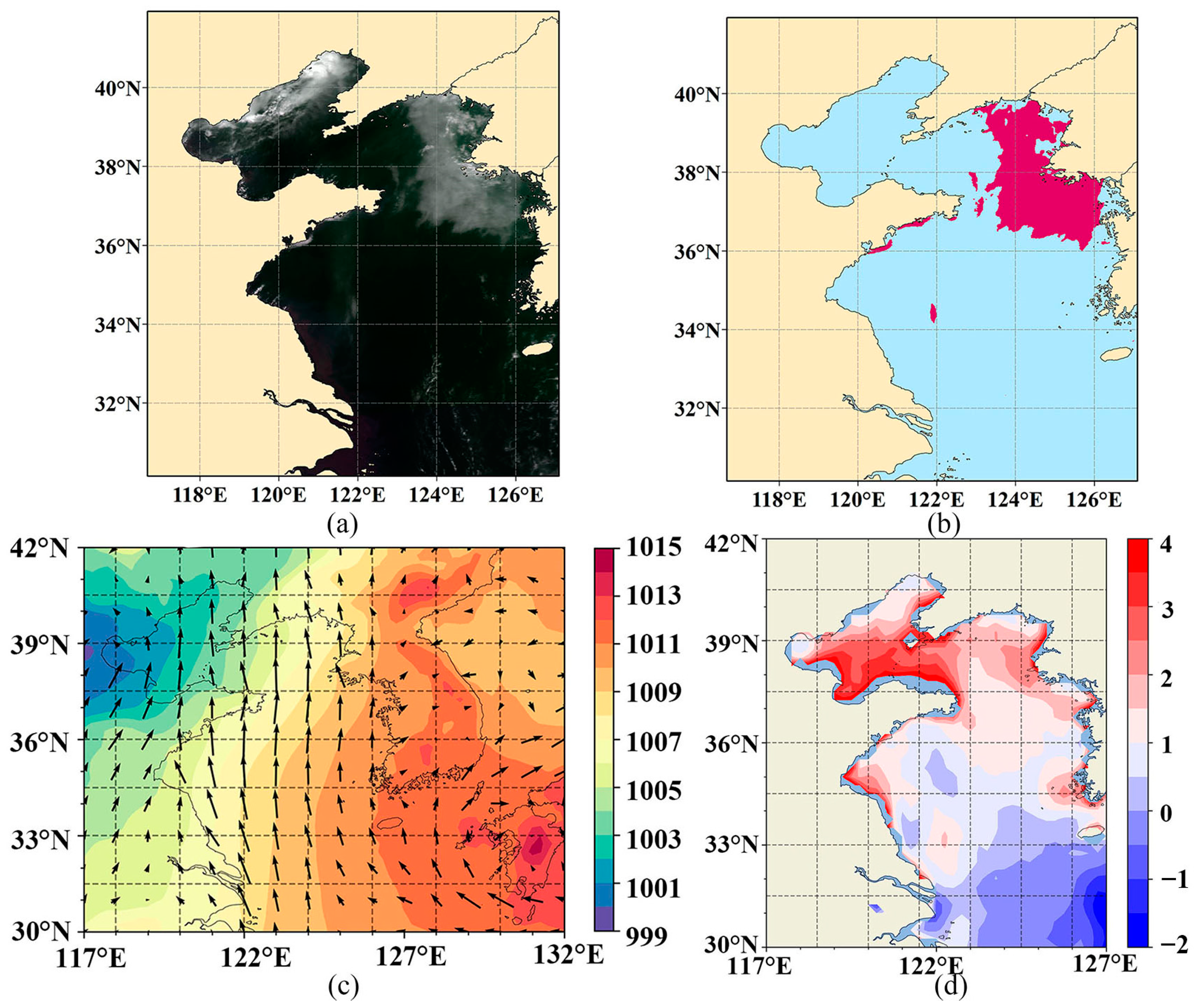

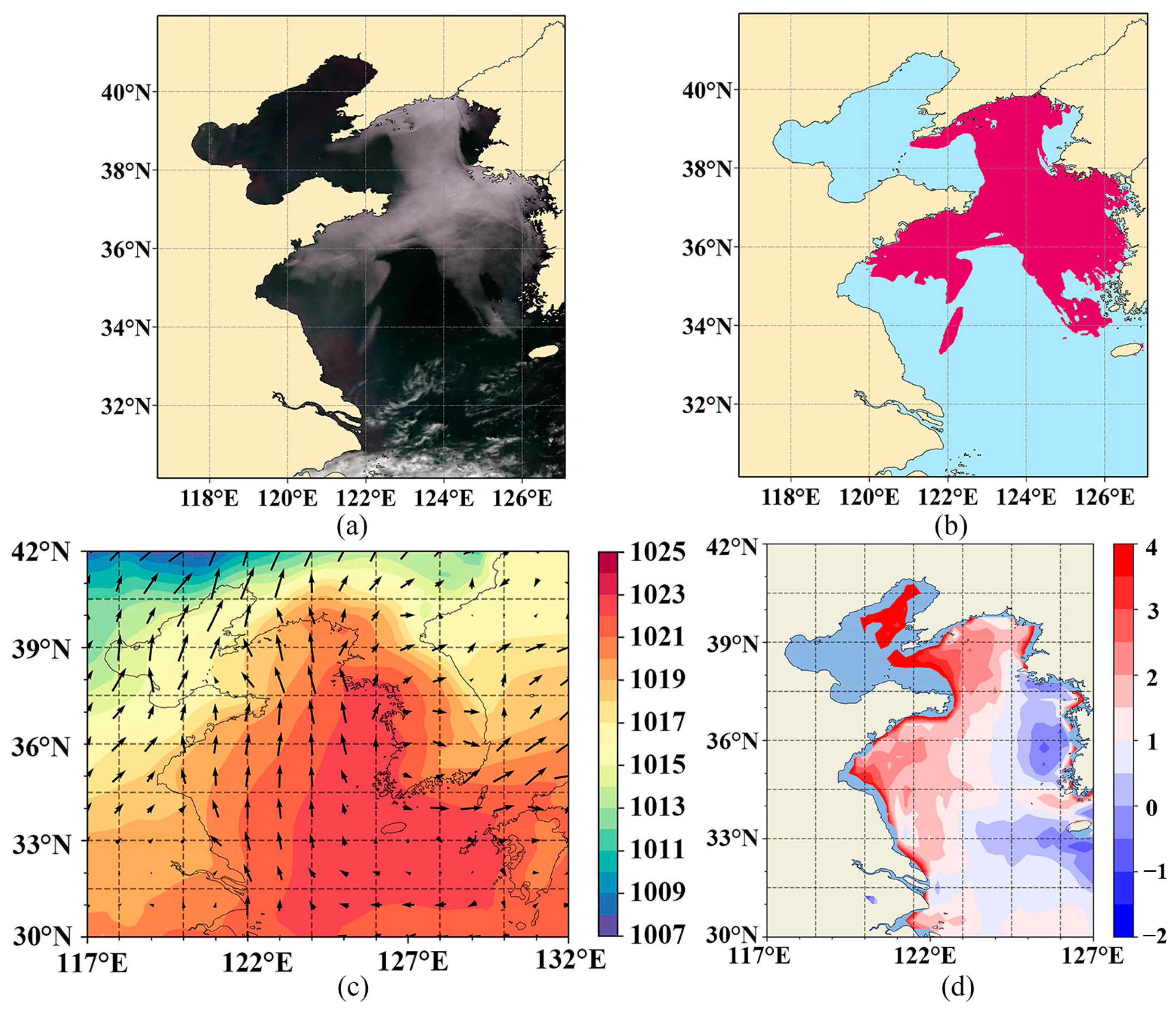

5.2. Analysis of the Meteorological Conditions of Sea Fog Generation

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Band | Central Wavelength | Spectral Range | Main Applications | Resolution (m) |
|---|---|---|---|---|
| 1 | 645 nm | 620–670 nm | Land/Cloud Border | 250 |
| 17 | 905 nm | 890–920 nm | Atmospheric water vapor | 1000 |
| 32 | 12.02 µm | 11.77–12.27 µm | Cloud Temperature | 1000 |

| Model | Acc | Recall | IoU | F1 |
|---|---|---|---|---|
| DeeplabV3+ | 0.9151 | 0.7353 | 0.7276 | 0.7946 |
| FCN8s | 0.9244 | 0.745 | 0.73 | 0.802 |
| Unet | 0.9279 | 0.783 | 0.7533 | 0.8152 |
| TransUnet | 0.9302 | 0.8019 | 0.7762 | 0.8374 |
| ECA-TransUnet | 0.9451 | 0.8231 | 0.8157 | 0.8587 |
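For readers who want to reproduce scores of this kind, the following is a minimal sketch of how accuracy, recall, IoU, and F1 are commonly computed for a binary fog/non-fog mask. It uses the standard confusion-matrix definitions with sea fog as the positive class. The function names and the toy masks are illustrative assumptions, and the exact definitions behind the reported values (for example per-class versus mean IoU) may differ.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN over two equally sized binary masks (1 = sea fog)."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Return Acc, Recall, IoU and F1 for the positive (fog) class."""
    tp, fp, fn, tn = confusion_counts(pred, truth)

    def ratio(num, den):
        # Guard against empty denominators (e.g. no fog pixels at all).
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "acc": ratio(tp + tn, tp + fp + fn + tn),
        "recall": recall,
        "iou": ratio(tp, tp + fp + fn),
        "f1": ratio(2 * precision * recall, precision + recall),
    }


# Hypothetical 2 x 3 masks, used only to exercise the functions.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
scores = segmentation_metrics(predicted, reference)
print(scores)
```

On these toy masks there are 2 true positives, 1 false positive, 1 false negative, and 2 true negatives, so the IoU is 2/4 and recall is 2/3.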

| Model | Recall | IoU | F1 |
|---|---|---|---|
| TransUnet | 0.8019 | 0.7662 | 0.8374 |
| TransUnet-block1 | 0.8029 | 0.7860 | 0.8302 |
| TransUnet-block2 | 0.8146 | 0.8059 | 0.8425 |
| ECA-TransUnet | 0.8231 | 0.8157 | 0.8587 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, H.; Ma, Y.; Zhang, S.; Yu, X.; Zhang, J. Daytime Sea Fog Identification Based on Multi-Satellite Information and the ECA-TransUnet Model. Remote Sens. 2023, 15, 3949. https://doi.org/10.3390/rs15163949
