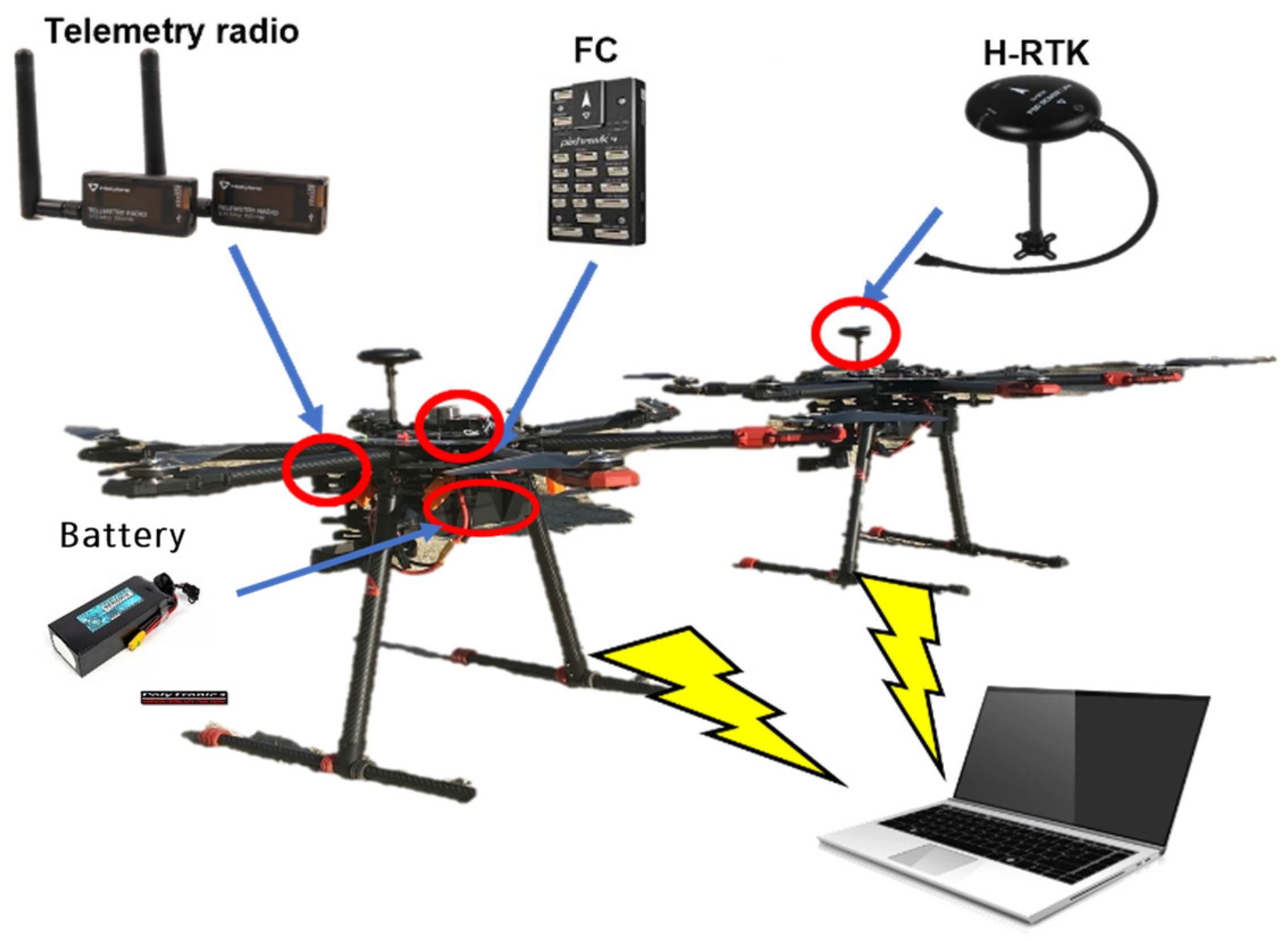

Figure 1.

Typical components of the dual UAVs used in the collaborative driving system: the telemetry radio was mounted under each drone’s arm, the flight controller (FC) was placed on top of the drone, H-RTK denotes the drone’s GPS module, and the battery was placed under the drone’s main frame.

Figure 2.

The visible-light GoPro 10 camera used in this study.

Figure 3.

The ESP32 wireless communication module used for camera synchronization.

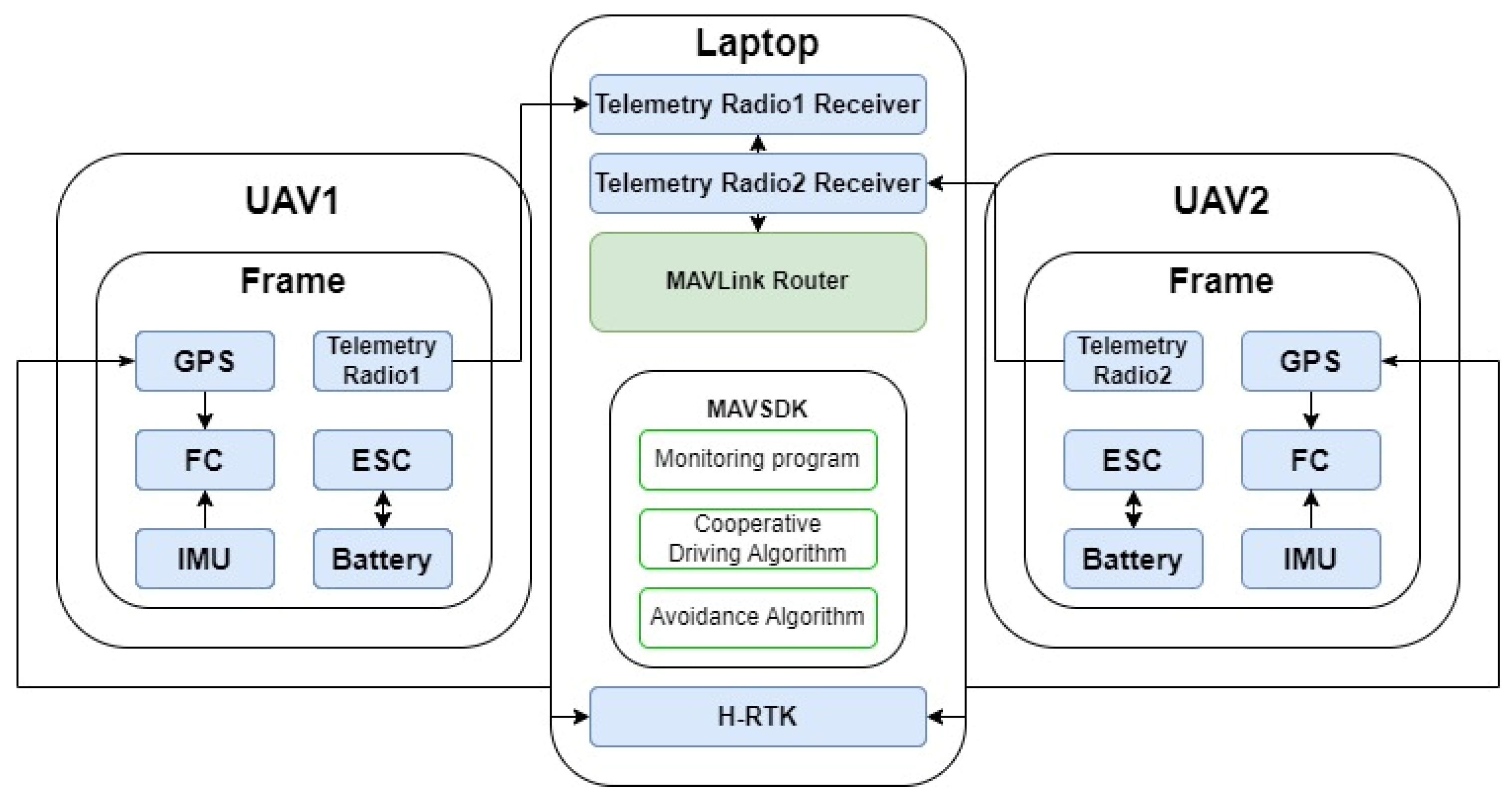

Figure 4.

Schematic of the UAV collaborative driving control system.

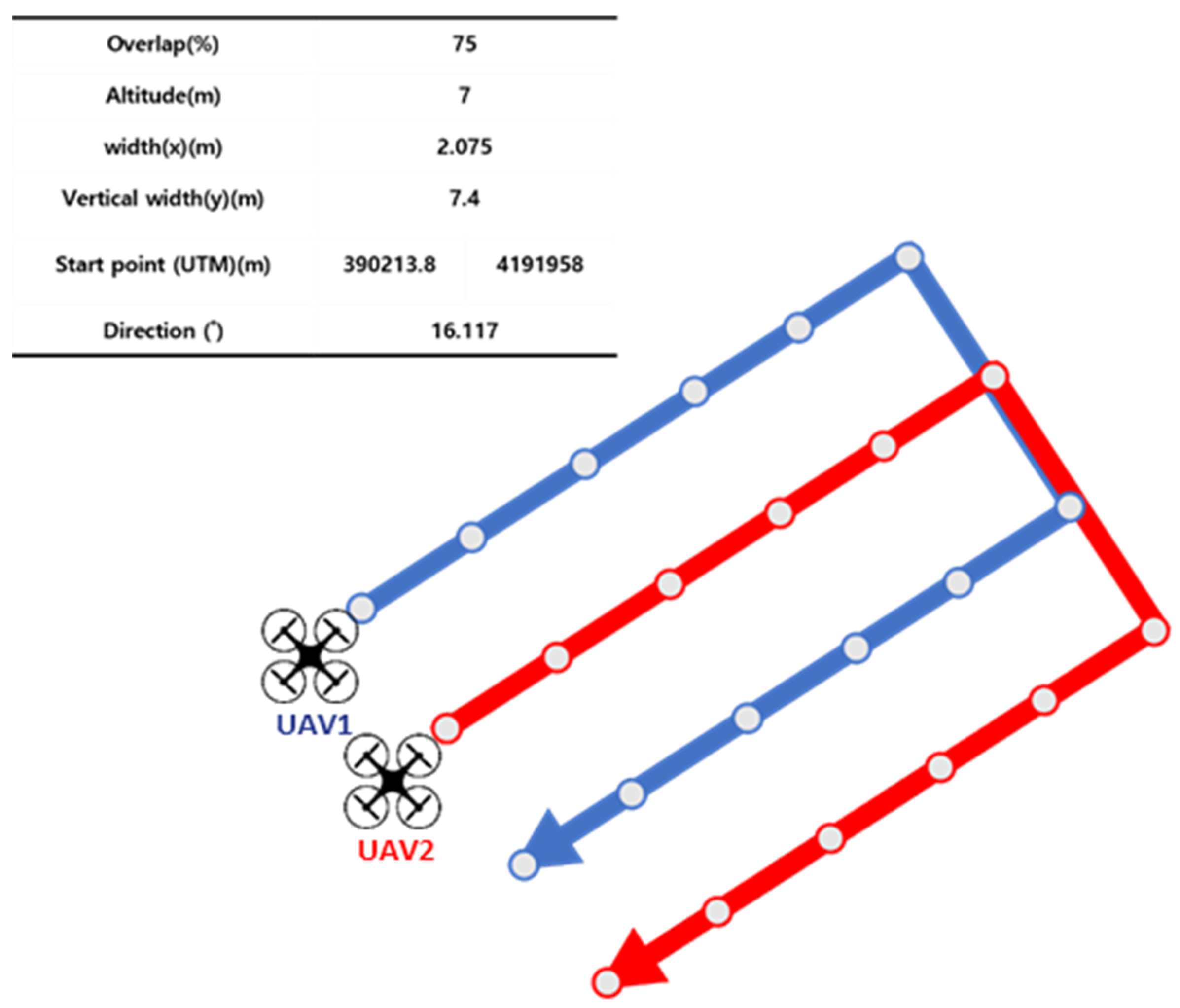

Figure 5.

Automatic route-generation program and flight algorithm for multiple UAVs.

Figure 6.

Example of program-generated automatic driving routes for multiple UAVs.
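
The route-generation program in Figures 5 and 6 is not described in detail here. As a rough illustration only, the sketch below shows one generic way to split a rectangular field into parallel flight lines and assign contiguous blocks of lines to several UAVs with a back-and-forth (boustrophedon) pattern. The function `split_survey_routes`, the rectangular field, and the line spacing are hypothetical and are not taken from the study's program.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float]


def split_survey_routes(width: float, height: float, spacing: float,
                        n_uavs: int) -> List[List[Waypoint]]:
    """Generic lawnmower routes for a width x height rectangle (hypothetical sketch).

    Flight lines run parallel to the y axis and are `spacing` apart in x.
    Contiguous blocks of lines go to each UAV so their areas do not overlap.
    """
    if spacing <= 0 or n_uavs < 1:
        raise ValueError("spacing must be positive and n_uavs >= 1")
    n_lines = int(width // spacing) + 1          # lines at x = 0, spacing, 2*spacing, ...
    xs = [i * spacing for i in range(n_lines)]
    per_uav = math.ceil(n_lines / n_uavs)        # number of lines assigned to each UAV
    routes: List[List[Waypoint]] = []
    for k in range(n_uavs):
        route: List[Waypoint] = []
        for j, x in enumerate(xs[k * per_uav:(k + 1) * per_uav]):
            # Alternate direction on successive lines so the UAV turns at the field edge.
            if j % 2 == 0:
                route += [(x, 0.0), (x, height)]
            else:
                route += [(x, height), (x, 0.0)]
        routes.append(route)
    return routes


for uav, route in enumerate(split_survey_routes(20, 10, 5, 2)):
    print(f"UAV {uav + 1}: {route}")
```

With two UAVs, each flies roughly half of the lines, so the lines are covered in parallel rather than in sequence.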

Figure 7.

UAV collaborative driving area for aerial imagery of boxes and crop model fields.

Figure 8.

Three different colors and sizes of boxes used in this study.

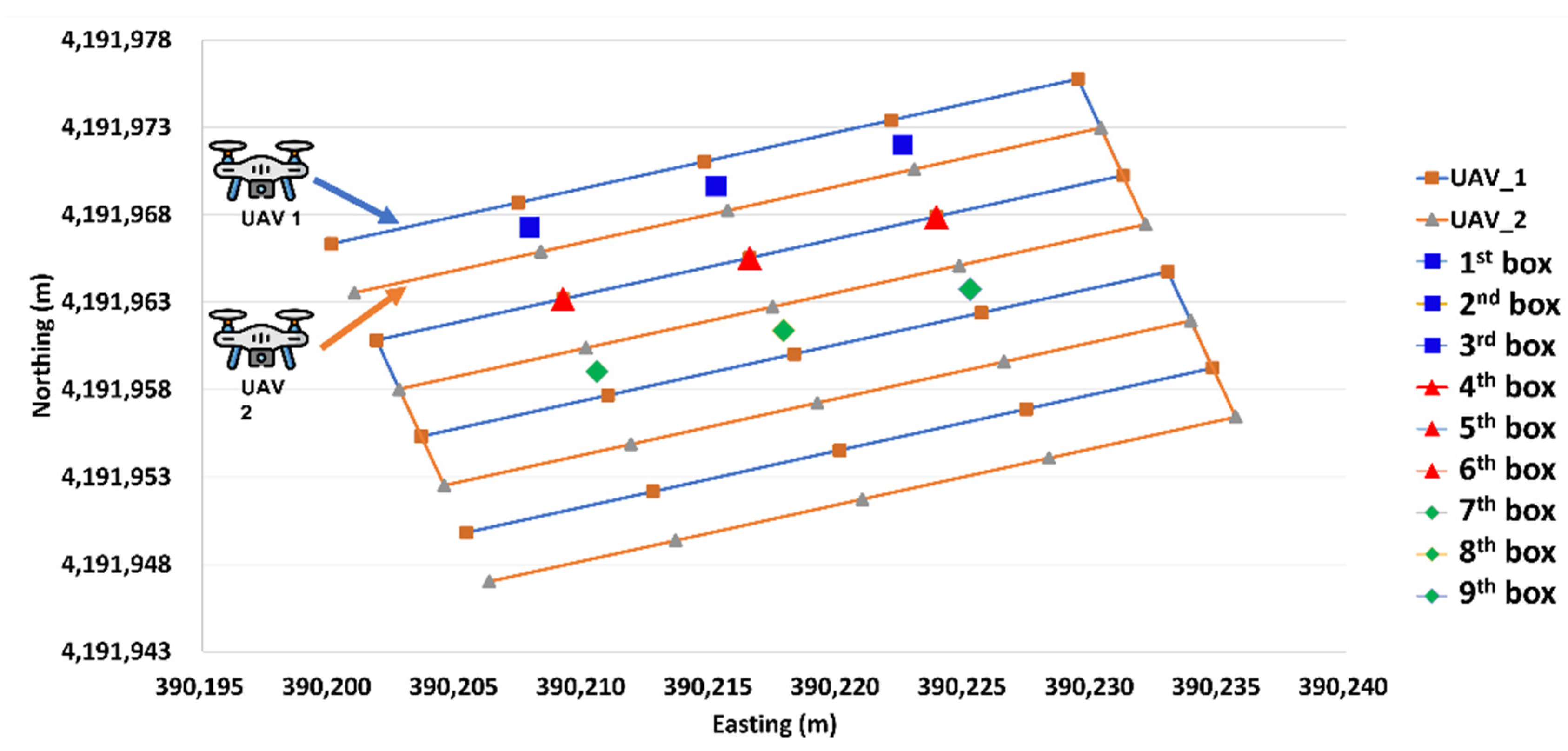

Figure 9.

Box positions and flight routes for the dual UAV system.

Figure 10.

Experimental site where the single and dual UAV systems were compared.

Figure 11.

(a) The appearance of crop models and field, (b) detailed view of the crop model, and (c) 3D representation of the field.

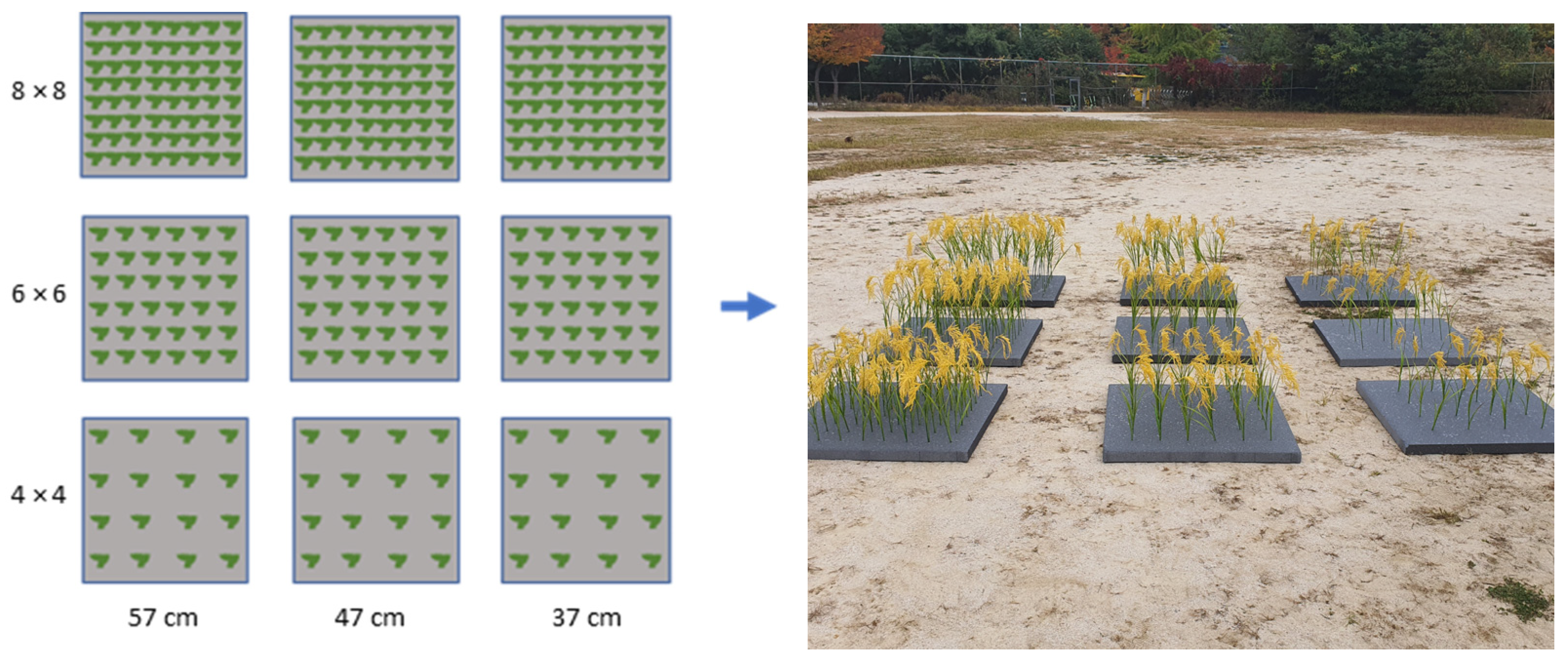

Figure 12.

Test layout of the crop fields with three crop heights and three planting densities.

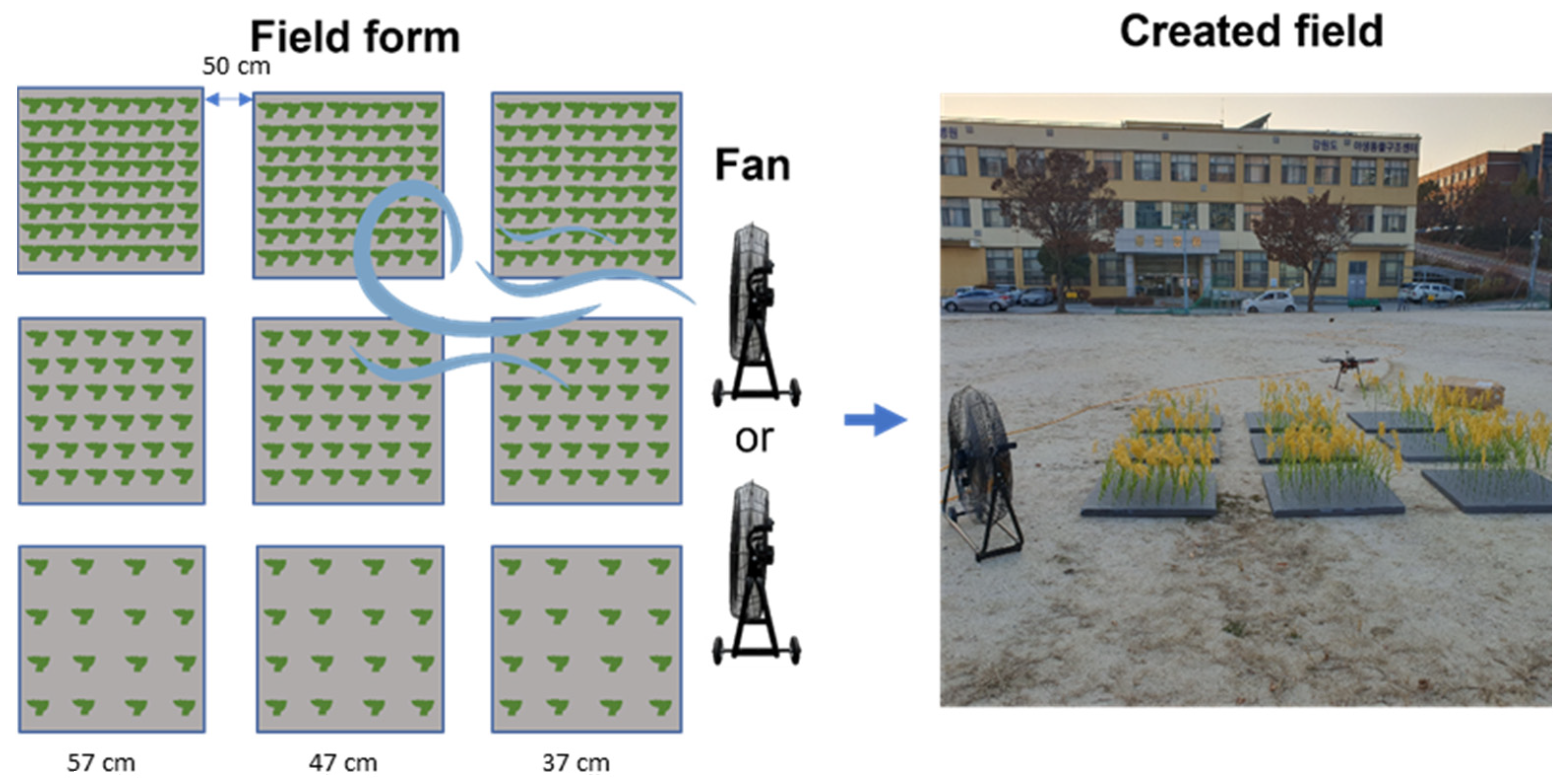

Figure 13.

Locations of the wind effects and the constructed crop model fields.

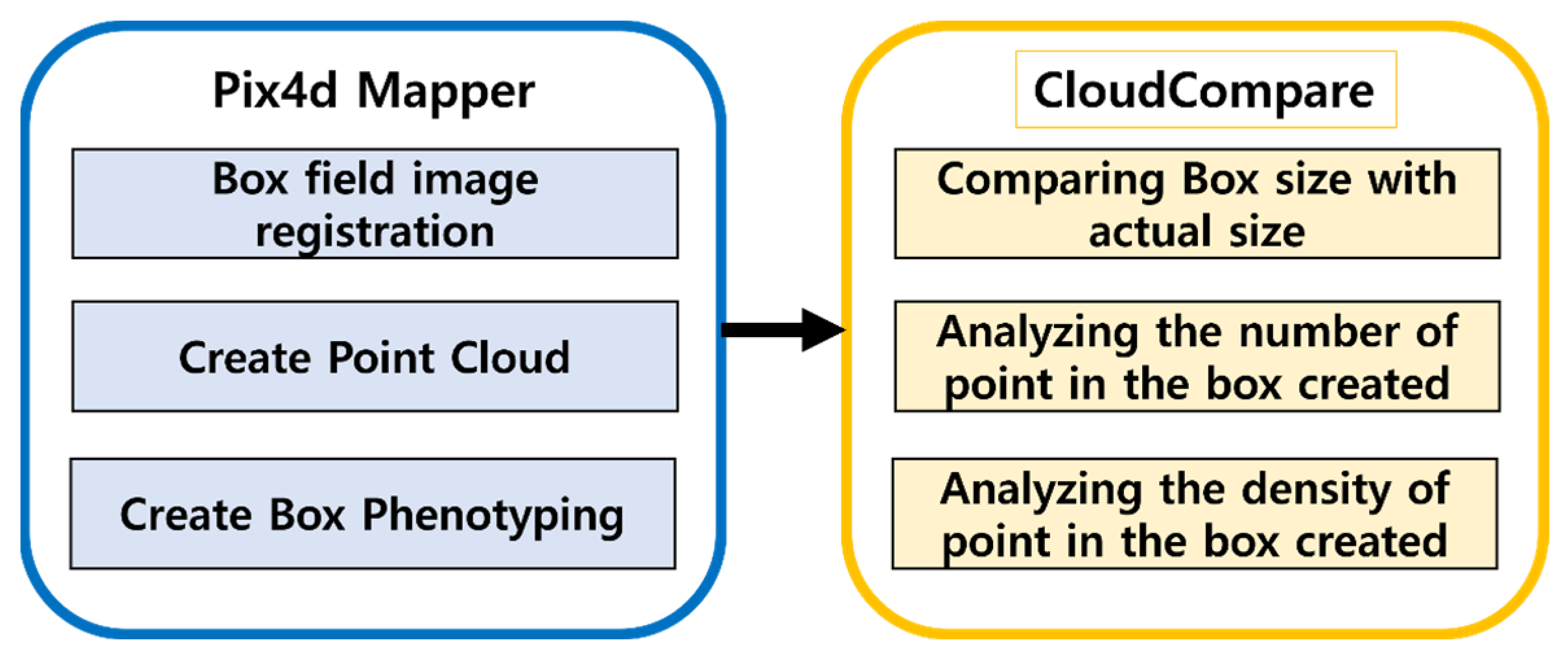

Figure 14.

Box phenotyping algorithm for size, point number, and point density measurements.
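
Figure 14 summarizes the box phenotyping algorithm without giving its code. A minimal sketch of the three quantities, assuming each box has already been segmented from the registered point cloud and that its size is taken from an axis-aligned bounding box, could look like this. `box_phenotypes`, `size_error_mm`, and the synthetic box are illustrative assumptions, not the study's implementation.

```python
import numpy as np


def box_phenotypes(points: np.ndarray) -> dict:
    """Size, point number, and point density of one segmented box (hypothetical sketch).

    `points` is an (N, 3) array of x, y, z coordinates in metres. Size comes from the
    axis-aligned bounding box, which assumes the box edges are aligned with the axes.
    """
    points = np.asarray(points, dtype=float)
    if points.ndim != 2 or points.shape[1] != 3:
        raise ValueError("expected an (N, 3) array of points")
    size = points.max(axis=0) - points.min(axis=0)   # extent along x, y, z (m)
    volume = float(np.prod(size))                    # bounding-box volume (m^3)
    n = points.shape[0]
    density = n / volume if volume > 0 else float("nan")
    return {"size_m": size, "point_number": n, "point_density": density}


def size_error_mm(measured_m, true_m) -> np.ndarray:
    """Absolute per-axis difference between measured and true size, in millimetres."""
    return np.abs(np.asarray(measured_m, float) - np.asarray(true_m, float)) * 1000.0


# Hypothetical 0.5 x 0.4 x 0.3 m box: its eight corners plus one interior point.
corners = np.array([[x, y, z] for x in (0.0, 0.5) for y in (0.0, 0.4) for z in (0.0, 0.3)])
cloud = np.vstack([corners, [[0.25, 0.2, 0.15]]])
result = box_phenotypes(cloud)
print(result["point_number"], round(result["point_density"], 1))
print(size_error_mm(result["size_m"], [0.5, 0.4, 0.3]))
```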

Figure 15.

Method for measuring the LAI of the crop field: the red frame shows a close-up view of one crop field model, the black arrow indicates segmentation of the yellow area, and the red arrow shows the leaf area viewed from above.

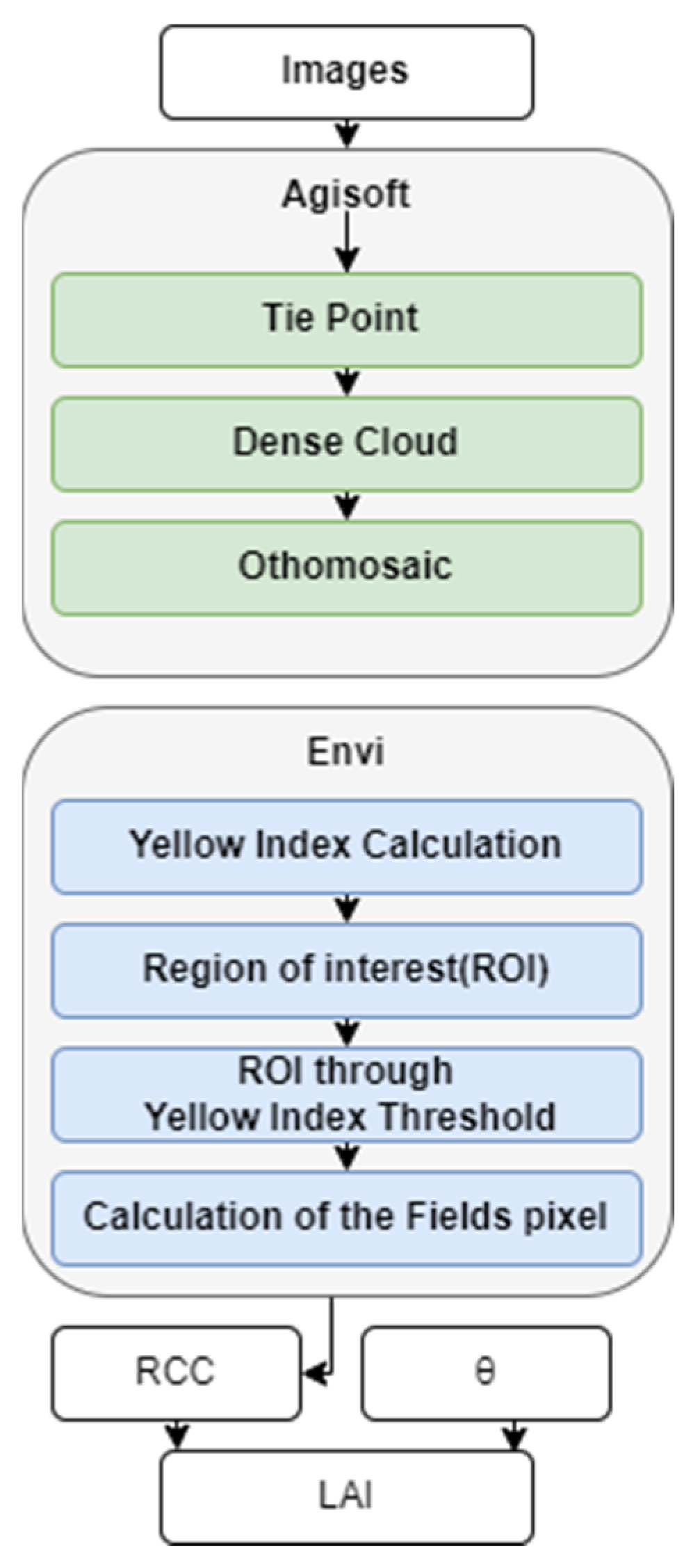

Figure 16.

LAI measurement algorithm based on a yellow index threshold.
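
The exact yellow index and threshold used in Figure 16 are not given in this section. The sketch below assumes a simple index, YI = (R + G)/2 - B, which is large for yellow pixels (high red and green, low blue), and an arbitrary threshold of 60. It counts the crop pixels inside one pad image; together with the pad's total pixel count, these correspond to the Crop Pixels and Pad Pixels columns of Tables 5 to 10. `yellow_pixel_count` and the synthetic pad are hypothetical.

```python
import numpy as np


def yellow_pixel_count(rgb: np.ndarray, threshold: float = 60.0) -> int:
    """Count pixels whose (assumed) yellow index exceeds `threshold`.

    Uses YI = (R + G) / 2 - B on an (H, W, 3) RGB array; both the index and
    the default threshold are illustrative assumptions.
    """
    rgb = np.asarray(rgb, dtype=np.float64)     # float math avoids uint8 wrap-around
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    yellow_index = (r + g) / 2.0 - b
    return int(np.count_nonzero(yellow_index > threshold))


# 4 x 4 grey pad with three yellow "leaf" pixels.
pad = np.full((4, 4, 3), 128, dtype=np.uint8)
pad[0, 0] = pad[1, 2] = pad[3, 3] = (230, 200, 40)
crop_pixels = yellow_pixel_count(pad)
pad_pixels = pad.shape[0] * pad.shape[1]
print(crop_pixels, pad_pixels, crop_pixels / pad_pixels)
```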

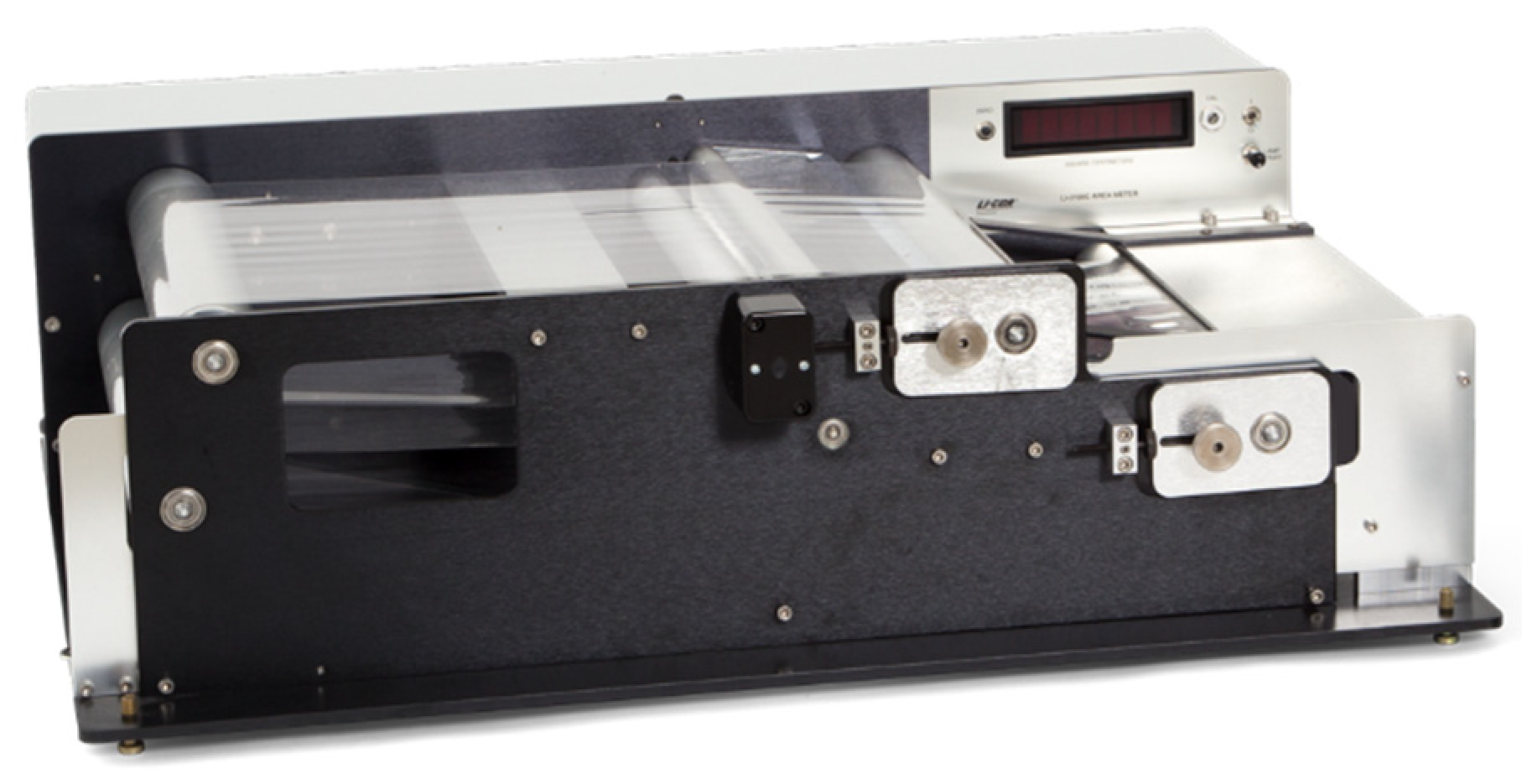

Figure 17.

Leaf area measurement instrument (LI-3300C) for measuring ground-truth LAI.

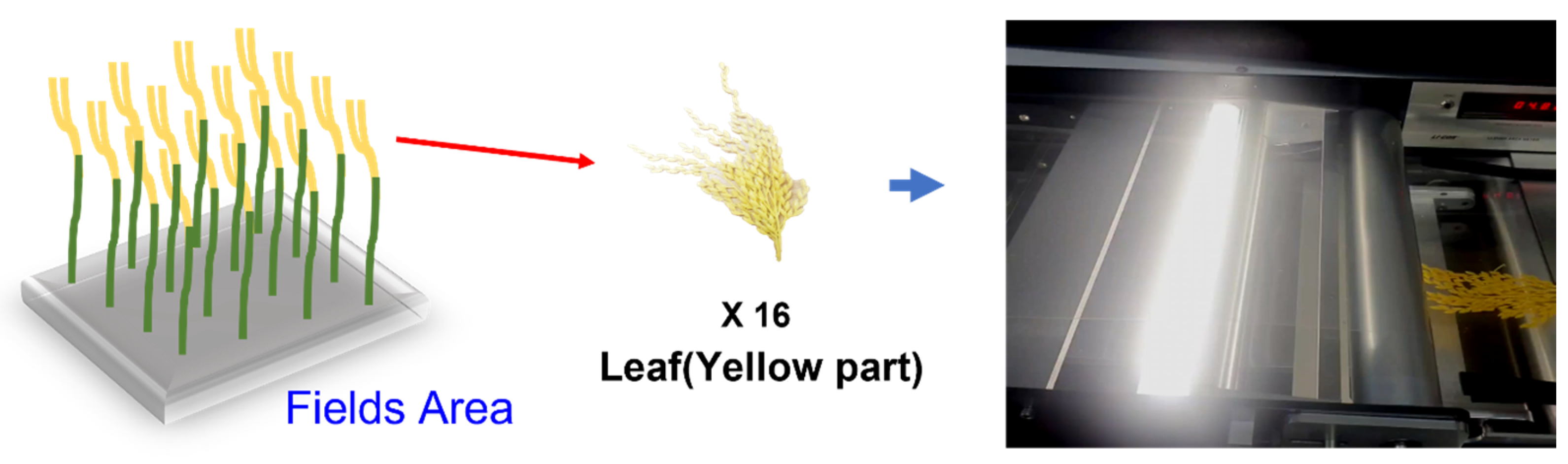

Figure 18.

Measuring the leaf area (LA) of the yellow leaves with the leaf area measurement instrument: the red arrow shows the leaves being isolated and the blue arrow shows each leaf being measured.

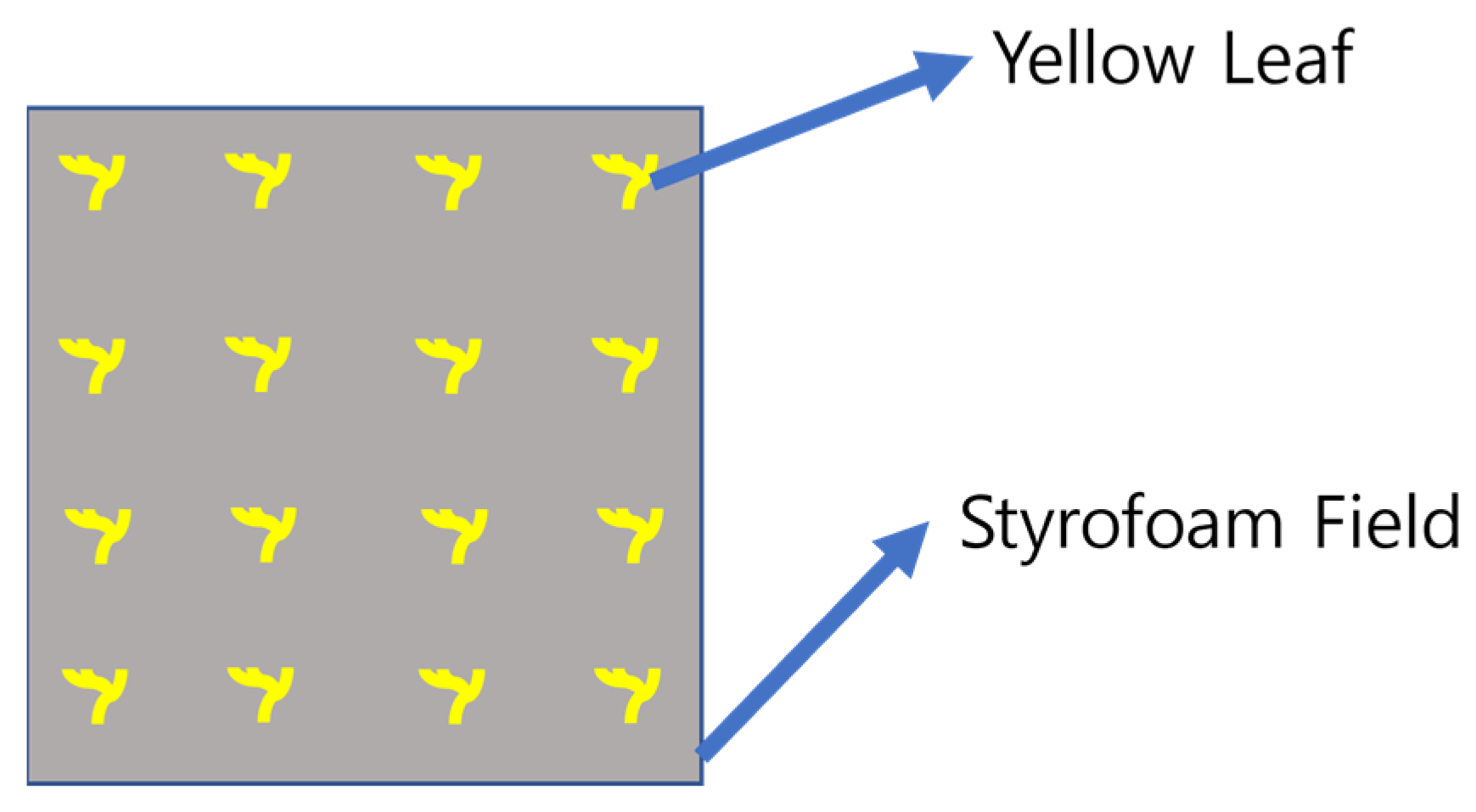

Figure 19.

Illustrative example of ground-measured LAI.

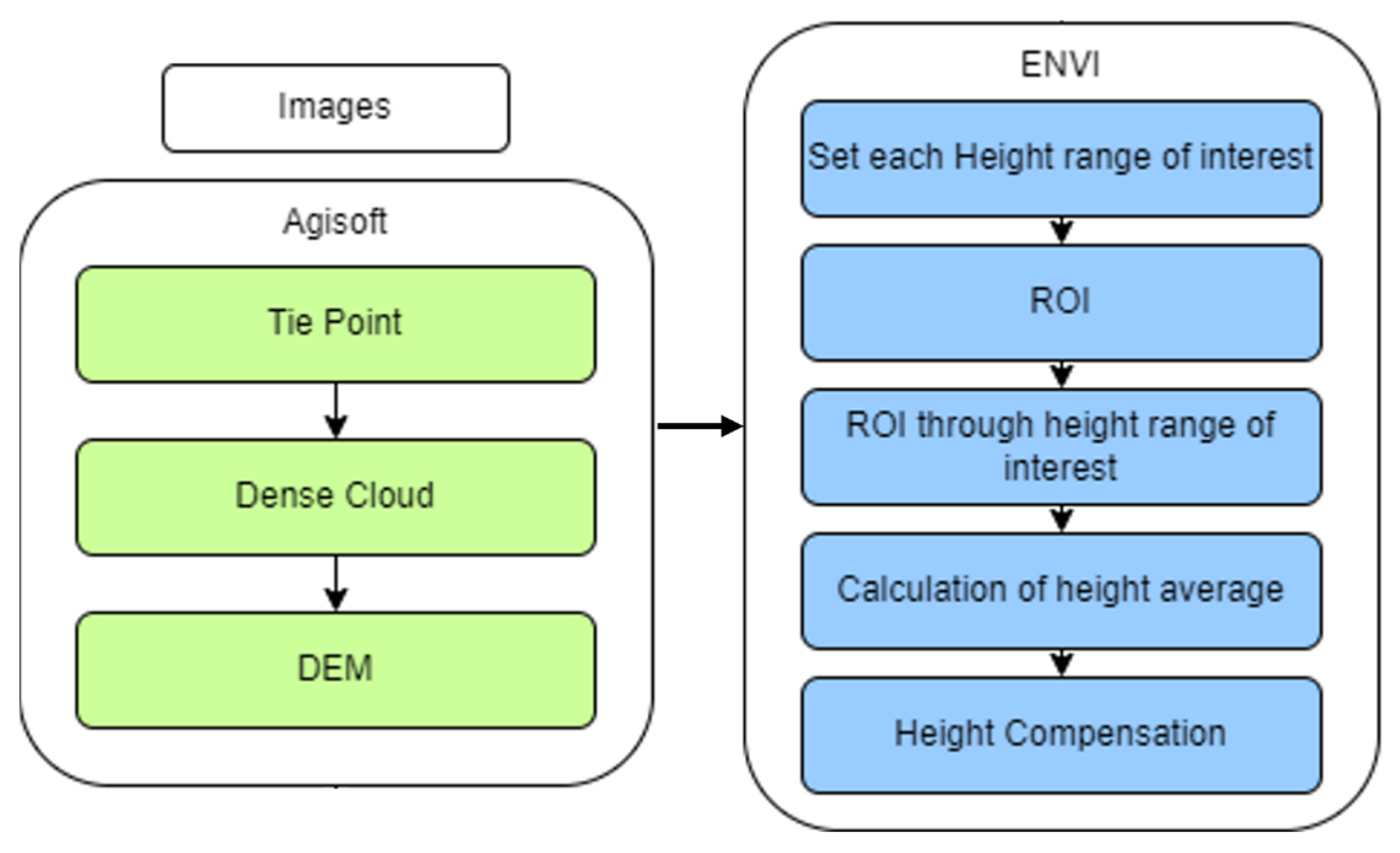

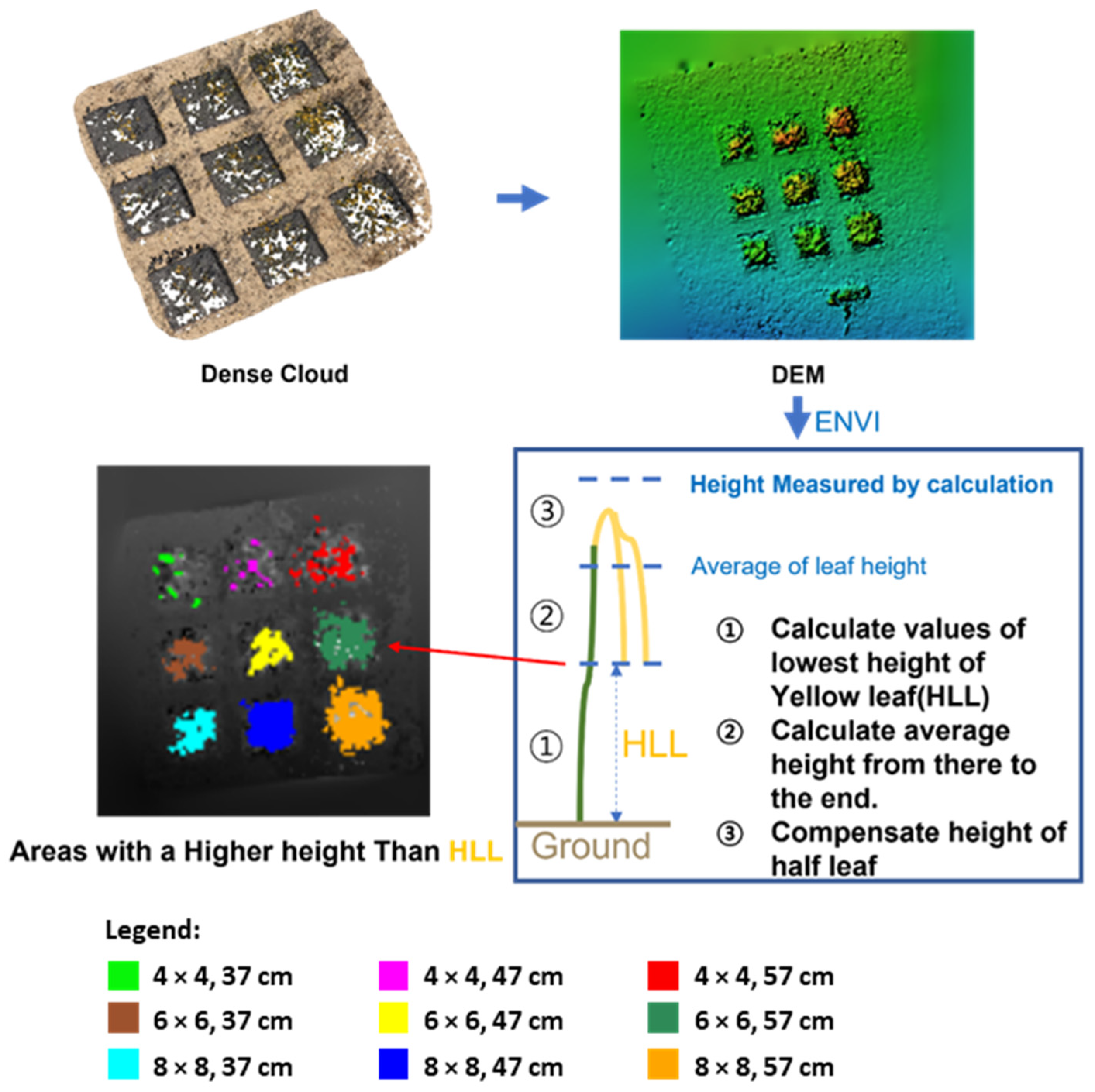

Figure 20.

The height measurement algorithm for the crop model.

Figure 21.

An illustrative example of the height measurement method for the crop model, where the blue arrows indicate the data transformation process and the red arrow shows an example of height measurement for the crop model.

Figure 22.

Box phenotypes from the single UAV system: (a) registered and (b) detailed box images.

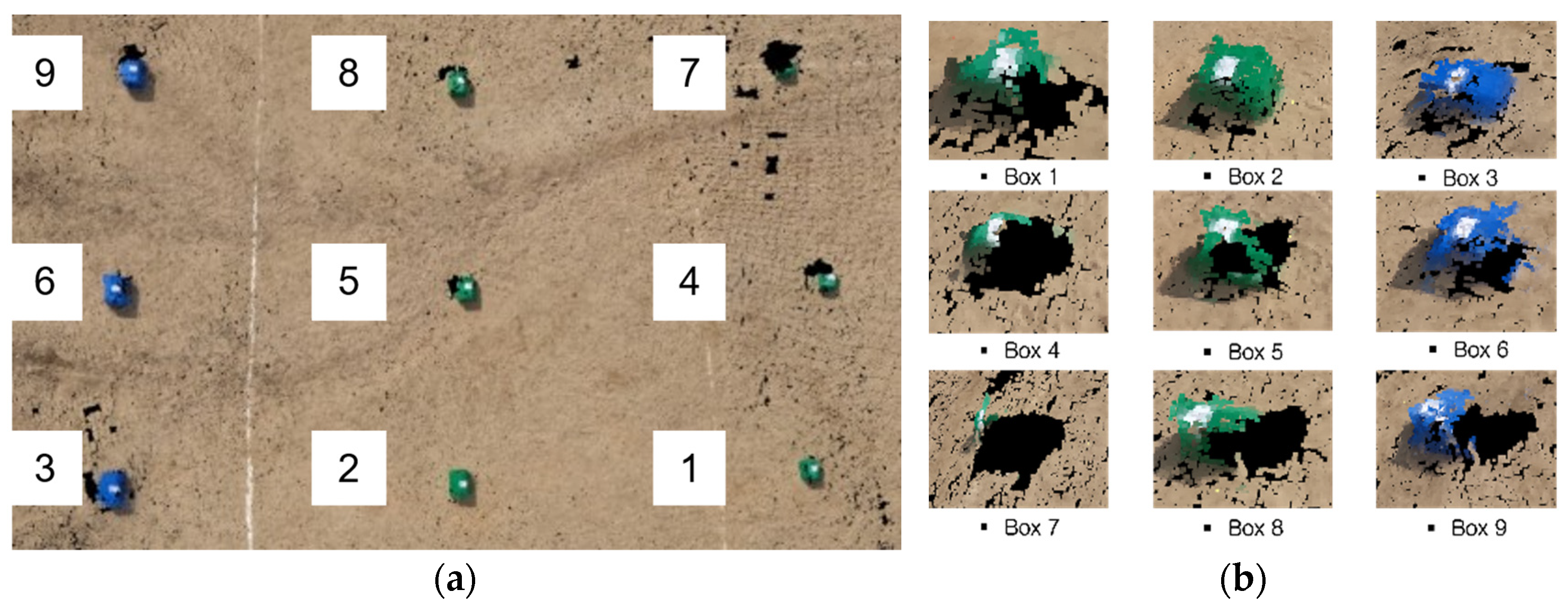

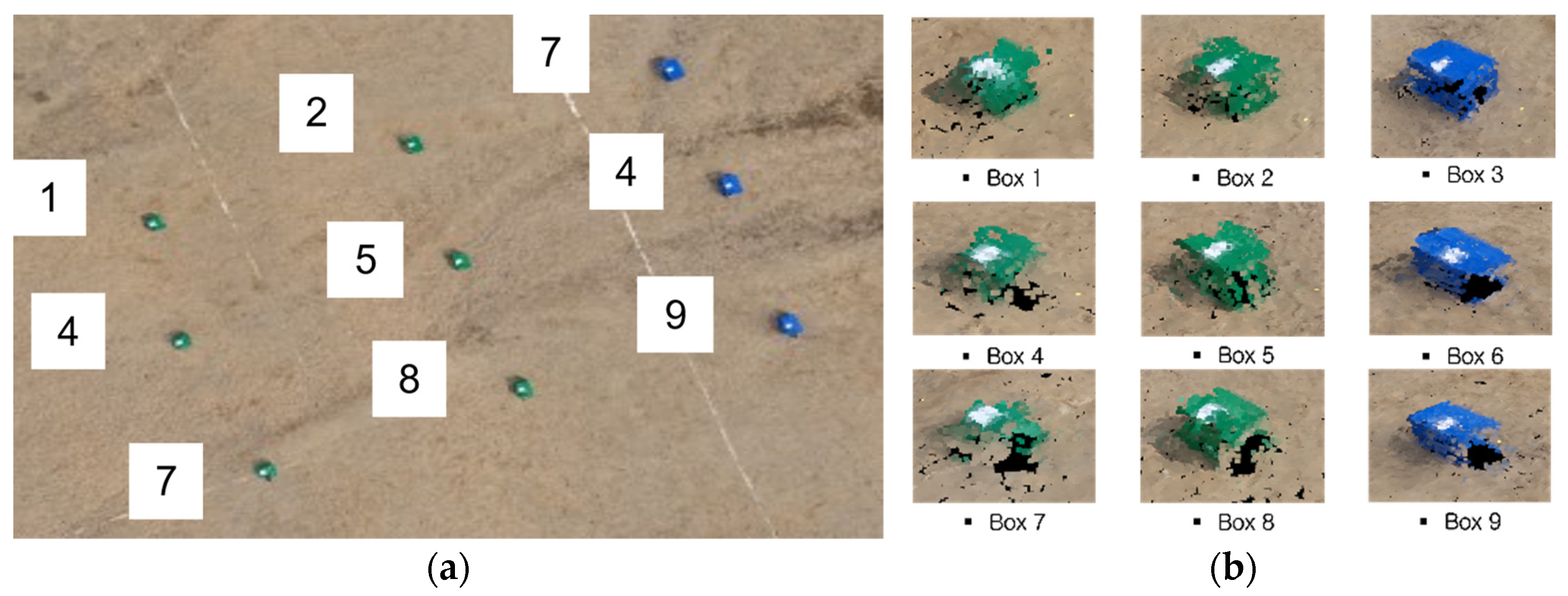

Figure 23.

Box phenotypes from the multiple UAV system: (a) registered and (b) detailed box images.

Figure 24.

Yellow leaf pixels by crop field measured from the aerial images captured by the single UAV system without wind effects.

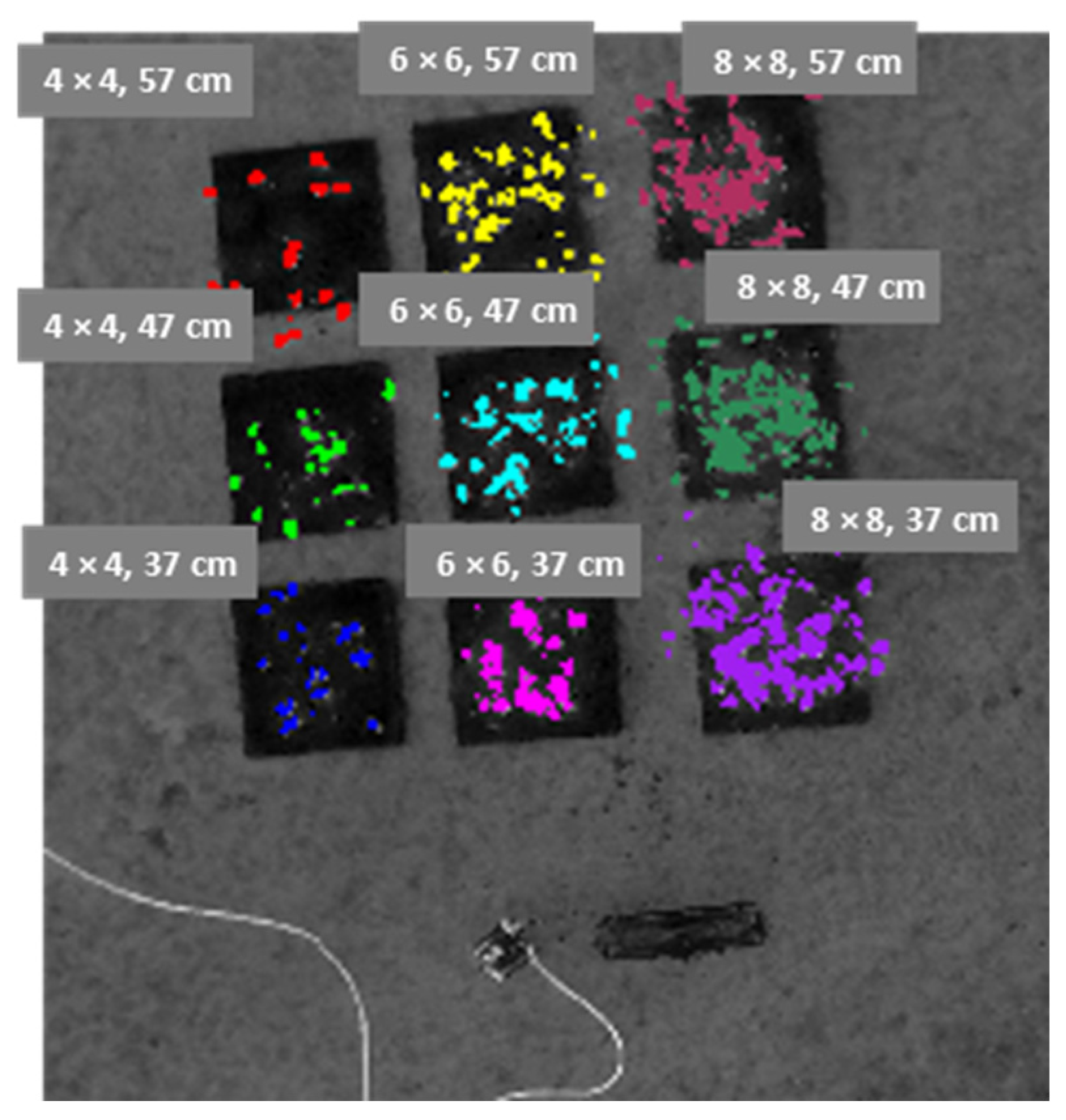

Figure 25.

Yellow leaf pixels by crop field measured from the aerial images captured by the multiple UAV system without wind effects.

Figure 26.

The yellow leaf pixels of crop models measured using aerial photography from the single UAV system with wind effects in the 8 × 8 and 6 × 6 layout fields.

Figure 27.

The yellow leaf pixels of the crop models measured using aerial photography from the multiple UAV system with wind effects in the 8 × 8 and 6 × 6 layout fields.

Figure 28.

The yellow leaf pixels of crop models measured using aerial photography from the single UAV system with wind effects in the 6 × 6 and 4 × 4 layout fields.

Figure 29.

The yellow leaf pixels of crop models measured using aerial photography from the multiple UAV system with wind effects in the 6 × 6 and 4 × 4 layout fields.

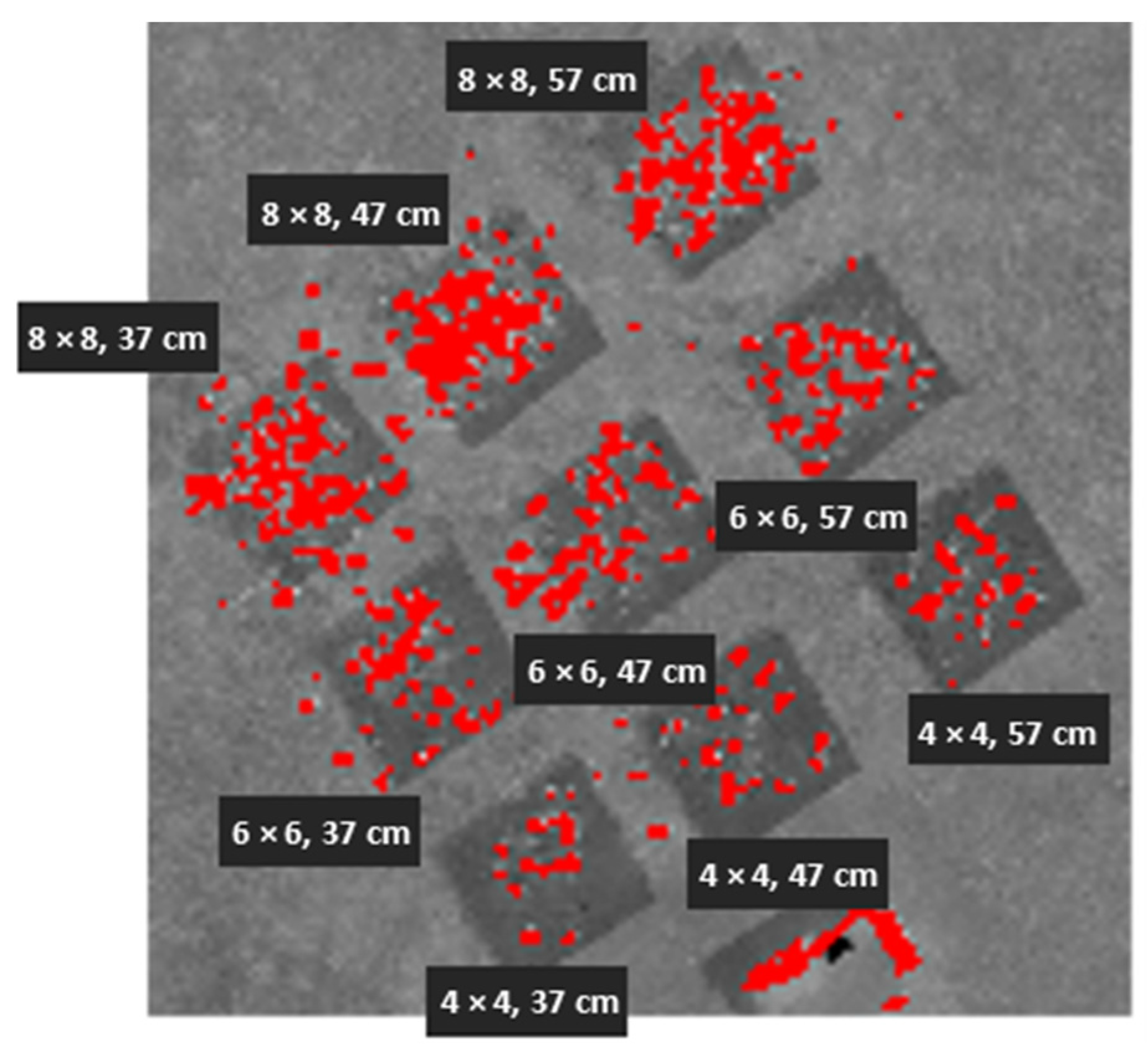

Figure 30.

Locations of pixels above the lowest leaf height, measured from aerial images captured by the single UAV system without wind effects in the field.

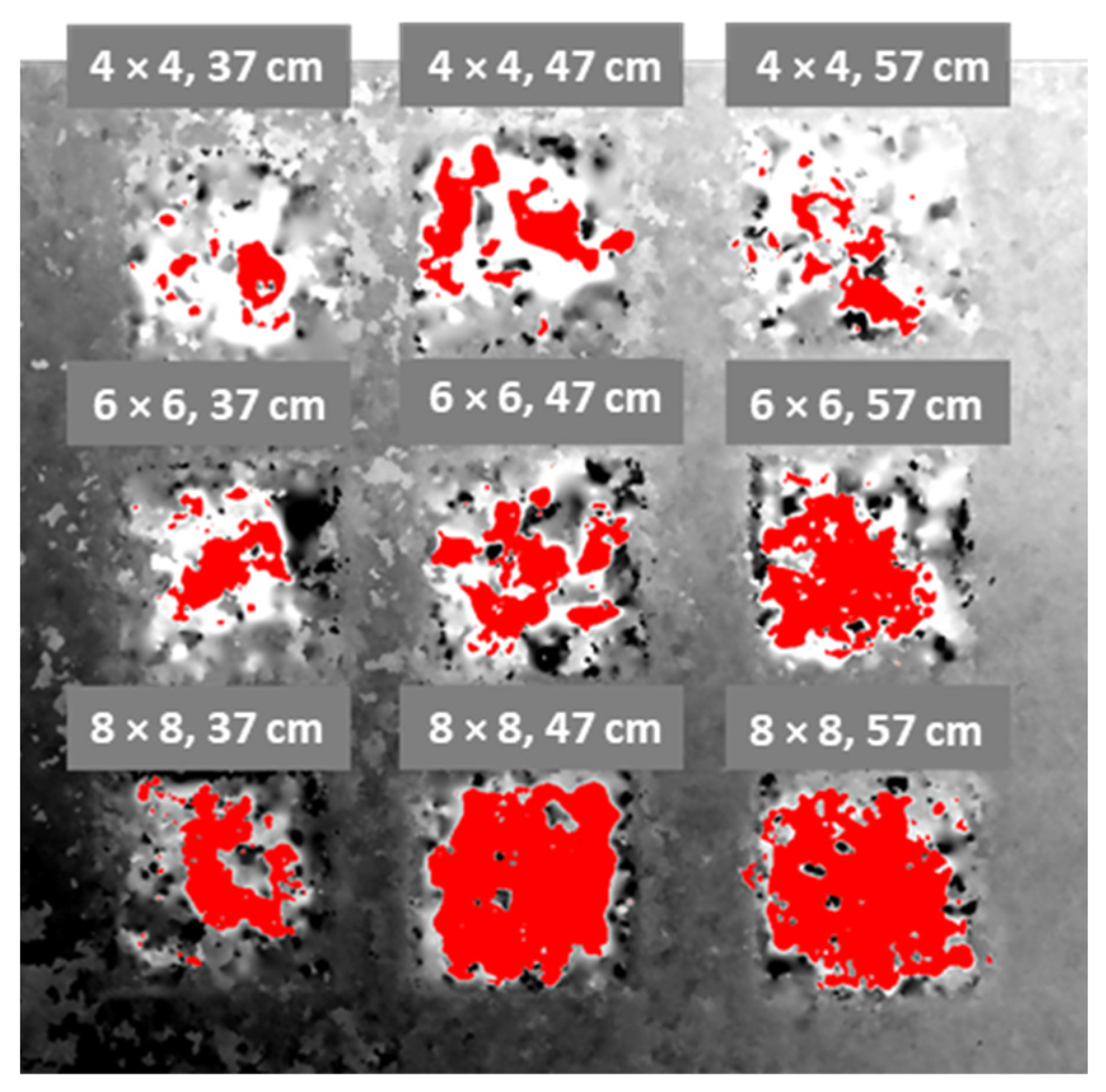

Figure 31.

Locations of pixels above the lowest leaf height, measured from aerial images captured by the multiple UAV system without wind effects in the field.

Figure 32.

Flight route and flight time measurement for the single and multiple UAV systems.
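
The flight times reported in Table 15 can be compared directly once they are converted to seconds. The small helper below (`to_seconds` is a hypothetical name) computes, for each driving line, the percentage reduction in flight time and the speed-up of the multi-UAV system over the single UAV system.

```python
def to_seconds(text: str) -> int:
    """Parse durations such as '1 min 16 s' or '25 s' into seconds."""
    tokens = text.split()
    total = 0
    for value, unit in zip(tokens[::2], tokens[1::2]):
        if unit == "min":
            total += int(value) * 60
        elif unit == "s":
            total += int(value)
        else:
            raise ValueError(f"unknown unit {unit!r}")
    return total


# Flight times per driving line from Table 15: (single UAV, multi-UAV).
table15 = {1: ("1 min 16 s", "25 s"), 2: ("2 min 42 s", "55 s")}
for line, (single, multi) in table15.items():
    s, m = to_seconds(single), to_seconds(multi)
    print(f"Line {line}: {s} s vs {m} s, {100 * (s - m) / s:.1f}% shorter, {s / m:.2f}x faster")
```

For the two driving lines this gives reductions of about 67% and 66%, roughly a threefold speed-up.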

Table 1.

Specifications of the visible-light GoPro 10 camera.

| Camera Variables | Values |
|---|---|
| Camera resolution (pixels) | 1920 × 1080 |
| Sensor width (mm) | 6.17 |
| Focal length (mm) | 2.92 |

Table 2.

Aerial photography parameters for box phenotyping.

| Input Variables | Values |
|---|---|
| Site area | 641 m² |
| Flight altitude | 15 m |
| Ground sample distance (GSD) | 1.65 cm/pixel |
| Image overlap | 75% |
| Flight speed | 3 m/s |

Table 3.

Aerial photography parameters for crop model phenotyping.

| Input Variables | Values |
|---|---|
| Site area | 641 m² |
| Flight altitude | 7 m |
| GSD | 0.77 cm/pixel |
| Image overlap | 75% |
| Flight speed | 1 m/s |
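
The GSD values in Tables 2 and 3 are consistent with the camera parameters in Table 1 through the standard relation GSD = (sensor width × flight altitude) / (focal length × image width). Reading the 6.17 mm and 2.92 mm entries of Table 1 as sensor width and focal length, and the 1920-pixel side as the image width, the sketch below reproduces 1.65 cm/pixel at 15 m and 0.77 cm/pixel at 7 m. The function name `gsd_cm_per_px` is hypothetical.

```python
def gsd_cm_per_px(sensor_width_mm: float, focal_length_mm: float,
                  altitude_m: float, image_width_px: int) -> float:
    """Ground sample distance in cm/pixel from the pinhole-camera relation."""
    # mm / mm cancels, leaving metres per pixel; multiply by 100 for centimetres.
    return sensor_width_mm * altitude_m / (focal_length_mm * image_width_px) * 100.0


for altitude in (15.0, 7.0):  # box flights (Table 2) and crop-model flights (Table 3)
    print(f"{altitude:>4} m -> {gsd_cm_per_px(6.17, 2.92, altitude, 1920):.2f} cm/pixel")
```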

Table 4.

Comparison of box size error, point number, and point density between the single and multiple UAV systems.

| | Box 1 | Box 2 | Box 3 | Box 4 | Box 5 | Box 6 | Box 7 | Box 8 | Box 9 |
|---|---|---|---|---|---|---|---|---|---|
| Single UAV size error (mm) | 0.010 | 0.050 | 0.010 | N/A | 0.010 | 0.010 | N/A | N/A | N/A |
| Multi-UAV size error (mm) | 0.005 | 0.010 | 0.010 | 0.010 | 0.005 | 0.010 | 0.010 | 0.020 | 0.010 |
| Single UAV point number | 410 | 621 | 809 | 416 | 386 | 624 | 118 | 487 | 822 |
| Single UAV point density (points/m³) | 11,333.400 | 10,561.200 | 8427.080 | 11,499.300 | 6564.620 | 10,612.200 | 3261.830 | 8282.310 | 8562.500 |
| Multi-UAV point number | 502 | 790 | 1216 | 496 | 816 | 1035 | 501 | 861 | 1361 |
| Multi-UAV point density (points/m³) | 13,876.667 | 13,435.300 | 12,666.667 | 13,710.700 | 13,877.500 | 17,602.000 | 13,848.900 | 14,642.800 | 14,177.000 |
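
Table 4 does not state how point density was computed, but dividing each point number by its density gives the same volume for both systems, consistent with density = point number / box volume. The check below recovers three distinct volumes (about 0.036, 0.059, and 0.096 m³), in line with the three box sizes in Figure 8; the multi-UAV system's higher densities therefore come from more points on boxes of the same volume. The relation is inferred from the tabulated values.

```python
# Point numbers and densities (points/m^3) for Boxes 1 to 9, copied from Table 4.
single_n = [410, 621, 809, 416, 386, 624, 118, 487, 822]
single_d = [11333.400, 10561.200, 8427.080, 11499.300, 6564.620,
            10612.200, 3261.830, 8282.310, 8562.500]
multi_n = [502, 790, 1216, 496, 816, 1035, 501, 861, 1361]
multi_d = [13876.667, 13435.300, 12666.667, 13710.700, 13877.500,
           17602.000, 13848.900, 14642.800, 14177.000]

for box, (sn, sd, mn, md) in enumerate(zip(single_n, single_d, multi_n, multi_d), start=1):
    v_single, v_multi = sn / sd, mn / md   # implied box volume (m^3) from each system
    print(f"Box {box}: {v_single:.4f} m^3 vs {v_multi:.4f} m^3")
```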

Table 5.

Yellow leaf LAI values and error rates by crop field measured using the single UAV system without wind effects.

| Height (cm) | Array | Crop Pixels | Pad Pixels | Leaf Angle (°) | LAIm | Ground-Measured LAI | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | 1014 | 126,953 | 35 | 0.014 | 0.067 | 79.104 |
| 57 | 6 × 6 | 6969 | 126,137 | 35 | 0.096 | 0.151 | 36.424 |
| 57 | 8 × 8 | 15,998 | 128,214 | 35 | 0.218 | 0.269 | 18.959 |
| 47 | 4 × 4 | 860 | 125,435 | 35 | 0.012 | 0.067 | 82.090 |
| 47 | 6 × 6 | 5860 | 128,260 | 35 | 0.080 | 0.151 | 47.020 |
| 47 | 8 × 8 | 13,219 | 128,532 | 35 | 0.179 | 0.269 | 33.457 |
| 37 | 4 × 4 | 2438 | 129,065 | 35 | 0.033 | 0.067 | 50.746 |
| 37 | 6 × 6 | 2843 | 129,032 | 35 | 0.038 | 0.151 | 74.834 |
| 37 | 8 × 8 | 13,114 | 129,946 | 35 | 0.176 | 0.269 | 34.572 |
| Average | - | - | - | - | - | - | 50.801 |
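
The LAIm formula is not stated alongside Tables 5 to 10. Dividing the yellow-pixel fraction (crop pixels / pad pixels) by sin 35°, the listed leaf angle, and rounding to three decimals reproduces every LAIm value in Tables 5 and 6; the % error column then equals |LAIm - ground-measured LAI| / ground-measured LAI × 100. The sketch below applies this inferred relation to Table 5 and should be read as a reconstruction from the tabulated numbers, not as the authors' stated method.

```python
import math

SIN_LEAF_ANGLE = math.sin(math.radians(35.0))  # leaf angle column of Tables 5 to 10

# (crop pixels, pad pixels, ground-measured LAI) for the nine rows of Table 5.
table5_rows = [
    (1014, 126953, 0.067), (6969, 126137, 0.151), (15998, 128214, 0.269),
    (860, 125435, 0.067), (5860, 128260, 0.151), (13219, 128532, 0.269),
    (2438, 129065, 0.067), (2843, 129032, 0.151), (13114, 129946, 0.269),
]

errors = []
for crop_px, pad_px, lai_ground in table5_rows:
    # Inferred relation: yellow-pixel cover fraction divided by sin(leaf angle).
    lai_m = round(crop_px / pad_px / SIN_LEAF_ANGLE, 3)
    err = abs(lai_m - lai_ground) / lai_ground * 100.0
    errors.append(err)
    print(f"LAIm = {lai_m:.3f}, error = {err:.3f}%")

print(f"average error = {sum(errors) / len(errors):.3f}%")
```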

Table 6.

Yellow leaf LAI values and error rates by crop field measured using the multiple UAV system without wind effects.

| Height (cm) | Array | Crop Pixels | Pad Pixels | Leaf Angle (°) | LAIm | Ground-Measured LAI | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | 3884 | 117,578 | 35 | 0.058 | 0.067 | 13.433 |
| 57 | 6 × 6 | 8355 | 115,879 | 35 | 0.126 | 0.151 | 16.556 |
| 57 | 8 × 8 | 15,020 | 116,806 | 35 | 0.224 | 0.269 | 16.729 |
| 47 | 4 × 4 | 3862 | 116,874 | 35 | 0.058 | 0.067 | 13.433 |
| 47 | 6 × 6 | 11,746 | 117,608 | 35 | 0.174 | 0.151 | 15.232 |
| 47 | 8 × 8 | 22,670 | 115,885 | 35 | 0.341 | 0.269 | 26.766 |
| 37 | 4 × 4 | 4664 | 116,864 | 35 | 0.070 | 0.067 | 4.478 |
| 37 | 6 × 6 | 11,677 | 119,902 | 35 | 0.170 | 0.151 | 12.583 |
| 37 | 8 × 8 | 17,539 | 116,864 | 35 | 0.262 | 0.269 | 2.602 |
| Average | - | - | - | - | - | - | 13.535 |

Table 7.

The yellow leaf LAI values and error rates by crop field measured from the aerial images captured by the single UAV system with wind effects in the 8 × 8 and 6 × 6 layout fields.

| Height (cm) | Array | Crop Pixels | Pad Pixels | Leaf Angle (°) | LAIm | Ground-Measured LAI | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | 5380 | 136,882 | 35 | 0.069 | 0.067 | 2.985 |
| 57 | 6 × 6 | 2621 | 138,594 | 35 | 0.033 | 0.067 | 50.746 |
| 57 | 8 × 8 | 1789 | 137,189 | 35 | 0.023 | 0.067 | 65.672 |
| 47 | 4 × 4 | 6327 | 135,217 | 35 | 0.082 | 0.151 | 45.695 |
| 47 | 6 × 6 | 5703 | 138,475 | 35 | 0.072 | 0.151 | 52.318 |
| 47 | 8 × 8 | 7713 | 140,375 | 35 | 0.096 | 0.151 | 36.424 |
| 37 | 4 × 4 | 13,923 | 134,952 | 35 | 0.180 | 0.269 | 33.086 |
| 37 | 6 × 6 | 13,390 | 138,392 | 35 | 0.169 | 0.269 | 37.175 |
| 37 | 8 × 8 | 17,359 | 145,789 | 35 | 0.208 | 0.269 | 22.677 |
| Average | - | - | - | - | - | - | 38.531 |

Table 8.

The yellow leaf LAI values and error rates by crop field measured from the aerial images captured by the multiple UAV system with wind effects in the 8 × 8 and 6 × 6 layout fields.

| Height (cm) | Array | Crop Pixels | Pad Pixels | Leaf Angle (°) | LAIm | Ground-Measured LAI | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | 2457 | 112,925 | 35 | 0.038 | 0.067 | 43.284 |
| 57 | 6 × 6 | 2825 | 114,981 | 35 | 0.043 | 0.067 | 35.821 |
| 57 | 8 × 8 | 3377 | 115,546 | 35 | 0.051 | 0.067 | 23.881 |
| 47 | 4 × 4 | 8066 | 112,880 | 35 | 0.125 | 0.151 | 17.219 |
| 47 | 6 × 6 | 8425 | 117,021 | 35 | 0.126 | 0.151 | 16.556 |
| 47 | 8 × 8 | 9655 | 113,444 | 35 | 0.148 | 0.151 | 1.987 |
| 37 | 4 × 4 | 15,362 | 109,094 | 35 | 0.246 | 0.269 | 8.550 |
| 37 | 6 × 6 | 16,681 | 113,795 | 35 | 0.256 | 0.269 | 4.833 |
| 37 | 8 × 8 | 18,599 | 112,389 | 35 | 0.289 | 0.269 | 7.435 |
| Average | - | - | - | - | - | - | 17.729 |

Table 9.

The yellow leaf LAI values and error rates measured from the aerial images captured by the single UAV system with wind effects in the 6 × 6 and 4 × 4 layout fields.

| Height (cm) | Array | Crop Pixels | Pad Pixels | Leaf Angle (°) | LAIm | Ground-Measured LAI | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | 6851 | 136,640 | 35 | 0.087 | 0.067 | 30.253 |
| 57 | 6 × 6 | 10,374 | 134,559 | 35 | 0.134 | 0.151 | 11.052 |
| 57 | 8 × 8 | 10,272 | 131,448 | 35 | 0.136 | 0.269 | 49.293 |
| 47 | 4 × 4 | 3665 | 137,672 | 35 | 0.046 | 0.067 | 30.849 |
| 47 | 6 × 6 | 5427 | 135,391 | 35 | 0.070 | 0.151 | 53.805 |
| 47 | 8 × 8 | 13,071 | 135,922 | 35 | 0.168 | 0.269 | 37.602 |
| 37 | 4 × 4 | 2209 | 137,448 | 35 | 0.028 | 0.067 | 58.271 |
| 37 | 6 × 6 | 9053 | 139,295 | 35 | 0.113 | 0.151 | 25.017 |
| 37 | 8 × 8 | 14,669 | 133,971 | 35 | 0.191 | 0.269 | 28.928 |
| Average | - | - | - | - | - | - | 36.119 |

Table 10.

The yellow leaf LAI values and error rates measured from the aerial images captured by the multiple UAV system with wind effects in the 6 × 6 and 4 × 4 layout fields.

| Height (cm) | Array | Crop Pixels | Pad Pixels | Leaf Angle (°) | LAIm | Ground-Measured LAI | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | 3764 | 155,322 | 35 | 0.042 | 0.067 | 37.202 |
| 57 | 6 × 6 | 13,651 | 153,263 | 35 | 0.155 | 0.151 | 2.780 |
| 57 | 8 × 8 | 18,083 | 152,360 | 35 | 0.207 | 0.269 | 22.971 |
| 47 | 4 × 4 | 4492 | 157,633 | 35 | 0.050 | 0.067 | 26.042 |
| 47 | 6 × 6 | 10,379 | 155,241 | 35 | 0.117 | 0.151 | 22.833 |
| 47 | 8 × 8 | 24,682 | 161,329 | 35 | 0.267 | 0.269 | 0.707 |
| 37 | 4 × 4 | 3241 | 160,753 | 35 | 0.035 | 0.067 | 47.619 |
| 37 | 6 × 6 | 12,097 | 157,474 | 35 | 0.134 | 0.151 | 11.383 |
| 37 | 8 × 8 | 22,699 | 156,226 | 35 | 0.253 | 0.269 | 5.696 |
| Average | - | - | - | - | - | - | 19.693 |

Table 11.

The heights and error rates of crop models measured from the aerial images captured by the single UAV system without wind effects in the field.

| True Crop Height (cm) | Array | Ground Height (m) | HLL (m) | Average Leaf Height (m) | Compensated Height (m) | Measured Crop Height (cm) | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | −1.150 | −0.746 | 0.746 | −0.615 | 53.490 | 6.167 |
| 47 | 4 × 4 | −1.210 | −0.906 | 0.578 | −0.794 | 41.620 | 11.438 |
| 37 | 4 × 4 | −1.280 | −1.076 | 0.423 | −0.953 | 32.680 | 11.676 |
| 57 | 6 × 6 | −1.150 | −0.746 | 0.764 | −0.560 | 59.040 | 3.579 |
| 47 | 6 × 6 | −1.210 | −0.906 | 0.600 | −0.813 | 39.700 | 15.532 |
| 37 | 6 × 6 | −1.280 | −1.076 | 0.442 | −0.887 | 39.300 | 6.216 |
| 57 | 8 × 8 | −1.110 | −0.706 | 0.787 | −0.545 | 56.500 | 0.877 |
| 47 | 8 × 8 | −1.170 | −0.866 | 0.645 | −0.695 | 47.480 | 1.021 |
| 37 | 8 × 8 | −1.240 | −1.036 | 0.500 | −0.833 | 40.720 | 10.054 |
| Average | - | - | - | - | - | - | 7.396 |
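
In Tables 11 to 14, the measured crop height equals the compensated height minus the ground height, to within about 0.1 cm (the tables were presumably computed from unrounded elevations), and the % error is |measured - true| / true × 100. The sketch below reproduces Table 11 with this relation; it is inferred from the tabulated values, and how the compensated height itself is derived is not shown here.

```python
# (true height cm, ground height m, compensated height m, tabulated measured height cm),
# copied from Table 11.
table11_rows = [
    (57, -1.150, -0.615, 53.490), (47, -1.210, -0.794, 41.620), (37, -1.280, -0.953, 32.680),
    (57, -1.150, -0.560, 59.040), (47, -1.210, -0.813, 39.700), (37, -1.280, -0.887, 39.300),
    (57, -1.110, -0.545, 56.500), (47, -1.170, -0.695, 47.480), (37, -1.240, -0.833, 40.720),
]

for true_cm, ground_m, compensated_m, table_cm in table11_rows:
    # Crop height is the compensated canopy elevation above the ground elevation.
    measured_cm = (compensated_m - ground_m) * 100.0
    error_pct = abs(measured_cm - true_cm) / true_cm * 100.0
    print(f"{true_cm} cm: {measured_cm:.2f} cm (table {table_cm:.2f}), error {error_pct:.2f}%")
```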

Table 12.

The heights and error rates of crop models measured from the aerial images captured by the multiple UAV system without wind effects.

| True Crop Height (cm) | Array | Ground Height (m) | HLL (m) | Average Leaf Height (m) | Compensated Height (m) | Measured Crop Height (cm) | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | −0.880 | −0.476 | 0.746 | −0.338 | 54.250 | 4.825 |
| 47 | 4 × 4 | −0.750 | −0.446 | 0.578 | −0.329 | 42.120 | 10.388 |
| 37 | 4 × 4 | −0.640 | −0.436 | 0.423 | −0.308 | 33.240 | 10.162 |
| 57 | 6 × 6 | −0.830 | −0.426 | 0.764 | −0.289 | 54.140 | 5.018 |
| 47 | 6 × 6 | −0.730 | −0.426 | 0.600 | −0.286 | 44.420 | 5.487 |
| 37 | 6 × 6 | −0.610 | −0.406 | 0.442 | −0.272 | 33.800 | 8.649 |
| 57 | 8 × 8 | −0.810 | −0.406 | 0.787 | −0.269 | 54.100 | 5.088 |
| 47 | 8 × 8 | −0.730 | −0.426 | 0.645 | −0.260 | 47.050 | 0.106 |
| 37 | 8 × 8 | −0.590 | −0.386 | 0.500 | −0.214 | 37.630 | 1.703 |
| Average | - | - | - | - | - | - | 5.714 |

Table 13.

The heights and error rates of crop models measured using aerial photography from the single UAV system with wind effects in the field.

| True Crop Height (cm) | Array | Ground Height (m) | HLL (m) | Average Leaf Height (m) | Compensated Height (m) | Measured Crop Height (cm) | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | −1.850 | −1.416 | −1.365 | −1.304 | 54.640 | 4.134 |
| 47 | 4 × 4 | −1.980 | −1.646 | −1.561 | −1.500 | 48.040 | 2.221 |
| 37 | 4 × 4 | −2.110 | −1.876 | −1.790 | −1.729 | 38.140 | 3.091 |
| 57 | 6 × 6 | −1.817 | −1.383 | −1.282 | −1.221 | 59.640 | 4.638 |
| 47 | 6 × 6 | −1.957 | −1.623 | −1.510 | −1.449 | 50.840 | 8.178 |
| 37 | 6 × 6 | −2.058 | −1.824 | −1.737 | −1.676 | 38.240 | 3.361 |
| 57 | 8 × 8 | −1.763 | −1.329 | −1.257 | −1.196 | 56.700 | 0.520 |
| 47 | 8 × 8 | −1.901 | −1.567 | −1.474 | −1.412 | 48.870 | 3.986 |
| 37 | 8 × 8 | −2.032 | −1.798 | −1.707 | −1.646 | 38.600 | 4.334 |
| Average | - | - | - | - | - | - | 6.640 |

Table 14.

The heights and error rates of crop models measured using aerial photography from the multiple UAV system with wind effects in the field.

| True Crop Height (cm) | Array | Ground Height (m) | HLL (m) | Average Leaf Height (m) | Compensated Height (m) | Measured Crop Height (cm) | % Error |
|---|---|---|---|---|---|---|---|
| 57 | 4 × 4 | 0.250 | 0.654 | 0.746 | 0.807 | 55.740 | 2.204 |
| 47 | 4 × 4 | 0.186 | 0.490 | 0.578 | 0.639 | 45.250 | 3.716 |
| 37 | 4 × 4 | 0.127 | 0.331 | 0.423 | 0.484 | 35.730 | 3.423 |
| 57 | 6 × 6 | 0.271 | 0.675 | 0.764 | 0.825 | 55.430 | 2.756 |
| 47 | 6 × 6 | 0.220 | 0.524 | 0.600 | 0.661 | 44.140 | 6.077 |
| 37 | 6 × 6 | 0.150 | 0.354 | 0.442 | 0.503 | 35.340 | 4.477 |
| 57 | 8 × 8 | 0.268 | 0.672 | 0.787 | 0.849 | 58.080 | 1.901 |
| 47 | 8 × 8 | 0.196 | 0.500 | 0.645 | 0.706 | 51.040 | 8.603 |
| 37 | 8 × 8 | 0.167 | 0.371 | 0.500 | 0.561 | 39.440 | 6.604 |
| Average | - | - | - | - | - | - | 4.418 |

Table 15.

Comparison of flight times for the single UAV and multiple UAV systems.

| Driving Line | Flight Time Based on Single UAV | Flight Time Based on Multi-UAV |
|---|---|---|
| 1 | 1 min 16 s | 25 s |
| 2 | 2 min 42 s | 55 s |