Deep Image Prior Amplitude SAR Image Anonymization

Abstract

1. Introduction

- we tackle the anonymization of amplitude SAR images by inpainting them with the DIP technique, a CNN-based solution that requires no training stage, thus enabling fast reconstructions without a large amount of training data;

- we test different combinations of DIP hyperparameters to find the one that works best for inpainting samples with different land-cover content;

- we inpaint a dataset of SAR images with different land-cover content and evaluate the quality of the inpainted results in terms of data fidelity, using signal processing metrics; data compatibility, using SAR-quality metrics; and data usability, using semantic metrics.

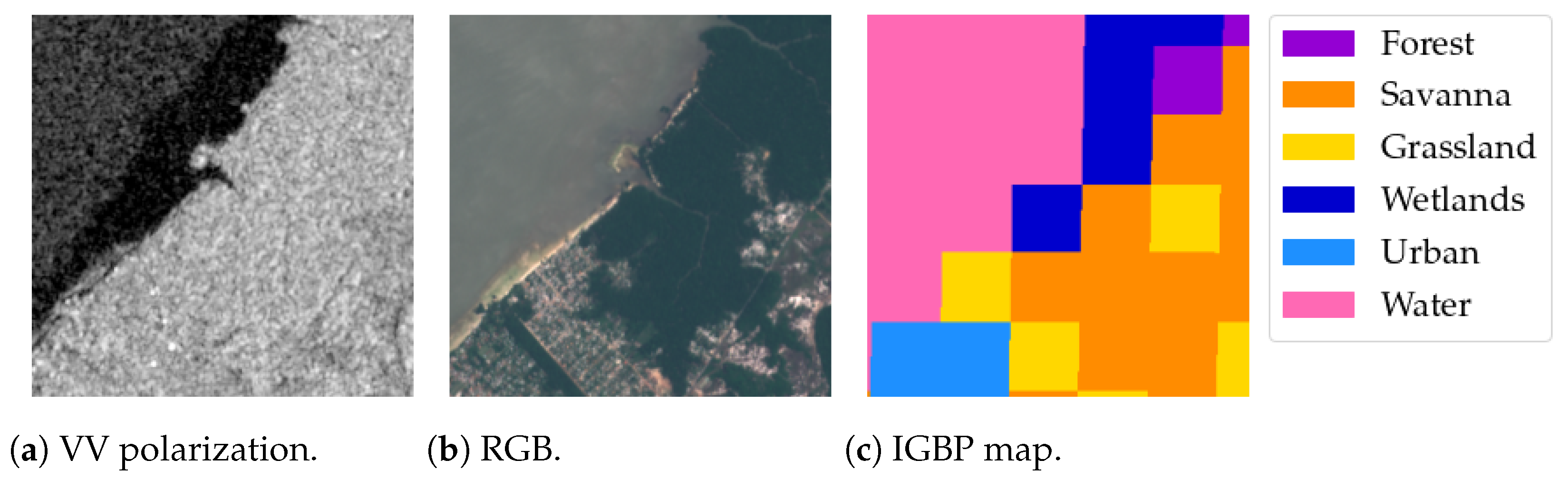

2. Background and Problem Statement

2.1. Image Restoration and Inpainting

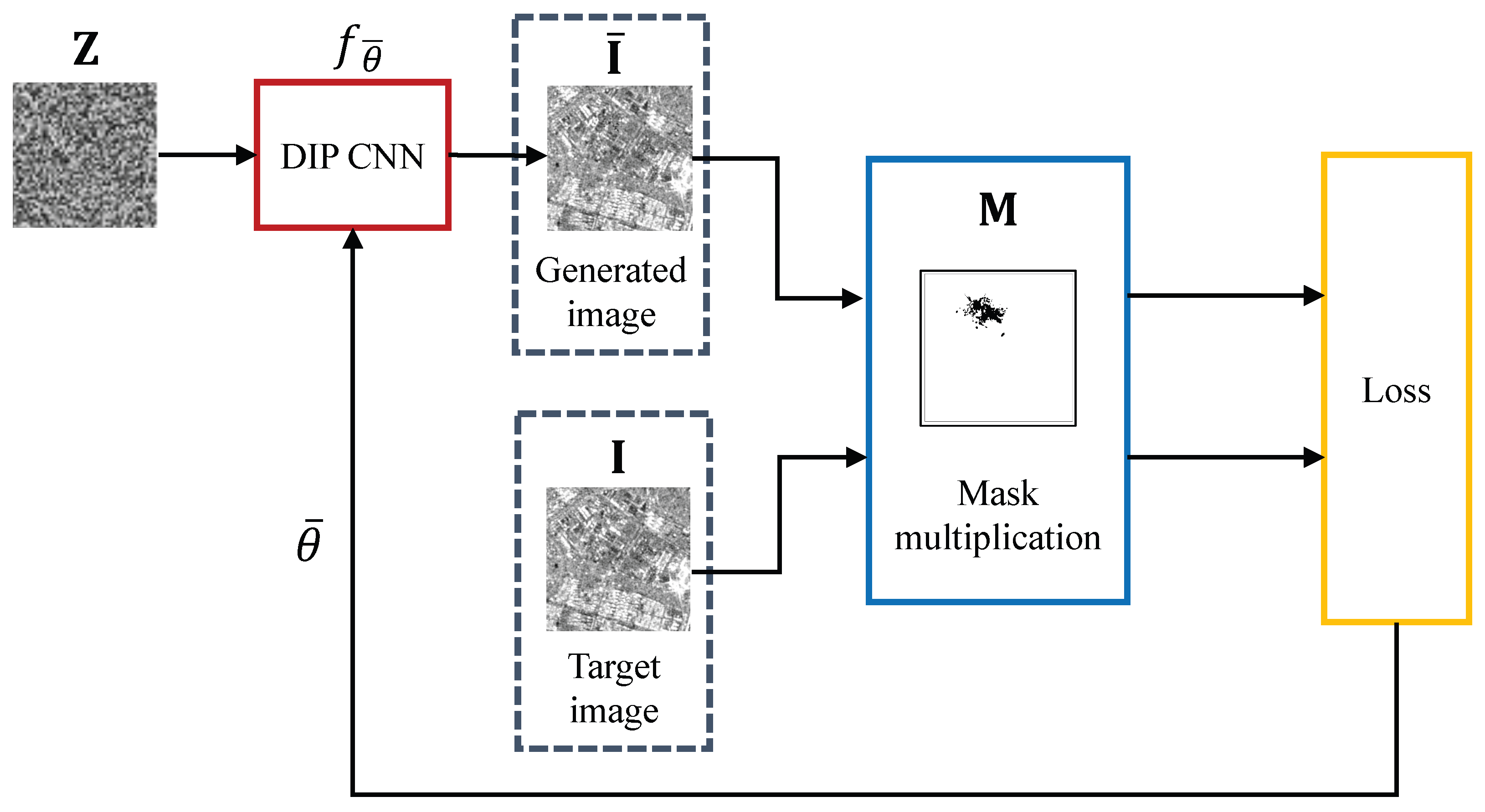

2.2. Deep Image Prior

- the regularization term is omitted;

- the final solution is obtained by optimizing over the network parameters.
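For reference, the resulting inpainting problem can be written as in the original DIP formulation of Ulyanov et al. [27]. The notation here is assumed: $\mathbf{z}$ is a fixed random input tensor, $f_{\theta}$ the CNN with parameters $\theta$, $\mathbf{x}_{0}$ the image to be inpainted, $\mathbf{m}$ a binary mask equal to 0 on the pixels to be inpainted and 1 elsewhere, and $\odot$ the element-wise product.

$$
\theta^{*} = \arg\min_{\theta} \left\| \left( f_{\theta}(\mathbf{z}) - \mathbf{x}_{0} \right) \odot \mathbf{m} \right\|_{2}^{2},
\qquad
\hat{\mathbf{x}} = f_{\theta^{*}}(\mathbf{z})
$$

No explicit regularization term appears: the network structure itself acts as the prior, and the masked pixels of $\hat{\mathbf{x}}$ are filled in by the network output. The squared-error data term is the one used by Ulyanov et al.; the loss-function hyperparameter explored in Section 4.1 (MSE or SSIM) changes this term.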

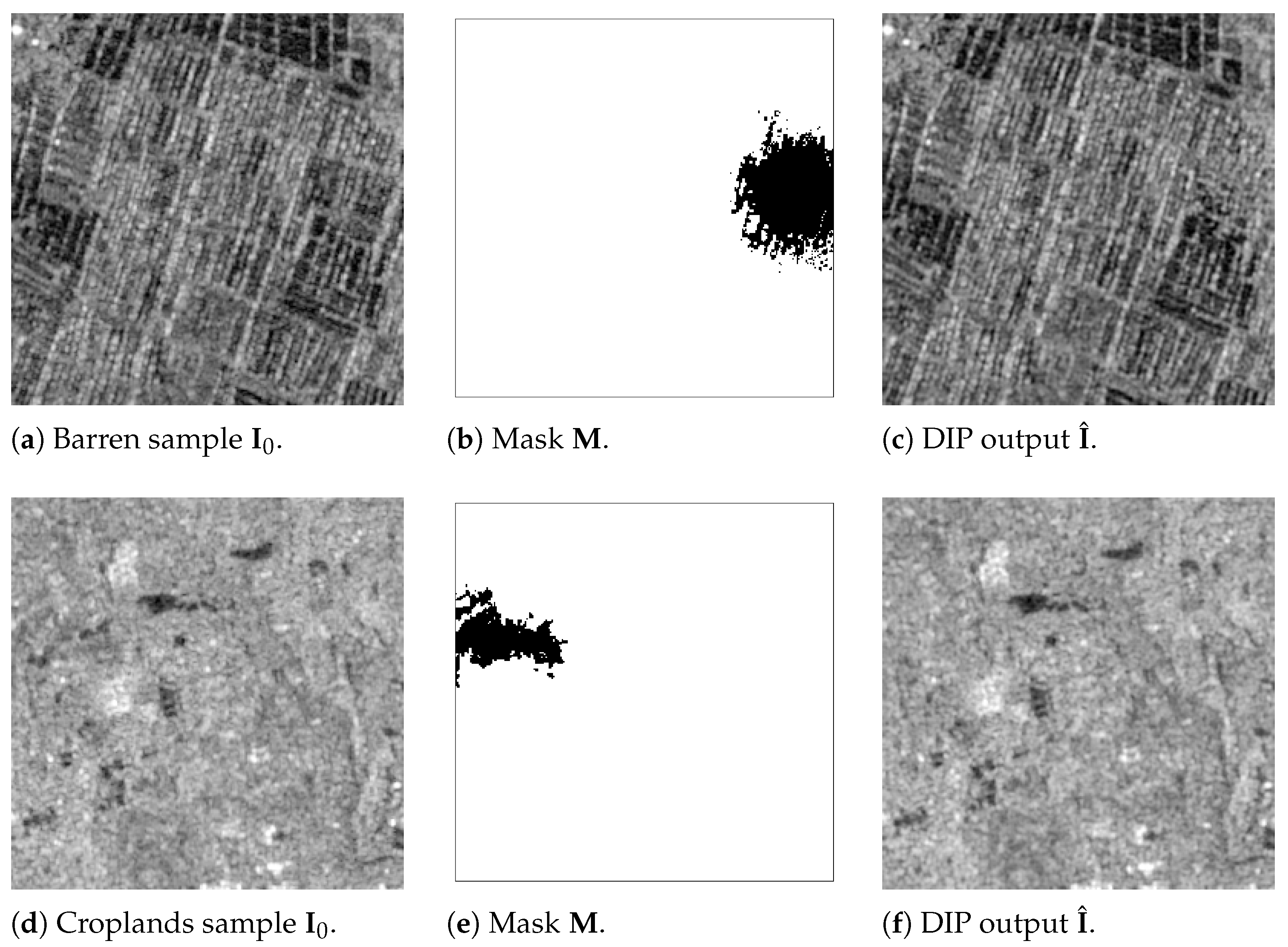

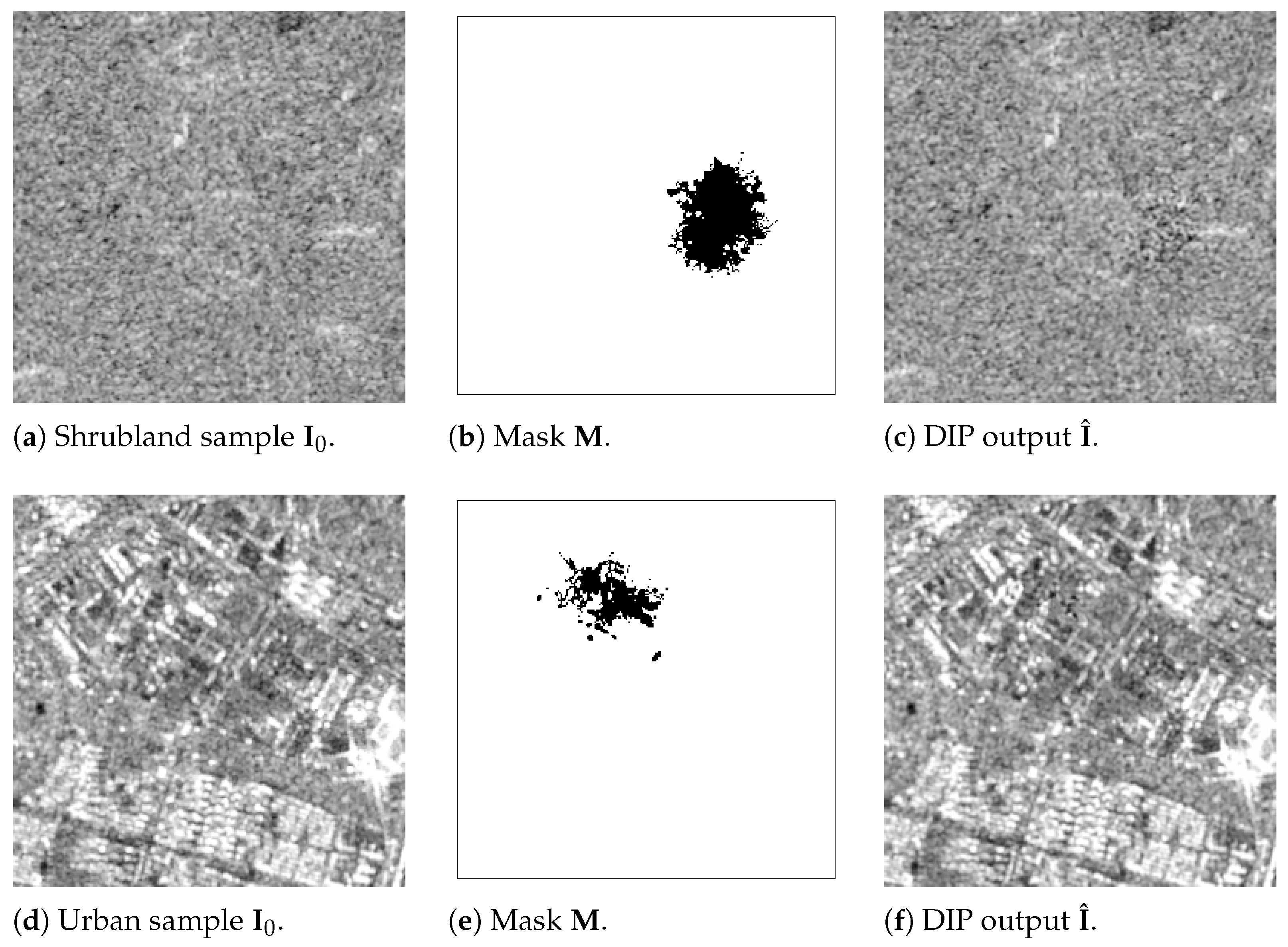

3. Proposed DIP Anonymization Analysis

Experimental Setup

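- Structural Similarity Index Measure (SSIM) [73] is a method for evaluating the similarity between two images $\mathbf{X}$ and $\mathbf{Y}$. This measure is computed at patch level, i.e., the two images are divided into patches, and for each pair of co-located patches $\mathbf{x}$ and $\mathbf{y}$ of size $P \times P$ pixels, the following measure is computed:
  $$\mathrm{SSIM}(\mathbf{x}, \mathbf{y}) = \frac{(2\mu_{x}\mu_{y} + c_{1})(2\sigma_{xy} + c_{2})}{(\mu_{x}^{2} + \mu_{y}^{2} + c_{1})(\sigma_{x}^{2} + \sigma_{y}^{2} + c_{2})},$$
  with $\mu_{x}$, $\mu_{y}$ being the sample means of the pixels of the $\mathbf{x}$, $\mathbf{y}$ patches, e.g., $\mu_{x} = \frac{1}{P^{2}}\sum_{i=1}^{P^{2}} x_{i}$; $\sigma_{x}^{2}$, $\sigma_{y}^{2}$ their pixel variances, e.g., $\sigma_{x}^{2} = \frac{1}{P^{2}}\sum_{i=1}^{P^{2}} (x_{i} - \mu_{x})^{2}$; and $\sigma_{xy}$ their covariance, i.e., $\sigma_{xy} = \frac{1}{P^{2}}\sum_{i=1}^{P^{2}} (x_{i} - \mu_{x})(y_{i} - \mu_{y})$; finally, $c_{1}$ and $c_{2}$ are two constants that stabilize the division when the denominator is close to zero. The final SSIM value is then computed as the mean of the SSIM over all patches. Its maximum value is 1, indicating perfect similarity; in practice, values typically lie between 0 and 1, although negative values are possible for anti-correlated patches;

- Multi-Scale Structural Similarity Index Measure (MS-SSIM) [74] is an extension of the SSIM that computes the SSIM on progressively downsampled versions of the two images $\mathbf{X}$ and $\mathbf{Y}$, each downsampling operation defining a “scale”. The final MS-SSIM value is the average of the SSIM values computed at the different scales, i.e.,
  $$\mathrm{MS\text{-}SSIM}(\mathbf{X}, \mathbf{Y}) = \frac{1}{S}\sum_{s=1}^{S} \mathrm{SSIM}(\mathbf{X}_{s}, \mathbf{Y}_{s}),$$
  with $\mathbf{X}_{s}$, $\mathbf{Y}_{s}$ indicating the images at scale $s$, and $S$ the total number of scales considered. As for the SSIM, a value of 1 indicates perfect similarity;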
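The patch-based SSIM and the averaged MS-SSIM described above can be sketched in plain Python. This is a minimal, unoptimized sketch under assumptions that are not taken from the paper: images are lists of rows of floats, patches are non-overlapping `p` × `p` blocks, statistics use the $1/P^{2}$ normalization, $c_1 = (0.01L)^2$ and $c_2 = (0.03L)^2$ with $L$ the data range (the defaults proposed with the original SSIM), and each new scale is obtained by 2 × 2 average pooling. Note that Wang et al. [74] define MS-SSIM as a weighted product of per-scale terms; the sketch follows the plain average given in the text.

```python
# Minimal sketch: images are lists of rows of floats in [0, data_range].
# Assumed (not from the paper): non-overlapping p x p patches, 1/n statistics,
# c1 = (0.01 L)^2, c2 = (0.03 L)^2, and 2 x 2 average pooling between scales.


def _patches(img, p):
    """Return flattened non-overlapping p x p patches; incomplete border patches are dropped."""
    rows, cols = len(img), len(img[0])
    return [
        [img[r + i][c + j] for i in range(p) for j in range(p)]
        for r in range(0, rows - p + 1, p)
        for c in range(0, cols - p + 1, p)
    ]


def ssim_patch(xp, yp, data_range=1.0):
    """SSIM between two flattened patches of equal length."""
    n = len(xp)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = sum(xp) / n, sum(yp) / n
    vx = sum((a - mx) ** 2 for a in xp) / n
    vy = sum((b - my) ** 2 for b in yp) / n
    cxy = sum((a - mx) * (b - my) for a, b in zip(xp, yp)) / n
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def ssim(img_x, img_y, p=8, data_range=1.0):
    """Mean SSIM over all co-located non-overlapping patches."""
    scores = [ssim_patch(xp, yp, data_range) for xp, yp in zip(_patches(img_x, p), _patches(img_y, p))]
    return sum(scores) / len(scores)


def downsample(img):
    """Halve the resolution with 2 x 2 average pooling (assumed downsampling operator)."""
    return [
        [(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
         for c in range(0, len(img[0]) - 1, 2)]
        for r in range(0, len(img) - 1, 2)
    ]


def ms_ssim(img_x, img_y, scales=3, p=8, data_range=1.0):
    """Plain average of the SSIM computed at `scales` dyadic scales."""
    values = []
    for _ in range(scales):
        values.append(ssim(img_x, img_y, p, data_range))
        img_x, img_y = downsample(img_x), downsample(img_y)
    return sum(values) / len(values)
```

With `scales=3` and `p=8`, both images must be at least 32 × 32 pixels so that the coarsest scale still contains one full patch; these values are illustrative, not the settings used in the paper.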

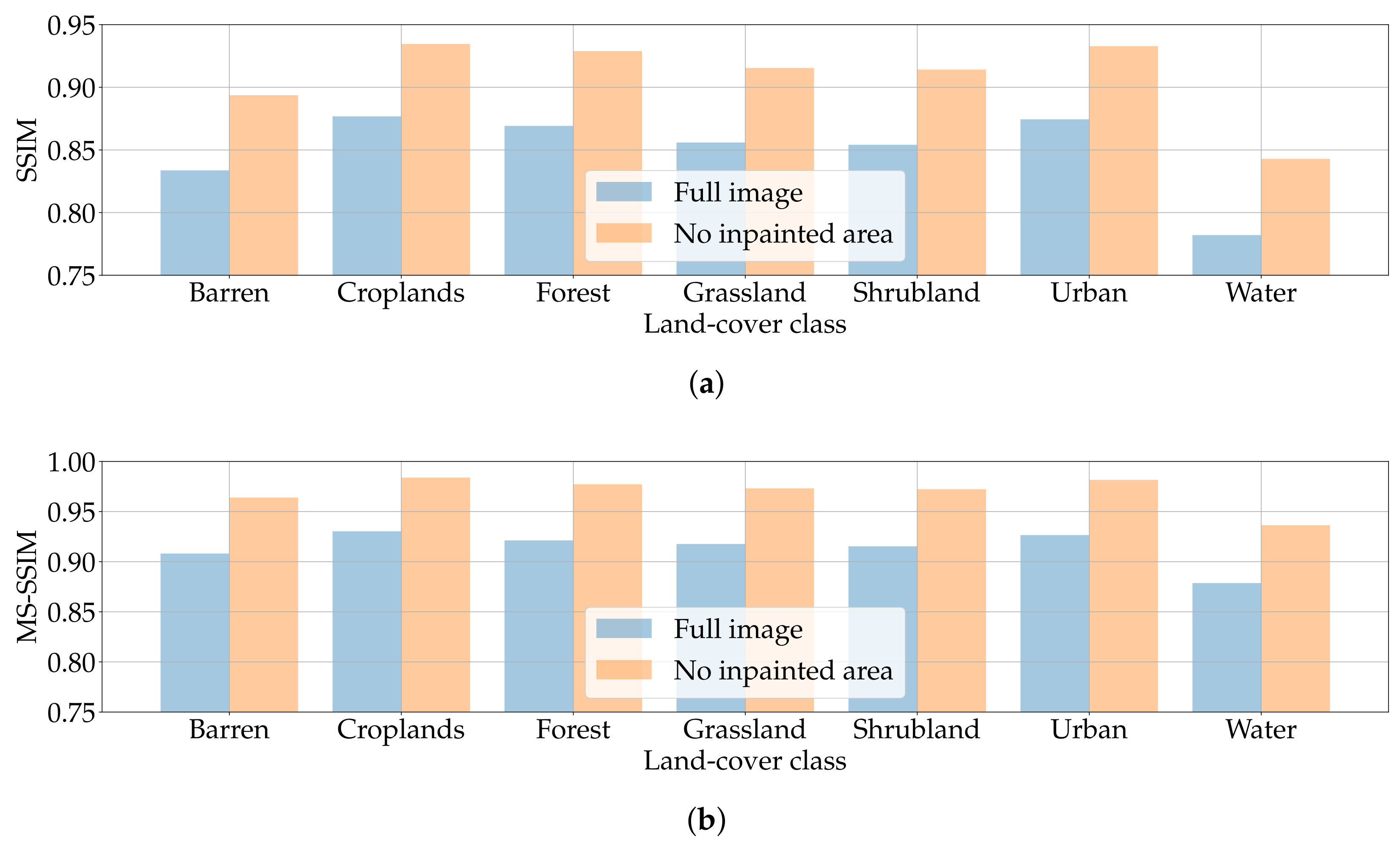

- Equivalent Number of Looks (ENL) [75] is a statistical measure that quantifies the level of speckle noise in a SAR image. Given a sample $\mathbf{X}$, it is defined as
  $$\mathrm{ENL}(\mathbf{X}) = \frac{\mu_{X}^{2}}{\sigma_{X}^{2}},$$
  where $\mu_{X}$ is the pixel mean of $\mathbf{X}$ and $\sigma_{X}^{2}$ its pixel variance. The higher the ENL value, the lower the level of speckle affecting the SAR image;
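A direct transcription of the ENL definition above, as a minimal sketch that assumes the sample is given as a flat list of amplitude values and uses the population variance. Some works rescale the amplitude-domain ratio (by $4/\pi - 1$ for single-look data) so that it matches the number of looks defined on intensity data; the sketch uses the unscaled ratio, as in the definition.

```python
def enl(pixels):
    """Equivalent Number of Looks: squared pixel mean over pixel variance (population variance)."""
    n = len(pixels)
    mean = sum(pixels) / n
    var = sum((v - mean) ** 2 for v in pixels) / n
    if var == 0:
        # A constant sample has no measurable fluctuation, so the ratio is undefined.
        raise ValueError("ENL is undefined for a constant sample")
    return mean ** 2 / var
```

For instance, `enl([1.0, 3.0])` is 4.0, while the less fluctuating `enl([1.9, 2.1])` is about 400, consistent with a higher ENL meaning weaker speckle.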

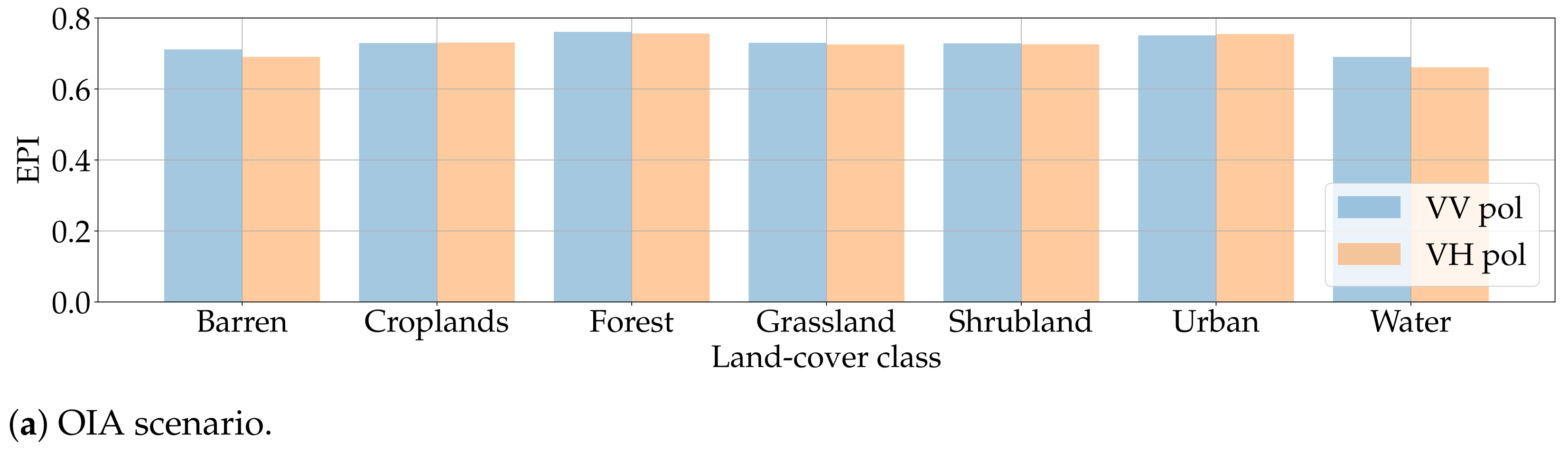

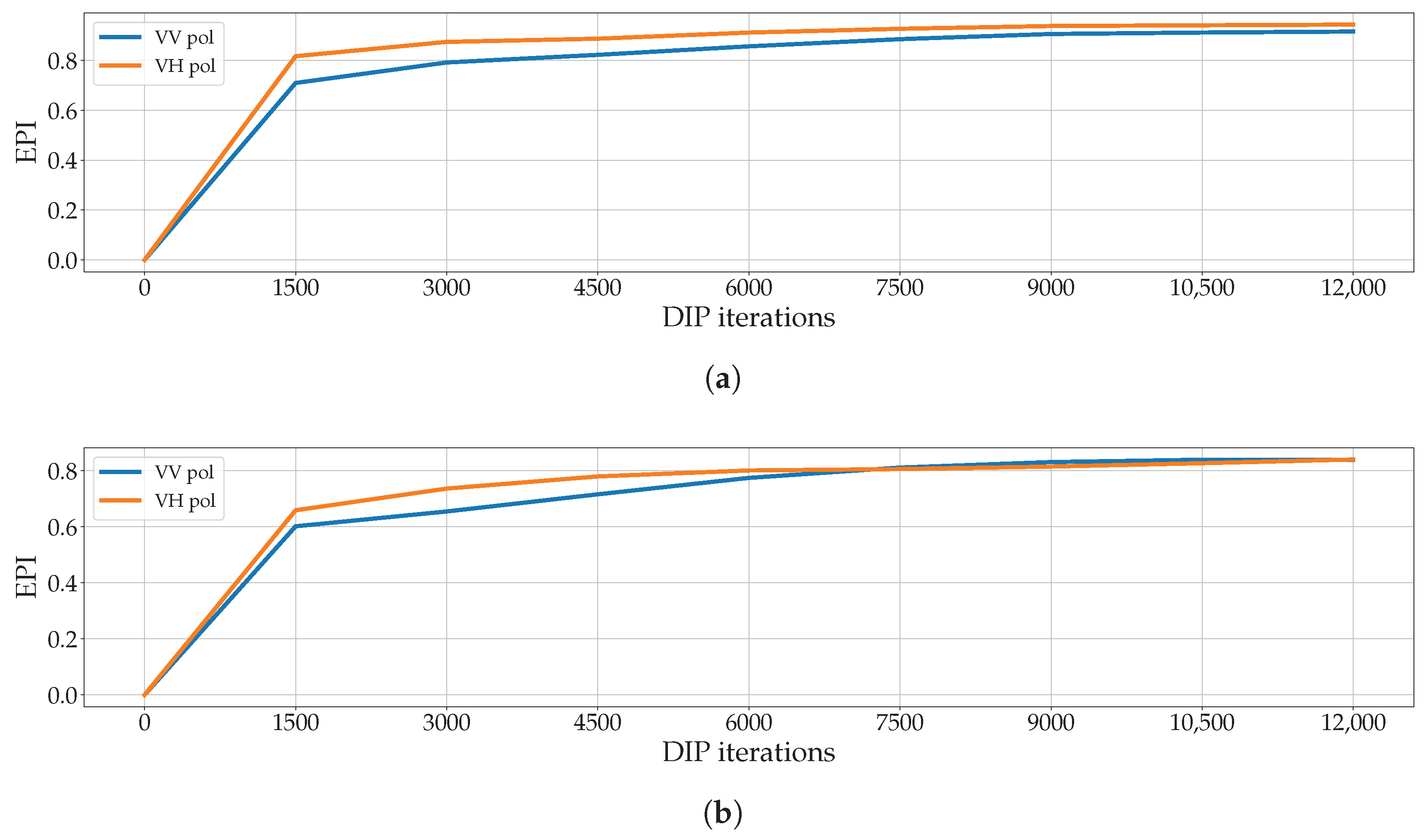

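- Edge Preservation Index (EPI) is a measure of image quality used in the SAR field to quantify how well a denoising algorithm preserves edges when processing a sample [76]. Given a noisy, single-polarization, amplitude SAR image $\mathbf{Y}$ of size $M \times N$ pixels and a corresponding denoised image $\hat{\mathbf{Y}}$, the index is defined as
  $$\mathrm{EPI} = \frac{\sum_{m=1}^{M}\sum_{n=1}^{N} \left|\nabla \hat{\mathbf{Y}}(m, n)\right|}{\sum_{m=1}^{M}\sum_{n=1}^{N} \left|\nabla \mathbf{Y}(m, n)\right|},$$
  where $|\nabla \cdot|$ is the absolute value of the gradient operator. The EPI values range from 0 to 1, where 1 indicates perfect edge preservation.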
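The EPI needs a discrete version of $|\nabla \cdot|$. A common choice, assumed here rather than taken from the paper, sums the absolute differences between each pixel and its right and lower neighbors; the ratio then follows the definition above. Images are lists of rows of floats.

```python
def _abs_gradient_sum(img):
    """Sum of absolute horizontal and vertical first differences (assumed discrete |grad|)."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                total += abs(img[r + 1][c] - img[r][c])  # vertical neighbor
            if c + 1 < cols:
                total += abs(img[r][c + 1] - img[r][c])  # horizontal neighbor
    return total


def epi(noisy, denoised):
    """Edge Preservation Index: gradient energy of the denoised image over that of the noisy one."""
    reference = _abs_gradient_sum(noisy)
    if reference == 0:
        raise ValueError("EPI is undefined when the noisy image has no gradients")
    return _abs_gradient_sum(denoised) / reference
```

A denoiser that halves every gradient yields an EPI of 0.5, and one that flattens the image completely yields 0.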

4. Results and Discussion

- DIP hyperparameter search. We evaluate whether the same combination of hyperparameters can produce convincing results regardless of the land-cover content of the inpainted sample.

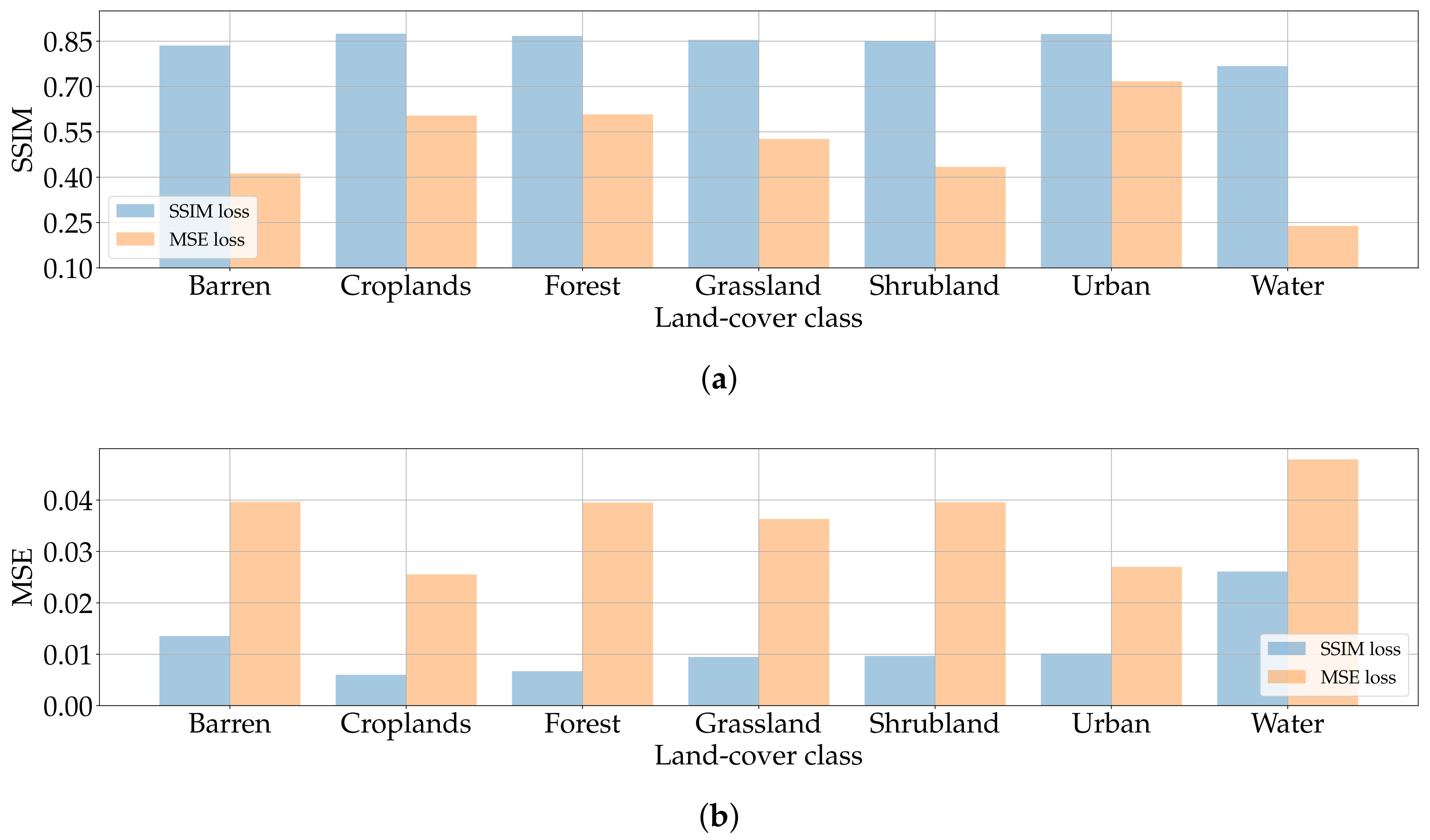

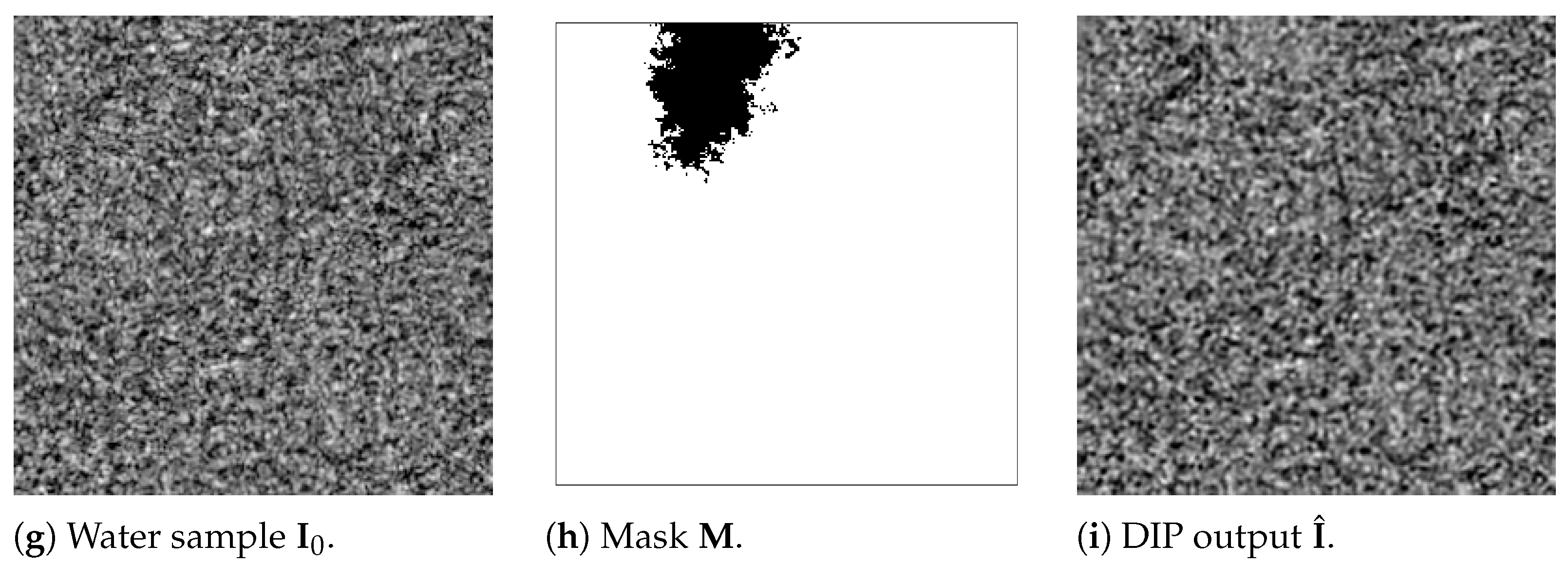

- Generation process quality evaluation from a “signal processing” perspective. We estimate the quality of the generated samples by computing metrics typical of full-reference image quality assessment, i.e., SSIM and MS-SSIM.

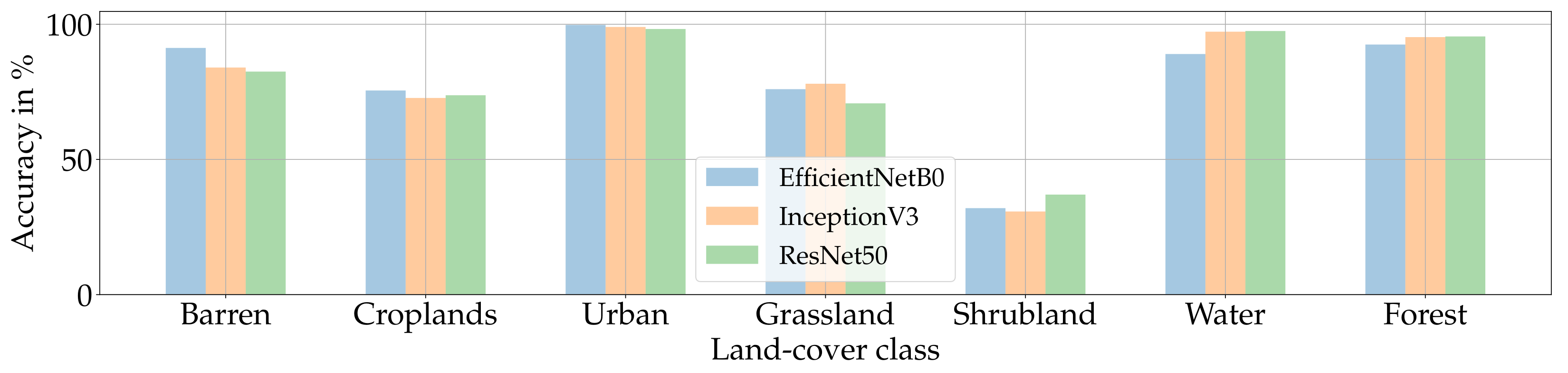

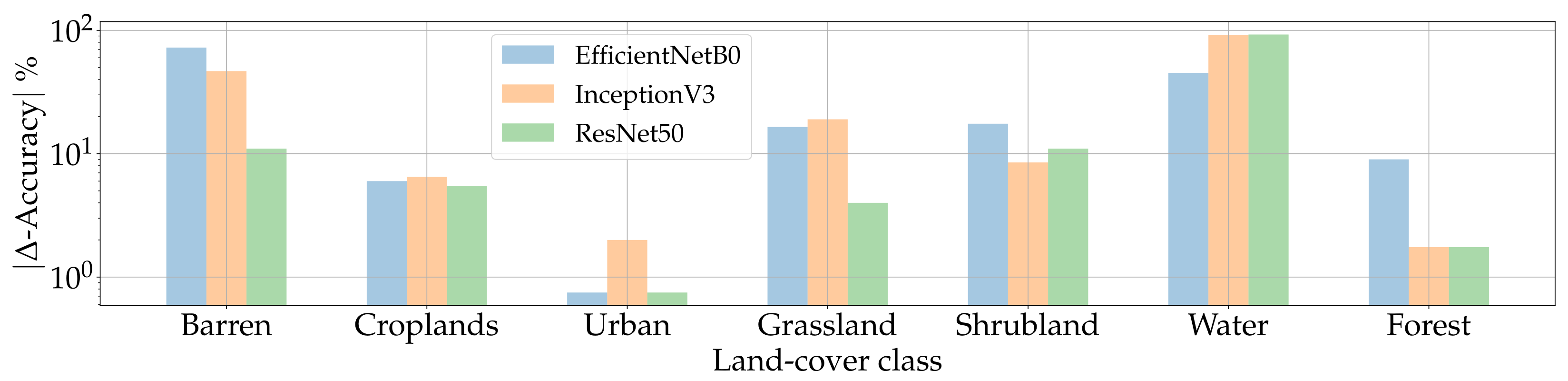

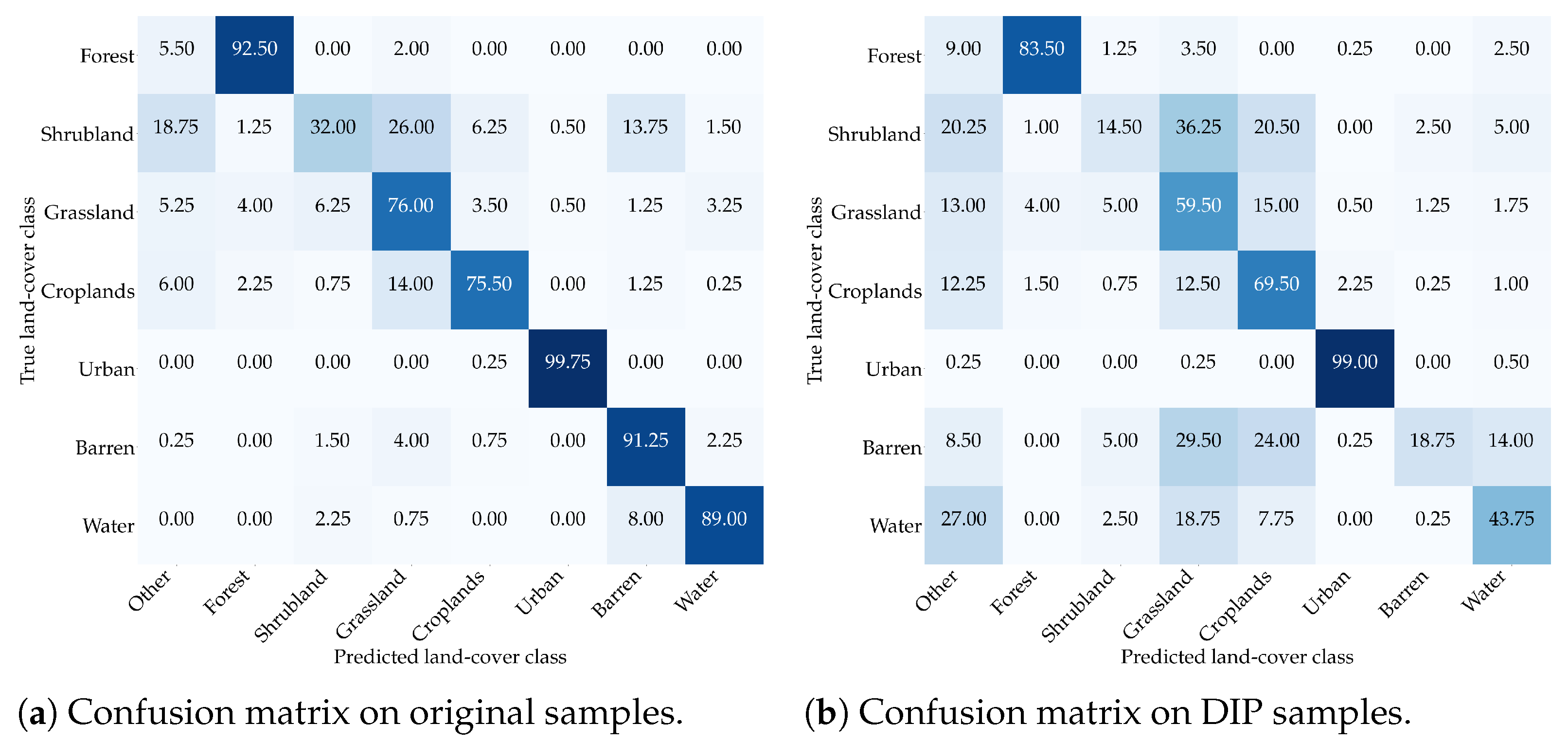

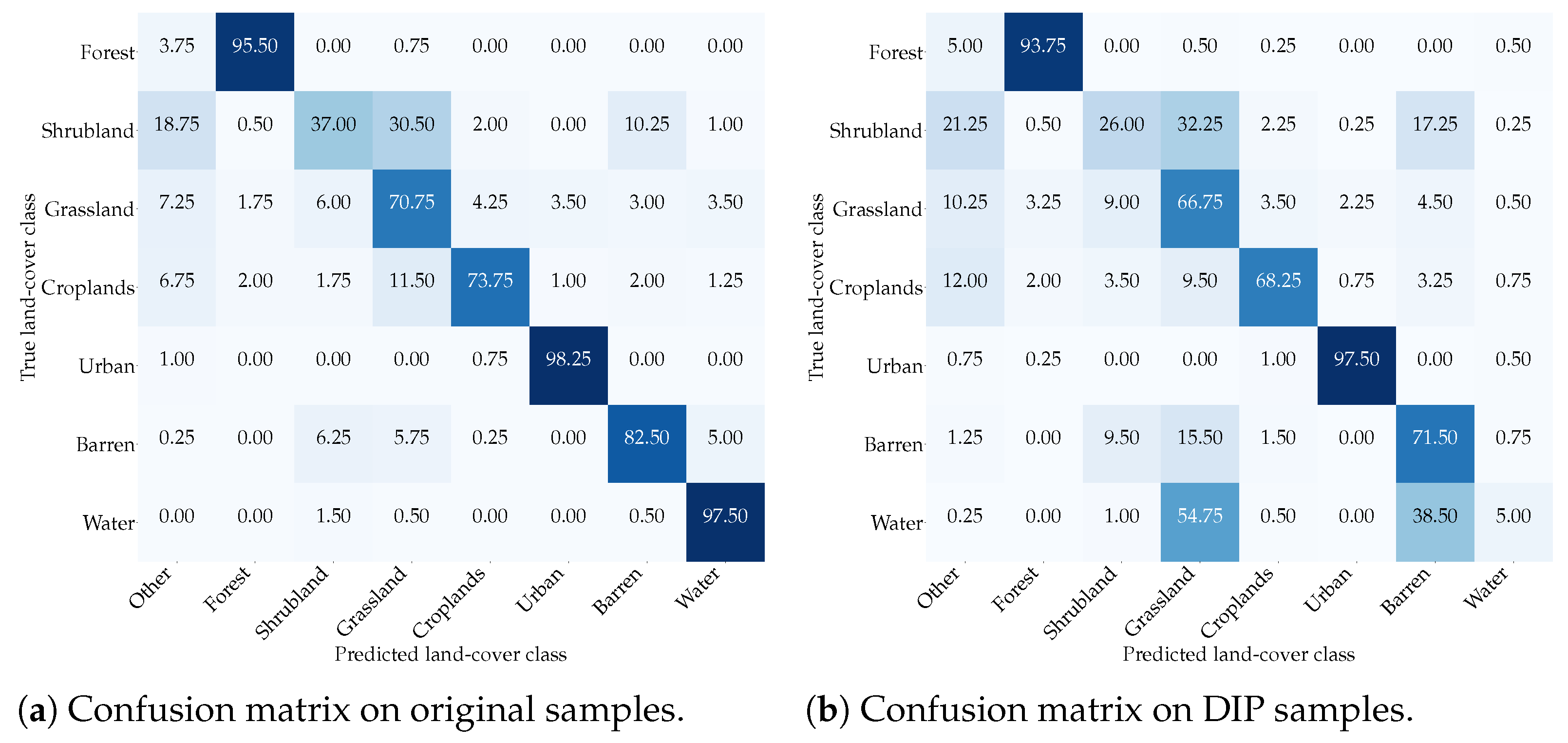

- Generation process “data usability” evaluation. We analyze whether the generated samples can serve as input to further applications. To do so, we process them with deep learning tools: in particular, we employ CNNs specifically trained for semantic tasks such as land-cover classification, to check whether the generated samples preserve the semantic information related to the original land type and can be “used” in place of the original samples without major performance losses.
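The comparison described above can be sketched as follows, assuming that per-sample class predictions from a land-cover CNN are already available for both the original and the generated samples. The function names, the accuracy-drop criterion, and the example class labels are illustrative assumptions, not the paper's evaluation protocol.

```python
def accuracy(labels, predictions):
    """Fraction of positions where the two sequences agree."""
    if not labels or len(labels) != len(predictions):
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(a == b for a, b in zip(labels, predictions)) / len(labels)


def usability_report(labels, preds_original, preds_generated):
    """Compare classifier behavior on original versus DIP-generated samples."""
    acc_original = accuracy(labels, preds_original)
    acc_generated = accuracy(labels, preds_generated)
    return {
        "acc_original": acc_original,
        "acc_generated": acc_generated,
        "accuracy_drop": acc_original - acc_generated,
        # How often a sample and its generated version receive the same label.
        "agreement": accuracy(preds_original, preds_generated),
    }


report = usability_report(
    labels=["forest", "urban", "water", "forest"],
    preds_original=["forest", "urban", "water", "urban"],
    preds_generated=["forest", "forest", "water", "urban"],
)
```

Here `report` gives an accuracy of 0.75 on the original samples, 0.5 on the generated ones, and a prediction agreement of 0.75; a small drop would suggest that the generated samples remain usable.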

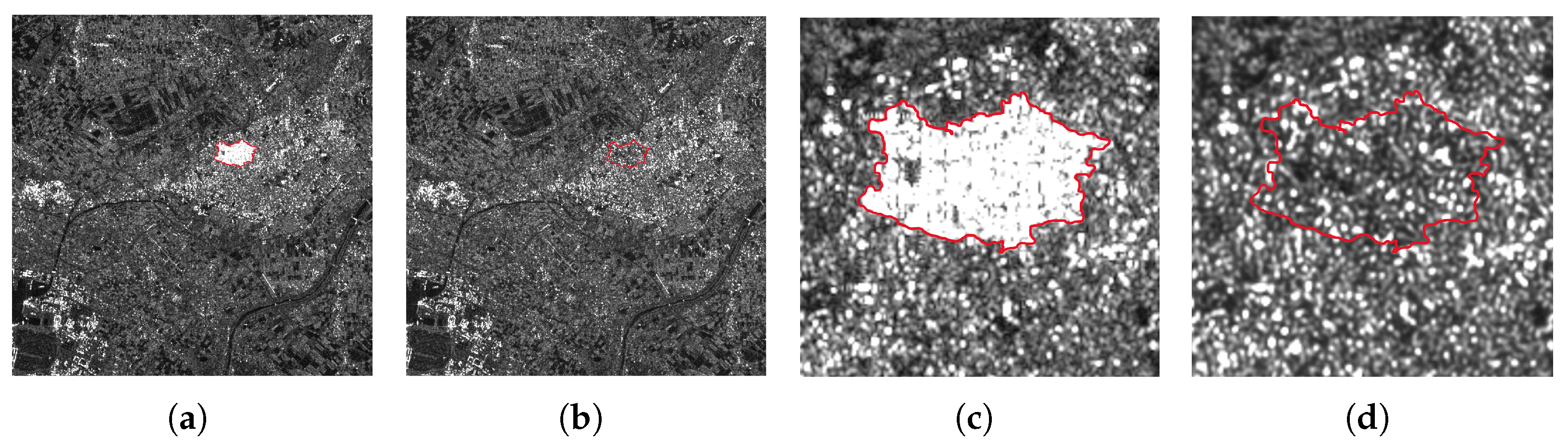

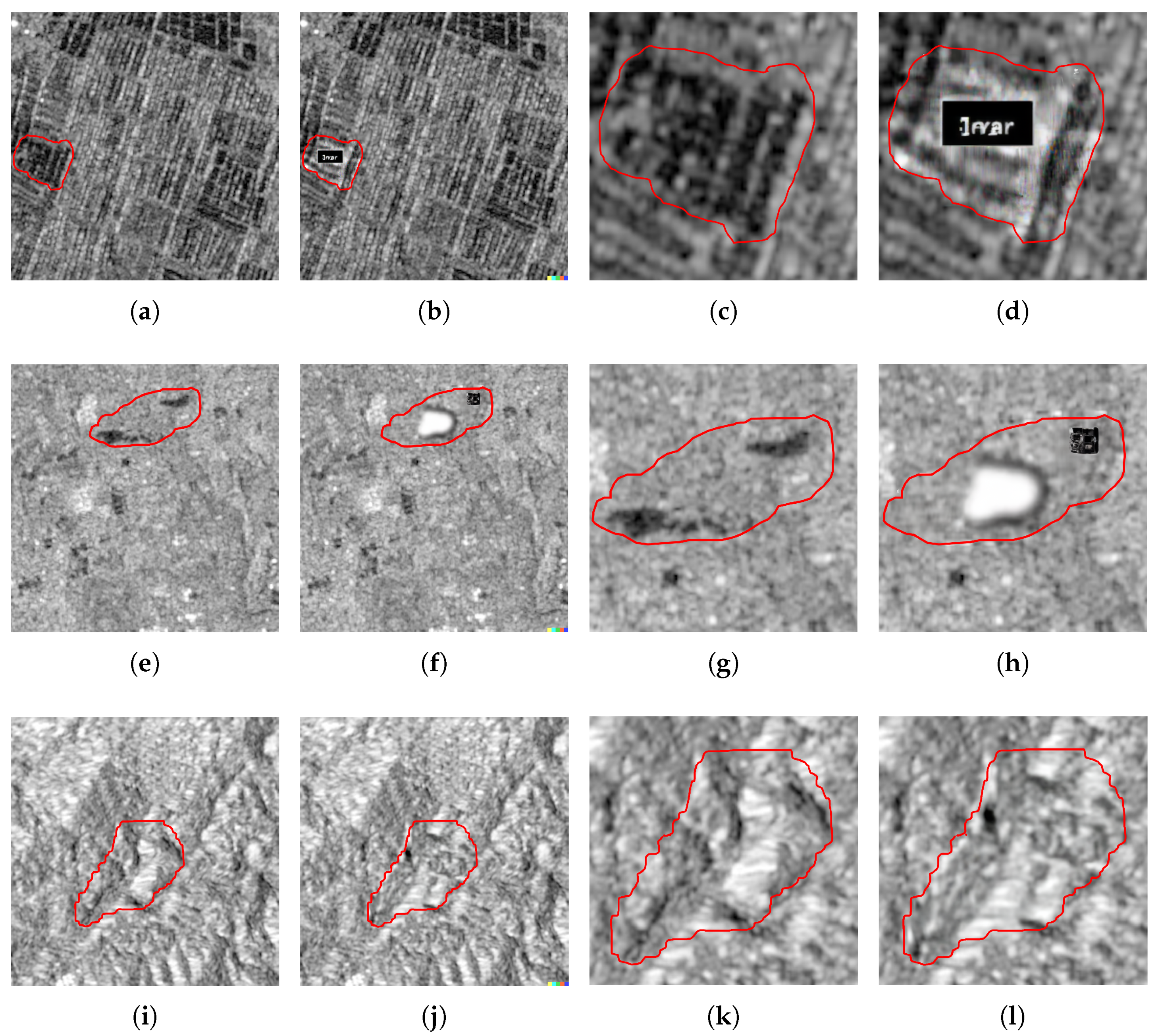

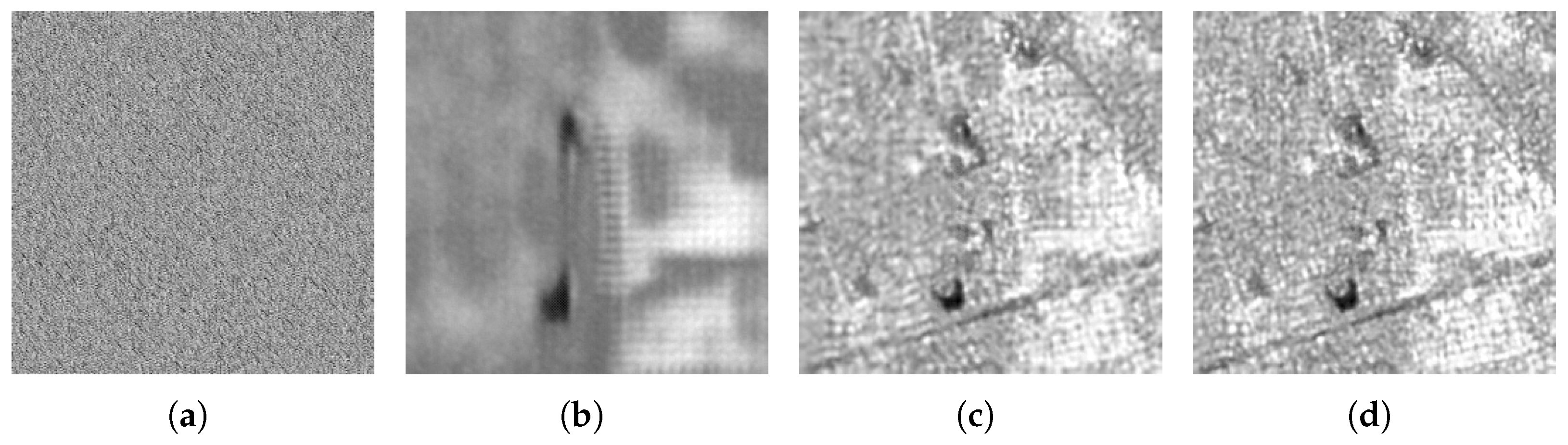

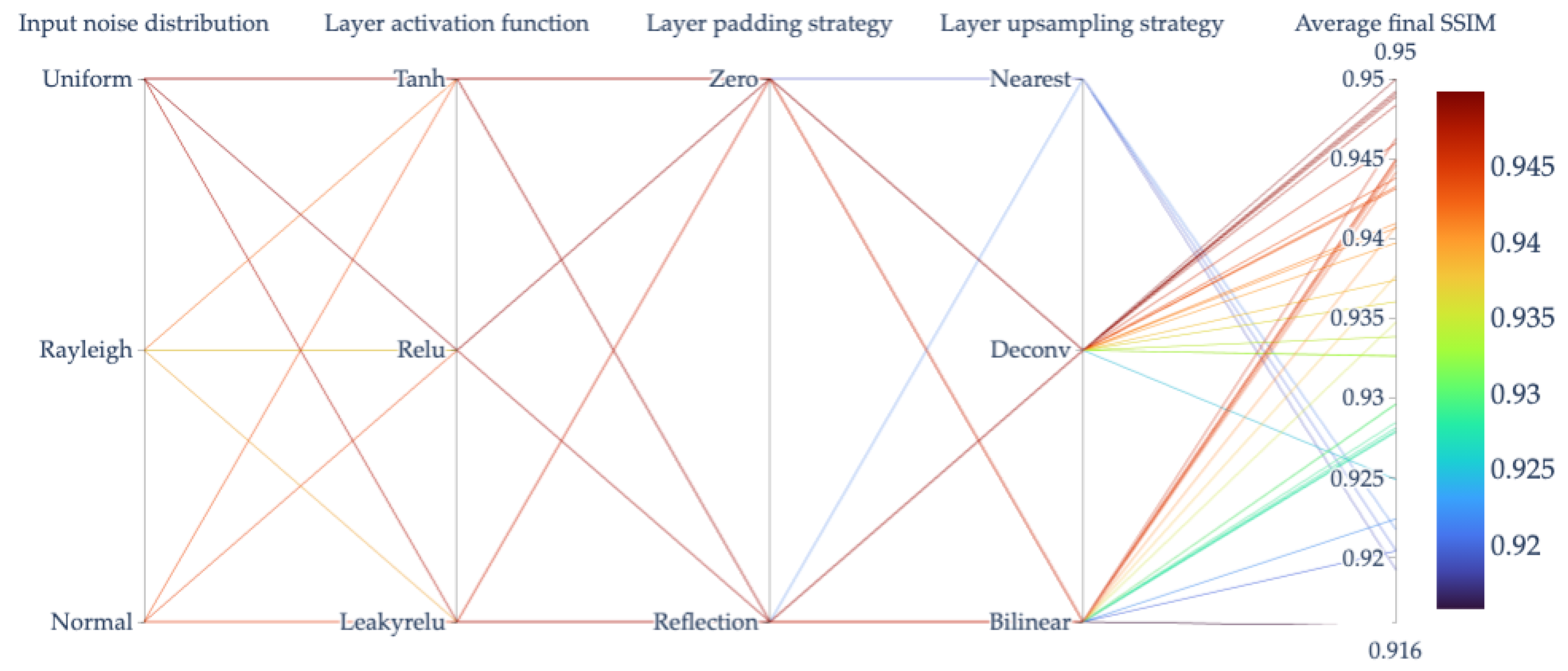

4.1. DIP Hyperparameter Search and Dataset Creation

- reflection as padding strategy;

- a deconvolution for upsampling;

- the SSIM as loss function;

- a uniform input noise distribution.
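The candidate values listed in the hyperparameter table define a discrete search space of 3 × 2 × 3 × 2 × 3 = 108 configurations. The sketch below enumerates that space and keeps the configurations compatible with the choices listed above. It makes no assumption about how the space was actually explored, and the dictionary keys are illustrative names. The activation function is not among the listed choices, so it is left free.

```python
from itertools import product

# Candidate values copied from the hyperparameter table.
SEARCH_SPACE = {
    "activation": ["Tanh", "ReLU", "LeakyReLU"],
    "padding": ["Reflection", "Zero"],
    "upsampling": ["Bilinear", "Nearest", "Deconvolution"],
    "loss": ["MSE", "SSIM"],
    "input_noise": ["Gaussian", "Rayleigh", "Uniform"],
}


def all_configurations(space):
    """Cartesian product of all hyperparameter values, one dict per configuration."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


configs = all_configurations(SEARCH_SPACE)

# Choices listed in Section 4.1; the activation function is not specified there.
SELECTED = {"padding": "Reflection", "upsampling": "Deconvolution", "loss": "SSIM", "input_noise": "Uniform"}
matching = [c for c in configs if all(c[k] == v for k, v in SELECTED.items())]
```

`len(configs)` is 108, and `matching` contains the 3 configurations that differ only in their activation function.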

4.2. Quality Evaluation from Signal Processing Perspective

- No Inpainted Area (NIA): in this case, we neglect the pixel region inpainted by the DIP, i.e., the region defined in (7). This is useful to check that the region that should not have been touched by the tampering mask actually remains unchanged, indicating that the DIP is converging correctly;

- Full Image (FI): in this case, we consider the complete DIP-generated images, inpainting region included. This is useful to check that the content of the inpainted region is visually coherent with the rest of the picture.
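Patch-based metrics such as SSIM need a rule for excluding the inpainted region. One simple option, assumed here and not necessarily the authors' choice, keeps only the patches that contain no inpainted pixel for NIA and every patch for FI. The helper name `select_patch_pairs` and the mask convention (`True` inside the inpainted region) are hypothetical.

```python
def select_patch_pairs(img_x, img_y, mask, p, region):
    """Return (patch_x, patch_y) pairs of flattened non-overlapping p x p patches.

    mask[r][c] is True inside the inpainted region (hypothetical convention).
    region "FI" keeps every patch; "NIA" keeps only patches with no inpainted pixel.
    """
    if region not in ("FI", "NIA"):
        raise ValueError("region must be 'FI' or 'NIA'")
    rows, cols = len(img_x), len(img_x[0])
    pairs = []
    for r in range(0, rows - p + 1, p):
        for c in range(0, cols - p + 1, p):
            coords = [(r + i, c + j) for i in range(p) for j in range(p)]
            if region == "NIA" and any(mask[y][x] for y, x in coords):
                continue  # the patch touches the inpainted region
            pairs.append(([img_x[y][x] for y, x in coords], [img_y[y][x] for y, x in coords]))
    return pairs
```

The returned pairs can be passed to any patch-level metric and averaged to obtain an NIA or FI score.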

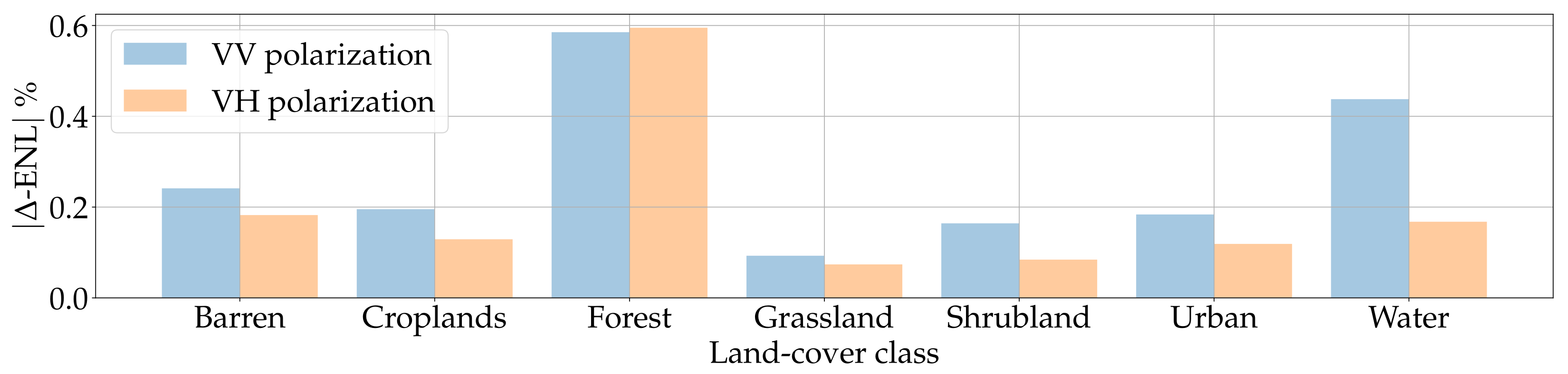

4.3. Data Compatibility Evaluation

- Only Inpainted Area (OIA): in this case, we focus only on the values inpainted by the DIP, i.e., only on the pixels inside the inpainting region;

- FI: this scenario resembles the FI case of the previous experiment, i.e., we consider the complete DIP-generated images.

- we divide the DIP inpainted samples into non-overlapping patches with a resolution of pixels;

- we compute the average ENL over the N patches of each sample.
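The patch-averaged ENL procedure above can be sketched as follows. The sketch assumes square `p` × `p` patches, a boolean mask that is `True` on inpainted pixels, and, for OIA, that only patches lying entirely inside the inpainted region are retained; constant patches are skipped because their ENL is undefined. These choices are illustrative assumptions.

```python
def enl(pixels):
    """Squared pixel mean over pixel variance (population variance)."""
    n = len(pixels)
    mean = sum(pixels) / n
    var = sum((v - mean) ** 2 for v in pixels) / n
    return mean ** 2 / var


def mean_patch_enl(img, p, mask=None):
    """Average ENL over non-overlapping p x p patches.

    mask=None reproduces the FI scenario; with a mask, only patches fully
    inside the inpainted region are used (assumed OIA rule).
    """
    rows, cols = len(img), len(img[0])
    values = []
    for r in range(0, rows - p + 1, p):
        for c in range(0, cols - p + 1, p):
            coords = [(r + i, c + j) for i in range(p) for j in range(p)]
            if mask is not None and not all(mask[y][x] for y, x in coords):
                continue  # OIA: skip patches that are not entirely inpainted
            pixels = [img[y][x] for y, x in coords]
            if max(pixels) > min(pixels):  # skip constant patches (zero variance)
                values.append(enl(pixels))
    if not values:
        raise ValueError("no valid patches")
    return sum(values) / len(values)
```

On a 4 × 4 image whose four 2 × 2 patches have ENL values 4, 8, 4, and 4, the FI average is 5.0, and restricting the mask to the patch with ENL 8 gives an OIA value of 8.0.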

4.4. Data Usability Evaluation

5. Conclusions

- Image quality assessment, i.e., SSIM and MS-SSIM;

- SAR image de-speckling, i.e., ENL and EPI;

- Convolutional Neural Network (CNN) semantic metrics.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Oliver, C.; Quegan, S. Understanding Synthetic Aperture Radar Images; Scitech Publishing: Raleigh, NC, USA, 2004.

- Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A tutorial on synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.

- Tsokas, A.; Rysz, M.; Pardalos, P.M.; Dipple, K. SAR data applications in earth observation: An overview. Expert Syst. Appl. 2022, 205, 117342.

- Wang, Z.; Li, Y.; Yu, F.; Yu, W.; Jiang, Z.; Ding, Y. Object detection capability evaluation for SAR image. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016.

- Chen, L.; Tan, S.; Pan, Z.; Xing, J.; Yuan, Z.; Xing, X.; Zhang, P. A New Framework for Automatic Airports Extraction from SAR Images Using Multi-Level Dual Attention Mechanism. Remote Sens. 2020, 12, 560.

- Chang, Y.L.; Anagaw, A.; Chang, L.; Wang, Y.C.; Hsiao, C.Y.; Lee, W.H. Ship Detection Based on YOLOv2 for SAR Imagery. Remote Sens. 2019, 11, 786.

- Hummel, R. Model-based ATR using synthetic aperture radar. In Proceedings of the IEEE International Radar, Alexandria, VA, USA, 7–12 May 2000.

- Torres, R.; Snoeij, P.; Geudtner, D.; Bibby, D.; Davidson, M.; Attema, E.; Potin, P.; Rommen, B.; Floury, N.; Brown, M.; et al. GMES Sentinel-1 mission. Remote Sens. Environ. 2012, 120, 9–24.

- Capella Space. Capella Space Open Data Gallery, March 2023. Available online: https://www.capellaspace.com/gallery/ (accessed on 22 March 2023).

- ICEYE. ICEYE SAR Datasets, March 2023. Available online: https://www.iceye.com/downloads/datasets (accessed on 22 March 2023).

- Meaker, M. High Above Ukraine, Satellites Get Embroiled in the War, March 2022. Available online: https://www.wired.co.uk/article/ukraine-russia-satellites (accessed on 22 March 2023).

- Walker, K. Helping Ukraine, March 2022. Available online: https://blog.google/inside-google/company-announcements/helping-ukraine/ (accessed on 22 March 2023).

- Lattari, F.; Gonzalez Leon, B.; Asaro, F.; Rucci, A.; Prati, C.; Matteucci, M. Deep Learning for SAR Image Despeckling. Remote Sens. 2019, 11, 1532.

- Shaban, M.; Salim, R.; Abu Khalifeh, H.; Khelifi, A.; Shalaby, A.; El-Mashad, S.; Mahmoud, A.; Ghazal, M.; El-Baz, A. A Deep-Learning Framework for the Detection of Oil Spills from SAR Data. Sensors 2021, 21, 2351.

- Ronci, F.; Avolio, C.; di Donna, M.; Zavagli, M.; Piccialli, V.; Costantini, M. Oil Spill Detection from SAR Images by Deep Learning. In Proceedings of the IGARSS 2020–2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020.

- Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change Detection in Synthetic Aperture Radar Images Based on Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138.

- Li, Y.; Peng, C.; Chen, Y.; Jiao, L.; Zhou, L.; Shang, R. A Deep Learning Method for Change Detection in Synthetic Aperture Radar Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5751–5763.

- Geng, J.; Ma, X.; Zhou, X.; Wang, H. Saliency-Guided Deep Neural Networks for SAR Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7365–7377.

- Guo, J.; Lei, B.; Ding, C.; Zhang, Y. Synthetic Aperture Radar Image Synthesis by Using Generative Adversarial Nets. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1111–1115.

- Baier, G.; Deschemps, A.; Schmitt, M.; Yokoya, N. Synthesizing Optical and SAR Imagery From Land Cover Maps and Auxiliary Raster Data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- He, W.; Yokoya, N. Multi-Temporal Sentinel-1 and -2 Data Fusion for Optical Image Simulation. ISPRS Int. J. Geo Inf. 2018, 7, 389.

- Merkle, N.; Auer, S.; Müller, R.; Reinartz, P. Exploring the Potential of Conditional Adversarial Networks for Optical and SAR Image Matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1811–1820.

- Liu, L.; Lei, B. Can SAR Images and Optical Images Transfer with Each Other? In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 7019–7022.

- Grohnfeldt, C.; Schmitt, M.; Zhu, X. A conditional generative adversarial network to fuse SAR and multispectral optical data for cloud removal from Sentinel-2 images. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1726–1729.

- Ebel, P.; Schmitt, M.; Zhu, X.X. Cloud Removal in Unpaired Sentinel-2 Imagery Using Cycle-Consistent GAN and SAR-Optical Data Fusion. In Proceedings of the IGARSS 2020–2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2065–2068.

- Gao, J.; Yuan, Q.; Li, J.; Zhang, H.; Su, X. Cloud removal with fusion of high resolution optical and SAR images using generative adversarial networks. Remote Sens. 2020, 12, 191.

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep image prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9446–9454.

- JAXA. ALOS-2 Overview. Available online: https://www.eorc.jaxa.jp/ALOS-2/en/about/overview.htm (accessed on 25 May 2023).

- JPL. NISAR Quick Facts. Available online: https://nisar.jpl.nasa.gov/mission/quick-facts (accessed on 25 May 2023).

- CEOS. CEOS Interoperability Handbook. Available online: https://ceos.org/document_management/Working_Groups/WGISS/Documents/WGISS_CEOS-Interoperability-Handbook_Feb2008.pdf (accessed on 25 May 2023).

- OGC. OGC GeoTiff Standard. Available online: https://www.earthdata.nasa.gov/s3fs-public/imported/19-008r4.pdf (accessed on 25 May 2023).

- The HDF Group. HDF5 User Guide. Available online: https://docs.hdfgroup.org/hdf5/develop/_u_g.html (accessed on 25 May 2023).

- Ramesh, A.; Pavlov, M.; Goh, G.; Gray, S.; Voss, C.; Radford, A.; Chen, M.; Sutskever, I. Zero-Shot Text-to-Image Generation. In Proceedings of the 38th International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis With Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022.

- Rasti, B.; Chang, Y.; Dalsasso, E.; Denis, L.; Ghamisi, P. Image Restoration for Remote Sensing: Overview and toolbox. IEEE Geosci. Remote Sens. Mag. 2022, 10, 201–230.

- Ogawa, T.; Haseyama, M. Image inpainting based on sparse representations with a perceptual metric. EURASIP J. Adv. Signal Process. 2013, 2013, 179.

- Tikhonov, A.N. On the solution of ill-posed problems and the method of regularization. In Proceedings of the Russian Academy of Sciences; Academy of Sciences of the Soviet Union: Moscow, Russia, 1963; Volume 151, pp. 501–504.

- Elad, M. Sparse and Redundant Representations; Springer: New York, NY, USA, 2010.

- Getreuer, P. Total Variation Inpainting using Split Bregman. Image Process. Line 2012, 2, 147–157.

- Guillemot, C.; Le Meur, O. Image Inpainting: Overview and Recent Advances. IEEE Signal Process. Mag. 2014, 31, 127–144.

- Bertalmio, M.; Sapiro, G.; Caselles, V.; Ballester, C. Image Inpainting. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, 23–28 July 2000.

- Efros, A.; Leung, T. Texture synthesis by non-parametric sampling. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kobe, Japan, 24–28 October 1999.

- Criminisi, A.; Perez, P.; Toyama, K. Object removal by exemplar-based inpainting. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Madison, WI, USA, 16–22 June 2003.

- Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T.S. Generative Image Inpainting with Contextual Attention. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Isola, P.; Zhu, J.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.

- Lahiri, A.; Jain, A.K.; Agrawal, S.; Mitra, P.; Biswas, P.K. Prior Guided GAN Based Semantic Inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

- Liu, H.; Wan, Z.; Huang, W.; Song, Y.; Han, X.; Liao, J. PD-GAN: Probabilistic Diverse GAN for Image Inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021.

- Wang, W.; Niu, L.; Zhang, J.; Yang, X.; Zhang, L. Dual-Path Image Inpainting With Auxiliary GAN Inversion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Qin, Z.; Zeng, Q.; Zong, Y.; Xu, F. Image inpainting based on deep learning: A review. Displays 2021, 69, 102028.

- Cao, C.; Dong, Q.; Fu, Y. ZITS++: Image Inpainting by Improving the Incremental Transformer on Structural Priors. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 1–17.

- Mandelli, S.; Bondi, L.; Lameri, S.; Lipari, V.; Bestagini, P.; Tubaro, S. Inpainting-based camera anonymization. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 1522–1526.

- Sun, J.; Xue, F.; Li, J.; Zhu, L.; Zhang, H.; Zhang, J. TSINIT: A Two-Stage Inpainting Network for Incomplete Text. IEEE Trans. Multimed. 2022, 1–11.

- He, S.; Peng, X.; Yuan, Z.; Du, W. Contour-context joint blind image inpainting network for molecular sieve particle size measurement of SEM images. IEEE Trans. Instrum. Meas. 2023, 72, 5019709.

- Sun, H.; Ma, J.; Guo, Q.; Zou, Q.; Song, S.; Lin, Y.; Yu, H. Coarse-to-fine Task-driven Inpainting for Geoscience Images. IEEE Trans. Circuits Syst. Video Technol. 2023, 1.

- Kingma, D.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2013, arXiv:1312.6114.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Neural Information Processing Systems Conference, Montréal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Picetti, F.; Mandelli, S.; Bestagini, P.; Lipari, V.; Tubaro, S. DIPPAS: A deep image prior PRNU anonymization scheme. EURASIP J. Inf. Secur. 2022, 2022, 2.

- Gong, K.; Catana, C.; Qi, J.; Li, Q. PET Image Reconstruction Using Deep Image Prior. IEEE Trans. Med. Imaging 2019, 38, 1655–1665.

- Kong, F.; Picetti, F.; Bestagini, P.; Lipari, V.; Tang, X.; Tubaro, S. Deep prior-based unsupervised reconstruction of irregularly sampled seismic data. IEEE Geosci. Remote Sens. Lett. 2020, 19, 7501305.

- Kong, F.; Picetti, F.; Lipari, V.; Bestagini, P.; Tubaro, S. Deep prior-based seismic data interpolation via multi-res U-net. In Proceedings of the SEG International Exposition and Annual Meeting, Online, 11–16 October 2020.

- Pezzoli, M.; Perini, D.; Bernardini, A.; Borra, F.; Antonacci, F.; Sarti, A. Deep Prior Approach for Room Impulse Response Reconstruction. Sensors 2022, 22, 2710.

- Lin, H.; Zhuang, Y.; Huang, Y.; Ding, X. Self-Supervised SAR Despeckling Powered by Implicit Deep Denoiser Prior. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4514705.

- Samadi, S.; Abdi, Z.M.; Khosravi, M.R. Phase Unwrapping with Quality Map and Sparse-Inpainting in Interferometric SAR. In Proceedings of the EUSAR 2018 12th European Conference on Synthetic Aperture Radar, Aachen, Germany, 4–7 June 2018.

- Borzì, A.; Di Bisceglie, M.; Galdi, C.; Pallotta, L.; Ullo, S.L. Phase retrieval in SAR interferograms using diffusion and inpainting. In Proceedings of the 2010 IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010.

- Schmitt, M.; Hughes, L.H.; Qiu, C.; Zhu, X.X. SEN12MS—A curated dataset of georeferenced multi-spectral Sentinel-1/2 imagery for deep learning and data fusion. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2019, IV-2/W7, 153–160.

- Bertini, F.; Brand, O.; Carlier, S.; Del Bello, U.; Drusch, M.; Duca, R.; Fernandez, V.; Ferrario, C.; Ferreira, M.; Isola, C.; et al. Sentinel-2 ESA’s Optical High-Resolution Mission for GMES Operational Services. ESA Bull. Bull. ASE Eur. Space Agency 2012, 1322, 25–36.

- NASA. Moderate Resolution Imagery Spectroradiometer (MODIS), June 2022. Available online: https://modis.gsfc.nasa.gov/about/ (accessed on 22 June 2022).

- European Space Agency. Radar Course 2, June 2021. Available online: https://earth.esa.int/web/guest/missions/esa-operational-eo-missions/ers/instruments/sar/applications/radar-courses/course-2 (accessed on 26 June 2021).

- De Zan, F.; Monti Guarnieri, A. TOPSAR: Terrain Observation by Progressive Scans. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2352–2360.

- NASA. Shuttle Radar Topography Mission, January 2023. Available online: https://www2.jpl.nasa.gov/srtm/ (accessed on 3 January 2022).

- NASA. Advanced Spaceborne Thermal Emission and Reflection Radiometer, January 2023. Available online: https://asterweb.jpl.nasa.gov/gdem.asp (accessed on 3 January 2022).

- Schmitt, M.; Prexl, J.; Ebel, P.; Liebel, L.; Zhu, X.X. Weakly Supervised Semantic Segmentation of Satellite Images for Land Cover Mapping—Challenges and Opportunities. In Proceedings of the International Society for Photogrammetry and Remote Sensing (ISPRS) Congress, Nice, France, 14–20 June 2020.

- Wang, Z.; Bovik, A.C.; Lu, L. Why Is Image Quality Assessment So Difficult? In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Orlando, FL, USA, 13–17 May 2002.

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 9–12 November 2003.

- Gagnon, L.; Jouan, A. Speckle filtering of SAR images: A comparative study between complex-wavelet-based and standard filters. In Wavelet Applications in Signal and Image Processing; SPIE: Bellingham, WA, USA, 1997.

- Chumning, H.; Huadong, G.; Changlin, W. Edge preservation evaluation of digital speckle filters. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Neural Information Processing Systems Conference, Vancouver, BC, Canada, 8–14 December 2019.

- Biewald, L. Experiment Tracking with Weights and Biases. 2020. Software. Available online: wandb.com (accessed on 20 July 2023).

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- Girod, B. Psychovisual Aspects of Image Processing: What’s Wrong with Mean-Squared Error? In Proceedings of the Seventh Workshop on Multidimensional Signal Processing, Lake Placid, NY, USA, 23–25 September 1991.

- Eskicioglu, A.; Fisher, P. Image quality measures and their performance. IEEE Trans. Commun. 1995, 43, 2959–2965.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. A comprehensive evaluation of full reference image quality assessment algorithms. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012.

- Li, L.; Jamieson, K.G.; DeSalvo, G.; Rostamizadeh, A.; Talwalkar, A. Hyperband: A novel bandit-based approach to hyperparameter optimization. J. Mach. Learn. Res. 2017, 18, 6765–6816.

- Brunet, D.; Vrscay, E.R.; Wang, Z. On the mathematical properties of the structural similarity index. IEEE Trans. Image Process. 2012, 21, 1488–1499.

- Dellepiane, S.G.; Angiati, E. Quality Assessment of Despeckled SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 691–707.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Bińkowski, M.; Sutherland, D.J.; Arbel, M.; Gretton, A. Demystifying MMD GANs. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019.

- Schmitt, M.; Wu, Y.L. Remote sensing image classification with the SEN12MS dataset. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2021, V-2-2021, 101–106.

| Hyperparameter Class | Parameter Values |
|---|---|
| Layer activation functions | Tanh, ReLU, LeakyReLU |
| Layer padding strategy | Reflection, Zero |
| Layer upsampling strategy | Bilinear, Nearest, Deconvolution |
| Loss function | MSE, SSIM |
| Input noise distribution | Gaussian, Rayleigh, Uniform |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cannas, E.D.; Mandelli, S.; Bestagini, P.; Tubaro, S.; Delp, E.J. Deep Image Prior Amplitude SAR Image Anonymization. Remote Sens. 2023, 15, 3750. https://doi.org/10.3390/rs15153750

Cannas ED, Mandelli S, Bestagini P, Tubaro S, Delp EJ. Deep Image Prior Amplitude SAR Image Anonymization. Remote Sensing. 2023; 15(15):3750. https://doi.org/10.3390/rs15153750

Chicago/Turabian Style: Cannas, Edoardo Daniele, Sara Mandelli, Paolo Bestagini, Stefano Tubaro, and Edward J. Delp. 2023. "Deep Image Prior Amplitude SAR Image Anonymization" Remote Sensing 15, no. 15: 3750. https://doi.org/10.3390/rs15153750

APA Style: Cannas, E. D., Mandelli, S., Bestagini, P., Tubaro, S., & Delp, E. J. (2023). Deep Image Prior Amplitude SAR Image Anonymization. Remote Sensing, 15(15), 3750. https://doi.org/10.3390/rs15153750