Active Navigation System for a Rubber-Tapping Robot Based on Trunk Detection

Abstract

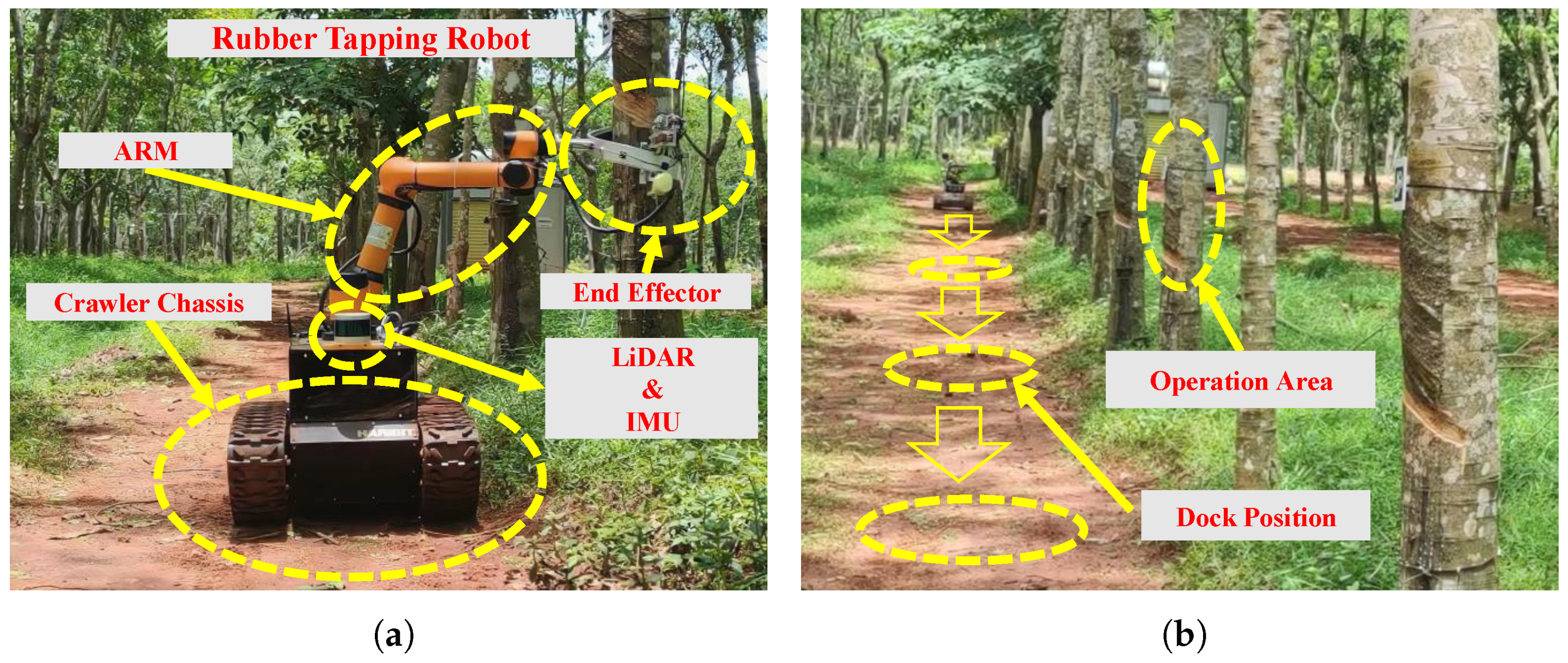

1. Introduction

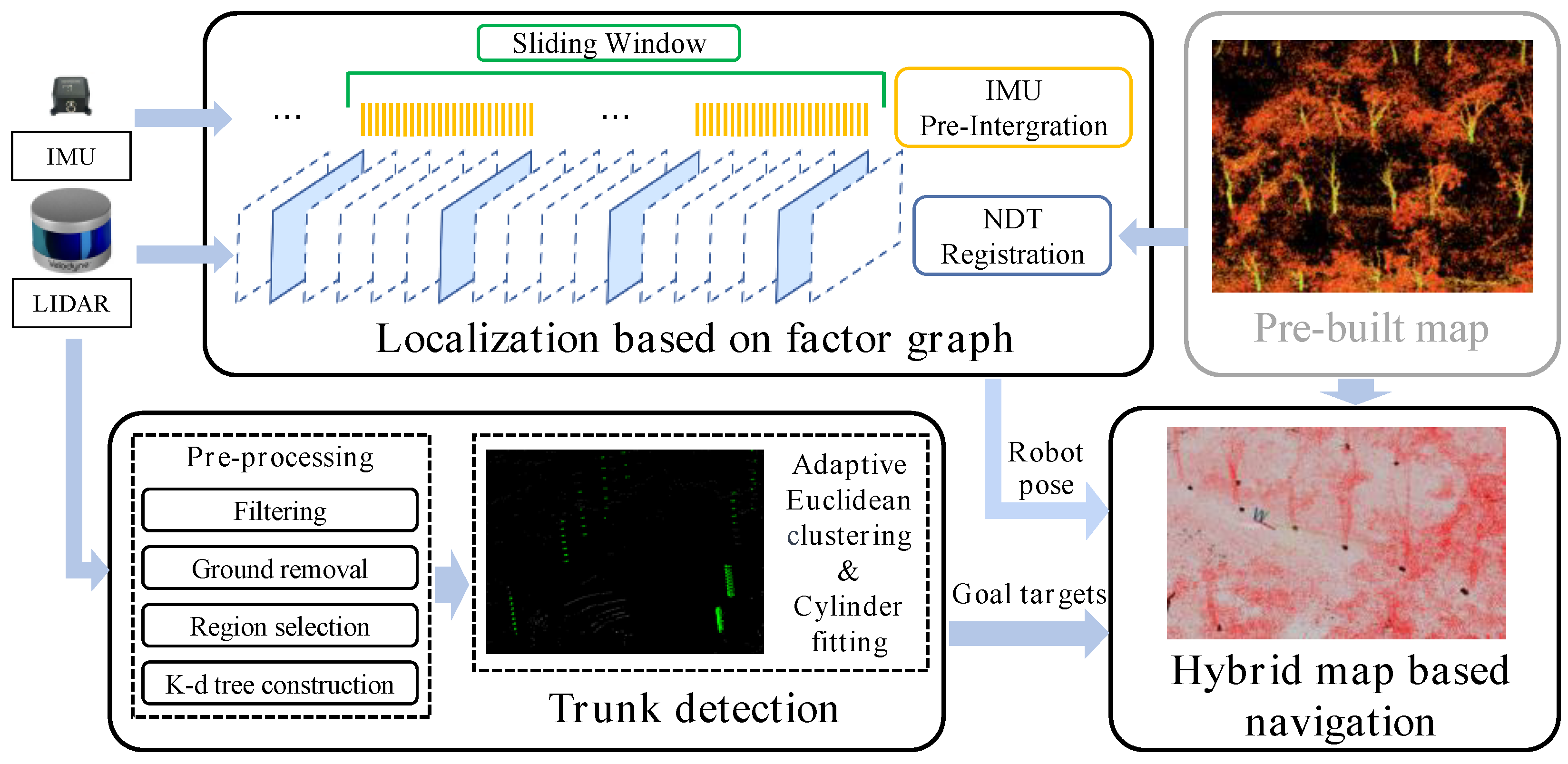

- A tightly coupled, sliding-window factor-graph pose-tracking method for forest operation robots, especially rubber-tapping robots, that provides continuous, stable, and accurate 3D pose estimation in forest scenarios;

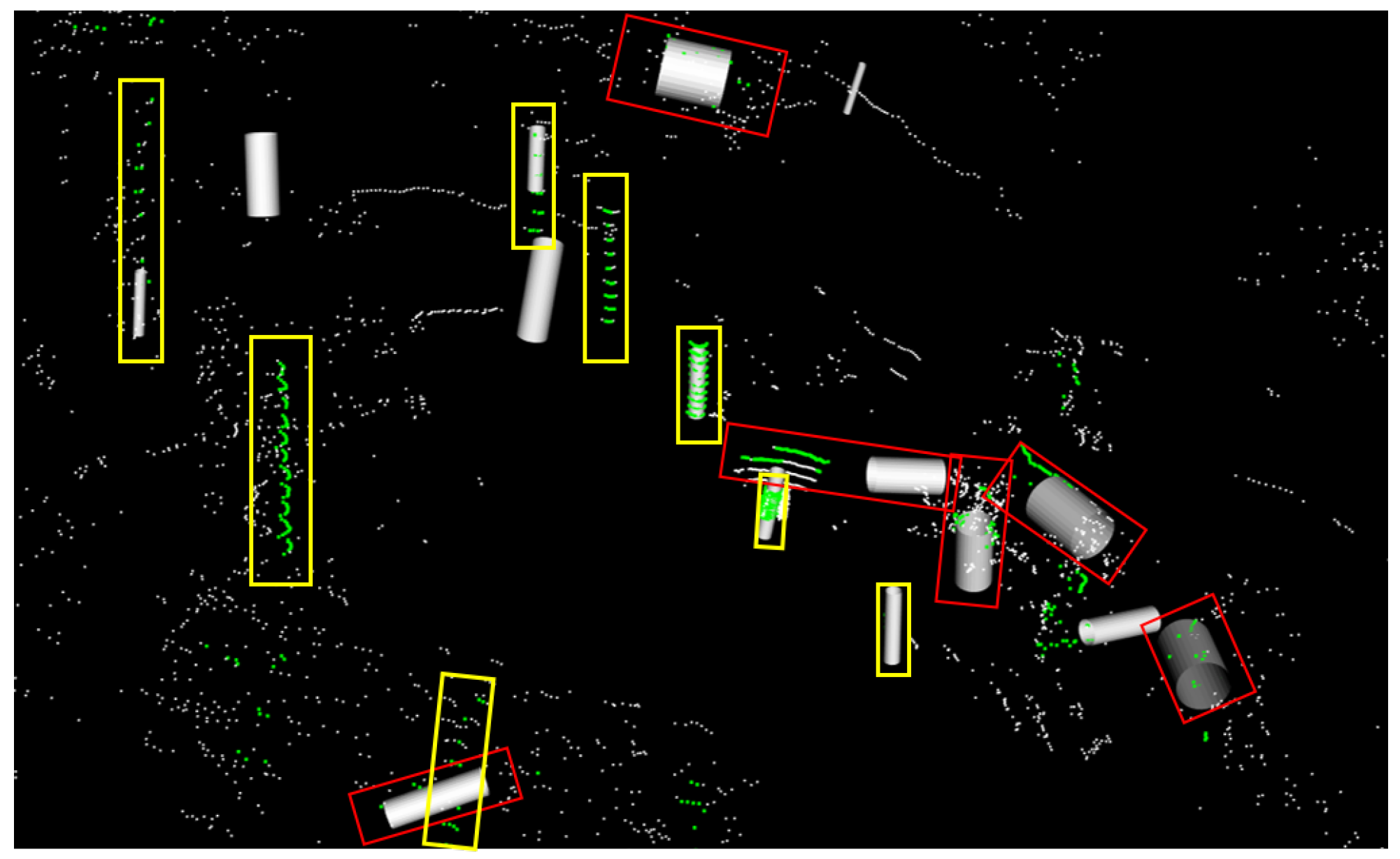

- A tree trunk detection method based on distance-adaptive Euclidean clustering, followed by cylinder fitting and composite-criteria screening, achieving a precision of 93.0% and a recall of 87.0% within the ROI;

- An active navigation system guided by detected tree trunks in hybrid map mode, which eliminates manual target selection during navigation and improves efficiency;

- Practical validation of the system in robot rubber-tapping tasks in a real rubber plantation.
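The trunk detection contribution listed above (distance-adaptive clustering, cylinder fitting, screening) can be illustrated with a minimal sketch. This is not the authors' implementation. Everything below is an assumption made for illustration: the linear tolerance rule `base_tol + tol_per_m * range`, the simplification of the cylinder to a vertical axis (a circle fit in the XY plane), the radius limits `r_min` and `r_max` standing in for the composite criteria, and the helper `synthetic_trunk` that generates test data.

```python
import numpy as np


def adaptive_euclidean_clusters(points, base_tol=0.10, tol_per_m=0.02, min_size=5):
    """Region-growing Euclidean clustering with a range-dependent tolerance.

    ASSUMPTION (not from the paper): the neighbour tolerance of a point is
    base_tol + tol_per_m * (its horizontal range from a sensor at the origin).
    """
    points = np.asarray(points, dtype=float)
    tol = base_tol + tol_per_m * np.linalg.norm(points[:, :2], axis=1)
    unvisited = np.ones(len(points), dtype=bool)
    clusters = []
    for seed in range(len(points)):
        if not unvisited[seed]:
            continue
        unvisited[seed] = False
        queue, members = [seed], []
        while queue:
            i = queue.pop()
            members.append(i)
            # Brute-force neighbour search; a k-d tree would replace this in practice.
            dist = np.linalg.norm(points - points[i], axis=1)
            neighbours = np.flatnonzero(unvisited & (dist <= tol[i]))
            unvisited[neighbours] = False
            queue.extend(neighbours.tolist())
        if len(members) >= min_size:
            clusters.append(np.array(sorted(members)))
    return clusters


def fit_vertical_cylinder(cluster_xyz):
    """Algebraic least-squares circle fit in the XY plane.

    SIMPLIFICATION: the trunk axis is assumed vertical, so the cylinder
    reduces to a circle (cx, cy, radius) seen from above.
    """
    x, y = cluster_xyz[:, 0], cluster_xyz[:, 1]
    # (x-cx)^2 + (y-cy)^2 = r^2  <=>  x^2 + y^2 = 2*cx*x + 2*cy*y + c
    A = np.column_stack([2.0 * x, 2.0 * y, np.ones_like(x)])
    b = x**2 + y**2
    (cx, cy, c), *_ = np.linalg.lstsq(A, b, rcond=None)
    return cx, cy, np.sqrt(c + cx**2 + cy**2)


def detect_trunks(points, r_min=0.05, r_max=0.30):
    """Cluster, fit, and keep clusters with a trunk-like radius (hypothetical limits)."""
    points = np.asarray(points, dtype=float)
    trunks = []
    for idx in adaptive_euclidean_clusters(points):
        cx, cy, r = fit_vertical_cylinder(points[idx])
        if r_min <= r <= r_max:
            trunks.append((float(cx), float(cy), float(r)))
    return trunks


def synthetic_trunk(cx, cy, radius, n=60):
    """Hypothetical test data: n points on a one-turn helix around a vertical trunk."""
    ang = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    z = np.linspace(0.0, 1.0, n)
    return np.column_stack([cx + radius * np.cos(ang), cy + radius * np.sin(ang), z])


cloud = np.vstack([synthetic_trunk(3.0, 0.0, 0.10), synthetic_trunk(6.0, 2.0, 0.15)])
trunks = detect_trunks(cloud)  # list of (cx, cy, radius) tuples
```

Letting the tolerance grow with range is one plausible way to compensate for LiDAR point spacing that widens with distance; a real pipeline would also need ground removal and a fit that tolerates partial, noisy arcs.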

2. Related Work

2.1. Localization

2.2. Trunk Detection

3. Materials and Methods

3.1. Pose Tracking Based on Factor Graph

3.2. Trunk Detection

3.3. Hybrid Map Navigation

4. Results

4.1. Pose Tracking

4.2. Trunk Detection

4.3. Hybrid Map Navigation

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Max | Mean | Min | RMSE | Std |
|---|---|---|---|---|---|
| Ours | 0.464941 | 0.306398 | 0.128458 | 0.308381 | 0.034919 |
| UKF | 0.430175 | 0.325414 | 0.140065 | 0.327407 | 0.036074 |
| MCL | 4.411130 | 1.851616 | 0.029355 | 2.064198 | 0.912376 |
| NDT | 6.217928 | 0.532878 | 0.055203 | 0.983322 | 0.826416 |

| Method | Max | Mean | Min | RMSE | Std |
|---|---|---|---|---|---|
| Ours | 0.032788 | 0.006511 | 0.000332 | 0.007258 | 0.003208 |
| UKF | 0.053262 | 0.007903 | 0.000348 | 0.008789 | 0.003845 |
| MCL | 1.062193 | 0.032857 | 0.001063 | 0.064827 | 0.055883 |
| NDT | 1.053219 | 0.012379 | 0.000068 | 0.031304 | 0.028753 |
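The columns in the two tables above match the usual summary statistics of an error series. The following sketch shows how such a row could be computed, assuming (this excerpt does not say so) that each row summarizes per-frame error magnitudes and that Std is the population standard deviation. Under that assumption RMSE² = Mean² + Std², and the reported rows agree with this identity to within rounding. The function names are illustrative only.

```python
import numpy as np


def error_statistics(errors):
    """Summarize a 1-D series of non-negative error magnitudes."""
    e = np.asarray(errors, dtype=float)
    return {
        "max": float(e.max()),
        "mean": float(e.mean()),
        "min": float(e.min()),
        "rmse": float(np.sqrt(np.mean(e**2))),
        "std": float(e.std()),  # population std (ddof=0), an assumption
    }


def rmse_from_mean_std(mean, std):
    """RMSE implied by mean and population std: sqrt(mean^2 + std^2)."""
    return float(np.hypot(mean, std))


# (mean, std, rmse) copied from the first table above.
reported = {
    "Ours": (0.306398, 0.034919, 0.308381),
    "UKF": (0.325414, 0.036074, 0.327407),
    "MCL": (1.851616, 0.912376, 2.064198),
    "NDT": (0.532878, 0.826416, 0.983322),
}
deviation = {
    name: abs(rmse_from_mean_std(mean, std) - rmse)
    for name, (mean, std, rmse) in reported.items()
}
```

The identity is a quick consistency check: a large gap between Mean and RMSE, as in the MCL and NDT rows, signals a high-variance error series with occasional large deviations rather than a uniform offset.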


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fang, J.; Shi, Y.; Cao, J.; Sun, Y.; Zhang, W. Active Navigation System for a Rubber-Tapping Robot Based on Trunk Detection. Remote Sens. 2023, 15, 3717. https://doi.org/10.3390/rs15153717
