Magnetotelluric Deep Learning Forward Modeling and Its Application in Inversion

Abstract

1. Introduction

- 1.

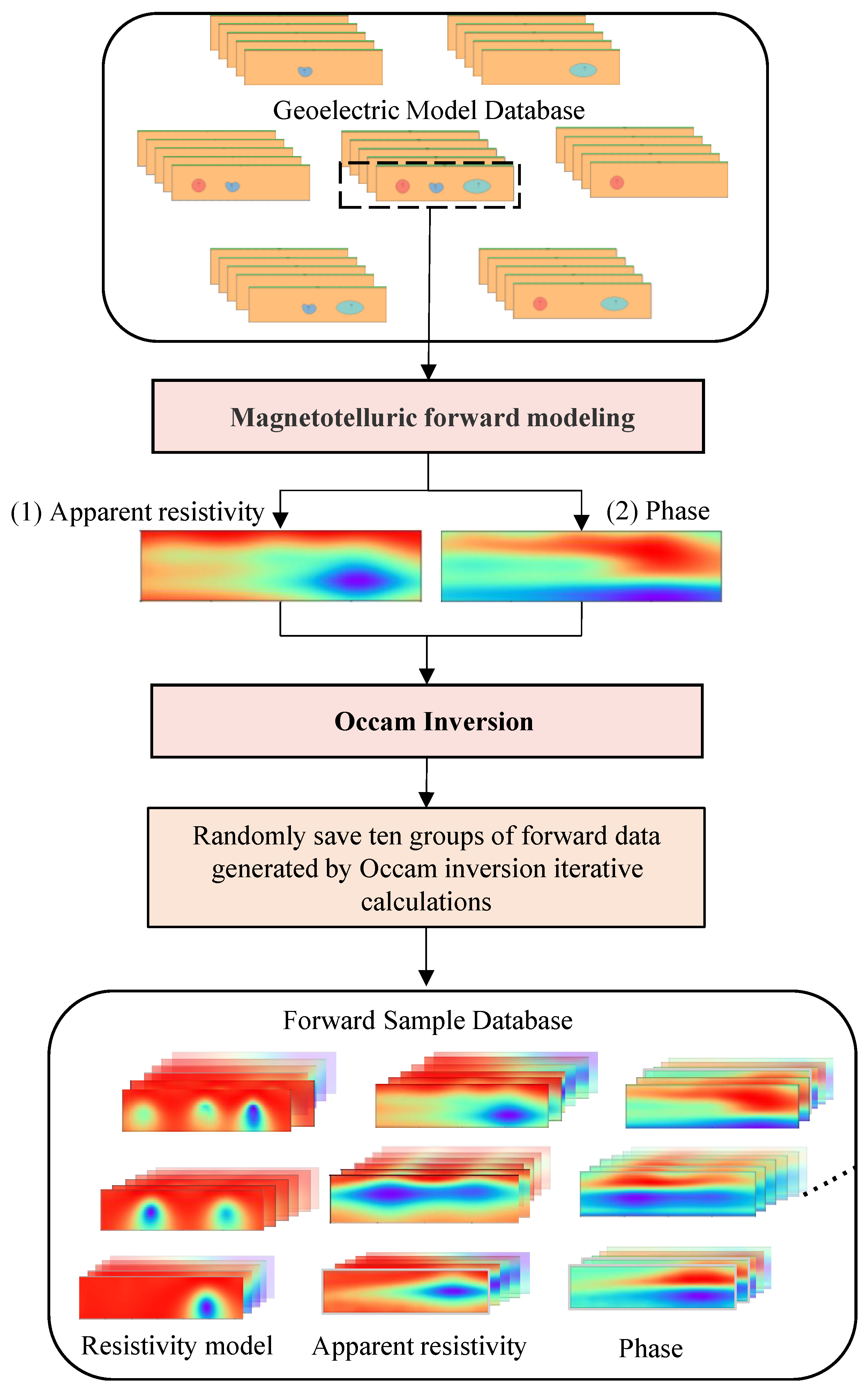

- The construction of a new forward network dataset to serve MT inversion. To ensure the effectiveness of DL networks, constructing an appropriate dataset for the training samples is crucial for MT forward networks. The inverse forward response model is generated iteratively through an optimization algorithm during the inversion procedure, which differs from real subsurface models or human-made models. We used different geoelectric models for MT forwarding and input the inversion program after obtaining the observed data. By acquiring the forward data generated by the inversion process to build a forward sample dataset that fits the inversion iterative model, an excellent forward network model oriented to inversion was trained.

- 2.

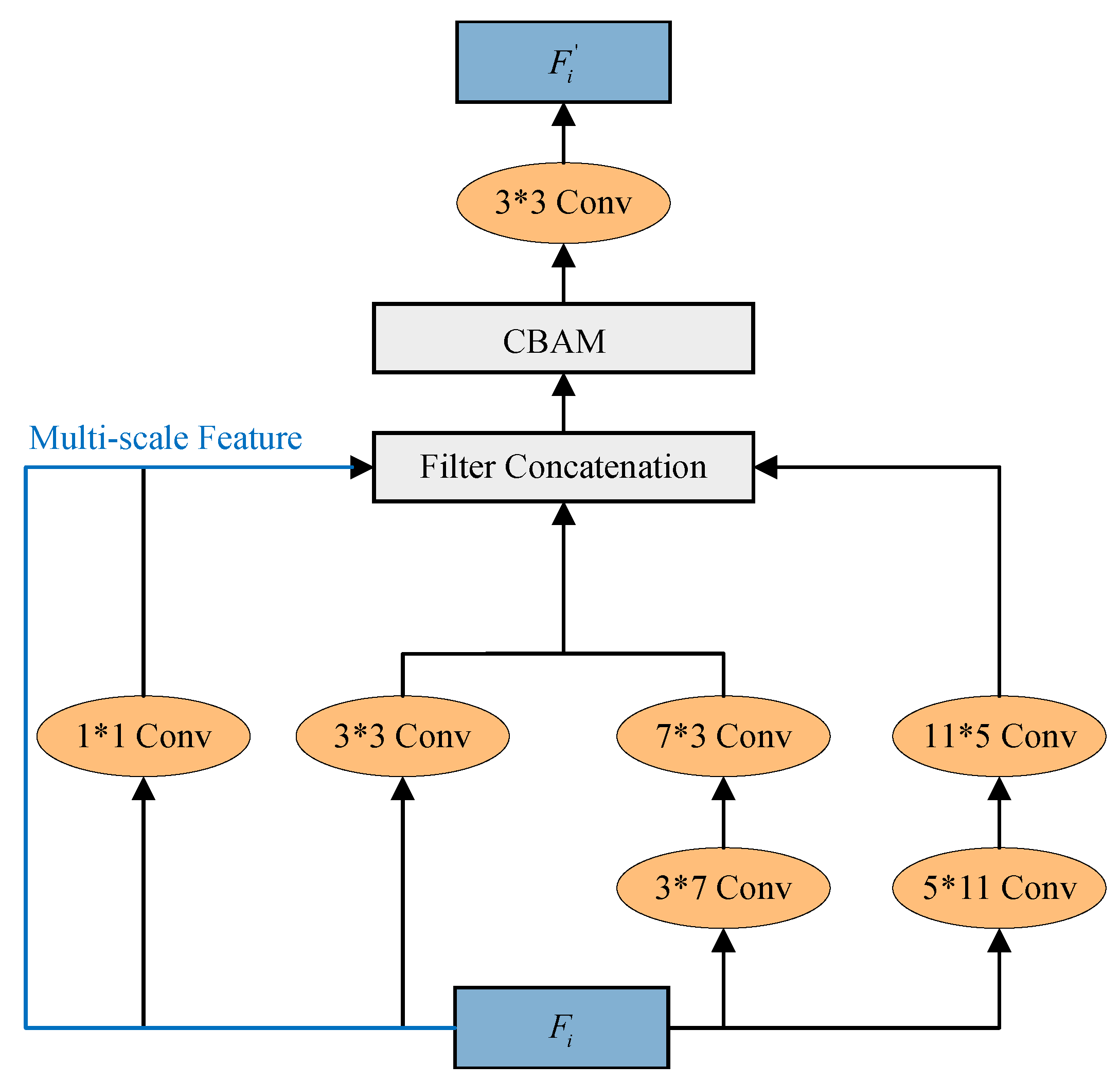

- We designed the forward modeling network MT-MitNet for forward computations. MT-MitNet takes Mix Transformer [22] as the backbone of the network, obtains rich feature information of the anomalies in the encoding module, reconstructs anomalous features in the decoding module by combining multiscale ideas, and eliminates the skip connection between the encoder and decoder. After training, MT-MitNet, with global modeling capability, displayed stable and rapid forward modeling calculations, with an average accuracy greater than 95%. MT-MitNet serves the forward calculation in inversion with high accuracy, which improves the overall efficiency of inversion calculation, and the network model has strong generalization ability in the face of multiple anomalies.
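The dataset construction described in the first contribution can be illustrated with a minimal sketch: run an iterative inversion and record every (model, forward response) pair that it evaluates. Everything here is an assumption made for illustration. The 1-D layered-earth recursion in `mt1d_forward` stands in for the 2-D forward solver, the update rule in `harvest_pairs` is a toy scheme rather than Occam, and both function names are hypothetical.

```python
import cmath
import math

MU0 = 4e-7 * math.pi  # magnetic permeability of free space (H/m)


def mt1d_forward(resistivities, thicknesses, periods):
    """Apparent resistivity (ohm-m) and phase (deg) of a layered earth.

    Standard 1-D impedance recursion, used here only as a stand-in
    for the 2-D forward solver (assumption for illustration).
    """
    rho_a, phase = [], []
    for period in periods:
        omega = 2.0 * math.pi / period
        # Start at the bottom half-space and recurse upward layer by layer.
        z = cmath.sqrt(1j * omega * MU0 * resistivities[-1])
        for rho, h in zip(reversed(resistivities[:-1]), reversed(thicknesses)):
            z0 = cmath.sqrt(1j * omega * MU0 * rho)  # intrinsic impedance
            k = cmath.sqrt(1j * omega * MU0 / rho)   # wavenumber
            t = cmath.tanh(k * h)
            z = z0 * (z + z0 * t) / (z0 + z * t)
        rho_a.append(abs(z) ** 2 / (omega * MU0))
        phase.append(math.degrees(cmath.phase(z)))
    return rho_a, phase


def harvest_pairs(observed_rho_a, start_model, thicknesses, periods,
                  n_iter=5, step=0.5):
    """Run a toy iterative fit and record each (model, response) pair."""
    model = list(start_model)
    samples = []
    for _ in range(n_iter):
        rho_a, phase = mt1d_forward(model, thicknesses, periods)
        # Every forward call made during inversion becomes a training sample.
        samples.append({"model": list(model), "rho_a": rho_a, "phase": phase})
        # Toy update (NOT Occam): scale all layers by the mean log10 misfit.
        misfit = sum(math.log10(o / p)
                     for o, p in zip(observed_rho_a, rho_a)) / len(periods)
        model = [rho * 10 ** (step * misfit) for rho in model]
    return samples


# Hypothetical example values: a three-layer "true" model and five periods.
periods = [0.01, 0.1, 1.0, 10.0, 100.0]
thicknesses = [500.0, 1000.0]
observed, _ = mt1d_forward([100.0, 10.0, 1000.0], thicknesses, periods)
samples = harvest_pairs(observed, [50.0, 50.0, 50.0], thicknesses, periods)
print(len(samples), "training pairs harvested")
```

The point of the sketch is the `samples.append(...)` line: the training set is drawn from the models the inversion actually visits, not from hand-designed models alone.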

2. Methodology

2.1. Dataset Preparation

2.2. Inversion-Oriented Deep Learning Forward Network Model: MT-MitNet

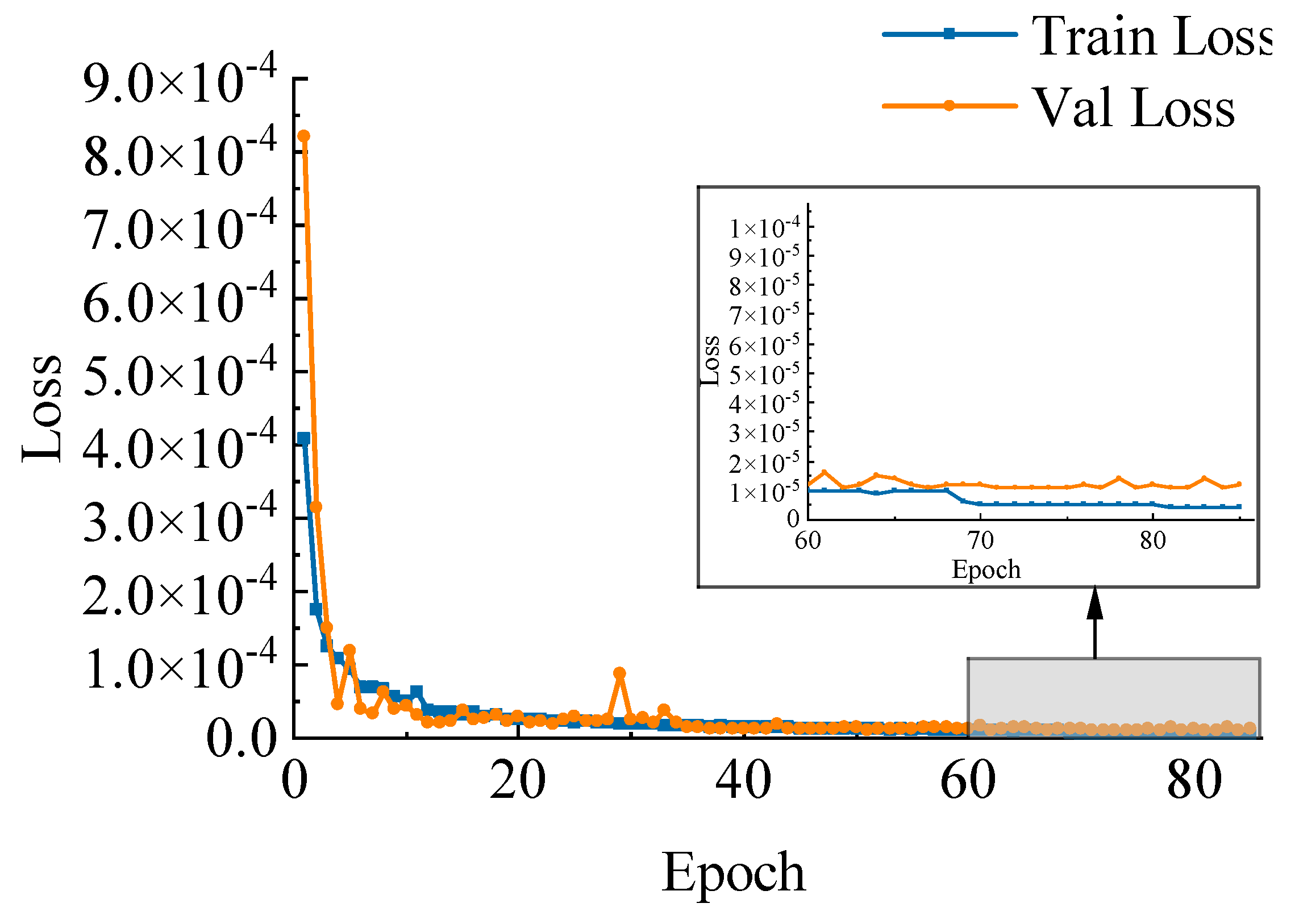
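To make the multiscale idea behind a Mix Transformer encoder concrete, the sketch below computes the feature-map shapes of a four-stage hierarchy. The stage settings are the published SegFormer MiT-B0 defaults (overlapped patch embedding with kernel 7, stride 4, padding 3 in the first stage, then kernel 3, stride 2, padding 1). Whether MT-MitNet uses exactly these settings is an assumption, and the 64 × 64 input size and the function names are hypothetical.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a strided convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding, channels) per stage.
# SegFormer MiT-B0 defaults; assumed here, not confirmed for MT-MitNet.
MIT_B0_STAGES = [(7, 4, 3, 32), (3, 2, 1, 64), (3, 2, 1, 160), (3, 2, 1, 256)]


def encoder_feature_shapes(height, width, stages=MIT_B0_STAGES):
    """Return (channels, height, width) of the feature map after each stage."""
    shapes = []
    for kernel, stride, padding, channels in stages:
        height = conv_out(height, kernel, stride, padding)
        width = conv_out(width, kernel, stride, padding)
        shapes.append((channels, height, width))
    return shapes


# Hypothetical 64 x 64 resistivity grid as network input.
shapes = encoder_feature_shapes(64, 64)
for stage, shape in enumerate(shapes, start=1):
    print("stage", stage, shape)
```

Each stage halves the spatial resolution of the previous one after the initial 4× reduction, so a decoder has four resolutions to draw on when reconstructing the response.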

2.3. Network Training

3. Experiments

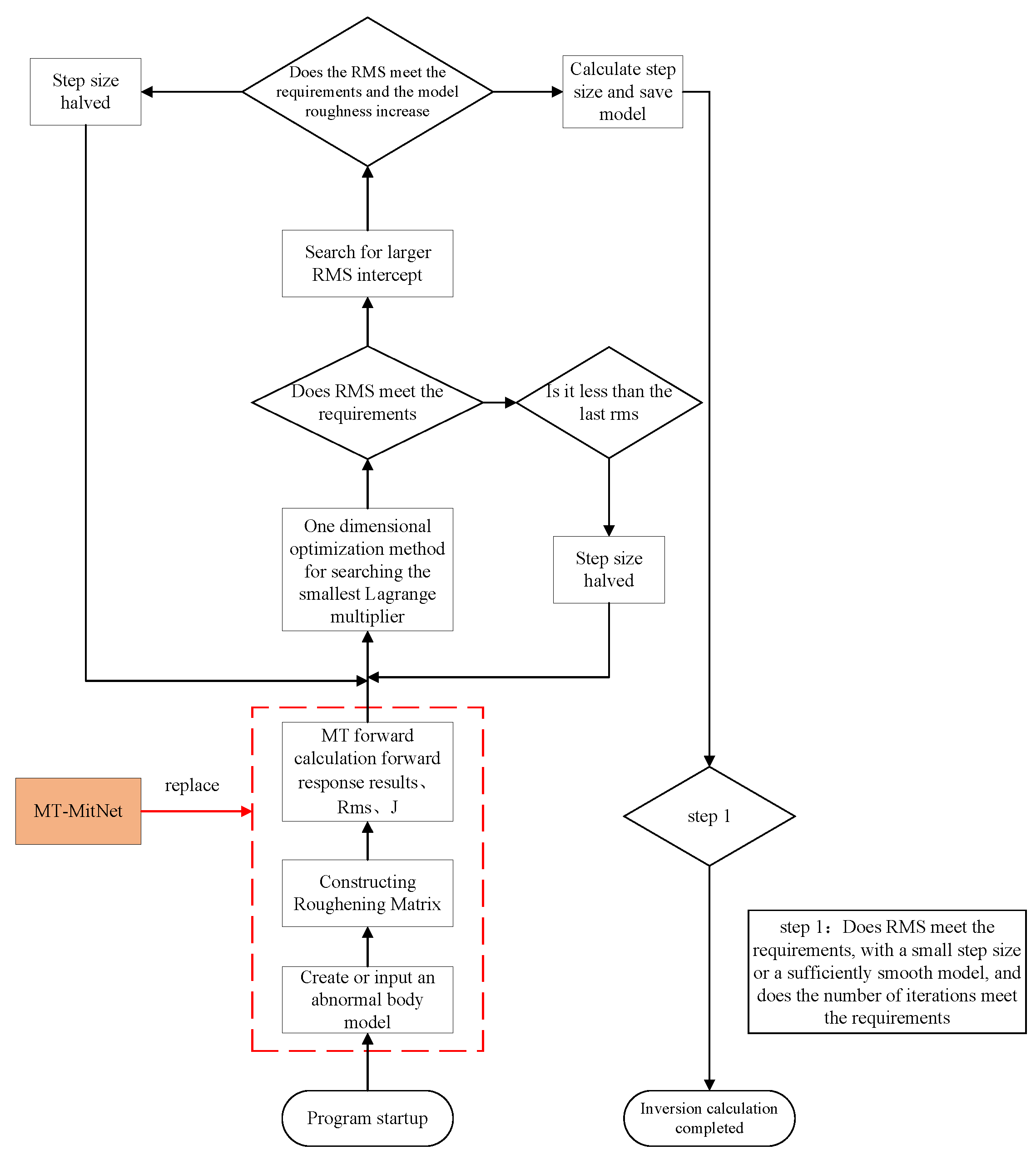

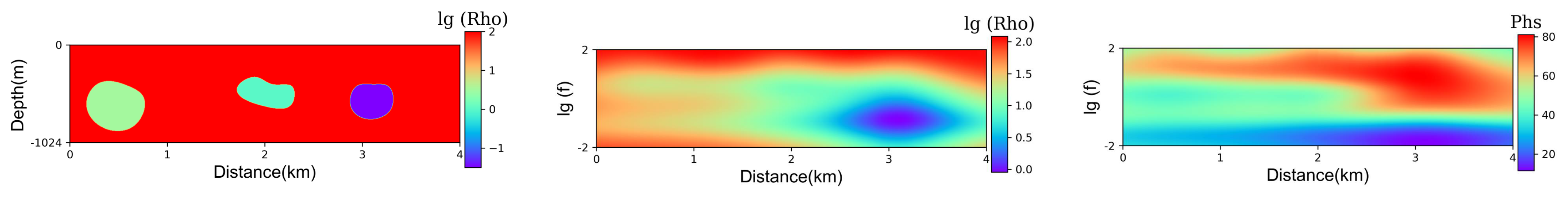

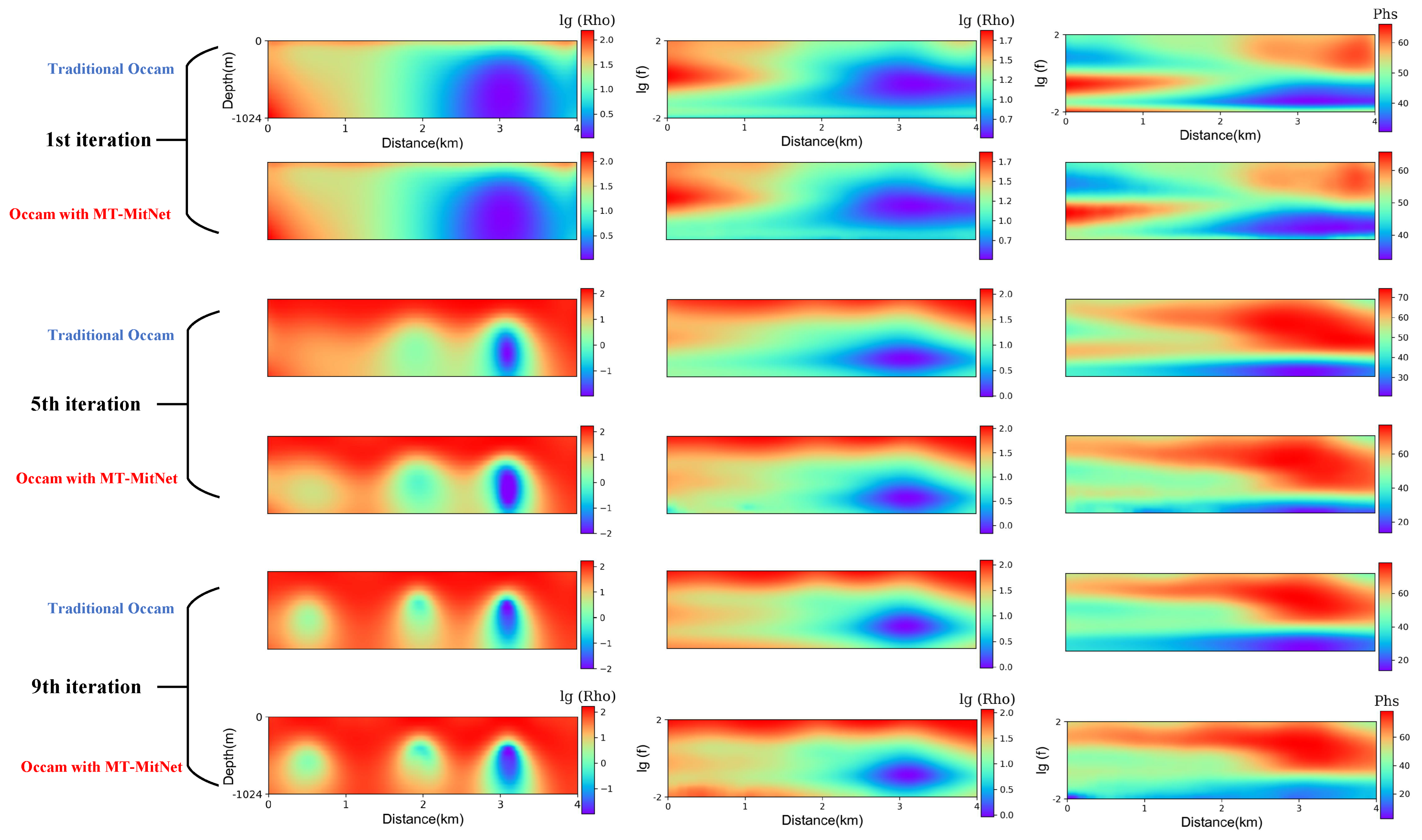

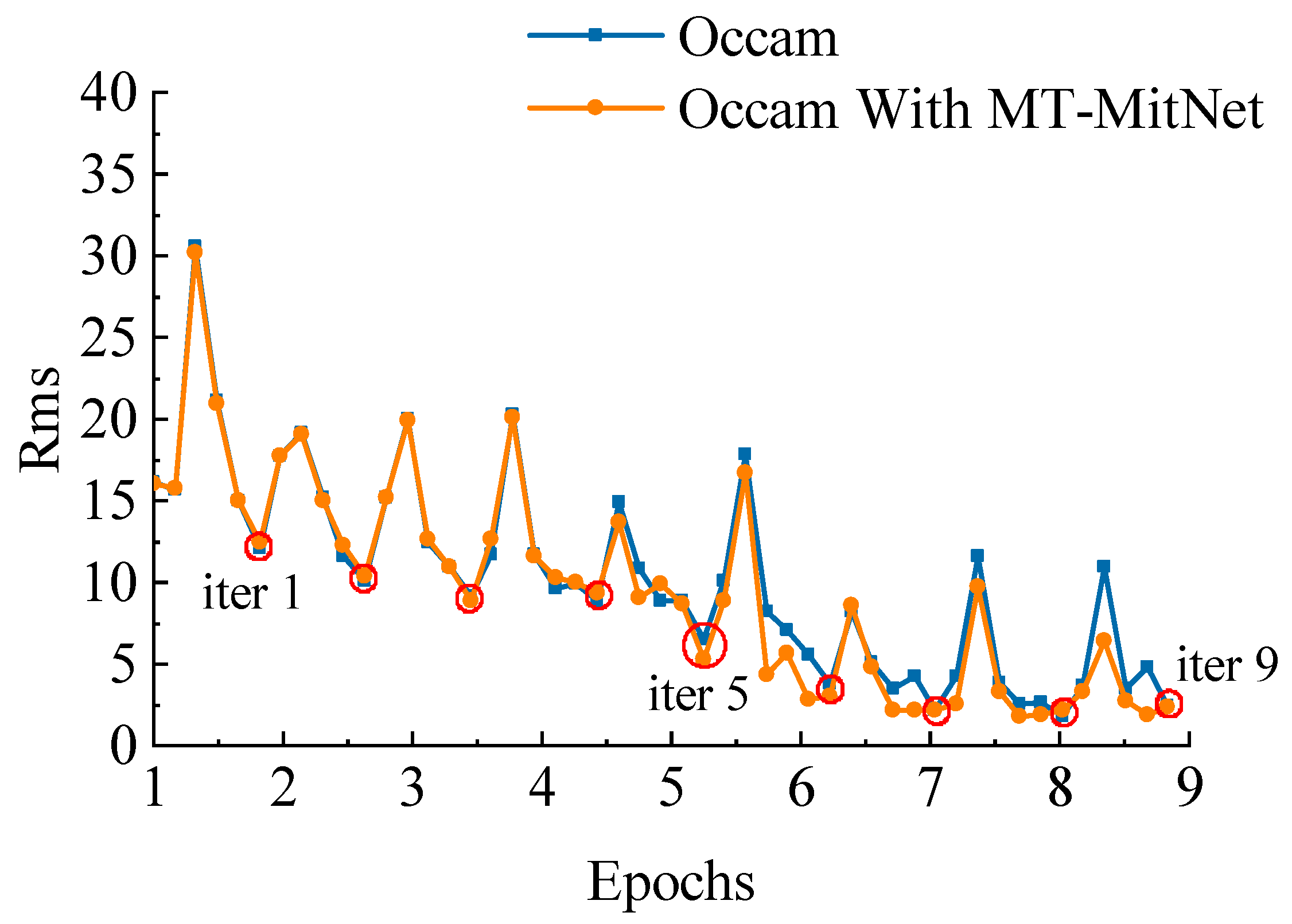

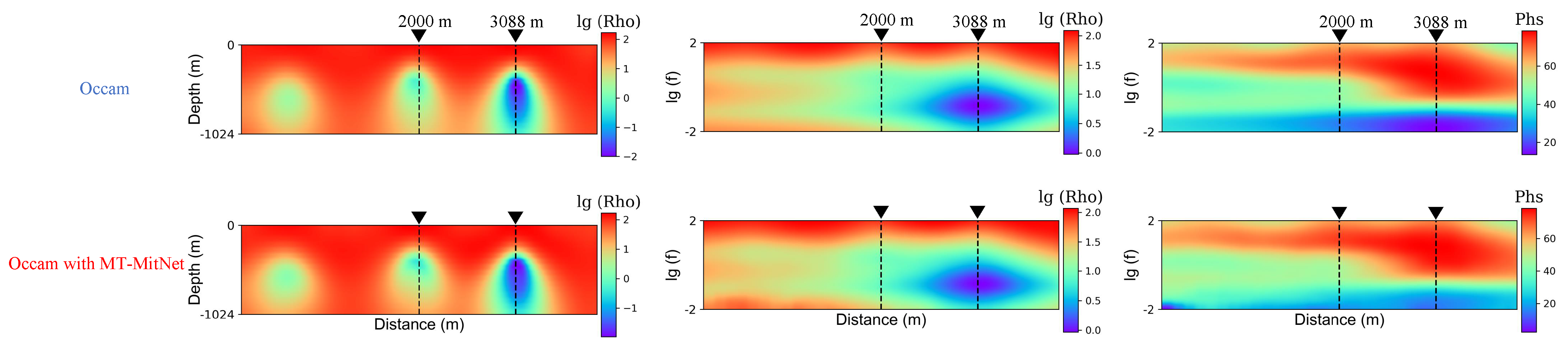

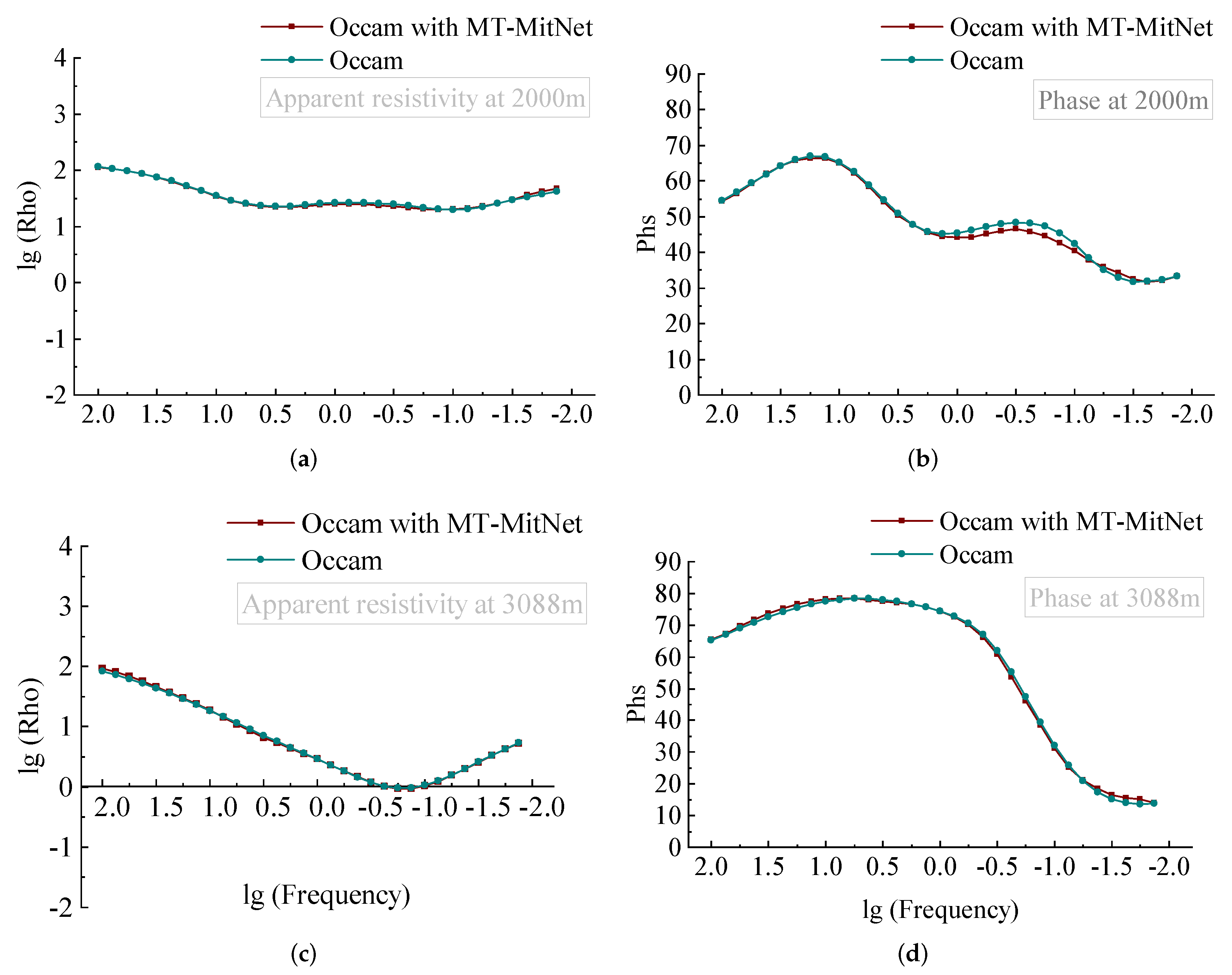

3.1. MT-MitNet Forward Network Model Replaces the Forward Computation Module of Occam Inversion Program

3.2. Experimental Analysis of the Inversion of MT-MitNet Replacement Forward Calculation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Tikhonov, A.N. On determining electrical characteristics of the deep layers of the Earth’s crust. Dokl. Akad. Nauk. SSSR 1950, 73, 295–297.

2. Cagniard, L. Basic theory of the magneto-telluric method of geophysical prospecting. Geophysics 1953, 18, 605–635.

3. Constable, S.C.; Parker, R.; Constable, C. A practical algorithm for generating smooth models from electromagnetic sounding data. Geophysics 1987, 52, 289–300.

4. De Groot Hedlin, C.; Constable, S. Occam’s inversion to generate smooth, two-dimensional models from magnetotelluric data. Geophysics 1990, 55, 1613–1624.

5. Smith, J.T.; Booker, J.R. Rapid inversion of two- and three-dimensional magnetotelluric data. J. Geophys. Res. Solid Earth 1991, 96, 3905–3922.

6. Newman, G.A.; Alumbaugh, D.L. Three-dimensional magnetotelluric inversion using non-linear conjugate gradients. Geophys. J. Int. 2000, 140, 410–424.

7. Rodi, W.; Mackie, R.L. Nonlinear conjugate gradients algorithm for 2-D magnetotelluric inversion. Geophysics 2001, 66, 174–187.

8. Pratt, R.G.; Shin, C.; Hick, G. Gauss–Newton and full Newton methods in frequency–space seismic waveform inversion. Geophys. J. Int. 1998, 133, 341–362.

9. Loke, M.H.; Dahlin, T. A comparison of the Gauss–Newton and quasi-Newton methods in resistivity imaging inversion. J. Appl. Geophys. 2002, 49, 149–162.

10. Jones, A.G. On the equivalence of the “Niblett” and “Bostick” transformations in the magnetotelluric method. J. Geophys. 1983, 53, 72–73.

11. Montahaei, M.; Oskooi, B. Magnetotelluric inversion for azimuthally anisotropic resistivities employing artificial neural networks. Acta Geophys. 2014, 62, 12–43.

12. Fang, J.; Zhou, H.; Li, Y.E.; Zhang, Q.; Wang, L.; Sun, P.; Zhang, J. Data-driven low-frequency signal recovery using deep-learning predictions in full-waveform inversion. Geophysics 2020, 85, A37–A43.

13. Moseley, B.; Markham, A.; Nissen-Meyer, T. Fast approximate simulation of seismic waves with deep learning. arXiv 2018, arXiv:1807.06873.

14. Hansen, T.M.; Cordua, K.S. Efficient Monte Carlo sampling of inverse problems using a neural network-based forward – applied to GPR crosshole traveltime inversion. Geophys. J. Int. 2017, 211, 1524–1533.

15. Li, S.; Liu, B.; Ren, Y.; Chen, Y.; Yang, S.; Wang, Y.; Jiang, P. Deep-learning inversion of seismic data. arXiv 2019, arXiv:1901.07733.

16. Puzyrev, V. Deep learning electromagnetic inversion with convolutional neural networks. Geophys. J. Int. 2019, 218, 817–832.

17. Puzyrev, V.; Swidinsky, A. Inversion of 1D frequency- and time-domain electromagnetic data with convolutional neural networks. Comput. Geosci. 2021, 149, 104681.

18. Liu, W.; Wang, H.; Xi, Z.; Zhang, R.; Huang, X. Physics-Driven Deep Learning Inversion with Application to Magnetotelluric. Remote Sens. 2022, 14, 3218.

19. Conway, D.; Alexander, B.; King, M.; Heinson, G.; Kee, Y. Inverting magnetotelluric responses in a three-dimensional earth using fast forward approximations based on artificial neural networks. Comput. Geosci. 2019, 127, 44–52.

20. Liu, B.; Guo, Q.; Li, S.; Liu, B.; Ren, Y.; Pang, Y.; Guo, X.; Liu, L.; Jiang, P. Deep learning inversion of electrical resistivity data. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5715–5728.

21. Guo, R.; Li, M.; Fang, G.; Yang, F.; Xu, S.; Abubakar, A. Application of supervised descent method to transient electromagnetic data inversion. Geophysics 2019, 84, E225–E237.

22. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

23. Sharma, H.; Zhang, Q. Transient electromagnetic modeling using recurrent neural networks. In Proceedings of the IEEE MTT-S International Microwave Symposium Digest, Long Beach, CA, USA, 17 June 2005; pp. 1597–1600.

24. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

25. Ovcharenko, O.; Kazei, V.; Kalita, M.; Peter, D.; Alkhalifah, T. Deep learning for low-frequency extrapolation from multioffset seismic data. Geophysics 2019, 84, R989–R1001.

26. Lewis, W.; Vigh, D. Deep learning prior models from seismic images for full-waveform inversion. In Proceedings of the 2017 SEG International Exposition and Annual Meeting, Houston, TX, USA, 24–29 September 2017; OnePetro: Richardson, TX, USA, 2017.

27. Mao, B.; Han, L.G.; Feng, Q.; Yin, Y.C. Subsurface velocity inversion from deep learning-based data assimilation. J. Appl. Geophys. 2019, 167, 172–179.

28. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

29. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

30. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

31. Deng, F.; Yu, S.; Wang, X.; Guo, Z. Accelerating magnetotelluric forward modeling with deep learning: Conv-BiLSTM and D-LinkNet. Geophysics 2023, 88, E69–E77.

32. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

33. Wang, W.; Tan, X.; Zhang, P.; Wang, X. A CBAM based multiscale transformer fusion approach for remote sensing image change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6817–6825.

34. Fu, H.; Song, G.; Wang, Y. Improved YOLOv4 marine target detection combined with CBAM. Symmetry 2021, 13, 623.

35. Reitermanova, Z. Data Splitting. In WDS’10 Proceedings of Contributed Papers; Part I; Matfyzpress: Prague, Czechia, 2010; pp. 31–36.

36. Warner, R.W. Earth Resistivity as Affected by the Presence of Underground Water. Trans. Kans. Acad. Sci. 1935, 38, 235–241.

37. El-Qady, G.; Ushijima, K. Inversion of DC resistivity data using neural networks. Geophys. Prospect. 2001, 49, 417–430.

38. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

39. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.P. An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

40. Li, Q.; Zhang, W.; Li, M.; Niu, J.; Wu, Q.J. Automatic detection of ship targets based on wavelet transform for HF surface wavelet radar. IEEE Geosci. Remote Sens. Lett. 2017, 14, 714–718.

| | Average Euclidean Distance (%) | Histogram (%) | Peak S/N (dB) |
|---|---|---|---|
| Resistivity | 4.29 | 22.60 | 31.55 |
| Apparent Resistivity | 1.60 | 19.27 | 33.36 |
| Phase | 2.58 | 21.99 | 32.81 |
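For readers unfamiliar with the Peak S/N column, the following is a minimal sketch of the standard peak signal-to-noise ratio in decibels. The source does not state how the metric was computed, so the choice of peak value (the largest absolute reference value unless given) and the function name `psnr` are assumptions.

```python
import math


def psnr(reference, estimate, peak=None):
    """Peak signal-to-noise ratio in dB between two equal-length sequences."""
    if len(reference) != len(estimate):
        raise ValueError("reference and estimate must have the same length")
    # Mean squared error between the two sequences.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    if peak is None:
        # Assumption: use the largest absolute reference value as the peak.
        peak = max(abs(r) for r in reference)
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical values: every sample is off by 0.1 with a peak of 1.0.
value = psnr([1.0, 0.0, 1.0, 0.0], [0.9, 0.1, 0.9, 0.1])
print(round(value, 2))
```

Higher values indicate closer agreement between the two results.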

| | Average Time Taken for Forward Computation (s) |
|---|---|
| Traditional Occam | 2619.1 |
| MT-MitNet Occam | 4.0 |
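Taking the ratio of the two averages reported in the table gives a rough speedup for the forward step. This is a back-of-the-envelope figure derived only from those two values, assuming both were measured over the same inversion runs:

```latex
\[
\text{speedup} = \frac{t_{\text{Traditional Occam}}}{t_{\text{MT-MitNet Occam}}}
= \frac{2619.1\ \text{s}}{4.0\ \text{s}} \approx 655
\]
```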

| | Average Euclidean Distance (%) | Histogram (%) | Peak S/N (dB) |
|---|---|---|---|
| Resistivity | 4.25 | 22.64 | 31.60 |
| Apparent Resistivity | 1.64 | 19.32 | 33.34 |
| Phase | 2.43 | 21.95 | 32.89 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, F.; Hu, J.; Wang, X.; Yu, S.; Zhang, B.; Li, S.; Li, X. Magnetotelluric Deep Learning Forward Modeling and Its Application in Inversion. Remote Sens. 2023, 15, 3667. https://doi.org/10.3390/rs15143667
