Abstract

The You Only Look Once (YOLO) series has been widely adopted across various domains. With the increasing prevalence of continuous satellite observation, the resulting video streams can be analyzed automatically for applications such as traffic flow statistics and military operations. Nevertheless, the signal-to-noise ratio of objects in satellite videos is considerably low, and the objects are much smaller than those captured by drones and other equipment, often only tens of percent down to one percent of their size. Consequently, the original YOLO algorithm performs inadequately when detecting tiny objects in satellite videos. Hence, we propose an improved framework, named HB-YOLO. To strengthen feature extraction in the backbone, we replaced the conventional convolution with an improved HorNet that enables higher-order spatial interactions. We replaced all Extended Efficient Layer Aggregation Networks (ELANs) with the BoTNet attention mechanism to make the features fully fused. In addition, the anchors were re-adjusted, and image segmentation was integrated into detection; the detection results are then tracked using the BoT-SORT algorithm. The experimental results indicate that the original algorithm failed to learn on the satellite video dataset, whereas our proposed approach yielded improved recall and precision. Specifically, the F1-score and mean average precision increased to 0.58 and 0.53, respectively, and the object-tracking performance was enhanced by incorporating the image segmentation method.

1. Introduction

In recent years, computer vision has undergone rapid development, providing intelligent solutions that have improved people’s quality of life in various fields. This has increased the potential for object detection and tracking technologies to be applied in many areas. Moreover, the progress made in satellite remote sensing imaging technology has enabled large-scale, continuous observation, leading to the fulfillment of various needs in fields, such as traffic management, forest and ocean monitoring, shared cities, and military surveillance. As a result, detecting and tracking moving objects in remote sensing videos has become a crucial research topic.

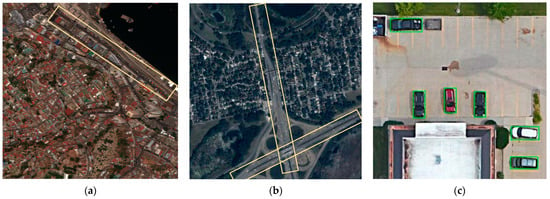

Figure 1 illustrates diverse remote sensing images, where (a) and (b) were acquired by satellites and (c) represents general remote sensing images typically captured by drones or aircraft, among others. Satellite-based imaging equipment has the potential to provide extensive spatial and temporal observation capabilities, enabling a wide field of view, as reported in previous studies [1]. Nevertheless, the visible objects in satellite remote sensing imagery comprise a limited proportion of the image, and their detection is challenging through naked-eye observation. Consequently, these objects are often deemed as noise or indistinguishable [1,2,3,4,5], posing a significant detection challenge.

Figure 1.

Different remote sensing images. (a,b) are satellite video remote sensing images. The boxes contain the objects. Vehicles on the road and ships in the port are moving objects. (c) is a general remote sensing image. The object is relatively large so it is easy to distinguish the object.

Currently, most remote sensing datasets, including DOTA, DIOR, TGRS-HRRSD, and NWPU VHR-10, comprise independent images with unrelated contents. Object tracking, however, requires satellite remote sensing image sequences, and few such datasets are publicly available. In 2022, Yin et al. [1] addressed this gap by introducing the Video Satellite Objects (VISO) dataset, which includes well-annotated satellite remote sensing sequences. This dataset is one of the few publicly available resources that can be used for moving object detection and tracking.

In addition, satellite remote sensing videos still possess unique characteristics:

- The field of view in satellite remote sensing images is vast [1], and objects in motion are generally faint and occupy only a few to dozens of pixels, lacking color, texture, and other features. In the presence of noise, conventional algorithms may not detect and track such dim objects effectively.

- In addition, satellite remote sensing images typically encompass larger fields of view, more objects, and more complex backgrounds, including roads, buildings, vegetation, and various traffic conditions [2,3].

- Moreover, due to the movement of the satellite, the background of the remote sensing image demonstrates weak, non-uniform motion [4]. The satellite remote sensing image, being a projection of a satellite on a two-dimensional plane during complex three-dimensional motion [6,7], exhibits complex motion patterns.

- Furthermore, due to the dark current in the photosensitive device, material structures, and other local differences, there exist many additional noise sources, leading to variations in the gray value of each pixel for the same irradiance. After image stabilization, these variations can result in random fluctuations of pixel brightness in satellite images, posing a challenge for detecting and tracking faint objects in satellite remote sensing images.

Illustrative satellite remote sensing images are depicted in Figure 1a,b, wherein the enclosed rectangles contain numerous objects. We are attempting to focus on the moving objects, such as cars on the road and ships in the port, which are not apparent in the images, indicating the small proportion of objects in the scene. The challenge of locating objects through manual observation of these images is evident.

The characteristics mentioned above are common to satellite remote sensing images and their associated objects. Deep learning networks, characterized by their ability to learn features, have demonstrated remarkable success in computer vision due to the advancement of computer hardware processing capabilities, increasing network depth, and availability of training data. Currently, deep learning is an indispensable method to improve image processing capabilities.

Within the domain of deep learning, object detection algorithms are broadly classified into two categories [8,9,10]. The first comprises two-stage object detection methods [11,12,13], represented by R-CNN, which offer high precision. The second comprises one-stage object detection algorithms, such as the YOLO series [14,15,16,17,18] and the single-shot multi-box detector (SSD) algorithm [19], which have an advantage in speed. Despite the progress made in detecting and tracking dim objects in satellite remote sensing images [1,2,5,8], this field still lags behind other general fields. There is significant potential for enhancing existing algorithms, particularly in the detection and tracking of multi-class and multi-scale objects, as reported in previous studies [9]. However, most of these algorithms focus on improving a single aspect of remote sensing imagery and fail to address the problem of remote sensing image detection systematically.

In recent years, new frameworks have been proposed to address object detection and moving object tracking in general-purpose videos [20,21,22,23]. These algorithms have demonstrated excellent performance on ordinary datasets, prompting some researchers to transfer general visual detection and tracking algorithms to satellite remote sensing videos. However, the characteristics of objects in satellite videos make it difficult to extend these networks to satellite videos [1]. Moreover, there is a paucity of literature on object tracking in satellite videos using deep learning methods. Despite the challenges associated with improving and expanding the current moving object detection and tracking framework to large-scale satellite remote sensing videos, this endeavor has significant implications for breakthroughs in computer vision, national defense, military applications, people’s livelihoods, and many other fields.

In this paper, we propose the HB-YOLO architecture based on YOLOv7. This framework introduces novel contributions aimed at enhancing object detection and tracking in satellite remote sensing videos. The following summarizes the primary contributions of this work:

- In the backbone, we employed an enhanced variant of HorNet, a novel methodology that effectively integrates three 1 × 1 convolution models in lieu of the conventional convolution utilized in YOLO. This innovative approach enables the attainment of heightened spatial interaction, thereby facilitating improved feature extraction capabilities.

- To achieve optimal fusion of backbone-extracted features, we employed the BoTNet attention mechanism in the neck as an alternative to Extended Efficient Layer Aggregation Networks (ELANs), facilitating enhanced feature integration.

- The anchor boxes were re-selected to improve performance metrics, including mean Average Precision (mAP).

- The proposed framework was used for object detection, combined with image segmentation detection and the recent BoT-SORT algorithm for object tracking, and evaluated using standard metrics.

2. Related Works

Deep learning-based object detection algorithms have exhibited rapid development and possess superior generalization capabilities when compared to traditional approaches. Consequently, object detection and tracking algorithms rooted in deep learning have emerged as prominent research areas within the field of computer vision. Several researchers have introduced diverse neural architectures [1,2,3,4,5,6,7,8,9,10] to effectively detect and track objects in standard video settings. This study employed an object-tracking paradigm based on detection. Therefore, this section introduces the recent methods of object detection and moving object tracking, as well as related methods applied to satellite remote sensing images.

2.1. Object Detection

Traditional object detection algorithms, including edge detection and visual saliency detection, have limitations in terms of accuracy, speed, and robustness. In recent years, object detection models based on Convolutional Neural Networks (CNNs) have developed rapidly [2]. In 2014, Girshick et al. [16] proposed a two-stage algorithm, R-CNN, which first selects possible object boxes from candidate boxes, resizes them to a fixed size, and then extracts features through a CNN. Finally, the box coordinates are regressed and the categories are predicted. Since then, multiple object detection algorithms have continually emerged [3,4,10,17].

In May 2016, Redmon et al. [14] first proposed a one-stage deep learning detection algorithm, YOLO, which directly predicts the location and category of the bounding box at the output layer, significantly improving the detection speed. Although its detection accuracy for small objects was poor, this defect did not obscure its merits. In July 2022, Wang et al. [18] proposed YOLOv7, which exceeded the then-known detection algorithms in terms of speed and accuracy.

The aforementioned algorithms perform outstandingly on conventional datasets but poorly on satellite videos, mainly due to the notable differences in object characteristics. Currently, the majority of methods [19,20,21,22,23] applied to satellite remote sensing videos achieve moving object detection by modeling motion and background information.

The frame-differencing approach eliminates a significant portion of the background noise. For instance, Saleemi et al. [24] proposed a moving object detection approach based on a two-frame-differencing method. Nonetheless, background modeling and frame-differencing techniques necessitate uniform lighting and rely heavily on interframe relationships [1]. While some scholars have suggested the direct estimation method for this task, the associated computational load remains prohibitive. Consequently, scholars face a long and arduous journey to achieve satellite image object detection.

2.2. Object Tracking

The moving-object-tracking paradigm based on detection has gained increasing attention with the advancement of deep learning techniques. In 2016, Bewley et al. [23] proposed the tracking algorithm SORT based on the Kalman filter, which attracted wide attention upon release. Although it ran fast, its robustness to occlusion was poor, and it could not track objects stably.

To address this issue, Wojke et al. [25] proposed the DeepSORT algorithm. This improved SORT algorithm can track effectively over long periods and greatly reduces identity switches during tracking. However, because it requires deep feature extraction, it is time-consuming. In 2018, LaLonde [26] used a space-time CNN and a dedicated network to jointly estimate the region proposal and object centroid. In 2021, Zhang et al. [27] proposed the ByteTrack algorithm, which introduced a new Byte association method and significantly improved tracking accuracy. In 2022, Aharon et al. [28] proposed the BoT-SORT algorithm, a tracking algorithm with high speed and accuracy. This algorithm combines motion and appearance information with camera motion compensation and a more accurate Kalman filter state vector, resulting in the best performance on the MOT-Challenge dataset consisting of the MOT17 and MOT20 dense pedestrian test sets. Therefore, this algorithm was adopted in the tracking part of our study.

3. Materials and Methods

Given the characteristics of satellite remote sensing videos and the need to balance speed and accuracy, this study improved the detection framework of the one-stage algorithm YOLOv7 and coupled it with the currently popular BoT-SORT tracking algorithm.

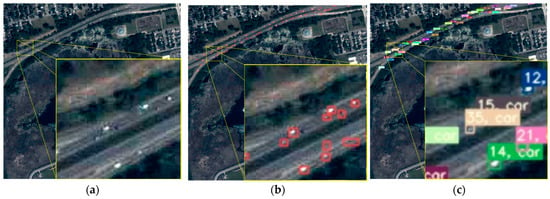

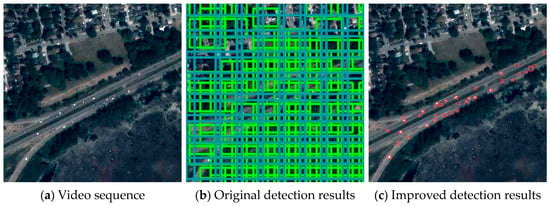

This section discusses the fundamental and modified architecture of YOLOv7 and the employed tracking algorithm. The overall process of the tracking algorithm, based on the detection framework, is depicted in Figure 2.

Figure 2.

The approximate flow of the tracking algorithm based on detection framework. Input video sequence (a) is processed by the detection network to produce the result (b), which is subsequently tracked using a tracking algorithm to obtain (c).

This study first employed the improved object detection framework to detect each frame of the satellite remote sensing video sequence (an example original image is depicted in Figure 2a) and obtain the corresponding detection results, including the center position and width and height information of the object frame. The visualization of the detection result is illustrated in Figure 2b. Next, the feature of the object is extracted, and the correlation method for similarity calculations is employed to calculate the probability that two objects are the same object by leveraging information from other frames. Finally, based on the obtained probability results, each object is assigned an ID to construct a complete tracking trajectory.

3.1. Detection and Improvement

3.1.1. Original and Improved YOLOv7

Figure 3 depicts both the original and improved network structures of YOLOv7. The original architecture begins by resizing the three-channel input image to 640 × 640 and sending it to the backbone. Subsequently, the head network outputs feature maps at three different scales, and the prediction results are produced through the re-parameterized convolution (RepConv) modules. In the backbone, the input image passes through four convolutional layers to obtain a feature map with a size of 160 × 160 × 128. This feature map is then fed to an Extended Efficient Layer Aggregation Network (ELAN) module, which is formed by multiple CBSs. A CBS consists of a convolution layer, a batch normalization layer, and a SiLU (sigmoid linear unit) activation function. In the ELAN module, the spatial size of the input and output remains the same, but the number of channels is multiplied. The ELAN variants differ only slightly, in the number of internal branches.

Figure 3.

Original and improved structure of YOLOv7.

The Maxpool with Convolution (MP-CONV) layer conducts Maxpool and convolution operations, resulting in a feature map size of 80 × 80 × 256. Applying another ELAN module yields the eight-fold down-sampled feature map, which is further down-sampled to 16-fold and 32-fold through two more groups of modules. The YOLOv7 head follows a Path Aggregation Feature Pyramid Network (PAFPN) structure, where the 32-fold down-sampled feature map is processed by a spatial pyramid pooling module with cross-stage partial connections (SPPCSPC) to change the number of channels from 1024 to 512. This feature map is then fused with the C4 and C3 feature maps in a top-down manner to generate P3, P4, and P5. The RepConv module is employed to obtain the final output.

In satellite remote sensing videos, the inherent characteristics of objects make it difficult for the original YOLOv7 structure to train efficiently and detect objects. Specifically, the shallow feature map used by YOLO fails to provide adequate semantic information, resulting in subpar detection performance for small objects [4,9]. Moreover, the standard convolution used in YOLOv7’s backbone does not explicitly model spatial interactions, which makes it inefficient at extracting the features of dim targets and causes small objects to be missed. To address this issue, this study proposed a module, named C3HB, that enables higher-order spatial interaction in the backbone. C3HB consists of three convolution layers and is based on HorNetBlock [29]. Introduced into the backbone, it extracts spatial information more fully and thereby improves object detection accuracy. Specifically, the C3HB + CONV module replaces the first and last ELAN modules in the backbone.

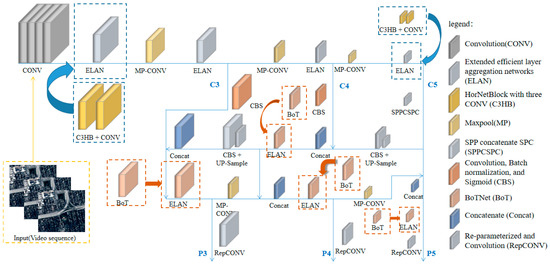

The proposed C3HB architecture is based on HorNet [29] and consists of three convolutional layers. HorNet is a framework built from spatial mixing layers, called gnCONV (recursive gated convolution), and feedforward networks, and it serves as an alternative to the Vision Transformer (ViT). This convolution-based network exhibits higher-order spatial interactions similar to those of ViT, as depicted in Figure 4a. In Figure 4, the two multiplication symbols denote the matrix product of tensors and the element-wise multiplication of matrices, respectively. The gnCONV module is a flexible plug-and-play component that can be used in various architectures. The model shown in Figure 4b is self-attention. Unlike self-attention, gnCONV only employs convolution and fully connected layers, which circumvents the quadratic complexity of self-attention and results in a faster model. Therefore, the HorNet model combines the strengths of both ViT and CNN, enabling the efficient modeling of spatial interactions of any order.

Figure 4.

gnCONV (a) and self-attention (b). Unlike the way matrix multiplication is used in self-attention, gnCONV uses simple operations to achieve spatial interaction.
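For reference, the recursive gated convolution can be written compactly as below. This is a sketch of the formulation as we understand it from HorNet [29]; the symbols $\phi_{\mathrm{in}}$, $\phi_{\mathrm{out}}$, $f_k$, $g_k$, and $\alpha$ follow that work and are not defined elsewhere in this paper, so they should be read as assumptions.

$$
\begin{aligned}
[p_0, q_0, \ldots, q_{n-1}] &= \phi_{\mathrm{in}}(x), \\
p_{k+1} &= \frac{f_k(q_k) \odot g_k(p_k)}{\alpha}, \quad k = 0, 1, \ldots, n-1, \\
y &= \phi_{\mathrm{out}}(p_n).
\end{aligned}
$$

Here $x$ is the input feature, $\phi_{\mathrm{in}}$ and $\phi_{\mathrm{out}}$ are linear projections, $f_k$ are depth-wise convolutions, $g_k$ are layers that match channel dimensions between steps, $\odot$ is element-wise multiplication, and $\alpha$ is a scaling constant. Each recursion step multiplies the running feature by one more spatially aggregated feature, so $n$ steps give an interaction of order $n$ without forming the attention matrix used in self-attention.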

In the context of satellite remote sensing video, the detection of weak objects is a crucial task that requires the extraction of advanced features. Conventionally, a convolution module of size 1 by 1 (Conv 1 × 1) is utilized to transform the dimension of channel numbers, which involves a linear combination of information among channels. Inspired by this, we innovatively integrated multiple Conv 1 × 1 into HorNet to realize the dimensional transformation of the number of channels. This facilitates the extraction of high-level features and promotes channel-level interactions, thereby aiding in the detection of small and faint objects. However, relying solely on a single Conv 1 × 1 combination may not adequately capture rich information. To address this limitation, our study used a novel methodology that incorporates three Conv 1 × 1 modules within the HorNet framework, thereby enhancing the extraction of features related to dim objects.
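The statement that a Conv 1 × 1 is a linear combination of information among channels can be illustrated with a minimal sketch. The function name `conv1x1`, the toy feature map, and the weights below are hypothetical and chosen only for illustration; the sketch omits bias and is not the implementation used in C3HB.

```python
def conv1x1(feature_map, weights):
    """Apply a 1x1 convolution (no bias) to a feature map.

    feature_map: list of C_in channels, each an H x W list of lists.
    weights: C_out x C_in matrix; row o mixes the input channels
             into output channel o, independently at every pixel.
    """
    c_in = len(feature_map)
    height, width = len(feature_map[0]), len(feature_map[0][0])
    output = []
    for row_w in weights:
        assert len(row_w) == c_in, "one weight per input channel"
        channel = [
            [sum(row_w[c] * feature_map[c][y][x] for c in range(c_in))
             for x in range(width)]
            for y in range(height)
        ]
        output.append(channel)
    return output


# Toy input: 2 channels of size 2 x 2 (hypothetical values).
fm = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[10.0, 20.0], [30.0, 40.0]],
]
# Three output channels: copy channel 0, average both, negate channel 1.
w = [[1.0, 0.0], [0.5, 0.5], [0.0, -1.0]]
out = conv1x1(fm, w)
print(len(out), out[1][0][0])
```

The spatial size is unchanged while the channel count goes from two to three, which is the dimensional transformation of the number of channels described above.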

For feature fusion, YOLOv7 uses the Path Aggregation Feature Pyramid Network (PAFPN) to fuse the multi-scale features output by the backbone [18]. However, although common modules are efficient at sharing parameters and aggregating local information [4,9], their use leads to the neglect of dim objects in satellite videos, hindering their detection. In addition, the information lost in down-sampling cannot be recovered during up-sampling. The model is strongly disturbed by the lower-resolution feature layers, so its prediction accuracy is low [9].

To mitigate this issue and inspired by the ability of attention mechanisms to integrate global information and reduce the information loss caused by subsampling, we used the attention architecture to fully incorporate extracted weak object features without ignoring them and to better integrate global information. To this end, this study employed the BoT module with an attention mechanism to replace the original ELAN module in the feature fusion stage. This can make the feature information more fully utilized instead of being ignored as noise.

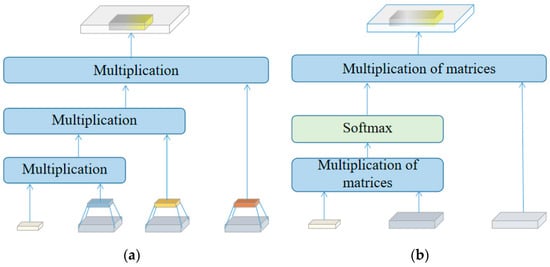

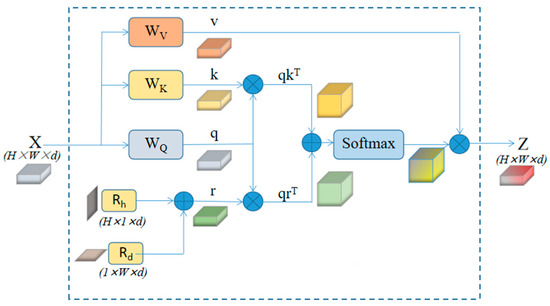

The BoT module utilized in this study is derived from the BoTNet [30] architecture, which replaces convolution with multi-head self-attention mechanisms (MHSAs) in the last three bottleneck blocks of ResNet [31]. We chose the BoTNet network because it is known for its simplicity, power, and reduced parameter count [30]. Figure 5 depicts the attention mechanism utilized in BoTNet, where H and W, respectively, represent the height and width of the input feature matrix; d is the dimension number of the token; and q, k, and r represent the query, key, and position encodings, respectively. Notably, the original ResNet architecture features down-sampling, which MHSA does not support. Therefore, a 2 × 2 average-pooling layer was appended to enable down-sampling.

Figure 5.

Self-attention mechanism in BoTNet.
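As a minimal sketch of the attention in Figure 5, the logits can be formed as the sum of a content term (q with k) and a position term (q with r), followed by a softmax over the keys. The sketch below assumes a single head, flattens the H × W grid into N tokens, and indexes the position encoding r by key position only, which is a simplification of the relative encoding in BoTNet [30]; the function names are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_with_position(q, k, v, r):
    """Single-head attention with logits q.k (content) + q.r (position).

    q, k, v, r: lists of N token vectors (N = H * W), each of dimension d.
    Returns N output vectors, each a weighted average of the rows of v.
    """
    d_v = len(v[0])
    out = []
    for qi in q:
        logits = [dot(qi, kj) + dot(qi, rj) for kj, rj in zip(k, r)]
        weights = softmax(logits)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(d_v)])
    return out


# Toy example with N = 2 tokens and d = 2 (hypothetical values).
q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
r = [[0.0, 0.0], [0.0, 0.0]]
out = attention_with_position(q, k, v, r)
print(out)
```

Because every output token is a weighted average over all N tokens, the cost grows quadratically with H × W, which is why such a block is usually placed on low-resolution feature maps.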

3.1.2. Anchor Box

The concept of anchor boxes was first introduced in Faster R-CNN [13] and has been widely adopted in various state-of-the-art object recognition models, including later versions of YOLO. In the YOLOv7 algorithm, three anchor boxes are usually generated in each feature map grid cell. The inclusion of anchor boxes has been shown to accelerate model convergence [4,18]. Therefore, the adjustment of the anchors is critical [8].

However, objects in satellite remote sensing videos tend to be small compared to those in regular datasets, and unadjusted anchors can trap backpropagation training in local optima. This issue persists even when the cosine annealing method is employed. As a consequence, the Intersection over Union (IoU) values become severely distorted, resulting in significant errors in the True Positives (TPs), False Positives (FPs), True Negatives (TNs), and False Negatives (FNs) derived from IoU calculations. Consequently, the performance metrics of Recall (Rcll), Precision (Prcn), and mean Average Precision (mAP) are adversely affected.

To mitigate this issue, it is imperative to make adjustments to the anchor box. In our approach, we precisely modified the anchor to align with the size of the target objects in satellite videos. Given the high-quality annotations and well-distributed object sizes within the dataset utilized in this study, we directly adjusted the anchor box without resorting to k-means clustering analysis or treating it as a learning hyperparameter.
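The effect of anchor size can be illustrated by comparing the overlap between a small object and two anchors that share its center. This is only a sketch: the object and anchor sizes below are hypothetical values chosen for illustration, not the anchors used in this study, and `shape_iou` is a helper name introduced here.

```python
def shape_iou(box_wh, anchor_wh):
    """IoU of two boxes with a common center, given only (width, height)."""
    bw, bh = box_wh
    aw, ah = anchor_wh
    inter = min(bw, aw) * min(bh, ah)
    union = bw * bh + aw * ah - inter
    return inter / union


small_object = (4, 4)       # hypothetical object size in pixels
generic_anchor = (12, 16)   # hypothetical anchor suited to ordinary images
adjusted_anchor = (5, 5)    # hypothetical anchor adjusted to small objects

print(shape_iou(small_object, generic_anchor))
print(shape_iou(small_object, adjusted_anchor))
```

With the oversized anchor, the overlap stays below 0.1 even when the centers coincide, whereas the adjusted anchor reaches 0.64, which is consistent with the motivation for re-selecting anchors given above.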

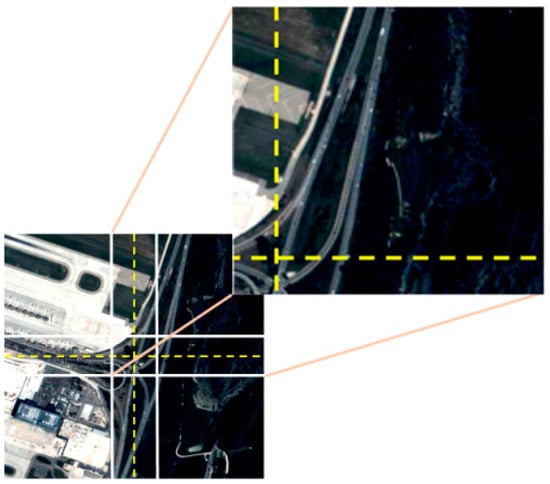

3.1.3. Image Segmentation

Detecting objects in satellite remote sensing videos poses a challenge due to their small sizes [1,4,5]. Additionally, the large dimensions of the images necessitate cropping, as a preprocessing step, to obtain appropriately sized sub-images [1,2]. However, this process alone does not increase the proportion of object pixels. In this study, we employed image segmentation detection, which involves segmenting the image and standardizing the sub-image size prior to network input, thereby increasing the proportion of target pixels. We integrated this approach into the detection framework, partitioning the original image into multiple sub-images along the x and y dimensions to increase the object proportion in each sub-image. This leads to improved detection accuracy. However, this approach may increase the detection time, which must be balanced against performance considerations.

Direct segmentation of an original image can split objects across sub-images, making them difficult to detect. To address this issue, the dividing lines must be adjusted so that each sub-image retains a sufficient overlap margin after cutting. This process is expressed in Equations (1) and (2):

$$X' = \frac{t \cdot X}{m} \tag{1}$$

$$Y' = \frac{t \cdot Y}{m} \tag{2}$$

where X and Y are the dimensions of the two-dimensional input image, and X′ and Y′ are the dimensions of each sub-image obtained after segmentation. m is the number of equal parts into which the image is divided along each dimension. As shown in Figure 6, the image is divided equally along the dotted lines into two parts in both the x and y dimensions; that is, m = 2, and m² = 4 sub-images are obtained. However, this is highly likely to cut objects lying on the dotted lines, so each sub-image needs some extra margin. Therefore, the margin index t is introduced, which is meaningful only when its value is greater than 1. Otherwise, the resulting sub-image would be smaller than the size obtained by dividing the image along the dotted lines, leading to the loss of objects. After the sub-images have been processed, the detections must be mapped back to the original image size, and only a single result should be retained for each object detected more than once.

Figure 6.

Schematic diagram of image segmentation, where m = 2, t = 1.2; dotted lines are bisector lines and solid white lines are actual dividing lines.
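The splitting step can be sketched as follows, assuming that each sub-image measures t · X / m by t · Y / m and that the windows are centered on their grid cells and shifted inward at the image border. The function name `tile_windows` and the clamping strategy are assumptions made for illustration; the text does not specify how the margin is distributed.

```python
def tile_windows(width, height, m, t):
    """Return (x0, y0, x1, y1) windows for an m x m split with margin index t.

    Each window is about t * width / m wide and t * height / m tall, so that
    neighbouring windows overlap when t > 1.
    """
    if m < 1 or t < 1:
        raise ValueError("m must be >= 1 and t must be >= 1")
    tile_w = min(width, round(t * width / m))
    tile_h = min(height, round(t * height / m))

    def starts(full, tile):
        cell = full / m
        result = []
        for i in range(m):
            # Center the window on cell i, then clamp it inside the image.
            start = round((i + 0.5) * cell - tile / 2)
            result.append(min(max(start, 0), full - tile))
        return result

    return [(x0, y0, x0 + tile_w, y0 + tile_h)
            for y0 in starts(height, tile_h)
            for x0 in starts(width, tile_w)]


# Setting of Figure 6 (m = 2, t = 1.2) on a hypothetical 1000 x 1000 frame.
windows = tile_windows(1000, 1000, m=2, t=1.2)
for win in windows:
    print(win)
```

A detection found in a window is mapped back to the full frame by adding the window offset (x0, y0) to its coordinates; objects inside the overlap bands then appear more than once and need to be merged, for example with non-maximum suppression.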

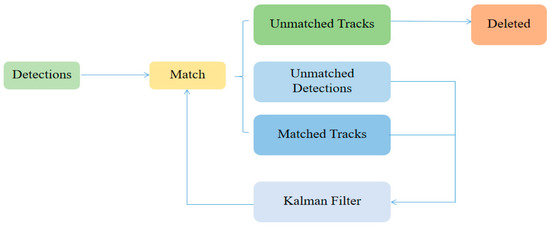

3.2. Object-Tracking Model

At present, many multiple-object-tracking algorithms build on the popular and efficient Simple Online and Realtime Tracking (SORT) algorithm [23]. The approximate flow of the SORT algorithm is shown in Figure 7.

Figure 7.

The general flow of the SORT algorithm.

SORT models the state of each object using a linear constant-velocity model, as given in Equation (3):

$$X = [u, v, s, r, u', v', s']^{T} \tag{3}$$

SORT leverages the Kalman filter to predict the motion of detection boxes. In physics, an object’s motion can be described by its dynamics when its position and momentum are known. Similarly, object detection and tracking rely on detection boxes to capture the object’s motion, necessitating knowledge of its position, speed, and size. Since detection boxes with the same area can have different widths and heights, an aspect ratio parameter is incorporated to determine their dimensions. The state vector X therefore contains four basic elements: the horizontal and vertical pixel positions of the object center (u and v), the area of the detection box (s), and its aspect ratio (r) [23]. However, these four states alone are insufficient to fully describe the object’s motion characteristics. Hence, to capture the motion state, their rates of change, that is, the velocities, are introduced. SORT assumes a constant aspect ratio throughout the detection sequence and employs a uniform linear motion model. As such, u′, v′, and s′ are the inter-frame rates of change of u, v, and s in the state vector X. The rate of change of the aspect ratio r is assumed to be zero and is therefore not included in X.
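The constant-velocity model can be sketched as below. The sketch covers only the deterministic state propagation; the covariance update and measurement correction of the Kalman filter are omitted, and the helper names (`bbox_to_state`, `predict`, `state_to_bbox`) are introduced here for illustration.

```python
import math


def bbox_to_state(x1, y1, x2, y2):
    """Convert a box to [u, v, s, r, u', v', s'] with zero initial velocity."""
    w, h = x2 - x1, y2 - y1
    return [x1 + w / 2.0, y1 + h / 2.0, w * h, w / h, 0.0, 0.0, 0.0]


def predict(state):
    """Advance the state by one frame under the constant-velocity model."""
    u, v, s, r, du, dv, ds = state
    # Position and area move by their velocities; the aspect ratio is constant.
    return [u + du, v + dv, s + ds, r, du, dv, ds]


def state_to_bbox(state):
    """Recover (x1, y1, x2, y2) from the center, area s, and aspect ratio r."""
    u, v, s, r = state[:4]
    w = math.sqrt(s * r)  # s = w * h and r = w / h, so s * r = w ** 2
    h = s / w
    return (u - w / 2.0, v - h / 2.0, u + w / 2.0, v + h / 2.0)


# Hypothetical 4 x 2 pixel box moving 1 px right and 0.5 px up per frame.
state = bbox_to_state(10, 20, 14, 22)
state[4], state[5] = 1.0, -0.5
predicted = predict(state)
print(state_to_bbox(predicted))
```

In a full tracker, the predicted box is compared with the detections of the next frame, and the matched detection is used to correct the state.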

The object detection framework generates results that are then inputted into the SORT algorithm, which utilizes the Kalman filter to predict the tracks of the current frame. The algorithm matches detections and tracks to obtain three possible outcomes.

- Unmatched tracks indicate the temporary disappearance of an object; its ID is removed if the object does not reappear within a specified number of frames.

- Unmatched detections correspond to new objects, and a new ID is assigned to each object, with Kalman filter predictions initiated.

- Matched tracks represent successful matches, with the tracks and Kalman filter being updated accordingly.
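The three outcomes listed above can be sketched with a simple association step. Note that this sketch uses greedy matching on IoU in place of the Hungarian assignment used by SORT, purely to keep the example short; the function names and the threshold value are assumptions.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, iou_threshold=0.3):
    """Greedily match predicted track boxes to detections by IoU.

    Returns (matches, unmatched_tracks, unmatched_detections), where matches
    is a list of (track_index, detection_index) pairs.
    """
    pairs = sorted(
        ((iou(t, d), ti, di)
         for ti, t in enumerate(tracks)
         for di, d in enumerate(detections)),
        reverse=True,
    )
    matches, used_t, used_d = [], set(), set()
    for score, ti, di in pairs:
        if score < iou_threshold:
            break  # remaining pairs overlap too little to be the same object
        if ti in used_t or di in used_d:
            continue
        matches.append((ti, di))
        used_t.add(ti)
        used_d.add(di)
    unmatched_tracks = [i for i in range(len(tracks)) if i not in used_t]
    unmatched_dets = [i for i in range(len(detections)) if i not in used_d]
    return matches, unmatched_tracks, unmatched_dets


# Hypothetical boxes: one track continues, one disappears, one object is new.
tracks = [(0, 0, 10, 10), (50, 50, 60, 60)]
detections = [(1, 1, 11, 11), (100, 100, 110, 110)]
matches, lost, new = associate(tracks, detections)
print(matches, lost, new)
```

Matched pairs update the corresponding Kalman filters, indices in `lost` start the countdown to ID removal, and indices in `new` receive fresh IDs.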

Through constant development, tracking algorithms based on SORT have continually emerged. In this work, we employed an enhanced version of SORT, referred to as BoT-SORT, which was proposed in 2022. BoT-SORT follows the detection-based object-tracking paradigm and implements three key modifications aimed at improving tracking accuracy and robustness, namely:

- A new cost matrix is designed that combines motion and appearance information.

- Camera motion compensation is introduced.

- A more accurate width estimate is obtained by using a more precise Kalman filter state vector, in which the original aspect ratio and height are replaced with width and height.

These modifications give the BoT-SORT algorithm a stronger association ability and more accurate object locations and bounding boxes, thereby improving its performance. On the MOT-Challenge dataset consisting of the MOT17 and MOT20 dense pedestrian test sets, BoT-SORT outperformed other object-tracking algorithms.

4. Results

4.1. Dataset

The spatial resolution of satellite remote sensing video is typically low, and the scenes feature complex and changing backgrounds, low signal-to-noise ratios, and numerous small objects. Existing remote sensing datasets, such as DOTA, DIOR, TGRS-HRRSD, and NWPU VHR-10, primarily consist of independent images with unrelated contents. However, object tracking requires satellite remote sensing image sequences. Such public datasets are few and far between.

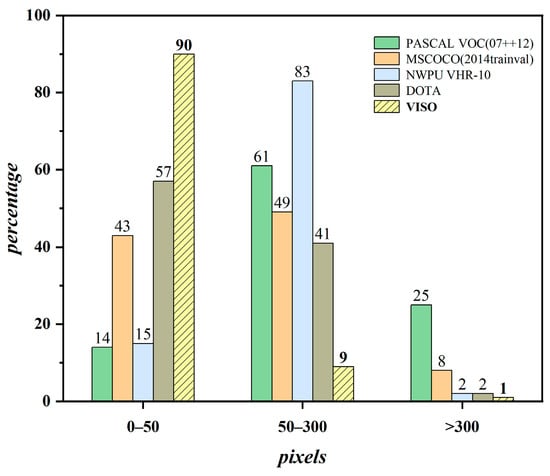

In 2022, Q. Yin et al. [1] introduced Video Satellite Objects (VISO), a novel satellite remote sensing video dataset. This dataset is composed of videos obtained from the Jilin-1 satellite platform that were subsequently processed, including cropping and manual labeling. VISO is currently one of the few datasets available for object detection and tracking. In particular, VISO is unique in that it includes satellite remote sensing image sequences, as opposed to independent images with unrelated contents. Figure 8 compares the pixel size distribution of objects in various datasets. Most objects in VISO fall in the 10–20-pixel range, well below 50 pixels.

Figure 8.

Object size distribution in different existing datasets. Ninety percent of the objects in the VISO dataset are less than 50 pixels, which makes object detection difficult.

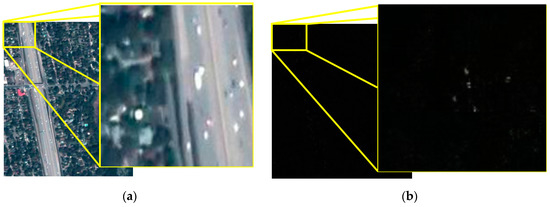

The current study employed a video sequence that is visually presented in Figure 9, showcasing the original frame and two-frame difference results. The video sequence poses a significant challenge for object detection and tracking, since the objects are considerably minuscule, and the signal-to-noise ratio is poor. Furthermore, some objects are entirely submerged in the noise, thereby exacerbating the difficulty.

Figure 9.

Original image (a) and results of difference between adjacent frames (b).
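The two-frame difference shown in Figure 9b can be sketched as a thresholded absolute difference between adjacent frames. The frames, the threshold, and the function name below are hypothetical and serve only to illustrate the operation.

```python
def frame_difference(prev_frame, next_frame, threshold):
    """Binary motion mask: 1 where |next - prev| exceeds the threshold."""
    return [
        [1 if abs(b - a) > threshold else 0 for a, b in zip(row_p, row_n)]
        for row_p, row_n in zip(prev_frame, next_frame)
    ]


# Hypothetical 3 x 4 grayscale frames: a bright one-pixel object moves one
# pixel to the right while the background fluctuates by a few gray levels.
prev_frame = [
    [10, 12, 11, 10],
    [11, 200, 12, 10],
    [10, 11, 10, 12],
]
next_frame = [
    [11, 10, 12, 10],
    [10, 13, 198, 11],
    [12, 10, 11, 10],
]
mask = frame_difference(prev_frame, next_frame, threshold=20)
for row in mask:
    print(row)
```

Both the old and the new position of the object respond, while the small background fluctuations are suppressed. For a faint object whose contrast is close to the noise level, no threshold separates the two, which is the difficulty described above.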

4.2. Evaluation Indicators

In this paper, F1-score, Recall (Rcll), Precision (Prcn), and mAP@0.5 were used as evaluation indexes for object detection. The relevant indicators are defined in detail below.

The four quantities True Positives (TPs), False Positives (FPs), True Negatives (TNs), and False Negatives (FNs) are fundamental to classification problems. Prcn is the ratio of TPs to all detection results, and Rcll is the ratio of TPs to all real objects, that is,

$$\mathrm{Prcn} = \frac{TP}{TP + FP}, \qquad \mathrm{Rcll} = \frac{TP}{TP + FN}.$$

Generally, Rcll is negatively correlated with Prcn. Because both are built on the number of True Positives, an objective evaluation combines them through their harmonic mean, which is defined as the F1-score, i.e.,

$$F1 = \frac{2 \times \mathrm{Prcn} \times \mathrm{Rcll}}{\mathrm{Prcn} + \mathrm{Rcll}}.$$

In this study, TP is the number of correctly detected objects, and FP is the number of background regions falsely detected as objects in the satellite remote sensing video. FN is the number of real objects that the detector missed.
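The relationship between these counts and the three detection metrics can be sketched as follows. The function name and the example counts (60 TPs, 40 FPs, 20 FNs) are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def detection_scores(tp, fp, fn):
    """Return (Prcn, Rcll, F1) from detection counts.

    Prcn = TP / (TP + FP), Rcll = TP / (TP + FN),
    F1 is the harmonic mean of Prcn and Rcll.
    Zero denominators yield 0.0 instead of raising.
    """
    prcn = tp / (tp + fp) if (tp + fp) else 0.0
    rcll = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * prcn * rcll / (prcn + rcll) if (prcn + rcll) else 0.0
    return prcn, rcll, f1


# Hypothetical counts, not results from the paper.
prcn, rcll, f1 = detection_scores(tp=60, fp=40, fn=20)
print(f"Prcn={prcn:.3f} Rcll={rcll:.3f} F1={f1:.3f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a detector cannot obtain a high F1-score by maximizing only Rcll or only Prcn.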

The metric used in this study to evaluate object detection performance is mAP@0.5, which is the mean average precision computed with an Intersection over Union (IoU) threshold of 0.5 [9]. IoU is defined as the ratio of the intersection area between the ground truth box and the predicted box to the union area of the two. Because the objects in the satellite remote sensing video are very small, IoU is highly sensitive to even slight shifts of the predicted box [1]; a small offset can separate the prediction box completely from the annotation, which greatly decreases detection accuracy. This study therefore considered areas with IoU values greater than or equal to 0.4 as true-positive detections and areas with IoU values less than or equal to 0.3 as background, and the remaining areas were discarded.
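The sensitivity of IoU to small offsets can be illustrated with a minimal sketch. The box format (x1, y1, x2, y2), the function names, and the example boxes are assumptions made for illustration; only the 0.4 and 0.3 thresholds come from the text above.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    inter_w = max(0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def label_by_iou(value, pos_thr=0.4, neg_thr=0.3):
    """Assign a label using the thresholds stated in the text."""
    if value >= pos_thr:
        return "positive"
    if value <= neg_thr:
        return "background"
    return "discarded"


# The same 2-pixel diagonal shift applied to a 5 x 5 and a 50 x 50 object.
small = iou((0, 0, 5, 5), (2, 2, 7, 7))
large = iou((0, 0, 50, 50), (2, 2, 52, 52))
print(round(small, 3), label_by_iou(small))
print(round(large, 3), label_by_iou(large))
```

A shift that barely affects the large box pushes the small box below the background threshold, which is why a relaxed positive threshold is a reasonable choice for tiny objects.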

Furthermore, the performance of the tracking network was assessed through multiple-object tracking accuracy (MOTA), multiple-object tracking precision (MOTP), ID switches (IDs), and FPS. IDs counts the number of times the identity assigned to a tracked target changes. MOTA measures the cumulative tracking errors, which are correlated with the detection results [1]. It is calculated as follows:

$$\mathrm{MOTA} = 1 - \frac{\sum_t \left( FN_t + FP_t + IDs_t \right)}{\sum_t GT_t}$$

where $FN_t$, $FP_t$, and $IDs_t$ are the False Negatives, False Positives, and ID switches in frame $t$, as defined above, and $GT_t$ is the number of Ground Truth (GT) labels marked in the dataset for frame $t$.

On the other hand, MOTP is insensitive to changes in the detection results. The formula is:

$$\mathrm{MOTP} = \frac{\sum_{t,i} d_{t,i}}{\sum_t c_t} \qquad (8)$$

where $d_{t,i}$ is the average measured distance between the detection object $i$ and the ground truth assigned to it in frame $t$, while $c_t$ is the number of successful matches in frame $t$. It can be seen from Formula (8) that MOTP mainly quantifies the positioning accuracy of the detector and contains almost no information related to the actual performance of the tracker.
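The two tracking metrics can be sketched numerically, assuming the standard CLEAR MOT forms: MOTA is one minus the summed FN, FP, and ID switches divided by the summed ground truth count, and MOTP is the summed matching distance divided by the total number of matches. The function names and the three-frame example values are hypothetical.

```python
def mota(fn_per_frame, fp_per_frame, ids_per_frame, gt_per_frame):
    """MOTA = 1 - sum_t(FN_t + FP_t + IDs_t) / sum_t(GT_t)."""
    total_gt = sum(gt_per_frame)
    if total_gt == 0:
        raise ValueError("no ground truth objects")
    errors = sum(fn_per_frame) + sum(fp_per_frame) + sum(ids_per_frame)
    return 1 - errors / total_gt


def motp(distances_per_frame):
    """MOTP = sum_{t,i} d_{t,i} / sum_t c_t.

    distances_per_frame[t] holds one distance per matched pair in frame t,
    so its length is c_t.
    """
    total_matches = sum(len(frame) for frame in distances_per_frame)
    if total_matches == 0:
        raise ValueError("no matched pairs")
    return sum(sum(frame) for frame in distances_per_frame) / total_matches


# Hypothetical three-frame sequence with 10 ground truth objects per frame.
mota_value = mota([2, 1, 3], [1, 0, 1], [0, 1, 0], [10, 10, 10])
motp_value = motp([[0.5, 0.7], [0.6], [0.8, 0.4, 0.6]])
print(round(mota_value, 3), round(motp_value, 3))
```

Note that MOTA can become negative when the number of errors exceeds the number of ground truth objects, whereas MOTP depends only on the pairs that were matched.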

Finally, FPS is utilized to quantify the speed of the algorithm, with its value being dependent on the device used for the computation.

The experimental setup consisted of a computer equipped with a 4.20 GHz Intel Core i7-7700K CPU and an NVIDIA GeForce GTX 1070 Ti GPU. We first implemented the improved architecture and then adjusted the three groups of anchors to [2 × 3, 5 × 7, 8 × 6], [5 × 6, 8 × 14, 15 × 11], and [12 × 6, 19 × 36, 40 × 28]. Both the original YOLOv7 model and the modified model were then trained on the VISO dataset using the SGD optimizer and a cosine annealing schedule for 65 and 100 epochs, respectively. Finally, the trained detection model was employed for object tracking.
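For readers unfamiliar with cosine annealing, the following sketch shows the generic single-cycle form of the schedule. It is not the exact schedule used in the training code: the initial and final learning rates (0.01 and 0.0001) are hypothetical, and only the 100-epoch length comes from the text.

```python
import math


def cosine_annealing_lr(epoch, total_epochs, lr_max, lr_min):
    """Learning rate at `epoch` for one cosine decay from lr_max to lr_min."""
    if not 0 <= epoch <= total_epochs:
        raise ValueError("epoch must lie in [0, total_epochs]")
    cosine = math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + cosine)


# Hypothetical rates; 100 epochs as used for the modified model.
schedule = [cosine_annealing_lr(e, 100, 0.01, 0.0001) for e in range(101)]
print(schedule[0], schedule[50], schedule[100])
```

The rate falls slowly at first, fastest in the middle of training, and slowly again near the end, which tends to give a gentler final convergence than a step schedule.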

For segmentation, because this method divides a single image into multiple sub-images, detection can be computationally intensive. Hence, we analyzed the effectiveness of this method only for the cases in which each image is divided equally into 4 and 9 sub-images, which were then detected separately. The results were subsequently used for tracking and evaluation, and the corresponding performance metrics were calculated.
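The segmentation step can be sketched as follows, assuming that dividing an image equally into 4 or 9 parts means a 2 × 2 or 3 × 3 grid and that detections from each sub-image are shifted back into full-frame coordinates before tracking. The function names, the 900 × 600 frame size, and the example box are hypothetical.

```python
def split_into_tiles(width, height, n_per_side):
    """Return tile bounds (x0, y0, x1, y1) for an n x n grid, row by row."""
    tiles = []
    for row in range(n_per_side):
        for col in range(n_per_side):
            x0 = col * width // n_per_side
            x1 = (col + 1) * width // n_per_side
            y0 = row * height // n_per_side
            y1 = (row + 1) * height // n_per_side
            tiles.append((x0, y0, x1, y1))
    return tiles


def to_frame_coords(box, tile):
    """Shift a box detected inside a tile back to full-frame coordinates."""
    x0, y0 = tile[0], tile[1]
    return (box[0] + x0, box[1] + y0, box[2] + x0, box[3] + y0)


# Hypothetical 900 x 600 frame split into 9 and into 4 sub-images.
tiles9 = split_into_tiles(900, 600, 3)
tiles4 = split_into_tiles(900, 600, 2)

# A detection reported in the local coordinates of the central tile.
mapped = to_frame_coords((10, 20, 18, 27), tiles9[4])
print(len(tiles9), tiles9[4], mapped)
```

Each sub-image is fed to the detector separately, so the number of forward passes per frame grows with the number of tiles, which is the computational cost mentioned above. Objects that straddle a tile border are not handled in this sketch.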

4.3. Experiment and Analysis

In the present study, a modified version of the YOLOv7 network was employed. The entire dataset was utilized for model training. During the detection phase, the first seven representative video frames from the VISO dataset were employed.

Whether a model converges during training can be judged from its loss value, so we used the loss to determine whether the experimental network learns effectively. Comparative experiments were conducted using the proposed improvements, and the results are summarized in Table 1. The original YOLOv7 model was trained three times on the VISO dataset to mitigate the impact of chance. However, all runs exhibited erratic training losses, and the Rcll, Prcn, and mAP@0.5 scores fluctuated irregularly before quickly dropping to zero. These outcomes were deemed meaningless and were discarded. They indicate that the network failed to learn effectively and highlight the need for an enhanced framework optimized for this task, which is the main focus of this research.

Table 1.

Results of ablation experiments on the structure and anchor improvement, where -- represents meaninglessness and √ represents an implemented improvement.

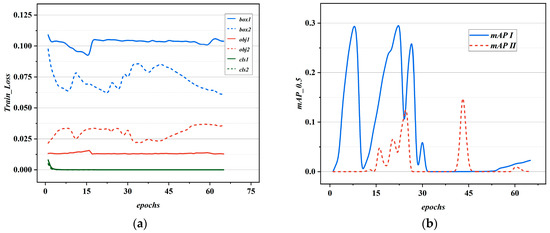

Because objects in satellite videos are significantly smaller, we initially adjusted only the anchors and retrained the model. The experiment was run twice to reduce the effect of random variation. Each run consisted of 65 epochs, and the corresponding training loss values and mAP@0.5 results are depicted in Figure 10a,b, respectively. The loss values for the bounding box (Box), object (obj), and classes (cls) are shown in Figure 10a. The term “mAP” represents the mean average precision at IoU = 0.5, while 1 (I) and 2 (II) denote the experiment numbers. Normally, the loss should decrease and the mAP should increase during training. Here, however, the loss values did not decrease and the model failed to learn, despite the various adjustments. Increasing the number of training epochs did not yield any significant improvement either.

Figure 10.

Training results obtained by simply adjusting anchors based on the original frame. (a) is the loss value and (b) is the mAP.

Next, we modified the network architecture. With the structural improvement alone, the Recall (Rcll), Precision (Prcn), and mAP@0.5 obtained during training remained poor, whereas the training loss behaved as expected. Because the two training sessions produced nearly identical results, Figure 11a displays their average. The three loss values first declined and then stabilized, confirming that the network was able to learn. We then adjusted the anchors on this basis. Although the loss values closely matched those obtained with the structural improvement alone, Rcll, Prcn, and mAP improved significantly, as shown in Figure 11b. This matches the last row of Table 1.

Figure 11.

The result after improving the structure and anchor. (a) Training loss and (b) other important indicators.

This phenomenon can be explained as follows. Between the initial feature extraction through convolution and the final up-sampling, the conventional convolution operation does not model spatial interactions among different regions, so small objects in satellite videos may be missed. In contrast, Conv 1 × 1 forms linear combinations of information across channels, which enhances the network’s capability to capture more advanced and interactive features. Additionally, the gnCONV module adopted from HorNet enables high-order spatial interactions, which allows more comprehensive information to be extracted and richer features to be acquired. During the feature fusion stage, common modules offer parameter sharing and efficient local information aggregation [31] but fail to capture dim objects in satellite videos, which degrades detection. To address this issue, the attention mechanism was introduced, which integrates global information and makes comprehensive use of the feature information. As a result, the weak object features were not neglected but were fully incorporated, thus enhancing the object recognition ability.
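The statement that Conv 1 × 1 forms a linear combination of information between channels can be made concrete with a minimal pure-Python sketch. The nested-list feature map layout [channel][row][column], the function name, and the weights are illustrative assumptions; bias and activation are omitted.

```python
def conv1x1(feature_map, weights):
    """Apply a 1 x 1 convolution without bias.

    feature_map: [C_in][H][W] nested lists.
    weights:     [C_out][C_in]; each output channel is a weighted sum of
                 the input channels, computed independently at each pixel.
    """
    c_in = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    output = []
    for w_row in weights:
        if len(w_row) != c_in:
            raise ValueError("weight row length must equal input channels")
        channel = [[sum(w_row[c] * feature_map[c][y][x] for c in range(c_in))
                    for x in range(width)]
                   for y in range(height)]
        output.append(channel)
    return output


# Two input channels of size 2 x 2 and two output channels (toy values).
features = [[[1, 2], [3, 4]],
            [[10, 20], [30, 40]]]
mixed = conv1x1(features, [[1, 1], [2, -1]])
print(mixed)
```

Every output pixel depends only on the same pixel position in the input channels, so a 1 × 1 convolution mixes channels but adds no spatial interaction by itself; the spatial interactions come from the other components described above.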

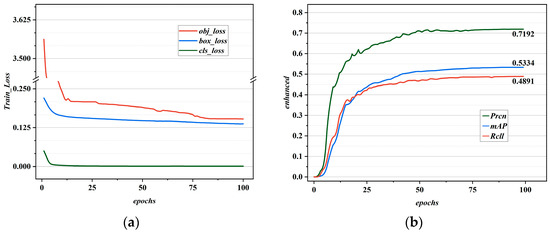

The results of the two tests, before and after anchor adjustment, are presented in Figure 12. Before anchor adjustment, the improved network can detect the objects, but the bounding boxes are too large. After adjustment, the bounding boxes fit the objects better. Figure 11b demonstrates the positive impact of adjusting the anchors on Recall (Rcll) and Precision (Prcn): both metrics improved, reflecting an increase in True Positives (TPs) and a decrease in both False Positives (FPs) and False Negatives (FNs). Additionally, the increase in mAP signifies a closer match between the size of the detection box and the actual target, that is, higher Intersection over Union (IoU) values.

Figure 12.

The influence of anchor size selection on object detection and tracking. (a) Object coordinates can be detected, but anchor boxes are inappropriate; (b) the adjusted result.

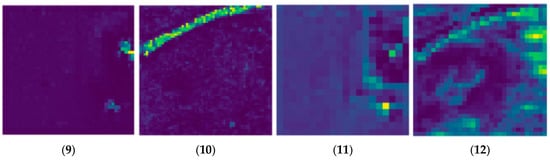

In addition, we used the weights obtained by different frameworks in Table 1 to conduct object detection in the satellite video, and the visual results are shown in Figure 13. To optimize visualization, the background section lacking objects was removed, leaving only the densely packed object area. It is discernible from the figure that the detection outcomes using the original framework failed to differentiate the objects in question. In contrast, the improved framework enabled the effective detection of objects.

Figure 13.

Original image (a) and results obtained using different detection frameworks. (b) is the result of using the unimproved network; the boxes of different colors are false detections. (c) is the result of using the improved network.

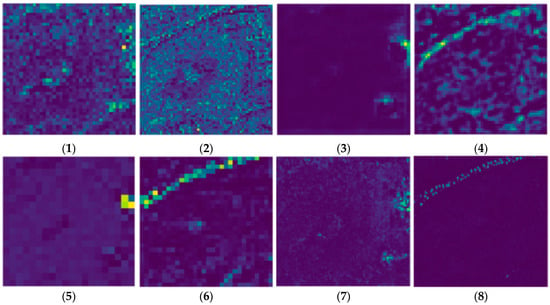

Figure 14 depicts the feature maps derived from detection with both the original and improved frameworks, as well as the ablation results. The results suggest that the enhanced structure is effective in detecting faint objects in satellite videos. Because displaying all of the more than 60 layers of the framework would be cumbersome, only the significant feature maps, namely C3, C4, C5, P3, P4, and P5, are presented in pairs, corresponding to (1) (2), (3) (4), (5) (6), (7) (8), (9) (10), and (11) (12) in Figure 14. Ci represents the feature layer output at the i-th stage of the backbone, with a resolution of $1/2^i$ of the input. Pi is a similar feature layer taken from a different output location. The specific locations of these layers are illustrated in Figure 3. The odd-numbered maps were obtained from the original structure, whereas the even-numbered maps were generated by the improved structure. Compared with the feature maps of the original framework, the improved feature maps respond to the objects, which appear as dense bright spots. Although some noise is present, the results were favorable overall.

Figure 14.

Important feature diagrams using the original and improved frameworks. The odd-numbered labels are the results obtained by using the original network, and the even-numbered labels are the results obtained by using the improved network.

We employed the improved network for detection and performed ablation studies on the two improved modules in the backbone. Specifically, Figure 14 (2) depicts the outcomes of the effective C3HB module after retaining only low-rate down-sampling. Compared to the result obtained by the original network, as depicted in Figure 14 (1), the improved C3HB module in the backbone produced a C3 feature map that differed significantly from that of the original network, indicating the efficacy of the improved module with dim objects.

Building upon this, we utilized the C3HB module in the final down-sampling stage of the backbone, and the resulting 32-fold down-sampled feature is shown in Figure 14 (6). Compared to the outcome obtained by the original backbone, shown in Figure 14 (5), the network could effectively extract valuable features, which indicates that the module remains effective at high down-sampling rates. Nonetheless, an ablation of BoTNet alone would not be very meaningful, since the backbone is responsible for feature extraction, while BoTNet is in charge of feature fusion. If the backbone provides inadequate features, the outcome of feature fusion is insignificant.

Figure 14 (8), (10), and (12) depict the feature maps of P3, P4, and P5, respectively, that were obtained using the improved detection framework. The results obtained using the unimproved framework are shown in Figure 14 (7), (9), and (11), respectively. The improved framework effectively integrated the features extracted by the backbone, thereby enabling the detection of dim objects in satellite remote sensing images. It should be emphasized that the feature maps presented in Figure 14 were obtained using the same input image, and similar results are expected for other satellite remote sensing images.

Table 2 depicts the findings of the ablation experiment conducted on the VISO dataset, wherein different methods were implemented. The HorNet approach offers the notable benefit of facilitating high-order spatial interactions, which renders it capable of identifying faint objects through a solitary C3HB module. However, the results of the experiment revealed that the detection effect of HorNet was relatively poor compared to other methods. On the other hand, using two C3HB modules in the backbone architecture led to reduced loss values and improved performance indicators. The visualization results are illustrated in Figure 14 (2), (4). Further, an improved backbone architecture was developed by integrating BoTNet, which effectively improved metrics, such as F1 and mAP, as shown in Table 2. The simultaneous use of C3HB and BoT modules proved to be a potent combination for detecting small dim objects.

Table 2.

Results of different variants on VISO datasets.

In recent years, several improved modules and frameworks have appeared for satellite image tracking [32,33,34,35,36,37,38,39]. We compared our results with those achieved through several of these techniques and summarized them in Table 3. The compared methods include frame difference (FD) [32], fast robust matrix completion (FRMC) [35], two background modeling-based methods, namely Gaussian mixture models (GMM) [33] and MGB [38], and a low-rank matrix decomposition approach, FPCP [39]. All the results were obtained by analyzing the identical videos on a frame-by-frame basis. As Rcll and Prcn usually have an inverse relationship, an excessive emphasis on Rcll can reduce Prcn: a higher Rcll means fewer missed detections but typically comes with more false detections. Assessing the model’s performance based on a single metric would therefore be inappropriate. Hence, we employed the F1-score, the harmonic mean of Rcll and Prcn, for a balanced evaluation. Higher F1-scores indicate better performance. In addition, a higher value of mAP indicates greater detection accuracy.

Table 3.

Comparison of results of different methods, and the optimal results are shown in bold numbers.

In machine learning, the size and computational cost of a network are commonly assessed by its number of parameters and its floating-point operations (FLOPs), respectively. A larger number of parameters requires more memory, while higher FLOPs indicate increased model complexity and computational demands, which can impact device performance. The updated model in this study has 32.28 M parameters and 40.8 GFLOPs. Despite the improvements made, its parameter count is similar to that of the unimproved algorithm.
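As a back-of-the-envelope illustration of the link between parameter count and memory, the sketch below estimates the storage needed for the weights alone. The 4-byte (float32) and 2-byte (float16) precisions are assumptions, and activations, gradients, and optimizer state are ignored.

```python
def parameter_memory_mb(num_params, bytes_per_param=4):
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


# 32.28 M parameters, as reported for the updated model.
fp32_mb = parameter_memory_mb(32.28e6)       # assumed float32 storage
fp16_mb = parameter_memory_mb(32.28e6, 2)    # assumed float16 storage
print(f"{fp32_mb:.2f} MB (float32), {fp16_mb:.2f} MB (float16)")
```

Under these assumptions the weights occupy roughly 129 MB in float32, which fits comfortably in the memory of the GPU used in the experiments.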

For segmentation detection, object-tracking metrics were compared on the same video from the VISO dataset. The results are shown in Table 4. When the original image was divided into nine sub-images, MOTA increased by four percentage points. MOTA measures the accumulation of tracking errors during the tracking process, so a larger MOTA indicates a smaller error and suggests that the tracking algorithm is more robust to interference from stationary objects. MOTP, on the other hand, is computed from the average distance between the object and the ground truth and thereby mainly reflects the positioning accuracy of the detector. A decrease in MOTP indicates a reduction in the accuracy of the object position. Despite this, a lower value of IDs indicates that the tracking is more stable. FPS, the number of frames processed per second, decreases as the number of splits increases. Within the tested range, tracking accuracy increases with the number of splits, but a larger number of splits also increases processing time.

Table 4.

Influences of different segmentation indexes on object tracking, and the optimal results are shown in bold numbers.

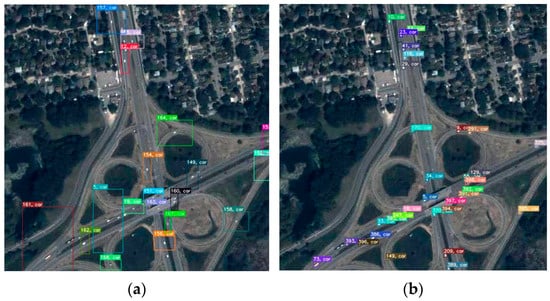

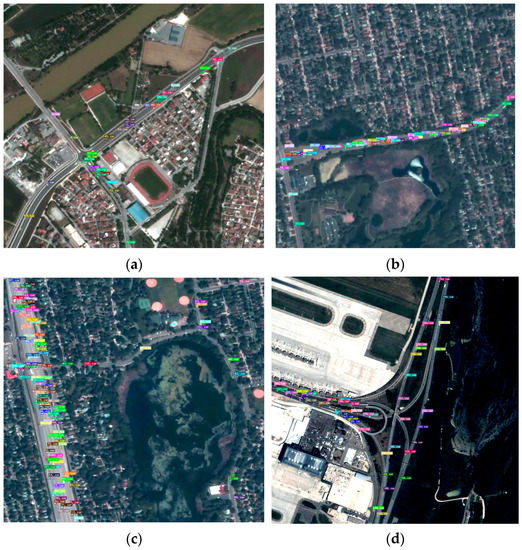

Our approach is based on a widely used deep learning framework. Since the deep learning framework detects targets based on their morphological and motion information, the improved network shows versatility in detecting moving objects in satellite remote sensing videos. The detection results of our approach on different satellite remote sensing datasets are presented in Figure 15, which confirms its efficacy across diverse scenarios. On these datasets, the mAP of the improved detection framework exceeded 0.5 in every case, which means that the network can detect moving targets.

Figure 15.

The improved model was used to detect results using other remote sensing images. (a–d) are from different datasets.

5. Discussion

In view of the limitations of YOLOv7 in detecting dim objects in satellite remote sensing videos, we proposed an improved detection framework that incorporates HorNet and BoTNet. Our experimental results demonstrated that the improved network can effectively detect small dim objects in satellite remote sensing videos, significantly enhancing detection accuracy. Furthermore, we introduced a segmentation detection method that improves the accuracy of the tracking algorithm and reduces IDs.

However, our improved model is not particularly fast, and further research is needed to make the model lightweight without sacrificing accuracy. In addition, we plan to collect and curate more satellite video data to further verify the proposed algorithm. Improving the speed of the algorithm while maintaining accuracy is our next research direction.

6. Conclusions

Currently, YOLOv7 is a popular deep learning framework used for object detection, and tracking algorithms based on detection frameworks have become the norm for object tracking. This study proposed an enhanced model named HB-YOLO for detecting objects in satellite remote sensing videos based on the YOLOv7 framework. The proposed network incorporates Conv 1 × 1 and HorNet, enabling more comprehensive feature extraction by leveraging high-order spatial interactions and a combination of information among channels. Moreover, an attention mechanism was integrated into the model to effectively utilize the features extracted by the backbone. The improved detection framework was then utilized as an input to the BoT-SORT tracking algorithm. The improved algorithm proposed in this paper was capable of detecting objects in satellite remote sensing videos, resulting in an increased F1-score of 0.58 and a mAP@0.5 score of 0.53 compared to the original detection algorithm. Additionally, the segmentation detection method introduced in this study enhanced the essential indexes of MOTA and IDs in the tracking algorithm. Compared with mainstream algorithms for moving objects, the improved model is more suitable for moving objects in satellite remote sensing videos. The tracking of moving objects from satellite remote sensing images has wide-ranging applications in urban vehicle road control and innovative cities, highlighting the practical significance of our study.

Author Contributions

Conceptualization, Z.F. and C.Y.; methodology, C.Y.; software, Z.W. and R.W.; validation, B.S. and C.C.; formal analysis, C.C.; investigation, C.Y.; resources, Z.F.; data curation, C.Y. and R.W.; writing—original draft preparation, C.Y.; writing—review and editing, Z.F. and C.C.; visualization, Z.W.; supervision, B.S.; project administration, C.Y.; funding acquisition, Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Shaanxi Province, grant number 2020JM-206; State Key Laboratory of Laser Interaction with Matter, grant number SKLLIM2103; and 111 project, grant number B17035.

Data Availability Statement

Data are available on request due to restrictions, e.g., privacy or ethical restrictions.

Acknowledgments

The authors thank the optical sensing and measurement team from Xidian University for their help.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Yin, Q.; Hu, Q.; Liu, H.; Zhang, F.; Wang, Y.; Lin, Z.; An, W.; Guo, Y. Detecting and Tracking Small and Dense Moving Objects in Satellite Videos: A Benchmark. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5612518.

2. Zhao, M.; Li, S.; Xuan, S.; Kou, L.; Gong, S.; Zhou, Z. SatSOT: A Benchmark Dataset for Satellite Video Single Object Tracking. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5617611.

3. Ye, F.; Ai, T.; Wang, J.; Yao, Y.; Zhou, Z. A Method for Classifying Complex Features in Urban Areas Using Video Satellite Remote Sensing Data. Remote Sens. 2022, 14, 2324.

4. Yang, L.; Yuan, G.; Zhou, H.; Liu, H.; Chen, J.; Wu, H. RS-YOLOX: A High-Precision Detector for Object Detection in Satellite Remote Sensing Images. Appl. Sci. 2022, 12, 8707.

5. Wang, Y.; Wang, T.; Zhang, G.; Cheng, Q.; Wu, J. Small Object Tracking in Satellite Videos Using Background Compensation. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7010–7021.

6. Li, W.; Gao, F.; Zhang, P.; Li, Y.; An, Y.; Zhong, X.; Lu, Q. Research on Multiview Stereo Mapping Based on Satellite Video Images. IEEE Access 2021, 9, 44069–44083.

7. Shao, J.; Du, B.; Wu, C.; Zhang, L. Tracking Objects From Satellite Videos: A Velocity Feature Based Correlation Filter. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7860–7871.

8. Wu, J.; Cao, C.; Zhou, Y.; Zeng, X.; Feng, Z.; Wu, Q.; Huang, Z. Multiple Ship Tracking in Remote Sensing Images Using Deep Learning. Remote Sens. 2021, 13, 3601.

9. Liu, Z.; Gao, Y.; Du, Q.; Chen, M.; Lv, W. YOLO-Extract: Improved YOLOv5 for Aircraft Object Detection in Remote Sensing Images. IEEE Access 2023, 11, 1742–1751.

10. Van Etten, A. You Only Look Twice: Rapid Multi-Scale Object Detection In Satellite Imagery. arXiv 2018, arXiv:1805.09512.

11. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015; pp. 1440–1448.

12. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE ICCV, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

14. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

15. Xu, D.; Wu, Y. Improved YOLO-V3 with DenseNet for multi-scale remote sensing object detection. Sensors 2020, 20, 4276.

16. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

17. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

18. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

19. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the IEEE ECCV, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

20. Yazdi, M.; Bouwmans, T. New trends on moving object detection in video images captured by a moving camera: A survey. Comput. Sci. Rev. 2018, 28, 157–177.

21. Hu, Q.; Guo, Y.; Lin, Z.; An, W.; Cheng, H. Object tracking using multiple features and adaptive model updating. IEEE Trans. Instrum. Meas. 2017, 66, 2882–2897.

22. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-convolutional Siamese networks for object tracking. arXiv 2016, arXiv:1606.09549.

23. Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and real-time tracking. In Proceedings of the 23rd IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

24. Saleemi, I.; Shah, M. Multiframe many–many point correspondence for vehicle tracking in high density wide area aerial videos. Int. J. Comput. Vis. 2013, 104, 198–219.

25. Wojke, N.; Bewley, A.; Paulus, D. Simple online and real-time tracking with a deep association metric. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

26. Lalonde, R.; Zhang, D.; Shah, M. ClusterNet: Detecting small objects in large scenes by exploiting spatio-temporal information. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4003–4012.

27. Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-Object Tracking by Associating Every Detection Box. arXiv 2021, arXiv:2110.06864.

28. Aharon, N.; Orfaig, R.; Bobrovsky, B. BoT-SORT: Robust Associations Multi-Pedestrian Tracking. arXiv 2022, arXiv:2206.14651.

29. Rao, Y.; Zhao, W.; Tang, Y.; Zhou, J.; Lim, S.; Lu, J. HorNet: Efficient High-Order Spatial Interactions with Recursive Gated Convolutions. arXiv 2022, arXiv:2207.14284.

30. Srinivas, A.; Lin, T.; Parmar, N.; Shlens, J.; Abbeel, P.; Vaswani, A. Bottleneck Transformers for Visual Recognition. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 16514–16524.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

32. Cao, Y.; Wang, G.; Yan, D.; Zhao, Z. Two algorithms for the detection and tracking of moving vehicle objects in aerial infrared image sequences. Remote Sens. 2016, 8, 28.

33. Zivkovic, Z.; Van der Heijden, F. Efficient adaptive density estimation per image pixel for the task of background subtraction. Pattern Recognit. Lett. 2006, 27, 773–780.

34. Barnich, O.; Van Droogenbroeck, M. ViBe: A universal background subtraction algorithm for video sequences. IEEE Trans. Image Process. 2011, 20, 1709–1724.

35. Rezaei, B.; Ostadabbas, S. Background subtraction via fast robust matrix completion. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 1871–1879.

36. Pflugfelder, R.; Weissenfeld, A.; Wagner, J. On learning vehicle detection in satellite video. arXiv 2020, arXiv:2001.10900.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

38. Reilly, V.; Idrees, H.; Shah, M. Detection and tracking of large number of objects in wide area surveillance. Proc. Eur. Conf. Comput. Vis. 2010, 6313, 186–199.

39. Rodriguez, P.; Wohlberg, B. Fast principal component pursuit via alternating minimization. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, VIC, Australia, 15–18 September 2013; pp. 69–73.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).