A Benchmark for Multi-Modal LiDAR SLAM with Ground Truth in GNSS-Denied Environments

Abstract

1. Introduction

- 1.

- A ground truth trajectory generation method for environments where MOCAP or GNSS/RTK are unavailable. The method leverages the multi-modality of the data acquisition platform and high-resolution sensors;

- 2.

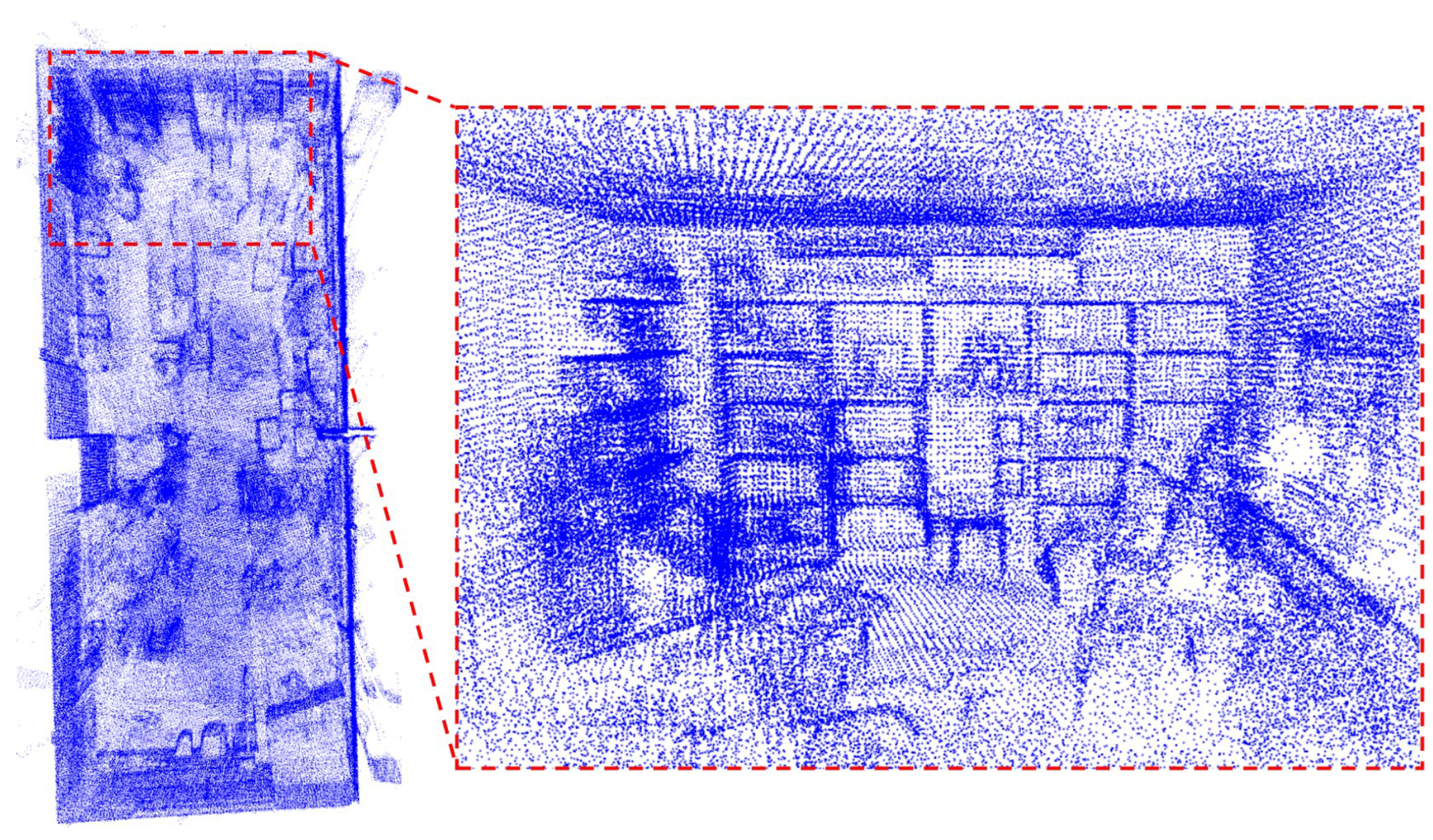

- A new dataset composed of data from five different LiDAR sensors, one LiDAR camera, and one stereo fisheye camera (see Figure 2) in various environments. Ground truth data are provided for all sequences;

- 3.

- The benchmarking of ten state-of-the-art filter-based and optimization-based SLAM methods on our proposed dataset in terms of the accuracy of odometry, memory, and computing resource consumption. The results indicate the limitations of the current SLAM algorithms and potential future research directions.

2. Related Works

2.1. Three-Dimensional LiDAR SLAM

2.2. SLAM Benchmarks

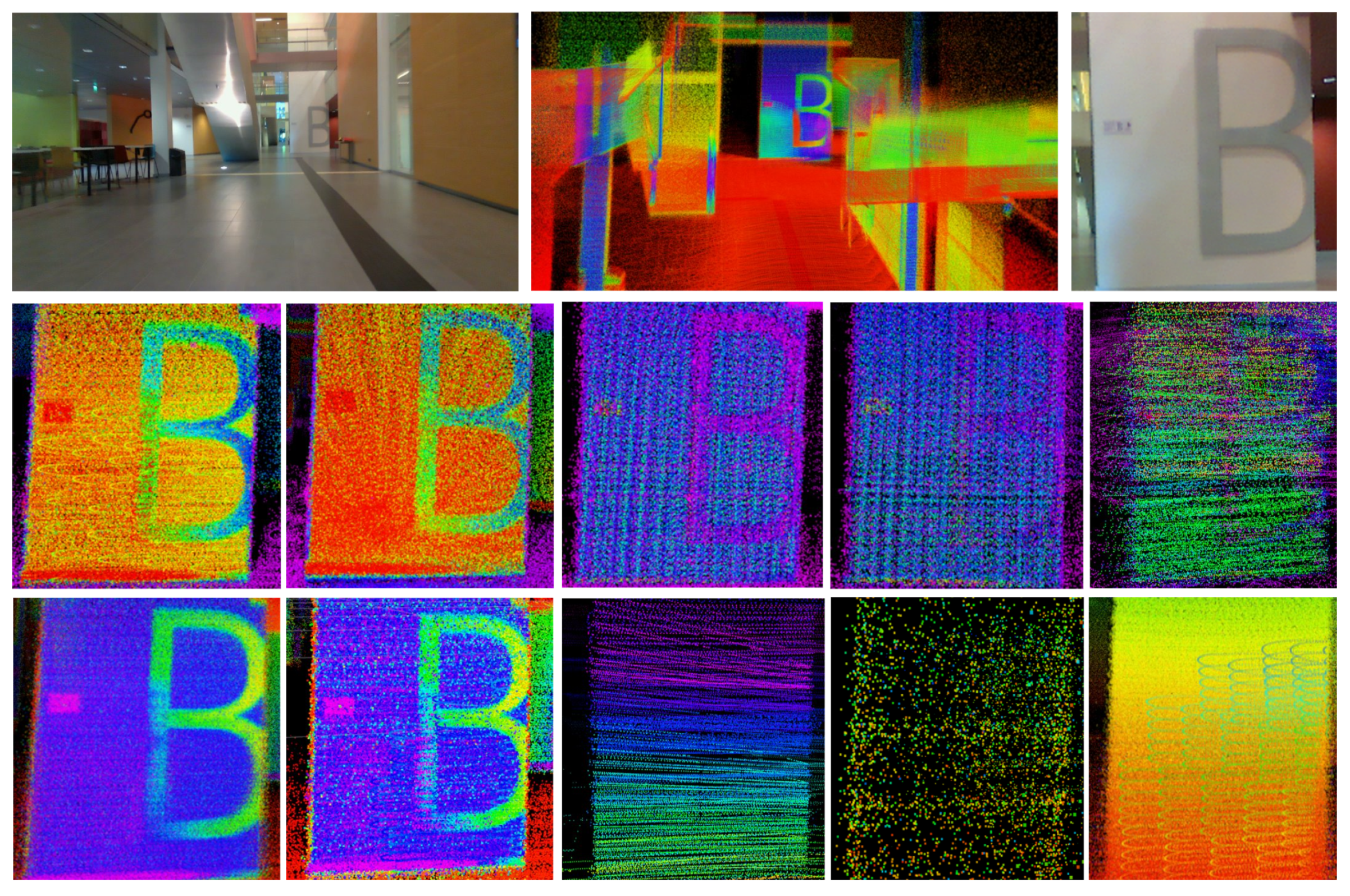

3. Data Collection

3.1. Data Collection Platform

3.2. Calibration and Synchronization

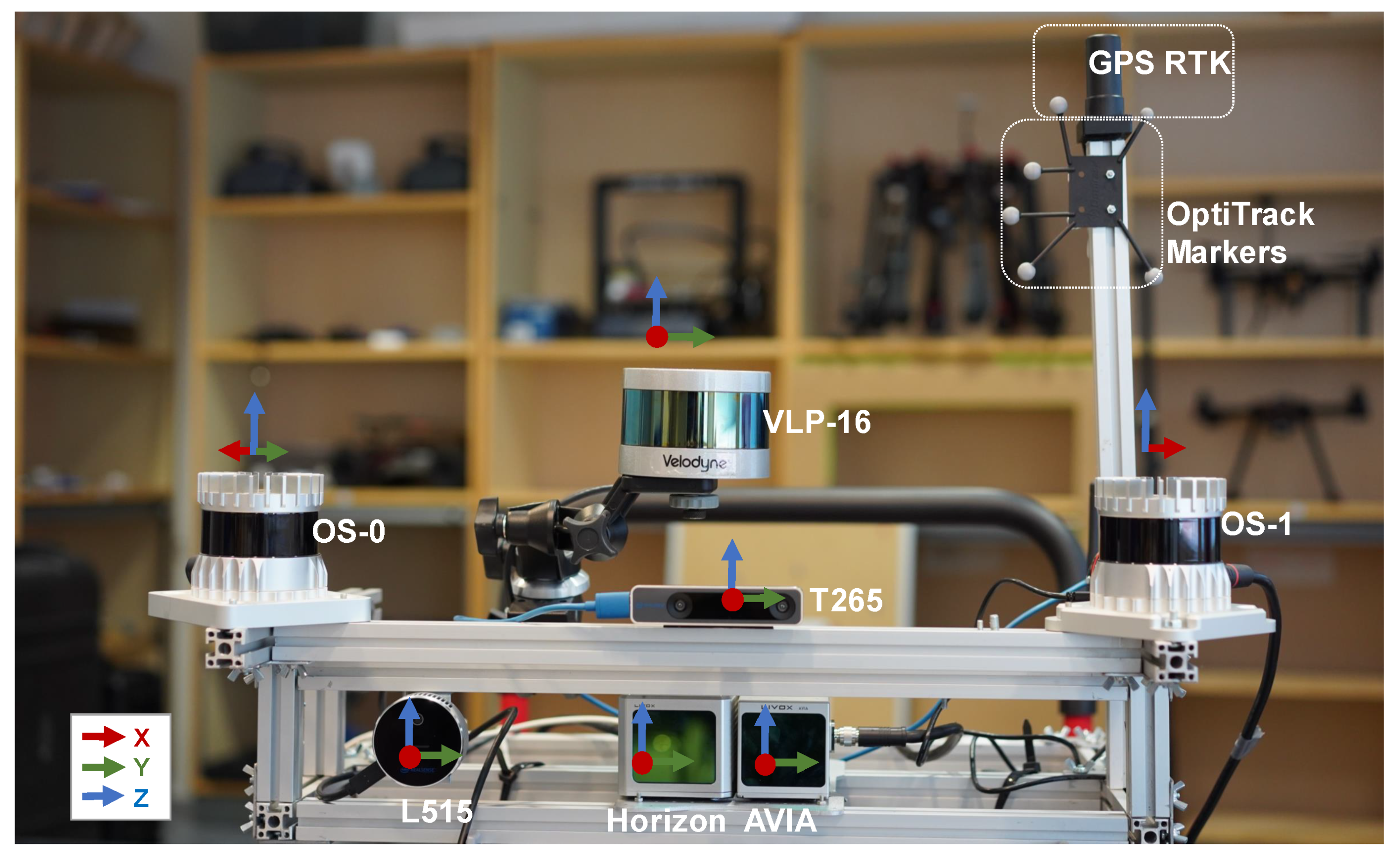

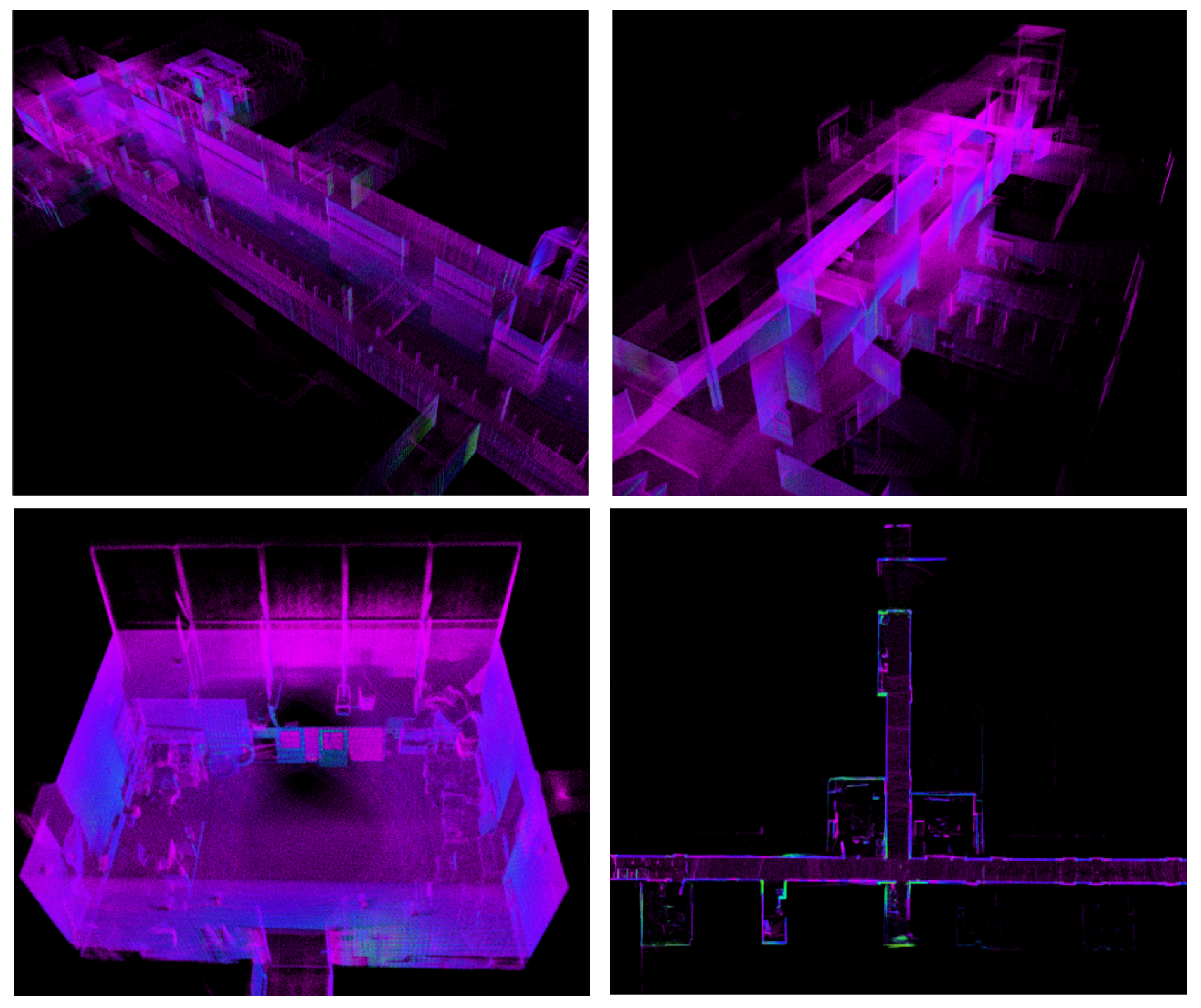

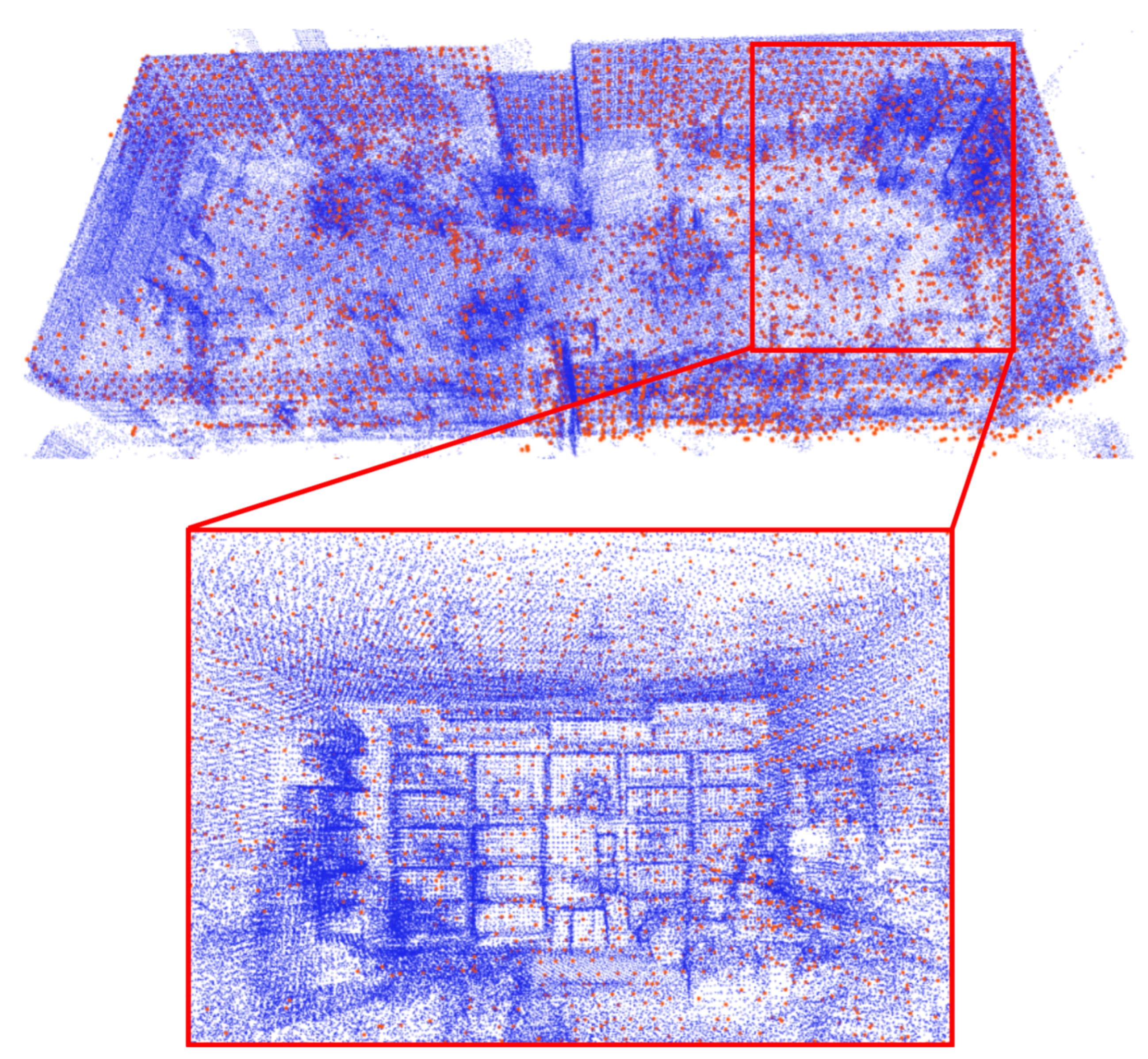

3.3. SLAM-Assisted Ground Truth Map

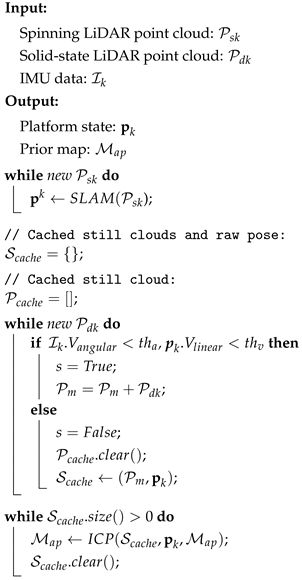

Algorithm 1: SLAM-assisted ICP-based prior map generation for ground truth data.
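The body of Algorithm 1 is not reproduced in this excerpt; its title indicates that SLAM pose estimates are refined with ICP against a prior map. As a hedged illustration of that ICP building block only (not the authors' Algorithm 1), a minimal point-to-point ICP with brute-force nearest neighbours and an SVD (Kabsch) solve could look like this. The function names `best_fit_transform` and `icp`, and the use of `T_init` as a stand-in for a SLAM pose, are assumptions for illustration.

```python
import numpy as np

def best_fit_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst (Kabsch/SVD)."""
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # reflection: flip the last singular vector
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    return R, c_dst - R @ c_src

def icp(scan, prior_map, T_init=None, max_iter=100, tol=1e-10):
    """Point-to-point ICP: refine a 4x4 pose T so that T * scan aligns with prior_map.

    T_init is a hypothetical stand-in for a SLAM pose estimate used as the initial guess.
    """
    T = np.eye(4) if T_init is None else T_init.copy()
    prev_err = np.inf
    for _ in range(max_iter):
        moved = scan @ T[:3, :3].T + T[:3, 3]
        # Brute-force nearest neighbours; a k-d tree is the usual choice for real clouds.
        d2 = ((moved[:, None, :] - prior_map[None, :, :]) ** 2).sum(axis=2)
        idx = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(moved)), idx]).mean()
        if prev_err - err < tol:  # stop once the mean residual no longer improves
            break
        prev_err = err
        R, t = best_fit_transform(moved, prior_map[idx])
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = R, t
        T = step @ T  # compose the incremental correction with the current pose
    return T
```

A good initial guess matters because ICP only converges locally; this is presumably why a SLAM estimate is used to seed the registration, though the exact procedure is not given here.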

4. SLAM Benchmark

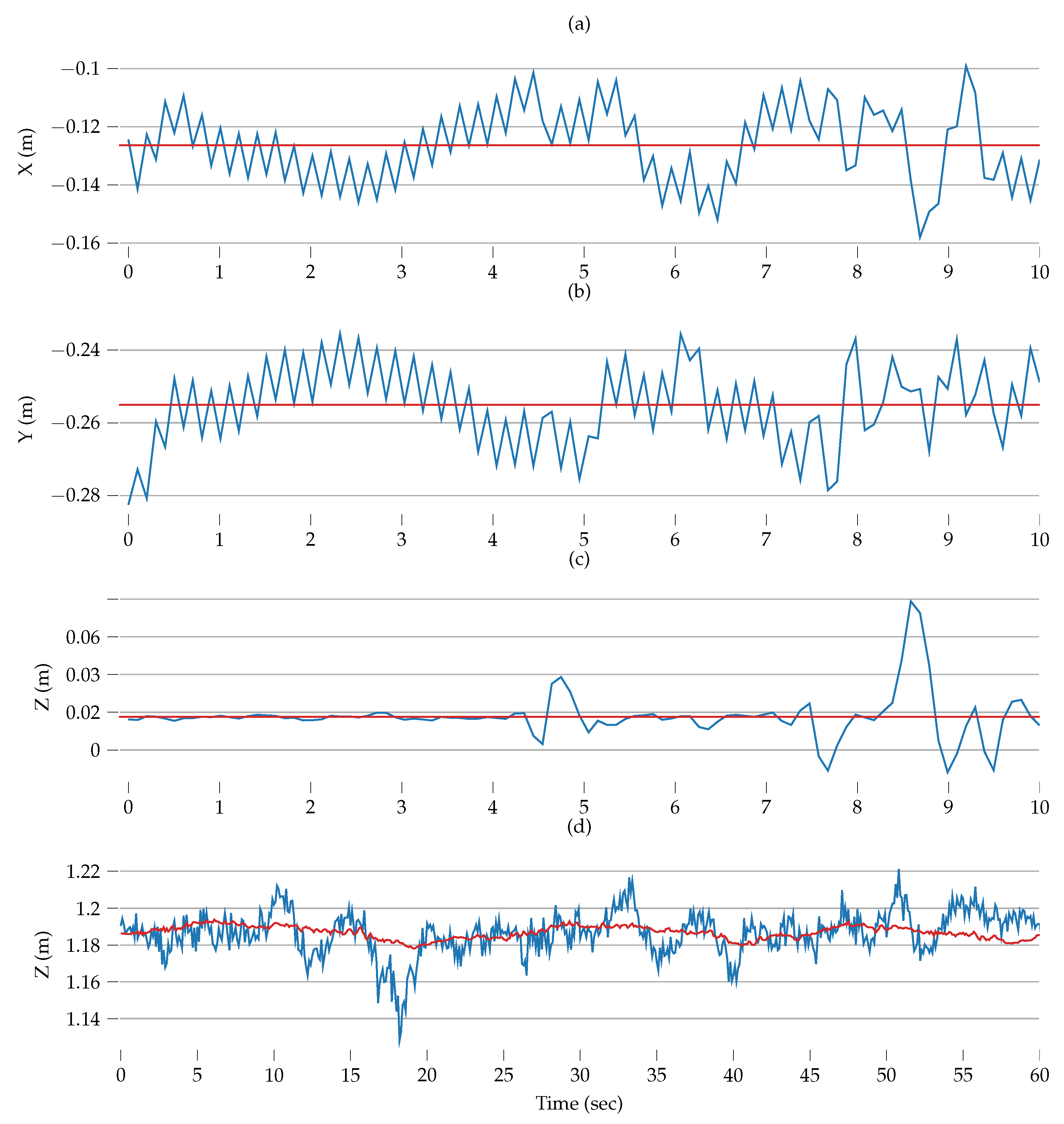

4.1. Ground Truth Evaluation

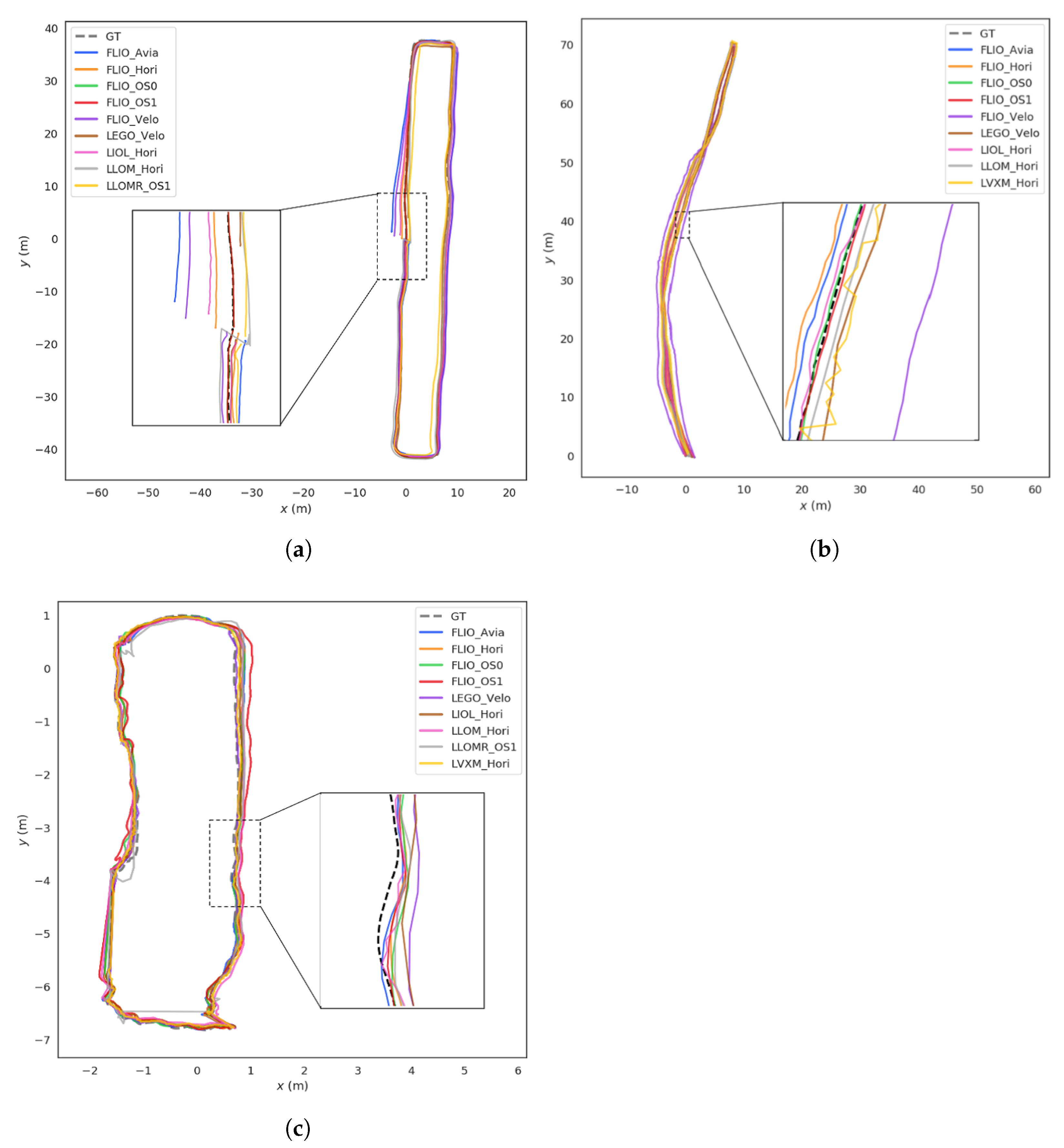

4.2. LiDAR Odometry Benchmarking
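The excerpt does not define the metric behind the `a/b` entries in the accuracy table. A common way to score odometry against ground truth is the absolute trajectory error (ATE): associate estimated and ground-truth positions by timestamp, rigidly align them, then report statistics of the per-pose translational residuals. The sketch below assumes already-associated (N, 3) position arrays and a rotation-plus-translation alignment without scale; `align_rigid` and `ate` are hypothetical helper names.

```python
import numpy as np

def align_rigid(est, gt):
    """Rotation R and translation t minimising sum ||R @ est_i + t - gt_i||^2 (no scale)."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0  # avoid reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, mu_g - R @ mu_e

def ate(est, gt):
    """(RMSE, mean, std) of per-pose translational error after rigid alignment.

    est, gt: (N, 3) positions assumed to be already associated by timestamp.
    """
    R, t = align_rigid(est, gt)
    err = np.linalg.norm(est @ R.T + t - gt, axis=1)
    return float(np.sqrt(np.mean(err ** 2))), float(err.mean()), float(err.std())
```

Because RMSE squared equals the squared mean plus the variance of the errors, reporting two of these statistics together separates a consistent offset from scattered error.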

4.3. Runtime Evaluation

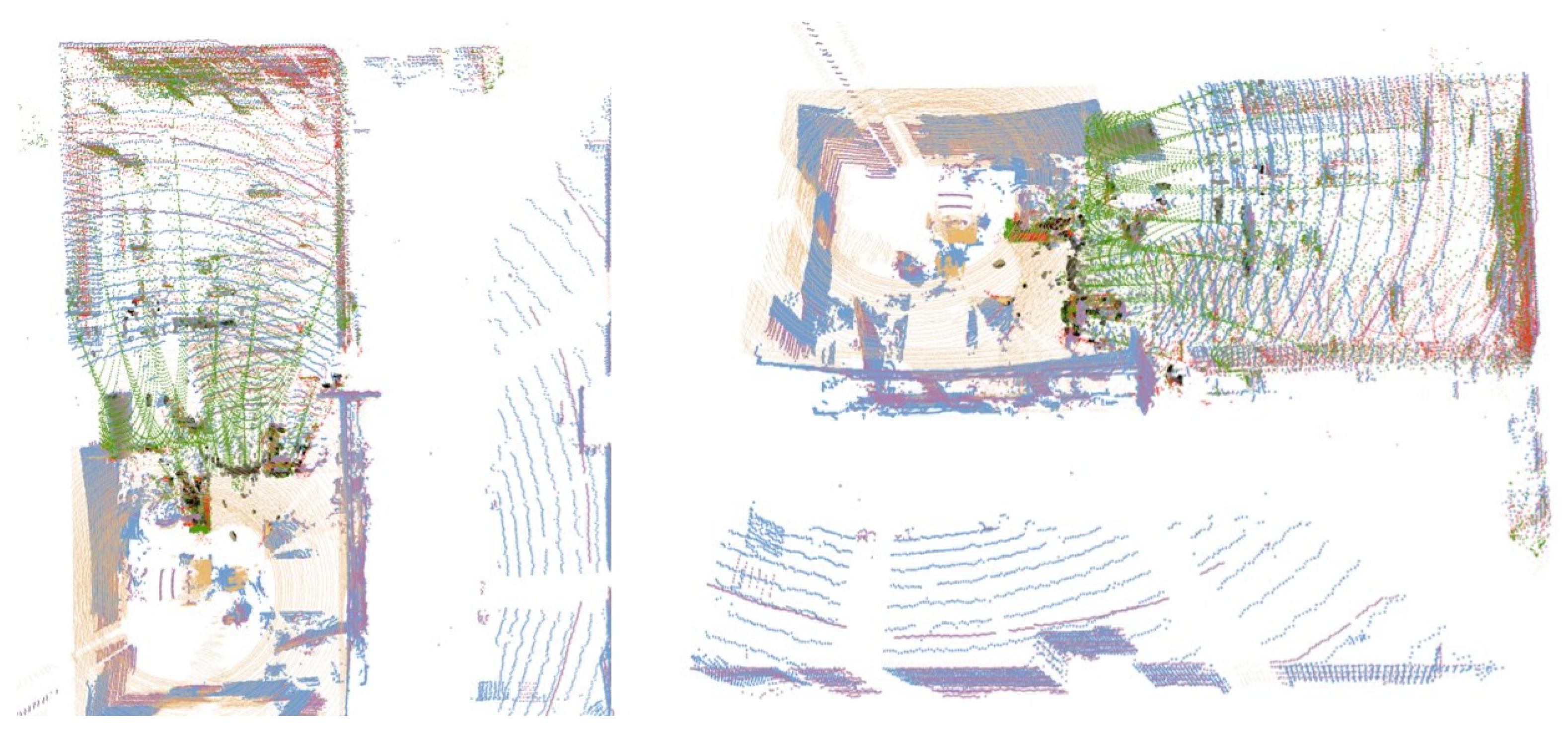
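The runtime table reports a pose publication rate (Hz) for each method alongside CPU and RAM use. One simple way to derive such a rate from recorded pose message timestamps is sketched below; the helper name `publication_rate_hz` and the choice of a mean rate over the whole sequence (rather than, for example, a windowed median) are assumptions, and the excerpt does not state how the published figures were measured.

```python
from typing import Sequence

def publication_rate_hz(stamps: Sequence[float]) -> float:
    """Average publication rate (Hz) from increasing timestamps given in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    duration = stamps[-1] - stamps[0]
    if duration <= 0:
        raise ValueError("timestamps must span a positive duration")
    # n messages span n - 1 inter-message intervals
    return (len(stamps) - 1) / duration
```

For example, 11 poses stamped 0.1 s apart span 1.0 s and give 10 Hz, matching a 10 Hz LiDAR that publishes one pose per scan.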

4.4. Mapping Quality Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, Q.; Queralta, J.P.; Gia, T.N.; Zou, Z.; Westerlund, T. Multi-sensor fusion for navigation and mapping in autonomous vehicles: Accurate localization in urban environments. Unmanned Syst. 2020, 8, 229–237.

- Varney, N.; Asari, V.K.; Graehling, Q. DALES: A large-scale aerial LiDAR data set for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 186–187.

- Yang, J.; Kang, Z.; Cheng, S.; Yang, Z.; Akwensi, P.H. An individual tree segmentation method based on watershed algorithm and three-dimensional spatial distribution analysis from airborne LiDAR point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2020, 13, 1055–1067.

- Van Nam, D.; Gon-Woo, K. Solid-state LiDAR based-SLAM: A concise review and application. In Proceedings of the 2021 IEEE International Conference on Big Data and Smart Computing (BigComp), Jeju Island, Republic of Korea, 17–20 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 302–305.

- Qingqing, L.; Xianjia, Y.; Queralta, J.P.; Westerlund, T. Adaptive lidar scan frame integration: Tracking known MAVs in 3D point clouds. In Proceedings of the 2021 20th International Conference on Advanced Robotics (ICAR), Ljubljana, Slovenia, 6–10 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1079–1086.

- Li, K.; Li, M.; Hanebeck, U.D. Towards high-performance solid-state-lidar-inertial odometry and mapping. IEEE Robot. Autom. Lett. 2021, 6, 5167–5174.

- Queralta, J.P.; Qingqing, L.; Schiano, F.; Westerlund, T. VIO-UWB-based collaborative localization and dense scene reconstruction within heterogeneous multi-robot systems. In Proceedings of the 2022 International Conference on Advanced Robotics and Mechatronics (ICARM), Guilin, Guangxi, China, 3–5 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 87–94.

- Lin, J.; Zhang, F. Loam livox: A fast, robust, high-precision LiDAR odometry and mapping package for LiDARs of small FoV. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3126–3131.

- Li, Q.; Yu, X.; Queralta, J.P.; Westerlund, T. Multi-Modal Lidar Dataset for Benchmarking General-Purpose Localization and Mapping Algorithms. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 3837–3844.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Rozenberszki, D.; Majdik, A.L. LOL: Lidar-only odometry and localization in 3D point cloud maps. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4379–4385.

- Zhen, W.; Zeng, S.; Scherer, S. Robust localization and localizability estimation with a rotating laser scanner. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 6240–6245.

- Ye, H.; Chen, Y.; Liu, M. Tightly coupled 3D lidar inertial odometry and mapping. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3144–3150.

- Zhang, J.; Singh, S. LOAM: Lidar Odometry and Mapping in Real-time. Robot. Sci. Syst. 2014, 2, 1–9.

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

- Li, Q.; Nevalainen, P.; Peña Queralta, J.; Heikkonen, J.; Westerlund, T. Localization in Unstructured Environments: Towards Autonomous Robots in Forests with Delaunay Triangulation. Remote Sens. 2020, 12, 1870.

- Nevalainen, P.; Movahedi, P.; Queralta, J.P.; Westerlund, T.; Heikkonen, J. Long-Term Autonomy in Forest Environment Using Self-Corrective SLAM. In New Developments and Environmental Applications of Drones; Springer: Cham, Switzerland, 2022; pp. 83–107.

- Xu, W.; Zhang, F. FAST-LIO: A fast, robust lidar-inertial odometry package by tightly-coupled iterated Kalman filter. IEEE Robot. Autom. Lett. 2021, 6, 3317–3324.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast direct lidar-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

- Lin, J.; Zhang, F. R3LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 10672–10678.

- Nguyen, T.M.; Cao, M.; Yuan, S.; Lyu, Y.; Nguyen, T.H.; Xie, L. VIRAL-Fusion: A visual-inertial-ranging-lidar sensor fusion approach. IEEE Trans. Robot. 2021, 38, 958–977.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Lixia, M.; Benigni, A.; Flammini, A.; Muscas, C.; Ponci, F.; Monti, A. A software-only PTP synchronization for power system state estimation with PMUs. IEEE Trans. Instrum. Meas. 2012, 61, 1476–1485.

- Ramezani, M.; Wang, Y.; Camurri, M.; Wisth, D.; Mattamala, M.; Fallon, M. The Newer College dataset: Handheld lidar, inertial and vision with ground truth. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4353–4360.

- Biber, P.; Straßer, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453), Las Vegas, NV, USA, 27–31 October 2003; Volume 3, pp. 2743–2748.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580.

| Sensor | IMU | Type | Channels | FoV | Resolution | Range | Freq. | Points (pts/s) |
|---|---|---|---|---|---|---|---|---|
| VLP-16 | N/A | spinning | 16 | 360° × 30° | V: 2.0°, H: 0.4° | 100 m | 10 Hz | 300,000 |
| OS1-64 | ICM-20948 | spinning | 64 | 360° × 45° | V: 0.7°, H: 0.18° | 120 m | 10 Hz | 1,310,720 |
| OS0-128 | ICM-20948 | spinning | 128 | 360° × 90° | V: 0.7°, H: 0.18° | 50 m | 10 Hz | 2,621,440 |
| Horizon | BS-BMI088 | solid state | N/A | 81.7° × 25.1° | N/A | 260 m | 10 Hz | 240,000 |
| Avia | BS-BMI088 | solid state | N/A | 70.4° × 77.2° | N/A | 450 m | 10 Hz | 240,000 |
| L515 | BS-BMI085 | LiDAR camera | N/A | 70° × 43° | N/A | 9 m | 30 Hz | - |
| T265 | BS-BMI055 | fisheye cameras | N/A | 163 ± 5° | N/A | N/A | 30 Hz | - |

| Sequence | Description | Ground Truth | Sensor Setup |
|---|---|---|---|
| Forest01-03 | Previous dataset [9] | MOCAP/SLAM | 5x LiDARs, L515, Optitrack |
| Indoor01-05 | Previous dataset [9] | MOCAP/SLAM | 5x LiDARs, L515, Optitrack |
| Road01-02 | Previous dataset [9] | SLAM | 5x LiDARs, L515, Optitrack |
| Indoor06 | Lab space (easy) | MOCAP | 5x LiDARs, L515, T265, Optitrack, GNSS |
| Indoor07 | Lab space (hard) | MOCAP | 5x LiDARs, L515, T265, Optitrack, GNSS |
| Indoor08 | Classroom space | SLAM+ICP | 5x LiDARs, L515, T265, Optitrack, GNSS |
| Indoor09 | Corridor (short) | SLAM+ICP | 5x LiDARs, L515, T265, Optitrack, GNSS |
| Indoor10 | Corridor (long) | SLAM+ICP | 5x LiDARs, L515, T265, Optitrack, GNSS |
| Indoor11 | Hall (large) | SLAM+ICP | 5x LiDARs, L515, T265, Optitrack, GNSS |
| Road03 | Open road | GNSS RTK | 5x LiDARs, L515, T265, Optitrack, GNSS |

| Sequence | FLIO_OS0 | FLIO_OS1 | FLIO_Velo | FLIO_Avia | FLIO_Hori | LLOM_Hori | LLOMR_OS1 | LIOL_Hori | LVXM_Hori | LEGO_Velo |
|---|---|---|---|---|---|---|---|---|---|---|
| Indoor06 | 0.015/0.006 | 0.032/0.011 | N/A | 0.205/0.093 | 0.895/0.447 | N/A | 0.882/0.326 | N/A | N/A | 0.312/0.048 |
| Indoor07 | 0.022/0.007 | 0.025/0.013 | 0.072/0.031 | N/A | N/A | N/A | N/A | N/A | N/A | 0.301/0.081 |
| Indoor08 | 0.048/0.030 | 0.042/0.018 | 0.093/0.043 | N/A | N/A | N/A | N/A | N/A | N/A | 0.361/0.100 |
| Indoor09 | 0.188/0.099 | N/A | 0.472/0.220 | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| Indoor10 | 0.197/0.072 | 0.189/0.074 | 0.698/0.474 | 0.968/0.685 | 0.322/0.172 | 1.122/0.404 | 1.713/0.300 | 0.641/0.469 | N/A | 0.930/0.901 |
| Indoor11 | 0.584/0.080 | 0.105/0.041 | 0.911/0.565 | 0.196/0.098 | 0.854/0.916 | 0.1.097/0.0.45 | 1.509/0.379 | N/A | N/A | N/A |
| Road03 | 0.123/0.032 | 0.095/0.037 | 1.001/0.512 | 0.211/0.033 | 0.351/0.043 | 0.603/0.195 | N/A | 0.103/0.058 | 0.706/0.396 | 0.2464/0.063 |
| Forest01 | 0.138/0.054 | 0.146/0.087 | N/A | 0.142/0.074 | 0.125/0.062 | 0.116/0.053 | 0.218/0.110 | 0.054/0.033 | 0.083/0.041 | 0.064/0.032 |
| Forest02 | 0.127/0.065 | 0.121/0.069 | N/A | 0.211/0.077 | 0.348/0.077 | 0.612/0.198 | N/A | 0.125/0.073 | 0.727/0.414 | 0.275/0.077 |

Each cell lists (CPU utilization (%), RAM utilization (MB), pose publication rate (Hz)).

| Method | Intel PC | AGX MAX | AGX 30 W | UP Xtreme | NX 15 W |
|---|---|---|---|---|---|
| FLIO_OS0 | (79.4, 384.5, 74.0) | (40.9, 385.3, 13.6) | (55.1, 398.8, 13.2) | (90.9, 401.8, 47.3) | (53.7, 371.1, 14.3) |
| FLIO_OS1 | (73.7, 437.4, 67.5) | (54.5, 397.5, 21.2) | (73.9, 409.2, 15.4) | (125.9, 416.2, 58.0) | (73.3, 360.4, 14.2) |
| FLIO_Velo | (69.9, 385.2, 98.6) | (44.4, 369.7, 29.1) | (58.3, 367.6, 21.4) | (110.5, 380.5, 89.6) | (57, 331.5, 19.5) |
| FLIO_Avia | (65.0, 423.8, 98.3) | (40.8, 391.5, 32.3) | (47.4, 413.4, 24.5) | (113.2, 401.2, 90.7) | (51.2, 344.8, 21.9) |
| FLIO_Hori | (65.7, 423.8, 103.7) | (37.6, 408.4, 34.7) | (50.5, 387.9, 26.8) | (109.7, 422.8, 91.0) | (47.5, 370.7, 23.4) |
| LLOM_Hori | (126.2, 461.6, 14.5) | (128.5, 545.4, 9.1) | (168.5, 658.5, 1.5) | (130.1, 461.1, 12.8) | N/A |
| LLOMR_OS1 | (112.3, 281.5, 25.8) | (70.8, 282.3, 9.6) | (107.1, 272.2, 6.5) | (109.0, 253.5, 13.6) | N/A |
| LIOL_Hori | (186.1, 508.7, 19.1) | (247.2, 590.3, 9.6) | (188.1, 846.0, 4.1) | (298.2, 571.8, 14.0) | (239.0, 750.5, 4.54) |
| LVXM_Hori | (135.4, 713.7, 14.7) | (162.3, 619.0, 10.5) | (185.86, 555.81, 5.0) | (189.6, 610.4, 7.9) | (198.0, 456.7, 5.5) |
| LEGO_Velo | (28.7, 455.4, 9.8) | (42.4, 227.8, 7.0) | (62.8, 233.4, 3.5) | (39.7, 256.6, 9.1) | (36.9, 331.4, 3.7) |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sier, H.; Li, Q.; Yu, X.; Peña Queralta, J.; Zou, Z.; Westerlund, T. A Benchmark for Multi-Modal LiDAR SLAM with Ground Truth in GNSS-Denied Environments. Remote Sens. 2023, 15, 3314. https://doi.org/10.3390/rs15133314
