Automatic Pear Extraction from High-Resolution Images by a Visual Attention Mechanism Network

Abstract

1. Introduction

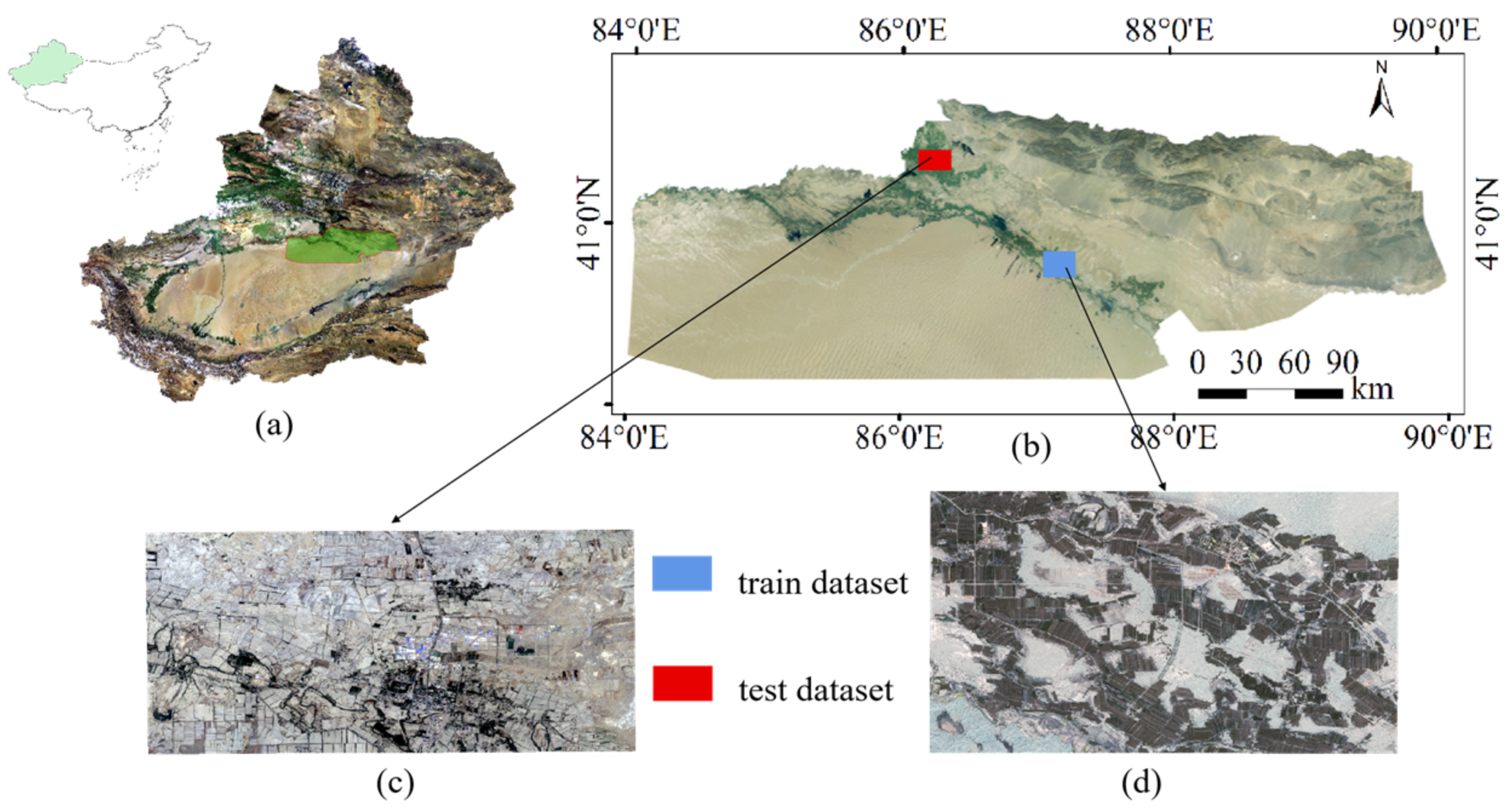

2. Materials and Study Area

2.1. Study Area

2.2. Labeled Data Acquisition and Production

3. Methods

3.1. Network Structure

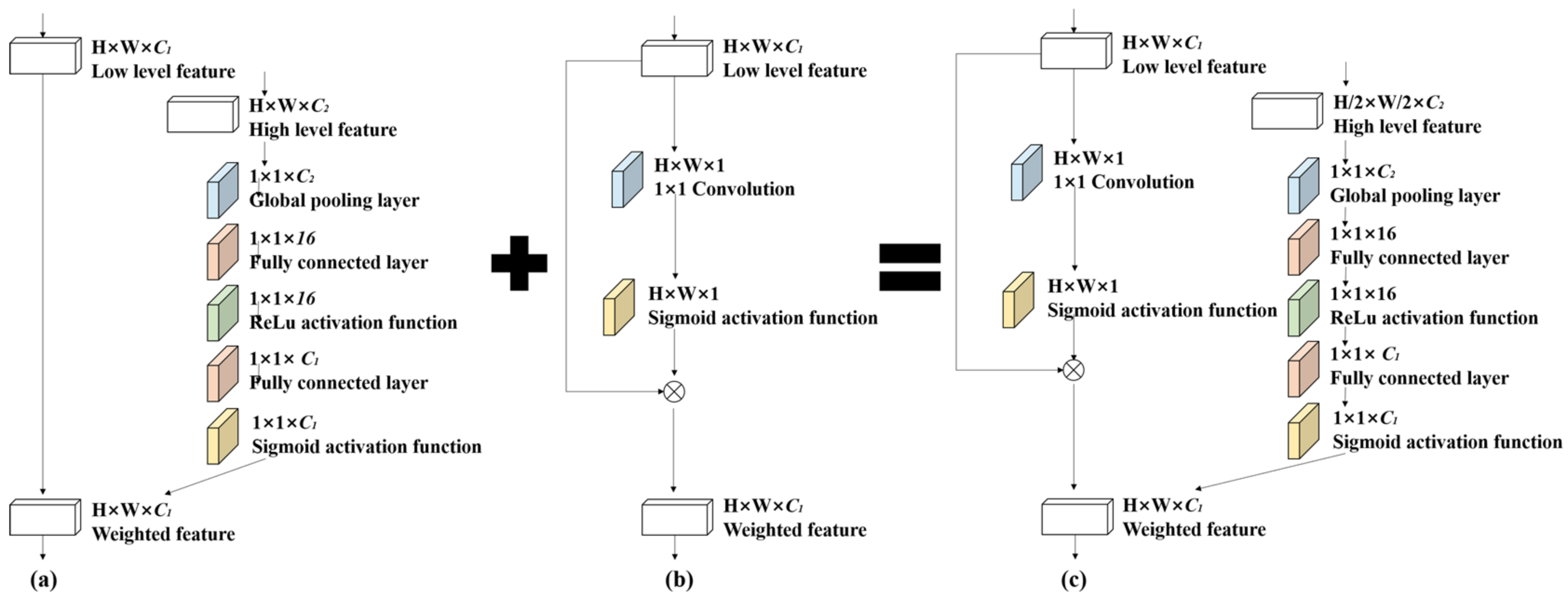

3.2. “Spatial-Channel” Attention Guidance Module

3.3. Evaluation Index

4. Experiments and Results

4.1. Single Module Functional Comparison

4.2. Pear Tree Extraction from GF-6 Data

4.2.1. Data Enhancement

4.2.2. Result of the GF-6 Data

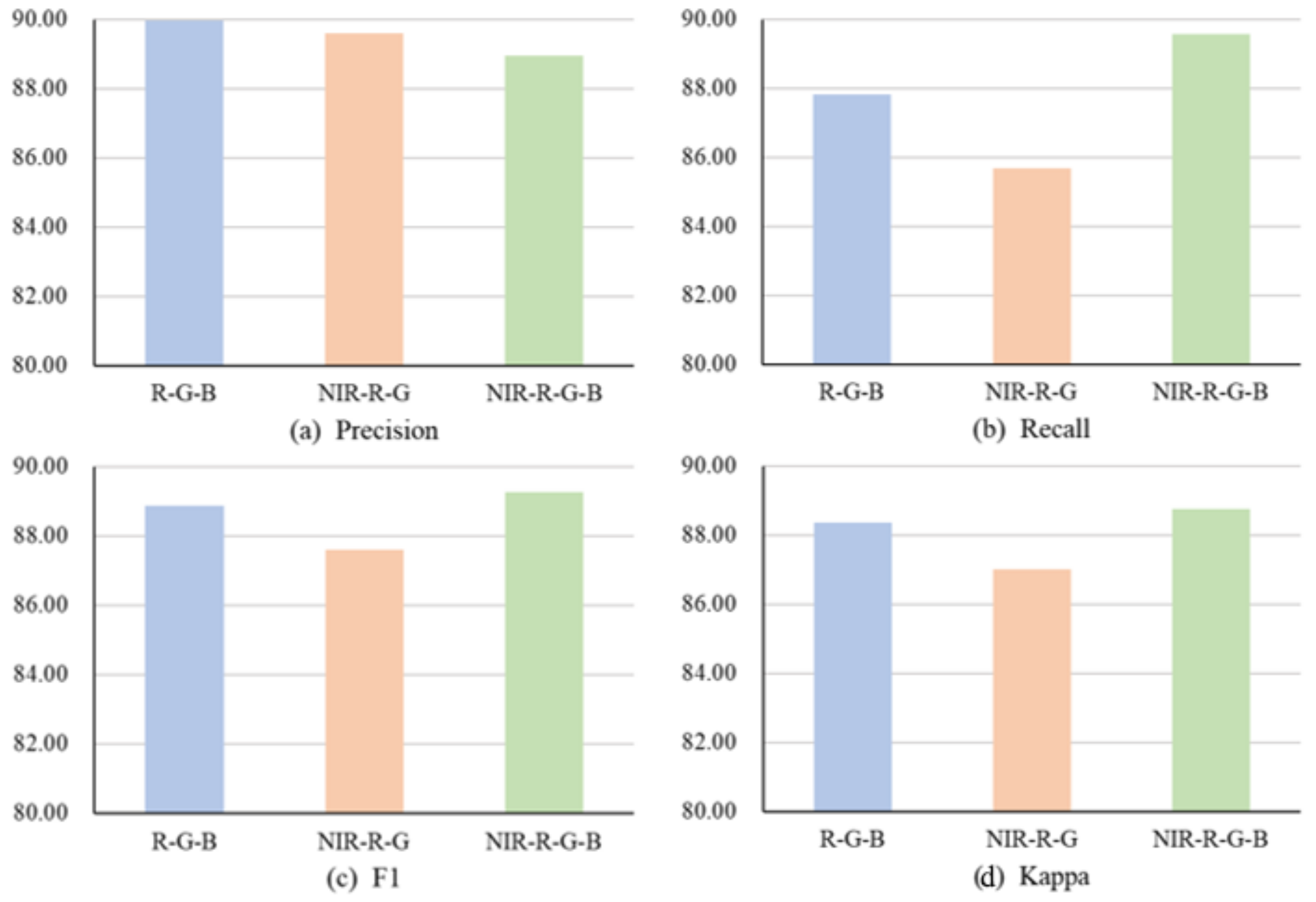

4.2.3. The Impacts of Different Band Combinations on the Model Classification Accuracy

4.3. Tree Extraction from Potsdam Data

4.4. Model Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Module | Precision (%) | Recall (%) | IoU (%) |
|---|---|---|---|
| A: Spatial attention guidance module | 82.5 | 71.6 | 75.6 |
| B: Channel attention guidance module | 83.8 | 72.5 | 77.5 |
| C: “Spatial-channel” attention guidance module | 84.1 | 73.5 | 78.2 |

| Model | Input Size | Precision (%) | Recall (%) | F1 (%) | Kappa |
|---|---|---|---|---|---|
| FCN8s | 256 × 256 | 83.97 | 59.96 | 69.96 | 68.77 |
| FCN8s | 512 × 512 | 90.53 | 56.41 | 69.51 | 68.39 |
| SegNet | 256 × 256 | 89.25 | 60.83 | 72.35 | 71.28 |
| SegNet | 512 × 512 | 89.73 | 59.17 | 71.31 | 70.22 |
| U-Net | 256 × 256 | 81.43 | 75.42 | 78.31 | 77.31 |
| U-Net | 512 × 512 | 80.62 | 72.27 | 76.22 | 75.14 |
| Res-U-Net | 256 × 256 | 86.28 | 73.11 | 79.15 | 78.23 |
| Res-U-Net | 512 × 512 | 69.02 | 87.16 | 77.04 | 75.80 |
| PSPNet | 256 × 256 | 82.73 | 76.96 | 79.74 | 78.82 |
| PSPNet | 512 × 512 | 80.40 | 76.76 | 78.54 | 76.01 |
| RefineNet | 256 × 256 | 75.37 | 81.94 | 78.52 | 77.44 |
| RefineNet | 512 × 512 | 75.73 | 73.17 | 74.43 | 73.25 |
| DeepLabv3+ | 256 × 256 | 88.27 | 83.97 | 86.07 | 85.42 |
| DeepLabv3+ | 512 × 512 | 79.17 | 87.12 | 82.96 | 82.10 |
| Multi-Unet | 256 × 256 | 88.95 | 89.57 | 89.26 | 88.74 |
| Multi-Unet | 512 × 512 | 90.28 | 76.64 | 82.90 | 82.15 |
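
The columns in the table above are standard binary-segmentation metrics. As a minimal sketch, assuming pear pixels are the positive class and that the conventional confusion-matrix definitions apply (the exact formulas used in Section 3.3 are not reproduced here), they could be computed as follows. The `Confusion` class and `segmentation_metrics` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Pixel counts for a binary mask (pear = positive class, an assumption)."""
    tp: int  # pear pixels predicted as pear
    fp: int  # background pixels predicted as pear
    fn: int  # pear pixels predicted as background
    tn: int  # background pixels predicted as background


def segmentation_metrics(c: Confusion) -> dict[str, float]:
    """Return precision, recall, F1, IoU and Cohen's kappa as fractions in [0, 1].

    Assumes every denominator is non-zero (both classes occur in the
    reference and in the prediction).
    """
    n = c.tp + c.fp + c.fn + c.tn
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = c.tp / (c.tp + c.fp + c.fn)
    # Cohen's kappa: observed agreement corrected for chance agreement.
    observed = (c.tp + c.tn) / n
    expected = (
        (c.tp + c.fp) * (c.tp + c.fn) + (c.fn + c.tn) * (c.fp + c.tn)
    ) / n**2
    kappa = (observed - expected) / (1 - expected)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": iou,
        "kappa": kappa,
    }


if __name__ == "__main__":
    # Made-up counts for illustration only; not taken from the paper.
    example = Confusion(tp=80, fp=20, fn=10, tn=890)
    for name, value in segmentation_metrics(example).items():
        print(f"{name}: {100 * value:.2f}")
```

Multiplying each value by 100 gives numbers on the same scale as the table.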

| Metric | FCN8s | Multi-Unet | U-Net | RefineNet | SegNet | DeepLabv3+ | PSPNet101 | Res-U-Net |
|---|---|---|---|---|---|---|---|---|
| F1 (%) | 76.38 | 92.45 | 90.55 | 87.22 | 86.94 | 91.55 | 88.2 | 87.45 |
| IoU (%) | 56.0 | 86.0 | 82.9 | 78.1 | 76.9 | 84.4 | 78.3 | 77.7 |
| Parameters | 3,050,726 | 7,263,143 | 7,847,147 | 7,263,143 | 31,821,702 | 41,254,646 | 66,239,013 | 110,140,324 |
| Model size (MB) | 11.67 | 28.34 | 30.03 | 46.10 | 121.63 | 158.63 | 253.4 | 422.32 |
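
The last two rows of the table above are related: the stored size of a model grows with its parameter count. As a rough sketch, assuming weights are stored as 32-bit floats and that the file holds little beyond the weights (neither is stated in the source), the size can be estimated from the parameter count alone. The helper name `weights_size_mib` is hypothetical.

```python
BYTES_PER_FLOAT32 = 4  # assumption: weights are stored as 32-bit floats


def weights_size_mib(num_params: int, bytes_per_param: int = BYTES_PER_FLOAT32) -> float:
    """Estimate the storage needed for the weights alone, in MiB (2**20 bytes)."""
    return num_params * bytes_per_param / 2**20


if __name__ == "__main__":
    # Parameter count listed for FCN8s in the table above.
    print(f"{weights_size_mib(3_050_726):.2f} MiB")
```

For the FCN8s parameter count this estimate comes out close to the 11.67 MB listed in the table; any remaining gap would be file-format overhead or non-weight state, which this sketch does not model.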

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Ding, J.; Ran, S.; Qin, S.; Liu, B.; Li, X. Automatic Pear Extraction from High-Resolution Images by a Visual Attention Mechanism Network. Remote Sens. 2023, 15, 3283. https://doi.org/10.3390/rs15133283
