Progress in the Application of CNN-Based Image Classification and Recognition in Whole Crop Growth Cycles

Abstract

1. Introduction

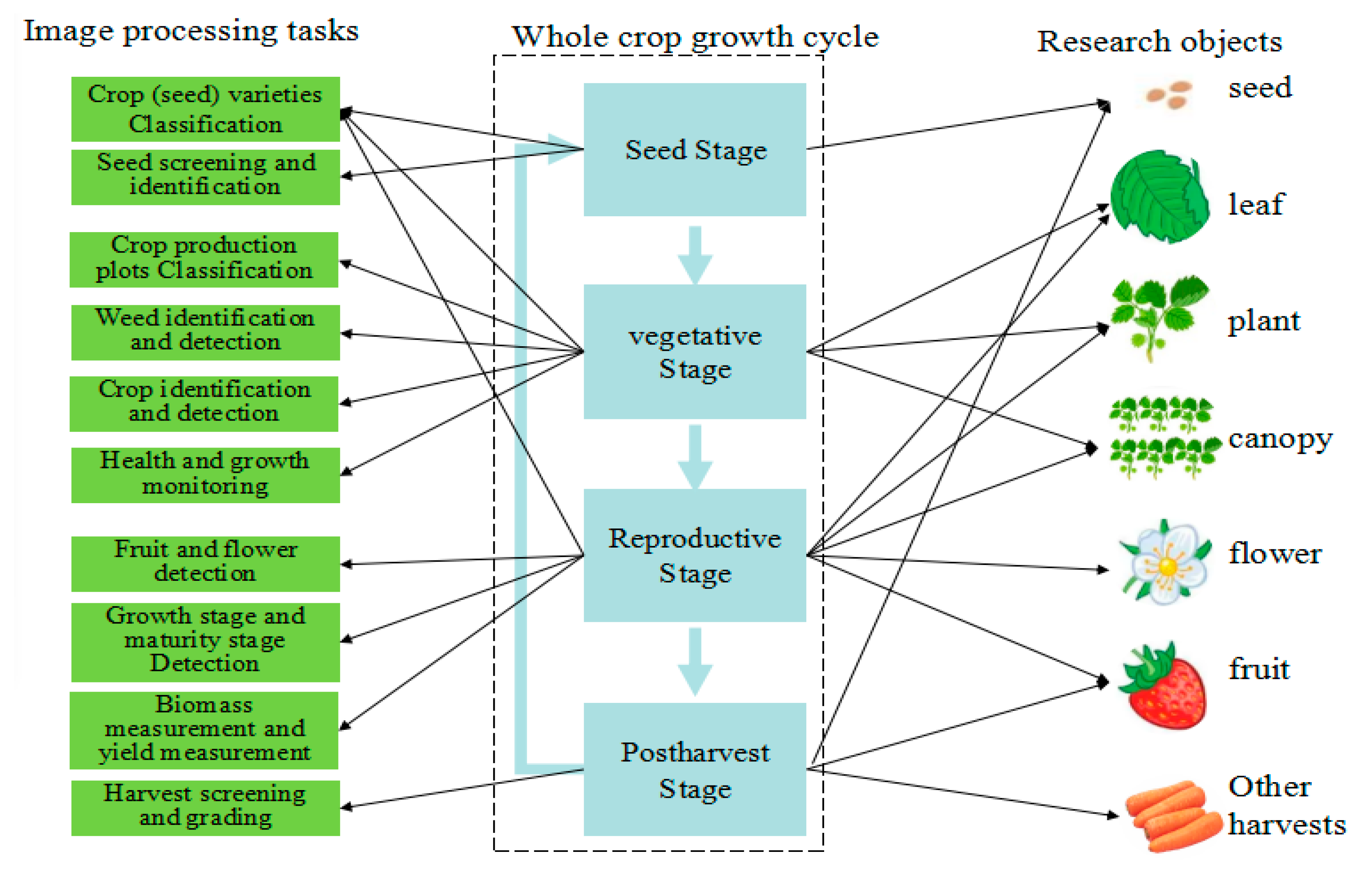

2. Whole Crop Growth Cycle and CNN

2.1. Whole Crop Growth Cycle

- Seed stage: This stage encompasses the period from the fertilization of maternal egg cells to the germination of seeds. During this stage, crops undergo embryonic development and seed dormancy.

- Vegetative stage: This stage spans the period from seed germination to the differentiation of flower buds.

- Reproductive stage: Following a series of changes during the vegetative stage, crops initiate the development of flower buds on the growth cone of their stems. Subsequently, the crops blossom, bear fruit, and eventually form seeds.

- Postharvest stage: This stage involves the harvesting of mature crop plants, seeds, fruits, roots, and stems. Once harvested, these crops undergo screening and grading to facilitate subsequent sale or seed production.

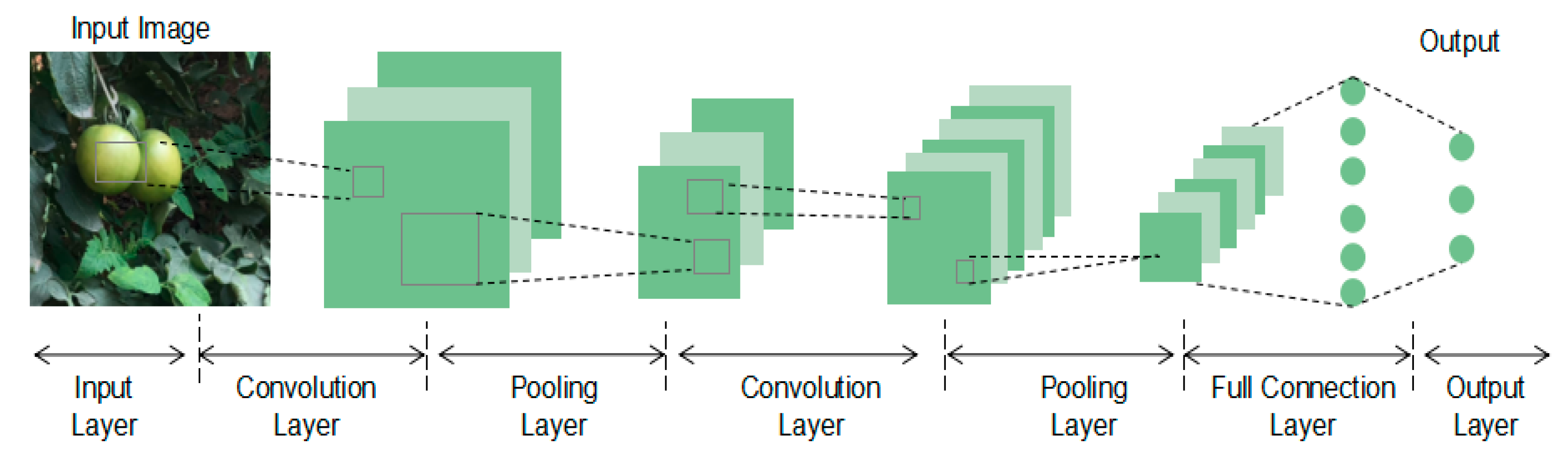

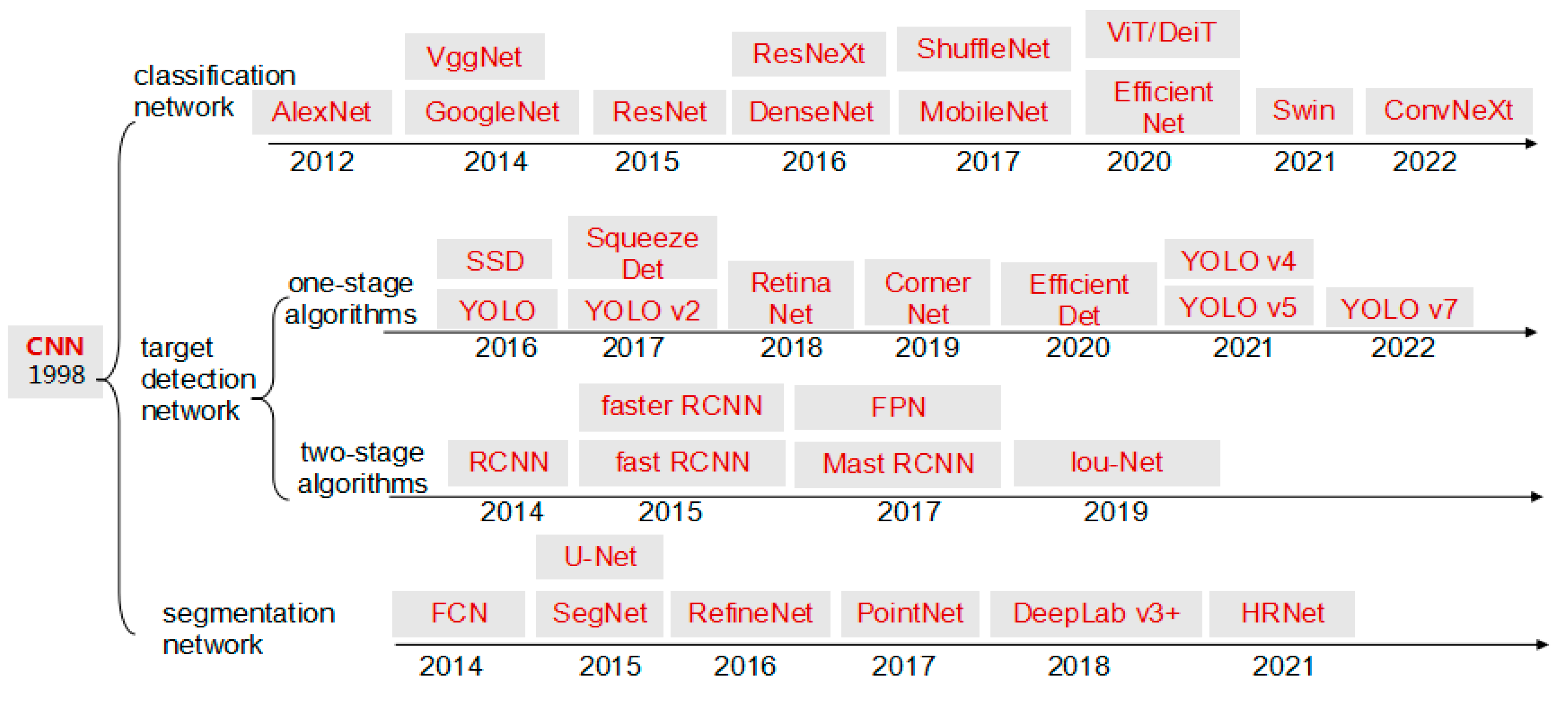

2.2. CNN and Its Development

- The classification network determines only the category to which the entire image belongs; it provides neither object positions nor object counts.

- The target-detection network identifies the category and location of each object of interest within the image. Its algorithms fall into one-stage and two-stage types; one-stage algorithms are generally faster, whereas two-stage algorithms are generally more accurate.

- The segmentation network classifies every pixel in the image. It can be further divided into semantic segmentation, which labels pixels by class, and instance segmentation, which additionally separates individual objects of the same class.
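The three network families differ mainly in the shape of what they return. The following is a minimal sketch in plain Python of those output structures only, not of any network; the type names (`ClassificationResult`, `Detection`, `SegmentationResult`), the helpers `count_objects` and `pixel_fraction`, and all sample values are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ClassificationResult:
    """Classification network: one label for the whole image."""
    label: str


@dataclass
class Detection:
    """Target-detection network: a label plus a bounding box per object."""
    label: str
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class SegmentationResult:
    """Semantic segmentation network: one class id per pixel."""
    class_names: List[str]
    mask: List[List[int]]  # mask[row][col] is an index into class_names


def count_objects(detections: List[Detection], label: str) -> int:
    """Counting needs per-object outputs; a single image label cannot give it."""
    return sum(1 for d in detections if d.label == label)


def pixel_fraction(result: SegmentationResult, label: str) -> float:
    """Share of image pixels assigned to `label` by the segmentation mask."""
    class_id = result.class_names.index(label)
    total = sum(len(row) for row in result.mask)
    hits = sum(row.count(class_id) for row in result.mask)
    return hits / total


# Made-up outputs for one field image (illustrative values only).
image_label = ClassificationResult(label="maize")
detections = [
    Detection("maize", (10, 12, 40, 60)),
    Detection("weed", (55, 20, 70, 38)),
    Detection("maize", (80, 15, 110, 66)),
]
segmentation = SegmentationResult(
    class_names=["background", "maize", "weed"],
    mask=[
        [0, 1, 1, 0],
        [0, 1, 1, 2],
        [0, 0, 0, 2],
    ],
)
```

With these structures, object counts come from the detection list and area estimates come from the pixel mask, while the classification result carries neither.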

3. Progress of CNN Applications in Crop Growth Cycle

3.1. Seed Stage

3.1.1. Seed Variety Classification

3.1.2. Seed Screening and Identification

| Application Direction | Crop Varieties | Literature | Year | Image Processing Task | Network Framework | Accuracy |
|---|---|---|---|---|---|---|
| seed variety classification | rice | [16] | 2022 | identification of rice seed varieties | GoogLeNet, ResNet | 86.08% |
| | rice | [1] | 2021 | rice variety classification | VGG16 | 99.87% |
| | rice | [13] | 2021 | rice seed type classification | RiceNet | 100.00% |
| | maize | [20] | 2021 | maize seed identification | LeNet-5 | 98.15% |
| | maize | [21] | 2021 | corn seed classification | CNN-ANN | 98.1% |
| | wheat | [22] | 2022 | wheat varietal classification | DenseNet201 | 98.10% |
| | barley | [15] | 2021 | barley seed variety identification | CNN | 98.00% |
| | soybean | [17] | 2020 | soybean seed variety identification | | 97.20% |
| | chickpea | [12] | 2021 | chickpea in situ seed variety identification | VGG16 | 94.00% |
| | pepper | [18] | 2020 | pepper seed variety discrimination | 1D-CNN | 92.60% |
| | pepper | [14] | 2021 | pepper seed classification | ResNet | 98.05% |
| | hazelnut | [11] | 2021 | variety classification in hazelnut | Lprtnr1 | 98.63% |
| | okra | [19] | 2021 | hybrid okra seed identification | CNN | 97.68% |
| seed screening and identification | rice | [23] | 2022 | milled rice grain damage classification | EfficientNet-B0 | 98.37% |
| | maize | [24] | 2022 | maize seed classification | SeedViT | 97.60% |
| | maize | [27] | 2021 | haploid maize seed identification | CNN | 97.07% |
| | wheat | [25] | 2022 | unsound wheat kernel discrimination | CNN | 96.67% |
| | grain | [26] | 2022 | bulk grain sample classification | ResNet | 98.70% |

3.1.3. Brief Summary

- Dataset construction: Multispectral images (MSI) and hyperspectral images (HSI) offer richer seed phenotypic characteristics than visible-light images and are therefore widely used in CNN-based seed classification and identification. Image acquisition at the seed stage is relatively complex, and collecting image samples is time-consuming and labor-intensive, so most studies rely on self-built datasets.

- Model selection: The seed stage involves relatively simple image processing tasks, and CNN applications focus primarily on classification networks. Specifically, efforts concentrate on improving conventional and self-built classification networks. These models tend to be compact and highly efficient; none of the selected literature uses CNN object detection or segmentation networks.

- Existing problems: The use of standardized indoor collection environments and uniform image backgrounds results in limited generalization ability for trained CNN models. Consequently, much of the research remains in the experimental stage and is difficult to apply effectively in real-world production scenarios. Moreover, the scarcity of high-quality seed data samples makes it difficult to train large-scale, deep CNN models.

- Further research: The untapped potential of multispectral and hyperspectral images warrants further exploration, as they can visualize the internal features and components of seeds in a “non-destructive” manner. By integrating these imaging techniques with breeding and genetic trait knowledge, CNNs hold the promise of providing fast, cost-effective, and non-destructive detection tools for breeding and seed production. This would significantly enhance the efficiency of breeding efforts.

3.2. Vegetative Stage

3.2.1. Crop Variety Classification

3.2.2. Weeds Identification and Detection

3.2.3. Classification of Crop Production Plots

3.2.4. Crop Identification and Detection

3.2.5. Health and Growth Monitoring

| Application Direction | Crop Varieties | Literature | Year | Image Processing Task | Network Framework | Accuracy |
|---|---|---|---|---|---|---|
| crop variety classification | grape | [30] * | 2021 | grapevine cultivar identification | GoogLeNet | 99.91% |
| | grape | [29] | 2021 | grapevine cultivar identification | VGG16 | 99.00% |
| | ladies finger plant | [31] | 2020 | ladies finger plant leaf classification | CNN | 96.00% |
| weeds identification and detection | weed | [34] | 2023 | weed classification | CNN | 98.58% |
| | weed | [42] | 2022 | weed detection | YOLOv4, Faster R-CNN | 88% |
| | weed | [47] | 2021 | crop–weed classification | | 98.51% |
| | weed | [46] | 2022 | weed recognition | U-Net | 96.06% |
| | weed | [45] | 2022 | weeds growing detection | | 98% |
| | weed | [39] | 2020 | weed and crop recognition | GCN-ResNet-101 | 99.37% |
| | weed | [36] | 2022 | weed classification | | 97.00% |
| | weed | [40] | 2021 | broadleaf weed seedlings detection | | |
| | weed | [37] | 2021 | weeds detection | VGG16 | |
| | weed | [43] | 2020 | weed detection | Faster R-CNN | 85% |
| | weed | [44] | 2021 | weed identification | YOLOv3-Tiny | 97.00% |
| | weed | [38] | 2020 | Mikania micrantha Kunth identification | MmNet | 94.50% |
| | weed | [35] | 2022 | weed detection in soybean crops | DRCNN | 97.30% |
| crop production plots classification | vegetable | [52] | 2020 | 8 vegetables and 4 crops | ARCNN | 92.80% |
| | blueberries | [54] | 2020 | legacy blueberries recognition | CNN composed of eight layers | 86.00% |
| | crop | [50] | 2020 | crop identification | CNNCRF | |
| | crop | [51] | 2020 | crop classification | 2D-CNN | 86.56% |
| | wheat | [59] | 2020 | winter wheat spatial distribution | RefineNet-PCCCRF | 94.51% |
| | crop | [49] | 2022 | crop identification and classification | CD-CNN | 96.20% |
| | crop | [48] | 2022 | crop classification | | 99.35% |
| | crop | [55] | 2020 | crop classification | CNN-Transformer | |
| | crop | [53] | 2020 | crop classification | Conv1D-RF | 94.27% |
| | rice | [56] | 2020 | rice-cropping classifying | AlexNet | 94.87% |
| | vegetable | [57] | 2021 | vegetable crops object-level classification | CropPointNet | 81.00% |
| | crop | [58] | 2020 | precise crop classification | 3D FCN | 86.50% |
| crop identification and detection | tree | [63] | 2020 | tree seedlings detecting | Faster R-CNN | 97.00% |
| | rice | [60] | 2022 | rice seedling detection | EfficientDet, Faster R-CNN | 88.80% |
| | banana | [61] | 2021 | banana plants detection | | 93.00% |
| | apple | [65] | 2020 | apple tree crown extracting | Faster R-CNN | 97.10% |
| | maize | [62] | 2022 | maize seedling number estimating | Faster R-CNN | |
| | potato | [64] | 2021 | leaf detection | Faster R-CNN | 89.06% |
| | flower | [74] | 2021 | detection and location of potted flowers | YOLO V4-Tiny | 89.72% |
| health and growth monitoring | medicinal materials | [67] | 2021 | medicinal leaf species and maturity identification | CNN | 99.00% |
| | lettuce | [68] | 2022 | lettuce growth index estimation | ResNet50 | |
| | lettuce | [73] | 2020 | growth monitoring of greenhouse lettuce | CNN | 91.56% |
| | rice | [70] | 2022 | rice seedling growth stage detection | EfficientnetB4 | 99.47% |
| | gynura bicolor | [69] | 2020 | gynura bicolor growth classification | GL-CNN | 95.63% |
| | mango | [66] | 2022 | mango leaf stress identification | CNN | 98.12% |
| | oil palm | [71] | 2021 | oil palm tree detection | Resnet-50 | 97.67% |
| | maize, rice | [72] | 2022 | corn and rice growth state recognition | | |

3.2.6. Brief Summary

- Dataset construction: In this stage, the collection of image data is diverse, with various production scenarios, image targets, and collection devices. The majority of data are collected from real-life scenes, and there is an abundance of publicly available data resources.

- Model selection: The classification network is primarily utilized for crop variety classification, weed identification, production plot classification, and health monitoring. The target-detection network is mainly employed for plant, organ, and weed detection, crop counting, and growth stage identification. The commonly used algorithms include the YOLO series and Faster R-CNN. The segmentation network is primarily used for separating plants or organs from the background and is applied in growth modeling. Semantic segmentation algorithms such as SegNet, Fully Convolutional Network (FCN), U-Net, DeepLab, and Global Convolutional Network are commonly used [37].

- Existing problems: Each dataset may vary in terms of collection perspective, hardware platform, collection cycle, image type, and other aspects. This leads to the limited adaptability of datasets across different tasks. The CNN-based target-detection network can effectively detect weed areas and patches, but the model size and operational efficiency can be limiting factors. Additionally, crop production land classification based on remote sensing images may suffer from low image resolution and small feature size, which can impact the accuracy of CNN classification and segmentation networks.

- Further research: To enhance the generalization ability of models, multiple publicly available image datasets can be used for training and improvement. Transfer learning techniques can also be applied to leverage knowledge from one image processing task to another. In the context of weed identification, the target-detection and segmentation networks hold significant value. Instead of focusing on identifying specific weed species, farmers are more interested in identifying whether a plant is a weed and its location. In real-field operations, an “exclusion strategy” can be considered, where green plants not identified as “target crops” are assumed to be “weeds”. Leveraging the regular spatial attributes of mechanized planting, weeds (areas) can be accurately identified and located with reduced computational requirements.
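The “exclusion strategy” above can be sketched in a few lines. This is only an illustration under stated assumptions: the source does not say how green plants are found, so the sketch swaps in the Excess Green index (ExG = 2g - r - b on normalized colour coordinates) with a hypothetical threshold of 0.1, and it assumes that a per-pixel crop mask has already been produced by some CNN. The function names `excess_green` and `weed_mask` and the toy image are invented for this example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0-255


def excess_green(pixel: Pixel) -> float:
    """Excess Green index, 2g - r - b, on normalized colour coordinates."""
    r, g, b = pixel
    total = r + g + b
    if total == 0:  # pure black pixel: no colour information
        return 0.0
    return (2 * g - r - b) / total


def weed_mask(
    image: List[List[Pixel]],
    crop_mask: List[List[bool]],
    threshold: float = 0.1,  # assumed value, would need tuning per dataset
) -> List[List[bool]]:
    """Exclusion strategy: vegetation pixels not labelled as crop are weeds."""
    return [
        [
            excess_green(px) > threshold and not is_crop
            for px, is_crop in zip(img_row, crop_row)
        ]
        for img_row, crop_row in zip(image, crop_mask)
    ]


# Toy 2 x 3 image: brownish soil and green vegetation (made-up values).
SOIL: Pixel = (120, 100, 80)
GREEN: Pixel = (40, 160, 40)
image = [
    [SOIL, GREEN, GREEN],
    [GREEN, SOIL, GREEN],
]
# Assumed output of a crop detector or segmenter: True where the crop is.
crop_mask = [
    [False, True, False],
    [False, False, True],
]

weeds = weed_mask(image, crop_mask)
# weeds == [[False, False, True], [True, False, False]]
```

Under these assumptions the CNN only has to recognize the target crop; everything else that is green is treated as a weed, which is why the strategy can reduce computational requirements.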

3.3. Reproductive Stage

3.3.1. Crop Variety Classification

3.3.2. Fruit and Flower Detection

3.3.3. Growth Stage and Maturity

3.3.4. Biomass and Yield Measurement

| Application Direction | Crop Varieties | Literature | Year | Image Processing Task | Network Framework | Accuracy |
|---|---|---|---|---|---|---|
| crop variety classification | grape | [75] | 2021 | grape variety identification | AlexNet, GoogLeNet | 96.90% |
| | tree | [76] | 2021 | pollen monitoring | SimpleModel | 97.88% |
| | flower | [77] | 2022 | flower classification | MobileNet | 95.50% |
| | tree | [78] | 2021 | tree species classification | ResNet-18 | |
| fruit and flower detection | kiwifruit | [80] | 2021 | kiwifruit detection | DY3TNet | 90.05% |
| | litchi | [81] * | 2022 | litchi harvester | YOLOv3-tiny litchi | 87.43% |
| | cherry | [82] | 2021 | cherry tomatoes detection | Yolov3-DPN | 94.29% |
| | apple | [83] | 2020 | apple detection | Faster R-CNN with ZFNet and VGG16 | 89.30% |
| | apple | [84] | 2020 | apple detection | Faster R-CNN with VGG16 | 87.90% |
| | kiwifruit | [85] * | 2020 | kiwifruit detection | MobileNetV2, InceptionV3 | 90.80% |
| | tomato | [86] | 2020 | immature tomatoes detection | Resnet-101 | 87.83% |
| | olive | [79] | 2020 | olive fruit identification | | 98.22% |
| | fruit | [95] | 2020 | fruit detection | | 88.10% |
| | grape | [87] | 2021 | grape bunch detection | SwinGD | 91.50% |
| | tomato | [96] | 2022 | cherry tomato recognition | Mask R-CNN | 93.76% |
| | chickpeas | [110] | 2021 | plant detection and automated counting | | 93.18% |
| | maize | [89] | 2020 | maize tassel detection | Faster R-CNN | 94.99% |
| | apple | [92] | 2020 | apple flower detection | YOLO v4 | 97.31% |
| | coffee | [97] | 2020 | coffee flower identification | VGGNet | 80.00% |
| | strawberry | [93] | 2020 | strawberry flower detection | Faster R-CNN | 86.10% |
| | apple | [94] | 2022 | apple flower bud classification | YOLOv4 | |
| growth stage and maturity detection | olive | [113] | 2021 | olive tree biovolume | Mask R-CNN | 82% |
| | guineagrass | [107] | 2021 | estimate dry matter yield of guineagrass | AlexNet, ResNeXt50 | |
| | soybean | [108] | 2022 | soybean yield prediction | YOLO v3 | 90.30% |
| | tomato | [111] | 2021 | tomato anomalies | YOLO-Dense | 96.41% |
| | tomato | [116] | 2020 | tomato ripeness identification | Fuzzy Mask R-CNN | 98.00% |
| | broccoli | [115] * | 2020 | broccoli head detection | Mask R-CNN | 98.70% |
| | sorghum panicle | [114] | 2020 | sorghum panicle detection and counting | U-NET CNN | 95.50% |
| | apple | [117] | 2020 | overlapped fruits detection and segmentation | Mask R-CNN | 97.31% |
| biomass and yield measurement | tomato | [102] | 2021 | tomato fruit monitoring | Faster R-CNN | 90.20% |
| | olive | [99] | 2021 | olive ripening recognition | M2 + NewBN | 91.91% |
| | sweet pepper | [104] | 2021 | sweet pepper development stage prediction | YOLO v5 | 77.00% |
| | cotton | [98] | 2020 | cotton boll status identification | NCADA | 86.40% |
| | broccoli | [101] | 2022 | broccoli maturity classification | Faster R-CNN, CenterNet | 80.00% |
| | tomato | [103] | 2021 | tomato fruit location identification | ResNet-101, Mask R-CNN | 95.00% |
| | strawberry | [100] | 2022 | strawberry appearance quality identification | Swin-MLP | 98.45% |
| | oil palm | [106] * | 2021 | oil palm ripeness classification | EfficientNetB0 | 89.30% |
| | apple | [105] | 2020 | apple flowers instance segmentation | MASU R-CNN | 96.43% |

3.3.5. Brief Summary

- Dataset construction: As in the vegetative stage, image resources are abundant, particularly for fruit detection.

- Model selection: CNN applications in this stage focus on object detection networks, with Faster R-CNN and the YOLO algorithms being popular choices. These algorithms primarily enable the detection of fruits and flowers and also support crop yield or maturity detection. Classification networks are mainly used for classifying flowers and fruits. Segmentation networks are employed for the instance segmentation of crop fruits, flowers, and plants, with the Mask R-CNN framework being a popular choice.

- Existing problems: While there are numerous image acquisition devices available, the combination of multiple acquisition devices is not commonly practiced. The reported accuracy of the existing research is mostly above 80%, but this is limited to specific datasets. When trained models are deployed in real production scenarios, their accuracy and speed often fall below the benchmark.

- Further research: In crop variety classification, organ images other than leaves, flowers, and fruits are worth exploring. For large-scale planting, using whole plant images for classification is recommended during the seedling stage or when plants are independent. When plants are overlapping or densely planted, it is recommended to classify crops using specific and distinct organs such as flowers, ears, and fruits. Leveraging CNN object detection networks to identify fruits at different maturity levels and integrating them into hardware devices can enable precise mechanical picking in batches and stages. Instance segmentation based on CNN segmentation networks can achieve the precise segmentation of fruit contours, providing precise targeting for mechanical operations.
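Picking in batches and stages could, for example, be planned from detector output as sketched below. This is a minimal illustration, not a method from the reviewed literature: the `FruitDetection` type, the maturity labels, the confidence cut-off of 0.5, and the function `plan_picking_batches` are all hypothetical, and the detections are made-up values standing in for the output of an object detection network.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float]


@dataclass
class FruitDetection:
    maturity: str  # hypothetical class label predicted by a detector
    box: Box
    score: float   # detector confidence in [0, 1]


def box_center(box: Box) -> Point:
    """Centre of a bounding box, used here as a simple picking target."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


def plan_picking_batches(
    detections: List[FruitDetection],
    pick_order: List[str],
    min_score: float = 0.5,  # assumed cut-off for trusting a detection
) -> Dict[str, List[Point]]:
    """Group confident detections into batches, one per maturity stage."""
    batches: Dict[str, List[Point]] = defaultdict(list)
    for det in detections:
        if det.score >= min_score and det.maturity in pick_order:
            batches[det.maturity].append(box_center(det.box))
    # Return the batches in the requested picking order.
    return {stage: batches[stage] for stage in pick_order if stage in batches}


# Made-up detections for one image.
detections = [
    FruitDetection("ripe", (10, 10, 30, 30), 0.92),
    FruitDetection("unripe", (40, 12, 60, 32), 0.88),
    FruitDetection("ripe", (70, 20, 90, 44), 0.41),       # below the cut-off
    FruitDetection("half-ripe", (100, 8, 120, 30), 0.77),
]

plan = plan_picking_batches(detections, pick_order=["ripe", "half-ripe"])
# plan == {"ripe": [(20.0, 20.0)], "half-ripe": [(110.0, 19.0)]}
```

Box centres are only a coarse target; where fruit contours from instance segmentation are available, a point derived from the mask would be the more precise choice.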

3.4. Postharvest Stage

3.4.1. Harvests Screening and Grading

| Application Direction | Crop Varieties | Literature | Year | Image Processing Task | Network Framework | Accuracy |
|---|---|---|---|---|---|---|
| harvests screening and grading | apple | [122] | 2022 | apple quality grading | Multi-View Spatial Network | 99.23% |
| | carrot | [123] | 2021 | detecting defective carrots | CarrotNet | 97.04% |
| | potato | [127] | 2021 | potato detecting | | 97% |
| | lemon | [124] | 2020 | sour lemon classification | 16–19 layer CNN | 100% |
| | nut | [118] | 2021 | nuts quality estimation | CNN | 93.48% |
| | cherry | [120] | 2020 | cherry classification | CNN | 99.40% |
| | ginseng | [128] | 2021 | ginseng sprout quality prediction | ResNet152 V2 | 80% |
| | persimmon | [126] | 2022 | persimmon fruit prediction | VGG16, ResNet50, InceptionV3 | 85% |
| | apple | [125] | 2021 | apple quality identification | CNN | 95.33% |
| | potato | [130] | 2020 | potato bud detection | Faster R-CNN | 97.71% |
| | mango | [121] | 2022 | mangoes classification and grading | InceptionV3 | 99.2% |
| | apple | [129] | 2022 | apple stem/calyx recognition | YOLO-v5 | 94% |
| | jujube fruit | [119] | 2022 | jujube fruit classification | AlexNet, VGG16 | 99.17% |
| | corn | [131] | 2020 | corn kernel detection and counting | CNN | |

3.4.2. Brief Summary

- Dataset construction: Dataset construction in this stage is similar to that in the seed stage: images are mostly collected in standardized indoor environments, and most studies rely on self-built datasets.

- Model selection: The model selection in this stage is also similar to the seed stage. The main image-processing tasks involve the screening and grading of harvested crops, and most CNN applications focus on the utilization of classification and target-detection networks. Additionally, the application of hyperspectral imaging (HSI) and multispectral imaging (MSI) techniques is also observed in some studies [22,115].

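- Existing problems: The problems are similar to those of the seed stage: standardized indoor collection environments and uniform image backgrounds limit the generalization ability of trained models, and high-quality data samples are scarce.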

- Further research: Future research endeavors can explore the application of multispectral and hyperspectral images for batch agricultural product detection. By leveraging these advanced imaging techniques, it becomes possible to achieve the early identification of internal damage, diseases, and insect pests in agricultural products. This can significantly contribute to improving the quality assurance and screening processes of agricultural products.

4. Discussion

4.1. Self-Built Network

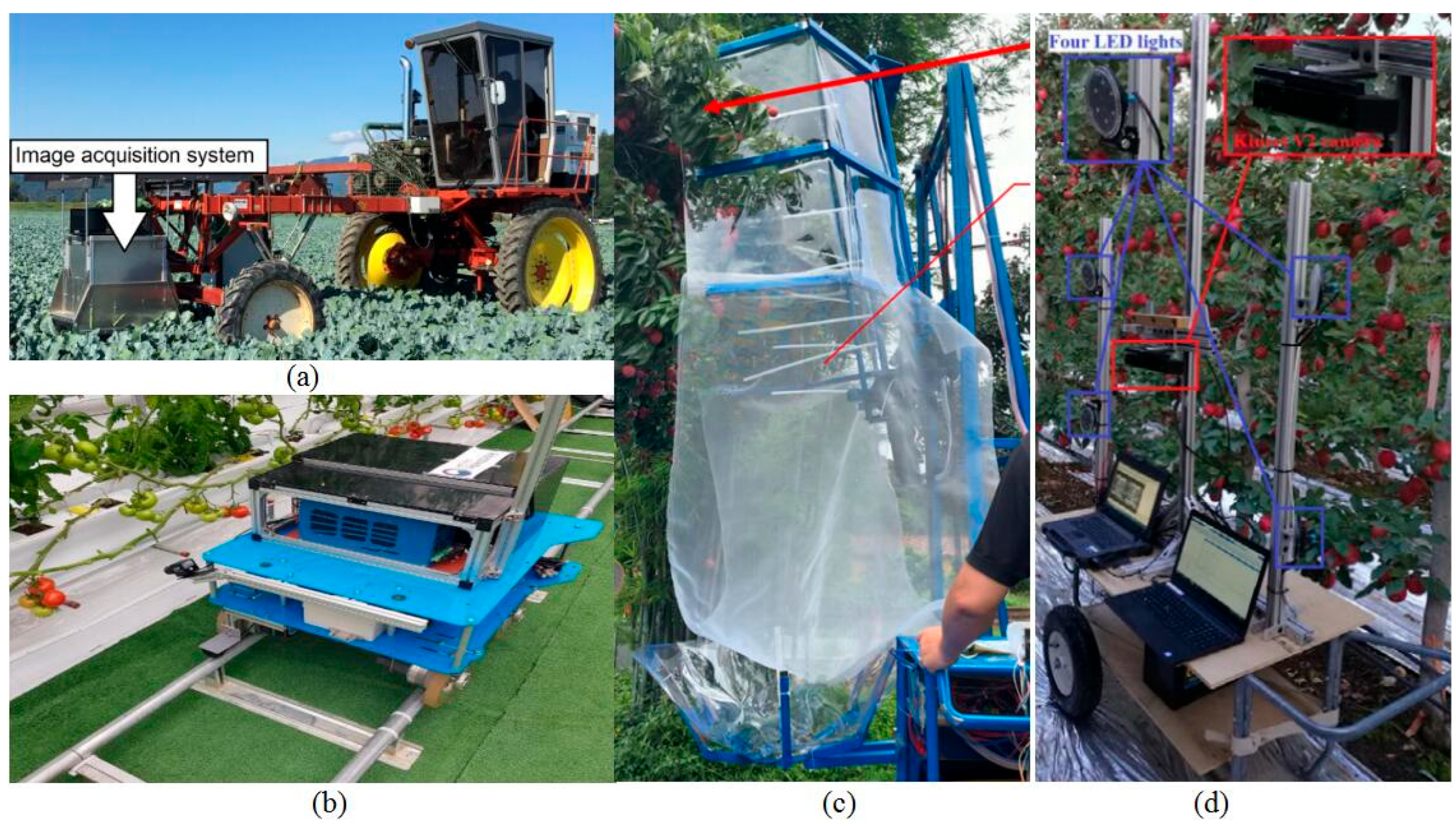

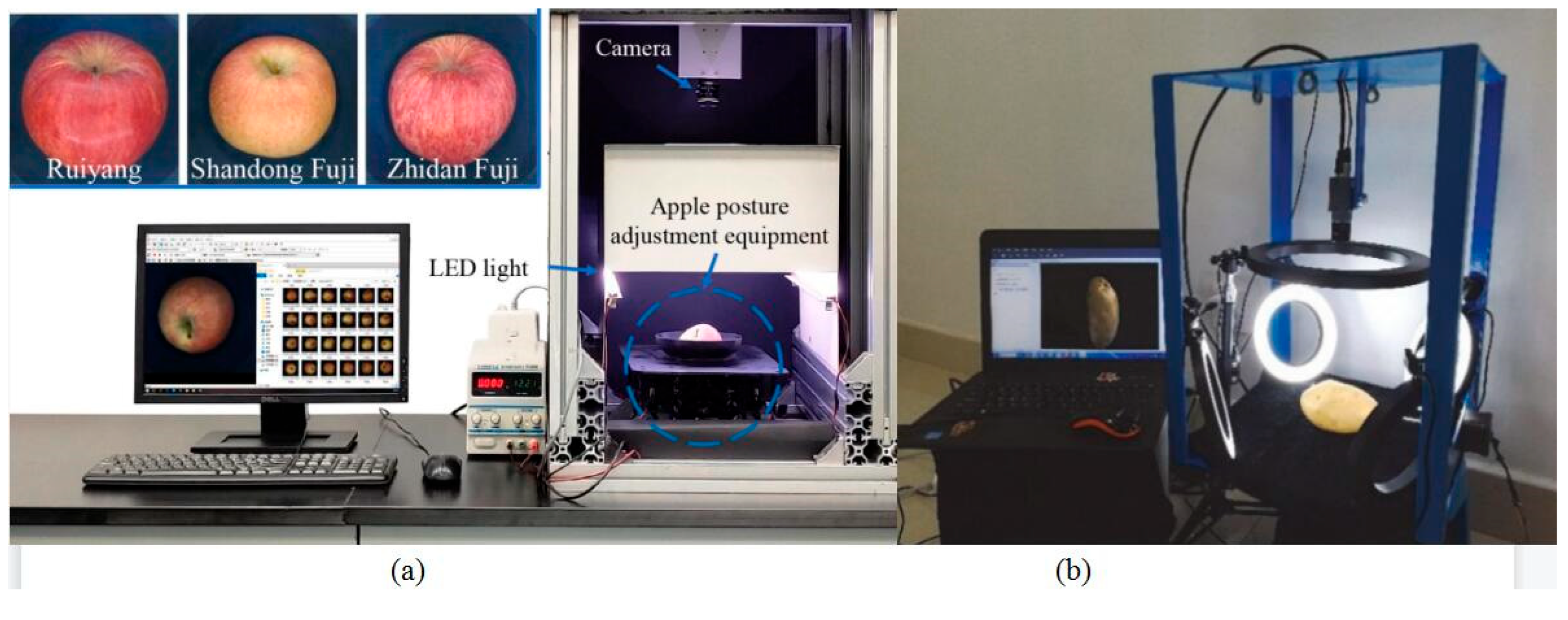

4.2. Special Image Acquisition Device

4.3. Special Objects

4.4. Multimodal Data

4.5. Cross Stage

4.6. Application Deployment

5. Research Prospect

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Koklu, M.; Cinar, I.; Taspinar, Y.S. Classification of rice varieties with deep learning methods. Comput. Electron. Agric. 2021, 187, 106285.

- Wang, D.; Cao, W.; Zhang, F.; Li, Z.; Xu, S.; Wu, X. A Review of Deep Learning in Multiscale Agricultural Sensing. Remote Sens. 2022, 14, 559.

- Elizar, E.; Zulkifley, M.A.; Muharar, R.; Zaman, M.H.M.; Mustaza, S.M. A Review on Multiscale-Deep-Learning Applications. Sensors 2022, 22, 7384.

- Pathmudi, V.R.; Khatri, N.; Kumar, S.; Abdul-Qawy, A.S.H.; Vyas, A.K. A systematic review of IoT technologies and their constituents for smart and sustainable agriculture applications. Sci. Afr. 2023, 19, e01577.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Deep learning techniques to classify agricultural crops through UAV imagery: A review. Neural Comput. Appl. 2022, 34, 9511–9536.

- Van Klompenburg, T.; Kassahun, A.; Catal, C. Crop yield prediction using machine learning: A systematic literature review. Comput. Electron. Agric. 2020, 177, 105709.

- Al-Badri, A.H.; Ismail, N.A.; Al-Dulaimi, K.; Salman, G.A.; Khan, A.R.; Al-Sabaawi, A.; Salam, M.S.H. Classification of weed using machine learning techniques: A review-challenges, current and future potential techniques. J. Plant Dis. Prot. 2022, 129, 745–768.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Liu, Y.; Pu, H.B.; Sun, D.W. Efficient extraction of deep image features using convolutional neural network (CNN) for applications in detecting and analysing complex food matrices. Trends Food Sci. Tech. 2021, 113, 193–204.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. International Conference on Neural Information Processing Systems. Curran Assoc. Inc. 2012, 25, 1097–1105.

- Taner, A.; Oztekin, Y.B.; Duran, H. Performance Analysis of Deep Learning CNN Models for Variety Classification in Hazelnut. Sustainability 2021, 13, 6527.

- Taheri-Garavand, A.; Nasiri, A.; Fanourakis, D.; Fatahi, S.; Omid, M.; Nikoloudakis, N. Automated In Situ Seed Variety Identification via Deep Learning: A Case Study in Chickpea. Plants 2021, 10, 1406.

- Gilanie, G.; Nasir, N.; Bajwa, U.I.; Ullah, H. RiceNet: Convolutional neural networks-based model to classify Pakistani grown rice seed types. Multimed. Syst. 2021, 27, 867–875.

- Sabanci, K.; Aslan, M.F.; Ropelewska, E.; Unlersen, M.F. A convolutional neural network-based comparative study for pepper seed classification: Analysis of selected deep features with support vector machine. J. Food Process Eng. 2022, 45, e13955.

- Singh, T.; Garg, N.M.; Iyengar, S.R.S. Nondestructive identification of barley seeds variety using near-infrared hyperspectral imaging coupled with convolutional neural network. J. Food Process Eng. 2021, 44, e13821.

- Jin, B.C.; Zhang, C.; Jia, L.Q.; Tang, Q.Z.; Gao, L.; Zhao, G.W.; Qi, H.N. Identification of Rice Seed Varieties Based on Near-Infrared Hyperspectral Imaging Technology Combined with Deep Learning. ACS Omega 2022, 7, 4735–4749.

- Zhu, S.L.; Zhang, J.Y.; Chao, M.N.; Xu, X.J.; Song, P.W.; Zhang, J.L.; Huang, Z.W. A Rapid and Highly Efficient Method for the Identification of Soybean Seed Varieties: Hyperspectral Images Combined with Transfer Learning. Molecules 2020, 25, 152.

- Li, X.; Fan, X.; Zhao, L.; Huang, S.; He, Y.; Suo, X. Discrimination of Pepper Seed Varieties by Multispectral Imaging Combined with Machine Learning. Appl. Eng. Agric. 2020, 36, 743–749.

- Yu, Z.Y.; Fang, H.; Zhangjin, Q.N.; Mi, C.X.; Feng, X.P.; He, Y. Hyperspectral imaging technology combined with deep learning for hybrid okra seed identification. Biosyst. Eng. 2021, 212, 46–61.

- Zhou, Q.; Huang, W.Q.; Tian, X.; Yang, Y.; Liang, D. Identification of the variety of maize seeds based on hyperspectral images coupled with convolutional neural networks and subregional voting. J. Sci. Food Agric. 2021, 101, 4532–4542.

- Javanmardi, S.; Ashtiani, S.H.M.; Verbeek, F.J.; Martynenko, A. Computer-vision classification of corn seed varieties using deep convolutional neural network. J. Stored Prod. Res. 2021, 92, 101800.

- Unlersen, M.F.; Sonmez, M.E.; Aslan, M.F.; Demir, B.; Aydin, N.; Sabanci, K.; Ropelewska, E. CNN-SVM hybrid model for varietal classification of wheat based on bulk samples. Eur. Food Res. Technol. 2022, 248, 2043–2052. [Google Scholar] [CrossRef]

- Bhupendra; Moses, K.; Miglani, A.; Kankar, P.K. Deep CNN-based damage classification of milled rice grains using a high-magnification image dataset. Comput. Electron. Agric. 2022, 195, 106811. [Google Scholar] [CrossRef]

- Chen, J.Q.; Luo, T.; Wu, J.H.; Wang, Z.K.; Zhang, H.D. A Vision Transformer network SeedViT for classification of maize seeds. J. Food Process Eng. 2022, 45, 13998. [Google Scholar] [CrossRef]

- Li, H.; Zhang, L.; Sun, H.; Rao, Z.H.; Ji, H.Y. Discrimination of unsound wheat kernels based on deep convolutional generative adversarial network and near-infrared hyperspectral imaging technology. Spectrochim. Acta Part A-Mol. Biomol. Spectrosc. 2022, 268, 120722. [Google Scholar] [CrossRef]

- Dreier, E.S.; Sorensen, K.M.; Lund-Hansen, T.; Jespersen, B.M.; Pedersen, K.S. Hyperspectral imaging for classification of bulk grain samples with deep convolutional neural networks. J. Near Infrared Spectrosc. 2022, 30, 107–121. [Google Scholar] [CrossRef]

- Sabadin, F.; Galli, G.; Borsato, R.; Gevartosky, R.; Campos, G.R.; Fritsche-Neto, R. Improving the identification of haploid maize seeds using convolutional neural networks. Crop Sci. 2021, 61, 2387–2397. [Google Scholar] [CrossRef]

- Goeau, H.; Bonnet, P.; Joly, A. Plant identification based on noisy web data: The amazing performance of deep learning (lifeclef 2017). In CLEF 2017-Conference and Labs of the Evaluation Forum; Gareth, J.F.J., Séamus, L., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–13. [Google Scholar]

- Nasiri, A.; Taheri-Garavand, A.; Fanourakis, D.; Zhang, Y.D.; Nikoloudakis, N. Automated Grapevine Cultivar Identification via Leaf Imaging and Deep Convolutional Neural Networks: A Proof-of-Concept Study Employing Primary Iranian Varieties. Plants 2021, 10, 1628. [Google Scholar] [CrossRef]

- Liu, Y.X.; Su, J.Y.; Shen, L.; Lu, N.; Fang, Y.L.; Liu, F.; Song, Y.Y.; Su, B.F. Development of a mobile application for identification of grapevine (Vitis vinifera L.) cultivars via deep learning. Int. J. Agric. Biol. Eng. 2021, 14, 172–179. [Google Scholar] [CrossRef]

- Selvam, L.; Kavitha, P. Classification of ladies finger plant leaf using deep learning. J. Ambient Intell. Humaniz. Comput. 2020. [Google Scholar] [CrossRef]

- Grinblat, G.L.; Uzal, L.C.; Larese, M.G.; Granitto, P.M. Deep learning for plant identification using vein morphological patterns. Comput. Electron. Agric. 2016, 127, 418–424. [Google Scholar] [CrossRef]

- Vayssade, J.A.; Jones, G.; Gee, C.; Paoli, J.N. Pixelwise instance segmentation of leaves in dense foliage. Comput. Electron. Agric. 2022, 195, 106797. [Google Scholar] [CrossRef]

- Manikandakumar, M.; Karthikeyan, P. Weed Classification Using Particle Swarm Optimization and Deep Learning Models. Comput. Syst. Sci. Eng. 2023, 44, 913–927. [Google Scholar] [CrossRef]

- Babu, V.S.; Ram, N.V. Deep Residual CNN with Contrast Limited Adaptive Histogram Equalization for Weed Detection in Soybean Crops. Trait. Signal 2022, 39, 717–722. [Google Scholar] [CrossRef]

- Garibaldi-Marquez, F.; Flores, G.; Mercado-Ravell, D.A.; Ramirez-Pedraza, A.; Valentin-Coronado, L.M. Weed Classification from Natural Corn Field-Multi-Plant Images Based on Shallow and Deep Learning. Sensors 2022, 22, 3021. [Google Scholar] [CrossRef]

- Moazzam, S.I.; Khan, U.S.; Qureshi, W.S.; Tiwana, M.I.; Rashid, N.; Alasmary, W.S.; Iqbal, J.; Hamza, A. A Patch-Image Based Classification Approach for Detection of Weeds in Sugar Beet Crop. IEEE Access 2021, 9, 121698–121715. [Google Scholar] [CrossRef]

- Qiao, X.; Li, Y.Z.; Su, G.Y.; Tian, H.K.; Zhang, S.; Sun, Z.Y.; Yang, L.; Wan, F.H.; Qian, W.Q. MmNet: Identifying Mikania micrantha Kunth in the wild via a deep Convolutional Neural Network. J. Integr. Agric. 2020, 19, 1292–1300. [Google Scholar] [CrossRef]

- Jiang, H.H.; Zhang, C.Y.; Qiao, Y.L.; Zhang, Z.; Zhang, W.J.; Song, C.Q. CNN feature based graph convolutional network for weed and crop recognition in smart farming. Comput. Electron. Agric. 2020, 174, 105450. [Google Scholar] [CrossRef]

- Zhuang, J.Y.; Li, X.H.; Bagavathiannan, M.; Jin, X.J.; Yang, J.; Meng, W.T.; Li, T.; Li, L.X.; Wang, Y.D.; Chen, Y.; et al. Evaluation of different deep convolutional neural networks for detection of broadleaf weed seedlings in wheat. Pest Manag. Sci. 2022, 78, 521–529. [Google Scholar] [CrossRef]

- Gao, J.F.; French, A.P.; Pound, M.P.; He, Y.; Pridmore, T.P.; Pieters, J.G. Deep convolutional neural networks for image-based Convolvulus sepium detection in sugar beet fields. Plant Methods 2020, 16, 29. [Google Scholar] [CrossRef]

- Sapkota, B.B.; Hu, C.S.; Bagavathiannan, M.V. Evaluating Cross-Applicability of Weed Detection Models Across Different Crops in Similar Production Environments. Front. Plant Sci. 2022, 13, 837726. [Google Scholar] [CrossRef] [PubMed]

- Sivakumar, A.N.V.; Li, J.T.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y.Y. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid-to Late-Season Weed Detection in UAV Imagery. Remote Sens. 2020, 12, 2136. [Google Scholar] [CrossRef]

- Hennessy, P.J.; Esau, T.J.; Farooque, A.A.; Schumann, A.W.; Zaman, Q.U.; Corscadden, K.W. Hair Fescue and Sheep Sorrel Identification Using Deep Learning in Wild Blueberry Production. Remote Sens. 2021, 13, 943. [Google Scholar] [CrossRef]

- Yang, J.; Wang, Y.D.; Chen, Y.; Yu, J.L. Detection of Weeds Growing in Alfalfa Using Convolutional Neural Networks. Agronomy 2022, 12, 1459. [Google Scholar] [CrossRef]

- Nasiri, A.; Omid, M.; Taheri-Garavand, A.; Jafari, A. Deep learning-based precision agriculture through weed recognition in sugar beet fields. Sustain. Comput.-Inform. Syst. 2022, 35, 100759. [Google Scholar] [CrossRef]

- Su, D.; Kong, H.; Qiao, Y.L.; Sukkarieh, S. Data augmentation for deep learning based semantic segmentation and crop-weed classification in agricultural robotics. Comput. Electron. Agric. 2021, 190, 106418. [Google Scholar] [CrossRef]

- Agilandeeswari, L.; Prabukumar, M.; Radhesyam, V.; Phaneendra, K.L.N.B.; Farhan, A. Crop Classification for Agricultural Applications in Hyperspectral Remote Sensing Images. Appl. Sci. 2022, 12, 1670. [Google Scholar] [CrossRef]

- Pandey, A.; Jain, K. An intelligent system for crop identification and classification from UAV images using conjugated dense convolutional neural network. Comput. Electron. Agric. 2022, 192, 106543. [Google Scholar] [CrossRef]

- Zhong, Y.F.; Hu, X.; Luo, C.; Wang, X.Y.; Zhao, J.; Zhang, L.P. WHU-Hi: UAV-borne hyperspectral with high spatial resolution (H-2) benchmark datasets and classifier for precise crop identification based on deep convolutional neural network with CRF. Remote Sens. Environ. 2020, 250, 112012. [Google Scholar] [CrossRef]

- Park, S.; Park, N.W. Effects of Class Purity of Training Patch on Classification Performance of Crop Classification with Convolutional Neural Network. Appl. Sci. 2020, 10, 3773. [Google Scholar] [CrossRef]

- Feng, Q.L.; Yang, J.Y.; Liu, Y.M.; Ou, C.; Zhu, D.H.; Niu, B.W.; Liu, J.T.; Li, B.G. Multi-Temporal Unmanned Aerial Vehicle Remote Sensing for Vegetable Mapping Using an Attention-Based Recurrent Convolutional Neural Network. Remote Sens. 2020, 12, 1668. [Google Scholar] [CrossRef]

- Yang, S.T.; Gu, L.J.; Li, X.F.; Jiang, T.; Ren, R.Z. Crop Classification Method Based on Optimal Feature Selection and Hybrid CNN-RF Networks for Multi-Temporal Remote Sensing Imagery. Remote Sens. 2020, 12, 3119. [Google Scholar] [CrossRef]

- Quiroz, I.A.; Alferez, G.H. Image recognition of Legacy blueberries in a Chilean smart farm through deep learning. Comput. Electron. Agric. 2020, 168, 105044. [Google Scholar] [CrossRef]

- Li, Z.T.; Chen, G.K.; Zhang, T.X. A CNN-Transformer Hybrid Approach for Crop Classification Using Multitemporal Multisensor Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 847–858. [Google Scholar] [CrossRef]

- Wang, L.Y.; Liu, M.L.; Liu, X.N.; Wu, L.; Wan, P.; Wu, C.Y. Pretrained convolutional neural network for classifying rice-cropping systems based on spatial and spectral trajectories of Sentinel-2 time series. J. Appl. Remote Sens. 2020, 14, 014506. [Google Scholar] [CrossRef]

- Jayakumari, R.; Nidamanuri, R.R.; Ramiya, A.M. Object-level classification of vegetable crops in 3D LiDAR point cloud using deep learning convolutional neural networks. Precis. Agric. 2021, 22, 1617–1633. [Google Scholar] [CrossRef]

- Ji, S.P.; Zhang, Z.L.; Zhang, C.; Wei, S.Q.; Lu, M.; Duan, Y.L. Learning discriminative spatiotemporal features for precise crop classification from multi-temporal satellite images. Int. J. Remote Sens. 2020, 41, 3162–3174. [Google Scholar] [CrossRef]

- Wang, S.Y.; Xu, Z.G.; Zhang, C.M.; Zhang, J.H.; Mu, Z.S.; Zhao, T.Y.; Wang, Y.Y.; Gao, S.; Yin, H.; Zhang, Z.Y. Improved Winter Wheat Spatial Distribution Extraction Using A Convolutional Neural Network and Partly Connected Conditional Random Field. Remote Sens. 2020, 12, 821. [Google Scholar] [CrossRef]

- Tseng, H.H.; Yang, M.D.; Saminathan, R.; Hsu, Y.C.; Yang, C.Y.; Wu, D.H. Rice Seedling Detection in UAV Images Using Transfer Learning and Machine Learning. Remote Sens. 2022, 14, 2837. [Google Scholar] [CrossRef]

- Aeberli, A.; Johansen, K.; Robson, A.; Lamb, D.W.; Phinn, S. Detection of Banana Plants Using Multi-Temporal Multispectral UAV Imagery. Remote Sens. 2021, 13, 2123. [Google Scholar] [CrossRef]

- Liu, S.B.; Yin, D.M.; Feng, H.K.; Li, Z.H.; Xu, X.B.; Shi, L.; Jin, X.L. Estimating maize seedling number with UAV RGB images and advanced image processing methods. Precis. Agric. 2022, 23, 1604–1632. [Google Scholar] [CrossRef]

- Pearse, G.D.; Tan, A.Y.S.; Watt, M.S.; Franz, M.O.; Dash, J.P. Detecting and mapping tree seedlings in UAV imagery using convolutional neural networks and field-verified data. ISPRS J. Photogramm. Remote Sens. 2020, 168, 156–169. [Google Scholar] [CrossRef]

- Zhang, L.K.; Xia, C.L.; Xiao, D.Q.; Weckler, P.; Lan, Y.B.; Lee, J.M. A coarse-to-fine leaf detection approach based on leaf skeleton identification and joint segmentation. Biosyst. Eng. 2021, 206, 94–108. [Google Scholar] [CrossRef]

- Wu, J.T.; Yang, G.J.; Yang, H.; Zhu, Y.H.; Li, Z.H.; Lei, L.; Zhao, C.J. Extracting apple tree crown information from remote imagery using deep learning. Comput. Electron. Agric. 2020, 174, 105504. [Google Scholar] [CrossRef]

- Gautam, V.; Rani, J. Mango Leaf Stress Identification Using Deep Neural Network. Intell. Autom. Soft Comput. 2022, 34, 849–864. [Google Scholar] [CrossRef]

- Mukherjee, G.; Tudu, B.; Chatterjee, A. A convolutional neural network-driven computer vision system toward identification of species and maturity stage of medicinal leaves: Case studies with Neem, Tulsi and Kalmegh leaves. Soft Comput. 2021, 25, 14119–14138. [Google Scholar] [CrossRef]

- Gang, M.S.; Kim, H.J.; Kim, D.W. Estimation of Greenhouse Lettuce Growth Indices Based on a Two-Stage CNN Using RGB-D Images. Sensors 2022, 22, 5499. [Google Scholar] [CrossRef] [PubMed]

- Hao, X.; Jia, J.D.; Khattak, A.M.; Zhang, L.; Guo, X.C.; Gao, W.L.; Wang, M.J. Growing period classification of Gynura bicolor DC using GL-CNN. Comput. Electron. Agric. 2020, 174, 105497. [Google Scholar] [CrossRef]

- Tan, S.Y.; Liu, J.B.; Lu, H.H.; Lan, M.Y.; Yu, J.; Liao, G.Z.; Wang, Y.W.; Li, Z.H.; Qi, L.; Ma, X. Machine Learning Approaches for Rice Seedling Growth Stages Detection. Front. Plant Sci. 2022, 13, 914771. [Google Scholar] [CrossRef]

- Yarak, K.; Witayangkurn, A.; Kritiyutanont, K.; Arunplod, C.; Shibasaki, R. Oil Palm Tree Detection and Health Classification on High-Resolution Imagery Using Deep Learning. Agriculture 2021, 11, 183. [Google Scholar] [CrossRef]

- Tian, L.; Wang, C.; Li, H.L.; Sun, H.T. Recognition Method of Corn and Rice Crop Growth State Based on Computer Image Processing Technology. J. Food Qual. 2022, 2022, 2844757. [Google Scholar] [CrossRef]

- Zhang, L.X.; Xu, Z.Y.; Xu, D.; Ma, J.C.; Chen, Y.Y.; Fu, Z.T. Growth monitoring of greenhouse lettuce based on a convolutional neural network. Hortic. Res. 2020, 7, 124. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.Z.; Gao, Z.H.; Zhang, Y.; Zhou, J.; Wu, J.Z.; Li, P.P. Real-Time Detection and Location of Potted Flowers Based on a ZED Camera and a YOLO V4-Tiny Deep Learning Algorithm. Horticulturae 2022, 8, 21. [Google Scholar] [CrossRef]

- Peng, Y.; Zhao, S.Y.; Liu, J.Z. Fused Deep Features-Based Grape Varieties Identification Using Support Vector Machine. Agriculture 2021, 11, 869. [Google Scholar] [CrossRef]

- Wang, Z.B.; Wang, K.Y.; Wang, X.F.; Pan, S.H.; Qiao, X.J. Dynamic ensemble selection of convolutional neural networks and its application in flower classification. Int. J. Agric. Biol. Eng. 2022, 15, 216–223. [Google Scholar] [CrossRef]

- Briechle, S.; Krzystek, P.; Vosselman, G. Silvi-Net-A dual-CNN approach for combined classification of tree species and standing dead trees from remote sensing data. Int. J. Appl. Earth Obs. Geoinf. 2021, 98, 102292. [Google Scholar] [CrossRef]

- Kubera, E.; Kubik-Komar, A.; Piotrowska-Weryszko, K.; Skrzypiec, M. Deep Learning Methods for Improving Pollen Monitoring. Sensors 2021, 21, 3526. [Google Scholar] [CrossRef]

- Aquino, A.; Ponce, J.M.; Andujar, J.M. Identification of olive fruit, in intensive olive orchards, by means of its morphological structure using convolutional neural networks. Comput. Electron. Agric. 2020, 176, 105616. [Google Scholar] [CrossRef]

- Fu, L.S.; Feng, Y.L.; Wu, J.Z.; Liu, Z.H.; Gao, F.F.; Majeed, Y.; Al-Mallahi, A.; Zhang, Q.; Li, R.; Cui, Y.J. Fast and accurate detection of kiwifruit in orchard using improved YOLOv3-tiny model. Precis. Agric. 2021, 22, 754–776. [Google Scholar] [CrossRef]

- Li, C.; Lin, J.Q.; Li, B.Y.; Zhang, S.; Li, J. Partition harvesting of a column-comb litchi harvester based on 3D clustering. Comput. Electron. Agric. 2022, 197, 106975. [Google Scholar] [CrossRef]

- Chen, J.Q.; Wang, Z.K.; Wu, J.H.; Hu, Q.; Zhao, C.Y.; Tan, C.Z.; Teng, L.; Luo, T. An improved Yolov3 based on dual path network for cherry tomatoes detection. J. Food Process Eng. 2021, 44, e13803. [Google Scholar] [CrossRef]

- Fu, L.S.; Majeed, Y.; Zhang, X.; Karkee, M.; Zhang, Q. Faster R-CNN-based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosyst. Eng. 2020, 197, 245–256. [Google Scholar] [CrossRef]

- Gao, F.F.; Fu, L.S.; Zhang, X.; Majeed, Y.; Li, R.; Karkee, M.; Zhang, Q. Multi-class fruit-on-plant detection for apple in SNAP system using Faster R-CNN. Comput. Electron. Agric. 2020, 176, 105634. [Google Scholar] [CrossRef]

- Zhou, Z.X.; Song, Z.Z.; Fu, L.S.; Gao, F.F.; Li, R.; Cui, Y.J. Real-time kiwifruit detection in orchard using deep learning on Android (TM) smartphones for yield estimation. Comput. Electron. Agric. 2020, 179, 105856. [Google Scholar] [CrossRef]

- Mu, Y.; Chen, T.S.; Ninomiya, S.; Guo, W. Intact Detection of Highly Occluded Immature Tomatoes on Plants Using Deep Learning Techniques. Sensors 2020, 20, 2984. [Google Scholar] [CrossRef]

- Wang, J.H.; Zhang, Z.Y.; Luo, L.F.; Zhu, W.B.; Chen, J.W.; Wang, W. SwinGD: A Robust Grape Bunch Detection Model Based on Swin Transformer in Complex Vineyard Environment. Horticulturae 2021, 7, 492. [Google Scholar] [CrossRef]

- Zhang, Y.L.; Xiao, W.W.; Lu, X.Y.; Liu, A.M.; Qi, Y.; Liu, H.C.; Shi, Z.K.; Lan, Y.B. Method for detecting rice flowering spikelets using visible light images. Trans. Chin. Soc. Agric. Eng. 2021, 37, 253–262. [Google Scholar] [CrossRef]

- Liu, Y.L.; Cen, C.J.; Che, Y.P.; Ke, R.; Ma, Y.; Ma, Y.T. Detection of Maize Tassels from UAV RGB Imagery with Faster R-CNN. Remote Sens. 2020, 12, 338. [Google Scholar] [CrossRef]

- Chandra, A.L.; Desai, S.V.; Balasubramanian, V.N.; Ninomiya, S.; Guo, W. Active learning with point supervision for cost-effective panicle detection in cereal crops. Plant Methods 2020, 16, 1–16. [Google Scholar] [CrossRef]

- Rahim, U.F.; Utsumi, T.; Mineno, H. Deep learning-based accurate grapevine inflorescence and flower quantification in unstructured vineyard images acquired using a mobile sensing platform. Comput. Electron. Agric. 2022, 198, 107088. [Google Scholar] [CrossRef]

- Wu, D.H.; Lv, S.H.; Jiang, M.; Song, H.B. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742. [Google Scholar] [CrossRef]

- Lin, P.; Lee, W.S.; Chen, Y.M.; Peres, N.; Fraisse, C. A deep-level region-based visual representation architecture for detecting strawberry flowers in an outdoor field. Precis. Agric. 2020, 21, 387–402. [Google Scholar] [CrossRef]

- Yuan, W.N.; Choi, D.; Bolkas, D.; Heinemann, P.H.; He, L. Sensitivity examination of YOLOv4 regarding test image distortion and training dataset attribute for apple flower bud classification. Int. J. Remote Sens. 2022, 43, 3106–3130. [Google Scholar] [CrossRef]

- Gene-Mola, J.; Sanz-Cortiella, R.; Rosell-Polo, J.R.; Morros, J.R.; Ruiz-Hidalgo, J.; Vilaplana, V.; Gregorio, E. Fruit detection and 3D location using instance segmentation neural networks and structure-from-motion photogrammetry. Comput. Electron. Agric. 2020, 169, 105165. [Google Scholar] [CrossRef]

- Xu, P.H.; Fang, N.; Liu, N.; Lin, F.S.; Yang, S.Q.; Ning, J.F. Visual recognition of cherry tomatoes in plant factory based on improved deep instance segmentation. Comput. Electron. Agric. 2022, 197, 106991. [Google Scholar] [CrossRef]

- Wei, P.L.; Jiang, T.; Peng, H.Y.; Jin, H.W.; Sun, H.; Chai, D.F.; Huang, J.F. Coffee Flower Identification Using Binarization Algorithm Based on Convolutional Neural Network for Digital Images. Plant Phenomics 2020, 2020, 6323965. [Google Scholar] [CrossRef]

- Li, Y.A.; Cao, Z.G.; Lu, H.; Xu, W.X. Unsupervised domain adaptation for in-field cotton boll status identification. Comput. Electron. Agric. 2020, 178, 105745. [Google Scholar] [CrossRef]

- Khosravi, H.; Saedi, S.I.; Rezaei, M. Real-time recognition of on-branch olive ripening stages by a deep convolutional neural network. Sci. Hortic. 2021, 287, 110252. [Google Scholar] [CrossRef]

- Zheng, H.; Wang, G.H.; Li, X.C. Swin-MLP: A strawberry appearance quality identification method by Swin Transformer and multi-layer perceptron. J. Food Meas. Charact. 2022, 16, 2789–2800. [Google Scholar] [CrossRef]

- Psiroukis, V.; Espejo-Garcia, B.; Chitos, A.; Dedousis, A.; Karantzalos, K.; Fountas, S. Assessment of Different Object Detectors for the Maturity Level Classification of Broccoli Crops Using UAV Imagery. Remote Sens. 2022, 14, 731. [Google Scholar] [CrossRef]

- Seo, D.; Cho, B.H.; Kim, K.C. Development of Monitoring Robot System for Tomato Fruits in Hydroponic Greenhouses. Agronomy 2021, 11, 2211. [Google Scholar] [CrossRef]

- Hsieh, K.W.; Huang, B.Y.; Hsiao, K.Z.; Tuan, Y.H.; Shih, F.P.; Hsieh, L.C.; Chen, S.M.; Yang, I.C. Fruit maturity and location identification of beef tomato using R-CNN and binocular imaging technology. J. Food Meas. Charact. 2021, 15, 5170–5180. [Google Scholar] [CrossRef]

- Moon, T.; Park, J.; Son, J.E. Prediction of the fruit development stage of sweet pepper by an ensemble model of convolutional and multilayer perceptron. Biosyst. Eng. 2021, 210, 171–180. [Google Scholar] [CrossRef]

- Tian, Y.N.; Yang, G.D.; Wang, Z.; Li, E.; Liang, Z.Z. Instance segmentation of apple flowers using the improved mask R-CNN model. Biosyst. Eng. 2020, 193, 264–278. [Google Scholar] [CrossRef]

- Suharjito; Elwirehardja, G.N.; Prayoga, J.S. Oil palm fresh fruit bunch ripeness classification on mobile devices using deep learning approaches. Comput. Electron. Agric. 2021, 188, 106359. [Google Scholar] [CrossRef]

- De Oliveira, G.S.; Marcato Junior, J.; Polidoro, C.; Osco, L.P.; Siqueira, H.; Rodrigues, L.; Jank, L.; Barrios, S.; Valle, C.; Simeão, R.; et al. Convolutional Neural Networks to Estimate Dry Matter Yield in a Guineagrass Breeding Program Using UAV Remote Sensing. Sensors 2021, 21, 3971. [Google Scholar] [CrossRef] [PubMed]

- Lu, W.; Du, R.; Niu, P.; Xing, G.; Luo, H.; Deng, Y.; Shu, L. Soybean Yield Preharvest Prediction Based on Bean Pods and Leaves Image Recognition Using Deep Learning Neural Network Combined With GRNN. Front. Plant Sci. 2022, 12, 791256. [Google Scholar] [CrossRef]

- Vasconez, J.P.; Delpiano, J.; Vougioukas, S.; Cheein, F.A. Comparison of convolutional neural networks in fruit detection and counting: A comprehensive evaluation. Comput. Electron. Agric. 2020, 173, 105348. [Google Scholar] [CrossRef]

- Kartal, S.; Choudhary, S.; Masner, J.; Kholová, J.; Stočes, M.; Gattu, P.; Schwartz, S.; Kissel, E. Machine Learning-Based Plant Detection Algorithms to Automate Counting Tasks Using 3D Canopy Scans. Sensors 2021, 21, 8022. [Google Scholar] [CrossRef]

- Wang, X.W.; Liu, J. Tomato Anomalies Detection in Greenhouse Scenarios Based on YOLO-Dense. Front. Plant Sci. 2021, 12, 634103. [Google Scholar] [CrossRef]

- Xu, X.; Wang, L.; Shu, M.; Liang, X.; Ghafoor, A.Z.; Liu, Y.; Ma, Y.; Zhu, J. Detection and Counting of Maize Leaves Based on Two-Stage Deep Learning with UAV-Based RGB Image. Remote Sens. 2022, 14, 5388. [Google Scholar] [CrossRef]

- Safonova, A.; Guirado, E.; Maglinets, Y.; Alcaraz-Segura, D.; Tabik, S. Olive Tree Biovolume from UAV Multi-Resolution Image Segmentation with Mask R-CNN. Sensors 2021, 21, 1617. [Google Scholar] [CrossRef]

- Lin, Z.; Guo, W.X. Sorghum Panicle Detection and Counting Using Unmanned Aerial System Images and Deep Learning. Front. Plant Sci. 2020, 11, 534853. [Google Scholar] [CrossRef] [PubMed]

- Blok, P.M.; van Evert, F.K.; Tielen, A.P.; van Henten, E.J.; Kootstra, G. The effect of data augmentation and network simplification on the image-based detection of broccoli heads with Mask R-CNN. J. Field Robot. 2021, 38, 85–104. [Google Scholar] [CrossRef]

- Huang, Y.P.; Wang, T.H.; Basanta, H. Using Fuzzy Mask R-CNN Model to Automatically Identify Tomato Ripeness. IEEE Access 2020, 8, 207672–207682. [Google Scholar] [CrossRef]

- Jia, W.; Tian, Y.; Luo, R.; Zhang, Z.; Lian, J.; Zheng, Y. Detection and segmentation of overlapped fruits based on optimized mask R-CNN application in apple harvesting robot. Comput. Electron. Agric. 2020, 172, 105380. [Google Scholar] [CrossRef]

- Han, Y.; Liu, Z.; Khoshelham, K.; Bai, S.H. Quality estimation of nuts using deep learning classification of hyperspectral imagery. Comput. Electron. Agric. 2021, 180, 105868. [Google Scholar] [CrossRef]

- Mahmood, A.; Singh, S.K.; Tiwari, A.K. Pre-trained deep learning-based classification of jujube fruits according to their maturity level. Neural Comput. Appl. 2022, 34, 13925–13935. [Google Scholar] [CrossRef]

- Momeny, M.; Jahanbakhshi, A.; Jafarnezhad, K.; Zhang, Y.D. Accurate classification of cherry fruit using deep CNN based on hybrid pooling approach. Postharvest Biol. Technol. 2020, 166, 111204. [Google Scholar] [CrossRef]

- Iqbal, H.M.; Hakim, A. Classification and Grading of Harvested Mangoes Using Convolutional Neural Network. Int. J. Fruit Sci. 2022, 22, 95–109. [Google Scholar] [CrossRef]

- Shi, X.; Chai, X.; Yang, C.; Xia, X.; Sun, T. Vision-based apple quality grading with multi-view spatial network. Comput. Electron. Agric. 2022, 195, 106793. [Google Scholar] [CrossRef]

- Xie, W.; Wei, S.; Zheng, Z.; Yang, D. A CNN-based lightweight ensemble model for detecting defective carrots. Biosyst. Eng. 2021, 208, 287–299. [Google Scholar] [CrossRef]

- Jahanbakhshi, A.; Momeny, M.; Mahmoudi, M.; Zhang, Y.D. Classification of sour lemons based on apparent defects using stochastic pooling mechanism in deep convolutional neural networks. Sci. Hortic. 2020, 263, 109133. [Google Scholar] [CrossRef]

- Li, Y.; Feng, X.; Liu, Y.; Han, X. Apple quality identification and classification by image processing based on convolutional neural networks. Sci. Rep. 2021, 11, 16618. [Google Scholar] [CrossRef]

- Suzuki, M.; Masuda, K.; Asakuma, H.; Takeshita, K.; Baba, K.; Kubo, Y.; Ushijima, K.; Uchida, S.; Akagi, T. Deep Learning Predicts Rapid Over-softening and Shelf Life in Persimmon Fruits. Hortic. J. 2022, 91, 408–415. [Google Scholar] [CrossRef]

- Korchagin, S.A.; Gataullin, S.T.; Osipov, A.V.; Smirnov, M.V.; Suvorov, S.V.; Serdechnyi, D.V.; Bublikov, K.V. Development of an Optimal Algorithm for Detecting Damaged and Diseased Potato Tubers Moving along a Conveyor Belt Using Computer Vision Systems. Agronomy 2021, 11, 1980. [Google Scholar] [CrossRef]

- Lee, C.G.; Jeong, S.B. A Quality Prediction Model for Ginseng Sprouts based on CNN. J. Korea Soc. Simul. 2021, 30, 41–48. [Google Scholar] [CrossRef]

- Wang, Z.; Jin, L.; Wang, S.; Xu, H. Apple stem/calyx real-time recognition using YOLO-v5 algorithm for fruit automatic loading system. Postharvest Biol. Technol. 2022, 185, 111808. [Google Scholar] [CrossRef]

- Xi, R.; Hou, J.; Lou, W. Potato Bud Detection with Improved Faster R-CNN. Trans. ASABE 2020, 63, 557–569. [Google Scholar] [CrossRef]

- Khaki, S.; Pham, H.; Han, Y.; Kuhl, A.; Kent, W.; Wang, L. Convolutional Neural Networks for Image-Based Corn Kernel Detection and Counting. Sensors 2020, 20, 2721. [Google Scholar] [CrossRef]

- Li, H.; Zhang, L.; Sun, H.; Rao, Z.; Ji, H. Identification of soybean varieties based on hyperspectral imaging technology and one-dimensional convolutional neural network. J. Food Process Eng. 2021, 44, e13767. [Google Scholar] [CrossRef]

- Trevisan, R.; Pérez, O.; Schmitz, N.; Diers, B.; Martin, N. High-Throughput Phenotyping of Soybean Maturity Using Time Series UAV Imagery and Convolutional Neural Networks. Remote Sens. 2020, 12, 3617. [Google Scholar] [CrossRef]

- Sun, H.; Wang, L.; Lin, R.; Zhang, Z.; Zhang, B. Mapping Plastic Greenhouses with Two-Temporal Sentinel-2 Images and 1D-CNN Deep Learning. Remote Sens. 2021, 13, 2820. [Google Scholar] [CrossRef]

- Kim, T.K.; Baek, G.H.; Seok, K.H. Construction of a Bark Dataset for Automatic Tree Identification and Developing a Convolutional Neural Network-based Tree Species Identification Model. J. Korean Soc. For. Sci. 2021, 110, 155–164. [Google Scholar]

- Deng, R.; Jiang, Y.; Tao, M.; Huang, X.; Bangura, K.; Liu, C.; Lin, J.; Qi, L. Deep learning-based automatic detection of productive tillers in rice. Comput. Electron. Agric. 2020, 177, 105703. [Google Scholar] [CrossRef]

- Kalampokas, T.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Grape stem detection using regression convolutional neural networks. Comput. Electron. Agric. 2021, 186, 106220. [Google Scholar] [CrossRef]

- Wang, S.; Zhang, W.; Wang, X.; Yu, S. Recognition of rice seedling rows based on row vector grid classification. Comput. Electron. Agric. 2021, 190, 106454. [Google Scholar] [CrossRef]

- Gao, J.; Liu, C.; Han, J.; Lu, Q.; Wang, H.; Zhang, J.; Bai, X.; Luo, J. Identification Method of Wheat Cultivars by Using a Convolutional Neural Network Combined with Images of Multiple Growth Periods of Wheat. Symmetry 2021, 13, 2012. [Google Scholar] [CrossRef]

- Zhang, J.; Karkee, M.; Zhang, Q.; Zhang, X.; Yaqoob, M.; Fu, L.; Wang, S. Multi-class object detection using faster R-CNN and estimation of shaking locations for automated shake-and-catch apple harvesting. Comput. Electron. Agric. 2020, 173, 105384. [Google Scholar] [CrossRef]

- Yang, C.H.; Xiong, L.Y.; Wang, Z.; Wang, Y.; Shi, G.; Kuremoto, T.; Zhao, W.H.; Yang, Y. Integrated detection of citrus fruits and branches using a convolutional neural network. Comput. Electron. Agric. 2020, 174, 105469. [Google Scholar] [CrossRef]

- Abdalla, A.; Cen, H.; Wan, L.; Mehmood, K.; He, Y. Nutrient Status Diagnosis of Infield Oilseed Rape via Deep Learning-Enabled Dynamic Model. IEEE Trans. Ind. Inform. 2021, 17, 4379–4389. [Google Scholar] [CrossRef]

- Sun, Z.; Guo, X.; Xu, Y.; Zhang, S.; Cheng, X.; Hu, Q.; Wang, W.; Xue, X. Image Recognition of Male Oilseed Rape Plants Based on Convolutional Neural Network for UAAS Navigation Applications on Supplementary Pollination and Aerial Spraying. Agriculture 2022, 12, 62. [Google Scholar] [CrossRef]

- Yang, W.; Nigon, T.; Hao, Z.; Paiao, G.D.; Fernández, F.G.; Mulla, D.; Yang, C. Estimation of corn yield based on hyperspectral imagery and convolutional neural network. Comput. Electron. Agric. 2021, 184, 106092. [Google Scholar] [CrossRef]

- Han, J.; Shi, L.; Yang, Q.; Huang, K.; Zha, Y.; Yu, J. Real-time detection of rice phenology through convolutional neural network using handheld camera images. Precis. Agric. 2021, 22, 154–178. [Google Scholar] [CrossRef]

- Massah, J.; Vakilian, K.A.; Shabanian, M.; Shariatmadari, S.M. Design, development, and performance evaluation of a robot for yield estimation of kiwifruit. Comput. Electron. Agric. 2021, 185, 106132. [Google Scholar] [CrossRef]

- Li, Y.; Chao, X.W. ANN-Based Continual Classification in Agriculture. Agriculture 2020, 10, 178. [Google Scholar] [CrossRef]

- CWD30: A Comprehensive and Holistic Dataset for Crop Weed Recognition in Precision Agriculture. Available online: https://arxiv.org/abs/2305.10084 (accessed on 17 May 2023).

| Dataset Name | Data Volume | Access Address |
|---|---|---|
| global wheat detection | 4700 | https://www.kaggle.com/c/global-wheat-detection/data (accessed on 12 January 2023) |
| flower recognition dataset | 4242 | https://www.kaggle.com/datasets/alxmamaev/flowers-recognition (accessed on 19 December 2022) |
| pest and disease library | 17,624 | http://www.icgroupcas.cn/website_bchtk/index.html (accessed on 5 January 2023) |
| AI Challenger 2018 | 50,000 | https://aistudio.baidu.com/aistudio/datasetdetail/76075 (accessed on 16 January 2023) |
| plant village | 54,303 | https://data.mendeley.com/datasets/tywbtsjrjv/1 (accessed on 5 January 2023) |
| lettuce growth images | 388 | https://doi.org/10.4121/15023088.v1 (accessed on 25 January 2023) |
| ABCPollen dataset | 1274 | http://kzmi.up.lublin.pl/~ekubera/ABCPollen.zip (accessed on 19 December 2022) |
| WGISD (grape) | 300 | https://github.com/thsant/wgisd (accessed on 22 January 2023) |
| hyperspectral remote sensing scenes | | http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 15 January 2023) |
| weed dataset | | http://agritech.tnau.ac.in/agriculture/agri_weedmgt_fieldcrops.html (accessed on 19 December 2022) |
| ICAR-DWR database (weed) | | http://weedid.dwr.org.in/ (accessed on 19 December 2022) |
| CWD30 dataset (weed) | 219,770 | https://arxiv.org/abs/2305.10084 (accessed on 17 May 2023) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, F.; Zhang, Q.; Xiao, J.; Ma, Y.; Wang, M.; Luan, R.; Liu, X.; Ping, Y.; Nie, Y.; Tao, Z.; et al. Progress in the Application of CNN-Based Image Classification and Recognition in Whole Crop Growth Cycles. Remote Sens. 2023, 15, 2988. https://doi.org/10.3390/rs15122988