AHF: An Automatic and Universal Image Preprocessing Algorithm for Circular-Coded Targets Identification in Close-Range Photogrammetry under Complex Illumination Conditions

Abstract

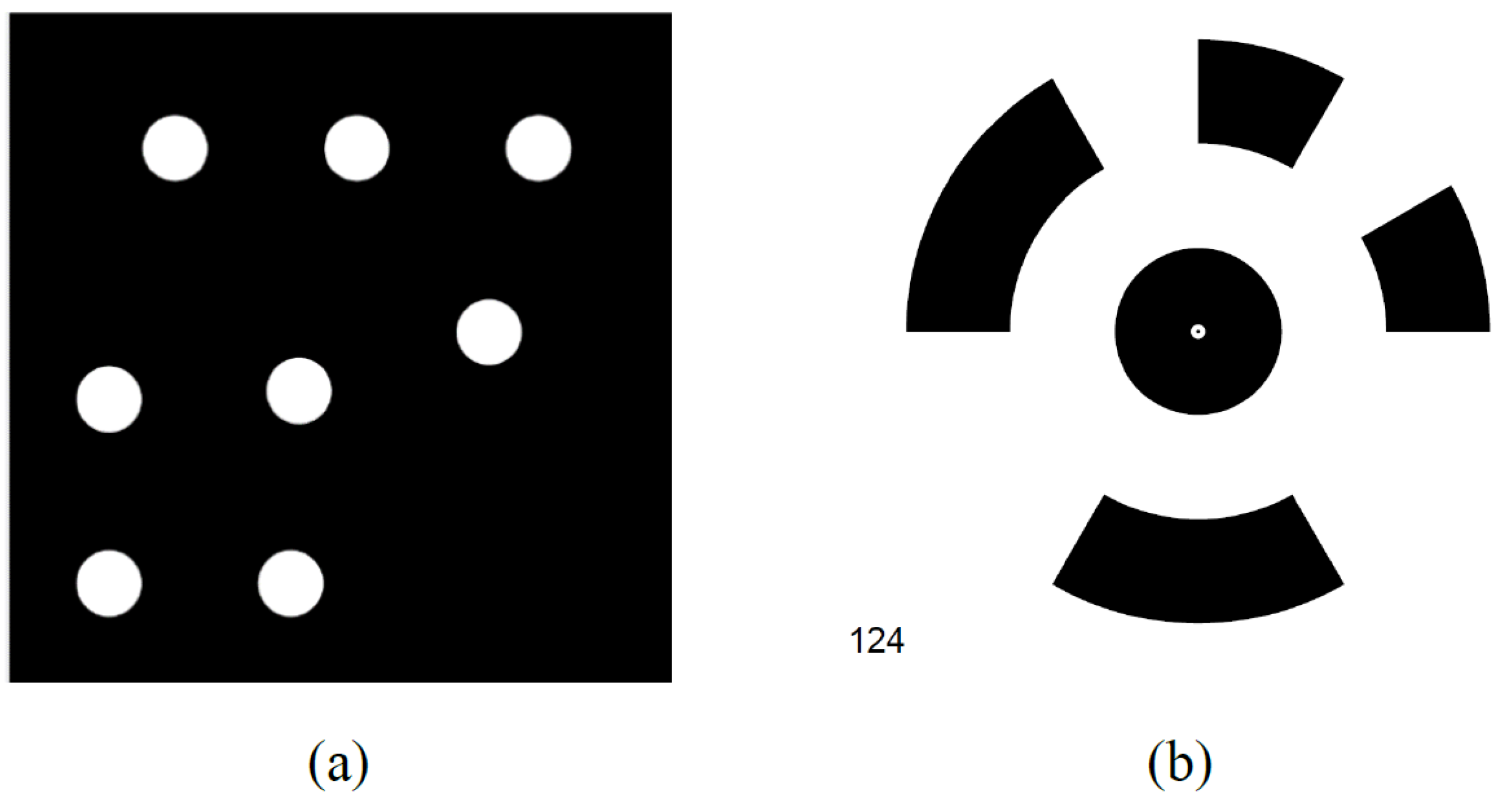

1. Introduction

2. Proposed Algorithm

- (1) We can enter the total number of pasted CCTs in advance, using prior knowledge;

- (2) If the number is unknown, a general object detection network [56] can identify the CCTs. The recognition result is treated as ground truth, i.e., the number of targets N.

2.1. Homomorphic Filtering (HF)

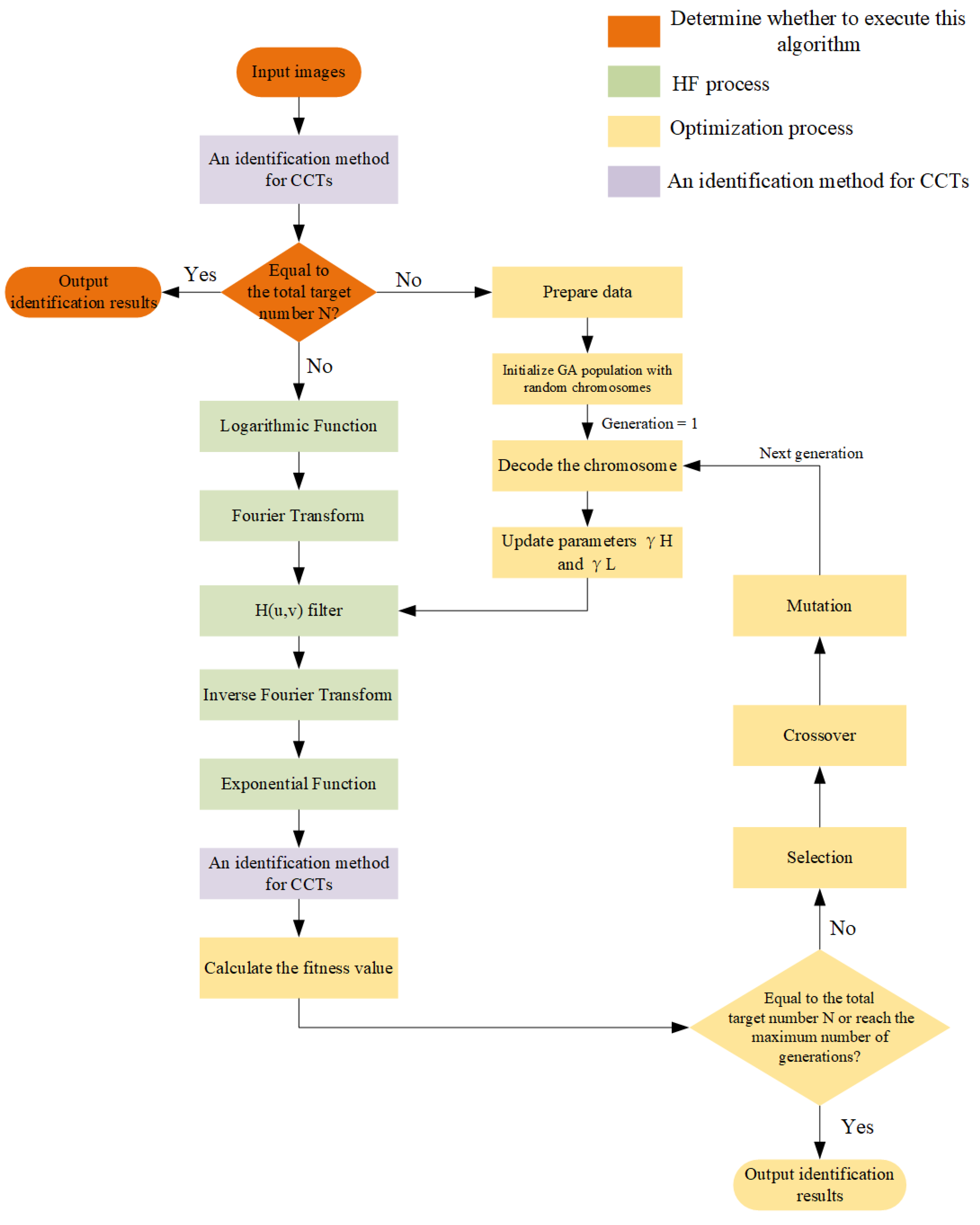

2.2. Optimization Process

2.2.1. Optimization Problem

- High-frequency gain: adjusts the high-frequency components of the filtered image; increasing it strengthens the high-frequency components;

- Low-frequency gain: adjusts the low-frequency components of the filtered image; increasing it strengthens the low-frequency components;

- Cutoff frequency: the frequency at which the filter stops attenuating the input signal. In HF, the cutoff frequency determines how much detail is retained in the image. A lower cutoff frequency preserves more low-frequency components, resulting in a smoother image; a higher cutoff frequency keeps more high-frequency components, resulting in a sharper image;

- Attenuation factor c: determines how steeply the filter response changes beyond the cutoff frequency. A higher attenuation factor gives a steeper transfer-function slope and a sharper image but may introduce more noise. A lower attenuation factor produces a smoother appearance but may lose some details, usually between and .
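To make the role of the four parameters concrete, the following is a minimal sketch of a homomorphic filter. It assumes the widely used Gaussian-type high-emphasis transfer function; the transfer function actually used in this paper is not reproduced in the text above, so the formula and the names `gamma_h`, `gamma_l`, `c`, and `d0` (high-frequency gain, low-frequency gain, attenuation factor, cutoff frequency) are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def hf_transfer(shape, gamma_h, gamma_l, c, d0):
    """Gaussian-type high-emphasis transfer function on a centred frequency grid.

    Assumed form: H = (gamma_h - gamma_l) * (1 - exp(-c * D^2 / d0^2)) + gamma_l.
    """
    rows, cols = shape
    u = np.arange(rows) - rows // 2
    v = np.arange(cols) - cols // 2
    d2 = u[:, None] ** 2 + v[None, :] ** 2  # squared distance from the DC term
    # Equals gamma_l at DC and approaches gamma_h far from the centre.
    return (gamma_h - gamma_l) * (1.0 - np.exp(-c * d2 / d0 ** 2)) + gamma_l


def homomorphic_filter(image, gamma_h, gamma_l, c, d0):
    """Filter a 2-D grayscale image: log -> FFT -> H(u, v) -> inverse FFT -> exp."""
    img = np.asarray(image, dtype=np.float64)
    log_img = np.log1p(img)  # log(1 + I) avoids log(0) on black pixels
    spectrum = np.fft.fftshift(np.fft.fft2(log_img))
    h = hf_transfer(img.shape, gamma_h, gamma_l, c, d0)
    filtered = np.fft.ifft2(np.fft.ifftshift(h * spectrum)).real
    return np.expm1(filtered)  # inverse of log1p
```

With a low-frequency gain below 1 and a high-frequency gain above 1, slowly varying illumination is compressed while edges such as CCT boundaries are emphasized, which is the behaviour the parameter descriptions above rely on.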

2.2.2. GA Modeling

- (1) Fitness Function: The only requirement for using a GA is that the model must be able to evaluate the objective function, and the objective function used to select offspring should be a non-negative, increasing function of individual quality [60]. In our optimization problem, we use Equation (9) as the objective function, and our goal is to minimize it. To adapt it to a GA, we use its reciprocal as the fitness function. The fitness value is given by Equation (10), where the constant in the denominator is an infinitesimal value that prevents an error when the denominator would otherwise be 0.

- (2) Decision Variables: The decision variables are the high-frequency gain and the low-frequency gain of HF. The value range of the high-frequency gain is [1, 4], and its quantization during optimization is 0.1. The value range of the low-frequency gain is [0, 1], and its quantization during optimization is 0.01. Because the two decision variables differ in value range and coding accuracy, the multiparameter cascade coding method is adopted. Each parameter is first binary-coded to obtain a substring; then, these substrings are concatenated in a specific order to form a chromosome (individual). Each chromosome corresponds to one set of decision-variable values.

- (3) GA Structure: As shown in Figure 2, our optimization process includes the various modules of the GA. In the following, the functions and parameter configurations of each module are described. Table 1 lists the specifications of the GA used in this algorithm.

- Preparing data: In the GA, several parameters must be determined in order to converge quickly and save computational resources. We first use the four parameters of HF, i.e., the high-frequency gain, the low-frequency gain, the attenuation factor c, and the cutoff frequency. At the same time, the population size, maximum generation, crossover probability, and mutation probability are fixed.

- Initialization: Initialize the GA population with random chromosomes. Different identification methods, illumination conditions, and HF parameters are others. The computational cost of exhaustively trying all combinations of HF parameters is very high. For example, the value range of the high-frequency gain is [1, 4] with a quantization of 0.1, and the value range of the low-frequency gain is [0, 1] with a quantization of 0.01, which gives roughly 3000 combinations in total (31 × 101 = 3131 if both endpoints of each range are counted). Because a Fourier transform is required for each combination, a simple brute-force search would waste many computational resources. We randomly select the two gains and use Equation (7) to determine the parameter c, while the cutoff frequency is set to a calculated median value.

- Fitness function calculation: We update the two gains by decoding each individual's chromosome. The image processed by HF is fed into a CCT identification method, and the fitness value is calculated with Equation (10) from the number of identified targets n.

- Optimization stop criteria: The judgment module asks, “Does the number of identified targets n equal the total target number N, or has the maximum generation been reached?” If n equals N, the identification result is output directly; if the maximum generation is reached, the individual with the highest fitness in the final generation is selected, and its identification result is output. If neither condition is met, the process continues with the next steps: “Selection”, “Crossover”, and “Mutation”.
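The multiparameter cascade coding, the reciprocal fitness, and the stop test described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: Equation (9) is not reproduced in the text, so the objective is assumed to be |N − n|; the clamping of out-of-range codes, the value of `eps`, and all function and constant names are hypothetical.

```python
import math

# (min, max, step) for each decision variable, taken from the text above.
GAIN_HIGH = (1.0, 4.0, 0.1)
GAIN_LOW = (0.0, 1.0, 0.01)


def levels(lo, hi, step):
    """Number of quantization levels, counting both endpoints."""
    return round((hi - lo) / step) + 1


def bits_needed(spec):
    """Shortest binary substring that can index every level."""
    return math.ceil(math.log2(levels(*spec)))


def encode_value(value, spec):
    lo, hi, step = spec
    index = round((value - lo) / step)
    return format(index, f"0{bits_needed(spec)}b")


def decode_value(bits, spec):
    lo, hi, step = spec
    # Assumption: codes beyond the last quantization level are clamped to it.
    index = min(int(bits, 2), levels(*spec) - 1)
    return round(lo + index * step, 2)


def encode_chromosome(gain_high, gain_low):
    """Cascade coding: concatenate the two substrings in a fixed order."""
    return encode_value(gain_high, GAIN_HIGH) + encode_value(gain_low, GAIN_LOW)


def decode_chromosome(chromosome):
    split = bits_needed(GAIN_HIGH)
    return (decode_value(chromosome[:split], GAIN_HIGH),
            decode_value(chromosome[split:], GAIN_LOW))


def fitness(n_identified, n_total, eps=1e-6):
    # Assumption: the objective of Equation (9) is |N - n|; eps avoids 1/0.
    return 1.0 / (abs(n_total - n_identified) + eps)


def should_stop(n_identified, n_total, generation, max_generation):
    """Stop when all N targets are identified or the generation budget is spent."""
    return n_identified == n_total or generation >= max_generation
```

Under these assumptions the high-frequency gain needs 5 bits (31 levels) and the low-frequency gain needs 7 bits (101 levels), so each chromosome is 12 bits long; for example, `encode_chromosome(2.8, 0.82)` yields `"100101010010"`.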

3. Experiments and Analysis

3.1. Experimental Setup

- Shadows: Casting structured light may produce shadows on the CCT surface, leaving some areas with low light intensity or completely dark. Shadows can make CCTs invisible and unrecognizable;

- Uneven illumination: Uneven lighting may change the brightness across the CCT surface, affecting the extraction and decoding of CCTs;

- Light spot: Densely projected lattice-structured light forms oval spots in the image and may damage the imaged structure of the CCT.

3.2. Evaluation Indicators

3.3. Experimental Results

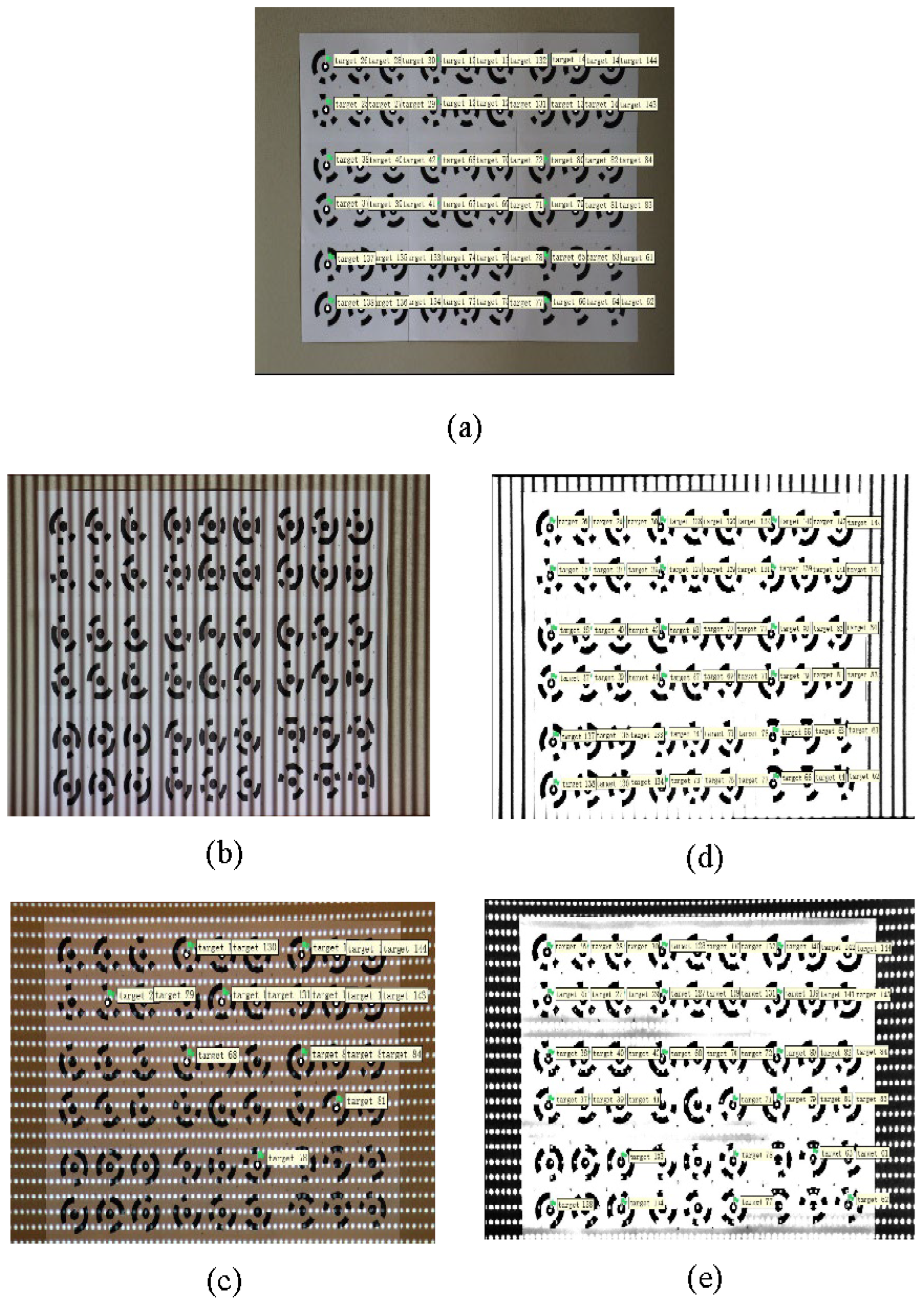

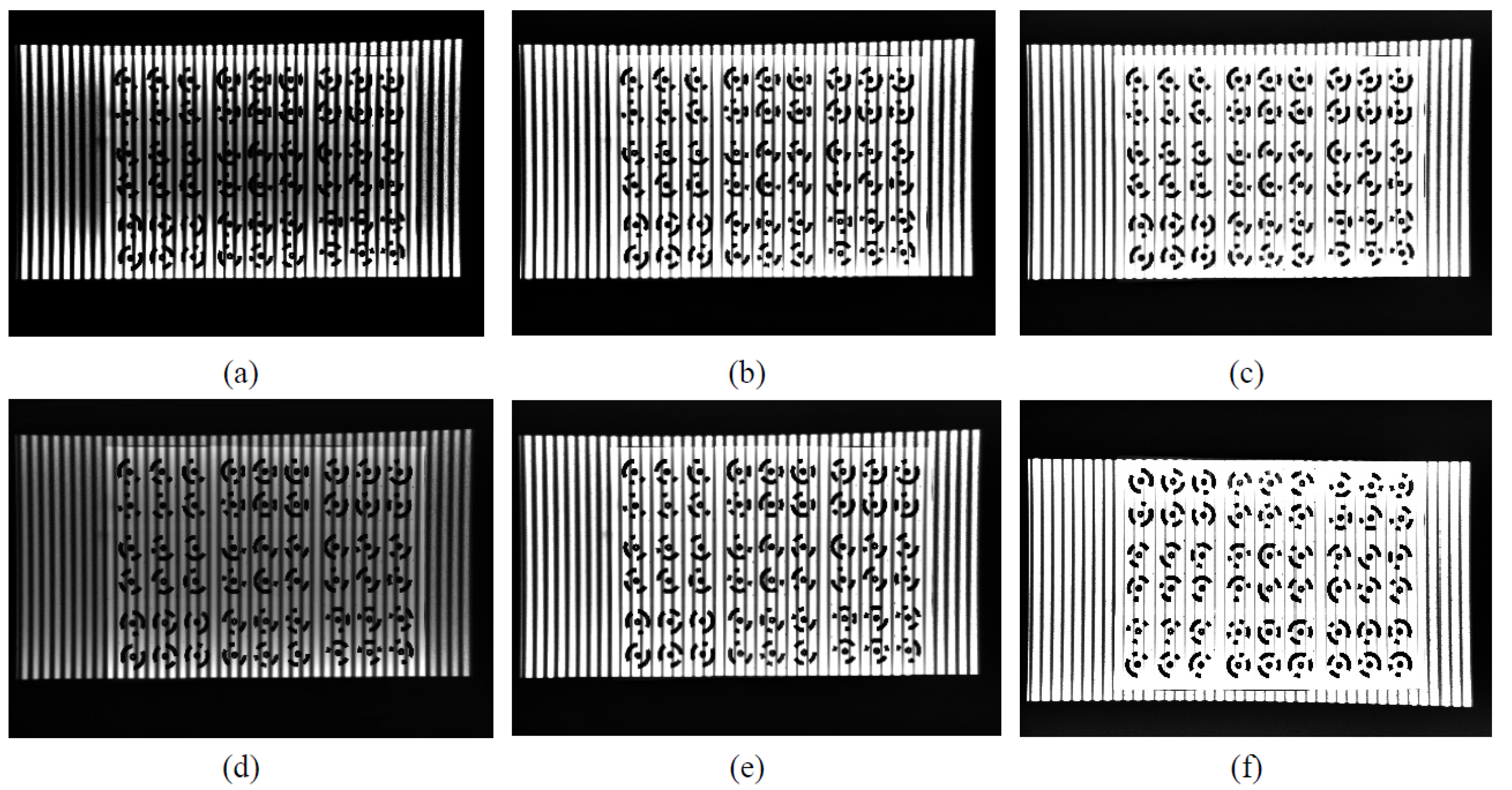

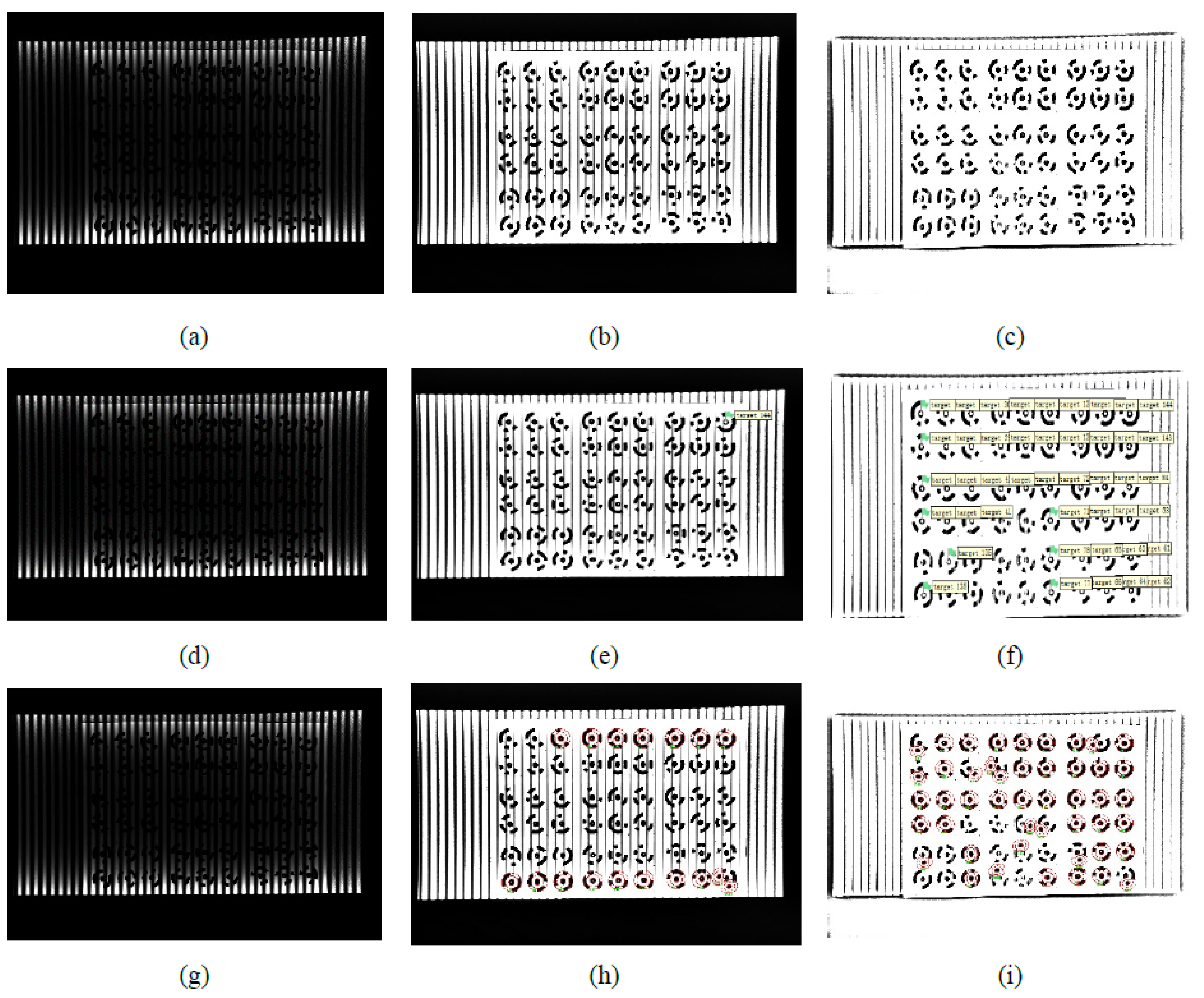

3.3.1. AM and AHF-AM Identification Results

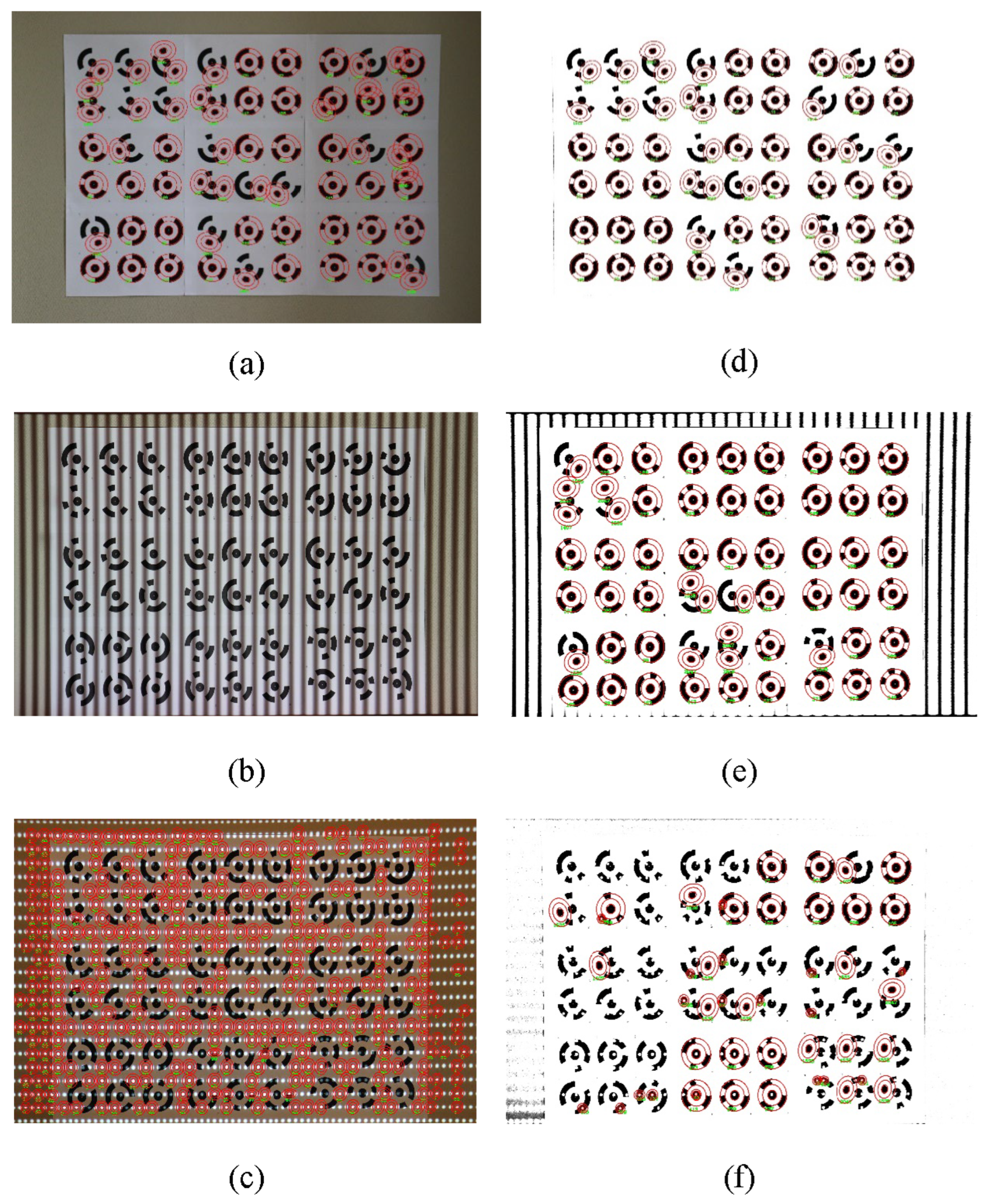

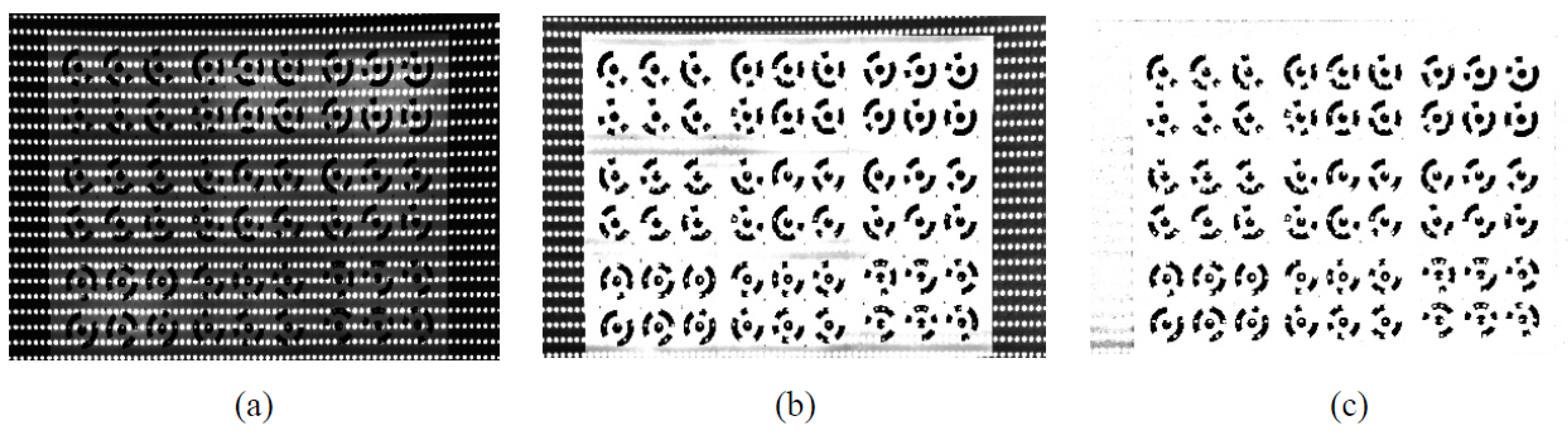

3.3.2. TI and AHF-TI Identification Results

3.3.3. The Precision of Center Positioning of CCTs

4. Discussion

4.1. Why Can Our AHF Be Combined with Any Identification Method?

4.2. Analysis of Experimental Results

4.2.1. Experimental Results under Striped Structured Light

4.2.2. Experimental Results under Lattice-Structured Light

4.3. Efficiency Issues

4.4. Application Prospects

4.4.1. The Application of AHF in Photogrammetry in the Field of Remote Sensing

- (1) Robustness to Constantly Changing Lighting Conditions: Photogrammetry typically involves capturing images in outdoor environments with significant changes in lighting conditions [65]. The ability to identify CCTs under complex lighting conditions, including shadows, highlights, and uneven lighting, ensures the consistency and robustness of the photogrammetric process. It reduces the probability of CCT identification failures or errors caused by challenging lighting conditions.

- (2) Improving Reconstruction Accuracy: Accurately reconstructing 3D models and maps is the primary goal of UAV photogrammetry. Accurate identification of CCTs under complex lighting conditions improves the accuracy of the reconstructed model. It ensures reliable correspondence between images and facilitates more accurate triangulation and point cloud generation, thereby achieving higher-quality 3D reconstruction.

- (3) Multifunctional Application: Successfully identifying CCTs under complex lighting conditions expands the applicability of UAV photogrammetry to various scenarios. High-quality data can be captured in challenging lighting environments, enhancing the effectiveness of data collection and analysis based on UAV photogrammetry.

4.4.2. AHF for Close-Range Photogrammetry and Structured Light Fusion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Moyano, J.; Nieto-Julián, J.E.; Bienvenido-Huertas, D.; Marín-García, D. Validation of Close-Range Photogrammetry for Architectural and Archaeological Heritage: Analysis of Point Density and 3D Mesh Geometry. Remote Sens. 2020, 12, 3571.
2. Lauria, G.; Sineo, L.; Ficarra, S. A Detailed Method for Creating Digital 3D Models of Human Crania: An Example of Close-Range Photogrammetry Based on the Use of Structure-from-Motion (SfM) in Virtual Anthropology. Archaeol. Anthrop. Sci. 2022, 14, 42.
3. Murtiyoso, A.; Pellis, E.; Grussenmeyer, P.; Landes, T.; Masiero, A. Towards Semantic Photogrammetry: Generating Semantically Rich Point Clouds from Architectural Close-Range Photogrammetry. Sensors 2022, 22, 966.
4. Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from Motion Photogrammetry in Forestry: A Review. Curr. For. Rep. 2019, 5, 155–168.
5. Shang, H.; Liu, C.; Wang, R. Measurement Methods of 3D Shape of Large-Scale Complex Surfaces Based on Computer Vision: A Review. Measurement 2022, 197, 111302.
6. Ahn, S.J.; Rauh, W.; Kim, S.I. Circular Coded Target for Automation of Optical 3D-Measurement and Camera Calibration. Int. J. Pattern Recogn. 2001, 15, 905–919.
7. Chen, L.; Rottensteiner, F.; Heipke, C. Feature Detection and Description for Image Matching: From Hand-Crafted Design to Deep Learning. Geo-Spat. Inf. Sci. 2021, 24, 58–74.
8. Sharma, S.K.; Jain, K.; Shukla, A.K. A Comparative Analysis of Feature Detectors and Descriptors for Image Stitching. Appl. Sci. 2023, 13, 6015.
9. Remondino, F. Detectors and Descriptors for Photogrammetric Applications. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 49–54.
10. Forero, M.G.; Mambuscay, C.L.; Monroy, M.F.; Miranda, S.L.; Méndez, D.; Valencia, M.O.; Gomez Selvaraj, M. Comparative Analysis of Detectors and Feature Descriptors for Multispectral Image Matching in Rice Crops. Plants 2021, 10, 1791.
11. Liu, W.C.; Wu, B. An Integrated Photogrammetric and Photoclinometric Approach for Illumination-Invariant Pixel-Resolution 3D Mapping of the Lunar Surface. ISPRS J. Photogramm. Remote Sens. 2020, 159, 153–168.
12. Karami, A.; Menna, F.; Remondino, F.; Varshosaz, M. Exploiting Light Directionality for Image-Based 3D Reconstruction of Non-Collaborative Surfaces. Photogramm. Rec. 2022, 37, 111–138.
13. Tang, C.H.; Tang, H.E.; Tay, P.K. Low Cost Digital Close Range Photogrammetric Measurement of an As-Built Anchor Handling Tug Hull. Ocean Eng. 2016, 119, 67–74.
14. Liu, Y.; Su, X.; Guo, X.; Suo, T.; Yu, Q. A Novel Concentric Circular Coded Target, and Its Positioning and Identifying Method for Vision Measurement under Challenging Conditions. Sensors 2021, 21, 855.
15. Fraser, C.S. Innovations in Automation for Vision Metrology Systems. Photogramm. Rec. 1997, 15, 901–911.
16. Hattori, S.; Akimoto, K.; Fraser, C.; Imoto, H. Automated Procedures with Coded Targets in Industrial Vision Metrology. Photogramm. Eng. Remote Sens. 2002, 68, 441–446.
17. Tushev, S.; Sukhovilov, B.; Sartasov, E. Robust Coded Target Recognition in Adverse Light Conditions. In Proceedings of the 2018 International Conference on Industrial Engineering, Applications and Manufacturing (ICIEAM), Moscow, Russia, 15–18 May 2018; pp. 1–6.
18. Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Marín-Jiménez, M.J. Automatic Generation and Detection of Highly Reliable Fiducial Markers under Occlusion. Pattern Recogn. 2014, 47, 2280–2292.
19. Schneider, C.-T.; Sinnreich, K. Optical 3-D Measurement Systems for Quality Control in Industry. Int. Arch. Photogramm. Remote Sens. 1993, 29, 56.
20. Knyaz, V.A. The Development of New Coded Targets for Automated Point Identification and Non-Contact 3D Surface Measurements. Int. Arch. Photogramm. Remote Sens. 1998, 5, 80–85.
21. Yang, J.; Han, J.-D.; Qin, P.-L. Correcting Error on Recognition of Coded Points for Photogrammetry. Opt. Precis. Eng. 2012, 20, 2293–2299.
22. Xuemei, H.; Xinyong, S.; Weihong, L. Recognition of Center Circles for Encoded Targets in Digital Close-Range Industrial Photogrammetry. J. Robot. Mechatron. 2015, 27, 208–214.
23. Kniaz, V.V.; Grodzitskiy, L.; Knyaz, V.A. Deep Learning for Coded Target Detection. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 44, 125–130.
24. Guo, Z.; Liu, X.; Wang, H.; Zheng, Z. An Ellipse Detection Method for 3D Head Image Fusion Based on Color-Coded Mark Points. Front. Optoelectron. 2012, 5, 395–399.
25. Yang, X.; Fang, S.; Kong, B.; Li, Y. Design of a Color Coded Target for Vision Measurements. Optik 2014, 125, 3727–3732.
26. Shortis, M.R.; Seager, J.W. A Practical Target Recognition System for Close Range Photogrammetry. Photogramm. Rec. 2014, 29, 337–355.
27. Tushev, S.; Sukhovilov, B.; Sartasov, E. Architecture of Industrial Close-Range Photogrammetric System with Multi-Functional Coded Targets. In Proceedings of the 2017 2nd International Ural Conference on Measurements (UralCon), Chelyabinsk, Russia, 16–19 October 2017; pp. 435–442.
28. Wang, Q.; Liu, Y.; Guo, Y.; Wang, S.; Zhang, Z.; Cui, X.; Zhang, H. A Robust and Effective Identification Method for Point-Distributed Coded Targets in Digital Close-Range Photogrammetry. Remote Sens. 2022, 14, 5377.
29. Tinkham, W.T.; Swayze, N.C. Influence of Agisoft Metashape Parameters on UAS Structure from Motion Individual Tree Detection from Canopy Height Models. Forests 2021, 12, 250.
30. Forbes, K.; Voigt, A.; Bodika, N. An Inexpensive, Automatic and Accurate Camera Calibration Method. In Proceedings of the Thirteenth Annual South African Workshop on Pattern Recognition, Salerno, Italy, 27 May–1 June 2002; pp. 1–6.
31. Zhou, L.; Zhang, L.Y.; Zheng, J.D.; Zhang, W.Z. Automated Reference Point Detection in Close Range Photogrammetry. J. Appl. Sci. 2007, 25, 288–294.
32. Xia, R.-B.; Zhao, J.-B.; Liu, W.-J.; Wu, J.-H.; Fu, S.-P.; Jiang, J.; Li, J.-Z. A Robust Recognition Algorithm for Encoded Targets in Close-Range Photogrammetry. J. Inf. Sci. Eng. 2012, 28, 407–418.
33. Li, W.; Liu, G.; Zhu, L.; Li, X.; Zhang, Y.; Shan, S. Efficient Detection and Recognition Algorithm of Reference Points in Photogrammetry. In Proceedings of the Optics, Photonics and Digital Technologies for Imaging Applications IV, SPIE, Brussels, Belgium, 3–7 April 2016; Volume 9896, pp. 246–255.
34. Yu, J.; Liu, Y.; Zhang, Z.; Gao, F.; Gao, N.; Meng, Z.; Jiang, X. High-Accuracy Camera Calibration Method Based on Coded Concentric Ring Center Extraction. Opt. Express 2022, 30, 42454–42469.
35. Xia, X.; Zhang, X.; Fayek, S.; Yin, Z. A Table Method for Coded Target Decoding with Application to 3-D Reconstruction of Soil Specimens during Triaxial Testing. Acta Geotech. 2021, 16, 3779–3791.
36. Vo, M.; Wang, Z.; Pan, B.; Pan, T. Hyper-Accurate Flexible Calibration Technique for Fringe-Projection-Based Three-Dimensional Imaging. Opt. Express 2012, 20, 16926–16941.
37. Liu, M.; Yang, S.; Wang, Z.; Huang, S.; Liu, Y.; Niu, Z.; Zhang, X.; Zhu, J.; Zhang, Z. Generic Precise Augmented Reality Guiding System and Its Calibration Method Based on 3D Virtual Model. Opt. Express 2016, 24, 12026–12042.
38. Shortis, M.R.; Seager, J.W.; Robson, S.; Harvey, E.S. Automatic Recognition of Coded Targets Based on a Hough Transform and Segment Matching. In Proceedings of the Videometrics VII, SPIE, Santa Clara, CA, USA, 10 January 2003; Volume 5013, pp. 202–208.
39. Li, Y.; Li, H.; Gan, X.; Qu, J.; Ma, X. Location of Circular Retro-Reflective Target Based on Micro-Vision. In Proceedings of the 2019 International Conference on Optical Instruments and Technology: Optoelectronic Measurement Technology and Systems, SPIE, San Diego, CA, USA, 26–28 October 2020; Volume 11439, pp. 226–236.
40. Kong, L.; Chen, T.; Kang, T.; Chen, Q.; Zhang, D. An Automatic and Accurate Method for Marking Ground Control Points in Unmanned Aerial Vehicle Photogrammetry. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2022, 16, 278–290.
41. Dosil, R.; Pardo, X.M.; Fdez-Vidal, X.R.; García-Díaz, A.; Leborán, V. A New Radial Symmetry Measure Applied to Photogrammetry. Pattern Anal. Appl. 2013, 16, 637–646.
42. Döring, T.; Meysel, F.; Reulke, R. Autonomous Calibration of Moving Line Scanners with Coded Photogrammetric Targets Recognition. In Proceedings of the ISPRS Commission V Symposium on Image Engineering and Vision Metrology, Dresden, Germany, 25–27 September 2006; pp. 84–89.
43. Zhang, D.; Liang, J.; Guo, C.; Liu, J.-W.; Zhang, X.-Q.; Chen, Z.-X. Exploitation of Photogrammetry Measurement System. Opt. Eng. 2010, 49, 037005.
44. Hu, H.; Liang, J.; Xiao, Z.; Tang, Z.; Asundi, A.K.; Wang, Y. A Four-Camera Videogrammetric System for 3-D Motion Measurement of Deformable Object. Opt. Laser Eng. 2012, 50, 800–811.
45. Chen, R.; Zhong, K.; Li, Z.; Liu, M.; Zhan, G. An Accurate and Reliable Circular Coded Target Detection Algorithm for Vision Measurement. In Proceedings of the Optical Metrology and Inspection for Industrial Applications IV, SPIE, Beijing, China, 12–14 October 2016; Volume 10023, pp. 236–245.
46. Zeng, Y.; Dong, X.; Zhang, F.; Zhou, D. A Stable Decoding Algorithm based on Circular Coded Target. ICIC Express Lett. 2018, 12, 221–228.
47. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Syst. Man Cybern. B 1979, 9, 62–66.
48. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. 1986, 679–698.
49. Luhmann, T. Close Range Photogrammetry for Industrial Applications. ISPRS J. Photogramm. Remote Sens. 2010, 65, 558–569.
50. Zheng, F.; Wackrow, R.; Meng, F.-R.; Lobb, D.; Li, S. Assessing the Accuracy and Feasibility of Using Close-Range Photogrammetry to Measure Channelized Erosion with a Consumer-Grade Camera. Remote Sens. 2020, 12, 1706.
51. Dey, N. Uneven Illumination Correction of Digital Images: A Survey of the State-of-the-Art. Optik 2019, 183, 483–495.
52. Dong, S.; Ma, J.; Su, Z.; Li, C. Robust Circular Marker Localization under Non-Uniform Illuminations Based on Homomorphic Filtering. Measurement 2021, 170, 108700.
53. Venkatappareddy, P.; Lall, B. A Novel Thresholding Methodology Using WSI EMD and Adaptive Homomorphic Filter. IEEE Trans. Circuits-II 2019, 67, 590–594.
54. Fan, Y.; Zhang, L.; Guo, H.; Hao, H.; Qian, K. Image Processing for Laser Imaging Using Adaptive Homomorphic Filtering and Total Variation. Photonics 2020, 7, 30.
55. Gamini, S.; Kumar, S.S. Homomorphic Filtering for the Image Enhancement Based on Fractional-Order Derivative and Genetic Algorithm. Comput. Electr. Eng. 2023, 106, 108566.
56. Kamath, V.; Renuka, A. Deep Learning Based Object Detection for Resource Constrained Devices-Systematic Review, Future Trends and Challenges Ahead. Neurocomputing 2023, 531, 34–60.
57. Król, A.; Sierpiński, G. Application of a Genetic Algorithm with a Fuzzy Objective Function for Optimized Siting of Electric Vehicle Charging Devices in Urban Road Networks. IEEE T. Intell. Transp. 2021, 23, 8680–8691.
58. Vivek, M.B.; Manju, N.; Vijay, M.B. Machine Learning Based Food Recipe Recommendation System. In Proceedings of the International Conference on Cognition and Recognition, ICCR, Karnataka, India, 30–31 December 2016; pp. 11–19.
59. Chen, Y.; Zhu, X.; Fang, K.; Wu, Y.; Deng, Y.; He, X.; Zou, Z.; Guo, T. An Optimization Model for Process Traceability in Case-Based Reasoning Based on Ontology and the Genetic Algorithm. IEEE Sens. J. 2021, 21, 25123–25132.
60. Tasan, A.S.; Gen, M. A Genetic Algorithm Based Approach to Vehicle Routing Problem with Simultaneous Pick-up and Deliveries. Comput. Ind. Eng. 2012, 62, 755–761.
61. Oliveto, P.S.; Witt, C. Improved Time Complexity Analysis of the Simple Genetic Algorithm. Theor. Comput. Sci. 2015, 605, 21–41.
62. Hurník, J.; Zatočilová, A.; Paloušek, D. Circular Coded Target System for Industrial Applications. Mach. Vis. Appl. 2021, 32, 39.
63. Hartmann, W.; Havlena, M.; Schindler, K. Recent Developments in Large-Scale Tie-Point Matching. ISPRS J. Photogramm. Remote Sens. 2016, 115, 47–62.
64. Yang, K.; Hu, Z.; Liang, Y.; Fu, Y.; Yuan, D.; Guo, J.; Li, G.; Li, Y. Automated Extraction of Ground Fissures Due to Coal Mining Subsidence Based on UAV Photogrammetry. Remote Sens. 2022, 14, 1071.
65. Tysiac, P.; Sieńska, A.; Tarnowska, M.; Kedziorski, P.; Jagoda, M. Combination of Terrestrial Laser Scanning and UAV Photogrammetry for 3D Modelling and Degradation Assessment of Heritage Building Based on a Lighting Analysis: Case Study—St. Adalbert Church in Gdansk, Poland. Herit. Sci. 2023, 11, 53.
66. Catalucci, S.; Senin, N.; Sims-Waterhouse, D.; Ziegelmeier, S.; Piano, S.; Leach, R. Measurement of Complex Freeform Additively Manufactured Parts by Structured Light and Photogrammetry. Measurement 2020, 164, 108081.
67. Bräuer-Burchardt, C.; Munkelt, C.; Bleier, M.; Heinze, M.; Gebhart, I.; Kühmstedt, P.; Notni, G. Underwater 3D Scanning System for Cultural Heritage Documentation. Remote Sens. 2023, 15, 1864.
68. Nomura, Y.; Yamamoto, S.; Hashimoto, T. Study of 3D Measurement of Ships Using Dense Stereo Vision: Towards Application in Automatic Berthing Systems. J. Mar. Sci. Technol. 2021, 26, 573–581.

| Parameter | Value or Method |
|---|---|
| Population size | 50 chromosomes |
| Selection | Tournament |
| Crossover | Single-point |
| Probability of crossover | 0.8 |
| Probability of mutation | 0.1 |
| Stopping criterion | “Lack of progress over 50 successive generations” OR “The total target number N was reached” |
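The settings in the table above can be wired into a GA loop along the following lines. This is a hedged sketch, not the authors' implementation: the tournament size of 2, the per-bit interpretation of the mutation probability, the 12-bit chromosome length, the reciprocal fitness with `eps`, and the callback name `count_targets` (standing in for "run HF with the decoded parameters, run the CCT identification method, return the number of identified targets n") are all assumptions made for illustration.

```python
import random


def run_ga(count_targets, n_total, n_bits=12, pop_size=50, p_cross=0.8,
           p_mut=0.1, stall_limit=50, eps=1e-6, seed=0):
    """Return (best_chromosome, best_fitness) for a bit-list chromosome."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best, best_fit, stall = None, -1.0, 0
    while True:
        counts = [count_targets(ind) for ind in pop]
        fits = [1.0 / (abs(n_total - n) + eps) for n in counts]
        top = max(range(pop_size), key=fits.__getitem__)
        if fits[top] > best_fit:
            best, best_fit, stall = pop[top][:], fits[top], 0
        else:
            stall += 1  # no progress in this generation
        # Stop when all N targets are identified or progress has stalled.
        if counts[top] == n_total or stall >= stall_limit:
            return best, best_fit

        def tournament():
            # Assumed tournament size of 2: the fitter of two random individuals wins.
            i, j = rng.sample(range(pop_size), 2)
            return pop[i if fits[i] >= fits[j] else j][:]

        children = []
        while len(children) < pop_size:
            a, b = tournament(), tournament()
            if rng.random() < p_cross:  # single-point crossover
                cut = rng.randint(1, n_bits - 1)
                a, b = a[:cut] + b[cut:], b[:cut] + a[cut:]
            for child in (a, b):
                for k in range(n_bits):
                    if rng.random() < p_mut:  # assumed per-bit mutation
                        child[k] ^= 1
                children.append(child)
        pop = children[:pop_size]
```

Because every fitness evaluation involves a Fourier transform and a full identification pass, a practical version would likely cache `count_targets` results for chromosomes that have already been evaluated.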

| Device | Parameter | Value |
|---|---|---|
| Canon EOS 850D SLR Camera | Resolution | 3984 × 2656 pixels |
| | Focal length | 55 mm |
| Optoma EH415e Projector | Resolution | 1920 × 1080 pixels |
| | Horizontal scanning frequency | 15.375–91.146 kHz |
| | Vertical scanning frequency | 24–85 Hz |
| | Brightness | 4200 lux |

| Type | Methods | HF high-frequency gain | HF low-frequency gain | N | n | I | d | C | R | D |
|---|---|---|---|---|---|---|---|---|---|---|
| Normal | AM | - | - | 54 | 54 | 54 | 54 | 100% | 100% | 100% |
| | AHF-AM | - | - | - | - | - | - | - | - | - |
| Stripe | AM | - | - | 54 | 0 | 0 | 0 | 0 | 0 | 0 |
| | AHF-AM | 2.8 | 0.82 | 54 | 54 | 54 | 54 | 100% | 100% | 100% |
| Lattice | AM | - | - | 54 | 18 | 18 | 18 | 100% | 33% | 33% |
| | AHF-AM | 2.6 | 0.44 | 54 | 42 | 42 | 42 | 100% | 78% | 78% |

| Types | Methods | HF high-frequency gain | HF low-frequency gain | N | n | I | d | C | R | D |
|---|---|---|---|---|---|---|---|---|---|---|
| Normal | TI | - | - | 54 | 65 | 35 | 35 | 54% | 65% | 65% |
| | AHF-TI | 3.9 | 0.93 | 54 | 58 | 36 | 36 | 62% | 67% | 67% |
| Stripe | TI | - | - | 54 | 0 | 0 | 0 | 0 | 0 | 0 |
| | AHF-TI | 3.8 | 0.70 | 54 | 58 | 45 | 45 | 78% | 83% | 83% |
| Lattice | TI | - | - | 54 | 342 | 0 | 0 | 0 | 0 | 0 |
| | AHF-TI | 3.7 | 0.66 | 54 | 47 | 15 | 15 | 32% | 28% | 28% |

| Methods | Normal | | | Stripe | | | Lattice | | |
|---|---|---|---|---|---|---|---|---|---|
| AM | 100% | 100% | 100% | 0 | 0 | 0 | 100% | 22% | 22% |
| AHF-AM | - | - | - | 100% | 100% | 100% | 100% | 78% | 78% |
| TI | 53% | 62% | 62% | 0 | 0 | 0 | 0 | 0 | 0 |
| AHF-TI | 63% | 68% | 68% | 80% | 83% | 83% | 35% | 26% | 26% |

| Types | Methods | RMSE X (px) | RMSE Y (px) | RMSE XY (px) |
|---|---|---|---|---|
| Normal | TI | 0.312 | 0.214 | 0.378 |
| | AHF-TI | 0.201 | 0.170 | 0.263 |
| Stripe | AM | - | - | - |
| | AHF-AM | 0.070 | 0.093 | 0.116 |
| | TI | - | - | - |
| | AHF-TI | 0.189 | 0.159 | 0.247 |
| Lattice | AM | 0.125 | 0.203 | 0.238 |
| | AHF-AM | 0.091 | 0.103 | 0.137 |
| | TI | - | - | - |
| | AHF-TI | 0.256 | 0.219 | 0.337 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shang, H.; Liu, C. AHF: An Automatic and Universal Image Preprocessing Algorithm for Circular-Coded Targets Identification in Close-Range Photogrammetry under Complex Illumination Conditions. Remote Sens. 2023, 15, 3151. https://doi.org/10.3390/rs15123151
