Abstract

Coastal ship tracking is used in many applications, such as autonomous navigation, maritime rescue, and environmental monitoring. Many general object-tracking methods based on deep learning have been explored for ship tracking, but they often fail to track ships accurately in challenging scenarios such as occlusion, scale variation, and motion blur. We propose a memory-guided perception network (MGPN) to address these issues. MGPN has two main innovations. The dynamic memory mechanism (DMM) stores past features of the tracked target to enhance the model’s feature fusion in the temporal dimension. Meanwhile, the hierarchical context-aware module (HCAM) enables interaction among information at different scales and between global and local information, which addresses the scale discrepancy of targets and improves feature fusion in the spatial dimension. These innovations enhance tracking robustness and reduce bounding-box inaccuracies. We conducted an in-depth ablation study to demonstrate the effectiveness of DMM and HCAM. Benefiting from these two components, MGPN achieves state-of-the-art performance on a large offshore ship tracking dataset that contains challenging scenarios such as complex backgrounds, ship occlusion, and varying scales.

1. Introduction

Object tracking is a long-standing research problem with applications ranging from robot navigation [1,2] to monitoring biological phenomena [3] and machinery fault diagnosis [4,5]. Recent developments in autonomous and intelligent systems [6,7,8] have drawn increased attention to the potential of intelligent navigation in the maritime industry. Intelligent navigation refers to the use of advanced technologies to optimize navigation processes and improve safety at sea. In port cities, ship tracking is a valuable tool for traffic counting, which facilitates the analysis of port handling capacity and helps optimize the flow of goods and resources.

Ship tracking is important for monitoring harbors, detecting stowaways and illegal fishing vessels, and supporting maritime transport monitoring and management. In defense applications, tracking and detecting specific ships contributes to tactical deployment and maritime vigilance. In the past, most ship-tracking tasks relied on traditional methods such as data association and hand-crafted features. For example, Maddalena et al. [6] proposed a self-organizing method based on artificial neural networks that segments moving objects from the background, focusing on the problem of background modeling. Similarly, Chen et al. [7] presented a multi-view learning algorithm and a sparse representation method to improve ship tracking, exploiting different ship-relevant features and using wavelet filters to correct the ship’s position [8]. However, these methods rely on complex feature extraction and leave room for improvement in tracking accuracy and speed.

In recent years, many trackers have been proposed to improve tracking performance. The discriminative correlation filter (DCF) approach was first introduced in the MOSSE tracker [9]. The CSK tracker [10] later extended it with the kernel trick and circulant matrices, improving tracking accuracy by enriching the filter’s training samples through cyclic shifts. To overcome the limited expressiveness of raw sample features, some works introduced high-dimensional features such as the histogram of oriented gradients (HOG) [11]. In addition, some works extracted the instantaneous characteristic frequency [12] from marginal spectrum features, using time–frequency information from Hilbert spectra to detect targets. To accelerate convergence and enhance robustness, Song et al. [13] proposed adding an adaptive gain. With the development of deep networks [14,15], deep features and networks have been widely used in DCF-based tracking methods [9,16,17,18,19,20].
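To make the Fourier-domain idea behind MOSSE-style DCF tracking concrete, here is a minimal 1-D sketch. It assumes a single training patch, a hand-made Gaussian desired response, and a regularizer `lam` chosen for illustration; all of these are hypothetical, and real DCF trackers operate on windowed 2-D patches and update the filter online. Because every cyclic shift of the patch acts as an implicit training sample, the ridge-regression solution reduces to an element-wise division of spectra, so a circularly shifted target yields a correspondingly shifted response peak.

```python
import cmath
import math


def dft(signal):
    """Naive O(N^2) discrete Fourier transform (dependency-free)."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft(); keeps the real part because the signals here are real."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def train_filter(patch, desired, lam=1e-3):
    """Closed-form MOSSE-style filter in the Fourier domain: H* = G.conj(F) / (F.conj(F) + lam)."""
    f_hat, g_hat = dft(patch), dft(desired)
    return [g * f.conjugate() / (f * f.conjugate() + lam) for f, g in zip(f_hat, g_hat)]


def respond(filter_hat, patch):
    """Correlation response of a new patch: real(IDFT(Z . H*))."""
    return idft([z * h for z, h in zip(dft(patch), filter_hat)])


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


N = 16
# Hypothetical 1-D "appearance": an impulse followed by a weaker echo.
patch = [1.0, 0.5] + [0.0] * (N - 2)
# Desired output: a circular Gaussian peak at index 0 (sigma = 1).
desired = [math.exp(-0.5 * min(t, N - t) ** 2) for t in range(N)]

h_star = train_filter(patch, desired)
shift = 5
moved = patch[-shift:] + patch[:-shift]  # target circularly shifted right by 5 samples
print(argmax(respond(h_star, patch)), argmax(respond(h_star, moved)))  # 0 5
```

The peak of the response tracks the shift exactly because a circular shift in the signal domain is only a phase change in the Fourier domain, which the element-wise filter passes through unchanged.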

Some works have explored online-trained deep networks to improve tracking accuracy and robustness. Siamese networks have made significant contributions to visual object tracking. SiamFC [21] was the first to formulate visual tracking as a similarity comparison problem. SiamRPN [22] introduced region proposal networks (RPNs), which consist of a classification head for foreground–background discrimination and a regression head for anchor refinement. These anchor-based approaches brought tracking accuracy to a high level. SiamRPN++ [23] uses deeper backbone networks, such as ResNet [14] and GoogLeNet [24], to enhance feature representation. Inspired by anchor-free object detectors such as FCOS [29] and CornerNet [30], many anchor-free trackers [25,26,27,28] adopt pixel-wise prediction for target localization.

Ship-tracking tasks play a critical role in a variety of applications, from monitoring harbors to detecting illegal fishing vessels and supporting military operations. From a computer vision perspective, ship tracking is considered a branch of single-object tracking, where the goal is to track an object (in this case, a ship) across multiple frames of a video sequence. Siamese trackers have become increasingly popular for these tasks due to their ability to transform complex tracking problems into similarity matching problems. Specifically, the Siamese network has two branches: the template branch, which models the object of interest in the first frame, and the search branch, which scans the subsequent frames to locate the object. During training, the network learns to compare the features from the two branches and predict the similarity score, which can be used to generate a response map that highlights the object’s location in each frame. By leveraging this response map, the network can accurately track the ship throughout the video sequence.

Although many tracking models [31,32] based on Siamese networks achieve high accuracy, two limitations lead to inaccurate and erratic results in coastal ship tracking. Firstly, as shown in the first row of Figure 1, the camera’s perspective causes large scale differences in the target when the ship and camera move relative to each other. However, the template and search branches of the general tracking architecture cannot adapt to the same target at different sizes. It is therefore necessary to enhance the network’s adaptability to different scales to improve tracking accuracy.

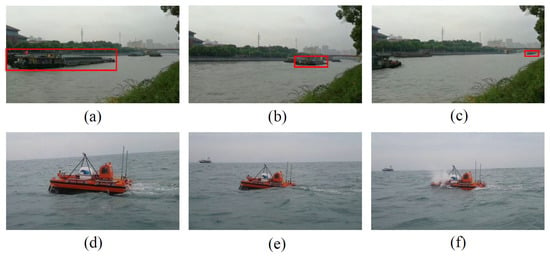

Figure 1.

(a–c) When the ship moves relative to the camera, its scale changes considerably. (The red box marks the target to be tracked.) (d–f) Parts of the ship are occluded by the sea due to weather and waves.

Secondly, as shown in the second row of Figure 1, coastal ship targets face a unique challenge. Compared with target tracking in terrestrial scenes, ship targets are often affected by the surrounding sea waves during navigation. Since a ship’s motion is usually monotonic and linear, and there is little background interference, more attributes of adjacent frames can be used to model temporal characteristics in ship-tracking tasks. However, current Siamese networks use only static feature information from the search branch for the similarity calculation, ignoring the frame-to-frame relationships among features. Therefore, a dynamic inter-frame fusion model is needed to mine more information in the search branch and address wave occlusion.

Motivated by these analyses, we present a Memory-Guided Perceptual Network (MGPN) for coastal ship tracking to overcome the above difficulties. Specifically, in the search branch, MGPN dynamically stores past frame features and fuses them with the current frame features to enhance them. In the template branch, MGPN adds multi-scale information about the target through a hierarchical convolution structure. Our contributions are threefold:

- A dynamic memory mechanism (DMM) is introduced in the search branch to dynamically store the features of past frames and merge them with the current frame features as prior information. By incorporating more contextual and historical information, DMM improves both the robustness and the accuracy of tracking. It also helps mitigate the effects of complex background occlusion and provides a more comprehensive, contextualized representation of objects.

- We introduce a Hierarchical Context-Aware Module (HCAM) in the template branch. It extracts contextual information of ship features at multiple scales and enlarges the receptive field through hierarchical global-and-local dilated convolutions, which improves tracking accuracy and robustness.

- Without bells and whistles, our method outperforms state-of-the-art methods on the large maritime dataset LMD-TShip [33], achieving an EAO of 0.665 and a robustness (failure rate) of 0.067.

2. Related Work

2.1. Visual Object Tracking

In recent years, Siamese methods [21,22,23] have become increasingly popular in the tracking field. Current visual object trackers can be divided into two categories: anchor-based frameworks and anchor-free frameworks.

Anchor-based Framework. SiamFC [21] is the first to use a Siamese network for object tracking, converting the tracking task into a similarity learning problem. In this way, the target can be located from the response map computed between the features of the two branches. SiamFC learns a function f(z, x) that compares a template image z with a search image x and returns a score, defined as:

$$f(z, x) = g\big(\varphi(z), \varphi(x)\big),$$

where $\varphi$ is a deep neural network and $g$ is a simple distance or similarity metric.
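The similarity function can be illustrated with a toy sketch, assuming φ is the identity and g is an inner product evaluated at every valid offset of the template inside the search region (both are hypothetical stand-ins for the learned network and metric). Sliding the template over the search region produces the response map, and its maximum gives the predicted target location.

```python
def cross_correlate(search, template):
    """Slide `template` over `search` (valid positions only) and return the score map.

    Each entry is an inner product, i.e. g(phi(z), phi(x)) at one candidate
    location, with phi taken as the identity for illustration.
    """
    sh, sw = len(search), len(search[0])
    th, tw = len(template), len(template[0])
    return [[sum(search[i + a][j + b] * template[a][b]
                 for a in range(th) for b in range(tw))
             for j in range(sw - tw + 1)]
            for i in range(sh - th + 1)]


def locate(score_map):
    """Return (row, col) of the highest response, i.e. the predicted target offset."""
    cells = ((i, j) for i in range(len(score_map)) for j in range(len(score_map[0])))
    return max(cells, key=lambda ij: score_map[ij[0]][ij[1]])


template = [[1, 2],
            [3, 4]]
search = [[0, 0, 0, 0, 0],
          [0, 0, 0, 0, 0],
          [0, 0, 0, 1, 2],
          [0, 0, 0, 3, 4]]
scores = cross_correlate(search, template)
print(len(scores), len(scores[0]), locate(scores))  # 3 4 (2, 3)
```

A raw inner product favors bright regions regardless of shape; learned embeddings and score normalization in practical trackers are what make the response discriminative.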

After that, SiamRPN [22] integrates classification and regression into the Siamese network to further improve accuracy. The classification task separates the target from the background, while the regression task refines the box coordinates relative to the anchors. To address data imbalance and perform incremental learning, DaSiamRPN [34] presents an effective sampling strategy and a distractor-aware module. In terms of network structure, SiamRPN++ [23] successfully trains a deep CNN within a Siamese framework and introduces depth-wise cross-correlation and layer-wise feature aggregation, showing its effectiveness on several large datasets. Furthermore, SiamAPN [35] proposes an anchor proposal network for lightweight anchor generation, which increases robustness to different objects. SiamAtt [36] introduces an attention module to exploit target location information. In multi-task learning, SiamMask [37] narrows the gap between video object segmentation and visual object tracking, showing better accuracy than other trackers.

Anchor-free Framework. Compared with anchor-based approaches, anchor-free methods require no prior anchor settings and are more flexible. In object detection, [29] proposes an anchor-free design that significantly reduces the number of design parameters and the memory footprint. In tracking, SiamFC++ [28] and SiamCAR [27] use classification and target state estimation branches in a fully convolutional Siamese network. Ocean [38] designs feature alignment modules to learn object-aware features. For the head, SiamBAN [25] designs an adaptive box module and a multi-level prediction network to optimize tracking performance. Free of anchors and their preset parameters, these anchor-free methods are more flexible and versatile, and they surpass anchor-based frameworks in precision and speed.

2.2. Ship Tracking

In the past few years, ship tracking has attracted considerable research interest. Most of these works perform tracking with the Kernelized Correlation Filter (KCF) [9,16], which inspired many representative ship-tracking efforts. Teng et al. [39] present a hybrid feature model and a Bayes classifier for feature fusion and foreground–background classification. For feature extraction, Chen et al. [8] combine Laplacian of Gaussian (LoG), Gabor, Local Binary Pattern (LBP), and other features to enhance the feature representation, and apply wavelet filters and post-processing to refine the tracking results under ship oscillation. For remote sensing applications, some works have proposed adaptive low-rank approximation (LRA) strategies [40] to reduce the computational complexity of image super-resolution. Zhou et al. [41] model a Gaussian distribution in the Fourier domain to better identify targets and suppress sea clutter. The recent rapid development of deep neural networks has dramatically improved ship-tracking performance. Shan et al. [31] use several region proposal networks within a Siamese network to build a ship-tracking pipeline. Liu et al. [42] propose a deep residual network and a penalty mechanism for ship recognition and tracking. For multiple-ship tracking, Wu et al. [43] extract features with YOLOv3 [44] and present a multiple granularity network that captures complete target appearance information for feature matching between frames.

3. Methodology

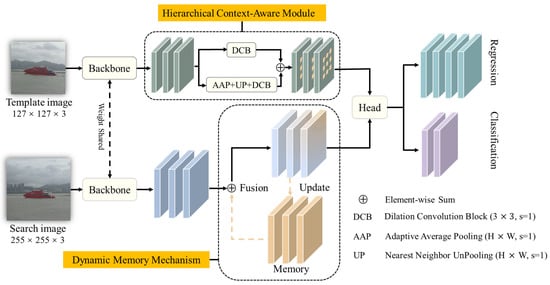

In this section, we describe our MGPN method for coastal ship tracking in detail, as illustrated in Figure 2. Our approach relies on two key components: the Dynamic Memory Mechanism (DMM) in the search branch and the Hierarchical Context-Aware Module (HCAM) in the template branch. DMM dynamically updates the features of past frames and uses them as prior information to enhance the current frame features. This allows us to exploit inter-frame correlation and incorporate more context and history into the tracking model, leading to improved accuracy and robustness. HCAM, on the other hand, enlarges the receptive field of the template branch through hierarchical global-and-local dilated convolutions. The local convolution module preserves detailed information through small 3 × 3 convolution kernels, while the global branch aggregates information through global pooling, implemented with adaptive average pooling. This enables our tracker to capture more detailed information about the ships, yielding stronger and more discriminative features that are robust across different ships. The hierarchical structure of HCAM also improves the integrity and continuity of features across different channels.

Figure 2.

The pipeline of the proposed method. MGPN mainly consists of four parts: a weight-shared backbone for feature extraction, the Dynamic Memory Mechanism (DMM) in the search branch, the Hierarchical Context-Aware Module (HCAM) in the template branch, and an anchor-free tracking head.

3.1. Dynamic Memory Mechanism

The proposed DMM is a dynamic storage mechanism that takes up little space and always keeps the features of the current and previous frames in memory. The implementation of DMM is described as follows.

During training, n consecutive images from the search branch are passed through the backbone to extract feature maps:

$$F_i = \varphi(x_i), \quad i = 1, 2, \dots, n,$$

where $x_i$ denotes the $i$-th video frame in the search branch and $\varphi$ denotes the backbone.

Then, we create a memory $\mathcal{M}$ and initialize its size to q, where q also serves as a threshold selected from a predefined range. As the network performs feature extraction, $\mathcal{M}$ gradually stores features until it is full:

$$\mathcal{M} = \{F_1, F_2, \dots, F_t\}, \quad t \le q.$$

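After that, once the feature map of the (q+1)-th frame (and of each later frame t) is obtained, the feature map of the current frame is merged with the features of the previous q frames stored in memory:

$$\hat{F}_t = \mathrm{Fuse}\big(F_t, \mathcal{M}\big), \quad \mathcal{M} = \{F_{t-q}, \dots, F_{t-1}\},$$

where $\mathrm{Fuse}(\cdot)$ denotes the feature fusion operation.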

To implement $\mathcal{M}$ dynamically, we update it each time a new feature map is obtained. This keeps the size of $\mathcal{M}$ constant, which is the key to its small memory footprint.

The memory bank is designed to continually update and improve the feature representation of the data: each new feature is added to the memory bank, while the oldest feature is removed:

$$\mathcal{M} \leftarrow \big(\mathcal{M} \setminus \{F_{t-q}\}\big) \cup \{F_t\}.$$

The DMM procedure is summarized in Algorithm 1. It continuously merges features from different stages of the tracking process, which allows temporal relationships between features to be captured. In addition, the memory is updated at every iteration: the oldest frame in the current memory is discarded and the latest frame is kept. This dynamic approach facilitates feature enhancement and aggregation.

Algorithm 1: Dynamic Memory Mechanism.
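A minimal sketch of the DMM update rule described above, assuming features are flat Python lists standing in for feature tensors and assuming an element-wise mean as the fusion operator (the text does not specify the fusion operator, so this choice and the class name `DynamicMemory` are hypothetical). A `collections.deque` with `maxlen=q` reproduces the constant-size, first-in-first-out behavior: appending to a full deque silently discards the oldest entry.

```python
from collections import deque


class DynamicMemory:
    """Fixed-size FIFO memory of the q most recent search-branch features (sketch)."""

    def __init__(self, q):
        if q < 1:
            raise ValueError("q must be a positive integer")
        # Once q features are stored, each append drops the oldest one,
        # so the memory footprint never grows.
        self.bank = deque(maxlen=q)

    def fuse(self, current):
        """Fuse the current feature with the stored ones, then update the memory.

        Fusion is an element-wise mean over the stored and current features,
        an assumed stand-in for the unspecified merge operation.
        """
        if self.bank:
            stacked = list(self.bank) + [current]
            fused = [sum(vals) / len(stacked) for vals in zip(*stacked)]
        else:
            fused = list(current)  # empty memory: pass the feature through
        self.bank.append(current)  # newest in, oldest out when full
        return fused


memory = DynamicMemory(q=2)
outputs = [memory.fuse([float(t), 10.0 * t]) for t in range(4)]
print(outputs[-1], len(memory.bank))  # [2.0, 20.0] 2
```

At the last step the memory holds frames 1 and 2, so the fused output averages frames 1, 2, and 3; frame 0 has already been evicted.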

3.2. Hierarchical Context-Aware Module

The template branch extracts the features of the target template, which are correlated with the features obtained from the search branch. Most trackers [23,27,28,37] employ deep convolution to merge spatial information with channel information. However, because of the large number of channels, the final output features tend to lose information such as ship contours, locations, and textures. Thus, the template branch needs richer contextual information and a deeper network to make its features more representative.

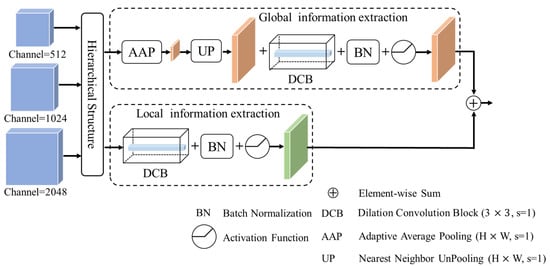

Figure 3 illustrates the detailed architecture of HCAM, which consists of three main parts: local information extraction, global information extraction, and a hierarchical structure. The global and local parts acquire global and local features by enlarging the receptive field on the ship target, and the hierarchical structure maintains the integrity and continuity of the module. We place a batch normalization layer before each activation function; it normalizes the data so that the activation function receives inputs with a stable distribution.

Figure 3.

The detailed structure of the Hierarchical Context-Aware Module (HCAM). AAP denotes adaptive average pooling, and BN denotes batch normalization.

3.2.1. Local Information Extraction

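The local module DCB consists of a dilated convolution, batch normalization, and ReLU, and is defined as:

$$L_i^l = \mathrm{DCB}_i(F^l) = \mathrm{ReLU}\Big(\mathrm{BN}\big(\mathrm{DConv}_i(F^l)\big)\Big), \quad i = 1, \dots, n,$$

where $\mathrm{DCB}_i$ denotes the $i$-th dilated convolution block, $\mathrm{DConv}_i$ is its 3 × 3 dilated convolution, and $F^l$ is the feature map of the $l$-th backbone layer; here we consider the last three layers of the backbone.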
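To illustrate a DCB and why dilation enlarges the receptive field, the following single-channel sketch applies a 3 × 3 dilated convolution with zero padding equal to the dilation rate (so the spatial size is preserved), followed by batch normalization and ReLU. The input, kernel values, and dilation rates are hypothetical, and the BN statistics are computed over one map rather than a batch. For stacked stride-1 convolutions, the receptive field grows as 1 + Σ(k − 1)·d.

```python
import math


def dilated_conv2d(x, kernel, dilation):
    """3x3 dilated cross-correlation on one channel; zero padding keeps the input size."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(3):
                for b in range(3):
                    # Kernel taps are `dilation` pixels apart; out-of-range taps read zeros.
                    r, c = i + (a - 1) * dilation, j + (b - 1) * dilation
                    if 0 <= r < h and 0 <= c < w:
                        acc += x[r][c] * kernel[a][b]
            out[i][j] = acc
    return out


def dcb(x, kernel, dilation, eps=1e-5):
    """Dilated conv -> BN -> ReLU on a single map (learnable BN scale/shift omitted).

    BN statistics are taken over this map's positions, a simplification of
    per-batch statistics for a one-sample example.
    """
    y = dilated_conv2d(x, kernel, dilation)
    vals = [v for row in y for v in row]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return [[max(0.0, (v - mean) / math.sqrt(var + eps)) for v in row] for row in y]


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 dilated convolutions: 1 + sum((k - 1) * d)."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


x = [[float(i * 7 + j) for j in range(7)] for i in range(7)]
k = [[0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
out = dcb(x, k, dilation=2)
print(len(out), len(out[0]), receptive_field(3, [1, 2, 3]))  # 7 7 13
```

Three stacked 3 × 3 blocks with hypothetical dilation rates 1, 2, and 3 see a 13 × 13 window, versus 7 × 7 without dilation, at the same parameter count.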

3.2.2. Global Information Extraction

In contrast to the local module, we design the global module following [45,46]; it combines adaptive average pooling (AAP) with a dilated convolution block to acquire global information. Pooling aggregates the elements within a region, and the adaptive operation automatically determines the kernel size and stride from the target output size. To balance the local information, we also set the number of global modules to n. The global module is defined as:

$$G_i^l = \mathrm{DCB}_i\big(\mathrm{AAP}(F^l)\big), \quad i = 1, \dots, n,$$

where the composition AAP+DCB uses the same parameters as the local module and $F^l$ is the feature map of the $l$-th backbone layer (again, the last three layers are considered). The resulting global feature is then mapped back to the size of the local feature through an unpooling (UP) operation.
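The AAP and UP steps can be sketched on a single channel as follows. Bin boundaries follow the rule used by PyTorch’s `nn.AdaptiveAvgPool2d` (start = ⌊iH/out⌋, end = ⌈(i+1)H/out⌉), which is why no kernel size or stride has to be set by hand. The text does not say how UP is implemented, so nearest-neighbor upsampling here is an assumption, and the DCB applied after pooling is omitted for brevity.

```python
import math


def adaptive_avg_pool2d(x, out_h, out_w):
    """Adaptive average pooling: bin edges are derived from the requested output size."""
    h, w = len(x), len(x[0])
    out = []
    for i in range(out_h):
        r0, r1 = (i * h) // out_h, math.ceil((i + 1) * h / out_h)
        row = []
        for j in range(out_w):
            c0, c1 = (j * w) // out_w, math.ceil((j + 1) * w / out_w)
            cells = [x[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        out.append(row)
    return out


def upsample_nearest(x, out_h, out_w):
    """Map a pooled map back to (out_h, out_w); nearest-neighbor is an assumed UP."""
    h, w = len(x), len(x[0])
    return [[x[i * h // out_h][j * w // out_w] for j in range(out_w)] for i in range(out_h)]


x = [[1.0, 1.0, 2.0, 2.0],
     [1.0, 1.0, 2.0, 2.0],
     [3.0, 3.0, 4.0, 4.0],
     [3.0, 3.0, 4.0, 4.0]]
pooled = adaptive_avg_pool2d(x, 2, 2)
restored = upsample_nearest(pooled, 4, 4)  # same size as the local-branch output
print(pooled, restored == x)  # [[1.0, 2.0], [3.0, 4.0]] True
```

With an output size of 1 × 1, the same function reduces to global average pooling, and when the input size is not divisible by the output size, neighboring bins overlap rather than dropping pixels.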

3.2.3. Hierarchical Structure

To ensure the integrity and continuity of features, we adopt a hierarchical approach that extracts three features with different numbers of channels. The global and local operations are then applied to each of these three layers.

3.3. Head and Loss

3.3.1. Head

For the head of MGPN, we adopt the depth-wise cross-correlation [23] between the search branch and the template branch, defined as:

$$P^{cls} = [\varphi(x)]_{cls} \star [\varphi(z)]_{cls}, \qquad P^{reg} = [\varphi(x)]_{reg} \star [\varphi(z)]_{reg},$$

where $P^{cls}$ denotes the classification map, $P^{reg}$ the regression map, $\star$ the depth-wise cross-correlation, $\varphi(x)$ the feature of the search branch, and $\varphi(z)$ the feature of the template branch, which serves as the convolution kernel in the cross-correlation.
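A minimal sketch of depth-wise cross-correlation on C × H × W nested lists (the features below are hypothetical toy values): each search channel is correlated only with the matching template channel, so the output keeps C channels instead of being summed into a single response map. In SiamRPN++-style heads, separate features feed the classification and regression branches and further convolutions follow the correlation; those layers are omitted here.

```python
def xcorr2d(x, z):
    """Valid 2-D cross-correlation of one search channel x with one template channel z."""
    th, tw = len(z), len(z[0])
    return [[sum(x[i + a][j + b] * z[a][b] for a in range(th) for b in range(tw))
             for j in range(len(x[0]) - tw + 1)]
            for i in range(len(x) - th + 1)]


def depthwise_xcorr(search_feat, template_feat):
    """Correlate channel c of the search feature only with channel c of the template.

    Inputs are C x H x W nested lists; the output has C channels.
    """
    if len(search_feat) != len(template_feat):
        raise ValueError("search and template features need the same channel count")
    return [xcorr2d(x, z) for x, z in zip(search_feat, template_feat)]


search = [[[1.0] * 5 for _ in range(5)],                     # channel 0: all ones
          [[float(j) for j in range(5)] for _ in range(5)]]  # channel 1: column index
template = [[[1.0] * 3 for _ in range(3)],                   # channel 0: box filter
            [[0.0, 0.0, 1.0] for _ in range(3)]]             # channel 1: picks right column
resp = depthwise_xcorr(search, template)
print(len(resp), len(resp[0]), len(resp[0][0]))  # 2 3 3
print(resp[0][0][0], resp[1][0][2])  # 9.0 12.0
```

Keeping channels separate is cheaper than a full up-channel correlation and leaves the subsequent layers free to learn how to combine channels.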

3.3.2. Loss

Our loss function consists of two parts: a classification loss and a regression loss. We use the cross-entropy loss for classification to distinguish ships from the background and the IoU loss for regression. Similar to FCOS [29], our regression targets are $(d_l, d_t, d_r, d_b)$, the distances from a location to the left, top, right, and bottom borders of the ground-truth box. The overall loss function is:

$$\mathcal{L} = \mathcal{L}_{cls} + \mathcal{L}_{reg}.$$
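The regression targets and losses can be sketched for a single location as follows. The IoU is computed directly from the (l, t, r, b) encoding because both boxes share the same reference point. Two details are assumptions rather than statements from the text: the IoU loss is taken as 1 − IoU (some detectors use −ln IoU instead), and regression is supervised only at positive locations, as is common in anchor-free trackers. Binary cross-entropy is used as the two-class form of cross-entropy.

```python
import math


def ltrb_targets(px, py, box):
    """FCOS-style targets: distances from (px, py) to the left, top, right, bottom sides."""
    x0, y0, x1, y1 = box
    return (px - x0, py - y0, x1 - px, y1 - py)


def iou_from_ltrb(pred, target):
    """IoU of two boxes encoded as (l, t, r, b) around the same location."""
    pl, pt, pr, pb = pred
    tl, tt, tr, tb = target
    inter_w = min(pl, tl) + min(pr, tr)
    inter_h = min(pt, tt) + min(pb, tb)
    inter = max(inter_w, 0.0) * max(inter_h, 0.0)
    union = (pl + pr) * (pt + pb) + (tl + tr) * (tt + tb) - inter
    return inter / union


def iou_loss(pred, target):
    return 1.0 - iou_from_ltrb(pred, target)  # assumed 1 - IoU form


def bce(prob, label, eps=1e-7):
    """Binary cross-entropy for ship (label 1) vs. background (label 0)."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def total_loss(prob, label, pred_ltrb, target_ltrb):
    """L = L_cls + L_reg; the regression term applies only to positive locations."""
    reg = iou_loss(pred_ltrb, target_ltrb) if label == 1 else 0.0
    return bce(prob, label) + reg


target = ltrb_targets(5.0, 5.0, (3.0, 3.0, 7.0, 7.0))  # (2.0, 2.0, 2.0, 2.0)
pred = (1.0, 1.0, 1.0, 1.0)
print(iou_from_ltrb(pred, target), iou_loss(target, target))  # 0.25 0.0
```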

4. Experiments

4.1. Dataset and Evaluation Metrics

4.1.1. Dataset

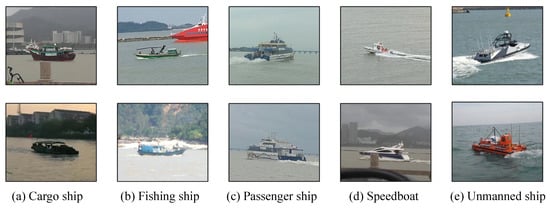

Our dataset, LMD-TShip [33], is a large-scale, high-resolution ship-tracking dataset covering all major ship categories. It contains 40,240 frames in 191 videos of five ship categories: cargo ships, fishing ships, passenger ships, speedboats, and unmanned ships. Each frame is manually and accurately annotated with a bounding box of the ship. The dataset covers a variety of factors that affect tracking performance at sea, such as scale changes, shaking, and occlusion, making it the most comprehensive dataset in the field of ship tracking. In our experiments, we randomly select 144 videos as the training set and use the remaining 47 as the test set, a ratio of approximately 3:1. Several samples are shown in Figure 4.

Figure 4.

Samples of five types of ships in datasets. (a) Cargo ship. (b) Fishing ship. (c) Passenger ship. (d) Speedboat. (e) Unmanned ship.

4.1.2. Evaluation Metrics

Following [47,48,49,50,51], we employ three evaluation metrics: accuracy, robustness, and EAO. These metrics are widely used in visual object tracking (VOT) benchmarks and competitions.

Accuracy. Accuracy describes how precisely the tracker localizes the target. Similar to object detection, it is computed from the IoU (intersection over union) between the predicted box and the ground truth in each frame. At time-step r, the accuracy is evaluated as:

$$\phi_r = \frac{\left|A_r^{P} \cap A_r^{G}\right|}{\left|A_r^{P} \cup A_r^{G}\right|},$$

where $A_r^{P}$ denotes the predicted bounding box and $A_r^{G}$ the ground-truth bounding box.
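Per-frame overlap and its average over a sequence can be sketched as follows for boxes in (x0, y0, x1, y1) format. This is a simplification: it averages over all given frames, whereas the VOT protocol additionally excludes frames right after a re-initialization.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x0, y0, x1, y1)."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def accuracy(pred_boxes, gt_boxes):
    """Mean of the per-frame overlaps phi_r over the evaluated frames."""
    overlaps = [iou(p, g) for p, g in zip(pred_boxes, gt_boxes)]
    return sum(overlaps) / len(overlaps)


preds = [(0, 0, 2, 2), (1, 1, 3, 3)]
gts = [(0, 0, 2, 2), (0, 0, 2, 2)]
print(accuracy(preds, gts))  # (1 + 1/7) / 2 = 4/7 ≈ 0.5714
```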

Robustness. Robustness measures how many times the tracker loses the target; the lower the value, the better the tracking. After a failure, the tracker is reinitialized f frames later.

Expected Average Overlap. EAO is a comprehensive measure that balances accuracy and robustness and has become a key metric for object tracking. Given a range of sequence lengths $[N_{lo}, N_{hi}]$, the expected average overlap is defined as:

$$\hat{\Phi} = \frac{1}{N_{hi} - N_{lo} + 1} \sum_{N_s = N_{lo}}^{N_{hi}} \hat{\Phi}_{N_s},$$

where $\hat{\Phi}_{N_s}$ denotes the average overlap over sequences of length $N_s$.
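A simplified EAO sketch, assuming each run lists its per-frame overlaps up to the first failure, so that zero-padding to length N_s correctly represents the frames after the failure. The official VOT toolkit additionally treats runs that end with the sequence (rather than with a failure) separately and derives [N_lo, N_hi] from the distribution of sequence lengths; neither step is modeled here.

```python
def average_overlap(per_frame, n_s):
    """Average overlap over the first n_s frames; frames after a failure count as 0."""
    padded = (list(per_frame) + [0.0] * n_s)[:n_s]
    return sum(padded) / n_s


def expected_average_overlap(runs, n_lo, n_hi):
    """Mean over N_s in [n_lo, n_hi] of the average overlap across all runs."""
    curve = [sum(average_overlap(r, n_s) for r in runs) / len(runs)
             for n_s in range(n_lo, n_hi + 1)]
    return sum(curve) / len(curve)


runs = [[1.0, 1.0, 1.0, 1.0],  # tracked 4 frames perfectly
        [0.5, 0.5]]            # failed after 2 frames
print(expected_average_overlap(runs, 2, 4))  # 49/72 ≈ 0.6806
```

A run that fails early keeps contributing zeros for longer N_s, which is how EAO folds robustness into an overlap-based score.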

4.2. Implementation Details

Training. The proposed MGPN is implemented in Python with PyTorch on a single NVIDIA TITAN RTX, and our baseline is SiamBAN [25]. The training set accounts for about three-quarters of the data. During training, the template and search images are cropped to 127 × 127 and 255 × 255 pixels, respectively, as in SiamFC [21]. We initialize our network with a model pre-trained on ImageNet. MGPN is trained with stochastic gradient descent (SGD), with weight decay and momentum set to 0.0001 and 0.9, respectively.

Hyper-parameters. The learning rate is warmed up from 0.001 to 0.005 over the first 5 epochs and then decays exponentially to 0.00005 over the remaining 15 epochs. For each image pair, we select at most 16 positive and 48 negative samples for the classification task. For data augmentation, we augment the target template with a probability of 0.5 using flip, shift, and color jitter. In addition, we apply image blur to the search branch with a probability of 0.2. The loss function consists of the classification loss and the regression loss described in Section 3.3.2.
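The learning-rate schedule can be sketched per epoch as follows. The warm-up shape is not specified, so linear growth is an assumption; the decay is implemented as log-linear interpolation from 0.005 to 0.00005 across the 15 decay epochs, which is one way to realize an exponential decay with a constant ratio between consecutive epochs.

```python
def lr_schedule(warmup_epochs=5, decay_epochs=15,
                lr_start=0.001, lr_peak=0.005, lr_end=0.00005):
    """Return one learning rate per epoch: warm-up, then exponential decay."""
    # Linear warm-up (assumed shape): lr_start at epoch 0, lr_peak at the last warm-up epoch.
    warm = [lr_start + (lr_peak - lr_start) * e / (warmup_epochs - 1)
            for e in range(warmup_epochs)]
    # Exponential decay: lr_peak * ratio**(e / (decay_epochs - 1)) reaches lr_end
    # at the final epoch, with a constant multiplicative step between epochs.
    ratio = lr_end / lr_peak
    decay = [lr_peak * ratio ** (e / (decay_epochs - 1)) for e in range(decay_epochs)]
    return warm + decay


lrs = lr_schedule()
print(len(lrs), round(lrs[0], 6), round(lrs[-1], 8))  # 20 0.001 5e-05
```

In this sketch the first decay epoch repeats the peak rate, giving a one-epoch plateau between the two phases; shifting the decay exponent by one epoch would remove it.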

Inference. During inference, we crop the images and extract features through the network; the classification map and regression map are then obtained by cross-correlation [23] between the template branch and the search branch. Following anchor-free methods, we predict the top-left and bottom-right coordinates of the box at location $(p_i, p_j)$ as:

$$x_0 = p_i - d_l, \quad y_0 = p_j - d_t, \quad x_1 = p_i + d_r, \quad y_1 = p_j + d_b,$$

where $(d_l, d_t, d_r, d_b)$ are the regressed distances to the left, top, right, and bottom borders. Then, we refine the predicted bounding box with a cosine window and a scale penalty function [22].
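The decoding rule and re-scoring step can be sketched as follows. The cosine window is the outer product of two Hann windows (matching `numpy.hanning`), and the scale/aspect-ratio penalty follows the form used in common open-source SiamRPN-style trackers; `penalty_k` and `window_influence` are hypothetical values, not settings reported for MGPN.

```python
import math


def decode(px, py, ltrb):
    """Turn regressed distances (d_l, d_t, d_r, d_b) at (px, py) into (x0, y0, x1, y1)."""
    dl, dt, dr, db = ltrb
    return (px - dl, py - dt, px + dr, py + db)


def hanning(n):
    """Hann window with the same values as numpy.hanning(n)."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def cosine_window(size):
    """2-D cosine window: outer product of two Hann windows."""
    w = hanning(size)
    return [[a * b for b in w] for a in w]


def change(r):
    return max(r, 1.0 / r)


def padded_size(w, h):
    pad = (w + h) / 2.0
    return math.sqrt((w + pad) * (h + pad))


def rescore(score, box, prev_w, prev_h, window_value, penalty_k=0.04, window_influence=0.4):
    """Penalize scale/aspect-ratio jumps, then blend in the cosine-window prior."""
    w, h = box[2] - box[0], box[3] - box[1]
    s_c = change(padded_size(w, h) / padded_size(prev_w, prev_h))  # scale change
    r_c = change((prev_w / prev_h) / (w / h))                      # aspect-ratio change
    penalty = math.exp(-(r_c * s_c - 1.0) * penalty_k)
    pscore = penalty * score
    return pscore * (1.0 - window_influence) + window_value * window_influence


print(decode(5.0, 5.0, (2.0, 2.0, 2.0, 2.0)))  # (3.0, 3.0, 7.0, 7.0)
same = rescore(0.8, (0.0, 0.0, 10.0, 10.0), 10.0, 10.0, window_value=1.0)
grown = rescore(0.8, (0.0, 0.0, 20.0, 20.0), 10.0, 10.0, window_value=1.0)
print(same > grown)  # True: the doubled box is penalized
```

In practice `rescore` is evaluated at every location of the score map and the maximum is selected, so candidates whose size jumps relative to the previous frame, or that lie far from the previous center, are down-weighted.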

4.3. Ablation Study

To verify the effectiveness of each component of MGPN, we perform an ablation study on LMD-TShip [33]. We adopt SiamBAN [25] (denoted B), an anchor-free tracking framework, as our baseline. We then evaluate the baseline with the Dynamic Memory Mechanism (DMM), with the Hierarchical Context-Aware Module (HCAM), and with both components (MGPN). Table 1 reports the accuracy, robustness, and EAO of each configuration.

Table 1.

Performance of different components in terms of accuracy, robustness and EAO.

Selection of parameter q. The parameter q in DMM determines the actual size of the memory $\mathcal{M}$. Table 2 shows ablation experiments with different values of q. Since our network is trained in minibatches, the size of $\mathcal{M}$ is set per minibatch. As Table 2 shows, increasing q does not necessarily bring continuous improvement; the model achieves its best EAO, and thus its best tracking performance, when 24 frames of video are used as memory features.

Table 2.

Performance with different parameters in Dynamic Memory Mechanism (DMM).

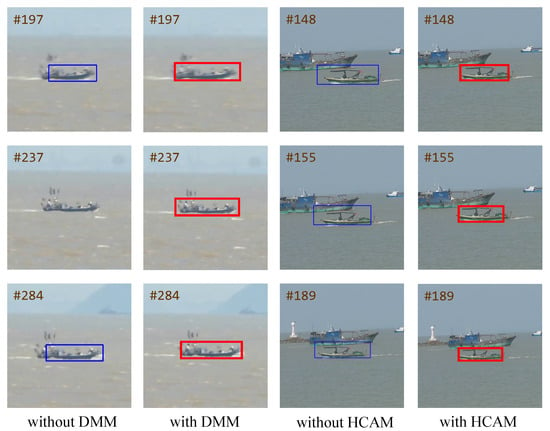

Effectiveness of DMM and HCAM. As shown in Table 1, the baseline tracker achieves a tracking accuracy of 78.0% and an EAO of 59.5%. Adding DMM improves accuracy by 1.7% and EAO by 4.0% over the baseline; DMM dynamically merges features from the current and previous frames to establish temporal relationships and enhance features. Incorporating HCAM improves robustness by 1.7% and EAO by 4.1% over the baseline while keeping accuracy comparable to the baseline; HCAM enlarges the receptive field hierarchically, enhancing the integrity and continuity of features in the template branch. Combining DMM and HCAM reduces accuracy by 0.4% compared with DMM alone but improves robustness by 0.8% compared with HCAM alone, demonstrating the joint approach’s ability to handle challenging tracking conditions, in particular occlusion and illumination changes. In addition, a comparison of computational cost shows that HCAM and DMM do not add a significant number of parameters to the network during training or inference. EAO is an important metric in tracking because it considers both accuracy and robustness and thus provides a comprehensive evaluation of model performance; accuracy alone may not be sufficient in real-world scenarios, where tracked targets can encounter various challenges.

Visualization comparison. Figure 5 shows visualization results with and without DMM and HCAM. Blue bounding boxes come from our baseline, SiamBAN [25]; red bounding boxes in the second and fourth columns show the effect of DMM and HCAM. Without DMM, the bounding boxes become blurred and the target may even be lost. Without HCAM, the tracker is affected by the background and produces an enlarged box. With both components, our prediction fits the ship’s contour more closely and is more precise and reliable.

Figure 5.

Visualization results with and without DMM and HCAM (#xxx denotes a frame index in the video). Blue bounding boxes come from our baseline, SiamBAN [25]; red bounding boxes in the second and fourth columns show the influence of DMM and HCAM. With the assistance of these components, our prediction fits the ship’s contour more closely and even recovers ships that SiamBAN [25] loses. Hence, our method is more precise and reliable.

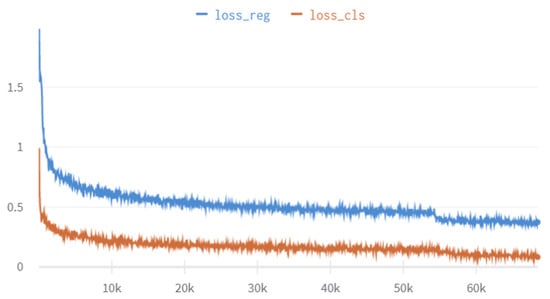

Analysis of two loss components. We further investigate the variation of the classification loss and the regression loss, as depicted in Figure 6. After the warm-up in the first five epochs, the losses no longer oscillate, and all losses eventually converge well.

Figure 6.

Loss decline curve with two components.

4.4. Comparison with State-of-the-Art Trackers

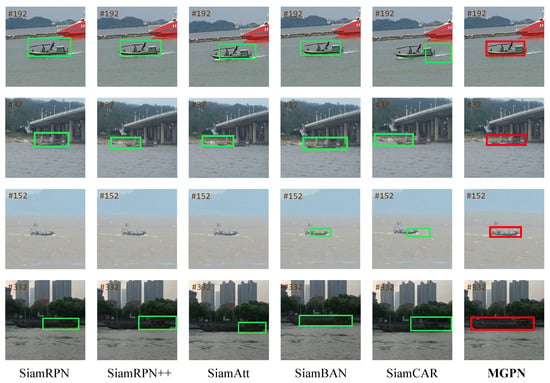

We compare MGPN with several SOTA trackers on LMD-TShip [33], including SiamRPN [22], SiamRPN++ [23], SiamAtt [36], SiamBAN [25], SiamCAR [27], and RBO [52]. All approaches are implemented in Python using PyTorch on a PC with an Intel(R) Core(TM) i9-10900KF CPU @ 3.70 GHz and a single NVIDIA TITAN RTX. In particular, SiamRPN, SiamRPN++, and RBO are anchor-based tracking methods, while the remaining frameworks and MGPN are anchor-free. Table 3 and Figure 7 show the quantitative and visual comparison results of our method (MGPN) and the SOTA methods.

Table 3.

Comparison with the state-of-the-art in terms of accuracy, robustness (failure rate), and expected average overlap (EAO) on the ship dataset. (↑ indicates that higher is better; ↓ indicates that lower is better.)

Figure 7.

Comparison of tracking results by different methods. Green and red boxes indicate the SOTA methods and our method, respectively. A missing bounding box means the ship is lost. (#xxx denotes a frame index in the video.)

Indicators comparison. Our method achieves the best results in accuracy, robustness, EAO, and frames per second (FPS). In terms of accuracy, MGPN is ahead of the anchor-based SiamRPN and SiamRPN++ by 3.8% and 2.8%, respectively. In addition, anchor-based methods rely on prior parameters, such as the size or number of anchors, whereas MGPN requires no such manual settings. Among the anchor-free methods, MGPN outperforms SiamBAN [25], which is strong in tracking accuracy, by 1.3%. In terms of robustness, benefiting from the combined effect of DMM and HCAM, our method achieves 0.067, an improvement of 2.5% and 5.8% over SiamCAR and SiamRPN++, respectively, indicating that our tracker loses fewer ships and follows the correct ships. Regarding EAO, our method obtains 0.665, surpassing SiamCAR by 3.8% and SiamBAN [25] by 7.0%. Compared with the anchor-based methods, our method achieves a large margin, outperforming SiamRPN++ by more than 10.0% and SiamAtt by nearly 20.0%. In terms of FPS, MGPN is very close to SiamBAN [25]; while delivering this tracking performance, it runs at 68 FPS, i.e., in real time.

Visualization comparison. Figure 7 shows tracking results of different methods on sample images. The first row shows a fishing ship: the results from MGPN fit the ship’s profile better than those of SiamRPN, SiamRPN++, SiamAtt, and SiamBAN [25], while SiamCAR drifts, which illustrates the accuracy advantage of our method. The ships in the second and fourth rows are similar to the background; other methods are unstable and track only part of the ship, whereas MGPN, benefiting from the enhanced features of DMM and HCAM, overcomes this problem. In the third row, SiamRPN, SiamRPN++, and SiamAtt all lose the target, and MGPN obtains more accurate bounding boxes than SiamBAN [25] and SiamCAR, indicating that our method is more robust and achieves outstanding performance.

Tracking comparison in different categories. Table 4 compares MGPN with state-of-the-art trackers in terms of expected average overlap (EAO) on the five ship types. MGPN achieves the best tracking performance on cargo ships, fishing ships, passenger ships, and unmanned ships, which shows the positive effect of the proposed DMM and HCAM. Furthermore, MGPN outperforms its baseline SiamBAN [25], further demonstrating that DMM and HCAM benefit ship tracking. In particular, on fishing vessels our method outperforms SiamBAN [25] by 6.0% and the remaining methods by more than 9.0%. On speedboats, which move fast and are often far from the camera, MGPN trails SiamCAR and still has room for improvement; nevertheless, it exceeds the EAO of the other trackers by more than 10%, indicating that it remains competitive.

Table 4.

Comparison with the state-of-the-art in terms of expected average overlap (EAO) on five types of ship datasets.

5. Conclusions

This paper proposes a Memory-Guided Perceptual Network (MGPN) for coastal ship tracking. MGPN differs from other tracking frameworks in two significant ways: the Dynamic Memory Mechanism (DMM) and the Hierarchical Context-Aware Module (HCAM). DMM is a dynamic memory that automatically stores current and previous features during training, building a fusion model for sequence information that plays an active role in improving ship-tracking accuracy. Meanwhile, HCAM explores more feature information and strengthens feature extraction by enlarging the receptive field of the template branch, and its hierarchical architecture with global and local branches preserves feature integrity. Combined, the two components address the inaccuracy and instability problems in ship tracking. Extensive experiments on a large maritime dataset show that the proposed MGPN achieves state-of-the-art ship-tracking performance, and its accuracy and speed make it suitable for practical coastal ship tracking.

Author Contributions

Methodology, X.Y. and D.Y.; Software, H.Z. (Haiyang Zhu); Writing—original draft, X.Y.; Writing—review & editing, D.Y.; Visualization, H.Z. (Hua Zhao). All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61976166; in part by the Shaanxi Outstanding Youth Science Fund Project under Grant 2023-JC-JQ-53; in part by the Fundamental Research Funds for the Central Universities under Grant QTZX23057; and in part by the Open Research Projects of Laboratory of Pinghu.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Martin-Martin, R.; Patel, M.; Rezatofighi, H.; Shenoi, A.; Gwak, J.; Frankel, E.; Sadeghian, A.; Savarese, S. JRDB: A dataset and benchmark of egocentric robot visual perception of humans in built environments. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 45, 6748–6765.

2. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020.

3. Anjum, S.; Gurari, D. CTMC: Cell tracking with mitosis detection dataset challenge. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020.

4. Zhao, K.; Hu, J.; Shao, H.; Hu, J. Federated multi-source domain adversarial adaptation framework for machinery fault diagnosis with data privacy. Reliab. Eng. Syst. Saf. 2023, 236, 109246.

5. Zhao, K.; Jia, F.; Shao, H. A novel conditional weighting transfer Wasserstein auto-encoder for rolling bearing fault diagnosis with multi-source domains. Knowl.-Based Syst. 2023, 262, 110203.

6. Maddalena, L.; Petrosino, A. A self-organizing approach to background subtraction for visual surveillance applications. IEEE Trans. Image Process. 2008, 17, 1168–1177.

7. Chen, X.; Wang, S.; Shi, C.; Wu, H.; Zhao, J.; Fu, J. Robust Ship Tracking via Multi-view Learning and Sparse Representation. J. Navig. 2019, 72, 176–192.

8. Chen, X.; Chen, H.; Wu, H.; Huang, Y.; Yang, Y.; Zhang, W.; Xiong, P. Robust Visual Ship Tracking with an Ensemble Framework via Multi-view Learning and Wavelet Filter. Sensors 2020, 20, 932.

9. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010.

10. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012.

11. Surasak, T.; Takahiro, I.; Cheng, C.H.; Wang, C.E.; Sheng, P.Y. Histogram of oriented gradients for human detection in video. In Proceedings of the International Conference on Business and Industrial Research, Bangkok, Thailand, 17–18 May 2018.

12. Jin, B.; Vai, M.I. An adaptive ultrasonic backscattered signal processing technique for instantaneous characteristic frequency detection. Bio-Med. Mater. Eng. 2014, 24, 2761–2770.

13. Song, F.; Liu, Y.; Shen, D.; Li, L.; Tan, J. Learning Control for Motion Coordination in Wafer Scanners: Toward Gain Adaptation. IEEE Trans. Ind. Electron. 2022, 69, 13428–13438.

14. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

15. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1440–1448.

16. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

17. Zheng, L.; Tang, M.; Chen, Y.; Wang, J.; Lu, H. Learning feature embeddings for discriminant model based tracking. In Proceedings of the European Conference on Computer Vision, Virtual, 23–28 August 2020.

18. Danelljan, M.; Gool, L.V.; Timofte, R. Probabilistic regression for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

19. Chen, Y.; Tang, Y.; Han, T.; Zhang, Y.; Zou, B.; Feng, H. RAMC: A Rotation Adaptive Tracker with Motion Constraint for Satellite Video Single-Object Tracking. Remote Sens. 2022, 14, 3108.

20. Lin, B.; Bai, Y.; Bai, B.; Li, Y. Robust Correlation Tracking for UAV with Feature Integration and Response Map Enhancement. Remote Sens. 2022, 14, 4073.

21. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional Siamese Networks for Object Tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

22. Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

23. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

24. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

25. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese box adaptive network for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

26. Guo, D.; Shao, Y.; Cui, Y.; Wang, Z.; Zhang, L.; Shen, C. Graph attention tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

27. Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese fully convolutional classification and regression for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

28. Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. SiamFC++: Towards Robust and Accurate Visual Tracking with Target Estimation Guidelines. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

29. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

30. Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

31. Shan, Y.; Zhou, X.; Liu, S.; Zhang, Y.; Huang, K. SiamFPN: A deep learning method for accurate and real-time maritime ship tracking. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 315–325.

32. Liu, H.; Xu, X.; Chen, X.; Li, C.; Wang, M. Real-Time Ship Tracking under Challenges of Scale Variation and Different Visibility Weather Conditions. J. Mar. Sci. Eng. 2022, 10, 444.

33. Shan, Y.; Liu, S.; Zhang, Y.; Jing, M.; Xu, H. LMD-TShip: Vision Based Large-Scale Maritime Ship Tracking Benchmark for Autonomous Navigation Applications. IEEE Access 2021, 9, 74370–74384.

34. Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware Siamese Networks for Visual Object Tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

35. Fu, C.; Cao, Z.; Li, Y.; Ye, J.; Feng, C. Onboard Real-Time Aerial Tracking with Efficient Siamese Anchor Proposal Network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5606913.

36. Yang, K.; He, Z.; Zhou, Z.; Fan, N. SiamAtt: Siamese Attention Network for Visual Tracking. Knowl.-Based Syst. 2020, 203, 106079.

37. Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast Online Object Tracking and Segmentation: A Unifying Approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

38. Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-aware anchor-free tracking. In Proceedings of the European Conference on Computer Vision, Virtual, 23–28 August 2020.

39. Teng, F.; Liu, Q. Robust Multi-scale Ship Tracking via Multiple Compressed Features Fusion. Signal Process. Image Commun. 2015, 31, 76–85.

40. Zhang, Y.; Luo, J.; Zhang, Y.; Huang, Y.; Cai, X.; Yang, J.; Mao, D.; Li, J.; Tuo, X.; Zhang, Y. Resolution Enhancement for Large-Scale Real Beam Mapping Based on Adaptive Low-Rank Approximation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5116921.

41. Zhou, A.; Xie, W.; Pei, J. Background Modeling in the Fourier Domain for Maritime Infrared Target Detection. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2634–2649.

42. Liu, B.; Wang, S.; Xie, Z.; Zhao, J.; Li, M. Ship Recognition and Tracking System for Intelligent Ship Based on Deep Learning Framework. TransNav 2019, 13, 4.

43. Wu, J.; Cao, C.; Zhou, Y.; Zeng, X.; Feng, Z.; Wu, Q.; Huang, Z. Multiple Ship Tracking in Remote Sensing Images using Deep Learning. Remote Sens. 2021, 13, 3601.

44. Farhadi, A.; Redmon, J. YOLOv3: An Incremental Improvement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

45. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

46. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

47. Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Cehovin, L.; Fernandez, G.; Vojir, T.; Hager, G.; Nebehay, G.; Pflugfelder, R. The Visual Object Tracking VOT2015 Challenge Results. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015.

48. Čehovin, L.; Kristan, M.; Leonardis, A. Is My New Tracker Really Better Than Yours? In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Steamboat Springs, CO, USA, 24–26 March 2014.

49. Wu, Y.; Lim, J.; Yang, M.H. Online Object Tracking: A Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

50. Goyette, N.; Jodoin, P.M.; Porikli, F.; Konrad, J.; Ishwar, P. Changedetection.net: A New Change Detection Benchmark Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012.

51. Pang, Y.; Ling, H. Finding the Best from the Second Bests-inhibiting Subjective Bias in Evaluation of Visual Tracking Algorithms. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

52. Tang, F.; Ling, Q. Ranking-based Siamese visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).