MVFRnet: A Novel High-Accuracy Network for ISAR Air-Target Recognition via Multi-View Fusion

Abstract

1. Introduction

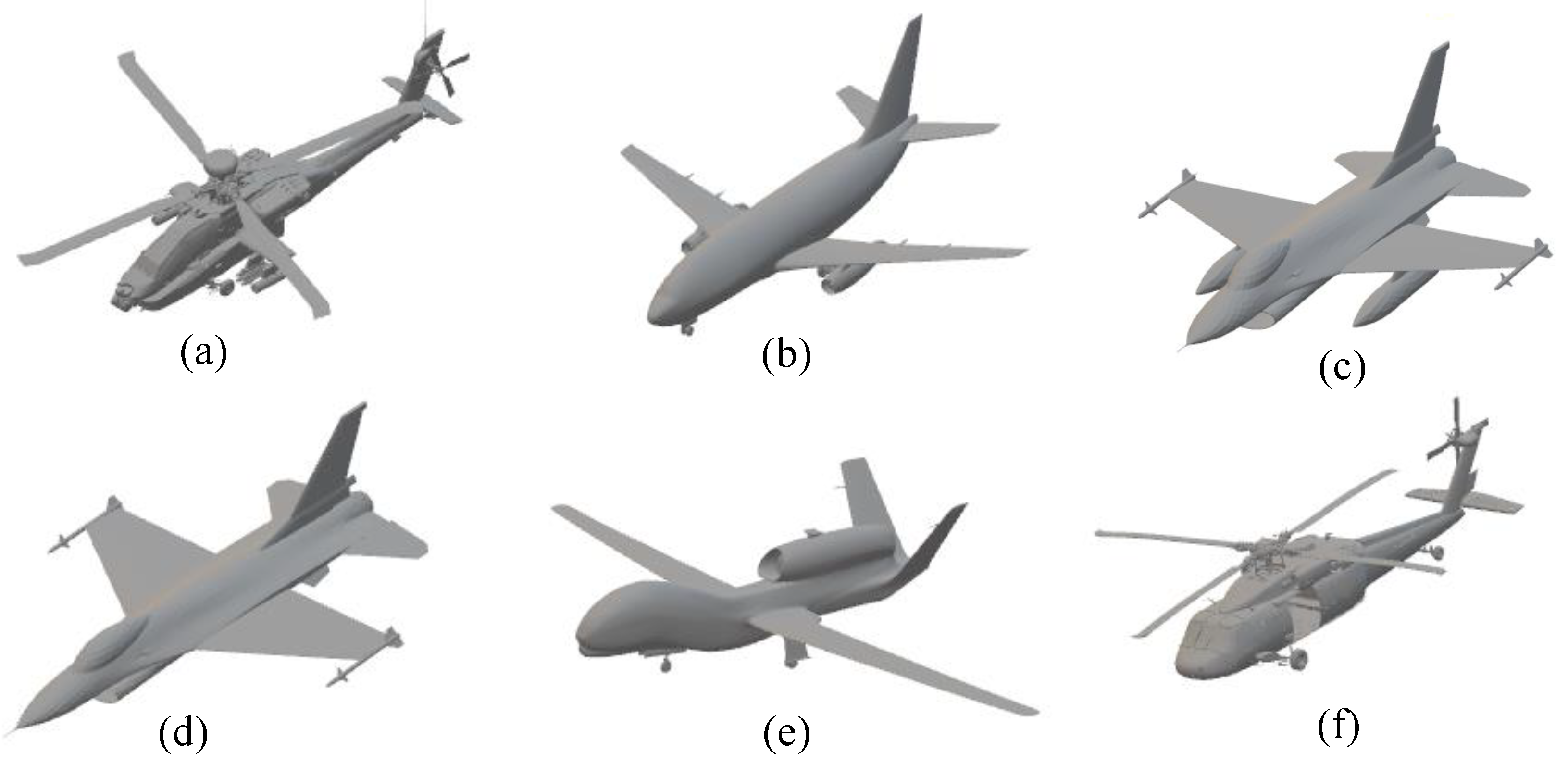

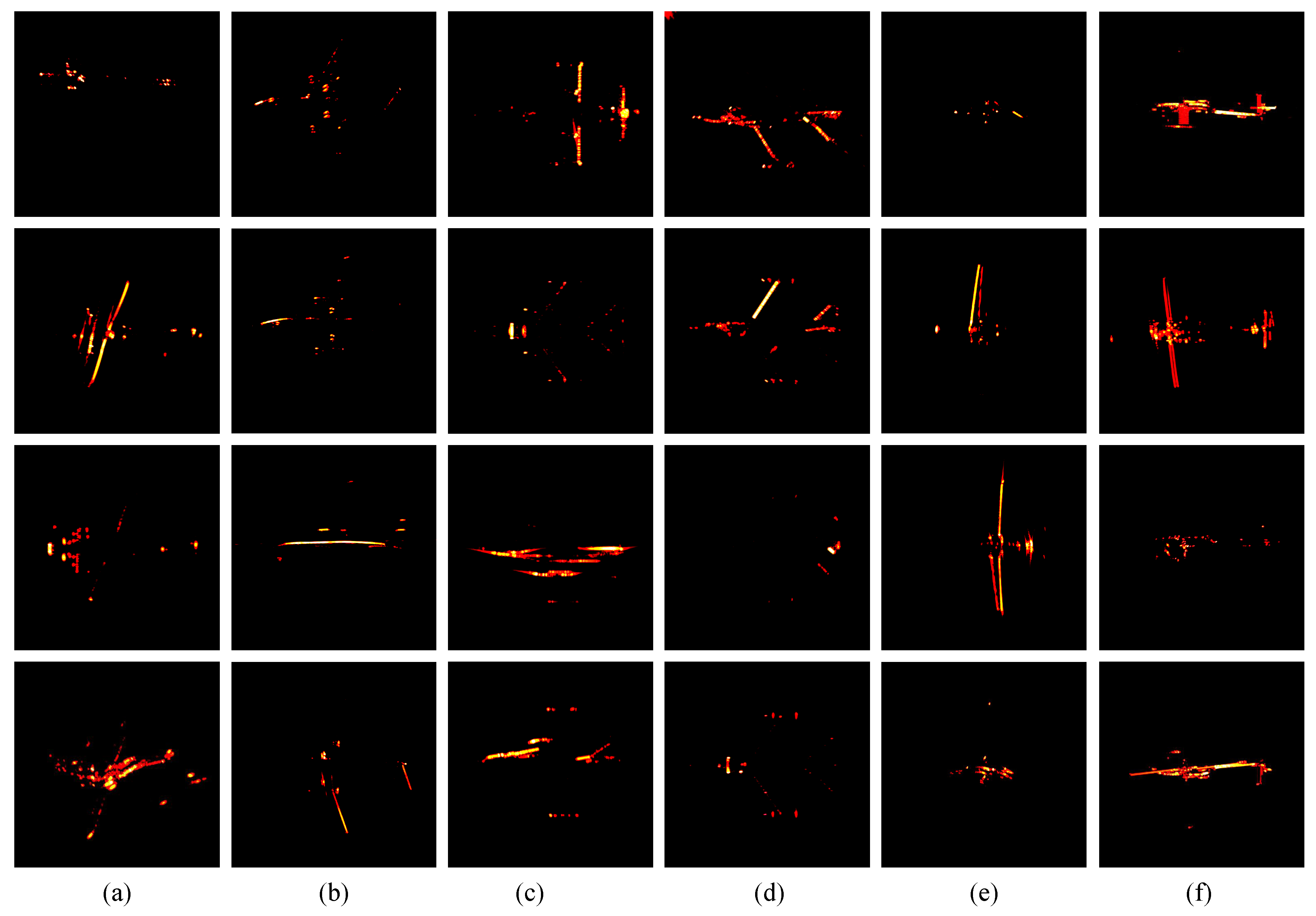

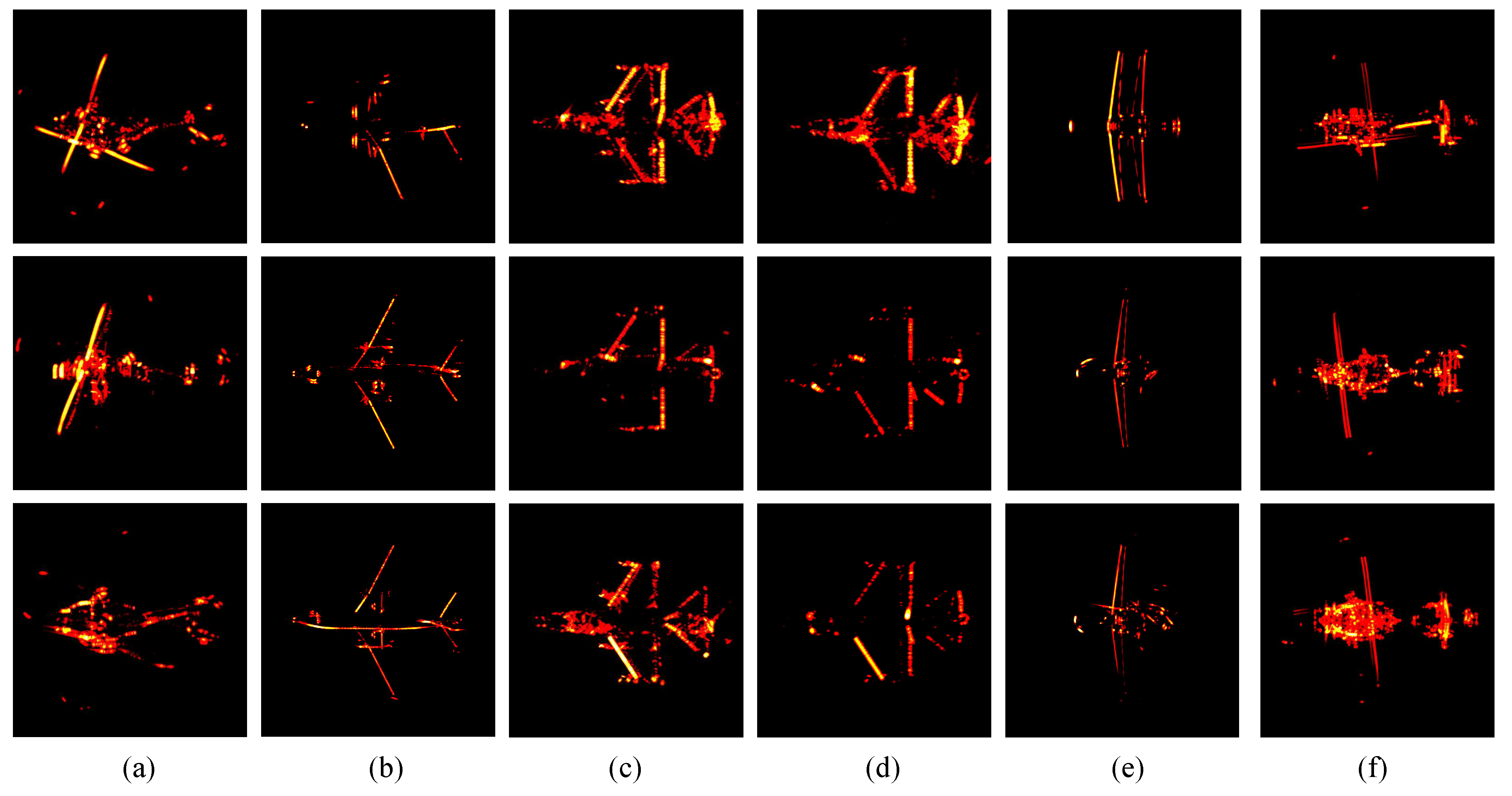

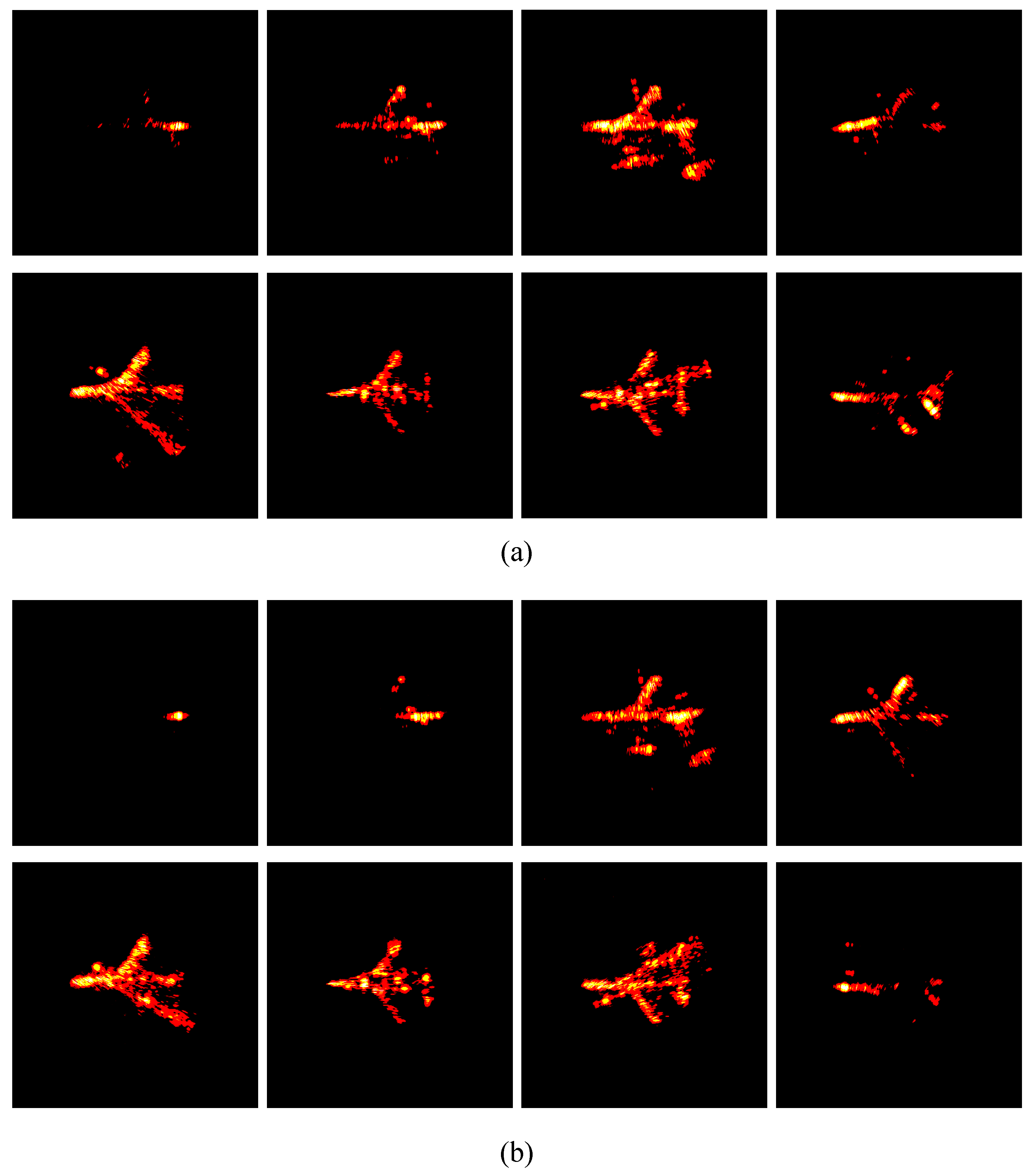

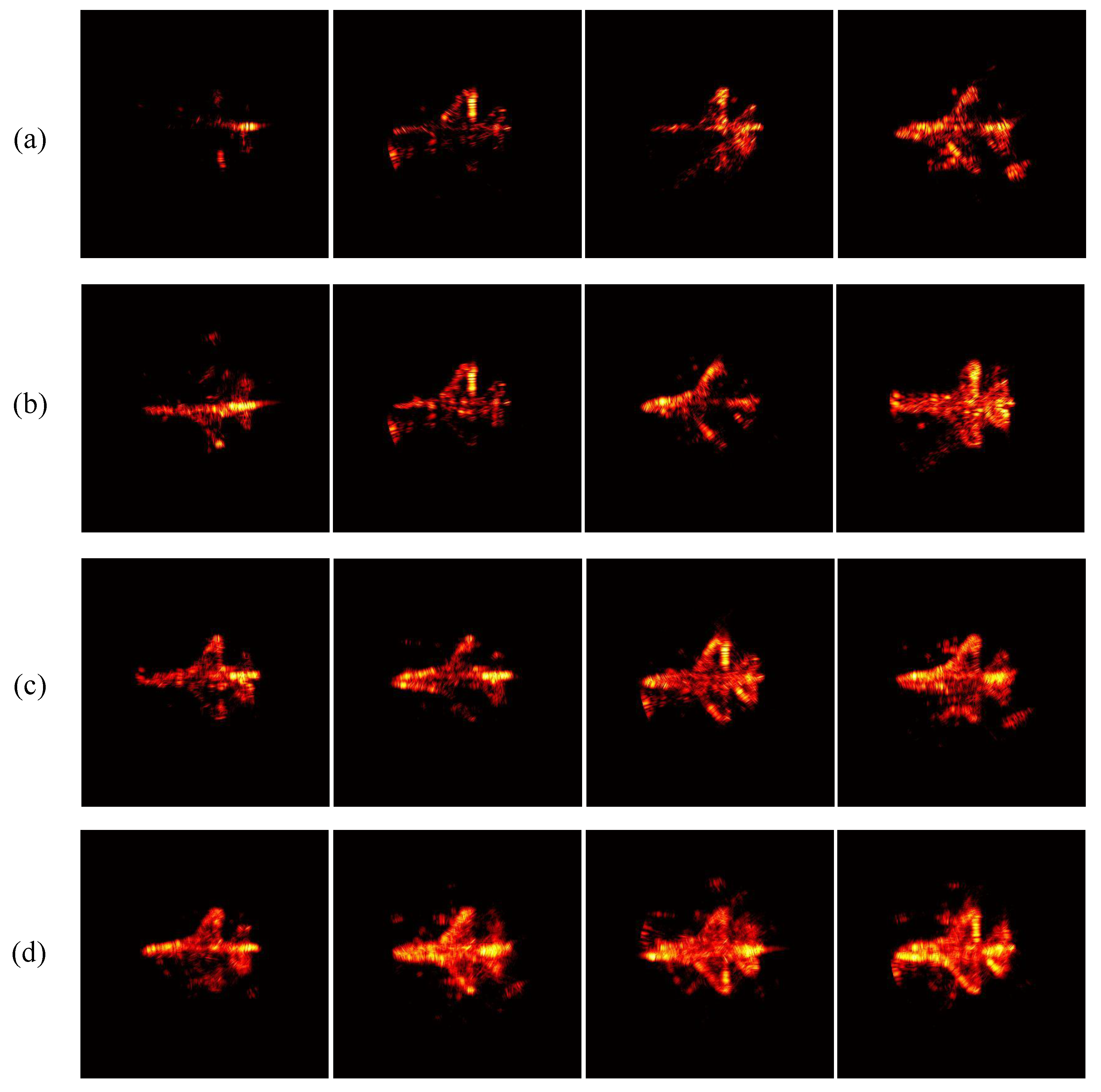

- We select five common types of aircraft models (armed helicopter, passenger plane, fighter, UAV, and universal helicopter) as observation targets and use electromagnetic (EM) simulation software to obtain simulated datasets that support both conventional ISAR imaging and fusion imaging. Moreover, some recognition tasks require distinguishing between targets of the same type that differ only subtly. To meet this need, the fighter models are further divided into two categories: with and without fuel tanks mounted.

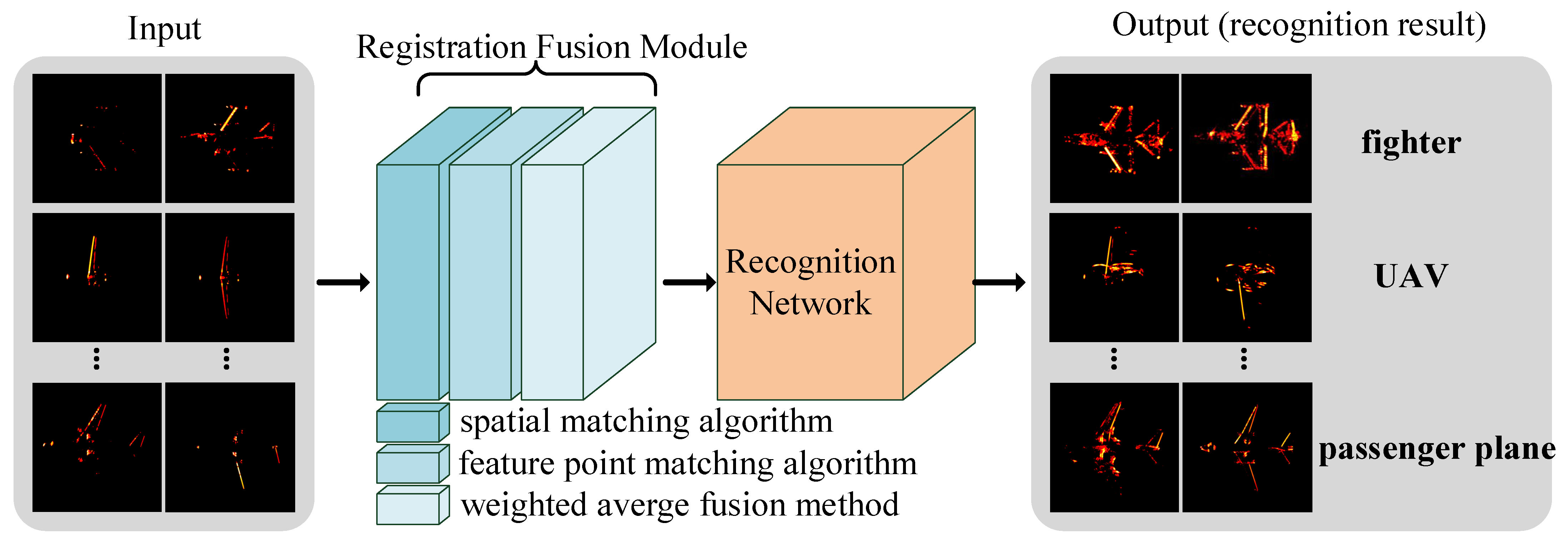

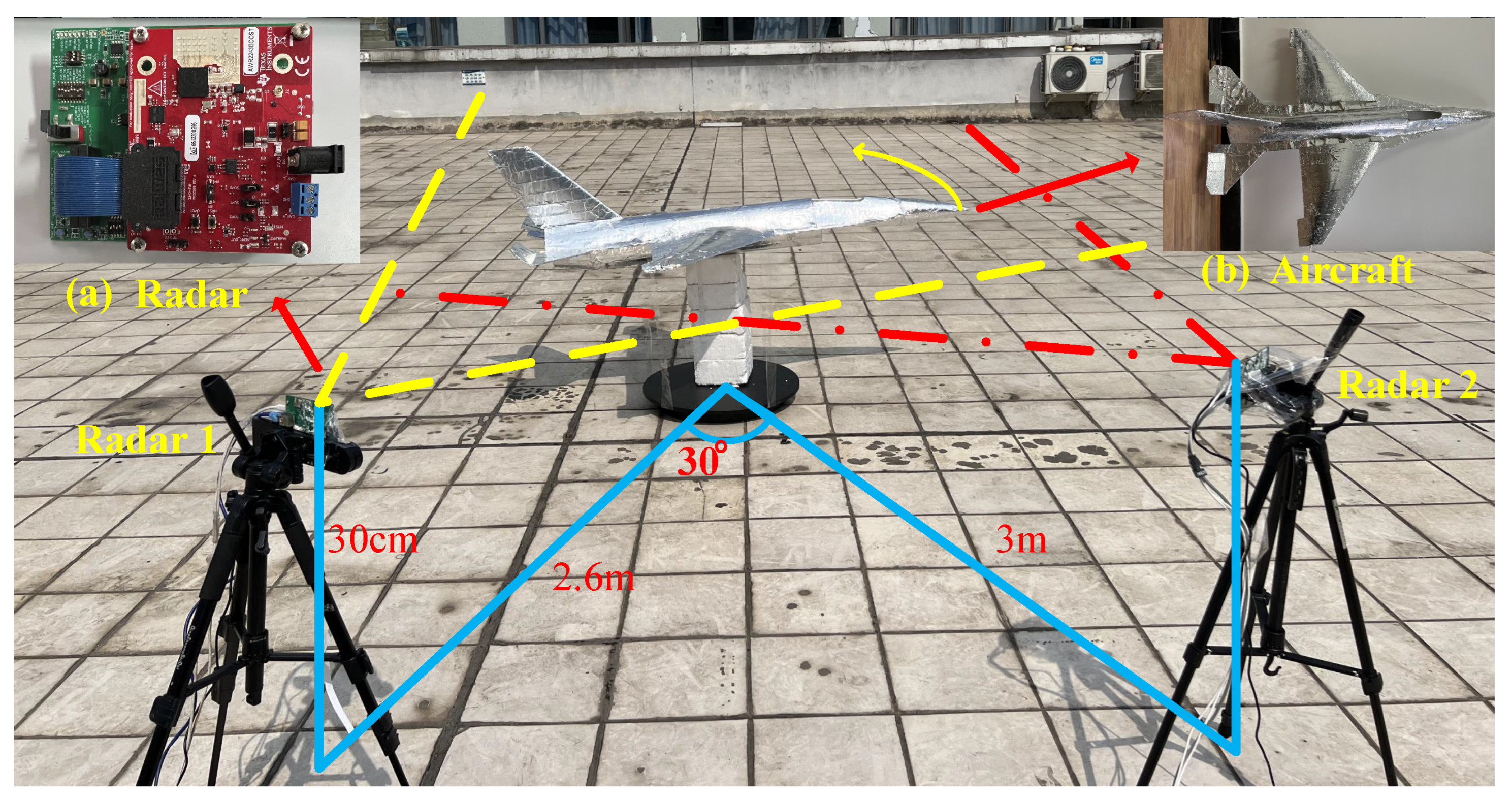

- The registration fusion module consists of a spatial matching algorithm, a feature point matching algorithm, and a weighted average fusion method. The module processes simulated data of the target observed from different angles to produce multi-view fusion images, and a CNN is then used to recognize these ISAR fusion images. We also build a ground-based platform to collect real measured data of model aircraft, which further verifies the feasibility of the proposed method.

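- To demonstrate the effectiveness of MVFRnet, we compare it with conventional recognition networks (ResNet34, ResNet50, InceptionNet, and EfficientNet). The comparison shows that MVFRnet substantially improves recognition accuracy, reaching 95.29% overall accuracy on the simulated dataset versus 78.35% for the strongest baseline, EfficientNet, and that it offers better noise immunity and generalization performance.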

2. Multi-View ISAR Imaging Model and Problem Description

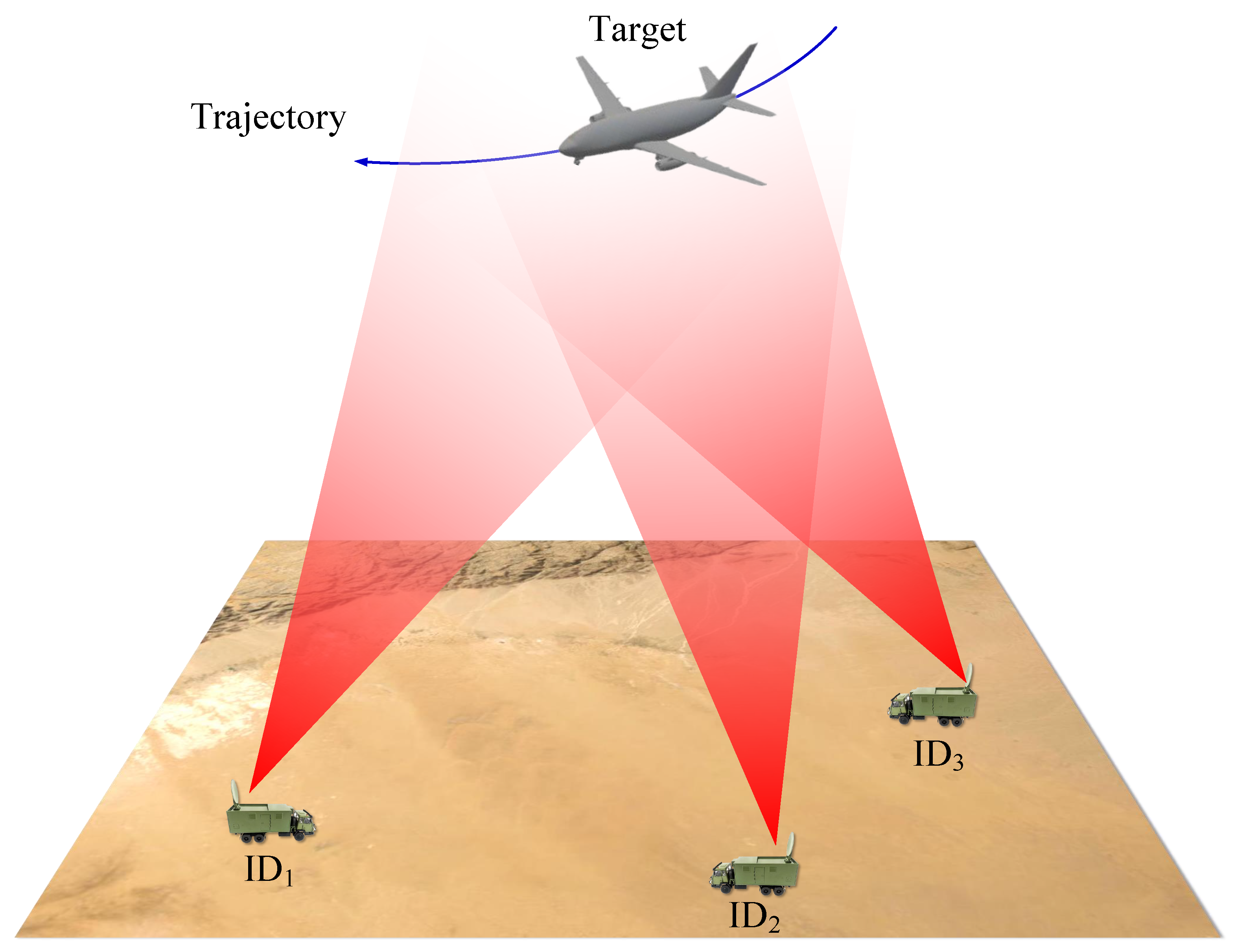

2.1. Multi-View ISAR Imaging Model

2.2. Problem Description

- a. Realize radar image registration. Each radar occupies a different spatial position relative to the target, so the same scattering point appears at different coordinates in the images from different radars, which hinders image fusion. The main cause of this mismatch is the angular difference between the radars' lines of sight to the geometric center of the target. This motivates us to estimate the included angle between the views and register the images by a rotational transformation.

- b. Achieve image fusion. After coarse registration, the scattering points in the images are largely aligned, but pixel-level errors remain. A feature point matching algorithm such as Speeded-Up Robust Features (SURF) can therefore extract and match feature points in the images before they are fused, generating an image that contains multi-view information about the target.

- c. The generated fusion image retains typical ISAR image characteristics: discrete scattering points, clear edge contours, and a simple image structure. We therefore construct a novel CNN with a stronger ability to perceive shallow contours and extract deep image features.
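Item a can be illustrated with a minimal sketch. It assumes that each radar image has been reduced to 2-D scatterer coordinates measured from the target's geometric center, that scatterers are already paired across the two views, and that the mismatch is a pure rotation by the included angle. The least-squares (2-D Procrustes) angle estimate is a stand-in chosen for illustration, not the paper's spatial matching (Equations (6) and (7) in Algorithm 1), and all function names are hypothetical.

```python
import numpy as np

def rotation_matrix(theta):
    """2-D counter-clockwise rotation by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def estimate_included_angle(ref_pts, mov_pts):
    """Least-squares angle that rotates mov_pts onto ref_pts.

    Both arrays have shape (K, 2): paired scatterer coordinates relative to
    the target's geometric center (assumed known and common to both views).
    """
    # Closed-form 2-D Procrustes solution: theta = atan2(sum of cross, sum of dot).
    cross = np.sum(mov_pts[:, 0] * ref_pts[:, 1] - mov_pts[:, 1] * ref_pts[:, 0])
    dot = np.sum(mov_pts[:, 0] * ref_pts[:, 0] + mov_pts[:, 1] * ref_pts[:, 1])
    return np.arctan2(cross, dot)

def register(mov_pts, theta):
    """Rotate the moving view by theta so it lines up with the reference view."""
    return mov_pts @ rotation_matrix(theta).T

# Hypothetical example: the second radar sees the scatterers rotated by -20 degrees.
ref = np.array([[1.0, 0.0], [0.0, 2.0], [-1.5, 0.5]])
mov = ref @ rotation_matrix(np.deg2rad(-20.0)).T
theta = estimate_included_angle(ref, mov)
print(round(float(np.rad2deg(theta)), 6))      # 20.0
print(np.allclose(register(mov, theta), ref))  # True
```

For pixel images rather than point lists, the same estimated angle could be applied with an interpolating rotation such as `scipy.ndimage.rotate`, after which the residual pixel-level offsets are left to the feature point matching of item b.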

3. The Proposed Method

3.1. Multi-View Registration Fusion Module

3.1.1. Image Registration

- (1) Integral map

- (2) Hessian matrix

- (3) Extraction and matching of feature points
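Steps (1) and (2) follow the SURF detector of Bay et al. (2008). The minimal sketch below, written from the published SURF description rather than the authors' code, shows how an integral image lets any box filter be summed with four look-ups, and how SURF's 9×9 box-filter approximations D_xx, D_yy and D_xy give the Hessian determinant det(H_approx) = D_xx·D_yy − (0.9·D_xy)², whose local maxima mark blob-like feature points. Only the first 9×9 scale is shown, and the function names are hypothetical.

```python
import numpy as np

def integral_image(img):
    """Padded integral image: ii[r, c] = sum of img[:r, :c]."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def box_sum(ii, r0, c0, r1, c1):
    """Sum of img[r0:r1+1, c0:c1+1] from four look-ups (inclusive bounds)."""
    return ii[r1 + 1, c1 + 1] - ii[r0, c1 + 1] - ii[r1 + 1, c0] + ii[r0, c0]

def hessian_det_9x9(ii, r, c, w=0.9):
    """Approximate det(H) at pixel (r, c) with SURF's 9x9 box filters.

    Requires 4 <= r < H-4 and 4 <= c < W-4 so the filter stays inside the image.
    """
    # D_yy: +1 / -2 / +1 lobes stacked vertically, 5 columns wide.
    dyy = box_sum(ii, r - 4, c - 2, r + 4, c + 2) - 3 * box_sum(ii, r - 1, c - 2, r + 1, c + 2)
    # D_xx: the same pattern rotated by 90 degrees.
    dxx = box_sum(ii, r - 2, c - 4, r + 2, c + 4) - 3 * box_sum(ii, r - 2, c - 1, r + 2, c + 1)
    # D_xy: four 3x3 lobes with alternating signs around the center pixel.
    dxy = (box_sum(ii, r - 3, c - 3, r - 1, c - 1) + box_sum(ii, r + 1, c + 1, r + 3, c + 3)
           - box_sum(ii, r - 3, c + 1, r - 1, c + 3) - box_sum(ii, r + 1, c - 3, r + 3, c - 1))
    area = 81.0  # normalize the responses by the filter area
    dxx, dyy, dxy = dxx / area, dyy / area, dxy / area
    return dxx * dyy - (w * dxy) ** 2

# Hypothetical check: an isolated bright scatterer gives a positive determinant.
img = np.zeros((21, 21))
img[10, 10] = 1.0
ii = integral_image(img)
print(hessian_det_9x9(ii, 10, 10) > 0)  # True
```

Because each box sum costs the same four look-ups regardless of filter size, larger scales can be evaluated by enlarging the filters instead of downsampling the image, which is the design choice that makes SURF fast.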

3.1.2. Image Fusion

| Algorithm 1: Multi-View Signal Registration and Fusion | |
|---|---|
| Input: Received echo data of each radar | |
| Output: Fusion image | |
| 1 | Perform range alignment and phase adjustment on the signals; |
| 2 | Obtain the spatially matched ISAR image of each radar echo in the xoy coordinate system by using Equations (6) and (7); |
| 3 | Construct integral maps and box filters, extract the feature points of each spatially matched image, and use Equation (15) to achieve fine matching of the images; |
| 4 | Obtain the fused image; |
| 5 | Return the fused image. |
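Step 4 of Algorithm 1 uses the weighted average fusion method named in the Introduction. A minimal sketch follows, assuming the N images have already passed steps 2 and 3 (so they share one pixel grid) and that each view receives a single scalar weight. This section does not say how the weights are chosen, so equal weights are the default here, and the `weights` argument is a hypothetical hook for, e.g., image-quality-based weighting.

```python
import numpy as np

def weighted_average_fusion(images, weights=None):
    """Pixel-wise weighted average of N registered ISAR images.

    images:  sequence of N arrays (real or complex) with identical shape.
    weights: N non-negative per-view weights; equal weights when omitted.
    """
    # Work on magnitudes so complex-valued ISAR images can be fused directly.
    stack = np.stack([np.abs(np.asarray(im)) for im in images]).astype(float)
    n = stack.shape[0]
    w = np.full(n, 1.0) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()  # normalize so the fused image stays on the input scale
    # Contract the view axis: fused[y, x] = sum_k w[k] * stack[k, y, x].
    return np.tensordot(w, stack, axes=1)

# Hypothetical example: two registered views, each showing a different scatterer.
view_a = np.array([[1.0, 0.0], [0.0, 0.0]])
view_b = np.array([[0.0, 0.0], [0.0, 1.0]])
print(weighted_average_fusion([view_a, view_b]))
print(weighted_average_fusion([view_a, view_b], weights=[3, 1]))
```

With equal weights, scatterers seen by only one view appear at reduced amplitude in the fused image, while scatterers seen by all views keep their full amplitude; this is why the fine matching of step 3 matters before averaging.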

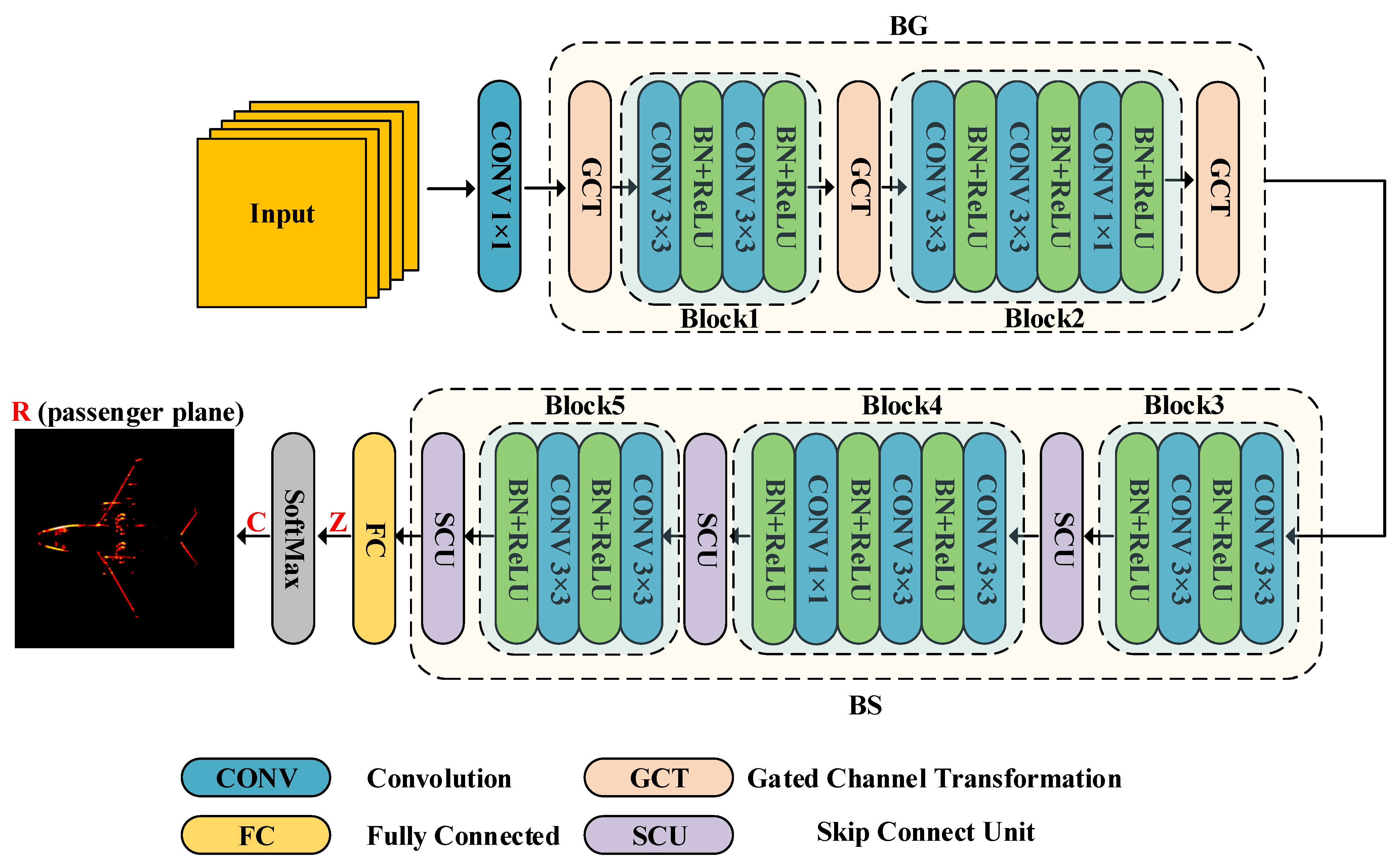

3.2. Recognition Network

- (1)

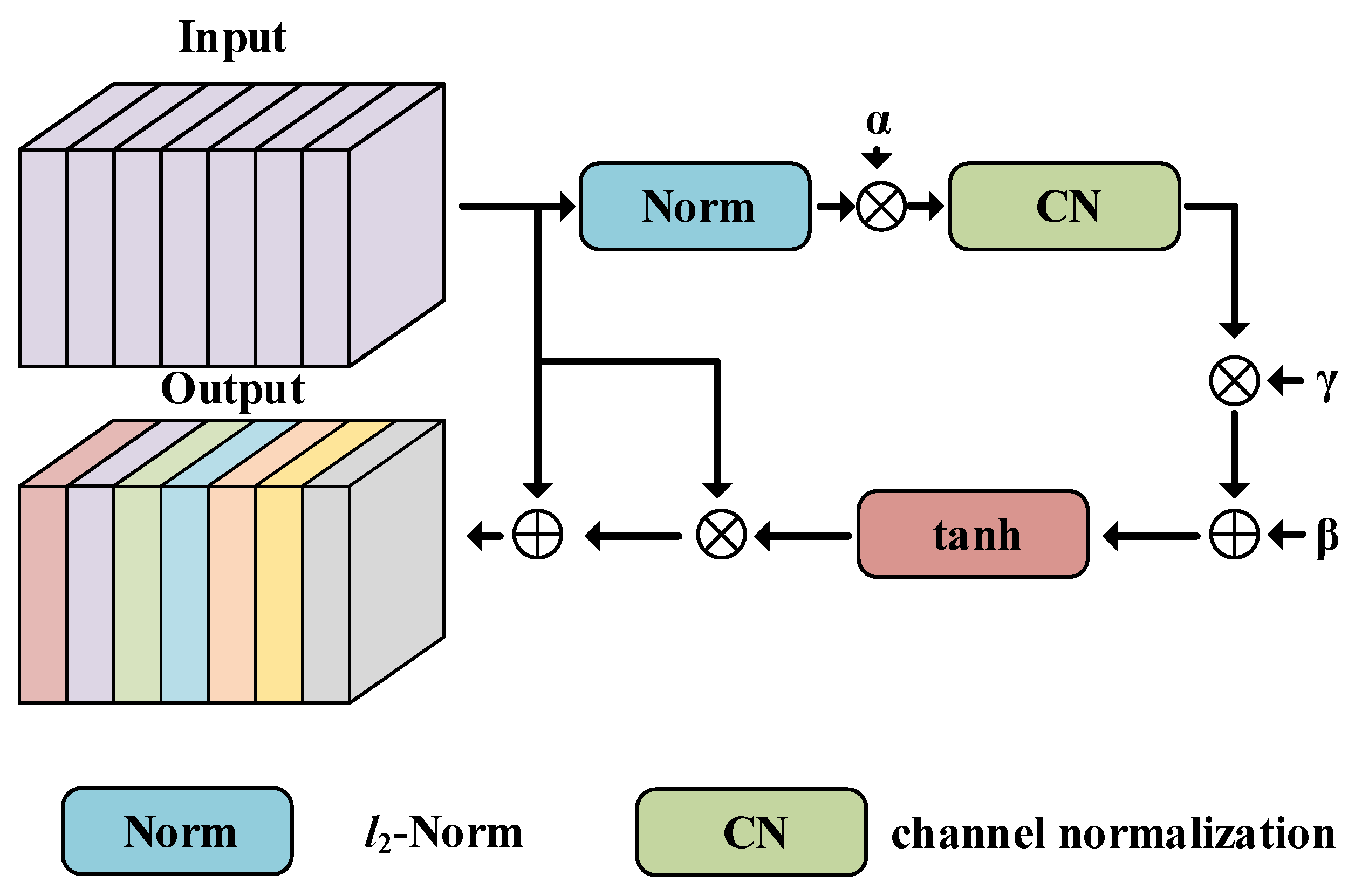

- GCT attention module

- (2)

- Skip Connect Unit

- (3)

- Output
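The GCT attention module refers to the Gated Channel Transformation of Yang et al. (CVPR 2020). The NumPy sketch below follows the three steps of the published GCT forward pass: an ℓ2-norm global context embedding per channel, a normalization across channels, and a tanh gate with learnable per-channel α, γ and β. It illustrates the mechanism only. How MVFRnet arranges GCT with the Skip Connect Unit, and its parameter values, are not given in this section, and the function name is hypothetical. In the GCT paper, γ and β start at zero so the module initially passes features through unchanged, which the usage line checks.

```python
import numpy as np

def gct(x, alpha, gamma, beta, eps=1e-5):
    """Gated Channel Transformation applied to one feature map.

    x: array of shape (C, H, W).
    alpha, gamma, beta: trainable per-channel parameters, each of shape (C,).
    """
    # 1) Global context embedding: l2 norm of each channel, scaled by alpha.
    s = alpha * np.sqrt(np.sum(x ** 2, axis=(1, 2)) + eps)
    # 2) Channel normalization: sqrt(C) * s / ||s||_2, written via the channel mean.
    s_hat = s / np.sqrt(np.mean(s ** 2) + eps)
    # 3) Gating adaptation: 1 + tanh(...) so that gamma = beta = 0 is the identity.
    gate = 1.0 + np.tanh(gamma * s_hat + beta)
    return x * gate[:, None, None]

# Hypothetical usage on a random 4-channel feature map.
rng = np.random.default_rng(0)
feat = rng.standard_normal((4, 8, 8))
ones, zeros = np.ones(4), np.zeros(4)
print(np.allclose(gct(feat, ones, zeros, zeros), feat))  # True: identity at initialization
```

The gate lies in (0, 2), so a channel can be suppressed or amplified relative to the others, and the normalization step makes channels compete with one another rather than being scaled independently.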

4. Experiments and Results

4.1. Simulated Data

4.1.1. Data Construction

4.1.2. Recognition Result

4.2. Ground-Based MMW Radar Experiment Data

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Simulation Parameter | Value |
|---|---|
| Radar Center Frequency | 10 GHz |
| Radar Bandwidth | 1.5 GHz |
| Target Rotation Angle | 0–360° |
| Target Range | 20 km |
| Target Height | 1–14 km |
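The simulation parameters above fix the range resolution directly, while the cross-range resolution depends on the rotation angle accumulated within one coherent processing interval, which the table does not give (0–360° is the aspect coverage of the dataset). The sketch below evaluates the standard ISAR relations ρ_r = c/(2B) and ρ_a = λ/(2Δθ); the 3° integration angle is an illustrative assumption, not a value from the paper.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def range_resolution(bandwidth_hz):
    """Slant-range resolution of a wideband radar: c / (2B)."""
    return C / (2.0 * bandwidth_hz)

def cross_range_resolution(center_freq_hz, delta_theta_rad):
    """ISAR cross-range resolution: lambda / (2 * delta_theta)."""
    wavelength = C / center_freq_hz
    return wavelength / (2.0 * delta_theta_rad)

# Values from the simulation table; the 3-degree integration angle is an assumption.
print(f"wavelength       : {C / 10e9 * 100:.2f} cm")
print(f"range resolution : {range_resolution(1.5e9):.3f} m")
print(f"cross-range (3°) : {cross_range_resolution(10e9, math.radians(3.0)):.3f} m")
```

This gives a wavelength of about 3 cm and a range resolution of about 0.1 m; with the assumed 3° rotation the cross-range resolution is roughly 0.29 m, so about 8.6° of rotation would be needed for square resolution cells.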

| Method | a-AP | b-AP | c-AP | d-AP | e-AP | f-AP | Acc |
|---|---|---|---|---|---|---|---|
| ResNet34 | 51.25% | 79.28% | 43.45% | 26.68% | 80.77% | 68.35% | 58.30% |
| ResNet50 | 54.76% | 89.12% | 49.87% | 30.41% | 82.30% | 72.07% | 63.13% |
| InceptionNet | 56.66% | 87.12% | 51.01% | 30.98% | 85.30% | 79.57% | 65.11% |
| EfficientNet | 80.86% | 90.32% | 57.76% | 58.32% | 90.47% | 92.37% | 78.35% |
| MVFRnet | 93.53% | 97.76% | 94.32% | 93.89% | 97.60% | 94.64% | 95.29% |

| Method | a-AP | b-AP | c-AP | d-AP | e-AP | f-AP | Acc |
|---|---|---|---|---|---|---|---|
| ResNet34 | 29.12% | 67.35% | 21.83% | 16.87% | 76.65% | 61.15% | 45.50% |
| ResNet50 | 30.01% | 72.45% | 30.47% | 17.98% | 77.41% | 60.45% | 48.13% |
| InceptionNet | 40.45% | 80.17% | 36.74% | 36.18% | 77.73% | 64.96% | 56.04% |
| EfficientNet | 41.71% | 85.69% | 40.44% | 37.64% | 80.46% | 70.48% | 59.43% |
| MVFRnet | 90.47% | 92.19% | 92.48% | 90.67% | 94.76% | 92.31% | 92.14% |

| Parameter | Value |
|---|---|
| Start frequency | 77 GHz |
| Frequency slope | 68.70 MHz/μs |
| Bandwidth | 3.52 GHz |
| Sampling rate | 5000 ksps |
| Pulse duration | 51.20 μs |
| Pulse interval | 2 ms |
| Radar 1 distance | 2.6 m |
| Radar 2 distance | 3 m |
| Turntable speed | 60 s/rad |
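The measured-data parameters are consistent with a linear FMCW chirp: the swept bandwidth equals slope × ramp duration (68.70 MHz/μs × 51.20 μs ≈ 3.52 GHz), and the ramp holds 5000 ksps × 51.20 μs = 256 ADC samples. The sketch below works through that arithmetic, assuming the "pulse duration" is the sampled ramp and the Radar 1 and Radar 2 distances are radar-to-target ranges; the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s

def chirp_bandwidth(slope_hz_per_s, ramp_s):
    """Swept bandwidth of a linear FMCW chirp: B = S * T."""
    return slope_hz_per_s * ramp_s

def beat_frequency(distance_m, slope_hz_per_s):
    """Beat (IF) frequency of a point target at range R: f_b = 2 * R * S / c."""
    return 2.0 * distance_m * slope_hz_per_s / C

slope = 68.70e6 / 1e-6  # 68.70 MHz/us expressed in Hz/s
ramp = 51.20e-6         # 51.20 us
fs = 5000e3             # 5000 ksps

B = chirp_bandwidth(slope, ramp)  # about 3.517e9 Hz, the 3.52 GHz table entry
n_adc = round(fs * ramp)          # ADC samples per chirp
rho_r = C / (2.0 * B)             # range resolution in metres
print(f"bandwidth {B / 1e9:.2f} GHz, {n_adc} samples, resolution {rho_r * 100:.2f} cm")
for name, r in (("Radar 1", 2.6), ("Radar 2", 3.0)):
    print(name, f"{beat_frequency(r, slope) / 1e6:.2f} MHz")
```

Under these assumptions the range resolution is about 4.3 cm, and both targets produce beat frequencies near 1.2–1.4 MHz, well inside the 5 MHz sampling rate.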

| Model | ResNet34 | ResNet50 | InceptionNet | EfficientNet | MVFRnet (5-View) |
|---|---|---|---|---|---|
| Probability (Radar 1) | | | | | |
| Probability (Radar 2) | | | | | |

| N-View Fusion | N = 2 | N = 3 | N = 4 | N = 5 | N = 6 | N = 7 | N = 8 | N = 9 |
|---|---|---|---|---|---|---|---|---|
| Probability | | | | | | | | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, X.; Ran, J.; Wen, Y.; Wei, S.; Yang, W. MVFRnet: A Novel High-Accuracy Network for ISAR Air-Target Recognition via Multi-View Fusion. Remote Sens. 2023, 15, 3052. https://doi.org/10.3390/rs15123052