Cropland Data Extraction in Mekong Delta Based on Time Series Sentinel-1 Dual-Polarized Data

Abstract

1. Introduction

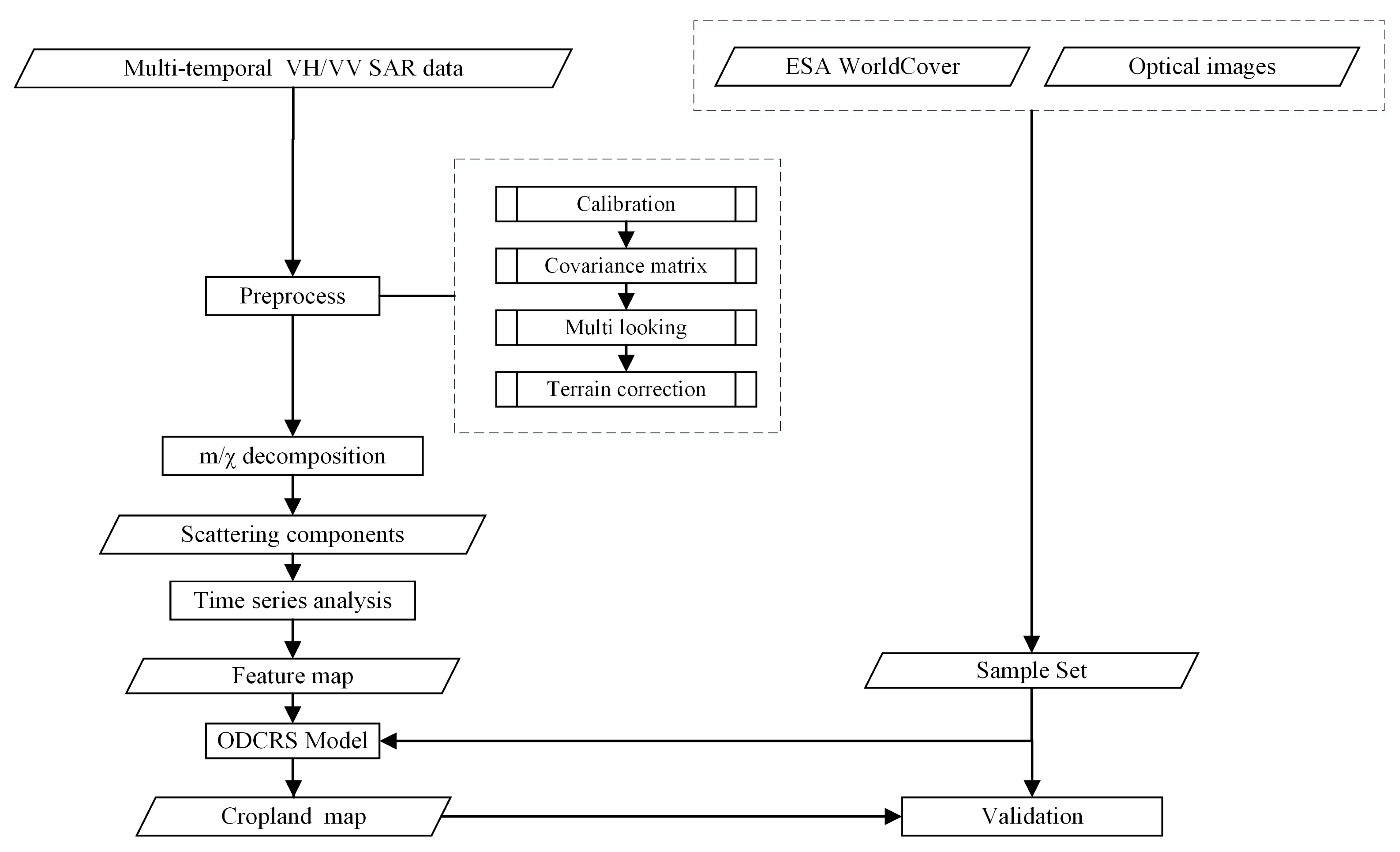

- A temporal statistical feature that carries both amplitude and phase information is extracted to distinguish cropland from other ground objects: the temporal mean of the three components obtained from the m/χ decomposition and smoothed with the Savitzky–Golay filter;

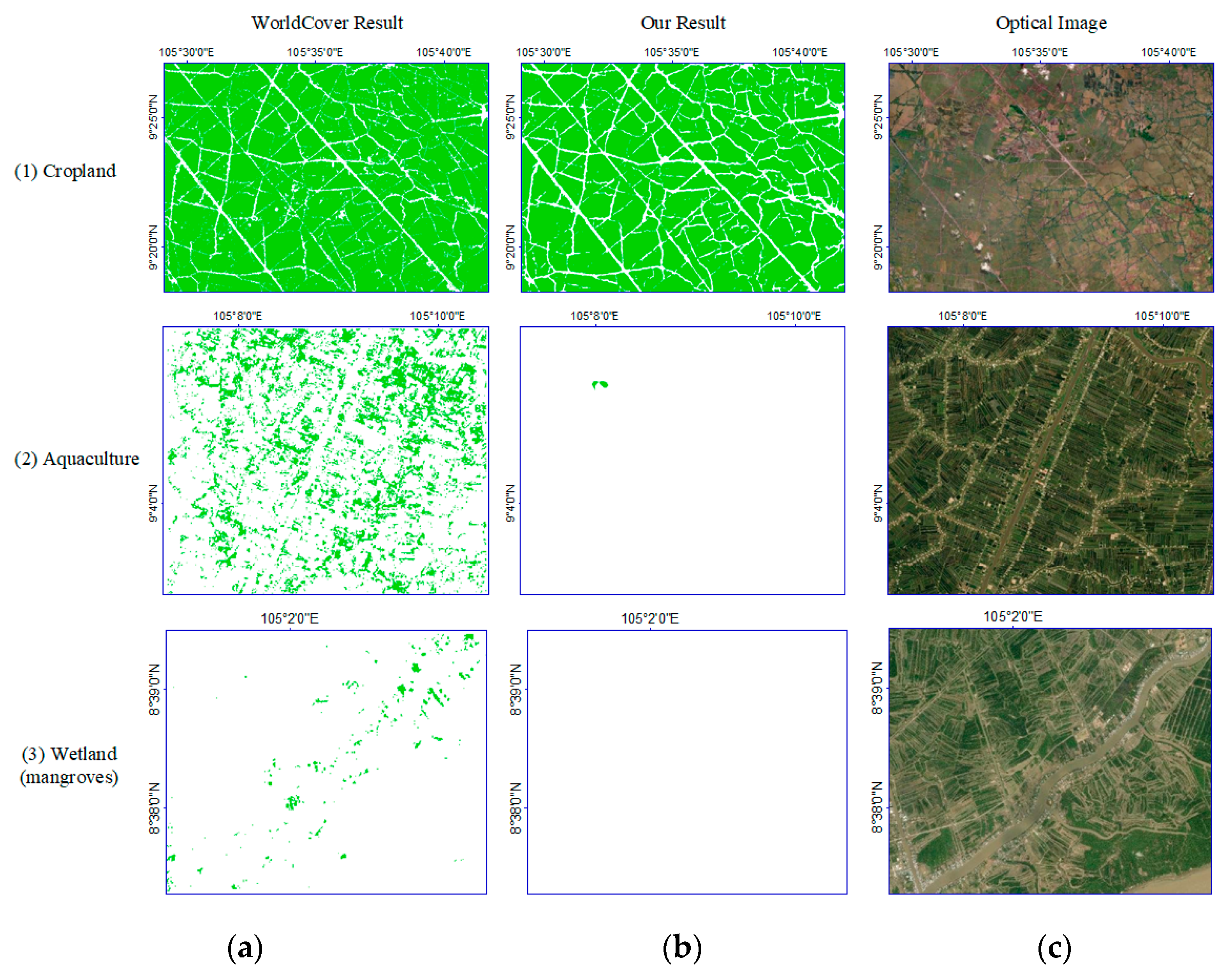

- To address the difficulty of distinguishing similar ground objects and the insufficient description of land details in cropland extraction, a new segmentation model, ODCRS, is designed based on omni-dimensional dynamic convolution (ODConv). Unlike conventional convolutional networks, the convolutional layers of ODCRS apply four complementary attention mechanisms to the convolutional kernels (location-wise, channel-wise, filter-wise, and kernel-wise), which helps the network capture rich contextual information and strengthens its feature extraction ability. As a result, the model can separate easily confused ground objects, such as cropland, aquaculture areas, and wetlands, while preserving the edge details of features.

2. Materials and Methods

2.1. Study Site

2.2. Experiment Data and Sample Data

2.3. Methods

2.3.1. Temporal Features Analysis and Extraction
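The temporal feature named in the Introduction (m/χ components, Savitzky–Golay smoothing, temporal mean) can be illustrated with a minimal sketch. It is not the authors' implementation: the Stokes-vector sign convention, the window length, the polynomial order, and the function names `m_chi_components` and `temporal_mean_feature` are all assumptions made for illustration, and the input is synthetic.

```python
import numpy as np
from scipy.signal import savgol_filter


def m_chi_components(c11, c22, c12):
    """Return the three m/chi components stacked as (3, ...).

    c11, c22: real diagonal elements of the C2 matrix; c12: complex off-diagonal.
    The sign of g3 depends on the polarization convention (assumed here).
    """
    eps = 1e-12
    g0 = c11 + c22                      # total power
    g1 = c11 - c22
    g2 = 2.0 * np.real(c12)
    g3 = -2.0 * np.imag(c12)
    m = np.clip(np.sqrt(g1**2 + g2**2 + g3**2) / (g0 + eps), 0.0, 1.0)
    sin_2chi = np.clip(-g3 / (m * g0 + eps), -1.0, 1.0)
    even = np.sqrt(m * g0 * (1.0 + sin_2chi) / 2.0)    # double-bounce
    volume = np.sqrt(g0 * (1.0 - m))                   # depolarized part
    odd = np.sqrt(m * g0 * (1.0 - sin_2chi) / 2.0)     # single-bounce
    return np.stack([even, volume, odd], axis=0)


def temporal_mean_feature(c11, c22, c12, window_length=5, polyorder=2):
    """Smooth each component along time (axis 1), then average over time.

    Inputs have shape (T, H, W); the result has shape (3, H, W).
    window_length and polyorder are illustrative values, not from the paper.
    """
    comps = m_chi_components(c11, c22, c12)            # (3, T, H, W)
    smoothed = savgol_filter(comps, window_length, polyorder, axis=1)
    return smoothed.mean(axis=1)


# Synthetic stack with 18 acquisitions on a 4 x 4 pixel tile.
rng = np.random.default_rng(0)
T, H, W = 18, 4, 4
c11 = rng.gamma(2.0, 0.05, size=(T, H, W))
c22 = rng.gamma(2.0, 0.01, size=(T, H, W))
# Keep |c12|^2 <= c11 * c22 so each C2 matrix stays positive semi-definite.
rho = 0.5 * np.exp(1j * rng.uniform(-np.pi, np.pi, size=(T, H, W)))
c12 = rho * np.sqrt(c11 * c22)

feature = temporal_mean_feature(c11, c22, c12)
print(feature.shape)
```

In this sketch the squared components sum to the total power `c11 + c22` at every pixel and date, which gives a quick sanity check before the temporal filtering step.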

2.3.2. ODCRS Model
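To make the four kernel attentions mentioned in the Introduction concrete, the following NumPy sketch builds an input-dependent kernel in the spirit of ODConv (Li et al., 2022) and applies it to one feature map. It is a simplified illustration under stated assumptions, not the ODCRS architecture: the attention heads are untrained random matrices, and the names `odconv_kernel`, `conv2d_same`, and `heads` are hypothetical. The mapping assumed here is location-wise = spatial positions of the kernel, channel-wise = input channels, filter-wise = output channels, kernel-wise = the bank of candidate kernels.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def odconv_kernel(x, kernels, heads):
    """Aggregate n candidate kernels into one input-dependent kernel.

    x:       (c_in, H, W) input feature map
    kernels: (n, c_out, c_in, k, k) bank of candidate kernels
    heads:   dict of weight matrices (hypothetical names) for the squeeze
             layer and the four attention heads
    """
    n, c_out, c_in, k, _ = kernels.shape
    squeezed = x.mean(axis=(1, 2))                      # global average pooling
    hidden = np.maximum(heads["fc"] @ squeezed, 0.0)    # fully connected + ReLU

    a_spatial = sigmoid(heads["spatial"] @ hidden).reshape(1, 1, 1, k, k)
    a_channel = sigmoid(heads["channel"] @ hidden).reshape(1, 1, c_in, 1, 1)
    a_filter = sigmoid(heads["filter"] @ hidden).reshape(1, c_out, 1, 1, 1)
    a_kernel = softmax(heads["kernel"] @ hidden).reshape(n, 1, 1, 1, 1)

    # Each attention rescales the kernel bank along its own dimension.
    modulated = a_kernel * a_filter * a_channel * a_spatial * kernels
    return modulated.sum(axis=0)                        # (c_out, c_in, k, k)


def conv2d_same(x, weight):
    """Naive stride-1 cross-correlation with zero padding (odd k only)."""
    c_out, c_in, k, _ = weight.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, H, W = x.shape
    out = np.zeros((c_out, H, W))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + H, j:j + W]             # (c_in, H, W)
            out += np.einsum("oc,chw->ohw", weight[:, :, i, j], patch)
    return out


rng = np.random.default_rng(0)
c_in, c_out, k, n, r = 3, 8, 3, 4, 4                    # illustrative sizes
x = rng.normal(size=(c_in, 16, 16))
kernels = rng.normal(size=(n, c_out, c_in, k, k))
heads = {
    "fc": rng.normal(size=(r, c_in)),
    "spatial": rng.normal(size=(k * k, r)),
    "channel": rng.normal(size=(c_in, r)),
    "filter": rng.normal(size=(c_out, r)),
    "kernel": rng.normal(size=(n, r)),
}
w = odconv_kernel(x, kernels, heads)
y = conv2d_same(x, w)
print(w.shape, y.shape)
```

Because the kernel is recomputed from each input, two different image tiles are convolved with different weights, which is the property the ODConv-based layers rely on.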

2.3.3. Model Accuracy Evaluation
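The three scores reported in the results tables (Accuracy, MIoU, and MPA) are commonly derived from a pixel-level confusion matrix. The sketch below uses those common definitions; the exact formulas used by the authors are not reproduced in this text, so the definitions and the helper names `confusion_matrix` and `segmentation_scores` should be read as assumptions. The labels are toy data.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the reference class, columns the predicted class."""
    idx = num_classes * y_true.ravel() + y_pred.ravel()
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def segmentation_scores(cm):
    """Return (accuracy, MPA, MIoU) from a confusion matrix.

    Assumes every class occurs at least once in the reference labels,
    so that no per-class denominator is zero.
    """
    tp = np.diag(cm).astype(float)
    n_true = cm.sum(axis=1)             # reference pixels per class
    n_pred = cm.sum(axis=0)             # predicted pixels per class
    accuracy = tp.sum() / cm.sum()
    mpa = np.mean(tp / n_true)          # mean per-class pixel accuracy
    miou = np.mean(tp / (n_true + n_pred - tp))
    return float(accuracy), float(mpa), float(miou)


# Toy two-class example: 0 = other, 1 = cropland.
y_true = np.array([[0, 0, 1, 1],
                   [0, 1, 1, 1]])
y_pred = np.array([[0, 1, 1, 1],
                   [0, 0, 1, 0]])
cm = confusion_matrix(y_true, y_pred, num_classes=2)
accuracy, mpa, miou = segmentation_scores(cm)
print(round(accuracy, 4), round(mpa, 4), round(miou, 4))  # 0.625 0.6333 0.45
```

MIoU penalizes both missed and falsely detected pixels of each class, so it is usually lower than Accuracy and MPA, which matches the ordering seen in the reported tables.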

3. Experimental Results

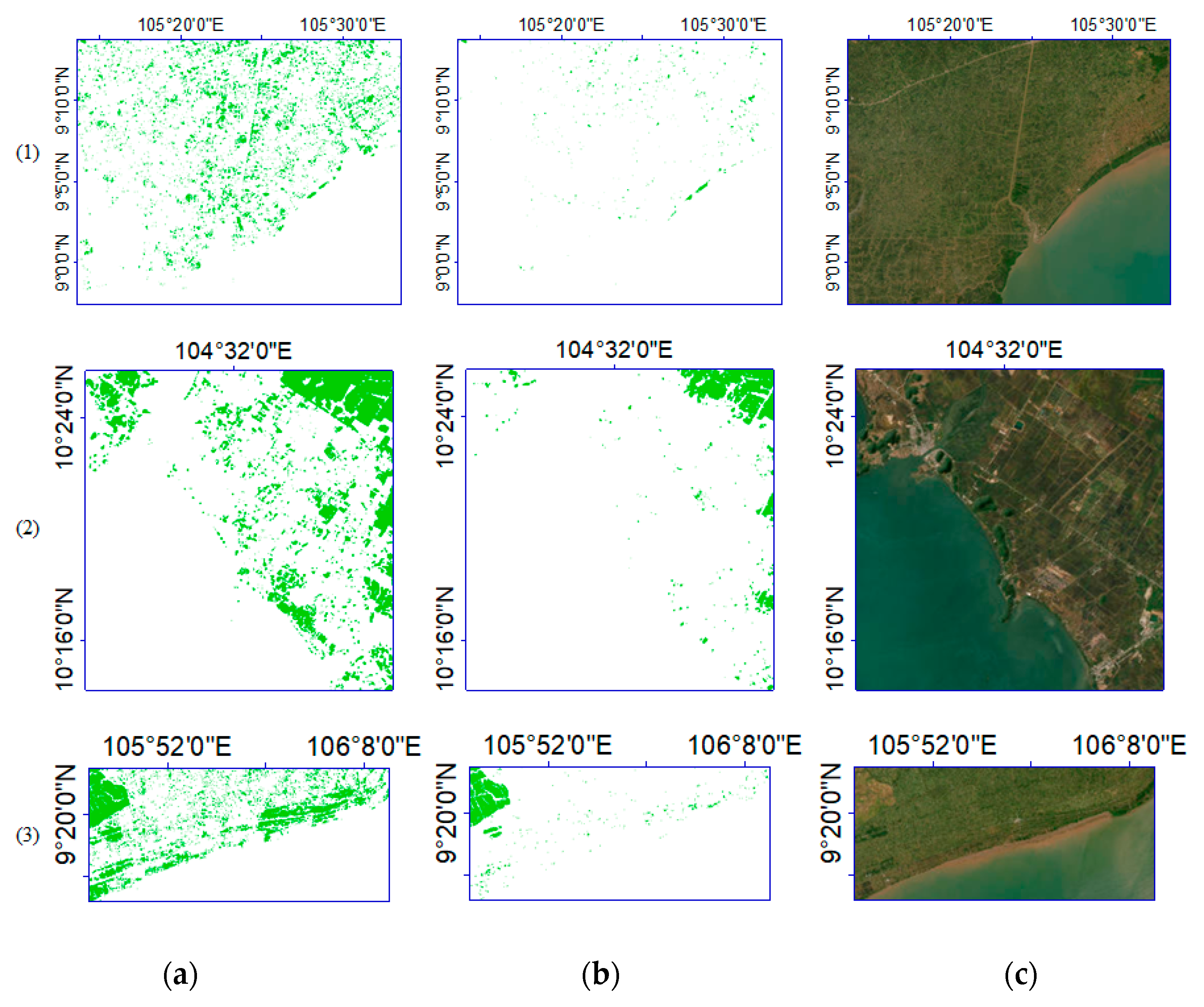

3.1. Effect of Pre- and Post-Filtering Features on Extraction Results

3.2. Model Accuracy Evaluation Results

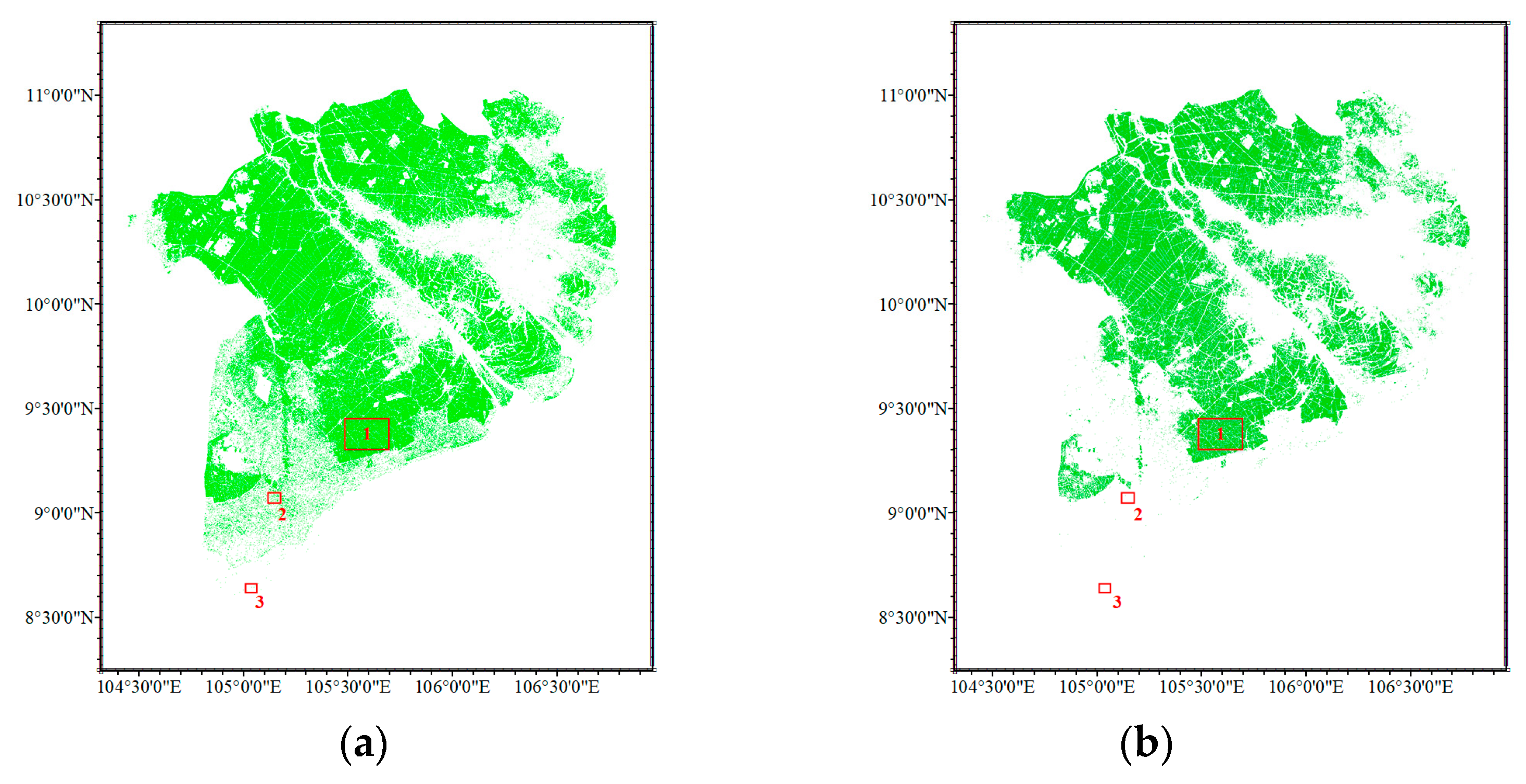

3.3. Analysis of Extraction Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations and Acronyms

| Abbreviation | Definition |
|---|---|
| SAR | Synthetic aperture radar |
| Dual-pol | Dual-polarimetric |
| ODCRS Model | Omni-dimensional Dynamic Convolution Residual Segmentation Model |
| MIoU | Mean intersection over union |
| MPA | Mean pixel accuracy |
| ESA | European Space Agency |
| GRD | Ground Range Detected |
| SLC | Single Look Complex |
| C2 matrix | Two-dimensional covariance matrix |
| ROI | Region of interest |
| S–G filter | Savitzky–Golay filter |


| Number | Date (Orbit-Frame 26–23) | Date (Orbit-Frame 26–28) | Date (Orbit-Frame 128–29) |
|---|---|---|---|
| 1 | 8 May 2020 | 8 May 2020 | 3 May 2020 |
| 2 | 20 May 2020 | 20 May 2020 | 15 May 2020 |
| 3 | 1 June 2020 | 1 June 2020 | 27 May 2020 |
| 4 | 13 June 2020 | 13 June 2020 | 8 June 2020 |
| 5 | 25 June 2020 | 25 June 2020 | 20 June 2020 |
| 6 | 7 July 2020 | 7 July 2020 | 2 July 2020 |
| 7 | 19 July 2020 | 19 July 2020 | 14 July 2020 |
| 8 | 31 July 2020 | 31 July 2020 | 26 July 2020 |
| 9 | 12 August 2020 | 12 August 2020 | 7 August 2020 |
| 10 | 24 August 2020 | 24 August 2020 | 19 August 2020 |
| 11 | 5 September 2020 | 5 September 2020 | 31 August 2020 |
| 12 | 17 September 2020 | 17 September 2020 | 12 September 2020 |
| 13 | 29 September 2020 | 29 September 2020 | 24 September 2020 |
| 14 | 11 October 2020 | 11 October 2020 | 6 October 2020 |
| 15 | 23 October 2020 | 23 October 2020 | 18 October 2020 |
| 16 | 4 November 2020 | 4 November 2020 | 30 October 2020 |
| 17 | 16 November 2020 | 16 November 2020 | 11 November 2020 |
| 18 | 28 November 2020 | 28 November 2020 | 23 November 2020 |

| Layer | Encoder (C × H × W) | Decoder (C × H × W) |
|---|---|---|
| Layer1 | 64 × 128 × 128 | 64 × 128 × 128 |
| Layer2 | 256 × 64 × 64 | 128 × 64 × 64 |
| Layer3 | 512 × 32 × 32 | 256 × 32 × 32 |
| Layer4 | 1024 × 16 × 16 | 512 × 16 × 16 |
| Layer5 | 2048 × 8 × 8 | |

| Feature | Epoch | Accuracy | MIoU | MPA |
|---|---|---|---|---|
| | 30 | 93.02% | 86.47% | 92.68% |
| | 30 | 93.27% | 86.99% | 93.09% |

| Model | Epoch | Accuracy | MIoU | MPA |
|---|---|---|---|---|
| UNet | 50 | 91.71% | 84.23% | 91.57% |
| ResU-Net | 50 | 93.72% | 87.80% | 93.57% |
| ODCRS | 50 | 93.85% | 88.04% | 93.70% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, J.; Zhang, H.; Ge, J.; Sun, C.; Xu, L.; Wang, C. Cropland Data Extraction in Mekong Delta Based on Time Series Sentinel-1 Dual-Polarized Data. Remote Sens. 2023, 15, 3050. https://doi.org/10.3390/rs15123050
