Abstract

Deep learning-based ship detection in synthetic aperture radar (SAR) imagery is an active research area in SAR processing. Recently, ship detection in SAR images has achieved continuous breakthroughs in detection precision. However, striking a better balance between the precision and the complexity of the algorithm is essential for real-time object detection in real SAR application scenarios, and has attracted extensive attention from scholars. In this paper, a lightweight object detection framework for radar ship detection with multiple hybrid attention mechanisms, named the multiple hybrid attentions ship detector (MHASD), is proposed. It aims to reduce complexity without loss of detection precision. First, considering that ship features in SAR images are inconspicuous compared with those in other images, a hybrid attention residual module (HARM) is developed in the deep-level layer to obtain features rapidly and effectively via local channel attention and parallel self-attentions, while maintaining the high detection precision of the model. Second, an attention-based feature fusion scheme (AFFS) is proposed in the model neck to further enhance the features of the object. Within the AFFS, a new hybrid attention feature fusion module (HAFFM) is built upon local channel and spatial attentions to guarantee the applicability of the detection model. Experimental results on the Large-Scale SAR Ship Detection Dataset-v1.0 (LS-SSDD-v1.0) demonstrate that MHASD can balance detection speed and precision (improving average precision by 1.2% and achieving 13.7 GFLOPS). More importantly, extensive experiments on the SAR Ship Detection Dataset (SSDD) demonstrate that the proposed method is less affected by background such as ports and rocks.

1. Introduction

Synthetic aperture radar (SAR) has evolved into a crucial active microwave remote imaging sensor due to its operational characteristics of high resolution and its ability to work around the clock and in all weather conditions [1,2]. SAR remote sensing images have a wide range of potential applications, including resource survey, disaster evaluation, marine supervision, and environmental monitoring [3,4,5]. Ship detection is one of the important applications in maritime supervision, marine traffic control and marine environmental protection. Numerous object detection techniques have achieved outstanding detection performances since the creation and advancement of the convolutional neural network (CNN). Ship detection has been a major topic in the world of SAR image processing. However, there are still a lot of issues that need to be resolved for the CNN-based SAR object detection tasks because of the variety of ships and interference from background and noise.

The candidate region-based and regression-based methods, which are also known as two-stage and one-stage detection models, respectively, are two categories into which CNN-based object detection techniques can be subdivided. The candidate region-based method first extracts all the regions of interest (ROI) roughly, and then realizes the accurate localization and classification of the object. In the early years, candidate region-based methods developed rapidly due to their high precision. To name just a few, aiming at the complex rotation motions of SAR ships, Li et al. [6] proposed a two-stage spatial-frequency features fusion network, which obtains the ships’ rotation-invariant features in the frequency domain through the polar Fourier transform. Zhang et al. [7] proposed a novel quad-feature pyramid network (Quad-FPN), which aims to efficiently emphasize multi-scale ship object features while suppressing background interference. Considering interference of the diversiform scattering and complex backgrounds in SAR imagery, Li et al. [8] designed an adjacent feature fusion (AFF) module to selectively and adaptively fuse shallow features into adjacent high-level features. To solve the unfocused images caused by motion, Zhou et al. [9] proposed a multilayer feature pyramid network, which builds a feature matrix to describe the motion states of ship objects via the Doppler center frequency and offset of frequency modulation rate.

Differing from the candidate region-based (two-stage) methods, the regression-based (one-stage) detection framework is relatively simple and can realize object localization and classification in one step. Therefore, the one-stage methods are particularly appropriate for real-time object detection tasks. For instance, Li et al. [10] combined the architecture of YOLOv3 and spatial pyramid pooling to establish a one-stage network, aiming to break the scale restrictions of the object. Aiming at the challenge of arbitrary ship directions, Jiang et al. [11] proposed a long-edge decomposition rotated bounding box (RBB) encoder, which takes the horizontal and vertical components calculated by the orthogonal decomposition of the long-edge vector to represent the orientation information. Unfortunately, the detection accuracy decreases to varying degrees as the detection speed increases. For this reason, scholars have explored how to strike a balance between models’ effects and speed. You only look once (YOLO) is a representative detection framework with prominent effects and competitive speed, and it has occupied a place in the object detection field. On the basis of YOLO, new detection methods have been proposed in succession. For example, in view of the poor detection performance on small-scale objects, Gao et al. [12] developed scale-equalizing pyramid convolution (SEPC) to replace the path aggregation network (PAN) structure in the YOLOv4 framework. Considering the difference in the scattering properties between ships and sea clutter, Tang et al. [13] first designed a Salient Otsu (S-Otsu) threshold segmentation method to reduce noise, and then proposed two main modules, i.e., a feature enhancement module and a land burial module, to enhance the ship features and restrain the background clutter, respectively. With the goal of detecting small-scale objects against complex backgrounds, Yu et al. [14] proposed a step-wise locating bidirectional pyramid network, which can effectively locate small-scale objects via global and local channel attentions. For ease of deployment on satellites, Xu et al. [15] devised a lightweight airborne SAR ship detector based on YOLOv5, which introduced a histogram-based pure background classification (HPBC) module to filter out pure background samples.

Although the detection methods mentioned above have achieved relatively good performance, the problem of SAR ship detection has not been well solved due to the multi-scale nature and weak saliency of the target features and the complex background noise. Recently, the emergence of the attention mechanism has provided a useful way to enhance the effectiveness of ship detection in SAR imagery [16]. In particular, the attention mechanism is very effective at enhancing object features and suppressing background noise. According to their working principles, attention modules can be broadly categorized into three groups: spatial, channel and hybrid attentions, where hybrid attention simultaneously considers spatial and channel information. Some hybrid attention-based detection methods have emerged recently and have further improved detection performance, such as YOLO-DSD [17], CAA-YOLO [18] and FEHA-OD [19]. However, computing resources, including memory capacity and computing power, are limited in practical applications, while the demand for inference speed is high. There is an urgent need to design a lightweight framework with outstanding detection performance. More importantly, most of the existing attention-based detection methods employ common channel and spatial attention mechanisms to emphasize ships according to the global relations among features [20]. As a matter of fact, global attention modules are computationally more expensive than local attention ones. As a consequence, a major obstacle in the current SAR ship detection task is how to achieve a compromise between accuracy and speed.

To address the aforementioned issue, this paper proposes a lightweight radar ship detection framework with hybrid attention mechanisms for SAR imagery. In light of the high precision and rapid inference speed of YOLOv5 [21], the designed method takes YOLOv5 as the baseline. First, a hybrid attention residual module is created to enhance the model’s feature learning capabilities and ensure high detection precision. Second, an attention-based feature fusion scheme is introduced to further highlight the features of the object. At the same time, considering the uniformity and redundancy of the convolutions, the hybrid attention feature fusion module is used in the attention-based feature fusion scheme to ensure the model’s practicability and efficiency. The main contributions of this paper are as follows:

- This paper designs a novel lightweight radar ship detector with multiple hybrid attention mechanisms, named the multiple hybrid attentions ship detector (MHASD). It is proposed to obtain high detection precision while achieving fast inference for SAR ship detection. Extensive qualitative and quantitative experiments on two benchmark SAR ship detection datasets reveal that the designed method strikes a better balance between speed and precision than some state-of-the-art approaches.

- Considering the inconspicuous features of the ship object and strong background clutter in SAR images, a hybrid attention residual module (HARM) is developed, which enhances the features of ship objects in both channel and spatial levels by means of local attention and global attention integration to ensure high detection precision.

- To further enhance the discriminability of ship object features, an attention-based feature fusion scheme (AFFS) is developed in the model neck. Meanwhile, to constrain the model’s computational complexity, a novel module called the hybrid attention feature fusion module (HAFFM) is introduced to keep the AFFS component lightweight.

2. Methodology

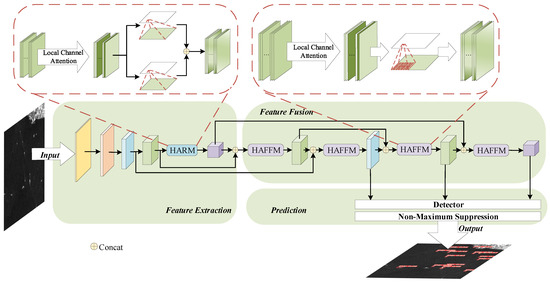

The proposed method obtains diversified features via CSP-DarkNet and fuses diverse features to obtain multi-scale receptive fields using the feature pyramid network (FPN) [22]. According to the difference in modules’ functions, the proposed framework is composed of three components, i.e., feature extraction, feature fusion, and object prediction, as shown in Figure 1. Furthermore, this paper designs multiple novel attention-based modules during the feature extraction and fusion to enhance ships’ features and ensure the multi-scale ship detection competency in the SAR images. Each component of the designed method is described in detail below.

Figure 1. Framework of the multiple hybrid attentions ship detector.

2.1. Feature Extraction Module

Due to its excellent feature learning capabilities, the CNN has been extensively employed as a feature embedding model, but its obvious deficiency is that convolutions sample every position on the feature map with undifferentiated weights [23]. That is to say, the convolution operation cannot suppress background interference while focusing on the object’s region of interest. This deficiency prevents CNN-based SAR ship detection from effectively extracting discriminative object features. In addition, a CNN extracts deep semantic information by stacking multiple convolutional layers, whereas objects in large-scale SAR scenes appear at multiple scales. A network with many layers therefore runs the risk of losing small-scale target properties during information transmission, and it also makes the model more complex. To address these issues, this work develops a novel hybrid attention residual module (HARM) to suppress background clutter and enhance object-relevant information. As shown in Figure 2, the proposed HARM primarily consists of local channel and global spatial attention operations.

Figure 2. Architecture of the HARM.

Considering that the shape information of the object in SAR images is inconspicuous, the proposed HARM first enhances the object features at the channel level. Generally, channel attention is realized through the global correlation among all channel features. However, the correlation between distant channels is weak, whereas the correlation between nearby channels is strong. Since the features of ship objects are inconspicuous in SAR images and the influence of noise is large, we argue that the correlation between long-distance channels may not be important for ship detection in SAR images. In the attention operation, the range of local channels depends on the channel dimension. Consequently, drawing on the idea of efficient channel attention (ECA) [24], the proposed HARM first focuses on some vital channels according to local channel correlation. Assume that k is the kernel size of a one-dimensional convolution. The value of k is determined by a nonlinear mapping of the feature’s channel dimension C. Mathematically, the channel selection expression is as follows [24]:

$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{odd} \tag{1}$$

where $b$ and $\gamma$ are coefficients set to 1 and 2, respectively, and $|\cdot|_{odd}$ means to take the odd number closest to the result of the operation.
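The kernel-size rule described above, with the two coefficients set to 1 and 2, can be sketched in a few lines. This is a minimal illustration rather than the authors' code: the function name `eca_kernel_size` and the parameter names `gamma` and `b` are assumptions borrowed from the ECA convention, and the odd-rounding step (truncate, then add 1 if even) follows the commonly used ECA reference implementation, which is one way to realise "the nearest odd number".

```python
import math


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1D-convolution kernel size for a given channel dimension C.

    Assumed rounding rule: truncate (log2(C) + b) / gamma to an integer,
    then move to the next odd number if the result is even.
    """
    t = int(abs((math.log2(channels) + b) / gamma))
    # An odd kernel has a well-defined centre channel.
    return t if t % 2 else t + 1


if __name__ == "__main__":
    for c in (64, 128, 256, 512, 1024):
        print(f"C = {c:4d} -> k = {eca_kernel_size(c)}")
```

Under these assumptions the kernel grows only logarithmically with the channel count, so each channel interacts with a handful of neighbours even in the deepest layers.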

Furthermore, considering the small-scale characteristics of SAR objects in large-scale scenes, this paper draws on the idea of multi-head self-attention (MHSA) in Transformer [25] to promote the effectiveness of feature extraction at the spatial dimension.

Differing from other examples of local spatial attention, MHSA captures the correlation of each pixel with other pixels. More significantly, this global spatial attention can obtain multiple groups of parameters to learn the ships’ features from various perspectives, which is conducive for SAR ship detection tasks of both inshore and offshore situations. Notably, the output of this self-attention module can be computed simultaneously and is independent of the prior output. That is to say, the operation speed is also faster.
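To make the idea of global spatial attention concrete, the following is a simplified sketch of multi-head self-attention over pixel tokens. It is not the HARM implementation: the learned query, key, value and output projections of a real MHSA layer are replaced by identity mappings (an assumption made to keep the example short), and the function names are hypothetical. Each pixel of the feature map is treated as one token whose vector holds its channel values.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Correlation of this pixel with every pixel (including itself).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


def multi_head_self_attention(tokens, heads):
    """Split the channels into `heads` groups, attend per group, concatenate."""
    d = len(tokens[0])
    assert d % heads == 0, "channel dimension must be divisible by the head count"
    step = d // heads
    head_outputs = [
        self_attention([t[h * step:(h + 1) * step] for t in tokens])
        for h in range(heads)
    ]
    # Heads do not depend on each other, so they could be computed in parallel.
    return [sum((ho[i] for ho in head_outputs), []) for i in range(len(tokens))]


if __name__ == "__main__":
    pixels = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
    for row in multi_head_self_attention(pixels, heads=2):
        print([round(v, 3) for v in row])
```

Because every output row depends only on the input tokens and not on previously computed outputs, all rows and all heads can be evaluated at the same time, which is the property the text refers to.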

Remarkably, the proposed HARM adopts the residual structure to promote the feature learning ability of the model. Differing from other residual modules, the proposed module replaces the original 3 × 3 convolution operation with a group of attention modules, which makes the network focus on the ship and reduces the loss of object information caused by convolution during sampling. In light of the abundant semantic features in the deep-level network, the proposed HARM is deployed in the deep layer of the feature extraction model.

2.2. Feature Fusion Module

The feature pyramid network (FPN) [22] is employed in the conventional feature fusion module to integrate multi-scale discriminative features. However, the FPN transfers information in only one direction, which limits its capability for feature fusion [26]. To address this limitation, this paper develops a new attention-based feature fusion module, which integrates the merits of attention and bidirectional transmission to efficiently fuse multi-scale features from the feature extraction module.

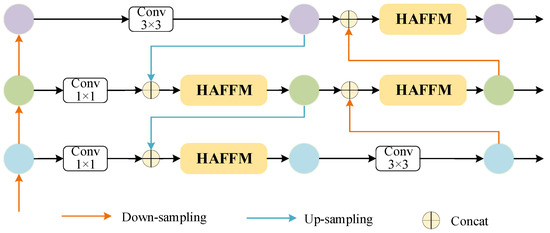

In the bidirectional feature pyramid network, the first feature pyramid transfers and fuses strong semantic features by up-sampling operations. The goal of the second pyramid is to convey strong localization features by down-sampling operations. The feature fusion module is built on a bidirectional pyramid network and an attention mechanism, as shown in Figure 3. To restrict the complexity of the model, the lightweight attention module, i.e., HAFFM, combines the local channel and the local spatial attentions, as depicted in Figure 4. The channel attention first squeezes the input feature $X$ into the channel descriptor $Z$ using the global average pooling [27] operation shown as Equation (2).

$$z_c = F_{sq}(x_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} x_c(i, j) \tag{2}$$

where $x_c$ is the c-th channel feature of the input feature $X$ with spatial size $H \times W$, $z_c$ is the c-th feature of $Z$, and $F_{sq}$ is the squeeze mapping.

Figure 3. The network architecture of the proposed AFFS.

Figure 4. Structure of the designed HAFFM.

Then, the local channel attention obtains the weights via a 1D convolution and the sigmoid function. The details are described as:

$$\omega = \sigma(\mathrm{C1D}_k(Z)) \tag{3}$$

where $\mathrm{C1D}_k$ is the 1D convolution operation whose kernel size is $k$, and $k$ is given by Equation (1). Meanwhile, $\sigma$ is the sigmoid function denoted as:

$$\sigma(x) = \frac{1}{1 + e^{-x}} \tag{4}$$

The output feature Y of the local channel attention is expressed as follows:

$$y_c = \omega_c \cdot x_c \tag{5}$$

where $y_c$ is the c-th channel feature of Y and $\omega_c$ is the weight of the c-th channel feature.
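The three steps of the local channel attention (squeeze by global average pooling, a 1D convolution across neighbouring channels followed by the sigmoid, and channel-wise reweighting) can be sketched as follows. This is an illustrative sketch only: the function name, the nested-list tensor layout, the zero padding at the channel borders and the example kernel weights are all assumptions, since in practice the kernel is learned during training.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def local_channel_attention(x, kernel):
    """Apply local channel attention to a feature map.

    x:      list of C channels, each an H x W nested list of floats.
    kernel: odd-length list of 1D convolution weights (hypothetical values).
    Returns the reweighted feature map and the per-channel weights.
    """
    # Squeeze: one global-average value per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # 1D convolution over the channel axis with zero padding (assumed).
    pad = len(kernel) // 2
    zp = [0.0] * pad + z + [0.0] * pad
    w = [
        sigmoid(sum(kernel[j] * zp[c + j] for j in range(len(kernel))))
        for c in range(len(z))
    ]
    # Reweight every pixel of channel c by its attention weight.
    y = [[[w[c] * v for v in row] for row in ch] for c, ch in enumerate(x)]
    return y, w


if __name__ == "__main__":
    feature = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(3)]
    _, weights = local_channel_attention(feature, kernel=[0.25, 0.5, 0.25])
    print([round(v, 4) for v in weights])
```

Only `len(kernel)` weights are needed regardless of the channel count, which is why this form of attention adds very few parameters.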

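Mathematically, the local spatial attention operation is expressed as follows:

$$M_s = \sigma(\mathrm{Conv}([\mathrm{Avgpool}(Y); \mathrm{Maxpool}(Y)])) \tag{6}$$

where $M_s$ is the resulting spatial attention map, $[\cdot\,;\cdot]$ denotes concatenation, Conv denotes the convolution operation with the kernel size of 7 × 7 and Avgpool and Maxpool denote average and maximum pooling operations [27], respectively.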
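A small sketch of the local spatial attention may help to visualise the operation. It assumes the CBAM-style convention, which the text does not spell out: average and maximum pooling are taken across the channel axis, the two resulting maps are convolved jointly (here expressed as one kernel per map, summed), and the sigmoid output multiplies the input feature. The function name, zero padding and kernel values are hypothetical.

```python
import math


def local_spatial_attention(y, kernel_avg, kernel_max):
    """Spatial attention on a C x H x W feature map stored as nested lists.

    kernel_avg / kernel_max: k x k weights applied to the average-pooled
    and max-pooled maps (k = 7 in the text); values here are illustrative.
    Returns the reweighted feature map and the H x W attention map.
    """
    C, H, W = len(y), len(y[0]), len(y[0][0])
    # Pool across channels to obtain two H x W descriptors.
    avg = [[sum(y[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(y[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    k = len(kernel_avg)
    pad = k // 2

    def at(m, i, j):
        # Zero padding outside the feature map (assumed).
        return m[i][j] if 0 <= i < H and 0 <= j < W else 0.0

    att = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for u in range(k):
                for v in range(k):
                    s += kernel_avg[u][v] * at(avg, i + u - pad, j + v - pad)
                    s += kernel_max[u][v] * at(mx, i + u - pad, j + v - pad)
            att[i][j] = 1.0 / (1.0 + math.exp(-s))
    out = [[[att[i][j] * y[c][i][j] for j in range(W)] for i in range(H)] for c in range(C)]
    return out, att


if __name__ == "__main__":
    k7 = [[1.0 / 49.0] * 7 for _ in range(7)]
    fmap = [[[0.0] * 5 for _ in range(5)] for _ in range(2)]
    fmap[0][2][2] = 4.0  # a single bright scatterer
    _, attention = local_spatial_attention(fmap, k7, k7)
    print([round(v, 3) for v in attention[2]])
```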

2.3. Prediction Module

Classification loss $L_{cls}$, objectness loss $L_{obj}$, and localization loss $L_{loc}$ make up the proposed MHASD’s loss function, which is denoted as:

$$L = L_{cls} + L_{obj} + L_{loc} \tag{7}$$

The classification and objectness losses are implemented with BCEWithLogitsLoss, which combines the binary cross entropy loss (BCELoss) function and the sigmoid function. The BCEWithLogitsLoss between x and z is described as:

$$l_i = -\left[ z_i \log \sigma(x_i) + (1 - z_i) \log\left(1 - \sigma(x_i)\right) \right] \tag{8}$$

where $l_i$ denotes the loss value of $x_i$ and $z_i$.
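As a hedged illustration of the binary cross entropy with logits used for the classification and objectness terms, the sketch below evaluates the element-wise loss for raw scores x and binary targets z. The function name and the mean reduction are assumptions (the mean is the default reduction in PyTorch); the rearranged formula is algebraically identical to applying the sigmoid followed by binary cross entropy but avoids overflow for large scores.

```python
import math


def bce_with_logits(x, z):
    """Element-wise binary cross entropy on logits, plus the mean loss.

    x: list of raw (pre-sigmoid) scores.
    z: list of targets in [0, 1].
    """
    losses = [
        # Stable form of -[z*log(sigmoid(x)) + (1-z)*log(1-sigmoid(x))].
        max(xi, 0.0) - xi * zi + math.log1p(math.exp(-abs(xi)))
        for xi, zi in zip(x, z)
    ]
    return losses, sum(losses) / len(losses)


if __name__ == "__main__":
    per_item, mean_loss = bce_with_logits([2.0, -1.0, 0.0], [1.0, 0.0, 1.0])
    print([round(v, 4) for v in per_item], round(mean_loss, 4))
```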

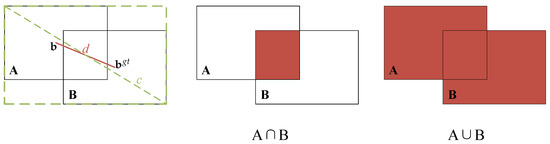

Because the orientation of SAR ship objects is generally arbitrary, it is effective to consider the consistency of aspect ratios between the predicted and the ground-truth boxes. Because it accounts for the box aspect ratio and converges quickly, the complete intersection over union (CIoU) [28] is exploited as the localization loss. Figure 5 illustrates the CIoU loss between the predicted box A and the ground-truth box B.

Figure 5. CIoU loss between the prediction box A and the ground-truth box B.

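Theoretically, the localization loss is defined as [28]:

$$L_{loc} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v \tag{9}$$

where $\rho(\cdot)$ stands for the Euclidean distance operation, $c$ is the diagonal length of the smallest enclosing box that encompasses the two boxes, and $b$ and $b^{gt}$ stand for the center points of the predicted and the ground-truth boxes. The $IoU$ is denoted [28]:

$$IoU = \frac{|A \cap B|}{|A \cup B|} \tag{10}$$

The $v$ measures the consistency of the aspect ratio, as shown [28]:

$$v = \frac{4}{\pi^2} \left( \arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h} \right)^2 \tag{11}$$

where $w$ and $w^{gt}$ are the widths of the predicted and the ground-truth boxes, and $h$ and $h^{gt}$ are the heights of the predicted and the ground-truth boxes, respectively.

The $\alpha$ is a positive trade-off parameter, as follows [28]:

$$\alpha = \frac{v}{(1 - IoU) + v} \tag{12}$$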
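The CIoU localization loss can be sketched for a single pair of axis-aligned boxes as follows. This is a minimal sketch under stated assumptions: boxes are given as (center x, center y, width, height), the function name is hypothetical, and a small `eps` is added to denominators to avoid division by zero, which is a common implementation detail rather than part of the definition.

```python
import math


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss between two boxes given as (cx, cy, w, h)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt
    # Corner coordinates of both boxes.
    p1x, p1y, p2x, p2y = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    g1x, g1y, g2x, g2y = gx - gw / 2, gy - gh / 2, gx + gw / 2, gy + gh / 2
    # Intersection over union.
    iw = max(0.0, min(p2x, g2x) - max(p1x, g1x))
    ih = max(0.0, min(p2y, g2y) - max(p1y, g1y))
    inter = iw * ih
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(p2x, g2x) - min(p1x, g1x)) ** 2 + (max(p2y, g2y) - min(p1y, g1y)) ** 2
    # Squared distance between the two center points.
    rho2 = (px - gx) ** 2 + (py - gy) ** 2
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4.0 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(pw / ph)) ** 2
    alpha = v / ((1.0 - iou) + v + eps)
    return 1.0 - iou + rho2 / (c2 + eps) + alpha * v


if __name__ == "__main__":
    print(round(ciou_loss((10.0, 10.0, 8.0, 4.0), (11.0, 10.0, 6.0, 4.0)), 4))
```

Unlike a plain IoU loss, the center-distance term still provides a useful signal when the boxes do not overlap at all, which is one reason CIoU tends to converge faster.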

3. Experimental Results

3.1. Dataset Description

Two benchmark SAR datasets, i.e., Large-Scale SAR Ship Detection Dataset-v1.0 (LS-SSDD-v1.0) [29] and SAR Ship Detection Dataset (SSDD) [30], are used to assess the effectiveness of the designed method.

- The LS-SSDD-v1.0 dataset is a public SAR ship dataset spanning 250 km. The size of each original SAR image is 24,000 × 16,000 pixels. Following the existing work [29], each SAR image is split into sub-images of 800 × 800 pixels. In this manner, 9000 sub-images are created, which are subsequently divided into the training and the test datasets in a 2:1 ratio.

- The SSDD dataset, which contains 1160 SAR images and a total of 2540 ships, is the first SAR dataset for ship detection that is publicly available. The resolution of the SAR images ranges from 1 to 15 m. The training and the test datasets are created from the original SAR dataset in an 8:2 ratio.
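The tiling and split figures quoted above can be checked with simple arithmetic. The sketch below is only a consistency check on the numbers given in the text; the helper names are hypothetical, and the scene count is derived from those numbers rather than stated in the text.

```python
def tiles_per_scene(width, height, tile):
    """Number of non-overlapping tile x tile sub-images in one scene."""
    return (width // tile) * (height // tile)


def split_counts(total, train_part, test_part):
    """Split `total` samples according to a train_part:test_part ratio."""
    train = total * train_part // (train_part + test_part)
    return train, total - train


if __name__ == "__main__":
    per_scene = tiles_per_scene(24000, 16000, 800)
    print("sub-images per scene:", per_scene)
    print("scenes implied by 9000 sub-images:", 9000 // per_scene)
    print("LS-SSDD-v1.0 train/test:", split_counts(9000, 2, 1))
    print("SSDD train/test:", split_counts(1160, 8, 2))
```

Each 24,000 × 16,000 scene yields a 30 × 20 grid of sub-images, so the 9000 sub-images correspond to 15 scenes under this tiling.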

3.2. Evaluation Indexes

The quality of the proposed MHASD is assessed in this section using a series of widely utilized indexes [31,32]. Precision (P) is the most intuitive metric for detection performance evaluation, denoted as:

$$P = \frac{TP}{TP + FP} \tag{13}$$

where $TP$ represents the number of ships correctly predicted as ships, and $FP$ is the number of backgrounds predicted as ships.

Since the imbalance of negative and positive samples significantly affects precision, another index, i.e., recall (R), is also utilized in this research, as shown in Equation (14),

$$R = \frac{TP}{TP + FN} \tag{14}$$

where $FN$ is the number of ships predicted as the background.

The area under the precision–recall curve is known as average precision (AP), which is a comprehensive assessment metric of detection effects.

$$AP = \int_0^1 P(R)\, dR \tag{15}$$

Due to the one-sidedness of a single index, F1 evaluates the detector more comprehensively according to the model’s recall and precision.

$$F1 = \frac{2 \times P \times R}{P + R} \tag{16}$$
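The evaluation indexes can be computed from detection counts as sketched below. The counts in the usage example are made up for illustration, the function names are hypothetical, and the area under the precision-recall curve is approximated with the trapezoidal rule, which is an assumption: benchmark toolkits often use interpolated variants instead.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true/false positives and false negatives."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(points):
    """Area under a precision-recall curve given as (recall, precision) pairs."""
    pts = sorted(points)
    area = 0.0
    for (r0, p0), (r1, p1) in zip(pts, pts[1:]):
        # Trapezoid between two neighbouring recall values.
        area += (r1 - r0) * (p0 + p1) / 2.0
    return area


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(precision_recall_f1(tp=80, fp=20, fn=20))
    print(average_precision([(0.0, 1.0), (0.5, 1.0), (1.0, 0.0)]))
```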

The number of model parameters and the giga floating point operations per second (GFLOPS) are computed in this study to assess the model’s complexity in space and time. The frames per second (FPS), a common unit for measuring how quickly a model infers, is used in this paper as well. Here, T is the inference time for a SAR image with a size of 24,000 × 16,000 pixels.

3.3. Experimental Settings

In this article, stochastic gradient descent (SGD) [33] is employed to optimize the proposed framework. The weight decay is set to 0.0005, the momentum factor to 0.937, and the initial learning rate to 0.01. The input image size is 640 × 640 pixels, and the batch size is 36. Training runs for 300 epochs. The confidence threshold is set to 0.001, and the intersection over union (IoU) threshold between the ground-truth and the predicted boxes is 0.5. All experiments are implemented in Python with the PyTorch framework. The hardware used in this work consists of an NVIDIA GeForce RTX 3080 Ti GPU and a 12th Gen Intel(R) Core(TM) i7-12700 2.10 GHz processor.
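To show how the three optimizer hyperparameters interact, the following sketch performs one SGD update with momentum and weight decay on a list of scalar parameters. It follows the plain (non-Nesterov, zero-dampening) formulation used by common deep learning frameworks and is an assumption about the update rule, not a description of the authors' training script; learning-rate scheduling and warm-up are omitted, and the function name is hypothetical.

```python
def sgd_step(params, grads, velocity, lr=0.01, momentum=0.937, weight_decay=0.0005):
    """One SGD update with momentum and L2 weight decay.

    params, grads, velocity: lists of floats of equal length.
    Returns the updated parameters and the updated velocity buffer.
    """
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        g = g + weight_decay * p   # weight decay folded into the gradient
        v = momentum * v + g       # accumulate the momentum buffer
        new_velocity.append(v)
        new_params.append(p - lr * v)
    return new_params, new_velocity


if __name__ == "__main__":
    weights, buf = [1.0, -2.0], [0.0, 0.0]
    for step in range(3):
        weights, buf = sgd_step(weights, [0.5, -0.5], buf)
        print(step, [round(w, 6) for w in weights])
```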

3.4. Ablation Studies of HARM and AFFS

This section presents an ablation experiment to assess the efficacy of the proposed HARM and AFFS. Table 1 contains the experimental results. The AP, P, R, and F1 of the developed approach with HARM are improved by 1.7%, 0.5%, 3.9% and 0.02, respectively, over those of the baseline. In terms of complexity, the GFLOPS and parameters of the proposed method with HARM are 15.8 and 6.10 M, which are 0.5 GFLOPS and 0.63 M less than those of the baseline, respectively.

Table 1. Ablation study of HARM and AFFS.

As shown in Table 1, the method with AFFS increases the AP by 0.9% while significantly reducing the model complexity, by 12.3 GFLOPS and 5.75 MB of model parameters. On the whole, the developed method incorporating both HARM and AFFS outperforms the baseline in terms of speed and detection accuracy. Therefore, we can state that HARM and AFFS are beneficial to the model’s performance.

3.5. Contrastive Experiments

For both the LS-SSDD-v1.0 dataset and the SSDD dataset, we conduct a series of contrastive experiments in this section to illustrate the effectiveness and superiority of the developed method. Some state-of-the-art detection methods, including Libra R-CNN [34], EfficientDet [35], Free anchor [36], FoveaBox [37], BANet [38], L-YOLO [39], YOLOv5 and YOLOv7 [40], are employed as competitors.

The performance comparison is displayed in Figure 6 to graphically highlight the superiority of MHASD in terms of average precision, inference speed and model size. In particular, the area of each circle denotes the model’s size. Remarkably, MHASD is the most competitive among the compared methods in detection effect and inference speed. The proposed method’s model size is just 10.4 MB, which is more lightweight than the baseline and most other methods, i.e., MHASD is well suited to limited computing resources.

Figure 6. Visual comparison of different models’ performances on the LS-SSDD-v1.0 dataset.

On the LS-SSDD-v1.0 dataset, the experimental results for several methods under various circumstances are provided in Table 2, Table 3 and Table 4. The experimental results in Table 2 are obtained on the entire dataset. It can be seen that although Free anchor and FoveaBox are superior in precision and recall, respectively, the AP and detection speeds of these two methods are not good. The designed method’s AP and F1 values, which are both greater than those of existing cutting-edge detectors, reach 75.5% and 0.75, respectively. Additionally, MHASD’s parameters and inference speed are superior to those of the comparison approaches. These experimental results show that MHASD can detect ships more rapidly while maintaining better detection effects in SAR imagery, i.e., MHASD strikes a better balance between effects and speed.

Table 2. Performance comparisons throughout the full scene of the proposed methodology and other cutting-edge detectors.

Table 3. Performance comparisons of the proposed methodology and other detectors in the inshore scene.

Table 4. Performance comparisons of the proposed methodology and other detectors in the offshore scene.

As shown in Figure 7, the LS-SSDD-v1.0 dataset contains ships in inshore and offshore scenes. Offshore ships are greatly affected by background interference, and feature reuse is not effective. In rivers and near land, ships are extremely small and densely distributed. In order to comprehensively assess the performance of the developed method under different application scenarios, we conduct multiple experiments in inshore and offshore scenarios, respectively.

Figure 7. Visualization of the labels of the LS-SSDD-v1.0 dataset.

According to the experimental results in Table 3 and Table 4, the AP value of MHASD is better than that of all the compared methods in both scenarios. This outcome demonstrates the method’s broad applicability. In particular, the average precision and F1 of the proposed method reach 91.5% and 0.88, respectively, in the offshore scene.

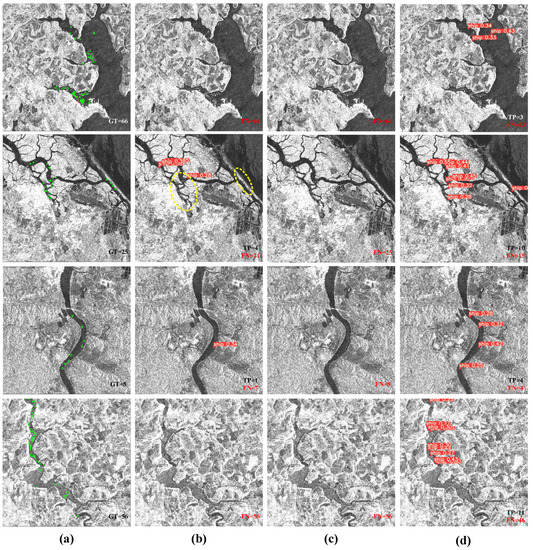

To qualitatively assess the proposed approach, the detection results in the offshore and inshore scenes are displayed in Figure 8 and Figure 9, respectively.

Figure 8. The qualitative results of several methods for detecting offshore SAR ships: (a) ground truth; (b) YOLOv5; (c) YOLOv7; (d) the proposed method. Green boxes indicate the ground truths. False alarms and missing detections are shown by blue ellipses. GT denotes the number of ground truths.

Figure 9. The qualitative inshore SAR ship detection results of different methods: (a) ground truth; (b) YOLOv5; (c) YOLOv7; (d) the proposed method.

Small ships are scattered all over the SAR images of the ocean. MHASD reduces the number of false alarms and missed detections for SAR ship detection in the offshore scene, as shown in Figure 8. Particularly in the second image, where ships closely resemble the background clutter, MHASD is still highly effective, whereas the baseline and YOLOv7 are not satisfactory. One possible reason is that the proposed method effectively distinguishes objects from clutter points through multiple attention modules during feature extraction and fusion. Meanwhile, MHASD also obtains higher detection precision than the other methods in the inshore SAR ship detection tasks shown in Figure 9.

In contrast with offshore scenarios, ship detection in inshore scenarios is more challenging, as depicted in Figure 9. It is evident that ships in rivers and harbors are very small and densely distributed. The proposed HARM can extract the ship’s useful information from both spatial and channel levels, resulting in the proposed detection method being more competitive. In addition, the proposed method stepwise distinguishes ships and backgrounds in the process of feature fusion, so that the differences between similar ships and backgrounds gradually emerge. In comparison to baseline and YOLOv7, MHASD performs better.

On the SSDD dataset, several experiments are carried out to further assess the effectiveness and viability of the proposed method. Table 5 displays the detailed experimental results. MHASD strikes a better balance between effect and speed compared with its competitors. MHASD obtains the best results on most metrics, except precision and recall, whether the input size of images is 512 × 512 or 640 × 640. These results indicate that the proposed MHASD is an efficient, real-time, and lightweight SAR ship detection model.

Table 5. Performance comparisons between MHASD and other state-of-the-art detectors on the SSDD dataset.

Figure 10 provides some visual detection results on the SSDD dataset. Differing from the LS-SSDD-v1.0 dataset, the SSDD dataset contains multi-scale ships, such as small-scale ships dispersed in rivers and large-scale ships in ports.

Figure 10. The qualitative detection outcomes of various methods on the SSDD dataset: (a) ground truth; (b) YOLOv5; (c) YOLOv7; (d) the proposed method.

The ships near the ports closely resemble the shore, which inevitably makes detection difficult. Moreover, there are scattered small-scale ships, which present difficulties for multi-scale extraction and fusion. The visual results in Figure 10 show that the detection performances of the baseline and YOLOv7 are subpar, with erroneous detections and ship size deviations. Because HARM and AFFS in the proposed method gradually widen the gap between ships and backgrounds, the developed method can extract discriminative ship features and thereby improve SAR ship detection performance.

4. Discussion

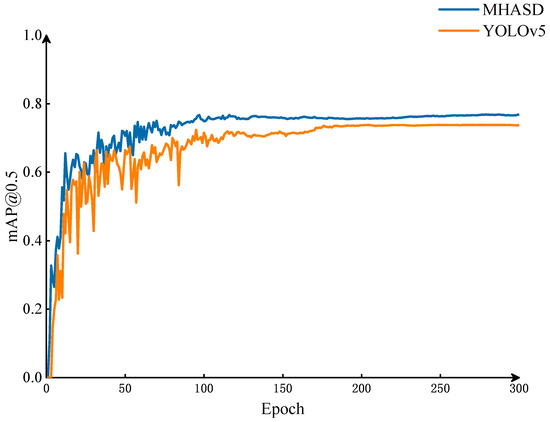

4.1. Detection Effects Analysis during Training

The average precision curves of the baseline and MHASD are plotted in Figure 11 to compare the models’ learning abilities and detection effects directly. In the early stage of training, the detection performances of both models increase sharply and oscillate noticeably. After about 100 epochs, the average precision curves of the baseline and the proposed method begin to level off, i.e., the models have largely learned the SAR ships’ features. After 300 epochs, the average precision curves of the two models converge. The curve of the baseline is clearly lower than that of MHASD. In other words, the learning ability of MHASD is stronger than that of YOLOv5.

Figure 11. The average precision curves of the baseline and MHASD.

To confirm MHASD’s optimization of detection performance based on the baseline, Figure 12 depicts the P-R curves of the fundamental framework and the designed method during training.

Figure 12. The P-R curves of the basic and the proposed models on the LS-SSDD-v1.0 dataset.

The P-R curve for MHASD is clearly smoother, and the area bounded by the curve and the axes is larger than that of the baseline. In particular, the curve of the baseline declines more rapidly than the curve of MHASD when the recall is small. In other words, the proposed model achieves better detection effects after training and learning SAR ships’ features. In particular, the proposed method increases the average precision from 74.3% to 75.5%.

4.2. Structural Analysis of Reusing Self-Attention Mechanisms

Considering the complexity of the background in SAR images and the lack of salience of the object features, it is not sufficient to achieve feature enhancement through a simple attention module. It is well known that the multiple uses of the attention mechanism are an effective way to strengthen the discrimination between objects and backgrounds. The parallel and cascaded structures are common ensemble schemes for the multiple attention mechanism.

Moreover, the self-attention in the proposed HARM is independent of time-order features. In particular, learning features via self-attention has a lower computational cost than local channel attention. In order to identify the ideal structure, this section conducts a series of experiments on the self-attention structures with different ensemble schemes.

We investigate the impact of the number and connection scheme of global attention modules in the HARM on detection performance. Table 6 lists the experimental results. It can be observed from Table 6 that the cascaded scheme is beneficial, improving the average precision and the recall rate. However, the advantages of HARM in precision and inference time diminish as more self-attention modules are reused. Compared with the cascaded scheme, the experimental results in Table 6 indicate that the parallel structure has fewer model parameters, which helps to keep the model lightweight. Furthermore, the proposed module with two parallel global attentions outperforms the other structure schemes on many indicators. Therefore, taking both detection precision and speed into consideration, two parallel global attentions following the local channel attention are selected as the best option in the HARM.

Table 6.

Experimental results of the structural analysis of the self-attentions under different ensemble schemes.
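The difference between the cascaded and parallel ensemble schemes can be sketched as follows. This is a minimal illustration of the wiring only, not the HARM implementation: the attention here has no learned projections, each branch is distinguished by a hypothetical `temperature` parameter, and fusing the branches by residual summation is an assumption.

```python
import math


def self_attention(tokens, temperature=1.0):
    """Simplified scaled dot-product self-attention without learned weights."""
    dim = len(tokens[0])
    output = []
    for query in tokens:
        # Similarity of this token to every token in the sequence.
        logits = [
            sum(q * k for q, k in zip(query, key)) / (temperature * math.sqrt(dim))
            for key in tokens
        ]
        peak = max(logits)  # subtract the maximum for numerical stability
        exps = [math.exp(value - peak) for value in logits]
        total = sum(exps)
        # Softmax-weighted sum of the tokens, which act as their own values.
        output.append(
            [sum(e / total * tok[j] for e, tok in zip(exps, tokens)) for j in range(dim)]
        )
    return output


def add(a, b):
    """Element-wise sum of two token lists of the same shape."""
    return [[p + q for p, q in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def cascaded(tokens, temperatures):
    """Each attention block consumes the output of the previous block."""
    out = tokens
    for t in temperatures:
        out = add(out, self_attention(out, t))  # residual connection (assumed)
    return out


def parallel(tokens, temperatures):
    """Every attention branch reads the same input; branch outputs are summed."""
    out = tokens
    for t in temperatures:
        out = add(out, self_attention(tokens, t))  # summation fusion (assumed)
    return out


if __name__ == "__main__":
    features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy tokens
    print(cascaded(features, [1.0, 2.0]))
    print(parallel(features, [1.0, 2.0]))
```

In the cascaded form the second block must wait for the first, whereas the parallel branches depend only on the shared input and can be evaluated independently. With a single attention block the two schemes coincide.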

5. Conclusions

This paper proposes a lightweight object detection framework with diversified hybrid attention mechanisms for ship detection in SAR imagery, called the multiple hybrid attentions ship detector (MHASD), to achieve a better balance between detection precision and speed. First, a new attention module named the hybrid attention residual module (HARM) is developed in the feature extraction model to distinguish objects from backgrounds at both the spatial and channel levels. Second, an attention-based feature fusion scheme (AFFS) is designed in the model neck to further highlight ships. Meanwhile, a hybrid attention feature fusion module (HAFFM) made up of local channel and local spatial attentions is created and integrated into the feature fusion structure to keep the model lightweight. According to the experimental results on the LS-SSDD-v1.0 and SSDD datasets, the detection performance and inference speed of MHASD are significantly superior to those of the other compared methods.

Author Contributions

Conceptualization, N.Y. and H.R.; methodology, N.Y.; software, N.Y.; validation, N.Y. and H.R.; formal analysis, N.Y. and H.R.; investigation, N.Y.; resources, N.Y.; data curation, N.Y.; writing—original draft preparation, N.Y.; writing—review and editing, H.R. and N.Y.; visualization, H.R.; supervision, T.D. and X.F.; project administration, T.D. and X.F.; funding acquisition, T.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology of the People’s Republic of China, grant number 2022YFC3800502, and the Chongqing Science and Technology Commission, grant numbers cstc2020jscx-dxwtBX0019, CSTB2022TIAD-KPX0118, cstc2020jscx-cylhX0005 and cstc2021jscx-gksbX0058.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors would like to thank the editors and the anonymous reviewers for their valuable comments that greatly improved our manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).