2.3.1. The Proportion Linear Model

This is probably the most widely used model. It is also the easiest for illustrating two important general principles, which are also applicable to the other models that will be analysed.

It will be instructive to compare two estimators: the unconstrained LS estimators of the proportions (i.e., without imposing the non-negativity constraints (3), but imposing the sum-to-one constraint (2)) and the constrained LS estimators (i.e., with both constraints (2) and (3) imposed).

The unconstrained LS estimators have been derived in ([34], eqn. (11)). Their formula assumes a general covariance matrix. It simplifies if the errors are assumed to be uncorrelated; see (10). Using a simplified notation, their formula becomes:

where

,

is a vector consisting of

M 1’s,

and

is the standard LS estimator with neither of the constraints (2) and (3) imposed.
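As an illustration, the sum-to-one estimator can be sketched in a few lines of Python. This is a minimal sketch, not the formula of [34] verbatim: it assumes uncorrelated, equal-variance errors and uses the textbook form of equality-constrained least squares, in which the ordinary LS estimate is corrected along the direction C⁻¹1 (with C the matrix of endmember inner products) until the proportions sum to one. The names `solve`, `sum_to_one_ls`, `E` and `x`, and the numerical values, are hypothetical.

```python
def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[r][j] -= f * aug[c][j]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][j] * z[j] for j in range(r + 1, n))
        z[r] = (aug[r][n] - s) / aug[r][r]
    return z


def sum_to_one_ls(E, x):
    """E: list of M endmember spectra (each of length d); x: spectrum of length d.

    Returns the LS proportions subject to the sum-to-one constraint only;
    individual proportions may be negative or exceed one.
    """
    m = len(E)
    # C = matrix of inner products between endmembers.
    C = [[sum(a * b for a, b in zip(E[j], E[k])) for k in range(m)] for j in range(m)]
    Ex = [sum(a * b for a, b in zip(E[k], x)) for k in range(m)]
    p_ols = solve(C, Ex)            # ordinary LS, no constraints
    c1 = solve(C, [1.0] * m)        # C^{-1} 1
    lam = (1.0 - sum(p_ols)) / sum(c1)
    return [p + lam * c for p, c in zip(p_ols, c1)]


# Made-up endmembers (M = 3, d = 4) and an error-free mixture of them.
E = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 0.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
x = [0.2, 0.5, 0.3, 1.0]
p_u = sum_to_one_ls(E, x)
```

For an error-free mixture the sketch recovers the mixing proportions; for noisy data the result still sums to one but need not lie in [0, 1].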

It will later be useful to know the covariance matrix of

. In the less general situation considered in this paper, ([

34], eqn. (13)) simplifies to:

where

It is straightforward to show that the residual sum of squares of the unconstrained fit,

, is given by:

and that an unbiased estimator of

is given by ([

35], eqn. (4.29)):

When

and

, the denominator, the df, is

.

Unlike the unconstrained solution, the constrained solution,

does not in general have an explicit algebraic form. It can be obtained using quadratic programming code ([32], Chapter 16), which is now widely available. The R package quadprog (https://cran.r-project.org/web/packages/quadprog/quadprog.pdf, accessed on 16 May 2023) has been used to produce the results in this paper. Note that, if all the elements of

are non-negative, then both (

2) and (

3) are satisfied, in which case

.

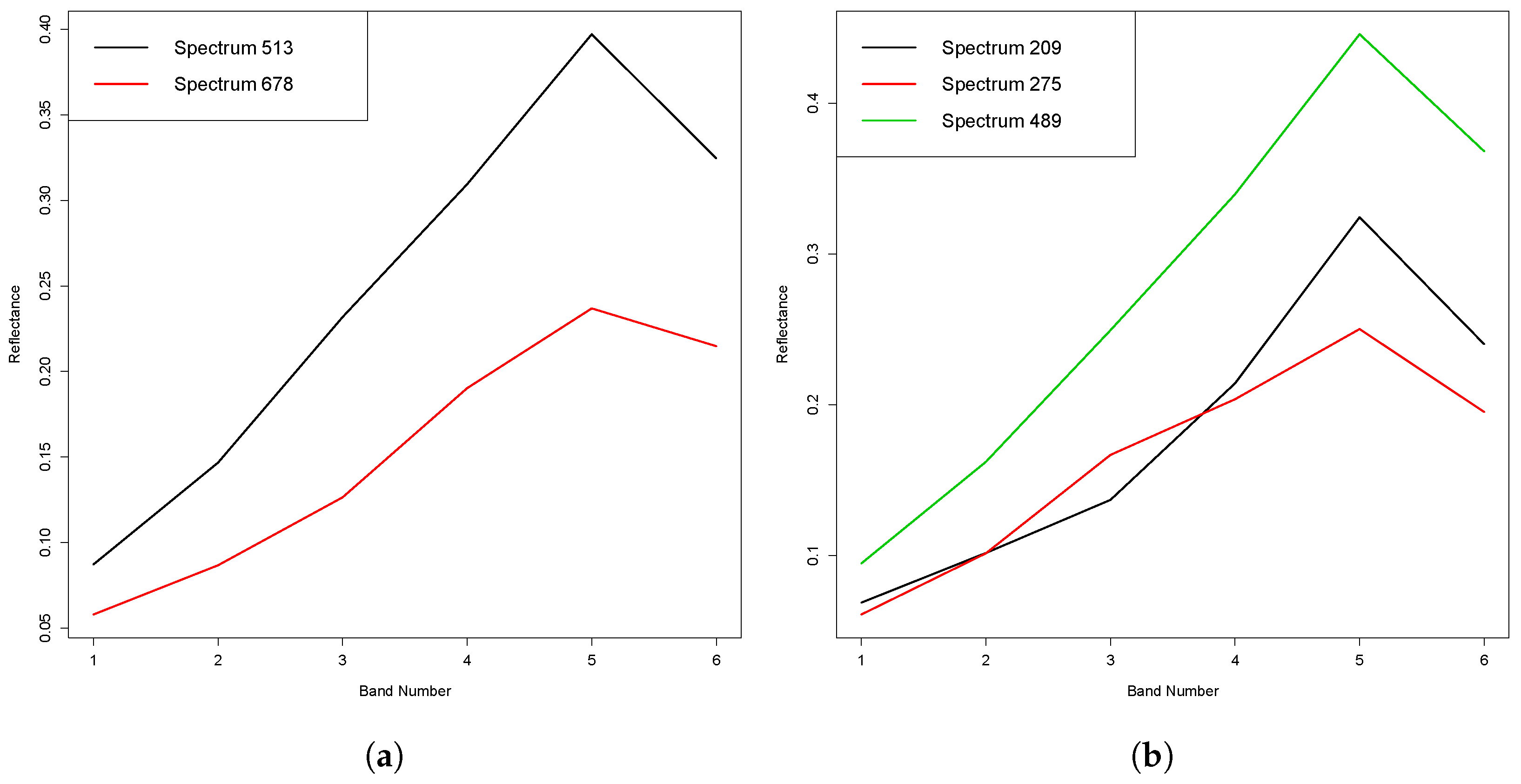

The first important principle will shortly be illustrated using a sample spectrum from the dataset. In order to do this, the brightness variations that are apparent in Figure 1a,b need to be removed. This will be done by dividing each spectrum by its mean value, i.e.,

$$Y = X/\bar{X}, \qquad (20)$$

where

$$\bar{X} = \mathbf{1}_d^T X / d, \qquad (21)$$

and $\mathbf{1}_d$ is a vector of d 1’s.

Y will be called the standardised spectrum. It will also be convenient to assume that, for each endmember, the mean of its values is also 1, i.e.,

Then, it is straightforward to show ([

36], Section 2.1) that, if there is

no error in the NNL model (

6), then the standardised spectra satisfy the PL model (

1) with

. Therefore,

if the errors are not too large, the brightness variations can be approximately removed, and a model which approximately satisfies (2) and (3) obtained, by standardising both the spectra and the endmembers.
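The effect of standardisation can be checked numerically. The following sketch uses made-up endmember values and made-up non-negative coefficients `a` (all names are hypothetical). It assumes an error-free non-negative mixture of mean-one endmembers and confirms that the standardised spectrum is then a mixture with weights equal to the coefficients divided by their sum, so that the weights are non-negative and sum to one.

```python
def standardise(v):
    """Divide a spectrum (or endmember) by the mean of its band values."""
    mean = sum(v) / len(v)
    return [b / mean for b in v]


# Made-up endmembers with d = 6 bands (illustrative values only).
endmembers = [
    [0.05, 0.08, 0.04, 0.45, 0.25, 0.12],
    [0.10, 0.15, 0.20, 0.30, 0.40, 0.30],
    [0.12, 0.18, 0.25, 0.32, 0.45, 0.40],
]
S = [standardise(e) for e in endmembers]     # each row now has mean 1

# Error-free non-negative mixture; the coefficients do not sum to one,
# which mimics a brightness variation.
a = [0.3, 0.9, 0.6]
x = [sum(a[k] * S[k][j] for k in range(3)) for j in range(6)]

# Standardising x removes the brightness factor sum(a).
y = standardise(x)
p = [ak / sum(a) for ak in a]                # implied proportions
y_pl = [sum(p[k] * S[k][j] for k in range(3)) for j in range(6)]
```

Here `y` and `y_pl` agree to rounding error, because the mean of `x` equals the sum of the coefficients when every endmember has mean one.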

Note that, if the standardisations (20) and (22) are used, then Y must replace X in both (15) and (18).

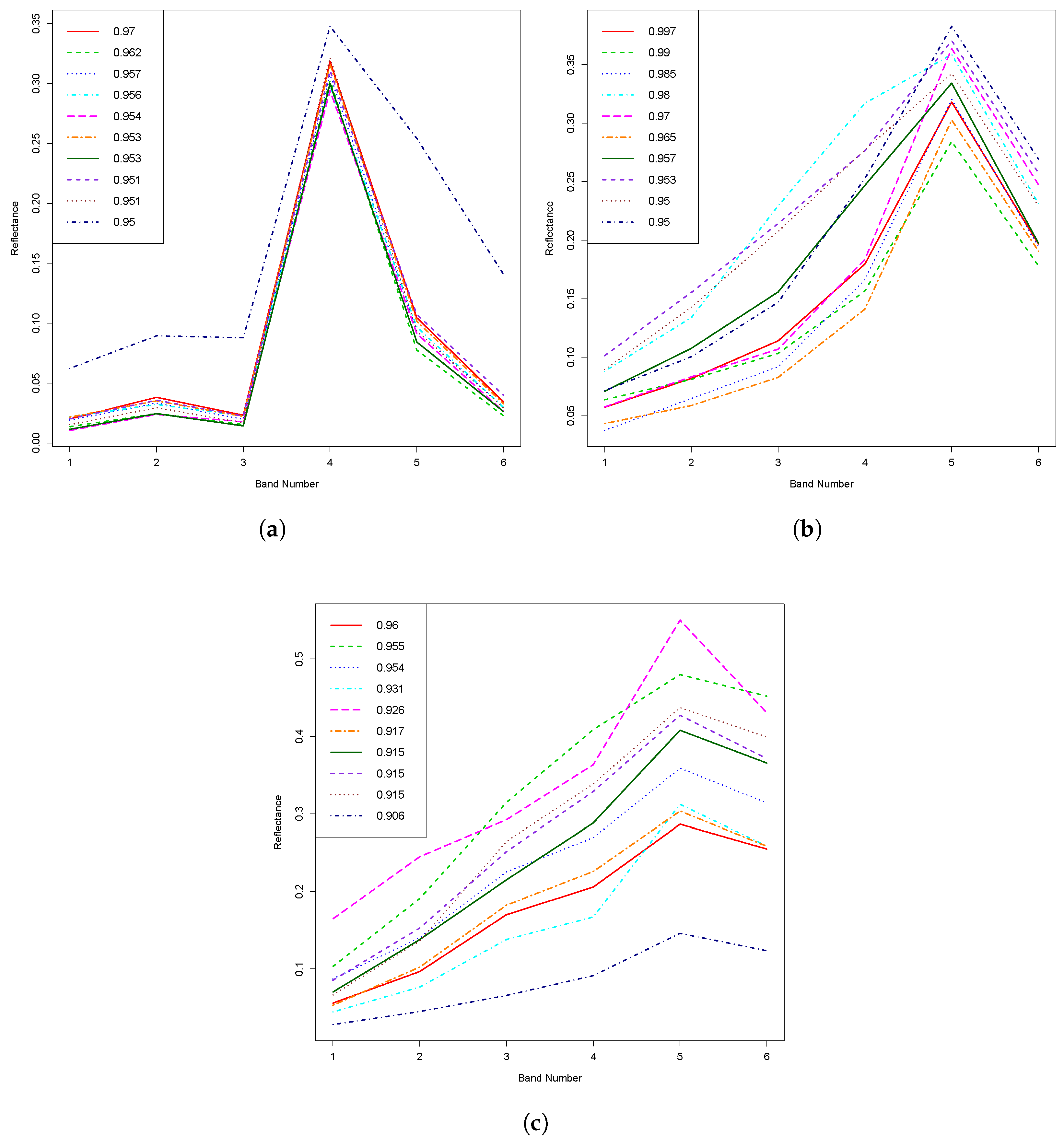

In what follows, M = 3 endmembers will be assumed. In order to satisfy (22), the standardised versions of the nominally purest PV, NPV and BS spectra shown in Figure 2a, Figure 2b and Figure 2c, respectively, will be used as the three endmembers. The first principle will be illustrated using standardised spectrum 1099 in the dataset.

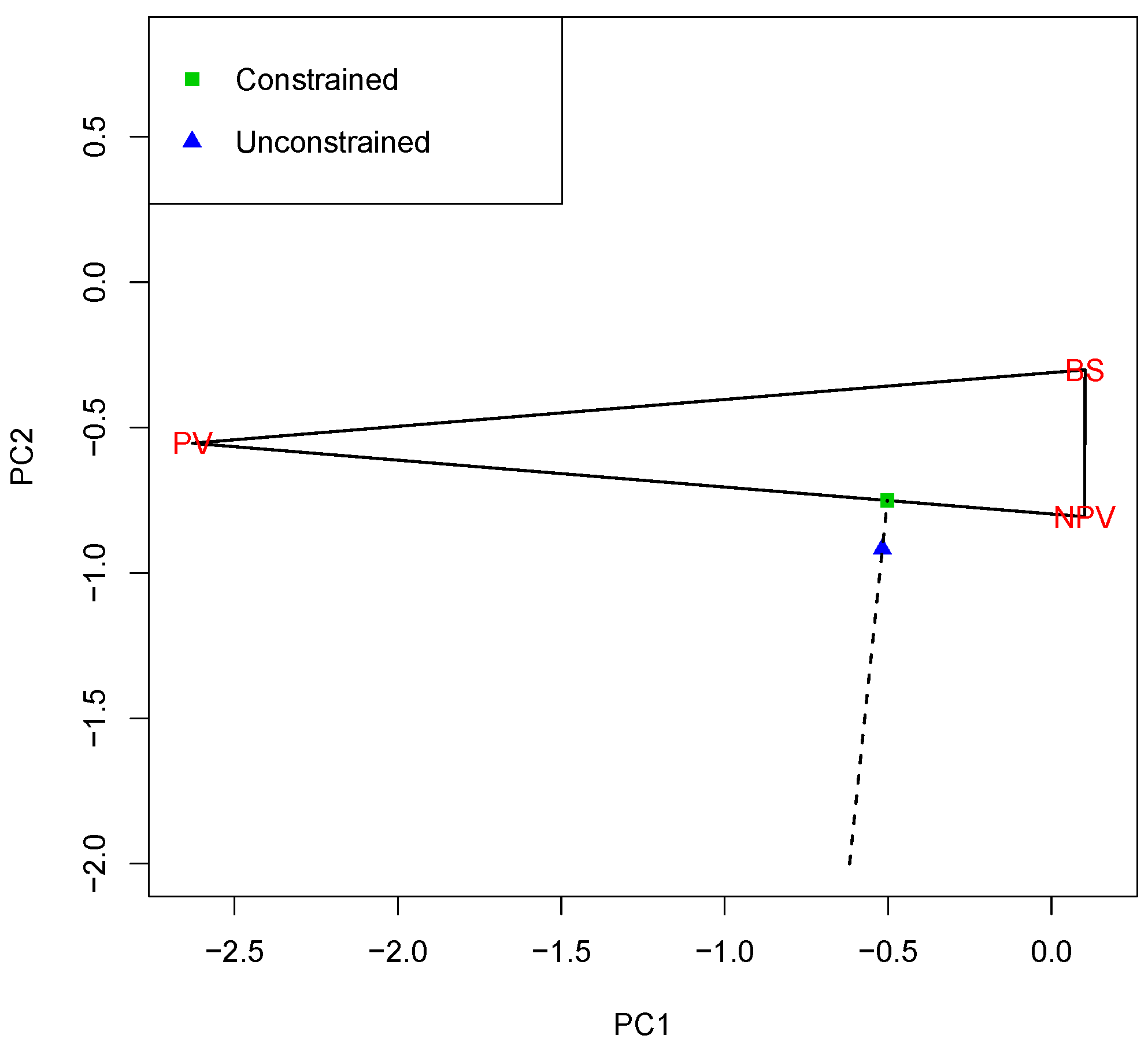

The three endmembers form the vertices of a triangle. Although this triangle lies in six-dimensional space, it can be projected onto the two-dimensional plane determined by the first two Principal Components (PCs) of the three endmembers. The projected triangle is shown in Figure 3. For reasons that will shortly become clear, the lengths of the two plotting axes are equal. This figure also shows the projection of the data (Y) onto the plane determined by the endmembers (plotted as a blue triangle symbol). This point corresponds to the unconstrained solution. Note that it lies outside the triangle formed by the endmembers.

is just the squared distance between

Y and this point. The nearest point to the blue triangle on the boundary of the triangle (the green square) is also shown. It corresponds to the constrained solution. By Pythagoras’ theorem, the RSS for the constrained fit,

, is just

plus the squared distance between the blue triangle and the green square.

A broken line has been included between (and beyond) these two points. Because the lengths of the two plotting axes are equal, it can be seen that this broken line is perpendicular to the edge of the triangle nearest to the blue triangle. An important point to note is that all unconstrained solutions lying along the broken line have the same corresponding constrained solution. From a “confidence” perspective, if a point lies on the broken line but near the triangle, intuitively, there must be a reasonable likelihood that the “true” point lies inside the triangle. On the other hand, if the point lies on the broken line but further away from the triangle, intuitively, it is more likely that the true point actually lies on the edge of the triangle. This plot shows that, although the constrained estimator is the best point estimate consistent with the constraints, it actually throws away information. Hence, statistical inference should be based on the unconstrained estimator, which does not throw away the relevant information. This is the first major principle that this model demonstrates.

For the time being, the non-negativity constraint (3) will be ignored when considering confidence intervals and regions based on the unconstrained estimator. The confidence interval for a single proportion will be considered first. In what follows, subscripts k (or l) will be used to represent any one (or two) of the M materials. Let

denote the

th element of

, given by (

17). Then, by (

16),

is the variance of

. Hence, by standard LS theory ([

35], eqn. (4.54)):

has a t distribution with

df, and hence, (ignoring the non-negativity constraint (

3)) a

CI for

is given by:

where

is the

upper percentage point of the t distribution with

df.

The interpretation of the CI is that it will include the true proportion, on average, 100(1 − α)% of the time. However, the fact that the true proportion must lie in [0, 1] is additional information which does not invalidate this fact; it just helps us to reduce the size of the CI, without altering the fact that the true proportion lies in the CI, on average, 100(1 − α)% of the time. Thus, the constrained CI is just the intersection of the unconstrained CI with [0, 1]. This is the second major principle to be introduced in this subsection; it will be called the Intersection Principle. This principle has been used previously in ([37], Section 7.2) when deriving confidence intervals for linear combinations of non-negative parameters. To the author’s knowledge, it has not been used previously for proportion estimation.
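The Intersection Principle is simple to express in code. The sketch assumes that the variance of the unconstrained estimate is the error variance estimate times a known factor (called `var_factor` here), and that the critical value of the t distribution is supplied by the caller; the function names and the numerical inputs are hypothetical. Returning `None` for an empty intersection is a choice made for this sketch only.

```python
import math


def unconstrained_ci(p_hat, var_factor, sigma2_hat, t_crit):
    """Symmetric t-based CI: p_hat +/- t_crit * sqrt(sigma2_hat * var_factor)."""
    half = t_crit * math.sqrt(sigma2_hat * var_factor)
    return (p_hat - half, p_hat + half)


def intersect_with_unit_interval(ci):
    """Constrained CI: intersection of the unconstrained CI with [0, 1]."""
    lo, hi = max(ci[0], 0.0), min(ci[1], 1.0)
    return (lo, hi) if lo <= hi else None   # None marks an empty intersection


# Made-up inputs; 2.776 is the upper 2.5% point of t with 4 df.
ci_u = unconstrained_ci(p_hat=1.05, var_factor=0.5, sigma2_hat=0.01, t_crit=2.776)
ci_c = intersect_with_unit_interval(ci_u)
```

In this example the unconstrained estimate exceeds one, the unconstrained CI straddles one, and the constrained CI is truncated at one without any change to the lower limit.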

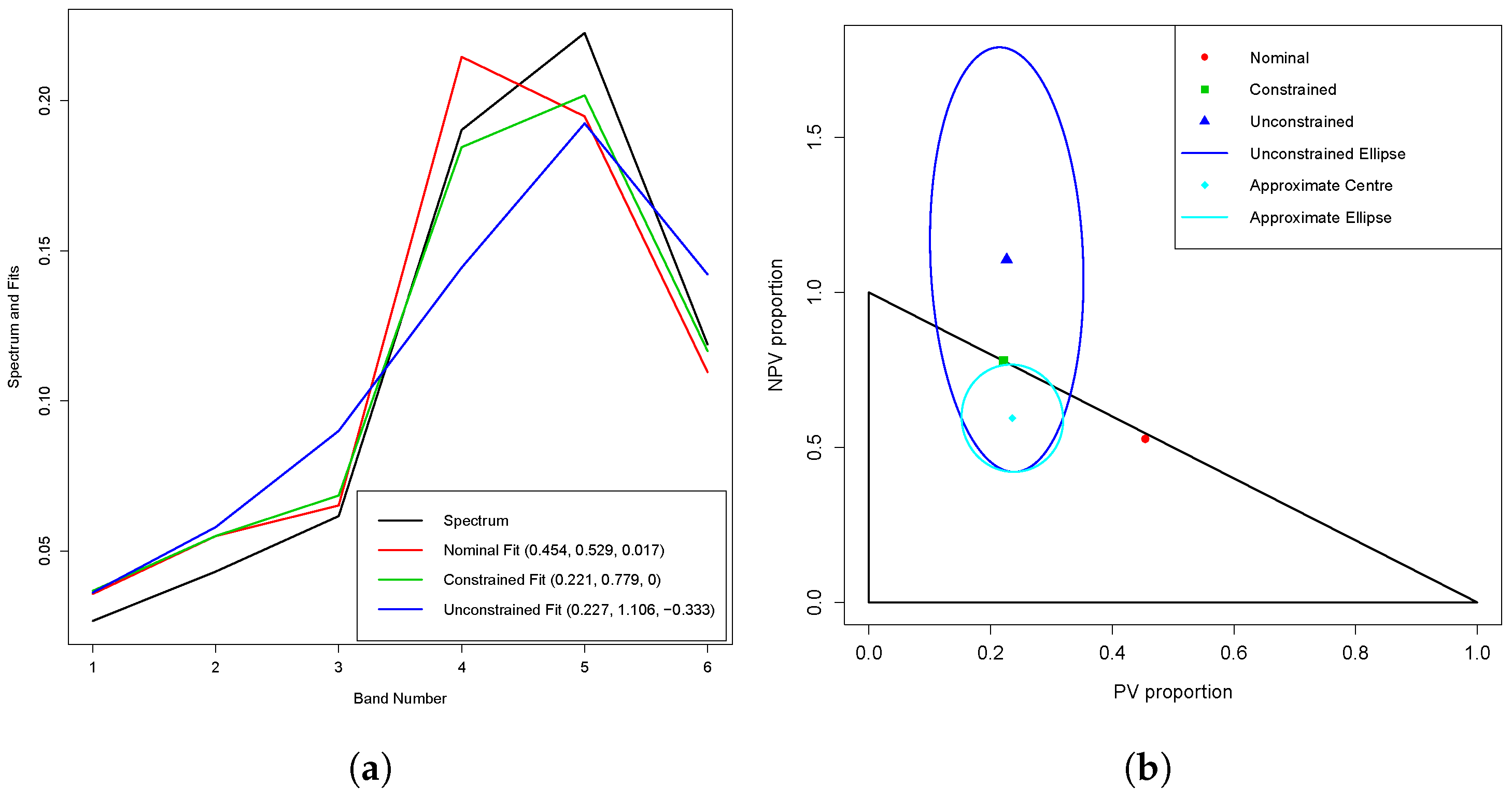

The above theory is illustrated in Figure 5a in Section 3.1, using spectrum 1099. The 95% CIs for the PV, NPV and BS proportions are (0.13, 0.32), (0.60, 1.00) and (0.00, 0.18), respectively. While the lengths of the PV and BS CIs are not too large, the length of the NPV CI is much larger. It is difficult to see how the three CIs fit together, in particular within the sum-to-one constraint (2).

The way to deal with this is to consider a joint CR for any two of the proportions; in fact, this is a JCR for all three proportions because of the sum-to-one constraint. Let

denote the vector of any

two proportions

k and

l, let

denote the corresponding vector of the unconstrained estimators of these two proportions and let

denote the submatrix consisting of rows and columns

k and

l of

(given by (17)). Then, the JCR for the two proportions based on their unconstrained estimators is an ellipse given by ([35], eqn. (4.60)):

where

is the

upper percentage point of the F distribution with

and

df. It is straightforward to show that (

25) is invariant to any linear transformation of the data, and thus, because of the sum-to-one constraint (

2), it does not matter which two (out of three) proportion estimates are chosen.
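A membership test for such a region can be sketched as follows. The sketch uses the standard LS form of a joint region for two parameters, namely that the quadratic form in the inverse of the 2 × 2 variance-factor submatrix must not exceed twice the error variance estimate times the F critical value; whether this matches (25) term by term is an assumption. The names `V`, `f_crit` and the numerical values are hypothetical, and the feasible-triangle test applies the Intersection Principle to the pair of proportions.

```python
def in_joint_region(p, p_hat, V, sigma2_hat, f_crit):
    """True if p lies in the unconstrained joint confidence ellipse.

    V is the symmetric 2x2 matrix with Cov(p_hat) = sigma2 * V.
    """
    d0, d1 = p[0] - p_hat[0], p[1] - p_hat[1]
    det = V[0][0] * V[1][1] - V[0][1] * V[1][0]
    # Quadratic form d^T V^{-1} d, written out for a 2x2 symmetric V.
    q = (V[1][1] * d0 * d0 - 2.0 * V[0][1] * d0 * d1 + V[0][0] * d1 * d1) / det
    return q <= 2.0 * sigma2_hat * f_crit


def in_feasible_triangle(p):
    """Both proportions non-negative and their sum at most one."""
    return p[0] >= 0.0 and p[1] >= 0.0 and p[0] + p[1] <= 1.0


def in_constrained_region(p, p_hat, V, sigma2_hat, f_crit):
    """Intersection of the ellipse with the feasible triangle."""
    return in_joint_region(p, p_hat, V, sigma2_hat, f_crit) and in_feasible_triangle(p)


# Made-up inputs; 6.94 is the upper 5% point of F with 2 and 4 df.
p_hat = (0.2, 0.9)
V = [[0.5, -0.3], [-0.3, 0.8]]
inside = in_constrained_region((0.15, 0.80), p_hat, V, 0.01, 6.94)
```

Evaluating `in_constrained_region` on a grid of candidate points is one simple way to draw the constrained region.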

Despite appearances to the contrary, (25) is an extension of (24). Because the latter equation is symmetric, some terms can be rearranged and squared to obtain:

noting that

, and thus, the extension is apparent.

In Section 3.1, Figure 5b shows the nominal, constrained and unconstrained PV and NPV estimates for spectrum 1099, the 95% joint confidence ellipse for the true PV and NPV values based on (25) and the triangle determined by the constraints (2) and (3). This triangle will be called the feasible triangle. By the intersection principle, the constrained JCR is just the intersection of the ellipse and the triangle. Although the ellipse is quite large, its intersection with the triangle is much smaller, and enables the CIs for the individual proportions to be reconciled in a coherent and interpretable way. (An ellipse which approximates the JCR is also shown in cyan; this approximation will be briefly discussed in Section 2.4.)

2.3.2. The Non-Negative Linear Model

In this subsection, the model (

6) is assumed, and CIs and JCRs for the

’s, defined by (

9), are constructed based on suitable estimators of them. These will use the two principles introduced in the previous subsection, namely the principle that statistical inference should be based on the unconstrained estimator, and the intersection principle.

First, some standard LS theory is required. The unconstrained LS estimators of the coefficients in (

6) are given by:

where

. From (

8) and (

9), the unconstrained proportion estimates are then given by:

where

Again using standard LS theory, the covariance matrix of

is given by:

and the unbiased estimator of

is now given by:

In what follows, it will be convenient to split the vector

into its

M separate entries:

Note that each of the

M estimators in (

32) is the ratio of two random variables. When the errors have a Gaussian distribution, the general solution for the CI of a ratio is given by [

38]. In

Appendix A, this theory is used to derive the

% CI for

under the NNL model, which is:

where

is given by (

32),

is given by:

and

where

is given by (

14),

is the

kth row (or column) of

, given by (

12), and

is the

th element of

. It will be useful later to note that

and

are proportional to the variances of the numerator and denominator in (

32), respectively (see also (

30)), while

is proportional to their covariance.

There are a number of things to note about the CI (

33). First, it is not centered on

, but on

. This is because the ratio estimator

is a biased estimator of

. Second, for the CI to be a “valid” CI,

in (

34) needs to be positive so that its square root in (

33) is real.

It is straightforward to show that, if the inequality (37) holds, then the quantity in (34) is positive. Details are not given here. This is fortuitous, because if (37) holds, then the denominator in (33) is also positive. Inequality (37) is satisfied by all 1169 spectra in the dataset.
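The behaviour just described (an interval that is not centred on the ratio estimate, and that is only valid when a denominator is positive) is characteristic of the classical Fieller-type interval for a ratio of two jointly Gaussian estimators. The sketch implements that generic construction; its notation may differ from (33)–(36), and the names `v_aa`, `v_bb`, `v_ab` for the variance and covariance factors are hypothetical. The interval consists of the values θ for which (â − θb̂)² does not exceed t²σ̂² times the variance factor of â − θb̂.

```python
import math


def fieller_ci(a_hat, b_hat, v_aa, v_bb, v_ab, sigma2_hat, t_crit):
    """Fieller-type CI for a/b.

    Assumes Var(a_hat) = sigma2*v_aa, Var(b_hat) = sigma2*v_bb and
    Cov(a_hat, b_hat) = sigma2*v_ab. Returns (lower, upper, centre),
    or None when the interval is not a bounded interval.
    """
    c = t_crit ** 2 * sigma2_hat
    qa = b_hat ** 2 - c * v_bb        # must be positive for a bounded CI
    qb = a_hat * b_hat - c * v_ab
    qc = a_hat ** 2 - c * v_aa
    disc = qb ** 2 - qa * qc          # must be non-negative for real roots
    if qa <= 0.0 or disc < 0.0:
        return None
    centre = qb / qa                  # generally differs from a_hat / b_hat
    half = math.sqrt(disc) / qa
    return (centre - half, centre + half, centre)


# Made-up inputs; 3.182 is the upper 2.5% point of t with 3 df.
result = fieller_ci(0.3, 1.2, 0.4, 0.9, 0.1, 0.01, 3.182)
```

The ratio estimate always lies inside the interval when the interval exists, but it is not the midpoint. When the variance of the denominator is large relative to the squared denominator, `qa` becomes non-positive and the sketch returns `None`.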

The quantity

is a useful

relative goodness of fit measure. Excluding the first term on the right hand side of (

35), the quantity

is the

estimated variance of

divided by

(making it scale invariant).

is the denominator in

. Thus, when the variance of the denominator is relatively large, the CI can become “invalid” (although that has not happened with any of the spectra in the dataset being considered).

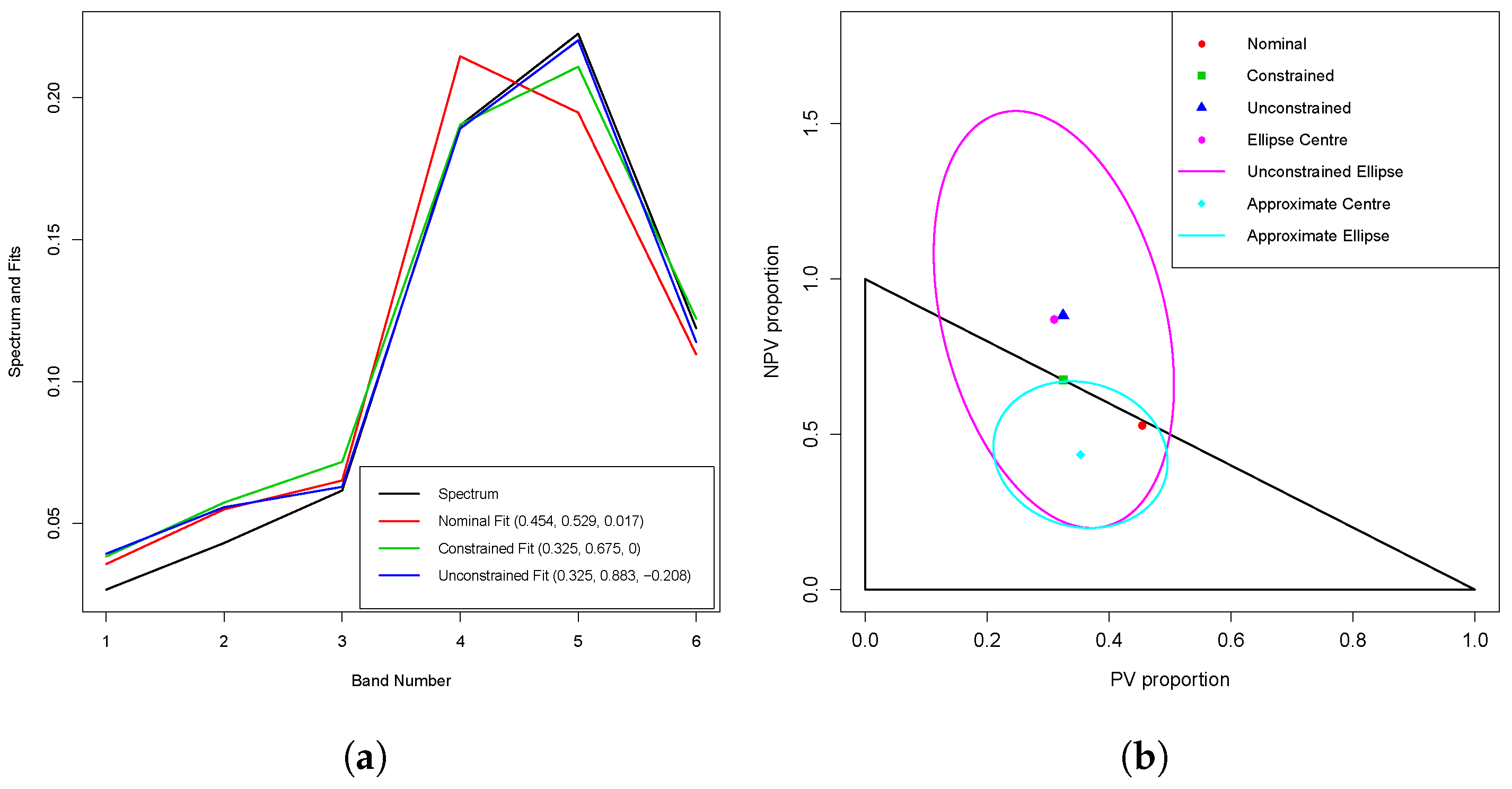

Analogous to Figure 5a under the PL model, Figure 6a in Section 3.2 shows the nominal, constrained and unconstrained fits for spectrum 1099 under the NNL model. The two fits are also compared in that section.

The JCR for any two proportions under the NNL model is now derived. As in Section 2.3.1, this is actually a JCR for all three proportions due to the sum-to-one constraint. What follows is probably original. An outline of the derivation of the JCR is given in Appendix B. A more detailed derivation will be given in a separate publication.

Let

now denote a vector of two unconstrained estimators of the form (

32) of

. The JCR based on these unconstrained estimators is based on an inequality involving a quadratic form. As pointed out previously,

is a biased estimator of

. A consequence of this is that the quadratic form is not centered on

. Let

denote the submatrix consisting of rows and columns k and l of

, and let

, where

is given by (

36). Let:

In its most succinct form, the

JCR based on the unconstrained estimator

is given by those values of

satisfying:

where

where

and

Compare (

44) with (

35). It can be shown that, if:

then (

39) is the interior of an

ellipse. A proof will be published elsewhere. Compare (

45) with (

37). From (

35) and (

44):

For the data considered in this paper,

. When

and

,

, so that the inequality (45) is more stringent than the inequality (37). Nevertheless, all 1169 spectra satisfy the inequality (45).

Figure 6b in Section 3.2 shows the nominal, constrained and unconstrained PV and NPV estimates for spectrum 1099, the 95% joint confidence ellipse for the true PV and NPV values for the NNL model (based on (39)), the center of the ellipse and the feasible triangle.

2.3.3. Primary and Secondary Endmembers

As previously, assume that there are M endmembers, of which L are “primary” endmembers and M − L are “secondary” endmembers. Without loss of generality, assume that the primary endmembers are the first L endmembers. An obvious example is where PV, NPV and BS are the primary endmembers, and (non-zero) shade and/or water are the secondary endmembers. In this case, the interest is in constructing CIs and JCRs for the relative proportions of the primary endmembers. The estimators of the relative proportions are just the estimators of the original proportions, divided by the sum of the original estimated proportions of the primary endmembers only. Hence, they are ratios, as are the estimated (original) proportions under the NNL model (see (28)), and thus, only small adaptations of the NNL theory are required, whether one uses the PL or NNL model to begin with. Noting the comments after (36), all that is needed is to obtain new formulae for

and

. The CI for the unconstrained estimators is then given by (

33), while the JCR is given by (

39).
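The relative proportions themselves are straightforward to compute. In this minimal sketch, the function name and the example values are hypothetical; the primary endmembers are assumed to come first, as in the text.

```python
def relative_proportions(p_hat, n_primary):
    """Divide each primary proportion by the sum of the primary proportions."""
    primary = p_hat[:n_primary]
    total = sum(primary)
    return [p / total for p in primary]


# Made-up estimates: PV, NPV, BS (primary) followed by shade (secondary).
p_hat = [0.30, 0.45, 0.15, 0.10]
rel = relative_proportions(p_hat, n_primary=3)
```

Because numerator and denominator are both random, each entry of `rel` is a ratio estimator, which is why the ratio-based CI and JCR theory applies.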

Under the PL model, the relevant covariance matrix is given by (

16), where

is given by (

17). Let

denote the submatrix of

corresponding to its first

L rows and

L columns (corresponding to the

L primary endmembers), and let

denote row

k of

. Then, the relevant entries in (

33) and (

39) under the PL model are:

where

is the

kth diagonal entry of

(as previously defined) and

is a vector of

’s.

Under the NNL model, the unconstrained

relative estimated proportions are:

by (

32). The relevant covariance matrix is then given by (

30), where

is given by (

12). Let

denote the submatrix of

corresponding to its first

L rows and

L columns, and let

denote row

k of

. Then, the relevant entries in (

33) and (

39) under the NNL model are:

where

is the

kth diagonal entry of

(as previously defined).

An example of CIs and JCRs for relative proportions of primary endmembers is not given, because the dataset in this paper does not provide any secondary endmembers. Nevertheless, the theory has been included because (i) it is a topic of some interest (e.g., [26,27,28]) and (ii) it is a relatively simple extension of the NNL model.

2.3.4. Endmember Variability

This section is primarily motivated by the dataset described in Section 2.1. In particular, note that in Figure 1b, spectrum 275 is somewhat different in shape to the other two spectra, even though all three have the same nominal proportions. This is an indication that a model with unique PV, NPV and BS endmembers (such as (1) or (6)) is inadequate to model such variation.

A common approach to this endmember variability problem [29] is to use libraries with multiple examples of pure “spectra” drawn from each class. Single spectra are then drawn from each of the classes for use in the LMM in such a way that a best fit is achieved according to some criterion (e.g., MESMA). Unfortunately, the approach presented in this paper is not easy to combine with this approach; see [30] and references therein.

An alternative approach which can be useful in some circumstances is presented here. The idea is to model the endmembers in each class as linear mixtures of the extreme endmembers in that class. How one might find these extreme endmembers is discussed shortly, but for the time being, assume that they have been found. Let L now denote the number of broad classes. Within broad class j, assume that there are M_j extreme endmembers. Then, the total number of extreme endmembers is M = M_1 + … + M_L. Error estimation is only possible if d > M − 1 under the PL model and d > M under the NNL model, which is a significant limitation of the approach for small d.

Let

denote the indices

k (between 1 and

M) belonging to

broad class

. Either the PL model or the NNL model is first fitted using all

M extreme endmembers, and then the proportions of each

broad class are modelled as:

There is an analogous formula for the unconstrained estimators of these parameters,

, based on (

11) for the PL model, and (

28) for the NNL model. Equation (

50) can be written in matrix notation. Let

denote the vector of notionally true proportions of the

M extreme endmembers, and let

denote the

L broad class proportions. Let

denote an

matrix, with entry

in row

j and column

k given by:

Then, the matrix version of (

50) is:

with analogous formulae for the

estimated unconstrained proportions,

, under both the PL and NNL models.
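The aggregation step can be sketched as follows, assuming that the matrix is an indicator matrix (entry 1 when extreme endmember k belongs to broad class j, and 0 otherwise) and that the covariance of the aggregated estimates follows the usual rule for a linear map. The names `class_of`, `class_matrix` and `aggregate`, and the numerical values, are hypothetical.

```python
def class_matrix(class_of, n_classes):
    """A[j][k] = 1 if extreme endmember k belongs to broad class j, else 0."""
    return [[1.0 if c == j else 0.0 for c in class_of] for j in range(n_classes)]


def aggregate(A, p, V):
    """Return (A p, A V A^T) for proportions p with variance-factor matrix V."""
    n_cls, m = len(A), len(p)
    q = [sum(A[j][k] * p[k] for k in range(m)) for j in range(n_cls)]
    AV = [[sum(A[j][k] * V[k][l] for k in range(m)) for l in range(m)]
          for j in range(n_cls)]
    W = [[sum(AV[j][l] * A[i][l] for l in range(m)) for i in range(n_cls)]
         for j in range(n_cls)]
    return q, W


# Made-up example: 1 PV, 2 NPV and 2 BS extreme endmembers (M = 5, L = 3).
class_of = [0, 1, 1, 2, 2]
A = class_matrix(class_of, 3)
p = [0.20, 0.50, 0.10, 0.15, 0.05]
V = [[0.01 if r == c else 0.0 for c in range(5)] for r in range(5)]
q, W = aggregate(A, p, V)
```

The 3 × 3 matrix `W` then plays the role of the variance-factor matrix in the CI and JCR formulae for the broad class proportions.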

For the PL model, it follows from (

16) that:

where

is given by (

17). Then, the formulae given for CIs and JCRs under the PL model in

Section 2.3.1 apply with

everywhere replaced by

. Note that the formula for

(

19) remains unchanged.

For the NNL model, the relevant quantity is

, where

is given by (

27). It follows from (

30) that:

where

is given by (

12). Then, the formulae given for CIs and JCRs under the NNL model in

Section 2.3.2 apply with

everywhere replaced by

. Note that

is still given by (

31);

does

not replace

in this equation.

Although the above approach has limitations when d is small, its advantage is that it can often model the variety of endmembers within a broad class in a continuous way. The usual approach relies on having enough endmembers in each broad class to represent all the variability among mixtures in the dataset under consideration. This may not always be the case.

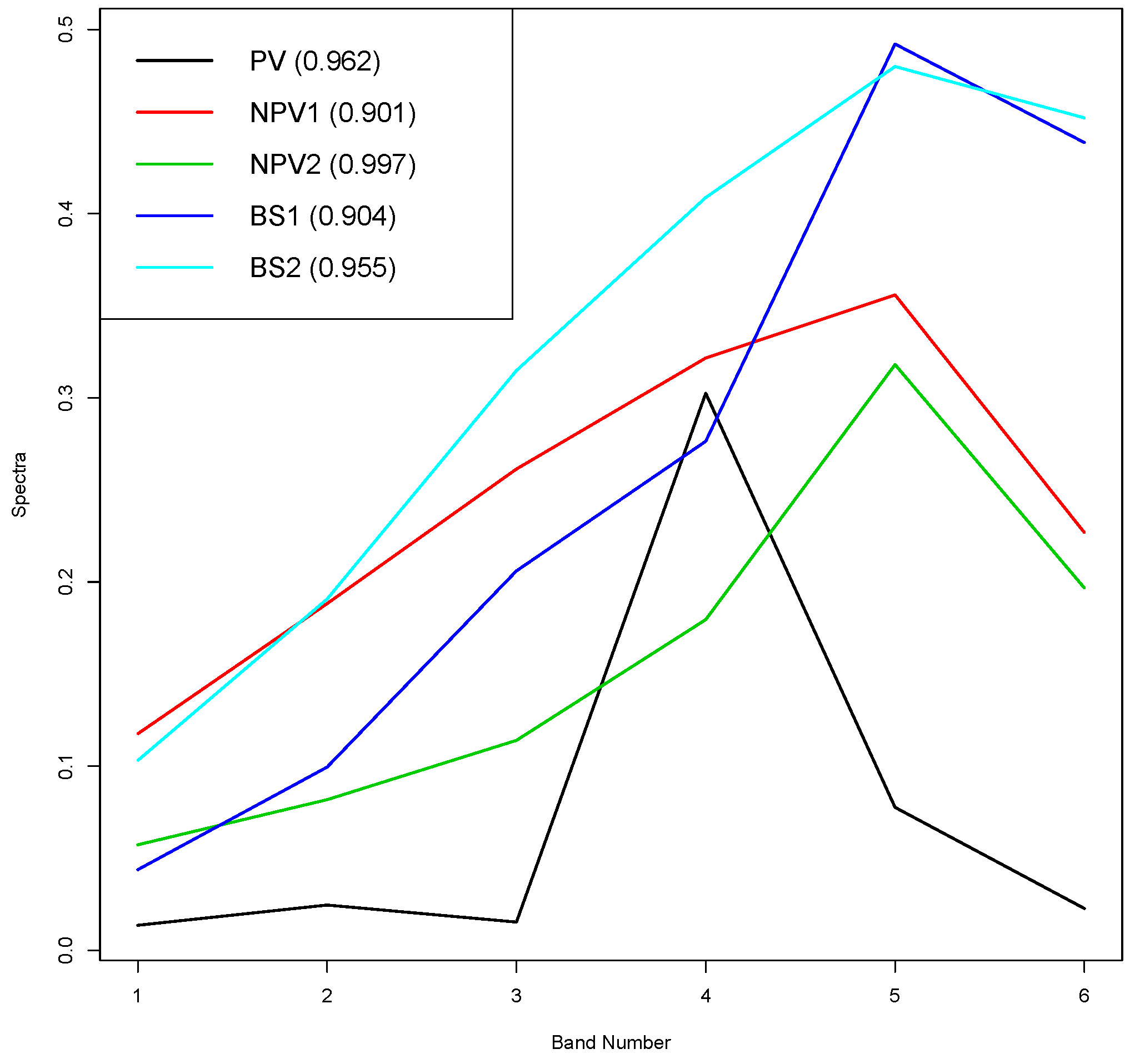

The above theory is now applied to the dataset discussed in Section 2.2 using the NNL model. Because d = 6, there can be at most M = 5 endmembers. The greater variability of the purest NPV and BS spectra in the dataset (see

Figure 2a–c) suggests that the model should use 1 PV, 2 NPV and 2 BS endmembers. It is not easy to find extreme endmembers in the latter two broad classes using automated methods. Fortunately, there are two significant subsets of the dataset where the nominal proportion of one of the broad classes is

(i.e., that class is almost absent): 181 spectra (15.5% of the total) have

, while 110 spectra (9.4%) have

. Plots of the first few PCs of the standardised spectra of these two subsets make it relatively easy to identify suitable candidates for the extreme endmembers for all three broad classes. Details of the approach will not be given here. The five (unstandardised) endmembers found using this approach are shown in Figure 4. Their nominal proportions are shown in the legend. One each of the PV, NPV and BS endmembers has a nominal proportion which is either the highest or second highest nominal proportion in its broad class; the other NPV and BS endmembers have much lower nominal proportions, each a little over 0.90.

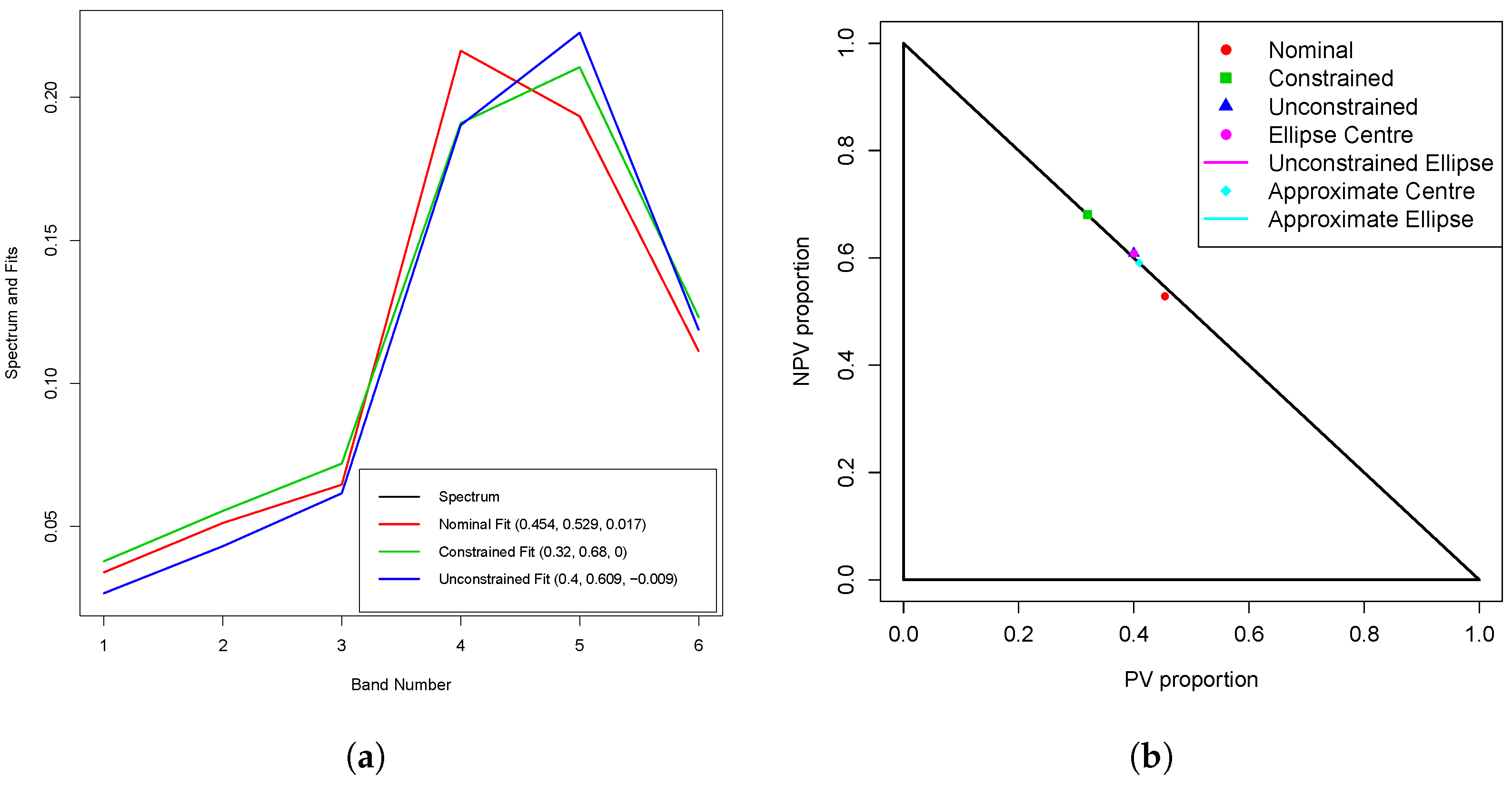

In Section 3.3, Figure 7a shows the nominal, constrained and unconstrained fits for spectrum 1099 under the five-endmember NNL model, while the corresponding JCR is shown in Figure 7b.

Unfortunately, there is a downside to the use of the five-endmember NNL model. Whereas all 1169 spectra satisfy the inequalities (37) and (45) (which are sufficient to ensure valid (unconstrained) CIs and JCRs) for the three-endmember NNL model, only 1101 and 926 of the spectra satisfy these inequalities, respectively, for the five-endmember NNL model. At first glance, this may appear contradictory, because one would expect a better fit from the five-endmember model than from the three-endmember model. Indeed, for 1093 of the 1169 spectra (93.5%),

, defined by (

31), is smaller for the five-endmember model than it is for the three-endmember model. The reason that it is not 100% is partly because the endmembers in the three-endmember model are not a subset of the endmembers in the five-endmember model, but more importantly because the denominator in (

31) (the df) is reduced from 3 to 1, so the five-endmember numerator needs to be considerably smaller than the three-endmember numerator to counteract this. In addition, note that in the definitions of

and

((

35) and (

44), respectively), there are a number of factors, apart from

, that will change between the two models, in particular the factor

, where

or 2 for

and

, respectively. These factors are much higher when

than when

. For instance,

, while

. Thus, the other factors in

and

have a lot of work to do when the df is reduced from 3 to 1.

In the next section, it is shown how this problem can be ameliorated somewhat.

2.3.5. Relaxing the Variance Equality Assumption (10)

In this section, it will be convenient to reintroduce the subscript i to represent spectrum i.

Up to this point, it has been assumed that the errors in each band of any spectrum have the same variance and are uncorrelated; see (10). An examination of the ten purest PV, NPV and BS spectra (Figure 2a–c, respectively) suggests that there may be greater variability in bands 1, 2 and 3 than in bands 4, 5 and 6, so that the assumption (10) should perhaps be relaxed. Although this will be done shortly, for the sake of completeness, (10) will first be generalised to:

where

is assumed

known, but

is assumed

unknown. It is straightforward to convert any of the three models considered so far with the assumption (

55) into the analogous model with the assumption (

10). This is done via an eigendecomposition of

:

where

Here,

is the

diagonal matrix of eigenvalues of

(which will all be assumed positive), and the columns of

Q are its eigenvectors. Let:

It follows easily from (

56) and (

57) that

satisfies (

10). Thus, the theory of the previous four subsections will apply if

is first transformed to

via (

58).

In principle, with a large enough library of

pure spectra, it should be possible to estimate

and to then use the transformation (

58); see for instance [

10].

This is not the case with the dataset discussed in

Section 2.2. Some progress is possible if one is prepared to assume that

is

diagonal, i.e., the errors in different bands are uncorrelated (note that in this case,

and

). This will be called the

variable error variance model, and the model (

10) the

constant error variance model. Denote the diagonal entries of

by

. For the five-endmember NNL model, a relatively simple, if crude, method to estimate these is as follows. For spectrum

i, consider the vector of residuals obtained from the constant error variance model

, given by:

where

is given by (

27). If

is squared, each should on average be approximately proportional to

. However, brighter spectra will tend to have larger residuals than darker spectra, so

should be divided by

, given by (

29), and then this statistic should be averaged over all the fitted spectra in the dataset, i.e.,

This has been done for the five-endmember model and all 1164 non-endmember spectra. The resulting values (the actual divisors of each entry of the spectrum) are: 271, 368, 147, 12.5, 4.1, 16.1. As expected, these are much larger for bands 1, 2 and 3 than they are for bands 4, 5 and 6.
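The averaging step can be sketched as follows. The sketch assumes that the residual vectors and the per-spectrum error variance estimates from the constant error variance fits are already available; the function name and the small numerical example are hypothetical, and no attempt is made to reproduce the values quoted in the text.

```python
def band_weights(residuals, sigma2_hats):
    """Per-band average of squared residuals, each scaled by its spectrum's
    error variance estimate.

    residuals[i][j]: residual of spectrum i in band j.
    sigma2_hats[i]:  error variance estimate for spectrum i.
    """
    n, d = len(residuals), len(residuals[0])
    return [
        sum(residuals[i][j] ** 2 / sigma2_hats[i] for i in range(n)) / n
        for j in range(d)
    ]


# Made-up residuals for two spectra and two bands.
residuals = [[0.2, -0.1], [0.4, 0.2]]
sigma2_hats = [0.01, 0.04]
w = band_weights(residuals, sigma2_hats)
```

Dividing by the per-spectrum variance estimate before averaging prevents the brighter spectra, which tend to have larger residuals, from dominating the band averages.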

When these values are used to produce

, instead of

(Equation (

58)), the number of spectra satisfying (37) increases from 1101 (94.2%) to 1169 (100%), while the number of spectra satisfying (45) increases from 926 (79.2%) to 1148 (98.2%).

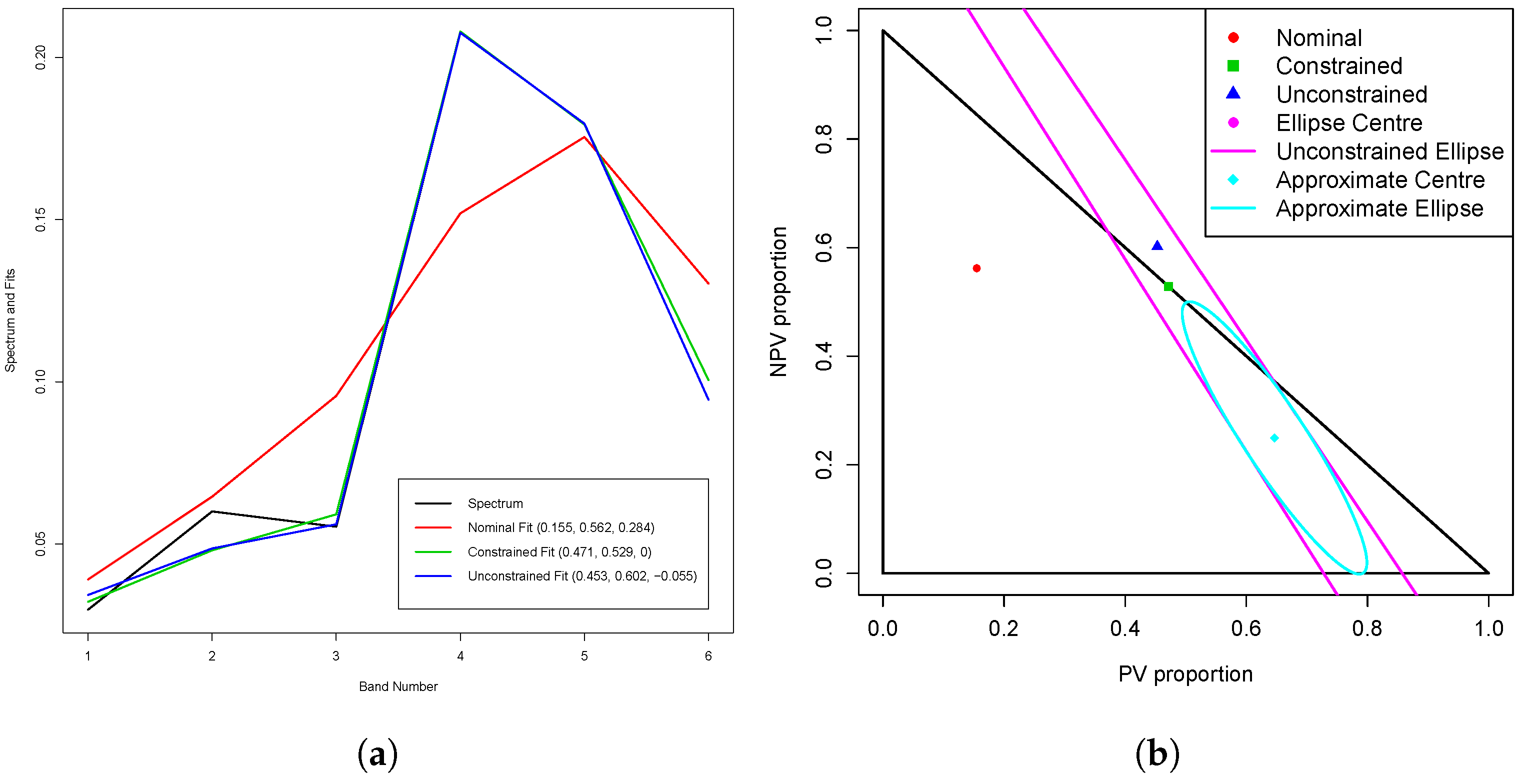

As an example of this transformation, in

Section 3.4, the fits and JCR for spectrum 77, the spectrum with the largest value of

less than 1 (0.995), are shown.