1. Introduction

Our planet is a diverse and complex ecosystem that is home to approximately 8.7 million unique species [1]. The United Nations Sustainable Development Goals report revealed that one million of these species could become extinct within decades [2]. While the situation is critical, the report emphasises that we can still make a difference if we coordinate efforts at local and global levels. Humans have played a major role in every mammal extinction that has occurred over the last 126,000 years [3]. This is due to hunting, overharvesting, the introduction of invasive species, pollution, and the conversion of land for crop harvesting and urban construction [4]. The illegal wildlife trade, fuelled by the promotion of medicinal myths and the desire for luxury items, has also become a significant contributor to the decline in biodiversity [5,6]. According to the United Nations Environment Programme, the illegal wildlife trade is estimated to have an annual value of USD 8.5 billion [7,8]. The white rhinoceros commands the highest price at USD 368,000, with the tiger close behind at USD 350,193 [9]. Highly sought-after animal body parts, such as rhinoceros horns, can fetch up to USD 65,000 per kilogram, making them more valuable than gold, heroin, or cocaine [10]. Pangolins are the most trafficked mammal in the world, and although their scales are worth significantly less than rhinoceros horn (between USD 190/kg and USD 759.15/kg), they are traded by the ton [11]. In 2015, 14 tons of pangolin scales, from roughly 36,000 pangolins, were seized at a Singapore port with a black-market value of USD 39 million [12].

Charities, governments, and NGOs protect wildlife and their habitats by raising money, developing policies and laws, and appealing to the public to fund conservation projects worldwide [13]. In 2019, total funding for biodiversity preservation was between USD 124 billion and USD 143 billion [14]. The funds were split as follows: 1% for nature-based solutions and carbon markets [15], 2% for philanthropy and conservation NGOs [16], 4% for green financial products [17], 5% for sustainable supply chains [18], 5% for official development assistance [19], 6% for biodiversity offsets (in agriculture, infrastructure, and extractive industries that unavoidably and negatively impact nature) [20], 20% for natural infrastructure (such as reefs, forests, wetlands, and other natural systems that provide habitats for wildlife and essential ecosystem services such as watersheds and coastal protection) [21], and finally, 57% for domestic budgets and tax policy (to direct and influence the economy in ways that increase specific revenue types and discourage activities that harm nature) [22].

The world’s most impoverished individuals, numbering around 720 million, tend to inhabit regions where safeguarding biodiversity is of the utmost importance [23,24,25]. Their cultures, spirituality, and deep-rooted connections to the environment are intertwined with biodiversity [26], and conservation practices that involve local people as wildlife guardians have traditionally been successful in preventing biodiversity and habitat loss [27,28]. Yet these communities have historically received almost no economic incentive to protect their surroundings [25]. In 2021, COP26 sought to redress this issue and pledged USD 1.7 billion annually to local stakeholders in recognition of the biodiversity stewardship services they provide [29]. However, this allocation is small compared with the USD 124 billion to USD 143 billion annual global biodiversity budget, given that these communities maintain 80% of the planet’s biodiversity [30,31]. Most of the global biodiversity budget is spent in industrialised countries; only a tiny fraction actually ends up in the hands of the extreme poor. In this paper, we propose an innovative solution based on “Interspecies Money” [25], which involves allocating funds to animals that they can use to pay local wildlife guardians for the services they provide. Each species group has a digital twin [32] that serves as its identity. Whenever an animal is identified, it can release funds to its designated guardian. For instance, a giraffe could provide a few dollars to its guardian each time it is photographed, while an orangutan could give thousands of dollars to its guardian as long as it is alive and healthy.

Wildlife conservationists employ a variety of technologies, including drones [33,34,35], camera traps [36], and acoustic monitoring [37], to detect and monitor animals in their natural habitats. However, drones can pose challenges: animals are difficult to discern from high altitudes, and drone noise can disturb and frighten many species. While audio monitoring has been useful, its effectiveness depends on animals making detectable sounds. Camera traps offer a comprehensive view of the environment and enable the identification of different species, as well as individual animal counts. This paper therefore proposes a solution that uses deep learning and 3/4G camera traps to identify animal species and facilitate financial transactions between wildlife accounts and local stakeholders. It differs from the original “Interspecies Money” concept in that it does not use individual identification, only species-level detections. Using a region-based model, animals are detected in images as they are captured by camera traps installed in Welgevonden Game Reserve in Limpopo Province, South Africa. Each animal species group is given a makeshift bank account with GBP 100 credit. Every time an animal is successfully classified in an image, 1 penny is transferred from the animal account to the guardian. Note that this is an arbitrary amount; mechanisms for setting the value of species still need to be developed.

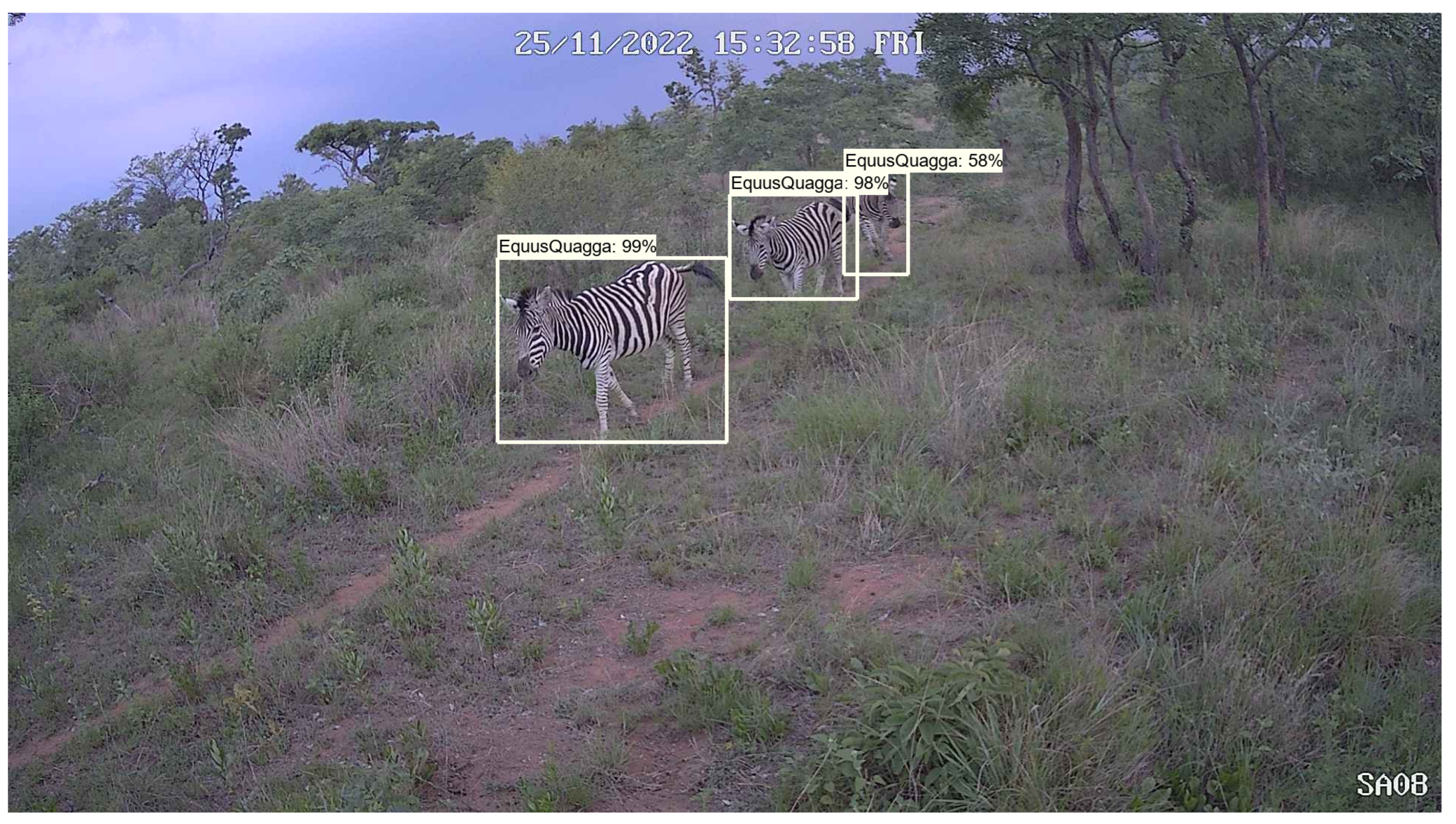

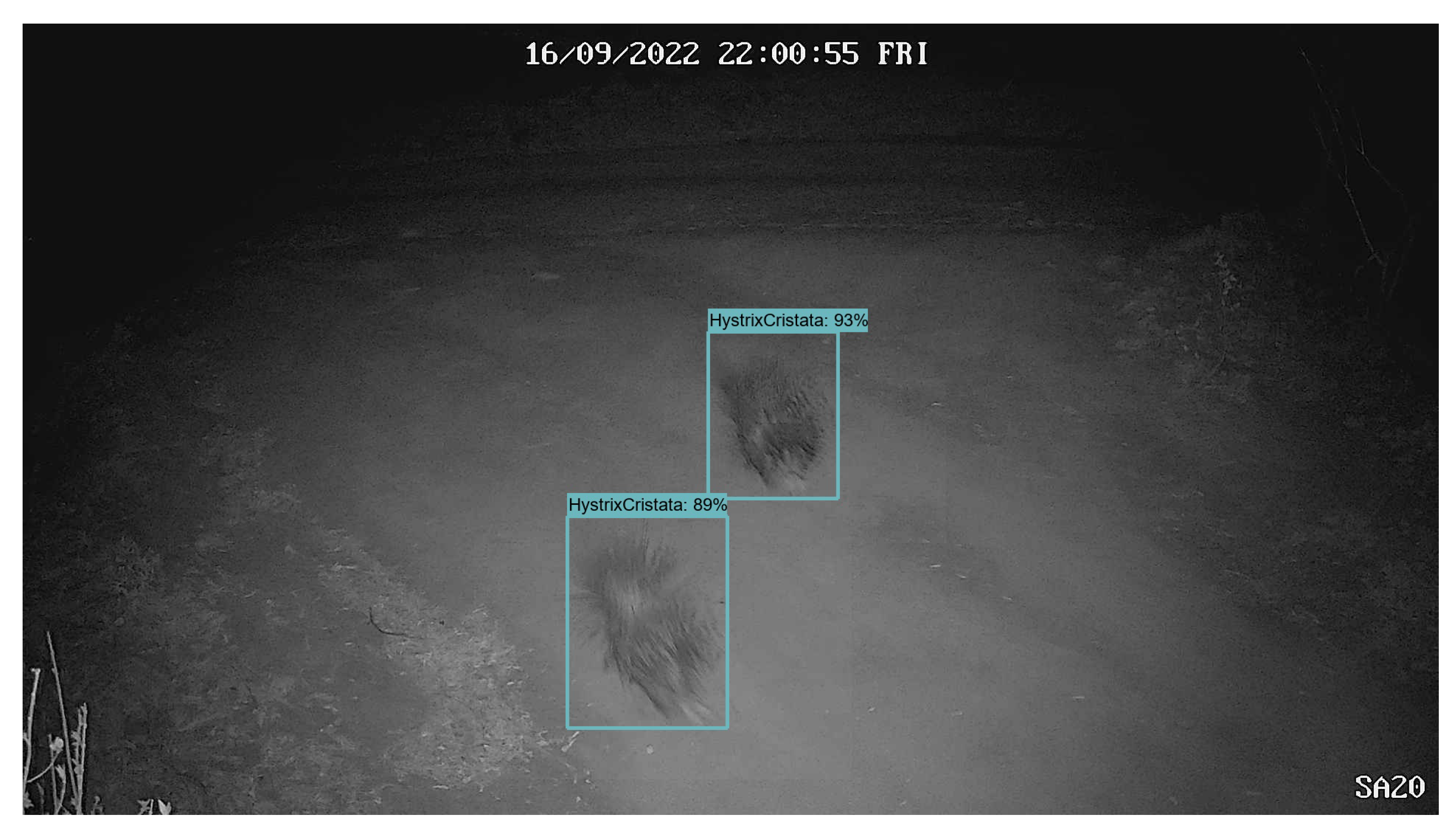

Figure 1 shows an example detection using our deep learning approach. In this example, three pence would be transferred from the species account to the guardian.

The remainder of this paper is organised as follows. Section 2 provides a brief history of conservation, which serves as a foundation for the approach taken in this paper. Section 3 outlines the Materials and Methods used in the trial, which posits a new solution to the challenges described. Section 4 presents the results, which are then discussed in Section 5. Finally, Section 6 concludes the paper and provides suggestions for future work.

2. A Brief History of Conservation

Conservation is a multi-dimensional movement that involves political, environmental, and social efforts to manage and protect animals, plants, and natural habitats [38,39]. In the wake of the “Age of Discovery” in the 15th to 17th centuries [40], sport hunters in the US formed conservation groups to combat the massive loss of wildlife caused by European settlers [41]. As local policies emerged, people living close to areas where biodiversity was protected lost property, land, and hunting rights [42]. Settlers criminalised poaching, which was associated with local people who often hunted and fished for their survival [43]. Widespread laws led to the creation of protected areas and national parks [44], which were established within the context of colonial subjugation [45], economic deprivation [46], and systematic oppression of local communities [47].

As a result, local people engaged in “illegal” hunting to meet subsistence needs [48], earn income or status [49], pursue traditional practices of cultural significance [50], or address contemporary and historical injustices linked with conservation [49]. They viewed settlers (including sport hunters) as unwanted interlopers who stole their lands [43]. The industrial revolution in the 19th and early 20th centuries [51], marked by larger populations and working communities, further escalated the demand for natural resources, resulting in increased biodiversity loss [52,53]. Today, many local communities in ecologically unique and biodiversity-rich regions of the world still perceive conservation as a Western construct created by non-indigenous peoples who continue to exploit their lands and natural resources [54].

For decades, conservationists have debated whether human activity or climate change has driven species extinctions and whether the loss of biodiversity is a recent phenomenon [3]. Studies have provided compelling evidence that humans are, in fact, responsible for the wave of extinctions that has occurred since the Late Pleistocene, 126,000 years ago [55]. For example, toward the end of the Rancholabrean faunal age around 11,000 years ago, a substantial number of large mammals vanished from North America, including woolly mammoths [56], giant armadillos [57], and three species of camel [58]. Similar extinctions were seen in New Zealand, where the Dinornithiformes (moa) became extinct about 600 years ago [59,60], and in Madagascar, where Archaeoindris fontoynontii (a giant lemur) disappeared between 500 and 2000 years ago [61]. Many believe that species and population extinction is a natural phenomenon [62], but the evidence suggests that human activity is accelerating species extinction and biodiversity loss [63].

Despite efforts to protect biodiversity and natural habitats, we are sleepwalking into a sixth mass extinction [64]. Economic systems driven by limitless growth continue to undermine conservation efforts [65]. Rapid development and industrial expansion are depleting natural resources [66] and intensifying the conversion of large stretches of land for human use [67]. The Earth’s forests and oceans are persistently exploited by major corporations that view the planet’s natural resources as capital stock [68,69]. Economic models and financial markets treat natural systems as assets to be used immediately, leading to the abuse of nature for short-term profit with little regard for the long-term costs to society and the environment [70]. While The Economics of Ecosystems and Biodiversity (TEEB) initiative attempts to hold large corporations to account [71], many believe that nothing short of a redesign of corporations themselves is needed if we are to enable a successful transition to a ‘Green Economy’ [72]. Conservationists agree that biodiversity and natural systems are essential for human survival and economic prosperity, but they criticise the big corporations and political systems that prioritise immediate economic gains at the expense of the prosperity and well-being of both current and future generations [73].

Current perspectives emphasise the importance of involving local stakeholders as essential contributors to biodiversity monitoring and conservation efforts [74]. In recognition of their role as capable natural resource managers, equitable schemes have been introduced to promote their engagement in locally grounded social impact assessments that consider the diverse implications of human activities in biodiversity-rich areas [75]. These efforts are largely driven by the Durban Accord led by the International Union for Conservation of Nature (IUCN) [76], which advocates new governance approaches in protected areas to promote greater equity in local systems [77,78]. This calls for a fresh and innovative strategy that upholds conservation objectives while inclusively integrating the interests of all stakeholders involved. Such an integrated approach aims to foster synergy between conservation, the preservation of life support systems, and sustainable development. While this paper does not claim to address all the issues raised comprehensively, it does offer a rudimentary tool that may help implement such a strategy by quantitatively accounting for biodiversity protection and equitable revenue sharing.

3. Materials and Methods

This section describes the implementation details of the digital stewardship and reward system posited in this paper, which was deployed and evaluated in Welgevonden Game Reserve in Limpopo Province, South Africa. The section begins with a discussion of the training data collected for a Sub-Saharan Africa deep learning model, which was trained to detect 12 different animal species. A second dataset, which was used to evaluate the trained model, is also presented. This is followed by a discussion of the Faster R-CNN architecture [79] and its deployment in Conservation AI [80,81] to classify animals and validate the revenue-sharing scheme. The section concludes with an overview of the performance metrics selected to evaluate the trained model and the inference tasks conducted during the trial.

3.1. Data Collection and Pre-Processing

The Sub-Saharan Africa model was trained with camera trap images of animals obtained from Conservation AI partners, which include

Equus quagga,

Giraffa camelopardalis,

Canis mesomelas,

Crocuta crocuta,

Tragelaphus oryx,

Connochaetes taurinus,

Acinonyx jubatus,

Loxodonta africana,

Hystrix cristata,

Papio sp.,

Panthera leo and

Rhinocerotidae, the 12 species considered in this study. The class distributions can be seen in

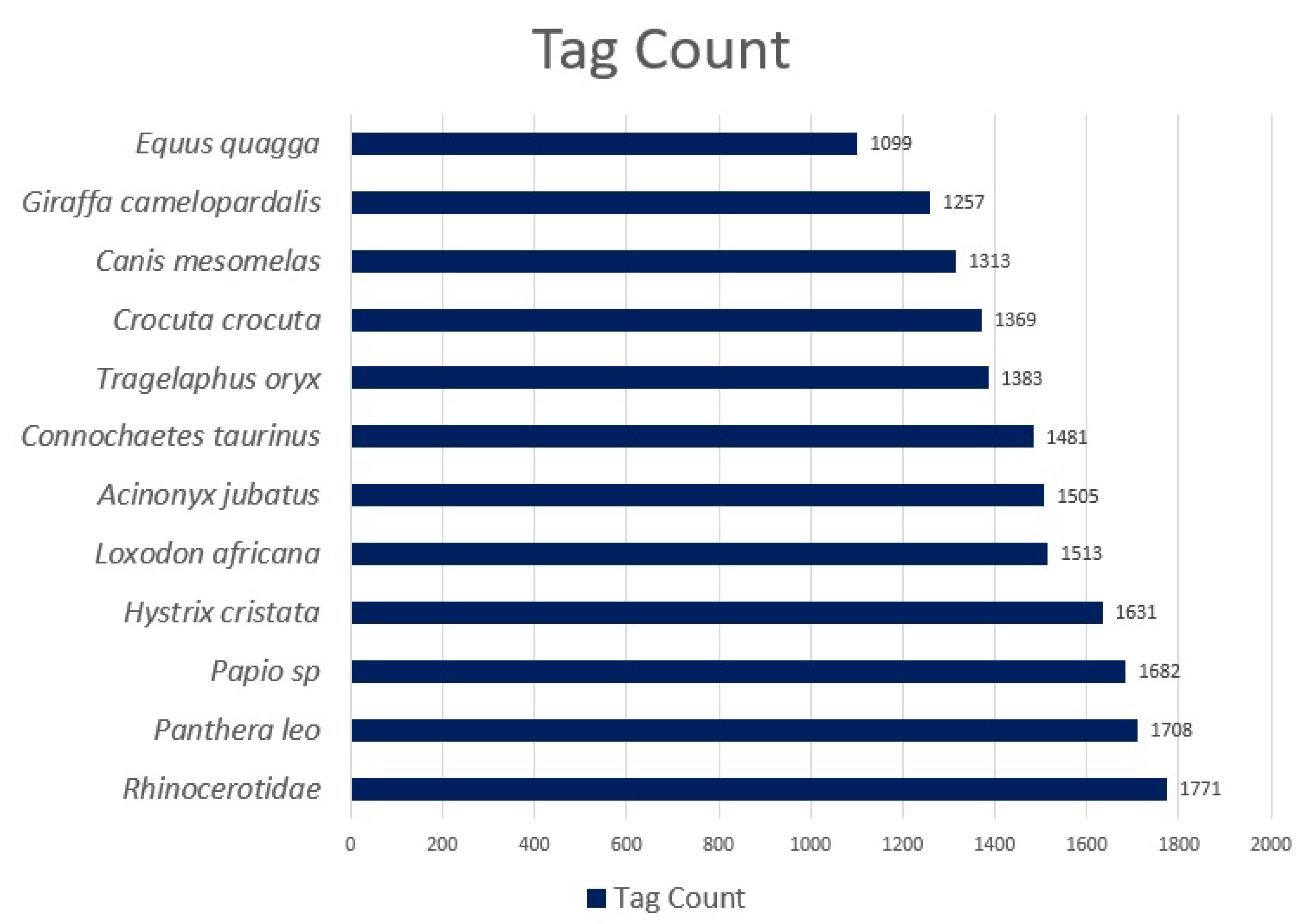

Figure 2.

Following quality checks, between 1099 and 1771 tags per species were retained (17,712 in total). Tags are labelled binding boxes that mark the location of an object of interest (animal) within an image. Binding boxes were added to an image using the Conservation AI tagging website, which are serialised as coordinates in an XML file using the PASCAL VOC format [

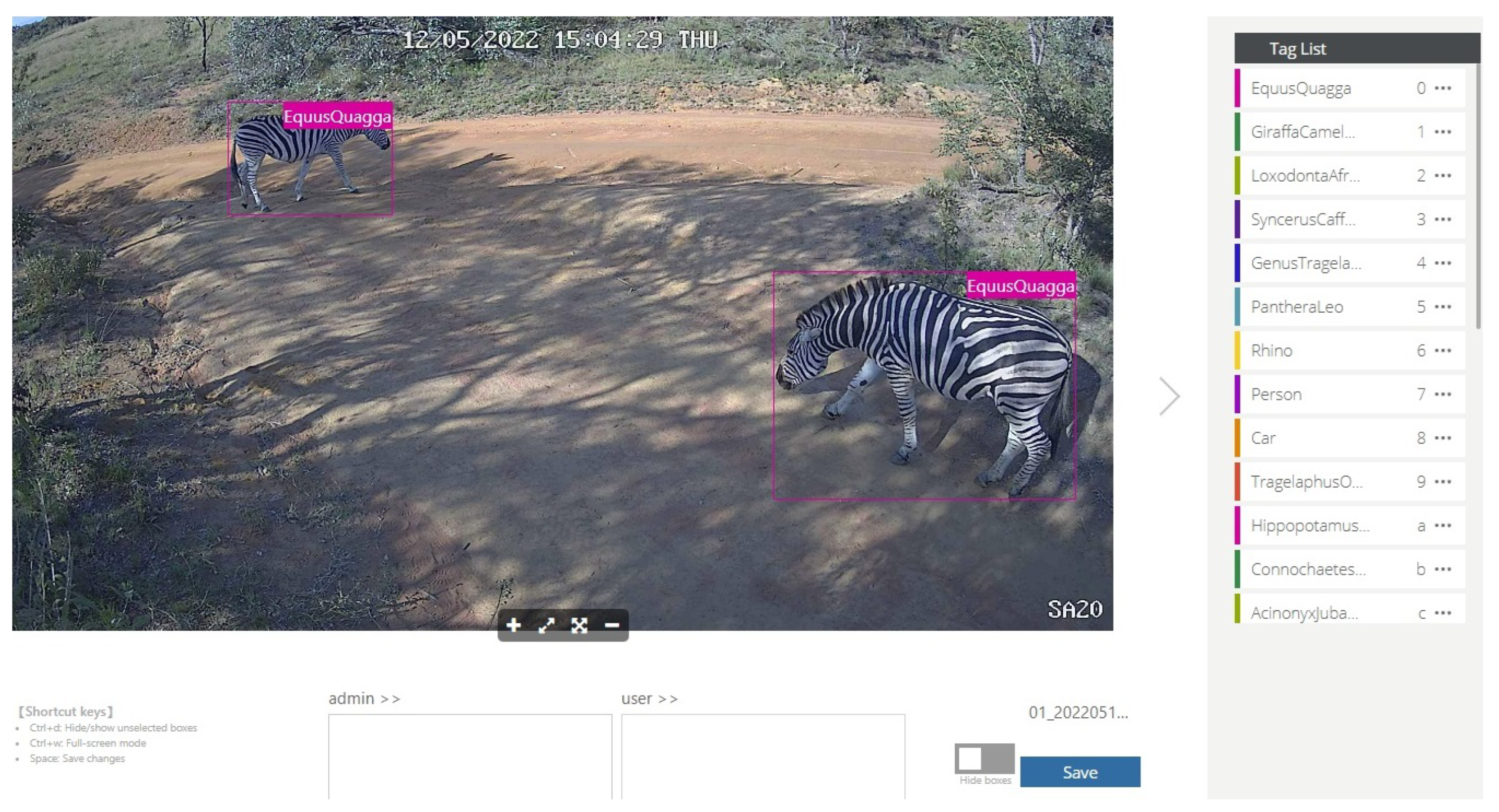

82]. The Conservation AI tagging site is shown in

Figure 3.
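PASCAL VOC annotations store one `<object>` element per bounding box, with the class in `<name>` and pixel coordinates in `<bndbox>`. The sketch below, using only Python's standard library, shows how such a file could be read back into (label, box) tuples before conversion to TFRecords. The sample XML is invented for illustration, and `read_voc_boxes` is a hypothetical helper, not part of the Conservation AI tooling.

```python
import xml.etree.ElementTree as ET

def read_voc_boxes(xml_text: str) -> list[tuple[str, int, int, int, int]]:
    """Return (label, xmin, ymin, xmax, ymax) for every <object> in a PASCAL VOC file."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        label = obj.findtext("name")
        bb = obj.find("bndbox")
        # Some tools write coordinates as floats, so parse via float before int.
        coords = [int(float(bb.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((label, *coords))
    return boxes

# Hypothetical annotation for one image containing a single giraffe.
SAMPLE = """<annotation>
  <filename>cam07_0001.jpg</filename>
  <size><width>1920</width><height>1072</height><depth>3</depth></size>
  <object>
    <name>Giraffa camelopardalis</name>
    <bndbox><xmin>410</xmin><ymin>120</ymin><xmax>780</xmax><ymax>960</ymax></bndbox>
  </object>
</annotation>"""

print(read_voc_boxes(SAMPLE))
```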

XML files are an intermediate representation used to generate TFRecords (a simple format for storing a sequence of binary records). Before the TFRecords were created, the tagged dataset was randomly split into a training set (90%, 15,941 tags) and a validation set (10%, 1771 tags). Using the TensorFlow Object Detection API, the training and validation datasets were serialised into two separate TFRecord files and used to train the Sub-Saharan Africa model. The trained model was evaluated over the course of the trial with images obtained from 27 fixed Reolink Go 3/4G cameras installed in Welgevonden Game Reserve in Limpopo Province, South Africa.
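A seeded random 90/10 split like the one described can be sketched as follows. The paper does not say whether the split was made per tag or per image, or which random seed was used, so the seed and the helper name `split_train_val` are assumptions; the sketch simply shows that rounding 10% of 17,712 items reproduces the reported 15,941/1771 partition.

```python
import random

def split_train_val(items, val_fraction=0.1, seed=42):
    """Shuffle a copy of `items` reproducibly and return (train, val) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]

train, val = split_train_val(range(17_712))
print(len(train), len(val))  # 15941 1771
```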

Figure 4 shows an example of a camera being installed.

The camera resolution was configured to 1920 × 1072 pixels with 96 dots per inch (dpi). This configuration closely matches the aspect ratio resizer set in the pipeline.config file used during the training of the Faster R-CNN. The infrared (IR) trigger [83] was set to high sensitivity, and each camera was fitted with a camouflage sleeve and fastened to a tree once a connection to the Vodacom 3/4G network had been established (the camera plays an audible “Connection Succeeded” message). Each camera contained a rechargeable lithium-ion battery that was charged by a solar panel fastened to the tree and connected to the camera via a USB Type-B cable. The cameras had an IP65 waterproof rating, providing protection against rain and low-pressure water jets. Designed for security purposes, the cameras had a much wider aspect ratio than conventional camera traps, enabling animals to be detected at greater distances and across a wider area. The installation process spanned seven days and covered an area of approximately 400 km² (36,000 hectares). Camera sites were chosen along game paths, water sources, and grazing lawns. The cameras and solar panels were screwed into Burkea africana trees, out of reach of Loxodonta africana, which are known to destroy camera traps in the reserve.

The Reolink Go cameras were donated for the study by Reolink, and the 3/4G SIM cards were donated by Vodacom. The installed camera traps captured 12 different species over a ten-month period, starting in May 2022 and ending in February 2023. During the trial period, 18,520 detections were made and 19,380 blanks were reported, totalling 37,900 images.

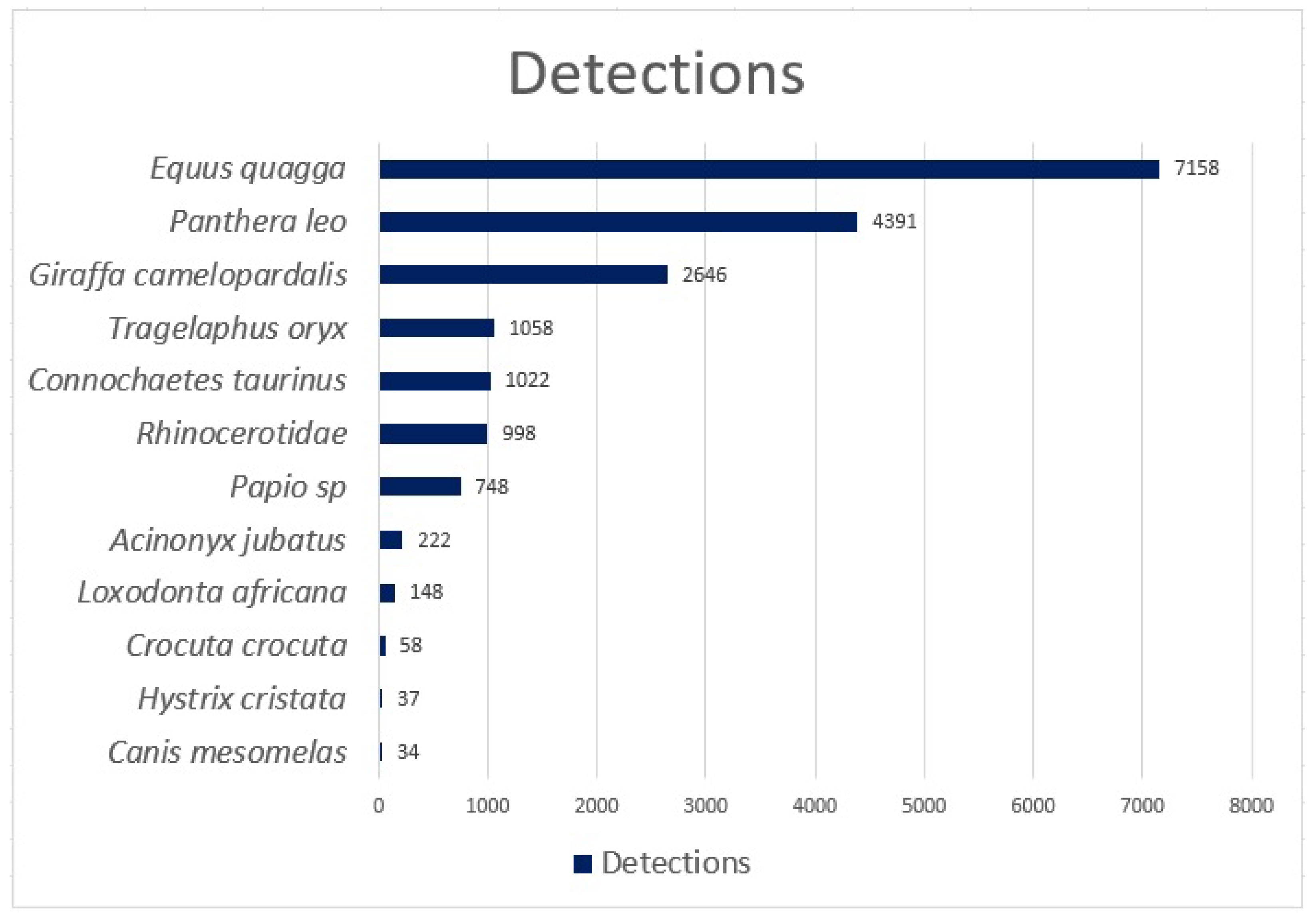

Figure 5 summarises the number of species identified during the trial.

The 37,900 images were used to generate ten subsampled datasets to make the evaluation more tractable. A 5% margin of error and a 95% confidence level were maintained in each dataset by using 29 images from each of the 12 animal classes and 29 randomly selected blank images (each of the 10 datasets contained 377 images). The evaluation metrics were calculated for each of the 10 datasets and averaged to produce a final set of metrics for the model. This is a common approach in object detection, and it provides a more comprehensive understanding of the performance of an object detection model because it:

Increases diversity. Object detection models perform differently on different datasets due to variation in object types, sizes, orientations, and backgrounds. Using multiple datasets increases the diversity of the data, which allows the model to be evaluated using a wide range of scenarios.

Ensures robustness. Models that perform well on a single dataset may not generalise well to new datasets. Evaluating the model on multiple datasets and averaging the results assesses its robustness and its ability to perform consistently across different datasets.

Provides a fair comparison. When comparing the performance of different object detection models, it is important to evaluate them on the same datasets. Using multiple datasets and averaging the results reduces the impact of dataset bias and provides a more reliable estimate of the model’s true performance.

Gives more representative results. Object detection evaluation metrics, such as mAP, can be sensitive to small variations in detection results. By averaging the results across multiple datasets, we can obtain more representative and stable evaluation metrics that reflect the model’s overall performance.
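The figure of 377 images per dataset (29 images × 13 classes, including blanks) is consistent with Cochran's sample-size formula with a finite-population correction, $n_0 = z^2 p(1-p)/e^2$ and $n = n_0 / (1 + (n_0 - 1)/N)$, if the population $N$ is taken to be the 18,520 detections; using all 37,900 images instead gives 381. The paper does not state which formula or population was used, so this worked example is an assumption about how the number may have been derived, not a description of the authors' procedure.

```python
import math

def cochran_sample_size(population: int, z: float = 1.96, p: float = 0.5, margin: float = 0.05) -> int:
    """Cochran's sample size with finite-population correction, rounded up."""
    n0 = z**2 * p * (1 - p) / margin**2      # infinite-population size, about 384.16
    n = n0 / (1 + (n0 - 1) / population)     # finite-population correction
    return math.ceil(n)

print(cochran_sample_size(18_520))  # 377
print(cochran_sample_size(37_900))  # 381
```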

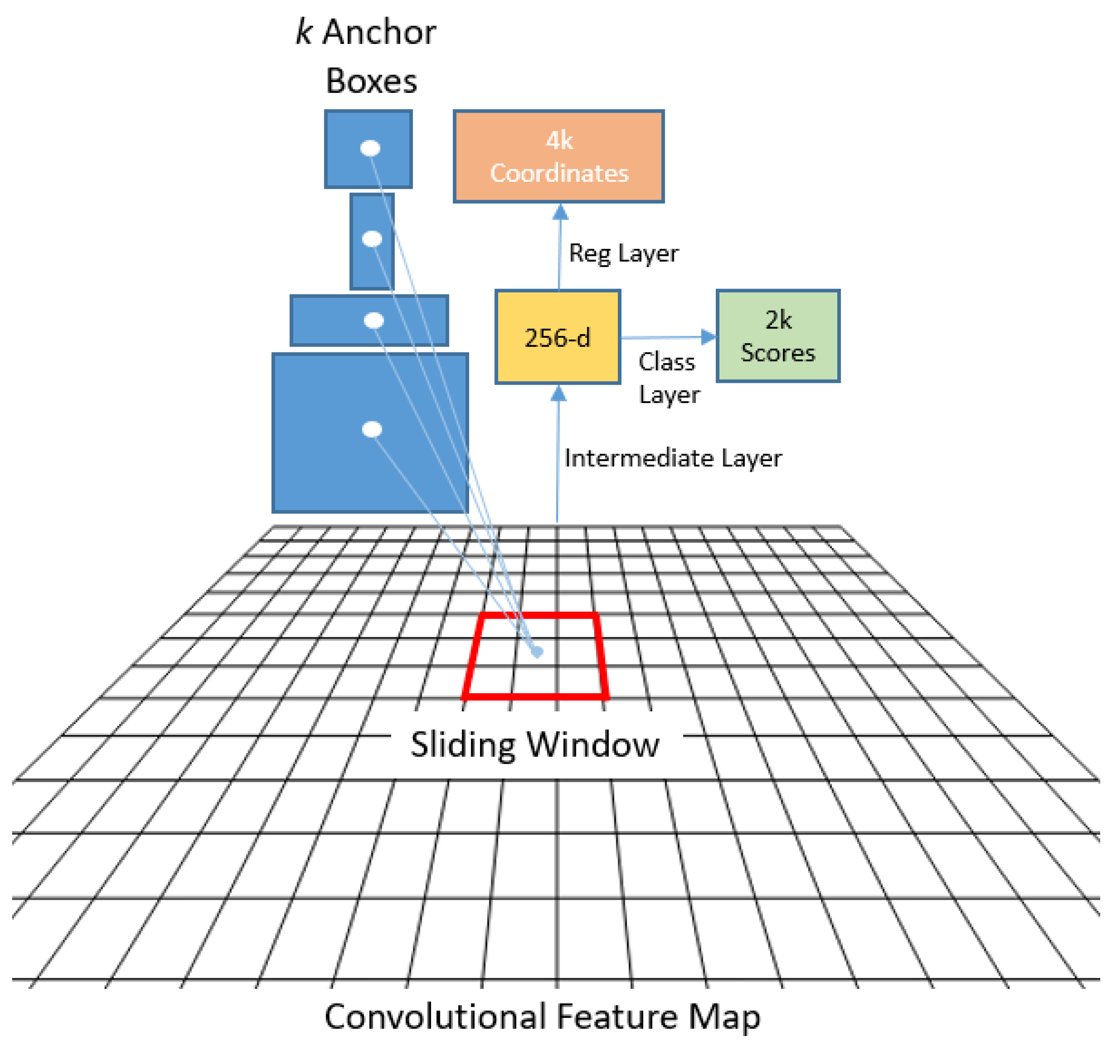

3.2. Faster R-CNN

The Faster R-CNN architecture was trained to detect and classify the 12 animal species [79]. The architecture comprises three components: (a) a convolutional neural network (CNN) [84] that generates feature maps [85] and performs classification, (b) a region proposal network (RPN) [79] that generates regions of interest (RoIs), and (c) a regressor that locates each object in the image and assigns a class label. Figure 6 shows the Faster R-CNN architecture.

The RPN is a crucial component of the Sub-Saharan Africa model, as it identifies image regions that are likely to contain animals by leveraging the features learned in the base network (ResNet101 in this case [86]). Unlike early R-CNN networks [87], which relied on a selective search approach [88] to generate region proposals at the pixel level, the RPN operates at the feature map level, generating bounding boxes of different sizes and aspect ratios throughout the image, as depicted in Figure 7.

The RPN achieves this by using anchors, fixed reference bounding boxes in 9 distinct size and aspect ratio configurations, to predict object locations. It is implemented as a CNN that takes the feature map supplied by the base network as input. A set of anchors is generated for each position on the feature map, and because the feature map preserves the spatial layout of the original image, each anchor corresponds to a region of the original image.
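The sketch below generates nine anchors per feature-map position (three scales by three aspect ratios) and centres them on each cell of a feature map with a 16-pixel stride, expressing them in input-image pixels. The scales (128, 256, 512) and ratios (0.5, 1, 2) are those of the original Faster R-CNN paper and are assumed here; the anchor settings in the trained model's pipeline.config may differ.

```python
import math

def base_anchors(scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (width, height) for each scale/aspect-ratio pair; ratio = height / width."""
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)   # keeps the anchor area w * h equal to s * s
            h = s * math.sqrt(r)
            anchors.append((w, h))
    return anchors

def tile_anchors(feat_h, feat_w, stride=16, **kwargs):
    """Centre every base anchor on each feature-map cell, in input-image pixel coordinates."""
    boxes = []
    for y in range(feat_h):
        for x in range(feat_w):
            cx, cy = (x + 0.5) * stride, (y + 0.5) * stride
            for w, h in base_anchors(**kwargs):
                boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes

# A 64 x 64 feature map (a 1024 x 1024 input at stride 16) yields 64 * 64 * 9 anchors.
print(len(tile_anchors(64, 64)))  # 36864
```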

The RPN produces two outputs for each anchor box: an objectness probability score and a set of bounding box coordinates. The first output is a binary classification that indicates whether or not the anchor box contains an object, while the second provides a bounding box regression adjustment. During training, each anchor is classified as belonging to either the foreground or the background. Foreground anchors are those that have an intersection over union (IoU) greater than 0.5 with a ground-truth object, while background anchors are those that do not. To create mini-batches, 256 foreground and background anchors are randomly sampled in balanced proportions, and each mini-batch is used to calculate the classification loss using binary cross-entropy. If a mini-batch contains no foreground anchors, the anchors with the highest IoU overlap with the ground-truth objects are selected as foreground anchors, ensuring that the network always has positive samples to learn from. Additionally, the anchors marked as foreground in the mini-batch are used to calculate the regression loss, which learns to transform each anchor into the bounding box of its object. The IoU is defined as the ratio of the area of intersection to the area of union of the anchor box $A$ and the ground-truth box $B$:

$$\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}$$
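The IoU computation, anchor labelling, and balanced mini-batch sampling described above can be sketched in plain Python. The 0.5 foreground threshold and the 256-anchor mini-batch follow the text; capping foreground anchors at half the batch, applying the highest-IoU fallback per image rather than per mini-batch, and the function names are simplifying assumptions, not details reported for the Sub-Saharan Africa model.

```python
import random

def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def label_anchors(anchors, gt_boxes, fg_thresh=0.5):
    """Label each anchor 1 (foreground) or 0 (background) by its best IoU with any ground truth."""
    best = [max(iou(a, g) for g in gt_boxes) for a in anchors]
    labels = [1 if v > fg_thresh else 0 for v in best]
    if not any(labels):
        # No anchor passes the threshold: promote the highest-overlap anchor to foreground.
        labels[best.index(max(best))] = 1
    return labels

def sample_minibatch(labels, batch_size=256, seed=0):
    """Randomly pick up to batch_size anchor indices, at most half of them foreground."""
    rng = random.Random(seed)
    fg = [i for i, l in enumerate(labels) if l == 1]
    bg = [i for i, l in enumerate(labels) if l == 0]
    fg = rng.sample(fg, min(len(fg), batch_size // 2))
    bg = rng.sample(bg, min(len(bg), batch_size - len(fg)))
    return fg, bg

print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # 50 / 150, about 0.333
```

When there are few foreground anchors, the remaining slots are filled with background anchors, so the batch stays at 256 whenever enough anchors exist.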

Since anchors can overlap, proposals may also overlap on the same object. To address this, non-maximum suppression (NMS) is performed [89]: proposals are sorted by objectness score, and any proposal whose IoU with a higher-scoring proposal exceeds a threshold is discarded. An IoU greater than 0.7 is indicative of a positive object detection, while values less than 0.3 describe background objects. Care is needed when setting the NMS IoU threshold: setting it too low may lead to missed proposals for objects, while setting it too high may result in too many proposals for the same object. Typically, an IoU threshold of 0.6 is sufficient. Once NMS has been applied, the top N proposals sorted by score are selected.
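A greedy NMS, as commonly implemented, keeps the highest-scoring proposal, discards every remaining proposal whose IoU with it exceeds the threshold, and repeats. The plain-Python sketch below uses the typical threshold of 0.6 mentioned above; `top_n` stands in for N, and its default of 300 is an assumption. It repeats a small IoU helper so that it runs on its own.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_thresh=0.6, top_n=300):
    """Greedy non-maximum suppression; returns indices of kept boxes, best score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order and len(keep) < top_n:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box by more than the threshold.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU of about 0.68
```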

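The loss functions for both the classifier and the bounding box regression are defined as:

$$L(\{p_i\}, \{t_i\}) = \frac{1}{N_{cls}} \sum_i L_{cls}(p_i, p_i^*) + \lambda \frac{1}{N_{reg}} \sum_i p_i^* L_{reg}(t_i, t_i^*)$$

where

$$L_{reg}(t_i, t_i^*) = \sum_{j \in \{x, y, w, h\}} \mathrm{smooth}_{L_1}(t_{i,j} - t_{i,j}^*), \qquad \mathrm{smooth}_{L_1}(x) = \begin{cases} 0.5x^2 & \text{if } |x| < 1 \\ |x| - 0.5 & \text{otherwise} \end{cases} \tag{3}$$

where $p_i$ is the predicted probability that anchor $i$ contains an object, $t_i$ is the $i$th anchor coordinate, $p_i^*$ is the ground-truth label, $t_i^*$ is the ground-truth coordinate, $L_{cls}$ is the classification loss (log loss), and $L_{reg}$ is the regression loss (smooth L1 loss). $N_{cls}$ and $N_{reg}$ are normalisation terms, and $\lambda$ balances the two terms.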
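The RPN loss above can be evaluated directly on a handful of anchors. In this sketch, $N_{cls}$ is the number of sampled anchors and $N_{reg}$ the number of positive anchors; these normalisations, $\lambda = 1$, and the clipping constant are assumptions (the original Faster R-CNN paper normalises the regression term by the number of anchor locations and uses $\lambda = 10$).

```python
import math

def smooth_l1(x: float) -> float:
    """Smooth L1: quadratic for |x| < 1, linear otherwise."""
    return 0.5 * x * x if abs(x) < 1 else abs(x) - 0.5

def log_loss(p: float, label: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for one anchor's objectness probability."""
    p = min(max(p, eps), 1 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def rpn_loss(probs, labels, deltas, target_deltas, lam=1.0):
    """Mean log loss over sampled anchors plus lam * mean smooth L1 over positives (p_i* = 1)."""
    cls = sum(log_loss(p, l) for p, l in zip(probs, labels)) / len(probs)
    pos = [i for i, l in enumerate(labels) if l == 1]
    reg = sum(
        sum(smooth_l1(t - ts) for t, ts in zip(deltas[i], target_deltas[i])) for i in pos
    ) / max(len(pos), 1)
    return cls + lam * reg

# One positive anchor whose x offset is off by 0.5, one well-classified negative.
print(rpn_loss([0.9, 0.1], [1, 0], [[0.5, 0, 0, 0], [0, 0, 0, 0]], [[0, 0, 0, 0], [0, 0, 0, 0]]))
```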

After the RPN has generated object proposals, the next task is to classify each bounding box and assign it a category. In the Faster R-CNN framework, this is accomplished by cropping the convolutional feature map using each proposal and then resizing the crops to 14 × 14 × convdepth using interpolation. To obtain a final 7 × 7 × 512 feature map for each proposal via RoI pooling, max pooling with a 2 × 2 kernel is applied after cropping. These default dimensions are set by the Faster R-CNN [87] but can be customised for the specific use case of the second stage.
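The final 2 × 2 max-pooling step that turns each 14 × 14 crop into a 7 × 7 grid can be illustrated on a single channel in plain Python. The crop-and-resize step that produces the 14 × 14 input is assumed to have happened already, and the integer grid is a stand-in for real feature values; in practice the framework pools all channels at once.

```python
def max_pool_2x2(grid):
    """2 x 2 max pooling with stride 2 on a square, single-channel grid (list of lists)."""
    n = len(grid)
    if n % 2:
        raise ValueError("grid size must be even")
    return [
        [max(grid[r][c], grid[r][c + 1], grid[r + 1][c], grid[r + 1][c + 1]) for c in range(0, n, 2)]
        for r in range(0, n, 2)
    ]

crop = [[r * 14 + c for c in range(14)] for r in range(14)]  # stand-in for one 14 x 14 channel
pooled = max_pool_2x2(crop)
print(len(pooled), len(pooled[0]))  # 7 7
```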

The Faster R-CNN architecture takes the 7 × 7 × 512 feature map for each proposal, flattens it into a one-dimensional vector, and passes the vector through two fully connected layers of size 4096 with rectified linear unit (ReLU) activation [90]. To classify the object category, an additional fully connected layer with N + 1 units is used, where N is the total number of classes and the extra unit corresponds to background objects. In parallel, a second fully connected layer with 4N units predicts the bounding box regression parameters. These four parameters are $t_x$, $t_y$, $t_w$, and $t_h$ for each of the N possible classes. The Faster R-CNN architecture is illustrated in Figure 8.
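The four regression parameters are conventionally defined relative to the proposal (or anchor) centre and size, as in the original Faster R-CNN paper: $t_x = (x - x_a)/w_a$, $t_y = (y - y_a)/h_a$, $t_w = \log(w/w_a)$, $t_h = \log(h/h_a)$. The helpers below sketch this encoding and its inverse; they omit the per-coordinate scaling factors that some implementations apply, and the example boxes are invented.

```python
import math

def encode(box, anchor):
    """Regression targets (tx, ty, tw, th) of an (x1, y1, x2, y2) box relative to an anchor."""
    xa, ya = (anchor[0] + anchor[2]) / 2, (anchor[1] + anchor[3]) / 2
    wa, ha = anchor[2] - anchor[0], anchor[3] - anchor[1]
    x, y = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    w, h = box[2] - box[0], box[3] - box[1]
    return ((x - xa) / wa, (y - ya) / ha, math.log(w / wa), math.log(h / ha))

def decode(t, anchor):
    """Inverse of encode: apply (tx, ty, tw, th) to an anchor to recover a box."""
    xa, ya = (anchor[0] + anchor[2]) / 2, (anchor[1] + anchor[3]) / 2
    wa, ha = anchor[2] - anchor[0], anchor[3] - anchor[1]
    x, y = t[0] * wa + xa, t[1] * ha + ya
    w, h = wa * math.exp(t[2]), ha * math.exp(t[3])
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)

anchor = (100, 100, 228, 228)
gt = (110, 90, 250, 240)
print(encode(gt, anchor))
```

Predicting log-scale width and height keeps the decoded box sizes positive whatever value the network outputs.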

Targets in the Faster R-CNN are computed in a similar way to RPN targets, but with the different classes taken into account. Proposals with an IoU greater than 0.5 with any ground-truth box are assigned to that ground truth. Proposals with an IoU between 0.1 and 0.5 are designated as background, while proposals with no intersection are ignored. Targets for bounding box regression are computed for proposals that have been assigned a class based on the IoU threshold, by determining the offset between each proposal and its corresponding ground-truth box. The Faster R-CNN is trained using backpropagation [91] and stochastic gradient descent [92]. The loss function in the Faster R-CNN is calculated as follows:

$$L(p, u, t^u, v) = L_{cls}(p, u) + \lambda [u \geq 1] L_{loc}(t^u, v)$$

where $p$ represents the predicted class probabilities, $u$ represents the ground-truth class, $t^u$ represents the predicted bounding box regression for class $u$, and $v$ represents the ground-truth bounding box regression target. The Iverson bracket $[u \geq 1]$ equals 1 for object classes and 0 for the background class ($u = 0$), so background proposals contribute no regression loss. Specifically, the classification loss function $L_{cls}$ is given by:

$$L_{cls}(p, u) = -\log p_u = -\sum_{k=0}^{K} [k = u] \log p_k$$

where $p$ is the predicted probability distribution over the $K + 1$ classes (including background), $u$ is the ground-truth class, and $K$ is the total number of object classes. The localisation loss $L_{loc}$ for bounding box regression can be calculated using the equation described in (3), with $t^u$ and $v$ as input.
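For a single proposal, the multi-task loss above reduces to a cross-entropy term plus a smooth L1 term that is switched off for background. The sketch assumes $\lambda = 1$ and a small floor on the probability to avoid $\log 0$; both are illustrative choices rather than the trained model's settings.

```python
import math

def smooth_l1(x):
    """Smooth L1: quadratic for |x| < 1, linear otherwise."""
    return 0.5 * x * x if abs(x) < 1 else abs(x) - 0.5

def detection_loss(class_probs, u, t_u, v, lam=1.0):
    """Fast R-CNN style loss for one proposal.

    class_probs: softmax over K + 1 classes, index 0 = background.
    u: ground-truth class index; t_u: predicted (tx, ty, tw, th) for class u;
    v: regression target for the matched ground-truth box.
    """
    l_cls = -math.log(max(class_probs[u], 1e-7))
    # The Iverson bracket [u >= 1] disables box regression for background proposals.
    l_loc = sum(smooth_l1(a - b) for a, b in zip(t_u, v)) if u >= 1 else 0.0
    return l_cls + lam * l_loc

probs = [0.1, 0.7, 0.2]
print(detection_loss(probs, 1, (0.5, 0, 0, 0), (0, 0, 0, 0)))  # -log(0.7) + 0.125
```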

To refine the object detections, the Faster R-CNN applies a bounding box adjustment step that considers the class with the highest probability for each proposal. Proposals assigned to the background class are ignored. Once the final set of objects has been determined based on the class probabilities, NMS is applied to filter out overlapping boxes. A probability threshold is also set so that only highly confident detections are returned, thereby minimising false positives.

For the complete Faster R-CNN model, there are two losses for the RPN and two for the R-CNN. The four losses are combined through a weighted sum, whose weights can be adjusted to give the classification losses more prominence than the regression losses, or to give the R-CNN losses more influence than the RPN losses.
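The weighted combination can be written in a few lines. The weights and loss values in the usage line are illustrative placeholders, not the settings or results of the trial; doubling the two classification weights is one way to give classification more prominence than regression.

```python
def total_loss(rpn_objectness, rpn_localisation, box_classification, box_localisation,
               weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted sum of the two RPN losses and the two box-classifier (R-CNN) losses."""
    terms = (rpn_objectness, rpn_localisation, box_classification, box_localisation)
    return sum(w * loss for w, loss in zip(weights, terms))

# Emphasise the two classification losses over the two localisation losses.
print(total_loss(0.2, 0.1, 0.3, 0.1, weights=(2.0, 1.0, 2.0, 1.0)))  # about 1.2
```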

3.3. Transfer Learning

The Faster R-CNN model was fine-tuned in this study using the dataset containing 12 animal classes; this is known as transfer learning [

93]. This crucial technique combats overfitting [

94], which is a common problem in deep learning when training with limited data. The base model used was ResNet101, a residual neural network [

86] that was pre-trained on the COCO dataset consisting of 330,000 images and 1.5 million object instances [

82]. Residual neural networks employ a highway network architecture [

95], which enables efficient training in deep neural networks using skip connections to mitigate the issue of vanishing or exploding gradients.

3.4. Model Training

The model was trained on a 3U blade server with a 24-core AMD EPYC 7352 CPU, 512 GB of RAM, and eight NVIDIA Quadro RTX 8000 graphics cards totalling 384 GB of GPU memory [96]. To create the training pipeline, we used TensorFlow 2.5 [97], the TensorFlow Object Detection API [98], CUDA 10.2, and cuDNN 7.6. The TensorFlow configuration file was customised with several hyperparameters to optimise the training process:

Setting both the minimum and maximum dimensions of the aspect ratio resizer to 1024 pixels to minimise the scaling effect on the data.

Retaining the default 16-pixel stride of the feature extractor, which helps maintain high-resolution feature maps while keeping training time reasonable.

Setting the batch size to 64 to ensure that the GPU memory limits are not exceeded.

Setting the learning rate to 0.0004 to prevent large variations in response to the error.

In order to improve generalisation and to account for variance in the camera trap images, the following augmentation settings were used:

Random_adjust_hue, which adjusts the hue of an image using a random factor.

Random_adjust_contrast, which adjusts the contrast of an image by a random factor.

Random_adjust_saturation, which adjusts the saturation of an image by a random factor.

Random_square_crop_by_scale, which was set with a scale_min of 0.6 and a scale_max of 1.3.
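To illustrate what the colour augmentations do, the sketch below applies contrast, hue, and saturation jitter to RGB pixels in the range [0, 1] using only the standard library. The contrast formula, (x − mean) × factor + mean per channel, matches the one used by `tf.image.adjust_contrast`; the random ranges, the clipping, and the helper names are assumptions for illustration, not the settings used in the trial.

```python
import colorsys
import random

def adjust_contrast(pixels, factor):
    """Scale each channel's deviation from its mean, then clip to [0, 1]."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    return [
        tuple(min(max((p[c] - means[c]) * factor + means[c], 0.0), 1.0) for c in range(3))
        for p in pixels
    ]

def adjust_hue_saturation(pixels, hue_delta, sat_factor):
    """Shift hue (wrapping around) and scale saturation in HSV space."""
    out = []
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        h = (h + hue_delta) % 1.0
        s = min(max(s * sat_factor, 0.0), 1.0)
        out.append(colorsys.hsv_to_rgb(h, s, v))
    return out

def random_colour_jitter(pixels, rng):
    """Apply random contrast, then random hue and saturation; ranges are illustrative."""
    pixels = adjust_contrast(pixels, rng.uniform(0.8, 1.25))
    return adjust_hue_saturation(pixels, rng.uniform(-0.02, 0.02), rng.uniform(0.8, 1.25))

print(random_colour_jitter([(0.5, 0.4, 0.3), (0.2, 0.3, 0.1)], random.Random(0)))
```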

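The model was trained using the Adam optimiser to minimise the loss function [99]. Unlike optimisers that rely on a single learning rate (alpha) throughout the training process, such as stochastic gradient descent [100], Adam uses moving averages of the gradients $m_t$ and squared gradients $v_t$, along with the parameters $\beta_1$ and $\beta_2$, to adapt the effective step size for each parameter. Adam is defined as:

$$m_t = \beta_1 m_{t-1} + (1 - \beta_1) g_t$$

$$v_t = \beta_2 v_{t-1} + (1 - \beta_2) g_t^2$$

where $g_t$ is the gradient at step $t$, and $m_t$ and $v_t$ are estimates of the first and second moments of the gradients. Both $m_t$ and $v_t$ are initialised with zeros. Because this initialisation biases the estimates towards zero, bias-corrected first and second moment estimates are computed:

$$\hat{m}_t = \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t}$$

Parameters are updated using the Adam update rule:

$$\theta_t = \theta_{t-1} - \alpha \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}$$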
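The update equations can be traced on a one-dimensional problem. This sketch minimises $f(x) = x^2$; the default $\alpha = 0.0004$ mirrors the learning rate in Section 3.4, while $\beta_1 = 0.9$, $\beta_2 = 0.999$, and $\epsilon = 10^{-8}$ are the defaults proposed with Adam and are assumed here. Because of the bias correction, the very first step has a magnitude of almost exactly $\alpha$.

```python
import math

def adam_minimise(grad, theta, alpha=0.0004, beta1=0.9, beta2=0.999, eps=1e-8, steps=1):
    """Run `steps` Adam updates on a scalar parameter given its gradient function."""
    m = v = 0.0                        # first and second moment estimates start at zero
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)   # bias-corrected first moment
        v_hat = v / (1 - beta2 ** t)   # bias-corrected second moment
        theta -= alpha * m_hat / (math.sqrt(v_hat) + eps)
    return theta

# Minimise f(x) = x**2 (gradient 2x) from x = 1 with a large step for visibility.
print(adam_minimise(lambda x: 2 * x, theta=1.0, alpha=0.1, steps=1))  # about 0.9
```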

To overcome the saturation problem common to sigmoid and hyperbolic tangent (tanh) activations, which are only sensitive to changes around the mid-point of their input [101], the ReLU activation function is adopted [102]. ReLU is defined as:

$$f(x) = \max(0, x)$$

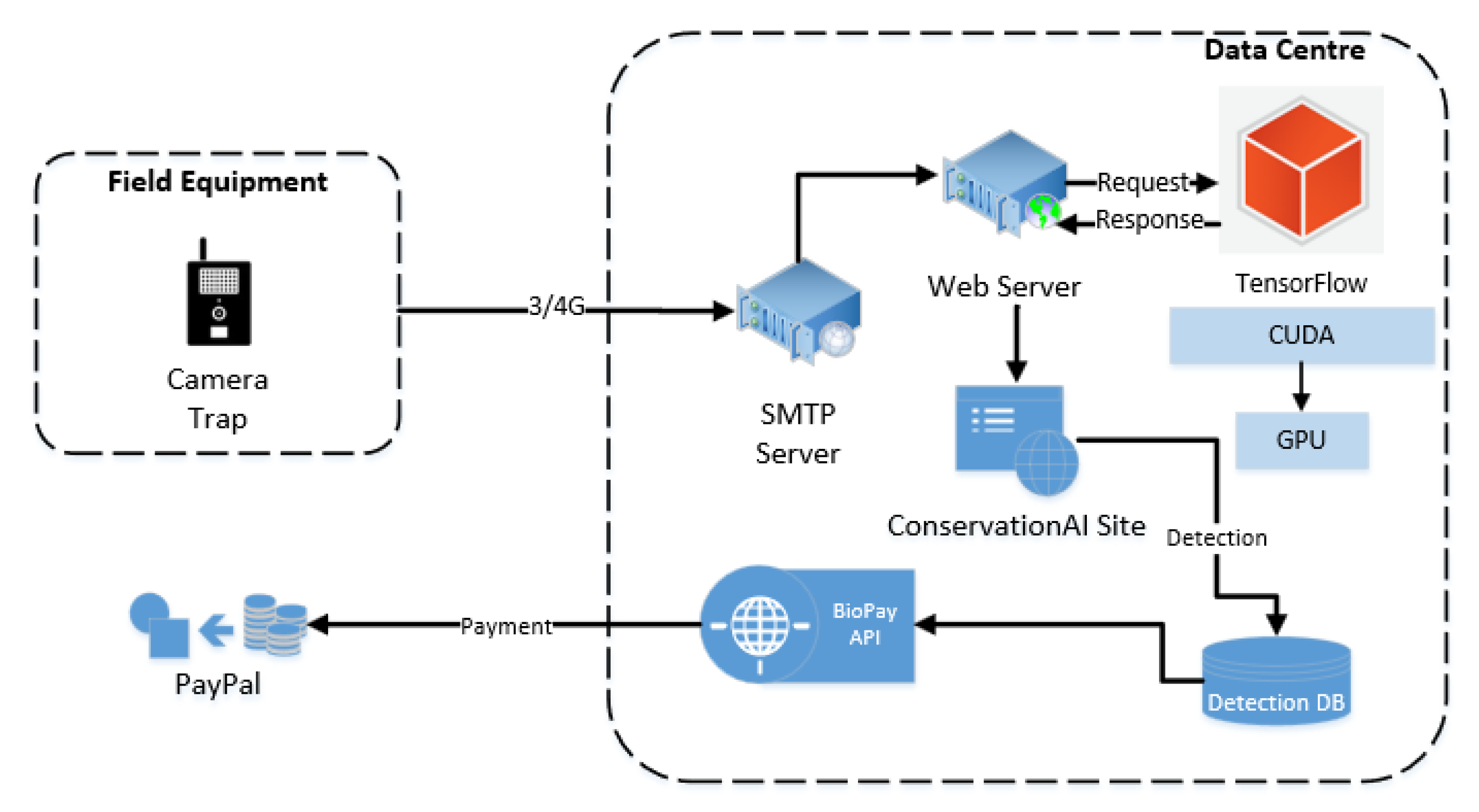

3.5. Inference Pipeline

The Sub-Saharan Africa model was hosted on an NVIDIA Triton Inference Server (version 22.08) on a custom-built machine with an Intel Xeon E5-1630v3 CPU, 256 GB of RAM, and an NVIDIA Tesla T4 GPU [103]. The real-time cameras transmitted images over 3/4G every time the IR sensor was triggered. The trigger distance was set to “High”, which supports a 9 m (30 ft) trigger range [36]. Images were received from the cameras using the Simple Mail Transfer Protocol (SMTP) [104] and submitted to the Sub-Saharan Africa model using a REST API [105]. Detection data were stored in a MySQL database; the images themselves were stored in local directories, and the database contains hyperlinks to all images with detections.
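The first hop of this pipeline, pulling JPEG attachments out of an alert e-mail and preparing them for an HTTP POST, can be sketched with Python's standard `email` and `urllib` modules. The addresses, the endpoint URL, and the raw-bytes payload are hypothetical; the actual Conservation AI REST interface is not described here, so the request is built but never sent.

```python
import urllib.request
from email import policy
from email.message import EmailMessage
from email.parser import BytesParser

def extract_images(raw_message: bytes) -> list[tuple[str, bytes]]:
    """Return (filename, bytes) for every image attachment in a raw SMTP message."""
    msg = BytesParser(policy=policy.default).parsebytes(raw_message)
    return [
        (part.get_filename(), part.get_content())
        for part in msg.iter_attachments()
        if part.get_content_maintype() == "image"
    ]

def build_inference_request(image: bytes, url: str) -> urllib.request.Request:
    """Prepare (without sending) an HTTP POST of raw JPEG bytes to a hypothetical endpoint."""
    return urllib.request.Request(url, data=image, headers={"Content-Type": "image/jpeg"}, method="POST")

# Simulate a camera alert e-mail carrying one JPEG attachment.
alert = EmailMessage()
alert["From"] = "camera07@example.org"
alert["To"] = "inference@example.org"
alert["Subject"] = "Motion detected"
alert.set_content("IR trigger")
alert.add_attachment(b"\xff\xd8 fake jpeg bytes", maintype="image", subtype="jpeg", filename="cam07.jpg")

images = extract_images(alert.as_bytes())
reqs = [build_inference_request(data, "https://inference.example.org/detect") for _, data in images]
print(images[0][0], reqs[0].get_method())  # cam07.jpg POST
```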

3.6. BioPay

BioPay is a REST API service provided by Conservation AI (it is not a public-facing service but an experimental module for research purposes only; see Figure 9).

The service transferred funds between individual species accounts and a guardian account each time a camera was triggered and an associated animal was detected (see Figures 1, 10, 11, and 12 for sample detections). To manage funds, 12 separate makeshift bank accounts, each dedicated to a distinct species, and a central guardian makeshift account were created using the PayPal Sandbox Developer SDKs [106]. Upon successful classification of an animal, 0.1 GBP was securely transferred from the corresponding species account to the guardian account. Each account was credited with GBP 100 at the start of the trial.
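The bookkeeping performed by BioPay can be sketched as an in-memory ledger. This is a hypothetical stand-in, not the PayPal Sandbox SDK or the Conservation AI service: balances are held in integer pence so that repeated small transfers never accumulate floating-point error, the guardian account is assumed to start at zero so that its balance equals total payouts, and the per-detection amount is a parameter (the usage line uses the 1 penny from the Introduction and the Figure 1 example).

```python
from collections import Counter

class Ledger:
    """Hypothetical in-memory stand-in for the species and guardian accounts (pence)."""

    def __init__(self, species, opening_pence=10_000):
        self.balances = {name: opening_pence for name in species}  # GBP 100 per species
        self.balances["guardian"] = 0  # assumption: guardian starts empty

    def pay_for_detections(self, detected_species, amount_pence):
        """Move amount_pence from each detected animal's species account to the guardian."""
        owed = Counter(detected_species)
        # Check every account first so that a shortfall leaves all balances untouched.
        for species, count in owed.items():
            if self.balances[species] < count * amount_pence:
                raise ValueError(f"insufficient funds in the {species} account")
        for species, count in owed.items():
            self.balances[species] -= count * amount_pence
            self.balances["guardian"] += count * amount_pence

ledger = Ledger(["Equus quagga", "Giraffa camelopardalis"])
ledger.pay_for_detections(["Equus quagga"] * 3, amount_pence=1)  # three detections at 1p each
print(ledger.balances)
```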

3.7. Evaluation Metrics

RPNLoss/objectness, RPNLoss/localisation, BoxClassifierLoss/classification, BoxClassifierLoss/localisation, and TotalLoss are used to evaluate the model during training [97,107]. RPNLoss/objectness evaluates the model’s ability to generate bounding boxes and classify them as background or foreground objects. RPNLoss/localisation measures the precision of the RPN’s bounding box regressor coordinates for foreground objects, that is, how closely each anchor target matches the nearest bounding box. BoxClassifierLoss/classification measures the loss of the final classifier’s predictions, and BoxClassifierLoss/localisation measures the localisation performance of the bounding box regressor. TotalLoss combines all the losses to provide an overall measure of the model’s performance.

The validation set was evaluated during training using mAP (mean average precision), a standard measure for assessing object detection models. mAP is defined as:

$$\mathrm{mAP} = \frac{1}{Q} \sum_{q=1}^{Q} \mathrm{AP}(q)$$

where $Q$ is the number of queries in the set and $\mathrm{AP}(q)$ is the average precision (AP) for a given query $q$.
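Average precision for one class can be computed from ranked detections, and mAP is then the mean over classes. The sketch below uses VOC-style all-point interpolation on invented detections. COCO-style evaluation, whose metric names (such as mAP@.50IOU and AR@100) appear in the Discussion, instead samples 101 recall points and averages over IoU thresholds from 0.50 to 0.95, so this sketch will not reproduce those numbers exactly.

```python
def average_precision(scores, is_tp, n_ground_truth):
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: 1 if that detection matched an
    unmatched ground-truth box at the chosen IoU threshold, else 0.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        tp += is_tp[i]
        fp += 1 - is_tp[i]
        recalls.append(tp / n_ground_truth)
        precisions.append(tp / (tp + fp))
    # Pad the curve, then make precision non-increasing when read from right to left.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Area under the interpolated curve, summed wherever recall changes.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1) if mrec[k + 1] != mrec[k])

def mean_average_precision(ap_values):
    """mAP = (1/Q) * sum of AP(q) over the Q queries (here, one query per class)."""
    return sum(ap_values) / len(ap_values)

print(average_precision([0.9, 0.8, 0.7], [1, 0, 1], n_ground_truth=2))  # about 0.833
```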

mAP was computed for the bounding box locations using the final two checkpoints. The calculation involves measuring the IoU between the predicted bounding box $B_p$ and the ground-truth bounding box $B_{gt}$, which is expressed as:

$$\mathrm{IoU} = \frac{\mathrm{area}(B_p \cap B_{gt})}{\mathrm{area}(B_p \cup B_{gt})}$$

Detection accuracy and localisation accuracy were measured using two distinct IoU thresholds, namely @.50 and @.75, respectively. The @.50 threshold evaluates overall detection accuracy, while the stricter @.75 threshold focuses on the model’s ability to localise objects accurately.

Accuracy, precision, sensitivity, specificity, and F1-score were used to evaluate the performance of the trained model during inference, in other words, using the image data collected during the trial. Accuracy is defined as:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP is true positives, TN is true negatives, FP is false positives, and FN is false negatives. The accuracy metric provides an overall assessment of the object detection model’s ability to make inferences on unseen data. This metric is often interpreted alongside the other metrics defined below.

Precision was used to assess the model’s ability to make true-positive detections and is defined as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

It measures the fraction of true-positive detections out of all detections made by the trained model. In object detection, a true-positive detection occurs when the model correctly identifies an object and predicts its location in the image. A high precision indicates that the model has a low rate of false positives, meaning that when it makes a positive detection, it is highly likely to be correct.

Sensitivity, also known as recall, measures the proportion of actual positives that the trained model correctly detects during inference. In other words, it measures the model's ability to detect all positive instances in the dataset. A high sensitivity indicates that the model has a low rate of false negatives, meaning that when an object is present in the image, the model is highly likely to detect it. This metric is defined as:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$

Specificity measures the proportion of actual negatives that the model correctly identifies as negative. In object detection, true negatives refer to areas of an image where there is no object of interest. In this paper, we evaluate blank images to satisfy this metric, as it is important to ensure that classifications are based on features extracted from animals and not the background. Specificity is defined as:

$$\mathrm{Specificity} = \frac{TN}{TN + FP}$$

Finally, the F1-score combines precision and recall into a single score by taking their harmonic mean. A high F1-score indicates that the model has both high precision and high recall, meaning that it can accurately identify and localise objects in the image. F1-score is defined as:

$$F_{1} = 2 \times \frac{\mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

The ground truths for the 10 subsampled datasets were provided by conservationists and biologists who appear as co-authors of the paper. The ground truths were used to evaluate the detections generated by the model during the trial.
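Once detections have been compared with these ground truths and tallied as TP, TN, FP, and FN, the five inference metrics follow directly from their definitions. The sketch below is illustrative only: the counts are made up rather than taken from the trial, the function names are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention (some libraries warn or return NaN instead).

```python
def _safe_divide(numerator, denominator):
    """Return 0.0 instead of raising when a metric is undefined (e.g. no detections)."""
    return numerator / denominator if denominator else 0.0


def evaluation_metrics(tp, tn, fp, fn):
    """Accuracy, precision, sensitivity (recall), specificity, and F1-score from counts."""
    precision = _safe_divide(tp, tp + fp)
    sensitivity = _safe_divide(tp, tp + fn)
    return {
        "accuracy": _safe_divide(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": _safe_divide(tn, tn + fp),
        # Harmonic mean of precision and recall.
        "f1": _safe_divide(2 * precision * sensitivity, precision + sensitivity),
    }


# Illustrative counts only (not results from the trial).
for name, value in evaluation_metrics(tp=8, tn=88, fp=2, fn=2).items():
    print(f"{name}: {value:.4f}")
```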

5. Discussion

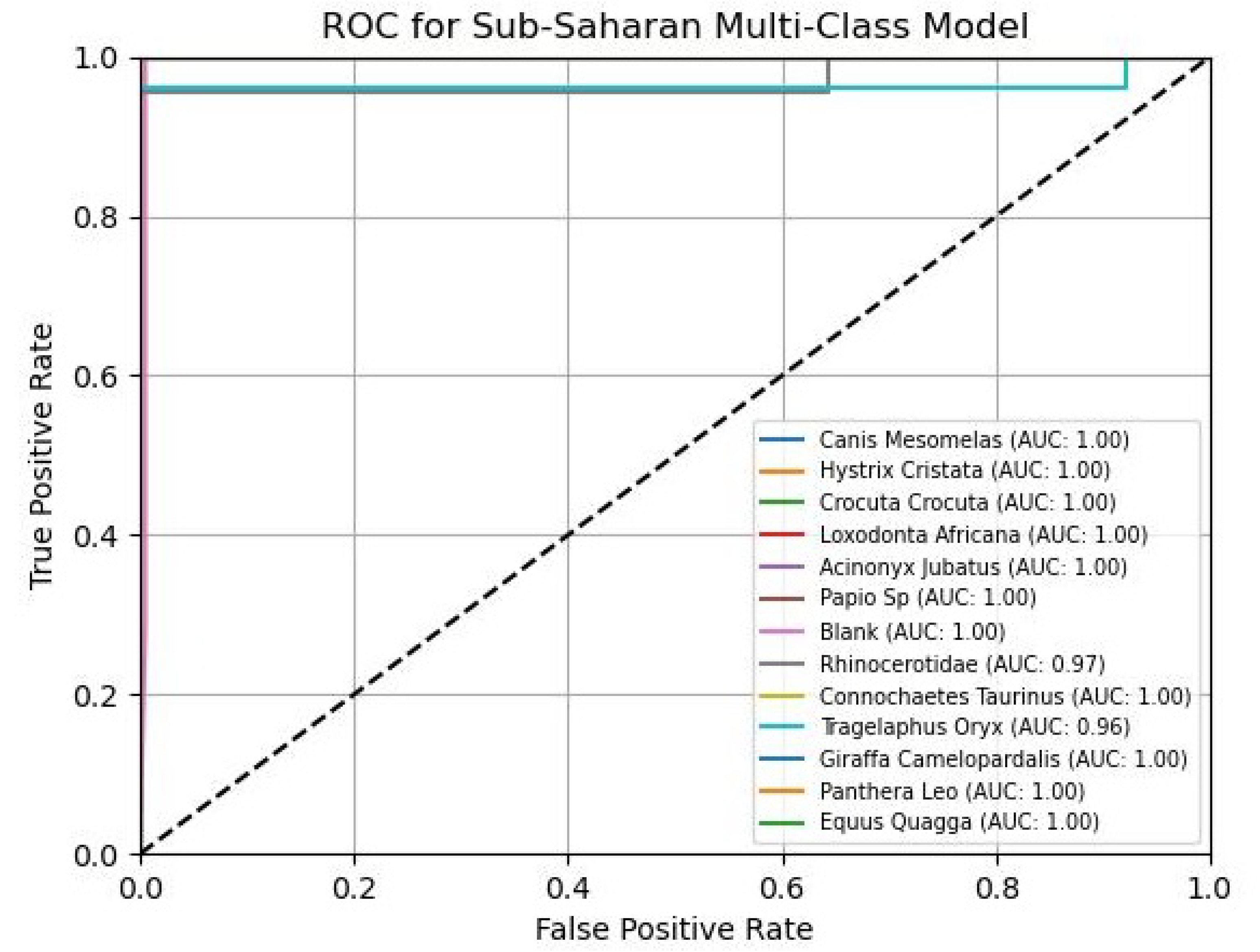

Using deep learning for species identification and 3/4G camera traps for capturing images of animals, we successfully deployed a Sub-Saharan Africa model capable of detecting 12 distinct animal species. The deep learning model was trained with between 1099 and 1771 tags per animal, which enabled us to effectively monitor a 400 km² region of Welgevonden Game Reserve in Limpopo Province, South Africa, using 27 real-time 3/4G camera traps over a period of 10 months. During the model training phase, it was possible to obtain good results for the RPN (objectness loss = 0.0366 and localisation loss = 0.0112 for the training set; objectness loss = 0.0530 and localisation loss = 0.0384 for the validation set). The box classifier losses for classification and localisation were also good (classification loss = 0.1833 and localisation loss = 0.0261 for the training set; classification loss = 0.1533 and localisation loss = 0.0242 for the validation set). Combining these losses gave a total loss of 0.2244 for the training set and 0.2690 for the validation set. Again, these are good results for an object detection model. The precision and recall results obtained for the validation set were also good, with mAP = 0.7542, mAP@.50IOU = 0.9449, mAP@.75IOU = 0.8601, AR@1 = 0.6239, AR@100 = 0.8140, and AR@100(Large) = 0.8357. The results show that the model can accurately identify objects in images with high precision and recall while maintaining a high level of localisation accuracy.

Throughout the ten-month trial, the sensitivity (96.38%), specificity (99.6%), precision (87.14%), F1-score (90.33%), and accuracy (99.31%) metrics were consistently high for most species, thereby confirming the training results. However, the precision scores for Hystrix cristata and Acinonyx jubatus were lower, at 77.09% and 59.33%, respectively.

Figure 10 shows a sample image of a Hystrix cristata and indicates that these animals appear as small objects in the image, which aligns with the model's limited ability to detect small objects, as evidenced in Table 3 and Table 4. Similarly, Figure 11 shows a sample image of Acinonyx jubatus, which also appears small, making detection more challenging, especially at night when image quality is lower. It is also worth noting that the model was trained using traditional camera trap data. Historically, conservationists have mounted camera traps much lower down (closer to the ground), so the animal appears larger in the image. As can be seen in Figure 4, our camera traps were mounted much higher. This was done to prevent the cameras and solar panels from being damaged by passing wildlife. Mounting cameras lower down would remove cases where far-away animals are misclassified, as those animals would not be in the image. Camera deployment therefore needs further consideration.

Canis mesomelas, Crocuta crocuta, and Loxodonta africana were also misclassified as Acinonyx jubatus, Rhinocerotidae, and either Rhinocerotidae or Panthera leo, respectively, as evidenced in the confusion matrix in Table 6. Hystrix cristata was never misclassified, but Equus quagga and Rhinocerotidae were mistakenly classified as Hystrix cristata. Papio sp. was misclassified as either Crocuta crocuta or Acinonyx jubatus. Although Rhinocerotidae performed well, it was also mistakenly classified as Loxodonta africana, Panthera leo, or Hystrix cristata. Tragelaphus oryx and Connochaetes taurinus were occasionally misclassified as each other. Giraffa camelopardalis was identified correctly, and Panthera leo was always correctly identified.
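Per-class precision and recall can be read off a confusion matrix such as the one in Table 6. This helps explain how a class that is never misclassified (as reported for Hystrix cristata) can still have lower precision: every instance of another class predicted as it adds to its column total. The sketch assumes rows are ground-truth classes and columns are predicted classes, and it uses a hypothetical three-class matrix rather than the values in Table 6; the function name is illustrative.

```python
def per_class_precision_recall(confusion, labels):
    """Per-class (precision, recall) from a square confusion matrix.

    Assumes rows are ground-truth classes and columns are predicted classes.
    """
    results = {}
    for k, label in enumerate(labels):
        true_positives = confusion[k][k]
        predicted_as_k = sum(row[k] for row in confusion)  # column total
        actually_k = sum(confusion[k])                      # row total
        precision = true_positives / predicted_as_k if predicted_as_k else 0.0
        recall = true_positives / actually_k if actually_k else 0.0
        results[label] = (precision, recall)
    return results


# Hypothetical matrix: class "C" is never misclassified (recall 1.0), but some
# "A" and "B" instances are predicted as "C", which lowers its precision.
matrix = [
    [8, 0, 2],
    [0, 9, 1],
    [0, 0, 10],
]
for label, (p, r) in per_class_precision_recall(matrix, ["A", "B", "C"]).items():
    print(f"{label}: precision={p:.3f} recall={r:.3f}")
```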

Equus quagga also performed well, but low-quality images captured at night sometimes resulted in them being misclassified as another species. To address this issue, the Sub-Saharan Africa model was continuously trained, and many of the incorrect classifications encountered during the trial were identified and incorporated back into the training dataset to improve model performance. The version of the model used during the trial was version 18, while the current version is 22.

Distance was also a problem in the study [108]. As one would expect, animals closest to the camera are classified much better than those farther away. In the trial, the camera trigger distance was set to “High”, which allows objects to be detected up to 30 feet (9 m) away. Again, this configuration is not typical in traditional camera trap deployments. Detection success at greater distances also depends on the size of the animal. Detecting large animals, such as a Loxodonta africana or a Giraffa camelopardalis, is mostly successful, while detecting smaller animals, such as a Papio sp., is less so, or at least the confidence scores are significantly lower. This can be seen in Figure 13, where the Panthera leo has a lower confidence score than animals captured up close, and in Figure 1, where Equus quagga individuals captured close up have higher confidence scores than those in the distance. Not having a distance protocol in the study affected the inclusion criteria for evaluation. We evaluated farther detections that would not normally trigger the camera trap (animals closer to the camera were responsible for the trigger), and this likely affected the results in some instances. Further investigation is needed to define a protocol that maps distance to detection success and to incorporate it into the ground-truth object selection criteria for evaluation.

The BioPay service performed as expected, and the results show the successful transfer of funds between animals and the associated guardian. The detection results during inference show that overall detection success was high, with a small number of misclassifications. Each misclassification means that money would be transferred from a species account when that animal was not actually present. This will always be a difficult challenge to address, but in this trial the small margin of error is negligible. This may not hold once species are appropriately valued, because misclassifying a highly prized animal could transfer a large amount of money. This will need to be considered in future studies. Any BioPay system would need to be highly regulated, and guardians would have the right to raise concerns and appeal any decisions made. For example, a guardian might claim they have seen an animal that was not classified by the system. In this instance, the guardian would have the chance to present their evidence and dispute the outcome, much as individuals do in any other type of financial system. Depending on the outcome, the guardian would be paid or the claim would be dismissed.
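The paper does not describe BioPay's internals. As a hypothetical sketch only, assuming a ledger of species and guardian accounts, a fixed payment per detection, and a confidence threshold (none of which are specified in the source), the transfer-and-appeal flow described above could look like this. `Ledger`, `on_detection`, and `reverse_transfer` are illustrative names, not part of the deployed system.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Hypothetical BioPay-style ledger: species accounts pay guardian accounts."""

    balances: dict[str, float] = field(default_factory=dict)
    transfers: list[tuple[str, str, float]] = field(default_factory=list)

    def transfer(self, source: str, target: str, amount: float) -> None:
        """Move funds and record the transfer so it can be audited or reversed."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(source, 0.0) < amount:
            raise ValueError(f"insufficient funds in {source!r}")
        self.balances[source] = self.balances.get(source, 0.0) - amount
        self.balances[target] = self.balances.get(target, 0.0) + amount
        self.transfers.append((source, target, amount))


def on_detection(ledger: Ledger, species: str, guardian: str, confidence: float,
                 payment: float, threshold: float = 0.5) -> bool:
    """Pay the guardian from the species account if the detection is confident enough.

    The threshold and the fixed payment are assumptions for illustration.
    """
    if confidence < threshold:
        return False
    ledger.transfer(f"species:{species}", f"guardian:{guardian}", payment)
    return True


def reverse_transfer(ledger: Ledger, index: int) -> None:
    """Undo a recorded transfer, e.g. after an upheld dispute shows a misclassification."""
    source, target, amount = ledger.transfers[index]
    ledger.transfer(target, source, amount)


ledger = Ledger(balances={"species:Panthera leo": 100.0})
on_detection(ledger, "Panthera leo", "guardian-1", confidence=0.92, payment=10.0)
print(ledger.balances)
```

Recording every transfer, rather than only updating balances, is what makes the appeals process sketched in the text auditable: a disputed payment can be traced and reversed.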

Another important point to raise is the monetary incentive guardians would receive for caring for animals compared with the amount obtained from poaching. The former would have to be much higher if something such as BioPay is to have a chance of success. In other words, receiving £100 a month to ensure the safety of a Rhinocerotidae, compared with the few thousand pounds they would receive for its horn (poachers lower down the IWT chain receive significantly less than those close to the source of the sale [109]), would be unlikely to appeal to those involved in poaching. Another factor to consider is land opportunity cost [110]. If alternatives to conservation are very profitable (e.g., oil palm), then payments for species' presence would also need to be much higher to be effective.

Despite these issues, the results were encouraging. To the best of our knowledge, this is the first extensive evaluation that combines deep learning and 3/4G camera traps to monitor animal populations in real time with the provision of a monetary reward scheme for guardians. We acknowledge that the trial was limited in scope and that we would need to significantly increase the number of camera traps used in the study, as well as the number of species in the Sub-Saharan Africa model, for example to include Potamochoerus larvatus and Panthera pardus, which were captured during the trial. However, poor 3/4G signal and the ongoing destruction of camera traps by Loxodonta africana will likely affect the ability to scale this system up. Protective cases may help shield camera traps from animals, and at other sites the cameras will also need to be protected against theft.

We also recognise that a much longer study period is needed to fully evaluate the approach and connect BioPay to real monetary systems. However, an independent study would have to be conducted to value species before BioPay could be fully implemented [111]. Some of the factors to consider would be: (1) conservation status and rarity, especially if animals are endangered or threatened [112]; (2) economic value, such as ecotourism potential and medicinal value, which could influence their perceived value [113]; (3) ecological value, such as their role in maintaining ecosystem health or providing ecosystem services; for example, white rhinoceros, in addition to being of high tourism value, are also facilitators, providing other grazing herbivores with improved grazing conditions [114]; (4) cultural significance, which can affect perceived value, for example, through being considered sacred or having an important role in traditional cultural practices [115]; and (5) local knowledge about mammal behaviour, ecology, and uses, which could influence their perceived value [116].

Finally, any credible study would need to include guardians, and there would need to be associated stewardship and biodiversity measurement protocols to fully evaluate the utility and impact of the system; designing these will require careful consideration. Nonetheless, we believe that this work, inspired by “Interspecies Money”, provides a working blueprint and the necessary evidence to support a much bigger study.

6. Conclusions

This paper introduced an equitable digital stewardship and reward system for wildlife guardians that utilises deep learning and 3/4G camera traps to detect animal species in real time. This provides a blueprint that allows local stakeholders to be rewarded for the welfare services they provide. The findings are encouraging and show that distinct species in images can be detected with high accuracy [117]. Several similar species detection studies have been reported in the literature [118,119,120,121,122,123,124]. However, their central focus differs from that of this paper. By combining deep learning and 3/4G camera traps, analysis occurs as a single unified process that records detections and raises alerts. This allows services to be layered on top of this technology to derive insights in real time and promote new innovations in conservation.

We proposed a BioPay service in this paper that builds on this idea. It is a disruptive but necessary service that aims to include and reward local stakeholders for the stewardship services they provide. As the literature in this paper indicates, local guardians are seen as crucial components in successful conservation [26]. However, the barrier to their success has been that they continue to face challenges with full participation in the crafting and implementation of biodiversity policies at local, regional, and global levels, and as such, are poorly compensated for the services they provide [125]. Many initiatives have excluded local stakeholders through management regimes that outlaw local practices and customary institutions [27]. Yet, the findings have shown that attempts to separate biodiversity and local livelihoods have yielded limited success: biodiversity often declines at the same time as the well-being of those who inhabit areas targeted for interventions [126]. BioPay includes and rewards local people, particularly the poorest among them, for the services they provide as and when animal species are detected within regions requiring biodiversity support.

This solution is rudimentary, and we acknowledge that implementing BioPay at scale will be difficult, as it is not clear who should actually receive payment. For example, should it go to the community as a whole or only to people who take an active interest in the care of, and provision of services for, animals that live in the locale? It may be best to let communities themselves decide who should be rewarded and how funds are spent [25]. Whatever the approach, payments will be conditional on the continued presence of species. Paying the guardians responsible for monitoring species' presence would make such a system scalable. Guardians could then redistribute the funds to those people who are in a position to ensure that the species or its habitat persists. BioPay will not fully address the complex nature of conservation and biodiversity management, but it may provide a tool to help redress the disproportionate allocation of global conservation funding by providing an equitable revenue-sharing scheme that includes and rewards local stakeholders for the services they provide.

Having a service such as BioPay may help forge much closer relationships between guardians of animal welfare, governments, and NGOs and improve conservation outcomes. An alternative view, however, might be to bypass governments and NGOs altogether and use automated blockchain payments [127] to pay guardians directly, which would make the roles of governments and NGOs less relevant and less in need of financing. This could increase the 1% allocation towards nature-based solutions referred to earlier in the paper. COP26 recognised the need to reward local stakeholders. However, as we pointed out in this paper, the USD 1.7 billion allocated is a fraction of the USD 124–143 billion allocated annually to organisations working in conservation. These funds rarely reach the poorest in local communities, who are most in need of support [128]. We believe that the findings in this paper provide a viable blueprint based on the “Interspecies Money” principle that will facilitate the transfer of funds between animals and their associated guardian groups.

Despite the encouraging results, however, there is a great deal of future work needed. The size of the study was insufficient to fully understand the complete set of requirements needed to implement the BioPay revenue-sharing scheme. A much larger representation of animals in the model is required to ensure equal representation for all animals in the environment being monitored. There also needs to be a detailed assessment to understand what each species is worth. There were no recipients for the BioPay funds transferred in the study, and a model for including local stakeholders would need to be defined; additionally, a clear understanding of who receives what money and under what circumstances this happens would be necessary.

Conservation AI is a growing platform that already has 28 active studies worldwide. At the time of writing, it has processed more than 5 million images in just over 12 months from 75 real-time cameras and historical datasets uploaded by partners. In the next 12 months, we anticipate significant growth, and this will allow us to run increasingly larger studies to help us address the limitations highlighted in this paper.

Overall, the results show potential, which we think warrants further investigation. This work is multidisciplinary and contributes to the machine learning and conservation fields. We hope that this study provides new insights into how deep learning algorithms combined with 3/4G camera traps can be used to measure and monitor biodiversity health and to provide revenue-sharing schemes that reward guardians for the wildlife and biodiversity services they provide.