1. Introduction

Remote sensing is an advanced Earth observation technology in modern society that acquires the electromagnetic wave characteristics of distant objects without physical contact [1]. With the continuous evolution of imaging spectroscopy, hyperspectral remote sensing has attracted much attention. A captured hyperspectral image (HSI) can be represented as a three-dimensional data cube containing rich spectral signatures and spatial features [2]. Therefore, hyperspectral imaging has been utilized in various vital areas, such as precision agriculture [3], environmental monitoring [4], and target detection [5]. Although HSI data are relatively easy to acquire, processing them intelligently remains a challenge. Classification, as an important intelligent processing method, has therefore received extensive attention [6].

Numerous HSI classification methods have been proposed so far. In the initial stage, many methods based on machine learning (ML) were explored, and tensor-based models [7,8] can also be applied to feature extraction and classification in hyperspectral imaging. Based on the type of features used, ML-based methods fall into two categories: spectral-based methods and spectral-spatial-based methods. In general, spectral-based methods treat an HSI as an assemblage of individual spectral signatures; examples include random forest [9], K-nearest neighbors [10], and the support vector machine (SVM) [11]. Furthermore, given the large number of spectral bands in an HSI, the classification task may be affected by the Hughes phenomenon, resulting in suboptimal classification accuracy. To alleviate this phenomenon, dimensionality reduction methods such as principal component analysis (PCA) [12] and linear discriminant analysis [13] are used. Their purpose is to map high-dimensional HSIs onto a low-dimensional feature space while preserving the distinctiveness of the various classes. A band selection approach based on heterogeneous regularization [14] has also been applied to HSIs; its principal objective is to select informative spectral bands and reduce the spectral dimension, which facilitates subsequent tasks. However, it is challenging to achieve high classification accuracy using spectral signatures alone, owing to the intra-class variability of spectral profiles across spatially distant regions and the scarcity of labeled samples [15]. To offset these shortcomings, spectral-spatial methods such as the Gabor wavelet transform [16] and local binary patterns [17] have been highly regarded by researchers. However, these approaches rely on hyperparameters tuned with a priori knowledge and cannot extract deep features from hyperspectral images, so they struggle to achieve desirable performance in complex scenes [18].
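To illustrate the PCA-based dimensionality reduction mentioned above (the experiments later reduce one network input to three components in the same way), the following pure-Python sketch treats every pixel as a spectral vector and projects it onto the leading principal components, which are found by power iteration with deflation on the band covariance matrix. The function name `pca_reduce`, the iteration count, and the synthetic pixels are illustrative assumptions. A practical pipeline would normally call an eigen-solver from a numerical library instead.

```python
import math
import random

def pca_reduce(pixels, k, iters=200, seed=0):
    """Project spectral vectors onto their top-k principal components.

    pixels: list of N spectral vectors (each a list of b band values).
    Uses power iteration with deflation on the b x b covariance matrix.
    """
    n, b = len(pixels), len(pixels[0])
    mean = [sum(p[j] for p in pixels) / n for j in range(b)]
    centered = [[p[j] - mean[j] for j in range(b)] for p in pixels]
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(b)]
           for i in range(b)]
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(b)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(b)) for i in range(b)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # remaining variance is (numerically) zero
                break
            v = [x / norm for x in w]
        # Rayleigh quotient = variance captured by this component
        eigval = sum(v[i] * sum(cov[i][j] * v[j] for j in range(b)) for i in range(b))
        components.append(v)
        # Deflation: remove this component so the next pass finds the next one
        cov = [[cov[i][j] - eigval * v[i] * v[j] for j in range(b)] for i in range(b)]
    # Low-dimensional representation: one k-vector per pixel
    return [[sum(r[j] * c[j] for j in range(b)) for c in components] for r in centered]

# Five synthetic 3-band pixels whose variance lies mostly along bands 1 and 2
pixels = [[float(t), float(t), 0.1 * (t % 2)] for t in range(5)]
reduced = pca_reduce(pixels, 2)
print(len(reduced), len(reduced[0]))  # 5 2
```

The first component captures the dominant direction (equal weight on the first two bands), so most of the spectral variance survives in far fewer dimensions.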

Compared with traditional classifiers, deep learning (DL)-based techniques have shown remarkable performance in various visual tasks owing to their powerful fitting and feature extraction capabilities. Since their inception in 2006 [19], these approaches have been successfully applied in fields such as semantic segmentation [20], image classification [21], and remote sensing image processing [22]. With high flexibility, automatic feature learning, and high precision, DL can capture high-level features from complex HSI data. Recently, DL-based classifiers have gained considerable research attention and are widely used because they automatically identify discriminative features. Representative DL architectures include stacked autoencoders [23], deep belief networks [24], recurrent neural networks [25], and convolutional neural networks (CNNs) [6,26,27]. A CNN offers automatic feature learning, parameter sharing, and parallel computation, making it particularly suitable for HSI classification. One-dimensional CNNs (1D-CNNs) [28,29] capture features from individual HSI pixels and use the obtained features for classification. Nevertheless, with a restricted number of labeled samples, it is difficult for a CNN to perform well when only spectral signatures are considered [30]. Additionally, the intra-class variability of spectral signatures leads to significant salt-and-pepper noise in the classification maps [31]. A two-dimensional CNN (2D-CNN) [32,33] extracts features in the spatial dimensions and can therefore better utilize spatial context information. However, as shown in [34], the complicated 3D structure of HSI data cannot be processed well by considering spectral signatures and spatial features separately: the joint spectral-spatial features, an essential factor affecting classification accuracy, are easily disregarded. As a result, three-dimensional CNNs (3D-CNNs) [35,36,37] have come to the fore. Unlike 2D-CNN kernels, 3D-CNN kernels slide along the spatial and spectral dimensions simultaneously to extract joint spectral-spatial features.
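To make the joint sliding concrete, the following pure-Python sketch applies a single 3-D kernel to a small cube with stride 1 and no padding ("valid" mode). The cube layout (rows × columns × bands), the function name `conv3d_valid`, and the averaging kernel are assumptions chosen for illustration. Real networks use framework layers with many kernels, padding, and bias terms.

```python
def conv3d_valid(cube, kernel):
    """Naive single-channel 3-D convolution (cross-correlation, stride 1, no padding).

    cube:   nested list indexed [row][col][band]
    kernel: nested list indexed [kr][kc][kb]
    """
    H, W, B = len(cube), len(cube[0]), len(cube[0][0])
    kh, kw, kb = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for r in range(H - kh + 1):
        row = []
        for c in range(W - kw + 1):
            bands = []
            for s in range(B - kb + 1):
                # The kernel window moves jointly along rows, columns and bands
                acc = 0.0
                for i in range(kh):
                    for j in range(kw):
                        for k in range(kb):
                            acc += cube[r + i][c + j][s + k] * kernel[i][j][k]
                bands.append(acc)
            row.append(bands)
        out.append(row)
    return out

# A 9 x 9 x 20 patch (band b has value b everywhere) and a 3 x 3 x 7 mean kernel
cube = [[[float(b) for b in range(20)] for _ in range(9)] for _ in range(9)]
kernel = [[[1.0 / 63] * 7 for _ in range(3)] for _ in range(3)]
out = conv3d_valid(cube, kernel)
print(len(out), len(out[0]), len(out[0][0]))  # 7 7 14
```

Unlike a 2-D convolution, which would sum over all bands at once, the output keeps a spectral axis (14 positions here), so spectral and spatial structure are modeled together.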

Attention mechanisms have the characteristics of concentrating on useful information and suppressing useless information, which can be used as an enhancement unit in the CNN structure, to optimize the classification results. Additionally, the weakness of a narrow CNN receptive field can be alleviated by the attention mechanism, by calculating dynamic global weights. Recently, CNNs combined with attention mechanisms have been favored by many researchers. The 4-dimensional CNN (4D-CNN) fuses spectral and spatial attention mechanisms [

38]. The attention mechanism can adaptively allocate the weights from different regions. The spectral and spatial information of 4D representations is processed by the CNN. The ultimate objective of 4D-CNN-based attention mechanisms is to help researchers capture important regions. Attention gate [

39], an algorithm that mimics human visual learning, can be combined with DL models. It helps the network focus on the target location and learns to minimize redundant information in the feature map. Feature points in each layer of the image are emphasized by the attention mechanism to reduce the loss of location information. Detail attention [

40] is located after the CNN layer and before the max pooling layer. It is applied to improve the feature map’s ability to concentrate on key regions and improve network performance. A modified squeeze-and-excitation network [

41] is embedded in a multiscale CNN to strengthen the feature representation capability. It serves as a channel attention mechanism to emphasize useful information, and helps boost network performance. Additionally, multiscale nonlocal spatial attention [

42], an essential component of a multiscale CNN, aggregates the dependencies of features in the learning process in multiscale space. It is employed for hyperspectral and multispectral image fusion. Although the convolution operation in a CNN captures the relationships between neighboring features of different inputs, these relationships are not utilized during the network training. This is the reason for the unsatisfactory results. The employment of a self-attention mechanism [

43] is a potential solution to the problem. However, the multi-head attention mechanism [

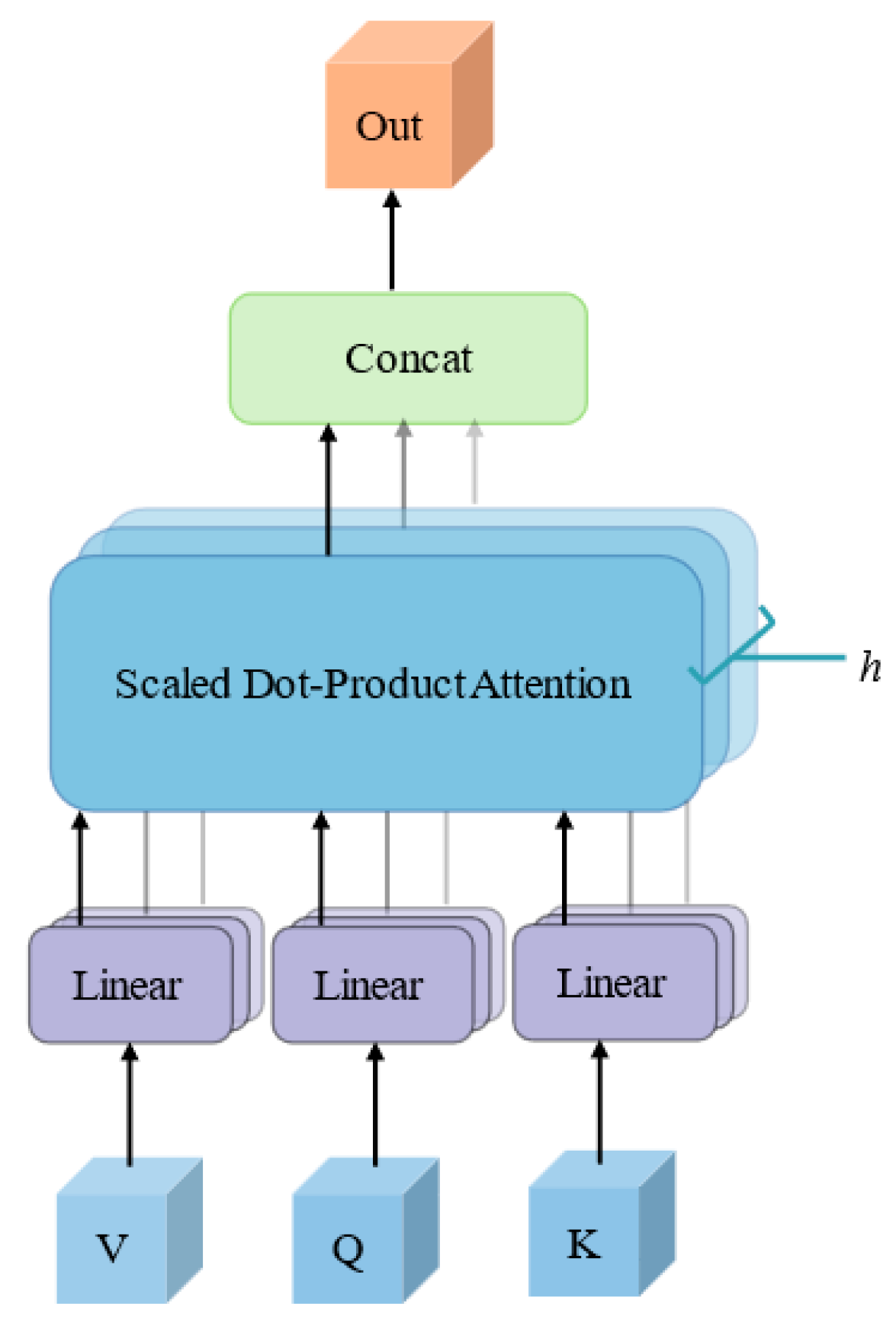

43] is proposed due to the fact that the self-attention mechanism has the weakness of over-focusing on its own position.
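A compact pure-Python sketch of standard multi-head scaled dot-product attention is given below. Queries, keys, and values are projected, split into `num_heads` subspaces of size d/num_heads, attended to independently with softmax(QKᵀ/√d_h)V, concatenated, and mixed by an output projection. The tokens could be, for example, pixels or spectral groups of an HSI patch. That tokenization, the helper names, and the identity weights in the usage example are assumptions, not the configuration of MHNA or of [43].

```python
import math

def matmul(a, b):
    """Plain matrix product of nested lists (rows of a times columns of b)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(xs):
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def multi_head_attention(x, wq, wk, wv, wo, num_heads):
    """x: n tokens x d features; wq, wk, wv, wo: d x d projection matrices."""
    d = len(x[0])
    assert d % num_heads == 0, "feature size must be divisible by the number of heads"
    dh = d // num_heads
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    concat = [[] for _ in x]
    for h in range(num_heads):
        lo, hi = h * dh, (h + 1) * dh
        # Each head attends within its own dh-dimensional subspace
        qh = [row[lo:hi] for row in q]
        kh = [row[lo:hi] for row in k]
        vh = [row[lo:hi] for row in v]
        for i, qi in enumerate(qh):
            scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(dh) for kj in kh]
            weights = softmax(scores)
            ctx = [sum(w * vj[c] for w, vj in zip(weights, vh)) for c in range(dh)]
            concat[i].extend(ctx)  # heads are concatenated in order
    # The output projection mixes information across heads
    return matmul(concat, wo)

eye = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
tokens = [[1.0, 0.0, 2.0, 0.0], [0.0, 1.0, 0.0, 2.0], [1.0, 1.0, 1.0, 1.0]]
out = multi_head_attention(tokens, eye, eye, eye, eye, num_heads=2)
print(len(out), len(out[0]))  # 3 4
```

Because each head computes its own attention weights, the two halves of a token's output can be drawn from different tokens. This is the "multiple subspaces" property that distinguishes multi-head attention from a single self-attention map.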

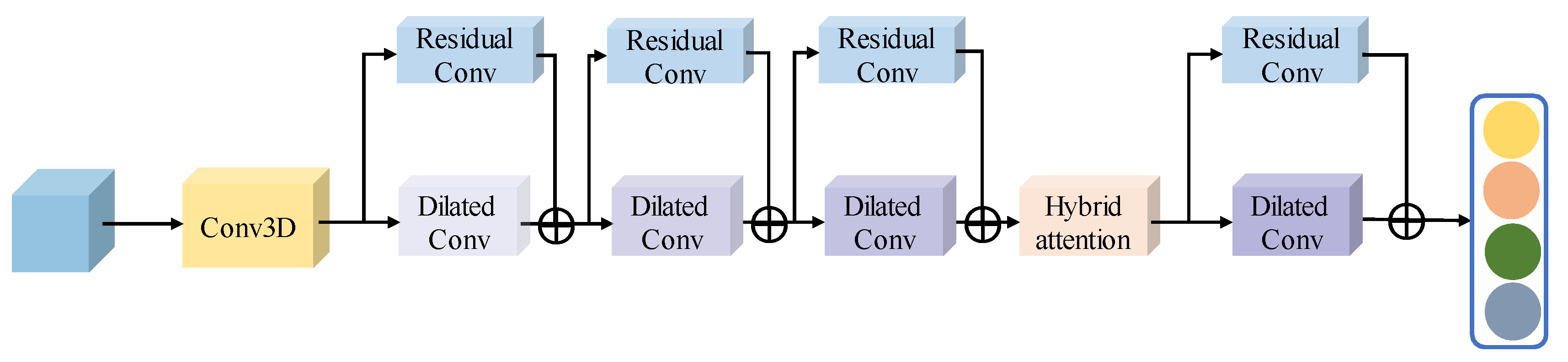

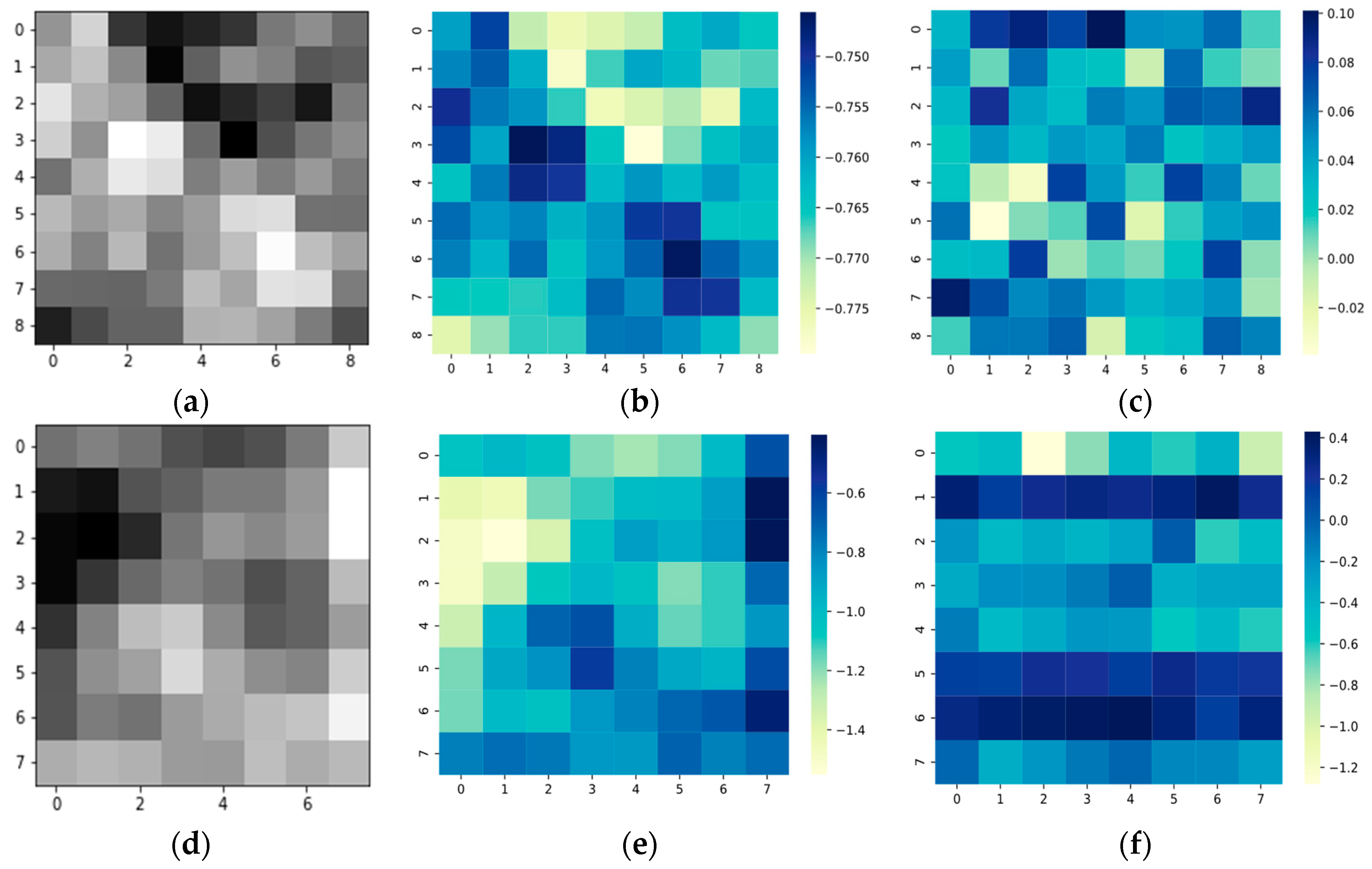

We designed an HSI classification network based on multiscale hybrid networks and attention mechanisms (MHNA). The designed approach comprises three stages: a spectral-spatial feature extraction network, a spatial inverted pyramid network, and a classification network. The spectral-spatial feature extraction network extracts spectral and spatial features, the spatial inverted pyramid network captures the spatial features of the HSI dataset, and the classification network integrates these features to generate the classification results. The multiscale hybrid structure is designed to extract complex spectral and spatial features when training samples are insufficient. In the spectral-spatial feature extraction network, dilated convolution combined with residual convolution alleviates the restricted receptive field and the vanishing gradient problem. Moreover, the residual convolution preserves shallow features from the low-level layers to reduce information loss. The main contributions of the proposed MHNA can be summarized as follows:

- (1)

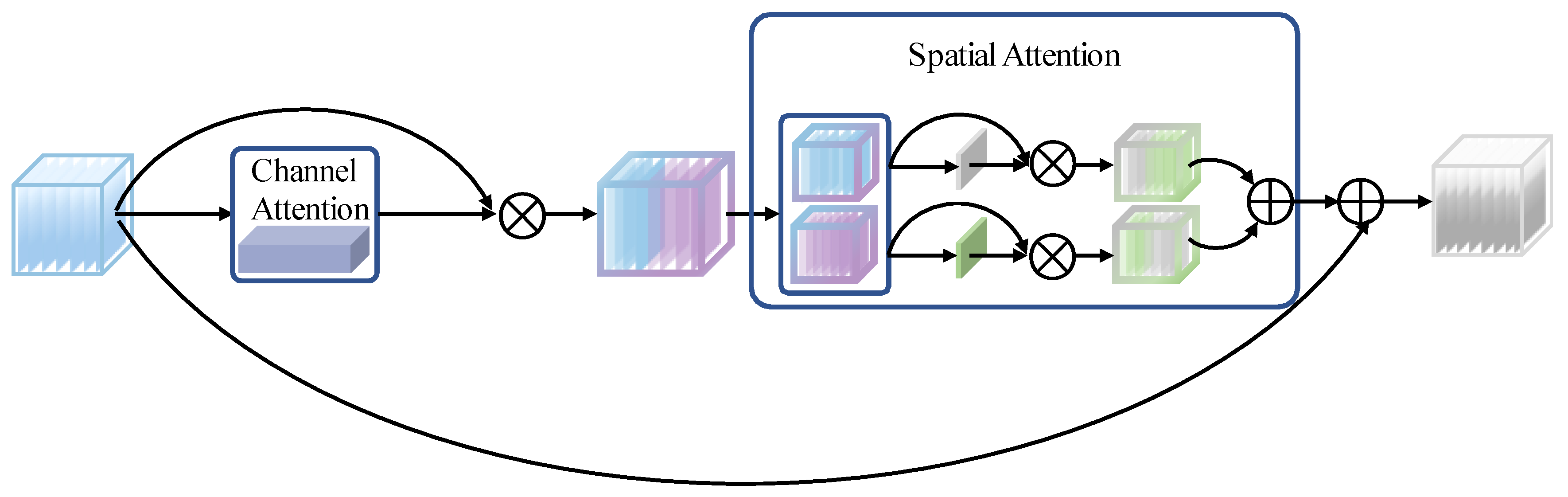

In this article, a novel multiscale hybrid network using two different attention mechanisms is proposed for HSI classification. It includes a spectral-spatial feature extraction network with a hybrid attention mechanism, a spatial inverted pyramid network, and a classification network with a multi-head attention mechanism. The designed approach can capture sufficient spectral and spatial features with a limited number of labeled training samples.

- (2)

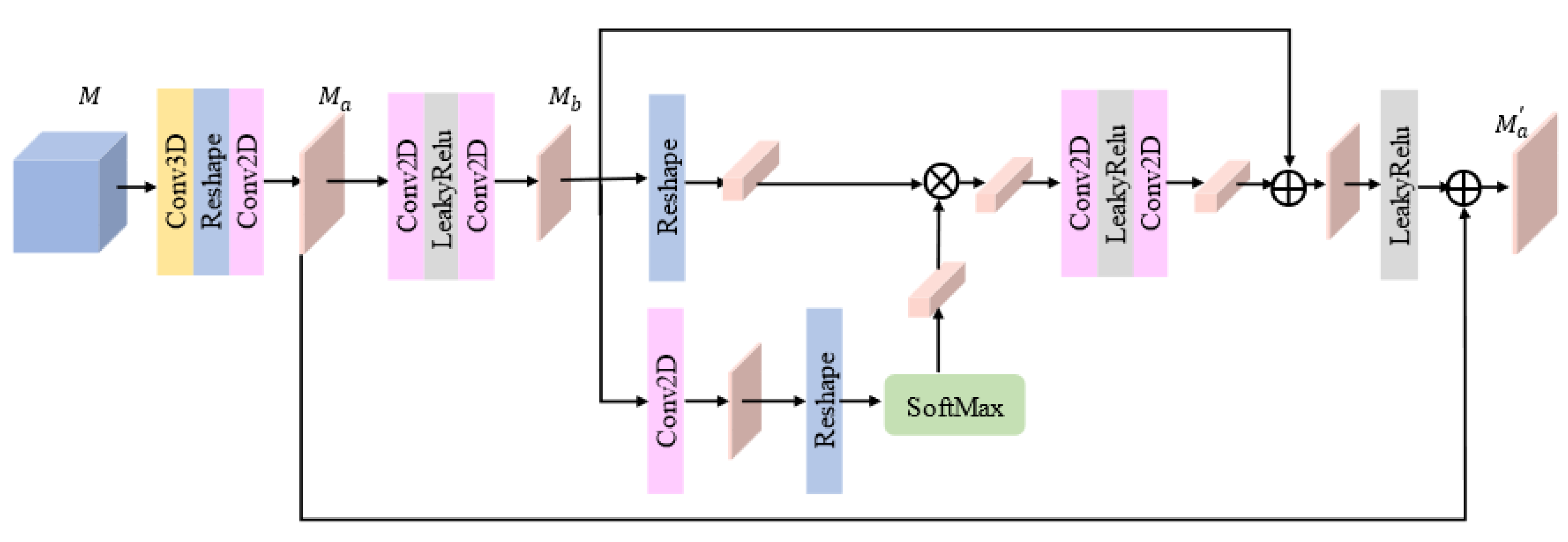

We apply a hybrid attention mechanism and a multi-head attention mechanism in MHNA. The hybrid attention mechanism focuses mainly on spectral signatures and, to a lesser extent, on spatial features, suppressing useless features. The multi-head attention mechanism forms multiple subspaces and helps the network attend to information from different subspaces.

- (3)

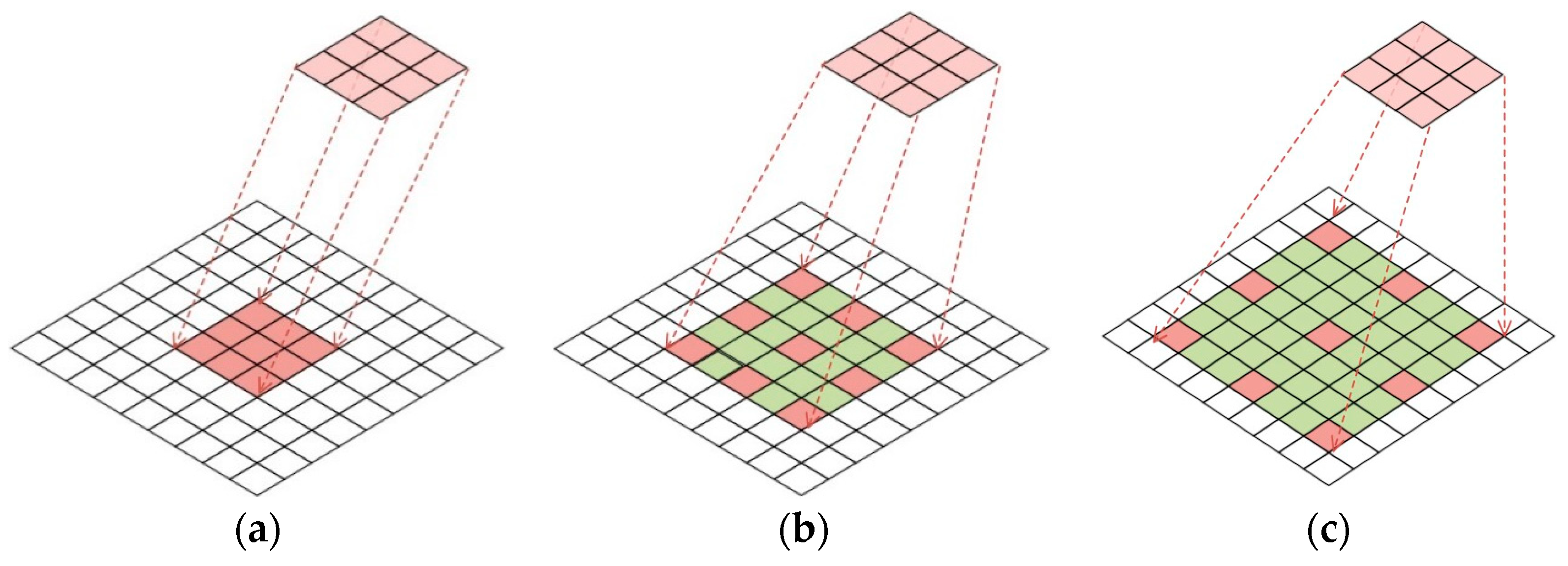

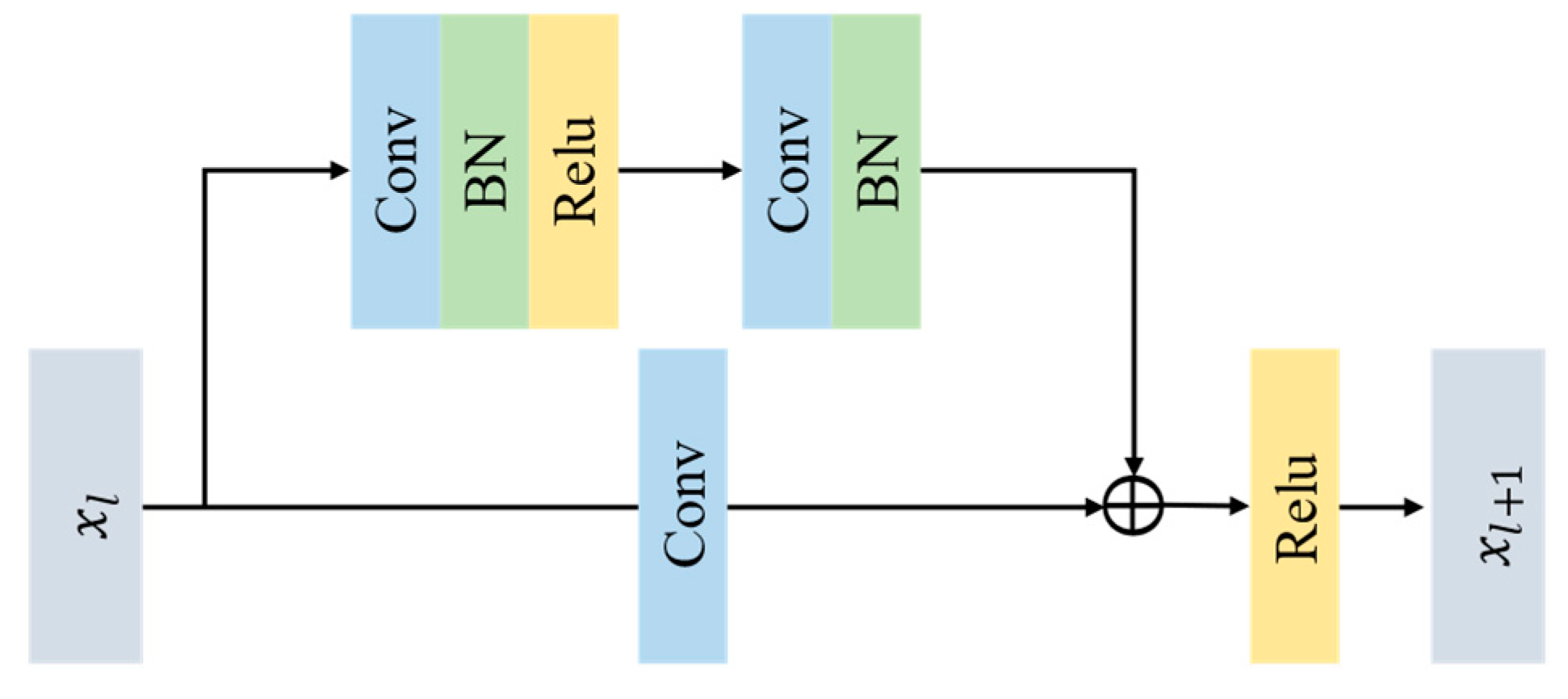

We propose combining dilated convolution with residual convolution in the spectral-spatial feature extraction network to obtain a larger receptive field without changing the size of the input feature map. Moreover, the residual connections prevent vanishing gradients and preserve the shallow features of the low-level layers.

- (4)

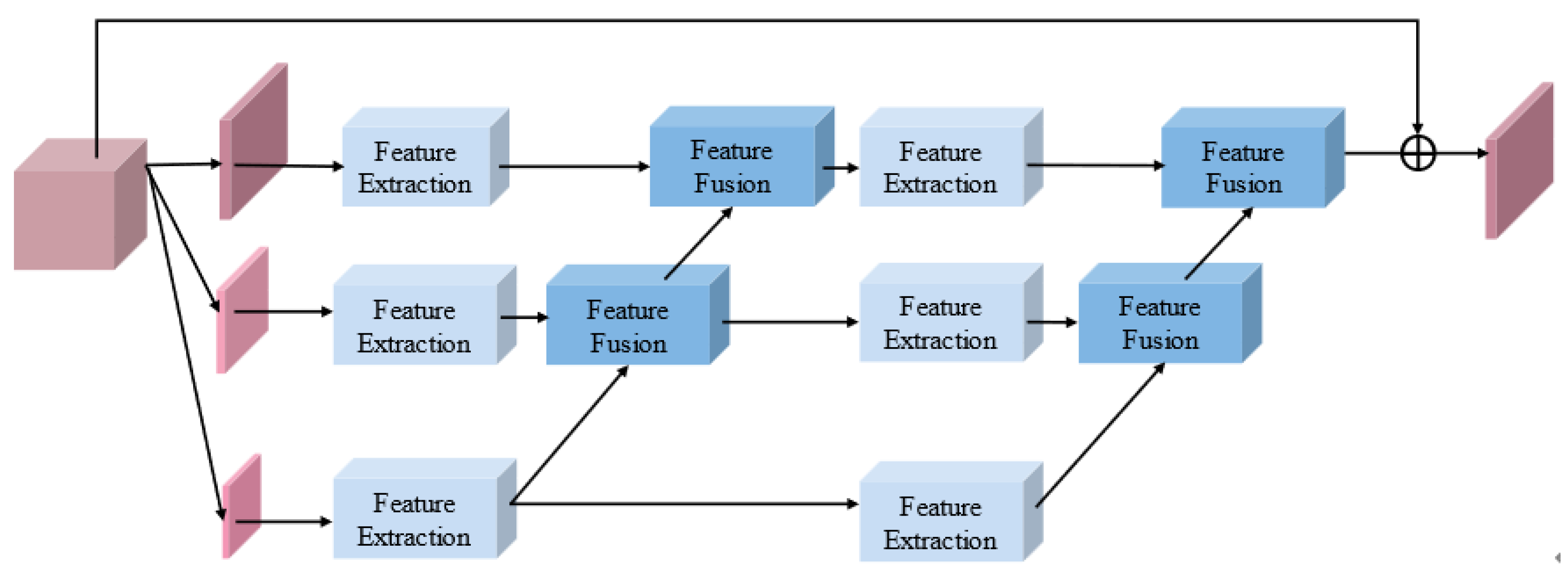

A spatial inverted pyramid network is introduced for spatial feature extraction. First, multiscale spaces are generated by down-sampling operations. Second, feature extraction blocks capture spatial features from the multiscale spatial information. Then, the spatial features from the multiple scale streams are fused by feature fusion blocks. This design helps extract spatial features sufficiently and allows more informative features to propagate further.
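The multiscale idea behind the spatial inverted pyramid can be pictured with the pure-Python sketch below. A single-band spatial map (for example, one PCA component of a 9 × 9 patch) is average-pooled into coarser scales. Each scale is then brought back to full resolution with nearest-neighbour upsampling, and the streams are fused by element-wise averaging. The 2 × 2 pooling, the nearest-neighbour interpolation, and the averaging fusion are illustrative assumptions. In MHNA, the streams instead pass through learned feature extraction and feature fusion blocks.

```python
def avg_pool2(img):
    """2 x 2 average pooling with stride 2; a trailing odd row/column is dropped."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * r][2 * c] + img[2 * r][2 * c + 1]
              + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
             for c in range(w)] for r in range(h)]

def upsample_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list to out_h x out_w."""
    h, w = len(img), len(img[0])
    return [[img[r * h // out_h][c * w // out_w] for c in range(out_w)]
            for r in range(out_h)]

def build_pyramid(img, levels=3):
    """Full-resolution map followed by successively down-sampled copies."""
    scales = [img]
    for _ in range(levels - 1):
        scales.append(avg_pool2(scales[-1]))
    return scales

def fuse_scales(scales):
    """Upsample every scale to the finest resolution and average element-wise."""
    H, W = len(scales[0]), len(scales[0][0])
    ups = [upsample_nearest(s, H, W) for s in scales]
    return [[sum(u[r][c] for u in ups) / len(ups) for c in range(W)] for r in range(H)]

patch = [[float(r * 9 + c) for c in range(9)] for r in range(9)]  # one 9 x 9 band
scales = build_pyramid(patch, levels=3)
print([(len(s), len(s[0])) for s in scales])  # [(9, 9), (4, 4), (2, 2)]
fused = fuse_scales(scales)
print(len(fused), len(fused[0]))  # 9 9
```

Coarser scales summarize larger neighbourhoods, and fusing them back at full resolution lets each pixel combine local detail with wider spatial context.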

The remainder of this article is structured as follows. Section 2 presents the related works. The specifics of the designed approach are outlined in Section 3. Experimental results are reported in Section 4, and Section 5 provides various discussions. Section 6 offers the conclusions and future prospects of the article.

4. Experiment

4.1. Description of the Hyperspectral Datasets

To demonstrate the effectiveness of the designed approach, we adopt five widely used hyperspectral datasets acquired from various imaging platforms. The datasets are described below:

Pavia Center dataset (PC): The PC dataset was acquired by the ROSIS sensor over Pavia Center, Italy. It comprises 1096 × 715 pixels with spectral reflectance bands in the range of 0.43–0.86 μm and a geometric resolution of 1.3 m. Thirteen noisy bands are removed, and the remaining 102 bands are used for classification to minimize the occurrence of mixed pixels. The ground truth of PC contains nine land-cover classes.

Salinas Valley (SA): The SA dataset was collected by the AVIRIS sensor. Its spatial size is 512 × 217 pixels, with a spatial resolution of 3.7 m. The raw SA data have 224 bands ranging from 0.4 to 2.5 μm. Twenty bands affected by water absorption are removed, so only 204 bands are used for classification. The dataset comprises 16 land-cover classes.

WHU-Hi-LongKou (LK): The LK dataset was obtained by the RSIDEA research group of Wuhan University. It comprises 550 × 400 pixels and 270 bands from 0.4 to 1.0 μm, with a spatial resolution of approximately 0.463 m. The study site is an agricultural area containing nine land-cover classes.

WHU-Hi-HongHu (HH): The HH dataset was captured by the RSIDEA research group of Wuhan University on 20 November 2017. The image size is 940 × 475 pixels, with 270 bands from 0.4 to 1.0 μm and a spatial resolution of about 0.043 m. The dataset includes 22 classes; in our experiments, 18 categories were selected from the original dataset, and the processed image size is 331 × 330 pixels.

Houston (HO): The HO dataset was obtained by the ITRES CASI-1500 sensor over Houston, Texas, USA, and its surrounding rural areas, with a spatial resolution of 2.5 m. Its data size is 349 × 1905 pixels, and it contains 144 bands in the 0.36–1.05 μm range. The study area contains 15 land-cover classes, including roads, soil, trees, and highways.

4.2. Experiment Evaluation Indicators

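Three evaluation indicators are applied to evaluate the classification performance of the designed approach: overall accuracy (OA), average accuracy (AA), and the Kappa coefficient (Kappa) [60]. The confusion matrix is introduced to display these three indicators more intuitively. In general, it is expressed as an $n \times n$ matrix $M$, in which each column represents the predicted labels and each row represents the actual labels. The matrix can be defined as follows:

$$
M = \begin{bmatrix}
m_{11} & m_{12} & \cdots & m_{1n} \\
m_{21} & m_{22} & \cdots & m_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
m_{n1} & m_{n2} & \cdots & m_{nn}
\end{bmatrix} \tag{18}
$$

In (18), $n$ corresponds to the total number of categories, $m_{ij}$ represents the number of samples from class $i$ that are classified as class $j$, and $m_{i+} = \sum_{j=1}^{n} m_{ij}$ and $m_{+j} = \sum_{i=1}^{n} m_{ij}$ represent the total number of samples in row $i$ and column $j$, respectively. With $N = \sum_{i=1}^{n}\sum_{j=1}^{n} m_{ij}$ denoting the total number of samples, OA, AA, and Kappa are described as follows:

$$
\mathrm{OA} = \frac{1}{N}\sum_{i=1}^{n} m_{ii}
$$

$$
\mathrm{AA} = \frac{1}{n}\sum_{i=1}^{n} \frac{m_{ii}}{m_{i+}}
$$

$$
\mathrm{Kappa} = \frac{N\sum_{i=1}^{n} m_{ii} - \sum_{i=1}^{n} m_{i+}\, m_{+i}}{N^{2} - \sum_{i=1}^{n} m_{i+}\, m_{+i}}
$$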
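The three indicators can be computed directly from a confusion matrix laid out as described (rows are actual labels, columns are predicted labels). The pure-Python sketch below uses a small made-up three-class matrix. The function name and the choice to skip classes with no test samples when averaging are assumptions. Kappa is computed in the equivalent form (OA − p_e)/(1 − p_e), where p_e = Σ m_{i+} m_{+i} / N².

```python
def classification_metrics(cm):
    """OA, AA and Cohen's kappa from a square confusion matrix.

    cm[i][j] = number of samples whose actual class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    diag = sum(cm[i][i] for i in range(n))
    row_sums = [sum(cm[i]) for i in range(n)]                       # m_{i+}
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # m_{+j}
    oa = diag / total
    # Per-class accuracy m_ii / m_{i+}; classes absent from the test set are skipped
    per_class = [cm[i][i] / row_sums[i] for i in range(n) if row_sums[i] > 0]
    aa = sum(per_class) / len(per_class)
    # Expected agreement by chance, then kappa = (OA - p_e) / (1 - p_e)
    pe = sum(row_sums[i] * col_sums[i] for i in range(n)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa

cm = [[50, 0, 0],
      [5, 40, 5],
      [0, 10, 10]]
oa, aa, kappa = classification_metrics(cm)
print(round(oa, 4), round(aa, 4), round(kappa, 4))  # 0.8333 0.7667 0.7288
```

The example is deliberately imbalanced. The poorly classified third class lowers AA well below OA, which is why both indicators are reported alongside Kappa.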

4.3. Experiment Setting

The experiments are performed on a workstation designed for DL tasks. It is equipped with an Intel(R) Xeon(R) E5-2680 v4 CPU (2.4 GHz clock frequency, 14 cores, 35,840 KB cache), 128 GB of RAM, and six NVIDIA GeForce RTX 2080Ti Super graphics processing units, each with 12 GB of memory.

To verify the performance of our designed network, we choose ten methods for comparative experiments: one classical ML method and nine advanced CNN-based methods. The comparative methods are briefly introduced as follows:

- (1)

SVM: From the perspective of classification, an SVM is a generalized classifier that evolved from the linear classifier by introducing the structural risk minimization principle, optimization theory, and kernel functions [61]. Each labeled sample, represented by its continuous spectral vector in the HSI, is fed directly to the classifier without feature extraction or dimensionality reduction.

- (2)

SSRN [37]: The primary idea of SSRN is to capture features by stacking multiple spectral residual blocks and multiple spatial residual blocks. The spectral residual blocks use a 1 × 1 × 7 convolution kernel for feature extraction and dimensionality reduction, and the spatial residual blocks use a 3 × 3 × 1 convolution kernel for spatial feature extraction. Classification accuracy is improved by adding batch normalization (BN) [62] and ReLU after each convolutional layer.

- (3)

FDSS [46]: The 1 × 1 × 7 and 3 × 3 × 7 convolution kernels are used to obtain spectral and spatial features, respectively. To improve speed and prevent overfitting, FDSSC uses a dynamic learning rate, the parametric rectified linear unit (PReLU) [63], BN, and dropout layers.

- (4)

DBMA [53]: The network is composed of two branches that extract spectral signatures and spatial features separately to reduce the interference between the two kinds of features. In addition, inspired by CBAM [64], channel and spatial attention mechanisms are applied to the branches to improve classification accuracy.

- (5)

DMFN [49]: A two-branch network extracts spatial features through 2-D convolution and 2-D dense blocks, and it extracts spectral features directly from the original data through 3-D convolution and 3-D dense blocks. The two kinds of features are then fused by 3-D convolutional blocks and 3-D dense blocks, which differs from the fusion strategies of other networks.

- (6)

PCIA [55]: It is a double-branch structure implemented with a pyramid 3D-CNN architecture into which an iterative attention mechanism is introduced. A new activation function, Mish, and early stopping are applied to improve its effectiveness.

- (7)

SSGCA [54]: SSGCA differs from DBMA in its attention mechanism, which is derived from GCNet [65].

- (8)

MDANet [66]: The network is a multiscale three-branch densely connected attention network in which traditional 3-D convolutions are replaced with 3-D spectral convolution blocks and 3-D spatial convolution blocks.

- (9)

MDBNet [67]: The network adopts a multiscale dual-branch feature fusion structure with an attention mechanism. It extracts pixel-level spectral and spatial features through a multiscale feature extraction block that enlarges the receptive field.

- (10)

OSDN [56]: The network combines one-shot dense blocks with a polarization attention mechanism and comprises two separate branches for feature extraction.
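Channel attention recurs in several of these methods (for example, the CBAM-inspired channel attention in DBMA) and in the modified squeeze-and-excitation network [41] discussed in the introduction. The pure-Python sketch below shows squeeze-and-excitation style channel attention. Each channel (band) is squeezed to its spatial mean, passed through a two-layer bottleneck (ReLU, then sigmoid), and the resulting gates rescale the channels. The weight matrices, the reduction ratio r = 2, and the omission of biases are assumptions for illustration, not parameters of any of the compared models.

```python
import math

def se_channel_attention(feature, w1, w2):
    """Squeeze-and-excitation style channel reweighting.

    feature: list of C channels, each an H x W nested list.
    w1: (C/r) x C matrix, w2: C x (C/r) matrix (biases omitted for brevity).
    Returns the rescaled feature and the per-channel gates.
    """
    # Squeeze: global average pooling of every channel
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]
    # Excitation: bottleneck with ReLU, then sigmoid gates in (0, 1)
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: every pixel of channel c is multiplied by its gate
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feature)]
    return scaled, gates

feature = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]  # C = 2, 2 x 2 pixels
w1 = [[1.0, 1.0]]      # C -> C/r
w2 = [[2.0], [-2.0]]   # C/r -> C
scaled, gates = se_channel_attention(feature, w1, w2)
print([round(g, 4) for g in gates])  # [0.8808, 0.1192]
```

The gates depend on global statistics of the whole feature map, which is how such modules emphasize informative bands and suppress less useful ones.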

The designed approach is trained in an end-to-end manner: all network parameters are learnable, and the network learns features from HSIs and performs feature extraction and classification jointly. Details of the MHNA implementation are summarized in Algorithm 1. To ensure a fair comparison, the same hyperparameters are applied to all methods, and the Adam optimizer [68] is used to update the parameters for 200 training epochs. The initial learning rate is set to 0.001, and the cosine annealing method [69] is employed to adjust the learning rate dynamically every 15 epochs. In addition, an early-stopping mechanism terminates training and enters the testing phase if the validation loss does not decrease for 20 consecutive epochs. The input HSI cube patch size is 9 × 9, and the batch size is 64. Additionally, PCA reduces the spectral dimension of the original input of the spatial inverted pyramid network to 3.
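The learning-rate schedule and stopping rule described above can be sketched in pure Python as follows. The text does not state whether the cosine cycle restarts every 15 epochs or what the minimum learning rate is. The sketch therefore assumes a restart every 15 epochs (SGDR style) with a minimum learning rate of 0, plus a patience of 20 epochs on the validation loss. The class and function names and the synthetic loss curve are hypothetical.

```python
import math

def cosine_lr(epoch, lr_max=0.001, lr_min=0.0, period=15):
    """Cosine-annealed learning rate that restarts every `period` epochs (assumption)."""
    t = epoch % period
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * t / period))

class EarlyStopping:
    """Signal a stop once validation loss has not decreased for `patience` epochs."""

    def __init__(self, patience=20):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

stopper = EarlyStopping(patience=20)
for epoch in range(200):
    lr = cosine_lr(epoch)  # would be assigned to the optimizer at this point
    val_loss = 1.0 / (min(epoch, 30) + 1)  # synthetic loss that plateaus after epoch 30
    if stopper.step(val_loss):
        break
print(epoch)  # 50
```

In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0=15)` provides the restart variant and `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=15)` the non-restarting one. Which of the two matches the authors' setup is not stated.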

Table 4, Table 5, Table 6, Table 7, and Table 8 provide the numbers of training, validation, and test samples for the five datasets.

| Algorithm 1 Structure of the designed MHNA |
| --- |
| Input: |
| (1) HSI not processed by PCA: H with b bands |
| (2) HSI processed by PCA: P with p bands |
| Step 1: H and P are normalized and divided into a training set, a validation set, and a test set. |
| Step 2: A w × w × b patch extracted around each pixel of the dimension-unreduced HSI is taken as the spectral-spatial feature. |
| Step 3: A w × w × p patch extracted around each pixel of the dimension-reduced HSI is taken as the spatial feature. |
| Step 4: The training samples are fed into the network, which is optimized using the Adam optimizer. The initial learning rate is set to 0.001 and is adjusted dynamically every 15 epochs. |
| Step 5: The whole HSI is classified by feeding the corresponding spectral and spatial features into the network. |
| Step 6: The predicted labels of the HSI are recorded in a two-dimensional matrix. |
| Output: Prediction classification map |
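Steps 1–3 of Algorithm 1 can be illustrated with the pure-Python sketch below. Each band is min–max normalized, and a w × w × bands patch is cut around every labeled pixel, with zero padding outside the image border. The same routine would be applied once to H (b bands) and once to P (p bands). Min–max normalization, zero padding, the convention that label 0 marks unlabeled pixels, and the function names are assumptions, since Algorithm 1 does not specify these details.

```python
def minmax_normalize(cube):
    """Scale every band of an H x W x B nested list to [0, 1] (assumed normalization)."""
    H, W, B = len(cube), len(cube[0]), len(cube[0][0])
    out = [[[0.0] * B for _ in range(W)] for _ in range(H)]
    for b in range(B):
        vals = [cube[r][c][b] for r in range(H) for c in range(W)]
        lo, hi = min(vals), max(vals)
        span = (hi - lo) or 1.0  # avoid division by zero for constant bands
        for r in range(H):
            for c in range(W):
                out[r][c][b] = (cube[r][c][b] - lo) / span
    return out

def extract_patches(cube, labels, w=9):
    """Return (patch, label) pairs for every labeled pixel (label 0 = unlabeled)."""
    H, W, B = len(cube), len(cube[0]), len(cube[0][0])
    m = w // 2
    zero = [0.0] * B

    def pixel(r, c):
        # Zero padding outside the image border
        return cube[r][c] if 0 <= r < H and 0 <= c < W else zero

    samples = []
    for r in range(H):
        for c in range(W):
            if labels[r][c] == 0:
                continue
            patch = [[pixel(r + i, c + j) for j in range(-m, m + 1)]
                     for i in range(-m, m + 1)]
            samples.append((patch, labels[r][c]))
    return samples

# Synthetic 5 x 5 image with 3 bands and two labeled pixels
cube = [[[float(r * 5 + c + 100 * b) for b in range(3)] for c in range(5)] for r in range(5)]
labels = [[0] * 5 for _ in range(5)]
labels[0][0], labels[2][2] = 2, 1
samples = extract_patches(minmax_normalize(cube), labels, w=3)
print(len(samples))  # 2
```

With w = 9 as in the experiments, each sample becomes a 9 × 9 × bands cube centred on the pixel being classified.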

4.4. Experiment Results

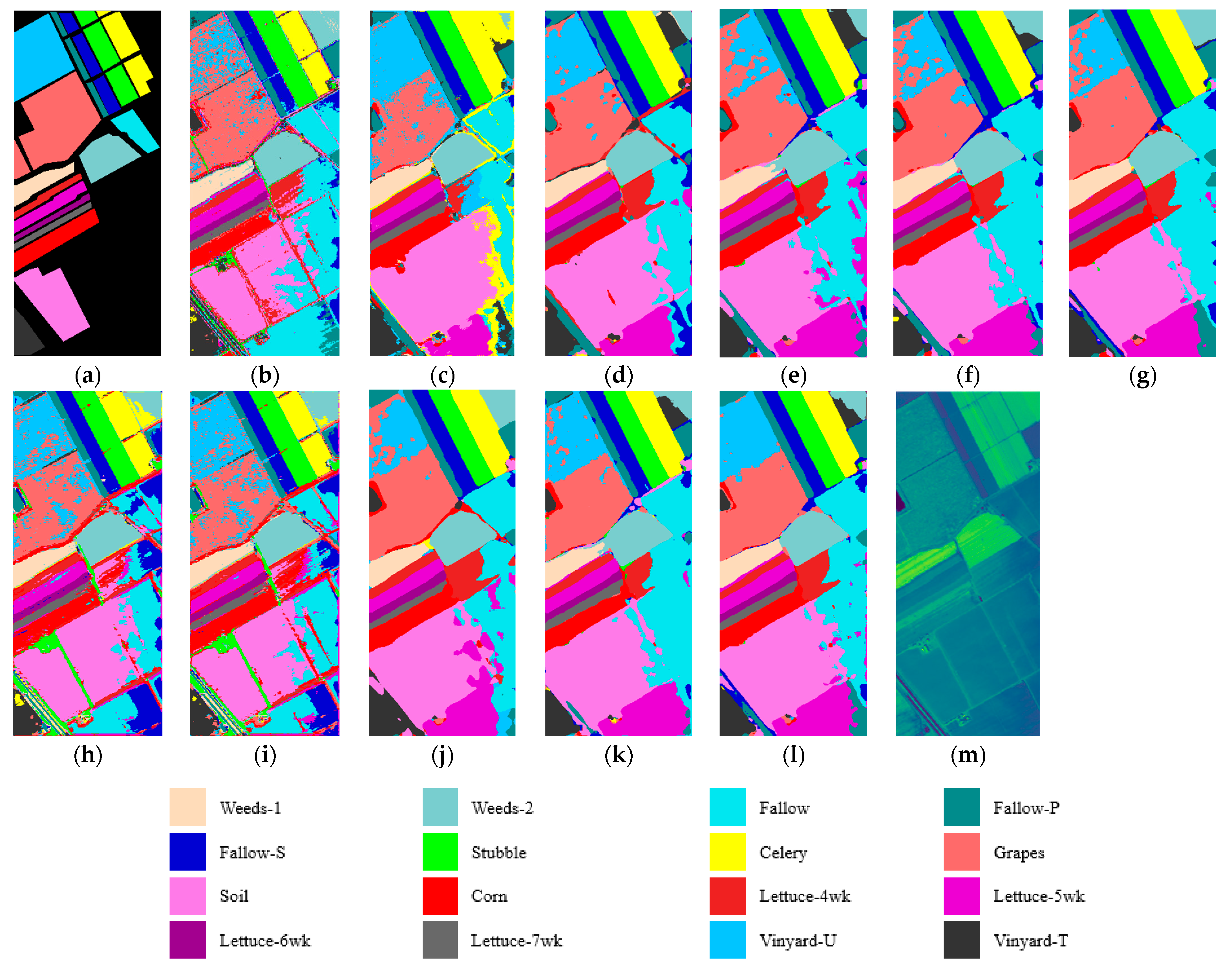

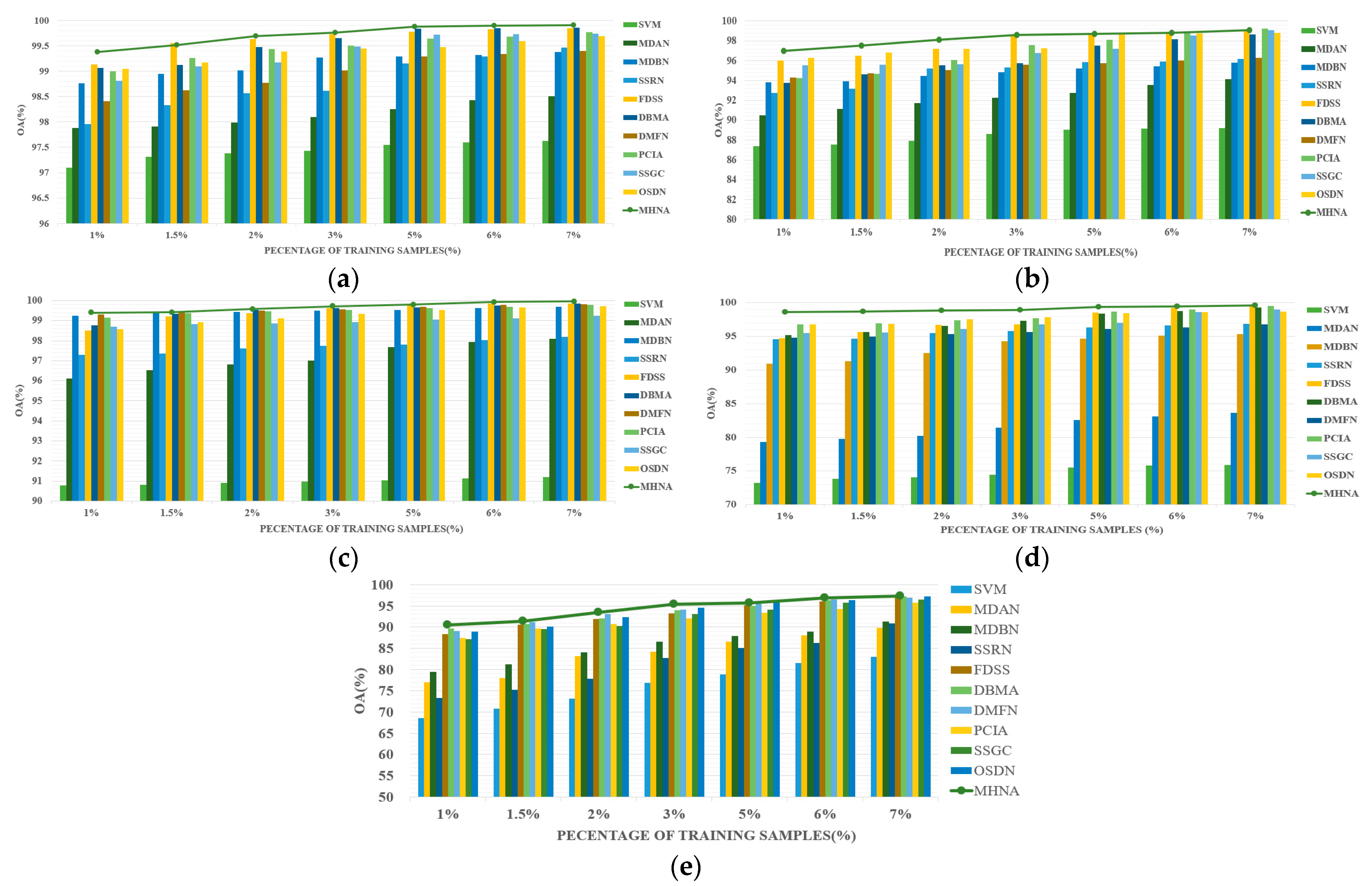

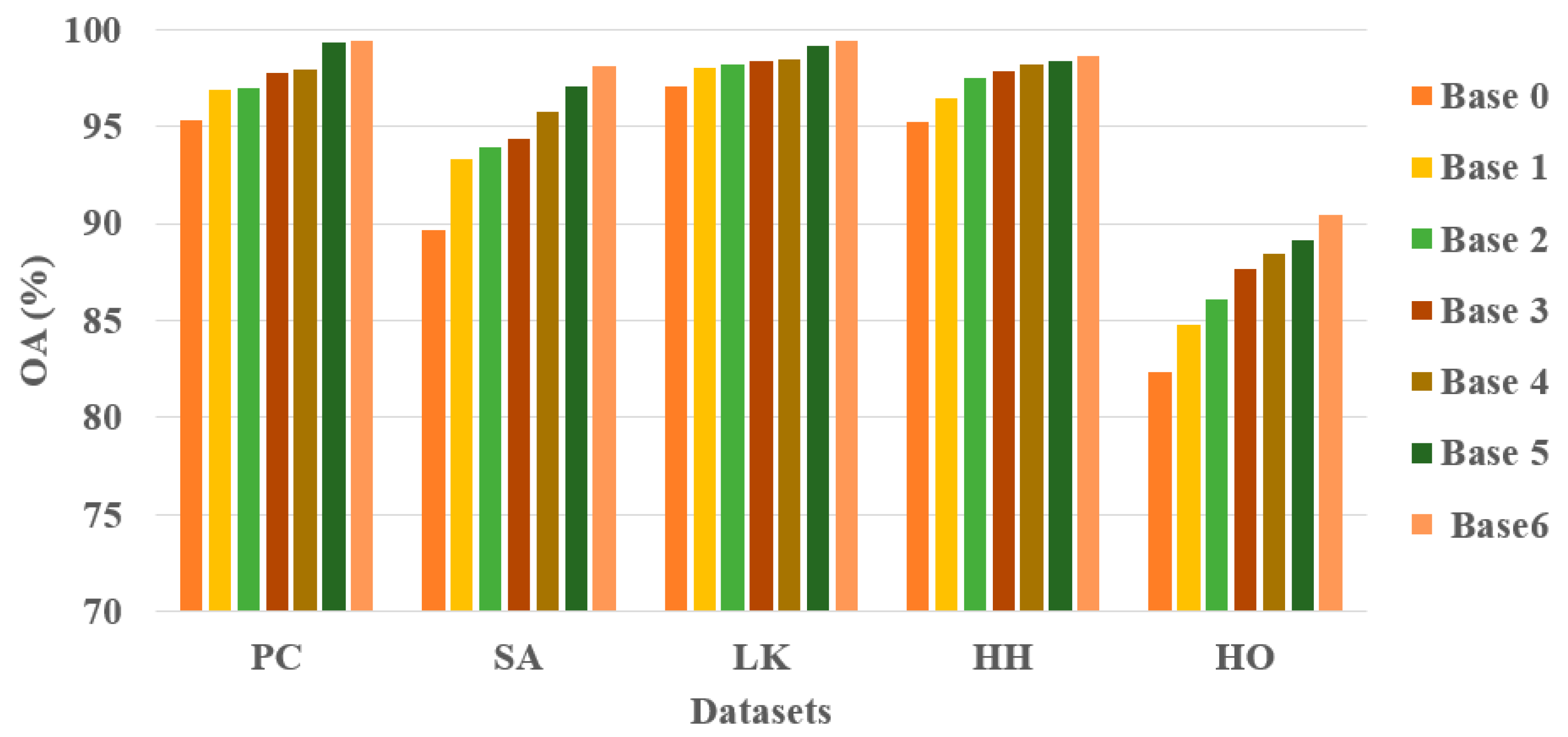

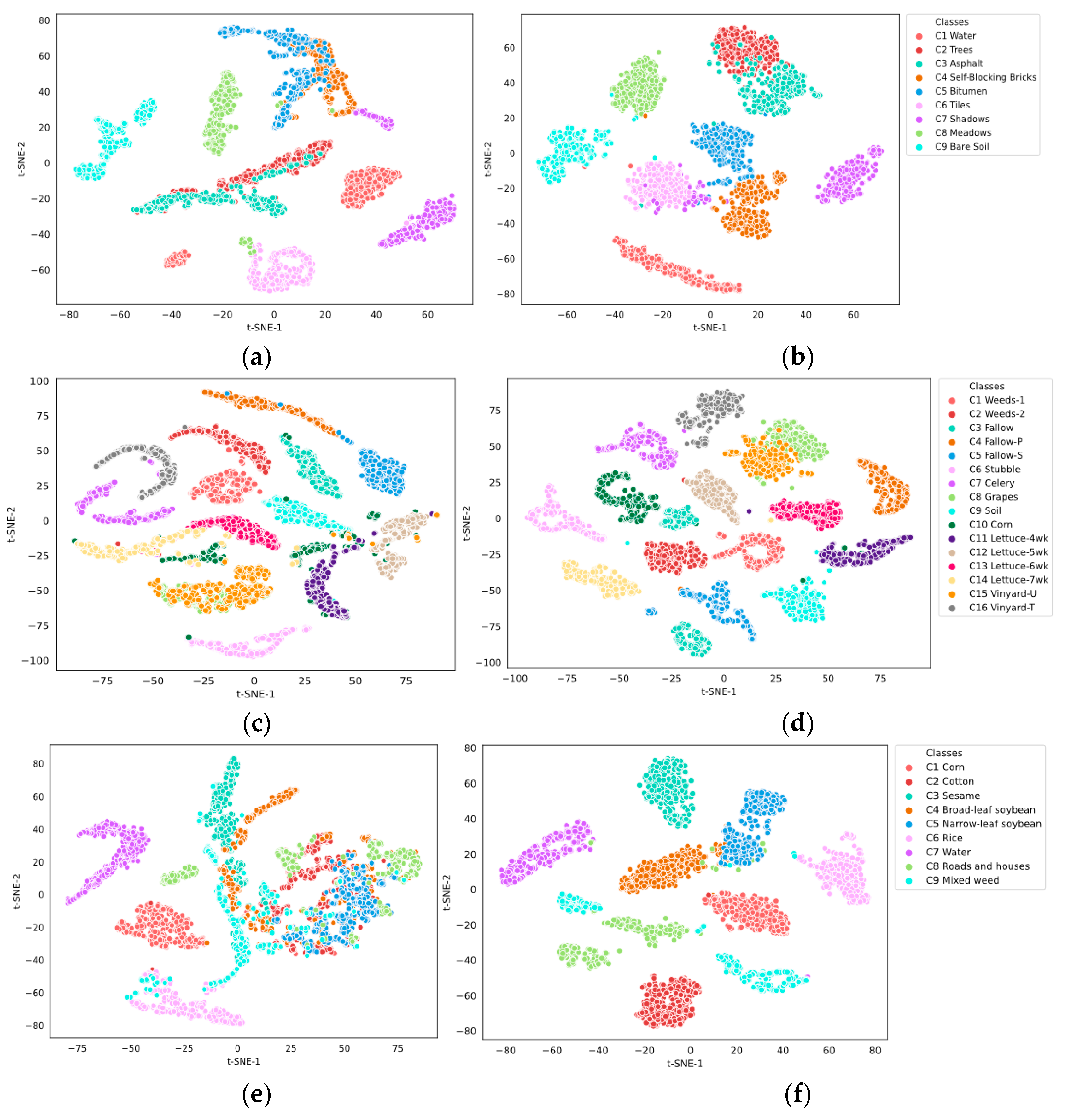

We first analyze the classification performance of the various approaches on the SA dataset, reported in Table 9, where the best OA, AA, and Kappa results are highlighted in bold. The proposed approach is compared with the SVM, SSRN, FDSSC, DBMA, DMFN, PCIA, MDAN, MDBN, SSGCA, and OSDN approaches. The proposed MHNA improves OA by 9.77%, 2.50%, 0.51%, 2.20%, 3.45%, 1.67%, 7.22%, 3.90%, 2.10%, and 0.50% over these methods, respectively. We attribute this improvement to the joint extraction of spectral and spatial features by the spectral-spatial feature extraction network and the spatial inverted pyramid network, and to the residual convolution in the spectral-spatial feature extraction network, which prevents the loss of multiscale information. The OA of the SVM is lower than that of the DL-based methods because the SVM utilizes only the spectral signatures of the HSI. MDAN and MDBN have lower OAs than the other DL-based methods, indicating that they have difficulty adapting to the characteristics of the SA dataset and extracting discriminative features from it. The PCIA network, based on a pyramid structure, achieves the highest AA among all methods, reaching 98.88%, which shows the strong potential of the pyramid structure for feature extraction. SSRN and FDSS achieve lower OAs than the proposed MHNA and OSDN because they extract spectral and spatial features through two consecutive convolutional blocks, and the input of the spatial block is derived from the spectral block, resulting in the loss of some spatial information. DBMA and DMFN are both dual-branch networks, and DBMA achieves a better OA than DMFN. Since the main distinction between them is the absence of an attention mechanism in DMFN, this suggests that attention mechanisms have a significant impact on the network. The number of parameters of each approach is also reported in Table 9. The parameter count of the SVM is negligible because, as an ML method, it contains an extremely small number of parameters. The other methods are based on DL and include both single-input (SSRN, FDSS, DBMA, PCIA, MDAN, MDBN, and OSDN) and dual-input (DMFN and MHNA) approaches. The dual-input approaches clearly require more parameters than the others, and MHNA obtains the highest OA. The classification maps of all methods are displayed in Figure 10. The map produced by the proposed MHNA is the closest to the ground-truth map (Figure 10a). Figure 10b,e–j clearly show that a large number of C8 (Grapes) samples are misclassified as C15 (Vineyard-U), indicating that C8 and C15 are difficult to distinguish on the SA dataset. In the classification map of Figure 10l, achieved by the proposed MHNA, only a small number of samples are misclassified. These experimental results indicate that the designed approach is more effective than the other approaches for this classification task.

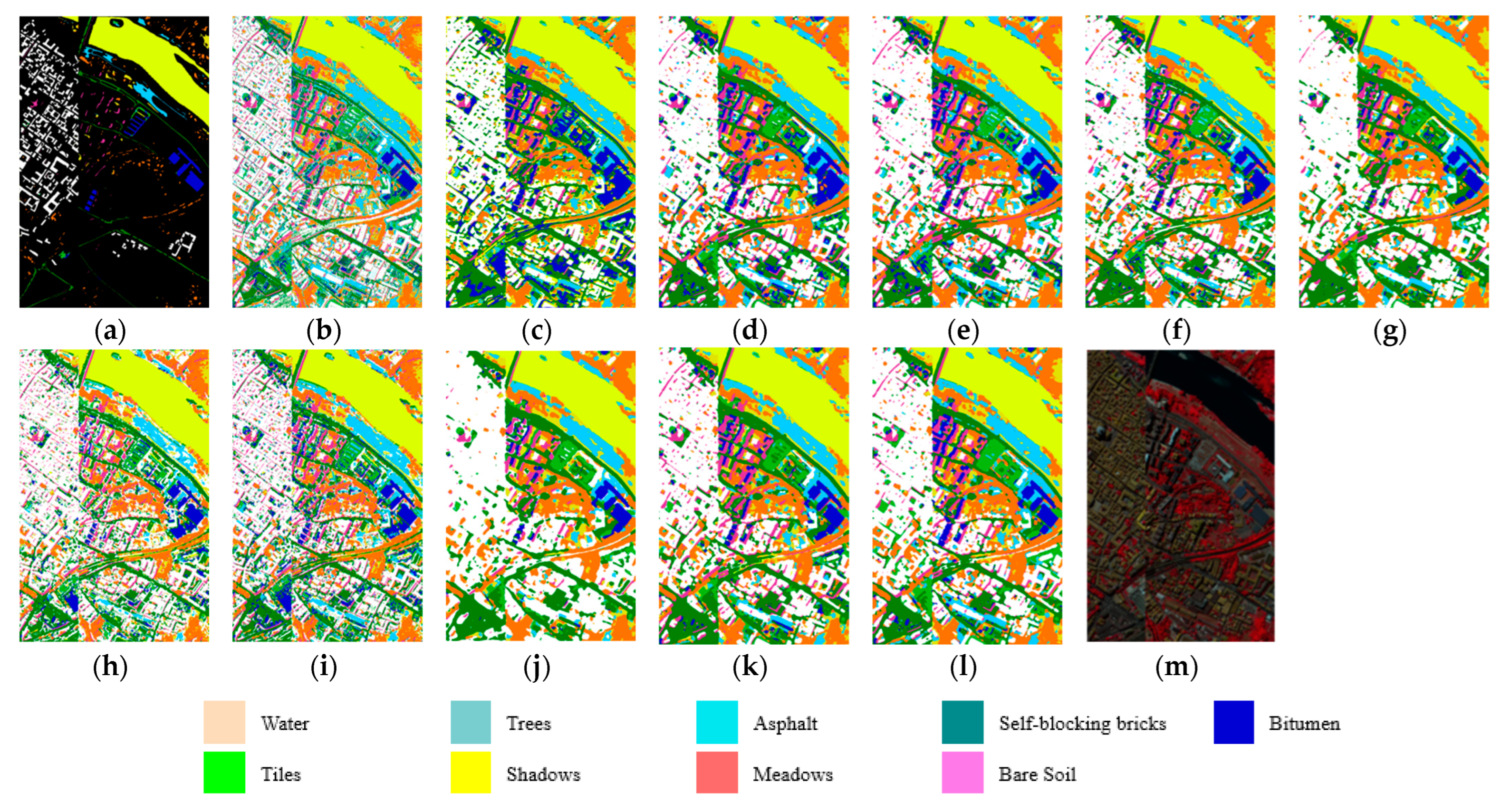

The PC dataset covers an urban center landscape and contains nine land-cover classes. All methods, including SVM, achieve satisfactory classification results, as displayed in Table 10. MHNA has fewer parameters than DMFN. The designed MHNA performs outstandingly and achieves the highest OA, AA, and Kappa; in particular, it achieves 100% accuracy on C1 (Water). However, SVM, DMFN, PCIA, and OSDN achieve accuracies below 90% on C3 (Asphalt), and FDSS, DBMA, MDAN, and SSGC achieve accuracies below 92% on C3. A similar phenomenon is observed for C4 (Self-blocking bricks), on which most approaches achieve accuracies below 90%. This indicates that C3 and C4 are challenging to classify accurately. In contrast, the proposed MHNA achieves accuracies above 96% on both C3 and C4. The classification maps for the PC dataset are shown in Figure 11. The land-cover contours and boundaries are visibly smoother and clearer in Figure 11l, which is obtained by the proposed approach.

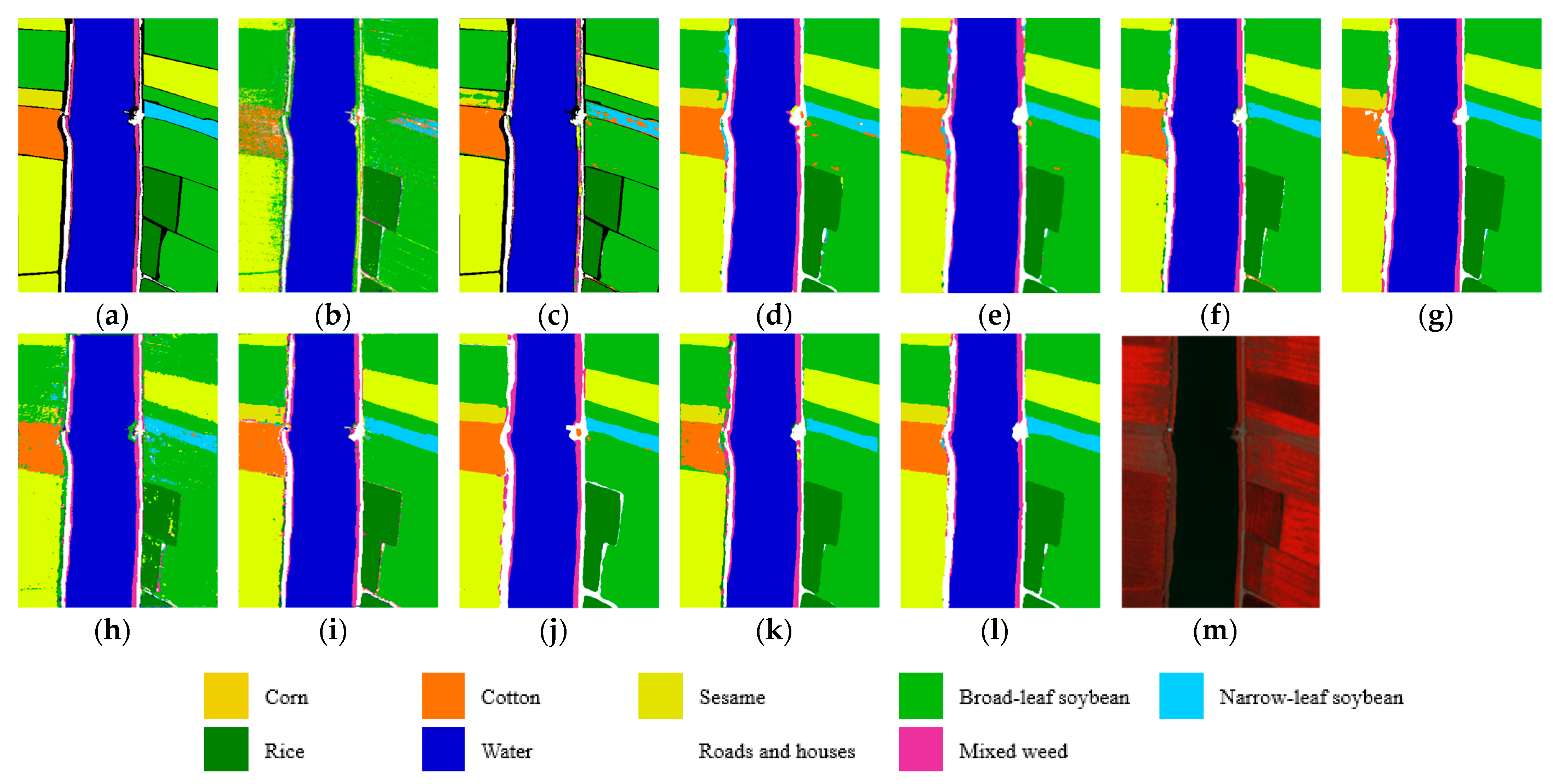

The LK dataset contains nine land-cover classes with numerous samples. The detailed experimental results are displayed in Table 11. The OA of the SVM is 90.77%, and its accuracy falls below 70% for specific classes such as C5 (Narrow leaf soybean) and C9 (Mixed weed). SSRN, FDSS, and MDAN use both spectral and spatial features and outperform SVM in classification accuracy. The performance of DBMA, DMFN, PCIA, MDBN, SSGC, OSDN, and MHNA is relatively stable, and these methods obtain satisfactory results, which suggests that dual-branch network structures are more stable. Notably, the designed MHNA has the largest number of parameters while also obtaining the highest OA, AA, and Kappa. The classification maps of the whole scene are presented in Figure 12. Salt-and-pepper noise is evident in Figure 12b, which is obtained by the SVM, whereas the classification maps of the DL-based methods are smoother. This suggests that extracting spatial features, as the DL-based methods do, enhances the smoothness of the classification maps. As shown in Figure 12a,l, the classification map obtained by MHNA is the closest to the actual land covers.

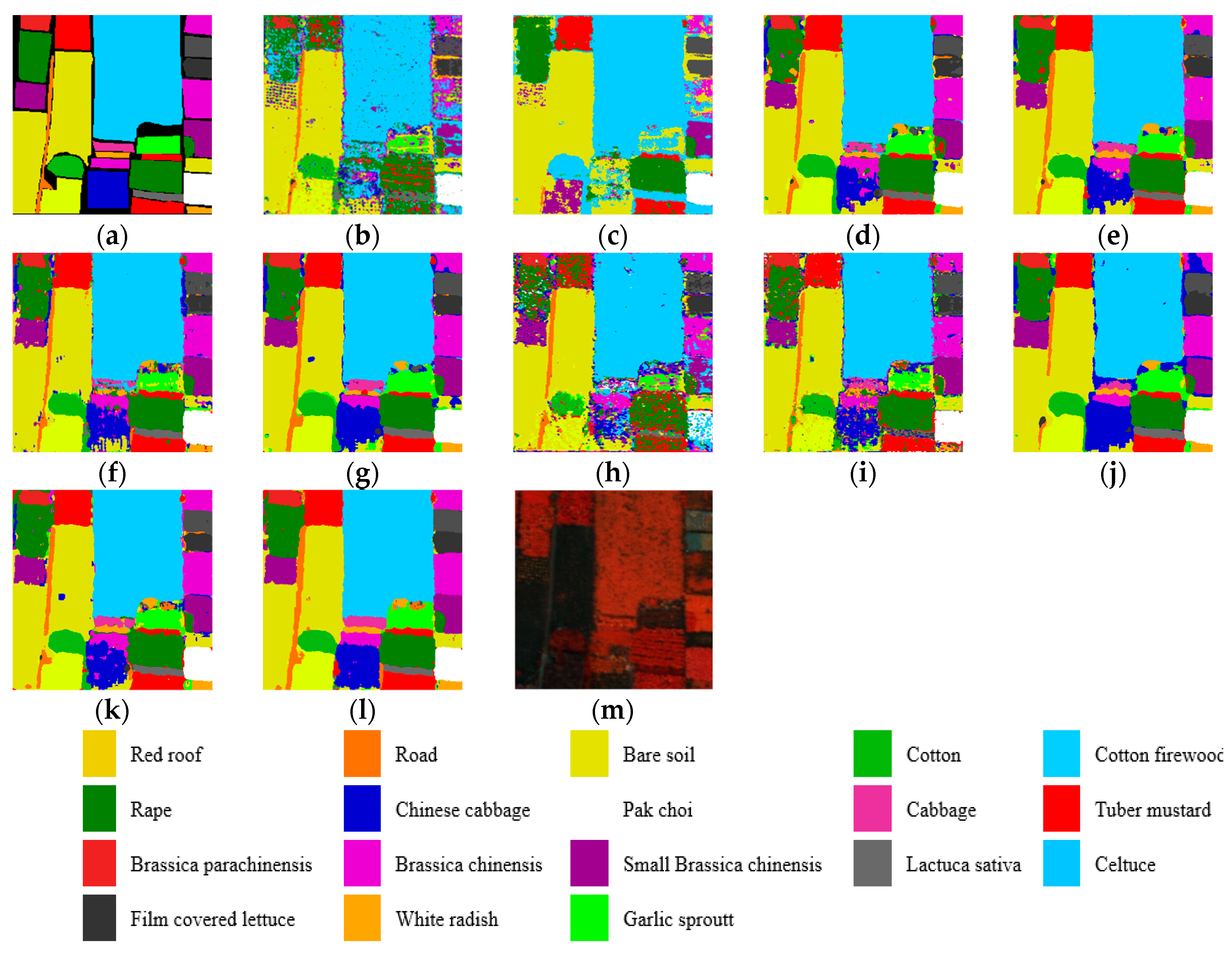

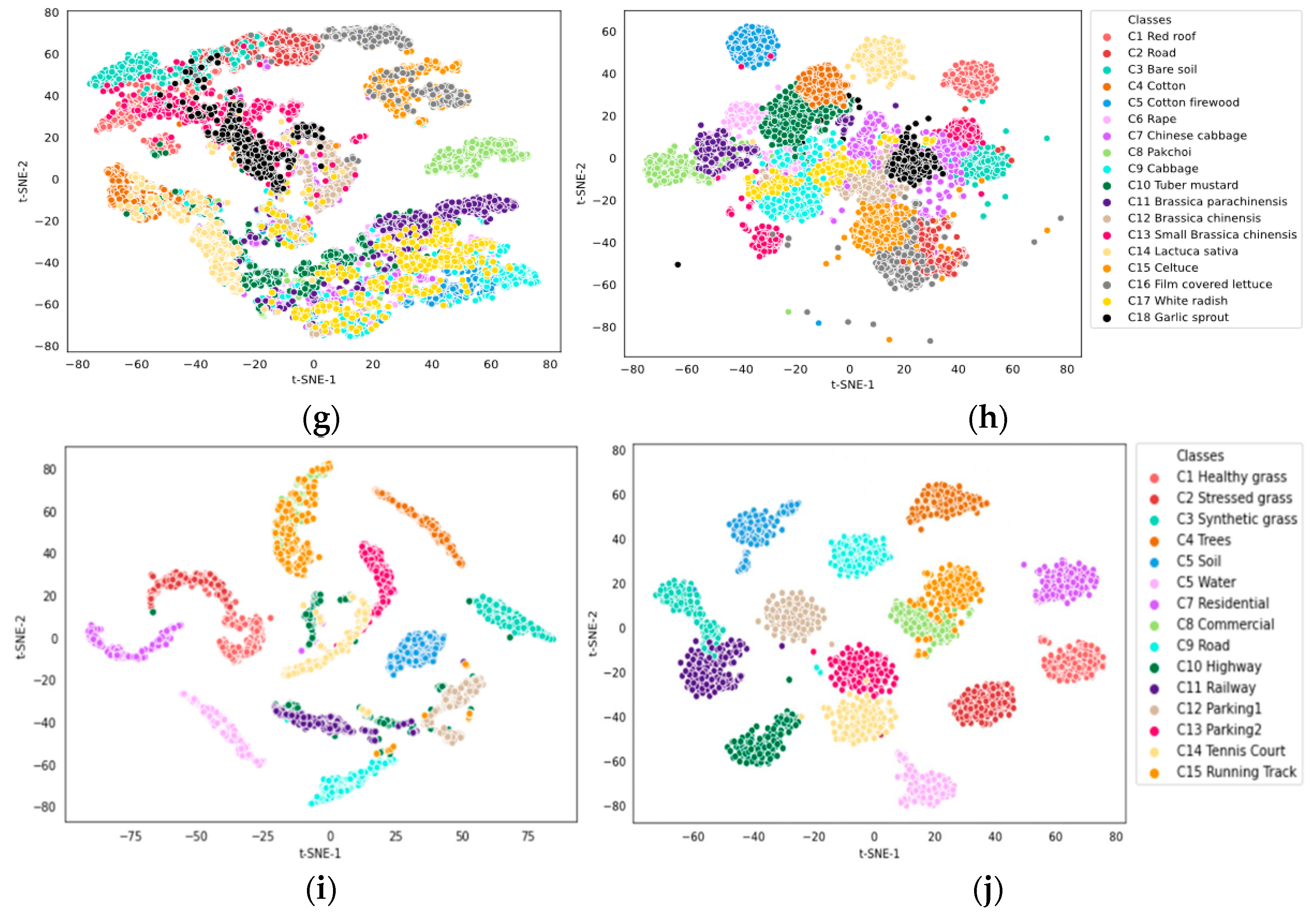

Additionally, to assess the effectiveness of the introduced MHNA, a high-spatial-resolution HSI dataset, HH, is selected. As shown in Table 12, OSDN and DMFN have the largest and smallest numbers of parameters, respectively. The SVM, which relies only on spectral signatures, achieves the lowest OA of 73.24%, highlighting the difficulty of classifying the various land covers of the HH dataset using spectral signatures exclusively. A comparison of per-class accuracies indicates that some categories, such as C2 (Road), C7 (Chinese cabbage), C9 (Cabbage), C17 (White radish), and C18 (Garlic sprout), are difficult to classify precisely using SVM, DBMA, MDAN, and MDBN. The proposed MHNA, as a dual-branch multiscale network, achieves more stable classification results than the other methods. In Figure 13l, C3 (Bare soil) is almost completely correctly classified by the proposed method, whereas the boundary between C2 and C3 is unclear in Figure 13b–d,h,j,k. However, small patches of misclassification can be seen in Figure 13l. These may be caused by the holes that dilated convolution inserts into its kernel to expand the receptive field, which lead to discontinuous information extraction.
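The dilated-convolution behaviour discussed here and in the contributions can be illustrated with the 1-D, single-channel pure-Python sketch below. It covers three points: a larger receptive field at unchanged feature-map size, the identity shortcut this permits, and the holes that are often called the gridding effect. A kernel of size k with dilation d spans k + (k − 1)(d − 1) inputs, and zero padding of d(k − 1)/2 per side keeps the output length equal to the input length. The kernel values, dilation rates, and function names are illustrative assumptions, not the MHNA settings.

```python
def effective_kernel_size(k, dilation):
    """Number of input positions spanned by a k-tap kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)

def dilated_conv1d_same(x, kernel, dilation):
    """1-D dilated cross-correlation with zero padding d*(k-1)/2, so len(output) == len(x).

    Assumes an odd kernel size so that the padding is an integer.
    """
    k = len(kernel)
    assert k % 2 == 1, "use an odd kernel size"
    pad = dilation * (k - 1) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(kernel[j] * padded[i + j * dilation] for j in range(k))
            for i in range(len(x))]

def residual_dilated_block(x, kernel, dilation):
    """y = ReLU(conv(x)) + x; the identity shortcut works because the lengths match."""
    fx = [max(0.0, v) for v in dilated_conv1d_same(x, kernel, dilation)]
    return [a + b for a, b in zip(fx, x)]

def sampled_offsets(dilations, k=3):
    """Input offsets, relative to one output position, reached by stacked dilated convs."""
    offsets = {0}
    for d in dilations:
        taps = [(j - k // 2) * d for j in range(k)]
        offsets = {o + t for o in offsets for t in taps}
    return sorted(offsets)

impulse = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
print(effective_kernel_size(3, 2))                          # 5
print(residual_dilated_block(impulse, [1.0, 1.0, 1.0], 2))  # [0.0, 1.0, 0.0, 2.0, 0.0, 1.0, 0.0]
print(sampled_offsets([2, 2]))  # [-4, -2, 0, 2, 4]: odd offsets are never sampled
print(sampled_offsets([1, 2]))  # [-3, -2, -1, 0, 1, 2, 3]: contiguous coverage
```

Stacking two dilation-2 layers never reads odd offsets, which is the kind of discontinuity that can leave small misclassified patches. Interleaving a dilation of 1 restores contiguous coverage, as the last call shows.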

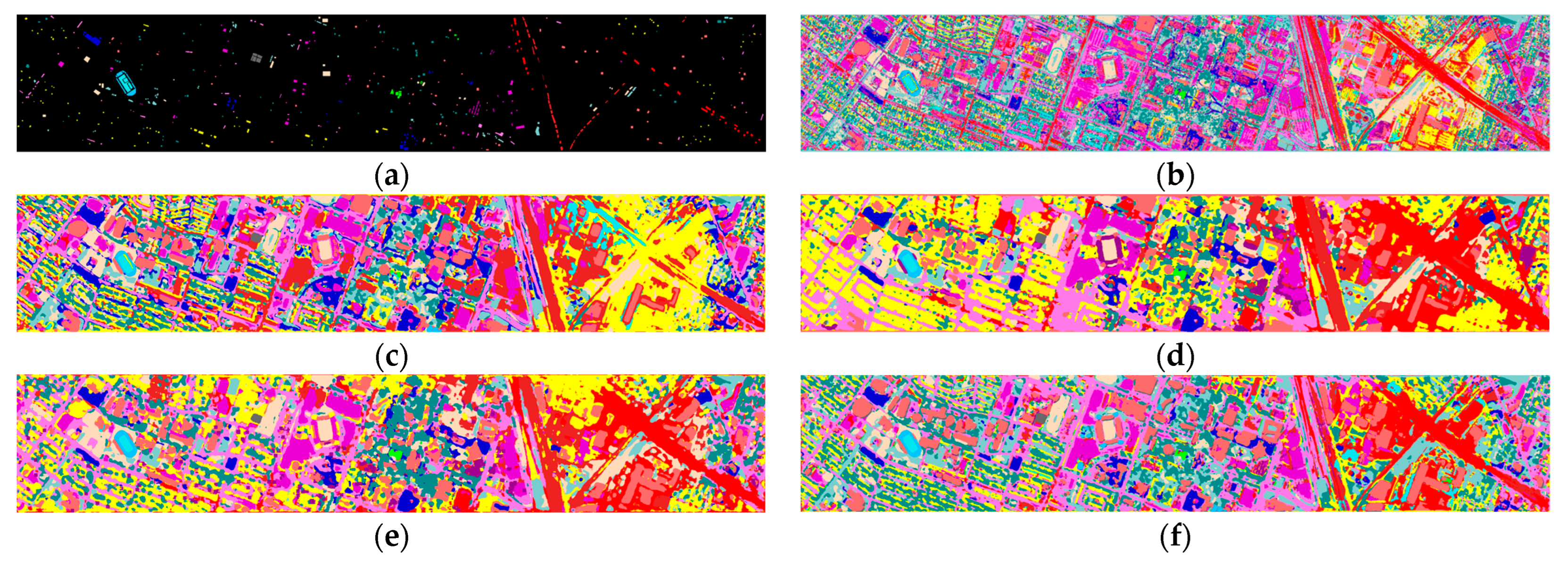

The HO dataset contains 15 categories, such as roads, soil, and trees. The classification accuracies and numbers of parameters of the eleven approaches are detailed in Table 13. The HO dataset includes fewer training samples than the other datasets, which makes precise classification extremely challenging. The SVM again achieves the lowest classification results, underscoring the importance of the spatial information in HSIs. The MHNA, which exploits spectral and spatial characteristics simultaneously, obtains the highest OA, AA, and Kappa. All the classification maps are presented in Figure 14, and every map clearly contains a considerable number of misclassified samples.