Multilevel Feature Aggregated Network with Instance Contrastive Learning Constraint for Building Extraction

Abstract

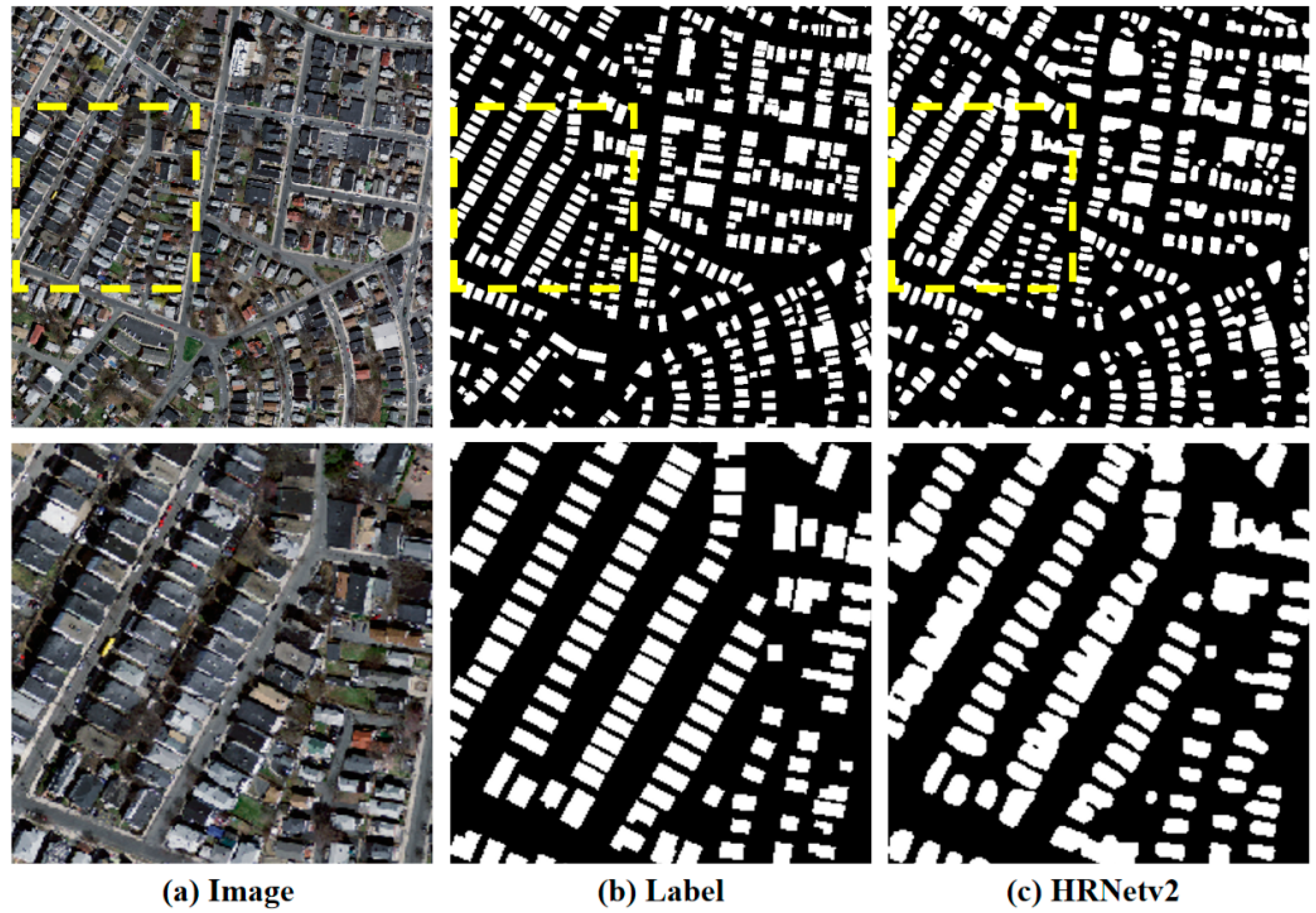

1. Introduction

- (1) We proposed a channel attention-based multilevel feature aggregation framework that adaptively fuses the spatial and semantic information, resulting in refined multi-scale building extraction;

- (2) We devised an instance-level contrastive learning strategy with global consistency constraints among buildings and backgrounds, which leads to robust feature representation;

- (3) We conducted extensive experimentation on the WHU and Massachusetts datasets to validate the performance and generalization of the proposed method.

2. Related Works

3. Methods

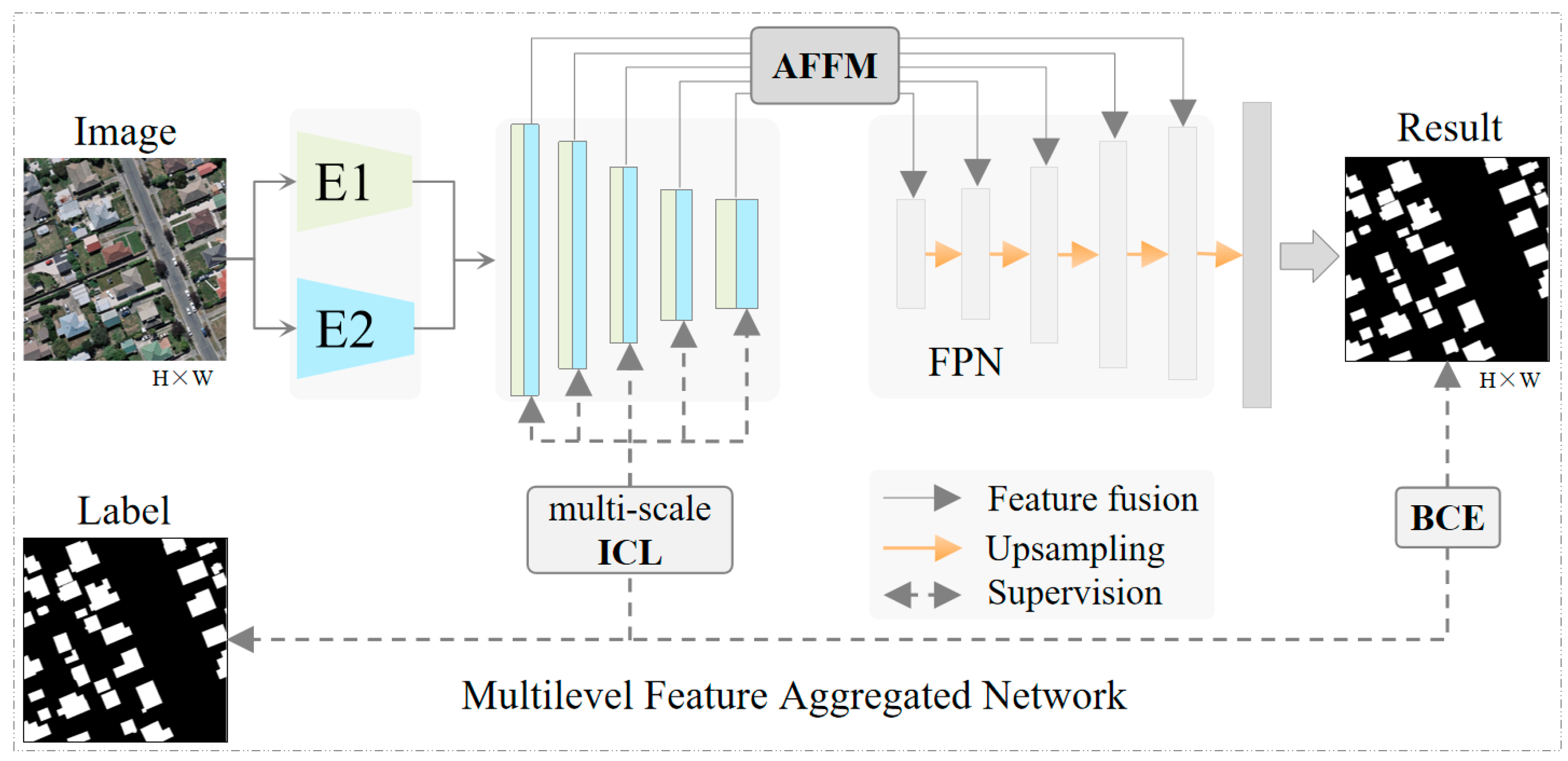

3.1. Architecture Overview

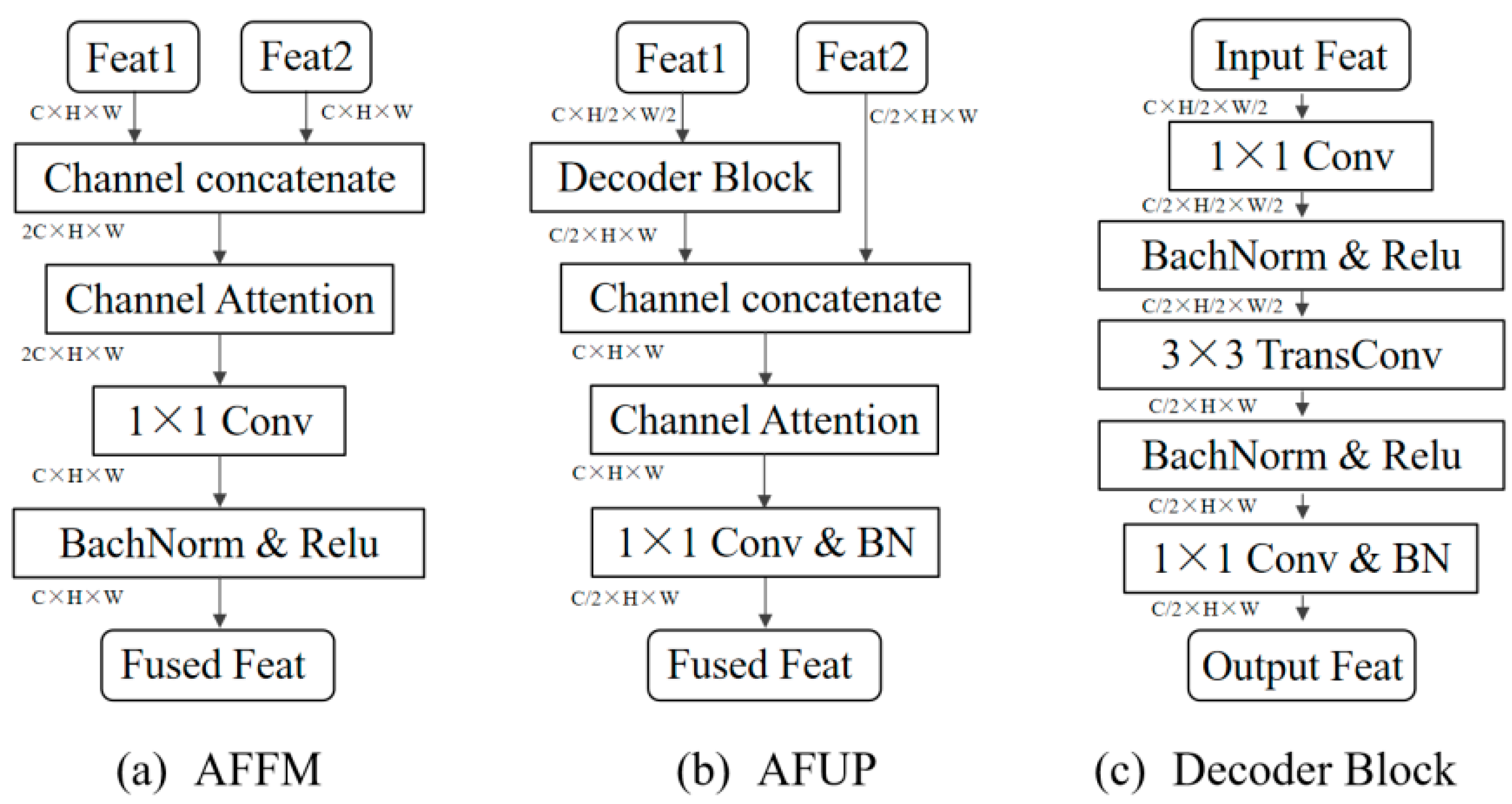

3.2. Aggregation of Multilevel Features

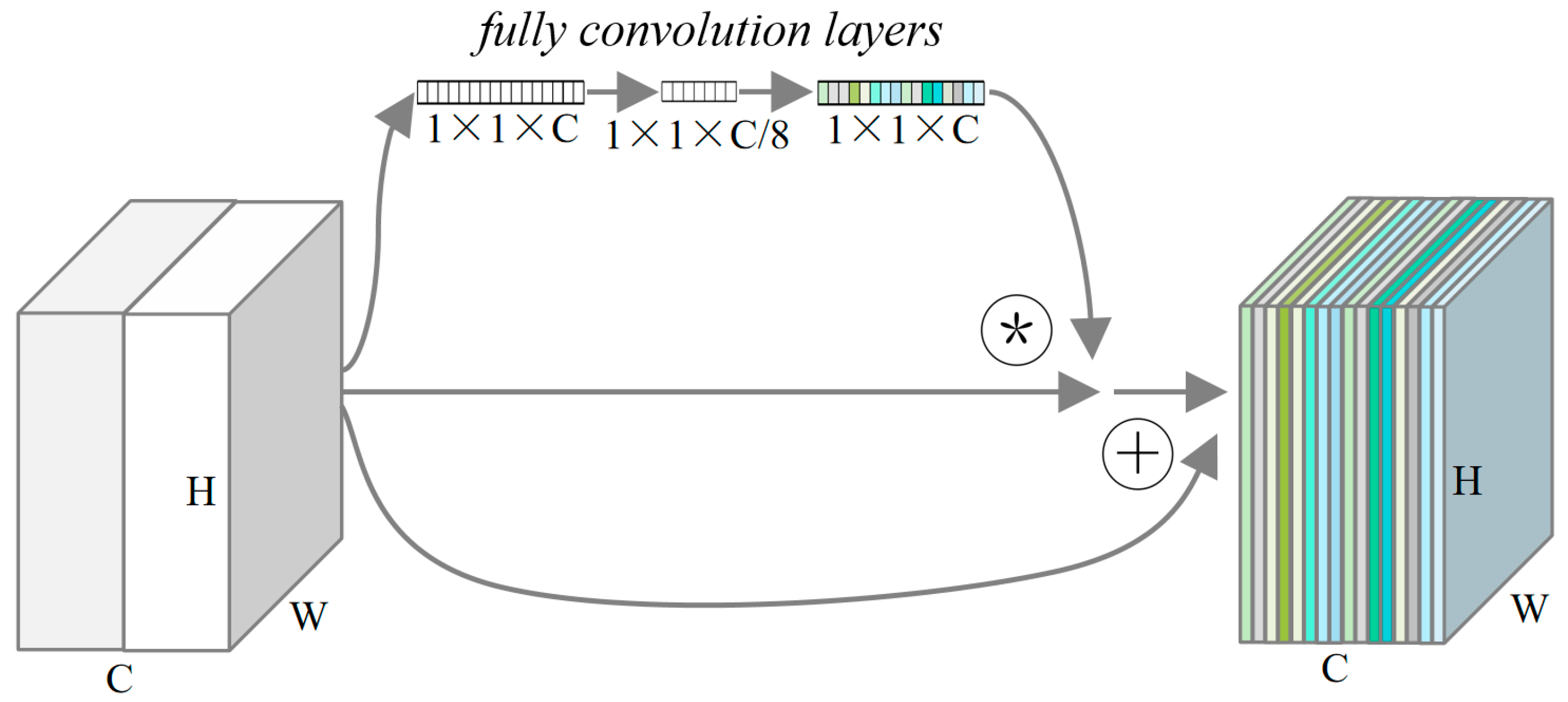
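
The contributions describe the aggregation step as channel attention that adaptively fuses spatial and semantic features from several levels. The exact module is not given in this excerpt, so the following is only a minimal sketch that assumes a squeeze-and-excitation style gate: the levels are assumed to be already resampled to a common resolution, and the names `channel_attention_fuse`, `w1`, `b1`, `w2`, and `b2` are hypothetical, with random weights standing in for learned ones.

```python
import math
import random

def global_avg_pool(channels):
    """Squeeze step: mean of every H x W channel, one scalar per channel."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in channels]

def dense(x, weights, bias):
    """Fully connected layer; `weights` has shape out_dim x in_dim."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def channel_attention_fuse(levels, w1, b1, w2, b2):
    """Concatenate same-resolution feature levels along the channel axis and
    rescale every channel by a gate in (0, 1). Assumed SE-style design."""
    stacked = [ch for level in levels for ch in level]       # channel concat
    squeezed = global_avg_pool(stacked)                       # C scalars
    hidden = [max(0.0, h) for h in dense(squeezed, w1, b1)]   # reduce + ReLU
    gates = [sigmoid(g) for g in dense(hidden, w2, b2)]       # expand + sigmoid
    fused = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, stacked)]
    return fused, gates

# Toy input: two levels, each with 2 channels of size 2 x 2 (illustrative values).
random.seed(0)
low_level = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]
high_level = [[[0.0, 1.0], [1.0, 0.0]], [[2.0, 2.0], [2.0, 2.0]]]
num_channels, reduced = 4, 2
w1 = [[random.uniform(-1, 1) for _ in range(num_channels)] for _ in range(reduced)]
w2 = [[random.uniform(-1, 1) for _ in range(reduced)] for _ in range(num_channels)]
fused, gates = channel_attention_fuse(
    [low_level, high_level], w1, [0.0] * reduced, w2, [0.0] * num_channels
)
print([round(g, 3) for g in gates])  # one weight per concatenated channel
```

In a trained network the gate weights would be learned jointly with the backbone, so channels that carry more useful spatial or semantic evidence receive larger gates.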

3.3. Instance-Level Constraint Contrastive Loss
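
The contributions describe this loss as an instance-level contrastive strategy with consistency constraints among buildings and backgrounds. The loss formula itself is not included in this excerpt, so the sketch below substitutes a generic supervised contrastive loss as an assumption: pixel features are averaged per instance, and instances with the same label (building or background) are pulled together while the others are pushed apart. The helper names, the temperature of 0.1, and the toy embeddings are all hypothetical.

```python
import math

def instance_embeddings(pixel_features, instance_ids):
    """Average the pixel feature vectors that belong to each instance id."""
    sums, counts = {}, {}
    for feat, inst in zip(pixel_features, instance_ids):
        acc = sums.setdefault(inst, [0.0] * len(feat))
        for k, x in enumerate(feat):
            acc[k] += x
        counts[inst] = counts.get(inst, 0) + 1
    return {inst: [x / counts[inst] for x in acc] for inst, acc in sums.items()}

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Mean over anchors of -log softmax similarity to same-label instances."""
    z = [l2_normalize(e) for e in embeddings]
    total, anchors = 0.0, 0
    for i in range(len(z)):
        positives = [j for j in range(len(z)) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without a positive contributes nothing
        logits = {j: dot(z[i], z[j]) / temperature for j in range(len(z)) if j != i}
        top = max(logits.values())  # subtract the max for numerical stability
        log_denom = top + math.log(sum(math.exp(v - top) for v in logits.values()))
        total -= sum(logits[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0

# Per-instance pooling on three pixels: instance 1 owns two of them.
pooled = instance_embeddings([[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]], [1, 1, 0])

# Four toy instance embeddings; label 1 = building, label 0 = background.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_separated = supervised_contrastive_loss(embeddings, [1, 1, 0, 0])
loss_mixed = supervised_contrastive_loss(embeddings, [1, 0, 1, 0])
print(loss_separated < loss_mixed)  # labels that match the clusters cost less
```

The comparison at the end illustrates the intended behaviour: when building and background embeddings form separate clusters the loss is small, and it grows when same-label instances are far apart in feature space.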

3.4. Evaluation Metrics and Training Strategies
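
The result tables report IoU, precision, recall, and F1-score for the building class. Assuming the standard pixel-wise definitions based on true positives, false positives, and false negatives (the excerpt does not spell them out), the four values can be computed as in the sketch below; the function names and the toy masks are illustrative only.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN for the building class (label 1) over flat masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    return tp, fp, fn

def building_metrics(tp, fp, fn):
    """Standard pixel-wise precision, recall, F1 and IoU as fractions."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

def f1_from_precision_recall(precision, recall):
    """Harmonic mean; works for fractions or percentages alike."""
    return 2 * precision * recall / (precision + recall)

def iou_from_f1(f1_fraction):
    """Identity IoU = F1 / (2 - F1), valid when both use the same counts."""
    return f1_fraction / (2 - f1_fraction)

# Toy flattened masks: 1 = building pixel, 0 = background pixel.
pred = [1, 1, 1, 0, 0, 1, 0, 0]
truth = [1, 1, 0, 0, 1, 1, 0, 0]
scores = building_metrics(*confusion_counts(pred, truth))
print(scores)

# Consistency check against the MFA-Net row reported for WHU
# (precision 94.64, recall 96.02, F1 95.33, IoU 91.07).
f1_whu = f1_from_precision_recall(94.64, 96.02)
iou_whu = 100 * iou_from_f1(f1_whu / 100)
print(round(f1_whu, 2), round(iou_whu, 2))
```

The identity between IoU and F1 only holds when both are computed from the same aggregate counts, so small deviations can appear if metrics are averaged per image instead.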

4. Experiments and Results

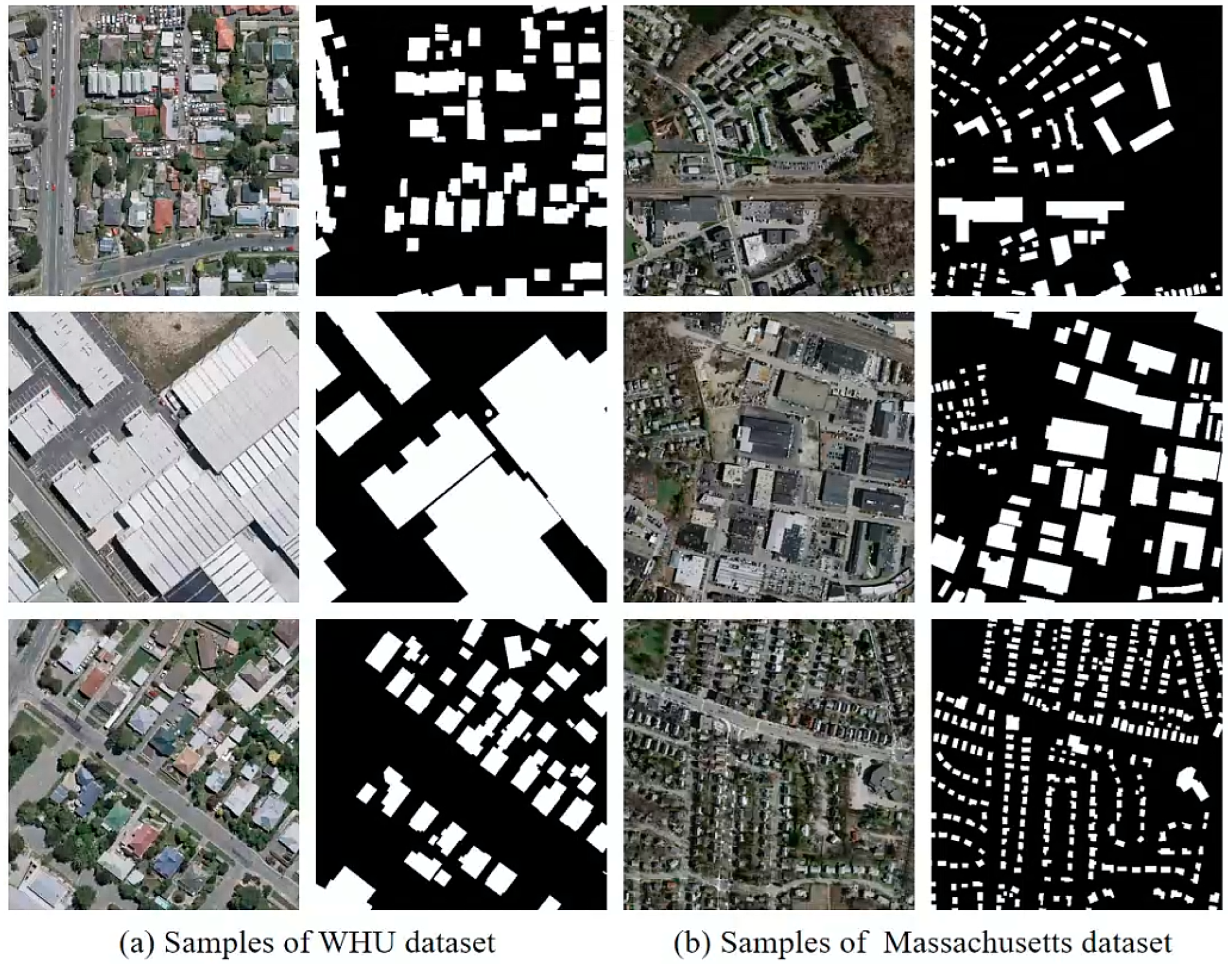

4.1. Datasets Description

4.2. Performance Comparison with Semantic Segmentation Methods

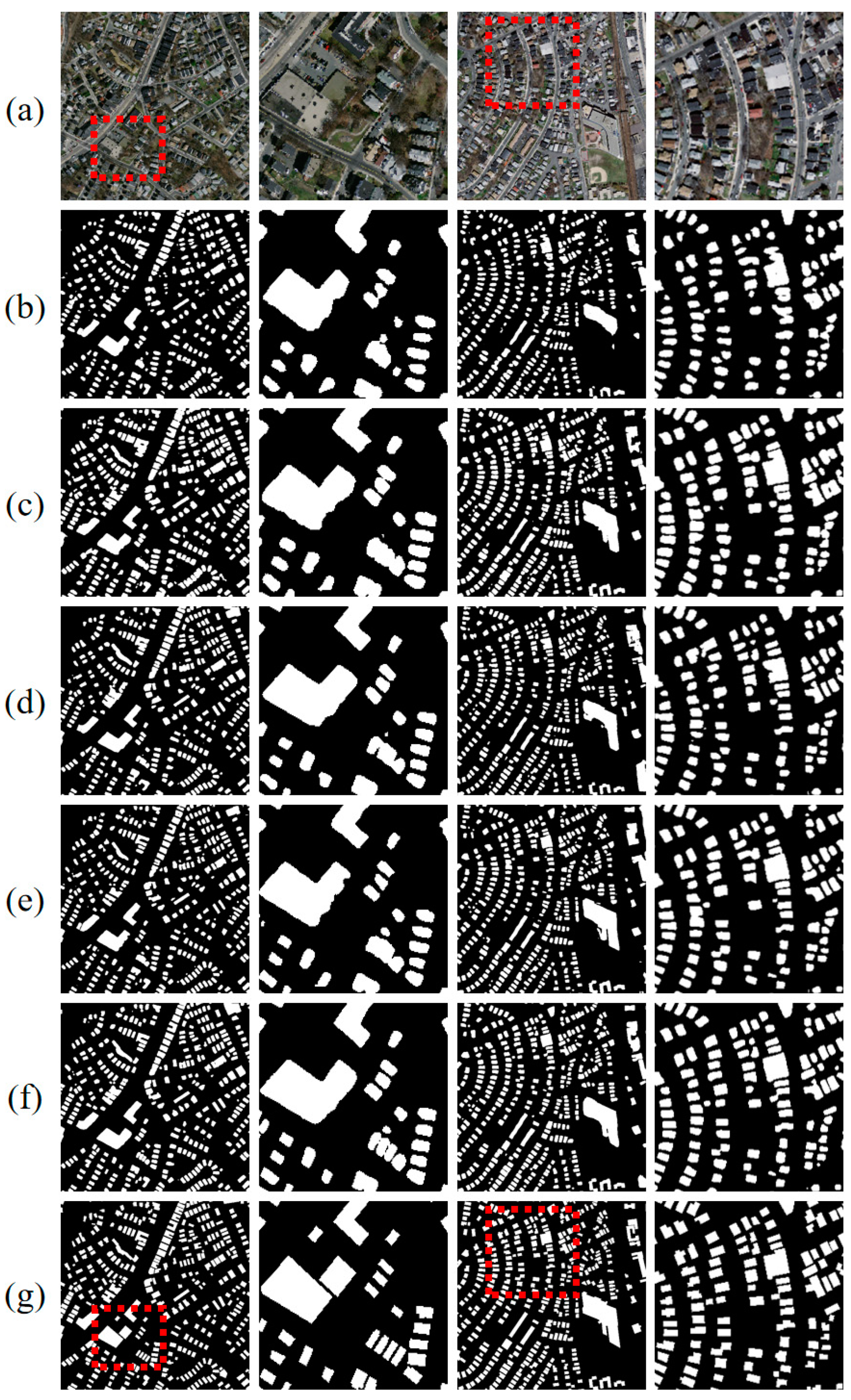

4.3. Comparison of Recent Related Methods

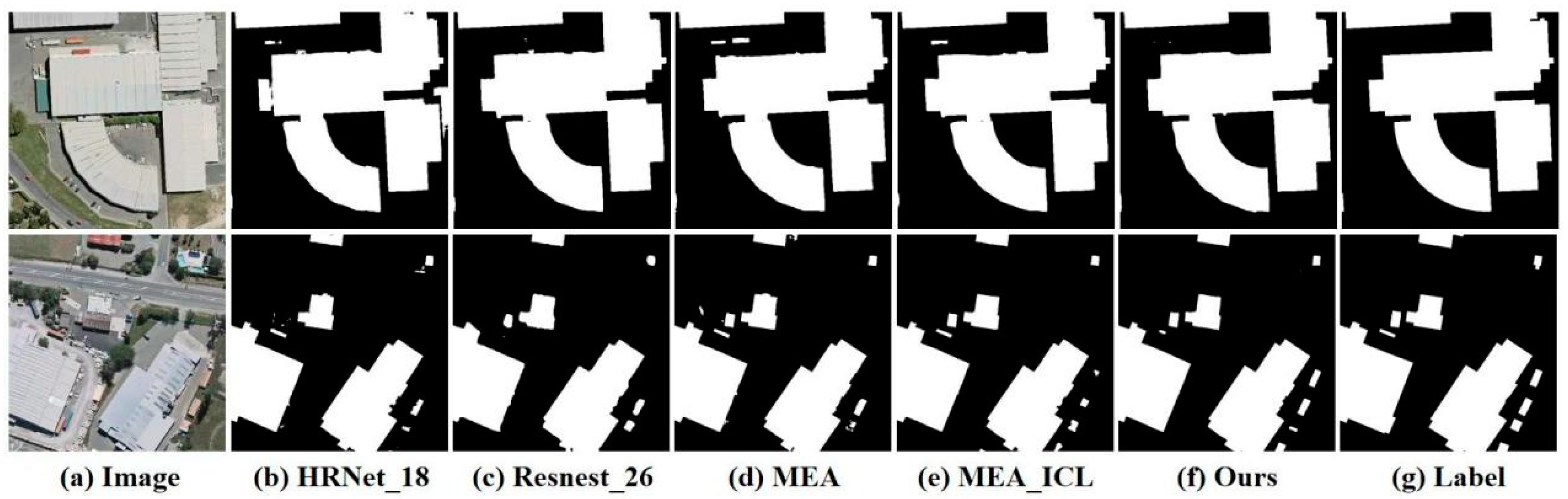

4.4. Ablation Experiments on the WHU Dataset

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method | IoU (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| DeepLabv3+ | 88.16 | 94.64 | 92.79 | 93.71 |
| PSPNet | 88.81 | 94.33 | 93.82 | 94.07 |
| U-NetPlus | 89.35 | 94.73 | 94.02 | 94.37 |
| HRNetv2 | 90.09 | 93.60 | 96.01 | 94.79 |
| MFA-Net (Ours) | 91.07 | 94.64 | 96.02 | 95.33 |

| Method | IoU (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| DeepLabv3+ | 67.36 | 82.76 | 78.35 | 80.49 |
| PSPNet | 71.64 | 86.46 | 80.69 | 83.48 |
| U-Net | 71.05 | 84.02 | 82.15 | 83.07 |
| HRNetv2 | 72.32 | 85.34 | 82.58 | 83.94 |
| MFA-Net (Ours) | 74.58 | 87.11 | 83.84 | 85.44 |

| Dataset | Method | IoU (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| WHU | MC-FCN | 87.10 | 94.60 | 91.70 | 93.13 |
| WHU | RSR-Net | 88.32 | 94.92 | 92.63 | 93.76 |
| WHU | SiU-Net | 88.40 | 93.80 | 93.90 | 93.85 |
| WHU | SRI-Net | 89.23 | 95.67 | 93.69 | 94.51 |
| WHU | BOMSC-Net | 90.15 | 94.80 | 95.14 | 94.50 |
| WHU | HDNet | 90.50 | 95.20 | 94.80 | 95.00 |
| WHU | EU-Net | 90.56 | 94.98 | 95.10 | 95.04 |
| WHU | MA-FCN | 90.70 | 95.20 | 95.10 | 95.15 |
| WHU | MAP-Net | 90.86 | 95.62 | 94.81 | 95.21 |
| WHU | MFA-Net (Ours) | 91.07 | 94.64 | 96.02 | 95.33 |
| Massachusetts | MAP-Net | 73.34 | 85.49 | 83.76 | 84.62 |
| Massachusetts | EU-Net | 73.93 | 86.70 | 83.40 | 85.01 |
| Massachusetts | BRRNet | 74.46 | -- | -- | 85.36 |
| Massachusetts | BP-Net | 74.51 | 85.44 | 85.34 | 85.39 |
| Massachusetts | CBR-Net | 74.55 | 86.50 | 84.36 | 85.42 |
| Massachusetts | BOMSC-Net | 74.71 | 86.64 | 84.68 | 85.13 |
| Massachusetts | MFA-Net (Ours) | 74.58 | 87.11 | 83.84 | 85.44 |

| Methods | MEA | ICL | AUP | IoU (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|---|---|
| HRNet18 | × | × | × | 90.08 | 93.59 | 96.01 | 94.79 |
| Resnest26 | × | × | × | 90.03 | 93.72 | 95.81 | 94.76 |
| + MEA | √ | × | × | 90.36 | 93.90 | 96.00 | 94.94 |
| + ICL | √ | √ | × | 90.77 | 94.71 | 95.62 | 95.16 |
| + AUP | √ | √ | √ | 91.07 | 94.63 | 96.02 | 95.33 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, S.; Bao, T.; Liu, H.; Deng, R.; Zhang, H. Multilevel Feature Aggregated Network with Instance Contrastive Learning Constraint for Building Extraction. Remote Sens. 2023, 15, 2585. https://doi.org/10.3390/rs15102585
