Abstract

Synthetic aperture radar (SAR) images have become an increasingly important data source for change detection in satellite remote sensing. This paper proposes a new end-to-end SAR image change detection network architecture, TransUNet++SAR, that combines Transformer with UNet++. First, a convolutional neural network (CNN) was used to obtain the feature maps of the single-temporal SAR images layer by layer. Tokenized image patches were then encoded to extract rich global context information; the improved Transformer models global semantic relations effectively and generates rich contextual feature representations. Next, the decoder upsampled the encoded features and connected the encoded multi-scale features with the high-level features by sequential connection to learn the local-global semantic features, recover the full spatial resolution of the feature map, and achieve accurate localization. In the UNet++ structure, the bitemporal SAR images are processed by two single networks with shared weights, which learn the features of each single-temporal image layer by layer to avoid the influence of SAR image noise and pseudo-change on the deep learning process. The experimental results show that TransUNet++SAR performed significantly better on the Beijing, Guangzhou, and Qingdao datasets than other deep learning SAR image change detection algorithms. Compared with other Transformer-related change detection algorithms, its description of the changed area edges was also more accurate. In the dataset experiments, the model had higher indices than the other models, especially on the Beijing building change dataset, where the IoU was 9.79% higher and the F1-score was 4.38% higher.

1. Introduction

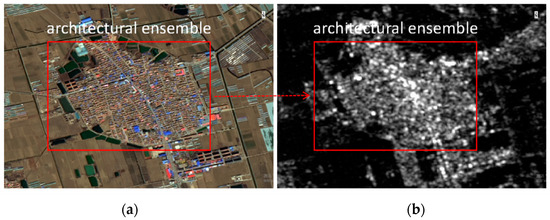

The change detection task mainly obtains image differences from multi-temporal remote sensing images of the same geographical area using appropriate algorithms [1]. Change detection technology can be used in the planning of urban built-up areas, disaster assessment, ecological monitoring, etc., so that the development of the world can be monitored better and faster from a quantitative perspective. At present, change detection mainly uses high-resolution optical images. However, optical images are susceptible to cloud, sun angle, seasonal changes, and other factors, which greatly affect the results of change detection. SAR images, in contrast, have strong penetration, are not affected by cloud and fog, solar altitude angle, and other factors, and can provide all-weather data. They can make up for the shortcomings of optical images, so they have important research value in remote sensing applications [2]. On the other hand, the resolution of SAR image datasets is generally lower than that of optical images, and less information can be read intuitively from them. Figure 1 shows the corresponding optical image and SAR image of the same area of Changshu City. The comparison shows that the optical image has a more obvious geometric structure, clearer object edge contours, and richer spectrum and texture through the superposition of visible light bands. The application of SAR image change detection is therefore limited by this lack of information. In this paper, deep learning was used to obtain more targeted and deeper SAR image information, which expands the application range of SAR image change detection compared with traditional methods. Some deep learning change detection methods for SAR images already exist, but few studies focus on built-up areas. Therefore, the use of SAR images to study urban built-up areas, such as urban road changes and urban building changes, has a research potential for urban planning and urban change that cannot be ignored. Because public datasets for SAR image change detection are lacking on the Internet, the datasets in this paper were produced by the authors and their team. The addition of these SAR datasets also further verifies the practicability of deep learning in SAR imagery.

Figure 1.

Optical imagery and SAR imagery used in the change detection datasets. (a) 2.5 m resolution high-resolution image of Changshu City, (b) 10 m resolution SAR image of Changshu City.

Traditional SAR image change detection techniques are usually based on three processes: (1) image preprocessing; (2) generating differential image; and (3) analyzing the differential image to identify the changed area [3]. During image preprocessing, it is necessary to establish the spatial correspondence between bitemporal SAR images based on image registration [4], which can ensure good image consistency in the spatial domain [5]. While the focus of SAR change detection is the generation and analysis of DI (differential images), the quality of DI and the method for analyzing DI will greatly affect the final detection results. The common algorithms for generating DI are the difference operator and ratio operator. Due to the influence of the SAR image imaging mechanism, it is generally preferred to use the ratio operator, which uses ratio operation on the bitemporal SAR images to generate the DI such as the logarithmic operator and mean operator. The logarithmic operator converts the pixel value of the image into a logarithmic dimension, and then calculates the ratio of image pairs [6]. It can effectively reduce the larger difference caused by ratio calculation and lower the influence of background independent points in invariant classes, but its ability to preserve edge information is weak [5]. The mean operator integrates over the local neighborhood pixels, calculates the mean ratio of image pairs [7], considers the spatial neighborhood information, and has a good suppression of noise in change detection [8]. The common methods for DI analysis can be divided into supervised and unsupervised methods. Unsupervised change detection usually uses the thresholding method and clustering method. In the thresholding method, the optimal threshold is found and the pixels of DI are classified into the change class and invariant class by the optimal threshold. 
If a pixel value is greater than the threshold, it is set to white (change class); if it is less than or equal to the threshold, it is set to black (invariant class). A classical thresholding algorithm is that proposed by Gabriele Moser et al., a change detection method combining the image ratio with a generalization of the Kittler and Illingworth minimum error threshold algorithm (K&I) [9]. On the basis of the K&I algorithm, models were then built to find the optimal threshold. An improved algorithm, named the generalized K&I (GKI) threshold selection algorithm, was thus proposed [5] and extended to consider the non-Gaussian distribution of the SAR image amplitude [10]. The clustering approach to DI analysis is more convenient and flexible because it requires no modeling. Among the most popular clustering methods, the fuzzy C-means algorithm (FCM) can retain more information than hard clustering in some cases. Krinidis and Chatzis [11] proposed the fuzzy C-means (FCM) clustering method with local neighborhood information (FLICM), which takes into account both local spatial information and gray level. This method defines an automatic fuzzy factor to improve robustness without human intervention. Although these unsupervised analysis methods do not need the prior information of a training set, they cannot meet current needs well as data complexity and application scope increase.
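The traditional pipeline described above (ratio-based DI generation followed by thresholding) can be illustrated with a minimal sketch. This is not the implementation of any cited method: the function names, the `eps` stabilizer, the `radius` of the local window, and the fixed `threshold` are assumptions for illustration, and the mean-ratio form used here, 1 - min(m1/m2, m2/m1), is one common formulation of the mean operator.

```python
import math


def log_ratio_di(img1, img2, eps=1e-6):
    """Log-ratio DI: |log((x2 + eps) / (x1 + eps))| for every pixel."""
    return [
        [abs(math.log((b + eps) / (a + eps))) for a, b in zip(row1, row2)]
        for row1, row2 in zip(img1, img2)
    ]


def mean_ratio_di(img1, img2, radius=1, eps=1e-6):
    """Mean-ratio DI: 1 - min(m1/m2, m2/m1), with m1, m2 local neighborhood means."""
    h, w = len(img1), len(img1[0])

    def local_mean(img, r, c):
        vals = [
            img[i][j]
            for i in range(max(0, r - radius), min(h, r + radius + 1))
            for j in range(max(0, c - radius), min(w, c + radius + 1))
        ]
        return sum(vals) / len(vals) + eps

    di = []
    for r in range(h):
        row = []
        for c in range(w):
            m1, m2 = local_mean(img1, r, c), local_mean(img2, r, c)
            row.append(1.0 - min(m1 / m2, m2 / m1))
        di.append(row)
    return di


def threshold_di(di, threshold):
    """Pixels above the threshold become 1 (change, white); the rest 0 (invariant, black)."""
    return [[1 if v > threshold else 0 for v in row] for row in di]


# Toy bitemporal pair: only the bottom-right pixel brightens strongly.
t1 = [[10.0, 10.0], [10.0, 10.0]]
t2 = [[10.0, 10.0], [10.0, 80.0]]
change_map = threshold_di(log_ratio_di(t1, t2), threshold=1.0)
```

In practice the threshold would be chosen automatically, for example by a K&I-style minimum error criterion, rather than fixed by hand as in this toy example.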

Supervised methods use a training set with ground-truth change information as prior information to train classifiers such as the support vector machine (SVM) and extreme learning machine (ELM) [12]. Chao Li et al. proposed a context-sensitive similarity measure method based on a supervised classification framework that magnified the difference between the changed and unchanged pixels [13]. I.J. Goodfellow et al. [14] used a method that combined thresholding with deep belief networks (DBN): it first used a thresholding method to convert the DI into a binary image and then flattened the training samples into column vectors for training in the DBN. However, this method ignored the spatial information of the samples and could not learn their spatial features. Overall, supervised classification uses features extracted from labeled data as prior information and tends to produce a better classification effect than unsupervised classification. However, most experiments with these methods have so far used open datasets of farmland, water areas, glaciers, and other natural areas, and research specific to urban built-up areas needs further analysis.

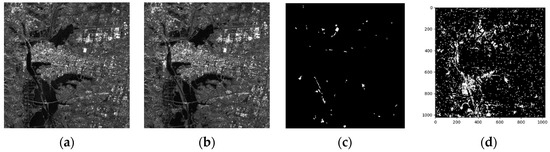

In conclusion, some problems that cannot be ignored remain in widespread SAR image change detection. The first is speckle noise suppression. Speckle noise, caused by the fading of the radar target echo signal, is an inevitable defect of SAR imaging; it blurs image details and reduces image quality, making it difficult to construct a good difference map. The second is generating a DI with good performance and designing effective classifiers. A good DI is an essential condition for correct detection results in most methods, and effective classifiers determine the final change detection result. At present, most of the commonly used classifiers rely on complex formulas or the optimization of objective functions to perform classification, but these methods cannot make good use of image features, so the classification effect is not ideal [15]. Figure 2 shows the result of processing SAR images with the traditional SAR image change detection method CD_DCNet. Figure 2c is the ground truth, and Figure 2d is the detection result. We can see that the traditional method is greatly disturbed by noise.

Figure 2.

Change detection result of CD_DCNet. (a) First temporal image. (b) Second temporal image. (c) Ground truth. (d) Detection result.

Deep learning technology is the latest technology in computer vision and image processing [16]. Its core idea is the hierarchical representation of input data: through the layers of a neural network, hierarchical and more robust feature representations of the data can be learned. At present, it performs outstandingly in image feature extraction and classification and has attracted wide attention from scholars at home and abroad [15]. Change detection technology based on CNN reduces the number of parameters that need to be trained, reduces model complexity, and at the same time improves the generalization ability of the model. Using a CNN to learn features from remote sensing images in order to study the changes in bitemporal images has become a common method in the field of change detection. Tao Liu et al. proposed a new dual-channel convolutional neural network that contained two parallel CNN structures and could extract features from two multi-temporal SAR images [17]. However, due to the inherent limitations of the convolution operation, the receptive fields are constrained to connected pixels, and this CNN structure is too simple to extract rich contextual information. Fang Liu et al. proposed a convolutional neural network with local constraints, in which a spatial constraint called the local constraint was added to the output layer of the CNN to learn the multi-layer difference map [18]. However, this network was designed for the characteristics of polarimetric SAR data, and its applicability to change detection of urban building complexes in SAR images has not been proven.

This paper proposes a network architecture combining early and late fusion strategies. Compared with other CNN-based SAR image change detection networks, the design is deeper and more complex, but it can extract deeper network features. It is a SAR image change detection model combining the UNet++ and Transformer structures. Unlike the traditional SAR image change detection process, it generates no difference map and therefore needs no difference map analysis. Instead, it learns from the training samples that we have labeled, without modeling the noise explicitly, so the influence of the speckle noise inherent in SAR images is suppressed to some extent. First, the bitemporal SAR images are each fed to a single network with shared weights to learn the features of the single-temporal images layer by layer, and the learned feature maps of two adjacent layers are connected. These are then passed to the Transformer encoder to extract rich contextual feature information and learn the local-global semantic features. Finally, the upsampling decoder restores the feature maps to the original resolution to obtain the change detection results. Learning the deep features of each temporal image in a separate CNN branch avoids, to a certain extent, the influence of pseudo-changes and SAR image noise on the learning results; encoding the connected context features captures local-global features; and the shallow features are retained, so context information is fully learned throughout the process. The SAR change detection model is able to accurately locate the changes of building groups, and the accuracy of the model is improved. The specific contributions of our model are as follows:

- (1) We propose an end-to-end network architecture that combines the UNet++ and Transformer models. This is the first attempt to use the combination of UNet++ and Visual Transformer in SAR image change detection. Through this improved hybrid architecture, the model can capture long-distance dependencies and fully learn multi-layer features.

- (2) The change detection accuracy of this model remains high in SAR images with heavy noise. It performs excellently in the change detection of building groups in urban built-up areas and can effectively reduce the influence of noise and false changes in the SAR images.

- (3) The experimental results show that the proposed model was better than other change detection models in terms of expression ability and suppression of false changes and noise. The experimental results on several representative datasets were better in terms of F1, IoU, and other evaluation indices.

2. Related Work

2.1. Visual Transformer

Transformer is a deep learning model based on the attention mechanism, which has achieved success in natural language processing [19]. With its powerful feature learning ability, the Transformer model frequently appears in the field of computer vision research. Unlike CNN, Transformer has a self-attention mechanism, which makes it capable of using this mechanism to capture global context information and build long-distance dependence on the target to extract more favorable features. Today, Transformer can be used in change detection (CD), segmentation (SETR), denoising, and other aspects of image processing, and has achieved good results.

In the field of remote sensing, Transformer is also widely used. In terms of image classification, Chen et al. [20] proposed a dual-branch transformer, where the experiments proved that this method performed better in image classification than multiple parallel works on visual Transformer. While in semantic segmentation, Sitong Wu et al. [21] proposed a new semantic image segmentation framework for an all-transformer network (FTN), which achieved new results on several challenging semantic segmentation benchmarks. Enze Xie et al. [22] proposed a semantic segmentation framework (SegFormer) that unified a Transformer and lightweight multi-layer perceptron (MLP) decoder, which did not require location coding and combined local attention and global attention. The model extended by this method achieved better performance than the other models. In terms of image denoising, Malsha V. Perera et al. [23] proposed a SAR image speckle removal network based on Transformer, which allows the network to learn the global dependence between different image regions, which is helpful for better speckle removal, and used the composite loss function to train the synthesized speckle images.

In change detection, several Transformer-based methods have appeared in recent years. Hao Chen et al. [24] proposed a bitemporal image Transformer (BIT) to effectively model the context in the space-time domain and incorporated BIT into a change detection (CD) framework based on feature difference. Experiments on CD datasets acquired from optical images demonstrated the effectiveness of the proposed method. Qingyang Li et al. [25] proposed a new encoding-decoding Transformer model, TransUNetCD, in which the CNN feature map is combined with tokenized image patches and then encoded with a Transformer to extract rich global context information. Experimental results showed that its results were more precise and that it had robust generalization ability. However, these change detection models were all designed for optical images, and their applicability to SAR image change detection has not been verified.

2.2. Change Detection with Deep Learning

CNN is widely used in image change detection for its excellent feature extraction ability, and the UNet series of networks is a good representative. With their unique U-shaped structure and skip connections, the lightweight UNet series models have been widely used in the semantic segmentation of medical images. This structure copes well with small datasets, so it is also suitable for remote sensing image processing, where fewer datasets are available. The improved models based on the UNet [26] and UNet++ [27] segmentation networks have a strong ability to extract image attribute information and therefore to retain details, so they have been applied in image segmentation, change detection, and other fields. D. Peng et al. [28] used a multi-side output fusion (MSOF) strategy for change detection based on UNet++; this network employed MSOF to extract the details. Xueli Peng et al. [16] proposed an improved UNet++MSOF architecture in which low-level features are used to obtain high-level features through convolution units, while high-level features with rich semantic information are used to guide low-level features through upsampling units.

At present, these UNet series change detection network architectures based on a CNN structure can gradually and deeply extract features with more advanced semantic information. However, it lacks sufficient attention to the context information, and is too sensitive to the image differences of the same ground object in the scene due to the shooting angle, time, and other reasons as well as the image noise and other factors in the process of obtaining features, leading to a large number of false changes in the change detection results. Therefore, a better algorithm is needed to reduce the impact of complexity in the interesting object in the scene on the image results.

The attention mechanism screens out the information that we are interested in from a large amount of information and focuses on them, ignoring most of the information we are not interested in. The higher the weight, the more important the information. The three main attention domains are the spatial domain, channel domain, and mixed domain. Peng et al. [16] proposed an attention convolutional neural network (DDCNN) to capture the long-term dependence from the attention of spatial and channel for better feature extraction.

3. Materials and Methods

3.1. Proposed Method

3.1.1. Network Architecture

SAR images have few bands, contain amplitude information that cannot reach the level of optical images, have low resolution, and are heavily influenced by noise. Influenced by these factors, SAR images have high limitations in the application of change detection, which are mostly used in environmental and disaster aspects such as landslides, farmland, glaciers, etc. At present, there is no large public dataset for the deep learning change detection of SAR images on the Internet. Therefore, most of the current change detection technologies for SAR images adopt unsupervised classification methods, and rarely adopt the supervised classification mode. However, SAR images also have the advantages of larger acquisition range, shorter period, and no cloud occlusion; if the dataset can be obtained from SAR images for the establishment of the deep learning change detection model, the generalization ability of the model can be improved to reduce the influence of the inherent speckle noise of the image, and the application of SAR images in urban areas will be greatly improved.

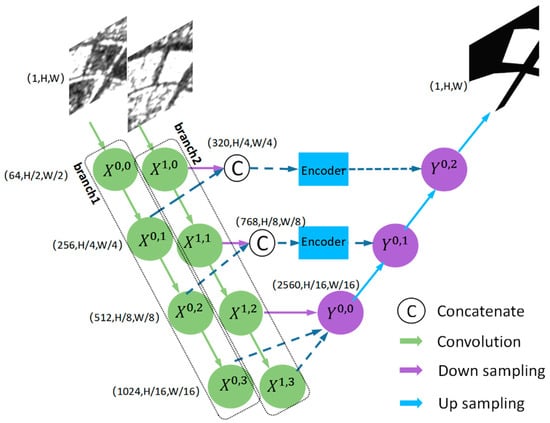

The improved change detection networks of the UNet and UNet++ series [26,27,28] can accurately detect the change information of an image; they have a strong ability for feature extraction and detail retention and avoid the aliasing effect [28,29,30]. However, the noise in SAR images affects image quality, reduces the feature extraction ability of the improved UNet series models [31,32,33], and hinders the detailed interpretation of SAR data. Moreover, the inherent limitations of the convolution operation mean that CNNs are not good at capturing long-distance features [34]. The more heads the multi-head attention in Transformer has, the more beneficial it is for capturing long-range features [35]. Therefore, Transformer is conducive to representing the features of the changes of interest and to establishing a more interpretable and efficient feature representation model [36]. Inspired by the above, this paper combined the improved UNet series models with the visual Transformer and applied the result to deep learning change detection of SAR images. The overall process of TransUNet++SAR is shown in Figure 3.

Figure 3.

TransUNet++SAR.

First, the network feeds the bitemporal SAR images into two independent branches; each branch extracts the depth features of its image layer by layer through multiple convolution units, and the upper and lower depth features of the two branches are then connected layer by layer. The first and second layers of the second branch are connected with the second and third layers of the first branch, respectively, forming two feature connections. Second, the two connected features are each divided into a series of patches; each patch is flattened into a one-dimensional vector and then linearly projected into a patch embedding, giving a sequence of patch embeddings that is fed into the Vision Transformer network. This network learns and correlates the global context of high-level semantic concepts in the Transformer encoder to obtain enhanced deep features. Then, the last layers of both branches are connected with the third layer of the second branch. Finally, these three parts of the processed data are put into the upsampling decoder network to enhance the low-level details and improve the change edge extraction ability of the model. The change map is obtained from the resulting feature map.

3.1.2. Transformer Encoder

SAR images differ from optical images in imaging mechanism and image characteristics. Our images included a variety of objects: buildings, roads, forests, farmland, water, etc. These objects have different backscattering coefficients, so they appear with different gray values in the SAR image. SAR images do not provide as much information as optical images, which makes change detection considerably more difficult. At the same time, compared with optical images, SAR images are more susceptible to noise and false changes. Therefore, we adopted the concatenation operation in the CNN structure, since it is less prone to learning image noise than the difference operation, and put the bitemporal SAR images into the CNN-based network structure. In order to improve the feature extraction ability and fitting performance of the model, the channel numbers after the CNN stages were [64, 256, 512, 1024], respectively. The feature maps extracted in these stages were put into the improved Vision Transformer.

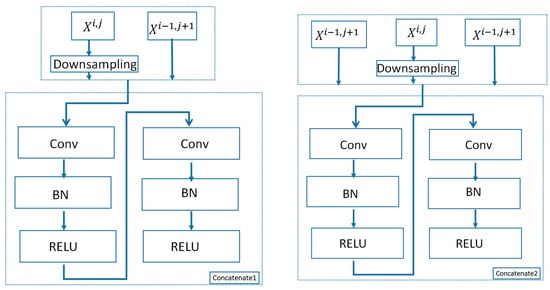

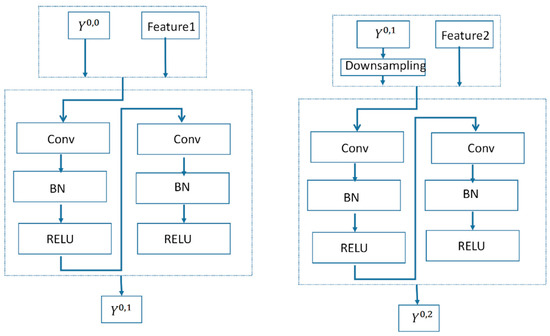

For the bitemporal SAR images, the spatial resolution is H × W and the number of channels is C. In Formula (1), one function is the concatenate1 operation in Figure 4 and the other is the concatenate2 operation. The features extracted from the paired layers of the two branches are taken as a whole by the concatenate1 operation. However, Transformer cannot encode the 3D data matrix directly, so the input must be reshaped into a sequence of 2D patches: the input is divided into $N = HW/P^2$ patches, where the patch size is P × P. Then, each patch is mapped to a one-dimensional vector by linear mapping to obtain a token with the same size as the patch. Before the data is input into the Transformer Encoder, the spatial location information of the patches needs to be encoded to reduce the learning cost. The formula of the embedding layer is as follows:

$$z_0 = [x_p^1 E;\ x_p^2 E;\ \ldots;\ x_p^N E] + E_{pos}$$

where $x_p = [x_p^1, \ldots, x_p^N]$ is the sequence composed of patches, $E$ is the patch embedding projection, and $E_{pos}$ denotes the position embedding.
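The patch splitting and embedding step described above can be sketched in a few lines. This is a minimal illustration that assumes the standard Vision Transformer formulation (split into N = HW/P² patches, flatten, project, add a position embedding); the function names `patchify` and `embed`, the nested-list data layout, and the toy projection values are hypothetical and not taken from the paper.

```python
def patchify(feature_map, patch_size):
    """Split an H x W x C nested list into N = HW / P^2 flattened patch vectors."""
    h, w = len(feature_map), len(feature_map[0])
    if h % patch_size or w % patch_size:
        raise ValueError("H and W must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            vec = []
            for i in range(top, top + patch_size):
                for j in range(left, left + patch_size):
                    vec.extend(feature_map[i][j])  # append the C channel values
            patches.append(vec)
    return patches


def embed(patches, projection, pos_embedding):
    """Project each flattened patch with E and add its position embedding."""
    out_dim = len(projection[0])
    tokens = []
    for vec, pos in zip(patches, pos_embedding):
        projected = [
            sum(v * projection[k][d] for k, v in enumerate(vec))
            for d in range(out_dim)
        ]
        tokens.append([p + q for p, q in zip(projected, pos)])
    return tokens


# Toy 4 x 4 feature map with C = 2 channels and patch size P = 2, so N = 4.
fm = [[[float(i * 4 + j), 1.0] for j in range(4)] for i in range(4)]
patches = patchify(fm, 2)
E = [[1.0, 0.0] for _ in range(8)]       # hypothetical (P*P*C) x D projection, D = 2
E_pos = [[0.5, 0.5] for _ in range(4)]   # hypothetical N x D position embedding
z0 = embed(patches, E, E_pos)
```

Each patch vector has length P·P·C, so the projection matrix has P·P·C rows; in a trained model both the projection and the position embedding would be learned parameters.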

Figure 4.

Concatenate1 and concatenate2.

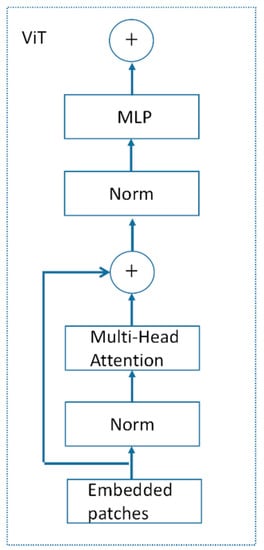

Next, the improved Vision Transformer Encoder is used to encode the patches that carry the depth features. For each patch embedding, a learnable embedding representation is predicted, and the final output of this embedding in the encoder is taken as the representation of the corresponding patch. The structure of the model is the same as that of the standard Transformer (Figure 5), which consists of multiple alternating layers of multi-head self-attention (MSA) and multi-layer perceptrons (MLP). The MLP is a module composed of fully connected layers, an activation function, and dropout, which adds nonlinear transformation capability to Transformer. The MSA is a module that performs multiple attention calculations at the same time and better learns long-distance dependence. Different randomly initialized mapping matrices map the input vectors to different subspaces, which enables the model to understand the input sequence from different perspectives.

Figure 5.

Visual Transformer.

The formula represents the forward propagation of the MSA and MLP, where LN(·) is the layer normalization operator and the output is the encoded image representation. The attention first linearly transforms the input vector into Q (Query), K (Key), and V (Value). The correlation weight matrix coefficients between Q and K are then calculated and normalized by Softmax, and the weight coefficients are applied to V to realize the modeling of global context information. It is calculated as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{T}}{\sqrt{d}}\right)V$$

where the projection matrices W are learnable and $\sqrt{d}$ is the scaling factor that avoids the variance caused by the dot product. Q, K, and V are the query, key, and value matrices of size N × d, where N and d denote the number of patches and the dimension of single-head attention.

$$\mathrm{MSA}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)W^{O}$$

where h is the number of attention heads applied to the input sequence and $W^{O}$ is the parameter matrix.
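The attention computation described above can be made concrete with a small single-head sketch. It assumes the standard scaled dot-product form, Softmax(QKᵀ/√d)V, on plain nested lists; the function names are illustrative, and the learned projections that produce Q, K, and V as well as the multi-head concatenation are omitted.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for Softmax(Q K^T / sqrt(d)) V with N x d inputs."""
    d = len(Q[0])
    scale = math.sqrt(d)
    # One row of attention weights per query: similarity to every key.
    weights = [
        softmax([sum(q * k for q, k in zip(q_row, k_row)) / scale for k_row in K])
        for q_row in Q
    ]
    # Each output row is the weighted sum of the value rows.
    output = [
        [sum(w * v_row[c] for w, v_row in zip(w_row, V)) for c in range(len(V[0]))]
        for w_row in weights
    ]
    return output, weights


K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
# A zero query is equally similar to every key, so the output is the mean of V.
out, w = scaled_dot_product_attention([[0.0, 0.0]], K, V)
```

Because every query attends to every key, each token can draw on information from all patches, which is the global context modeling the text refers to.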

This article used an improved Vision Transformer that mainly included two modules: the linear projection of flattened patches and Transformer encoder. As shown in Figure 5, we put layer normalization between “MLP” and “Multi-Head Attention” to accelerate network convergence and prevent excessive data from affecting the processing efficiency.

3.1.3. Upsampling Decoder

The upsampling decoder was added to the network to obtain the final change detection results. To avoid the loss of detailed information in this process, we connected the feature information obtained by the last layer of convolution units and used the upsampling decoding block to decode the encoded features. We also concatenated the shallow and deep features: the features extracted from the two layers were taken as a whole by the concatenate2 operation (j = 2) and added to the decoding block. The results of the Transformer Encoder are feature1 and feature2. The specific process of the upsampling decoder is shown in Figure 6; the two are obtained in much the same way.

Figure 6.

Upsampling decoder.

3.1.4. The Model Details

The model structure mainly includes three parts: an improved ResNet-50 CNN structure for extracting feature maps layer by layer from the concatenated bitemporal SAR images; an improved ViT structure for learning from the input data to generate context-rich feature representations; and an upsampling decoding structure. The ResNet-50 introduced in the first stage included four layers; the ViT in the second stage had a hidden size of 768, 12 Transformer layers, and an MLP size of 3072.
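The hyperparameters listed above can be gathered into a small configuration object. This is only an organizational sketch: the class name `TransUNetSARConfig` and its field names are hypothetical, the values 768, 12, and 3072 are read here as hidden size, number of Transformer layers, and MLP dimension, and the tile size of 256 is taken from the dataset description.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransUNetSARConfig:
    """Hypothetical container for the hyperparameters reported in the text."""

    cnn_channels: tuple = (64, 256, 512, 1024)  # channel numbers after the CNN stages
    hidden_size: int = 768                      # Transformer token dimension
    num_transformer_layers: int = 12
    mlp_dim: int = 3072
    input_size: int = 256                       # sample size in pixels

    def mlp_expansion_ratio(self):
        """Ratio between the MLP width and the token dimension."""
        return self.mlp_dim // self.hidden_size


cfg = TransUNetSARConfig()
```

Under this reading, the MLP width is four times the hidden size, which matches the usual base-size Vision Transformer layout.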

4. Results

In this section, we first describe the datasets used in our experiments, including the acquisition method, processing method, and pixel size, along with the basic experimental settings and evaluation metrics. This is followed by an analysis of the results and a benchmark comparison, an ablation study, and finally an additional analysis.

4.1. Datasets and Parameter Settings

- (1) Beijing Datasets

The change detection dataset of building complex changes was obtained from ESA in GRD format and IW scanning mode. After acquiring the images, we performed geometric correction, image registration, and region cropping to obtain bitemporal images of 10,000 × 10,000 pixels. Then, we outlined the areas with obvious building changes in the bitemporal images and made them into a 10,000 × 10,000 pixel label, where the changed area was white and the unchanged area was black. Next, the bitemporal images and labels were cut into 256 × 256 pixel samples, and the samples without building changes were removed. The remaining samples were mirrored and rotated to form the change detection dataset, which contained 5000 training samples, 1000 test samples, and 1000 validation samples.

- (2) Qingdao Datasets

The change detection dataset in Qingdao was captured by the Sentinel-1 satellite. The dataset covers the urban area of Huanxiu Street, Chaohai Street, and Longshan Street near Qingdao City, Shandong Province. The dataset contains a pair of SAR images taken on 12 April 2017 and 16 April 2022, with change detection labels for the buildings. The size of each complete image is 35,154 × 21,177 pixels, and the spatial resolution is 10 m per pixel. The dataset was divided into non-overlapping 256 × 256 pixel images, and the invalid images and the labels without building changes were filtered out. Then, according to a fixed proportion, 4000 training samples, 800 validation samples, and 800 test samples were obtained.

- (3) Guangzhou Datasets

The Guangzhou change detection dataset consisted of 2099 pairs of C-band SAR images taken by Sentinel-1 satellites between 2017 and 2022. The spatial resolution of these images is around 10 m. Level-0, Level-1, and Level-2 data products are available to users. In this paper, the GRD product acquired in IW mode was used; the data were cut into 256 × 256 pixel blocks, and each block was then flipped and rotated by 90°, 180°, and 270°. The images were divided at a ratio of 3:1:1, finally giving 1260 training samples, 420 validation samples, and 419 test samples.
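The sample preparation steps shared by the three datasets (tiling, removing tiles without building changes, and mirror and rotation augmentation) can be sketched as follows. This is an illustration under stated assumptions rather than the authors' processing code: the function names are hypothetical, images are plain nested lists, and edge remainders smaller than one tile are simply dropped, which the text does not specify.

```python
def tile(image, size):
    """Cut a 2D nested list into non-overlapping size x size tiles (edge remainder dropped)."""
    h, w = len(image), len(image[0])
    return [
        [row[left:left + size] for row in image[top:top + size]]
        for top in range(0, h - size + 1, size)
        for left in range(0, w - size + 1, size)
    ]


def has_change(label_tile):
    """True if the label tile contains at least one changed (non-zero) pixel."""
    return any(v for row in label_tile for v in row)


def mirror(t):
    """Flip a tile horizontally."""
    return [row[::-1] for row in t]


def rotate90(t):
    """Rotate a tile 90 degrees clockwise."""
    return [list(col) for col in zip(*t[::-1])]


def augment(t):
    """Return the tile, its mirror image, and its 90, 180, and 270 degree rotations."""
    r90 = rotate90(t)
    r180 = rotate90(r90)
    r270 = rotate90(r180)
    return [t, mirror(t), r90, r180, r270]


# Toy 4 x 4 "image" and label, tiled into 2 x 2 samples.
img = [[r * 4 + c for c in range(4)] for r in range(4)]
label = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 1], [0, 0, 0, 0]]
tiles = tile(img, 2)
label_tiles = tile(label, 2)
kept = [t for t, l in zip(tiles, label_tiles) if has_change(l)]
samples = [a for t in kept for a in augment(t)]
```

With this tiling rule, a 10,000 × 10,000 pixel image yields 39 × 39 = 1521 candidate tiles of 256 × 256 pixels before filtering; the same geometric transform must be applied to both temporal images and the label so that they stay aligned.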

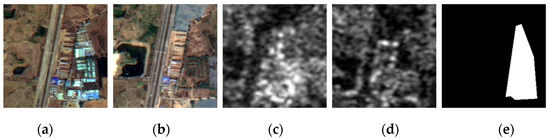

In order to ensure the accuracy of the label of SAR image samples, the change detection samples of urban areas used in this paper were selected after comparing the preprocessed high-resolution optical image with the SAR image with a registration error less than 5 pixels, which ensures that the label is indeed the change of urban buildings and the delineation of the change range is accurate. The specific comparison between optical and SAR images is shown in Figure 7.

Figure 7.

The specific comparison between the optical and SAR images. (a) First temporal optical image. (b) Second temporal optical image. (c) First temporal SAR image. (d) Second temporal SAR image. (e) Ground truth.

Our experiments were implemented in the PyTorch framework, using an NVIDIA GeForce RTX 3060 Ti GPU for training, validation, and testing. The model was optimized with the cross-entropy loss function and the Adam optimizer, with a learning rate of 1 × 10⁻⁴, a weight decay of 5 × 10⁻⁴, and a momentum of 0.9. The running time depended on the number of samples: training took about 8 h for a training set of 1000 samples, and testing took about 15 min for a set of 800 samples.
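To make the role of these hyperparameters concrete, the following NumPy sketch performs one Adam update with the stated learning rate and weight decay. Several points are assumptions rather than details from the paper: Adam has no parameter called momentum, so the value 0.9 is interpreted here as the first-moment coefficient `beta1`; `beta2` and `eps` are set to commonly used defaults; and weight decay is applied as an L2 term added to the gradient.

```python
import numpy as np

def adam_step(param, grad, m, v, t,
              lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8, weight_decay=5e-4):
    """One Adam update. `t` is the 1-based step count; `m` and `v` are the
    running first and second moment estimates."""
    grad = grad + weight_decay * param        # L2 weight decay (assumption)
    m = beta1 * m + (1 - beta1) * grad        # first moment (momentum-like)
    v = beta2 * v + (1 - beta2) * grad ** 2   # second moment
    m_hat = m / (1 - beta1 ** t)              # bias correction
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v

# Toy parameter and gradient (hypothetical values).
w = np.array([1.0])
g = np.array([0.5])
w_new, m1, v1 = adam_step(w, g, np.zeros(1), np.zeros(1), t=1)
print(w_new)
```

On the first step the bias-corrected ratio is close to 1, so the parameter moves by roughly the learning rate regardless of the gradient scale, which is why a small rate such as 1 × 10⁻⁴ gives slow but stable updates.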

4.2. Evaluation Index

For model evaluation, precision (P), recall (R), intersection over union (IoU), and F1-score were used. These are calculated as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$IoU = \frac{TP}{TP + FP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}$$

where TP is the number of actual positives predicted to be positive, FN is the number of actual positives predicted to be negative, FP is the number of actual negatives predicted to be positive, and TN is the number of actual negatives predicted to be negative. IoU and F1-score are comprehensive evaluation indices, which can better reflect the generalization ability of the model.
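As a worked example, the four indices can be computed from pixel-wise counts as below. This is a plain-Python sketch that assumes the standard definitions for the changed class (for instance, IoU = TP / (TP + FP + FN)). The function names and the toy labels are illustrative, and returning 0.0 for an undefined ratio is a convention chosen here.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN for flat sequences of 0/1 labels (1 = changed)."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    return tp, fp, fn, tn

def change_metrics(tp, fp, fn):
    """Precision, recall, IoU and F1 of the changed class (0.0 if undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, iou, f1

# Eight toy pixels: 3 TP, 1 FP, 1 FN, 3 TN.
pred  = [1, 1, 1, 0, 0, 0, 1, 0]
truth = [1, 1, 0, 0, 0, 1, 1, 0]
tp, fp, fn, tn = confusion_counts(pred, truth)
p, r, iou, f1 = change_metrics(tp, fp, fn)
print(p, r, iou, f1)  # 0.75 0.75 0.6 0.75
```

TN does not enter any of the four indices, which is why they remain informative when unchanged pixels dominate the image.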

4.3. Comparative Methods

To verify the effectiveness of the model, we selected two unsupervised SAR image change detection algorithms, GarborPCANet and CWNN, and two optical image change detection algorithms, DDCNN and BIT_CD, as comparative methods.

GarborPCANet [37]: This method uses PCA filters and exploits the representative neighborhood features of each pixel. It is therefore more robust to speckle noise and produces less noisy change maps.

CWNN [38]: This change detection method is based on a convolutional wavelet neural network. It mainly addresses noise and limited samples: the dual-tree complex wavelet transform is used to reduce the influence of speckle noise, and a virtual sample generation scheme is used to compensate for the limited number of samples.

DDCNN [16]: This is an optical remote sensing image change detection network based on an attention mechanism and image difference.

BIT_CD [24]: This method uses a bitemporal image transformer (BIT) to model context efficiently in the spatio-temporal domain. The bitemporal images are represented by a small number of tokens, and a transformer encoder models the context among them. The learned context-rich tokens are then projected back to pixel space, where a transformer decoder uses them to refine the original features.

4.4. Dataset Evaluation

We used the SAR change detection datasets of Beijing, Qingdao, and Guangzhou to verify the efficacy of the TransUNet++SAR algorithm. The evaluation results on the validation set are given in Table 1, Table 2, and Table 3. Compared with the other change detection algorithms, TransUNet++SAR achieved a higher F1-score and IoU.

Table 1.

Accuracy metrics of the Beijing datasets.

Table 2.

Accuracy metrics of the Guangzhou datasets.

Table 3.

Accuracy metrics of the Qingdao datasets.

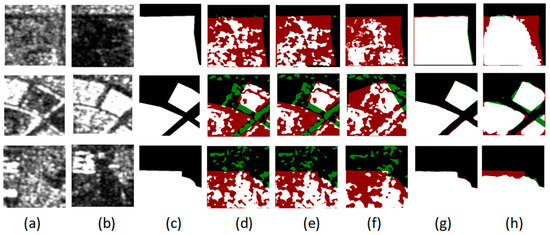

- (1) Beijing Datasets

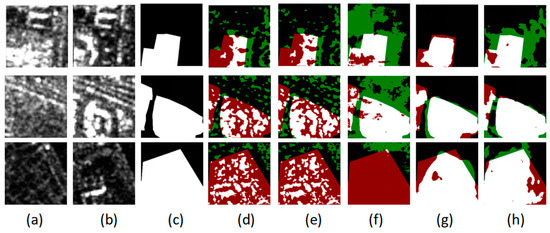

The SAR datasets of built-up areas contain multiple scenes, and three typical scenes were selected for visualization. Figure 8 shows the change detection results for the three building groups. According to the optical image, the first row of Figure 8 is the local change map of a large building. Comparing (a) with (b), it can be seen that after the building was demolished, the gray value became significantly smaller, and the change was accurately detected by our model. The second row of Figure 8 shows the local change of a square. In (a), the square is under construction, and in (b) it has been completed. The gray value of the building part increased significantly, and the change was also completely outlined by our model. The third row of Figure 8 is the local change map of a small building group. After part of the building group was removed, the corresponding gray value became smaller, and our model detected the change. The third row also contains some other changes, but they were not detected because they were not building changes. For changes in large buildings, the detection results of the optical algorithms were clearly better than those of the SAR algorithms, which were more susceptible to noise and false changes. The performance of TransUNet++SAR was close to that of BIT_CD, but the boundaries detected by TransUNet++SAR were closer to those of the ground truth. As shown in Figure 8, TransUNet++SAR had high detection accuracy for large-area building changes.

Figure 8.

Change detection results for the Beijing datasets. (a) Image one. (b) Image two. (c) Ground truth. (d) GarborPCANet. (e) CWNN. (f) DDCNN. (g) BIT_CD. (h) TransUNet++SAR. In the figure, black, white, red, and green represent the TN, TP, FN, and FP, respectively.
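The colour coding used in the result figures (black for TN, white for TP, red for FN, green for FP) can be reproduced from a predicted mask and a ground-truth mask. The sketch below uses NumPy; the function name `error_map` and the exact RGB values are assumptions, since the paper states only the colour names.

```python
import numpy as np

# RGB values for the four outcomes, following the colour names in the caption.
COLOURS = {
    "TN": (0, 0, 0),        # black
    "TP": (255, 255, 255),  # white
    "FN": (255, 0, 0),      # red
    "FP": (0, 255, 0),      # green
}

def error_map(pred, truth):
    """Build an H x W x 3 uint8 image that colours each pixel by outcome."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    out = np.zeros(pred.shape + (3,), dtype=np.uint8)  # all TN (black) to start
    out[pred & truth] = COLOURS["TP"]    # changed and detected
    out[~pred & truth] = COLOURS["FN"]   # changed but missed
    out[pred & ~truth] = COLOURS["FP"]   # detected but not changed
    return out

# 2 x 2 toy masks covering all four outcomes.
pred = [[1, 0],
        [1, 0]]
truth = [[1, 1],
         [0, 0]]
vis = error_map(pred, truth)
print(vis[0, 1])  # the missed change at row 0, column 1
```

Red regions therefore mark missed changes and green regions mark false alarms, which makes boundary errors easy to see at a glance.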

- (2) Guangzhou Datasets

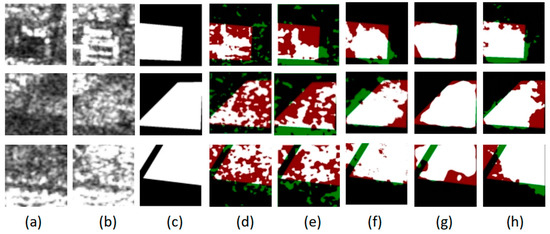

Compared with Beijing, the building density in Guangzhou is lower, and the building layout is not as neat and regular. Large buildings also change less than in the Beijing area. Limited by the conditions, only 263 SAR images of Guangzhou were collected, which were rotated and mirrored for training. The smaller number of training samples and the small scale of the changed buildings led to slightly lower accuracy than on the Beijing datasets. Although the detected boundaries were slightly smaller than those of the ground truth, both TransUNet++SAR and BIT_CD could roughly detect that a change had occurred. As shown in Figure 9, when the buildings in the last image of the Guangzhou datasets changed, the gray value of the SAR image did not change much, but our method still performed better than the other methods. The change outline of TransUNet++SAR was smoother, and BIT_CD was affected by spurious changes that TransUNet++SAR largely avoided. Therefore, TransUNet++SAR was still better than the other algorithms in this case.

Figure 9.

Change detection results for the Guangzhou datasets. (a) Image one. (b) Image two. (c) Ground truth. (d) GarborPCANet. (e) CWNN. (f) DDCNN. (g) BIT_CD. (h) TransUNet++SAR. In the figure, black, white, red, and green represent the TN, TP, FN, and FP, respectively.

- (3) Qingdao Datasets

We conducted experiments on the Qingdao datasets to further evaluate the effectiveness of the proposed method. As shown in Table 3, TransUNet++SAR achieved 96.51% and 90.61% in F1-score and IoU, respectively, and outperformed the compared methods. We selected the results for some large buildings, such as building groups and new built-up areas, for visualization, as shown in Figure 10. The figure shows that TransUNet++SAR detected the changes in the SAR images more accurately than the compared methods.

Figure 10.

Change detection results for the Qingdao datasets. (a) Image one. (b) Image two. (c) Ground truth. (d) GarborPCANet. (e) CWNN. (f) DDCNN. (g) BIT_CD. (h) TransUNet++SAR. In the figure, black, white, red, and green represent the TN, TP, FN, and FP, respectively.

4.5. Computational Complexity

Table 4 shows the computational complexity of each model. “Params” is the number of model parameters, which reflects the space complexity (the storage that the model occupies), and “Flops” is the number of floating-point operations, which reflects the time complexity and serves as a proxy for running time. As can be seen from the table, the two unsupervised methods, GarborPCANet and CWNN, had the smallest time and space complexity, but they also had the lowest accuracy. Among the supervised methods, DDCNN had the lowest computational complexity, but its accuracy was far below that of the other two supervised methods. Although the computational complexity of BIT_CD was smaller than that of our method, its accuracy was lower. On the Beijing dataset in particular, its P, R, F1, and IoU were lower than those of our method by 1.16%, 4.43%, 4.38%, and 9.79%, respectively.

Table 4.

Computational complexity of each model.
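To illustrate how the two quantities in the complexity table differ, the sketch below counts the parameters and the floating-point operations of a single 2D convolution layer. The layer sizes are hypothetical and are not taken from any of the compared models, and counting each multiply-accumulate as two operations is one common convention among several.

```python
def conv2d_params(c_in, c_out, k, bias=True):
    """Number of learnable parameters of a k x k convolution layer."""
    return c_out * (c_in * k * k + (1 if bias else 0))

def conv2d_flops(c_in, c_out, k, h_out, w_out):
    """Floating-point operations for one forward pass, counting each
    multiply-accumulate as 2 operations and ignoring the bias."""
    return 2 * c_in * k * k * c_out * h_out * w_out

# Hypothetical layer: 64 -> 128 channels, 3 x 3 kernel, 256 x 256 output.
params = conv2d_params(64, 128, 3)
flops = conv2d_flops(64, 128, 3, 256, 256)
print(params)  # 73856
print(flops)   # 9663676416
```

The parameter count does not depend on the image size, whereas the operation count grows with the output resolution, so a model can be small in storage and still expensive to run on large tiles.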

5. Discussion

We compared the accuracy indices of our model with those of the other four models. On the Beijing, Guangzhou, and Qingdao datasets, the proposed model achieved the best precision, recall, IoU, and F1-score. On the Beijing datasets, the precision, recall, F1-score, and IoU were 98.76%, 96.00%, 97.36%, and 96.67%, respectively, exceeding those of the baseline method GarborPCANet by 44.08%, 57.70%, 42.26%, and 58.65%, respectively, and those of the similar model BIT_CD by 1.16%, 4.43%, 4.38%, and 9.79%, respectively. On the Guangzhou dataset, although the accuracy indices of our method were lower, it still produced more accurate results than the other methods, with a good tradeoff between recall and precision. On the Qingdao dataset, our model was still at least 1% more accurate than the other models. From the quantitative results on the different datasets, we conclude that the proposed model delineates change boundaries clearly, is less susceptible to noise than traditional methods, and achieves the highest change detection accuracy.

6. Conclusions

In this paper, we proposed a network architecture for change detection in bitemporal SAR images (TransUNet++SAR). The model incorporates the Visual Transformer module into the network architecture as part of the encoder to make up for the shortcomings of CNNs in learning global context and long-range dependencies. This encoder structure, combined with UNet++, enhances the feature extraction of the underlying structure, captures details, and improves the delineation of the edges of changed regions.

In addition, a series operation, instead of a parallel operation, was used at the input of the CNN: each layer was processed one by one, and the results were then connected and fed into the encoder structure. Compared with traditional unsupervised SAR image change detection, this model reduced the influence of image noise on CNN feature extraction and improved the overall feature extraction ability. As a result, our model can avoid the influence of noise and spurious changes, which is also a major advantage of supervised SAR image change detection over unsupervised methods.

The experimental results showed that our network structure is effective for SAR datasets. TransUNet++SAR showed good results and achieved good consistency on the SAR image datasets with different building distribution forms such as the Guangzhou dataset, Qingdao dataset, and Beijing dataset.

In future work, we will continue to study the adaptability of our model to higher resolution SAR images, such as Gaofen-3 images, and adjust the model structure according to the image characteristics, so that the model can extract more information and become more applicable to other SAR images.

Author Contributions

Conceptualization, Y.D. and Q.L; Methodology, Y.D.; Software, Y.D. and Q.L.; Validation, Y.D. and F.Z.; Writing—original draft preparation, Y.D.; Writing—review and editing, Y.D. and R.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key Technologies Research and Development Program of China under Grant 2022YFB3904101, and in part by the National Natural Science Foundation of China under Grants U22A20568, 42071444, and 42101444.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the anonymous reviewers and members of the editorial team for their comments and contributions.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Lu, D.; Mausel, P.; Brondizio, E.; Moran, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2401.

2. Bruzzone, L.; Prieto, D.F. An adaptive semiparametric and context-based approach to unsupervised change detection in multitemporal remote-sensing images. IEEE Trans. Image Process. 2002, 11, 452–466.

3. Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change Detection in Synthetic Aperture Radar Images Based on Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138.

4. Gao, Y.; Gao, F.; Dong, J.; Li, H.-C. SAR Image Change Detection Based on Multiscale Capsule Network. IEEE Geosci. Remote Sens. Lett. 2021, 18, 484–488.

5. Li, Y.C.; Chen, Y.; Jiao, L.; Zhou, L.; Shang, R. A Deep Learning Method for Change Detection in Synthetic Aperture Radar Images. IEEE Trans. Geosci. Remote Sens. 2019, 19, 1–13.

6. Bazi, Y.; Bruzzone, L.; Melgani, F. Automatic Identification of the Number and Values of Decision Thresholds in the Log-Ratio Image for Change Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2006, 3, 349–353.

7. Geng, J.; Ma, X.; Zhou, X.; Wang, H. Saliency-Guided Deep Neural Networks for SAR Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7365–7377.

8. Inglada, J.; Grégoire, M. A new statistical similarity measure for change detection in multitemporal SAR images and its extension to multiscale change analysis. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1432–1445.

9. Moser, G.; Serpico, S.B. Generalized minimum-error thresholding for unsupervised change detection from SAR amplitude imagery. IEEE Trans. Geosci. Remote Sens. 2005, 5, 2121–2124.

10. Bazi, Y.; Bruzzone, L.; Melgani, F. An unsupervised approach based on the generalized Gaussian model to automatic change detection in multitemporal SAR images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 874–887.

11. Krinidis, S.; Chatzis, V. A Robust Fuzzy Local Information C-Means Clustering Algorithm. IEEE Trans. Image Process. 2010, 19, 1328–1337.

12. Gao, F.; Dong, J.; Li, B.; Xu, Q.; Xie, C. Change detection from synthetic aperture radar images based on neighborhood-based ratio and extreme learning machine. J. Appl. Remote Sens. 2016, 10, 2963–2972.

13. Chen, B. Change Detection in SAR Images with Deep Learning. Ph.D. Thesis, Xidian University, Xi’an, China, 2020.

14. Goodfellow, I.; Warde-Farley, D.; Mirza, M.; Courville, A.; Bengio, Y. Maxout networks. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 26 May 2013; Volume 23, pp. 1319–1327.

15. Ponti, M.A.; Ribeiro, L.S.; Nazare, T.S.; Bui, T.; Collomosse, J. Everything you wanted to know about deep learning for computer vision but were afraid to ask. In Proceedings of the 2017 30th SIBGRAPI Conference on Graphics, Patterns and Images Tutorials (SIBGRAPI-T), Niteroi, Brazil, 17–18 October 2017; Volume 14, pp. 17–41.

16. Peng, X.; Zhong, R.; Li, Z.; Li, Q. Optical Remote Sensing Image Change Detection Based on Attention Mechanism and Image Difference. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7296–7307.

17. Liu, G. Research on SAR Image Change Detection Based on Deep Learning. Ph.D. Thesis, Chongqing University, Chongqing, China, 2020.

18. Liu, T.; Li, Y.; Xu, L. Dual-channel convolutional neural network for change detection of multitemporal SAR images. In Proceedings of the 2016 International Conference on Orange Technologies (ICOT), Melbourne, VIC, Australia, 18–20 December 2016; pp. 60–63.

19. Liu, F.; Jiao, L.; Tang, X.; Yang, S.; Ma, W.; Hou, B. Local Restricted Convolutional Neural Network for Change Detection in Polarimetric SAR Images. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 818–833.

20. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30 (NIPS 2017); Mit and Morgan Kaufmann: Long Beach, CA, USA, 2017; pp. 5998–6008.

21. Chen, C.-F.R.; Fan, Q.; Panda, R. CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 347–356.

22. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

23. Perera, M.V.; Bandara, W.G.C.; Valanarasu, J.M.J.; Patel, V.M. Transformer-Based SAR Image Despeckling. arXiv 2022, arXiv:2201.09355.

24. Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection With Transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

25. Li, Q.; Zhong, R.; Du, X.; Du, Y. TransUNetCD: A Hybrid Transformer Network for Change Detection in Optical Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 35–40.

26. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

27. Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Cham, Switzerland, 2018; Volume 13, pp. 3–11.

28. Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.

29. Chen, H.; Wu, C.; Du, B.; Zhang, L.; Wang, L. Change Detection in Multisource VHR Images via Deep Siamese Convolutional Multiple-Layers Recurrent Neural Network. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2848–2864.

30. Papadomanolaki, M.; Verma, S.; Vakalopoulou, M.; Gupta, S.; Karantzalos, K. Detecting Urban Changes with Recurrent Neural Networks from Multitemporal Sentinel-2 Data. In Proceedings of the IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 214–217.

31. Alcantarilla, P.F.; Stent, S.; Ros, G.; Arroyo, R.; Gherardi, R. Street-view change detection with deconvolutional networks. Auton. Robot. 2018, 42, 1301–1322.

32. Lebedev, M.A.; Vizilter, Y.V.; Vygolov, O.V.; Knyaz, V.A.; Rubis, A.Y. Change Detection in Remote Sensing Images Using Conditional Adversarial Networks. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 565–571.

33. Benedek, C.; Sziranyi, T. Change Detection in Optical Aerial Images by a Multilayer Conditional Mixed Markov Model. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3416–3430.

34. Chen, H.; Shi, Z. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Remote Sens. 2020, 12, 1662.

35. Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

36. Zhang, H.; Gong, M.; Zhang, P.; Su, L.; Shi, J. Feature-Level Change Detection Using Deep Representation and Feature Change Analysis for Multispectral Imagery. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1666–1670.

37. Gao, F.; Dong, J.; Li, B.; Xu, Q. Automatic Change Detection in Synthetic Aperture Radar Images Based on PCANet. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1792–1796.

38. Gao, F.; Wang, X.; Gao, Y.; Dong, J.; Wang, S. Sea Ice Change Detection in SAR Images Based on Convolutional-Wavelet Neural Networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1240–1244.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).