1. Introduction

With the rapid development of imaging technology, remote sensing images have attracted growing attention and are applied in an increasing number of fields. Hyperspectral images (HSIs) simultaneously provide spatial and spectral information on land cover targets. Because of these characteristics, hyperspectral images are widely used in many remote sensing applications, such as medicine [1], agriculture [2], food [3], forest monitoring, and urban management [4]. To support these applications, several tasks related to hyperspectral images have been developed in recent years, such as hyperspectral image classification [5], hyperspectral image unmixing [6], and hyperspectral image anomaly detection [7,8]. Hyperspectral image classification is considered a basic task in which each sample of the hyperspectral image is assigned a semantic label. Its most important aim is to effectively extract spatial-spectral features and design a classification module.

To obtain better classification performance, researchers have made continuous efforts over the past decades. Initially, hyperspectral image classification relied on machine learning classifiers, such as decision tree [9], random forest [10], support vector machine [11], and sparse representation [12]. However, these pixel-level classification methods did not reach a satisfactory level of accuracy, because they rely more on spectral features than on spatial features [13]. To address this problem, classification methods based on both spectral and spatial features have been proposed. For example, the module feature extraction method was proposed in [14]; using this module, spatial information in hyperspectral images can be effectively extracted. Statistical models, such as conditional random field [15] and Markov random field [16], can also be applied to hyperspectral image classification, using both the spatial and the spectral information in hyperspectral images. Although classification performance is improved to a certain extent, these models rely heavily on hand-selected features. That is, most handcrafted feature methods may fail to represent the complex content of hyperspectral images, which is one of the reasons limiting the final classification performance.

Deep learning has shown excellent performance in the automatic extraction of nonlinear and hierarchical features. Computer vision tasks (such as image classification [17], semantic segmentation [18], and object detection [19]), natural language processing tasks (such as information extraction [20], machine translation [21], and question answering systems [22]), and other tasks have achieved significant development with the support of deep learning technology. Some representative feature extraction methods [23] include structural filtering-based methods [24,25,26], morphological contour-based methods [27], random field-based methods [28,29], sparse representation-based methods [30], and segmentation-based methods [31]. With the development of artificial intelligence, researchers have gradually introduced deep learning technology into the field of remote sensing [32] and achieved good classification results. In [33], a deep belief network (DBN) was used for feature extraction and classification of hyperspectral images. In [34], the features of hyperspectral images were extracted using a stacked autoencoder (SAE). However, the inputs of DBN and SAE networks are pixel-level spectral vectors that cannot use spatial information, so classification performance still has considerable room for improvement.

Other networks can classify hyperspectral images using both spectral and spatial information, e.g., ResNet [35], CapsNet [36], DenseNet [37], GhostNet [38], and the dual-branch network [39]. ResNet can better combine the shallow features of hyperspectral images, while DenseNet can better combine the deep features, and hyperspectral images are then classified accordingly [40,41]. In the hyperspectral image classification task, CapsNet can capture the relationship between different spectral bands and the similarity between different spatial positions [42]. GhostNet uses a small number of convolutions to extract the spatial and spectral features of the input hyperspectral image, applies linear transformations to the extracted features, and finally generates the feature map through concatenation to obtain the classification results. Owing to its special structure, the dual-branch network is well suited to exploring the spectral/spatial features of hyperspectral images so as to classify them effectively [43]. In addition to the above methods, there are many newer hyperspectral image classification methods. For example, RNN [44] and LSTM [45] were applied after treating continuous spectral bands as temporal data. In [46], a hyperspectral image classification network combining a fast dynamic graph convolution network and a CNN (FDGC) was proposed. This network extracts the inherent structural information of each part using the dynamic graph convolution module, which largely avoids the large memory consumption of the adjacency matrix in semisupervised graph convolutional neural networks. In [47], an HSI classification model based on a graph convolutional neural network was presented. It can extract feature pixels from local spectral/spatial features, preserve the specific pixels used in classification, and remove redundant pixels.

Attention mechanisms have received considerable attention in recent years because they can capture important spectral and spatial information. Many attention-based methods have been developed for hyperspectral image classification [48]. However, deep-learning-based methods still leave considerable room for improvement. Firstly, the structure of some networks is very complex, and their number of parameters is very large, which makes them difficult to train with few training samples. Secondly, hyperspectral images contain complex spectral and spatial features. Not only is global information very important, but local information, such as spatial information and spectral band information, is also very important for classification.

The dual-branch dual-attention network (DBDA) was proposed in [49]. One branch uses channel attention to obtain spectral information, while the other branch uses a spatial attention module to extract spatial information. When a 3D-CNN is used to classify hyperspectral images, spectral/spatial features can be extracted as a whole. For example, Chen et al. proposed a CNN-based structure to extract deep features while capturing spatial/spectral features. Spectral and spatial information can also be extracted separately and classified after fusion. For example, a three-layer convolutional neural network architecture was proposed in [50]. This architecture extracts the spatial and spectral information of hyperspectral images layer by layer from shallow to deep, fuses the extracted spatial-spectral information, and finally classifies and optimizes the fused information. In [51], a spatial residual network was proposed. Its spatial residual module and spectral residual module continuously learn and identify rich spatial-spectral information in hyperspectral images, which can greatly reduce overfitting and improve the network operation speed. An end-to-end fast dense spectral-spatial convolution (FDSSC) network for hyperspectral image classification was proposed in [52], which can reduce dimensionality. This network uses convolution kernels of different sizes to effectively extract spectral/spatial information. A double-branch multi-attention network (DBMA) was proposed in [53]. In this network, multiple attention modules are used to extract spatial-spectral information, and the spatial and spectral information captured by the two branches is fused before classification. An attention multibranch CNN architecture based on an adaptive region search (RS-AMCNN) was proposed in [54]. If one or more windows are used as the input of hyperspectral images, contextual information can be lost; RS-AMCNN effectively avoids this loss and improves classification accuracy. In [55], an effective transmission method was proposed to classify hyperspectral images. This method mainly projects data from different sensors and with different numbers of spectral bands into the spectral space of hyperspectral images, which ensures that the relative positions of the spectral bands are aligned. The network uses a hierarchical deep structure, which ensures that deep and shallow features are effectively extracted, so that good classification results can be obtained even if the network is deep. In [56], a dense spectral-spatial CNN framework with a feedback attention mechanism (FADCNN) was proposed, which uses compact connections to combine spectral-spatial features and extract sufficient information.

A hyperspectral image classification method based on multiscale superpixels and a guided filter (MSS-GF) was proposed in [57], which can capture local spatial information at different scales in different regions. The content of HSIs is rich and complex, many different materials have similar texture characteristics, and the large amount of computation prevents many CNN modules from reaching their full performance. Standard CNNs cannot adequately obtain spectral/spatial information from hyperspectral images because of the potential redundancy and noise in these images.

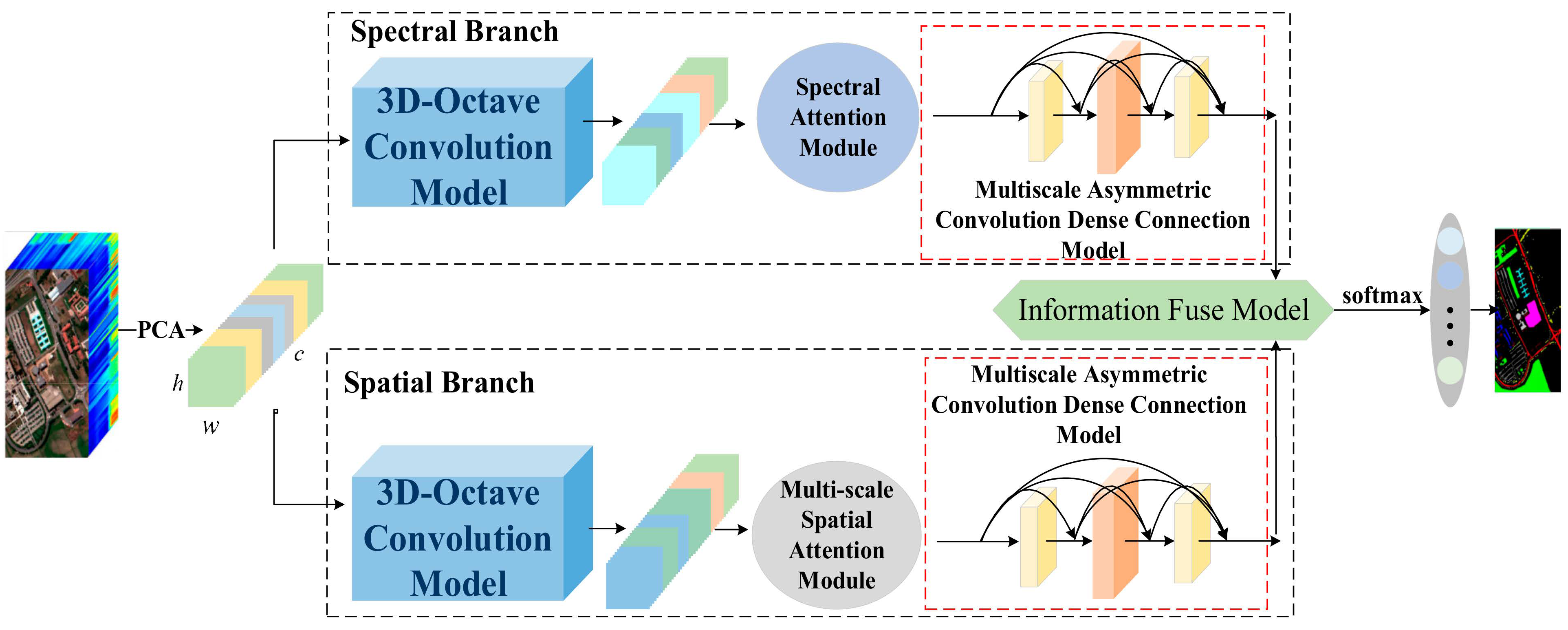

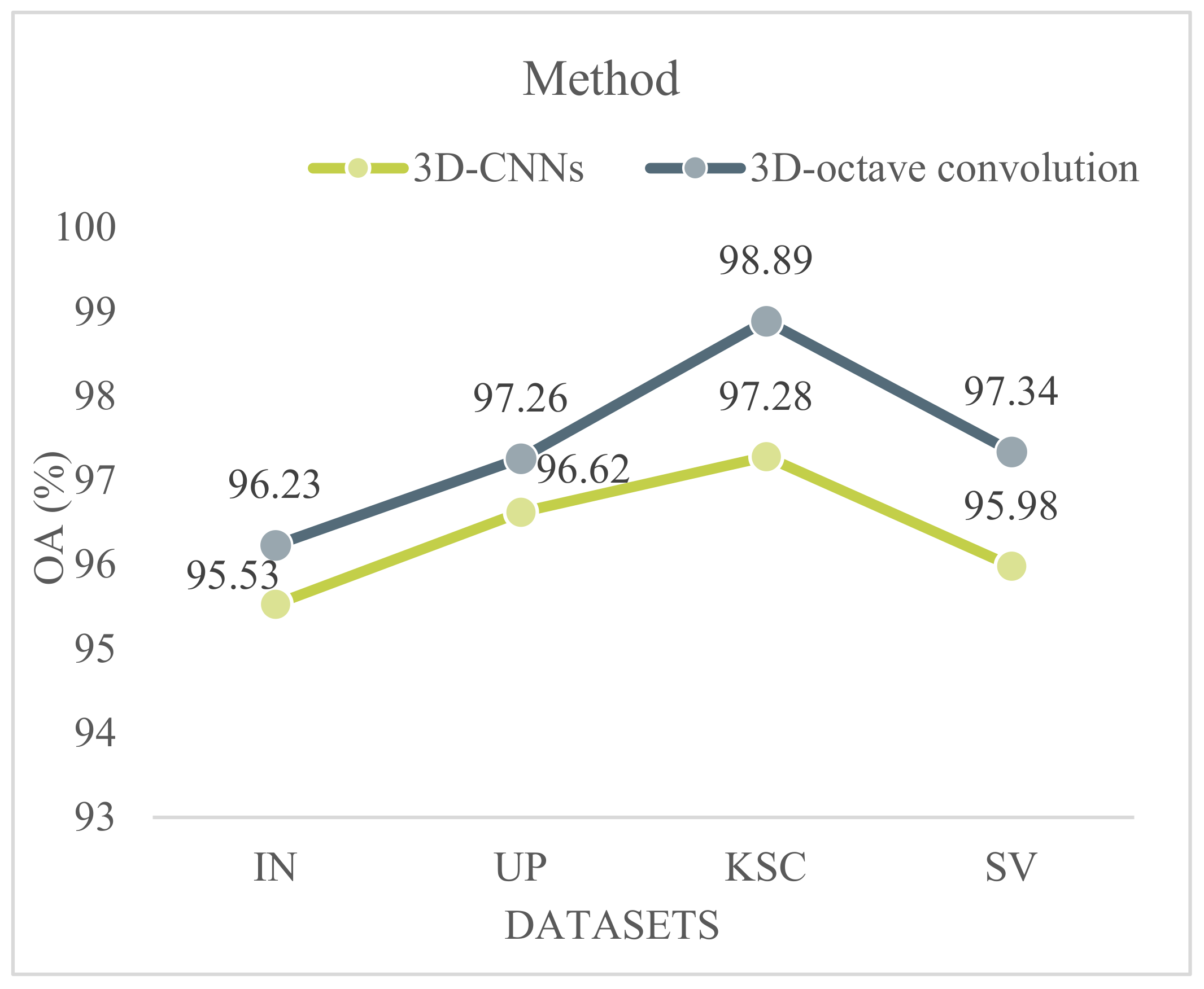

To solve these problems and improve classification performance, this paper proposes a new hyperspectral image classification method based on a combination of three-dimensional octave convolution (3D-OCONV), a three-dimensional multiscale spatial attention module (3D-MSSAM), and a dense connection structure of three-dimensional asymmetric convolution (DC-TAC). In the spectral branch, a 3D-OCONV module with few parameters captures the spectral information of hyperspectral images and is followed by a spectral attention mechanism, which highlights important spectral features. Then, three sets of DC-TAC are used to extract spectral information at different scales. In the spatial branch, the 3D-OCONV module is also used to obtain spatial information, and the 3D-MSSAM then extracts spatial information at different scales and in different regions. Next, three sets of DC-TAC are used to extract spatial features. At the end of the network, an interactive information fusion module fuses the features captured by the spectral and spatial branches.

The main contributions of this paper are as follows.

- (1)

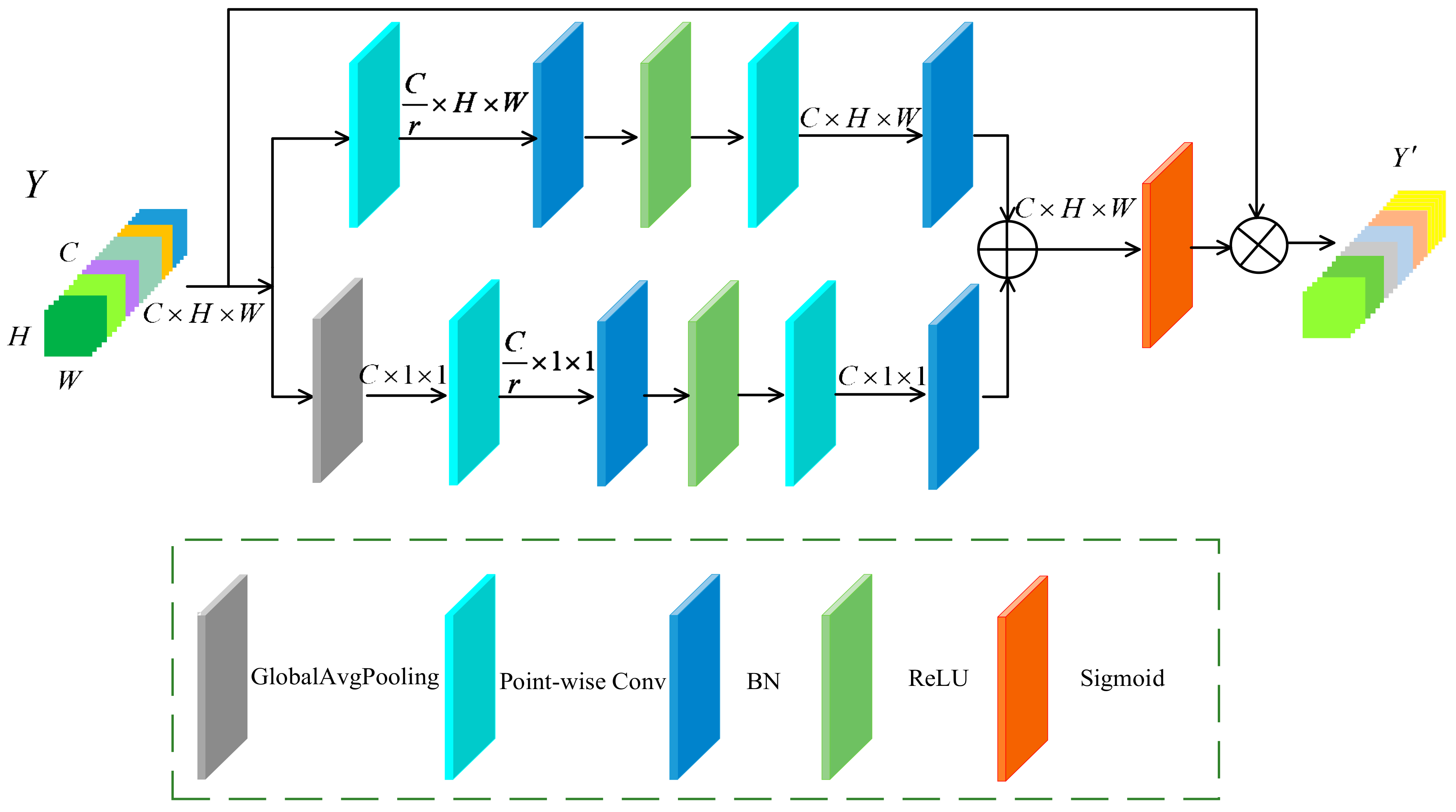

A three-dimensional multiscale spatial attention module (3D-MSSAM) is developed to learn the spatial features of hyperspectral images. Two branches with different scales are used to extract spatial information. Through this method, the spatial features of different scales can be fused to improve classification performance.

- (2)

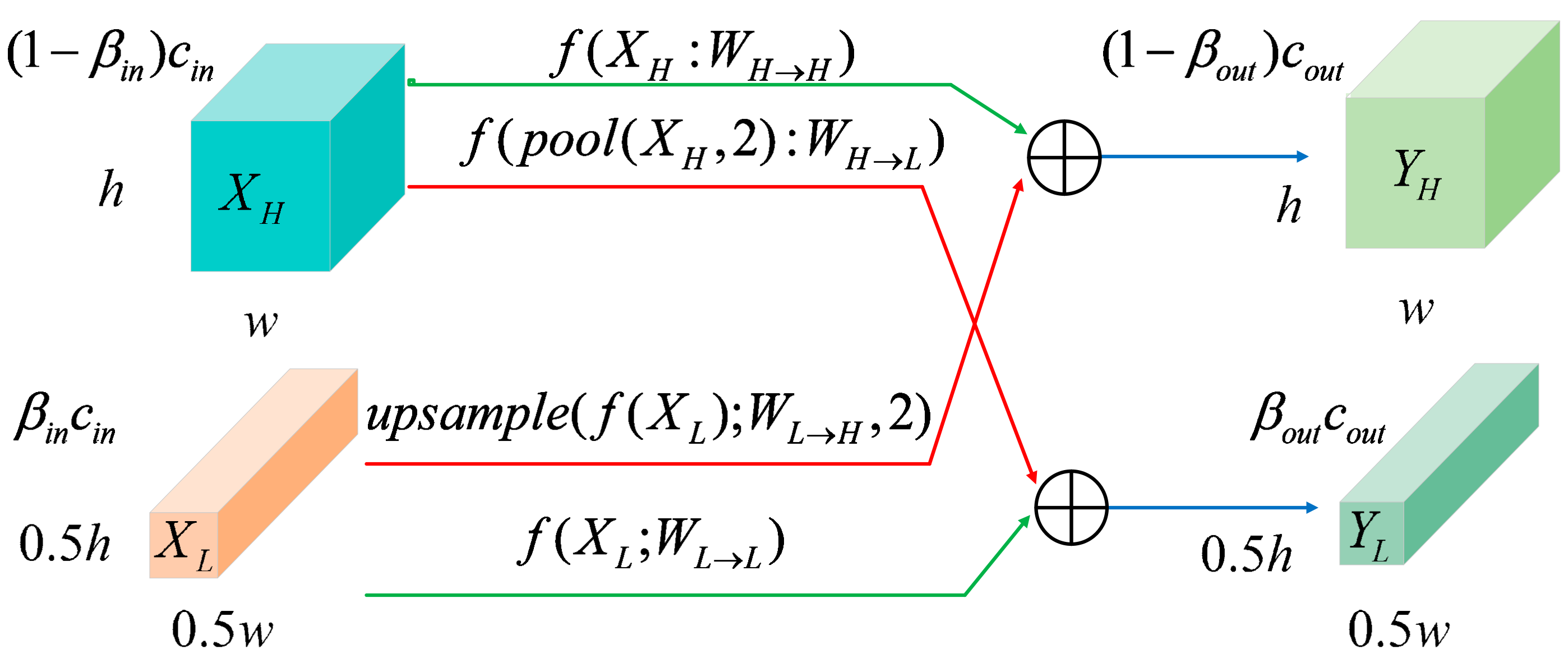

The proposed network adopts a double-branch structure and introduces a new three-dimensional octave convolution (3D-OCONV) module into each branch. It fuses the information between high and low frequencies so as to further improve the representation ability of features. The module has fewer parameters and can improve the classification performance of the network.

- (3)

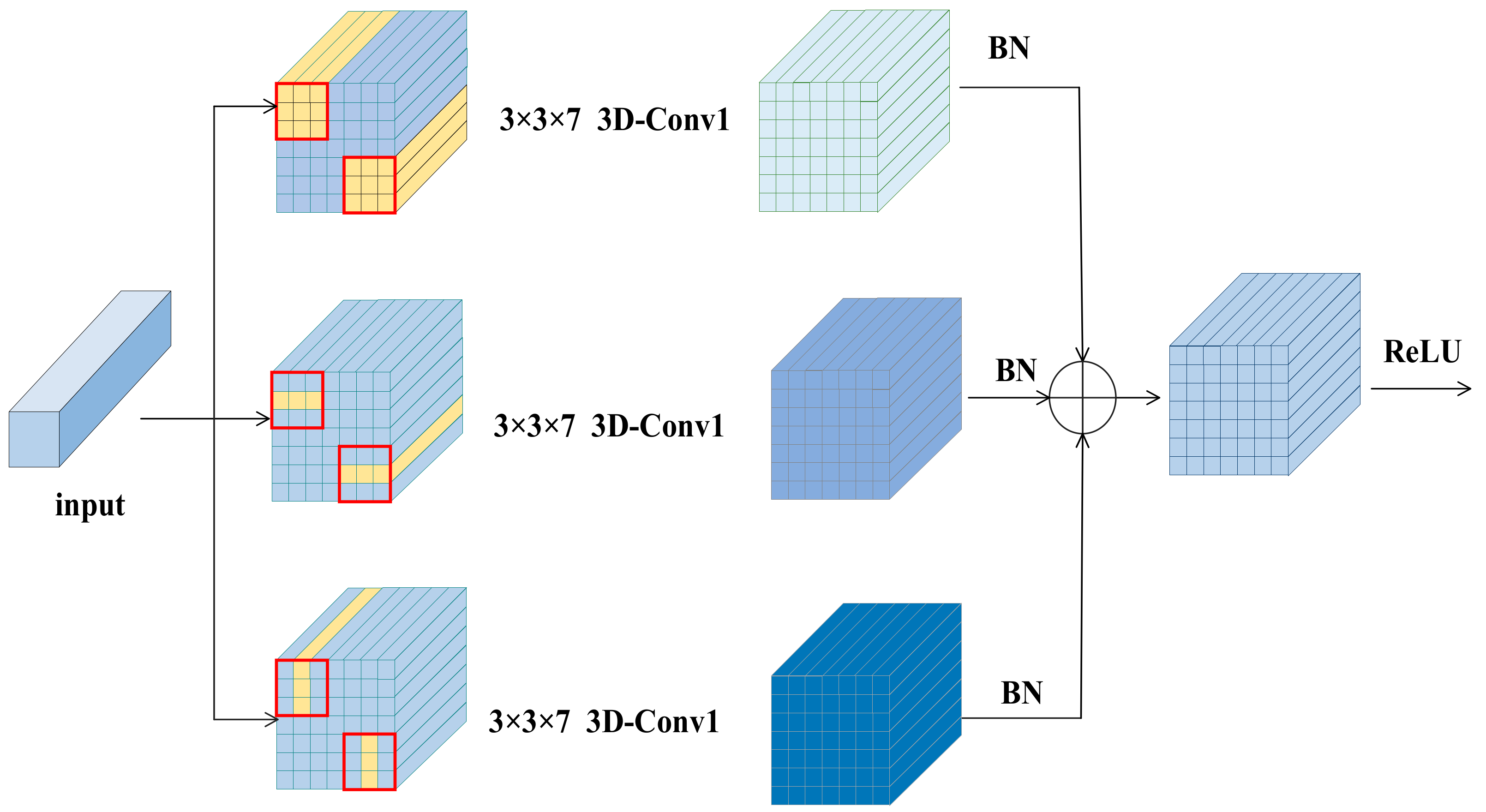

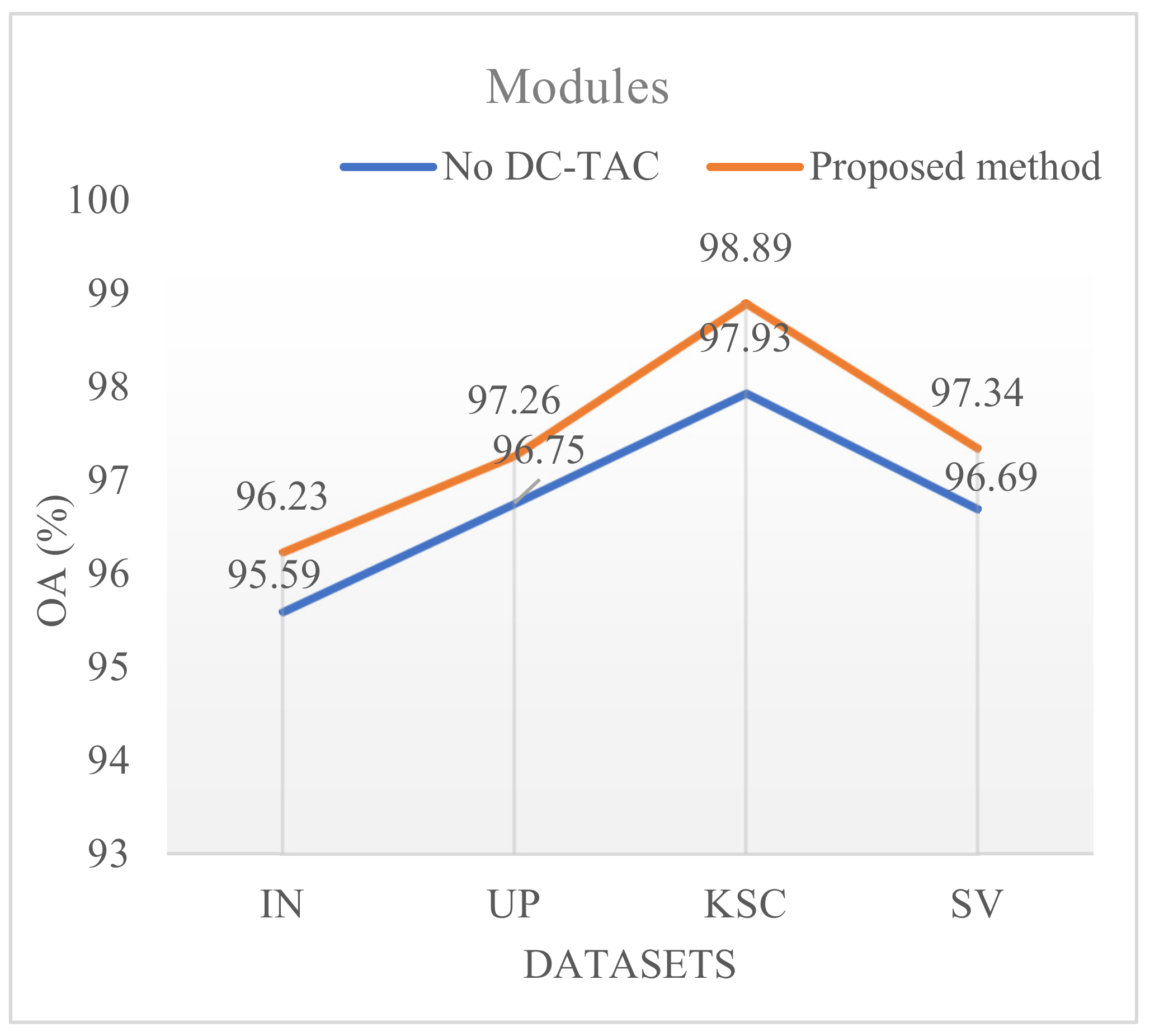

A dense connection structure of three-dimensional asymmetric convolution (DC-TAC) is designed. The dense connection uses three groups of 3D asymmetric convolutions with different scales, which can extract spatial and spectral features from horizontal, vertical, and overall perspectives. Moreover, to improve classification performance and reduce the number of parameters, group convolution is adopted in the asymmetric convolution. In this way, features are fully extracted, which benefits information fusion and thus improves the final classification performance.

- (4)

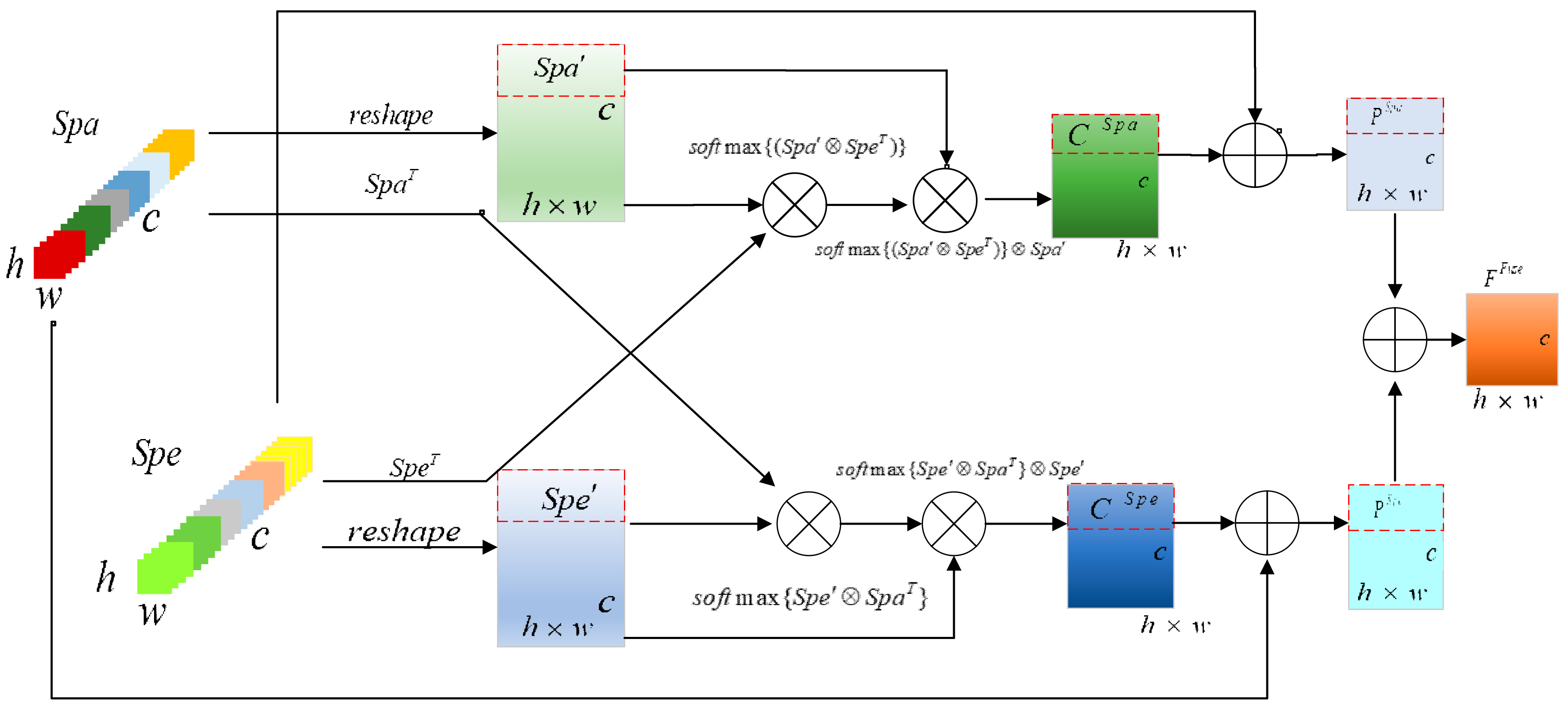

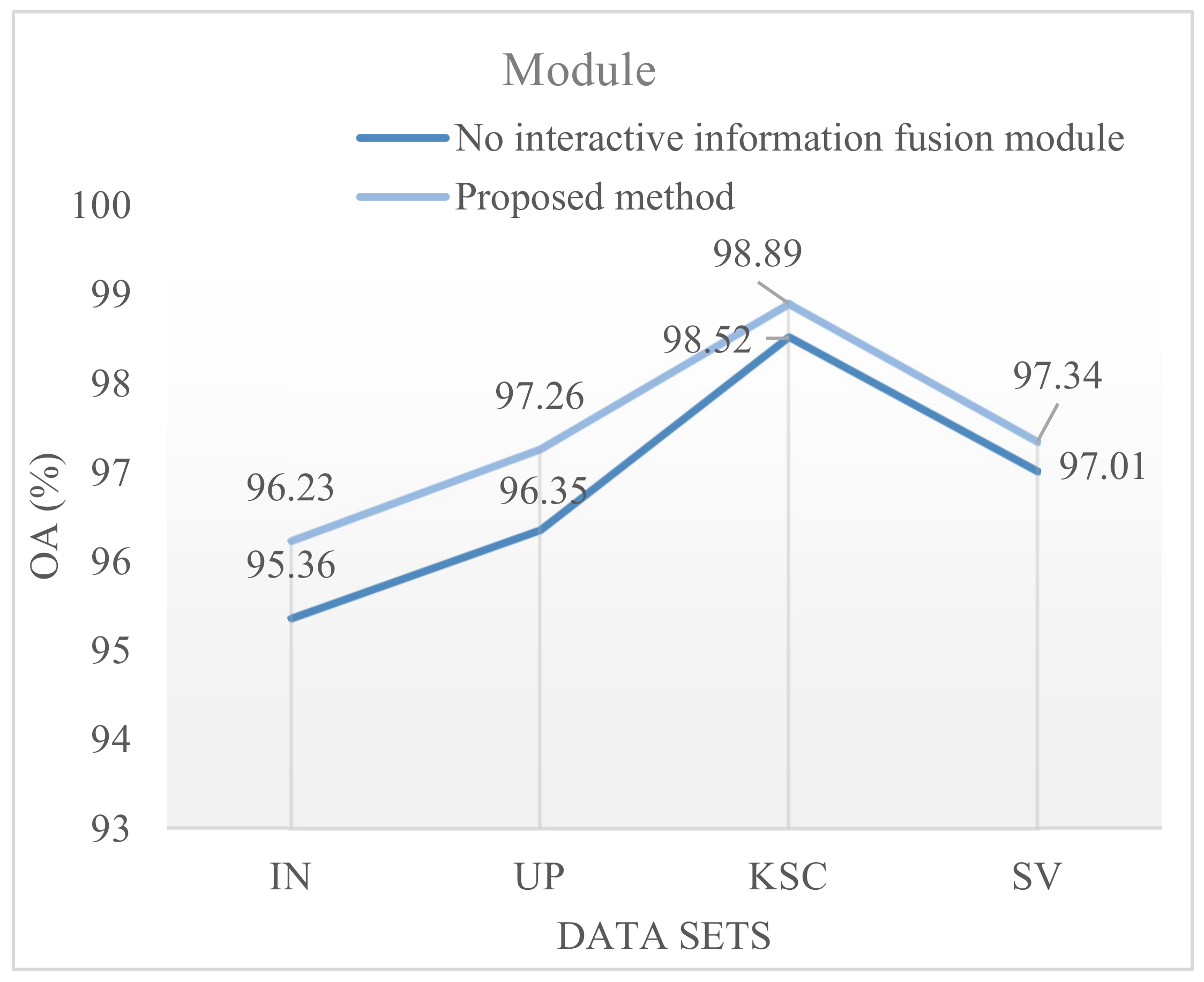

An interactive information fusion module is presented that can fuse the extracted spectral information and spatial information in an interactive way. We first supplement the spatial information extracted by the spatial branch with the spectral information extracted by the spectral branch, and then fuse the complementary information. Finally, the fused information is used for the final classification.
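As a rough illustration of why asymmetric and group convolutions reduce the number of parameters, as stated in contribution (3), the following sketch counts the weights of a 3D convolution layer. The channel width (24), the 3 × 3 × 3 reference kernel, its three one-dimensional factors, and the group number (4) are illustrative assumptions and are not taken from the proposed network.

```python
def conv3d_weight_count(c_in, c_out, kernel, groups=1, bias=False):
    """Count the learnable parameters of a 3D convolution layer.

    Each output channel sees only c_in / groups input channels,
    so the weight tensor holds (c_in / groups) * c_out * kd * kh * kw values.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    kd, kh, kw = kernel
    weights = (c_in // groups) * c_out * kd * kh * kw
    return weights + (c_out if bias else 0)


# Hypothetical settings chosen only for this illustration.
channels = 24
asym_kernels = [(1, 1, 3), (1, 3, 1), (3, 1, 1)]

# One full 3 x 3 x 3 convolution.
full = conv3d_weight_count(channels, channels, (3, 3, 3))

# Three asymmetric convolutions applied in place of the full kernel.
asymmetric = sum(conv3d_weight_count(channels, channels, k) for k in asym_kernels)

# The same asymmetric convolutions with group convolution (groups=4).
grouped = sum(
    conv3d_weight_count(channels, channels, k, groups=4) for k in asym_kernels
)

print(full, asymmetric, grouped)  # 15552 5184 1296
```

Under these assumed settings, the asymmetric factorization needs one third of the weights of the full kernel, and grouping divides the count by the number of groups again.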

The remainder of this article is arranged as follows: the proposed method is described in detail in Section 2; the experimental results and analysis are given in Section 3; the proposed method is discussed in Section 4; and some conclusions are given in Section 5.

3. Experiment

3.1. Datasets

The four datasets used in the experiment, as well as the true image and false color image of the four datasets and the corresponding category information, are shown in Table 1, Table 2, Table 3 and Table 4.

- (1)

Indian Pines (IN): As shown in Table 1, this was the earliest experimental dataset used for hyperspectral image classification. In 1992, the Indian Pines test site in Indiana was imaged using the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS). The image is 145 × 145 pixels, with a spatial resolution of about 20 m. To facilitate the experiment, 200 bands were retained, and the remaining unnecessary bands were eliminated. The dataset contains 21,025 pixels, of which 10,249 are ground-object pixels and 10,776 are background pixels. There are 16 unevenly distributed categories in the image.

- (2)

Pavia University (UP): The UP dataset is shown in Table 2. The UP dataset is part of the hyperspectral data acquired by the German airborne Reflective Optics System Imaging Spectrometer (ROSIS), which was used in 2003 to image the city of Pavia, Italy. The spectral imager recorded 115 bands; 12 bands affected by noise were eliminated, leaving 103 available bands. The dataset contains nine categories, such as trees, asphalt pavement, and bricks.

- (3)

Kennedy Space Center (KSC): As shown in Table 3, the KSC dataset was collected on 23 March 1996 at the Kennedy Space Center in Florida by the NASA AVIRIS (Airborne Visible/Infrared Imaging Spectrometer) instrument. A total of 224 bands were collected. After removing the bands with water absorption and a low signal-to-noise ratio, 176 bands were left for analysis. There are 13 categories in the dataset representing various types of land cover in the environment.

- (4)

Salinas Valley (SV): The SV dataset is shown in Table 4. The SV dataset comprises an image taken by the AVIRIS imaging spectrometer over Salinas Valley, California, USA. The SV dataset initially had 224 bands; after removing unnecessary bands, 204 bands remained for use. The size of the image is 512 × 217, and the number of pixels in the image is 111,104. After removing 56,975 background pixels, 54,129 pixels remained for classification. These pixels are classified into 16 categories, such as fallow cultivation and celery.
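The pixel counts quoted for the IN and SV datasets can be checked with a few lines of arithmetic. The sketch below is only a consistency check on the numbers stated in the text; the dictionary layout and function names are hypothetical, and the 145 × 145 size of the IN scene is an assumption that matches the 21,025 pixels reported.

```python
# Pixel statistics as quoted in the dataset descriptions.
# The 145 x 145 size of IN is assumed; it matches the reported 21,025 pixels.
datasets = {
    "IN": {"height": 145, "width": 145, "labeled": 10249, "background": 10776},
    "SV": {"height": 512, "width": 217, "labeled": 54129, "background": 56975},
}


def total_pixels(info):
    """Total number of pixels in the scene."""
    return info["height"] * info["width"]


def is_consistent(info):
    """Check that labeled and background pixels add up to the full scene."""
    return total_pixels(info) == info["labeled"] + info["background"]


for name, info in datasets.items():
    share = info["labeled"] / total_pixels(info)
    print(name, total_pixels(info), is_consistent(info), f"{share:.1%} labeled")
```

In both scenes, slightly less than half of the pixels carry a class label, so the stated training percentages apply to a much smaller pool than the full image.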

3.2. Experimental Setup and Evaluation Criteria

In the experiment, we set the learning rate to 0.0005. The hardware platform was an AMD Ryzen 7 4800H with Radeon Graphics (2.90 GHz), an NVIDIA GeForce RTX 2060 GPU, and 16 GB of memory. The software environment was CUDA 10.0, PyTorch 1.2.0, and Python 3.7.4. For the module proposed in this paper, the input data size of different datasets was set to , where N is the number of bands in the dataset. Furthermore, 3%, 0.5%, 0.4%, and 5% of the data were randomly selected from the IN, UP, KSC, and SV datasets, respectively, as training samples, while the remaining data were used as test samples.
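A random training/test split of this kind might be sketched as follows. The text does not say whether samples were drawn per class, so the per-class (stratified) sampling, the label 0 for background, the minimum of one training sample per class, and the function name `stratified_split` are all assumptions made for illustration.

```python
import random
from collections import defaultdict

# Training fractions quoted in the text for each dataset.
TRAIN_FRACTION = {"IN": 0.03, "UP": 0.005, "KSC": 0.004, "SV": 0.05}


def stratified_split(labels, fraction, seed=0, background=0):
    """Randomly split labeled pixel indices into training and test sets.

    Assumptions: sampling is done per class, background pixels are
    skipped, and every class keeps at least one training sample.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        if label != background:
            by_class[label].append(index)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        n_train = max(1, round(fraction * len(indices)))
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])
    return train, test


# Toy label vector: 50 background pixels and three classes of unequal size.
toy_labels = [0] * 50 + [1] * 200 + [2] * 100 + [3] * 10
train_idx, test_idx = stratified_split(toy_labels, TRAIN_FRACTION["IN"], seed=42)
print(len(train_idx), len(test_idx))
```

Keeping at least one sample per class matters for small classes: with a 3% fraction, the 10-pixel class in the toy example would otherwise receive no training sample.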

Overall accuracy (OA), average accuracy (AA), and the Kappa coefficient were used to comprehensively evaluate the proposed method. Experiments were performed on four datasets: IN, UP, KSC, and SV. We set the number of experiment iterations to 200, the batch size to 16, and the number of repetitions per experiment to 10. The average over the repeated runs was taken as the final result, which reduces the deviation caused by randomness.
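The three evaluation criteria can be computed from a confusion matrix. The following is a minimal sketch using the standard definitions of OA, AA, and Cohen's kappa; the function names and the toy labels are illustrative and do not come from the experiments reported here.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def oa_aa_kappa(cm):
    """Return overall accuracy, average accuracy, and Cohen's kappa."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]

    # OA: fraction of all samples that are classified correctly.
    oa = sum(cm[i][i] for i in range(n)) / total

    # AA: mean of the per-class accuracies (classes without samples skipped).
    per_class = [cm[i][i] / row_sums[i] for i in range(n) if row_sums[i] > 0]
    aa = sum(per_class) / len(per_class)

    # Kappa: agreement corrected for the agreement expected by chance.
    expected = sum(row_sums[i] * col_sums[i] for i in range(n)) / total ** 2
    kappa = 1.0 if expected == 1 else (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Toy example with three classes.
y_true = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 2, 2]
oa, aa, kappa = oa_aa_kappa(confusion_matrix(y_true, y_pred, 3))
print(f"OA={oa:.4f} AA={aa:.4f} Kappa={kappa:.4f}")
```

Because AA weights every class equally, it is more sensitive than OA to errors in small classes, which is relevant for datasets with uneven class distributions such as IN.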

3.3. Experimental Results

To verify the effectiveness of the proposed method, 10 different methods were selected for comparison. These included traditional machine learning methods and deep-learning-based methods. The deep-learning-based methods included DBDA [49], SSRN [50], PyResNet [51], FDSSC [52], DBMA [53], A2S2KResNet [60], CDCNN [61], and HybridSN [62], and the traditional machine learning methods included SVM [63]. The vision transformer (ViT) [64], a classic model that performs well in image processing, was also included. In addition, for the sake of fairness, all comparison methods were run under the same conditions as the proposed method, including experimental parameter settings and data preprocessing.

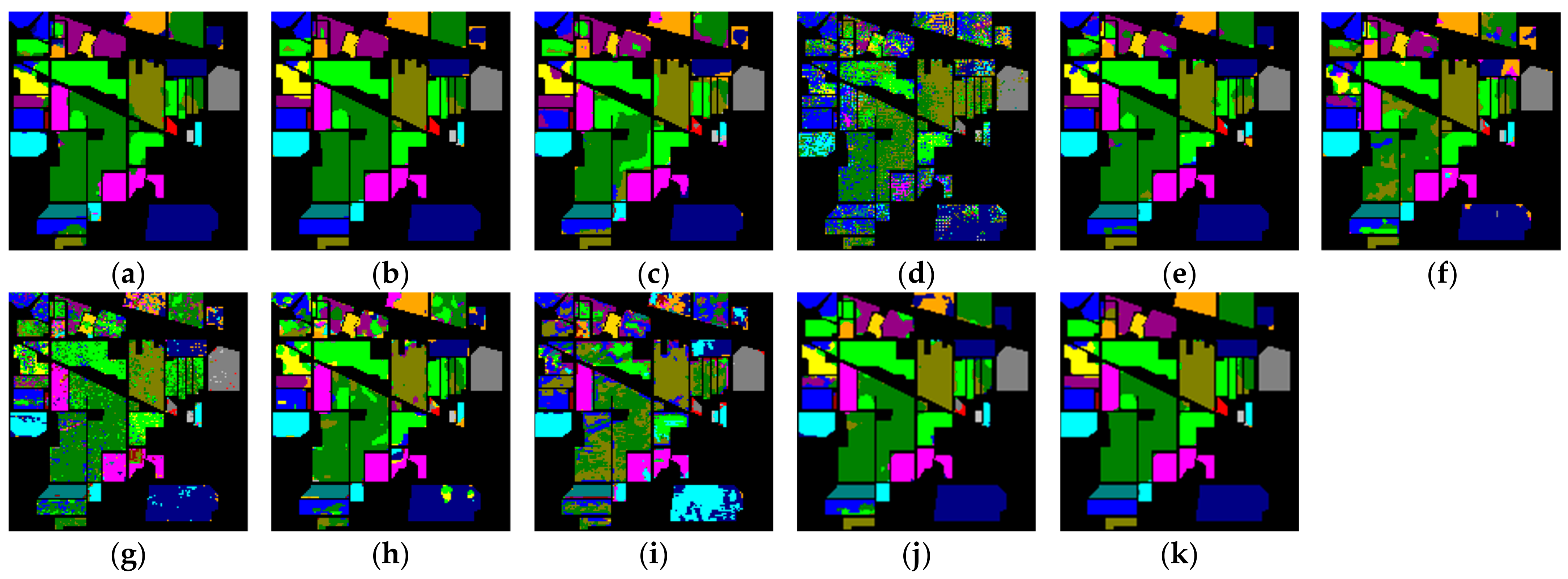

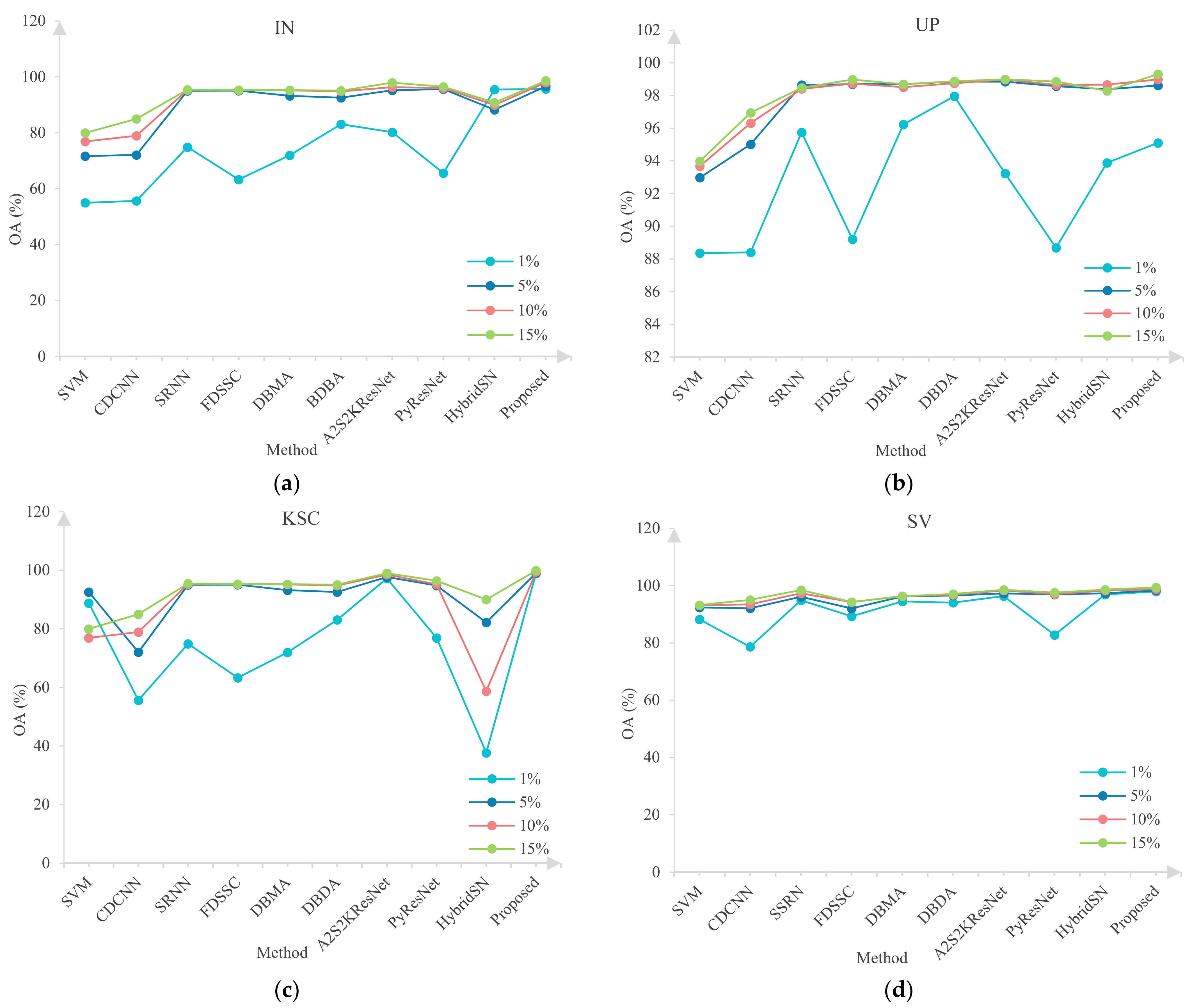

(1)

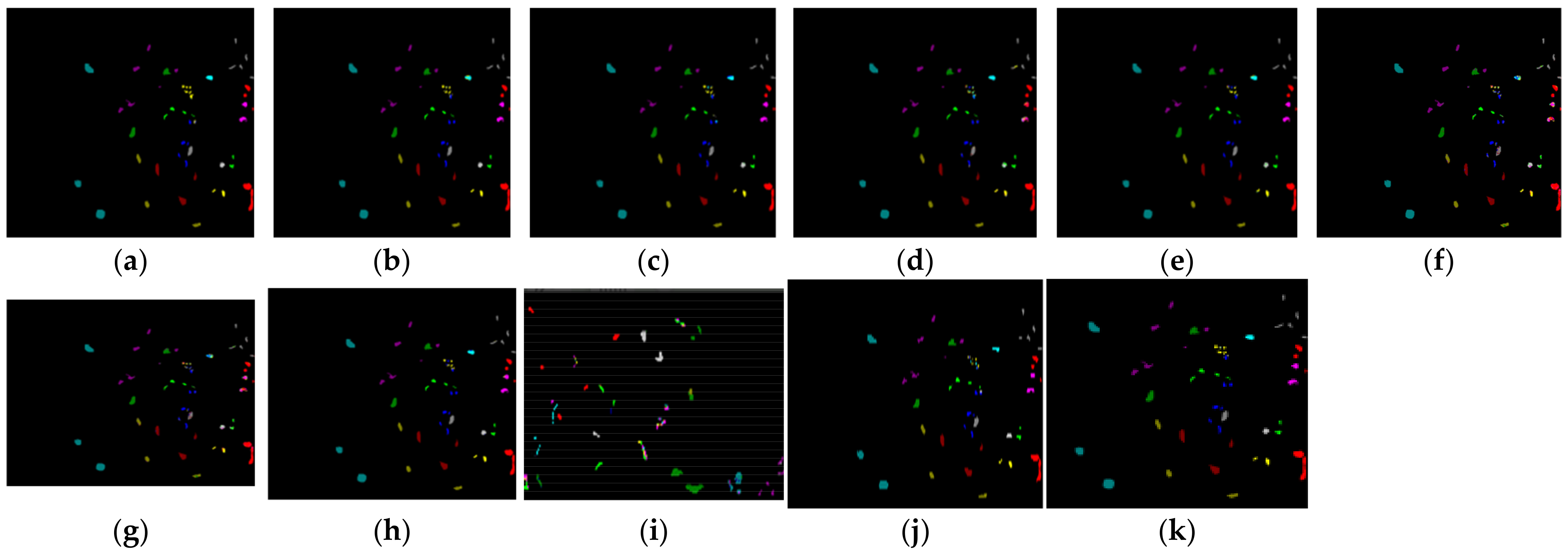

Analysis of experimental results on the IN dataset: Figure 8 shows the visual classification maps obtained using different methods on the IN dataset, and Table 5 shows the corresponding numerical classification results. Compared with the other methods, the classification map of the proposed method was the clearest and closest to the ground-truth map. Not only was the classification result inside each region good, but the result at the region boundaries was also better than that of the other methods. The numerical results in Table 5 show that the proposed method had the best classification performance. The OA of the proposed method increased by 4.13% (A2S2KResNet), 3.65% (DBDA), 6.28% (DBMA), 45.2% (PyResNet), 6.99% (SSRN), 28.46% (SVM), 14.05% (HybridSN), 24.81% (CDCNN), 3.94% (FDSSC), and 17.61% (ViT). The AA increased by 4.21% (A2S2KResNet), 4.4% (DBDA), 8.77% (DBMA), 41.25% (PyResNet), 6.88% (SSRN), 26.83% (SVM), 11.26% (HybridSN), 24.22% (CDCNN), 4.12% (FDSSC), and 13.32% (ViT), and kappa increased by 4.74% (A2S2KResNet), 4.1% (DBDA), 7.46% (DBMA), 52.49% (PyResNet), 7.82% (SSRN), 30.73% (SVM), 15.85% (HybridSN), 28.48% (CDCNN), 4.46% (FDSSC), and 20.06% (ViT). These results indicate that the proposed method extracts the spectral and spatial features of hyperspectral images more comprehensively than the comparison methods.

The experimental results showed that the classification performance of the proposed method was better than that of other methods in most cases. For example, for C8 (haystack), A2S2KResNet achieved the best classification result among the comparison methods, while the accuracy of the proposed method for C8 was 99.82%, which is higher than that of all other methods. Together with the above discussion, this shows that the proposed method was effective for the IN dataset.

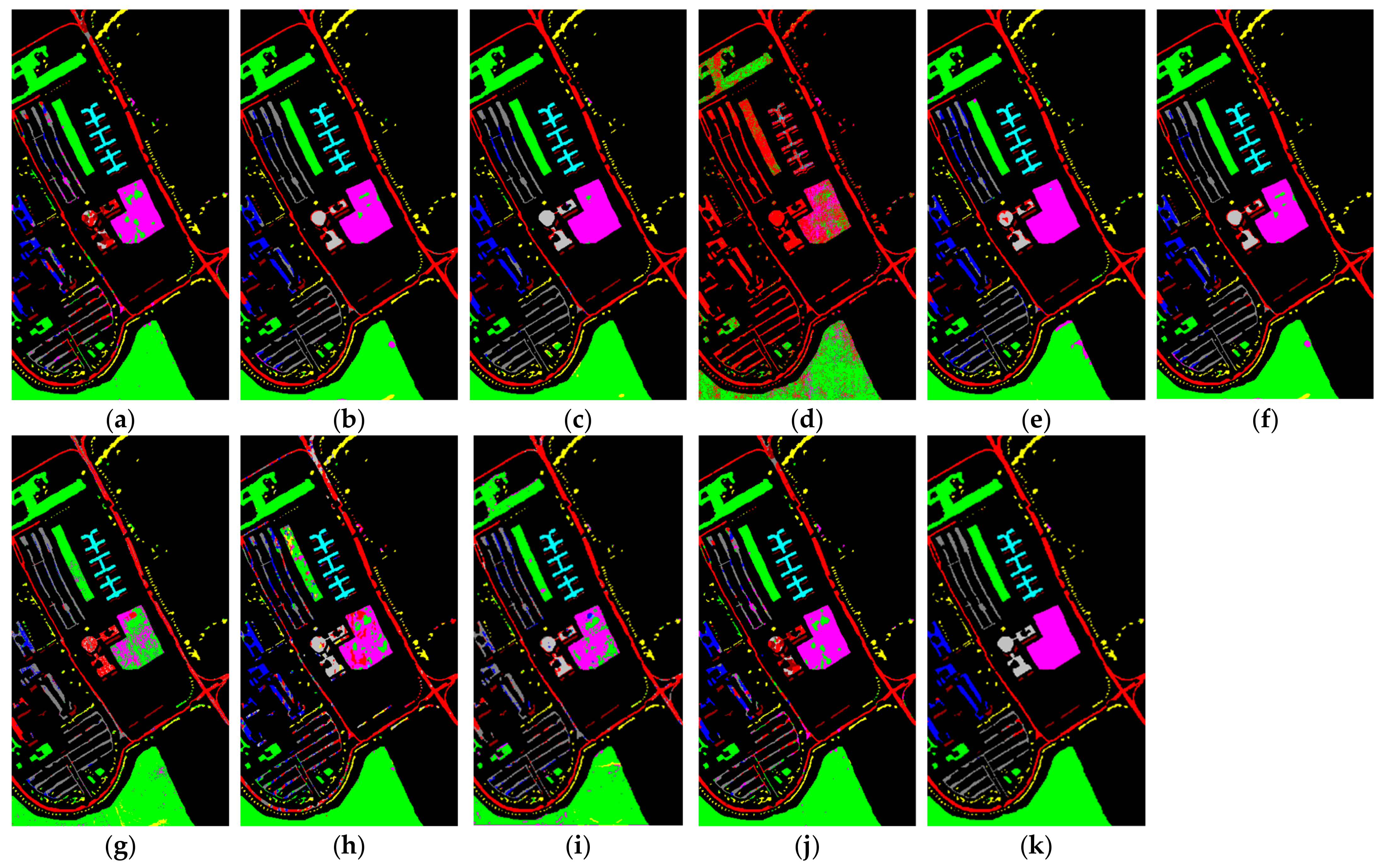

(2)

Analysis of experimental results on the UP dataset: Figure 9 shows the visual classification maps obtained using different methods on the UP dataset, and Table 6 shows the corresponding numerical classification results. Figure 9 shows that the classification map obtained using the proposed method was clearer and closer to the ground-truth map than those obtained using the other methods, and that the proposed method could accurately predict almost all samples. At the same time, the results in Table 6 show that the proposed method had the best classification performance among all compared methods. The OA of the proposed method increased by 9.82% (A2S2KResNet), 1.25% (DBDA), 5.46% (DBMA), 42.62% (PyResNet), 4.76% (SSRN), 14.23% (SVM), 15.41% (HybridSN), 9.32% (CDCNN), 4.1% (FDSSC), and 3.99% (ViT). The AA of the proposed method increased by 9.53% (A2S2KResNet), 2.13% (DBDA), 6.84% (DBMA), 48.18% (PyResNet), 4.69% (SSRN), 18.61% (SVM), 20.8% (HybridSN), 11.53% (CDCNN), 6.27% (FDSSC), and 5.81% (ViT). The kappa of the proposed method increased by 13.22% (A2S2KResNet), 1.66% (DBDA), 7.33% (DBMA), 61.05% (PyResNet), 5.48% (SSRN), 19.92% (SVM), 20.59% (HybridSN), 12.42% (CDCNN), 5.49% (FDSSC), and 5% (ViT). A comprehensive analysis of these results shows that the proposed method could effectively capture more useful classification features.

Compared with the other methods, the proposed method had the highest accuracy for some categories in the UP dataset, such as C2 grassland and C6 bare soil, with classification accuracies of 99.30% and 98.97%, respectively. For other categories, the proposed method also achieved high classification performance. In addition, for C7 asphalt, the classification accuracies of DBDA and DBMA, which use an attention mechanism, were 92.62% and 87.73%, respectively, significantly higher than those of the other comparison methods, indicating that spatial attention and spectral attention play a positive role in feature learning. The proposed method had the best classification effect. Taken together, this analysis indicates that the proposed three-dimensional multiscale spatial attention module can extract more spatial features conducive to classification.

(3)

Analysis of experimental results on the SV dataset: Figure 10 shows the visual classification maps obtained using different methods on the SV dataset, and Table 7 shows the corresponding numerical classification results. Figure 10 shows that the classification map of the proposed method was closer to the ground-truth map than those of the other methods. The classification boundaries of the different categories were also very clear, and almost all samples could be accurately predicted. Table 7 shows that the proposed method had the best classification effect compared with the other methods. The OA of the proposed method increased by 2.76% (A2S2KResNet), 3.6% (DBDA), 4.39% (DBMA), 5.83% (PyResNet), 5.3% (SSRN), 10.36% (SVM), 10.01% (HybridSN), 8.98% (CDCNN), 1.55% (FDSSC), and 4.96% (ViT); the AA increased by 1.31% (A2S2KResNet), 1.15% (DBDA), 2.23% (DBMA), 4.44% (PyResNet), 1.96% (SSRN), 6.35% (SVM), 16.32% (HybridSN), 5.96% (CDCNN), 0.41% (FDSSC), and 3.48% (ViT); and kappa increased by 3.07% (A2S2KResNet), 4.01% (DBDA), 4.88% (DBMA), 6.5% (PyResNet), 5.9% (SSRN), 11.59% (SVM), 11.15% (HybridSN), 9.99% (CDCNN), 1.73% (FDSSC), and 1.59% (ViT). These results show that the proposed method achieved better classification results.

The classification accuracy of C6 stubble and C13 lettuce reached 99.94% and 99.91%, respectively. According to the above analysis and the classification results in the SV dataset, the classification performance of the proposed method was better than that of the other methods.

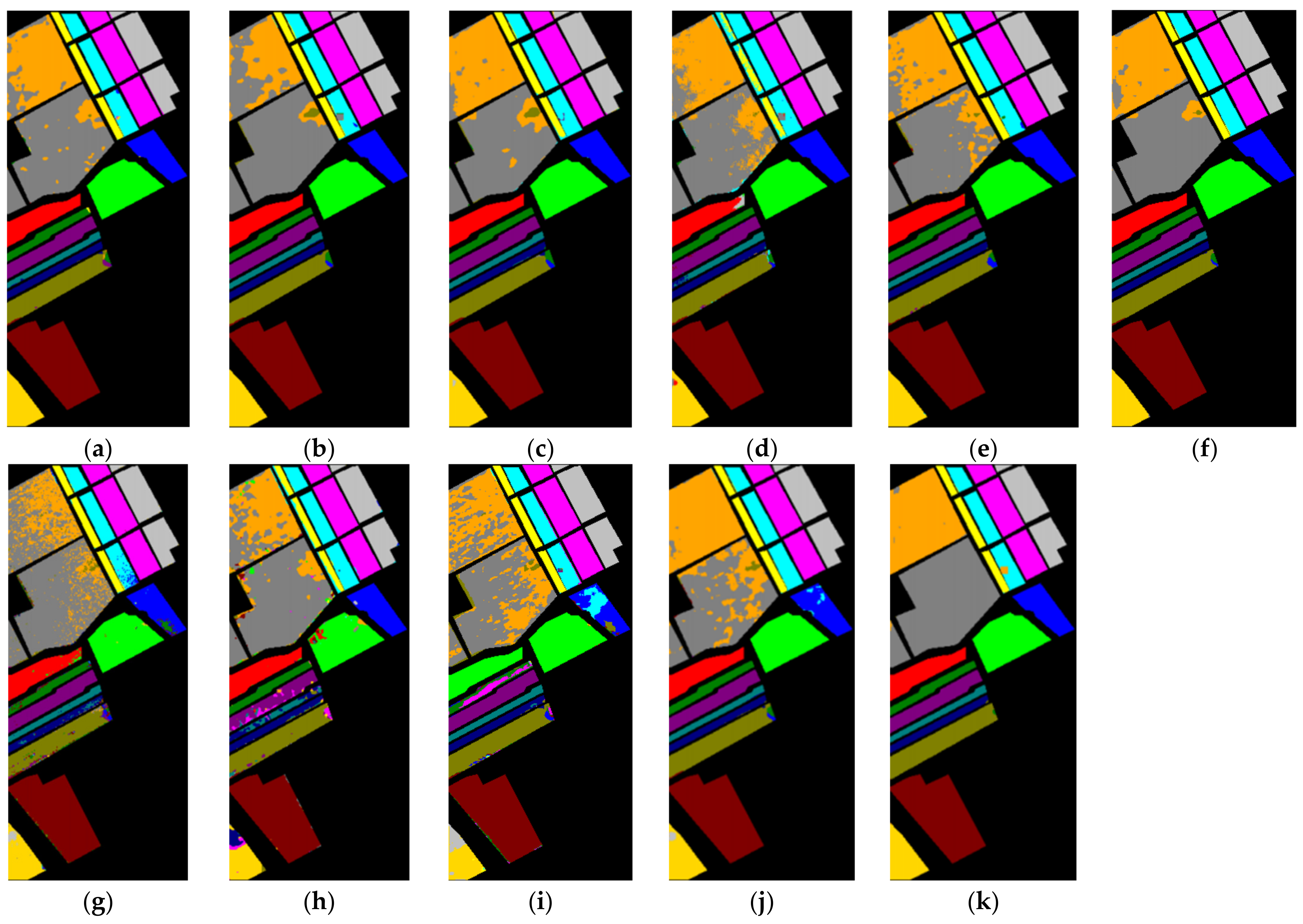

(4)

Analysis of experimental results on the KSC dataset: Figure 11 shows the visual classification maps obtained using different methods on the KSC dataset, and Table 8 shows the corresponding numerical classification results. As can be seen from the classification maps in Figure 11, the results of the proposed method were the clearest. Specifically, the OA of the proposed method increased by 7.67% (A2S2KResNet), 2.13% (DBDA), 4.77% (DBMA), 7.4% (PyResNet), 4.37% (SSRN), 10.93% (SVM), 19.17% (HybridSN), 9.56% (CDCNN), 3.27% (FDSSC), and 6.51% (ViT); the AA increased by 7.57% (A2S2KResNet), 3.43% (DBDA), 7.1% (DBMA), 9.1% (PyResNet), 6.18% (SSRN), 15.78% (SVM), 20.15% (HybridSN), 14.3% (CDCNN), 5.84% (FDSSC), and 4.66% (ViT); and kappa increased by 6.51% (A2S2KResNet), 2.25% (DBDA), 5.2% (DBMA), 8.13% (PyResNet), 4.75% (SSRN), 12.06% (SVM), 21.22% (HybridSN), 10.52% (CDCNN), 3.53% (FDSSC), and 6.8% (ViT). These results indicate that the proposed method could extract more features that contribute to classification.

By observing the classification results of different categories in the KSC dataset, it is clear that, in most cases, the classification performance of the proposed method was better than that of other comparison methods. For example, the classification accuracy of C8 grass swamp and C9 rice grass swamp reached 99.11% and 100%, respectively. According to the above comprehensive analysis, the classification performance of this method was better than that of other methods.