Abstract

Recently, methods for obtaining a high spatial resolution hyperspectral image (HR-HSI) by fusing a low spatial resolution hyperspectral image (LR-HSI) and high spatial resolution multispectral image (HR-MSI) have become increasingly popular. However, most fusion methods require knowing the point spread function (PSF) or the spectral response function (SRF) in advance, which are uncertain and thus limit the practicability of these fusion methods. To solve this problem, we propose a fast fusion method based on the matrix truncated singular value decomposition (FTMSVD) without using the SRF, in which our first finding about the similarity between the HR-HSI and HR-MSI is utilized after matrix truncated singular value decomposition (TMSVD). We tested the FTMSVD method on two simulated data sets, Pavia University and CAVE, and a real data set wherein the remote sensing images are generated by two different spectral cameras, Sentinel 2 and Hyperion. The advantages of FTMSVD method are demonstrated by the experimental results for all data sets. Compared with the state-of-the-art non-blind methods, our proposed method can achieve more effective fusion results while reducing the fusing time to less than 1% of such methods; moreover, our proposed method can improve the PSNR value by up to 16 dB compared with the state-of-the-art blind methods.

1. Introduction

High spatial resolution hyperspectral images are of great significance in agriculture [1], military [2,3,4], image processing [5,6], and remote sensing [7,8,9,10] because of their ability to possess rich spectral and spatial information at the same time. However, due to limitations of the physical components, the LR-HSI can only be obtained with low spatial information but high spectral information, and the HR-MSI of the same scene has high spatial information but low spectral information. The most common economical way to obtain HR-HSI is, therefore, to fuse the LR-HSI and HR-MSI.

As we know, HR-MSI, LR-HSI, and HR-HSI are images of the same scene with different degrees of spatial and spectral information. When applying most fusion methods, HR-MSI is considered to contain most of the spatial information of HR-HSI, while LR-HSI contains most of the spectral information of HR-HSI. For example, Dian et al. [11] assumed that LR-HSI contains a large amount of spectral information of HR-HSI, and the spectral basis was obtained from LR-HSI by TMSVD. Long et al. [12] found a significant correlation between the singular values of HR-HSI and LR-HSI. For both methods, a TMSVD operation is carried out on LR-HSI, and the different factor matrices from LR-HSI are used as prior information in these proposed methods. We assume the HR-MSI should have properties similar to LR-HSI and HR-HSI.

As mentioned, these works [11,12] have shown that the first two TMSVD factor matrices of HR-HSI and LR-HSI have strong similarity. A feature of singular value decomposition (SVD) is that most of the image information can be saved by only keeping the first few terms. Inspired by this, we can reasonably assume that HR-HSI can be represented as the product of three TMSVD factor matrices. The last TMSVD factor matrix of HR-HSI contains a lot of spatial information, and it obviously comes from HR-MSI. The relationship between the second TMSVD factor matrix of LR-HSI and HR-HSI has been clearly experimentally demonstrated in [12], but the relationship between the first TMSVD factor matrix of LR-HSI and HR-HSI was not clearly shown. We verify the relationship of the first matrix in the experimental results that follow.

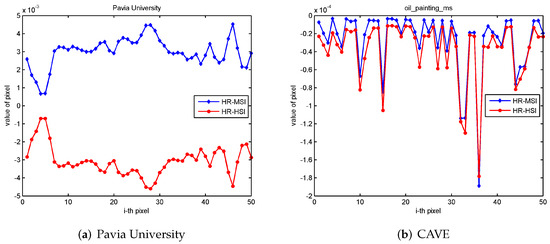

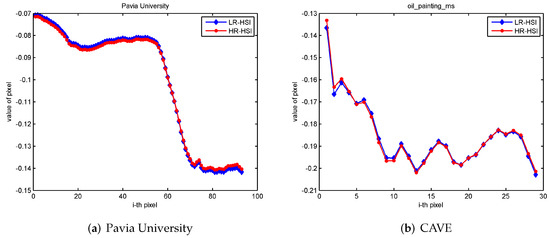

As shown in Figure 1, we found a strong correlation between HR-HSI and HR-MSI. The values of the third TMSVD factor matrix from the HR-MSI and HR-HSI are similar or opposite in the same position. As we can see from Figure 2, the first factor matrix value from HR-HSI and LR-HSI by TMSVD are also approximately the same at the same position, which can explain why the spectral basis comes from the LR-HSI based on matrix similarity, where there was previously no tangible evidence to explain this. We estimate the three SVD factor matrices of HR-HSI from LR-HSI and HR-MSI using TMSVD, and the three factor matrices are then used to construct the HR-HSI, which is different from estimating the spectral basis and the corresponding spectral coefficient matrix.

Figure 1.

The correlation of the third factor matrix (the first row of matrix) after TMSVD of HR-HSI and HR-MSI.

Figure 2.

The correlation of the first factor matrix after TMSVD of HR-HSI and LR-HSI.
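The "similar or opposite" behavior noted for Figure 1 is consistent with a general property of the SVD: singular vectors are defined only up to sign. The following is a minimal NumPy sketch of how such a comparison could be made. It uses synthetic data rather than the data sets of this paper, and the cube, the response matrix `R`, and all sizes are hypothetical.

```python
import numpy as np

def leading_right_vector(mat):
    """Return the first right singular vector (first row of V^T)."""
    _, _, vt = np.linalg.svd(mat, full_matrices=False)
    return vt[0]

rng = np.random.default_rng(0)
L, l, n_pixels, rank = 30, 4, 400, 5   # hypothetical sizes

# Synthetic nonnegative HR-HSI in matricized form (bands x pixels).
Z = rng.random((L, rank)) @ rng.random((rank, n_pixels))
# Hypothetical nonnegative spectral response; Y plays the role of the HR-MSI.
R = rng.random((l, L))
Y = R @ Z

v_z = leading_right_vector(Z)
v_y = leading_right_vector(Y)

# Both are unit vectors, so the dot product is the cosine similarity.
# The absolute value removes the arbitrary sign of each singular vector.
similarity = abs(float(v_z @ v_y))
print(f"|cos| between leading right singular vectors: {similarity:.4f}")
```

Taking the absolute value before comparing is the design choice that matters here: without it, two vectors that carry the same spatial pattern can appear anti-correlated.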

However, compared with SVD, TMSVD is limited by a truncated value. It only saves part of the original matrix information, which will cause the loss of some critical information. To further improve the fusion effect, we assume that the first TMSVD factor matrix of HR-MSI also contains some information about HR-HSI. Therefore, we introduce the first TMSVD factor matrix of HR-MSI into the fusion process.

We integrate the prior information of TMSVD on HR-MSI and LR-HSI to reconstruct HR-HSI. We first obtain the factor matrices from LR-HSI and HR-MSI by TMSVD, then recombine them to obtain a rough HR-HSI, and finally solve the optimization problem through a multiplicative iterative process.

The main contributions of this paper are as follows:

- We explain why the spectral basis can be obtained from LR-HSI from the perspective of matrix similarity after truncated singular value decomposition. No such quantitative analysis has been conducted prior to our work.

- We found a strong correlation between HR-MSI and HR-HSI. All prior information about TMSVD was integrated into a new proposed fusion model without using the SRF and based only on the TMSVD factor matrices from LR-HSI and HR-MSI.

- We propose a new idea for the hyperspectral fusion method. We reconstruct the HR-HSI by estimating the three SVD factor matrices of HR-HSI from LR-HSI and HR-MSI.

- We test our proposed method on two simulated data sets, Pavia University and CAVE, and a real data set in which the remote sensing images are generated by two different spectral cameras, Sentinel 2 and Hyperion. Compared with the non-blind methods, our proposed method achieves a more effective fusion result while reducing fusing time to less than 1% of such methods. Compared with the blind methods on the simulated data sets, our proposed method can improve the PSNR value by up to 16 dB. Moreover, our proposed method demonstrates a better performance on the real data set, which validates its practicality.

The rest of this paper is organized as follows. In Section 2, some background knowledge and the representative hyperspectral image super-resolution literature are presented. The FTMSVD method is presented in Section 3. The experimental details are outlined in Section 4. Presented in Section 5 are our experimental results and analysis, and the conclusion is found in Section 6.

2. Related Work

In recent years, the exploration of hyperspectral image (HSI) super-resolution methods has been gradually increasing. These methods can be broadly divided into three categories: matrix factorization-based methods, tensor factorization-based methods, and other methods.

In the matrix factorization-based methods, it is assumed that HR-HSI is a matrix formed by the multiplication of the spectral basis and the corresponding spectral coefficient matrix. The fusion problem is transformed into the problem of how to estimate the spectral basis and coefficient matrix. There are many ways to estimate the spectral basis and coefficient matrix, such as non-negative dictionary learning [13], K-SVD [14], and online dictionary learning [15]. Wycoff et al. [16] made full use of the prior information of non-negativity and sparsity of HR-HSI and conducted non-negative sparse matrix factorization for HR-MSI and LR-HSI to obtain the approximation of a non-negative spectral basis and sparse coefficient matrix; the alternating direction method of multipliers (ADMM) was then used in their optimization to obtain the HR-HSI. Akhtar et al. [17] estimated a non-negative dictionary based on the principle of local similarity of images. In [18], the spectral basis and coefficient matrix were learned from LR-HSI and HR-MSI under some prior information. Huang et al. [19] proposed learning the spectral basis by using K-SVD on LR-HSI. Han et al. [20] clustered image blocks and assumed that similar image blocks can linearly represent the given block; the local similarity of HR-HSI was learned based on image segmentation. The method we propose in this paper is also a matrix factorization-based method. However, our FTMSVD method differs in two aspects. Firstly, in our FTMSVD method, information is directly obtained from LR-HSI and HR-MSI by TMSVD without the need for a complex operation such as dictionary learning. Secondly, in our proposed method, it is assumed that HR-HSI is composed of three SVD factor matrices, and we estimate the three SVD factor matrices of HR-HSI from LR-HSI and HR-MSI rather than the spectral basis and coefficient matrix.

Tensor factorization-based methods allow for preserving the 3D characteristics of hyperspectral images to a great extent. That is, tensors represent hyperspectral images, and the structure information of the image is better preserved. The question for fusion then concerns how to estimate the tensors. Dian et al. [21] used Tucker decomposition to decompose HR-HSI into three dictionaries of three dimensions and used a kernel tensor to describe the relationship between the dictionaries. Li et al. [22] proposed a method based on coupled sparse tensor representation (CSTF) in which HR-HSI is regarded as a three-dimensional tensor. The tensor could be approximated as a core tensor multiplied by three subtensors. In [11], subspace representation and low-rank tensor representation are combined. The spectral subspace is approximated by the singular value decomposition of LR-HSI, and the coefficients are estimated by the low-rank tensor. Prévost et al. [23] obtained the dictionary of the third modes by the operation of TMSVD, and they solved the generalized Sylvester equation to obtain the kernel tensor.

Other methods mainly include the Bayesian-based and deep convolutional neural network (CNN)-based methods. In the Bayesian-based methods, the prior distribution is used to solve the fusion problem. A representative example is the method proposed in [24]. CNN-based methods also play an important role in hyperspectral image fusion. Dong et al. [25,26] constructed a deep CNN for solving single image super-resolution and achieved excellent performance. Liu et al. [27] proposed a deep CNN named SSAU-Net, which introduces a spectral-spatial attention module to extract shallow and deep feature information from LR-HSI and HR-HSI. The work in [28] combined subspace representation with a CNN denoiser, which only needs to be trained on gray images. This approach eases the difficulty of training the CNN model.

These methods are also roughly divided into two categories, depending on whether PSF and SRF are known during the fusion process, as blind fusion methods and non-blind fusion methods. Methods that are non-blind indicate that both PSF and SRF are known. On the contrary, PSF and SRF are unknown in blind methods. Hysure (blind version) [29] and CNMF [18] are blind methods in which the fusion process is achieved by estimating both SRF and PSF from HR-MSI and LR-HSI. The method in [30] is also a blind method because it only estimates the SRF and does not use the PSF during the fusion process. In either case, our proposed method demonstrates good performance without using SRF. When the PSF is known, we use it directly. If the PSF cannot be directly obtained, we can use the PSF that we set. Our experiments demonstrate that our method achieves good results in both cases.

3. Fusion Model

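We denote the target HR-HSI as a three-dimensional tensor $\mathcal{Z} \in \mathbb{R}^{W \times H \times L}$, which has $W \times H$ pixels and $L$ bands. The LR-HSI is regarded as $\mathcal{X} \in \mathbb{R}^{w \times h \times L}$, which has $w \times h$ pixels and $L$ bands. $\mathcal{Y} \in \mathbb{R}^{W \times H \times l}$ represents the observed HR-MSI, with $W \times H$ pixels and $l$ bands. It is observed that $w < W$, $h < H$, and $l < L$. $\mathcal{X}$ can be seen as a spatially downsampled version of $\mathcal{Z}$, i.e.,

$$\mathbf{X}_{(3)} = \mathbf{Z}_{(3)} \mathbf{B} \mathbf{S}, \tag{1}$$

where $\mathbf{X}_{(3)} \in \mathbb{R}^{L \times wh}$ and $\mathbf{Z}_{(3)} \in \mathbb{R}^{L \times WH}$ represent the mode-n matricization matrix (here along the spectral mode, $n = 3$) of $\mathcal{X}$ and $\mathcal{Z}$, respectively. The matrices $\mathbf{B} \in \mathbb{R}^{WH \times WH}$ and $\mathbf{S} \in \mathbb{R}^{WH \times wh}$ represent the convolutional blur and downsampling matrix, respectively. In a practical situation, the PSF represents $\mathbf{B}$, which is uncertain, and $\mathbf{S}$ can be obtained by the proportion of spatial dimensions of LR-HSI and HR-MSI.

$\mathcal{Y}$ can also be seen as the spectrally downsampled version of $\mathcal{Z}$, i.e.,

$$\mathbf{Y}_{(3)} = \mathbf{R} \mathbf{Z}_{(3)}, \tag{2}$$

where $\mathbf{Y}_{(3)} \in \mathbb{R}^{l \times WH}$ and $\mathbf{R} \in \mathbb{R}^{l \times L}$ represent the mode-n matricization matrix of $\mathcal{Y}$ and the spectral response matrix, respectively. $\mathbf{R}$ represents the SRF, which is uncertain in most cases.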
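The two degradations described above (blur plus spatial downsampling for the LR-HSI, and a spectral response for the HR-MSI) can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the cube is random, the spectral response `srf` is hypothetical, and a box (average) blur stands in for the PSF instead of the Gaussian blur used in the experiments.

```python
import numpy as np

def spatial_degrade(cube, factor):
    """Average each factor x factor block (box blur followed by decimation)."""
    H, W, L = cube.shape
    assert H % factor == 0 and W % factor == 0
    blocks = cube.reshape(H // factor, factor, W // factor, factor, L)
    return blocks.mean(axis=(1, 3))

def spectral_degrade(cube, srf):
    """Apply a spectral response matrix of shape (l, L) to every pixel."""
    return np.tensordot(cube, srf.T, axes=([2], [0]))

rng = np.random.default_rng(0)
hr_hsi = rng.random((64, 64, 31))          # hypothetical H x W x L cube
srf = rng.random((3, 31))                  # hypothetical response, l = 3
srf /= srf.sum(axis=1, keepdims=True)      # each band's response sums to 1

lr_hsi = spatial_degrade(hr_hsi, factor=8)   # shape (8, 8, 31)
hr_msi = spectral_degrade(hr_hsi, srf)       # shape (64, 64, 3)

# Matricized check: unfolding along the spectral mode turns the spectral
# degradation into a single matrix product.
Z3 = hr_hsi.reshape(-1, 31).T   # (L, H*W)
Y3 = hr_msi.reshape(-1, 3).T    # (l, H*W)
print(lr_hsi.shape, hr_msi.shape, np.allclose(Y3, srf @ Z3))
```

The final check shows why the matricized form is convenient: the spectral degradation becomes one left multiplication, while the spatial degradation acts on the pixel (column) dimension.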

In [31], SVD was used for image compression, because it can denoise and represent most information of an image with a few elements. Therefore, if we can obtain the three SVD factor matrices of , we can recover the original HR-HSI, i.e.,

where , and are the corresponding factor matrices of Z by SVD. However, it is difficult to directly obtain the SVD factor matrices of . To approximate the SVD factor matrices of , we estimate the SVD factor matrices of from and by using TMSVD, i.e.,

where , , and are the corresponding estimation factor matrices obtained by TMSVD, and is the truncated value. Therefore, Equations (1) and (2) can be converted to the following equation.

The fusion problem turns into the problem of estimating three factor matrices , , and from HR-MSI and LR-HSI. To distinguish it from the target matrix , we denote as the fusion matrix, i.e.,

where , and are the corresponding factor matrices of fusion result by TMSVD. Since , the truncated singular value is constrained below l, and we set l as the truncated singular value for the consistency of TMSVD factor matrix dimensions. Through performing the TMSVD operation on and with truncated value , we can obtain the TMSVD factor matrices of and , respectively, i.e.,

where , and , and , and . They are the corresponding TMSVD factor matrices of X and Y.
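As an illustration of the TMSVD step, the sketch below truncates the SVD of two matricized images at the same value and prints a check on the truncation error. It is a minimal NumPy sketch on random matrices. The function name `tmsvd`, the variable names, and all sizes are assumptions made for this example.

```python
import numpy as np

def tmsvd(mat, k):
    """Truncated SVD: keep the k largest singular triplets of `mat`."""
    u, s, vt = np.linalg.svd(mat, full_matrices=False)
    return u[:, :k], s[:k], vt[:k, :]

rng = np.random.default_rng(0)
L, l, wh, WH = 31, 3, 64, 4096     # hypothetical sizes
X3 = rng.random((L, wh))           # matricized LR-HSI (bands x pixels)
Y3 = rng.random((l, WH))           # matricized HR-MSI (bands x pixels)

k = l                              # truncation value limited by the MSI bands
U_x, s_x, Vt_x = tmsvd(X3, k)      # shapes (L, k), (k,), (k, wh)
U_y, s_y, Vt_y = tmsvd(Y3, k)      # shapes (l, k), (k,), (k, WH)

# Eckart-Young: the Frobenius error of the rank-k approximation equals the
# root of the sum of the squared discarded singular values.
X_k = (U_x * s_x) @ Vt_x
all_s = np.linalg.svd(X3, compute_uv=False)
err = np.linalg.norm(X3 - X_k)
print(np.isclose(err, np.sqrt(np.sum(all_s[k:] ** 2))))
```

With these shapes, a product of an `(L, k)` matrix, a `k x k` diagonal matrix, and a `(k, WH)` matrix has the size of the matricized HR-HSI, which is why a common truncation value no larger than the number of MSI bands keeps the factor dimensions consistent.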

As shown in Figure 1 and Figure 2, and , and it was found in [12] that , where is the downsampling factor. Hence, we can obtain the following equations.

However, constrained by the spectral dimension of HR-MSI, the value is limited in l. Therefore, if we use , and to reconstruct HR-HSI, much important information about HR-HSI will be lost, and the fusion effect is unsatisfactory. Due to the features of SVD, also obtains information of , and contains most of the spatial information of , so we introduce it in the reconstruction of to improve the fusion performance, i.e.,

The detailed process for estimating the SVD factor matrices of HR-HSI is presented in Algorithm 1.

Based on Equations (1) and (9), we can further optimize the quality of as the following equation, i.e.,

where means the Frobenius norm of in this paper.

| Algorithm 1 Estimate three rough SVD factor matrices of HR-HSI |

Require:

|

Because of the correlation in Figure 1 and [12], we assume that and are approximately equal to and , which means and are fixed during the fusion process. We therefore simplify Equation (14), i.e.,

We set as constant throughout the fusion process. The meaning of here is similar to the coefficient matrix in the work [11], except that we get it directly by the connection between HSI and MSI, without any additional operations. Note that there are two cases of . If PSF is known in practice, we use it directly. If PSF is unknown, we use the one we set, which is a Gaussian blur (standard deviation 1) in this paper, and solve the uncertainty of in this way. Finally, a multiplicative iterative process as in [18] is introduced to optimize in Equation (15), i.e.,

After several iterative rounds of optimization, we obtain a more accurate . We reconstruct it using and to obtain a satisfactory . The whole process of our method is shown in Algorithm 2.
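To make the idea of a multiplicative iterative process concrete, the sketch below shows a Lee-Seung style multiplicative update of one factor while the other is held fixed. It is an assumption that the update used here has this general form. The sketch also assumes elementwise nonnegative matrices, whereas SVD factor matrices generally contain negative entries; how that case is handled is not covered by this example. All names and sizes are hypothetical.

```python
import numpy as np

def multiplicative_update(X, U, H, n_iter=50, eps=1e-12):
    """Lee-Seung style update of U for min ||X - U H||_F^2 with H fixed.

    Assumes X, U, and H are elementwise nonnegative.
    """
    U = U.copy()
    HHt = H @ H.T          # (k, k), computed once because H is fixed
    XHt = X @ H.T          # (L, k), computed once because H is fixed
    for _ in range(n_iter):
        U *= XHt / (U @ HHt + eps)
    return U

rng = np.random.default_rng(0)
L, k, n = 31, 3, 64                # hypothetical sizes
U_true = rng.random((L, k))
H = rng.random((k, n))             # fixed factor, held constant
X = U_true @ H                     # noiseless synthetic observation

U0 = rng.random((L, k))            # rough initial estimate
U_hat = multiplicative_update(X, U0, H)

err0 = np.linalg.norm(X - U0 @ H)
err1 = np.linalg.norm(X - U_hat @ H)
print(f"residual before: {err0:.4f}, after: {err1:.4f}")
```

Because only the small `(L, k)` factor is updated and the products involving the fixed factor are computed once, each iteration costs only a few small matrix products.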

| Algorithm 2 FTMSVD Algorithm |

Require:

|

4. Experiments

4.1. Data Set

To demonstrate the universality and effectiveness of our proposed FTMSVD method, we conduct experiments on two simulated data sets, the CAVE [32] and Pavia University [33] data sets. In addition, we test our proposed method on a real data set of remote sensing images generated by Sentinel 2 and Hyperion spectral cameras.

The CAVE data set consists of 32 high-quality indoor scenes; each HSI has pixels and 31 spectral bands with a spectral range from 400 to 700 nm. We removed the final two fuzzy bands and kept the remaining 29 bands. The Pavia University data set was acquired by the ROSIS sensor, which has spatial pixels with 115 spectral bands (0.43–0.86 μm) from around Pavia University, northern Italy. We removed the final fuzzy bands and kept the remaining 93 bands to use the upper left corner (). For the comparison with non-blind methods, we filter each HR-HSI band with the Gaussian blur (standard deviation 2) and conduct downsampling every 32 pixels in two spatial modes to simulate the LR-HSI in the simulated data sets. The HR-MSIs of the Pavia University data set are obtained using the IKONOS class reflection spectral response filter [34]. The HR-MSIs (RGB image) from the CAVE data set are generated by a Gf-1-16m multispectral camera response function. Specifically, for the Pavia University data set, each LR-HSI is and HR-MSI is . For the CAVE data set, the LR-HSI is and the HR-MSI is . For the comparison with blind methods, we set the downsampling factor to 8 and kept the other settings the same as in the non-blind case in generating the LR-HSI () and the LR-HSI () from the Pavia University and CAVE data sets, respectively. The HR-MSIs are the same as in non-blind fusion processes.

For the real remote sensing data set, the LR-HSI was obtained by the Hyperion sensor on the Earth Observation 1 satellite, which has 220 spectral bands. Our experiments use one part of it as the LR-HSI (). The corresponding HR-MSI is , generated by the Sentinel-2A satellite.

All experiments were implemented in MATLAB R2014a on a local machine with a 2.90 GHz Intel i5-9400F CPU and 8.0 GB of DDR3 memory.

4.2. Experimental Settings

To demonstrate the superiority of our proposed method in different situations (non-blind and blind), we organize the experiments in the following order.

First of all, through two simulated data sets, we compare our proposed method with five state-of-the-art non-blind fusion methods, including Hysure(NB) (non-blind version) [29], CSTF [22], STEREO(NB) (non-blind version) [35], NLSTF [21], and LTTR [36]. We chose the parameters to obtain the best performance for each method in different data sets when the downsampling factor is 32. The experiment results demonstrate that our method results in a better performance in less execution time. The specific parameters of the five state-of-the-art methods are given below in Table 1 and Table 2.

Table 1.

Parameters of non-blind methods on the Pavia University data set.

Table 2.

Parameters of non-blind methods on the CAVE data set.

Secondly, we verify the validity of extracted from our finding in Figure 1 by using a randomly selected factor matrix to replace and intuitively display the influence of .

We then compare our proposed method with five state-of-the-art blind methods on two simulated data sets, in which the LR-HSI and HR-MSI are simulated based on different SRF and PSF values. The compared blind methods include Hysure (blind version; https://github.com/alfaiate/HySure/, accessed on 1 October 2022) [29], CNMF [18], GSA [37], SFIMHS [38], and MAPSMM [39]. Our proposed FTMSVD method, GSA, and SFIMHS do not require additional parameter settings. In fact, it is very difficult to tune the optimal parameters in a real blind fusion scenario, so we keep the parameter settings of Hysure, CNMF, and MAPSMM from the original literature for a realistic and reliable comparison in blind fusion experiments.

After that, we use different PSFs to replace the one we set in our proposed method to show the impact of the fixed PSF and to further illustrate the generalization of our proposed method.

Finally, we conduct the experiment on the real data set and show the visual fidelity of the fusion results.

4.3. Quantitative Metrics

To evaluate the effectiveness of our proposed method in all aspects, we use subjective and objective evaluation metrics. For the subjective evaluation, we show the effectiveness of our proposed method through the visual presentation of the fused images. For the objective evaluation, we use six indexes: the peak signal-to-noise ratio (PSNR) [11], for which a larger value indicates better spatial reconstruction quality of each band; the Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) [40], for which a smaller value indicates better overall quality of the fused image; the spectral angle mapper (SAM) [34], for which a smaller value indicates better fusion quality; the structural similarity (SSIM) [41], which evaluates the similarity of two images by brightness, contrast, and structure and is better the closer it is to 1; the universal image quality index (UIQI) [42], which addresses spectral and radiometric quality simultaneously and is better the closer it is to 1; and the fusion time, which measures the speed of the fusion method and is better the smaller it is.
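For reference, three of these indexes can be written compactly. The sketch below uses common textbook definitions of PSNR, SAM, and ERGAS in NumPy; the exact variants used in the experiments (for example, the peak value in PSNR or the averaging order) may differ, and the function signatures are assumptions made for this example.

```python
import numpy as np

def psnr(ref, est, peak=1.0):
    """Mean PSNR over bands; cubes are (H, W, bands)."""
    mse = np.mean((ref - est) ** 2, axis=(0, 1))
    return float(np.mean(10.0 * np.log10(peak ** 2 / mse)))

def sam(ref, est, eps=1e-12):
    """Mean spectral angle in degrees over all pixels."""
    r = ref.reshape(-1, ref.shape[2])
    e = est.reshape(-1, est.shape[2])
    cos = np.sum(r * e, axis=1) / (
        np.linalg.norm(r, axis=1) * np.linalg.norm(e, axis=1) + eps)
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))

def ergas(ref, est, ratio):
    """ERGAS, with `ratio` the spatial downsampling factor."""
    mse = np.mean((ref - est) ** 2, axis=(0, 1))
    mean_sq = np.mean(ref, axis=(0, 1)) ** 2
    return float(100.0 / ratio * np.sqrt(np.mean(mse / mean_sq)))

rng = np.random.default_rng(0)
ref = 0.1 + 0.9 * rng.random((16, 16, 8))   # hypothetical reference cube
est = ref + 0.01                            # constant offset: MSE is 1e-4

# With peak = 1 and MSE = 1e-4 in every band, the PSNR is 40 dB.
print(psnr(ref, est), sam(ref, est), ergas(ref, est, ratio=8))
```

PSNR is computed per band and then averaged, SAM compares the spectrum at each pixel, and ERGAS normalizes the per-band error by the band mean, so the three indexes react differently to spatial and spectral distortions.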

5. Results and Analysis

5.1. Compared with Non-Blind Methods

The results of the non-blind situation are shown in Table 3 and Table 4, and the values in bold are the best results for each metric. All values in the tables are the average of five experiments. For visual comparison, Figure 3 and Figure 4 show the corresponding images of the experimental results. Table 3 shows the average objective metrics on the Pavia University data set. Our FTMSVD method has the best performance compared with other non-blind methods on all objective metrics, which means that the HR-HSI reconstructed by our FTMSVD method has the best spatial and spectral qualities. More specifically, the PSNR of our FTMSVD method is higher than that of the CSTF method by 0.091 dB, while the execution time is reduced by about 258.485 s. From Table 4, it can be seen that our proposed method achieves the best result on the CAVE data set. The main reason for the improvement is that our method extracts the reconstruction information from and based directly on TMSVD and without complex operations. The optimization we used is a multiplicative iterative process, which only optimizes the in a short time. The optimization is fast because it only works on smaller matrices . Most matrix-based fusion methods take a large amount of time because they need to optimize both the spectral subspace and the corresponding coefficient matrix. In [11], the approximate estimation of the subspace through LR-HSI can reduce the operation, but the coefficient matrix is still very large, and the fusion time is long. The data volume of the coefficient matrix is much larger than that of the spectral basis. Owing to the finding in Figure 1, we can make an approximate substitution of the last two SVD factor matrices of HR-HSI by combining the work [12] with our findings. Therefore, the optimization method we propose in Equation (15) only needs to optimize a smaller matrix.

Table 3.

Average quantitative results of the non-blind methods on the Pavia University data set.

Table 4.

Average quantitative results of the non-blind methods on the CAVE data set.

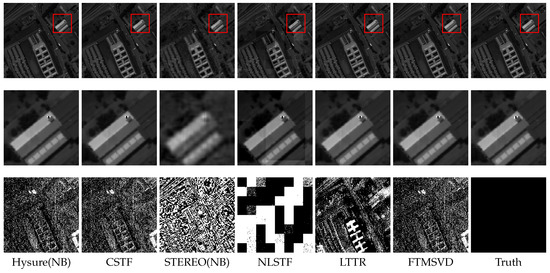

Figure 3.

The first row shows the fused images by the competing methods for Pavia University at band 30. The second row shows the magnified version of the marked area. The third row shows the error images of the test methods for Pavia University at band 30.

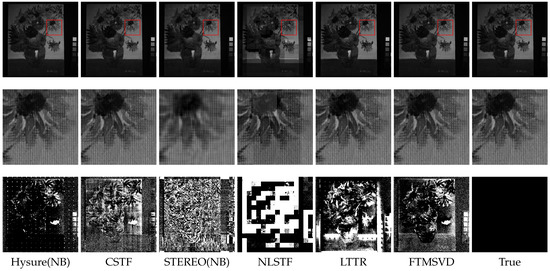

Figure 4.

The first row shows the fused images by the competing methods for oil_painting_ms (CAVE data set) at band 16. The second row shows the magnified version of the marked area. The third row shows the error images of the test methods for oil_painting_ms at band 16.

In Figure 3 and Figure 4, the first row shows the fused images of each method (at band 30 for Pavia University and band 16 for CAVE), the second row shows an enlargement of the marked area in the first row, and the third row shows the error images; the more black pixels there are, the closer the fused image is to the ground truth image. From Figure 3, when the downsampling factor is 32, Hysure(NB), CSTF, and our proposed FTMSVD method have a better visual effect than STEREO(NB), NLSTF, and LTTR. Although the error image of Hysure(NB) appears to be better than that of FTMSVD on the CAVE data set, FTMSVD still performs better than the other methods. In combination with Table 3 and Table 4, the FTMSVD method has better performance on both data sets.

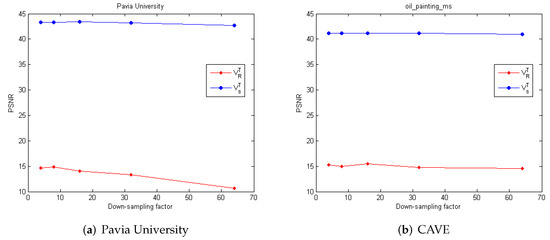

5.2. Impact of the to Fusion Effect

Here, we use a randomly selected factor matrix to replace in Algorithm 1 to verify the impact of our finding in Figure 1 on the fusion result. Figure 5 shows the PSNR values in two simulated data sets. One uses and the other uses . We can see that in using , the value of PSNR is very low in the two data sets, which indicates poor fusion effect. The PSNR is much higher when using in all data sets, which verifies that can be effectively used to improve the fusion performance, and the improvement effect is pronounced. The main reason for this result is that is directly obtained from the truncated singular value decomposition on HR-MSI, which contains large spatial information of HR-MSI. Due to the HR-MSI being a spectral downsampling of HR-HSI, is a better approximation of . Therefore, we can obtain a better fusion result by using to reconstruct HR-HSI.

Figure 5.

Impact of to the fusion effect.

5.3. Comparison with Blind Methods

To comprehensively evaluate the superiority of our proposed method in blind fusion cases, we compare it with five state-of-the-art blind methods in this section. To make a more direct comparison, we will compare the two simulated data sets, in which the HR-HSI (which we obtain) can be compared with the fusion results of blind methods in terms of objective metrics. We exchange the PSF of CAVE and Pavia University to generate six LR-HSI and HR-MSI pairs. Here, we refer to the IKONOS class reflection spectral response filter as SRF1 and the Gf-1-16m multispectral camera response as SRF2. The Gaussian blur (standard deviation 2) is defined as PSF1, and the average blur is PSF2. Different combinations of these are used to generate the LR-HSI and the HR-MSI. We keep the PSF1 for the Pavia University data set and only use different SRFs. We change both the SRF and the PSF for the CAVE data set. The downsampling factor is 8. We compared the blind methods on the simulated data sets according to the objective fusion metrics, and the image of the fusion result of some data sets is visually displayed.

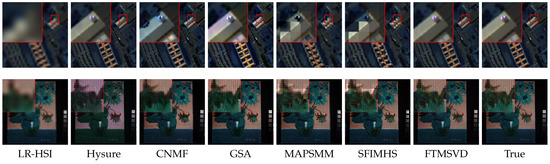

Table 5 shows the fusion result on the Pavia University data set. As we can see, our proposed method achieved the best performance on most metrics in either case. The PSNR value of our proposed method is much better than that of the other blind methods: the maximum PSNR improvement is almost 16 dB, and the minimum is almost 5 dB. The improvement in PSNR demonstrates the superiority of our proposed method in the blind fusion effect. Although the fusion time of our proposed method is not the best, it is the second best and only slightly longer than the best. Table 6 shows the experimental results on the CAVE data set. Our proposed method achieves the best result on all metrics except fusion time. In the case of SRF1 and PSF2, our proposed method achieves 43.274 dB for PSNR, while the second best is only 36.445 dB. The fusion time of our proposed method is still a little longer than that of SFIMHS, but the PSNR value of SFIMHS is only 22.503 dB. From Table 5 and Table 6, our proposed method is seen to be significantly better than other methods in terms of fusion quality. Due to page limitations, we only show the fusion images using SRF1 and PSF1 on both data sets. Figure 6 shows the fusion images of the six blind methods on the two data sets. In the upper left corner of each image is an enlargement of the portion marked in that image. From Figure 6, we can intuitively see that the image produced by our method is closer to the original image than those of the other compared blind methods. The main reason is that the information we extract from LR-HSI and HR-MSI can effectively simulate the corresponding three SVD factor matrices of HR-HSI, which preserves the primary spectral information of LR-HSI and the main spatial information of HR-MSI. In addition, our proposed method does not depend on the SRF, which avoids the error of estimating the SRF and saves a lot of fusion time.

Table 5.

Average quantitative results of the blind methods on the Pavia University data set.

Table 6.

Average quantitative results of the blind methods on the CAVE data set.

Figure 6.

The first row shows false-color images of the LR-HSI, the fusion results, and the ground truth for the Pavia University data set (formed by the 30th, 60th, and 90th bands). The second row shows false-color images of the LR-HSI, the fusion results, and the ground truth for the CAVE data set (formed by the 10th, 19th, and 28th bands). All fusion images and LR-HSI are obtained using SRF1 and PSF1.

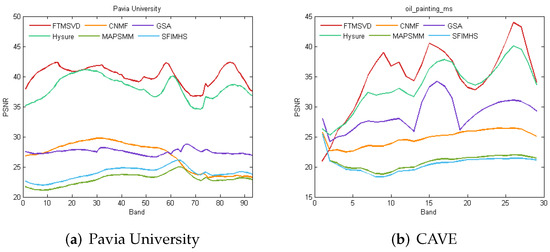

To further demonstrate the superiority of our method, we compare the performance of the methods in each spectral band. Figure 7 shows the PSNR of each spectral band on the two data sets. As we can see from Figure 7a, FTMSVD performs better than the other methods in every band. Figure 7b shows that FTMSVD performs better than the compared methods in most of the spectral bands. A higher PSNR value means that the spectral reflectance of the fusion result is more similar to the ground truth, which further demonstrates the advantages of our method.

Figure 7.

The PSNR of each spectral band for the compared methods using SRF1 and PSF1.

5.4. The Impact of the PSF Set in the FTMSVD Method

Table 7 and Table 8 show the impact of different typical blur types as PSF set in our method. The data sets we used are the same as in Table 5 and Table 6 with SRF1 and PSF1. PSFS1 is the Gaussian blur (standard deviation 1) (which we used in this paper), PSFS2 is the average blur, PSFS3 is the average blur, PSFS4 is the Gaussian blur (standard deviation 2), and PSFS5 is the motion blur (len is 9 and theta is 0).
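The blur types listed above can be illustrated with small normalized kernels. The following NumPy sketch builds a Gaussian, an average, and a horizontal motion kernel. The kernel sizes are assumptions chosen for this example (the horizontal length of 9 mirrors the stated motion-blur length with angle 0), and the helper names are hypothetical.

```python
import numpy as np

def gaussian_kernel(size, sigma):
    """Separable 2D Gaussian kernel, normalized to sum to 1."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def average_kernel(size):
    """Box kernel: every tap has the same weight."""
    return np.full((size, size), 1.0 / size ** 2)

def horizontal_motion_kernel(length):
    """Horizontal line kernel (motion along angle 0)."""
    return np.full((1, length), 1.0 / length)

psf_gauss_1 = gaussian_kernel(7, sigma=1.0)   # size 7 is an assumption
psf_gauss_2 = gaussian_kernel(7, sigma=2.0)
psf_avg = average_kernel(5)                   # size 5 is an assumption
psf_motion = horizontal_motion_kernel(9)

for name, k in [("gauss1", psf_gauss_1), ("gauss2", psf_gauss_2),
                ("average", psf_avg), ("motion", psf_motion)]:
    print(name, k.shape, round(float(k.sum()), 6))
```

All kernels are normalized to sum to 1, so blurring preserves the mean intensity of each band, and the kernels differ only in how they spread energy spatially.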

Table 7.

The results of the different PSF set in FTMSVD on the Pavia University data set.

Table 8.

The results of the different PSF set in FTMSVD on the CAVE data set.

As we can see from Table 7 and Table 8, the PSF set in our proposed method can influence the fusion effect, but no matter which PSF we used, the fusion result is better than those of the five compared blind methods in Table 5 and Table 6. The main reason is that the PSF is only used to optimize the first SVD factor matrix , and mainly contains the spectral information of HR-HSI, and its size of is smaller than . For different PSFs, the optimization effect of is not significantly different. Moreover, most spatial information of HR-HSI is in and and unaffected by the PSF. In this way, our proposed method only needs to assume a fixed PSF instead of estimating it. A multiplicative iterative process is then used to optimize , which converges quickly, so our proposed method can achieve a good fusion result within a short time.

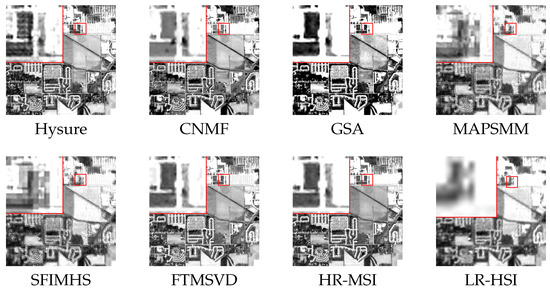

5.5. Practicality of the Proposed Method

To demonstrate the practicality of our proposed method, we compare it with the five blind methods on the real remote sensing data set. The LR-HSI () and HR-MSI () are extracted from the real remote sensing data set. Figure 8 shows the LR-HSI, the HR-MSI, and the corresponding fusion images of the different blind methods, with an enlargement of the marked position. Since the HR-HSI of the real data set is unknown, we compare the fusion results visually. As Figure 8 shows, our proposed method has better visual fidelity than the other methods, and its result is closer to the HR-MSI, which demonstrates its practicality.

Figure 8.

The fusion results of the blind methods on the real data set (the first band).

6. Conclusions

In this paper, we find a strong correlation between the HR-MSI and HR-HSI, and we experimentally verify why the basic spectral values can be estimated from LR-HSI. We propose a fast fusion method based on TMSVD that fuses HR-MSI and LR-HSI without the SRF and is suitable for both non-blind and blind fusion situations. Since LR-HSI contains most of the spectral information of HR-HSI and the spatial information is mainly in HR-MSI, we estimate the SVD factor matrices of HR-HSI from HR-MSI and LR-HSI by TMSVD. Our proposed method addresses the problems associated with the uncertainty of the PSF and SRF, and it rapidly produces a satisfactory fusion result. Our experimental results on the simulated hyperspectral data sets and the real remote sensing data set all indicate the superiority of our proposed method. However, the pre-set PSF still has a slight impact on the fusion results of our proposed method. Therefore, estimating a more suitable PSF from the LR-HSI is left for future studies. The findings in this paper can be used as prior information for future fusion methods. Moreover, estimating the SVD factor matrices of HR-HSI also represents a novel concept for conducting hyperspectral image fusion.

Author Contributions

Conceptualization, H.L., J.L., Y.P. and T.Z.; methodology, H.L. and J.L.; software, H.L.; validation, H.L.; data curation, H.L.; writing (original draft preparation), H.L.; writing (review and editing), J.L., Y.P. and T.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Opening Foundation of State Key Laboratory of High-Performance Computing, National University of Defense Technology, under Grant No. 202201-05.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Sample Availability

The code used in this article is available from the authors.

References

- Samiappan, S. Spectral Band Selection for Ensemble Classification of Hyperspectral Images with Applications to Agriculture and Food Safety. Ph.D. Thesis, Mississippi State University, Starkville, MS, USA, 2014.

- Tiwari, K.; Arora, M.; Singh, D. An assessment of independent component analysis for detection of military targets from hyperspectral images. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 730–740.

- Gu, Y.; Wang, C.; Wang, S.; Zhang, Y. Kernel-based regularized-angle spectral matching for target detection in hyperspectral imagery. Pattern Recognit. Lett. 2011, 32, 114–119.

- Luft, L.; Neumann, C.; Freude, M.; Blaum, N.; Jeltsch, F. Hyperspectral modeling of ecological indicators – A new approach for monitoring former military training areas. Ecol. Indic. 2014, 46, 264–285.

- Cui, Y.; Zhang, B.; Yang, W.; Wang, Z.; Li, Y.; Yi, X.; Tang, Y. End-to-End Visual Target Tracking in Multi-Robot Systems Based on Deep Convolutional Neural Network. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017.

- Cui, Y.; Zhang, B.; Yang, W.; Yi, X.; Tang, Y. Deep CNN-based Visual Target Tracking System Relying on Monocular Image Sensing. In Proceedings of the 2018 International Joint Conference on Neural Networks, IJCNN 2018, Rio de Janeiro, Brazil, 8–13 July 2018.

- van der Meer, F.D.; van der Werff, H.M.; van Ruitenbeek, F.J.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; van der Meijde, M.; Carranza, E.J.M.; Smeth, J.B.d.; Woldai, T. Multi- and hyperspectral geologic remote sensing: A review. Int. J. Appl. Earth Obs. Geoinf. 2012, 14, 112–128.

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Zhong, P.; Wang, R. Learning Conditional Random Fields for Classification of Hyperspectral Images. IEEE Trans. Image Process. Publ. IEEE Signal Process. Soc. 2010, 19, 1890–1907.

- He, N.; Fang, L.; Li, S.; Plaza, A.; Plaza, J. Remote Sensing Scene Classification Using Multilayer Stacked Covariance Pooling. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6899–6910.

- Dian, R.; Li, S. Hyperspectral Image Super-resolution via Subspace-Based Low Tensor Multi-Rank Regularization. IEEE Trans. Image Process. Publ. IEEE Signal Process. Soc. 2019, 28, 5135–5146.

- Peng, Y.; Li, J.; Zhang, L.; Xu, Y.; Long, J. Hyperspectral image super-resolution via subspace-based fast low tensor multi-rank regularization. Infrared Phys. Technol. 2021, 116, 103631.

- Dong, W.; Fu, F.; Shi, G.; X, C.; JJ, W.; GY, L.; X, L. Hyperspectral Image Super-Resolution via Non-Negative Structured Sparse Representation. IEEE Trans. Image Process. Publ. IEEE Signal Process. Soc. 2016, 25, 2337–2352.

- Bruckstein, M.A.E. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G. Online dictionary learning for sparse coding. In Proceedings of the ICML’09: 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009.

- Wycoff, E.; Chan, T.H.; Jia, K.; Ma, W.K.; Ma, Y. A non-negative sparse promoting algorithm for high resolution hyperspectral imaging. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013.

- Akhtar, N.; Shafait, F.; Mian, A. Sparse Spatio-spectral Representation for Hyperspectral Image Super-resolution. In Proceedings of the ECCV 2014, Zurich, Switzerland, 6–12 September 2014.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled Nonnegative Matrix Factorization Unmixing for Hyperspectral and Multispectral Data Fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

- Huang, B.; Song, H.; Cui, H.; Peng, J.; Xu, Z. Spatial and Spectral Image Fusion Using Sparse Matrix Factorization. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1693–1704.

- Han, X.; Shi, B.; Zheng, Y. Self-Similarity Constrained Sparse Representation for Hyperspectral Image Super-Resolution. IEEE Trans. Image Process. 2018, 27, 5625–5637.

- Dian, R.; Fang, L.; Li, S. Hyperspectral image super-resolution via non-local sparse tensor factorization. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Li, S.; Dian, R.; Fang, L.; Bioucas-Dias, J. Fusing Hyperspectral and Multispectral Images via Coupled Sparse Tensor Factorization. IEEE Trans. Image Process. 2018, 27, 4118–4130.

- Prévost, C.; Usevich, K.; Comon, P.; Brie, D. Hyperspectral super-resolution with coupled Tucker approximation: Recoverability and SVD-based algorithms. IEEE Trans. Signal Process. 2020, 68, 931–946.

- Hardie, R.C.; Eismann, M.T.; Wilson, G.L. MAP estimation for hyperspectral image resolution enhancement using an auxiliary sensor. IEEE Trans. Image Process. Publ. IEEE Signal Process. Soc. 2004, 13, 1174–1184.

- Dong, C.; Loy, C.; He, K.M.; Tang, X.O. Learning a Deep Convolutional Network for Image Super-Resolution. In Proceedings of the 2014 European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. arXiv 2016, arXiv:1501.00092.

- Zhang, S.L.L.Z.L.H.D. SSAU-Net: A Spectral–Spatial Attention-Based U-Net for Hyperspectral Image Fusion. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5542116.

- Kang, R.D.L. Regularizing Hyperspectral and Multispectral Image Fusion by CNN Denoiser. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1124–1135.

- Simões, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. A Convex Formulation for Hyperspectral Image Superresolution via Subspace-Based Regularization. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3373–3388.

- Peng, Y.; Long, J. Blind Fusion of Hyperspectral Multispectral Images Based on Matrix Factorization. Remote Sens. 2021, 13, 4219.

- Swathi, H.; Sohini, S.; Surbhi; Gopichand, G. Image compression using singular value decomposition. IOP Conf. Ser. Mater. Sci. Eng. 2017, 263, 042082.

- Yasuma, F.; Mitsunaga, T.; Iso, D. Generalized Assorted Pixel Camera: Postcapture Control of Resolution, Dynamic Range, and Spectrum. IEEE Trans. Image Process. 2010, 19, 2241–2253.

- Dell’Acqua, F.; Gamba, P.; Ferrari, A.; Palmason, J.; Benediktsson, J.; Arnason, K. Exploiting spectral and spatial information in hyperspectral urban data with high resolution. Geosci. Remote Sens. Lett. IEEE 2004, 1, 322–326.

- Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J.Y. Hyperspectral and Multispectral Image Fusion Based on a Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

- Kanatsoulis, C.; Fu, X.; Sidiropoulos, N.; Ma, W. Hyperspectral Super-Resolution: A Coupled Tensor Factorization Approach. IEEE Trans. Signal Process. 2018, 66, 6503–6517.

- Dian, R.; Li, S.; Fang, L. Learning a Low Tensor-Train Rank Representation for Hyperspectral Image Super-Resolution. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2672–2683.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving Component Substitution Pansharpening Through Multivariate Regression of MS+Pan Data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Liu, J.G. Smoothing Filter-based Intensity Modulation: A spectral preserve image fusion technique for improving spatial details. Int. J. Remote Sens. 2000, 21, 3461–3472.

- Eismann, M.T. Resolution Enhancement of Hyperspectral Imagery Using Maximum a Posteriori Estimation with a Stochastic Mixing Model. Ph.D. Thesis, University of Dayton, Dayton, OH, USA, 2004.

- Wald, L. Quality of high resolution synthesised images: Is there a simple criterion? In Proceedings of the Third Conference “Fusion of Earth Data: Merging Point Measurements, Raster Maps and Remotely Sensed Images”, Sophia Antipolis, France, 26–28 January 2000; pp. 99–103.

- Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and Multispectral Data Fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56.

- Wang, Z.; Bovik, A. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).